Abstract

Gradients of smooth functions with nonindependent variables are relevant for exploring complex models and for optimizing functions subject to constraints. In this paper, we investigate new and simple approximations and computations of such gradients by making use of independent, central, and symmetric variables. Such approximations are well suited for applications in which the computations of the gradients are too expensive or impossible. The derived upper bounds of the biases of our approximations do not suffer from the curse of dimensionality for any 2-smooth function, and they theoretically improve the known results. Also, our estimators of such gradients reach the optimal (mean squared error) rates of convergence (i.e., $\mathcal{O}(N^{-1})$ for a sample size N) for the same class of functions. Numerical comparisons based on a test case and a high-dimensional PDE model show the efficiency of our approach.

Keywords:

dependent variables; gradients; high-dimensional models; optimal estimators; tensor metric of nonindependent variables

MSC:

26A24; 60H25; 62Gxx; 49Qxx

1. Introduction

Nonindependent variables arise when at least two variables do not vary independently, and such variables are often characterized by their covariance matrices, distribution functions, copulas, and weighted distributions (see, e.g., [1,2,3,4,5,6,7]). More recently, dependency models have provided explicit functions that link these variables together by means of additional independent variables [8,9,10,11,12]. Models with nonindependent input variables, including functions subject to constraints, are widely encountered in different scientific fields, such as data analysis, quantitative risk analysis, and uncertainty quantification (see, e.g., [13,14,15]).

Analyzing such functions requires being able to calculate or compute their dependent gradients, that is, the gradients that account for the dependencies among the inputs. Recall that gradients are involved in (i) inverse problems and optimization (see, e.g., [16,17,18,19,20]); (ii) the exploration of complex mathematical models or simulators (see [21,22,23,24,25,26,27,28] for independent inputs and [9,15] for nonindependent variables); (iii) Poincaré inequalities and equalities [9,28,29,30]; and, more recently, (iv) the derivative-based ANOVA (i.e., exact expansions) of functions [28]. While the first-order derivatives of functions with nonindependent variables were derived in [9] for screening dependent inputs of high-dimensional models, the theoretical expressions of the gradients of such functions (dependent gradients) were introduced in [15], thereby highlighting the difference between the gradients and the first-order partial derivatives when the input variables are dependent or correlated.

In high-dimensional settings and for time-demanding models, an efficient approach for computing the dependent gradients provided in [15] with only a few model evaluations is worth investigating. So far, adjoint methods can provide the exact classical gradients for some classes of PDE/ODE-based models [31,32,33,34,35,36]. Additionally, Richardson’s extrapolation and its generalization considered in [37] provide accurate estimates of the classical gradients using a number of model runs that strongly depends on the dimensionality. In contrast, the Monte Carlo approach allows for computing the classical gradients using a number of model runs that can be much smaller than the dimensionality [17,38,39]. The Monte Carlo approach is a consequence of the Stokes theorem, which implies that the expectation of a function evaluated at a random point around a given point, multiplied by the random direction, is (up to a scaling factor) the gradient of a certain smoothed version of that function. Such a property leads to randomized approximations of the classical gradients in derivative-free optimization or zero-order stochastic optimization (see [16,18,19,20] and the references therein). Such approximations are also relevant for applications in which the computations of the gradients are impossible [20].

Most of the randomized approximations of the classical gradients, including the Monte Carlo approach, rely on randomized kernels and/or random vectors that are uniformly distributed on the unit ball. The quality of such approximations is often assessed by the upper bounds of the biases and the rates of convergence. The upper bounds provided in [19,20,40] generally depend on the dimensionality.
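As a point of reference for the classical Monte Carlo approach, the following minimal Python sketch estimates the formal gradient with two model evaluations per random direction drawn uniformly on the unit sphere (without any kernel). It is a simplified illustration, not the estimator of [17] or [19,20]; the function names, the quadratic test function, and the parameter values are hypothetical.

```python
import numpy as np


def sphere_gradient_estimate(f, x, h, n_samples, rng):
    """Two-point randomized estimate of the formal gradient of f at x.

    Directions are uniform on the unit sphere, so E[V V^T] = I / d; the factor
    d in the average compensates for this.
    """
    d = x.size
    g = rng.standard_normal((n_samples, d))
    v = g / np.linalg.norm(g, axis=1, keepdims=True)  # uniform on the unit sphere
    # Central difference along each random direction: (f(x + h v) - f(x - h v)) / (2h)
    diffs = np.array([(f(x + h * vi) - f(x - h * vi)) / (2.0 * h) for vi in v])
    # Sample average of d * diff_i * v_i
    return d * (diffs[:, None] * v).mean(axis=0)


# Hypothetical quadratic test function f(z) = z^T A z / 2 + b^T z, gradient A x + b
d = 5
A = np.diag(np.arange(1.0, d + 1))
b = np.ones(d)
f = lambda z: 0.5 * z @ A @ z + b @ z
x0 = np.linspace(-1.0, 1.0, d)
exact = A @ x0 + b

rng = np.random.default_rng(0)
estimate = sphere_gradient_estimate(f, x0, h=1e-2, n_samples=20_000, rng=rng)
rel_err = np.linalg.norm(estimate - exact) / np.linalg.norm(exact)
print(f"relative error: {rel_err:.3f}")
```

For a quadratic f, the central difference is exact along each direction, so this sketch is unbiased and its mean squared error equals $(d-1)\|\nabla f\|^2/N$: the dimension enters through the variance.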

In this paper, we propose new surrogates of the gradients of smooth functions with nonindependent inputs and the associated estimators that comply with the following requirements:

- They are simple and applicable to a wide class of functions by making use of model evaluations at randomized points, which are only based on independent, central, and symmetric variables;

- They lead to a dimension-free upper bound of the bias, and they improve the best known upper bounds of the bias for the classical gradients;

- They lead to the optimal and parametric (mean squared error) rates of convergence;

- They increase the computational efficiency and accuracy of the gradient estimates by means of a set of constraints.

The surrogates of the dependent gradients are derived in Section 3 by combining the properties of (i) the generalized Richardson extrapolation approach, thanks to a set of constraints, and (ii) the Monte Carlo approach, based only on independent random variables that are symmetrically distributed about zero. These expressions are followed by their orders of approximation, their biases, and a comparison with known results for the classical gradients. We also provide the estimators of such surrogates and their associated mean squared errors, including the rates of convergence for a wide class of functions (see Section 3.3). Several numerical comparisons are carried out to assess the efficiency of our approach. Section 4 compares our approach with other methods on a test function, and Section 5 presents simulations based on a high-dimensional (spatiotemporal) PDE model with given autocorrelations among the initial conditions, which are used to compare our approach with the adjoint-based method. We conclude this work in Section 6.

2. Preliminaries

For an integer , let be a random vector of continuous and nonindependent variables having F as the joint cumulative distribution function (CDF) (i.e., ). For any , we use or for the marginal CDF of and for its inverse. Also, we use and . The equality (in distribution) means that X and Z have the same CDF.

As the sample values of are dependent, here we use for the formal partial derivative of f with respect to , that is, the partial derivative obtained by considering other inputs as constant or independent of . Thus, stands for the formal or classical gradient of f.

Given an open set , consider a weakly partially differentiable function [41,42]. Given , denote ; , , and consider the Hölder space of -smooth functions given by

with and . We use for the Euclidean norm, for the norm, for the expectation, and for the variance.

For the stochastic evaluations of functions, consider , with , , and denote by the d-dimensional random vectors of independent variables satisfying the following: ,

The random vectors of the independent variables that are symmetrically distributed about zero are instances of , including the standard Gaussian random vector and the symmetric uniform distributions about zero.
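To make these requirements concrete, the following Python sketch draws three standard examples of independent, centered, symmetric, unit-variance variables (standard Gaussian, uniform on $[-\sqrt{3}, \sqrt{3}]$, and Rademacher $\pm 1$) and checks their first two moments empirically. The helper name is hypothetical, and the Rademacher case is an additional example not mentioned in the text.

```python
import numpy as np


def sample_symmetric(kind, n, d, rng):
    """Draw an n x d matrix of independent, centered, symmetric, unit-variance variables."""
    if kind == "gaussian":
        return rng.standard_normal((n, d))
    if kind == "uniform":
        s = np.sqrt(3.0)  # uniform on [-sqrt(3), sqrt(3)] has variance 1
        return rng.uniform(-s, s, size=(n, d))
    if kind == "rademacher":
        return rng.choice([-1.0, 1.0], size=(n, d))  # +1 or -1 with probability 1/2
    raise ValueError(f"unknown kind: {kind}")


rng = np.random.default_rng(1)
moments = {}
for kind in ("gaussian", "uniform", "rademacher"):
    v = sample_symmetric(kind, 200_000, 3, rng)
    # Empirical mean (should be near 0) and second-moment matrix (should be near I_3)
    moments[kind] = (v.mean(axis=0), v.T @ v / v.shape[0])
    print(kind, np.round(moments[kind][0], 3), np.round(np.diag(moments[kind][1]), 3))
```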

Also, denote ; and . The real s are used for controlling the order of approximations and the order of derivatives (i.e., ) that we are interested in. Finally, s are used to define a neighborhood of a sample point of (i.e., ). Thus, using and keeping in mind the variance of , we assume that ,

Assumption 1.

or equivalently for bounded .

3. Main Results

This section aims at providing new expressions of the gradient of a function with nonindependent variables and the associated orders of approximation. We also derive the estimators of such a gradient, including the optimal and parametric rates of convergence. Recall that the input variables are said to be nonindependent whenever there exist at least two variables such that the joint CDF .

3.1. Stochastic Expressions of the Gradients of Functions with Dependent Variables

Using the fact that , with , we are able to model as follows [8,9,10,11,12,14,43]:

where ; ; and are independent. Moreover, we have , and it is worth noting that the function is invertible with respect to for continuous variables, that is,

Note that the formal Jacobian matrix of , is the identity matrix. As is a sample value of , the dependent Jacobian of g based on the above dependency function is clearly not the identity matrix, because such a matrix accounts for the dependencies among the elements of . The dependent partial derivatives of with respect to are then given by [9,15]

and the dependent Jacobian matrix becomes (see [15] for more details)

Moreover, the gradient of f with nonindependent variables is given by [15]

with being the tensor metric and being its generalized inverse. Based on the above framework, Theorem 1 provides the stochastic expression of . In what follows, denote .

Theorem 1.

Assume that , with , Assumption 1 holds and that the s are distinct. Then, there exist and real coefficients such that

Proof.

See Appendix A for the detailed proof. □

Using the Kronecker symbol , the setting , or the constraints lead to the order of approximation , while the constraints allow for increasing that order up to . For distinct s, the above constraints lead to the existence of the constants . Indeed, some constraints rely on the Vandermonde matrix of the form

which is invertible for distinct values of the nodes, because its determinant is, up to sign, the product of all pairwise differences of the nodes and is therefore nonzero.

Remark 1.

For an even integer L, the following nodes may be considered: . When L is odd, one may add 0 to the above set. Of course, there are other possibilities, provided that .
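The constraints above can be solved numerically. The Python sketch below is one possible reading under two assumptions, since the exact constraint set and node set are not reproduced in this version: the Richardson-type constraints $\sum_{\ell} C_\ell \beta_\ell^{r} = \delta_{r,1}$ for $r = 0, \dots, L-1$ (with $\beta_\ell$ denoting the nodes), and the hypothetical nodes $\pm 1, \dots, \pm L/2$ (plus 0 when L is odd), which is one choice of distinct nodes in the spirit of Remark 1.

```python
import numpy as np


def symmetric_nodes(L):
    """Hypothetical node choice: -L//2, ..., -1, 1, ..., L//2, plus 0 when L is odd."""
    half = np.arange(1, L // 2 + 1, dtype=float)
    nodes = np.concatenate([-half[::-1], half])
    if L % 2 == 1:
        nodes = np.sort(np.append(nodes, 0.0))
    return nodes


def richardson_coefficients(beta):
    """Solve sum_l C_l * beta_l**r = delta_{r,1} for r = 0, ..., L-1 (L >= 2)."""
    beta = np.asarray(beta, dtype=float)
    L = beta.size
    if L < 2 or np.unique(beta).size != L:
        raise ValueError("need at least two distinct nodes")
    # Transposed Vandermonde matrix: V[r, l] = beta_l**r, invertible for distinct nodes
    V = np.vander(beta, N=L, increasing=True).T
    rhs = np.zeros(L)
    rhs[1] = 1.0  # keep the first-order (gradient) term, cancel the others
    return np.linalg.solve(V, rhs)


for L in (2, 4, 5):
    nodes = symmetric_nodes(L)
    print(L, nodes, np.round(richardson_coefficients(nodes), 4))
```

With nodes $-1, 1$ this returns the central-difference weights $(-1/2, 1/2)$; with $-2, -1, 1, 2$ it returns the classical fourth-order weights $(1/12, -2/3, 2/3, -1/12)$.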

Beyond the strong assumption made on the functions in Theorem 1, and knowing that increasing L requires more evaluations of f at random points, we now derive the upper bounds of the biases of our approximations under different structural assumptions on the deterministic functions f and , such as , with . To that end, denote as a d-dimensional random vector of independent variables that are centered about zero and standardized (i.e., , ), and let be the set of such random vectors. Define

with the matrix obtained by putting the entries of in the absolute value.

When , only or can be considered for any function that belongs to . To derive the parametric rates of convergence, Corollary 1 first provides the upper bounds of the bias when .

Corollary 1.

Consider ; ; and . If and Assumption 1 hold, then there exists such that

Proof.

Detailed proofs are provided in Appendix B. □

For a particular choice of , we obtain the results below.

Corollary 2.

Consider ; ; . If , where , , , and Assumption 1 hold, then

Proof.

Since , we have , and the results hold using the following upper bounds: and obtained in Appendix B. □

It is worth noting that choosing and leads to the dimension-free upper bound of the bias, that is,

because is a function of d in general.

For the sake of generality, Corollary 3 provides the bias of our approximations for highly smooth functions. To that end, define

Corollary 3.

For an odd integer , consider . If and Assumption 1 hold, then there exists such that

Moreover, if , with and , then

Proof.

The proofs are similar to those of Corollary 1 (see Appendix B). □

In view of the results provided in Corollary 3, finding the s and Cs that minimize the quantity might be helpful for improving the above upper bounds.

3.2. Links to Other Works for Independent Input Variables

Recall that for independent input variables, the matrix comes down to the identity matrix, and . Thus, Equation (7) becomes

when . Taking leads to the upper bound .

Other results about the upper bounds of the bias of the (formal) gradient approximations have been provided in [19,20] (and the references therein) under the same assumptions made on f and the evaluations of f. Such results rely on a random vector that is uniformly distributed on the unit ball and a kernel K. Under such a framework, the upper bound derived in [19,20] is

where is independent of . Therefore, our results improve the upper bound obtained in [19,20] when , for instance.

3.3. Computation of the Gradients of Functions with Dependent Variables

Consider a sample of given by . Using Equation (3), the estimator of is derived as follows:

To assess the quality of such an estimator, it is common to use the mean squared error (MSE), including the rates of convergence. The MSEs are often used in statistics for determining the optimal value of as well. Theorem 2 and Corollary 4 provide such quantities of interest. To that end, define

Theorem 2.

Consider ; ; and . If and Assumption 1 hold, then

Moreover, if , with , and , then

Proof.

See Appendix C. □

Using a uniform bandwidth, that is, , with , the upper bounds of MSEs provided in Theorem 2 have simple expressions. Indeed, the upper bounds in Equations (8) and (9) become, respectively,

It turns out that the second terms of the above upper bounds do not depend on the bandwidth h. This key observation leads to the optimal and parametric rates of convergence of the proposed estimator.
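Since Equation (3) is not reproduced in this version, the following Python sketch only illustrates the general shape of such an estimator for the formal gradient: the average, over N random directions $V_i$, of $\sum_\ell C_\ell f(x + \beta_\ell h V_i) V_i / h$. Assumptions: the constraint set $\sum_\ell C_\ell \beta_\ell^{r} = \delta_{r,1}$ ($r = 0, \dots, L-1$), Gaussian draws in place of the Sobol’ sequence, and NumPy’s QR factorization in place of explicit Gram–Schmidt orthogonalization. The dependent gradient would additionally require the dependent Jacobian and the tensor metric, which are not handled here.

```python
import numpy as np


def orthogonal_directions(n, d, rng):
    """n x d matrix V of random directions with E[V^T V] = n I_d (QR in place of Gram-Schmidt)."""
    g = rng.standard_normal((n, d))
    if n >= d:
        q, _ = np.linalg.qr(g)        # n x d with orthonormal columns
        return np.sqrt(n) * q         # V^T V = n I_d exactly
    q, _ = np.linalg.qr(g.T)          # d x n with orthonormal columns
    return np.sqrt(d) * q.T           # n orthogonal rows; V^T V = n I_d in expectation


def gradient_estimate(f, x, h, beta, n, rng):
    """Average over directions v of sum_l C_l f(x + beta_l h v) v / h."""
    beta = np.asarray(beta, dtype=float)
    L = beta.size
    # Hypothetical constraint set: sum_l C_l beta_l**r = delta_{r,1}, r = 0..L-1
    coef = np.linalg.solve(np.vander(beta, N=L, increasing=True).T, np.eye(L)[1])
    V = orthogonal_directions(n, x.size, rng)
    grad = np.zeros(x.size)
    for v in V:
        # Weighted combination of model runs along v approximates grad f(x) . v
        directional = sum(c * f(x + b * h * v) for c, b in zip(coef, beta)) / h
        grad += directional * v
    return grad / n


# Hypothetical cubic test function and its exact gradient
f = lambda z: np.sum(z**3) + z[0] * z[1]
x0 = np.array([0.3, -0.7, 1.1, 0.0, -1.5, 2.0])
exact = 3.0 * x0**2
exact[0] += x0[1]
exact[1] += x0[0]

rng = np.random.default_rng(3)
est = gradient_estimate(f, x0, h=0.1, beta=[-2.0, -1.0, 1.0, 2.0], n=6, rng=rng)
print(np.max(np.abs(est - exact)))
```

With N ≥ d orthogonal directions scaled so that $V^\top V = N I_d$, this sketch recovers the gradient of any polynomial of degree at most L − 1 exactly (up to rounding). With N < d it still returns an estimate, which for such polynomials is a scaled random projection of the gradient that is unbiased but not exact.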

Corollary 4.

Under the assumptions made in Theorem 2, if and , with , then we have

Proof.

The proof is straightforward, since and when . □

It is worth noting that the upper bound of the squared bias obtained in Corollary 4 does not depend on the dimensionality, thanks to the choice of . However, the derived rate of convergence depends on , which means that our estimator suffers from the curse of dimensionality. In higher dimensions, one way to improve our results consists of controlling the upper bound of the second-order moment of the estimator through .
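The following Python sketch illustrates two facts used above on a simple case: with a small bandwidth the squared bias is negligible, and the remaining (variance) term decays like 1/N. It uses the plain two-point estimator with i.i.d. standard Gaussian directions, not the constrained estimator of this paper; for a linear f, its mean squared error is exactly $(d+1)\|\nabla f\|^2/N$, which also shows the dependence on d discussed above. The test function and all parameter values are hypothetical.

```python
import numpy as np


def mc_gradient(f, x, h, n, rng):
    """Central two-point estimate with i.i.d. standard Gaussian directions (rows of V)."""
    V = rng.standard_normal((n, x.size))
    diffs = (f(x + h * V) - f(x - h * V)) / (2.0 * h)  # f is evaluated row-wise
    return diffs @ V / n


def empirical_mse(f, x, exact, h, n, reps, rng):
    """Average squared Euclidean error over independent replications."""
    errors = [np.sum((mc_gradient(f, x, h, n, rng) - exact) ** 2) for _ in range(reps)]
    return float(np.mean(errors))


f = lambda z: np.sin(z).sum(axis=-1)  # hypothetical smooth test function
x0 = np.linspace(-1.0, 1.0, 4)
exact = np.cos(x0)
rng = np.random.default_rng(2)
h = 1e-3  # small bandwidth: the squared bias is negligible here

mse_100 = empirical_mse(f, x0, exact, h, 100, 400, rng)
mse_400 = empirical_mse(f, x0, exact, h, 400, 400, rng)
theory_100 = (x0.size + 1) * (exact @ exact) / 100  # (d + 1) ||grad f||^2 / N
ratio = mse_100 / mse_400
print(f"MSE(N=100) = {mse_100:.4f}, theory = {theory_100:.4f}, ratio = {ratio:.2f}")
```

Quadrupling N should divide the empirical MSE by roughly four, whatever the (small) value of h.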

Remark 2.

For highly smooth functions (i.e., , with ) and under the assumptions made in Corollary 3, we can check that (see Appendix C)

4. Computations of the Formal Gradient of Rosenbrock’s Function

For comparing our approach to (i) the finite differences method (FDM) using the R package numDeriv [44], with , and (ii) the Monte Carlo (MC) approach provided in [17], with , let us consider the Rosenbrock function given as follows: ,

The gradient of that function at is (see [17]). To assess the numerical accuracy of each approach, the following measure is considered:

where is the estimated value of the gradient. Table 1 reports the values of for the three approaches. To obtain the results using our approach, we have used , with N as the sample size and , with . Also, the Sobol sequence has been used for generating the values of the s, and the Gram–Schmidt algorithm is applied to obtain (perfect) orthogonal vectors for a given N.

Table 1.

Values of for three different approximations of the formal gradients.

Table 1 shows that our approach performs efficiently compared with the other methods. Since the FDM cannot be applied when , our approach is more flexible, thanks to L and to the fact that the gradient can be computed for any value of N. As expected, increasing N improves our results.
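The spirit of this experiment can be reproduced with the following Python sketch, which compares randomized estimates of the formal gradient of the Rosenbrock function with its analytic gradient. Assumptions: the standard form $\sum_{i<d} [100(x_{i+1} - x_i^2)^2 + (1 - x_i)^2]$ (the exact variant used in [17] is not reproduced here), the relative error $\|\hat{g} - \nabla f\| / \|\nabla f\|$ as accuracy measure, Gaussian draws orthogonalized by QR instead of a Sobol’ sequence with Gram–Schmidt, and the hypothetical constraint set $\sum_\ell C_\ell \beta_\ell^{r} = \delta_{r,1}$, $r = 0, \dots, L-1$.

```python
import numpy as np


def rosenbrock(z):
    """Standard Rosenbrock function, evaluated row-wise on a 2-D array of points."""
    z = np.atleast_2d(z)
    return np.sum(100.0 * (z[:, 1:] - z[:, :-1] ** 2) ** 2 + (1.0 - z[:, :-1]) ** 2, axis=1)


def rosenbrock_grad(x):
    """Analytic gradient of the standard Rosenbrock function."""
    g = np.zeros_like(x)
    g[:-1] = -400.0 * x[:-1] * (x[1:] - x[:-1] ** 2) - 2.0 * (1.0 - x[:-1])
    g[1:] += 200.0 * (x[1:] - x[:-1] ** 2)
    return g


def estimate_gradient(f, x, h, beta, n, rng):
    """Randomized estimate: mean over rows v of V of sum_l C_l f(x + beta_l h v) v / h."""
    beta = np.asarray(beta, dtype=float)
    L, d = beta.size, x.size
    # Hypothetical constraints sum_l C_l beta_l**r = delta_{r,1}, r = 0..L-1
    coef = np.linalg.solve(np.vander(beta, N=L, increasing=True).T, np.eye(L)[1])
    g = rng.standard_normal((n, d))
    if n >= d:
        V = np.sqrt(n) * np.linalg.qr(g)[0]        # V^T V = n I_d
    else:
        V = np.sqrt(d) * np.linalg.qr(g.T)[0].T    # n orthogonal rows (N < d)
    directional = sum(c * f(x + b * h * V) for c, b in zip(coef, beta)) / h
    return V.T @ directional / n


d = 10
x0 = np.linspace(-1.2, 1.2, d)
exact = rosenbrock_grad(x0)
rng = np.random.default_rng(5)


def rel_error(est):
    return np.linalg.norm(est - exact) / np.linalg.norm(exact)


err_L2 = rel_error(estimate_gradient(rosenbrock, x0, 1e-4, [-1, 1], d, rng))
err_L5 = rel_error(estimate_gradient(rosenbrock, x0, 0.1, [-2, -1, 0, 1, 2], d, rng))
est_small = estimate_gradient(rosenbrock, x0, 1e-4, [-1, 1], 4, rng)  # N < d
print(err_L2, err_L5)
```

Because the Rosenbrock function is a polynomial of degree 4, the five-node version (L = 5) is exact up to rounding when N ≥ d, while N < d still returns an estimate.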

5. Application to a Heat PDE Model with Stochastic Initial Conditions

5.1. Heat Diffusion Model and Its Formal Gradient

Consider a time-dependent model defined by the one-dimensional (1-D) diffusion PDE with stochastic initial conditions, that is,

where represents the diffusion coefficient. It is common to consider as the quantity of interest (QoI). The spatial discretization consists of subdividing the spatial domain into d equally sized cells, which leads to d initial conditions or inputs given by with . Given zero-mean random variables , assume that , where represents the inverse precision of our knowledge of the initial conditions. For the dynamic aspect, a time step of is considered, starting from 0 up to .

Given a direction and the Gâteaux derivative , the tangent linear model is derived as follows:

and we can check that the adjoint model (AM) (i.e., ) is given by

The formal gradient of with respect to the inputs is . Remark that the above gradient relies on , and only one evaluation of such a function is needed.
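To illustrate why a single adjoint run yields the whole formal gradient, the following Python sketch discretizes the 1-D heat equation with an explicit Euler scheme and zero Dirichlet boundaries, uses the hypothetical QoI $\frac{1}{2}\|u(T)\|^2$, and compares the adjoint gradient with centered finite differences. The scheme, boundary conditions, QoI, and parameter values are assumptions (the model in this section is solved with deSolve); only the forward/backward structure is the point.

```python
import numpy as np


def heat_matrix(d, D, dx, dt):
    """One explicit Euler step u_{n+1} = A u_n for u_t = D u_xx with zero Dirichlet boundaries."""
    lam = D * dt / dx**2
    if lam > 0.5:
        raise ValueError("explicit scheme is unstable: need D * dt / dx**2 <= 1/2")
    return (1.0 - 2.0 * lam) * np.eye(d) + lam * (np.eye(d, k=1) + np.eye(d, k=-1))


def forward(u0, A, n_steps):
    u = u0.copy()
    for _ in range(n_steps):
        u = A @ u
    return u


def qoi(u0, A, n_steps):
    """Hypothetical QoI: half the squared Euclidean norm of the final state."""
    uT = forward(u0, A, n_steps)
    return 0.5 * uT @ uT


def adjoint_gradient(u0, A, n_steps):
    """Gradient of the QoI w.r.t. u0: one forward run, then the adjoint recursion with A^T."""
    p = forward(u0, A, n_steps)  # dQoI/du_T = u_T for this QoI
    for _ in range(n_steps):
        p = A.T @ p
    return p


d, D = 20, 0.5
dx = 1.0 / d
dt = 0.4 * dx**2 / D           # D * dt / dx**2 = 0.4
n_steps = 50
u0 = np.sin(np.pi * (np.arange(d) + 0.5) / d)  # values at cell centers
A = heat_matrix(d, D, dx, dt)

grad_adj = adjoint_gradient(u0, A, n_steps)
eps = 1e-5
grad_fd = np.array([(qoi(u0 + eps * e, A, n_steps) - qoi(u0 - eps * e, A, n_steps)) / (2 * eps)
                    for e in np.eye(d)])
print(np.max(np.abs(grad_adj - grad_fd)))
```

For a linear scheme $u_{n+1} = A u_n$, the adjoint model is the backward recursion with $A^\top$, so the gradient costs one forward and one backward sweep, independently of d, whereas the finite-difference check needs 2d forward runs.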

5.2. Spatial Autocorrelations of Initial Conditions and the Tensor Metric

Recall that the above gradient is based on the assumption of independent input variables, which implies that the initial conditions within different cells are uncorrelated. To account for the spatial autocorrelations between different cells, assume that the d input variables follow a Gaussian process with the following autocorrelation function:

where if and is zero otherwise. Such spatial autocorrelations lead to the correlation matrix of the form

Using the same standard deviation leads to the following covariance matrix , and , with , and being the centers of the cells. The associated dependency model is given below.

Consider the diagonal matrix and the Gaussian random vector . Denote by the matrix obtained by moving the row and column of to the first row and column; is the Cholesky factor of , and . We can see that , and the dependency model is given by [10]

Based on Equation (10), we have . Thus, we can deduce that , with being the column of , and the dependent Jacobian becomes , since , and . The tensor metric is given by .
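The Cholesky-based construction can be checked numerically. The following Python sketch computes each column of the dependent Jacobian as $\partial X / \partial X_j = L_j[:, 0] / L_j[0, 0]$ (our reading of the construction above, with 0-based indexing) and forms the tensor metric, assuming $G = J^\top J$, together with its Moore–Penrose generalized inverse. The triangular autocorrelation $\max(0, 1 - |r|/a)$ and all parameter values are hypothetical stand-ins for the autocorrelation function given above.

```python
import numpy as np


def correlation_matrix(centers, a):
    """Hypothetical compactly supported autocorrelation rho(r) = max(0, 1 - |r| / a)."""
    r = np.abs(centers[:, None] - centers[None, :])
    return np.maximum(0.0, 1.0 - r / a)


def dependent_jacobian_column(sigma, j):
    """Column j of the dependent Jacobian from the Cholesky-based dependency model.

    Variable j is moved to the first position, L_j is the Cholesky factor of the
    permuted covariance, and X = mu + L_j Z gives dX/dX_j = L_j[:, 0] / L_j[0, 0].
    """
    d = sigma.shape[0]
    perm = [j] + [k for k in range(d) if k != j]
    Lj = np.linalg.cholesky(sigma[np.ix_(perm, perm)])
    col = np.empty(d)
    col[perm] = Lj[:, 0] / Lj[0, 0]  # undo the permutation
    return col


d, s, a = 8, 0.3, 0.25
centers = (np.arange(d) + 0.5) / d           # centers of d equal cells on [0, 1]
sigma = s**2 * correlation_matrix(centers, a)
J = np.column_stack([dependent_jacobian_column(sigma, j) for j in range(d)])
G = J.T @ J                 # tensor metric, assuming G = J^T J
G_pinv = np.linalg.pinv(G)  # Moore-Penrose generalized inverse
print(np.round(J[:3, :3], 3))
```

Because every input here has the same standard deviation, each column reduces to the j-th column of the covariance matrix divided by its diagonal entry, so in this setting the dependent Jacobian coincides with the correlation matrix.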

5.3. Comparisons between Exact Gradient and Estimated Gradients

For running the above PDE-based model using the R package deSolve [45], we are given and . The exact and formal gradient associated with the mean values of the initial conditions is obtained by running the corresponding adjoint model. For estimating the gradient using the proposed estimators, we consider and . We also use and . The Sobol sequence is used for generating the random values of the s, and the Gram–Schmidt algorithm is applied to obtain perfect orthogonal vectors for a given N.

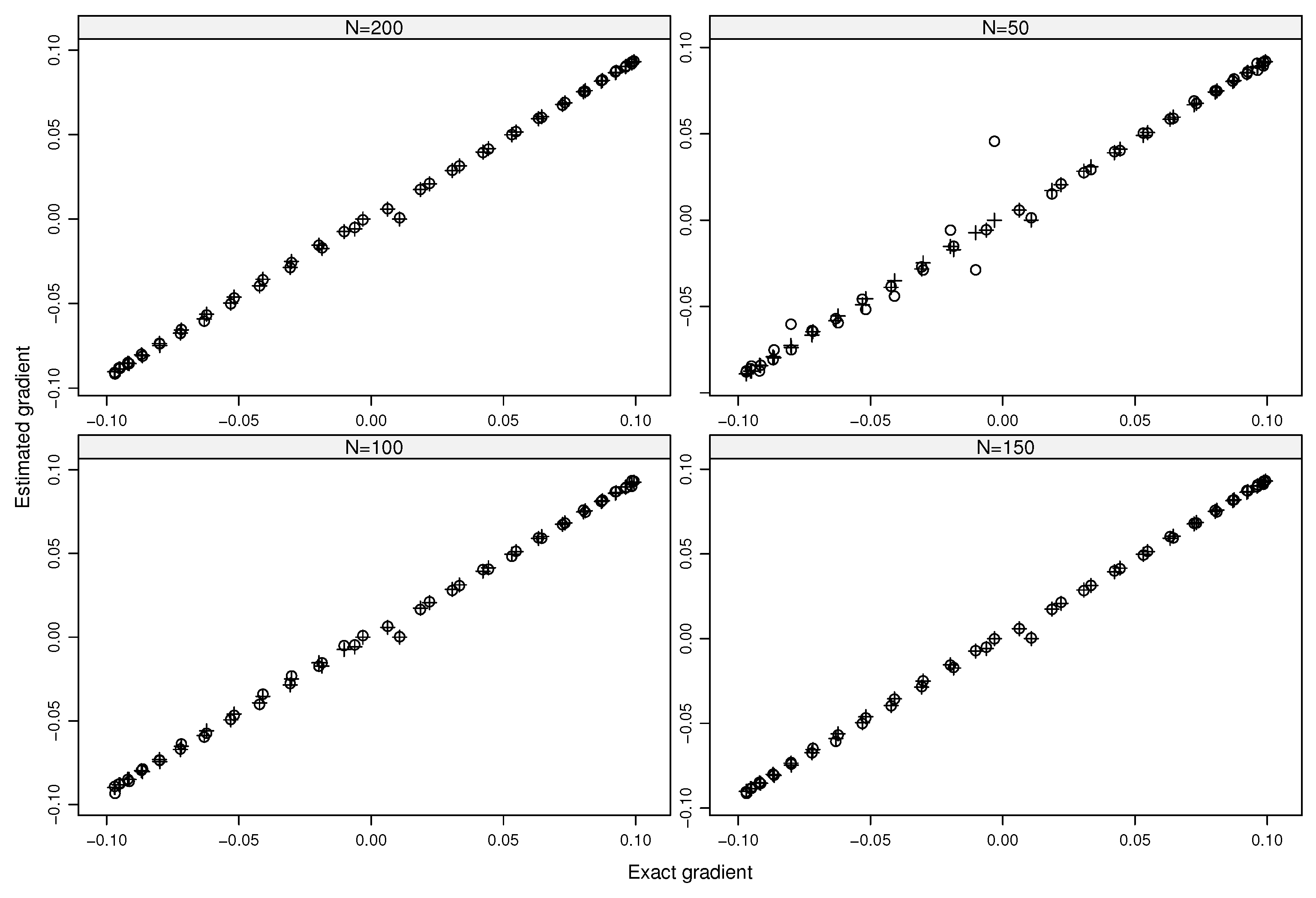

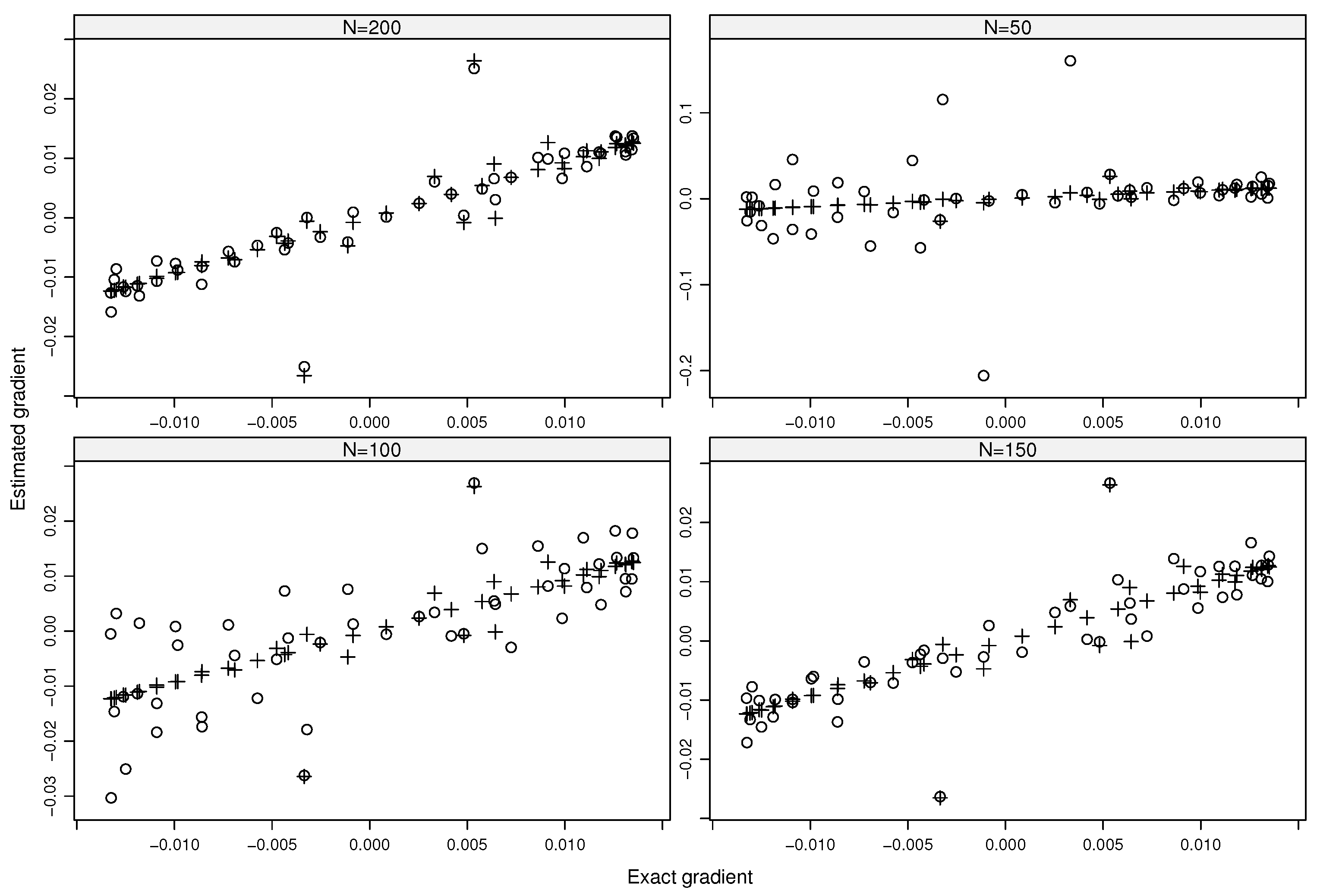

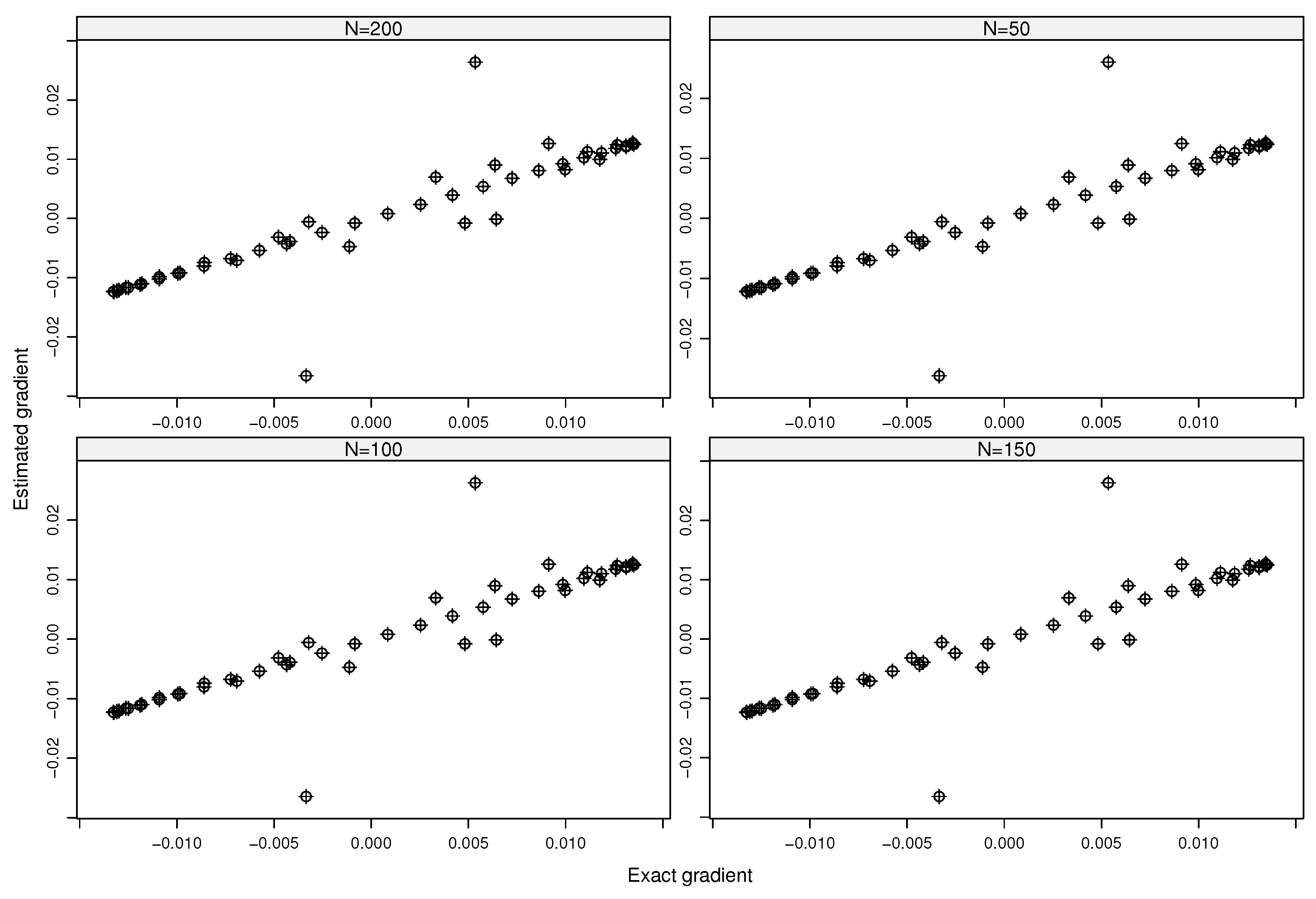

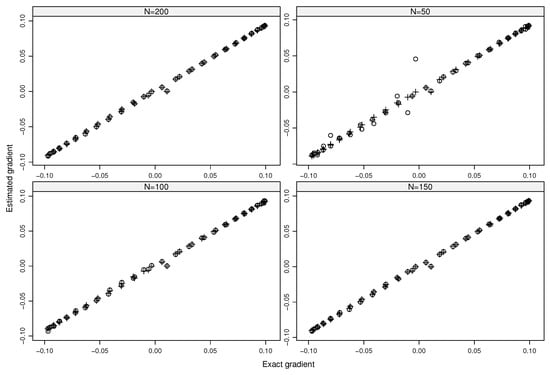

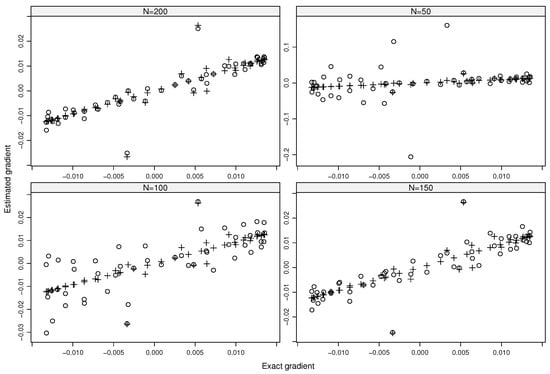

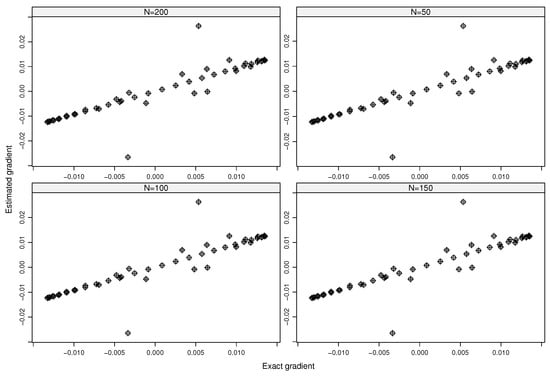

Figure 1 shows the comparisons between the estimated and the exact values of the formal gradient (i.e., ) for . Likewise, Figure 2 and Figure 3 depict the dependent gradient and its estimation. The estimates of both gradients are in line with the exact values using only (respectively, ) model evaluations when and (respectively, and or and ). Increasing the values of L and N gives the same quasiperfect results for both the formal and dependent gradients (see Figure 3).

Figure 1.

Exact gradient versus estimated gradients using (∘) and (+) of the QoI by considering the inputs as independent (formal gradients).

Figure 2.

Exact gradient versus estimated gradients using (∘) and (+) of the QoI by considering the autocorrelations among the inputs (dependent gradients).

Figure 3.

Exact gradient versus estimated gradients using (∘) and (+) of the QoI by considering the autocorrelations among the inputs (dependent gradients).

6. Conclusions

In this paper, we have proposed new, simple, and generic approximations of the gradients of functions with nonindependent input variables by means of independent, central, and symmetric variables and a set of constraints. It turns out that, by properly choosing the set of independent, central, and symmetric variables, the biases of our approximations for a wide class of functions, such as 2-smooth functions, do not suffer from the curse of dimensionality. For functions including only independent input variables, a theoretical comparison has shown that the upper bounds of the bias of the formal gradient derived in this paper improve on the best known results.

For computing the dependent gradient of the function of interest, we have provided estimators of such a gradient by making use of evaluations of that function at randomized points. Such estimators reach the optimal (mean squared error) rates of convergence (i.e., $\mathcal{O}(N^{-1})$) for a wide class of functions. Numerical comparisons using a test case and simulations based on a PDE model with given autocorrelations among the initial conditions have shown the efficiency of our approach, even when constraints were used. Our approach is therefore flexible, thanks to L and the fact that the gradient can, in general, be computed for any value of the sample size N.

While the proposed estimators reach the parametric rate of convergence, note that the second-order moments of such estimators depend on . An attempt to reach a dimension-free rate of convergence requires working in rather than when . In the future, it is worth investigating the derivation of the optimal rates of convergence that are dimension-free or (at least) are linear with respect to d by considering constraints. Also, combining such a promising approach with a transformation of the original space might be helpful for reducing the number of model evaluations in higher dimensions.

Funding

This research received no external funding.

Data Availability Statement

Data are contained within the article.

Acknowledgments

We would like to thank the reviewers for their comments that have helped to improve our manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Proof of Theorem 1

As , let and . Multiplying the Taylor expansion of about , that is,

by and the constant , as well as taking the sum over , we can see that the expectation becomes

Firstly, for a given and by independence, we can see that

if ; thus, for any , with , which implies that . Thus, one obtains when , and the fact that ; ; and . At this point, by taking and setting and , this results in the approximation of of order , because when , .
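For readers who want the mechanism behind this step, here is a generic version of the expansion in our own notation (not a reconstruction of the missing display), assuming a scalar bandwidth h and a random vector V with independent components that are symmetric about zero, with $\mathbb{E}[V] = 0$ and $\mathbb{E}[VV^\top] = I_d$, and assuming f is smooth enough for the formal series to make sense (for finitely smooth f, the series is truncated with a remainder). Since all odd-order moments of V vanish, the terms of even order in the Taylor expansion disappear, and only odd powers of the nodes $\beta_\ell$ survive:

```latex
\frac{1}{h}\,\mathbb{E}\!\left[\sum_{\ell=1}^{L} C_\ell\, f(\mathbf{x}+\beta_\ell h\mathbf{V})\,\mathbf{V}\right]
= \Big(\sum_{\ell=1}^{L} C_\ell\beta_\ell\Big)\nabla f(\mathbf{x})
+ \sum_{k\ge 1}\frac{h^{2k}}{(2k+1)!}\Big(\sum_{\ell=1}^{L} C_\ell\beta_\ell^{2k+1}\Big)
\mathbb{E}\!\left[\mathbf{V}\,\big(\mathbf{V}^{\top}\nabla\big)^{2k+1} f(\mathbf{x})\right]
```

Hence $\sum_\ell C_\ell \beta_\ell = 1$ yields a bias of order $h^2$, and each additional constraint $\sum_\ell C_\ell \beta_\ell^{2k+1} = 0$ removes the $h^{2k}$ term.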

Secondly, using the constraints , we eliminate some higher-order terms so as to reach the order . Using the other constraints and bearing in mind Equation (2), we complete the proof.

Appendix B. Proof of Corollary 1

For ; and , consider . As , we can write

with the remainder term

Denote , and remark that . Using Theorem 1, we can see that the absolute value of the bias, that is,

is given by

using the expansion of the product between matrices.

Using the same reasoning and taking the Euclidean norm, we obtain

The results hold using .

Appendix C. Proof of Theorem 2

Firstly, remark that is given by

Since the bias has been derived in the previous corollaries, we now focus on the second-order moment.

Secondly, since implies that , we have Also, since , we then have

which leads to and

Thirdly, using (3), we can see that . Bearing in mind the definition of the Euclidean norm and the variance, the centered second-order moment, that is, is given by

when bearing in mind the Hölder inequality. The results hold using and the fact that when , , and .

References

- Rosenblatt, M. Remarks on a Multivariate Transformation. Ann. Math. Statist. 1952, 23, 470–472.

- Nataf, A. Détermination des distributions dont les marges sont données. Comptes Rendus L’Académie Des Sci. 1962, 225, 42–43.

- Joe, H. Dependence Modeling with Copulas; Chapman & Hall/CRC: London, UK, 2014.

- McNeil, A.J.; Frey, R.; Embrechts, P. Quantitative Risk Management; Princeton University Press: Princeton, NJ, USA; Oxford, UK, 2015.

- Navarro, J.; Ruiz, J.M.; Aguila, Y.D. Multivariate weighted distributions: A review and some extensions. Statistics 2006, 40, 51–64.

- Sklar, A. Fonctions de Répartition à n Dimensions et Leurs Marges. Publ. l’Institut Stat. L’Université Paris 1959, 8, 229–231.

- Durante, F.; Ignazzi, C.; Jaworski, P. On the class of truncation invariant bivariate copulas under constraints. J. Math. Anal. Appl. 2022, 509, 125898.

- Skorohod, A.V. On a representation of random variables. Theory Probab. Appl. 1976, 21, 645–648.

- Lamboni, M.; Kucherenko, S. Multivariate sensitivity analysis and derivative-based global sensitivity measures with dependent variables. Reliab. Eng. Syst. Saf. 2021, 212, 107519.

- Lamboni, M. Efficient dependency models: Simulating dependent random variables. Math. Comput. Simul. 2022, 200, 199–217.

- Lamboni, M. On exact distribution for multivariate weighted distributions and classification. Methodol. Comput. Appl. Probab. 2023, 25, 41.

- Lamboni, M. Measuring inputs-outputs association for time-dependent hazard models under safety objectives using kernels. Int. J. Uncertain. Quantif. 2024, 1–17.

- Kucherenko, S.; Klymenko, O.; Shah, N. Sobol’ indices for problems defined in non-rectangular domains. Reliab. Eng. Syst. Saf. 2017, 167, 218–231.

- Lamboni, M. On dependency models and dependent generalized sensitivity indices. arXiv 2021, arXiv:2104.12938.

- Lamboni, M. Derivative Formulas and Gradient of Functions with Non-Independent Variables. Axioms 2023, 12, 845.

- Nemirovsky, A.; Yudin, D. Problem Complexity and Method Efficiency in Optimization; Wiley & Sons: New York, NY, USA, 1983; p. 404.

- Patelli, E.; Pradlwarter, H. Monte Carlo gradient estimation in high dimensions. Int. J. Numer. Methods Eng. 2010, 81, 172–188.

- Agarwal, A.; Dekel, O.; Xiao, L. Optimal Algorithms for Online Convex Optimization with Multi-Point Bandit Feedback. In Proceedings of the 23rd Conference on Learning Theory, COLT 2010, Haifa, Israel, 27–29 June 2010; pp. 28–40.

- Bach, F.; Perchet, V. Highly-Smooth Zero-th Order Online Optimization. In Proceedings of the 29th Annual Conference on Learning Theory, New York, NY, USA, 23–26 June 2016; Volume 49, pp. 257–283.

- Akhavan, A.; Pontil, M.; Tsybakov, A.B. Exploiting Higher Order Smoothness in Derivative-Free Optimization and Continuous Bandits. Red Hook, NY, USA, 2020. NIPS’20. Available online: https://arxiv.org/abs/2006.07862 (accessed on 18 June 2024).

- Sobol, I.M.; Kucherenko, S. Derivative based global sensitivity measures and the link with global sensitivity indices. Math. Comput. Simul. 2009, 79, 3009–3017.

- Kucherenko, S.; Rodriguez-Fernandez, M.; Pantelides, C.; Shah, N. Monte Carlo evaluation of derivative-based global sensitivity measures. Reliab. Eng. Syst. Saf. 2009, 94, 1135–1148.

- Lamboni, M.; Iooss, B.; Popelin, A.L.; Gamboa, F. Derivative-based global sensitivity measures: General links with Sobol’ indices and numerical tests. Math. Comput. Simul. 2013, 87, 45–54.

- Roustant, O.; Fruth, J.; Iooss, B.; Kuhnt, S. Crossed-derivative based sensitivity measures for interaction screening. Math. Comput. Simul. 2014, 105, 105–118.

- Fruth, J.; Roustant, O.; Kuhnt, S. Total interaction index: A variance-based sensitivity index for second-order interaction screening. J. Stat. Plan. Inference 2014, 147, 212–223.

- Lamboni, M. Derivative-based generalized sensitivity indices and Sobol’ indices. Math. Comput. Simul. 2020, 170, 236–256.

- Lamboni, M. Derivative-based integral equalities and inequality: A proxy-measure for sensitivity analysis. Math. Comput. Simul. 2021, 179, 137–161.

- Lamboni, M. Weak derivative-based expansion of functions: ANOVA and some inequalities. Math. Comput. Simul. 2022, 194, 691–718.

- Bobkov, S. Isoperimetric and Analytic Inequalities for Log-Concave Probability Measures. Ann. Probab. 1999, 27, 1903–1921.

- Roustant, O.; Barthe, F.; Iooss, B. Poincaré inequalities on intervals-application to sensitivity analysis. Electron. J. Statist. 2017, 11, 3081–3119.

- Le Dimet, F.X.; Talagrand, O. Variational algorithms for analysis and assimilation of meteorological observations: Theoretical aspects. Tellus A Dyn. Meteorol. Oceanogr. 1986, 38, 97–110.

- Le Dimet, F.X.; Ngodock, H.E.; Luong, B.; Verron, J. Sensitivity analysis in variational data assimilation. J.-Meteorol. Soc. Jpn. 1997, 75, 245–255.

- Cacuci, D.G. Sensitivity and Uncertainty Analysis—Theory; Chapman & Hall/CRC: Boca Raton, FL, USA, 2005.

- Gunzburger, M.D. Perspectives in Flow Control and Optimization; SIAM: Philadelphia, PA, USA, 2003.

- Borzi, A.; Schulz, V. Computational Optimization of Systems Governed by Partial Differential Equations; SIAM: Philadelphia, PA, USA, 2012.

- Ghanem, R.; Higdon, D.; Owhadi, H. Handbook of Uncertainty Quantification; Springer International Publishing: Berlin/Heidelberg, Germany, 2017.

- Guidotti, E. Calculus: High-Dimensional Numerical and Symbolic Calculus in R. J. Stat. Softw. 2022, 104, 1–37.

- Ancell, B.; Hakim, G.J. Comparing Adjoint- and Ensemble-Sensitivity Analysis with Applications to Observation Targeting. Mon. Weather Rev. 2007, 135, 4117–4134.

- Pradlwarter, H. Relative importance of uncertain structural parameters. Part I: Algorithm. Comput. Mech. 2007, 40, 627–635.

- Polyak, B.; Tsybakov, A. Optimal accuracy orders of stochastic approximation algorithms. Probl. Peredachi Inf. 1990, 26, 45–53.

- Zemanian, A. Distribution Theory and Transform Analysis: An Introduction to Generalized Functions, with Applications; Dover Books on Advanced Mathematics; Dover Publications: Mineola, NY, USA, 1987.

- Strichartz, R. A Guide to Distribution Theory and Fourier Transforms; Studies in Advanced Mathematics; CRC Press: Boca Raton, FL, USA, 1994.

- Lamboni, M. Kernel-based Measures of Association Between Inputs and Outputs Using ANOVA. Sankhya A 2024.

- Gilbert, P.; Varadhan, R. R-Package numDeriv: Accurate Numerical Derivatives; CRAN Repository. 2019. Available online: http://optimizer.r-forge.r-project.org/ (accessed on 18 June 2024).

- Soetaert, K.; Petzoldt, T.; Setzer, R.W. Solving Differential Equations in R: Package deSolve. J. Stat. Softw. 2010, 33, 1–25.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).