Using Artificial Neural Networks to Solve the Gross–Pitaevskii Equation

Abstract

1. Introduction

2. The Proposed Method

2.1. The GPE Equation

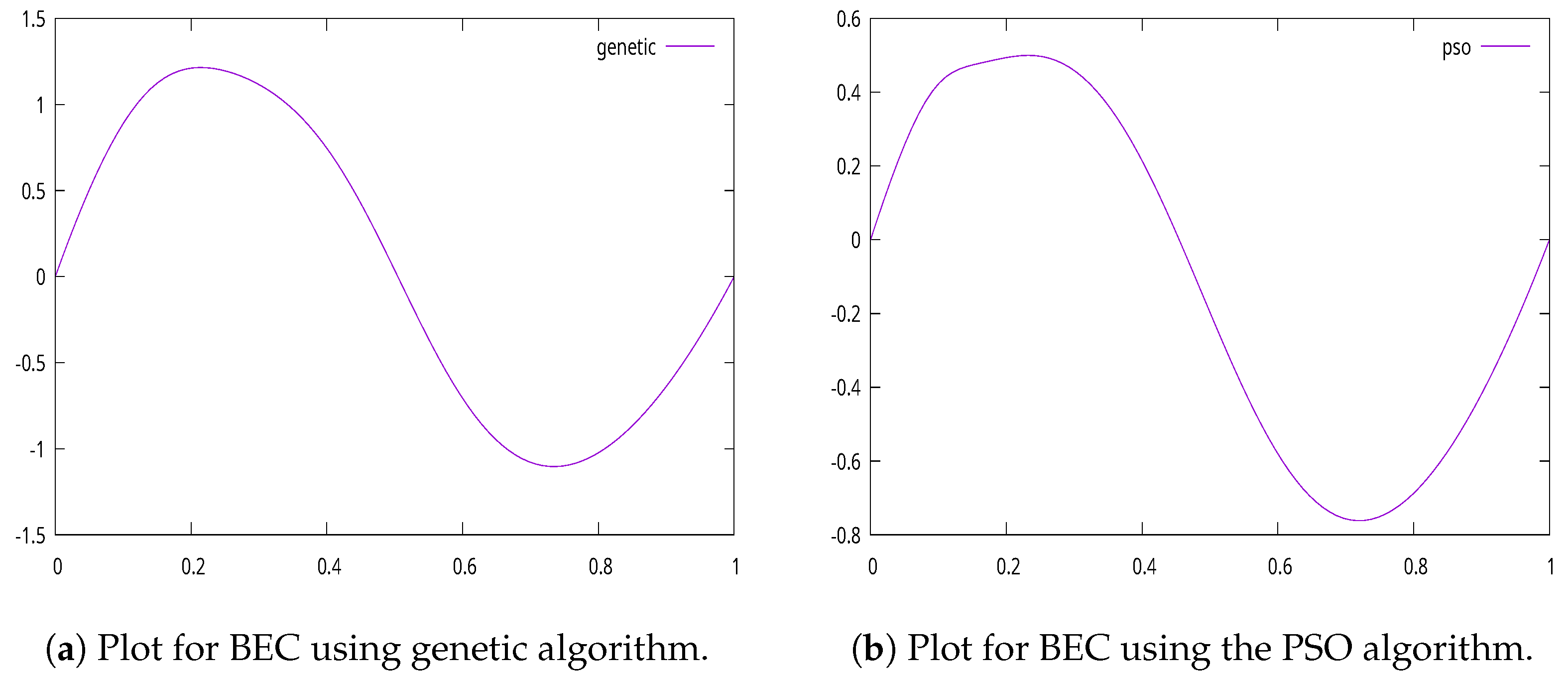

2.1.1. Bose–Einstein Condensates (BEC) in a Box

2.1.2. Gravitational Trap

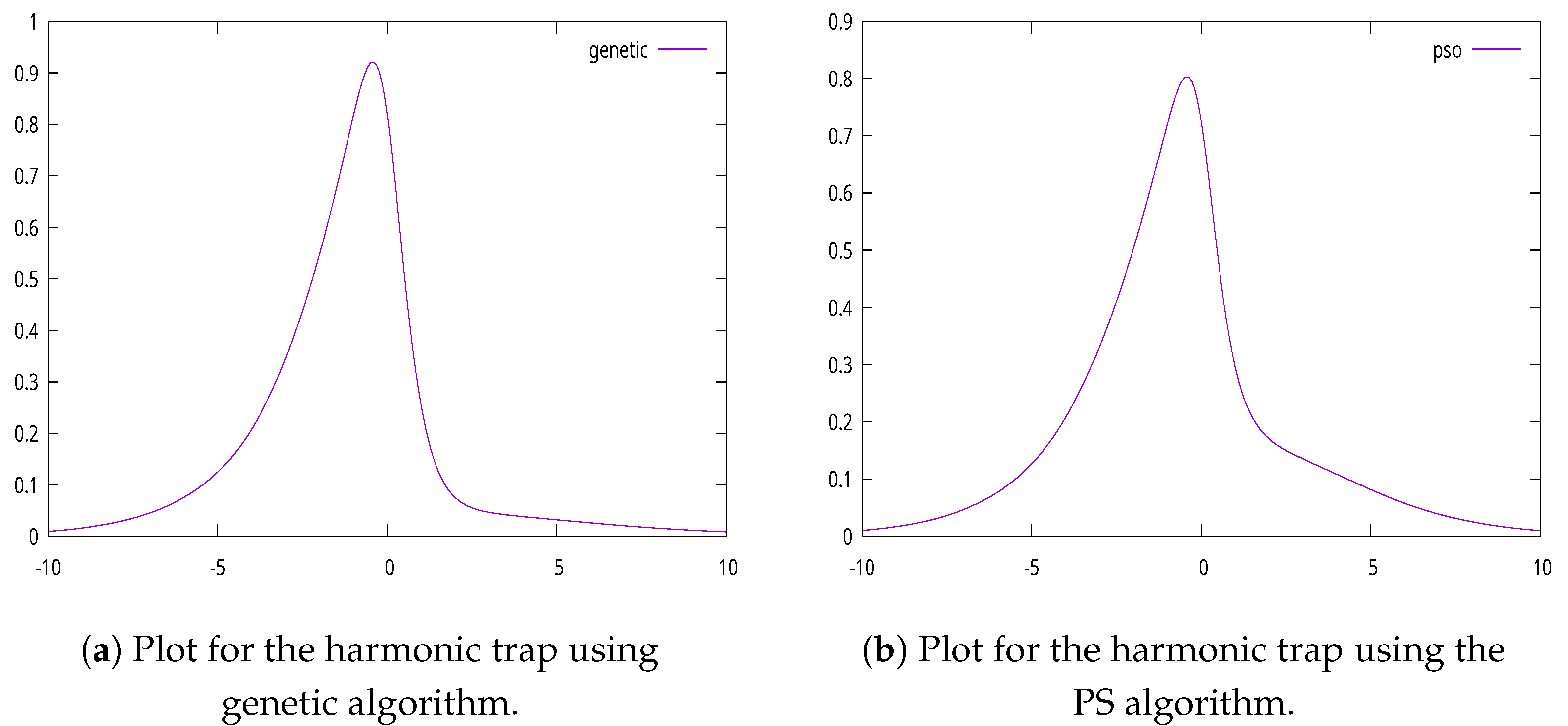

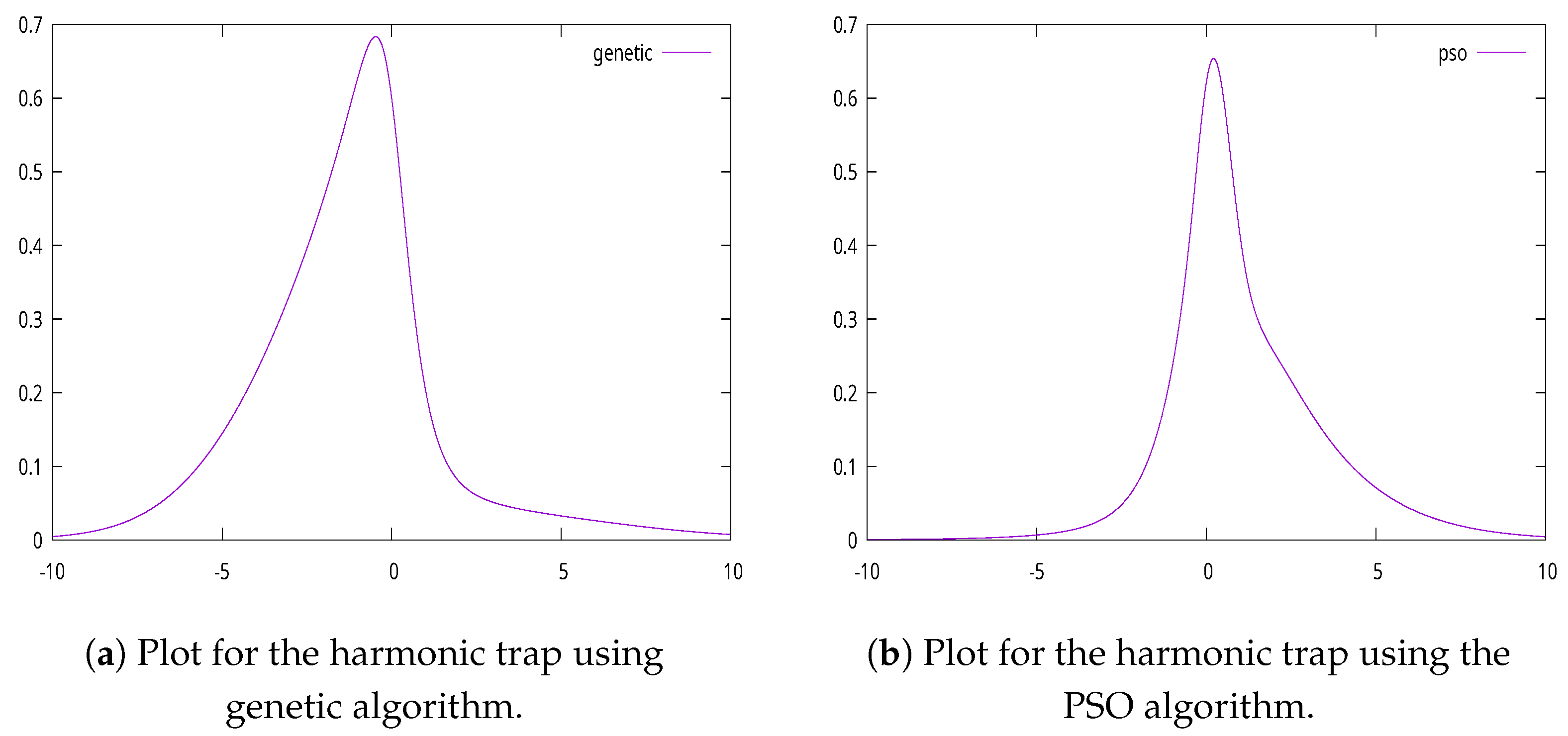

2.1.3. Harmonic Trap

2.2. The Used Model

2.3. The Used Genetic Algorithm

- Initialization Step

  - (a) Define the number of chromosomes and the maximum number of allowed generations.

  - (b) Set K as the number of processing nodes (weights) for the artificial neural network.

  - (c) Randomly produce the initial chromosomes. Every chromosome is considered a potential set of parameters for the artificial neural network.

  - (d) Set the selection rate and the mutation rate, each within its admissible range.

  - (e) Set iter = 0 as the generation number.

- Fitness calculation

  - (a) For every chromosome in the population, do

    - Create the neural network for the chromosome.

    - Create the model for the chromosome using Equation (11).

    - Calculate the fitness of the chromosome.

  - (b) EndFor

- Genetic operations

  - (a) Selection procedure. Initially, the chromosomes are sorted according to their fitness. The best of them are copied without changes to the next generation, and the remaining ones are substituted by chromosomes that will be produced in the crossover procedure.

  - (b) Crossover procedure. During this step, for every pair of offspring to be produced, two chromosomes denoted as z and w are selected from the current population using tournament selection. The new chromosomes are constructed from z and w with the help of a randomly drawn number, following the scheme of [64].

  - (c) Mutation procedure. In this procedure, a random number r is selected for each element j of every chromosome. If r does not exceed the mutation rate, then the corresponding element is changed randomly using the scheme of [64], in which t is a random number that accepts the values 0 or 1 and the parameter b controls the magnitude of change in the chromosome.

- Termination Check

  - (a) Set iter = iter + 1.

  - (b) A termination rule that was initially introduced in the work of Tsoulos [65] is used here. At each iteration iter, the algorithm calculates the variance of the best fitness value found up to that iteration. This variance keeps dropping, since the algorithm either discovers lower values or the best value remains constant. The variance at the last iteration where a lower value was discovered is recorded; if the current variance falls below half of this record, then there is probably no new minimum for the algorithm to find and it should terminate. The rule therefore compares the current variance with the one recorded at the last generation where a new lower value for the best fitness was located.

  - (c) If the termination rule does not hold, then go to the Fitness calculation step (step 2).

- Application of local optimization

  - (a) Obtain the chromosome with the lowest fitness value in the population.

  - (b) Apply a local search procedure to this chromosome. In the current work, a Broyden–Fletcher–Goldfarb–Shanno (BFGS) variant of Powell [66] was used. Local minimization is important in order to reliably reach a true local minimum starting from the point delivered by the global optimization method. In optimization theory, the region of attraction of a local minimum is defined as the set of points from which the used local optimization method converges to that minimum. Global optimization methods, in most cases, identify points that are inside the region of attraction of a local minimum but not necessarily the actual local minimum. For this reason, it is considered necessary to combine the global optimization method with local optimization techniques. Moreover, according to the relevant literature, the error function of artificial neural networks may contain a very large number of local minima, making this combination important in the process of finding an optimal set of parameters for the artificial neural network.
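The steps of the genetic algorithm can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions, not the authors' implementation: the `fitness` function is a hypothetical stand-in (a sum of squares) for the fitness built from Equation (11); the crossover weight range [-0.5, 1.5], the uniform-redraw mutation, the elite size `(1 - p_s) * n_c`, and all names (`n_c`, `n_g`, `p_s`, `p_m`) are assumptions introduced here. The final local search step is omitted.

```python
import random
import statistics


def fitness(x):
    # Hypothetical stand-in objective; the paper's fitness comes from the
    # neural-network model of Equation (11), which is not reproduced here.
    return sum(v * v for v in x)


def tournament(pop, fit, rng, size=4):
    # Pick the fittest among `size` randomly drawn chromosomes.
    best = min(rng.sample(range(len(pop)), size), key=lambda i: fit[i])
    return pop[best]


def crossover(z, w, rng):
    # Assumed blend crossover: every gene mixes the two parents with a
    # random weight a drawn from [-0.5, 1.5].
    c1, c2 = [], []
    for zi, wi in zip(z, w):
        a = rng.uniform(-0.5, 1.5)
        c1.append(a * zi + (1 - a) * wi)
        c2.append(a * wi + (1 - a) * zi)
    return c1, c2


def mutate(x, rng, p_m, lo, hi):
    # Simplified mutation (assumption): redraw a gene with probability p_m.
    return [rng.uniform(lo, hi) if rng.random() <= p_m else v for v in x]


def variance_rule(best_history, k_last):
    # Stop when the variance of the best-so-far values has fallen to half
    # of the variance recorded at the last improvement (index k_last).
    recorded = statistics.pvariance(best_history[: k_last + 1])
    current = statistics.pvariance(best_history)
    return recorded > 0 and current <= recorded / 2


def genetic_algorithm(dim=4, n_c=40, n_g=200, p_s=0.9, p_m=0.05,
                      lo=-10.0, hi=10.0, seed=1):
    rng = random.Random(seed)
    pop = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_c)]
    best_history, k_last = [], 0
    for it in range(n_g):
        # Fitness calculation and sorting (best chromosome first).
        fit = [fitness(c) for c in pop]
        order = sorted(range(n_c), key=lambda i: fit[i])
        pop = [pop[i] for i in order]
        fit = [fit[i] for i in order]
        if not best_history or fit[0] < best_history[-1]:
            k_last = it
        best_history.append(fit[0])
        if variance_rule(best_history, k_last):
            break
        # Selection: keep the elite, replace the rest with mutated offspring.
        n_keep = max(1, int(round((1 - p_s) * n_c)))
        children = []
        while len(children) < n_c - n_keep:
            c1, c2 = crossover(tournament(pop, fit, rng),
                               tournament(pop, fit, rng), rng)
            children += [c1, c2]
        children = children[: n_c - n_keep]
        pop = pop[:n_keep] + [mutate(c, rng, p_m, lo, hi) for c in children]
    return pop[0], best_history
```

Calling `genetic_algorithm()` returns the best chromosome and the history of best fitness values. Because the elite is copied unchanged, that history never increases. In this sketch the variance rule is skipped until a first improvement has produced a nonzero recorded variance, which is a design choice made here to avoid stopping at the very first generation.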

2.4. The Used PSO Variant

- Initialization

  - (a) Initialize the iteration counter.

  - (b) Define the number of particles and the maximum number of allowed generations.

  - (c) Set the local search rate.

  - (d) Set the remaining parameters of the method within their allowed range.

  - (e) Initialize the m particles. Each particle represents a set of parameters for the artificial neural network.

  - (f) Randomly initialize the corresponding velocities.

  - (g) For every particle, initialize the vector that denotes its best-located position.

  - (h) Set the best-located position of the swarm according to the fitness values of the particles.

- Check for termination. The same termination scheme as in the genetic algorithm is used here.

- For every particle, Do

  - (a) Compute the velocity of the particle from its current velocity, its best-located position, and the best-located position of the swarm.

    - The random parameters of the update are numbers selected in [0,1].

    - The inertia value is computed from a random number r [77]. This inertia calculation mechanism gives the particle swarm optimization method the ability to explore the search space of the objective function more efficiently and consequently to identify regions where the artificial neural network parameters solve the equation more effectively.

  - (b) Compute the new position of the particle.

  - (c) Draw a random number. If it does not exceed the local search rate, then apply to the particle the same local search optimization procedure used in the genetic algorithm.

  - (d) Create the neural network for the particle.

  - (e) Create the associated model for the particle using Equation (11).

  - (f) Calculate the corresponding fitness value of the particle using Equation (13).

  - (g) If the new fitness value improves on that of the best-located position of the particle, then update this best-located position.

- End For

- Update the best-located position of the swarm.

- Increase the iteration counter.

- Go to Step 2 to check for termination.
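The particle update loop can be illustrated with a short Python sketch. It is a minimal example under stated assumptions rather than the authors' code: the `fitness` function is a hypothetical stand-in (a sum of squares) for the fitness of Equation (13); the random inertia `0.5 + r / 2`, the values `c1 = c2 = 1.0`, the velocity range, and all variable names are assumptions introduced here. The periodic local search and the variance-based termination check are omitted, so the loop simply runs for a fixed number of generations.

```python
import random


def fitness(x):
    # Hypothetical stand-in objective; the paper evaluates the model of
    # Equation (11) through the fitness of Equation (13).
    return sum(v * v for v in x)


def pso(dim=4, m=30, n_g=200, c1=1.0, c2=1.0, lo=-10.0, hi=10.0, seed=1):
    rng = random.Random(seed)
    # Positions, velocities, and per-particle best positions.
    x = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(m)]
    u = [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(m)]
    p_b = [xi[:] for xi in x]
    f_b = [fitness(xi) for xi in x]
    g = min(range(m), key=lambda i: f_b[i])
    p_best, f_best = p_b[g][:], f_b[g]
    history = [f_best]
    for _ in range(n_g):
        for i in range(m):
            # Assumed random inertia in [0.5, 1), redrawn for every particle.
            omega = 0.5 + rng.random() / 2.0
            for j in range(dim):
                r1, r2 = rng.random(), rng.random()
                u[i][j] = (omega * u[i][j]
                           + r1 * c1 * (p_b[i][j] - x[i][j])
                           + r2 * c2 * (p_best[j] - x[i][j]))
                x[i][j] += u[i][j]
            f = fitness(x[i])
            if f <= f_b[i]:
                # The particle improved on its own best-located position.
                p_b[i], f_b[i] = x[i][:], f
        # Update the best-located position of the whole swarm.
        g = min(range(m), key=lambda i: f_b[i])
        if f_b[g] < f_best:
            p_best, f_best = p_b[g][:], f_b[g]
        history.append(f_best)
    return p_best, f_best, history
```

Calling `pso()` returns the best position, its fitness, and the history of the swarm's best fitness per generation. Drawing `r1` and `r2` separately for each component is one common choice; drawing them once per particle would be an equally valid reading of the description.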

3. Results

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Ueda, M. Fundamentals and New Frontiers of Bose–Einstein Condensation, 1st ed.; Wspc: Singapore, 2010. [Google Scholar]

- Rogel-Salazar, J. The Gross–Pitaevskii equation and Bose–Einstein condensates. Eur. J. Phys. 2013, 34, 247–257. [Google Scholar] [CrossRef]

- Gardiner, C.W.; Anglin, J.R.; Fudge, T.I.A. The stochastic Gross–Pitaevskii equation. J. Phys. B At. Mol. Opt. Phys. 2002, 35, 1555–1582. [Google Scholar] [CrossRef]

- Yukalov, V.I.; Yukalova, E.P.; Bagnato, V.S. Nonlinear coherent modes of trapped Bose–Einstein condensates. Phys. Rev. A 2002, 66, 043602. [Google Scholar] [CrossRef]

- Marojević, Z.; Göklü, E.; Lämmerzahl, C. Energy eigenfunctions of the 1D Gross–Pitaevskii equation. Comput. Phys. Commun. 2013, 184, 1920–1930. [Google Scholar] [CrossRef][Green Version]

- Pokatov, S.P.; Ivanova, T.Y.; Ivanov, D.A. Solution of inverse problem for Gross–Pitaevskii equation with artificial neural networks. Laser Phys. Lett. 2023, 20, 095501. [Google Scholar] [CrossRef]

- Zhong, M.; Gong, S.; Tian, S.-F.; Yan, Z. Data-driven rogue waves and parameters discovery in nearly integrable-symmetric Gross–Pitaevskii equations via PINNs deep learning. Physica D 2022, 439, 133430. [Google Scholar] [CrossRef]

- Holland, M.J.; Jin, D.S.; Chiofalo, M.L.; Cooper, J. Emergence of Interaction Effects in Bose–Einstein Condensation. Phys. Rev. Lett. 1997, 78, 3801–3805. [Google Scholar] [CrossRef]

- Cerimele, M.M.; Chiofalo, M.L.; Pistella, F.; Succi, S.; Tosi, M.P. Numerical solution of the Gross–Pitaevskii equation using an explicit finite-difference scheme: An application to trapped Bose–Einstein condensates. Phys. Rev. E 2000, 62, 1382–1389. [Google Scholar] [CrossRef]

- Zou, J.; Han, Y.; So, S.S. Overview of artificial neural networks. In Artificial Neural Networks: Methods and Applications; Springer: Berlin/Heidelberg, Germany, 2009; pp. 14–22. [Google Scholar]

- Wu, Y.C.; Feng, J.W. Development and application of artificial neural network. Wirel. Pers. Commun. 2018, 102, 1645–1656. [Google Scholar] [CrossRef]

- Baldi, P.; Cranmer, K.; Faucett, T.; Sadowski, P.; Whiteson, D. Parameterized neural networks for high-energy physics. Eur. Phys. J. C 2016, 76, 235. [Google Scholar] [CrossRef]

- Valdas, J.J.; Bonham-Carter, G. Time dependent neural network models for detecting changes of state in complex processes: Applications in earth sciences and astronomy. Neural Netw. 2006, 19, 196–207. [Google Scholar] [CrossRef] [PubMed]

- Carleo, G.; Troyer, M. Solving the quantum many-body problem with artificial neural networks. Science 2017, 355, 602–606. [Google Scholar] [CrossRef] [PubMed]

- Shirvany, Y.; Hayati, M.; Moradian, R. Multilayer perceptron neural networks with novel unsupervised training method for numerical solution of the partial differential equations. Appl. Soft Comput. 2009, 9, 20–29. [Google Scholar] [CrossRef]

- Malek, A.; Beidokhti, R.S. Numerical solution for high order differential equations using a hybrid neural network—Optimization method. Appl. Math. Comput. 2006, 183, 260–271. [Google Scholar] [CrossRef]

- Kaul, M.; Hill, R.L.; Walthall, C. Artificial neural networks for corn and soybean yield prediction. Agric. Syst. 2005, 85, 1–18. [Google Scholar]

- Dahikar, S.S.; Rode, S.V. Agricultural crop yield prediction using artificial neural network approach. Int. J. Innov. Res. Electr. Electron. Instrum. Control Eng. 2014, 2, 683–686. [Google Scholar]

- Behler, J. Neural network potential-energy surfaces in chemistry: A tool for large-scale simulations. Phys. Chem. Chem. Phys. 2011, 13, 17930–17955. [Google Scholar] [CrossRef]

- Manzhos, S.; Dawes, R.; Carrington, T. Neural network-based approaches for building high dimensional and quantum dynamics-friendly potential energy surfaces. Int. J. Quantum Chem. 2015, 115, 1012–1020. [Google Scholar] [CrossRef]

- Enke, D.; Thawornwong, S. The use of data mining and neural networks for forecasting stock market returns. Expert Syst. Appl. 2005, 29, 927–940. [Google Scholar] [CrossRef]

- Falat, L.; Pancikova, L. Quantitative Modelling in Economics with Advanced Artificial Neural Networks. Procedia Econ. Financ. 2015, 34, 194–201. [Google Scholar] [CrossRef]

- Angelini, E.; Di Tollo, G.; Roli, A. A neural network approach for credit risk evaluation. Q. Rev. Econ. Financ. 2008, 48, 733–755. [Google Scholar] [CrossRef]

- Moghaddam, A.H.; Moghaddam, M.H.; Esfandyari, M. Stock market index prediction using artificial neural network. J. Econ. Financ. Adm. Sci. 2016, 21, 89–93. [Google Scholar] [CrossRef]

- Amato, F.; López, A.; Peña-Méndez, E.M.; Vaňhara, P.; Hampl, A.; Havel, J. Artificial neural networks in medical diagnosis. J. Appl. Biomed. 2013, 11, 47–58. [Google Scholar] [CrossRef]

- Sidey-Gibbons, J.A.; Sidey-Gibbons, C.J. Machine learning in medicine: A practical introduction. BMC Med. Res. Methodol. 2019, 19, 64. [Google Scholar] [CrossRef]

- Tsoulos, I.; Gavrilis, D.; Glavas, E. Neural network construction and training using grammatical evolution. Neurocomputing 2008, 72, 269–277. [Google Scholar] [CrossRef]

- Lagaris, I.E.; Likas, A.; Fotiadis, D.I. Artificial neural networks for solving ordinary and partial differential equations. IEEE Trans. Neural Netw. 1998, 9, 987–1000. [Google Scholar] [CrossRef] [PubMed]

- Aarts, L.P.; van der Veer, P. Neural Network Method for Solving Partial Differential Equations. Neural Process. Lett. 2001, 14, 261–271. [Google Scholar] [CrossRef]

- Parisi, D.R.; Mariani, M.C.; Laborde, M.A. Solving differential equations with unsupervised neural networks. Chem. Eng. Process. Process Intensif. 2003, 42, 715–721. [Google Scholar] [CrossRef]

- Tsoulos, I.G.; Gavrilis, D.; Glavas, E. Solving differential equations with constructed neural networks. Neurocomputing 2009, 72, 2385–2391. [Google Scholar] [CrossRef]

- Ryan, C.; Collins, J.J.; O'Neill, M. Grammatical evolution: Evolving programs for an arbitrary language. In Proceedings of the Genetic Programming: First European Workshop, EuroGP’98, Paris, France, 14–15 April 1998; Proceedings 1. Springer: Berlin/Heidelberg, Germany, 1998; pp. 83–96. [Google Scholar]

- Kumar, M.; Yadav, N. Multilayer perceptrons and radial basis function neural network methods for the solution of differential equations: A survey. Comput. Math. Appl. 2011, 62, 3796–3811. [Google Scholar] [CrossRef]

- Zhang, T.; Li, Y. Global exponential stability of discrete-time almost automorphic Caputo–Fabrizio BAM fuzzy neural networks via exponential Euler technique. Knowl.-Based Syst. 2022, 246, 108675. [Google Scholar] [CrossRef]

- Lagaris, I.E.; Likas, A.; Fotiadis, D.I. Artificial neural network methods in quantum mechanics. Comput. Phys. Commun. 1997, 104, 1–14. [Google Scholar] [CrossRef]

- Kolanoski, H. Application of Artificial Neural Networks in Particle Physics. In Artificial Neural Networks—ICANN 96; Von der Malsburg, C., von Seelen, W., Vorbrüggen, J.C., Sendhoff, B., Eds.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 1996; Volume 1112. [Google Scholar]

- Cai, Z.; Liu, J. Approximating quantum many-body wave functions using artificial neural networks. Phys. Rev. B 2018, 97, 035116. [Google Scholar] [CrossRef]

- Cai, S.; Wang, Z.; Wang, S.; Perdikaris, P.; Karniadakis, G.E. Physics-Informed Neural Networks for Heat Transfer Problems. J. Heat Transf. 2021, 143, 060801. [Google Scholar] [CrossRef]

- Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning representations by back-propagating errors. Nature 1986, 323, 533–536. [Google Scholar] [CrossRef]

- Vora, K.; Yagnik, S. A survey on backpropagation algorithms for feedforward neural networks. Int. J. Eng. Dev. Res. 2014, 1, 193–197. [Google Scholar]

- Riedmiller, M.; Braun, H. A Direct Adaptive Method for Faster Backpropagation Learning: The RPROP algorithm. In Proceedings of the IEEE International Conference on Neural Networks, San Francisco, CA, USA, 28 March–1 April 1993; pp. 586–591. [Google Scholar]

- Hermanto, R.P.S.; Nugroho, A. Waiting-time estimation in bank customer queues using RPROP neural networks. Procedia Comput. Sci. 2018, 135, 35–42. [Google Scholar] [CrossRef]

- Kingma, D.P.; Ba, J.L. ADAM: A method for stochastic optimization. In Proceedings of the 3rd International Conference on Learning Representations (ICLR 2015), San Diego, CA, USA, 7–9 May 2015; pp. 1–15. [Google Scholar]

- Törn, A.; Ali, M.M.; Viitanen, S. Stochastic global optimization: Problem classes and solution techniques. J. Glob. Optim. 1999, 14, 437–447. [Google Scholar] [CrossRef]

- Floudas, C.A.; Pardalos, P.M. (Eds.) State of the Art in Global Optimization: Computational Methods and Applications; Springer: New York, NY, USA, 2013. [Google Scholar]

- Liu, Z.; Liu, A.; Wang, C.; Niu, Z. Evolving neural network using real coded genetic algorithm (GA) for multispectral image classification. Future Gener. Comput. Syst. 2004, 20, 1119–1129. [Google Scholar] [CrossRef]

- Carvalho, M.; Ludermir, T.B. Particle swarm optimization of neural network architectures and weights. In Proceedings of the 7th International Conference on Hybrid Intelligent Systems (HIS 2007), Kaiserslautern, Germany, 17–19 September 2007; pp. 336–339. [Google Scholar]

- Kiranyaz, S.; Ince, T.; Yildirim, A.; Gabbouj, M. Evolutionary artificial neural networks by multi-dimensional particle swarm optimization. Neural Netw. 2009, 22, 1448–1462. [Google Scholar] [CrossRef]

- Ilonen, J.; Kamarainen, J.K.; Lampinen, J. Differential evolution training algorithm for feed-forward neural networks. Neural Process. Lett. 2003, 17, 93–105. [Google Scholar] [CrossRef]

- Slowik, A.; Bialko, M. Training of artificial neural networks using differential evolution algorithm. In Proceedings of the 2008 Conference on Human System Interactions, Krakow, Poland, 25–27 May 2008; pp. 60–65. [Google Scholar]

- Salama, K.M.; Abdelbar, A.M. Learning neural network structures with ant colony algorithms. Swarm Intell. 2015, 9, 229–265. [Google Scholar] [CrossRef]

- Mirjalili, S. How effective is the Grey Wolf optimizer in training multi-layer perceptrons. Appl. Intell. 2015, 43, 150–161. [Google Scholar] [CrossRef]

- Aljarah, I.; Faris, H.; Mirjalili, S. Optimizing connection weights in neural networks using the whale optimization algorithm. Soft Comput. 2018, 22, 1–15. [Google Scholar] [CrossRef]

- Holland, J.H. Genetic algorithms. Sci. Am. 1992, 267, 66–73. [Google Scholar] [CrossRef]

- Stender, J. Parallel Genetic Algorithms: Theory & Applications; IOS Press: Amsterdam, The Netherlands, 1993. [Google Scholar]

- Haupt, R.L.; Werner, D.H. Genetic Algorithms in Electromagnetics; John Wiley & Sons: Hoboken, NJ, USA, 2007. [Google Scholar]

- Grady, S.A.; Hussaini, M.Y.; Abdullah, M.M. Placement of wind turbines using genetic algorithms. Renew. Energy 2005, 30, 259–270. [Google Scholar] [CrossRef]

- Oh, I.S.; Lee, J.S.; Moon, B.R. Hybrid genetic algorithms for feature selection. IEEE Trans. Pattern Anal. Mach. Intell. 2004, 26, 1424–1437. [Google Scholar]

- Bakirtzis, A.G.; Biskas, P.N.; Zoumas, C.E.; Petridis, V. Optimal power flow by enhanced genetic algorithm. IEEE Trans. Power Syst. 2002, 17, 229–236. [Google Scholar] [CrossRef]

- Zegordi, S.H.; Nia, M.B. A multi-population genetic algorithm for transportation scheduling. Transp. Res. Part E Logist. Transp. Rev. 2009, 45, 946–959. [Google Scholar] [CrossRef]

- Leung, F.H.F.; Lam, H.K.; Ling, S.H.; Tam, P.K.S. Tuning of the structure and parameters of a neural network using an improved genetic algorithm. IEEE Trans. Neural Netw. 2003, 14, 79–88. [Google Scholar] [CrossRef] [PubMed]

- Sedki, A.; Ouazar, D.; El Mazoudi, E. Evolving neural network using real coded genetic algorithm for daily rainfall—Runoff forecasting. Expert Syst. Appl. 2009, 36, 4523–4527. [Google Scholar] [CrossRef]

- Majdi, A.; Beiki, M. Evolving neural network using a genetic algorithm for predicting the deformation modulus of rock masses. Int. J. Rock Mech. Min. Sci. 2010, 47, 246–253. [Google Scholar] [CrossRef]

- Kaelo, P.; Ali, M.M. Integrated crossover rules in real coded genetic algorithms. Eur. J. Oper. Res. 2007, 176, 60–76. [Google Scholar] [CrossRef]

- Tsoulos, I.G. Modifications of real code genetic algorithm for global optimization. Appl. Math. Comput. 2008, 203, 598–607. [Google Scholar] [CrossRef]

- Powell, M.J.D. A Tolerant Algorithm for Linearly Constrained Optimization Calculations. Math. Program. 1989, 45, 547–566. [Google Scholar] [CrossRef]

- Poli, R.; Kennedy, J.; Blackwell, T. Particle swarm optimization: An overview. Swarm Intell. 2007, 1, 33–57. [Google Scholar] [CrossRef]

- Robinson, J.; Rahmat-Samii, Y. Particle swarm optimization in electromagnetics. IEEE Trans. Antennas Propag. 2004, 52, 397–407. [Google Scholar] [CrossRef]

- Pace, F.; Santilano, A.; Godio, A. A review of geophysical modeling based on particle swarm optimization. Surv. Geophys. 2021, 42, 505–549. [Google Scholar] [CrossRef] [PubMed]

- Call, S.T.; Zubarev, D.Y.; Boldyrev, A.I. Global minimum structure searches via particle swarm optimization. J. Comput. Chem. 2007, 28, 1177–1186. [Google Scholar] [CrossRef]

- Halter, W.; Mostaghim, S. Bilevel optimization of multi-component chemical systems using particle swarm optimization. In Proceedings of the 2006 IEEE International Conference on Evolutionary Computation, Vancouver, BC, Canada, 16–21 July 2006; pp. 1240–1247. [Google Scholar]

- Chakraborty, S.; Samanta, S.; Biswas, D.; Dey, N.; Chaudhuri, S.S. Particle swarm optimization based parameter optimization technique in medical information hiding. In Proceedings of the 2013 IEEE International Conference on Computational Intelligence and Computing Research, Enathi, India, 26–28 December 2013; pp. 1–6. [Google Scholar]

- Harb, H.M.; Desuky, A.S. Feature selection on classification of medical datasets based on particle swarm optimization. Int. J. Comput. Appl. 2014, 104, 5. [Google Scholar]

- Maschek, M.K. Particle Swarm Optimization in Agent-Based Economic Simulations of the Cournot Market Model. Intell. Syst. Account. Financ. Manag. 2015, 22, 133–152. [Google Scholar]

- Yu, J.; Xi, L.; Wang, S. An improved particle swarm optimization for evolving feedforward artificial neural networks. Neural Process. Lett. 2007, 26, 217–231. [Google Scholar] [CrossRef]

- Charilogis, V.; Tsoulos, I.G. Toward an Ideal Particle Swarm Optimizer for Multidimensional Functions. Information 2022, 13, 217. [Google Scholar] [CrossRef]

- Eberhart, R.C.; Shi, Y.H. Tracking and optimizing dynamic systems with particle swarms. In Proceedings of the Congress on Evolutionary Computation, Seoul, Republic of Korea, 27–30 May 2001. [Google Scholar]

- Park, J.; Sandberg, I.W. Universal Approximation Using Radial-Basis-Function Networks. Neural Comput. 1991, 3, 246–257. [Google Scholar] [CrossRef]

- Zhang, Y. An accurate and stable RBF method for solving partial differential equations. Appl. Math. Lett. 2019, 97, 93–98. [Google Scholar] [CrossRef]

- Gabriel, E.; Fagg, G.E.; Bosilca, G.; Angskun, T.; Dongarra, J.J.; Squyres, J.M.; Sahay, V.; Kambadur, P.; Barrett, B.; Lumsdaine, A.; et al. Open MPI: Goals, concept, and design of a next generation MPI implementation. In Proceedings of the Recent Advances in Parallel Virtual Machine and Message Passing Interface: 11th European PVM/MPI Users’ Group Meeting, Budapest, Hungary, 19–22 September 2004; Proceedings 11. Springer: Berlin/Heidelberg, Germany, 2004; pp. 97–104. [Google Scholar]

- Ayguadé, E.; Copty, N.; Duran, A.; Hoeflinger, J.; Lin, Y.; Massaioli, F.; Teruel, F.; Unnikrishnan, P.; Zhang, G. The design of OpenMP tasks. IEEE Trans. Parallel Distrib. Syst. 2008, 20, 404–418. [Google Scholar] [CrossRef]

| Parameter | Meaning | Value |
|---|---|---|
| m | Number of points used to divide the interval | 100 |
| | Number of chromosomes/particles | 500 |
| | Maximum number of allowed generations | 200 |
| | Selection rate | 0.90 |
| | Mutation rate | 0.05 |
| $p_l$ | Local search rate | 0.01 |

| Method | BEC1 | BEC2 |
|---|---|---|
| Adam | 77.93 | 12.24 |
| BFGS | 3.35 | 8.97 |
| Differential Evolution | 3.17 | 8.87 |
| Genetic | | |
| PSO | | |

| Chromosomes | Execution Time | Minimum Fitness |
|---|---|---|
| 100 | 102.64 | 0.113 |
| 200 | 187.40 | 0.0065 |
| 400 | 416.65 | 0.0057 |
| 500 | 830.81 | |
| 600 | 967.19 | |

| $p_l$ | Execution Time | Minimum Fitness |
|---|---|---|
| 0.001 | 61.54 | 0.0095 |
| 0.002 | 72.38 | 0.0073 |
| 0.005 | 255.342 | 0.0069 |
| 0.01 | 830.81 | |
| 0.02 | 1091.374 | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tsoulos, I.G.; Stavrou, V.N.; Tsalikakis, D. Using Artificial Neural Networks to Solve the Gross–Pitaevskii Equation. Axioms 2024, 13, 711. https://doi.org/10.3390/axioms13100711
