Abstract

Green matrices are interpreted as discrete versions of Green functions and are used when working with inhomogeneous linear systems of differential equations. This paper discusses accurate algebraic computations with these matrices. It uses a recent procedure that obtains an important factorization of them with high relative accuracy, and it also presents alternative accurate methods. An algorithm to compute any minor of a Green matrix with high relative accuracy is also presented. The bidiagonal decomposition of the Hadamard product of Green matrices is obtained. Illustrative numerical examples are included.

MSC:

65D17; 65G50; 41A10; 41A15; 41A20

1. Introduction

A real value $x$ is said to be computed with high relative accuracy (HRA) whenever the computed value $\tilde{x}$ satisfies

$$\frac{|x-\tilde{x}|}{|x|}<Cu,$$

where $u$ is the unit round-off and $C$ is a constant that depends neither on the conditioning of the corresponding problem nor on the arithmetic precision. An algorithm can be performed with HRA when it only uses multiplications, divisions, additions of numbers with the same sign, or subtractions of initial data (cf. [1]). In other words, the only operation that can break HRA is the addition of numbers with opposite signs. The exception is exact initial data: if the floating-point arithmetic is well implemented, subtracting them keeps HRA. Obtaining an adequate parametrization of the considered classes of matrices is therefore the cornerstone for devising HRA algorithms.

A matrix is said to be totally positive (TP) if all its minors are greater than or equal to zero, and strictly totally positive (STP) if they are all greater than zero (see [2,3,4]). In the literature, some authors have also called TP and STP matrices totally nonnegative and totally positive matrices, respectively (see [5]). TP matrices emerge in many applications in Approximation Theory, Statistics, Combinatorics, Differential Equations and Economics (cf. [2,3,4,5,6]). In particular, they appear in connection with optimal bases in Computer Aided Geometric Design (cf. [7,8]).

HRA algorithms have been developed for some classes of matrices. These include several subclasses of nonsingular TP matrices and matrices related to them: for instance, generalized Vandermonde matrices [9], Bernstein-Vandermonde matrices [10,11,12], Said-Ball-Vandermonde matrices [13], Cauchy-Vandermonde matrices [14], Schoenmakers-Coffey matrices [15], h-Bernstein-Vandermonde matrices [16] and tridiagonal Toeplitz matrices [17], among many other subclasses of TP matrices. HRA algorithms for some algebraic problems related to other classes of matrices have also been developed. For example, in [18] an HRA algorithm for the computation of the singular values was devised for a class of negative matrices. In [19], a method is developed to solve with HRA linear systems associated with a wide class of rank-structured matrices, which contains the well-known Vandermonde and Cauchy matrices.

If the bidiagonal decomposition $BD(A)$ of a nonsingular TP matrix $A$ is known to HRA, then many algebraic computations can be carried out with HRA by using the functions in the software library [20]. These include computing its eigenvalues, its singular values, its inverse and the solution of linear systems $Ax=b$, where $b$ has alternating signs. In [21], a method to obtain bidiagonal factorizations of Green matrices to HRA with elementary operations was introduced. In this paper, we examine this method and its consequences further, present alternative accurate methods for Green matrices and provide further properties of these matrices. Green matrices (see [2,3,22]) are usually interpreted as discrete versions of Green functions (see p. 237 of [22]) and are used when working with inhomogeneous systems of differential equations. Green functions emerge in the Sturm-Liouville boundary-value problem. They have important applications in many scientific fields, such as Mechanics (see [22]) or Econometrics (see [23]).

Schoenmakers-Coffey matrices form a subclass of Green matrices with important financial applications (see [24,25,26,27,28,29]). In [15], Schoenmakers-Coffey matrices were characterized by a parametrization containing n parameters, which was then used to perform HRA algebraic computations. In [21], a parametrization leading to HRA computations for nonsingular TP Green matrices was presented. In Section 2, devoted to basic notations and auxiliary results, we recall this parametrization. In Section 3 (Theorem 4), we also use it for general nonsingular Green matrices to obtain alternative methods to compute the determinant and the inverse with HRA. In Section 4, we provide a method to compute all minors of a Green matrix with HRA. In Section 5, the bidiagonal factorization of the Hadamard product of Green matrices is obtained. There we also prove that the Hadamard product of nonsingular TP Green matrices is again a nonsingular TP Green matrix, in contrast to what happens for general nonsingular Green matrices. Section 6 includes a family of numerical experiments confirming the advantages of using the HRA methods. Finally, Section 7 summarizes the conclusions of the paper.

2. Basic Notations and Auxiliary Results

A desirable goal in the construction of numerical algorithms is high relative accuracy (cf. [30,31]). This goal has only been achieved for a few classes of matrices. We say that an algorithm for the solution of an algebraic problem is performed to high relative accuracy in floating-point arithmetic if the relative errors in the computations are of the order of the unit round-off (or machine precision), without being affected by the dimension or the conventional conditioning of the problem (see [31]). It is well known that algorithms to high relative accuracy are those avoiding subtractive cancellation, that is, those only requiring the following arithmetic operations: products, quotients and additions of numbers of the same sign (see p. 52 in [1]). Moreover, if the floating-point arithmetic is well implemented, the subtraction of initial data can also be performed without losing high relative accuracy (see p. 53 in [1]).
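As a small illustration of subtractive cancellation (a sketch of our own, not taken from [1] or [31]), the following Python snippet evaluates $1-\cos x$ at $x=10^{-8}$ in IEEE double precision in two algebraically equivalent ways. The first formula subtracts two nearly equal numbers. The second, $2\sin^2(x/2)$, only multiplies numbers of the same sign and keeps a relative error of the order of the unit round-off.

```python
import math


def one_minus_cos_naive(x: float) -> float:
    # 1 - cos(x) subtracts two nearly equal numbers: subtractive cancellation.
    return 1.0 - math.cos(x)


def one_minus_cos_stable(x: float) -> float:
    # Equivalent expression 2 sin^2(x/2): only products, no cancellation.
    s = math.sin(x / 2.0)
    return 2.0 * s * s


x = 1e-8
reference = 5e-17  # 1 - cos(1e-8) = 5e-17 up to a relative term of order 1e-17
for f in (one_minus_cos_naive, one_minus_cos_stable):
    value = f(x)
    rel_err = abs(value - reference) / reference
    print(f"{f.__name__}: value = {value:.3e}, relative error = {rel_err:.1e}")
```

The naive value is dominated by rounding in $\cos x$, whereas the subtraction-free version is accurate to working precision.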

As mentioned in the Introduction, computations to high relative accuracy with totally positive matrices can be achieved by means of a proper representation of the matrices in terms of bidiagonal factorizations, which is in turn closely related to their Neville elimination (cf. [32,33]). Roughly speaking, Neville elimination is a procedure to make zeros in a column of a given matrix by adding to each row an appropriate multiple of the previous one.

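Given $A\in\mathbb{R}^{n\times n}$, Neville elimination consists of $n-1$ major steps

$$A=A^{(1)}\longrightarrow A^{(2)}\longrightarrow\cdots\longrightarrow A^{(n)}=U,$$

where $U$ is an upper triangular matrix. Every major step in turn consists of two steps. In the first of these two steps, the Neville elimination obtains $\tilde{A}^{(k)}=(\tilde{a}^{(k)}_{ij})_{1\le i,j\le n}$ from the matrix $A^{(k)}=(a^{(k)}_{ij})_{1\le i,j\le n}$ by moving to the bottom the rows with a zero entry in column $k$ below the main diagonal, if necessary. Then, in the second step, the matrix $A^{(k+1)}$ is obtained from $\tilde{A}^{(k)}$ according to the following formula:

$$a^{(k+1)}_{ij}=\begin{cases}\tilde{a}^{(k)}_{ij}-\dfrac{\tilde{a}^{(k)}_{ik}}{\tilde{a}^{(k)}_{i-1,k}}\,\tilde{a}^{(k)}_{i-1,j}, & \text{if } k+1\le i\le n,\ k\le j\le n, \text{ and } \tilde{a}^{(k)}_{i-1,k}\neq0,\\[1mm] \tilde{a}^{(k)}_{ij}, & \text{otherwise}.\end{cases}$$

The process finishes when $A^{(n)}$ is an upper triangular matrix. The entry

$$p_{ij}:=\tilde{a}^{(j)}_{ij},\qquad 1\le j\le i\le n,$$

is the $(i,j)$ pivot and $p_{ii}$ is called the $i$-th diagonal pivot of the Neville elimination of $A$. The Neville elimination of $A$ can be performed without row exchanges if all the pivots are nonzero. Then the value

$$m_{ij}:=\begin{cases}p_{ij}/p_{i-1,j}, & \text{if } p_{i-1,j}\neq0,\\ 0, & \text{if } p_{i-1,j}=0,\end{cases}\qquad 1\le j<i\le n,$$

is called the $(i,j)$ multiplier. The complete Neville elimination of $A$ consists of performing the Neville elimination to obtain the upper triangular matrix $U$ and then the Neville elimination of the lower triangular matrix $U^T$.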
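The steps above can be sketched in Python as follows. This is our own illustration: it uses exact rational arithmetic (`fractions.Fraction`) and 0-based indices. Instead of performing the row exchanges of the first step, it raises an error. Applying it to $A$ and to $A^T$ gives the multipliers and diagonal pivots that appear in Theorem 1.

```python
from fractions import Fraction


def neville_elimination(A):
    """Neville elimination without row exchanges, in exact arithmetic (0-based).

    Returns (U, pivots, multipliers): pivots[i][j] = p_{i+1,j+1} for j <= i and
    multipliers[(i, j)] = m_{i+1,j+1} for j < i.
    """
    n = len(A)
    M = [[Fraction(x) for x in row] for row in A]
    pivots = [[None] * n for _ in range(n)]
    multipliers = {}
    for k in range(n):
        for i in range(k, n):
            pivots[i][k] = M[i][k]          # column k at the start of step k
        for i in range(k + 1, n):
            if M[i - 1][k] == 0 and M[i][k] != 0:
                raise ValueError("a row exchange would be needed")
        # Bottom-up, so that row i - 1 is still the one from the start of the step.
        for i in range(n - 1, k, -1):
            m = M[i][k] / M[i - 1][k] if M[i - 1][k] != 0 else Fraction(0)
            multipliers[(i, k)] = m
            for j in range(k, n):
                M[i][j] -= m * M[i - 1][j]
    return M, pivots, multipliers


U, pivots, multipliers = neville_elimination([[1, 1, 1], [1, 2, 3], [1, 3, 6]])
print([int(pivots[i][i]) for i in range(3)], [int(v) for v in multipliers.values()])
# [1, 1, 1] [1, 1, 1]
```

Rational arithmetic is used only so that the small example can be checked exactly. In floating point, this plain elimination is not an HRA algorithm.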

Neville elimination is an important tool to analyze if a given matrix is STP, as shown in this characterization derived from Theorem 4.1 and Corollary 5.5 of [32], and the arguments of p. 116 of [34].

Theorem 1.

Let $A$ be a nonsingular matrix. $A$ is STP (resp., TP) if and only if the following conditions hold: the Neville elimination of $A$ and $A^T$ can be performed without row exchanges, all the multipliers of the Neville elimination of $A$ and $A^T$ are positive (resp., nonnegative), and the diagonal pivots of the Neville elimination of $A$ are all positive.

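In [34], it is shown that a nonsingular totally positive matrix $A\in\mathbb{R}^{n\times n}$ can be decomposed as follows:

$$A=F_{n-1}F_{n-2}\cdots F_1\,D\,G_1G_2\cdots G_{n-1}, \qquad (4)$$

where $F_i$ (respectively, $G_i$), $i=1,\dots,n-1$, is the TP, lower (respectively, upper) triangular bidiagonal matrix given by

$$(F_i)_{kk}=(G_i)_{kk}=1\ (1\le k\le n),\qquad (F_i)_{k+1,k}=m_{k+1,k+1-i},\quad (G_i)_{k,k+1}=\tilde{m}_{k+1,k+1-i}\ (i\le k\le n-1), \qquad (5)$$

with all their other entries equal to zero. $D$ is a diagonal matrix whose diagonal entries are $p_{ii}$, $i=1,\dots,n$. The diagonal elements $p_{ii}$ are the diagonal pivots of the Neville elimination of $A$. Moreover, the elements $m_{ij}$ and $\tilde{m}_{ij}$ are the multipliers of the Neville elimination of $A$ and $A^T$, respectively. The bidiagonal factorization (4) is usually compacted in matrix form $BD(A)=(BD(A)_{ij})_{1\le i,j\le n}$ as follows:

$$BD(A)_{ij}=\begin{cases}m_{ij}, & \text{if } i>j,\\ p_{ii}, & \text{if } i=j,\\ \tilde{m}_{ji}, & \text{if } i<j.\end{cases}$$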

The transpose of a nonsingular totally positive matrix $A$ is also totally positive and, using the factorization (4), it can be written as follows:

$$A^T=G_{n-1}^T\cdots G_1^T\,D\,F_1^T\cdots F_{n-1}^T.$$

If, in addition, $A$ is symmetric, then we have that $G_i=F_i^T$, $i=1,\dots,n-1$, and then

$$A=F_{n-1}\cdots F_1\,D\,F_1^T\cdots F_{n-1}^T,$$

where $F_i$, $i=1,\dots,n-1$, are the lower triangular bidiagonal matrices described in (5), whose off-diagonal entries coincide with the multipliers of the Neville elimination of $A$, and $D$ is the diagonal matrix with the diagonal pivots of the Neville elimination of $A$.
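To make the index conventions of (4) and (5) concrete, the following Python sketch (our own, with 0-based indices and exact rational arithmetic) rebuilds $A$ from its compact form $BD(A)$. It forms the factors $F_i$, $D$ and $G_i$ explicitly. It is meant only for checking small examples, not as an efficient or accurate algorithm. For instance, a $3\times3$ compact matrix with all entries equal to 1 reproduces the symmetric Pascal matrix, a classical TP example.

```python
from fractions import Fraction


def matmul(X, Y):
    """Plain product of two square matrices given as lists of lists."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


def identity(n):
    return [[Fraction(int(i == j)) for j in range(n)] for i in range(n)]


def from_bd(B):
    """Return A = F_{n-1} ... F_1 D G_1 ... G_{n-1} from its compact form B = BD(A).

    0-based layout: B[i][j] = m_{i+1,j+1} (i > j), p_{i+1,i+1} (i == j),
    and the multiplier of A^T, mtilde_{j+1,i+1} (i < j).
    """
    n = len(B)
    A = identity(n)
    for i in range(n - 1, 0, -1):          # left factors F_{n-1}, ..., F_1
        F = identity(n)
        for k in range(i, n):              # (F_i)_{k+1,k} = m_{k+1,k+1-i} (1-based)
            F[k][k - 1] = Fraction(B[k][k - i])
        A = matmul(A, F)
    D = identity(n)
    for k in range(n):
        D[k][k] = Fraction(B[k][k])        # diagonal pivots
    A = matmul(A, D)
    for i in range(1, n):                  # right factors G_1, ..., G_{n-1}
        G = identity(n)
        for k in range(i, n):              # (G_i)_{k,k+1} = mtilde_{k+1,k+1-i} (1-based)
            G[k - 1][k] = Fraction(B[k - i][k])
        A = matmul(A, G)
    return A


print([[int(x) for x in row] for row in from_bd([[1, 1, 1], [1, 1, 1], [1, 1, 1]])])
# [[1, 1, 1], [1, 2, 3], [1, 3, 6]]
```

When $B$ is symmetric, each $G_i$ built above is the transpose of the corresponding $F_i$, matching the symmetric case just described.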

Under certain conditions (cf. [34]), the factorization (4) is unique. On the other hand, Neville elimination can also allow us to obtain bidiagonal factorizations as in (4) for some matrices that are singular and that are not TP. This will happen in the following sections. When the matrix is singular some diagonal entries of the matrix D of (4) can be zero.

Many of the subclasses of TP matrices mentioned in the Introduction are very ill-conditioned, so classical error analysis (cf. [35]) predicts large roundoff errors when performing algebraic calculations with those matrices. However, the parametrization provided by the bidiagonal decomposition allows us to carry out many algebraic calculations with high relative accuracy. Let $A$ be a nonsingular totally positive matrix whose bidiagonal factorization $B=BD(A)$ is known to high relative accuracy. Then the Matlab functions available in the software library TNTool [20] compute to high relative accuracy the inverse $A^{-1}$ (using the algorithm presented in [36]), the solution of $Ax=b$ for vectors $b$ with alternating signs, and the singular values of $A$. The singular values coincide with the eigenvalues when the matrix is also symmetric, as happens with Green matrices. In particular:

- TNInverseExpand(B) returns $A^{-1}$, requiring $O(n^2)$ elementary operations.

- TNSolve(B, b) returns the solution $c$ of $Ac=b$. It requires $O(n^2)$ elementary operations.

- TNSingularValues(B) returns the singular values of $A$. Its computational cost is $O(n^3)$.

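Following [4], let us recall the definition of a Green matrix. Given two sequences of nonzero real numbers $(a_i)_{1\le i\le n}$ and $(b_i)_{1\le i\le n}$, a Green matrix is the symmetric matrix $A=(a_{ij})_{1\le i,j\le n}$ given by $a_{ij}=a_ib_j$ if $i\le j$ (or, equivalently, $a_{ij}=a_{\min(i,j)}b_{\max(i,j)}$ for all $1\le i,j\le n$).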
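The definition translates directly into code. The helper below is a hypothetical Python sketch of ours with 0-based indices. It uses exact rational entries so that later checks on small examples are exact.

```python
from fractions import Fraction


def green_matrix(a, b):
    """Green matrix with entries a_{min(i,j)} * b_{max(i,j)} (0-based indices)."""
    n = len(a)
    if len(b) != n or any(v == 0 for v in list(a) + list(b)):
        raise ValueError("a and b must have the same length and nonzero entries")
    return [[Fraction(a[min(i, j)]) * b[max(i, j)] for j in range(n)] for i in range(n)]


A = green_matrix([1, 2, 3], [3, 2, 1])
print([[int(x) for x in row] for row in A])  # [[3, 2, 1], [2, 4, 2], [1, 2, 3]]
```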

The initial parameters used in [21] to get HRA algorithms were:

Then, the elements of the Green matrix can be expressed as for . We also have that for all .

In Theorem 4.2 of [4] or p. 214 of [2] a result characterizing TP Green matrices was presented. Let us now recall it. Using (8), it can be stated as follows.

Theorem 2.

A Green matrix is TP if and only if the parameters (8) are formed by numbers of the same strict sign and

The parameters (8) can be seen as natural parameters for a Green matrix taking into account Theorem 2 and the characterization of nonsingular Green matrices that we shall show below in Section 3.
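Theorem 2 is stated in terms of the parameters (8), which are not reproduced here. For experimentation, the Python sketch below instead uses the classical formulation of the characterization in terms of the sequences $(a_i)$ and $(b_i)$, as we understand it from [4]. In that formulation, all $a_i,b_i$ have the same strict sign and $a_1/b_1\le a_2/b_2\le\cdots\le a_n/b_n$. The sketch compares this criterion with a brute-force check of all minors in exact arithmetic. The brute force has exponential cost and is only suitable for tiny matrices.

```python
from fractions import Fraction
from itertools import combinations


def det(M):
    """Exact determinant by Gaussian elimination over the rationals."""
    A = [[Fraction(x) for x in row] for row in M]
    n, result = len(A), Fraction(1)
    for k in range(n):
        piv = next((i for i in range(k, n) if A[i][k] != 0), None)
        if piv is None:
            return Fraction(0)
        if piv != k:
            A[k], A[piv] = A[piv], A[k]
            result = -result
        result *= A[k][k]
        for i in range(k + 1, n):
            f = A[i][k] / A[k][k]
            for j in range(k, n):
                A[i][j] -= f * A[k][j]
    return result


def green_matrix(a, b):
    n = len(a)
    return [[Fraction(a[min(i, j)]) * b[max(i, j)] for j in range(n)] for i in range(n)]


def is_tp_bruteforce(A):
    """Check that every minor is nonnegative (exponential cost)."""
    n = len(A)
    return all(det([[A[i][j] for j in cols] for i in rows]) >= 0
               for k in range(1, n + 1)
               for rows in combinations(range(n), k)
               for cols in combinations(range(n), k))


def is_tp_green(a, b):
    """Classical criterion: same strict sign and nondecreasing ratios a_i / b_i."""
    values = list(a) + list(b)
    same_sign = all(v > 0 for v in values) or all(v < 0 for v in values)
    ratios = [Fraction(x) / y for x, y in zip(a, b)]
    return same_sign and all(r <= s for r, s in zip(ratios, ratios[1:]))


for a, b in [([1, 2, 3], [3, 2, 1]), ([1, 3, 2], [1, 1, 1]), ([1, 2, 2], [1, 1, 1])]:
    print(a, b, is_tp_green(a, b), is_tp_bruteforce(green_matrix(a, b)))
```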

Recently, a bidiagonal factorization of a Green matrix A to HRA has been derived. Let us now recall this decomposition of A. Using it, when A is also nonsingular TP, we can also carry out some algebraic computations of A to HRA: computation of its eigenvalues (which coincide with its singular values by the symmetry of the matrix), of its inverse and of the solution of some linear systems. Although this result is presented in [21], we include it with its proof for the sake of completeness.

Theorem 3.

Let $A$ be a Green matrix whose parameters (8) are known. Then, the bidiagonal factorization of $A$ can be computed to HRA. If, in addition, $A$ is nonsingular TP, then its eigenvalues, its inverse and the solution of linear systems $Ax=b$ (where $b$ has alternating signs) can also be computed with HRA.

Proof.

The result in [21] was proved by systematically applying complete Neville elimination to $A$ and keeping track of the corresponding steps in matrix form. The first major step of the complete Neville elimination makes the entries $(n,n-1)$ and $(n-1,n)$ zero by performing row and column elementary operations. It was expressed in matrix form as

where $E_i(\alpha)$ ($2\le i\le n$) denotes the elementary matrix coinciding with the identity matrix of order $n$ except for the place $(i,i-1)$, which is $\alpha$ instead of 0. With respect to the matrix $M$, all the elements of its last row and its last column are zero except for the entry $(n,n)$, which is given by

Performing the major steps of the complete Neville elimination to convert the entries , , to zeros, the following factorization was obtained

where is the diagonal matrix given by

From (11) the following factorization of A was deduced

that is, . Observe that all elements of the decomposition (13) can be obtained with HRA.

If A is a nonsingular and totally positive matrix, by using the algorithms devised in [36,37], the announced algebraic computations can be carried out to HRA from the bidiagonal factorization of A. □

Observe from the proof of the previous result that the only nonzero entries of the compact matrix form $BD(A)$ of the bidiagonal factorization of a Green matrix $A$ lie in its first row, its first column or its diagonal.
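This structure can be reproduced with the following Python sketch. It is written by us for illustration and assumes that the Green matrix is given through the sequences $(a_i)$, $(b_i)$ of its definition rather than through the parameters (8). Eliminating row $i$ with row $i-1$ gives the multipliers $b_i/b_{i-1}$ in the first column (and, by symmetry, the first row) of $BD(A)$. The diagonal pivots are $p_{11}=a_1b_1$ and $p_{ii}=b_i(a_ib_{i-1}-a_{i-1}b_i)/b_{i-1}$. The difference in $p_{ii}$ is evaluated directly, so in floating-point arithmetic this sketch does not guarantee HRA; avoiding such subtractions is precisely the role of the parameters (8). The function `rebuild` checks the result through $A=LDL^T$ with $L=E_n(r_n)\cdots E_2(r_2)$, where $E_i(r)$ is the identity matrix with $r$ in position $(i,i-1)$.

```python
from fractions import Fraction


def green_bd(a, b):
    """Compact BD(A) of the Green matrix a_{min(i,j)} b_{max(i,j)} (0-based)."""
    n = len(a)
    a = [Fraction(x) for x in a]
    b = [Fraction(x) for x in b]
    B = [[Fraction(0)] * n for _ in range(n)]
    B[0][0] = a[0] * b[0]
    for i in range(1, n):
        B[i][0] = B[0][i] = b[i] / b[i - 1]                               # multipliers r_i
        B[i][i] = b[i] * (a[i] * b[i - 1] - a[i - 1] * b[i]) / b[i - 1]  # diagonal pivots
    return B


def rebuild(B):
    """Return L D L^T, where L = E_n(r_n) ... E_2(r_2) and r_i = B[i][0]."""
    n = len(B)
    L = [[Fraction(0)] * n for _ in range(n)]
    for j in range(n):
        L[j][j] = Fraction(1)
        for i in range(j + 1, n):
            L[i][j] = L[i - 1][j] * B[i][0]                                # r_{j+1} ... r_i
    return [[sum(L[i][k] * B[k][k] * L[j][k] for k in range(n)) for j in range(n)]
            for i in range(n)]


B = green_bd([1, 2, 3], [3, 2, 1])
print(rebuild(B) == [[3, 2, 1], [2, 4, 2], [1, 2, 3]])  # True
```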

In Remark 2.2 of [21], we discussed the computational cost of the algorithm given in the proof of Theorem 3. Taking into account the computational costs of the algorithms given in [36,37], we also discussed the cost of its application to compute the eigenvalues, the inverse or the solution of a linear system of equations for a Green matrix.

3. Accurate Method for the Inverse and Determinant of a Green Matrix

The next result is valid for all nonsingular Green matrices, which are characterized in terms of the parameters (8). For nonsingular TP Green matrices, it also provides an alternative way to that of Theorem 3 for calculating their inverses with HRA.

Theorem 4.

Proof.

With the notations of the proof of Theorem 3, observe that the matrix

is upper triangular and has as diagonal entries the pivots $p_{ii}$ ($i=1,\dots,n$) given by (12). Consequently,

$$\det A=\prod_{i=1}^{n}p_{ii}. \qquad (14)$$

Therefore, if we know the parameters (8), then we can compute $\det A$ with HRA by (12) and (14), and the computational cost is elementary operations. Besides, Equation (14) also implies that $\det A\neq0$ if and only if $p_{ii}\neq0$ for all $i=1,\dots,n$, which is equivalent by (12) to the fact that for all .

Besides, if we know the parameters (8), then we can also compute the parameters with HRA for all . Finally, in the nonsingular case of with if , by Theorem 1 of [15] its inverse is the tridiagonal matrix given by

Observe that, for ,

and, for ,

Observe that the proof of Theorem 4 also yields accurately the zero determinant of a singular Green matrix because, in this case, one of the pivots is zero and so $\det A=0$ by (14).
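In terms of the sequences $(a_i)$, $(b_i)$ of the definition (an assumption of this sketch, which does not use the parameters (8) of Theorem 4), the product of the pivots telescopes to $\det A=a_1b_n\prod_{i=2}^{n}(a_ib_{i-1}-a_{i-1}b_i)$. Moreover, write $A=LDL^T$ with $L=E_n(r_n)\cdots E_2(r_2)$, where $E_i(r)$ is the identity matrix with $r$ in position $(i,i-1)$ and $r_i=b_i/b_{i-1}$. Then $L^{-1}$ is lower bidiagonal with entries $-r_i$ below the diagonal, so $A^{-1}=L^{-T}D^{-1}L^{-1}$ is tridiagonal. The following Python sketch computes both in exact arithmetic. It follows our own derivation rather than the formula from Theorem 1 of [15] used in the proof above.

```python
from fractions import Fraction


def green_det_and_inverse(a, b):
    """Determinant and tridiagonal inverse of the Green matrix a_{min(i,j)} b_{max(i,j)}."""
    n = len(a)
    a = [Fraction(x) for x in a]
    b = [Fraction(x) for x in b]
    r = [None] + [b[i] / b[i - 1] for i in range(1, n)]            # multipliers r_i
    d = [a[0] * b[0]] + [b[i] * (a[i] * b[i - 1] - a[i - 1] * b[i]) / b[i - 1]
                         for i in range(1, n)]                      # diagonal pivots
    det = Fraction(1)
    for p in d:
        det *= p                                                    # det A = prod p_ii
    if det == 0:
        return det, None                                            # singular: no inverse
    inv = [[Fraction(0)] * n for _ in range(n)]
    for i in range(n):
        # (L^{-T} D^{-1} L^{-1})_{ii} = 1/d_i + r_{i+1}^2 / d_{i+1}
        inv[i][i] = 1 / d[i] + (r[i + 1] ** 2 / d[i + 1] if i + 1 < n else 0)
        if i + 1 < n:
            inv[i][i + 1] = inv[i + 1][i] = -r[i + 1] / d[i + 1]
    return det, inv


det, inv = green_det_and_inverse([1, 2, 3], [3, 2, 1])
print(det)  # 16
```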

Recall that a TP matrix is oscillatory if a certain power is STP. The following remark shows that, if we have a nonsingular and TP Green matrix A, then A is oscillatory.

Remark 1.

Taking into account Theorems 2 and 4, when the inequalities of (9) are strict, we have a nonsingular TP matrix $A$. In fact, since all entries of $A$ are nonzero, $A$ is oscillatory by Theorem 4.2 of [2]. Recall (cf. Theorem 6.5 of [2]) that an oscillatory matrix has all its eigenvalues distinct and positive.
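Remark 1 can be checked on a small example with the following Python sketch (ours; brute force in exact arithmetic, so only suitable for tiny orders). Consider the nonsingular TP Green matrix built from $a=(1,2,3)$ and $b=(3,2,1)$. The matrix itself is not STP because it has zero minors, whereas its square, the power $n-1$ for $n=3$, turns out to be STP.

```python
from fractions import Fraction
from itertools import combinations


def det(M):
    """Exact determinant by Gaussian elimination over the rationals."""
    A = [[Fraction(x) for x in row] for row in M]
    n, result = len(A), Fraction(1)
    for k in range(n):
        piv = next((i for i in range(k, n) if A[i][k] != 0), None)
        if piv is None:
            return Fraction(0)
        if piv != k:
            A[k], A[piv] = A[piv], A[k]
            result = -result
        result *= A[k][k]
        for i in range(k + 1, n):
            f = A[i][k] / A[k][k]
            for j in range(k, n):
                A[i][j] -= f * A[k][j]
    return result


def is_stp(A):
    """Check that every minor is strictly positive (exponential cost)."""
    n = len(A)
    return all(det([[A[i][j] for j in cols] for i in rows]) > 0
               for k in range(1, n + 1)
               for rows in combinations(range(n), k)
               for cols in combinations(range(n), k))


a, b = [1, 2, 3], [3, 2, 1]
A = [[Fraction(a[min(i, j)]) * b[max(i, j)] for j in range(3)] for i in range(3)]
A2 = [[sum(A[i][k] * A[k][j] for k in range(3)) for j in range(3)] for i in range(3)]
print(is_stp(A), is_stp(A2))  # False True
```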

4. Accurate Method for the Minors of a Green Matrix

Let $A=(a_{ij})_{1\le i,j\le n}$ be a real square matrix and $k$ a positive integer satisfying $k\le n$. We denote by $Q_{k,n}$ the set of all strictly increasing sequences of $k$ natural numbers less than or equal to $n$:

$$Q_{k,n}=\{\alpha=(\alpha_1,\dots,\alpha_k)\in\mathbb{N}^k:\ 1\le\alpha_1<\alpha_2<\cdots<\alpha_k\le n\}.$$

Given $\alpha,\beta\in Q_{k,n}$, $A[\alpha|\beta]$ is by definition the $k\times k$ submatrix of $A$ containing the rows numbered by $\alpha$ and the columns numbered by $\beta$.

As shown on p. 4 of [15], a Green matrix of order $n\ge3$ cannot be strictly totally positive because it always has zero minors. For instance, for any $1\le i<j<l\le n$, we have $\det A[i,j\,|\,j,l]=a_ib_j\,a_jb_l-a_ib_l\,a_jb_j=0$. The following result shows that we can compute all minors of a Green matrix with high relative accuracy. Its proof includes an explicit formula for the nonzero minors that can be evaluated with high relative accuracy.

Theorem 5.

If $A=(a_{ij})_{1\le i,j\le n}$ is a Green matrix with known parameters (8), then any minor of $A$ can be computed with HRA.

Proof.

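As proved on pp. 110–111 of [3], any minor of a Green matrix $A$ with $\alpha,\beta\in Q_{k,n}$ satisfies

$$\det A[\alpha|\beta]=a_{p_1}\left(\prod_{l=2}^{k}\left(a_{p_l}b_{q_{l-1}}-a_{q_{l-1}}b_{p_l}\right)\right)b_{q_k}, \qquad (17)$$

where $p_l=\min(\alpha_l,\beta_l)$ and $q_l=\max(\alpha_l,\beta_l)$, provided that $q_{l-1}\le p_l$ ($l=2,\dots,k$). In all other cases, the minor is zero.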
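Formula (17) can be compared with a direct evaluation of all minors using the following Python sketch. It is our own code, uses 0-based indices and exact rational arithmetic, and works with the sequences $(a_i)$, $(b_i)$ of the definition instead of the parameters (8). It evaluates $a_{p_1}b_{q_k}\prod_{l=2}^{k}(a_{p_l}b_{q_{l-1}}-a_{q_{l-1}}b_{p_l})$ with $p_l=\min(\alpha_l,\beta_l)$ and $q_l=\max(\alpha_l,\beta_l)$, and returns zero when $q_{l-1}>p_l$ for some $l$. As written, the differences are computed directly, so this is not an HRA implementation.

```python
from fractions import Fraction
from itertools import combinations


def det(M):
    """Exact determinant by Gaussian elimination over the rationals."""
    A = [[Fraction(x) for x in row] for row in M]
    n, result = len(A), Fraction(1)
    for k in range(n):
        piv = next((i for i in range(k, n) if A[i][k] != 0), None)
        if piv is None:
            return Fraction(0)
        if piv != k:
            A[k], A[piv] = A[piv], A[k]
            result = -result
        result *= A[k][k]
        for i in range(k + 1, n):
            f = A[i][k] / A[k][k]
            for j in range(k, n):
                A[i][j] -= f * A[k][j]
    return result


def green_minor(a, b, rows, cols):
    """Minor det A[rows|cols] of the Green matrix a_{min(i,j)} b_{max(i,j)} (0-based)."""
    p = [min(r, c) for r, c in zip(rows, cols)]
    q = [max(r, c) for r, c in zip(rows, cols)]
    value = Fraction(a[p[0]]) * b[q[-1]]
    for l in range(1, len(p)):
        if q[l - 1] > p[l]:
            return Fraction(0)                    # all other cases: zero minor
        value *= a[p[l]] * b[q[l - 1]] - a[q[l - 1]] * b[p[l]]
    return value


a, b = [2, 5, 1, 7, 3], [3, 1, 4, 2, 6]
n = len(a)
A = [[Fraction(a[min(i, j)]) * b[max(i, j)] for j in range(n)] for i in range(n)]
mismatches = [(r, c) for k in range(1, n + 1)
              for r in combinations(range(n), k)
              for c in combinations(range(n), k)
              if green_minor(a, b, r, c) != det([[A[i][j] for j in c] for i in r])]
print(len(mismatches))
```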

Taking into account that and that, for each ,

we can deduce from (17) that all nonzero minors of A can be calculated as

5. Hadamard Product of Green Matrices

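Given two matrices $A=(a_{ij})_{1\le i,j\le n}$ and $B=(b_{ij})_{1\le i,j\le n}$, the Hadamard (or entrywise) product of $A$ and $B$ is the matrix $A\circ B$ defined by $(A\circ B)_{ij}=a_{ij}b_{ij}$, $1\le i,j\le n$. Some properties of the ordinary product of totally positive matrices do not hold for the Hadamard product. A first property is the fact that the product of two totally positive matrices is totally positive (see Theorem 3.1 of [2]). However, in general, the class of totally positive matrices is not closed under Hadamard products (see p. 120 of [4]). For instance, consider the $3\times3$ matrix $A$ with 0 in entry $(1,3)$ and 1 elsewhere. Then $A$ and $A^T$ are TP, but $A\circ A^T$ has determinant $-1$ and so it is not TP. Let us see this counterexample in more detail:

$$A=\begin{pmatrix}1&1&0\\1&1&1\\1&1&1\end{pmatrix},\qquad A^T=\begin{pmatrix}1&1&1\\1&1&1\\0&1&1\end{pmatrix},$$

and then

$$A\circ A^T=\begin{pmatrix}1&1&0\\1&1&1\\0&1&1\end{pmatrix},\qquad \det(A\circ A^T)=-1.$$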

However, the Hadamard product of totally positive Green matrices is a totally positive Green matrix (see p. 120 of [4]).
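The counterexample above, and the good behavior of Green matrices under Hadamard products, can be checked with the following Python sketch (ours; brute-force minors in exact arithmetic). For the Green case, it relies on the elementary identity $a_{\min(i,j)}b_{\max(i,j)}\,c_{\min(i,j)}d_{\max(i,j)}=(a_{\min(i,j)}c_{\min(i,j)})(b_{\max(i,j)}d_{\max(i,j)})$. Hence the Hadamard product of the Green matrices of $(a,b)$ and $(c,d)$ is the Green matrix of the entrywise products $(a_ic_i)$ and $(b_id_i)$.

```python
from fractions import Fraction
from itertools import combinations


def det(M):
    """Exact determinant by Gaussian elimination over the rationals."""
    A = [[Fraction(x) for x in row] for row in M]
    n, result = len(A), Fraction(1)
    for k in range(n):
        piv = next((i for i in range(k, n) if A[i][k] != 0), None)
        if piv is None:
            return Fraction(0)
        if piv != k:
            A[k], A[piv] = A[piv], A[k]
            result = -result
        result *= A[k][k]
        for i in range(k + 1, n):
            f = A[i][k] / A[k][k]
            for j in range(k, n):
                A[i][j] -= f * A[k][j]
    return result


def is_tp(A):
    n = len(A)
    return all(det([[A[i][j] for j in cols] for i in rows]) >= 0
               for k in range(1, n + 1)
               for rows in combinations(range(n), k)
               for cols in combinations(range(n), k))


def hadamard(A, B):
    return [[x * y for x, y in zip(ra, rb)] for ra, rb in zip(A, B)]


def green_matrix(a, b):
    n = len(a)
    return [[Fraction(a[min(i, j)]) * b[max(i, j)] for j in range(n)] for i in range(n)]


A = [[1, 1, 0], [1, 1, 1], [1, 1, 1]]
At = [list(col) for col in zip(*A)]
print(is_tp(A), is_tp(At), det(hadamard(A, At)))  # True True -1

a, b, c, d = [1, 2, 3], [3, 2, 1], [1, 1, 2], [2, 1, 1]
H = hadamard(green_matrix(a, b), green_matrix(c, d))
print(H == green_matrix([x * y for x, y in zip(a, c)], [x * y for x, y in zip(b, d)]), is_tp(H))  # True True
```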

The second announced property deals with the bidiagonal factorization. If we know the bidiagonal factorizations $BD(A)$ and $BD(B)$ of two nonsingular totally positive matrices $A$ and $B$, then a method to obtain the bidiagonal factorization of their ordinary product $AB$ can be found in [37]. This method has not been extended to the Hadamard product. However, we are going to see that, if we know the parameters (8) of two Green matrices, then we can obtain the bidiagonal factorization (4) of their Hadamard product.

Theorem 6.

If A and B are Green matrices with parameters (8) given by , and , , respectively, then the bidiagonal factorization of the Hadamard product is given by

where $D$ is the diagonal matrix given by

Proof.

We perform the complete Neville elimination of $A\circ B$. First, times the last but one row is subtracted from the last row in order to make the entry $(n,n-1)$ zero. As in the case of the Neville elimination of a Green matrix, this elementary operation also converts the remaining off-diagonal elements of the last row to zero. After carrying out the previous operation, the entry $(n,n)$ is transformed into

By symmetry, if times the last but one column is subtracted from the last column, we obtain a matrix in which all the entries of the last column up to the place $(n-1,n)$ are zero. The entry $(n,n)$ does not change. So, this first major step can be expressed in matrix form as

If this process is continued to transform the entries to zeros, the following decomposition is obtained

where D is the diagonal matrix with diagonal entries in (20).

Taking into account the formula for the inverse of , the following bidiagonal factorization of is obtained:

□

Observe that Theorem 6 does not require any additional property of the Green matrices that are factors of the Hadamard product: neither total positivity nor nonsingularity.
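Under the same illustrative assumption as before (Green matrices given by the sequences of their definition, not by the parameters (8)), the bidiagonal factorization of $A\circ B$ can be sketched in Python. The idea is to apply the Green-matrix construction to the products $(a_ia'_i)$, $(b_ib'_i)$. The sketch shows that the off-diagonal entries of $BD(A\circ B)$ are the products of the corresponding entries of $BD(A)$ and $BD(B)$. The diagonal pivots, however, are in general not the products of those of $A$ and $B$. This is our own illustration, not a transcription of the formulas of Theorem 6.

```python
from fractions import Fraction


def green_bd(a, b):
    """Compact BD of the Green matrix a_{min(i,j)} b_{max(i,j)} (0-based)."""
    n = len(a)
    a = [Fraction(x) for x in a]
    b = [Fraction(x) for x in b]
    B = [[Fraction(0)] * n for _ in range(n)]
    B[0][0] = a[0] * b[0]
    for i in range(1, n):
        B[i][0] = B[0][i] = b[i] / b[i - 1]                               # multipliers
        B[i][i] = b[i] * (a[i] * b[i - 1] - a[i - 1] * b[i]) / b[i - 1]  # pivots
    return B


def hadamard_green_bd(a, b, c, d):
    """BD of G(a, b) o G(c, d), which is the Green matrix G(a*c, b*d)."""
    return green_bd([x * y for x, y in zip(a, c)], [x * y for x, y in zip(b, d)])


a, b, c, d = [2, 5, 1, 7], [3, 1, 4, 2], [1, 3, 2, 5], [4, 1, 1, 3]
BA, BB, BH = green_bd(a, b), green_bd(c, d), hadamard_green_bd(a, b, c, d)
print(all(BH[i][0] == BA[i][0] * BB[i][0] for i in range(1, 4)))  # True
```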

Remark 2.

As recalled above, the Hadamard product of two totally positive Green matrices is a totally positive Green matrix. However, the Hadamard product of nonsingular Green matrices can be singular. Now let us illustrate this with an example. Let us consider two nonsingular Green matrices A and B given by the sequences , and , . The Green matrices A and B can be written explicitly as

We can observe that A and B are both nonsingular. In contrast, the Hadamard product of A and B

is clearly singular.
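A smaller example of the same phenomenon, constructed by us and different from the one above, can be checked in a few lines of Python. The Green matrices of $a=(1,2)$, $b=(1,1)$ and of $c=(1,1)$, $d=(1,2)$ are nonsingular. However, their Hadamard product is the Green matrix of $(1,2)$ and $(1,2)$, whose ratios $a_ic_i/(b_id_i)$ are constant, and it is singular. Note that the second factor is not TP, which is consistent with the next result.

```python
from fractions import Fraction


def green_matrix(a, b):
    n = len(a)
    return [[Fraction(a[min(i, j)]) * b[max(i, j)] for j in range(n)] for i in range(n)]


def det2(M):
    return M[0][0] * M[1][1] - M[0][1] * M[1][0]


A = green_matrix([1, 2], [1, 1])                                  # [[1, 1], [1, 2]]
B = green_matrix([1, 1], [1, 2])                                  # [[1, 2], [2, 2]]
H = [[x * y for x, y in zip(ra, rb)] for ra, rb in zip(A, B)]     # [[1, 2], [2, 4]]
print(det2(A), det2(B), det2(H))                                  # 1 -2 0
```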

In contrast to the previous remark, the next result will show that the Hadamard product of two nonsingular TP Green matrices is again a nonsingular TP Green matrix.

Theorem 7.

If A and B are nonsingular TP Green matrices, then the Hadamard product is also a nonsingular TP Green matrix.

Proof.

It is known that the Hadamard product of totally positive Green matrices is a totally positive Green matrix (see p. 120 of [4]). Let , and , () be the parameters (8) of A and B, respectively. Since A and B are totally positive, by Theorem 2 we have that and that and since they are also nonsingular, we deduce from Theorem 4 that

Taking into account the bidiagonal factorization of a Green matrix given in Theorem 3 and the bidiagonal factorization of the Hadamard product of Green matrices given in Theorem 6, we deduce that the parameters of are given by . Then, by (22), for all and so, by Theorem 4, we deduce that is nonsingular. □
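The key point of the argument can be illustrated using the classical description of nonsingular TP Green matrices: entries of the same strict sign and strictly increasing ratios $a_i/b_i$. We take this description as an assumption here, whereas the proof itself works with the parameters (8). If both $a_i/b_i$ and $a'_i/b'_i$ are positive and strictly increasing, so is their product $(a_ia'_i)/(b_ib'_i)$. Then every factor $a_ib_{i-1}-a_{i-1}b_i$ of the determinant of the Hadamard product is positive. The following Python sketch checks this on randomly generated examples. The generator `random_nonsingular_tp_green` is a hypothetical helper of ours.

```python
import random
from fractions import Fraction


def random_nonsingular_tp_green(n, rng):
    """Hypothetical generator: b_i > 0 and strictly increasing ratios c_i = a_i / b_i > 0."""
    b = [Fraction(rng.randint(1, 9)) for _ in range(n)]
    c, acc = [], Fraction(0)
    for _ in range(n):
        acc += Fraction(rng.randint(1, 9), rng.randint(1, 9))     # positive increments
        c.append(acc)
    return [ci * bi for ci, bi in zip(c, b)], b


def green_det(a, b):
    """det of the Green matrix: a_1 b_n prod_{i>=2} (a_i b_{i-1} - a_{i-1} b_i)."""
    result = a[0] * b[-1]
    for i in range(1, len(a)):
        result *= a[i] * b[i - 1] - a[i - 1] * b[i]
    return result


rng = random.Random(2023)
ok = True
for _ in range(200):
    a, b = random_nonsingular_tp_green(6, rng)
    c, d = random_nonsingular_tp_green(6, rng)
    ac = [x * y for x, y in zip(a, c)]
    bd = [x * y for x, y in zip(b, d)]
    ratios = [x / y for x, y in zip(ac, bd)]
    ok &= all(r < s for r, s in zip(ratios, ratios[1:])) and green_det(ac, bd) > 0
print(ok)  # True
```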

6. Numerical Tests

Given a square nonsingular TP matrix $A$ whose bidiagonal decomposition is known to HRA, Koev ([37,38]) developed HRA algorithms to perform the following algebraic tasks:

- computation of the eigenvalues of A,

- computation of the singular values of A, and

- computation of the solution of linear systems of equations , where b has an alternating sign pattern.

These methods were implemented by Koev in the software library TNTool, available for download in [20] for the MATLAB and Octave environments. The corresponding functions are TNEigenvalues, TNSingularValues and TNSolve, respectively. In addition, an algorithm to compute the inverse of $A$ was developed in [36]. This algorithm was implemented by the authors in the function TNInverseExpand and contributed to the software library [20]. These four functions require $BD(A)$ to HRA as input argument. In the case of TNSolve, the vector $b$ of the linear system to be solved is also necessary as second argument.

In the proof of Theorem 3 the bidiagonal decomposition of a Green matrix was recalled. The corresponding method to obtain this bidiagonal decomposition was implemented in a Matlab/Octave function TNBDGreen to be used together with the software library [20]. In Algorithm 1 we can see the pseudocode of this function.

| Algorithm 1 Computation of the bidiagonal decomposition of a Green matrix A |

| Require: and |

| Ensure: B bidiagonal decomposition of A |

| for do |

| end for |

Now we will illustrate some of the theoretical results presented along this paper with numerical examples. First, in our numerical tests, we have considered the Green matrix of order 30 whose bidiagonal decomposition is given by the parameters and defined by

It is the Green matrix with parameters given by the sequence v and the sequence defined by for . By Theorem 2, the matrix is TP and, by Theorem 4, the matrix is also nonsingular. In fact, by Remark 1, it is oscillatory. Then, by Theorem 3, its bidiagonal decomposition can be computed to HRA. In addition, using this bidiagonal decomposition and the software library [20], quantities such as its eigenvalues can also be computed to HRA.

The eigenvalues of this matrix have been calculated with Mathematica using 200-digit precision. In addition, these eigenvalues have also been computed with Matlab in two different ways:

- by using the usual function eig, and

- by using the function TNEigenvalues using the bidiagonal decomposition computed to HRA with TNBDGreen.

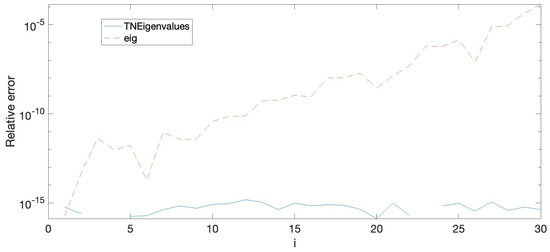

We then compared the accuracy of the approximations to the eigenvalues obtained with Matlab in these two ways (eig and TNEigenvalues). To do so, we computed the relative errors, considering the eigenvalues obtained with Mathematica as exact. The eigenvalues of this matrix have been arranged in decreasing order to illustrate the computed relative errors. In Figure 1, the relative errors of the approximations to these eigenvalues by both methods are displayed. The relative errors of both methods were calculated for a discrete set of eigenvalues. To improve the readability of the picture, however, the piecewise linear interpolant of these errors has been plotted for each method (piecewise linear interpolants have also been used in the following figures of the paper). The figure shows that using $BD(A)$ to HRA together with the function TNEigenvalues of Koev's software library ([20]) provides much more accurate results than those obtained with the usual Matlab function eig.

Figure 1.

Relative errors when computing the eigenvalues , , with Matlab.

Figure 1 shows that, for the lowest eigenvalues, the Matlab command eig does not provide satisfactory approximations. In contrast, the HRA bidiagonal decomposition of $A$ together with the function TNEigenvalues of Plamen Koev's software library provides accurate approximations. Since the lowest eigenvalues are the most difficult to approximate, we now consider the Green matrices , , of order n given by the parameters and defined by

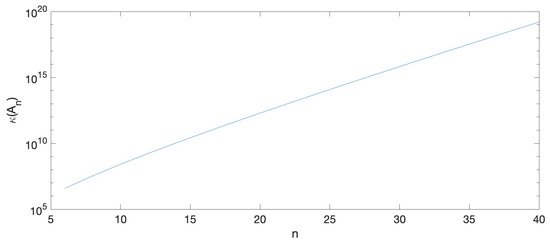

The matrix corresponds to the Green matrix given by the sequence and the sequence defined by for . We have computed the 2-norm condition numbers of these matrices , : . These condition numbers are shown in Table 1 and, graphically, in Figure 2. Because of these huge condition numbers, accurate results cannot be expected when performing algebraic computations with these matrices by the usual methods. In this case, algorithms taking into account the structure of the matrices are desirable in order to obtain good numerical results. Green matrices , , are nonsingular TP matrices and their bidiagonal decompositions can also be computed to HRA.

Table 1.

2-norm condition number for .

Figure 2.

2-norm condition number for .

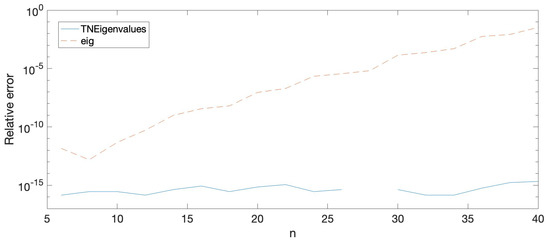

We have approximated the lowest eigenvalue of each of these matrices by HRA methods and by the Matlab command eig. We have also computed these eigenvalues with Mathematica using 200-digit precision. The relative errors of the approximations have been calculated considering the eigenvalues provided by Mathematica as exact. Table 2 shows the relative errors of the approximation to the lowest eigenvalue of each matrix for . Figure 3 displays the same relative errors graphically.

Table 2.

Relative errors when computing the eigenvalues of for .

Figure 3.

Relative errors when computing the eigenvalues for , with Matlab.

The bidiagonal decomposition of a Green matrix $A$ to HRA, together with the functions TNInverseExpand and TNSolve of the software library [20], allows us to compute to HRA its inverse and the solution of $Ax=b$, where $b$ has alternating signs. Let us now compare numerically the accuracy of our HRA methods with that of the usual methods in Matlab. To this end, we have computed the inverse of the considered Green matrix of order 40, , with Mathematica using 200-digit precision. We have also computed the inverse with Matlab in two ways: with the usual function inv, and with the function TNInverseExpand using $BD(A)$ to HRA obtained with the function TNBDGreen. Then we have computed the componentwise relative errors for both Matlab approximations. Finally, for both approximations, we have calculated the average and the maximum of the componentwise relative errors. These data can be seen in Table 3.

Table 3.

Relative errors when computing .
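The error measures used in Table 3 can be computed as in the following Python sketch (ours). Since the inverse of a nonsingular Green matrix is tridiagonal, many exact entries are zero and the relative error is undefined there. The sketch simply skips those entries. This is an assumption on our part, since the treatment of zero entries in the experiments is not stated.

```python
def componentwise_relative_errors(exact, approx):
    """Mean and maximum of |e - x| / |e| over the entries with e != 0."""
    errors = [abs(e - x) / abs(e)
              for row_e, row_x in zip(exact, approx)
              for e, x in zip(row_e, row_x)
              if e != 0]
    return sum(errors) / len(errors), max(errors)


mean_err, max_err = componentwise_relative_errors([[2.0, -1.0], [-1.0, 1.0]],
                                                  [[2.0, -1.5], [-1.0, 1.0]])
print(mean_err, max_err)  # 0.125 0.5
```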

Now let us consider a system of linear equations $Ax=b$, where $b$ has an alternating pattern of signs and the absolute values of its entries are integers randomly generated in the interval . First, we have calculated the exact solution $x$ of the linear system with Mathematica. Then we have obtained approximations to the exact solution of the system in two ways: with the usual Matlab command \, and with TNSolve of the software library [20] using the bidiagonal factorization provided by TNBDGreen to HRA. Finally, we have computed the componentwise relative errors, which are shown in Table 4.

Table 4.

Componentwise relative error when solving .
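To see why alternating signs matter, consider the following Python sketch. It is our own code and is not Koev's TNSolve, which works with general bidiagonal decompositions. Write a Green matrix as $A=LDL^T$ with $L=E_n(r_n)\cdots E_2(r_2)$, where $E_i(r)$ is the identity matrix with $r$ in position $(i,i-1)$ and $r_i=b_i/b_{i-1}$. Then the solution of $Ax=b$ is $x=L^{-T}D^{-1}L^{-1}b$, where $L^{-1}$ is bidiagonal. If $A$ is nonsingular TP, then $r_i>0$ and the pivots $d_i$ are positive. Hence, for an alternating right-hand side, every step only adds numbers of the same sign. Here `r` and `d` are assumed to be already available to HRA; the rational arithmetic only serves to check the result.

```python
from fractions import Fraction


def green_solve(r, d, rhs):
    """Solve A x = rhs for A = L D L^T with L = E_n(r_n) ... E_2(r_2) (0-based lists).

    r[i] (i >= 1) are the multipliers, r[0] is unused; d are the diagonal pivots.
    """
    n = len(d)
    y = [rhs[0]] + [rhs[i] - r[i] * rhs[i - 1] for i in range(1, n)]   # y = L^{-1} rhs
    z = [y[i] / d[i] for i in range(n)]                                  # z = D^{-1} y
    return [z[i] - r[i + 1] * z[i + 1] for i in range(n - 1)] + [z[-1]]  # x = L^{-T} z


# Green matrix of a = (1, 2, 3), b = (3, 2, 1): A = [[3, 2, 1], [2, 4, 2], [1, 2, 3]]
r = [None, Fraction(2, 3), Fraction(1, 2)]
d = [Fraction(3), Fraction(8, 3), Fraction(2)]
x = green_solve(r, d, [1, -2, 3])
print([str(v) for v in x])  # ['1', '-2', '2']
```

The cost is linear in $n$ for this special structure, and the solution inherits the alternating sign pattern of the right-hand side.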

7. Conclusions

In [21], a method with HRA to obtain the bidiagonal factorization of a Green matrix was presented. It was used to derive accurate methods for algebraic computations with nonsingular TP Green matrices. In this paper, we illustrate the advantages of this method with a family of matrices. We also show that the parametrization used in [21] can be used to construct accurate alternative methods and to address other related problems. These include HRA methods to compute the inverse or the determinant of any nonsingular Green matrix, and a method to compute any minor of a Green matrix with HRA. The bidiagonal factorization of the Hadamard product of two Green matrices is also obtained, and other related properties are analyzed. In particular, the class of nonsingular TP Green matrices is closed under the Hadamard product, in contrast to the class of nonsingular Green matrices.

Author Contributions

The authors contributed equally to this work. All authors have read and agreed to the published version of the manuscript.

Funding

This research was partially funded by the Spanish research grants PGC2018-096321-B-I00 (MCIU/AEI) and RED2022-134176-T (MCI/AEI), and by Gobierno de Aragón (E41_23R).

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| HRA | High relative accuracy |

| TP | Totally positive |

| STP | Strictly totally positive |

References

- Demmel, J.; Gu, M.; Eisenstat, S.; Slapnicar, I.; Veselic, K.; Drmac, Z. Computing the singular value decomposition with high relative accuracy. Linear Algebra Appl. 1999, 299, 21–80. [Google Scholar] [CrossRef]

- Ando, T. Totally positive matrices. Linear Algebra Appl. 1987, 90, 165–219. [Google Scholar] [CrossRef]

- Karlin, S. Total Positivity; Stanford University Press: Stanford, CA, USA, 1968; Volume 1. [Google Scholar]

- Pinkus, A. Totally Positive Matrices, Cambridge Tracts in Mathematics 181; Cambridge University Press: Cambridge, UK, 2010. [Google Scholar]

- Fallat, S.M.; Johnson, C.R. Totally Nonnegative Matrices; Princeton Series in Applied Mathematics; Princeton University Press: Princeton, NJ, USA, 2011. [Google Scholar]

- Gasca, M.; Micchelli, C.A. Total Positivity and Its Applications; Kluwer Academic Publishers: Dordrecht, The Netherlands, 1996. [Google Scholar]

- Delgado, J.; Orera, H.; Peña, J.M. Optimal properties of tensor product of B-bases. Appl. Math. Lett. 2021, 121, 107473.

- Delgado, J.; Peña, J.M. Extremal and optimal properties of B-bases collocation matrices. Numer. Math. 2020, 146, 105–118.

- Demmel, J.; Koev, P. The accurate and efficient solution of a totally positive generalized Vandermonde linear system. SIAM J. Matrix Anal. Appl. 2005, 27, 42–52.

- Marco, A.; Martínez, J.J. A fast and accurate algorithm for solving Bernstein-Vandermonde linear systems. Linear Algebra Appl. 2007, 422, 616–628.

- Marco, A.; Martínez, J.J. Polynomial least squares fitting in the Bernstein basis. Linear Algebra Appl. 2010, 433, 1254–1264.

- Marco, A.; Martínez, J.J. Accurate computations with totally positive Bernstein-Vandermonde matrices. Electron. J. Linear Algebra 2013, 26, 357–380.

- Marco, A.; Martínez, J.J. Accurate computations with Said-Ball-Vandermonde matrices. Linear Algebra Appl. 2010, 432, 2894–2908.

- Marco, A.; Martínez, J.J.; Peña, J.M. Accurate bidiagonal decomposition of totally positive Cauchy-Vandermonde matrices and applications. Linear Algebra Appl. 2017, 517, 63–84.

- Delgado, J.; Peña, G.; Peña, J.M. Accurate and fast computations with positive extended Schoenmakers-Coffey matrices. Numer. Linear Algebra Appl. 2016, 23, 1023–1031.

- Marco, A.; Martínez, J.J.; Viaña, R. Accurate bidiagonal decomposition of totally positive h-Bernstein-Vandermonde matrices and applications. Linear Algebra Appl. 2019, 579, 320–335.

- Delgado, J.; Orera, H.; Peña, J.M. Characterizations and accurate computations for tridiagonal Toeplitz matrices. Linear Multilinear Algebra 2022, 70, 4508–4527.

- Huang, R.; Xue, J. Accurate singular values of a class of parameterized negative matrices. Adv. Comput. Math. 2021, 47, 73.

- Huang, R. Accurate solutions of product linear systems associated with rank-structured matrices. J. Comput. Appl. Math. 2019, 347, 108–127.

- Koev, P. TNTool. Available online: https://math.mit.edu/~plamen/software/TNTool.html (accessed on 10 March 2023).

- Delgado, J.; Peña, G.; Peña, J.M. Accurate and fast computations with Green matrices. Appl. Math. Lett. 2023, 145, 108778.

- Gantmacher, F.P.; Krein, M.G. Oscillation Matrices and Kernels and Small Vibrations of Mechanical Systems, Revised ed.; AMS Chelsea: Providence, RI, USA, 2002.

- Ramsay, J.O.; Ramsey, J.B. Functional data analysis of the dynamics of the monthly index of nondurable goods production. J. Econom. 2002, 107, 327–344.

- Lord, R.; Pelsser, A. Level-Slope-Curvature: Fact or Artefact? Appl. Math. Financ. 2007, 14, 105–130.

- Salinelli, E.; Serra-Capizzano, S.; Sesana, D. Eigenvalue-eigenvector structure of Schoenmakers-Coffey matrices via Toeplitz technology and applications. Linear Algebra Appl. 2016, 491, 138–160.

- Salinelli, E.; Sgarra, C. Correlation matrices of yields and total positivity. Linear Algebra Appl. 2006, 418, 682–692.

- Salinelli, E.; Sgarra, C. Shift, slope and curvature for a class of yields correlation matrices. Linear Algebra Appl. 2007, 426, 650–666.

- Salinelli, E.; Sgarra, C. Some results on correlation matrices for interest rates. Acta Appl. Math. 2011, 115, 291–318.

- Schoenmakers, J.; Coffey, B. Systematic Generation of Parametric Correlation Structures for the LIBOR Market Model. Int. J. Theor. Appl. Financ. 2003, 6, 507–519.

- Demmel, J. Accurate singular value decompositions of structured matrices. SIAM J. Matrix Anal. Appl. 1999, 21, 562–580.

- Demmel, J.; Dumitriu, I.; Holtz, O.; Koev, P. Accurate and efficient expression evaluation and linear algebra. Acta Numer. 2008, 17, 87–145.

- Gasca, M.; Peña, J.M. Total positivity and Neville elimination. Linear Algebra Appl. 1992, 165, 25–44.

- Gasca, M.; Peña, J.M. A matricial description of Neville elimination with applications to total positivity. Linear Algebra Appl. 1994, 202, 33–53.

- Gasca, M.; Peña, J.M. On Factorizations of Totally Positive Matrices. In Total Positivity and Its Applications; Gasca, M., Micchelli, C.A., Eds.; Kluwer Academic: Dordrecht, The Netherlands, 1996; pp. 109–130.

- Higham, N.J. Accuracy and Stability of Numerical Algorithms, 2nd ed.; SIAM: Philadelphia, PA, USA, 2002.

- Marco, A.; Martínez, J.J. Accurate computation of the Moore–Penrose inverse of strictly totally positive matrices. J. Comput. Appl. Math. 2019, 350, 299–308.

- Koev, P. Accurate computations with totally nonnegative matrices. SIAM J. Matrix Anal. Appl. 2007, 29, 731–751.

- Koev, P. Accurate eigenvalues and SVDs of totally nonnegative matrices. SIAM J. Matrix Anal. Appl. 2005, 27, 1–23.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).