Abstract

In this manuscript, we investigate convergence and stability results for computing fixed points using a faster iterative scheme in a Banach space. Convergence is discussed for almost contraction mappings in a Banach space, and weak and strong convergence are established for Suzuki generalized nonexpansive mappings in a uniformly convex Banach space. Our method may be extended to problems such as monotone variational inequalities, image restoration, convex optimization, and split convex feasibility. Moreover, numerical examples are presented to assess the usefulness and efficiency of the technique compared with iterative methods in the literature. Finally, the proposed approach is applied to solve a nonlinear Volterra integral equation with delay.

MSC:

47H09; 47A56

1. Introduction

Many problems in mathematics and other fields of science can be modeled by an equation involving a suitable operator. In many cases, solving such a problem is therefore equivalent to finding the fixed points (FPs) of that operator.

FP techniques are applied in many areas owing to their simplicity and flexibility, including optimization theory, approximation theory, fractional derivatives, dynamical systems, and game theory; this is one reason why they attract researchers. Beyond these applications, FP techniques also play a significant role in nonlinear analysis and in many engineering sciences. An important line of FP research studies the behavior and performance of algorithms that contribute greatly to real-world applications; see [1,2,3,4,5,6] for more details.

Throughout this paper, we assume that Ω is a Banach space (BS); Θ is a nonempty, closed, and convex subset (CCS) of Ω; ℑ: Θ → Θ is a self-mapping; and ℕ is the set of natural numbers. Further, ⇀ and ⟶ stand for weak and strong convergence, respectively.

An element x ∈ Θ is called a FP of the operator ℑ if ℑx = x, and the set of all FPs of ℑ is denoted by F(ℑ).

In [7], Berinde introduced a new class of contractive mappings as follows:

where the constants are as specified in [7]. A mapping ℑ satisfying (1) is called an almost contraction mapping (ACM, for short).

Berinde also showed that condition (1) is more general than the contractive condition of Zamfirescu [8].

In 2003, Imoru and Olatinwo [9] generalized the ACM (1) by replacing the constant in (1) with a strictly increasing continuous function that vanishes at zero, as follows:

A mapping ℑ satisfying (2) is called a contractive-like mapping. Clearly, (2) generalizes the classes of mappings considered by Berinde [7] and by Osilike and Udomene [10].

Many authors have constructed iterative methods for approximating FPs with the aim of improving the performance and convergence behavior of algorithms for nonexpansive mappings. Over the past 20 years, a wide range of iterative techniques has been developed and studied to approximate the FPs of various classes of operators.

The following are some common iterative techniques in the literature: Mann [11], Ishikawa [12], Noor [13], Agarwal et al. [14], Abbas and Nazir [15], and HR [16,17].

Let and be sequences in Consider the following iterations:

The above procedures are known as the S algorithm [14], Picard-S algorithm [18], Thakur algorithm [19], and algorithm [20], respectively.
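The displays (3)–(6) did not survive in this version of the text. As a reading aid, here is a minimal Python sketch of the S, Picard-S, and Thakur updates in the form in which they are usually stated in [14], [18], and [19]; the scheme of [20] is not reproduced. The function names, the constant control parameters alpha = beta = 0.5, and the test map cos (a contraction on [0, 1]) are illustrative assumptions, not choices made in this paper.

```python
import math
from typing import Callable

Map = Callable[[float], float]


def s_step(T: Map, x: float, alpha: float, beta: float) -> float:
    # S iteration [14]: y = (1-beta)x + beta*Tx,  x_next = (1-alpha)Tx + alpha*Ty
    y = (1 - beta) * x + beta * T(x)
    return (1 - alpha) * T(x) + alpha * T(y)


def picard_s_step(T: Map, x: float, alpha: float, beta: float) -> float:
    # Picard-S iteration [18]: z = (1-beta)x + beta*Tx,
    # y = (1-alpha)Tx + alpha*Tz,  x_next = Ty
    z = (1 - beta) * x + beta * T(x)
    y = (1 - alpha) * T(x) + alpha * T(z)
    return T(y)


def thakur_step(T: Map, x: float, alpha: float, beta: float) -> float:
    # Thakur iteration [19]: z = (1-beta)x + beta*Tx,
    # y = T((1-alpha)x + alpha*z),  x_next = Ty
    z = (1 - beta) * x + beta * T(x)
    y = T((1 - alpha) * x + alpha * z)
    return T(y)


def run(step, T: Map, x0: float, n: int, alpha: float = 0.5, beta: float = 0.5) -> float:
    # Apply n steps of the chosen scheme from x0 and return the last iterate
    x = x0
    for _ in range(n):
        x = step(T, x, alpha, beta)
    return x


for step in (s_step, picard_s_step, thakur_step):
    print(step.__name__, run(step, math.cos, 1.0, 50))
```

Each step function receives the map and the current iterate and returns the next iterate, so the same driver `run` can be reused to compare the schemes on other maps.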

For contractive-like mappings, it has been verified, both analytically and numerically, that technique (6) converges faster than the iteration of Karakaya et al. [21] and techniques (3)–(5).

On the other hand, nonlinear integral equations (NIEs) describe mathematical models arising in mathematical physics, engineering, economics, biology, etc. [22]. In particular, spatial and temporal epidemic modeling and boundary value problems lead to NIEs. Recently, many researchers have turned to iterative approaches to solve NIEs; see, for example, [23,24,25,26,27].

The choice of one iterative method over another is influenced by a few key factors, including speed, stability, and data dependence. In recent years, researchers have become increasingly interested in the data dependence of the FPs of iterative algorithms; for further information, see [28,29,30,31].

Inspired by the above work, in this paper, we develop a new faster iterative scheme as follows:

where and are sequences in

The rest of the paper is organized as follows. Section 2 collects the preliminaries. Section 3 presents an analytical study of the convergence rate of our scheme and shows that, for ACMs in a BS, it converges faster than iteration (6). Section 4 covers the weak and strong convergence of the suggested technique for Suzuki generalized nonexpansive mappings (SGNMs) in uniformly convex Banach spaces (UCBSs, for short). Section 5 discusses the stability of our iterative approach. Section 6 presents numerical examples that illustrate the efficiency of the proposed method. In Section 7, the method is used to solve a nonlinear Volterra integral equation with delay, and Section 8 concludes with open problems.

2. Preliminaries

This section collects definitions, propositions, and lemmas that will help the reader follow the manuscript and will be used in the sequel.

Definition 1.

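A mapping ℑ: Θ → Θ is called a Suzuki generalized nonexpansive mapping (SGNM) if, for all x, y ∈ Θ,

$$\frac{1}{2}\|x-\Im x\| \le \|x-y\| \quad\Longrightarrow\quad \|\Im x-\Im y\| \le \|x-y\|.$$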
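The condition in Definition 1 can be tested on finitely many sample points, which gives evidence (not a proof) that a given map is a SGNM. The Python sketch below does this with exact rational arithmetic for a standard example from the literature on SGNMs, T(x) = 0 for x ≠ 3 and T(3) = 1 on [0, 3], which satisfies the condition but is not nonexpansive. The grid and the helper names are assumptions made for illustration.

```python
from fractions import Fraction
from itertools import product


def example_map(x: Fraction) -> Fraction:
    # Illustrative map on [0, 3]: T(x) = 0 for x != 3 and T(3) = 1
    return Fraction(1) if x == 3 else Fraction(0)


def satisfies_condition_c(T, points) -> bool:
    # Check on all sample pairs: (1/2)|x - Tx| <= |x - y|  implies  |Tx - Ty| <= |x - y|
    for x, y in product(points, repeat=2):
        if Fraction(1, 2) * abs(x - T(x)) <= abs(x - y) and abs(T(x) - T(y)) > abs(x - y):
            return False
    return True


def is_nonexpansive(T, points) -> bool:
    # Check |Tx - Ty| <= |x - y| on all sample pairs
    return all(abs(T(x) - T(y)) <= abs(x - y) for x, y in product(points, repeat=2))


grid = [Fraction(i, 10) for i in range(31)]  # exact rationals 0, 1/10, ..., 3
print(satisfies_condition_c(example_map, grid))  # True
print(is_nonexpansive(example_map, grid))        # False (e.g. x = 3, y = 29/10)
```

Exact fractions avoid floating-point ties at the boundary cases x = 2 and y = 2, where both sides of the implication are equal.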

Definition 2.

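A BS Ω is called uniformly convex if, for each ε ∈ (0, 2], there exists δ > 0 such that, for x, y ∈ Ω satisfying ‖x‖ ≤ 1, ‖y‖ ≤ 1, and ‖x − y‖ ≥ ε, we have

$$\left\|\frac{x+y}{2}\right\| \le 1-\delta.$$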
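To make Definition 2 concrete, the following Python sketch estimates the modulus of convexity δ(ε) = inf{1 − ‖(x + y)/2‖ : ‖x‖, ‖y‖ ≤ 1, ‖x − y‖ ≥ ε} of the Euclidean plane (a Hilbert space, hence uniformly convex) by sampling, and compares it with the closed form 1 − √(1 − ε²/4) valid in Hilbert spaces. By the parallelogram law the infimum is attained at unit vectors with ‖x − y‖ = ε, and by rotation invariance we may fix x = (1, 0); the sample size is an arbitrary choice.

```python
import math


def modulus_estimate(eps: float, m: int = 3600) -> float:
    # Sample y on the unit circle with x = (1, 0) fixed and keep the smallest
    # value of 1 - ||(x + y)/2|| among pairs with ||x - y|| >= eps
    best = math.inf
    for k in range(m):
        theta = 2 * math.pi * k / m
        y1, y2 = math.cos(theta), math.sin(theta)
        if math.hypot(1 - y1, -y2) >= eps:
            best = min(best, 1 - math.hypot((1 + y1) / 2, y2 / 2))
    return best


def modulus_exact(eps: float) -> float:
    # Closed form for Hilbert spaces: 1 - sqrt(1 - eps^2 / 4)
    return 1 - math.sqrt(1 - eps ** 2 / 4)


for eps in (0.5, 1.0, 1.5):
    print(eps, modulus_estimate(eps), modulus_exact(eps))
```

The estimate can only overshoot the true value slightly, because the sampled pairs form a subset of the admissible ones.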

Definition 3.

A BS Ω is said to satisfy Opial’s condition if, for any sequence {x_n} in Ω, x_n ⇀ x implies

$$\limsup_{n\to\infty}\|x_n-x\| < \limsup_{n\to\infty}\|x_n-y\|$$

for all y ∈ Ω with y ≠ x.

Definition 4.

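Assume that {x_n} is a bounded sequence in Ω. For x ∈ Ω, we set

$$r(x,\{x_n\}) = \limsup_{n\to\infty}\|x_n-x\|.$$

The asymptotic radius of {x_n} relative to Ω is described as

$$r(\Omega,\{x_n\}) = \inf\{r(x,\{x_n\}) : x\in\Omega\},$$

and the asymptotic center of {x_n} relative to Ω is defined by

$$A(\Omega,\{x_n\}) = \{x\in\Omega : r(x,\{x_n\}) = r(\Omega,\{x_n\})\}.$$

It is well known that A(Ω, {x_n}) consists of exactly one point when Ω is a UCBS.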
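In Ω = ℝ, the asymptotic radius and center can be approximated numerically. The Python sketch below uses the hypothetical bounded sequence x_n = (−1)^n(1 + 1/n), for which r(x, {x_n}) = 1 + |x|, so the unique center is 0 and the radius is 1. It replaces the lim sup by the maximum over a late tail of the sequence and minimizes over a grid of candidate points; the tail and the grid are arbitrary choices, so the output is only an approximation.

```python
def approx_radius(x, tail):
    # r(x, {x_n}) = limsup |x_n - x|, approximated by the max over a late tail
    return max(abs(t - x) for t in tail)


def approx_center(tail, candidates):
    # Minimize the approximate radius over the candidate points
    best = min(candidates, key=lambda x: approx_radius(x, tail))
    return best, approx_radius(best, tail)


# Hypothetical bounded sequence x_n = (-1)^n (1 + 1/n), late tail only
tail = [(-1) ** n * (1 + 1 / n) for n in range(10_000, 11_000)]
candidates = [i / 100 for i in range(-200, 201)]  # grid on [-2, 2]
center, radius = approx_center(tail, candidates)
print(center, radius)  # center 0.0, radius close to 1
```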

Definition 5

([32]). Let {a_n} and {b_n} be nonnegative real sequences converging to σ and κ, respectively. If the limit

$$\ell = \lim_{n\to\infty}\frac{|a_n-\sigma|}{|b_n-\kappa|}$$

exists, then we have the following possibilities:

- If ℓ = 0, then {a_n} converges to σ faster than {b_n} does to κ.

- If 0 < ℓ < ∞, then the two sequences have the same rate of convergence.
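Definition 5 compares limits of error ratios, so a finite computation can only suggest the value of ℓ. The short Python sketch below evaluates |a_n − σ|/|b_n − κ| for hypothetical sequences chosen for illustration (they do not come from the paper): a_n = 2^(−n) against b_n = 1/n, where the ratio n/2^n tends to 0, and c_n = 3·2^(−n) against a_n, where the ratio is constantly 3.

```python
def rate_ratio(a, sigma, b, kappa, n):
    # |a_n - sigma| / |b_n - kappa|, whose limit decides the comparison in Definition 5
    return abs(a(n) - sigma) / abs(b(n) - kappa)


def a(n):
    return 2.0 ** (-n)        # converges to 0 geometrically


def b(n):
    return 1.0 / n            # converges to 0 like 1/n


def c(n):
    return 3.0 * 2.0 ** (-n)  # same rate as a_n, different constant


for n in (10, 20, 40):
    print(n, rate_ratio(a, 0.0, b, 0.0, n))  # n / 2**n -> 0: {a_n} is faster than {b_n}
print(rate_ratio(c, 0.0, a, 0.0, 30))        # 3.0: same rate of convergence
```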

Definition 6

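([33]). Let Ω be a BS. A mapping ℑ: Θ → Θ is said to satisfy Condition (I) if there exists a nondecreasing function f: [0, ∞) → [0, ∞) with f(0) = 0 and f(r) > 0 for all r > 0 such that the inequality below holds:

$$\|x-\Im x\| \ge f\big(d(x,F(\Im))\big)$$

for all x ∈ Θ, where F(ℑ) is the set of FPs of ℑ and d(x, F(ℑ)) = inf{‖x − p‖ : p ∈ F(ℑ)}.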

Proposition 1

([34]). For a self-mapping ℑ: Θ → Θ, we have:

- If ℑ is nonexpansive, then ℑ is a SGNM.

- If ℑ is a SGNM with a nonempty set of FPs, then ℑ is a quasi-nonexpansive mapping.

Lemma 1

([34]). Assume that Θ is a subset of a BS Ω which satisfies Opial’s condition, and let ℑ: Θ → Θ be a SGNM. If {x_n} is a sequence in Θ with x_n ⇀ z and lim_{n→∞} ‖x_n − ℑx_n‖ = 0, then I − ℑ is demiclosed at zero and ℑz = z.

Lemma 2

([34]). If Θ is a weakly compact convex subset of a BS and ℑ: Θ → Θ is a SGNM, then ℑ has a FP.

Lemma 3

([32]). Let and be nonnegative real sequences such that

if and then

Lemma 4

([35]). Let and be nonnegative real sequences such that

if and then

Lemma 5

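([36]). Let Ω be a UCBS and let {t_n} be a sequence such that 0 < a ≤ t_n ≤ b < 1 for all n ∈ ℕ. Assume that {x_n} and {y_n} are two sequences in Ω such that

$$\limsup_{n\to\infty}\|x_n\| \le r,\qquad \limsup_{n\to\infty}\|y_n\| \le r,\qquad \lim_{n\to\infty}\|t_nx_n+(1-t_n)y_n\| = r$$

for some r ≥ 0. Then,

$$\lim_{n\to\infty}\|x_n-y_n\| = 0.$$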

3. Speed of Convergence

In this section, we discuss the speed of convergence of our iterative scheme for ACMs.

Theorem 1.

Proof.

Let ; using (7), one has

As and for all then

Hence, (11) takes the form

From (12), we deduce that

It follows from (13) that

From the definition of and , we have Since for all the inequality (14) can be written as

Passing in (15), we get i.e.,

To prove uniqueness, suppose that ℑ has two distinct FPs; then

which is a contradiction; therefore, the FP of ℑ is unique. □

In the sense of Definition 5, the following theorem shows that our method (7) converges faster than iteration (6).

Theorem 2.

4. Convergence Results

In this section, we obtain some convergence results for our iteration scheme (7) using SGNMs in the setting of UCBSs. We begin with the following lemmas:

Lemma 6.

Assume that Θ is a nonempty CCS of a BS Ω and ℑ: Θ → Θ is a SGNM with a FP. If the sequence {x_n} is generated by (7), then lim_{n→∞} ‖x_n − p‖ exists for each FP p of ℑ.

Proof.

Let p be a FP of ℑ. Since ℑ is quasi-nonexpansive by Proposition 1, one has

Utilizing (7), one gets

Lemma 7.

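Let Θ be a nonempty CCS of a UCBS Ω and let ℑ: Θ → Θ be a SGNM. If the sequence {x_n} is generated by (7), then ℑ has a FP if and only if {x_n} is bounded and lim_{n→∞} ‖x_n − ℑx_n‖ = 0.

Proof.

Let p be a FP of ℑ. Thanks to Lemma 6, {x_n} is bounded and lim_{n→∞} ‖x_n − p‖ exists. Set

By Proposition 1, we get

Hence,

Conversely, suppose that {x_n} is bounded and lim_{n→∞} ‖x_n − ℑx_n‖ = 0, and let p be the asymptotic center of {x_n}; then, according to Definition 4, one has

which implies that ℑp is also an asymptotic center of {x_n}. As Ω is uniformly convex, the asymptotic center consists of exactly one point; hence, ℑp = p. □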

Theorem 3.

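Let {x_n} be a sequence generated by (7), and let Θ and ℑ be as in Lemma 7. If Ω satisfies Opial’s condition and ℑ has a FP, then {x_n} converges weakly to a FP of ℑ.

Proof.

Let p be a FP of ℑ; thanks to Lemma 6, lim_{n→∞} ‖x_n − p‖ exists.

Next, we show that {x_n} has a unique weak subsequential limit and that this limit is a FP of ℑ. To this end, let {x_{n_j}} and {x_{n_k}} be subsequences of {x_n} with x_{n_j} ⇀ u and x_{n_k} ⇀ v for some u, v ∈ Θ. From Lemma 7, lim_{n→∞} ‖x_n − ℑx_n‖ = 0. Since I − ℑ is demiclosed at zero by Lemma 1, we obtain ℑu = u, that is, u is a FP of ℑ. Similarly, v is a FP of ℑ.

Now, if u ≠ v, then by Opial’s condition we get

which is a contradiction; hence, u = v, and {x_n} converges weakly to a FP of ℑ. □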

Theorem 4.

Proof.

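Thanks to Lemmas 2 and 7, ℑ has a FP and lim_{n→∞} ‖x_n − ℑx_n‖ = 0. Since Θ is compact, there exists a subsequence {x_{n_j}} of {x_n} such that x_{n_j} ⟶ z for some z ∈ Θ. Clearly,

Letting j → ∞, we get ‖z − ℑz‖ = 0, i.e., z is a FP of ℑ. From Lemma 6, lim_{n→∞} ‖x_n − z‖ exists; hence, {x_n} converges strongly to z. □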

Theorem 5.

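Let {x_n} be a sequence generated by (7), and let Θ and ℑ be as in Lemma 7. Then {x_n} converges strongly to a FP of ℑ if and only if liminf_{n→∞} d(x_n, F(ℑ)) = 0, where F(ℑ) is the set of FPs of ℑ and d(x, F(ℑ)) = inf{‖x − p‖ : p ∈ F(ℑ)}.

Proof.

The necessity is clear. Conversely, suppose that liminf_{n→∞} d(x_n, F(ℑ)) = 0. Using Lemma 6, lim_{n→∞} ‖x_n − p‖ exists for each p ∈ F(ℑ), which implies that lim_{n→∞} d(x_n, F(ℑ)) exists. Hence,

Now, we claim that {x_n} is a Cauchy sequence in Θ. Indeed, for every ε > 0 there exists n_0 ∈ ℕ such that

Therefore

Thus, {x_n} is a Cauchy sequence in Θ. The closedness of Θ implies that there exists z ∈ Θ such that x_n ⟶ z. As lim_{n→∞} d(x_n, F(ℑ)) = 0 and F(ℑ) is closed, d(z, F(ℑ)) = 0. Therefore, z ∈ F(ℑ), and this completes the proof. □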

5. Stability Analysis

This section demonstrates the stability of our iteration approach (7).

Theorem 6.

Proof.

To show that iteration (7) is ℑ-stable, it suffices to prove that

Since , then Therefore, all assumptions of Lemma 3 hold, consequently i.e., .

Conversely, let then

Passing , we obtain This finishes the proof. □
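The argument above follows the usual notion of stability (often attributed to Harder and Hicks): an iteration x_{n+1} = f(ℑ, x_n) converging to p is ℑ-stable if, for every sequence {y_n}, the errors ε_n = ‖y_{n+1} − f(ℑ, y_n)‖ tend to 0 exactly when y_n ⟶ p. Since scheme (7) is not reproduced in this text, the Python sketch below uses the Picard-S step of [18] as a stand-in, together with the hypothetical contraction x ↦ x/2 whose fixed point is 0. It only illustrates how ε_n is computed for a converging and a non-converging sequence; it does not verify Theorem 6.

```python
def picard_s(T, x, alpha=0.5, beta=0.5):
    # One Picard-S step [18], used here only as a stand-in for scheme (7)
    z = (1 - beta) * x + beta * T(x)
    y = (1 - alpha) * T(x) + alpha * T(z)
    return T(y)


def perturbation_errors(T, ys, step=picard_s):
    # eps_n = |y_{n+1} - f(T, y_n)| for an arbitrary sequence {y_n}
    return [abs(ys[n + 1] - step(T, ys[n])) for n in range(len(ys) - 1)]


def half(x):
    # Hypothetical contraction with the unique fixed point 0
    return x / 2


converging = [1 / (n + 1) for n in range(1001)]  # y_n -> 0 = p
constant = [1.0] * 1001                          # y_n does not tend to p

print(perturbation_errors(half, converging)[-1])  # small: eps_n -> 0
print(perturbation_errors(half, constant)[-1])    # 0.78125 for every n
```

For the constant sequence one step gives f(ℑ, 1) = 0.21875, so ε_n stays at 0.78125, matching the fact that this sequence does not converge to the fixed point.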

To support Theorem 6, we investigate the following example:

Example 1.

Let and It is clear that, 0 is a FP of

First, we show that ℑ satisfies the condition (1). For this, take and for all we can write

Next, we prove that iteration (7) is stable. To this end, take and then

Set Clearly, for all Thanks to Lemma 3, Consider we get

taking we get This proves that the suggested method (7) is stable.

6. Numerical Experiments

The following example compares the accuracy and speed of our method with those of other algorithms and illustrates the analytical findings of Theorem 2.

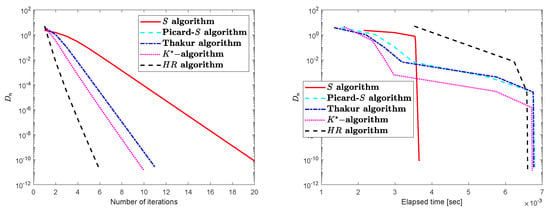

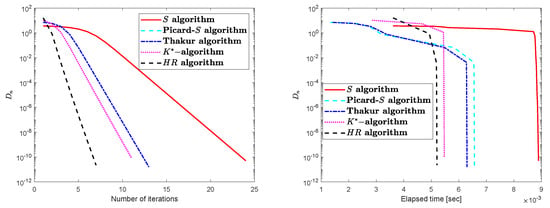

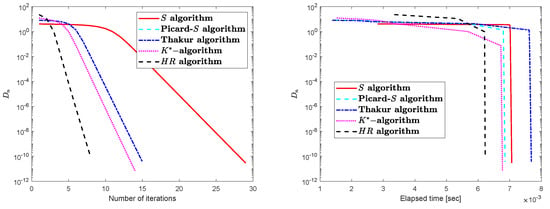

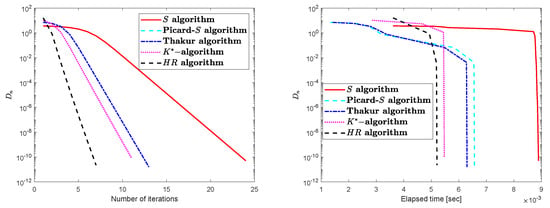

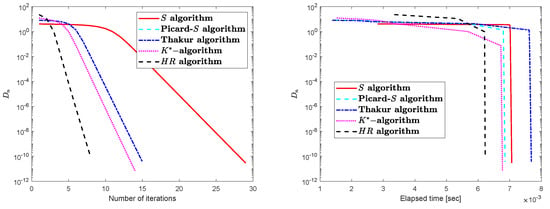

Example 2.

Let , , and be a mapping described as

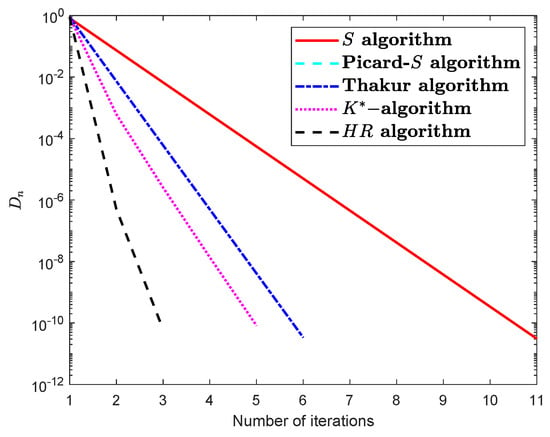

Obviously, 6 is the unique FP of ℑ. Using distinct starting points, we obtain Table 1, Table 2 and Table 3 and Figure 1, Figure 2 and Figure 3, which compare the different iterative techniques.
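The map of Example 2 is not reproduced in this text, so the Python sketch below runs the S iteration of [14] (in its usual form, y_n = (1 − β)x_n + βℑx_n and x_{n+1} = (1 − α)ℑx_n + αℑy_n) on the hypothetical map T(x) = √(x + 30), a contraction on [0, ∞) whose unique FP is also 6, and prints the errors |x_n − 6| from several starting points. The map, the starting points, and α = β = 0.5 are assumptions for illustration, so the printed numbers are not those of Tables 1–3.

```python
import math


def T(x: float) -> float:
    # Hypothetical map with unique fixed point 6, since sqrt(6 + 30) = 6
    # (not the map of Example 2)
    return math.sqrt(x + 30.0)


def s_iteration_errors(x0: float, p: float = 6.0, alpha: float = 0.5,
                       beta: float = 0.5, n_iter: int = 20):
    # Errors |x_n - p| for n = 0, ..., n_iter of the S iteration started at x0
    x, errors = x0, [abs(x0 - p)]
    for _ in range(n_iter):
        y = (1 - beta) * x + beta * T(x)
        x = (1 - alpha) * T(x) + alpha * T(y)
        errors.append(abs(x - p))
    return errors


starts = (0.0, 20.0, 100.0)
table = {x0: s_iteration_errors(x0) for x0 in starts}
for n in range(6):
    # One row per iteration, one column per starting point
    print(n, *(f"{table[x0][n]:.3e}" for x0 in starts))
```

Swapping in another step rule only requires changing the two update lines inside the loop.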

Table 1.

Example 2: (algorithm) at .

Table 2.

Example 2: algorithm at .

Table 3.

Example 2: algorithm at .

Figure 1.

The suggested algorithm (algorithm) at .

Figure 2.

algorithm at .

Figure 3.

algorithm at .

The following example illustrates that, under suitable conditions, our technique (7) converges faster than some of the best-known iterative algorithms in the literature.

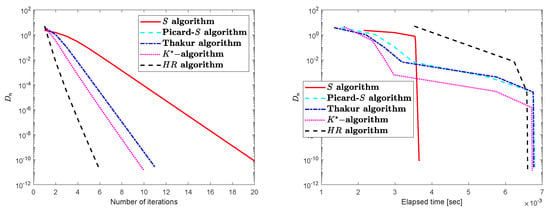

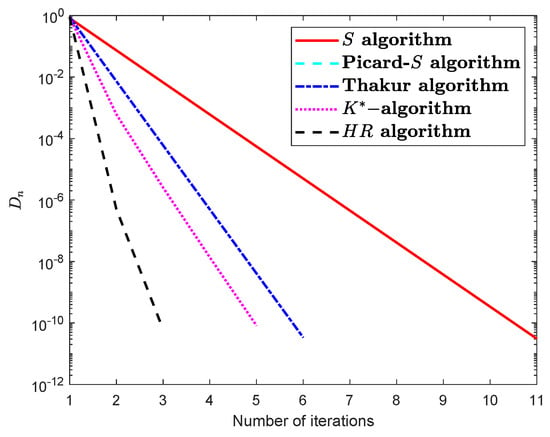

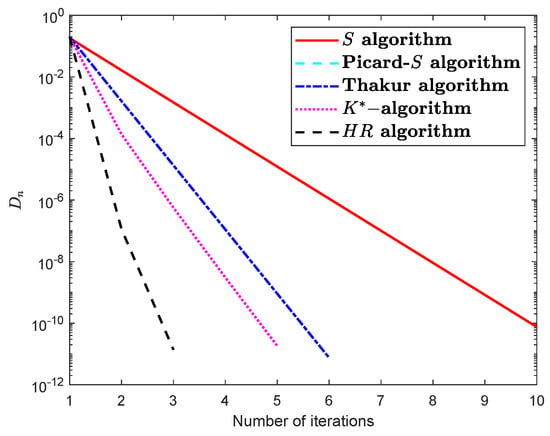

Example 3.

Define the mapping by

First, we claim that the mapping ℑ is a SGNM but not a nonexpansive mapping. Put and one has

and

Hence This proves that ℑ is not a nonexpansive mapping.

Next, to prove that ℑ is a SGNM, we consider the following cases:

(i) If we have

since then we must write Obviously, is impossible. So, Hence, which implies that , thus Now,

and

Therefore,

(ii) If we get

For we obtain which leads to the following subcases:

If one can write

Hence,

So, we have

If one has

Since and we have

Clearly, when and is similar to case ; so, we shall discuss when and Consider

and

which implies that

Based on the above cases, we conclude that ℑ is an SGNM.

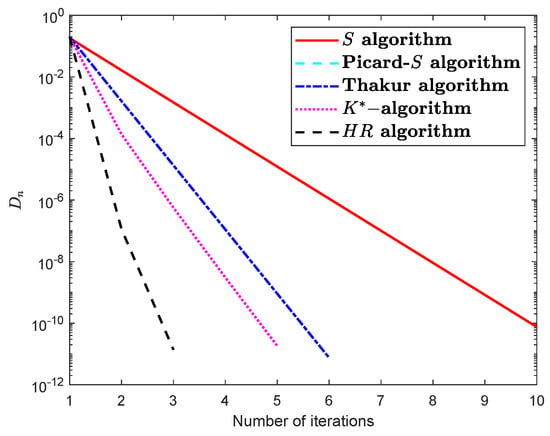

Finally, using various choices of the control parameters, we describe the behavior of technique (7) and show that it converges faster than the S, Thakur, and iteration procedures; see Table 4 and Table 5 and Figure 4 and Figure 5.

Table 4.

Example 3: Comparison of the suggested algorithm numerically (algorithm) at .

Table 5.

Example 3: Comparison of the suggested algorithm numerically (algorithm) when .

Figure 4.

Comparison of the suggested algorithm visually (algorithm) at .

Figure 5.

Comparison of the suggested algorithm visually (algorithm) when .

7. Solving a Nonlinear Volterra Equation with Delay

In this section, we use algorithm (7) to solve the following nonlinear Volterra integral equation with delay:

with the condition

where and . Clearly, the space is a BS, where the norm is described as and is the space of all continuous functions defined on

Now, we present the main theorem in this part.

Theorem 7.

Suppose that Θ is a nonempty CCS of a BS ℶ and is a sequence generated by (7) with Let be an operator described as

with Also, assume that the statements below are true:

- the functions and are continuous;

- there exists a constant such thatfor all and

- for each
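The equation of this section and the operator of Theorem 7 are not reproduced in this text, so the following Python sketch uses a hypothetical linear test problem with constant delay τ = 0.5: x(t) = 1 + ∫₀ᵗ x(s − τ) ds on [0, 1], with history x(t) = 1 on [−τ, 0]. Its exact solution is 1 + t on [0, τ] and 1 + t + (t − τ)²/2 on [τ, 2τ], and the operator is a contraction with constant at most 1 − τ = 0.5 in the sup norm. The integral is discretized with the trapezoidal rule, and plain Picard successive approximations stand in for scheme (7).

```python
def delay_operator(x, h, lag):
    # (Ix)(t_i) = 1 + int_0^{t_i} x(s - tau) ds on the grid t_i = i*h, tau = lag*h.
    # The history value 1 is used whenever s - tau <= 0; trapezoidal rule for the integral.
    delayed = [1.0 if j <= lag else x[j - lag] for j in range(len(x))]
    out, integral = [1.0], 0.0
    for i in range(1, len(x)):
        integral += 0.5 * h * (delayed[i - 1] + delayed[i])
        out.append(1.0 + integral)
    return out


def solve(h=0.05, lag=10, steps=20, tol=1e-13, max_iter=200):
    # Picard successive approximations x_{k+1} = I(x_k), started from the history value
    x = [1.0] * (steps + 1)
    for _ in range(max_iter):
        x_new = delay_operator(x, h, lag)
        if max(abs(u - v) for u, v in zip(x_new, x)) < tol:
            return x_new
        x = x_new
    return x


tau = 10 * 0.05
sol = solve()
print(sol[10], 1 + tau)                     # at t = tau
print(sol[20], 1 + 2 * tau + tau ** 2 / 2)  # at t = 2*tau
```

Because the grid is aligned with τ and the exact solution is piecewise polynomial of low degree, the trapezoidal rule reproduces the nodal values up to rounding error here; for a genuinely nonlinear kernel one would expect a discretization error as well.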

8. Conclusions and Open Problems

The effectiveness of an iterative technique is widely acknowledged to depend on two essential factors: the rate of convergence and the number of iterations required. A method that converges faster, with fewer iterations, is more successful in approximating FPs. In this work, we have shown analytically and numerically that, in terms of convergence speed, our method performs better than several popular iterative algorithms, namely the S algorithm [14], the Picard-S algorithm [18], the Thakur algorithm [19], and the algorithm [20]. Furthermore, the comparison tables and graphs illustrate the speed of convergence and the stability results. The application to a nonlinear Volterra integral equation with delay further supports our methodology. Finally, we list the following directions for future work:

- The variational inequality problem may be solved using our iteration (7) if the mapping ℑ is defined on a Hilbert space H. This problem can be described as follows: find x* ∈ C such that ⟨Ax*, x − x*⟩ ≥ 0 for all x ∈ C, where C is a nonempty closed convex subset of H and A: C → H is a nonlinear mapping. Variational inequalities are a crucial modeling tool in several disciplines, including engineering mechanics, transportation, economics, and mathematical programming; see [37,38] for more details.

- Our methodology can be extended to gradient and extragradient projection techniques, which are crucial for locating saddle points and solving a variety of optimization problems; see [39].

- The convergence of the proposed algorithm may be accelerated by adding shrinking projection and CQ terms. These techniques can improve the performance of algorithms and yield strong convergence; for more details, see [40,41,42,43].

- If ℑ is inverse strongly monotone and an inertial term is added to our algorithm, then we obtain an inertial proximal point algorithm. Such algorithms are used in many applications, such as monotone variational inequalities, image restoration problems, convex optimization problems, and split convex feasibility problems [44,45]. These problems arise as mathematical models in areas such as machine learning and linear inverse problems.

- Second-order and fractional differential equations can be transformed into integral equations by means of Green’s functions, so they may be treated with our approach in the same way as in Section 7.
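The variational inequality direction above rests on a standard fact: for any λ > 0, a point x* ∈ C solves the variational inequality ⟨Ax*, x − x*⟩ ≥ 0 for all x ∈ C if and only if x* = P_C(x* − λAx*), where P_C is the metric projection onto C. The Python sketch below is a minimal illustration under hypothetical choices (C = [0, 1]², A(x) = x − c, λ = 0.5). It applies plain Picard iteration to x ↦ P_C(x − λAx) rather than scheme (7); for this A the solution is the projection of c onto C.

```python
def project_box(x, lo=0.0, hi=1.0):
    # Metric projection P_C onto the box C = [lo, hi]^d (componentwise clipping)
    return [min(max(xi, lo), hi) for xi in x]


def vi_map(x, c, lam=0.5):
    # x -> P_C(x - lam * A(x)) with the hypothetical monotone operator A(x) = x - c
    return project_box([xi - lam * (xi - ci) for xi, ci in zip(x, c)])


def solve_vi(c, x0, n_iter=200):
    # Picard iteration on the fixed-point reformulation of the variational inequality
    x = x0
    for _ in range(n_iter):
        x = vi_map(x, c)
    return x


print(solve_vi([2.0, -1.0], [0.5, 0.5]))  # [1.0, 0.0], the projection of c onto C
```

Any FP iteration for nonexpansive or contractive maps can replace the Picard loop, which is the sense in which FP schemes apply to variational inequalities.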

Author Contributions

H.A.H. contributed to the conceptualization and writing of the theoretical results; D.A.K. contributed to the conceptualization, writing and editing. All authors have read and agreed to the published version of this manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data used to support the findings of this study are available from the corresponding author upon request.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

| Abbreviation | Meaning |
|---|---|
| FPs | Fixed points |
| BSs | Banach spaces |
| CCS | Closed convex subset |
| ⇀ | Weak convergence |
| ⟶ | Strong convergence |
| ACMs | Almost contraction mappings |
| NIEs | Nonlinear integral equations |
| SGNMs | Suzuki generalized nonexpansive mappings |
| UCBSs | Uniformly convex Banach spaces |
| US | Unique solution |

References

- Berinde, V. Iterative Approximation of Fixed Points; Springer: Berlin/Heidelberg, Germany, 2007.

- Karapınar, E.K.; Abdeljawad, T.; Jarad, F. Applying new fixed point theorems on fractional and ordinary differential equations. Adv. Differ. Equ. 2019, 2019, 421.

- Khan, S.H. A Picard-Mann hybrid iterative process. Fixed Point Theory Appl. 2013, 2013, 69.

- Phuengrattana, W.; Suantai, S. On the rate of convergence of Mann, Ishikawa, Noor and SP-iterations for continuous functions on an arbitrary interval. J. Comput. Appl. Math. 2011, 235, 3006–3014.

- Karahan, I.; Ozdemir, M. A general iterative method for approximation of fixed points and their applications. Adv. Fixed Point Theory 2013, 3, 510–526.

- Chugh, R.; Kumar, V.; Kumar, S. Strong convergence of a new three step iterative scheme in Banach spaces. Am. J. Comp. Math. 2012, 2, 345–357.

- Berinde, V. On the approximation of fixed points of weak contractive mapping. Carpath. J. Math. 2003, 19, 7–22.

- Zamfirescu, T. Fixed point theorems in metric spaces. Arch. Math. 1972, 23, 292–298.

- Imoru, C.O.; Olatinwo, M.O. On the stability of Picard and Mann iteration processes. Carpath. J. Math. 2003, 19, 155–160.

- Osilike, M.O.; Udomene, A. Short proofs of stability results for fixed point iteration procedures for a class of contractive-type mappings. Indian J. Pure Appl. Math. 1999, 30, 1229–1234.

- Mann, W.R. Mean value methods in iteration. Proc. Am. Math. Soc. 1953, 4, 506–510.

- Ishikawa, S. Fixed points by a new iteration method. Proc. Am. Math. Soc. 1974, 44, 147–150.

- Noor, M.A. New approximation schemes for general variational inequalities. J. Math. Anal. Appl. 2000, 251, 217–229.

- Agarwal, R.P.; O’Regan, D.; Sahu, D.R. Iterative construction of fixed points of nearly asymptotically nonexpansive mappings. J. Nonlinear Convex Anal. 2007, 8, 61–79.

- Abbas, M.; Nazir, T. A new faster iteration process applied to constrained minimization and feasibility problems. Math. Vesn. 2014, 66, 223–234.

- Hammad, H.A.; ur Rehman, H.; De la Sen, M. A novel four-step iterative scheme for approximating the fixed point with a supportive application. Inf. Sci. Lett. 2021, 10, 333–339.

- Hammad, H.A.; ur Rehman, H.; De la Sen, M. A new four-step iterative procedure for approximating fixed points with application to 2D Volterra integral equations. Mathematics 2022, 10, 4257.

- Gursoy, F.; Karakaya, V. A Picard-S hybrid type iteration method for solving a differential equation with retarded argument. arXiv 2014, arXiv:1403.2546v2.

- Thakur, B.S.; Thakur, D.; Postolache, M. A new iterative scheme for numerical reckoning fixed points of Suzuki’s generalized nonexpansive mappings. Appl. Math. Comput. 2016, 275, 147–155.

- Ullah, K.; Arshad, M. Numerical reckoning fixed points for Suzuki’s generalized nonexpansive mappings via new iteration process. Filomat 2018, 32, 187–196.

- Karakaya, V.; Atalan, Y.; Dogan, K.; Bouzara, N.E.H. Some fixed point results for a new three steps iteration process in Banach spaces. Fixed Point Theory 2017, 18, 625–640.

- Maleknejad, K.; Torabi, P. Application of fixed point method for solving Volterra-Hammerstein integral equation. UPB Sci. Bull. Ser. A 2012, 74, 45–56.

- Hammad, H.A.; Zayed, M. Solving systems of coupled nonlinear Atangana–Baleanu-type fractional differential equations. Bound. Value Probl. 2022, 2022, 101.

- Hammad, H.A.; Agarwal, P.; Momani, S.; Alsharari, F. Solving a fractional-order differential equation using rational symmetric contraction mappings. Fractal Fract. 2021, 5, 159.

- Atalan, Y.; Karakaya, V. Iterative solution of functional Volterra-Fredholm integral equation with deviating argument. J. Nonlinear Convex Anal. 2017, 18, 675–684.

- Lungu, N.; Rus, I.A. On a functional Volterra Fredholm integral equation via Picard operators. J. Math. Ineq. 2009, 3, 519–527.

- Hammad, H.A.; De la Sen, M. A technique of tripled coincidence points for solving a system of nonlinear integral equations in POCML spaces. J. Inequal. Appl. 2020, 2020, 211.

- Ali, F.; Ali, J. Convergence, stability, and data dependence of a new iterative algorithm with an application. Comput. Appl. Math. 2020, 39, 267.

- Hudson, A.; Joshua, O.; Adefemi, A. On modified Picard-S-AK hybrid iterative algorithm for approximating fixed point of Banach contraction map. MathLAB J. 2019, 4, 111–125.

- Ahmad, J.; Ullah, K.; Hammad, H.A.; George, R. On fixed-point approximations for a class of nonlinear mappings based on the JK iterative scheme with application. AIMS Math. 2023, 8, 13663–13679.

- Zălinescu, C. On Berinde’s method for comparing iterative processes. Fixed Point Theory Algorithms Sci. Eng. 2021, 2021, 2.

- Berinde, V. Picard iteration converges faster than Mann iteration for a class of quasicontractive operators. Fixed Point Theory Appl. 2004, 2, 97–105.

- Senter, H.F.; Dotson, W. Approximating fixed points of nonexpansive mapping. Proc. Am. Math. Soc. 1974, 44, 375–380.

- Suzuki, T. Fixed point theorems and convergence theorems for some generalized nonexpansive mappings. J. Math. Anal. Appl. 2008, 340, 1088–1095.

- Soltuz, S.M.; Grosan, T. Data dependence for Ishikawa iteration when dealing with contractive like operators. Fixed Point Theory Appl. 2008, 2008, 242916.

- Schu, J. Weak and strong convergence to fixed points of asymptotically nonexpansive mappings. Bull. Aust. Math. Soc. 1991, 43, 153–159.

- Konnov, I.V. Combined Relaxation Methods for Variational Inequalities; Springer: Berlin/Heidelberg, Germany, 2001.

- Facchinei, F.; Pang, J.S. Finite-Dimensional Variational Inequalities and Complementarity Problems; Springer Series in Operations Research; Springer: New York, NY, USA, 2003; Volume II.

- Korpelevich, G.M. The extragradient method for finding saddle points and other problems. Ekon. Mat. Metody 1976, 12, 747–756.

- Martinez-Yanes, C.; Xu, H.K. Strong convergence of the CQ method for fixed point iteration processes. Nonlinear Anal. 2006, 64, 2400–2411.

- Hammad, H.A.; ur Rehman, H.; De la Sen, M. Shrinking projection methods for accelerating relaxed inertial Tseng-type algorithm with applications. Math. Probl. Eng. 2020, 2020, 7487383.

- Tuyen, T.M.; Hammad, H.A. Effect of shrinking projection and CQ-methods on two inertial forward-backward algorithms for solving variational inclusion problems. Rend. Circ. Mat. Palermo II Ser. 2021, 70, 1669–1683.

- Hammad, H.A.; Cholamjiak, W.; Yambangwai, W.; Dutta, H. A modified shrinking projection methods for numerical reckoning fixed points of G-nonexpansive mappings in Hilbert spaces with graph. Miskolc Math. Notes 2019, 20, 941–956.

- Bauschke, H.H.; Combettes, P.L. Convex Analysis and Monotone Operator Theory in Hilbert Spaces; Springer: Berlin/Heidelberg, Germany, 2011.

- Bauschke, H.H.; Borwein, J.M. On projection algorithms for solving convex feasibility problems. SIAM Rev. 1996, 38, 367–426.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).