Abstract

This paper discusses inferential approaches for the problem of constant-stress accelerated life testing when the failure data are progressive type-I interval censored. Both frequentist and Bayesian estimations are carried out under the assumption that the log-normal location parameter is nonconstant and follows a log-linear life-stress model. The confidence intervals of unknown parameters are also constructed based on asymptotic theory and Bayesian techniques. An analysis of a real data set is combined with a Monte Carlo simulation to provide a thorough assessment of the proposed methods.

Keywords:

constant-stress accelerated life test; progressive type-I interval censoring; maximum likelihood estimation; EM algorithm; Bayesian estimation; Metropolis–Hastings algorithm; Tierney and Kadane’s approximation; log-normal distribution

MSC:

62N02; 62N05; 62F15

1. Introduction

Accelerated life testing (ALT) is a valuable technique used in manufacturing design to test the reliability and longevity of products in a cost-effective and efficient manner. ALTs involve subjecting the units to higher-than-use stress conditions, thereby accelerating the aging and failure processes, and then estimating the lifetime distribution features at the use condition through a statistically appropriate model. The constant-stress ALT (CS-ALT) model is one of the two main methods used in ALTs. In this method, the units are subjected to a constant stress level throughout the test cycle. The second method is the step-stress model, which involves subjecting the units to a series of increasing stress levels in a stepwise manner. For an extensive coverage of various aspects of the ALT models, including test designs, data analysis methods, and reliability estimation, one can consult the monographs written by Nelson [1], Meeker and Escobar [2], Bagdonavicius and Nikulin [3], and Limon et al. [4].

Most published test plans use type-I or type-II censoring when testing items under design stress in an accelerated setting. Type-I censoring stops the test after a fixed duration, which fixes the length of the experiment but leaves the number of observed failures uncertain. Type-II censoring stops the test after a predetermined number of failures, which fixes the number of failures but leaves the duration of the experiment uncertain; the units still on test at termination are treated as censored observations. In reliability testing, type-II censoring schemes are often employed when conducting experiments to determine the reliability or failure rate of a product or system. In this context, Zheng [5] expressed the asymptotic Fisher information for type-II censored data in terms of the hazard function and demonstrated that the factorization of the hazard function can be characterized by the linear property of the Fisher information under type-II censoring. Moreover, Xu and Fei [6] explored theories related to the approximate optimal design for a simplified step-stress ALT method under type-II censoring. They developed statistically approximated optimal ALT plans with the objective of minimizing the asymptotic variance of the maximum likelihood estimators for the p-th percentile of lifetime at the design stress level. Utilizing data from CS-ALT experiments with type-II censoring, Wenhui et al. [7] studied interval estimation for the two-parameter exponential distribution. Furthermore, they derived generalized confidence intervals for the parameters in the life-stress model, including the location parameter, as well as the mean and reliability function at the designed stress level.

The differences between these two types of censoring are more pronounced for small sample sizes and become less noticeable as the sample size increases. Studies relevant to this issue include Nelson and Kielpinski [8], Bai et al. [9], and Tang et al. [10]. While both types of censoring have benefits, they share a significant disadvantage: units can be removed from the test only at the conclusion of the experiment. In contrast, “progressive censoring”, which is a more comprehensive censoring approach, permits units to be removed during a test before its completion. For a more in-depth examination of progressive censoring, see Balakrishnan and Aggarwala [11]. In certain situations, continuous monitoring of an experiment to observe exact failure times is desirable but not feasible due to time and cost constraints. In such cases, only the number of failures between predetermined inspection times is recorded, which is known as interval censoring. Aggarwala [12] developed a more general interval censoring scheme called progressive type-I interval censoring by combining interval censoring and progressive type-I censoring. In this scheme, the experimental units are observed at predetermined intervals, and only those units that have not failed up to a certain point are subjected to more frequent monitoring. This approach allows for more efficient use of resources and provides more precise estimates of failure times. Progressive type-I interval censoring has received significant attention from many authors in recent years. For instance, Chandrakant and Tripathi [13], Singh and Tripathi [14], Chen et al. [15], and Arabi Belaghi et al. [16] have developed various methods for estimating the unknown parameters of different lifetime models under the progressive type-I interval censoring scheme.

Several studies have been conducted on statistical inference for ALT models under different types of censoring schemes, such as type-I, type-II, and hybrid censoring (see Abd El-Raheem [17,18,19], Sief et al. [20], Feng et al. [21], Balakrishnan et al. [22], and Nassar et al. [23]). However, the study of ALT models under a progressive type-I interval censoring scheme is still lacking in the literature. We aim to address this gap by exploring inferential approaches for CS-ALT models when the failure data are progressive type-I interval censored and log-normally distributed.

The log-normal distribution is widely used in failure time analysis and has proven to be a flexible and effective model for analyzing various physical phenomena. One notable characteristic of the log-normal distribution is that its hazard rate starts at zero, indicating a low failure rate at the beginning, then gradually increases to its maximum value, and eventually approaches zero as time approaches infinity. This behavior makes the log-normal distribution suitable for modeling phenomena that exhibit an initially low failure rate, followed by an increasing failure rate, and then a declining failure rate as time progresses. The applications of the log-normal distribution extend to various fields of study, including actuarial science, business, economics, and lifetime analysis of electronic components. Moreover, the log-normal distribution is valuable for analyzing both homogeneous and heterogeneous data. It can handle skewed data that deviate from a normal distribution, making it suitable for modeling real-world datasets that exhibit asymmetry. This versatility has led to its application in a wide range of practical studies. One can refer to [24,25,26] for further insights into the applications of the log-normal distribution in various fields and its usefulness in analyzing different types of data.

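Assume that the lifetime of the test units, represented by a random variable $T$, follows a log-normal distribution with a nonconstant location parameter $\mu$, which is affected by stress, and a scale parameter $\sigma$. Then the probability density function (PDF) and the cumulative distribution function (CDF) of the log-normal distribution can be expressed as follows:

$$f(t;\mu,\sigma)=\frac{1}{t\,\sigma\sqrt{2\pi}}\exp\left\{-\frac{(\ln t-\mu)^{2}}{2\sigma^{2}}\right\},\quad t>0, \qquad (1)$$

$$F(t;\mu,\sigma)=\Phi\left(\frac{\ln t-\mu}{\sigma}\right),\quad t>0, \qquad (2)$$

where $\Phi(\cdot)$ is the standard normal CDF.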
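As an illustration of the hazard shape described above, the following minimal Python sketch evaluates the log-normal PDF, CDF, and hazard rate on a small grid. The function names and the parameter values `mu = 1.0` and `sigma = 0.5` are assumptions chosen for illustration only; they are not taken from the paper.

```python
import math


def lognormal_pdf(t, mu, sigma):
    """Log-normal density with log-scale location mu and scale sigma."""
    z = (math.log(t) - mu) / sigma
    return math.exp(-0.5 * z * z) / (t * sigma * math.sqrt(2.0 * math.pi))


def lognormal_cdf(t, mu, sigma):
    """P(T <= t) = Phi((ln t - mu) / sigma), written with erfc."""
    z = (math.log(t) - mu) / sigma
    return 0.5 * math.erfc(-z / math.sqrt(2.0))


def lognormal_sf(t, mu, sigma):
    """Survival function 1 - F(t); erfc keeps accuracy in the upper tail."""
    z = (math.log(t) - mu) / sigma
    return 0.5 * math.erfc(z / math.sqrt(2.0))


def lognormal_hazard(t, mu, sigma):
    """Hazard rate f(t) / (1 - F(t))."""
    return lognormal_pdf(t, mu, sigma) / lognormal_sf(t, mu, sigma)


# Hypothetical parameter values, used only to show the hazard shape.
mu, sigma = 1.0, 0.5
grid = [0.05, 1.0, 5.0, 20.0, 100.0]
hazards = [lognormal_hazard(t, mu, sigma) for t in grid]
for t, h in zip(grid, hazards):
    print(f"t = {t:7.2f}  hazard = {h:.4g}")
```

For these values the hazard is close to zero near the origin, rises to a peak, and then decays slowly, which is the non-monotone behavior noted in the Introduction.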

The article is structured into several sections, which are summarized as follows: Section 2 provides a description of the test process and the assumptions that underlie it. In Section 3, the maximum likelihood estimates along with their associated asymptotic standard error are discussed. Section 4 focuses on the discussion of Bayesian estimation techniques. The proposed methods in Section 3 and Section 4 are then evaluated in Section 5 using simulation studies. Finally, Section 6 provides a summary of the findings as a conclusion.

2. Model and Underlying Assumptions

2.1. Model Description

In the CS-ALT method, the test units are divided into groups and each group is subjected to a higher stress level than the typical stress level. The stress levels are denoted as $S_0$ for the standard stress level and $S_1 < S_2 < \cdots < S_k$ for k different test stress levels. The data are collected using a progressive type-I interval censored sampling approach for each stress level $S_i$, $i = 1, \ldots, k$.

In this approach, a set of $n_i$ identical units is simultaneously placed on test at time $\tau_{i0} = 0$ for each stress level $S_i$. Inspections are performed at predetermined times $\tau_{i1} < \tau_{i2} < \cdots < \tau_{im_i}$, with $\tau_{im_i}$ being the planned end time of the experiment. During each inspection, the number of failed units $X_{ij}$ within the interval $(\tau_{i(j-1)}, \tau_{ij}]$ is recorded. Additionally, at each inspection time $\tau_{ij}$, $R_{ij}$ surviving units are randomly selected and eliminated from the test, where $R_{ij}$ should not exceed the number of remaining units $n_i - \sum_{l=1}^{j} X_{il} - \sum_{l=1}^{j-1} R_{il}$. The value of $R_{ij}$ is determined as a specified percentage of the remaining units at $\tau_{ij}$, using the formula $R_{ij} = \lfloor p_{ij}\,(n_i - \sum_{l=1}^{j} X_{il} - \sum_{l=1}^{j-1} R_{il}) \rfloor$, where $\lfloor y \rfloor$ denotes the largest integer not exceeding $y$. The percentage values $p_{i1}, \ldots, p_{im_i}$ are pre-specified, with $p_{im_i} = 1$, indicating that all remaining units are eliminated at the final inspection time.

In this scenario, a progressive type-I interval censored sample of size $n_i$ can be represented as:

$$\{(X_{ij}, R_{ij}, \tau_{ij}),\ j = 1, \ldots, m_i\}, \quad i = 1, \ldots, k.$$

Here, the total sample size n is given by the sum of the number of units in each stress level, which is defined as $n = \sum_{i=1}^{k} n_i$.

2.2. Basic Assumptions

In the CS-ALT context, the following assumptions are considered:

- The lifetime of test units follows a log-normal distribution at stress level , with PDF given by

- For the log-normal location parameter , the life-stress model is assumed to be log-linear, i.e., it is described as

Here, a and b (where ) are unknown coefficients that depend on the product’s nature and the test method used. Using this log-linear model, can be further expressed as , where represents the location parameter of the log-normal distribution under the reference stress level . Additionally, , and satisfies . These assumptions provide the basis for analyzing and modeling the lifetime behavior of test units under different stress levels in CS-ALT experiments. Further details can be found in Chapter 2 of Nelson’s book [1].

3. Maximum Likelihood Estimation

Based on the observed lifetime data and the assumptions 1 and 2, the likelihood function for and is given by:

where .

The corresponding log-likelihood function is denoted by . When the partial derivatives of with respect to , and are set to zero, the maximum likelihood estimators (MLEs) of and can be obtained by simultaneously solving the following equations:

To simplify the expressions, we used the notations and . Here, represents the standard normal PDF. Since the solutions to the aforementioned equations cannot be found in a closed form, the Newton–Raphson method is frequently employed in these circumstances to obtain the desired MLEs.
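To make the structure of the observed-data likelihood concrete, the following minimal Python sketch evaluates the log-likelihood of progressive type-I interval censored log-normal data. It assumes the standard form in which each interval contributes its failure count times the log of the interval probability, and each inspection time contributes its removal count times the log of the survival probability. The names `interval_loglik` and `csalt_loglik`, the per-level locations `mus`, and the toy data are hypothetical and are not the paper's notation or data.

```python
import math


def lognormal_cdf(t, mu, sigma):
    """P(T <= t) for a log-normal lifetime; returns 0 for t <= 0."""
    if t <= 0.0:
        return 0.0
    z = (math.log(t) - mu) / sigma
    return 0.5 * math.erfc(-z / math.sqrt(2.0))


def interval_loglik(mu, sigma, taus, fails, removals):
    """Log-likelihood (up to an additive constant) for one stress level.

    taus     : inspection times tau_1 < ... < tau_m (tau_0 = 0 is implied)
    fails    : number of failures counted in (tau_{j-1}, tau_j]
    removals : number of survivors withdrawn at tau_j
    """
    total, prev_cdf = 0.0, 0.0
    for tau, x, r in zip(taus, fails, removals):
        cur_cdf = lognormal_cdf(tau, mu, sigma)
        if x > 0:
            total += x * math.log(cur_cdf - prev_cdf)
        if r > 0:
            total += r * math.log(1.0 - cur_cdf)
        prev_cdf = cur_cdf
    return total


def csalt_loglik(mus, sigma, levels):
    """Sum of per-level log-likelihoods; mus[i] is the location at level i."""
    return sum(
        interval_loglik(mu, sigma, lv["taus"], lv["fails"], lv["removals"])
        for mu, lv in zip(mus, levels)
    )


# Hypothetical toy data for two stress levels (not the paper's data).
levels = [
    {"taus": [2.0, 4.0, 6.0], "fails": [3, 5, 4], "removals": [2, 2, 4]},
    {"taus": [2.0, 4.0, 6.0], "fails": [6, 6, 3], "removals": [2, 1, 2]},
]
value = csalt_loglik([1.4, 1.1], 0.6, levels)
print(value)
```

In a CS-ALT analysis the entries of `mus` would be tied together through the life-stress model rather than supplied freely; they are passed directly here only to keep the sketch independent of a particular parameterization.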

3.1. EM Algorithm

The expectation–maximization (EM) algorithm is a widely used tool for handling missing or incomplete data situations. It is a powerful iterative algorithm that seeks to maximize the likelihood function by estimating the missing data and the model parameters in an iterative manner. The EM algorithm is particularly useful when dealing with large amounts of missing data. Compared to other optimization methods such as the Newton–Raphson method, the EM algorithm is generally slower but more reliable in such cases.

The EM algorithm was first introduced by Dempster et al. [27], and has since been widely used in many different fields. McLachlan and Krishnan [28] provide a comprehensive treatment of the EM algorithm, while Little and Rubin [29] have highlighted the advantages of the EM algorithm over other methods for handling missing data. Considering progressive type-I interval censoring, the complete sample under stress level can be expressed as , where represent the lifetimes of the units within the jth interval and denote the lifetimes for the units that were removed at time , for . As a result, we can express the log-likelihood function of the complete data set as

By taking partial derivatives of Equation (6) with respect to , , and , we can obtain the associated log-likelihood equations as follows:

In the EM algorithm, two main steps are involved: the expectation step (E-step) and the maximization step (M-step). In the E-step, the unobserved exact lifetimes, both of the units that failed within an interval and of the units that were removed, are replaced by their respective conditional expected values given the observed data. In our case, this step involves calculating the expectations of four quantities

Since and are independent (see Ng et al. [30]), the process can be simplified using the following lemma (see Ng and Wang [31]).

Lemma 1.

Given and for and , the conditional distributions of W and can be expressed as follows:

Proof.

The conditional distribution of : the probability of W falling within the interval is given by:

where is CDF of W. To normalize the distribution within this interval, the PDF of W is divided by the probability of W falling within the interval:

This expression represents the conditional distribution of . Similarly, we can directly deduce the conditional distribution of using a similar approach. □

Thus, based on this result, we can readily acquire the necessary expected values in the following formulas.

Subsequently, during the M-step, the goal is to maximize the results obtained from the E-step. Thus, if we denote the estimate of at the o-th stage as , applying the M-step will lead to updated estimates at the -th stage. The updated estimates , can be derived as the solution of the following equations.

where . While the updated value can be obtained as

where . The iterative process continues until the desired convergence is achieved, which is determined by checking if the absolute differences between the updated and the previous values of , , and are all less than or equal to a predefined threshold . In mathematical terms, the convergence criterion is given by .
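The E-step and M-step described above can be sketched in Python for a deliberately simplified setting: a single stress level with only a location `mu` and a scale `sigma`, rather than the three-parameter CS-ALT model of this paper. The sketch works on the log scale, where the unobserved log-lifetimes are normal, and uses the standard first and second moments of a truncated normal variable in the E-step. All function names, starting values, and the idealized input counts are assumptions made for illustration.

```python
import math


def phi(z):
    """Standard normal PDF (returns 0 at +/- infinity)."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def Phi(z):
    """Standard normal CDF."""
    return 0.5 * math.erfc(-z / math.sqrt(2.0))


def zphi(z):
    """z * phi(z), with the limit 0 at +/- infinity."""
    return 0.0 if math.isinf(z) else z * phi(z)


def truncated_moments(lo, hi, mu, sigma):
    """E[W] and E[W^2] for W ~ N(mu, sigma^2) conditional on lo < W < hi."""
    a, b = (lo - mu) / sigma, (hi - mu) / sigma
    mass = Phi(b) - Phi(a)
    ez = (phi(a) - phi(b)) / mass
    ez2 = 1.0 + (zphi(a) - zphi(b)) / mass
    return mu + sigma * ez, mu * mu + 2.0 * mu * sigma * ez + sigma * sigma * ez2


def em_lognormal(taus, fails, removals, mu=0.0, sigma=1.0, tol=1e-10, max_iter=10000):
    """EM iterations for interval-censored log-normal data at one stress level."""
    log_taus = [math.log(t) for t in taus]
    lowers = [-math.inf] + log_taus[:-1]
    n = sum(fails) + sum(removals)
    for _ in range(max_iter):
        s1 = s2 = 0.0
        # E-step: expected log-lifetime and squared log-lifetime per unit.
        for lo, hi, x, r in zip(lowers, log_taus, fails, removals):
            if x > 0:
                ew, ew2 = truncated_moments(lo, hi, mu, sigma)
                s1 += x * ew
                s2 += x * ew2
            if r > 0:
                ew, ew2 = truncated_moments(hi, math.inf, mu, sigma)
                s1 += r * ew
                s2 += r * ew2
        # M-step: complete-data normal MLEs with expected sufficient statistics.
        new_mu = s1 / n
        new_sigma = math.sqrt(s2 / n - new_mu * new_mu)
        done = abs(new_mu - mu) <= tol and abs(new_sigma - sigma) <= tol
        mu, sigma = new_mu, new_sigma
        if done:
            break
    return mu, sigma


# Idealized check: counts set to their expected values under mu = 1, sigma = 0.5.
true_mu, true_sigma, n_units = 1.0, 0.5, 1000.0
taus = [1.5, 2.5, 3.5, 5.0]
cdfs = [Phi((math.log(t) - true_mu) / true_sigma) for t in taus]
fails = [n_units * (c - p) for c, p in zip(cdfs, [0.0] + cdfs[:-1])]
removals = [0.0, 0.0, 0.0, n_units * (1.0 - cdfs[-1])]
mu_hat, sigma_hat = em_lognormal(taus, fails, removals)
print(mu_hat, sigma_hat)
```

Because the input counts equal their expectations, the iterations should settle near the values used to generate them, which gives a simple sanity check of the E-step formulas and the stopping rule.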

3.2. Midpoint Approximation Method

In this context, we assume that of failures within each subinterval occurred at the midpoint of the interval, denoted as . Additionally, there are censored items withdrawn at the censoring time . Hence, the likelihood function can be approximated as:

The associated log-likelihood function for is denoted as . To find the midpoint (MP) estimators of , and , we set the partial derivatives of with respect to , and to zero.

where . By simultaneously solving Equations (15)–(17), we can obtain the MP estimators for the parameters , , and .

The advantage of the MP likelihood equations over the original likelihood equations is that they are often less complex and may lead to simpler numerical optimization procedures. This can enhance computational efficiency and facilitate the implementation of the estimation process.
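A minimal Python sketch of the midpoint-approximated log-likelihood for a single stress level is given below. It assumes that each failure in an interval is treated as an exact observation at the interval midpoint, while removed units contribute the log-survival probability at their inspection time. The function name `midpoint_loglik` and the example inputs are hypothetical.

```python
import math


def midpoint_loglik(mu, sigma, taus, fails, removals):
    """Midpoint-approximated log-likelihood for one stress level.

    Failures in (tau_{j-1}, tau_j] are placed at the interval midpoint;
    units removed at tau_j contribute log(1 - F(tau_j)).
    """
    total, prev = 0.0, 0.0
    for tau, x, r in zip(taus, fails, removals):
        mid = 0.5 * (prev + tau)
        z_mid = (math.log(mid) - mu) / sigma
        # Log of the log-normal density evaluated at the midpoint.
        log_pdf = -math.log(mid * sigma * math.sqrt(2.0 * math.pi)) - 0.5 * z_mid ** 2
        total += x * log_pdf
        if r > 0:
            z_tau = (math.log(tau) - mu) / sigma
            total += r * math.log(0.5 * math.erfc(z_tau / math.sqrt(2.0)))
        prev = tau
    return total


# Hypothetical counts for three inspection times.
value_mp = midpoint_loglik(1.2, 0.6, [2.0, 4.0, 6.0], [3, 5, 4], [2, 2, 4])
print(value_mp)
```

Only density evaluations at fixed points are needed for the failure terms, which is why the resulting score equations tend to be simpler than those of the exact interval likelihood.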

3.3. Asymptotic Standard Errors

According to the missing information principle of Louis [32], the observed Fisher information matrix can be obtained as

where , , and represent the observed, complete, and missing information matrices, respectively. The complete information matrix, , for the data from a log-normal distribution is provided by

Moreover, the missing information matrix can be expressed as

Here, represents the information matrix for a single observation, conditioned on the event where . Additionally, denotes the information matrix for a single observation that is censored at the failure time, , conditioned on the event where . The elements of both matrices can be obtained easily by utilizing Lemma 1 in the following manner:

where

and

where

Afterward, the asymptotic variance-covariance matrix of the MLEs can be obtained by inverting the matrix . The asymptotic standard errors (ASEs) of MLEs can be easily obtained by taking the square root of the diagonal elements of the asymptotic variance-covariance matrix. Furthermore, the asymptotic two-sided confidence interval (CI) for with a confidence level of where , is given by:

where corresponds to the -th percentile of the standard normal distribution, and denotes the ASE of the estimated parameter.
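The final step, from an observed information matrix to standard errors and a normal-approximation interval, can be sketched in Python as follows. The helper names, the 2-by-2 information matrix, and the point estimate are hypothetical placeholders; in practice the matrix would be the observed information evaluated at the MLEs.

```python
import math
from statistics import NormalDist


def invert(matrix):
    """Invert a small square matrix by Gauss-Jordan elimination with pivoting."""
    n = len(matrix)
    aug = [
        [float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(n)]
        for i, row in enumerate(matrix)
    ]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [v - f * w for v, w in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def asymptotic_standard_errors(information):
    """Square roots of the diagonal of the inverse information matrix."""
    cov = invert(information)
    return [math.sqrt(cov[i][i]) for i in range(len(cov))]


def asymptotic_ci(estimate, ase, gamma=0.05):
    """Two-sided 100(1 - gamma)% normal-approximation interval."""
    z = NormalDist().inv_cdf(1.0 - gamma / 2.0)
    return estimate - z * ase, estimate + z * ase


# Hypothetical observed information matrix and point estimate.
info = [[4.0, 1.0], [1.0, 3.0]]
ases = asymptotic_standard_errors(info)
lower, upper = asymptotic_ci(1.20, ases[0])
print(ases, (lower, upper))
```

For a positive parameter such as a scale, the lower limit of such an interval can fall below zero in small samples; the sketch does not attempt to correct for this.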

4. Bayesian Estimation

In this study, we utilize Markov Chain Monte Carlo (MCMC) and Tierney–Kadane (T-K) approximation methods to investigate Bayesian estimates (BEs) of unknown parameters. The selection of an appropriate decision in decision theory relies on specifying an appropriate loss function. Therefore, we consider the squared error (SE) loss function, LINEX loss function, and general entropy (GE) loss function.

The SE loss function is suitable when the effects of overestimation and underestimation of the same magnitude are considered equally important. This loss function quantifies the discrepancy between the estimated and true values using the squared difference.

On the other hand, asymmetric loss functions are employed to capture the varying effects of errors when the true loss is not symmetric in terms of overestimation and underestimation. The LINEX loss function is an example of an asymmetric loss function that allows for different weighting of overestimation and underestimation errors.

Furthermore, the GE loss function takes into account a broader range of loss structures and provides flexibility in capturing the impact of different errors.

Under the SE loss function, the BE of the parameter is given by its posterior mean. In the case of the LINEX and GE loss functions, the BE of is determined differently. For the LINEX loss function, the BE of is given by:

Here, the sign of indicates the direction of the asymmetry (whether the loss penalizes overestimation or underestimation more), while the magnitude of indicates the degree of asymmetry in the loss function.

For the GE loss function, the BE of is given by:

In this case, the shape parameter of the GE loss function is related to the deviation from symmetry in the loss function.

By using these specific formulas, we can determine the BE of under the LINEX and GE loss functions, taking into account their respective characteristics and the implications of asymmetry or deviation from symmetry in the loss functions.

In situations where it is challenging to select priors in a straightforward manner, Arnold and Press [33] suggest adopting a piecewise independent approach for specifying priors. More specifically, we adopt a piecewise independent prior specification for the parameters, where the parameter follows a normal prior distribution, is assigned a gamma prior distribution, and is assumed to have a uniform prior. Therefore, we can represent the joint prior distribution of , , and as follows:

By incorporating the likelihood function described in Equation (5) with the joint prior distribution outlined in Equation (21), we can derive the joint posterior distribution for , , and in the following manner:

The posterior mean of the function in terms of , , and can be determined as follows:

However, it is not feasible to obtain an analytical closed-form solution for the integral ratio in Equation (23). As a result, it is advisable to employ an approximation technique to compute the desired estimates. In the subsequent subsections, we will discuss various approximation methods that can be employed for this purpose.

4.1. MCMC Method

In this approach, we adopt the MCMC method to generate sequences of samples from the full conditional distributions of the parameters. Gibbs sampling, discussed by Roberts and Smith [34], is an efficient MCMC technique when the full conditional distributions can be easily sampled. Alternatively, by using the Metropolis–Hastings (M-H) algorithm, random samples can be obtained from any complex target distribution of any dimension, as long as it is known up to a normalizing constant. The original work by Metropolis et al. [35] and its subsequent extension by Hastings [36] form the foundation of the M-H algorithm.

To implement the Gibbs algorithm, the conditional probability densities of the parameters , , and should be determined as follows:

Since the posterior conditional distributions of , , and are unknown, we employ the M-H algorithm to generate random numbers. In this case, we choose a normal distribution as our proposal density. The process of generating samples using the MCMC method follows the steps outlined in Algorithm 1.

| Algorithm 1: M-H algorithm |


After running the algorithm for a sufficient number of iterations, the first simulated values (burn-in period) are discarded to eliminate the influence of the initial value selection. The remaining values for (where N is the total number of iterations) form an approximate posterior sample that can be used for Bayesian inference.
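A generic M-H-within-Gibbs sampler with normal random-walk proposals can be sketched in Python as below. This is a minimal illustration of the technique and not a reproduction of Algorithm 1: the function name, the step sizes, the chain length, and in particular the toy two-parameter target (an independent normal and gamma density standing in for the actual joint posterior) are all assumptions.

```python
import math
import random


def metropolis_within_gibbs(log_post, init, step_sizes, n_iter, burn_in, seed=0):
    """Update one coordinate at a time with a normal random-walk proposal.

    log_post must return the log of the unnormalized target density and
    -inf outside the support; init must lie inside the support.
    """
    rng = random.Random(seed)
    current = list(init)
    current_lp = log_post(current)
    draws = []
    for it in range(n_iter):
        for k in range(len(current)):
            proposal = list(current)
            proposal[k] = rng.gauss(current[k], step_sizes[k])
            proposal_lp = log_post(proposal)
            # The proposal is symmetric, so the acceptance ratio reduces to
            # the ratio of target densities.
            log_ratio = proposal_lp - current_lp
            if log_ratio >= 0.0 or rng.random() < math.exp(log_ratio):
                current, current_lp = proposal, proposal_lp
        if it >= burn_in:
            draws.append(tuple(current))
    return draws


def toy_log_post(theta):
    """Hypothetical target: theta[0] ~ N(1, 0.5^2), theta[1] ~ Gamma(3, rate 2)."""
    location, scale = theta
    if scale <= 0.0:
        return -math.inf
    return -2.0 * (location - 1.0) ** 2 + 2.0 * math.log(scale) - 2.0 * scale


draws = metropolis_within_gibbs(
    toy_log_post, init=[0.0, 1.0], step_sizes=[0.8, 1.0], n_iter=20000, burn_in=2000
)
print(len(draws), sum(d[0] for d in draws) / len(draws))
```

To apply the same sampler to the model of this paper, `toy_log_post` would be replaced by the log of the joint posterior, returning `-inf` whenever a parameter leaves its admissible range.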

Based on this posterior sample, BEs for a function of the parameters are provided under SE, LINEX, and GE loss functions, respectively, as

The Bayesian credible CIs for any parameter, such as , can be determined using the posterior MCMC sample after the burn-in period . The MCMC sample should be sorted in ascending order as . Based on this sorted sample, the two-sided Bayesian credible CI for at a confidence level of is given by . Bayesian credible CIs for the parameters and can be constructed in the same way.
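Given a posterior sample, the estimates under the three loss functions and an equal-tailed credible interval can be computed as in the following Python sketch. It uses the standard forms of the LINEX and GE Bayes estimators; the shape-parameter names `c` and `q`, the order-statistic index convention in `credible_interval`, and the small example sample are assumptions for illustration.

```python
import math


def bayes_se(sample):
    """Bayes estimate under squared error loss: the posterior mean."""
    return sum(sample) / len(sample)


def bayes_linex(sample, c):
    """Bayes estimate under LINEX loss: -(1/c) * log E[exp(-c * theta)], c != 0."""
    mean_exp = sum(math.exp(-c * v) for v in sample) / len(sample)
    return -math.log(mean_exp) / c


def bayes_ge(sample, q):
    """Bayes estimate under general entropy loss: (E[theta^(-q)])^(-1/q).

    Requires positive draws and q != 0.
    """
    mean_pow = sum(v ** (-q) for v in sample) / len(sample)
    return mean_pow ** (-1.0 / q)


def credible_interval(sample, gamma=0.05):
    """Equal-tailed interval from the sorted draws (one possible index rule)."""
    ordered = sorted(sample)
    n = len(ordered)
    k_lo = max(1, math.floor(gamma / 2.0 * n))          # 1-based order statistic
    k_hi = max(1, math.floor((1.0 - gamma / 2.0) * n))  # 1-based order statistic
    return ordered[k_lo - 1], ordered[k_hi - 1]


# Hypothetical "posterior sample" used only to exercise the functions.
sample = [1.0, 2.0, 3.0, 4.0]
print(bayes_se(sample), bayes_linex(sample, 2.0), bayes_ge(sample, 2.0))
print(credible_interval([float(i) for i in range(1, 101)]))
```

Two limiting cases are useful as checks: the LINEX estimate approaches the posterior mean as the shape parameter tends to zero, and the GE estimate with shape parameter equal to -1 coincides with the posterior mean.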

4.2. Tierney–Kadane Method

Tierney and Kadane [37] proposed the T-K methodology, which is a technique for approximating the BE of a target function .

The BE of a target function g using the T-K methodology is given by:

In this formula, , , and are the values that maximize the function , while , , and maximize the function . The functions and are defined as:

The quantities and in Equation (27) correspond to the determinants of the negative Hessian matrices of and , respectively, evaluated at and , respectively.

The T-K methodology provides an approximation for the BE by incorporating the likelihood, prior, and target function. It requires maximizing the two functions defined above and accounts for their curvature at the respective maximizers through the determinants of the negative Hessian matrices.
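The mechanics of the approximation can be illustrated with a scalar example in Python. The sketch below is a one-parameter analogue only: the determinants of the Hessian matrices reduce to second derivatives, the maximizations are done by golden-section search, and the target is a hypothetical gamma-shaped posterior whose exact mean (5) is known, so the quality of the approximation can be inspected. None of the names or values are taken from the paper.

```python
import math


def argmax_golden(func, lo, hi, tol=1e-9):
    """Maximize a unimodal function on [lo, hi] by golden-section search."""
    ratio = (math.sqrt(5.0) - 1.0) / 2.0
    a, b = lo, hi
    while b - a > tol:
        c = b - ratio * (b - a)
        d = a + ratio * (b - a)
        if func(c) > func(d):
            b = d  # the maximum lies in [a, d]
        else:
            a = c  # the maximum lies in [c, b]
    return 0.5 * (a + b)


def second_derivative(func, x, h=1e-4):
    """Central finite-difference approximation of the second derivative."""
    return (func(x + h) - 2.0 * func(x) + func(x - h)) / (h * h)


def tierney_kadane(log_post, g, lo, hi):
    """Approximate E[g(theta) | data] for a scalar theta and positive g."""

    def log_post_star(theta):
        return log_post(theta) + math.log(g(theta))

    mode = argmax_golden(log_post, lo, hi)
    mode_star = argmax_golden(log_post_star, lo, hi)
    # Inverse negative second derivatives play the role of the Hessian terms.
    var = -1.0 / second_derivative(log_post, mode)
    var_star = -1.0 / second_derivative(log_post_star, mode_star)
    return math.sqrt(var_star / var) * math.exp(log_post_star(mode_star) - log_post(mode))


def toy_log_post(theta):
    """Hypothetical unnormalized log-posterior: Gamma(shape 10, rate 2)."""
    return 9.0 * math.log(theta) - 2.0 * theta


tk_mean = tierney_kadane(toy_log_post, lambda theta: theta, 0.1, 50.0)
print(tk_mean)  # close to the exact posterior mean 10 / 2 = 5
```

The normalizing constant of the posterior cancels in the ratio, which is why only the unnormalized log-posterior is needed.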

Here, in our case, Equation (22) can be directly used to obtain as follows:

Thus, are obtained by solving the following non-linear equations

We may obtain as follows based on the second-order derivative of ,

where

In order to compute the BE of , we set . So,

Further, can be obtained by solving the following equations

and can be computed from

where

Therefore, the BE for under the SE loss function, using the T-K methodology, can be expressed as follows:

By following the same reasoning, the BEs for and under the SE loss function, using the T-K methodology, can be computed straightforwardly.

5. Simulation Study and Data Analysis

5.1. Monte Carlo Simulation Study

Different estimation methods and censoring schemes cannot be compared directly on theoretical grounds. Therefore, the purpose of this section is to evaluate the performance of the estimates discussed in the previous sections using Monte Carlo simulations. The assessment of point estimates is based on their mean square error (MSE) and relative absolute bias (RAB), while the evaluation of interval estimates is based on their coverage probability (CP) and average width (AW). These measures provide insights into the accuracy and precision of the estimates.

To simulate data under progressive type-I interval censoring, we utilize the Aggarwala algorithm (Aggarwala [12]) for a given set of parameters including the specified stress level , the sample sizes , and the number of subintervals . We also consider prefixed inspection times and censoring schemes. Under each stress level , , starting with a sample of size , which is subjected to a life test at time , we simulate the number of failed items for each subinterval as follows: Let and and for

In the simulation study, we examine three distinct removal schemes, denoted as , , and , each characterized by different probabilities of removing items during intermediate inspection times where , and . The third scheme, , resembles a conventional interval censoring scheme, where no removals occur during the intermediate inspection times. In addition to the regular stress level , we include four stress levels: , , , and . These stress levels represent different levels of intensity or severity in the testing process. Additionally, for each stress level , we incorporate the same inspection times to account for varying durations of observation. The inspection times included are , , , , and . These inspection times correspond to specific intervals during which the items are assessed or observed.

Furthermore, we consider various sample sizes to assess the impact of the number of items tested. The sample sizes considered are , , , and . These different sample sizes allow us to investigate the influence of the number of tested items on the estimation performance. The generated data follows a log-normal distribution with true parameters values , , and .
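The data-generation step can be sketched in Python for a single stress level as follows. The sketch follows the usual sequential construction: the number of failures in each interval is drawn as a binomial count among the units still at risk, using the conditional failure probability given survival to the previous inspection, and the removals are then set to the floor of the prescribed proportion of survivors. The function name, the inspection times, the removal proportions, and the parameter values are hypothetical and do not reproduce the settings of this study.

```python
import math
import random


def lognormal_cdf(t, mu, sigma):
    """P(T <= t) for a log-normal lifetime with location mu and scale sigma."""
    return 0.5 * math.erfc(-(math.log(t) - mu) / (sigma * math.sqrt(2.0)))


def simulate_level(n, taus, probs, mu, sigma, rng):
    """Simulate failure counts and removals for one stress level.

    taus  : inspection times tau_1 < ... < tau_m
    probs : removal proportions p_1, ..., p_m with p_m = 1
    """
    fails, removals = [], []
    at_risk, prev_cdf = n, 0.0
    for tau, p in zip(taus, probs):
        cur_cdf = lognormal_cdf(tau, mu, sigma)
        # Probability of failing in (tau_{j-1}, tau_j] given survival past tau_{j-1}.
        cond = (cur_cdf - prev_cdf) / (1.0 - prev_cdf)
        x = sum(rng.random() < cond for _ in range(at_risk))  # binomial draw
        r = math.floor(p * (at_risk - x))                     # survivors withdrawn
        fails.append(x)
        removals.append(r)
        at_risk -= x + r
        prev_cdf = cur_cdf
    return fails, removals


# Hypothetical design: 30 units, five inspections, removals of 25% then all.
rng = random.Random(42)
taus = [2.0, 4.0, 6.0, 8.0, 10.0]
probs = [0.25, 0.25, 0.25, 0.25, 1.0]
fails, removals = simulate_level(30, taus, probs, mu=1.5, sigma=0.5, rng=rng)
print(fails, removals)
```

Setting every intermediate proportion to zero and the last one to one gives a conventional type-I interval censored sample, as in the scheme without intermediate removals.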

For the Bayesian analyses, informative priors are employed with specific hyperparameters. The hyperparameters are chosen such that the prior means correspond to the true parameter values. The hyperparameters are set as follows: , , , and . The T-K BEs are calculated under the SE loss function. On the other hand, the MCMC BEs are computed using the SE loss function as well as the LINEX loss function with different values of (, 0.001, and 2) and the GE loss function with different values of (, 0.001, and 2).

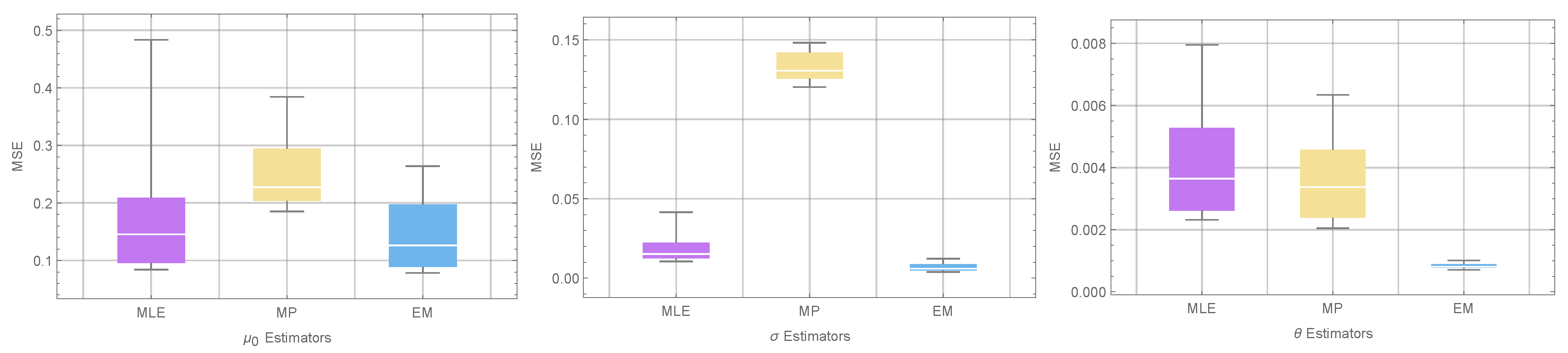

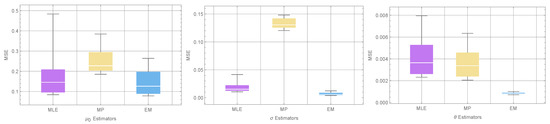

In Table 1, the MSE and RAB values are provided for the classical estimators of the parameters. The table clearly demonstrates that the EM estimators outperform the MLE and MP estimators in terms of having the lowest MSE and RAB values. This indicates that the EM estimators provide more accurate and less biased parameter estimates compared to their counterparts, as clearly demonstrated in Figure 1.

Table 1.

The MSEs of the classical estimates for , and , along with their corresponding RAB values, are provided within brackets.

Figure 1.

Comparison of classical estimators: MSE values for different parameters estimators.

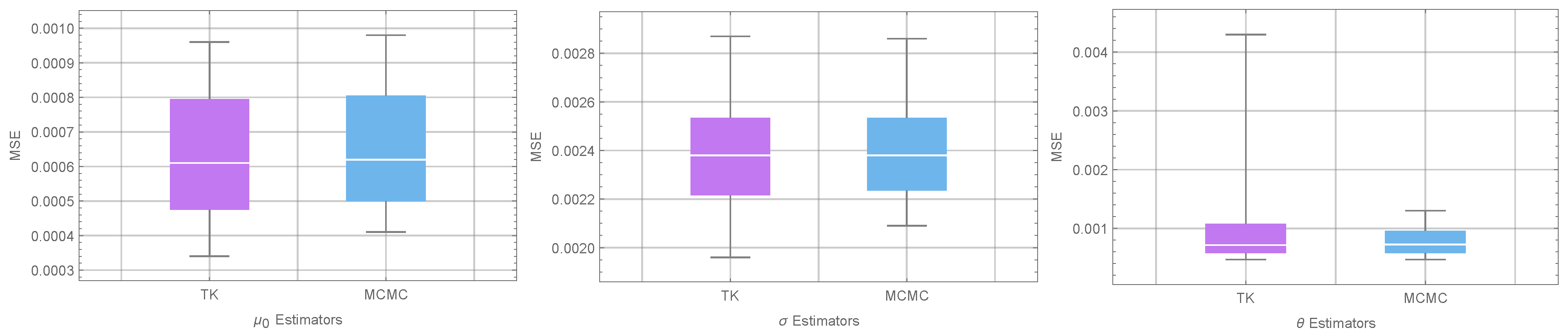

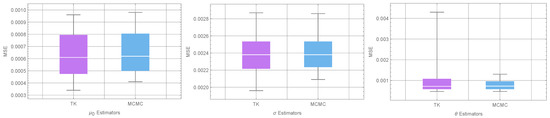

In Table 2, the MSE and RAB values are presented for the BEs of the parameters, specifically under the SE loss function. The table includes results obtained using the T-K and MCMC techniques. The tabulated results indicate that the performance of the two methods under different schemes is almost identical, as also depicted in Figure 2; that is, the T-K and MCMC methods yield similar MSE and RAB values.

Table 2.

The MSEs of the BEs evaluated using the SE loss function, along with their corresponding RABs (shown in parentheses).

Figure 2.

Comparison of BEs under SE loss function: MSE values for different parameters estimators.

Additionally, Table 3 and Table 4 present the MSE and RAB values of the MCMC BEs of the parameters for three distinct choices of the shape parameter of the LINEX and GE loss functions, respectively. Based on the tabulated results, we can conclude that, for the parameters and under the LINEX loss function, the MCMC BE shows higher accuracy when the parameter is set to 2 than for the other values considered. On the other hand, for the parameter under the same LINEX loss function, the MCMC BE performs better (i.e., shows higher accuracy) when is set to .

Table 3.

The MSEs of the BEs evaluated under LINEX loss function with different values of (, 0.001, and 2), along with their corresponding RABs (shown in parentheses).

Table 4.

The MSEs of the BEs evaluated under GE loss function with different values of (, 0.001, and 2), along with their corresponding RABs (shown in parentheses).

Moreover, when considering the GE loss function, the MCMC BE of shows higher accuracy when is assigned a value of 2. Similarly, for the parameter , the MCMC BE exhibits better performance in terms of lower MSE and RAB values when is set to 0.001. On the other hand, for the parameter , the MCMC BE demonstrates better performance when is set to .

Moreover, Table 5 presents the AWs and coverage probabilities (CPs) of the asymptotic and Bayesian credible CIs for the parameters , , and . The table clearly indicates that the Bayesian credible CIs have narrower widths compared to the asymptotic CIs. This suggests that the Bayesian credible CIs provide more precise and tighter estimation intervals for the parameters. Also, the Bayesian credible CIs demonstrate better overall performance, as indicated by the higher coverage probabilities, implying that they achieve a higher proportion of correctly capturing the true parameter values within the confidence intervals.

Table 5.

Comparison of AWs and CPs of 95% asymptotic and Bayesian Credible CIs for , , and .

Based on the tabulated results in Table 1, Table 2, Table 3, Table 4 and Table 5, the following general concluding remarks can be drawn:

- For a fixed censoring scheme, the trend observed in the tabulated results indicates that as the sample size n increases, the MSE and RAB values of all estimates decrease. This trend aligns with the statistical theory, which suggests that larger sample sizes tend to result in more accurate parameter estimates.

- The Bayesian estimators consistently outperform the MLEs, EM estimators, and MP estimators in terms of MSE and RAB values. This highlights the superior performance of the Bayesian approach in estimation tasks.

- Among the different progressive censoring schemes , and , all the estimates obtained under scheme (traditional type-I interval censoring) exhibit the smallest MSE and RAB values compared to schemes and . This result is in line with expectations, as longer testing duration and lower censoring rates generally lead to more accurate parameter estimation.

- The BEs of the parameters under the LINEX loss function display higher accuracy compared to the estimators under the SE and GE loss functions.

These conclusions provide insights into the behavior and performance of different estimation methods, sample sizes, censoring schemes, and loss functions based on the tabulated results.

5.2. Data Analysis

The life data come from steel specimens that were randomly divided into groups of 20 items, with each group subjected to a different stress level (Kimber [38]; Lawless [39]). Cui et al. [40] demonstrated that the data could be well described by a log-normal distribution. Our analysis specifically focused on the data obtained from stress levels ranging from 35 to 36 MPa, with a normal stress level set at 30 MPa. For convenience, the data are reproduced in Table 6.

Table 6.

Data on steel specimens’ life under different stress levels.

A progressively type-I interval censored sample is generated randomly from this dataset, taking into account the predetermined inspection times , under the scheme . The resulting simulated sample is presented in Table 7.

Table 7.

The progressively type-I interval censored sample.
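The way a complete sample is reduced to a progressively type-I interval censored one can be illustrated with the minimal sketch below. It assumes that, at each inspection time, the failures since the previous inspection are counted and a fixed proportion of the surviving units is then withdrawn at random, with proportion 1 at the last inspection. The function name, the inspection times, the removal proportions, and the simulated lifetimes are all hypothetical; they are not the values used for the steel data.

```python
import numpy as np

def progressive_type1_interval_censor(lifetimes, inspection_times,
                                      removal_props, rng):
    """Return (failures, removals) per inspection interval.

    failures[j]: units that failed in (t_{j-1}, t_j]
    removals[j]: surviving units withdrawn at t_j,
                 taken here as floor(p_j * number of survivors)
    """
    alive = np.asarray(lifetimes, dtype=float)
    failures, removals = [], []
    for t, p in zip(inspection_times, removal_props):
        failed = alive <= t                # failures seen at this inspection
        failures.append(int(failed.sum()))
        alive = alive[~failed]             # units still on test
        k = int(np.floor(p * alive.size))  # number of survivors to withdraw
        keep = rng.permutation(alive.size)[k:]
        alive = alive[keep]                # random withdrawal of k units
        removals.append(k)
    return failures, removals

if __name__ == "__main__":
    rng = np.random.default_rng(1)
    # Hypothetical log-normal lifetimes for one stress level (20 units).
    lifetimes = rng.lognormal(mean=5.0, sigma=0.4, size=20)
    times = [100.0, 150.0, 200.0, 250.0]   # assumed inspection times
    props = [0.1, 0.1, 0.1, 1.0]           # assumed removal proportions
    d, r = progressive_type1_interval_censor(lifetimes, times, props, rng)
    print("failures:", d)
    print("removals:", r)
```

Only the interval counts and the removal counts are retained, so the exact failure times are no longer available to the estimation step.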

Tables 8–12 provide the corresponding point and interval estimates of the model parameters. Since insufficient prior information is available, non-informative priors are used to obtain the BEs.

Table 8.

The classical point estimates for , and of the real data.

Table 9.

The BEs under the SE loss function of the real data.

Table 10.

The BEs under LINEX loss function with different values of for the real data.

Table 11.

The BEs under GE loss function with different values of for the real data.

Table 12.

The ACI and BCI of , and for the real data.
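Once posterior draws are available from an MCMC run, the quantities reported in tables of this kind can be approximated as in the following sketch. It relies on the standard closed forms of the Bayes estimators: the posterior mean under SE loss, -(1/c) log E[exp(-c θ)] under LINEX loss, and (E[θ^(-q)])^(-1/q) under GE loss, with posterior expectations replaced by sample averages. The symbols c and q, the function name, the use of an equal-tail credible interval, and the gamma-distributed stand-in draws are assumptions made for illustration only.

```python
import numpy as np

def bayes_estimates(draws, c=1.0, q=1.0, level=0.95):
    """Approximate Bayes estimates of a positive scalar parameter.

    draws : posterior sample (e.g. MCMC output after burn-in)
    c     : LINEX shape parameter (assumed symbol, c != 0)
    q     : GE shape parameter (assumed symbol, q != 0)
    """
    draws = np.asarray(draws, dtype=float)
    se = draws.mean()                                  # SE loss
    linex = -np.log(np.mean(np.exp(-c * draws))) / c   # LINEX loss
    ge = np.mean(draws ** (-q)) ** (-1.0 / q)          # GE loss, needs draws > 0
    lo, hi = np.quantile(draws, [(1 - level) / 2, (1 + level) / 2])
    return {"SE": se, "LINEX": linex, "GE": ge, "BCI": (lo, hi)}

if __name__ == "__main__":
    rng = np.random.default_rng(2)
    # Stand-in for MCMC output: hypothetical draws of a scale parameter.
    draws = rng.gamma(shape=20.0, scale=0.025, size=5000)
    for c in (-1.0, 1.0):
        print(c, bayes_estimates(draws, c=c, q=c))
```

For c > 0 the LINEX estimate lies below the posterior mean and for c < 0 above it, which is why such tables typically report several values of the shape parameter.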

6. Conclusions

This article discusses statistical analysis in the context of progressive type-I interval censoring for the log-normal distribution in a CS-ALT setting. Both classical and Bayesian inferential procedures are applied to estimate the unknown parameters. To approximate the MLEs of the model parameters, the EM algorithm and mid-point approximation method are employed. For the Bayesian approach, the BEs are obtained based on different loss functions, namely the SE, LINEX, and GE loss functions. The Tierney–Kadane and MCMC methods are used to obtain approximate BEs. Additionally, the article derives the asymptotic confidence intervals based on the normality assumption of the MLEs and the Bayesian credible intervals using the MCMC procedure. The performance of the different estimation methods is evaluated through a simulation study. The results indicate that the BEs perform well based on measures such as mean squared error and relative absolute bias of the estimates.

While this article focuses on CS-ALT with progressive type-I interval censoring and the log-normal distribution, the same methodology can be applied to other lifetime distributions under different censoring schemes as well.

Overall, this article provides a comprehensive analysis of statistical procedures for estimating parameters in CS-ALT with progressive type-I interval censoring, specifically for the log-normal distribution.

Author Contributions

Conceptualization, A.E.-R.M.A.E.-R. and M.S.; methodology, M.S.; software, M.S. and A.E.-R.M.A.E.-R.; validation, X.L., M.S. and M.H.; formal analysis, M.S.; investigation, M.S.; resources, M.S.; data curation, M.S.; writing—original draft preparation, M.S.; writing—review and editing, M.S.; visualization, M.S.; supervision, X.L.; project administration, M.H.; funding acquisition, M.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The article incorporates the numerical data that were utilized to substantiate the findings of the study.

Acknowledgments

The third author extends her appreciation to the Deanship of Scientific Research at King Khalid University for funding this work through a research groups program under grant RGP2/310/44.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Nelson, W.B. Accelerated Testing: Statistical Models, Test Plans, and Data Analysis; John Wiley & Sons: New York, NY, USA, 2009; Volume 344.

- Meeker, W.Q.; Escobar, L.A. Statistical Methods for Reliability Data; John Wiley & Sons: New York, NY, USA, 2014.

- Bagdonavicius, V.; Nikulin, M. Accelerated Life Models: Modeling and Statistical Analysis; Chapman and Hall: Boca Raton, FL, USA, 2001.

- Limon, S.; Yadav, O.P.; Liao, H. A literature review on planning and analysis of accelerated testing for reliability assessment. Qual. Reliab. Eng. Int. 2017, 33, 2361–2383.

- Zheng, G. A characterization of the factorization of hazard function by the Fisher information under type-II censoring with application to the Weibull family. Stat. Probab. Lett. 2001, 52, 249–253.

- Xu, H.; Fei, H. Approximated optimal designs for a simple step-stress model with type-II censoring, and Weibull distribution. In Proceedings of the 2009 8th International Conference on Reliability, Maintainability and Safety, Chengdu, China, 20–24 July 2009; IEEE: Piscataway, NJ, USA, 2009; pp. 1203–1207.

- Wu, W.; Wang, B.X.; Chen, J.; Miao, J.; Guan, Q. Interval estimation of the two-parameter exponential constant stress accelerated life test model under type-II censoring. Qual. Technol. Quant. Manag. 2022, 1–12.

- Nelson, W.; Kielpinski, T.J. Theory for optimum censored accelerated life tests for normal and lognormal life distributions. Technometrics 1976, 18, 105–114.

- Bai, D.S.; Kim, M.S. Optimum simple step-stress accelerated life tests for Weibull distribution and type I censoring. Nav. Res. Logist. (NRL) 1993, 40, 193–210.

- Tang, L.; Goh, T.; Sun, Y.; Ong, H. Planning accelerated life tests for censored two-parameter exponential distributions. Nav. Res. Logist. (NRL) 1999, 46, 169–186.

- Balakrishnan, N.; Aggarwala, R. Progressive Censoring: Theory, Methods, and Applications, 1st ed.; Statistics for Industry and Technology; Birkhäuser: Basel, Switzerland, 2000.

- Aggarwala, R. Progressive interval censoring: Some mathematical results with applications to inference. Commun. Stat. Theory Methods 2001, 30, 1921–1935.

- Lodhi, C.; Tripathi, Y.M. Inference on a progressive type-I interval censored truncated normal distribution. J. Appl. Stat. 2020, 47, 1402–1422.

- Singh, S.; Tripathi, Y.M. Estimating the parameters of an inverse Weibull distribution under progressive type-I interval censoring. Stat. Pap. 2018, 59, 21–56.

- Chen, D.G.; Lio, Y.; Jiang, N. Lower confidence limits on the generalized exponential distribution percentiles under progressive type-I interval censoring. Commun. Stat.-Simul. Comput. 2013, 42, 2106–2117.

- Arabi Belaghi, R.; Noori Asl, M.; Singh, S. On estimating the parameters of the BurrXII model under progressive type-I interval censoring. J. Stat. Comput. Simul. 2017, 87, 3132–3151.

- Abd El-Raheem, A.M. Optimal design of multiple constant-stress accelerated life testing for the extension of the exponential distribution under type-II censoring. J. Comput. Appl. Math. 2021, 382, 113094.

- Abd El-Raheem, A.M. Optimal design of multiple accelerated life testing for generalized half-normal distribution under type-I censoring. J. Comput. Appl. Math. 2020, 368, 112539.

- Abd El-Raheem, A.M. Optimal plans of constant-stress accelerated life tests for extension of the exponential distribution. J. Test. Eval. 2018, 47, 1586–1605.

- Sief, M.; Liu, X.; Abd El-Raheem, A.M. Inference for a constant-stress model under progressive type-I interval censored data from the generalized half-normal distribution. J. Stat. Comput. Simul. 2021, 91, 3228–3253.

- Feng, X.; Tang, J.; Tan, Q.; Yin, Z. Reliability model for dual constant-stress accelerated life test with Weibull distribution under Type-I censoring scheme. Commun. Stat. Theory Methods 2022, 51, 8579–8597.

- Balakrishnan, N.; Castilla, E.; Ling, M.H. Optimal designs of constant-stress accelerated life-tests for one-shot devices with model misspecification analysis. Qual. Reliab. Eng. Int. 2022, 38, 989–1012.

- Nassar, M.; Dey, S.; Wang, L.; Elshahhat, A. Estimation of Lindley constant-stress model via product of spacing with Type-II censored accelerated life data. Commun. Stat. Simul. Comput. 2021, 1–27.

- Serfling, R. Efficient and robust fitting of lognormal distributions. N. Am. Actuar. J. 2002, 6, 95–109.

- Punzo, A.; Bagnato, L.; Maruotti, A. Compound unimodal distributions for insurance losses. Insur. Math. Econ. 2018, 81, 95–107.

- Limpert, E.; Stahel, W.A.; Abbt, M. Log-normal distributions across the sciences: Keys and clues: On the charms of statistics, and how mechanical models resembling gambling machines offer a link to a handy way to characterize log-normal distributions, which can provide deeper insight into variability and probability-normal or log-normal: That is the question. BioScience 2001, 51, 341–352.

- Dempster, A.P.; Laird, N.M.; Rubin, D.B. Maximum likelihood from incomplete data via the EM algorithm. J. R. Stat. Soc. Ser. B (Methodol.) 1977, 39, 1–22.

- McLachlan, G.J.; Krishnan, T. The EM Algorithm and Extensions, 2nd ed.; Wiley: New York, NY, USA, 2008; pp. 159–218.

- Little, R.J.A.; Rubin, D.B. Incomplete data. In Encyclopedia of Statistical Sciences; Kotz, S., Johnson, N.L., Eds.; Wiley: New York, NY, USA, 1983; Volume 4, pp. 46–53.

- Ng, H.; Chan, P.; Balakrishnan, N. Estimation of parameters from progressively censored data using EM algorithm. Comput. Stat. Data Anal. 2002, 39, 371–386.

- Ng, H.K.T.; Wang, Z. Statistical estimation for the parameters of Weibull distribution based on progressively type-I interval censored sample. J. Stat. Comput. Simul. 2009, 79, 145–159.

- Louis, T.A. Finding the observed information matrix when using the EM algorithm. J. R. Stat. Soc. Ser. B (Methodol.) 1982, 44, 226–233.

- Arnold, B.C.; Press, S. Bayesian inference for Pareto populations. J. Econom. 1983, 21, 287–306.

- Smith, A.F.; Roberts, G.O. Bayesian computation via the Gibbs sampler and related Markov chain Monte Carlo methods. J. R. Stat. Soc. Ser. B (Methodol.) 1993, 55, 3–23.

- Metropolis, N.; Rosenbluth, A.W.; Rosenbluth, M.N.; Teller, A.H.; Teller, E. Equation of State Calculations by Fast Computing Machines. J. Chem. Phys. 1953, 21, 1087.

- Hastings, W.K. Monte Carlo sampling methods using Markov chains and their applications. Biometrika 1970, 57, 97–109.

- Tierney, L.; Kadane, J.B. Accurate approximations for posterior moments and marginal densities. J. Am. Stat. Assoc. 1986, 81, 82–86.

- Kimber, A. Exploratory data analysis for possibly censored data from skewed distributions. J. R. Stat. Soc. Ser. C Appl. Stat. 1990, 39, 21–30.

- Lawless, J.F. Statistical Models and Methods for Lifetime Data, 2nd ed.; Wiley Series in Probability and Statistics; Wiley-Interscience: Hoboken, NJ, USA, 2002.

- Cui, W.; Yan, Z.Z.; Peng, X.Y.; Zhang, G.M. Reliability analysis of log-normal distribution with nonconstant parameters under constant-stress model. Int. J. Syst. Assur. Eng. Manag. 2022, 13, 818–831.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).