Abstract

This paper investigates optimality conditions and saddle point theorems for robust approximate quasi-weak efficient solutions of a nonsmooth uncertain multiobjective fractional semi-infinite optimization problem (NUMFP). First, a necessary optimality condition is established by using the properties of the Gerstewitz’s function. Furthermore, a kind of approximate pseudo/quasi-convex function is defined for the problem (NUMFP), and under this assumption, a sufficient optimality condition is obtained. Finally, we introduce the notion of a robust approximate quasi-weak saddle point for the problem (NUMFP) and prove the corresponding saddle point theorems.

1. Introduction

Recently, much attention has been paid to semi-infinite optimization problems; see [1,2,3]. In particular, multiobjective semi-infinite optimization seeks values of the decision variables that optimize more than one objective simultaneously, and many interesting results have been presented in [4] and the references therein. Moreover, fractional optimization, in which each objective is a ratio of two functions, is widely used in information technology, resource allocation and engineering design; see [5,6,7,8]. It is worth noting that in many practical problems the objective or constraint functions of optimization models are nonsmooth and are affected by uncertain data. Therefore, it is meaningful to investigate nonsmooth uncertain optimization problems; see [9,10,11]. Robust optimization [12,13] is one of the powerful tools for dealing with optimization problems with data uncertainty. The aim of the robust optimization approach is to find a solution that is optimal in the worst case and is therefore immunized against the data uncertainty. However, most solutions obtained by numerical algorithms are approximate solutions, so the study of approximate solutions is significant from both the theoretical and the practical points of view. This paper investigates the problem (NUMFP) with respect to approximate quasi-weak efficient solutions by the robust approach.

Optimality conditions and saddle point theorems are two important topics in nonsmooth optimization. The subdifferential is a powerful tool for characterizing optimality conditions. For a nonsmooth multiobjective optimization problem, Caristi et al. [14] and Kabgani et al. [15] investigated optimality conditions of weakly efficient solutions by using the Michel–Penot subdifferential and convexificators, respectively. Chuong [5] obtained optimality theorems for robust efficient solutions of a nonsmooth multiobjective fractional optimization problem based on the Mordukhovich subdifferential. It is important to mention that the Clarke subdifferential has attracted much attention because of its good properties [16]. Fakhar et al. [17] constructed optimality conditions and saddle point theorems of robust efficient solutions for a nonsmooth multiobjective optimization problem by utilizing the Clarke subdifferential. The purpose of this article is to examine optimality conditions and saddle point theorems of robust approximate quasi-weak efficient solutions for the problem (NUMFP) in terms of the Clarke subdifferential. Moreover, Lee et al. [18] employed a separation theorem to establish necessary conditions for approximate solutions. Chen et al. [19] used a generalized alternative theorem to obtain necessary optimality conditions for weakly robust efficient solutions. It is worth noting that the Gerstewitz’s function is an important nonlinear scalarization function, which plays a significant role in solving optimization problems due to its good properties, such as convexity, positive homogeneity and continuity; see [20,21]. This paper uses the Gerstewitz’s function to establish a necessary optimality condition of robust approximate quasi-weak efficient solutions for the problem (NUMFP).

In addition, convexity and its generalizations play an important role in establishing sufficient optimality conditions for optimization problems. In this paper, we define a class of approximate (pseudo/quasi-)convex functions for the objective and constraint functions of the problem (NUMFP) and establish a sufficient optimality condition and saddle point theorems for robust approximate quasi-weak efficient solutions under these assumptions.

This paper is organized as follows. Section 2 provides some basic concepts and lemmas, which will be used in the subsequent sections. In Section 3, we establish optimality conditions for robust approximate quasi-weak efficient solutions of the problem (NUMFP). In Section 4, we introduce the concept of a robust approximate quasi-weak saddle point for the problem (NUMFP) and prove the corresponding saddle point theorems.

2. Preliminaries

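Throughout this paper, $\mathbb{N}$ and $\mathbb{R}^n$ stand for the set of natural numbers and the n-dimensional Euclidean space, respectively. $B(x, r)$ denotes the open ball with center $x \in \mathbb{R}^n$ and radius $r > 0$; $\mathbb{B}$ represents the closed unit ball of $\mathbb{R}^n$. The inner product in $\mathbb{R}^n$ is denoted by $\langle x, y \rangle$ for any $x, y \in \mathbb{R}^n$. We set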

and utilize the following symbols to represent an order relation in :

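Let $C \subseteq \mathbb{R}^n$ be a nonempty subset, and let $\operatorname{int} C$, $\operatorname{cl} C$ and $\operatorname{co} C$ stand for the interior, the closure and the convex hull of C, respectively. The Clarke contingent cone and normal cone to C at a point $\bar{x}$ are defined, respectively, by the following (see [16]):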

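The conical convex hull (see [22]) of the set C is defined as

$$\operatorname{pos} C := \left\{ \sum_{i=1}^{k} \lambda_i x_i : k \in \mathbb{N},\ \lambda_i \ge 0,\ x_i \in C,\ i = 1, \ldots, k \right\}.$$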

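Let $F: \mathbb{R}^n \to \mathbb{R}^m$. F is said to be locally Lipschitz at $\bar{x} \in \mathbb{R}^n$ if there exist constants $L > 0$ and $r > 0$ such that

$$\|F(x) - F(y)\| \le L \|x - y\|, \quad \forall x, y \in B(\bar{x}, r).$$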

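If F is locally Lipschitz at x for any $x \in \mathbb{R}^n$, then F is called a locally Lipschitz mapping. In particular, for a real-valued locally Lipschitz function $f: \mathbb{R}^n \to \mathbb{R}$ ($\mathbb{R}$ denotes the set of real numbers), the Clarke generalized directional derivative of f at $\bar{x} \in \mathbb{R}^n$ in the direction $d \in \mathbb{R}^n$ is given by (see [16])

$$f^{\circ}(\bar{x}; d) := \limsup_{x \to \bar{x},\ t \downarrow 0} \frac{f(x + t d) - f(x)}{t},$$

and the Clarke subdifferential (see [16]) of f at $\bar{x}$ is denoted by

$$\partial f(\bar{x}) := \left\{ \xi \in \mathbb{R}^n : f^{\circ}(\bar{x}; d) \ge \langle \xi, d \rangle,\ \forall d \in \mathbb{R}^n \right\}.$$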

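Let $C \subseteq \mathbb{R}^n$ be a nonempty subset. The indicator function $\delta_C: \mathbb{R}^n \to \mathbb{R} \cup \{+\infty\}$ of C is defined as

$$\delta_C(x) := \begin{cases} 0, & x \in C, \\ +\infty, & x \notin C. \end{cases}$$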

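It is pointed out in [16] that, for any $\bar{x} \in C$, the normal cone to C at $\bar{x}$ satisfies

$$N(C; \bar{x}) = \partial \delta_C(\bar{x}).$$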

The following lemmas characterize some properties of the Clarke subdifferential.

Lemma 1

([16]). Let $f: \mathbb{R}^n \to \mathbb{R}$ be locally Lipschitz at $\bar{x} \in \mathbb{R}^n$. Then, the following hold:

- (i)

- $\partial f(\bar{x})$ is a nonempty compact convex set;

- (ii)

- for any

- (iii)

- (iv)

- if f attains a local minimum at $\bar{x}$, then $0 \in \partial f(\bar{x})$.
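Lemma 1 (iv) can be illustrated numerically. The following minimal Python sketch assumes the standard definition of the Clarke generalized directional derivative from [16], $f^{\circ}(\bar{x}; d) = \limsup_{x \to \bar{x},\, t \downarrow 0} (f(x + td) - f(x))/t$, and replaces the limsup by a maximum over sampled base points and step sizes. The helper name `clarke_dd`, the sampling radius and the step sizes are illustrative assumptions and are not taken from the paper, so the returned value is only an estimate.

```python
def clarke_dd(f, x_bar, d, radius=1e-3, n_points=201, steps=(1e-4, 1e-5, 1e-6)):
    """Estimate the Clarke directional derivative of a 1-D function f at x_bar.

    The limsup over base points y -> x_bar and steps t -> 0+ is approximated
    by the maximum difference quotient over a finite sample (an assumption).
    """
    best = float("-inf")
    for k in range(n_points):
        # Base point y sampled uniformly in [x_bar - radius, x_bar + radius].
        y = x_bar - radius + 2.0 * radius * k / (n_points - 1)
        for t in steps:
            best = max(best, (f(y + t * d) - f(y)) / t)
    return best


if __name__ == "__main__":
    # f(x) = |x| has a minimum at 0; both estimates are close to 1 >= 0,
    # which is consistent with 0 belonging to the Clarke subdifferential at 0.
    print(clarke_dd(abs, 0.0, 1.0), clarke_dd(abs, 0.0, -1.0))
    # For f(x) = -|x| the estimate in direction 1 is also close to 1, although
    # the one-sided directional derivative of f at 0 in that direction is -1.
    print(clarke_dd(lambda x: -abs(x), 0.0, 1.0))
```

Since $0 \in \partial f(\bar{x})$ holds exactly when $f^{\circ}(\bar{x}; d) \ge 0$ for every direction d, nonnegative estimates in the directions $d = \pm 1$ are what one expects at the minimizer of $|x|$. The second call shows that the Clarke derivative can be strictly larger than the usual one-sided directional derivative.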

Lemma 2

([16]). Let be locally Lipschitz at , and . Then, is also locally Lipschitz at , and

Lemma 3

([16]). Let be locally Lipschitz at and be locally Lipschitz at . Then,

Next, we give a scalar function, which will play an essential role in the proof of optimality conditions in Section 3.

Definition 1

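([20]). Let $C \subseteq \mathbb{R}^m$ be a pointed closed convex cone, $e \in \operatorname{int} C$. The Gerstewitz’s function $\xi_e: \mathbb{R}^m \to \mathbb{R}$ is defined as

$$\xi_e(y) := \inf \{ t \in \mathbb{R} : y \in t e - C \}, \quad y \in \mathbb{R}^m.$$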

Some properties of the Gerstewitz’s function are summarized in Lemma 4 below.

Lemma 4

([20]). Let be a pointed closed convex cone, . Then, the following applies:

- (i)

- is continuous and locally a Lipschitz function;

- (ii)

- ;

- (iii)

- ;

- (iv)

- ;

- (v)

- ,

where represents the dual cone of C.
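For intuition, the Gerstewitz’s function has a closed form in a simple special case. The following minimal Python sketch assumes the standard definition $\xi_e(y) = \inf\{t \in \mathbb{R} : y \in te - C\}$ and specializes it to the nonnegative orthant $C = \mathbb{R}^m_+$ with $e \in \operatorname{int} C$, where $y \in te - C$ means $y_i \le t e_i$ for all i, so that $\xi_e(y) = \max_i y_i / e_i$. The function name `gerstewitz` and the choice of cone are illustrative assumptions and are not taken from the paper.

```python
def gerstewitz(y, e):
    """Gerstewitz scalarization for the cone C = R^m_+ (assumed special case).

    y belongs to t*e - C exactly when y_i <= t*e_i for every i, so the
    infimum over t equals max_i y_i / e_i whenever every e_i is positive.
    """
    if len(y) != len(e):
        raise ValueError("y and e must have the same length")
    if any(ei <= 0 for ei in e):
        raise ValueError("e must lie in the interior of the nonnegative orthant")
    return max(yi / ei for yi, ei in zip(y, e))


if __name__ == "__main__":
    e = [1.0, 2.0]
    y = [-1.0, -2.0]
    # y lies in -int C, so the value is negative.
    print(gerstewitz(y, e))
    # Shifting y by t*e shifts the value by t (here t = 3).
    shifted = [yi + 3.0 * ei for yi, ei in zip(y, e)]
    print(gerstewitz(shifted, e))
```

In this special case one can check by hand the well-known properties of the scalarization: it is positively homogeneous and subadditive, it satisfies $\xi_e(y + te) = \xi_e(y) + t$, and $\xi_e(y) < 0$ holds exactly when $y \in -\operatorname{int} C$.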

Lemma 5

([22]). Let be an arbitrary collection of nonempty convex sets in and K be the convex cone generated by the union of the collection. Then, every nonzero vector of K can be expressed as a nonnegative linear combination of n or fewer linearly independent vectors, each belonging to a different .

Let T be a nonempty and arbitrary index set. Consider the following nonsmooth uncertain multiobjective fractional semi-infinite optimization problem (NUMFP):

where , are uncertain parameters from the uncertainty set . , , are locally Lipschitz functions, and the uncertainty map is defined as .

We consider the robust counterpart of the problem (NUMFP) as follows:

where the feasible set of the problem (NRMFP) is denoted by

Definition 2.

Let . is called a robust quasi-weak ε-efficient solution of the problem (NUMFP) if is a quasi-weak ε-efficient solution of the problem (NRMFP); that is,

Remark 1.

When $\varepsilon = 0$, the notion of a quasi-weak ε-efficient solution reduces to that of a weak efficient solution in [8].
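The robust notion in Definition 2 can be explored on a toy instance. The following minimal Python sketch assumes the usual form of the definition: writing $\varphi_i(x)$ for the worst-case value of the i-th ratio over its uncertainty set, $\bar{x}$ is a quasi-weak ε-efficient solution if no feasible x satisfies $\varphi_i(x) + \varepsilon_i \|x - \bar{x}\| < \varphi_i(\bar{x})$ for every i. The two objectives, the sampled uncertainty set `U`, the feasible grid `FEASIBLE` and all function names are hypothetical and chosen only for illustration; a grid check of this kind is a heuristic test, not a proof.

```python
# Sampled uncertainty set for the toy problem (hypothetical).
U = [k / 10.0 for k in range(11)]


def robust_objectives(x):
    """Worst-case values of two toy ratios f_i(x, u) / g_i(x, u) over u in U."""
    phi1 = max((abs(x) + u) / (2.0 + u) for u in U)
    phi2 = max((abs(x - 1.0) + u) / (2.0 + u) for u in U)
    return (phi1, phi2)


def is_quasi_weak_eps_efficient(x_bar, eps, feasible, tol=1e-12):
    """Check the assumed definition of quasi-weak eps-efficiency on a finite grid."""
    ref = robust_objectives(x_bar)
    for x in feasible:
        dist = abs(x - x_bar)
        vals = robust_objectives(x)
        # x rules out x_bar only if every penalized objective strictly improves.
        if all(v + e * dist < r - tol for v, e, r in zip(vals, eps, ref)):
            return False
    return True


# Feasible grid on [-1, 2] with step 0.01 (hypothetical feasible set).
FEASIBLE = [-1.0 + 0.01 * k for k in range(301)]

if __name__ == "__main__":
    print(is_quasi_weak_eps_efficient(-0.5, (0.5, 0.5), FEASIBLE))
    print(is_quasi_weak_eps_efficient(-0.5, (0.1, 0.1), FEASIBLE))
    print(is_quasi_weak_eps_efficient(0.0, (0.0, 0.0), FEASIBLE))
```

In this toy instance both worst-case objectives decrease with slope 1/3 when moving right from the point -0.5, so the point passes the check when each tolerance exceeds 1/3 and fails it for smaller tolerances. The point 0 minimizes the first worst-case objective and therefore passes the check even with zero tolerances, which corresponds to the weak efficient case of Remark 1.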

3. Optimality Conditions

In this section, we establish necessary and sufficient optimality conditions for quasi-weak ε-efficient solutions of the problem (NRMFP). We begin with the following constraint qualification.

Definition 3

([23]). For , let and

where .

Definition 4

([16]). Let . The Basic Constraint Qualification (BCQ) holds at if .

Next, we present a necessary optimality condition for quasi-weak ε-efficient solutions of the problem (NRMFP) by using the properties of the Gerstewitz’s function.

Theorem 1.

In problem (NRMFP), let . If is a quasi-weak ε-efficient solution of the problem (NRMFP) and the BCQ holds at , then there exist and , such that

Proof.

Since is a quasi-weak -efficient solution of the problem (NRMFP), we obtain

Let , by Lemma 4 (iii), then we obtain

As , thus,

Note that then , and it follows from Lemma 4 (ii) that

together with (3), and then we have

Therefore,

and this means that is a local minimizer of on , and is also a local minimizer of on . By Lemma 1 (iii), we obtain

Due to

combined with (4), we arrive at

Since is locally Lipschitz at and is locally Lipschitz at , it follows from Lemma 3 that

According to Lemma 4 (v), there exists such that

From Lemma 1 (i), it leads to

According to Lemma 2, we obtain

Since the BCQ holds, it leads to

By Lemma 5, there exist and such that

Let . If , then (1) and (2) hold due to . When , we take multipliers in (5) to obtain the desired result and complete the proof. □

Remark 2.

In studies [18,19], the necessary optimality conditions were obtained by utilizing a separation theorem and an alternative theorem, respectively. Different from [18,19], the necessary optimality condition of the above Theorem 1 is directly proved by using the properties of the Gerstewitz’s function. However, if and T is a finite index set, then Theorem 1 of this paper will reduce to Theorem 1 in [8].

Before establishing a sufficient optimality condition for quasi-weak ε-efficient solutions of the problem (NRMFP), we introduce the following two kinds of generalized convexity for the objective and constraint functions of the problem (NRMFP).

Definition 5.

In the problem (NRMFP), is called an approximate convex function at if for any , , , and , such that

Here is an example of an approximate convex function.

Example 1.

In the problem (NRMFP), let , , , , and

By a simple calculation, we obtain . Let , then we have , and . For any , , , , , since

We obtain an approximate convex function at .

Definition 6.

In the problem (NRMFP), let . is called an approximate pseudo/quasi-convex function at if for any , , , , , and , , such that

Remark 3.

Clearly, if is an approximate convex function at , then is an approximate pseudo/quasi-convex function at ; conversely, it is not true (see the following Example 2).

Example 2.

In problem (NRMFP), let ,

where

, , ,

Through a clear calculation, we obtain . Taking , , we obtain and . For any , , , , and , since

We obtain , an approximate pseudo/quasi-convex function, at . However, for and , one has

Therefore, is not an approximate convex function at .

The following Theorem 2 presents a sufficient optimality condition for quasi-weak ε-efficient solutions of the problem (NRMFP).

Theorem 2.

In problem (NRMFP), let and be an approximate pseudo/quasi-convex function at . If there exist multipliers and , such that (1) and (2) hold, then is a quasi-weak ε-efficient solution of the problem (NRMFP).

Proof.

It follows from (1) that there exist , , and , such that

Due to , for any , , we obtain

This is equivalent to

When , . If , from (2), we derive

Since is an approximate pseudo/quasi-convex function at , for , we have

Combining with (6) and (7), we obtain

Conversely, suppose that is not a quasi-weak -efficient solution of the problem (NRMFP). Then, there exists such that

which implies that

Since is an approximate pseudo/quasi-convex at , for , and , , we obtain

Noticing that , we arrive at

which contradicts (8). Hence, is a quasi-weak -efficient solution to the problem (NRMFP).

□

4. Saddle Point Theorems

In this section, we establish saddle point theorems for quasi-weak ε-efficiency. We first give the definition of a quasi ε-weak saddle point for the problem (NRMFP).

Let and . The Lagrangian function of the problem (NRMFP) is defined as

where

Definition 7.

In the problem (NRMFP), let . is said to be a quasi ε-weak saddle point if

The following example is presented to illustrate Definition 7.

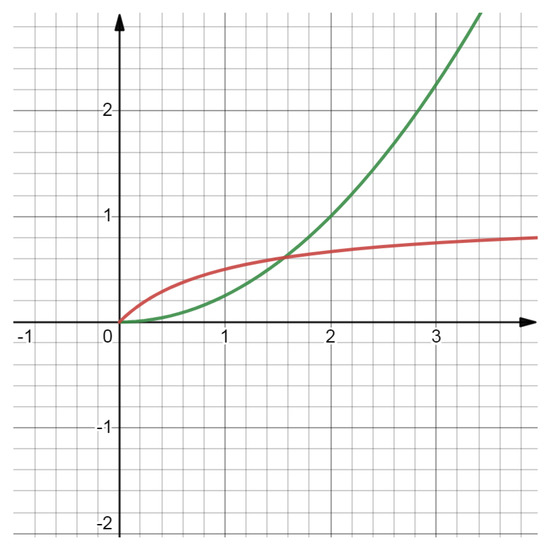

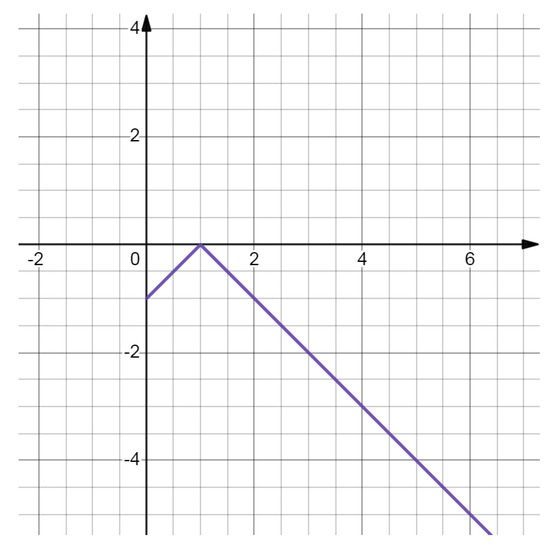

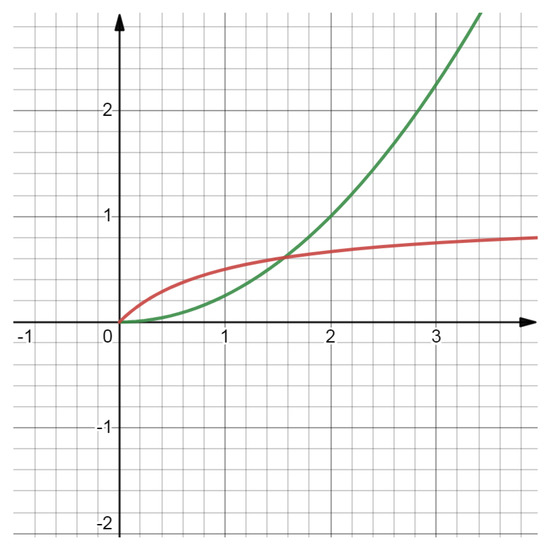

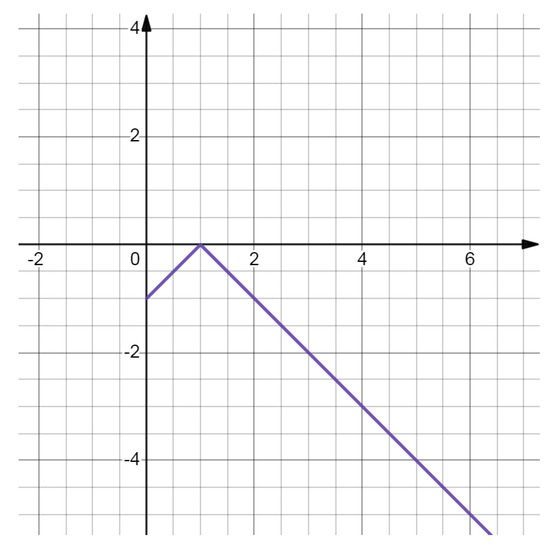

Example 3.

In the problem (NRMFP), let , , , and . Define

Via calculation, we obtain . For any , the Lagrangian function of the problem (NRMFP) is

Let , , and . It is easy to obtain

Hence,

The above result is seen in the following Figure 1 and Figure 2. Therefore, is a quasi ε-weak saddle point of the problem (NRMFP).

Figure 1.

The illustration of .

Figure 2.

The illustration of .

Theorem 3.

In the problem (NRMFP), suppose that is a quasi-weak ε-efficient solution of the problem (NRMFP) and Theorem 1 is satisfied. If is an approximate convex function at , then is a quasi -weak saddle point, where .

Proof.

Firstly, we verify (10) holds. Since is a quasi-weak -efficient solution of the NRMFP, it follows from Theorem 1 that

Therefore, there exist , , , and such that

Due to , for , , we have

Suppose that is not a quasi -weak saddle point of the problem (NRMFP); then, there exists such that

From (9), we deduce

Thus,

Since is an approximate convex function at , there exist , and such that

Let , and then together with (13) we have

Then, we obtain

which contradicts (12).

Next, we prove (11) holds. Since , for any , we have ; hence,

That is,

which implies that

□

The next Theorem 4 shows that a quasi ε-weak saddle point is a quasi-weak ε-efficient solution of the problem (NRMFP).

Theorem 4.

In the problem (NRMFP), if is a quasi -weak saddle point and is an optimal solution of the problem , then is a quasi-weak ε-efficient solution, where .

Proof.

Since is a quasi -weak saddle point of the problem (NRMFP), it follows from (10) that

Because is an optimal solution of the problem , it holds that

Together with (14) and (15), we obtain

Note that , and we obtain

Hence, is a quasi-weak -efficient solution of the problem (NRMFP). □

5. Conclusions

We have established a necessary condition for robust approximate quasi-weak efficient solutions of the problem (NUMFP) based on the properties of the Gerstewitz’s function. We have also introduced two kinds of generalized convex function pairs for the problem (NUMFP), and under these assumptions we have presented sufficient conditions and saddle point theorems for robust approximate quasi-weak efficient solutions.

It would be meaningful to further investigate properly efficient solutions, duality theorems and some special applications for the problem (NUMFP), such as multiobjective optimization problems and minimax optimization problems. Indeed, ref. [4] has discussed duality theorems and special applications for a nonsmooth semi-infinite multiobjective optimization problem. Therefore, such further work seems feasible.

Author Contributions

Conceptualization, L.G. and G.Y.; methodology, L.G., G.Y. and W.H.; writing—original draft, L.G.; writing—review and editing, L.G., G.Y. and W.H. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the Fundamental Research Funds for the Central Universities (No. 2021KYQD23, No. 2022XYZSX03), in part by the Natural Science Foundation of Ningxia Provincial of China (No. 2022AAC03260), in part by the Key Research and Development Program of Ningxia (Introduction of Talents Project) (No. 2022BSB03046), in part by the Natural Science Foundation of China under Grant No. 11861002, in part by the Key Project of North Minzu University under Grant No. ZDZX201804, and in part by the Postgraduate Innovation Project of North Minzu University (No. YCX23081).

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Khantree, C.; Wangkeeree, R. On quasi approximate solutions for nonsmooth robust semi-infinite optimization problems. Carpathian J. Math. 2019, 35, 417–426.

2. Kanzi, N.; Nobakhtian, S. Nonsmooth semi-infinite programming problems with mixed constraints. J. Math. Anal. Appl. 2009, 351, 170–181.

3. Sun, X.K.; Teo, K.L.; Zeng, J. Robust approximate optimal solutions for nonlinear semi-infinite programming with uncertainty. Optimization 2020, 69, 2109–2129.

4. Pham, T.H. On Isolated/Properly Efficient Solutions in Nonsmooth Robust Semi-infinite Multiobjective Optimization. Bull. Malays. Math. Sci. Soc. 2023, 46, 73.

5. Chuong, T.D. Nondifferentiable fractional semi-infinite multiobjective optimization problems. Oper. Res. 2016, 44, 260–266.

6. Mishra, S.K.; Jaiswal, M. Optimality and duality for nonsmooth multiobjective fractional semi-infinite programming problem. Adv. Nonlinear Var. Inequalities 2013, 16, 69–83.

7. Antczak, T. Sufficient optimality conditions for semi-infinite multiobjective fractional programming under (Φ,ρ)-V-invexity and generalized (Φ,ρ)-V-invexity. Filomat 2017, 30, 3649–3665.

8. Pan, X.; Yu, G.L.; Gong, T.T. Optimality conditions for generalized convex nonsmooth uncertain multi-objective fractional programming. J. Oper. Res. Soc. 2022, 1, 1–18.

9. Dem’yanov, V.F.; Vasil’ev, L.V. Nondifferentiable Optimization; Optimization Software, Inc., Publications Division: New York, NY, USA, 1985.

10. Han, W.Y.; Yu, G.L.; Gong, T.T. Optimality conditions for a nonsmooth uncertain multiobjective programming problem. Complexity 2020, 2020, 1–8.

11. Long, X.J.; Huang, N.J.; Liu, Z.B. Optimality conditions, duality and saddle points for nondifferentiable multiobjective fractional programs. J. Ind. Manag. Optim. 2017, 4, 287–298.

12. Kuroiwa, D.; Lee, G.M. On robust multiobjective optimization. J. Nonlinear Convex. Anal. 2012, 40, 305–317.

13. Ben-Tal, A.; Ghaoui, L.E.; Nemirovski, A. Robust Optimization; Princeton University Press: Princeton, NJ, USA, 2009; pp. 16–64.

14. Caristi, G.; Ferrara, M. Necessary conditions for nonsmooth multiobjective semi-infinite problems using Michel-Penot subdifferential. Decis. Econ. Financ. 2017, 40, 103–113.

15. Kabgani, A.; Soleimani-damaneh, M. Characterization of (weakly/properly/robust) efficient solutions in nonsmooth semi-infinite multiobjective optimization using convexificators. Optimization 2018, 67, 217–235.

16. Clarke, F.H. Optimization and Nonsmooth Analysis; Wiley: New York, NY, USA, 1983; pp. 46–78.

17. Fakhar, M.; Mahyarinia, M.R.; Zafarani, J. On nonsmooth robust multiobjective optimization under generalized convexity with applications to portfolio optimization. Eur. J. Oper. Res. 2018, 265, 39–48.

18. Lee, G.M.; Kim, G.S.; Dinh, N. Optimality Conditions for Approximate Solutions of Convex Semi-Infinite Vector Optimization Problems. Recent Dev. Vector Optim. 2012, 1, 275–295.

19. Chen, J.W.; Köbis, E.; Yao, J.C. Optimality Conditions and Duality for Robust Nonsmooth Multiobjective Optimization Problems with Constraints. J. Optim. Theory Appl. 2019, 265, 411–436.

20. Gerth, C.; Weidner, P. Nonconvex separation theorems and some applications in vector optimization. J. Optim. Theory Appl. 1990, 67, 297–320.

21. Han, Y. Connectedness of the approximate solution sets for set optimization problems. Optimization 2022, 71, 4819–4834.

22. Rockafellar, R.T. Convex Analysis; Princeton University Press: Princeton, NJ, USA, 1997; pp. 10–32.

23. Tung, N.M.; Van Duy, M. Constraint qualifications and optimality conditions for robust nonsmooth semi-infinite multiobjective optimization problems. 4OR 2022, 21, 151–176.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).