Abstract

This paper considers stochastic inertial bidirectional associative memory neural networks (SIBAMNNs) on time scales, a framework that unifies and generalizes the continuous and discrete cases. We first derive criteria for the existence and uniqueness of both periodic and almost periodic solutions of SIBAMNNs on time scales. Based on these, criteria for their exponential stability on time scales are established. The effectiveness of the proposed criteria is demonstrated by numerical simulation. The study provides a new way to unify and generalize continuous and discrete SIBAMNNs and is applicable to some other practical neural network systems on time scales.

Keywords:

time-scale analysis in control systems; SIBAMNNs on time scales; periodic solution; almost periodic solution; exponential stability

MSC:

03F03

1. Introduction

Bidirectional associative memory neural networks (BAMNNs), originally introduced and investigated by Kosko in 1988 [1], are a special class of recurrent neural networks that can store bipolar vector pairs. BAMNNs have been widely studied because of their applications in many fields [2,3,4,5,6,7], such as signal processing, image processing, associative memories and pattern recognition. Physically, BAMNNs can be construed as a super-damping system (i.e., the damping tends to infinity), but systems with weak damping play more important roles in practical applications. Therefore, weakly damped BAMNNs, also called inertial BAMNNs, have been proposed [8].

Artificial intelligence is increasingly required to be more intelligent and to handle complex cases. As a major branch of modern artificial intelligence, neural networks possess the advantages of massive parallel processing, self-learning abilities and nonlinear mapping, which have motivated the development of intelligent control systems [9,10,11]. Neural networks are commonly divided into continuous [12,13] and discrete [14,15] cases, both of which have been thoroughly studied and widely applied. However, many complex systems include both continuous and discrete components, whereas previous research has treated the two cases separately [16]. It is therefore desirable, though challenging, to study neural networks within a unified framework that covers both continuous and discrete systems.

Fortunately, the calculus of time scales initiated by Hilger [17] provides a natural way to unify and generalize the continuous and discrete cases. Many works have generalized classical methods to the time-scale framework, including the Darboux transformation method [18], the Hirota bilinear method [19], the Lie symmetry method [20] and the Lyapunov function method [21]. Recently, neural networks on time scales have attracted wide attention, especially their equilibrium [22], periodic [23], almost periodic [24], pseudo-almost periodic [25] and almost automorphic [26] solutions. Moreover, some results on the dynamical properties of solutions have been obtained, such as existence, stability, synchronization, and periodic and almost periodic oscillatory behavior. For instance, Yang et al. [23] studied the existence and exponential stability of periodic solutions of stochastic high-order Hopfield neural networks on time scales. Zhou et al. [24] investigated the existence and exponential stability of almost periodic solutions of neutral-type BAM neural networks on time scales.

The study of stochastic inertial BAM neural networks (SIBAMNNs) on time scales is still new, although there have been some studies of the continuous and discrete cases. Considering the importance of SIBAMNNs and the significance of studying the system under a unified framework, we explore the existence, uniqueness and stability of both periodic and almost periodic solutions of SIBAMNNs on time scales with discrete and distributed delays, which unifies and generalizes the continuous and discrete cases. The analysis of SIBAMNNs on time scales builds a bridge between continuous and discrete analyses and provides an effective way of studying complex models that include both continuous and discrete factors, such as hybrid systems. The remainder of the paper is organized as follows. In Section 2, we give some definitions and lemmas for time scales. In Section 3, criteria for the existence and uniqueness of periodic and almost periodic solutions of SIBAMNNs on time scales are derived. The exponential stability of SIBAMNNs on time scales is discussed in Section 4. The effectiveness of the proposed criteria is demonstrated by numerical simulation in Section 5.

2. Preliminaries

Definition 1

([27] (periodic time scale)). A time scale $\mathbb{T}$ is called a periodic time scale if there exists $p>0$ such that $t\pm p\in\mathbb{T}$ for all $t\in\mathbb{T}$.

Definition 2

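([27] (periodic function)). Let $\mathbb{T}\neq\mathbb{R}$ be a periodic time scale with period p. The function $f:\mathbb{T}\to\mathbb{R}$ is called a periodic function with period $\omega$ if there exists $n\in\mathbb{N}$ such that $\omega=np$ and $f(t\pm\omega)=f(t)$ for all $t\in\mathbb{T}$, where ω is the smallest number satisfying $f(t\pm\omega)=f(t)$.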
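As a hedged illustration of a periodic time scale (one for which t in the time scale implies that t ± p is also in it), the sketch below tests this translation invariance numerically on sample points. The time scale used here, the union of the intervals [2k, 2k+1] over all integers k, and the helper names `in_time_scale` and `is_translation_invariant` are assumptions chosen for illustration; they do not come from the paper.

```python
import math

def in_time_scale(t, a=1.0, b=1.0):
    """Membership test for T = union over integers k of [k(a+b), k(a+b) + a]."""
    r = t - math.floor(t / (a + b)) * (a + b)  # offset of t inside its cell of length a+b
    return r <= a + 1e-12

def is_translation_invariant(p, samples):
    """Check on the sample points that t in T implies t + p in T and t - p in T."""
    return all(in_time_scale(t + p) and in_time_scale(t - p)
               for t in samples if in_time_scale(t))

# Sample points are multiples of 1/8, which are exact in floating point.
samples = [k / 8 for k in range(-80, 81)]

print(is_translation_invariant(2.0, samples))  # shift by a + b
print(is_translation_invariant(1.0, samples))  # shift by a only
```

A finite sample can only refute invariance, not prove it; here the shift p = 2 passes on every sample while p = 1 fails, for example at t = 0.5.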

Definition 3

([28] (almost periodic function)). Let be a periodic time scale. The function can be called almost periodic if the ε-translation set of x

is relatively dense for all . I.e., for any , there exists a constant such that each interval of length contains a such that

τ is called the ε-translation number of x and is the inclusion length of .
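The notion of an ε-translation number can be explored numerically. The following is a minimal sketch, assuming the time scale is the set of integers and taking x(n) = cos(2π√2 n) as a test function; both choices, and the function name `translation_numbers`, are illustrative assumptions. The supremum over t is approximated by a maximum over a finite window, so the result is indicative only.

```python
import math

ALPHA = math.sqrt(2.0)  # irrational frequency: x is almost periodic but not periodic on Z

def x(n):
    return math.cos(2.0 * math.pi * ALPHA * n)

def translation_numbers(eps, tau_max=100, window=2000):
    """Return the tau in 1..tau_max with max over the window of |x(n + tau) - x(n)| < eps."""
    found = []
    for tau in range(1, tau_max + 1):
        worst = max(abs(x(n + tau) - x(n)) for n in range(window))
        if worst < eps:
            found.append(tau)
    return found

taus = translation_numbers(0.2)
gaps = [b - a for a, b in zip(taus, taus[1:])]
print(taus)
print(max(gaps))  # a bounded gap is what "relatively dense" asks for on this range
```

The largest gap between consecutive translation numbers plays the role of the inclusion length on the sampled range.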

Definition 4

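([29,30] (jump operator)). Let $\mathbb{T}$ be a time scale.

- 1. The forward jump operator $\sigma:\mathbb{T}\to\mathbb{T}$ is defined by $\sigma(t)=\inf\{s\in\mathbb{T}: s>t\}$.

- 2. The graininess function $\mu:\mathbb{T}\to[0,\infty)$ is defined by $\mu(t)=\sigma(t)-t$.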
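The two operators can be sketched for a time scale given as a finite sorted list of points. This is an illustration under stated assumptions: the list `ts` and the function names `sigma` and `mu` are hypothetical, a finite list can only represent isolated points, and the usual convention that the forward jump of the maximum is the maximum itself is adopted.

```python
from bisect import bisect_right

def sigma(ts, t):
    """Forward jump: smallest point of ts strictly greater than t (t itself at the maximum)."""
    i = bisect_right(ts, t)
    return ts[i] if i < len(ts) else t

def mu(ts, t):
    """Graininess: distance from t to its forward jump."""
    return sigma(ts, t) - t

ts = [0.0, 1.0, 2.0, 2.5, 4.0]  # sorted sample points of a hypothetical time scale
for t in ts:
    print(t, sigma(ts, t), mu(ts, t))
```

For the real line the graininess is identically zero, and for the integers it is identically one; the uneven list above shows a graininess that varies from point to point.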

Definition 5

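([29,30] (rd-continuous and regressive)).

- 1. A function $f:\mathbb{T}\to\mathbb{R}$ is called rd-continuous provided it is continuous at right-dense points in $\mathbb{T}$ and its left-sided limits exist (finite) at left-dense points in $\mathbb{T}$. The set of rd-continuous functions is denoted by $C_{rd}=C_{rd}(\mathbb{T})=C_{rd}(\mathbb{T},\mathbb{R})$.

- 2. A function $p:\mathbb{T}\to\mathbb{R}$ is regressive provided $1+\mu(t)p(t)\neq 0$ for all $t\in\mathbb{T}^{\kappa}$, where $\mathbb{T}^{\kappa}=\mathbb{T}\setminus\{m\}$ if the maximum m of $\mathbb{T}$ is left-scattered. Otherwise, $\mathbb{T}^{\kappa}=\mathbb{T}$. A function p is positively regressive provided $1+\mu(t)p(t)>0$ for all $t\in\mathbb{T}$.

- 3. The set of all regressive and rd-continuous functions is denoted by $\mathcal{R}=\mathcal{R}(\mathbb{T})=\mathcal{R}(\mathbb{T},\mathbb{R})$. The set of all positively regressive and rd-continuous functions is denoted by $\mathcal{R}^{+}=\mathcal{R}^{+}(\mathbb{T},\mathbb{R})$.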

Definition 6

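([29]). A function $f:\mathbb{T}\to\mathbb{R}$ is delta-differentiable at $t\in\mathbb{T}^{\kappa}$ if there is a number $f^{\Delta}(t)$ such that, for all $\varepsilon>0$, there exists a neighborhood U of t (i.e., $U=(t-\delta,t+\delta)\cap\mathbb{T}$ for some $\delta>0$) such that

$$|f(\sigma(t))-f(s)-f^{\Delta}(t)(\sigma(t)-s)|\le\varepsilon|\sigma(t)-s|\quad\text{for all } s\in U.$$

$f^{\Delta}(t)$ is called the delta derivative of f at t. f is delta-differentiable on $\mathbb{T}^{\kappa}$ provided $f^{\Delta}(t)$ exists for all $t\in\mathbb{T}^{\kappa}$.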
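To make the definition concrete, the following block collects some standard special cases of the delta derivative from the time-scale calculus in [29]. The step size h and the test function f(t) = t² are chosen here for illustration, with σ denoting the forward jump operator and μ the graininess.

```latex
% Standard special cases of the delta derivative (time-scale calculus, see [29]).
\begin{align*}
  \text{$t$ right-scattered, $f$ continuous at $t$:}\quad
    & f^{\Delta}(t) = \frac{f(\sigma(t)) - f(t)}{\mu(t)}, \\
  \text{$t$ right-dense:}\quad
    & f^{\Delta}(t) = \lim_{s \to t} \frac{f(t) - f(s)}{t - s}, \\
  \mathbb{T} = \mathbb{R}:\quad
    & f^{\Delta}(t) = f'(t), \\
  \mathbb{T} = h\mathbb{Z}:\quad
    & f^{\Delta}(t) = \frac{f(t + h) - f(t)}{h}, \\
  f(t) = t^{2}:\quad
    & f^{\Delta}(t) = t + \sigma(t).
\end{align*}
```

The last line reduces to 2t on the real line and to 2t + 1 on the integers, which shows how one formula covers both the derivative and the forward difference.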

Lemma 1

Lemma 2

([29]). If , then

Proof.

Using Theorem 2.62 in [29], (3) can be easily derived. □

Lemma 3

([29]). If and , then

- 1.

- 2.

- 3.

- The function defined by for all is also an element of .

- 4.

- .

For more properties of exponential functions on time scales, please refer to Section 2.2 in [29].
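As a hedged numerical sketch of the time-scale exponential function, the code below uses the standard product formula that holds on a time scale of isolated points: e_p(t, s) is the product of the factors 1 + μ(τ)p(τ) over the points τ with s ≤ τ < t, provided p is regressive. The function name `exp_time_scale` and the sample grids are assumptions made for illustration.

```python
import math

def exp_time_scale(p, points, s_idx, t_idx):
    """e_p(t, s) on isolated points: product of 1 + mu(tau) * p(tau) for tau in [s, t)."""
    value = 1.0
    for i in range(s_idx, t_idx):
        step = points[i + 1] - points[i]   # graininess mu at points[i]
        factor = 1.0 + step * p(points[i])
        if factor == 0.0:
            raise ValueError("p is not regressive at %r" % points[i])
        value *= factor
    return value

# On the integers with p = 1 the exponential is 2 ** (t - s).
integers = [0.0, 1.0, 2.0, 3.0]
e_int = exp_time_scale(lambda t: 1.0, integers, 0, 3)

# On a fine grid h*Z the value approaches the classical exponential.
h = 2.0 ** -10
grid = [k * h for k in range(1025)]
e_fine = exp_time_scale(lambda t: -1.0, grid, 0, 1024)

print(e_int, e_fine, math.exp(-1.0))
```

The fine-grid value differs from exp(-1) by a small discretization error that shrinks with h, which illustrates how the continuous exponential is recovered as the graininess tends to zero.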

Lemma 4

([31]). For any and ,

Lemma 5

([32]). For the -stochastic integral, if , then and the -isometry

holds.
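An isometry of this kind can be checked by simulation in a simple discrete setting. The sketch below is an illustration under explicit assumptions, not the construction in [32]: the time scale is hZ, the Brownian increments over each step are independent normal variables with variance h, the integrand f is deterministic, and the stochastic integral is taken to be the sum of f(t_k) times the increment. The name `ito_isometry_check` is hypothetical.

```python
import random

def ito_isometry_check(f, h, n_steps, n_paths, seed=0):
    """Compare a Monte Carlo estimate of E[(sum f(t_k) dW_k)^2] with sum f(t_k)^2 * h."""
    rng = random.Random(seed)
    sd = h ** 0.5                        # standard deviation of one Brownian increment
    second_moment = 0.0
    for _ in range(n_paths):
        integral = 0.0
        for k in range(n_steps):
            dW = rng.gauss(0.0, sd)      # increment of W over [k*h, (k+1)*h)
            integral += f(k * h) * dW
        second_moment += integral * integral
    lhs = second_moment / n_paths        # sample second moment of the stochastic sum
    rhs = sum(f(k * h) ** 2 * h for k in range(n_steps))
    return lhs, rhs

lhs, rhs = ito_isometry_check(lambda t: 1.0 + t, h=0.5, n_steps=8, n_paths=20000)
print(lhs, rhs)
```

The two numbers agree up to Monte Carlo error, which decreases like the inverse square root of the number of simulated paths.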

3. Existence and Uniqueness of Solution for SIBAMNNs on Time Scales

Consider the SIBAMNNs [33] on time scales

Using variable substitution

3.1. Periodic Solution

Assumption 1.

, , and are Lipschitz-continuous with positive constants , , and , respectively, and for .

Assumption 2.

with . , , , , , , , , , , , are periodic functions with period .

Lemma 6.

Suppose Assumptions 1 and 2 hold, for , is an ω-periodic solution of (6) if and only if it is the solution of

where

Proof.

Multiplying both sides of (8) by , using Lemma 2, we have

Integrating both sides of (17) from t to and choosing , we obtain

Since , then satisfies (13). Similarly, we can obtain that , , satisfy (14)–(16). Conversely, (18) can be derived from (13); differentiating both sides of (18), we obtain that satisfies (8). Similarly, , , satisfy (9)–(11). □
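The idea of the proof (multiply by an exponential factor, integrate over one period, and use periodicity) can be seen in a classical scalar analogue. The following worked derivation assumes the time scale is the real line and uses a hypothetical equation x' = -ax + f with a > 0 and an ω-periodic forcing f; it is a sketch of the technique, not a reconstruction of (13)–(18).

```latex
% Classical analogue on T = R (an illustrative assumption, not system (6)):
% x'(t) = -a x(t) + f(t), with a > 0 and f(t + \omega) = f(t).
\begin{align*}
  \bigl(e^{a s} x(s)\bigr)' &= e^{a s} f(s), \\
  e^{a (t + \omega)} x(t + \omega) - e^{a t} x(t)
    &= \int_{t}^{t + \omega} e^{a s} f(s)\, ds, \\
  x(t) &= \int_{t}^{t + \omega} \frac{e^{a (s - t)}}{e^{a \omega} - 1}\, f(s)\, ds
    \qquad \text{using } x(t + \omega) = x(t).
\end{align*}
```

Conversely, differentiating the last integral with respect to t recovers the differential equation, which mirrors the two directions of the equivalence in the lemma.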

Let

with norm

where . is the family of bounded -measurables. is the correspondent expectation operator and R is finite, which will be determined later, then is a Banach space [34].

Theorem 1

3.2. Almost Periodic Solution

Assumption 3.

with . , , , , , , , , , , , are almost periodic with period .

Lemma 7

([35]). If and for , then for and ,

Lemma 8

([36]). If , then

We now give an important lemma to be used in the study of the existence of an almost periodic solution for (6) and (7).

Lemma 9.

If with , , are almost periodic, then

Proof.

Using Lemmas 2 and 3, we obtain

and

Multiplying both sides of (40) by and using (41),

Integrating (42) from to t, we have

Similarly, we can obtain

which means (35) holds.

For (36), it follows from Lemma 2 that

Multiplying both sides of (43) by and integrating it from to t, using Lemma 3,

we have

where

by using Lemma 7. With derived from Lemma 1, it follows from (44) that

Using , and Lemmas 3 and 8, it follows from (45) that

Thus (36) holds. Similarly, (37) can be derived.

For (38), using , and Lemmas 3 and 8,

Similarly, (39) can be derived. □

Let with norm

Theorem 2

Proof.

From (60) and (64)–(66), for any , there exists a corresponding which produces

Therefore, is almost periodic.

From (8), we have

where , . As in (50), we have

Similarly, it follows from (9)–(11) that

Define an operator on with

in which

First, we prove . Using Lemma 9, we obtain

Using Lemma 4, it follows from (59) that

Since

where

Using Lemma 5 and (38),

Using Lemmas 5 and 7,

Second, we prove . It follows from (55)–(58) that

Let

From (67)–(70),

Choosing , we obtain , which means .

4. Exponential Stability

Proof.

From (80)–(83), choose

and . If (80) holds, then . Since for , then , and we obtain

for the periodic solution and

for the almost periodic solution. We claim that for

,

i.e., for any , the periodic solution satisfies

and the almost periodic solution satisfies

We argue by contradiction. Without loss of generality, assume that (84) is not satisfied; then there exist and such that

Hence, there exists a constant such that

It follows from (78) that

Since , we obtain from (86), which is a contradiction. Therefore, (84) holds. Similarly, we can derive that (85) holds. Therefore,

which means that the solutions of systems (6) and (7) are exponentially stable. □

Let and be two solutions of systems (6) and (7) with initial data , and , and , , , , . For initial state, set , , , for . From (8), we have

Let . It follows from (78) that

where

Then, from (79), we have

Let , , . Similarly, we have

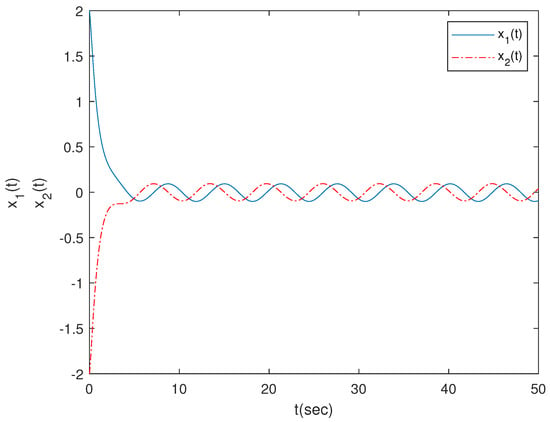

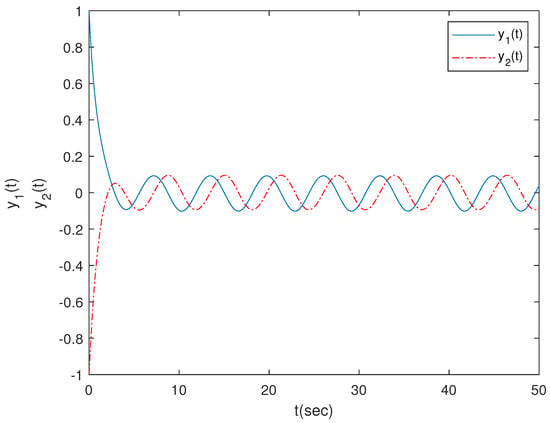

5. Numerical Example

The accuracy and effectiveness of the obtained results are demonstrated by a numerical example in this section.

Let . Consider the SIBAMNNs on time scales

Choose

, , , , , , , , , , , , , , , , , , , .

(1) For , Assumption 1, Assumption 2, (19) and (77) are satisfied under the above parameters, which means (87) and (88) have a periodic solution which is exponentially stable (Figure 1 and Figure 2).

(2) For and , , , Assumptions 1 and 2 and (19) and (77) are satisfied under the above parameters, which means (87) and (88) have a periodic solution which is exponentially stable.

(3) For general time scales, Assumption 1, Assumption 3 and (77) are satisfied under the above parameters, which means (87) and (88) have an almost periodic solution which is exponentially stable.

Remark 1.

It follows from the above analysis that changes in the time scale can cause changes in the periodicity and stability of the solutions of SIBAMNNs.
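The dependence of stability on the time scale can already be seen in a toy scalar equation. The sketch below is an illustration only and is not system (87) and (88): it assumes the hypothetical dynamic equation x^Δ = -a x on hZ, which reads x(t + h) = (1 - a h) x(t), so the solution decays exactly when |1 - a h| < 1, whereas on the real line it decays for every a > 0. The function name `decays_on_hZ` and the parameter values are assumptions.

```python
def decays_on_hZ(a, h, steps=200, tol=1e-6):
    """Iterate x(t + h) = x(t) + h * (-a * x(t)) from x(0) = 1; report whether |x| fell below tol."""
    x = 1.0
    for _ in range(steps):
        x += h * (-a * x)
    return abs(x) < tol

a = 3.0
for h in (0.1, 0.5, 1.0):
    # Second column: the analytic criterion |1 - a*h| < 1; third column: the simulation.
    print(h, abs(1.0 - a * h) < 1.0, decays_on_hZ(a, h))
```

With a = 3 the solution decays for h = 0.1 and h = 0.5 but grows for h = 1, so the same coefficient yields different stability behavior on different time scales.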

6. Conclusions

We derive criteria for the existence, uniqueness and exponential stability of both periodic and almost periodic solutions of SIBAMNNs on time scales, which unify and generalize the continuous and discrete cases and provide greater flexibility in handling time scales of practical importance. The methods for continuous-time inertial BAM neural networks in [38] and for discrete-time inertial neural networks in [39] cannot be applied directly to system (6). The exponential stability of the solution on time scales is investigated without constructing a Lyapunov function, which offers a way to study the stability of neural networks on time scales for which Lyapunov functions are not easy to construct. Moreover, the inertial term, the stochastic process and the distributed time delay on time scales are considered simultaneously, which makes the model more widely applicable. Furthermore, new estimates for exponential functions with almost periodic parameters on time scales are derived in Lemma 9. It is meaningful to study the existence and stability of periodic and almost periodic solutions for systems on time scales, since this unifies the continuous and discrete situations. One possible future direction is to extend the techniques and methods used in this paper to other types of neural networks on time scales. Another is the study of periodic, almost periodic, pseudo-almost periodic and almost automorphic solutions of neural networks on time scales.

Author Contributions

Conceptualization, methodology, writing—original draft, validation, M.L.; supervision, funding acquisition, H.D.; resources, validation, Y.Z.; writing—reviewing and editing, validation, funding acquisition, project administration, Y.F. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (Nos. 12105161, 11975143) and the Natural Science Foundation of Shandong Province (No. ZR2019QD018).

Data Availability Statement

Not applicable.

Acknowledgments

We would like to express our great appreciation to the editors and reviewers.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Kosko, B. Bidirectional associative memories. IEEE Trans. Syst. Man Cybern. 1988, 18, 49–60.

- Raja, R.; Anthoni, S.M. Global exponential stability of BAM neural networks with time-varying delays: The discrete-time case. Commun. Nonlinear Sci. Numer. Simulat. 2011, 16, 613–622.

- Shao, Y.F. Existence of exponential periodic attractor of BAM neural networks with time-varying delays and impulses. Neurocomputing 2012, 93, 1–9.

- Yang, W.G. Existence of an exponential periodic attractor of periodic solutions for general BAM neural networks with time-varying delays and impulses. Appl. Math. Comput. 2012, 219, 569–582.

- Zhang, Z.Q.; Liu, K.Y.; Yang, Y. New LMI-based condition on global asymptotic stability concerning BAM neural networks of neutral type. Neurocomputing 2012, 81, 24–32.

- Zhu, Q.X.; Rakkiyappan, R.; Chandrasekar, A. Stochastic stability of Markovian jump BAM neural networks with leakage delays and impulse control. Neurocomputing 2014, 136, 136–151.

- Lin, F.; Zhang, Z.Q. Global asymptotic synchronization of a class of BAM neural networks with time delays via integrating inequality techniques. J. Syst. Sci. Complex. 2020, 33, 366–392.

- Zhou, F.Y.; Yao, H.X. Stability analysis for neutral-type inertial BAM neural networks with time-varying delays. Nonlinear Dynam. 2018, 92, 1583–1598.

- Roshani, G.H.; Hanus, R.; Khazaei, A.; Zych, M.; Nazemi, E.; Mosorov, V. Density and velocity determination for single-phase flow based on radiotracer technique and neural networks. Flow Meas. Instrum. 2018, 61, 9–14.

- Azimirad, V.; Ramezanlou, M.T.; Sotubadi, S.V.; Janabi-Sharifi, F. A consecutive hybrid spiking-convolutional (CHSC) neural controller for sequential decision making in robots. Neurocomputing 2022, 490, 319–336.

- Mozaffari, H.; Houmansadr, A. E2FL: Equal and equitable federated learning. arXiv 2022, arXiv:2205.10454.

- Lakshmanan, S.; Lim, C.P.; Prakash, M.; Nahavandi, S.; Balasubramaniam, P. Neutral-type of delayed inertial neural networks and their stability analysis using the LMI Approach. Neurocomputing 2016, 230, 243–250.

- Kumar, R.; Das, S. Exponential stability of inertial BAM neural network with time-varying impulses and mixed time-varying delays via matrix measure approach. Commun. Nonlinear Sci. Numer. Simulat. 2019, 81, 105016.

- Xiao, Q.; Huang, T.W. Quasisynchronization of discrete-time inertial neural networks with parameter mismatches and delays. IEEE Trans. Cybern. 2021, 51, 2290–2295.

- Sun, G.; Zhang, Y. Exponential stability of impulsive discrete-time stochastic BAM neural networks with time-varying delay. Neurocomputing 2014, 131, 323–330.

- Pan, L.J.; Cao, J.D. Stability of bidirectional associative memory neural networks with Markov switching via ergodic method and the law of large numbers. Neurocomputing 2015, 168, 1157–1163.

- Hilger, S. Analysis on measure chains-a unified approach to continuous and discrete calculus. Res. Math. 1990, 18, 18–56.

- Cieśliński, J.L.; Nikiciuk, T.; Waśkiewicz, K. The sine-Gordon equation on time scales. J. Math. Anal. Appl. 2015, 423, 1219–1230.

- Hovhannisyan, G. 3 soliton solution to Sine-Gordon equation on a space scale. J. Math. Phys. 2019, 60, 103502.

- Zhang, Y. Lie symmetry and invariants for a generalized Birkhoffian system on time scales. Chaos Soliton Fractals 2019, 128, 306–312.

- Federson, M.; Grau, R.; Mesquita, J.G.; Toon, E. Lyapunov stability for measure differential equations and dynamic equations on time scales. J. Differ. Equ. 2019, 267, 4192–4223.

- Gu, H.B.; Jiang, H.J.; Teng, Z.D. Existence and global exponential stability of equilibrium of competitive neural networks with different time scales and multiple delays. J. Franklin I 2010, 347, 719–731.

- Yang, L.; Fei, Y.; Wu, W.Q. Periodic solution for ∇-stochastic high-order Hopfield neural networks with time delays on time scales. Neural Process Lett. 2019, 49, 1681–1696.

- Zhou, H.; Zhou, Z.; Jiang, W. Almost periodic solutions for neutral type BAM neural networks with distributed leakage delays on time scales. Neurocomputing 2015, 157, 223–230.

- Arbi, A.; Cao, J.D. Pseudo-almost periodic solution on time-space scales for a novel class of competitive neutral-type neural networks with mixed time-varying delays and leakage delays. Neural Process Lett. 2017, 46, 719–745.

- Dhama, S.; Abbas, S. Square-mean almost automorphic solution of a stochastic cellular neural network on time scales. J. Integral Equ. Appl. 2020, 32, 151–170.

- Kaufmann, E.R.; Raffoul, Y.N. Periodic solutions for a neutral nonlinear dynamical equation on a time scale. J. Math. Anal. Appl. 2006, 319, 315–325.

- Lizama, C.; Mesquita, J.G.; Ponce, R. A connection between almost periodic functions defined on time scales and ℝ. Appl. Anal. 2014, 93, 2547–2558.

- Bohner, M.; Peterson, A. Dynamic Equations on Time Scales: An Introduction with Applications; Springer Science & Business Media: New York, NY, USA, 2001.

- Bohner, M.; Peterson, A. Advances in Dynamic Equations on Time Scales; Birkhauser: Boston, MA, USA, 2003.

- Wu, F.; Hu, S.; Liu, Y. Positive solution and its asymptotic behaviour of stochastic functional Kolmogorov-type system. J. Math. Anal. Appl. 2010, 364, 104–118.

- Bohner, M.; Stanzhytskyi, O.M.; Bratochkina, A.O. Stochastic dynamic equations on general time scales. Electron. J. Differ. Equ. 2013, 2013, 1215–1230.

- Ke, Y.Q.; Miao, C.F. Stability and existence of periodic solutions in inertial BAM neural networks with time delay. Neural Comput. Appl. 2013, 23, 1089–1099.

- Yang, L.; Li, Y.K. Existence and exponential stability of periodic solution for stochastic Hopfield neural networks on time scales. Neurocomputing 2015, 167, 543–550.

- Adıvar, M.; Raffoul, Y.N. Existence of periodic solutions in totally nonlinear delay dynamic equations. Electron. J. Qual. Theory Differ. Equ. 2009, 1, 1–20.

- Wang, C. Almost periodic solutions of impulsive BAM neural networks with variable delays on time scales. Commun. Nonlinear Sci. Numer. Simulat. 2014, 19, 2828–2842.

- Li, Y.K.; Yang, L.; Wu, W.Q. Square-mean almost periodic solution for stochastic Hopfield neural networks with time-varying delays on time scales. Neural Comput. Appl. 2015, 26, 1073–1084.

- Qi, J.; Li, C.; Huang, T. Stability of inertial BAM neural network with time-varying delay via impulsive control. Neurocomputing 2015, 161, 162–167.

- Zhang, T.; Liu, Y.; Qu, H. Global mean-square exponential stability and random periodicity of discrete-time stochastic inertial neural networks with discrete spatial diffusions and Dirichlet boundary condition. Comput. Math. Appl. 2023, 141, 116–128.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).