Sensitivity Analysis of the Data Assimilation-Driven Decomposition in Space and Time to Solve PDE-Constrained Optimization Problems

Abstract

1. Introduction

- By using forward error analysis (FEA), we derive the condition number of DD-DA. We find that DD-DA reduces the condition number of DA, which indicates that it is more appropriate than the standard approach implemented in most operational software;

- As the background values are used as initial conditions of the local PDE models, we prove that small changes in the initial values do not cause large changes in the final result. We then analyze stability with respect to the time direction;

- We analyze the consistency of DD-DA in terms of local truncation errors;

- Overall, the present work complements the study reported in [16], in which the authors performed SA of DD in 3D space in the context of a variational data assimilation problem.
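The role a condition number plays in forward error analysis can be illustrated on a toy problem. The sketch below is not the DD-DA derivation: it assumes a hypothetical, nearly singular 2x2 linear system `A x = b` and checks that the relative change in the solution caused by a small change in the data is bounded by the infinity-norm condition number. All names (`condition_number_inf`, `forward_error_amplification`, `A`, `b`, `delta_b`) are illustrative.

```python
def inf_norm_vec(v):
    """Infinity norm of a vector."""
    return max(abs(x) for x in v)


def inf_norm_mat(a):
    """Infinity norm of a matrix (maximum absolute row sum)."""
    return max(sum(abs(x) for x in row) for row in a)


def inverse_2x2(a):
    """Explicit inverse of a 2x2 matrix."""
    (p, q), (r, s) = a
    det = p * s - q * r
    if det == 0:
        raise ValueError("singular matrix")
    return [[s / det, -q / det], [-r / det, p / det]]


def matvec(a, v):
    """Matrix-vector product."""
    return [sum(x * y for x, y in zip(row, v)) for row in a]


def condition_number_inf(a):
    """Condition number ||A|| * ||A^-1|| in the infinity norm."""
    return inf_norm_mat(a) * inf_norm_mat(inverse_2x2(a))


def forward_error_amplification(a, b, delta_b):
    """Ratio of the relative error in x to the relative error in b."""
    a_inv = inverse_2x2(a)
    x = matvec(a_inv, b)
    x_pert = matvec(a_inv, [bi + di for bi, di in zip(b, delta_b)])
    rel_out = inf_norm_vec([xp - xi for xp, xi in zip(x_pert, x)]) / inf_norm_vec(x)
    rel_in = inf_norm_vec(delta_b) / inf_norm_vec(b)
    return rel_out / rel_in


# Hypothetical ill-conditioned example (assumption, not taken from the paper).
A = [[1.0, 1.0], [1.0, 1.0001]]
b = [2.0, 2.0001]
delta_b = [0.0, 1e-6]

mu = condition_number_inf(A)
amp = forward_error_amplification(A, b, delta_b)
print("condition number:", mu)
print("observed amplification:", amp)
```

The observed amplification never exceeds the condition number, so a method that lowers the condition number also lowers the worst-case sensitivity of the computed solution to data errors.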

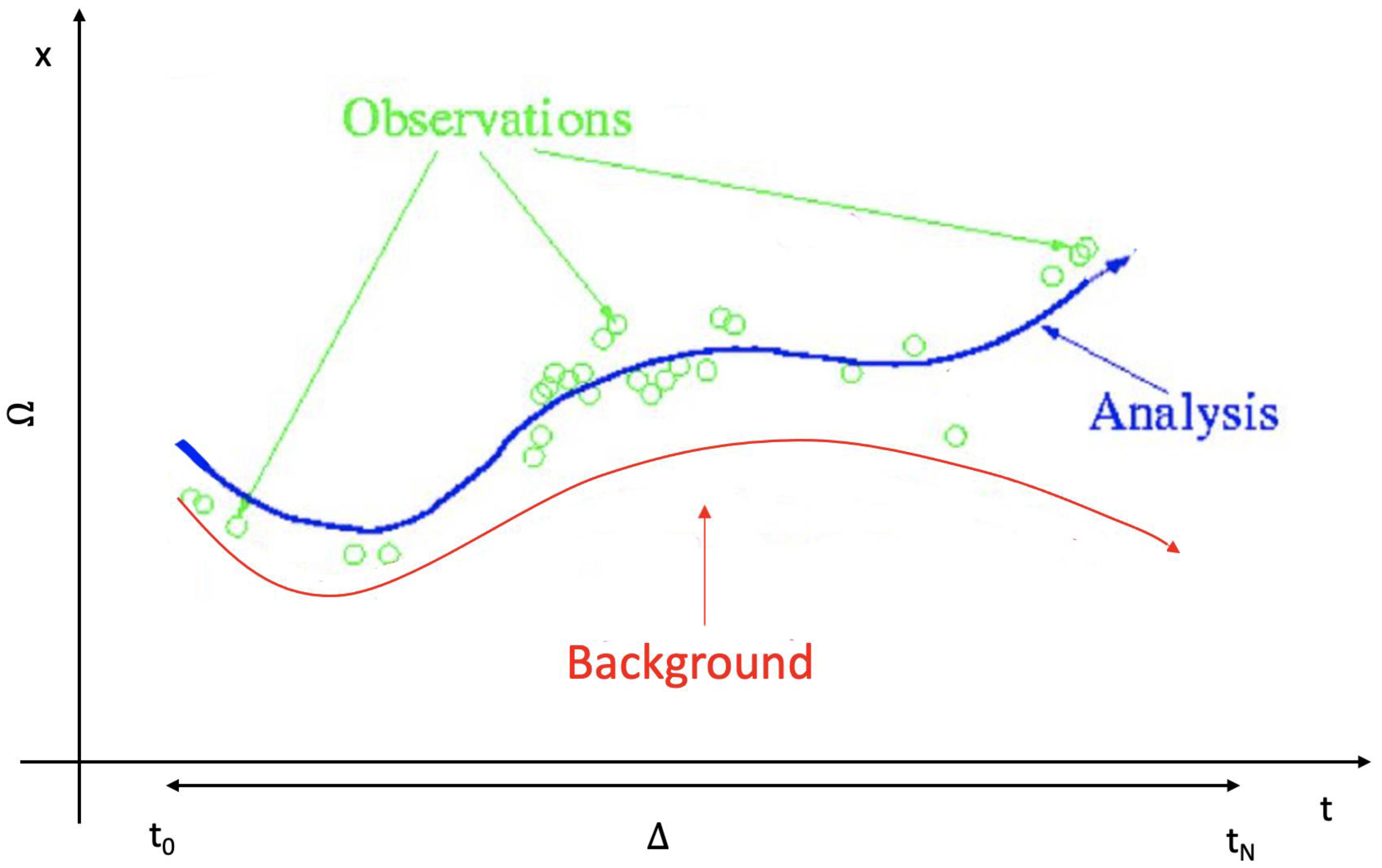

2. 4D Variational DA Formulation

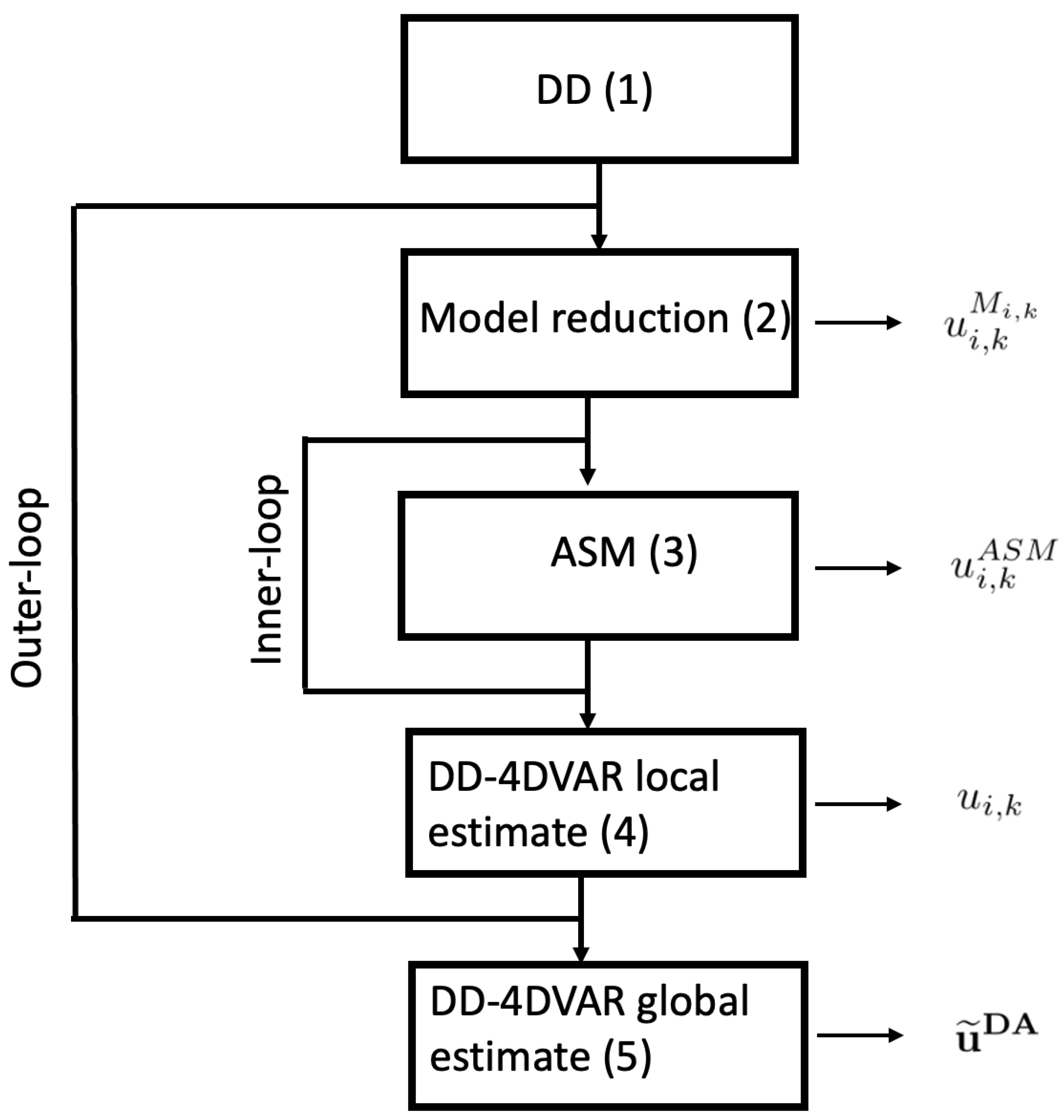

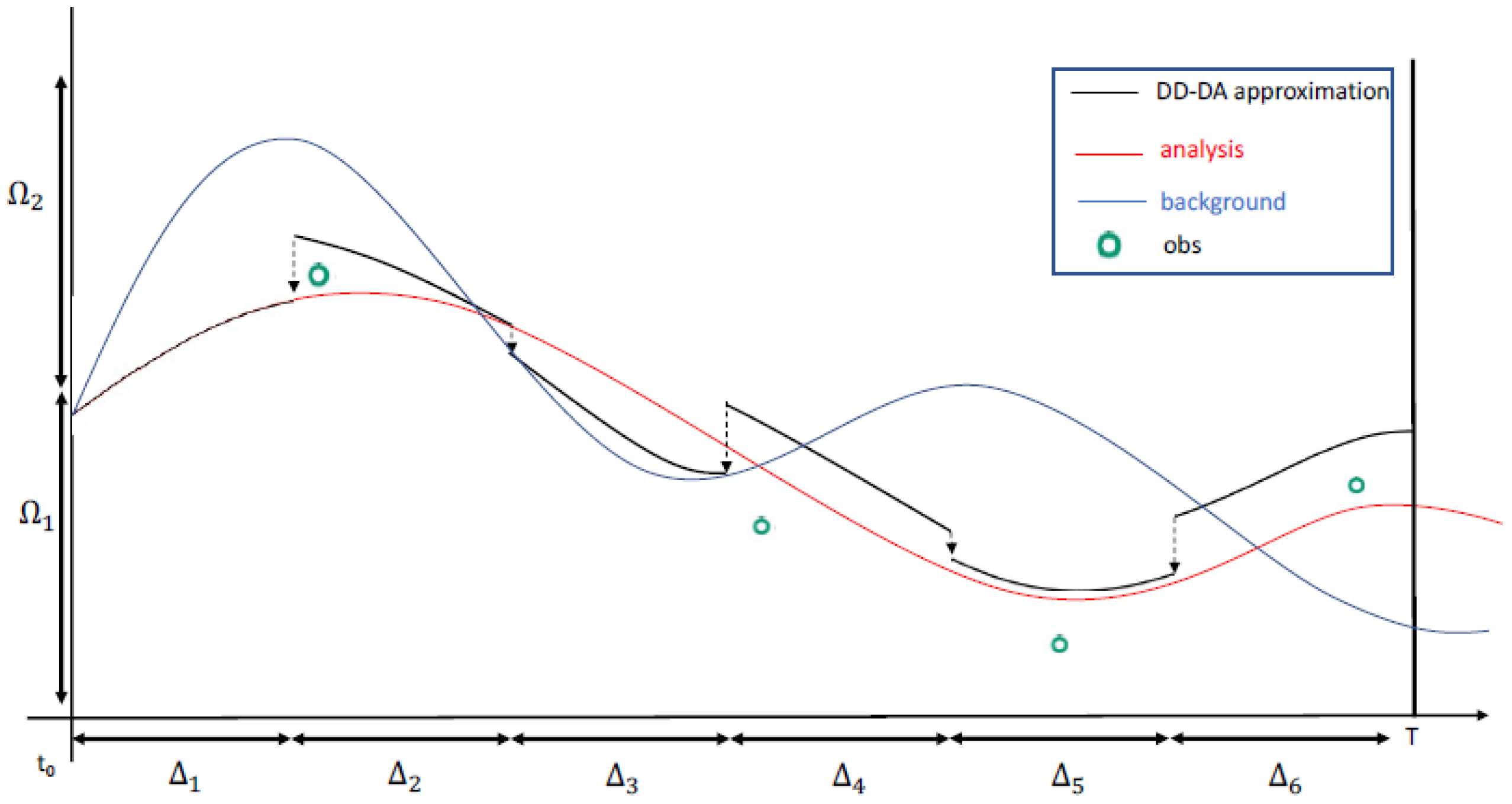

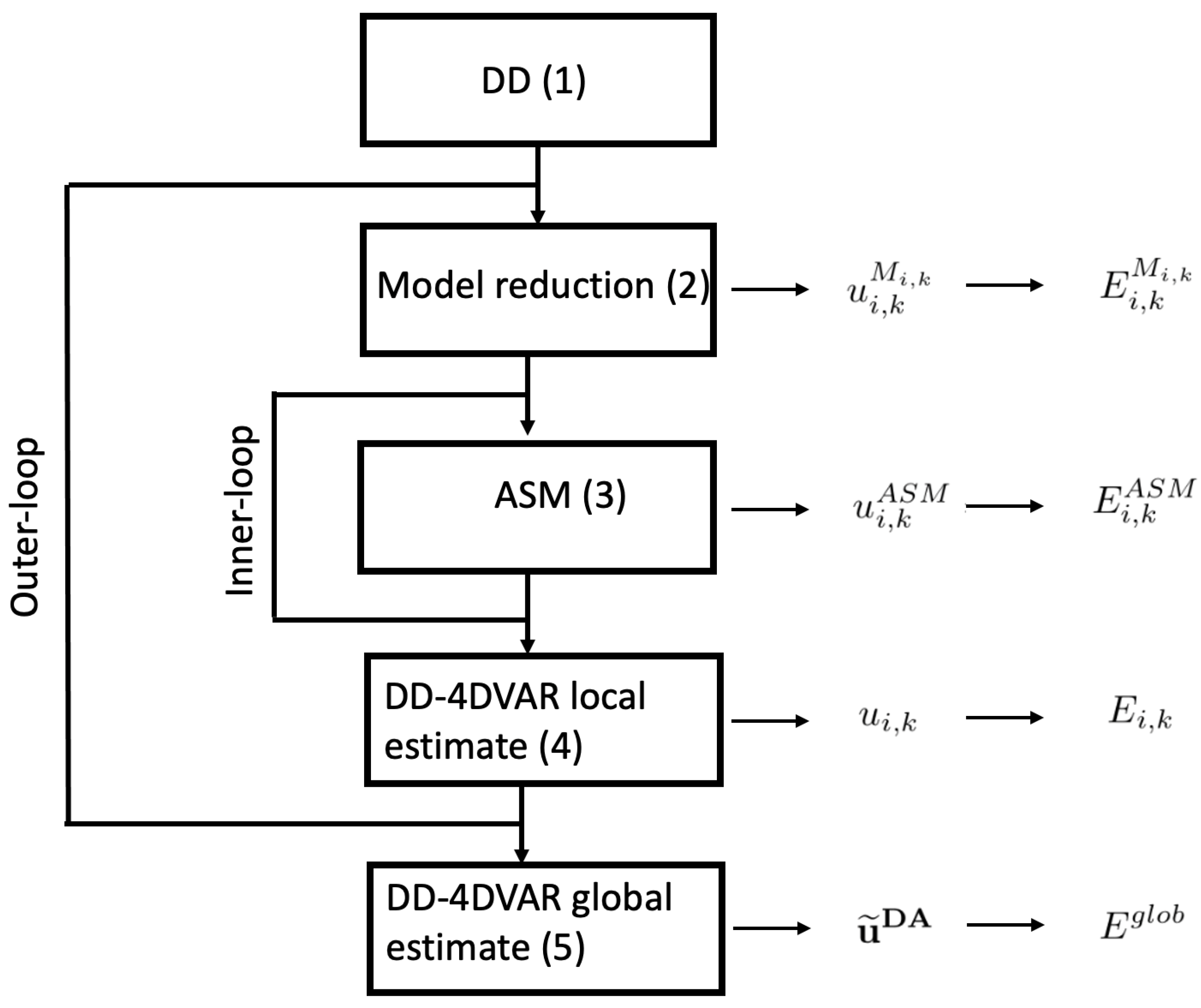

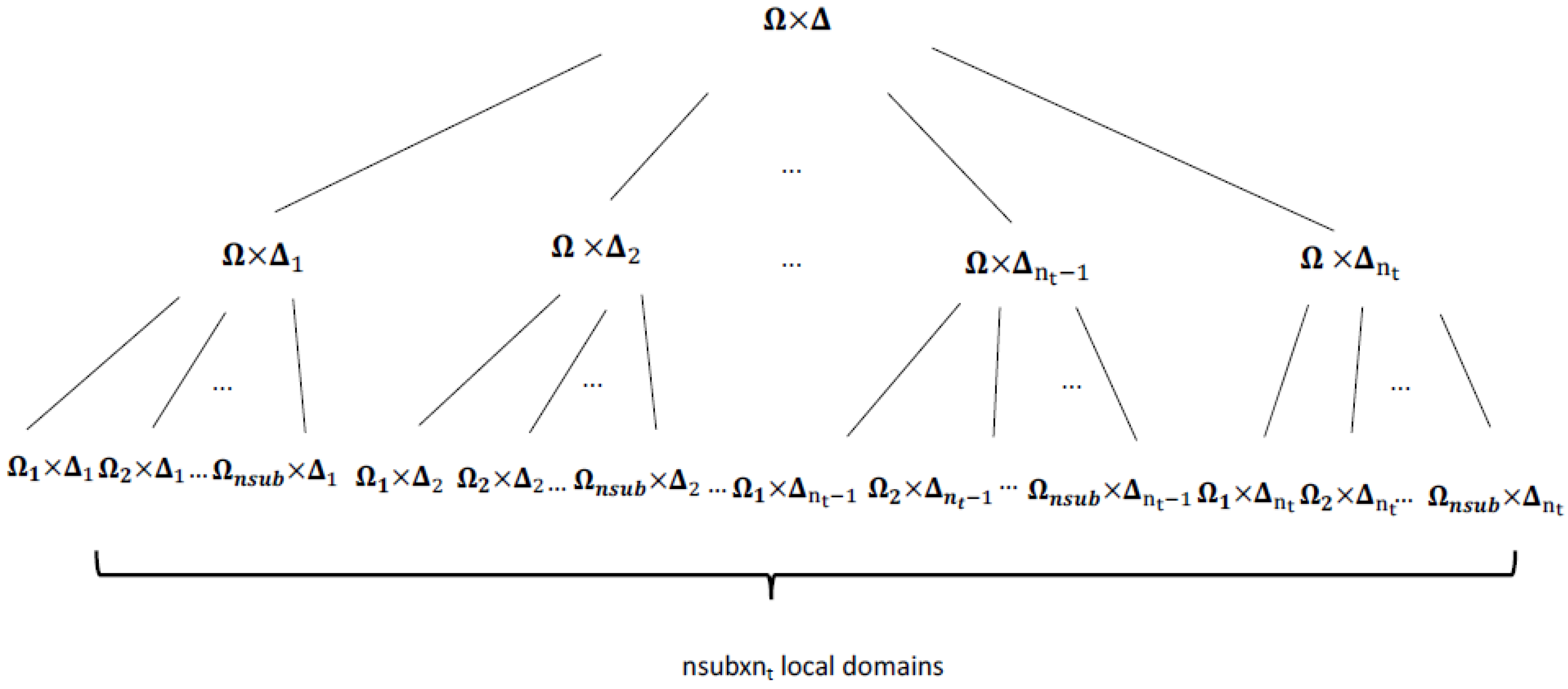

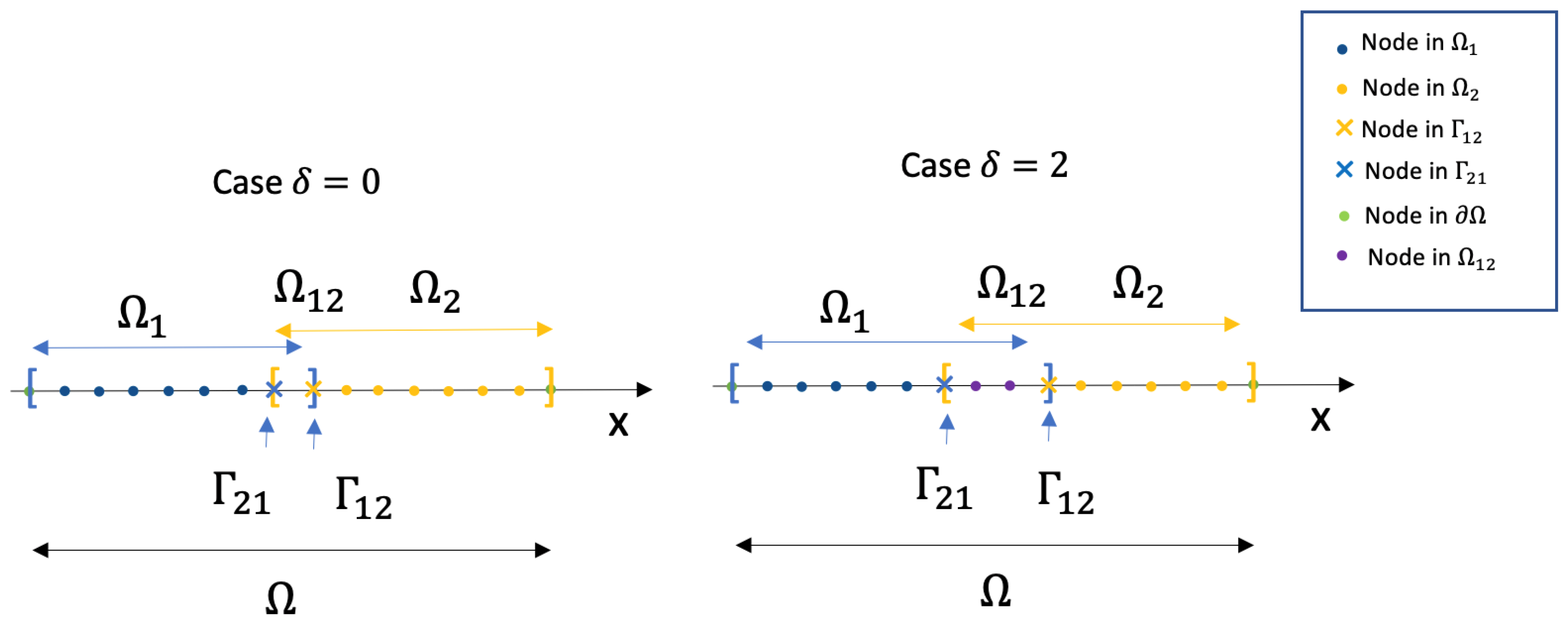

The Space and Time DA-Driven Domain Decomposition Method

3. Sensitivity Analysis

3.1. Convergence, Consistency and Stability of DD-DA Method

3.1.1. Consistency

3.1.2. Stability

4. Validation Analysis

- : spatial domain;

- : time interval;

- : numbers of inner nodes of defined in (5);

- : numbers of occurrences of time in ;

- : number of observations considered at each step ;

- : observations vector at each step . Observations are obtained by randomly choosing values of the state function (the so-called background) and randomly perturbing them. (We choose the observations in this way because the experimental setup is aimed at validating the sensitivity analysis of DD-DA rather than the reliability of the DD-DA method);

- : piecewise linear interpolation operator whose coefficients are computed using the nodes of nearest to the observation values;

- : obtained as in (13) from the matrix , ;

- , : model and observational error variances;

- : covariance matrix of the error of the model at each step , where denotes the Gaussian correlation structure of the model errors in (91);

- : covariance matrix of the errors of the observations at each step .

- : a diagonal matrix obtained from the matrices , .
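The ingredients listed above can be assembled as in the following minimal sketch. It rests on several assumptions that the list does not fix: observation locations are drawn uniformly in the spatial domain, the perturbation is Gaussian noise, the Gaussian correlation takes the common form `exp(-(x_i - x_j)^2 / (2 L^2))` (which may differ from the structure in (91)), and the observation covariance is taken to be diagonal. The names `interpolation_row`, `gaussian_covariance`, `synthetic_observations`, and all numeric values are hypothetical.

```python
import bisect
import math
import random


def interpolation_row(nodes, x_obs):
    """One row of a piecewise linear interpolation operator.

    Only the two grid nodes bracketing x_obs receive nonzero weights.
    """
    if not nodes[0] <= x_obs <= nodes[-1]:
        raise ValueError("observation location outside the grid")
    j = min(max(bisect.bisect_right(nodes, x_obs), 1), len(nodes) - 1)
    left, right = nodes[j - 1], nodes[j]
    w_right = (x_obs - left) / (right - left)
    row = [0.0] * len(nodes)
    row[j - 1] = 1.0 - w_right
    row[j] = w_right
    return row


def gaussian_covariance(nodes, variance, length_scale):
    """Covariance matrix with an assumed Gaussian correlation structure."""
    return [
        [variance * math.exp(-((xi - xj) ** 2) / (2.0 * length_scale ** 2))
         for xj in nodes]
        for xi in nodes
    ]


def synthetic_observations(nodes, background, n_obs, noise_std, rng):
    """Sample locations, interpolate the background there, then perturb."""
    locations = sorted(rng.uniform(nodes[0], nodes[-1]) for _ in range(n_obs))
    H = [interpolation_row(nodes, x) for x in locations]
    y = [
        sum(h * b for h, b in zip(row, background)) + rng.gauss(0.0, noise_std)
        for row in H
    ]
    return locations, H, y


rng = random.Random(0)  # fixed seed so the experiment is reproducible
nodes = [i / 10 for i in range(11)]
background = [math.sin(2 * math.pi * x) for x in nodes]

locations, H, y = synthetic_observations(
    nodes, background, n_obs=4, noise_std=0.01, rng=rng
)
B = gaussian_covariance(nodes, variance=0.5, length_scale=0.2)
# Assumed diagonal observation error covariance with variance noise_std**2.
R = [[0.01 ** 2 if i == j else 0.0 for j in range(len(y))] for i in range(len(y))]
```

Because the observations are built from the background itself, the setup tests how the method reacts to perturbations rather than how well it recovers an unknown true state, which matches the stated purpose of the experiment.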

- Consistency. From Table 1, we obtain

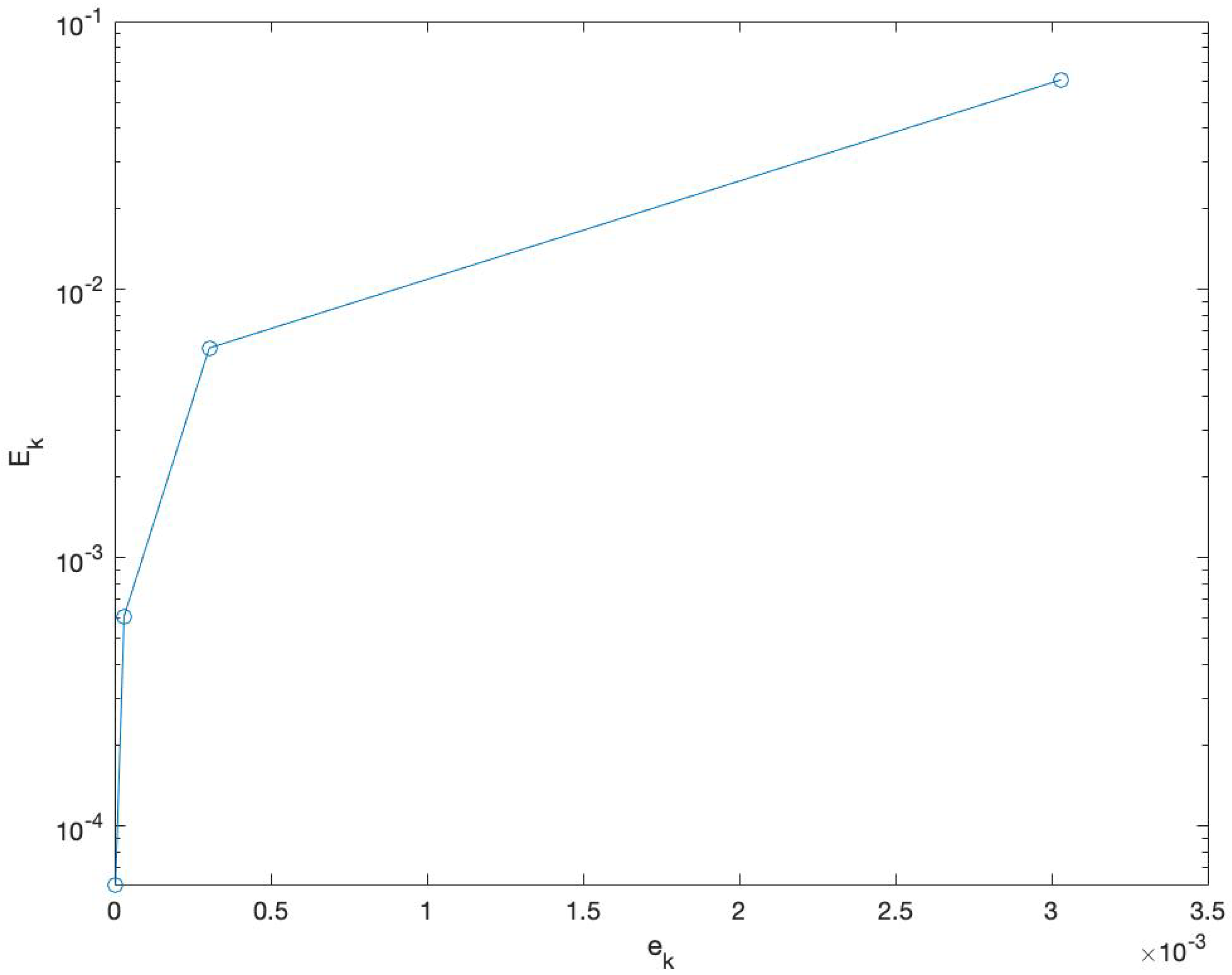

- Stability. In Table 2 and Figure 8, we report values of for different values of the perturbation on the initial condition of defined in (25). Then, we may estimate in (90). In particular, we found that . Consequently, the local initial boundary value problems for the 1D SWEs are well-conditioned with respect to the time direction.
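The kind of stability experiment described above can be sketched on a much simpler model. The code below does not solve the 1D SWEs; as a stand-in it assumes a first-order upwind scheme for linear advection on a periodic grid, perturbs the initial condition by several magnitudes, and reports the ratio between the change in the final state and the size of the perturbation. The function names and all parameter values are illustrative.

```python
import math


def upwind_advection(u0, courant, n_steps):
    """First-order upwind scheme for u_t + a u_x = 0 (a > 0), periodic grid.

    courant is the Courant number a*dt/dx; the scheme is stable for
    0 <= courant <= 1.
    """
    u = list(u0)
    for _ in range(n_steps):
        # u[i - 1] with i == 0 wraps to the last node (periodic boundary).
        u = [u[i] - courant * (u[i] - u[i - 1]) for i in range(len(u))]
    return u


def max_norm(v):
    """Maximum norm of a grid function."""
    return max(abs(x) for x in v)


def amplification(u0, perturbation, courant, n_steps):
    """Change in the final state divided by the size of the initial perturbation."""
    base = upwind_advection(u0, courant, n_steps)
    perturbed = upwind_advection(
        [a + b for a, b in zip(u0, perturbation)], courant, n_steps
    )
    diff = [p - q for p, q in zip(perturbed, base)]
    return max_norm(diff) / max_norm(perturbation)


n = 50
u0 = [math.sin(2 * math.pi * i / n) for i in range(n)]

ratios = []
for eps in (1e-2, 1e-4, 1e-6):
    perturbation = [eps * math.cos(2 * math.pi * i / n) for i in range(n)]
    ratio = amplification(u0, perturbation, courant=0.8, n_steps=100)
    ratios.append(ratio)
    print(f"perturbation {eps:.0e}: amplification {ratio:.4f}")
```

For a Courant number in [0, 1] each update is a convex combination of neighbouring values, so the ratio stays at or below 1 regardless of the perturbation size. A ratio that remains bounded as the perturbation shrinks is the numerical signature of a problem that is well-conditioned with respect to the time direction.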

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Ghil, M.; Malanotte-Rizzoli, P. Data assimilation in meteorology and oceanography. Adv. Geophys. 1991, 33, 141–266.

- Navon, I.M. Data Assimilation for Numerical Weather Prediction: A Review. In Data Assimilation for Atmospheric, Oceanic and Hydrologic Applications; Park, S.K., Xu, L., Eds.; Springer: Berlin/Heidelberg, Germany, 2009.

- Blum, J.; Le Dimet, F.; Navon, I. Data Assimilation for Geophysical Fluids. In Handbook of Numerical Analysis; Elsevier Inc. (Branch Office): San Diego, CA, USA, 2005; Volume XIV, Chapter 9.

- D’Elia, M.; Perego, M.; Veneziani, A. A variational data assimilation procedure for the incompressible Navier-Stokes equations in hemodynamics. J. Sci. Comput. 2012, 52, 340–359.

- D’Amore, L.; Murli, A. Regularization of a Fourier series method for the Laplace transform inversion with real data. Inverse Probl. 2012, 18, 1185–1205.

- Cohn, S.E. An introduction to estimation theory (data assimilation in meteorology and oceanography: Theory and practice). J. Meteorol. Soc. Jpn. Ser. II 1997, 75, 257–288.

- Nichols, N.K. Mathematical concepts of data assimilation. In Data Assimilation; Springer: Berlin/Heidelberg, Germany, 2010; pp. 13–39.

- Zhang, Z.; Moore, J.C. Mathematical and Physical Fundamentals of Climate Change; Elsevier: Amsterdam, The Netherlands, 2014.

- D’Amore, L.; Marcellino, L.; Mele, V.; Romano, D. Deconvolution of 3D fluorescence microscopy images using graphics processing units, Lecture Notes in Computer Science. In Proceedings of the 2012 9th International Conference on Parallel Processing and Applied Mathematics, PPAM 2011, Torun, Poland, 11–14 September 2011; Springer: Berlin/Heidelberg, Germany, 2011; Volume 7203, pp. 690–699.

- Antonelli, L.; Carracciuolo, L.; Ceccarelli, M.; D’Amore, L.; Murli, A. Total variation regularization for edge preserving 3D SPECT imaging in high performance computing environments, Lecture Notes in Computer Science. In Proceedings of the 2002 International Conference on Computational Science, ICCS 2002, Amsterdam, The Netherlands, 21–24 April 2002; Springer: Berlin/Heidelberg, Germany, 2002; Volume 2330, pp. 171–180.

- D’Amore, L.; Cacciapuoti, R. Model reduction in space and time for ab initio decomposition of 4D variational data assimilation problems. Appl. Numer. Math. 2021, 160, 242–264.

- D’Amore, L.; Constantinescu, E.; Carracciuolo, L. A scalable space-and-time domain decomposition approach for solving large scale non linear regularized inverse ill posed problems in 4D Variational Data Assimilation. J. Sci. Comput. 2022, 91, 59.

- D’Amore, L.; Arcucci, R.; Carracciuolo, L.; Murli, A. DD-ocean var: A domain decomposition fully parallel data assimilation software for the mediterranean forecasting system. In Proceedings of the 2013 13th Annual International Conference on Computational Science, ICCS 2013, Barcelona, Spain, 7–13 June 2013; Elsevier: Amsterdam, The Netherlands, 2013; Volume 18, pp. 1235–1244.

- Arcucci, R.; D’Amore, L.; Carracciuolo, L. On the problem-decomposition of scalable 4D-Var Data Assimilation models. In Proceedings of the 2015 International Conference on High Performance Computing and Simulation, HPCS 2015, Amsterdam, The Netherlands, 20–24 July 2015; pp. 589–594.

- Cacuci, D. Sensitivity and Uncertainty Analysis; Chapman & Hall/CRC: New York, NY, USA, 2003.

- Arcucci, R.; D’Amore, L.; Pistoia, J.; Toumi, R.; Murli, A. On the variational data assimilation problem solving and sensitivity analysis. J. Comput. Phys. 2017, 335, 311–326.

- Schwarz, H.A. Ueber einige Abbildungsaufgaben. De Gruyter 2009, 1869, 105–120.

- Lions, P.L. On the Schwarz alternating method. III: A variant for nonoverlapping subdomains. In Proceedings of the Third International Conference on Domain Decomposition Methods, Houston, TX, USA, 20–22 March 1989; SIAM: Philadelphia, PA, USA, 1990; Volume 6, pp. 202–223.

- Dryja, M.; Widlund, O.B. Some domain decomposition algorithms for elliptic problems. In Iterative Methods for Large Linear Systems (Austin, TX, 1988); Academic Press: Boston, MA, USA, 1990; pp. 273–291.

- Dahlquist, G.; Björck, Å. Numerical Methods; Dover Publications: Mineola, NY, USA, 1974.

- Dahlquist, G. Convergence and stability in the numerical integration of ordinary differential equations. Math. Scand. 1956, 4, 33–53.

- LeVeque, R.J. Numerical Methods for Conservation Laws; Springer: Berlin/Heidelberg, Germany, 1992; Volume 132.

| d | | | | |
|---|---|---|---|---|
| 1 | | | | |
| 2 | | | | |
| 4 | | | | |
| 6 | | | | |
| 8 | | | | |
| 10 | | | | |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

D’Amore, L.; Cacciapuoti, R. Sensitivity Analysis of the Data Assimilation-Driven Decomposition in Space and Time to Solve PDE-Constrained Optimization Problems. Axioms 2023, 12, 541. https://doi.org/10.3390/axioms12060541