1. Introduction and Background Information

The objective of this work is to develop novel mechanisms that offer proof of authenticity in distributed networks and protect terminal devices operating in zero-trust areas. In our differentiated approach, we consider several complementary remedies: (i) using one-time keys for each transaction; (ii) generating keys from the message digest of each file; and (iii) in zero-trust networks, transmitting the data feeding a CRP mechanism through noisy wireless channels to enable the on-demand decryption of digital files. All proposed remedies are designed to handle the erratic bits without ECC. In this section, we summarize some of the relevant work conducted by prior authors to secure digital files and use CRP mechanisms. Storing and distributing the cryptographic keys needed to protect sensitive information and terminal devices introduces a level of risk. Of concern are replays, man-in-the-middle attacks, loss of information in the network, side channel analysis, and the physical loss of a terminal device to the opponent [1,2,3]. Storing non-encrypted files in the terminal introduces the same level of risk as storing the secret keys that decrypt the ciphertexts of these files. In distributed networks, the clients usually store the public–private key pairs in their terminal devices, which presents an element of risk. Opponents can also inject noise to disturb the wireless communication between the ground operation and the terminal device, which makes it difficult to distribute cryptographic keys without heavy ECC, fuzzy extractors, or data helpers, all of which leak information [4,5,6].

Blockchain technology with digital signatures offers protection in exposed networks, such as the non-alterability and traceability of chains of transactions [7]. Digital Signature Algorithms (DSAs) further enhance security by associating the blockchains with a set of known users and their public keys [8]. Blockchains with digital signatures are nevertheless exposed to a variety of possible attacks that introduce risk in validating authenticity, specifically when operating outside a reliable network [9,10]. Concerns include possible difficulties in verifying the legitimacy of a file, the theft of private keys, and blockchains that may be forged by associating altered messages with an altered message digest. To prove the authenticity of a message in zero-trust networks, it is important to add layers of security without imposing excessive computing power consumption.

Background information in the area of file verification and distributed networks is presented in refs. [11,12,13,14,15,16,17,18,19,20,21]. In [11], a Challenge Response Authentication (CRA) of physical layers is described to avoid exposing passwords. The work presented in [12] shows methods to provide verification of information in a ledger. The authors of [12] define a “cryptographic challenge nonce” and a protocol that enables third parties to verify information; the technology is based on digital signatures with public/private keys. In [13], document tracking schemes on a distributed ledger are presented, with details on how to insert unique identifiers and hash values for this tracking; a processor coupled with the storage device is used in the protocols. In [14,15], the authors present a secure exchange of signed records. These records contain the digital signatures of the senders and their public keys, which enhances security. Methods to authenticate data based on proof verification are shown in [16,17,18]. Some of the data may be external to the network, protected by a blockchain and DSA with public keys. Uhr et al. describe methods to verify certificates based on blockchains. The system compares the content of a certificate previously known by a financial institution with the content of a certificate obtained at the point of use. Sheng et al. describe computationally efficient methods to audit transactions. The methods are based on blockchains, Bloom filters, and digital signatures. Information such as a timestamp used as an entry and a wallet address is part of the scheme. In [19], a method to validate documents with blockchains and other information is presented. Only portions of the documents are shared; the full document is not revealed. Publication [20] describes contract agreement verifications. Electronic signatures are inserted in the contracts and the blockchains with a method to share public/private key pairs. Publication [21] presents a management system protecting enterprise data with blockchains. The computing device selects what data to incorporate in the blockchain to offer adequate protection.

The CRPs developed for PUFs provide relevant background information for our work even if they do not use a digital file [22,23,24,25,26,27,28,29,30]. Authentication based on a challenge, a response, a PUF, and machine learning is described in [22]. The development of secure digital signatures using PUF devices with reduced error rates is presented in [23,24]. Cryptographic protocols with PUF-based CRPs are shown in [25,26]. Biometry with a CRP mechanism is suggested in [27,28]. CRP mechanisms and CRA schemes are also applied to protect centralized or distributed networks [29,30,31]. An example of such a scheme is the formation of a look-up table with indexes in the left column and passwords in the right column. Each challenge points at an index that is paired with an associated password, which avoids the problem of having to change the passwords periodically.

The contributions of this work include a novel approach based on CRP schemes to protect and authenticate each digital file individually, with the objective of mitigating several vectors of attack. The idea of injecting obfuscating noise during ground-to-terminal communication is developed to limit the ability of opponents sharing the same wireless network to perform side channel analysis. To facilitate a quick adoption of the novel methods, all encryption algorithms are standardized cryptographic algorithms recommended by the National Institute of Standards and Technology (NIST), or codes under consideration for standardization by NIST for post-quantum cryptography (PQC). The paper is organized as follows:

[Section 2] The first layer of technology needed for our proposed schemes is described, namely the design of the CRP mechanisms directly based on digital files. The algorithms extracting the responses from randomly selected challenges are presented, as well as methods to generate cryptographic keys from the CRPs.

[Section 3] The protocols to verify the authenticity of digital files with CRP mechanisms in distributed networks are presented. The algorithms for enrollment and for verification are detailed. An example of a use case that applies these protocols with an agent, storage node, and smart contract is given. A security analysis, in which we list potential issues and remedies, is also provided.

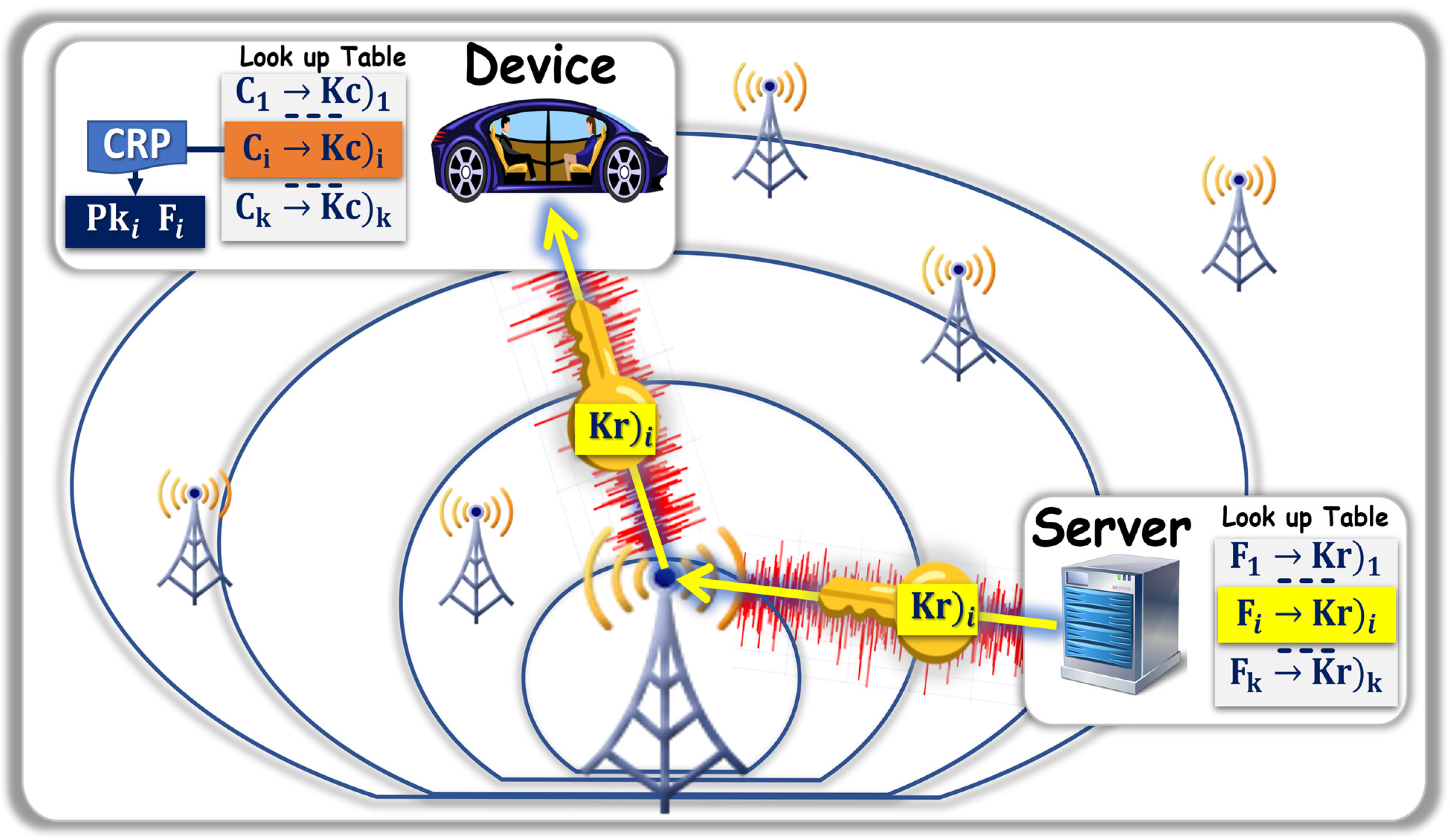

[Section 4] The protocols to securely distribute digital files to terminal devices exposed to zero-trust networks are suggested. A use case that distributes cryptographic keys while injecting erratic bits is presented.

[Section 5] The potential problems created by residual erratic bits in the recovered keys are examined in detail. A model is developed and verified, and light error management schemes are suggested.

Finally, Section 6 presents the summary and future work. A table listing the acronyms used herein is presented in Attachment A, followed by the list of references.

2. CRP Mechanism Based on Digital Files

Methods to design digital file-based CRP mechanisms will now be presented. The method detailed in Section 2.1 describes a generic response generation scheme for applications in distributed networks. The implementation detailed in Section 2.2 is an example of a protocol offering higher levels of security in distributed networks.

2.1. Response Generation with File-Based CRP Mechanism

The input data of the CRP mechanism is derived from file F. Its ciphertext C is concatenated with a nonce ω to generate a file C* of constant length $d = 2^{D}$, where D is the number of binary digits of an address (for example, if a length d of about one million bits is desired, then $D = 20$). The resulting d bits are located at addresses varying from 1 to d. Changing a single bit in file F results in a totally different stream C*. This can be performed with a variety of methods; one possible implementation is the following:

The CRP mechanism is based on the d-bit long stream C* to generate N responses from N challenges:

Challenges: A “challenge” is defined as the digital information needed to point at a particular position in the d-bit long stream C*. A randomly selected seed S is hashed and extended with an eXtendable-Output Function (XOF) to generate a stream of bits S*. The stream S* is segmented into N challenges that are each D bits long. The D bits of each challenge qi, with i ∈ {1, …, N}, are converted into a number that is an address in C*, resulting in N addresses.

Responses: The N addresses generate the N, P-bit long, responses. From each address, a P-bit long response is generated from C*. The iterative method to find the P positions and read the P bits is the following: the first position is derived from the address, and the other positions are given by a linear congruential random number generator; see Equation (1):

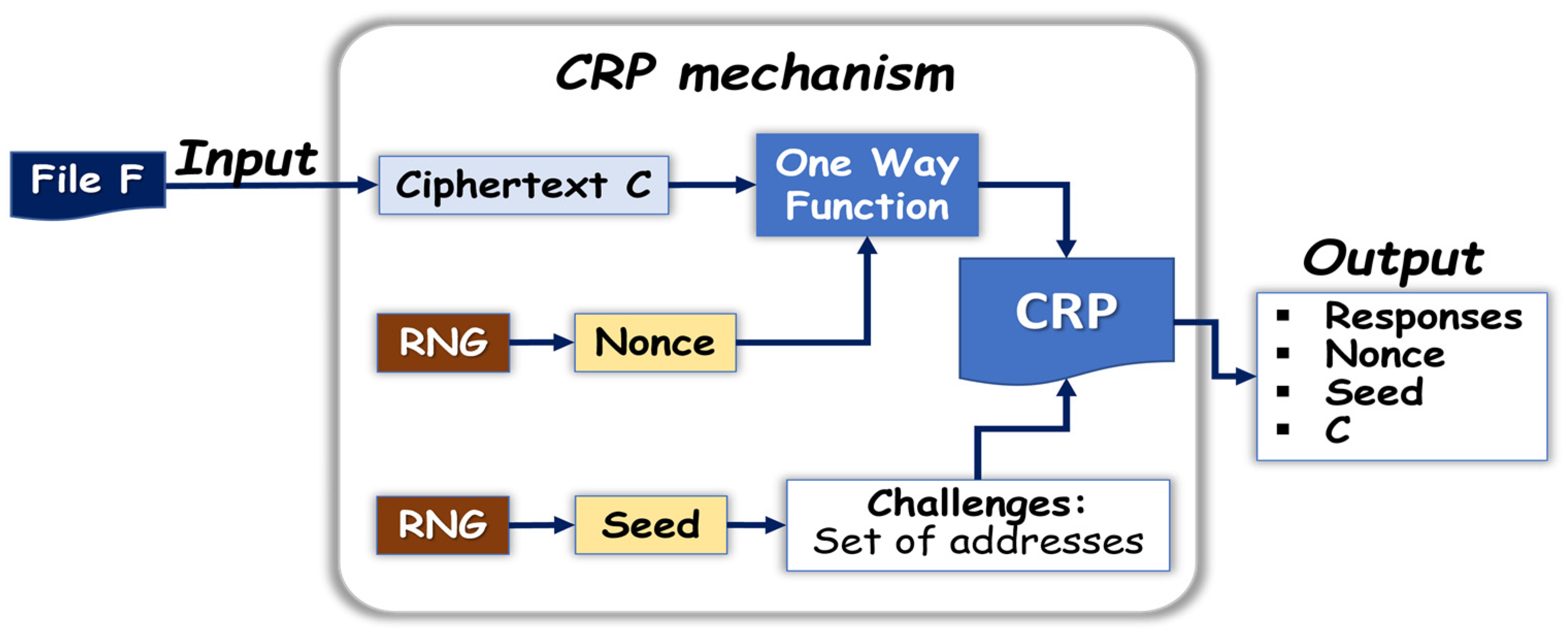

Algorithm 1 and the block diagram shown in Figure 1 summarize the above protocol. The output after response generation is {C, ω, S} and the N responses. Such a CRP mechanism is highly secure, as three totally independent streams are required to uncover the responses: C, ω, and S. Protecting just one of the three provides acceptable security. The computing power, or “gas price”, required to run the CRP mechanism is low.

| Algorithm 1: Generate a set of responses with C |
| 1: | Variable input data: file {C} |
| 2: | Nonce {ω} and stream {S} ← random number generator |
| 3: | → Module 1: Generate a set of responses with C and {ω, S}: |
| | 3.1: | Static input data: positive integers d, D, N, P, with d = 2^D, and two prime constants |
| | 3.2: | MD ← Hash(C) (ex: SHA-256) |
| | 3.3: | C* ← XOF(concatenate(MD, ω)) (ex: SHAKE) |
| | | [Comment: Organize C* with bits located at addresses 1 to d] |
| | 3.4: | S* ← XOF(S); S* is an (N·D)-bit long stream |
| | 3.5: | Split S* into N, D-bit long, challenges qi, i ∈ {1, …, N} |
| | 3.6: | Convert each challenge qi into an address, giving N positions in C* |
| | 3.7: | Generate all N, P-bit long, responses. For each position, generate a P-bit long response in the following way: |
| | | [Comment: find P positions in C* with the linear congruential generator, iterating as required] |
| | | [Comment: read the P positions in C* to generate the P-bit long response ri] |
| 4: | Output: C, {S, ω}, and the N responses |
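The flow of Module 1 can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function names, the zero-based addressing, and the multiplier `a` and increment `b` of the linear congruential generator are assumptions chosen for illustration (the constants of Equation (1) are not reproduced here), and no collision handling is included.

```python
import hashlib

def shake_bits(data: bytes, nbits: int) -> bytes:
    """Expand data to at least nbits with SHAKE-256, used here as the XOF."""
    return hashlib.shake_256(data).digest((nbits + 7) // 8)

def generate_responses(c: bytes, nonce: bytes, seed: bytes,
                       D: int = 20, N: int = 256, P: int = 100,
                       a: int = 1_103_515_245, b: int = 12_345) -> list[int]:
    """Return N responses of P bits each, read from the stream C*.

    a and b are placeholder generator constants (assumption).
    """
    d = 1 << D                               # length of C* in bits, d = 2^D
    md = hashlib.sha256(c).digest()          # MD <- Hash(C)
    c_star = shake_bits(md + nonce, d)       # C* <- XOF(MD || nonce)
    total = N * D
    s_bytes = shake_bits(seed, total)        # S* <- XOF(S)
    s_star = int.from_bytes(s_bytes, "big") >> (len(s_bytes) * 8 - total)
    responses = []
    for i in range(N):
        # Challenge q_i: the i-th D-bit segment of S*, read as an address.
        x = (s_star >> ((N - 1 - i) * D)) & (d - 1)
        r = 0
        for _ in range(P):
            # Read the bit of C* stored at address x (addresses 0 .. d-1 here).
            bit = (c_star[x >> 3] >> (7 - (x & 7))) & 1
            r = (r << 1) | bit
            x = (a * x + b) % d              # next position from the generator
        responses.append(r)
    return responses
```

Replaying the function with the same C, nonce, and seed returns the same responses, while changing any of the three inputs yields an unrelated set, which is the property the protocols in the following sections rely on.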

The probability of pointing several times to the same position in C* is non-negligible. This exposes the protocol to frequency analysis and creates collisions, as several responses ri are then identical. One solution tracks the positions with an index to generate different responses when several challenges point at the same position. For example, when a position is selected a second time, one constant of Algorithm 1 step 3.7 can be replaced by a different value, which is a sufficient offset to generate a distinct response; the constant is replaced again during the third collision, et cetera.

2.2. Generation of an Orderly Subset of Responses

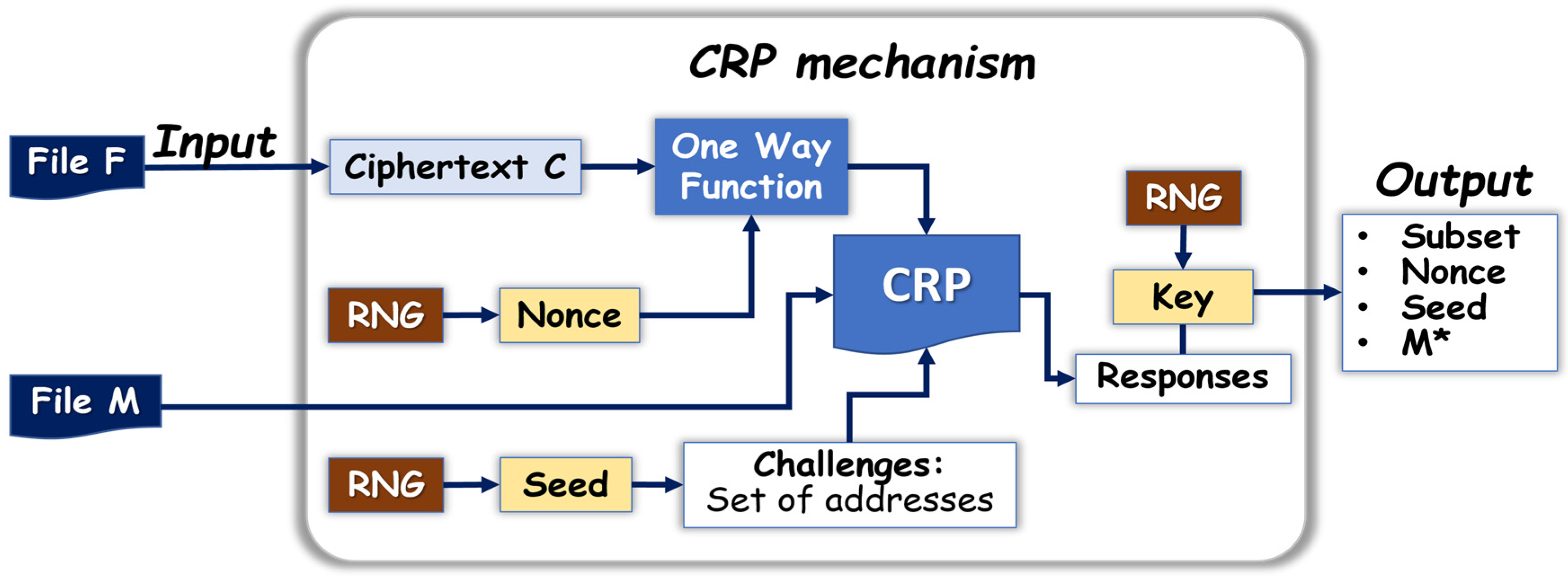

In the scheme described in Section 2.1, the responses generated from the CRP mechanism are directly converted into cryptographic keys K to encrypt a message M. To enhance security, we developed a scheme in which the ephemeral key K is picked randomly, independently of the CRP mechanism. Algorithm 2 for encryption and Algorithm 3 for decryption are summarized in Figure 2 and shown as follows:

| Algorithm 2: Generate a subset of responses with C, and encrypt M |
| 1: | Variable input data: {C}, {M} |
| 2: | Nonce {ω} and stream {S} ← random number generator |
| 3: | → Use Module 1: Generate a set of responses with C and {ω, S}: |
| | Output data: All N, P-bit long, responses |
| 4: | → Module 2: Encrypt M and generate a subset of responses with: |
| | 4.1: | Key K with f states of “1” ← random number generator |
| | 4.2: | M* ← Encrypt(M, K) |
| | 4.3: | Filter the subset of f responses located at positions of K with a state of “1” |
| | 4.4: | Erase M, K, and the responses located at positions of K with a state of “0” |
| 5: | Output: C, {S, ω, M*}, and the f responses |

| Algorithm 3: Decrypt M with C and the subset of f responses |
| 1: | Variable input data: C, {S, ω, M*}, and the f, P-bit long, responses |
| 2: | → Use Module 1: Generate a set of responses with C and {ω, S}: |
| | Output data: the N, P-bit long, responses, i ∈ {1, N} |
| 3: | → Module 3: Decrypt M from M* with the f responses, j ∈ {1, P}: |
| | 3.1: | Retrieve key K by comparing the N responses with the subset of f responses: a position of the full set that matches the next response of the subset is a state of “1” of K; any other position is a state of “0” |
| | 3.2: | M ← Decrypt(M*, K) |
| 4: | Output: M |
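The filtering step of Algorithm 2 and the key recovery step of Algorithm 3 can be sketched in a few lines of Python. This is a noise-free illustration under stated assumptions: the helper names are hypothetical, the responses are random stand-ins for the output of Module 1, matching is exact rather than threshold-based, and the encryption of M is omitted.

```python
import secrets

def filter_subset(responses, key_bits, padded_len=None, P=100):
    """Keep the responses at the '1' positions of K, in order.

    Optionally pad the subset with random P-bit nonces to hide its length.
    """
    subset = [r for r, bit in zip(responses, key_bits) if bit]
    if padded_len is not None:
        subset += [secrets.randbits(P) for _ in range(padded_len - len(subset))]
    return subset

def recover_key(responses, subset):
    """Left-to-right search: a match with the next subset entry is a '1'."""
    key_bits, j = [], 0
    for r in responses:
        if j < len(subset) and r == subset[j]:
            key_bits.append(1)
            j += 1
        else:
            key_bits.append(0)
    return key_bits

# Stand-in data: N random responses and a random 256-bit key K.
N, P = 256, 100
responses = [secrets.randbits(P) for _ in range(N)]
key_bits = [secrets.randbits(1) for _ in range(N)]
subset = filter_subset(responses, key_bits, padded_len=160, P=P)
recovered = recover_key(responses, subset)
```

The search stops consuming subset entries once all N positions of the full set have been visited, so padding nonces appended at the end of the subset are ignored.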

The key K, which is randomly picked here, has two purposes: encrypting M and generating an orderly subset of responses. Only the f positions of K with a state of “1” are pointing at positions in the sequence of N responses that are kept, while the responses at the

positions of K with a state of “0” are skipped. The resulting orderly subset of f responses is kept for key recovery. Four totally independent streams are requested to uncover the responses, which are C,

, S, and the subset of responses. The size of the responses needed to run this CRP mechanism is higher than the size as presented in

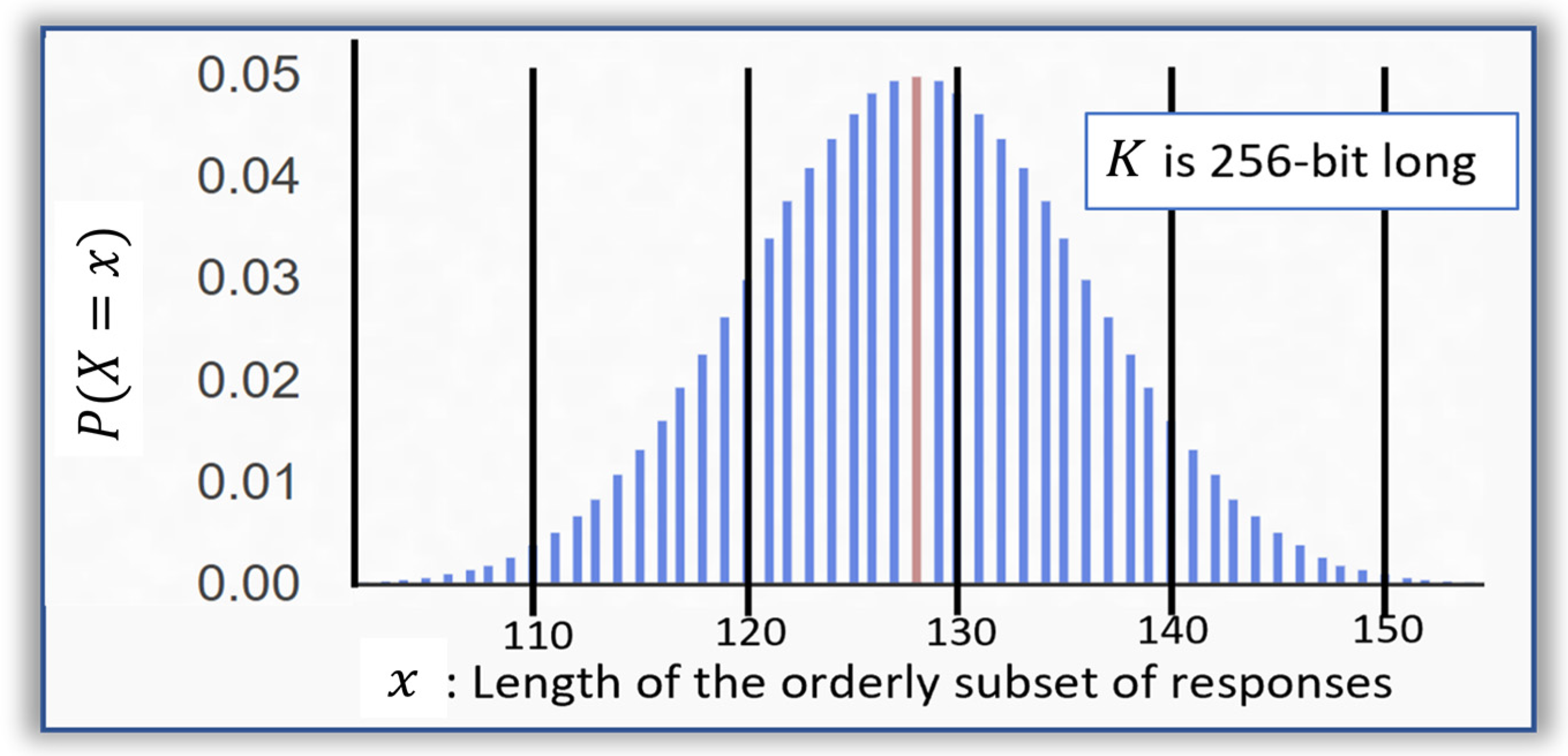

Section 2.1. For example, approximately 128 responses, that are 100-bit long, are generated when K is 256-bit long. Therefore, 1.6 Kbytes are retrieved, rather than 256 bits in the simpler protocol. We notice that the orderly subset of responses does not have a fixed length. Assuming that the random number generator of the 256-bit long K has an equal probability to get a state “0” or “1”, the probability

of obtaining an x-bit long subset is shown in

Figure 3. The median value of the distribution is 128 and varies approximately from 100 to 160. To obfuscate this information, we add nonces at the end of the subset to keep the length to 160 responses. The key recovery scheme as presented in

Section 4 is based on a search from left to right that terminates when the sequence of 256 bits is identified; additional responses are ignored.
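The spread of the subset length can be checked numerically. The short sketch below assumes, as stated above, that each of the 256 bits of K is an independent fair coin flip, so that the number of “1” states follows a binomial distribution; the function name is illustrative.

```python
from math import comb

def subset_length_pmf(n: int = 256) -> list[float]:
    """Probability that a random n-bit key K contains exactly x ones."""
    return [comb(n, x) / 2**n for x in range(n + 1)]

pmf = subset_length_pmf()
most_likely = max(range(len(pmf)), key=pmf.__getitem__)
inside = sum(pmf[100:161])   # probability that 100 <= x <= 160
```

Under this assumption, nearly all of the probability mass falls between 100 and 160 responses, which is consistent with padding the subset to a fixed length of 160.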

The injection of errors in the subset of responses is detailed in Section 4 and Section 5. Provided the BERs remain below an acceptable threshold (i.e., below 25%), the subset of orderly responses can be recognized as part of the iterative search; therefore, the ephemeral key K can be recovered. In zero-trust networks, such an obfuscation can disturb opponents sharing the noisy network. Certain attacks are mitigated since the noisy responses are different from the ones generated from F and the CRP mechanism.

3. Protocols Verifying the Authenticity of Digital Files in Distributed Networks

The objective of the protocols presented in this section is to allow third parties operating openly in the distributed network to validate the authenticity of a file without overburdening computational resources. The suggested protocols are based on the CRP mechanism discussed in Section 2, with the same symbols. The message of authenticity M is replaced by the public key Pk, computed internally, to add a DSA.

3.1. Description of the Protocols with CRP Mechanisms

The overall mechanism to generate the responses is described in

Section 2.1. For example, if

,

,

, and

, the responses are 256-bit long. The entropy is about 200, as the number of possible CRPs is given by Equation (2):

3.1.1. Enrollment Cycle

The protocol has two steps: the initial set up, or enrollment, performed secretly by the client or designate, and the verification of authenticity cycle performed openly in the distributed network. Algorithm 4 for enrolling file F is shown as follows:

| Algorithm 4: Enrollment cycle for file F |
| 1: | Input data: Some file F |
| 2: | Nonce {ω}, stream {S}, and seed {L} ← random number generator |
| 3: | Generate ephemeral public-private key pair {Sk, Pk} from L (ex: PQC algorithm) |
| 4: | C ← Encrypt(F, Sk) |
| 5: | M ← Pk |
| 6: | → Use Module 1: Generate a set of N responses with C and {ω, S}: |
| | Static input data: Positive integers d, N, P, and two prime constants |
| | Output data: All N, P-bit long, responses |
| 7: | K ← concatenate(responses); where K is a 256-bit long key |
| 8: | M* ← Encrypt(Pk, K) |
| 9: | Erase: {C*, Sk, Pk, K}, and the N responses |
| 10: | Output: C, and streams {S, ω, M*} |

During the enrollment of file F, a randomly selected seed L generates a key pair (Sk; Pk) with an asymmetrical algorithm such as Dilithium. The file F is encrypted with Sk to generate the ciphertext C. The randomly selected nonce ω is concatenated with the message digest of C and extended to form a d-bit long file C* that is at the center of the CRP mechanism. A randomly picked seed S is converted into a set of N, D-bit long, challenges generating a set of N, P-bit long, responses. The responses are concatenated to generate the ephemeral key K, which encrypts the public key Pk and forms ciphertext M*. After completion of the enrollment cycle, the responses, C*, and keys K, Sk, and Pk are erased. The output is C and the stream {S, ω, M*}.

3.1.2. Verification Cycle

The verification cycle follows the same steps as the enrollment cycle. The release of ω initiates the process, while {C, S, M*} are also needed. The replay of the CRP mechanism retrieves the responses from ω and {C, S}. The responses allow the recovery of K, the deciphering of Pk from M* with K, and the deciphering of C with Pk to retrieve F. Algorithm 5 summarizes this protocol. A variation of this protocol utilizes a message of authenticity M, instead of Pk, to generate ciphertext M* with K; in this case, C is decrypted separately. F can remain secret, while the proof of authenticity of C can be public, informing peers that C is valid.

| Algorithm 5: Decrypt file F |
| 1: | Variable input data: {ω}, and {C, S, M*} |
| 2: | → Use Module 1: Generate a set of N responses with C and {ω, S}: |
| | Static input data: Positive integers d, N, P, and two prime constants |
| | Output data: the N, P-bit long, responses |
| 3: | K ← concatenate(responses) |
| 4: | Pk ← Decrypt(M*, K) |
| 5: | F ← Decrypt(C, Pk) |
| 6: | Output: F, Pk |
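The symmetry between the enrollment and verification cycles can be illustrated with a toy Python sketch that covers only the wrapping and unwrapping of Pk with the ephemeral key K. Several elements are assumptions made for brevity: the parameters (N = 8 responses of P = 32 bits, giving a 256-bit K), the generator constants, the SHAKE-based XOR cipher standing in for a standardized cipher, and random bytes standing in for Pk. Key-pair generation and the encryption of F with Sk are not shown.

```python
import hashlib
import secrets

def module1(c, nonce, seed, D=16, N=8, P=32, a=5, b=3):
    """Compact stand-in for Module 1 (placeholder constants a, b)."""
    d = 1 << D
    md = hashlib.sha256(c).digest()
    c_star = hashlib.shake_256(md + nonce).digest(d // 8)
    s_star = int.from_bytes(hashlib.shake_256(seed).digest(N * D // 8), "big")
    out = []
    for i in range(N):
        x = (s_star >> ((N - 1 - i) * D)) & (d - 1)
        r = 0
        for _ in range(P):
            r = (r << 1) | ((c_star[x >> 3] >> (7 - (x & 7))) & 1)
            x = (a * x + b) % d
        out.append(r)
    return out

def derive_key(c, nonce, seed) -> bytes:
    """K <- concatenation of the 8 responses of 32 bits (256 bits)."""
    k = 0
    for r in module1(c, nonce, seed):
        k = (k << 32) | r
    return k.to_bytes(32, "big")

def xor_cipher(data: bytes, key: bytes) -> bytes:
    """Toy symmetric cipher (assumption): XOR with a SHAKE-256 keystream."""
    stream = hashlib.shake_256(key).digest(len(data))
    return bytes(x ^ y for x, y in zip(data, stream))

def enroll(c: bytes, pk: bytes):
    """Return {nonce, S, M*}; K and the responses are not kept."""
    nonce, seed = secrets.token_bytes(32), secrets.token_bytes(32)
    return nonce, seed, xor_cipher(pk, derive_key(c, nonce, seed))

def verify(c: bytes, nonce: bytes, seed: bytes, m_star: bytes) -> bytes:
    """Replay the CRP mechanism and recover Pk from M*."""
    return xor_cipher(m_star, derive_key(c, nonce, seed))
```

In this sketch, any party holding C, S, and M* can recover Pk once the nonce is released, and a modified C leads to a different K and therefore to a value that no longer matches Pk.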

3.2. Example of Use Case in Distributed Networks

The schemes presented above in Section 3.1 benefit clients operating in a distributed network [46,47,48,49,50]. In this example, suppose a client desires to allow independent parties to verify the authenticity of a transaction contained in file F. The client keeps F secret and discloses ciphertext C. The roles of the participating parties are defined as follows:

Client: owns file F but pays the storage agent to store C for some duration [51].

Agent: represents the client [52].

Storage agent: paid by the client or the agent to store information [53]. The data is public.

Smart contract: The client’s rent is kept in escrow in the contract until the rental expires. The smart contract is equipped to compute the proof of authenticity [54,55].

The client issues periodic challenges to check that the storage agent is faithful. If a challenge fails, the smart contract refunds the rent to the client. Since the smart contract’s state is always public, the contract cannot keep any secret data.

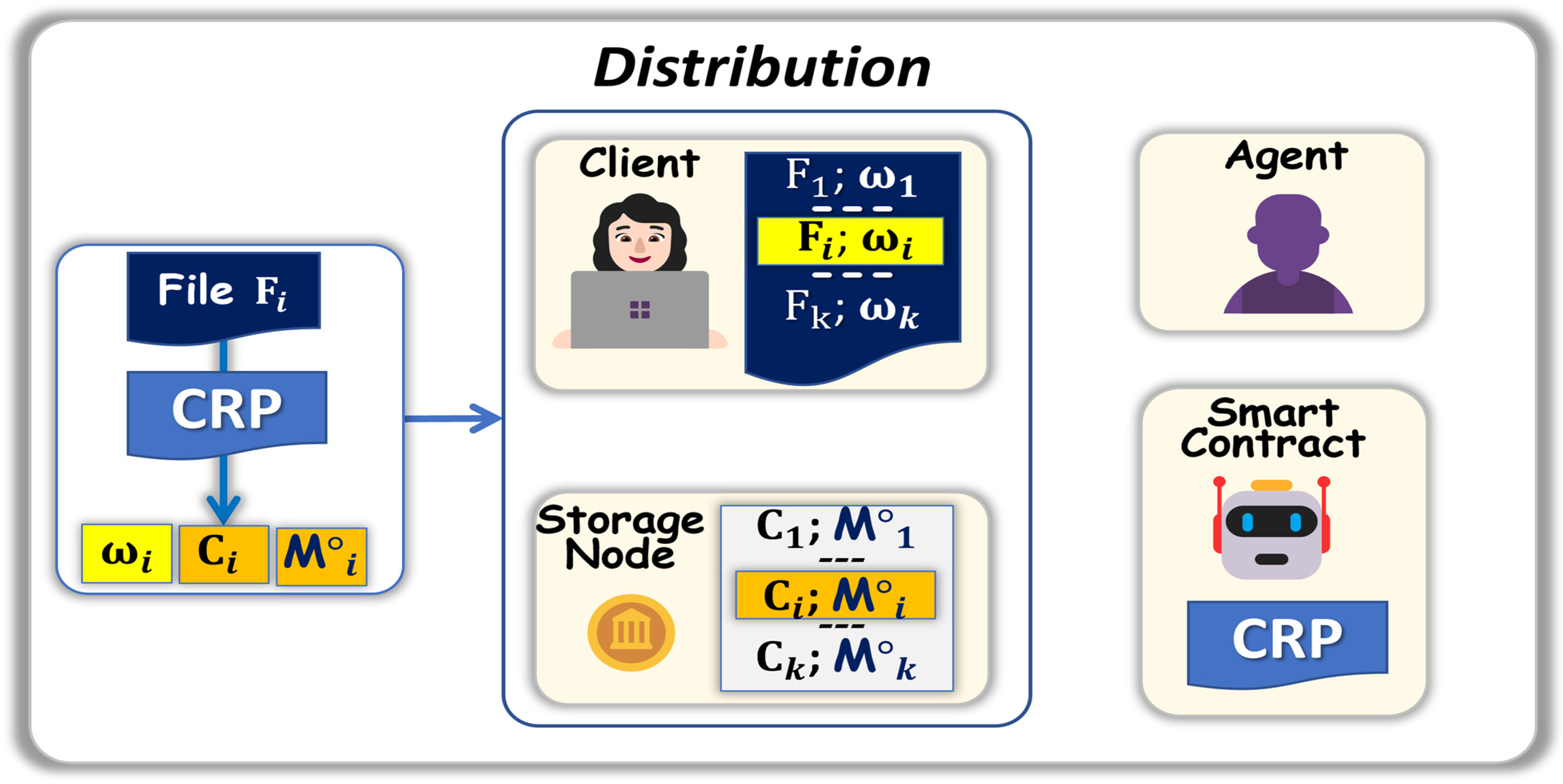

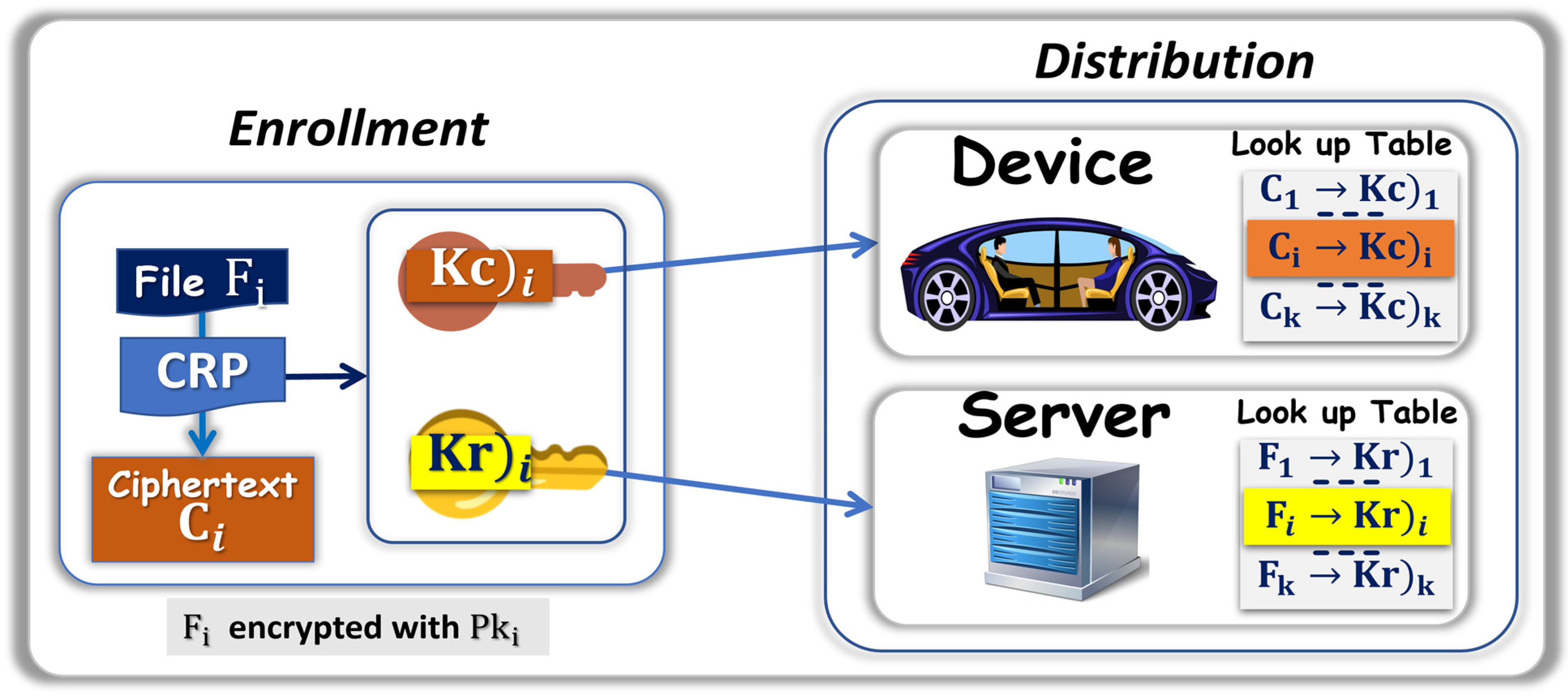

3.2.1. Initial Enrollment and Distribution of the Files

The client uses a CRP mechanism during an initial set up phase, as described in Section 3.1, then distributes the information to the network. In most implementations, a compact seed replaces the challenges, as described in Section 2. The client is either equipped with the software needed to operate a CRP mechanism or employs a contract agent to perform the confidential task. From Fi and nonce ωi, the CRP mechanism generates the ciphertext Ci and the file M°i: {Si, M*i}. The stream M*i is the ciphertext, encrypted with ephemeral key K, of the message of authentication Mi, which can be the public key Mi = Pki needed for the DSA of Fi. An active participant such as an agent can take care of the distribution of Ci and M°i. In Figure 4, the distribution is the following:

The smart contract has the technical capability to perform CRP mechanisms.

The storage node keeps Ci and M°i.

The client keeps Fi and ωi.

3.2.2. Verification in Distributed Networks

When the verification of the authenticity of Fi is required, the client communicates ωi to the agent. The agent then coordinates the effort between the smart contract and the storage node, following the protocol shown in Figure 5, which is summarized as follows:

The smart contract, which is equipped with the CRP mechanism, collects ωi, Ci, and M°i = {Si, M*i}.

The CRP mechanism has the information needed to decrypt Fi and run the DSA verification with Pki.

A variation of the protocol replaces Pki by a message of authenticity Mi such as:

“Yes, I (client X), am confirming the authenticity of ciphertext Ci”.

This variation can be useful if the client desires to protect file Fi. The decryption of Ci can be performed separately, while outside the open network. A separate arrangement is then needed to share Pki; for example, through a public key infrastructure (PKI).

3.3. Security Analysis in Distributed Networks

The methods for verifying authenticity, as presented here, are based on the handling of several independent elements: file F, ciphertext C, nonce ω, and the stream M°. The client may restrict any of these streams while distributing the other three elements. The possibility matrix is shown in Table 1.

The respective value of each case can be summarized as follows:

Case 1 was discussed in Section 3.2.2. The client keeps F and ω secret after enrollment and then discloses both during verification. Such a method is valuable since the entire chain of information is obfuscated before validation. After validation, both C1 and F1 are public information, and the use of the DSA can further enhance the transparency of the protocol.

Case 2 is a variation of Case 1 where M is a message of authenticity rather than a public key. The client can only disclose ω during verification, offering some homomorphic capabilities to the scheme as the open protocols verify the authenticity of ciphertext C without openly disclosing F.

Case 3: After enrollment, the client keeps both F and C such that the level of obfuscation is also high. During validation, the client discloses both so the smart agent can verify authenticity. This method is interesting since the client cannot lie by changing F and C after the fact.

Case 4: After enrollment, the client only keeps ω and releases both unencrypted and encrypted files. The release of the nonce enables a full verification of authenticity to include DSA. The nonce is then used as a one-time public key.

Case 5: This is a variation of Case 4 in which only M° is kept by the client, thus utilizing M° as a one-time public key.

Discussion: The first two cases offer both high levels of security and transparency since file F is kept secret after enrollment, while ciphertext C is public information. The client could be accused of modifying the file, if it is altered; therefore, the protocol offers non-alterability and non-repudiation. In addition to the proof of authenticity of the message, by releasing the nonce, the client also provides a form of authentication. In both cases, the storage node is keeping files that can be decrypted on-demand, acting as a repository of critical information. Examples of information are medical files, financial transactions, movies, games, and/or real estate transactions.

The second case offers some homomorphic properties since the encrypted file can be authenticated without knowing the content of the file. The decryption of the files can be performed separately and secretly in a transaction between the client and a third party. The client may, for example, secretly communicate the cryptographic key needed to retrieve the file. An example of a use case could be in the handling of medical files. The doctor keeps the nonce, and the key needed to decrypt the file, while the cipher text and encrypted message of authenticity are kept by a storage node. To allow a second doctor to access the file, the doctor openly transmits the nonce, which will confirm the authenticity of the ciphertext, then secretly transmits the key deciphering the file. That way, the doctors are not required to store the files on their server to retrieve the information as needed.

The suggested protocols are relatively well protected against attack vectors such as replays, man-in-the-middle, and side channel analysis. New nonces, key pairs Sk–Pk, and seeds are generated for each file and used only once. After a proof of authenticity cycle, the entire chain of information is openly distributed: the file, the ciphertext, nonce, seeds, public key, and message of authenticity. Even the knowledge of Sk, the private key, becomes irrelevant. This is a departure from many other DSA schemes used in cryptology in which the leak of the secret key is catastrophic.

A remaining vulnerability of the protocol presented in the first two cases is the leak of nonce ω. Adversaries are then able to decrypt the files, which defeats the purpose of the protocol. A potential remedy is to use Multi-Factor Authentication (MFA). The nonce can be XORed with a password before storage by the client. During the verification cycle, the client XORs it again with the password to retrieve the nonce. Another option is to use the additional layer of security presented below in Section 4.
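The password-based protection of the nonce can be sketched as follows. Stretching the password to the length of the nonce with SHAKE-256 is an assumption made for this illustration, as is the function name; the text above only specifies that the nonce is XORed with a password.

```python
import hashlib

def mask_nonce(nonce: bytes, password: str) -> bytes:
    """XOR the nonce with a pad derived from the password.

    Applying the function twice with the same password returns the nonce.
    """
    # Assumption: the password is stretched to the nonce length with an XOF.
    pad = hashlib.shake_256(password.encode("utf-8")).digest(len(nonce))
    return bytes(n ^ p for n, p in zip(nonce, pad))

# The client stores only the masked value after enrollment.
nonce = bytes(range(32))
stored = mask_nonce(nonce, "correct horse battery staple")
# During the verification cycle, the same password restores the nonce.
restored = mask_nonce(stored, "correct horse battery staple")
```

A wrong password yields a different nonce, and therefore a different stream C* and different responses. In practice, a deliberately slow key derivation function would be a more conservative choice than a single hash for stretching a human-chosen password.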

5. Statistical Analysis of the Protocols Based on Subsets of Responses and ECC

Practical issues are expected in the implementation of the methods described above in Section 4, since the noise injection could also generate erroneous bits in the cryptographic keys. One solution is to tolerate an approximate matching between the responses of the orderly subset generated during enrollment and the responses generated during verification. However, if the threshold of acceptance is too high, the probability of having two randomly chosen responses matching can also be too high. The term commonly used to describe such unwelcome matches is “collision” [61,62]. In order to optimize the protocols, we developed a predictive model of these collisions. We also developed error management schemes able to process a small number of residual erratic bits.

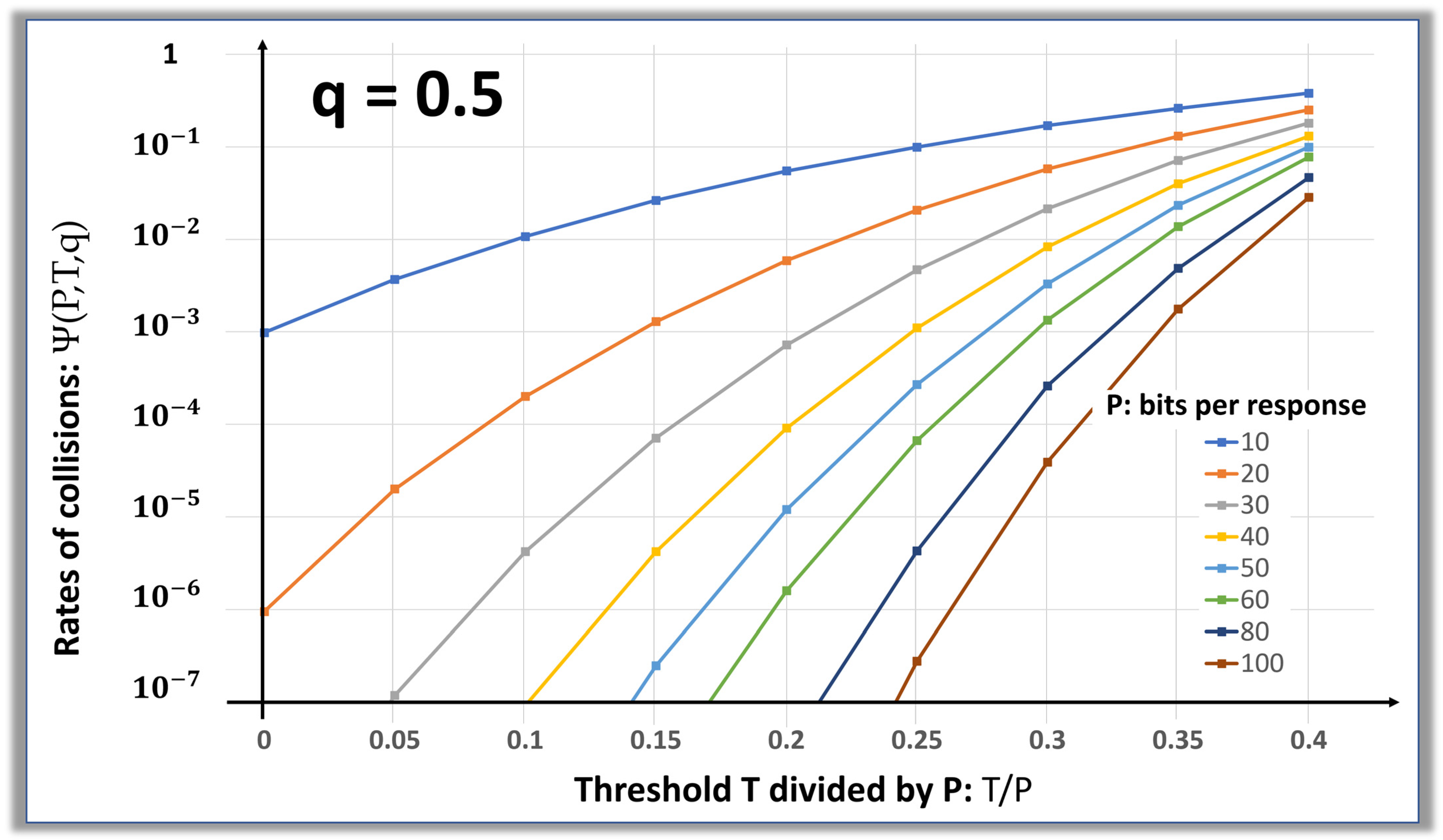

5.1. Statistical Predictive Model for Collisions

The recovery of the file, as outlined in Algorithm 6, assumes that the noise injected in the P-bit long responses creates a BER lower than 25%, an arbitrary number that can be optimized. The number of erratic bits t is bounded below the threshold T, which in this case is given by $T = P/4$. If the threshold is too high, then collisions between the N responses become a source of errors during the retrieval of key K. A collision occurs when two randomly picked P-bit long responses ra and rb differ from each other by at most T bits. We assume that all responses follow a binomial distribution in which q, the probability of obtaining a state “0”, equals the probability of obtaining a state “1” (q = 0.5). We must ensure that the probability of having a collision Ψ(P,T,q) between the responses of the orderly subset and a randomly picked response is acceptably low. Thus, we developed a model to optimize the protocol that focuses on three variables of Algorithm 6: the rate of bad bits injected in the subset of responses, the number of bits P of the responses, and the number of bits of threshold T. This model is created as follows:

Ra: ; Rb:

The parameter q is the probability of obtaining a 1.

The two streams are XORed, bit by bit:

The resulting stream also follows a binomial distribution with q = 0.5.

The Hamming distance H(Ra,Rb) between Ra and Rb is given by:

There is a collision when the Hamming distance between ra and rb satisfies H(ra,rb) ≤ T.

The rate of collisions is:

The plot shown in Figure 8 was generated using T/P on the x-axis and Ψ(P,T,q) on the y-axis. T/P varies between 0 and 40%, and Ψ(P,T,q) varies from 10⁻⁷ to 1. In our experiment, we measured the best fit for

since the distributions are almost perfectly binomial.
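One consistent reading of the model above is that the collision rate is a binomial cumulative probability: the chance that the Hamming distance between two independent P-bit responses is at most T. The sketch below implements that reading; the function name is illustrative, and the per-bit difference probability 2q(1 − q) follows from assuming independent bits with probability q of being “1”.

```python
from math import comb

def collision_rate(P: int, T: int, q: float = 0.5) -> float:
    """Probability that two random P-bit responses differ in at most T bits."""
    # For independent bits, the XOR of two bits is 1 with probability 2q(1-q),
    # which equals 0.5 when q = 0.5.
    p_diff = 2 * q * (1 - q)
    return sum(comb(P, k) * p_diff**k * (1 - p_diff)**(P - k)
               for k in range(T + 1))

# Collision rates at the same ratio T/P = 25% for increasing response lengths.
rates = {P: collision_rate(P, P // 4) for P in (64, 128, 256, 512)}
```

At a fixed ratio T/P, the computed rate falls rapidly as P grows, which matches the qualitative behavior described for Figure 8.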

If we assume that none of the f responses has a BER between enrollment and key recovery greater than the threshold T, the expected BER E(K) in key K after recovery is given by:

where

is the number of times each response is randomly tested in the recovery cycle. As shown in

Section 5.3.3, we used

.

- ○

→ E(K)

- ○

→ E(K)

- ○

→ E(K)

- ○

→ E(K)

The BERs are exponentially reduced by increasing the length P of the responses. The light error correcting schemes, as presented below, quickly increase if the BERs are creating more than one bad bit in a 256-bit long key, which corresponds to a BER of . This model predicts that is acceptable and that is a better operating point.

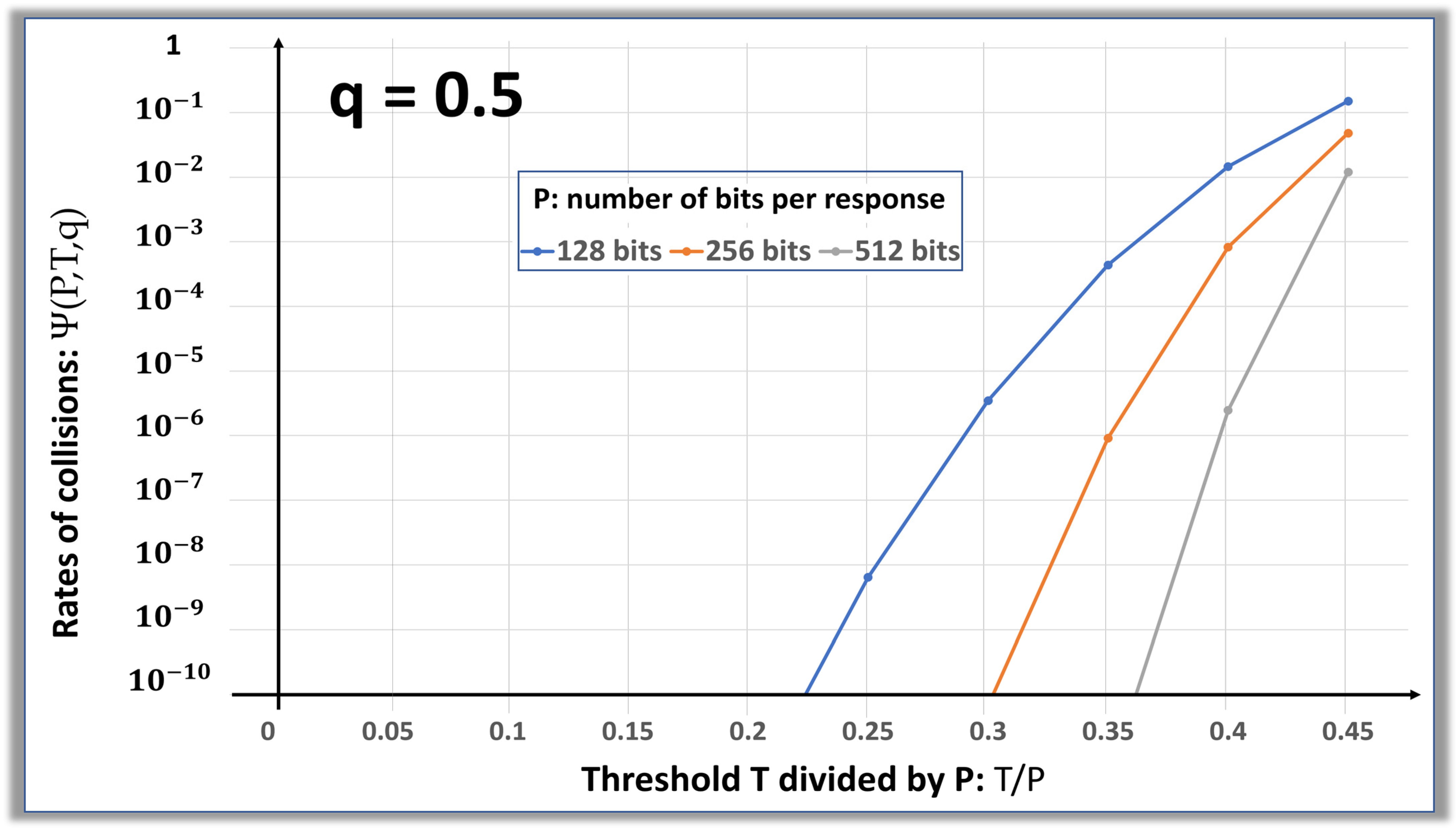

5.2. Model for Heavy Noise Injections

The information presented in Figure 8 shows that responses in the range of 10 to 100 bits are too small to handle a noise injection of more than 30% erratic bits. A similar analysis is shown in Figure 9 with responses in the 128- to 512-bit range, and

. An example of the predictive use yields the following results:

- ○

and → E(K) ;

- ○

and → E(K) ;

- ○

and → E(K) .

Such bit error rates in K, which are in the parts-per-million (ppm) range, are acceptable for most applications. However, such an improvement has a negative impact on the latencies of the protocol, which are approximately proportional to the length of the responses. Since injected bit error rates in the 35% range are large, this penalty on latencies remains reasonable.

5.3. Error Management in the Cryptographic Keys

Mainstream ECC is difficult to implement in this approach since the data helpers needed to correct the key K are also affected by the noise injection. Thus, data helpers for the data helpers are called for, not an easy problem to resolve at high error rates. Therefore, we are proposing, in this section, several light error management schemes that can tolerate the residual errors created in K in the range of 1 to 3 bad bits per each 256-bit long key. With and , 35% BERs in the responses generate BERs in the keys, which represents less than one bad bit per 256-bit long key.

5.3.1. Error Management with Response-Based Cryptography

Response-Based Cryptography (RBC) is a search mechanism allowing the recovery of a key when the ciphertext of the key is known [63,64]. In the protocol of Algorithms 6 and 7 with the subset of responses, the key K decrypts M* (the ciphertext of the key Pk), followed by the decryption of C, the ciphertext of the file F. This can be written as:

[: decrypt “C” with key “Pk”]

Algorithm 8 verifies authenticity and decrypts Pk and F, as follows:

| Algorithm 8: Managing erratic key K with RBC |

| 1: | Retrieve K with CRP mechanism and from file C |

| 2: | |

| 3: | Find all keys Kn, , with hamming distance of from K |

| 4: | Decrypt all files (,Kn)) |

| | |

Using our computing system, we experimentally measured the throughput of the RBC at cycles per second for AES and at cycles/second for CRYSTALS-Dilithium. The code tested for Dilithium is freely available online, while AES is implemented in hardware within the computing element of our system [65]. The latencies to retrieve F through Algorithm 7 are listed in Table 4. The latencies to retrieve F are acceptable with AES, while managing 3 bad bits with Dilithium is slow. We anticipate that the hardware implementations of Dilithium will be available in the near future.
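The RBC search described in this section can be illustrated with a minimal Python sketch. It is not the authors' implementation: the names `flip_bits`, `rbc_search`, and `search_space` are hypothetical, and the validity check is a stand-in (a SHA-256 digest comparison) for the decryption of M* and C followed by the verification of F. The sketch only shows how the search space grows with the number of bad bits: for a 256-bit key, tolerating up to 3 bad bits requires testing up to 2,796,417 candidate keys.

```python
import hashlib
from itertools import combinations
from math import comb


def flip_bits(key: bytes, positions) -> bytes:
    """Return a copy of key with the bits at the given positions inverted."""
    out = bytearray(key)
    for p in positions:
        out[p // 8] ^= 0x80 >> (p % 8)
    return bytes(out)


def rbc_search(noisy_key: bytes, is_valid, max_distance: int):
    """Try every key within max_distance bit flips of noisy_key.

    Returns (key, distance) for the first key accepted by is_valid,
    or None when no candidate is accepted.
    """
    n_bits = 8 * len(noisy_key)
    for distance in range(max_distance + 1):
        for positions in combinations(range(n_bits), distance):
            candidate = flip_bits(noisy_key, positions)
            if is_valid(candidate):
                return candidate, distance
    return None


def search_space(n_bits: int, max_distance: int) -> int:
    """Number of candidate keys at Hamming distance 0..max_distance."""
    return sum(comb(n_bits, d) for d in range(max_distance + 1))


# Stand-in validity check (assumption): compare a SHA-256 digest of the key
# instead of decrypting M* and C and checking that F is readable.
true_key = bytes(range(32))                       # 256-bit example key
digest = hashlib.sha256(true_key).digest()
noisy = flip_bits(true_key, [5, 200])             # inject two bad bits
found = rbc_search(noisy, lambda k: hashlib.sha256(k).digest() == digest, 2)
```

In this toy run, `found` holds the recovered key and the Hamming distance at which it was found. In a real deployment, the cost of each trial is dominated by the cipher used in the validity check, which is consistent with the difference in latency reported between AES and Dilithium.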

5.3.2. Error Management of Collisions

We developed and analyzed a method to experimentally detect collisions. The approach is based on the observation that when a collision occurs between a response from the subset and a response from the full set positioned in one of the states of “0” of K, there is also a match with a response positioned in one of the states of “1” of K; this response from the subset then has multiple matches.

Conversely, without collision, each response from the subset has one (and only one) match in the full set of responses. After experimentally finding the ρ positions of the subset with multiple matches, we conclude that all other positions (the number of which is f-ρ) are error-free. This significantly limits how many possible keys have to be tested in a methodology similar to that described by the RBC. For example, if one response of the subset sees two matches, then the first of the two can be a state of “1” while the second one is a state of “0” (and vice versa). This leaves only two possible keys, which are quickly validated by computing F with Equation (7). If ρ responses from the subset create one collision each, then the number of possible keys per response is 2. This results in a total of 2^ρ possible keys to be checked, a lower value than the number of cases checked by the brute force RBC for the same number of errors. The latencies for 256-bit long keys K are listed in Table 5.
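As a hedged illustration of the counting argument above, the following Python sketch enumerates the candidate keys when some subset responses have several matches. The function name `keys_from_matches` and the toy data are assumptions made for the example, and the key is represented as a list of 0/1 states. With ρ colliding responses and two matches each, the enumeration yields 2^ρ keys, which is compared with the number of keys a brute force RBC search would test for ρ bad bits in a 256-bit key.

```python
from itertools import product
from math import comb


def keys_from_matches(matches, n_bits):
    """Yield every candidate key as a list of 0/1 states.

    matches[j] lists the full-set positions that matched subset response j:
    one entry means no collision, several entries mean a collision.
    """
    for choice in product(*matches):
        key = [0] * n_bits
        for position in choice:
            key[position] = 1
        yield key


# Toy example (assumption): 12 positions, 4 subset responses,
# 2 of them with one collision each.
matches = [[1], [3, 4], [7], [9, 11]]
candidates = list(keys_from_matches(matches, 12))
rho = sum(1 for m in matches if len(m) > 1)

# Keys tested by a brute force RBC search for up to rho bad bits in 256 bits.
brute_force = sum(comb(256, d) for d in range(rho + 1))
```

Here the collision analysis leaves 4 candidate keys, against 32,897 for the brute force search with the same number of errors.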

5.3.3. Failure to Detect Matching Responses

Consider the case where the errors injected in the responses of the subset are greater than threshold T. In this case, ρ responses see no matches. This type of failure creates only a limited number of possible keys K. Because the responses are matched in order, the positions in the full set matching the responses located at positions (ρ-1) and (ρ-2) are known, and only a small number of positions with state “0” lie between the two. The number of possible keys is then also small and is quickly validated with Equation (7). The method to handle the collisions and failures to detect matching responses is described below in Algorithm 9:

For each of the responses of the subset, at least successive responses of the full set are tested for a potential match. Assume that key K has been tested to have no more than 0 successive 0s. This property must be validated during the random pick of K. If K has more than 0 successive 0s, then the key can be modified by inserting a few 1s to be sure that the condition is fulfilled. We picked in our implementation.

Detect the responses of the subset with either zero, one, or more than one match with the responses of the full set.

List all possible keys.

Verify the authenticity of F with all possible keys with Equation (7) of Section 5.3.1.
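The condition on successive 0s in K stated in the first step can be sketched as follows. This is a minimal illustration, not the authors' procedure: the function names are hypothetical, `max_run` stands for the bound on successive 0s chosen in the implementation, and the sketch assumes that "inserting a 1" means replacing the 0 that would exceed the bound, so that the key length is unchanged.

```python
def limit_zero_runs(key_bits, max_run):
    """Return a copy of key_bits with no more than max_run successive 0s.

    Assumption: a 0 that would extend a run past max_run is replaced by a 1,
    so the length of the key is preserved.
    """
    out = []
    run = 0
    for bit in key_bits:
        if bit == 0 and run == max_run:
            bit = 1                      # break the run of 0s
        run = run + 1 if bit == 0 else 0
        out.append(bit)
    return out


def longest_zero_run(key_bits):
    """Length of the longest run of successive 0s in key_bits."""
    longest = run = 0
    for bit in key_bits:
        run = run + 1 if bit == 0 else 0
        longest = max(longest, run)
    return longest


conditioned = limit_zero_runs([0, 0, 0, 0, 0, 1, 0, 0, 0, 0], 3)
```

Replacing 0s by 1s increases the number of positions in state “1”, and therefore the number of responses in the subset; a real implementation may prefer to redraw K instead.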

| Algorithm 9: Detecting and correcting errors during recovery of K |
| 1: | Enter the subset of response 1, …, j, …, f}, the set {1, …, i, …, N}, and T |
| 2: | Start with , , and |
| 3: | While : |
| | Measure Hamming distance H(′j, i+k) for all responses i+k with } |
| | [comment: to record that ′j has zero match] [comment: to record that position (i+k) is matching, and that ′j has only one match] [comment: to record the list of all positions (i+k) matching with ′j] |
| 4: | Find the correct key K |
| | [comments: The positions ′j with only one matching response are considered correct. The positions ′j with zero match are caused by a failure to find the matching response. The positions ′j with two matching responses have at least 1 collision] |
| | 4.1: List all possible keys Kn from the analysis |
| | 4.2: Decrypt all files (,Kn); find readable file ; then Pk (,Kn) |
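The matching and key-listing steps of Algorithm 9 can be sketched in Python under stated assumptions. Responses are modeled as integers, `window` is a hypothetical parameter for the number of successive full-set responses tested per subset response, and the search window is widened after a missed response; none of these choices is taken from the original implementation. The final validation of each candidate key with Equation (7) is left out.

```python
from itertools import product


def hamming(a: int, b: int) -> int:
    """Hamming distance between two responses stored as integers."""
    return bin(a ^ b).count("1")


def classify_matches(subset, full_set, threshold, window):
    """For each subset response, list the matching full-set positions.

    An empty list records zero match, one entry a single match, and
    several entries a collision.
    """
    matches, start, missed = [], 0, 0
    for response in subset:
        stop = min(start + window * (missed + 1), len(full_set))
        found = [i for i in range(start, stop)
                 if hamming(response, full_set[i]) <= threshold]
        matches.append(found)
        if found:
            start, missed = found[0] + 1, 0
        else:
            missed += 1
    return matches


def candidate_keys(matches, n_bits):
    """List all keys compatible with the matches (positions kept in order)."""
    options = []
    for j, found in enumerate(matches):
        if found:
            options.append(found)
        else:  # zero match: any position between the known neighbors
            before = [m[0] for m in matches[:j] if m]
            after = [m[-1] for m in matches[j + 1:] if m]
            low = before[-1] + 1 if before else 0
            high = after[0] if after else n_bits
            options.append(list(range(low, high)))
    keys = []
    for choice in product(*options):
        if all(a < b for a, b in zip(choice, choice[1:])):
            key = [0] * n_bits
            for position in choice:
                key[position] = 1
            keys.append(key)
    return keys


# Toy data (assumption): 16-bit responses, K has 1s at positions 1, 4, 6, 9.
full_set = [0x0000, 0xFFFF, 0x0F0F, 0x3332, 0x3333,
            0xCCCC, 0x5555, 0xAAAA, 0x00FF, 0xFF00]
subset = [0xFFFE,   # position 1 with one bad bit
          0x3333,   # position 4, collides with position 3
          0x555A,   # position 6 with four bad bits, above the threshold
          0xFF00]   # position 9, error-free
matches = classify_matches(subset, full_set, threshold=2, window=3)
keys = candidate_keys(matches, len(full_set))
```

In this toy case, the second response has two matches and the third has none, which leaves nine candidate keys, one of which is the correct K. Each candidate would then be tested by decrypting M* and C as in step 4.2.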

Such a combined method will fail when both a collision and a failure to detect occur within the same response of the subset. When the BERs are in the 10⁻³ range, the probability of such an event is in the range. In this configuration, the response in question matches with one, and only one, response of the full set of responses, so the error goes undetected. One solution is to perform a brute force RBC search and, in case of a failure to recover K, to request a new subset of responses and retry.

6. Conclusions and Future Work

The suggested CRP mechanisms directly use the digital files that must be protected, together with their associated ciphertexts, to generate ephemeral one-time use cryptographic keys. In these mechanisms, as in tracking blockchains, the digital files are hashed to be unique for non-repudiable and non-alterable operations. Two situations were discussed: (i) the encryption with proof of authenticity of the digital files distributed in storage nodes and (ii) the protection of the files kept in devices operating in zero-trust networks.

In distributed networks, client devices provide the data needed for verification to agents driving smart contracts and storage nodes; the proposed protocols balance security and transparency.

In zero-trust networks, the sole priority is to enhance security and to protect the terminal devices. We developed a protocol allowing the injection of obfuscating noise in the keys transmitted by the controlling server to the exposed terminal device. BERs in the 25% range do not prevent the noisy key from decrypting the digital files stored in the device. To eliminate residual mismatches and generate error-free cryptographic keys, we developed and tested error management schemes to replace complex ECC, fuzzy extractors, and data helpers.

The continuation of this work will implement the algorithms for various applications to optimize security, reduce latencies, and add features specific to each application. We intend to perform an optimization over some of the following factors:

Length d of the digital file C* that is used for the CRP mechanism. Longer files are desirable to enhance randomness and minimize collisions. Excessive lengths negatively impact latencies.

Number N of challenge–response pairs, and length P of each response. For the subset of response protocol, or more is needed, and P has to be sufficiently long to avoid collisions, but not so long as to increase latencies.

The acceptable BERs shall be injected in the subset of responses and threshold T shall be set at an acceptable level for the determination of a match.

In addition to the experimental work, we are currently developing a comprehensive multi-variate predictive tool. Additional attack vectors are also being investigated with various methods to inject noise and disturb the protocol under consideration. Lastly, the quality of true random number generation (TRNG) is critical to the levels of security of the suggested protocols as well as the actual randomness of the resulting streams of responses. We are performing statistically valid experiments to enable the quantification of randomness with tools developed by NIST, DieHarder, TestU01, and others.