Improved Cascade Correlation Neural Network Model Based on Group Intelligence Optimization Algorithm

Abstract

1. Introduction

2. Algorithm Background

2.1. Cascade Correlation Learning Algorithm

2.2. jDE Algorithm

2.3. MOEA/D Algorithm

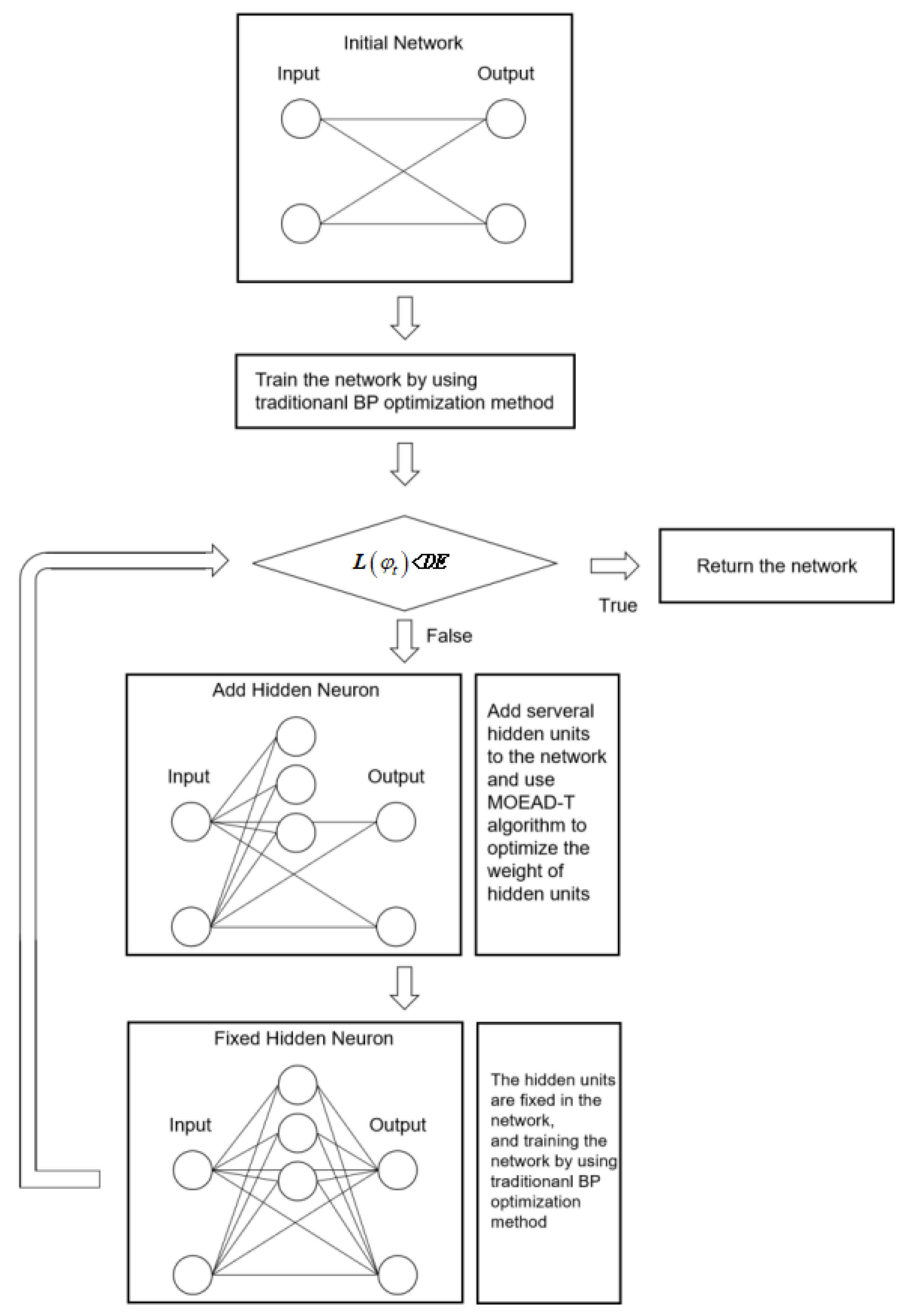

3. Improved Cascade Correlation Neural Network Model Based on Single-Objective Group Intelligence Optimization Algorithm

3.1. jDE-B Algorithm

| Algorithm 1. The detailed procedures of jDE-B. |
|---|
| Require: Initialize the population P; NP is the number of individuals |
| Require: Initialize the individual control parameters Fi = 0.5, CRi = 0.9 (i ∈ {1, …, NP}) |
| 1: while stopping criteria 1 is not met do |
| 2: for each x ∈ P do |
| 3: Change the individual control parameters with a certain probability |
| 4: Execute the jDE mutation strategy |
| 5: Execute the jDE crossover strategy |
| 6: Execute the jDE selection strategy |
| 7: end for |
| 8: if epoch % 100 == 0 then |
| 9: Check population P |
| 10: end if |
| 11: end while |

- (1) Stopping criteria 1: The current number of iterations exceeds the specified maximum.

- (2) Change the individual control parameters with a certain probability: The biggest difference between the jDE and DE algorithms is that jDE has two adaptive control parameters, the scaling factor F and the crossover rate CR. Each individual has its own control parameter values, Fi and CRi. The additional control parameters Fl and Fu are the lower and upper bounds of F, and CRl and CRu are the lower and upper bounds of CR.

- (3) jDE mutation strategy: A mutant vector is generated through the jDE mutation strategy.

- (4) jDE crossover strategy: The i-th individual in the population is crossed with the mutant vector produced by the previous step, giving a trial individual.

- (5) jDE selection strategy: In the g-th iteration, the trial individuals are the result of mutation and crossover. The i-th trial individual is compared with the i-th individual of the original population, and the one with the better fitness value is retained. If the trial individual is better, its new Fi and CRi are kept; otherwise the individual falls back to the Fi and CRi of the previous generation.

- (6) Check population P: An individual counts as similar to the best individual when the difference between its value and the best individual's value is at most EPS = 1 × 10⁻¹⁶. If at least 50% of the individuals in the population are similar to the best individual, the population retains the best individual and all other individuals are reinitialized.
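The six steps above can be sketched in a few dozen lines of plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the function name `jde_b`, the DE/rand/1 mutation with binomial crossover, the bounds `F_L = 0.1` and `F_U = 0.9`, the resampling probabilities of 0.1, resampling CR uniformly in [0, 1], clipping to the search box, and measuring similarity on fitness values are all choices made here for the example.

```python
import random

F_L, F_U = 0.1, 0.9    # assumed: a resampled F falls in [F_L, F_L + F_U]
TAU_F = TAU_CR = 0.1   # assumed probabilities of resampling F and CR
EPS = 1e-16            # similarity threshold from step (6)


def jde_b(fitness, dim, bounds, pop_size=20, max_epochs=300, check_every=100, seed=0):
    """Minimise `fitness` over a box; returns (best_vector, best_value)."""
    rng = random.Random(seed)
    lo, hi = bounds

    def new_point():
        return [rng.uniform(lo, hi) for _ in range(dim)]

    pop = [new_point() for _ in range(pop_size)]
    fit = [fitness(x) for x in pop]
    F = [0.5] * pop_size
    CR = [0.9] * pop_size

    for epoch in range(1, max_epochs + 1):          # (1) stop at the iteration limit
        for i in range(pop_size):
            # (2) resample the control parameters with a small probability
            f_i = F_L + rng.random() * F_U if rng.random() < TAU_F else F[i]
            cr_i = rng.random() if rng.random() < TAU_CR else CR[i]
            # (3) mutation from three distinct individuals other than i (DE/rand/1 assumed)
            r1, r2, r3 = rng.sample([j for j in range(pop_size) if j != i], 3)
            mutant = [pop[r1][d] + f_i * (pop[r2][d] - pop[r3][d]) for d in range(dim)]
            # (4) binomial crossover; position j_rand always comes from the mutant
            j_rand = rng.randrange(dim)
            trial = [mutant[d] if (rng.random() < cr_i or d == j_rand) else pop[i][d]
                     for d in range(dim)]
            trial = [min(max(v, lo), hi) for v in trial]   # clip to the box (assumption)
            # (5) selection: the trial and its F, CR survive only if it is not worse
            t_fit = fitness(trial)
            if t_fit <= fit[i]:
                pop[i], fit[i], F[i], CR[i] = trial, t_fit, f_i, cr_i
        # (6) every `check_every` epochs, restart a population that has collapsed
        if epoch % check_every == 0:
            best = min(range(pop_size), key=fit.__getitem__)
            similar = sum(abs(fit[j] - fit[best]) <= EPS for j in range(pop_size))
            if similar >= 0.5 * pop_size:
                for j in range(pop_size):
                    if j != best:
                        pop[j] = new_point()
                        fit[j] = fitness(pop[j])
                        F[j], CR[j] = 0.5, 0.9

    best = min(range(pop_size), key=fit.__getitem__)
    return pop[best], fit[best]
```

Calling `jde_b(lambda x: sum(v * v for v in x), dim=5, bounds=(-5.0, 5.0))` minimises a 5-dimensional sphere function. Because the best individual always survives the restart in step (6), the check can only add diversity and never loses the best solution found so far.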

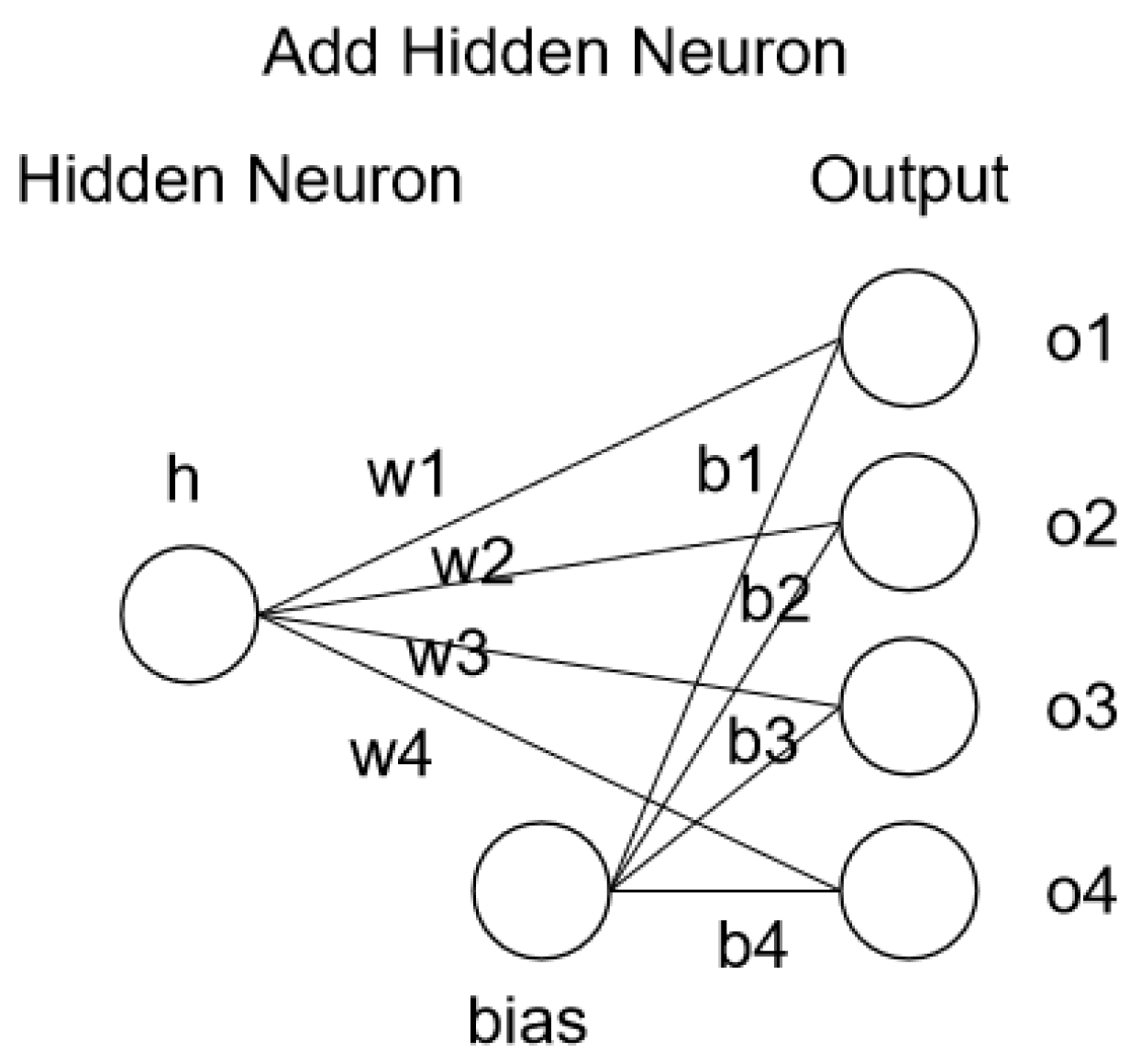

3.2. Improved Cascade Correlation Learning Algorithm Based on the jDE-B Algorithm

4. Improved Cascade Correlation Neural Network Model Based on Multi-Objective Group Intelligence Optimization Algorithm

4.1. Limitations Analysis of Single-Objective Optimization of Cascade Correlation Neural Network

4.2. MOEA-T Algorithm

| Algorithm 2. The specific steps of MOEA-T. |
|---|
| Require: Given the number of optimization objectives m and the number of divisions H per dimension, generate the uniformly distributed weight vectors w and the neighbor set Bi of each weight vector |
| Require: Generate the set Pt of m populations for edge search, based on the edge weight vectors |
| Require: Generate the population Pm for multi-objective optimization; each individual corresponds to a weight vector. The ideal point Z is determined from the best value of each objective in the population |
| 1: for each P ∈ Pt do |
| 2: Optimize population P with the jDE-B algorithm |
| 3: Overwrite the corresponding individual in Pm with the best individual in P |
| 4: end for |
| 5: while stopping criteria 2 is not met do |
| 6: for each i ∈ {1, 2, …, NP} do |
| 7: Select neighbors for evolution operations |
| 8: Update ideal point Z |
| 9: Update the neighbors |
| 10: end for |
| 11: end while |
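The first requirement of Algorithm 2 can be illustrated with a short Python sketch. It assumes the usual simplex-lattice construction, in which every weight component is a multiple of 1/H and the components sum to 1, and it assumes that neighbor sets are the T closest weight vectors by Euclidean distance. The function names `uniform_weights` and `neighbor_sets` and the neighborhood size `T` are hypothetical and do not come from the paper.

```python
from itertools import combinations
from math import dist


def uniform_weights(m, H):
    """All weight vectors with components in {0, 1/H, ..., 1} that sum to 1."""
    weights = []
    # Stars and bars: place m - 1 bars among H + m - 1 slots;
    # the gaps between consecutive bars are the integer numerators.
    for bars in combinations(range(H + m - 1), m - 1):
        cuts = (-1,) + bars + (H + m - 1,)
        counts = [cuts[k + 1] - cuts[k] - 1 for k in range(m)]
        weights.append(tuple(c / H for c in counts))
    return weights


def neighbor_sets(weights, T):
    """For each weight vector, the indices of its T closest vectors (itself first)."""
    return [sorted(range(len(weights)), key=lambda j: dist(w, weights[j]))[:T]
            for w in weights]
```

For m = 3 and H = 4 this yields 15 weight vectors, including the three unit vectors such as (1, 0, 0). Under the assumption made here, those unit vectors would play the role of the edge weight vectors, each focusing on a single objective.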

- (1) Stopping criteria 2: The current number of iterations exceeds the specified maximum.

- (2) Select neighbors for evolution operations: Two neighbors are randomly selected from the neighbor set Bi of the i-th individual, and genetic operations between the individual and these neighbors yield the new trial individuals y1, y2, y3. The aggregation value (Equation (6)) of each trial individual is calculated with the weight vector of the i-th individual. The trial individual with the best aggregation value is compared with the original individual and replaces it if it is better.

- (3) Update ideal point Z: The newly evolved individual is compared with the ideal point Z on each optimization objective, and Z is updated wherever the individual has a better objective value.

- (4) Update the neighbors: Traverse the neighbor set Bi. For each neighbor, calculate the aggregation value of the i-th individual and the aggregation value of the neighbor, both with the weight vector of that neighbor. If the aggregation value of the i-th individual is better, it replaces the neighbor.
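Steps (2) to (4) can be sketched as follows. Equation (6) is not reproduced in this section, so the sketch assumes the weighted Tchebycheff aggregation that is common in MOEA/D-style algorithms, with all objectives minimised; the paper's actual aggregation may differ. All function names are hypothetical, and for brevity the population is represented only by its objective vectors (a full implementation would copy the decision vectors as well).

```python
def tchebycheff(objs, weight, ideal):
    """Assumed aggregation: largest weighted distance to the ideal point (smaller is better)."""
    return max(w * abs(f - z) for f, w, z in zip(objs, weight, ideal))


def best_trial(trial_objs, weight, ideal):
    """Step (2): index of the trial individual with the best aggregation value."""
    return min(range(len(trial_objs)),
               key=lambda k: tchebycheff(trial_objs[k], weight, ideal))


def update_ideal(ideal, objs):
    """Step (3): keep the best (smallest) value seen so far for each objective."""
    return [min(z, f) for z, f in zip(ideal, objs)]


def update_neighbors(i, pop_objs, weights, neighbors, ideal):
    """Step (4): individual i replaces every neighbor it beats under that neighbor's weights."""
    replaced = []
    for j in neighbors[i]:
        if j == i:
            continue
        mine = tchebycheff(pop_objs[i], weights[j], ideal)
        theirs = tchebycheff(pop_objs[j], weights[j], ideal)
        if mine < theirs:
            pop_objs[j] = list(pop_objs[i])
            replaced.append(j)
    return replaced
```

Note that each comparison in step (4) uses the neighbor's weight vector rather than the weight vector of individual i, so a solution spreads only to the subproblems for which it is actually an improvement.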

4.3. Improved Cascade Correlation Learning Algorithm Based on the MOEA-T Algorithm

5. Experimental Selection

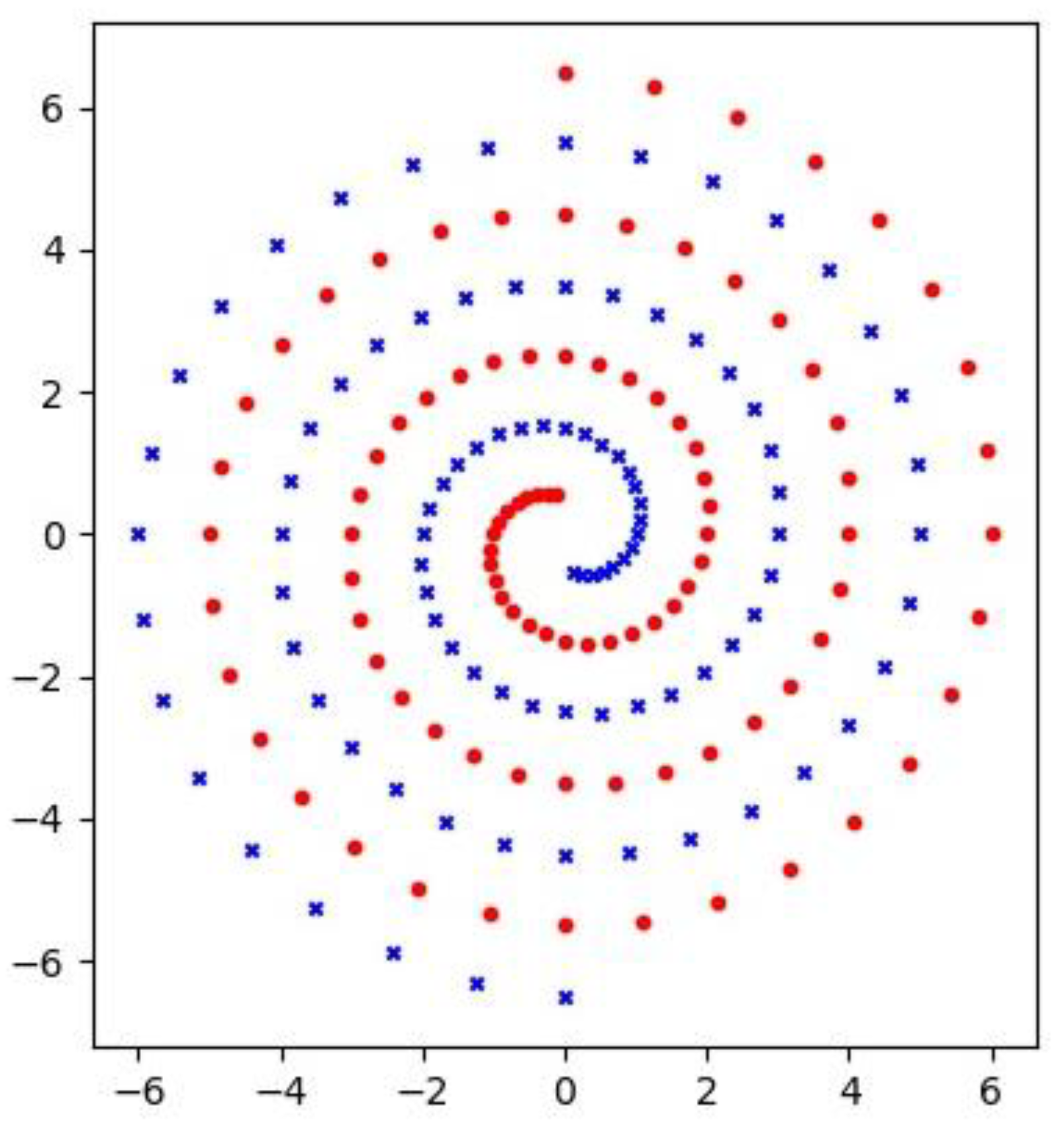

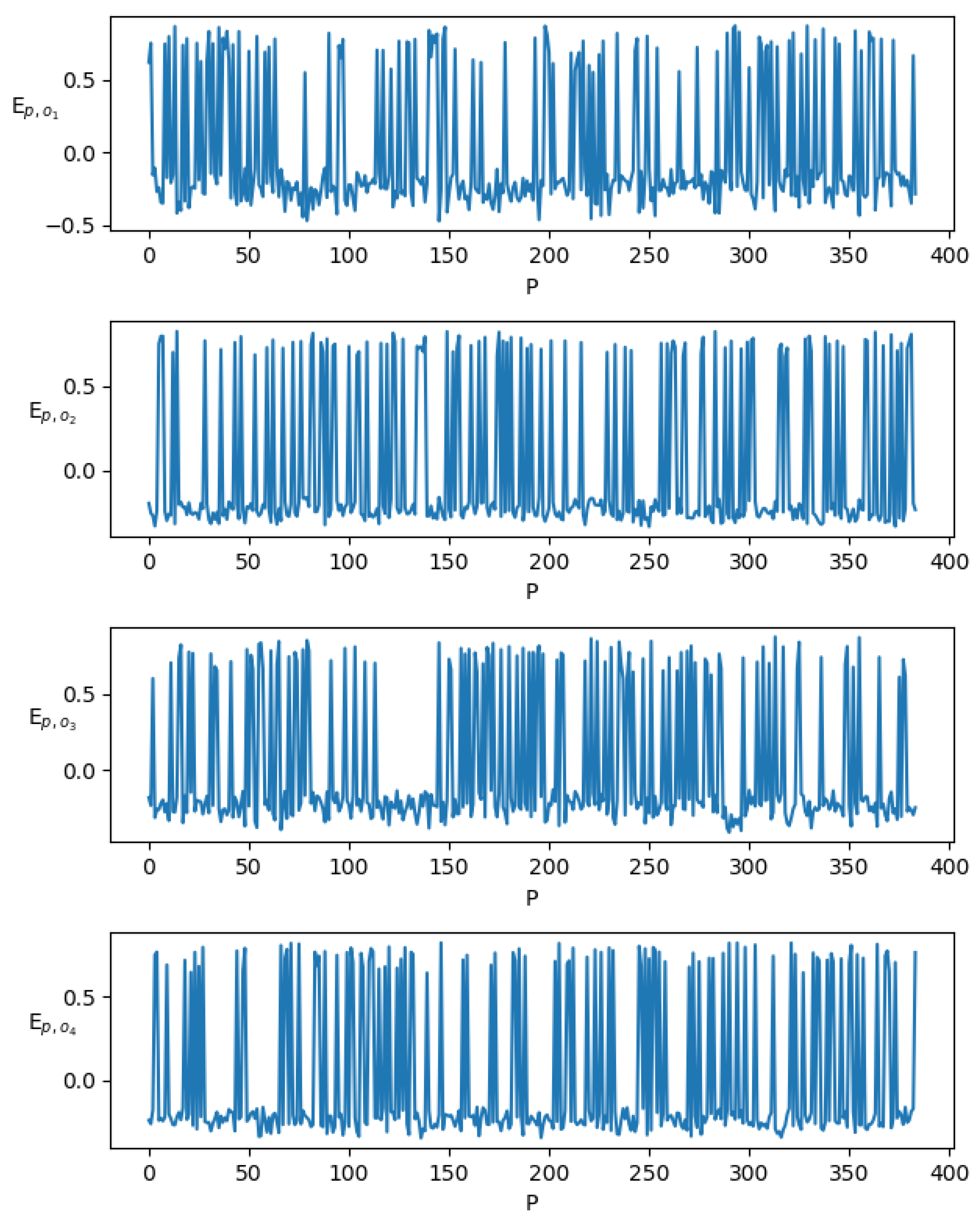

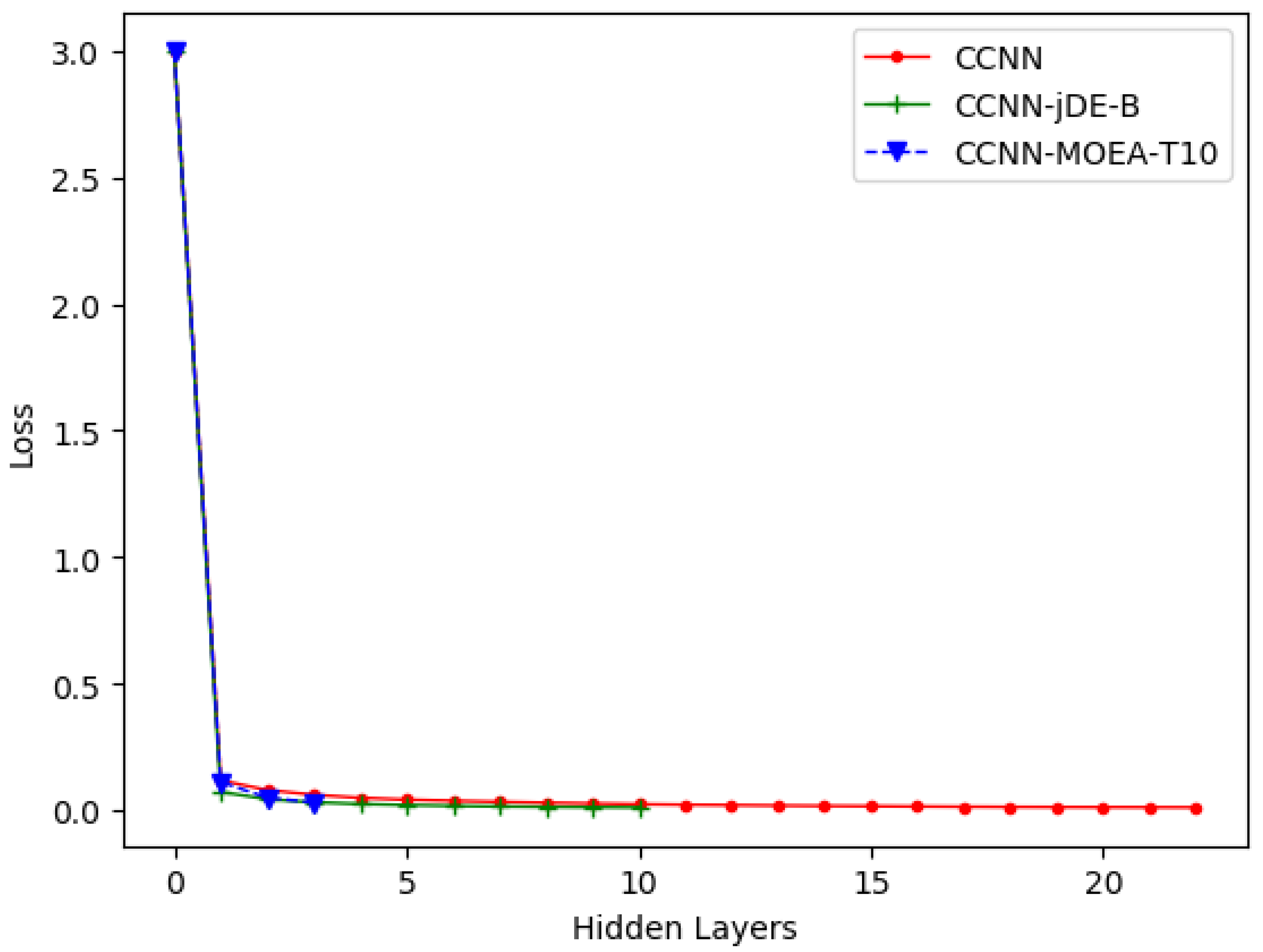

5.1. Two Spirals Classification Problem

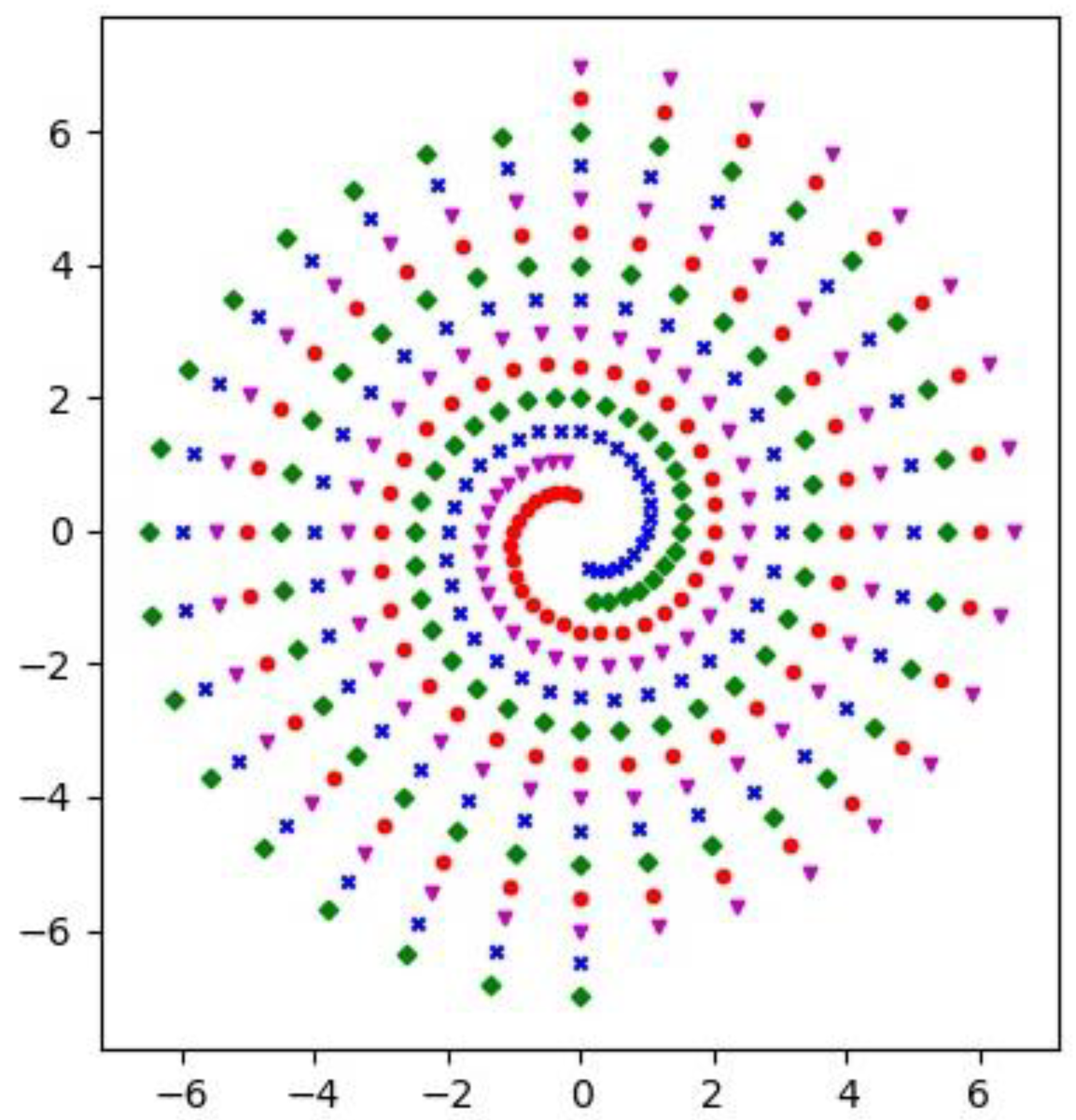

5.2. Four Spirals Classification Problem

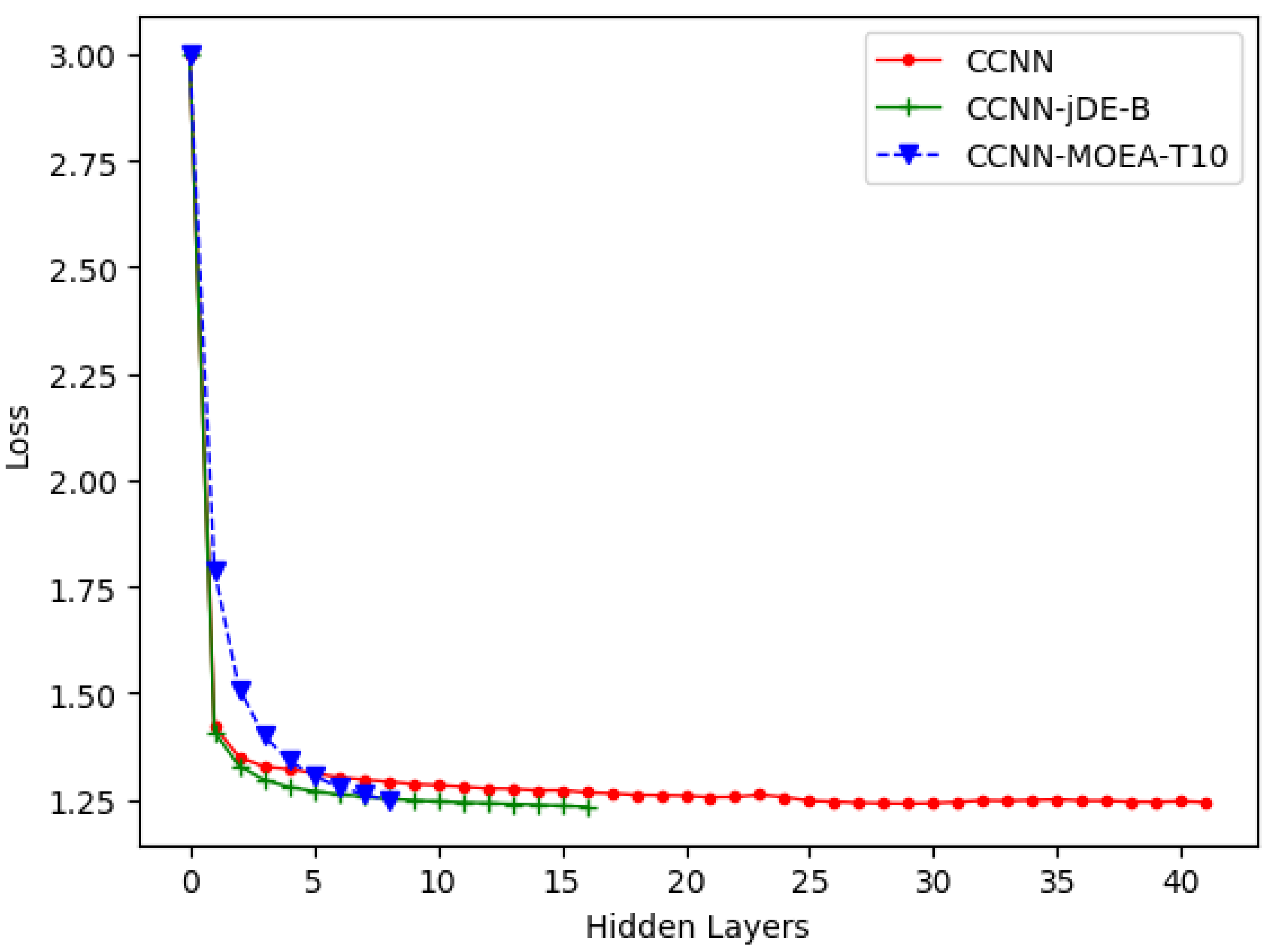

5.2.1. Experimental Content

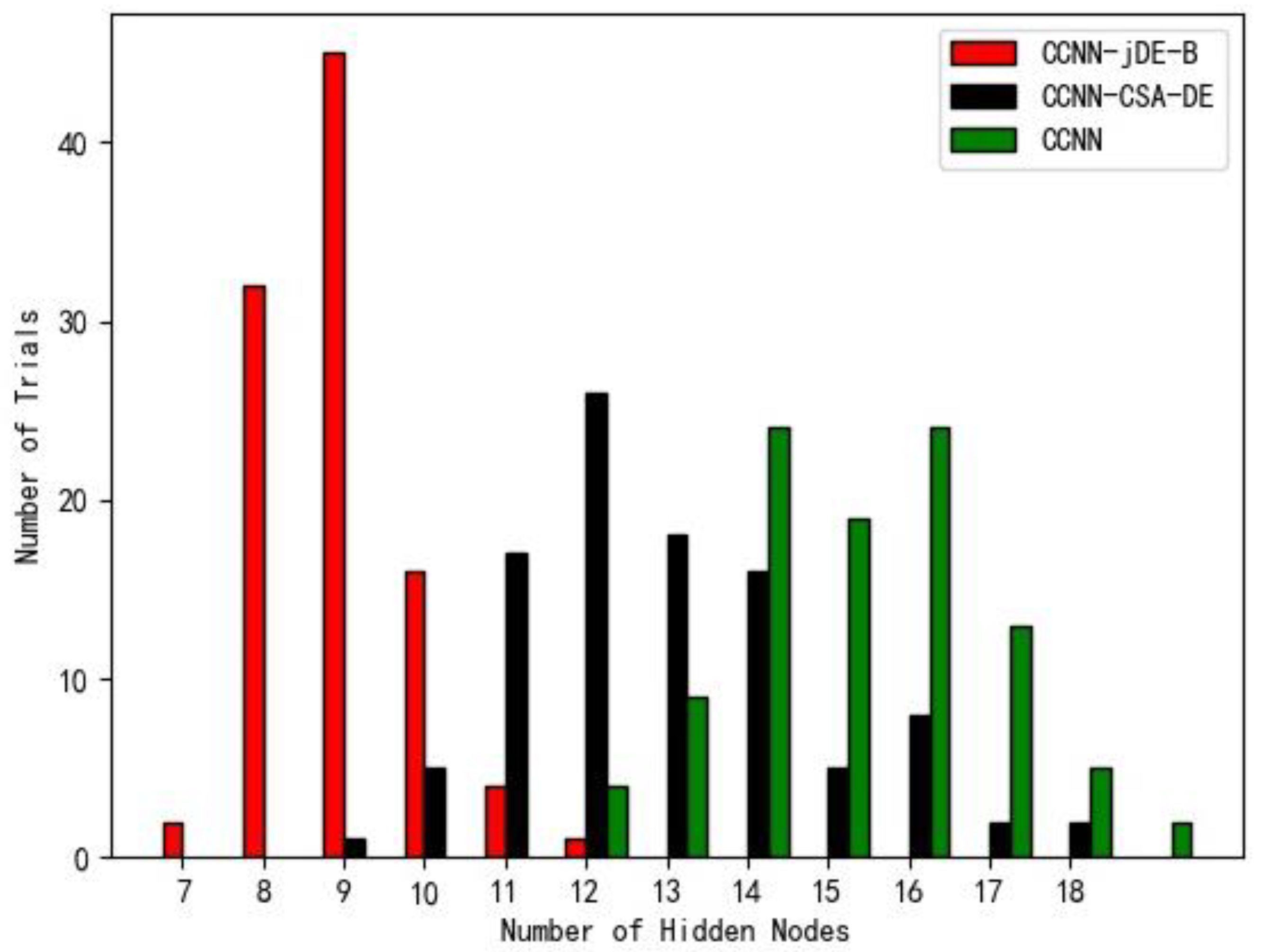

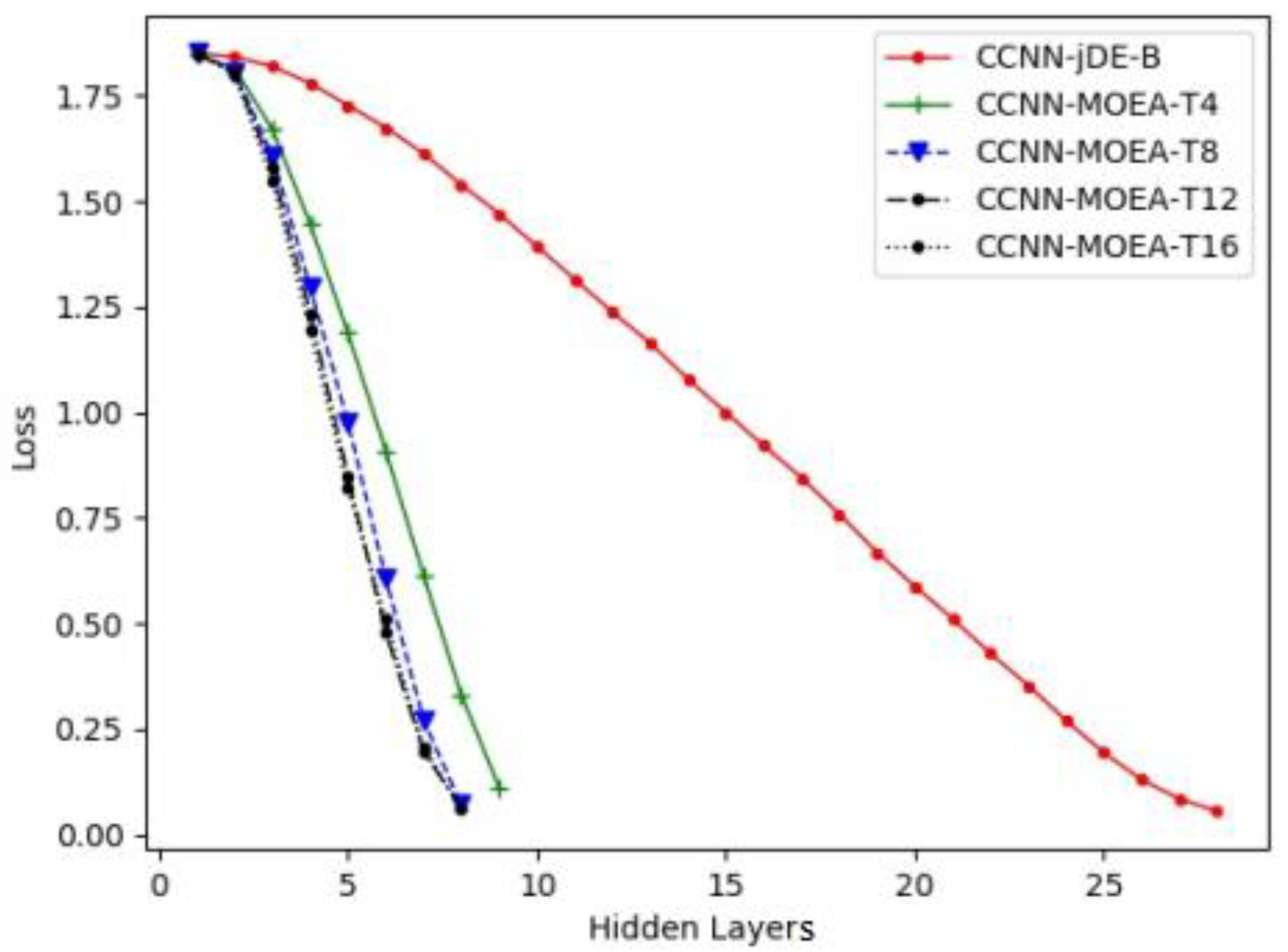

5.2.2. Comparison of Single-Objective Optimization and Multi-Objective Optimization of Hidden Units in Cascade Correlation Neural Networks

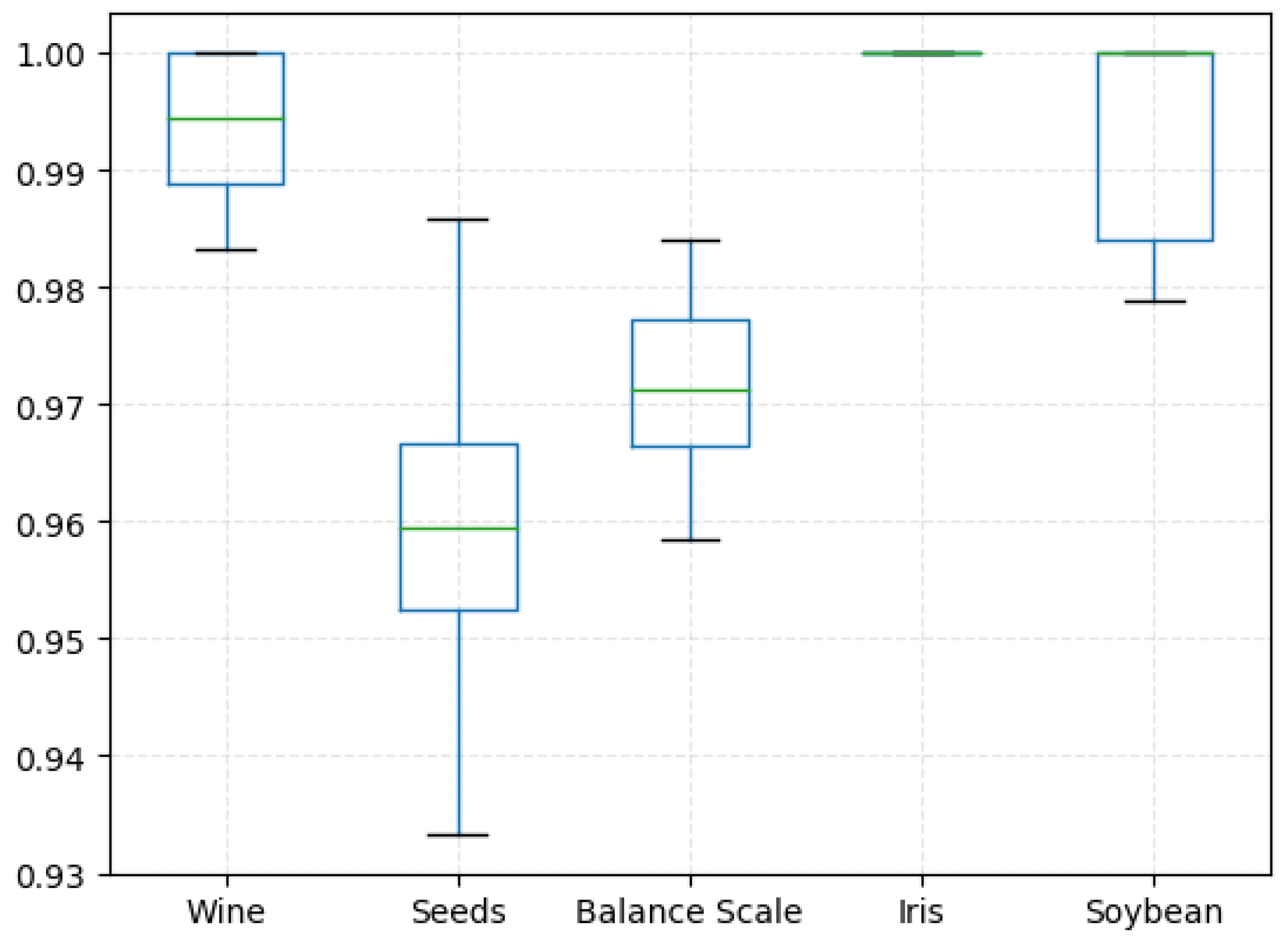

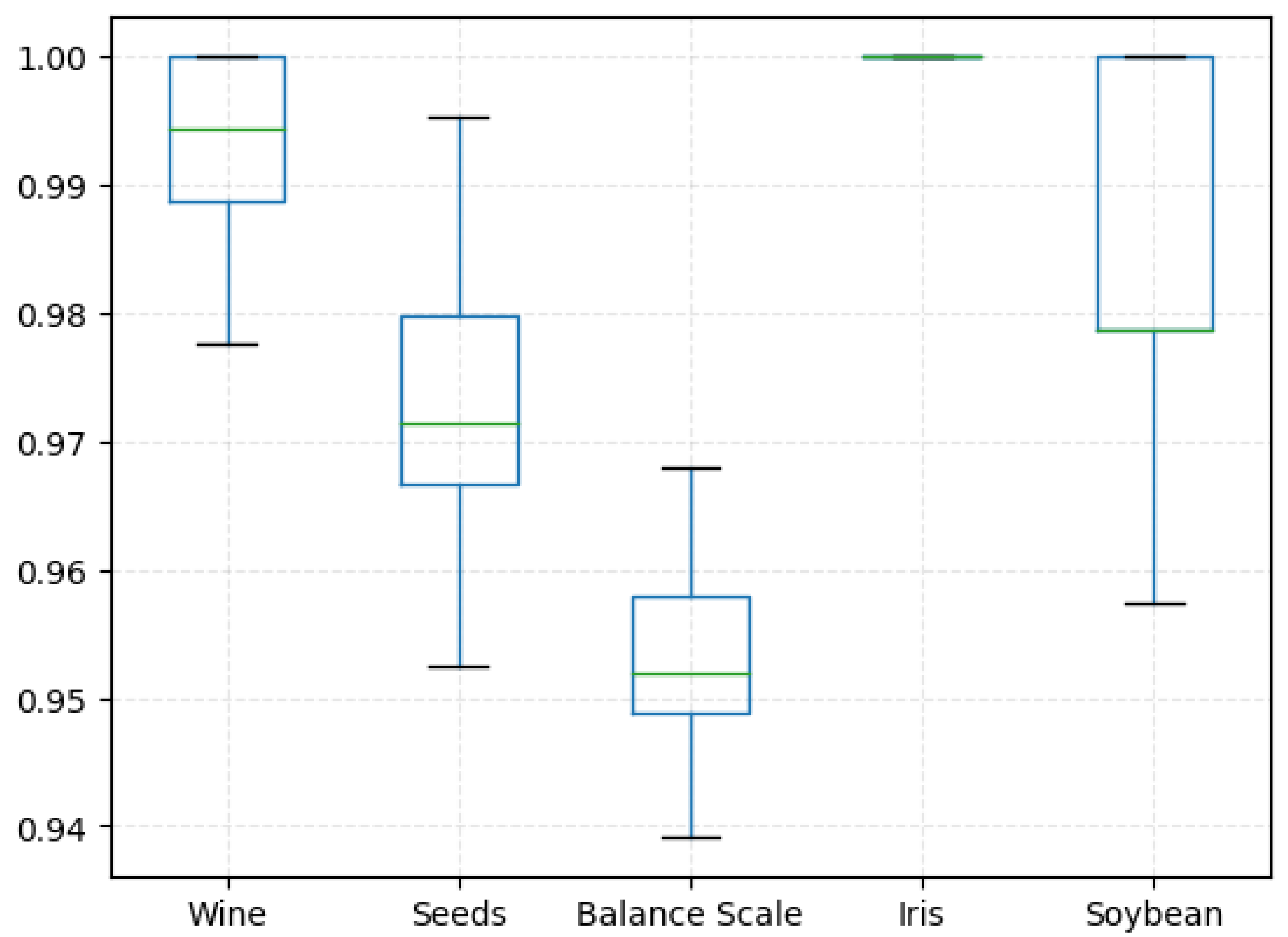

5.3. The UCI Database Experiments

5.4. The CIFAR-10 Classification Problem

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| | CCNN-jDE-B | CCNN | CCNN-CSA-DE | CCG-DLNN | GP-DLNN | Sibling/Descendant CCNN |
|---|---|---|---|---|---|---|
| Hidden Units | 8.92 | 15.2 | 12.9 | 22 | 70 | 14.6 |
| Hidden Layers | 8.92 | 15.2 | 12.9 | 2 | 3 | 7.3 |
| Accuracy | 100% | 100% | 100% | 99.5% | 92.23% | 100% |

| Algorithm | | Hidden Units | Hidden Layers | Accuracy |
|---|---|---|---|---|
| CCNN | | 39.5 | 39.5 | 100% |
| CCNN-jDE-B | | 27.52 | 27.52 | 100% |
| CCNN-MOEA-T | m = 4 | 36.8 | 9.2 | 100% |
| CCNN-MOEA-T | m = 8 | 62.72 | 7.84 | 100% |
| CCNN-MOEA-T | m = 12 | 92.16 | 7.68 | 100% |
| CCNN-MOEA-T | m = 16 | 122.24 | 7.64 | 100% |
| Sibling/Descendant CCNN | = 1.0 | 39.2 | 28.2 | 100% |
| Sibling/Descendant CCNN | = 0.95 | 43.3 | 23.8 | 100% |
| Sibling/Descendant CCNN | = 0.9 | 39.9 | 21.2 | 100% |
| Sibling/Descendant CCNN | = 0.8 | 40.9 | 14.2 | 100% |
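A quick arithmetic check on the CCNN-MOEA-T rows of the table above: dividing the average number of hidden units by the average number of hidden layers gives exactly m in every row. The snippet below only reproduces that division with the values copied from the table. Reading the result as "each hidden layer holds m units" is an inference from the numbers, not something the table itself states.

```python
# m -> (average hidden units, average hidden layers), copied from the table
moea_t_rows = {
    4: (36.8, 9.2),
    8: (62.72, 7.84),
    12: (92.16, 7.68),
    16: (122.24, 7.64),
}

# Ratio of units to layers for each setting of m
units_per_layer = {m: units / layers for m, (units, layers) in moea_t_rows.items()}

for m, ratio in units_per_layer.items():
    print(f"m = {m:2d}: {ratio:.2f} hidden units per layer")
```

The same rows also show the trade-off visible in the table: as m grows, the network gets slightly shallower (9.2 down to 7.64 layers on average) while the total number of hidden units rises.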

| Dataset | Sample Size | Number of Features | Number of Classes |
|---|---|---|---|
| Wine | 178 | 13 | 3 |
| Seeds | 210 | 7 | 3 |
| Balance Scale | 625 | 4 | 3 |
| Iris | 150 | 4 | 3 |
| Soybean | 47 | 35 | 4 |

| Dataset | Maximum Accuracy | Minimum Accuracy | Average Accuracy | Average Number of Hidden Units | Average Number of Hidden Layers |
|---|---|---|---|---|---|
| Wine | 100% | 98.31% | 99.37% | 1 | 1 |
| Seeds | 98.57% | 93.33% | 95.90% | 4.9 | 4.9 |
| Balance Scale | 98.40% | 95.84% | 97.12% | 8.08 | 8.08 |
| Iris | 100% | 98.66% | 99.95% | 1 | 1 |
| Soybean | 100% | 93.61% | 99.23% | 4.24 | 4.24 |

| Dataset | Maximum Accuracy | Minimum Accuracy | Average Accuracy | Average Number of Hidden Units | Average Number of Hidden Layers |
|---|---|---|---|---|---|
| Wine | 100% | 97.75% | 99.29% | 3 | 1 |
| Seeds | 99.52% | 95.23% | 97.22% | 3.42 | 1.14 |
| Balance Scale | 96.80% | 93.92% | 95.31% | 17.16 | 5.72 |
| Iris | 100% | 98.00% | 99.74% | 3.42 | 1.14 |
| Soybean | 100% | 93.61% | 98.12% | 10.96 | 2.74 |

| | LeNet-5 | CCNN | CCNN-jDE-B | CCNN-MOEA-T |
|---|---|---|---|---|
| Number of hidden units in the connection layer | 204 | 40 | 15 | 70 |
| Number of connected layers | 2 | 40 | 15 | 7 |
| Training set accuracy | 60.3% | 60.7% | 60.9% | 60.8% |
| Test set accuracy | 58.1% | 57.1% | 57.8% | 56.7% |

| | AlexNet | CCNN | CCNN-jDE-B | CCNN-MOEA-T |
|---|---|---|---|---|
| Number of hidden units in the connection layer | 12,288 | 21 | 9 | 20 |
| Number of connected layers | 3 | 21 | 9 | 2 |
| Training set accuracy | 93.2% | 99.9% | 99.9% | 99.7% |
| Test set accuracy | 78.8% | 77.4% | 77.9% | 76.7% |

| | AlexNet | CCNN | CCNN-jDE-B | CCNN-MOEA-T10 |
|---|---|---|---|---|
| Number of hidden units in the connection layer | 12,288 | 5 | 2 | 10 |
| Number of connected layers | 3 | 5 | 2 | 1 |
| Training set accuracy | 100% | 100% | 100% | 100% |
| Test set accuracy | 85.6% | 82.6% | 83.1% | 81.2% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Deng, J.; Li, Q.; Wei, W. Improved Cascade Correlation Neural Network Model Based on Group Intelligence Optimization Algorithm. Axioms 2023, 12, 164. https://doi.org/10.3390/axioms12020164
