1. Introduction

The contemporary Information Society (InSoc) is characterized by massive informatization and the penetration of digital technologies into all areas. This is described in [1] as “the most recent long wave of humanity’s socioeconomic evolution”, with an emphasis on the fact that the current digital age “focuses on algorithms that automate the conversion of data into actionable knowledge”. The massive globalization of processes leads to increased activity in the Internet space, which affects the efficiency of the data network [2] due to the increased access to remote resources and use of cloud data centers [3], data sharing in virtual environments [4], the Internet of Things (IoT), including the sensor collection of personal data [5], and others. For example, the previously cited article confirms that today’s advanced sensor technologies “generate a large amount of valuable data” for different applications, such as “health care, elderly protection, human activity abnormal detection, and surveillance”. One result of technologies in the digital space is the dissemination of personal data (freely or unknowingly), which raises a serious question concerning the privacy of users and the reliable protection of their personal data. This requires that, when developing environments for remote multiple access, organizational and technical measures to protect the data provided to users are adopted. A basic requirement should be countermeasures against the illegal distribution of user data and their use for purposes other than those announced, including the introduction of strict rules for authorization and authentication [6]. One direction for regulating the access to and use of objects and data is discussed in [7], where it is emphasized that building systems with multiple services increases the security impact when compared with the monolithic style. In order to answer important security questions in complex environments, that work performs a systematic review of the main challenges when using mechanisms and technologies for authentication and authorization in micro-services.

The purpose of this article is to present a point of view on the technological organization of personal data protection processes in an example environment (administrative system) that uses cloud services and data centers and applies the requirements of the CIA (Confidentiality, Integrity, Availability) triad. The requirements of this triad and their implementation in various technological solutions have been widely discussed. For example, a holistic study of the shortcomings of existing technological solutions for the IoT and cyber physical systems (CPS) is presented in [8], and a solution involving blockchain technology is proposed with an analysis of the similarities and differences. Another study “on the improvement of CIA triad to reduce the risk on online banking system” is presented in [9].

Cloud computing allows us to take advantage of the leasing of infrastructure, software, and platforms offered as cloud services (IaaS, SaaS, PaaS). In this way, the costs of maintaining and administering one’s own assets are reduced. For the user, the “cloud” is a virtual environment for data processing and storage. The services offered provide various cloud capabilities, requiring the proper pre-allocation of resources to deal with congestion, resource loss, load balancing, Quality of Service (QoS) violation, migration of virtual machines (VMs), etc. A primary goal of the cloud is to correctly map VMs to physical machines (PMs) so that the PMs can be effectively used. In this respect, an extensive survey of cloud resource management schemes is presented in [10] in order to identify the main challenges and point out possible future research directions. Another study [11] discusses how cloud service reliability should be further evaluated in specific conditions and proposes an approach based on combining cloud service reliability indicators to obtain an effective evaluation and improve data security. Despite cloud service providers’ claims of good information security at the platform level, doubts have been expressed about the protection of personal data. To overcome possible problems, a patent has been registered to define a “Data Protection as a Service” (DPaaS) paradigm for generating dynamic updates of data protection policy using a machine-learning model and comparisons with previous instructions [12].

When researching processes developing in a computer environment, as well as for organizing structures, various possibilities are applied, such as benchmark and synthetic workload; monitoring through hardware, software, and combined means; computer modeling (abstract, functional, analytical, simulation, empirical); as well as statistical analysis of empirical data. Each of these approaches has its advantages and peculiarities, and the use of each of them depends on the researched object and the set tasks.

One of the applied approaches when researching processes in various systems and applications is modeling based on an appropriate apparatus and instrumentation. For example, simulation modeling is applied in [13] to study the probabilistic behavior and timing characteristics of interdependent tasks of arbitrary durations. The goal is to estimate the duration of the project and the possible risks of untimely completion. Another approach is the development of a mathematical description of processes, as is done in [14] to study the possibilities of minimizing power losses in a distribution transformer and evaluating energy efficiency. The proposed mathematical model describes the relationship between all the parameters of the transformer using the direct global iterative algorithm technique.

It is known that processes developing in systems most often have a probabilistic nature, which determines the effectiveness of the stochastic approach to research and indicates Markov processes and, in particular, Markov chains (MC) to be a suitable apparatus. An example of this is the application of MC to study technological limitations in stochastic normalizing flows presented in [15]. Another application of MC is discussed in [16], where the approach is combined with the Monte Carlo method for assessing the characteristics of probability distributions and a review of the “methods for assessing the reliability of the simulation effort, with an emphasis on those most useful in practically relevant settings” is performed. In addition, the strengths and weaknesses of these methods are discussed.

The main goal is to carry out a preliminary study of the constructed structural objects of the designed administrative system and the processes supported by them by applying the apparatus of Markov chains (MC). The choice of tool is determined by the stochastic nature of the processes in an essentially discrete hardware (computer) environment. To conduct the experiments, the author’s own programming environment, developed in the APL2 language [17], is used, and a graphic interpretation of the evaluations is additionally made. An approach using MC to conduct model research is applied in [11] to study the reliability of network services as well as in [18] to investigate basic performance and optimization measures in resource planning in the cloud and the IoT in order to improve performance and QoS. In the latter work, an efficient algorithm for infinite-time task scheduling in IaaS using MC with continuous parameters to search for an optimal solution is proposed, and a prototype is realized based on the designed model. A comparison of this prototype with a group of working models confirms the usefulness of the project.

This article is organized as follows. In the next section, an analytical representation of the researched object is presented using a Data Flow Diagram (DFD) and a mathematical description of the applied approach based on the Markov Chain (MC). The third section is devoted to the implementation of the model experiments, and a discussion of the experimental results is presented in Section 4.

2. Materials and Methods

The right to a private life and its inviolability (“right to privacy”) are directly related to the procedures for Personal Data Protection (PDP), which is an internationally recognized right, based on the understanding that personal data are the property of the person (Data Subject). As stated, the expansion of network communications and the growing possibilities of remote access to distributed information resources impose increasingly strict requirements on the applied information security policies. The globalization of public processes, the socialization of communications, and the use of cloud services pose challenges to ensuring reliable PDP. Cloud service providers emphasize the advantages of the cloud, mainly those related to cost reduction, but the process of protecting information is not one of the main objects of discussion. Possible problems when providing personal data determine a high rate of distrust among users toward the digitalization of services (over 70%). In particular, for cloud services, this is associated with basic features such as multi-tenancy, storing copies of data in different places in the virtual environment, applying common and standard security approaches, etc. In addition, a study by the Computer Security Institute shows that a fairly high share (more than 55%) of compromised information security is due to accidental errors by staff, which necessitates paying attention to internal procedures for countering potential threats when processing personal data.

The classical organization of computer data processing takes place in an environment with a discrete structure, although the supported processes are probabilistic in nature. This allows both deterministic and probabilistic means to be used for the preliminary formalization of processes. One possibility for a deterministic description involves the State Transition Network (STN), which allows us to study the possible developments of the processes by analyzing the paths “from beginning to end”. In this direction is also the application of a Data Flow Diagram (DFD) for the formal description of the movement of data flows in a given structure, with an indication of the important places for their communication with other processes and objects. This has been applied to formalize the structural organization when conducting research, taking into account the peculiarities and requirements of the PDP procedures.
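The “from beginning to end” path analysis of an STN mentioned above can be sketched as a simple enumeration of paths in a directed graph. The following is only an illustrative sketch: the state names and transitions are hypothetical placeholders and are not taken from the DFDs of this article.

```python
from typing import Dict, List


def enumerate_paths(transitions: Dict[str, List[str]], start: str, end: str) -> List[List[str]]:
    """Return every simple (cycle-free) path from `start` to `end`."""
    paths: List[List[str]] = []

    def walk(state: str, path: List[str]) -> None:
        if state == end:
            paths.append(path)
            return
        for nxt in transitions.get(state, []):
            if nxt not in path:  # skip states already visited on this path
                walk(nxt, path + [nxt])

    walk(start, [start])
    return paths


# Hypothetical state transition network (names are assumptions for illustration).
stn = {
    "request": ["authenticate"],
    "authenticate": ["select", "reject"],
    "select": ["read", "update"],
    "read": ["done"],
    "update": ["done"],
    "reject": ["done"],
}

all_paths = enumerate_paths(stn, "request", "done")
for p in all_paths:
    print(" -> ".join(p))
```

Restricting the search to simple paths keeps the enumeration finite even when the network contains cycles, at the cost of ignoring repeated visits to the same state.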

The application of the probabilistic (stochastic) approach is often based on Markov processes, and when modeling computer data processing, the apparatus of Markov chains is suitable because they describe the probabilistic transitions between discrete states at determinate moments of time. The preliminary formalization in this case requires the definition of a finite set of states $S = \{s_1, \ldots, s_n\}$ for the studied process and a matrix of transition probabilities between those states $p_{ij} = P(s_i \to s_j)$. Stochastic analysis examines the sequence of states $\langle S(0), S(1), \ldots, S(k) \rangle$ in which the MC falls, for which it is necessary to define a vector of the initial probabilities for the formation of the initial state $S(0) = S(k = 0)$. It is usually assumed that the process starts from the first state $s_1 \in S$, i.e., $P_0 = \{1, 0, \ldots, 0\}$. Starting from the initial state, for each successive step $k = 1, 2, \ldots$ of the process development, the conditional probability of a transition from the current state $s_i$ to the next state $s_j$ can be determined by $p_{ij}(k) = P[S(k) = s_j \mid S(k-1) = s_i]$ based on the full probability Formula (1):

$$p_j(k) = \sum_{i=1}^{n} p_i(k-1)\, p_{ij}, \quad j = 1, \ldots, n, \tag{1}$$

which can also be used to calculate the final probabilities $P(k \to \infty)$ of falling into a certain state by

$$p_j = \sum_{i=1}^{n} p_i\, p_{ij}, \quad j = 1, \ldots, n; \qquad \sum_{j=1}^{n} p_j = 1.$$

To investigate the processes in the proposed technological environment, stochastic Markov models are designed, and for their study, the author’s program function “MARKOV”, developed in the APL2 language environment [17], is used. This allows us to determine the vector of the state probabilities $P(k) = \{p_1(k), \ldots, p_n(k)\}$ for successive steps, the number of which is set by the user. After starting, it requires the definition of the main characteristics of the Markov chain: N, the number of states; P[I,J], the elements of the matrix of transition probabilities; and PO [1 ÷ N], the elements of the vector of initial probabilities. A complete study can be organized through the additional program functions “PATHS” and “ESTIMATES” [19], which, together with “MARKOV”, create a common program space for conducting analytical experiments in the APL2 environment.
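For readers without access to APL2, the step-by-step calculation of the state probability vector can be sketched in Python. This is not the author’s “MARKOV” function. It is a minimal illustration of the same idea, assuming a time-homogeneous chain, and the function name `markov_steps` and the three-state transition matrix are hypothetical.

```python
from typing import List


def markov_steps(P: List[List[float]], p0: List[float], steps: int) -> List[List[float]]:
    """Return [P(0), P(1), ..., P(steps)] for transition matrix P and initial vector p0."""
    n = len(p0)
    # Basic validation: P must be n x n and each row must sum to 1.
    if len(P) != n or any(len(row) != n for row in P):
        raise ValueError("P must be an n x n matrix matching the length of p0")
    if any(abs(sum(row) - 1.0) > 1e-9 for row in P):
        raise ValueError("each row of P must sum to 1")

    history = [list(p0)]
    current = list(p0)
    for _ in range(steps):
        # Total probability rule: p_j(k) = sum_i p_i(k-1) * p_ij
        current = [sum(current[i] * P[i][j] for i in range(n)) for j in range(n)]
        history.append(current)
    return history


# Hypothetical 3-state chain (values chosen only for illustration).
P = [
    [0.50, 0.50, 0.00],
    [0.25, 0.50, 0.25],
    [0.00, 0.50, 0.50],
]
p0 = [1.0, 0.0, 0.0]  # the process starts in the first state

history = markov_steps(P, p0, 20)
for k, vector in enumerate(history[:4]):
    print(k, [round(p, 3) for p in vector])
```

With these illustrative inputs, the first two steps give P(1) = (0.5, 0.5, 0) and P(2) = (0.375, 0.5, 0.125), and the vectors approach the final probabilities as the number of steps grows.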

3. Preliminary Formalization

The problems of data protection related to the growing threats of illegal access and incorrect use are the subject of different documents. A basic example here is the set of rules of conduct established in the USA to ensure the necessary protection of information and corporate resources, which are known as the SOX rules, as consolidated in the Sarbanes-Oxley Act of 2002. A study of the impact of these rules on the possible risk in resource management is presented in [20], with an analysis of the situations before and after the adoption of these rules. Overall, the article highlights the positive impact of risk-reducing rules on resource management and increasing factor productivity and incentive compensation.

In reality, it should be noted that the security of information resources requires the provision of adequate and functional security policies, which place specific requirements on the Digital Rights Management System (DRMS). The main trends are related to the inclusion of important components aimed at protecting personal data, for example: ✓ cryptographic algorithms for information encryption; ✓ cryptographic key management strategies; ✓ access control methods; ✓ methods and means of user identification and authentication; and ✓ information content management with provenance verification and data copy control. At the heart of any DRMS are two processes, authentication and authorization, which are used to prove that the specific information is used by the individual who has pre-set rights to access it, allows all his actions to be tracked and checked, and controls what means of access is used. In this regard, all the currently used technologies for authentication, especially biometrics, are important for the reliable management of access to information resources.

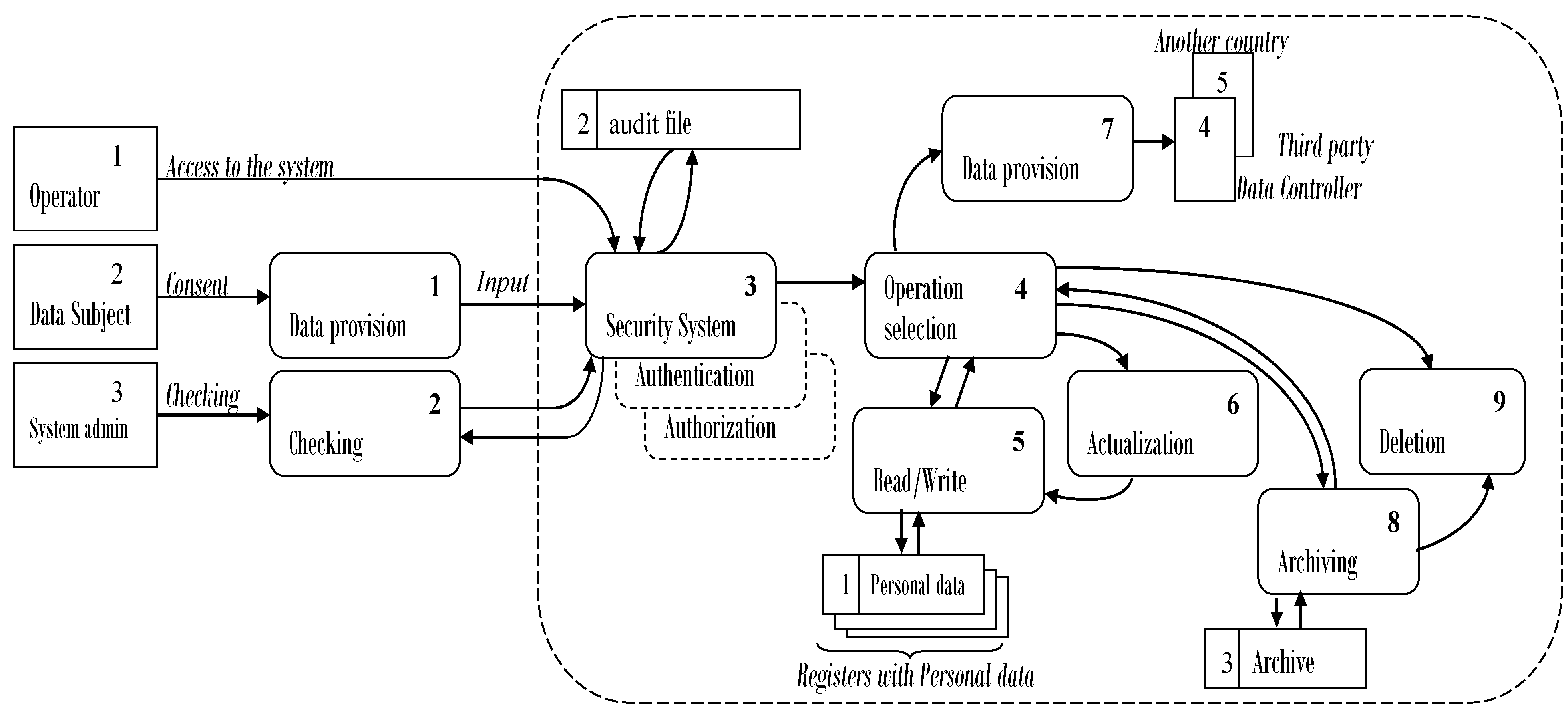

According to regulatory documents, PDP covers any action related to personal data: collection, storage, updating, correction, provision to a third party, transfer to another country, archiving, destruction, etc. All these processes must be carried out under strict organizational and technological measures to protect the means of storing personal data (Personal Data Registers [PDR]). The formalization of the classical version of personal data processing in a centralized corporate system is presented in Figure 1 by using a DFD with five external entities (source/receiver), nine basic information procedures, and three storage units. Part of the Information Security System (ISS) is the maintenance of a log (audit) file for access and activities carried out with the PDR.

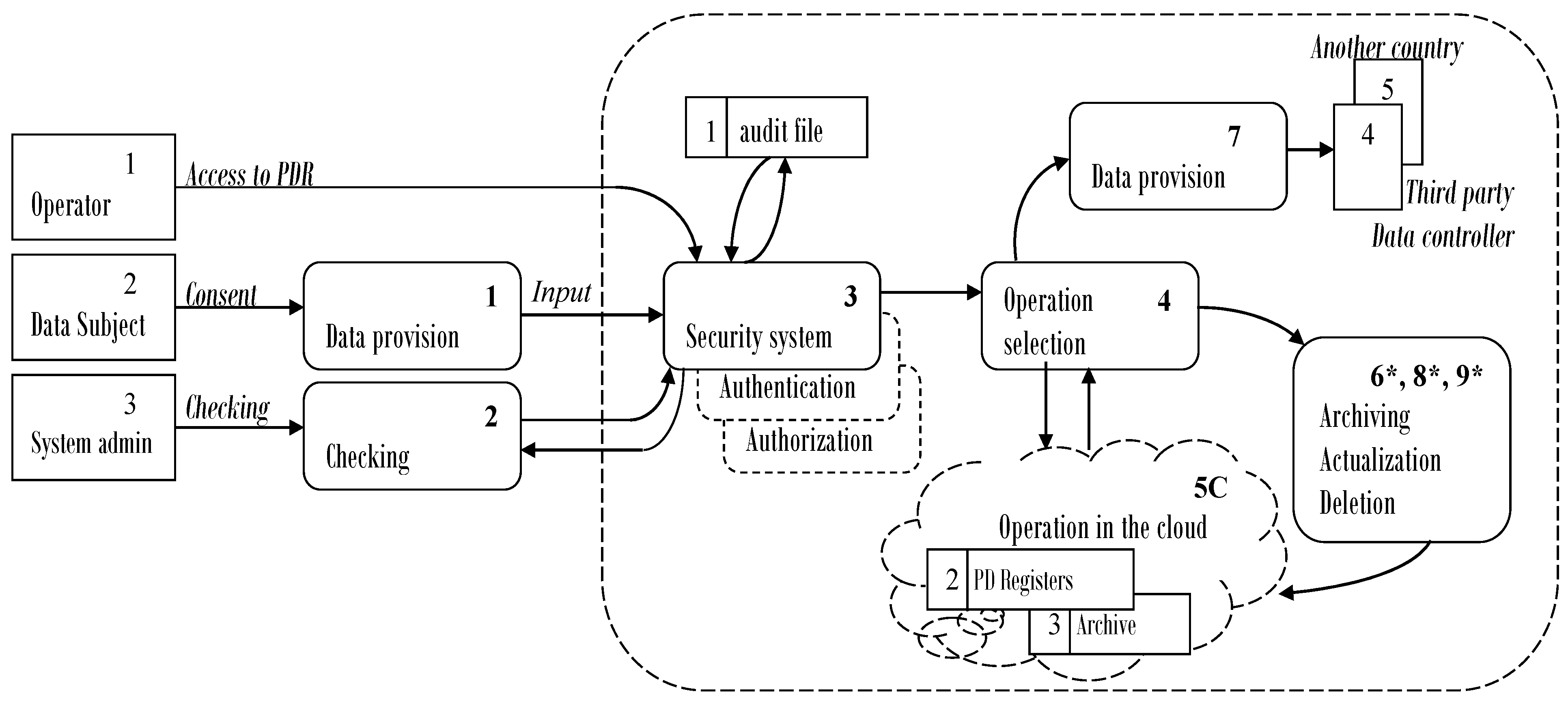

A modification to a centralized environment can be made by transferring certain activities, including the storage of personal profiles with personal data, to the cloud. This leads to the modification of the formalized description as well, introducing a generalized process “5C”, which unites the activities of archiving, updating, and deleting personal data with the maintenance of the stored arrays and profiles in data centers. The modified DFD model of the decentralized structure is shown in Figure 2. The introduction of the new general process requires actualizing the role of the processes that are transferred to the cloud, which is marked in the DFD by “*” (6 *, 8 *, 9 *). In addition, the new version requires the duplication of procedures for ensuring information protection (identification, registration, authentication, authorization) with partial transfer to the cloud.

4. Stochastic Model Investigation

To conduct the model investigation, two models are defined and solved using MC for the two formal DFD descriptions presented in the previous section: traditional and “cloud” PDP. It is assumed that any random process, regardless of the source of a submitted request, begins with access to the internal means of the ISS. The basis for this assumption is the nature of the PD provisioning procedures by the individual.

4.1. Analytical Investigation of Traditional PDP

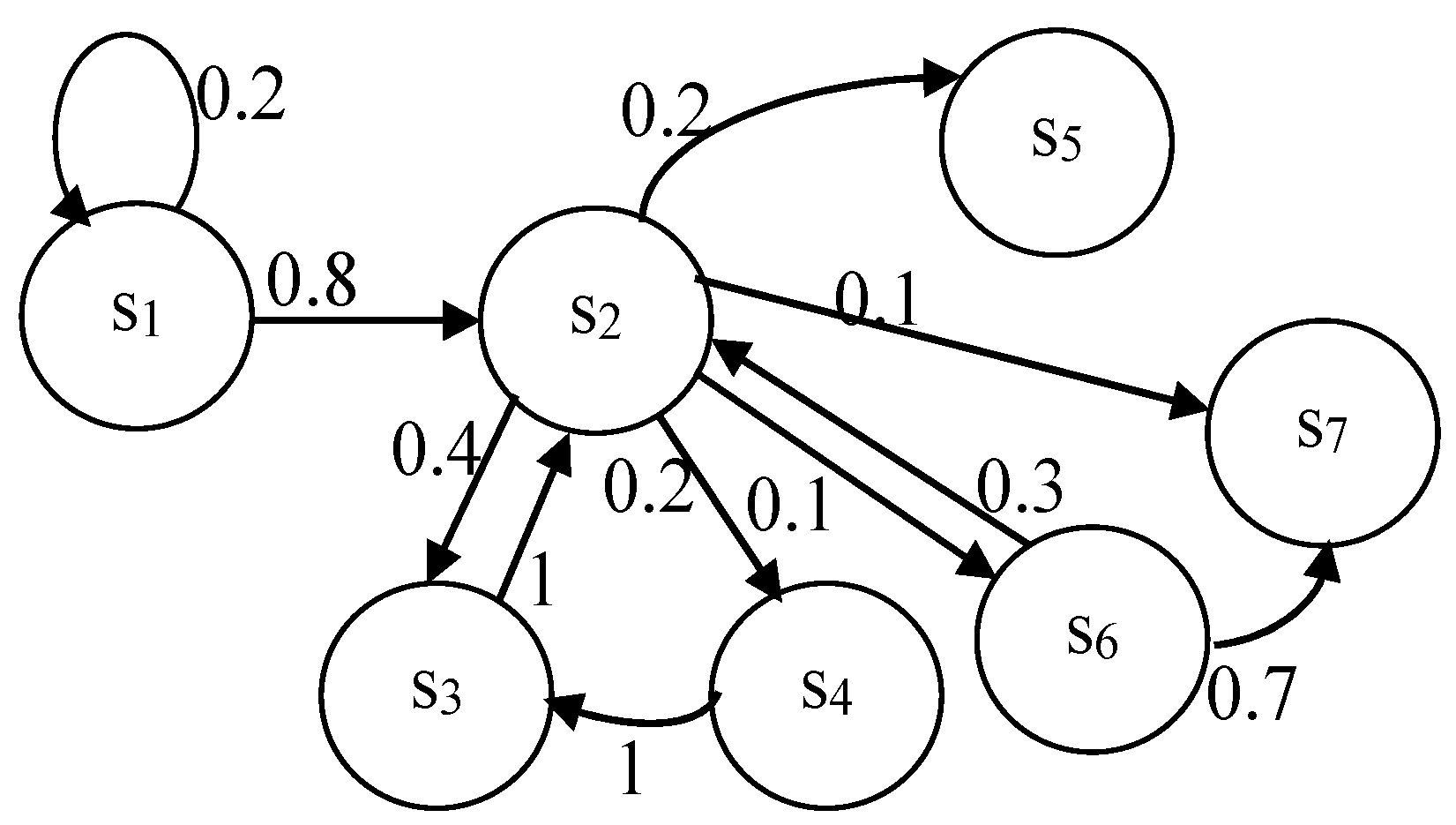

The Markov model of traditional PDP presented in Table 1 is defined on the basis of the assumptions made regarding the start of processes and the selection of transition probability values that are typical of real traditional PDP. The visual presentation of the MC graph of the states is shown in Figure 3 with the following states:

s1—verification of the legitimacy of the request through authentication and authorization;

s2—selection of operation when access is allowed from the internal ISS;

s3—write/read to PDR;

s4—PD update in registry;

s5—provision of PD to a third party, another Data Controller, or sending abroad;

s6—archiving of PD in the presence of a legal requirement for this;

s7—destruction of PD after fulfilling the purpose for which they were collected.

The analytical definition of a model as a system of probabilities is as follows:

One possible solution to the presented system of probability equations permits us to calculate the values for all the final probabilities as follows:

This permits us to construct Equation (2) for the calculation of the value of the final probability p2.

After solving Equation (2) and substituting it into the expressions, the following estimates are formed for the final probabilities of falling into each of the states:

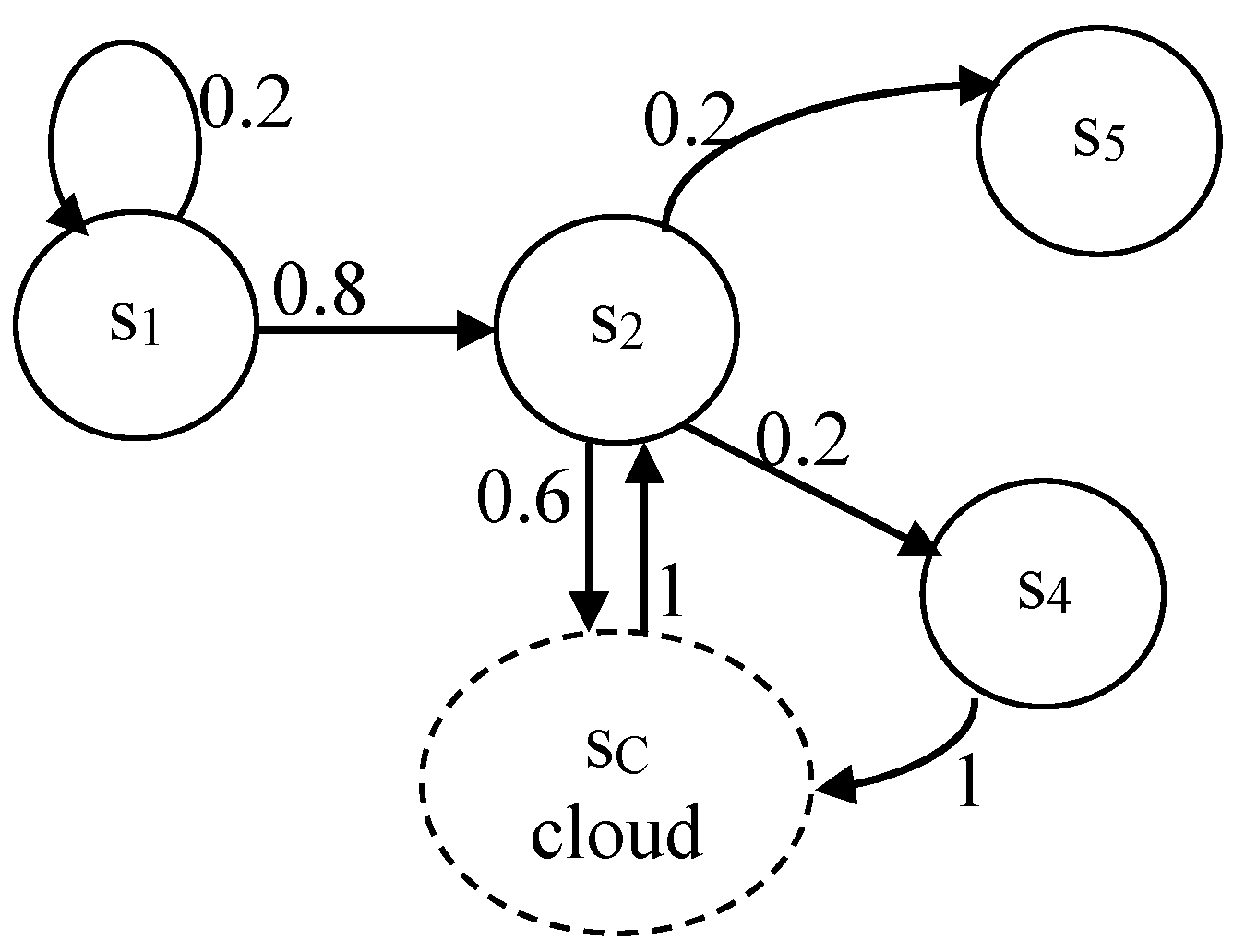

4.2. Analytical Investigation of “Cloud” PDP

The Markov model for “cloud” PDP (Figure 4) is a modification of the previous one and corresponds to the processes from the DFD (Figure 2). A generalized state sC is created, replacing states s3, s6, and s7, whose activities are taken over by cloud services.

As updating is a process involving incoming new (or corrected) PDs for an individual and receiving them from a Data Controller operator (employee), the s4 state activity cannot be migrated to the cloud. The same applies to the process of providing PD, as it is related to certain regulatory requirements. The analytical Markov model notation for this situation is as follows:

After solving the system of probabilistic equations, the following estimates for the final probabilities are determined: p1 = 0.102; p2 = 0.408; p4 = 0.082; p5 = 0.082; and pC = 0.326.
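The solution of such a system of probabilistic equations can be sketched numerically. The code below is a minimal illustration, not the procedure used in this article: it solves the balance equations together with the normalization condition by Gauss-Jordan elimination. The transition matrix is hypothetical because the matrix of the “cloud” model is not reproduced here. Only the reported final probabilities are taken from the text, and they are checked to confirm that they sum to one within rounding.

```python
from typing import List


def stationary_distribution(P: List[List[float]]) -> List[float]:
    """Solve p_j = sum_i p_i * P[i][j] with sum_j p_j = 1 by Gauss-Jordan elimination."""
    n = len(P)
    # Row j encodes the balance equation: sum_i p_i * P[i][j] - p_j = 0.
    A = [[P[i][j] - (1.0 if i == j else 0.0) for i in range(n)] for j in range(n)]
    b = [0.0] * n
    # One balance equation is redundant; replace the last with the normalization.
    A[n - 1] = [1.0] * n
    b[n - 1] = 1.0

    for col in range(n):
        # Partial pivoting for numerical stability.
        pivot = max(range(col, n), key=lambda r: abs(A[r][col]))
        if abs(A[pivot][col]) < 1e-12:
            raise ValueError("no unique stationary distribution for this matrix")
        A[col], A[pivot] = A[pivot], A[col]
        b[col], b[pivot] = b[pivot], b[col]
        for r in range(n):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * c for a, c in zip(A[r], A[col])]
                b[r] -= factor * b[col]
    return [b[i] / A[i][i] for i in range(n)]


# Hypothetical transition matrix (NOT the matrix of the cloud PDP model).
P_example = [
    [0.50, 0.50, 0.00],
    [0.25, 0.50, 0.25],
    [0.00, 0.50, 0.50],
]
pi = stationary_distribution(P_example)
print([round(p, 3) for p in pi])

# Final probabilities reported in the text for the "cloud" model.
reported = {"p1": 0.102, "p2": 0.408, "p4": 0.082, "p5": 0.082, "pC": 0.326}
print(round(sum(reported.values()), 3))
```

For the hypothetical matrix, the solution is (0.25, 0.5, 0.25). Applying the same function to the actual transition matrix of a model would be one way to cross-check analytically derived estimates.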

4.3. Experimental Results Discussion

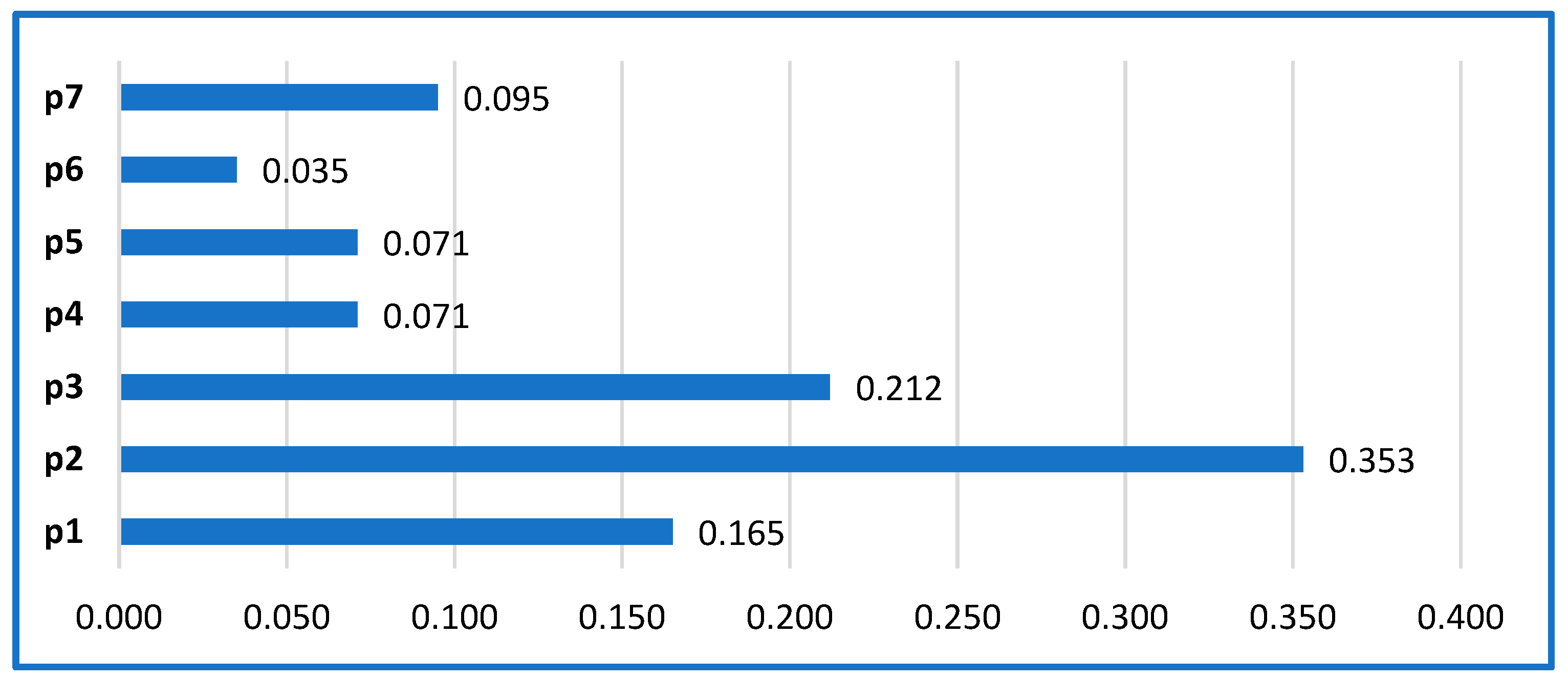

The diagram in Figure 5 presents a visual summarization of the obtained analytical results for model “A”, allowing for easy comparison of the probabilities of falling into the separate states. It can be seen that the load of the states related to the selection operations of relevant data processing activities and operation with the registry system for their storage is the greatest.

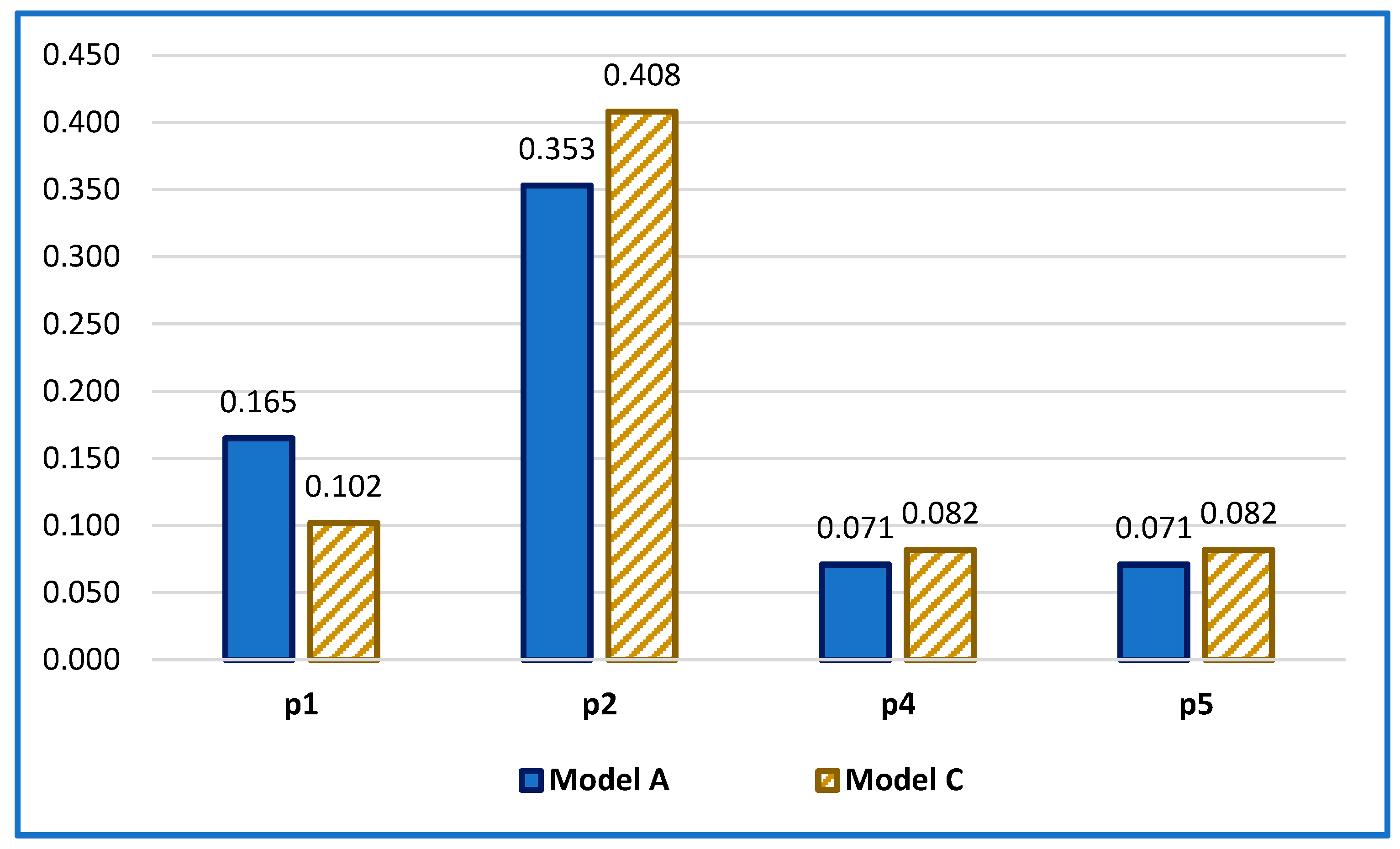

A joint visualization of the analytical results of the solutions for the two models is presented in Figure 6, where the probabilities of performing the corresponding activities in a steady state are presented. The comparative analysis shows non-significant differences in the marginal probability values for the two situations (the two models), although model “B” (the cloud option) has a certain advantage for the main PDP fulfillment activities related to the responsibilities of the Data Controller employees (Data Operators). This confirms the high importance of complying with legal requirements and ensuring strict internal rules in the relevant institution.

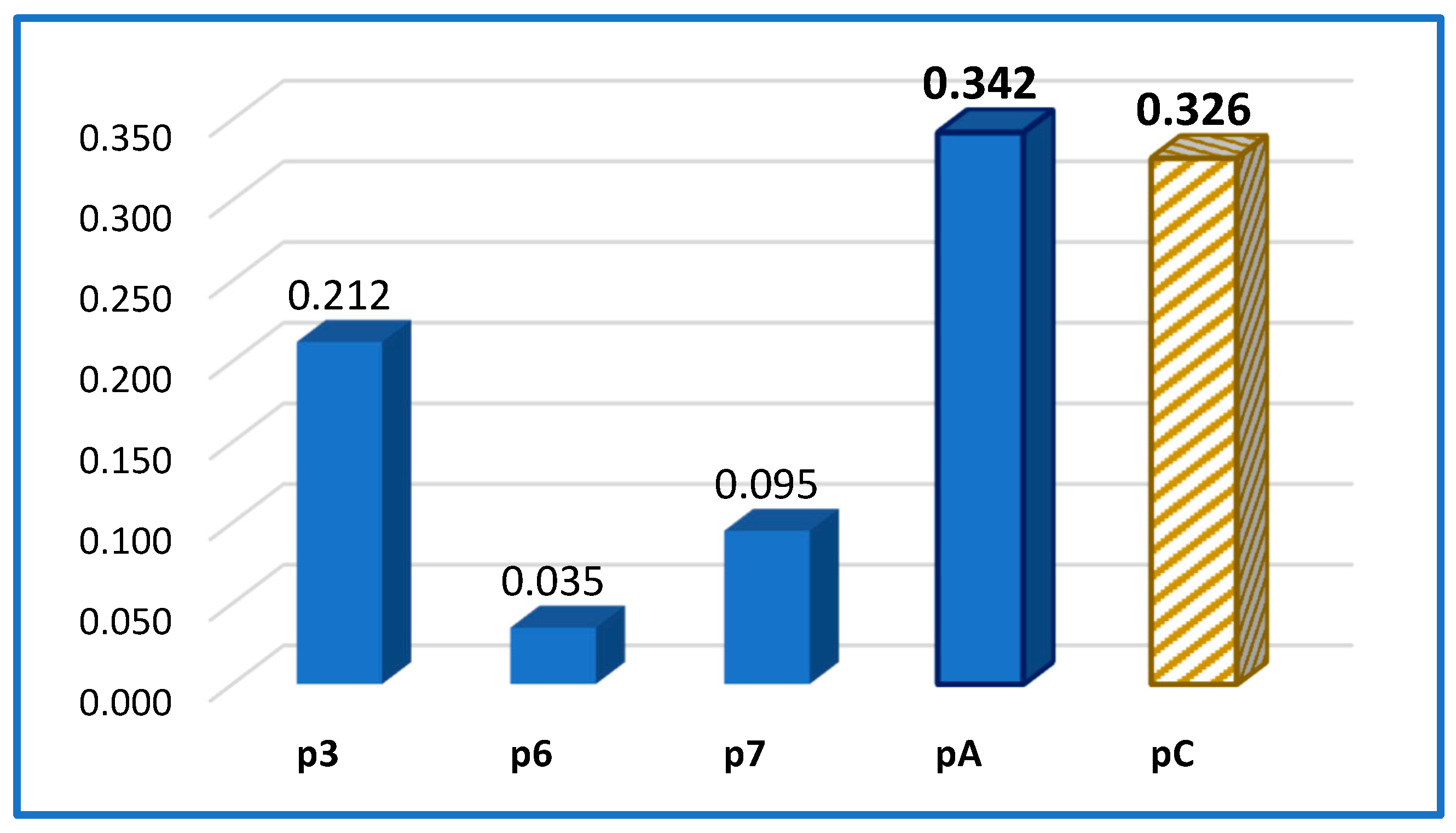

On the other hand, it should be emphasized that moving some PDP activities to the cloud has an effect, leading to some reduction in the level of employment in certain states (DFD processes). This can be seen from the joint presentation of the transferred activities in the two models, “A” and “C”, in Figure 7.

Although moving certain process activities (6 *, 8 *, and 9 * of the DFD in Figure 2) to the cloud eases the workload of ISS service operators, it does not change the responsibility of the Data Controller or the requirement to ensure internal rules for identification and access management. Because standard data protection mechanisms are traditionally applied in the cloud, the use of data centers and cloud services should only take place after providing serious guarantees for ensuring adequate data protection related to access management, authorization, authentication, maintenance of audit information, implementation of architectural requirements for the cloud platform, etc. In this sense, the patent from [12] can provide the necessary level of security when processing personal data in a distributed environment. One solution is to define authorization in depth and implement it at three levels: a high level for meta-level management of access to applications and resources; a middle level for data-level access control; and a low level to control functions with specific data.
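The three-level authorization described above can be illustrated with a short sketch. This is only one possible reading of the idea, under the assumption that each level is a simple lookup table. The class names, field names, users, and permissions are all hypothetical and do not describe an existing system or the patent in [12].

```python
from dataclasses import dataclass, field
from typing import Dict, Set, Tuple


@dataclass(frozen=True)
class AccessRequest:
    user: str
    application: str  # checked at the high (meta) level
    register: str     # checked at the middle (data) level
    operation: str    # checked at the low (function) level


@dataclass
class AccessPolicy:
    # All three tables are illustrative placeholders, not a real policy format.
    applications: Dict[str, Set[str]] = field(default_factory=dict)
    registers: Dict[str, Set[str]] = field(default_factory=dict)
    operations: Dict[Tuple[str, str], Set[str]] = field(default_factory=dict)


def authorize(request: AccessRequest, policy: AccessPolicy) -> Tuple[bool, str]:
    """Check the three levels in order; report the level at which access is denied."""
    if request.application not in policy.applications.get(request.user, set()):
        return False, "high"
    if request.register not in policy.registers.get(request.user, set()):
        return False, "middle"
    allowed_ops = policy.operations.get((request.user, request.register), set())
    if request.operation not in allowed_ops:
        return False, "low"
    return True, "granted"


# Hypothetical policy: one operator may read and update the PDR, nothing else.
policy = AccessPolicy(
    applications={"operator_1": {"pdp_admin"}},
    registers={"operator_1": {"PDR"}},
    operations={("operator_1", "PDR"): {"read", "update"}},
)

read_result = authorize(AccessRequest("operator_1", "pdp_admin", "PDR", "read"), policy)
destroy_result = authorize(AccessRequest("operator_1", "pdp_admin", "PDR", "destroy"), policy)
stranger_result = authorize(AccessRequest("guest", "pdp_admin", "PDR", "read"), policy)
print(read_result, destroy_result, stranger_result)
```

Returning the level at which a request fails makes each denial easy to record in an audit log, which is in line with the requirement to maintain audit information.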

5. Conclusions

The main purpose of this article is to present a point of view for transferring certain personal data processing activities to a cloud environment using data centers (data warehouses) and the virtual environment of the cloud for multiple communication. The main problem for discussion is the implementation of adequate procedures for information security in the organization of a heterogeneous environment for maintaining information resources. This article specifically discusses the organization of a system for ensuring the reliable protection of profiles with personal data. The relevance of this problem is confirmed by the continuous development of digital technologies, which creates challenges for personal privacy [21]. In practice, this is one stage of the overall development and investigation of the heterogeneous environment, where a stochastic approach is applied to further validate the effectiveness of the planned PDP procedures.

The main contribution is the formalization of personal data processing using the DFD apparatus and the analytical development of the presented stochastic models, allowing us to make a comparison of the features of the processes supported in the traditional and cloud versions. From the conducted model investigation and analysis of the obtained estimates in the case of stationary processes, it can be seen that regarding the obligations of the Data Controller, there is no significant difference between the relative weights of the two options. At the same time, both models maintain the importance of authorization and authentication as information security processes. This confirms the need to build a serious ISS with specific measures to protect PDR, which must meet clearly defined rules and responsibilities when working with cloud resources. Such a cloud platform should provide the following capabilities: ✓ integrity of stored user data; ✓ preventing unauthorized access to personal data; ✓ maintaining complete information about every attempt to access personal data; ✓ possibility of easy verification by the user as to whether the PDP policy is followed; and ✓ possibility of efficient and secure processing of sensitive personal data.

The obtained results of this research provide an idea of the relevance of the processes in the two selected implementation options, and the goal is to determine the effectiveness of the application of cloud services. They can be used in the selection of specific techniques and means, mainly in the organization of the security of access to personal data, as well as in the implementation of heterogeneous systems, such as proposed in [22].

The research carried out allows for an extension in several directions defining future research, for example, an extension of the model study by applying deterministic means such as graph theory [23] and the Petri nets apparatus [22], as well as possibly simulation modeling of the main work processes with personal data, mainly in cloud services, with statistical analysis of the accumulated data from experiments [24].