1. Introduction

When auxiliary variables are not available, autoregressive (AR) models are widely used to model time series data, with the response typically assumed to depend linearly on its own past values. Among all AR models, the first-order autoregressive model, AR(1), is the simplest; it takes the form

$$Y_t = \mu + \phi Y_{t-1} + \varepsilon_t, \quad t = 1, \ldots, n, \qquad (1)$$

where $\mu$ and $\phi$ are unknown parameters, with $\mu$ being the intercept and $\phi$ the autoregression coefficient, and $\{\varepsilon_t\}$ denotes a sequence of random errors, or innovations, with mean zero.

In previous studies, a considerable amount of work has been devoted to statistical inference [1,2,3,4,5,6] and related applications [7,8,9] for AR models. In practice, AR models are commonly used to describe the behavior of inflation or logarithmic exchange rates, where researchers are interested in whether the related variables have a unit root or are persistent. However, an accurate unit root or persistence test requires that the model be properly fitted so that the parameters can be reasonably estimated. To guarantee this, it is important to perform preliminary tests, e.g., the unit root test and the serial correlation test, on whether the AR model is appropriate before conducting the relevant economic analysis.

Among these, the unit root test is the most commonly used. Note that the limit distributions of the estimators of $\mu$ and $\phi$ depend on whether the process $\{Y_t\}$ is stationary or non-stationary, i.e., Case (i) $|\phi| < 1$ (stationary); Case (ii) $\mu = 0$ and $\phi = 1 - c/n$ for some constant $c$ (nearly integrated if $c \neq 0$, and unit root if $c = 0$); and Case (iii) $\mu \neq 0$ and $\phi = 1 - c/n$ for some constant $c$ (nearly integrated if $c \neq 0$). It is well known that when the AR process has a unit root, many statistical procedures have quite complex limit distributions that differ from those in the stationary case. Hence, various tests have been developed over the past decades to address the unit root issue, including the augmented Dickey–Fuller (ADF) test [10], the Phillips–Perron (PP) test [11], the DF–GLS test [3], and the KPSS test [12].
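To make the three cases concrete, the following Python sketch simulates Model (1) with iid standard normal innovations and a zero starting value. It is our own illustration, not code from the paper: it assumes the local-to-unity parametrization $\phi = 1 - c/n$ for Cases (ii) and (iii), and the function name `simulate_ar1` as well as the values $n = 500$, $c = 5$, $\mu = 0.5$, and $\phi = 0.5$ are hypothetical, illustrative choices.

```python
import numpy as np


def simulate_ar1(n, mu, phi, rng, y0=0.0):
    """Simulate Model (1): Y_t = mu + phi * Y_{t-1} + eps_t for t = 1, ..., n.

    Innovations are iid N(0, 1). Returns (y, eps), both of length n + 1,
    where y[0] = y0 is the starting value and eps[0] = 0 is unused.
    """
    eps = np.zeros(n + 1)
    eps[1:] = rng.standard_normal(n)
    y = np.empty(n + 1)
    y[0] = y0
    for t in range(1, n + 1):
        y[t] = mu + phi * y[t - 1] + eps[t]
    return y, eps


n, c = 500, 5.0  # illustrative values, not taken from the paper
rng = np.random.default_rng(2024)
cases = {
    "Case (i): stationary": (0.5, 0.5),
    "Case (ii): mu = 0, phi = 1 - c/n": (0.0, 1.0 - c / n),
    "Case (iii): mu != 0, phi = 1 - c/n": (0.5, 1.0 - c / n),
}
for name, (mu, phi) in cases.items():
    y, _ = simulate_ar1(n, mu, phi, rng)
    print(f"{name}: sample mean {y[1:].mean():.2f}, sample sd {y[1:].std():.2f}")
```

Setting `c = 0` in Case (ii) gives a pure random walk, which is the unit root case.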

If the true underlying innovations are correlated, the finite sample performance of the tests above may be greatly affected. To improve estimation efficiency, a natural idea is to exploit the special structure of the errors when it is available. When the errors are correlated, it is common to assume that they follow an AR process, and the performance of some testing procedures can be greatly improved once this AR structure is properly accounted for, as shown in [13,14].

In detail, Ref. [13] considered the following autoregressive model with AR errors:

$$Y_t = \mu + \phi Y_{t-1} + U_t, \qquad U_t = \beta_1 U_{t-1} + \cdots + \beta_p U_{t-p} + \varepsilon_t, \qquad (2)$$

where $\boldsymbol\beta = (\beta_1, \ldots, \beta_p)^\top$ denotes the vector of unknown parameters involved in the AR errors, and $\varepsilon_t$ denotes the random error involved in $U_t$. Compared to Model (1), Ref. [13] further assumed that $\{U_t\}$ follows an AR process. Note that (2) implies

$$\varepsilon_t = (Y_t - \mu - \phi Y_{t-1}) - \sum_{j=1}^{p} \beta_j \left(Y_{t-j} - \mu - \phi Y_{t-j-1}\right).$$

By exploiting this special structure of $\{U_t\}$, Ref. [13] developed a unified unit root test. Their test has desirable properties: the related statistic converges in distribution to a standard chi-squared random variable. However, the test requires that the AR structure of $\{U_t\}$ be correctly specified and that $p$ be properly chosen in advance. Violating these conditions may cause the method to lose power, as shown in our simulations.
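The identity expressing $\varepsilon_t$ through the observations can be checked numerically. The Python sketch below writes Model (2) as $Y_t = \mu + \phi Y_{t-1} + U_t$ with $U_t = \beta_1 U_{t-1} + \cdots + \beta_p U_{t-p} + \varepsilon_t$ (our notation), simulates it with zero start-up values and standard normal innovations, and then recovers $\varepsilon_t$ from $Y_t$ alone. The helper names `simulate_model2` and `implied_innovations`, and the parameter values in the example, are hypothetical.

```python
import numpy as np


def simulate_model2(n, mu, phi, beta, rng):
    """Simulate Y_t = mu + phi*Y_{t-1} + U_t, U_t = sum_j beta_j U_{t-j} + eps_t.

    Y, U and eps are zero for the first p + 1 indices (start-up values).
    Returns (y, eps) as arrays of length n + p + 1.
    """
    beta = np.asarray(beta, dtype=float)
    p = beta.size
    start = p + 1
    eps = np.zeros(n + start)
    eps[start:] = rng.standard_normal(n)
    u = np.zeros(n + start)
    y = np.zeros(n + start)
    for t in range(start, n + start):
        u[t] = beta @ u[t - p:t][::-1] + eps[t]  # beta_1*U_{t-1} + ... + beta_p*U_{t-p}
        y[t] = mu + phi * y[t - 1] + u[t]
    return y, eps


def implied_innovations(y, mu, phi, beta):
    """eps_t = U_t - sum_j beta_j U_{t-j}, where U_t = Y_t - mu - phi*Y_{t-1}.

    Element i of the result is eps_t for t = p + 1 + i (indices into y).
    """
    y = np.asarray(y, dtype=float)
    beta = np.asarray(beta, dtype=float)
    p = beta.size
    u = y[1:] - mu - phi * y[:-1]  # u[k] is U_{k+1}
    eps = u[p:].copy()
    for j in range(1, p + 1):
        eps -= beta[j - 1] * u[p - j:len(u) - j]
    return eps


rng = np.random.default_rng(1)
mu, phi, beta = 0.5, 1 - 5 / 600, [0.4, -0.2]
y, eps = simulate_model2(600, mu, phi, beta, rng)
rec = implied_innovations(y, mu, phi, beta)
p = len(beta)
# Values with t >= 2p + 1 use only simulated (not start-up) lags, so they match exactly.
print(np.max(np.abs(rec[p:] - eps[2 * p + 1:])))
```

The printed discrepancy should be at the level of floating-point rounding.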

Motivated by this, we aim to construct statistics for testing whether $\boldsymbol\beta$ is equal to some given constant vector $\boldsymbol\beta_0$ under Cases (i)–(iii), a problem that, to the best of our knowledge, has not been considered in the literature. Although many tests have been developed for detecting possible serial correlation in $\{U_t\}$, including the Lagrange multiplier (LM) test [15], the Box–Pierce (BP) test [16], and the Ljung–Box (LB) test [17], they cannot be used directly to test the hypothesis above. In view of this, we propose an empirical likelihood-based statistic for this problem that takes the AR structure into account. Because the setting in Case (ii) complicates both the derivation of the asymptotic distribution and the related applications, we also employ a new data-splitting idea to unify Cases (i)–(iii). It turns out that, owing to the special block structure of the asymptotic covariance matrix, the proposed statistic converges in distribution to a standard chi-squared random variable regardless of whether $\{Y_t\}$ is stationary or non-stationary. The simulations show that our method has good size and nontrivial power in finite samples.

As a nonparametric method, empirical likelihood (EL) was first proposed in [18]. Owing to its attractive properties, e.g., no need to specify a parametric distribution in advance, it has been widely used when parametric methods fail to produce satisfactory results. Many authors have extended this method. To name but a few, Ref. [19] obtained confidence regions for vector-valued statistical functions, a multivariate generalization of the work of [18]. Refs. [20,21] extended the empirical likelihood method to regression models and general estimating equations, respectively. Recently, Ref. [22] discussed the possibility of constructing unified tests for time series models using empirical likelihood based on a weighting technique, and Ref. [13] further extended this weighting technique to AR models with AR errors. Further, Ref. [23] applied the empirical likelihood method to test for heteroscedasticity of the errors in single-index models. Ref. [6] developed a unified empirical likelihood inference method to test predictability regardless of the properties of the predicting variable. Ref. [24] considered the unified test problem in a predictive regression model, where the idea of data-splitting was also developed to remove the effect of a possible intercept. The literature above inspired the current research.
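To illustrate the basic empirical likelihood idea, here is a minimal Python sketch of Owen's EL ratio for a moment condition $E(z_t) = 0$, computed through its Lagrange-multiplier dual with a damped Newton solver. It is a generic illustration under our own assumptions (the name `el_stat`, the solver, and the tolerances are hypothetical), not the data-splitting profile EL statistic developed in this paper; in particular, it does not profile out nuisance parameters and treats the $z_t$ as given.

```python
import numpy as np


def _dual(z, lam):
    # Dual objective sum_i log(1 + lam' z_i); concave in lam.
    return np.sum(np.log1p(z @ lam))


def el_stat(z, tol=1e-10, max_iter=100):
    """Return -2 log R for H0: E[z] = 0, where z is an (n,) or (n, d) array.

    Owen's EL: R = max prod(n p_i) s.t. sum p_i = 1 and sum p_i z_i = 0, whose
    solution is p_i = 1 / (n (1 + lam' z_i)) with lam maximizing _dual.
    """
    z = np.asarray(z, dtype=float)
    if z.ndim == 1:
        z = z[:, None]
    n, d = z.shape
    lam = np.zeros(d)
    for _ in range(max_iter):
        w = 1.0 + z @ lam
        zw = z / w[:, None]
        grad = zw.sum(axis=0)  # gradient of the dual
        hess = zw.T @ zw       # minus the Hessian (positive definite)
        step = np.linalg.solve(hess, grad)
        current = _dual(z, lam)
        t = 1.0
        while t > 1e-12:       # damped Newton: keep n * p_i <= 1 and ascend
            candidate = lam + t * step
            if np.all(1.0 + z @ candidate >= 1.0 / n) and _dual(z, candidate) >= current:
                break
            t *= 0.5
        else:
            break              # no acceptable step; keep the current lam
        lam = candidate
        if np.max(np.abs(t * step)) < tol:
            break
    return 2.0 * _dual(z, lam)
```

Under $H_0$ and standard conditions, `el_stat` is approximately chi-squared with $d$ degrees of freedom. If $0$ lies outside the convex hull of the $z_t$, the true EL ratio is zero; this sketch then simply returns a large value.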

The rest of this paper is organized as follows. Section 2 develops the unified test for the AR error structure of AR models. Section 3 reports the finite-sample simulation results. Section 4 applies the proposed test to the exchange rates between the U.S. dollar and the currencies of eight countries. Section 5 concludes the paper. The detailed proof of the main theorem is given in Appendix A.

2. Methodologies and Asymptotic Results

Suppose that the random observations $Y_1, \ldots, Y_n$ are generated from Model (2) with possible AR errors. Write $\boldsymbol\theta = (\mu, \phi, \boldsymbol\beta^\top)^\top$, and let $\boldsymbol\theta_0$ be its true value.

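Note that when $\boldsymbol\theta = \boldsymbol\theta_0$, $\{\varepsilon_t(\boldsymbol\theta)\}$ is a sequence of iid variables, so it is more efficient to construct a statistical procedure based on $\varepsilon_t(\boldsymbol\theta)$ than on $U_t$, as discussed in [13], where

$$\varepsilon_t(\boldsymbol\theta) = (Y_t - \mu - \phi Y_{t-1}) - \sum_{j=1}^{p} \beta_j \left(Y_{t-j} - \mu - \phi Y_{t-j-1}\right)$$

for a given $\boldsymbol\theta$. However, their method assumes that the structure of the AR errors has been correctly specified, which needs to be pretested in practice. This motivates us to consider the hypothesis

$$H_0: \boldsymbol\beta = \boldsymbol\beta_0 \quad \text{versus} \quad H_1: \boldsymbol\beta \neq \boldsymbol\beta_0.$$

Notably, when $\boldsymbol\beta_0 = \mathbf{0}$, $\{U_t\}$ is itself a sequence of iid errors.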

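Note that when $\boldsymbol\theta$ takes the true underlying value $\boldsymbol\theta_0$, we have

$$E\{Z_t(\boldsymbol\theta_0) \mid \mathcal{F}_{t-1}\} = \mathbf{0},$$

where $\mathcal{F}_{t-1}$ denotes the sigma field generated by $\{Y_s : s \le t-1\}$, and

$$Z_t(\boldsymbol\theta) = \varepsilon_t(\boldsymbol\theta)\, \frac{\partial \varepsilon_t(\boldsymbol\theta)}{\partial \boldsymbol\theta},$$

which can be obtained (up to a constant factor) by differentiating the least squares criterion $\sum_t \varepsilon_t^2(\boldsymbol\theta)$ with respect to $\boldsymbol\theta$. Then, similar to [21], one can use the profile empirical likelihood method to construct a test for hypothesis $H_0$ based on $\{Z_t(\boldsymbol\theta)\}$.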
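For concreteness, the Python sketch below evaluates the least squares estimating function $Z_t(\boldsymbol\theta) = \varepsilon_t(\boldsymbol\theta)\,\partial \varepsilon_t(\boldsymbol\theta)/\partial \boldsymbol\theta$ for Model (2), using the analytic derivatives $\partial \varepsilon_t/\partial \mu = -1 + \sum_j \beta_j$, $\partial \varepsilon_t/\partial \phi = -Y_{t-1} + \sum_j \beta_j Y_{t-j-1}$, and $\partial \varepsilon_t/\partial \beta_j = -U_{t-j}$. The parameter ordering $(\mu, \phi, \beta_1, \ldots, \beta_p)$ and the name `estimating_function` are our own assumptions, and the sketch does not implement the data-splitting construction of this paper.

```python
import numpy as np


def estimating_function(y, mu, phi, beta):
    """Rows Z_t(theta) = eps_t(theta) * d eps_t(theta) / d theta, theta = (mu, phi, beta_1..beta_p).

    eps_t(theta) = U_t - sum_j beta_j U_{t-j} with U_t = Y_t - mu - phi * Y_{t-1}.
    Returns an array of shape (len(y) - 1 - p, p + 2).
    """
    y = np.asarray(y, dtype=float)
    beta = np.asarray(beta, dtype=float)
    p = beta.size
    u = y[1:] - mu - phi * y[:-1]  # u[k] is U_{k+1}
    ylag = y[:-1]                  # ylag[k] is Y_k, the lagged value in U_{k+1}
    m = len(u) - p                 # number of usable time points
    eps = u[p:].copy()
    d_mu = np.full(m, -1.0)
    d_phi = -ylag[p:].copy()
    d_beta = np.empty((m, p))
    for j in range(1, p + 1):
        lagged_u = u[p - j:len(u) - j]  # U_{t-j}
        eps -= beta[j - 1] * lagged_u
        d_mu += beta[j - 1]
        d_phi += beta[j - 1] * ylag[p - j:len(u) - j]  # beta_j * Y_{t-j-1}
        d_beta[:, j - 1] = -lagged_u
    grad = np.column_stack([d_mu, d_phi, d_beta])
    return eps[:, None] * grad
```

Because each derivative depends only on past observations, every row is a martingale difference at the true parameter value, so the column means of the output should be close to zero there.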

However, following [22], it is easy to verify that the resulting test statistic does not converge in distribution to a standard chi-squared variable, because the relevant normalized quantity does not converge in probability in Case (ii), i.e., when $\mu = 0$ and $\phi = 1 - c/n$ for some constant $c$ (nearly integrated if $c \neq 0$, and unit root if $c = 0$). As a remedy, one may use the weighting technique developed in [22] to construct a weighted empirical likelihood-based test. Unfortunately, the resulting test statistic still faces a similar problem in the optimization step when profiling out the nuisance parameters; see a similar discussion in [25].

To overcome this problem, we propose the construction of the following empirical likelihood function for

:

based on the data-splitting idea, where

with

where

with

is the floor function. That is, we use the second half of the data to handle

, and the first half of the data to handle the rest of the parameters. Here,

is mainly used for technical consideration, which can relieve the correlation among

, and consequently improve the finite sample performance of the EL test.

Since our aim is to test $H_0$, which concerns $\boldsymbol\beta$, we are only interested in the parameter $\boldsymbol\beta$. To this end, we treat the remaining parameters as nuisance parameters, as in [21], and obtain the profile empirical likelihood ratio as

To derive the asymptotic distribution of the profile empirical likelihood ratio, we need the following regularity conditions:

(C1) Suppose that $\phi$ falls into one of the following cases:

(i) (Stationary) $|\phi| < 1$, independent of $n$;

(ii) (Non-stationary without an intercept) $\phi = 1 - c/n$ for some constant $c$ independent of $n$, with $\mu = 0$;

(iii) (Non-stationary with an intercept) $\phi = 1 - c/n$ for some constant $c$ independent of $n$, with $\mu \neq 0$;

(C2) when , and has no common root with .

(C3) are iid random errors, and satisfy for some constant .

These conditions are quite common and can be found in studies such as [13]. Here, (C2) is assumed to guarantee the stationarity of $\{U_t\}$.

Under these conditions, we have the following result.

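Theorem 1. Suppose Conditions (C1)–(C3) hold. Then, under the null hypothesis $H_0$,

$$\ell(\boldsymbol\beta_0) \xrightarrow{d} \chi^2_p \quad \text{as } n \to \infty,$$

where $\ell(\boldsymbol\beta_0)$ denotes the profile empirical likelihood ratio statistic defined above evaluated at $\boldsymbol\beta_0$, $\chi^2_p$ denotes a chi-squared random variable with $p$ degrees of freedom, and '$\xrightarrow{d}$' denotes convergence in distribution.

Remark 1. Using a proof similar to that of Theorem 1, we can show that the corresponding empirical likelihood ratio statistic, evaluated at the true parameter value, converges in distribution to $\chi^2_r$, where $r$ is the dimension of that parameter.

Theorem 1 is desirable because it shows that the proposed test statistic has a standard chi-squared limiting distribution regardless of which of Cases (i)–(iii) $\{Y_t\}$ follows. Based on Theorem 1, we reject the null hypothesis at significance level $\alpha$ once $\ell(\boldsymbol\beta_0) > \chi^2_{p,1-\alpha}$, where $\chi^2_{p,1-\alpha}$ denotes the $(1-\alpha)$-th quantile of $\chi^2_p$.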
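The chi-squared calibration described after Theorem 1 can be sketched in Python as follows, assuming SciPy is available. Here `stat` stands for a value of the proposed statistic computed elsewhere, and the function name `chi2_decision` is hypothetical.

```python
from scipy.stats import chi2


def chi2_decision(stat, p, alpha=0.05):
    """Chi-squared calibration suggested by Theorem 1.

    Returns (reject, critical_value, p_value) for a statistic whose limiting
    distribution is chi-squared with p degrees of freedom.
    """
    critical_value = chi2.ppf(1.0 - alpha, df=p)  # (1 - alpha)-th quantile of chi2_p
    p_value = chi2.sf(stat, df=p)                 # P(chi2_p > stat)
    return stat > critical_value, critical_value, p_value


reject, crit, pval = chi2_decision(5.0, p=1)
print(reject, round(crit, 3), round(pval, 4))
```

Reporting the p-value alongside the decision allows the same statistic to be read at any significance level.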

3. Simulation Results

In this section, we conduct simulations to investigate the finite sample performance of the proposed test in terms of both size and power. The simulations consist of three parts. In the first part, we investigate the finite sample performance of the proposed profile empirical likelihood test and compare it with a combination of the LB test and the Akaike information criterion (AIC), i.e., first using the LB test to detect whether the residuals are serially correlated and then using the AIC to determine the order of the AR structure in the residuals. In the second part, we investigate the possibility of using the proposed method to test whether or not $\boldsymbol\beta$ is equal to some given $\boldsymbol\beta_0$, which may be useful for assessing the degree of stationarity of the AR errors. Note that the combination of the LB test and the AIC cannot be used for this type of task. In the last part, we study the impact of misspecifying the AR structure of the errors on the finite sample performance of the unit root test developed in [13]. The LB test is computed with the R function Box.test, while for the profile empirical likelihood, we first use the R package emplik to obtain the log-empirical likelihood ratio and then optimize this log ratio with the R function nlm. All of these R functions are well documented and currently available from CRAN.
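The LB-plus-AIC benchmark described above can be sketched in Python as follows. This is an illustrative re-implementation under our own assumptions, not the authors' R code: `ljung_box` computes the usual Ljung–Box statistic (the quantity behind R's `Box.test(..., type = "Ljung-Box")` with `fitdf = 0`), `aic_order` fits AR(k) models without an intercept by least squares on a common sample and uses the Gaussian AIC up to constants, and the lag `h = 10`, the maximum order 5, and all function names are hypothetical choices.

```python
import numpy as np
from scipy.stats import chi2


def ljung_box(e, h):
    """Ljung-Box Q = n(n+2) * sum_{k=1}^h rho_k^2 / (n-k) and its chi2(h) p-value."""
    e = np.asarray(e, dtype=float)
    n = e.size
    d = e - e.mean()
    denom = np.sum(d * d)
    q = 0.0
    for k in range(1, h + 1):
        rho_k = np.sum(d[k:] * d[:-k]) / denom  # lag-k sample autocorrelation
        q += rho_k ** 2 / (n - k)
    q *= n * (n + 2)
    return q, chi2.sf(q, df=h)


def aic_order(e, max_order):
    """Choose k in {0, ..., max_order} minimizing m*log(sigma2_k) + 2k, where AR(k)
    (no intercept) is fitted by least squares on the common sample e[max_order:]."""
    e = np.asarray(e, dtype=float)
    n = e.size
    target = e[max_order:]
    m = target.size
    best_k, best_aic = 0, np.inf
    for k in range(max_order + 1):
        if k == 0:
            resid = target
        else:
            # column j-1 holds the lag-j values e[t - j] for t = max_order, ..., n-1
            X = np.column_stack([e[max_order - j:n - j] for j in range(1, k + 1)])
            coef, *_ = np.linalg.lstsq(X, target, rcond=None)
            resid = target - X @ coef
        aic = m * np.log(np.mean(resid ** 2)) + 2 * k
        if aic < best_aic:
            best_k, best_aic = k, aic
    return best_k


def lb_then_aic(resid, h=10, max_order=5, alpha=0.05):
    """Two-step benchmark: order 0 if LB does not reject, else the AIC-selected order."""
    _, p_value = ljung_box(resid, h)
    return 0 if p_value >= alpha else aic_order(resid, max_order)
```

Fitting every candidate order on the same sample `e[max_order:]` keeps the AIC values comparable across orders.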

In the first part, the random observations

are generated from the model (

2) with

, corresponding to a model without and with an intercept, respectively. We take

from

, where 0.5 indicates that $\{Y_t\}$ is a stationary process, and 1 indicates that it is a unit root process, while

indicates a near unit root process.

$\{\varepsilon_t\}$ is a sequence of iid random variables with mean zero and unit variance, and $\{U_t\}$ follows one of the three scenarios listed below.

S1: The null hypothesis , i.e., has no serial correlation. The local alternative hypothesis: for some .

S2: The null hypothesis , i.e., has first-order serial correlation. The local alternative hypothesis: for some .

S3: The null hypothesis , i.e., has second-order serial correlation. The local alternative hypothesis: for some .

In all Scenarios S1–S3, d is taken from . All computations are carried out 10,000 times with n ranging from 300 to 1200.

Table 1 reports the size performance of the proposed method with different settings at the significance levels

. We also report the ratios of incorrectly determining the order of the AR error by the AIC in Scenarios S1–S3 under the condition of

for comparison. The EL method performs well in all Scenarios S1–S3: its empirical size gradually approaches the nominal significance level as the sample size n increases, whether $\{Y_t\}$ is a stationary process, a near unit root process, or a unit root process, and regardless of whether $\mu$ is 0. By contrast, for the AIC method, the ratio of incorrectly determining the order of the AR error is close to 5% only in S1 when $\{Y_t\}$ follows a stationary process; it performs poorly in all other settings, indicating that it is strongly affected by the stationarity of $\{Y_t\}$.

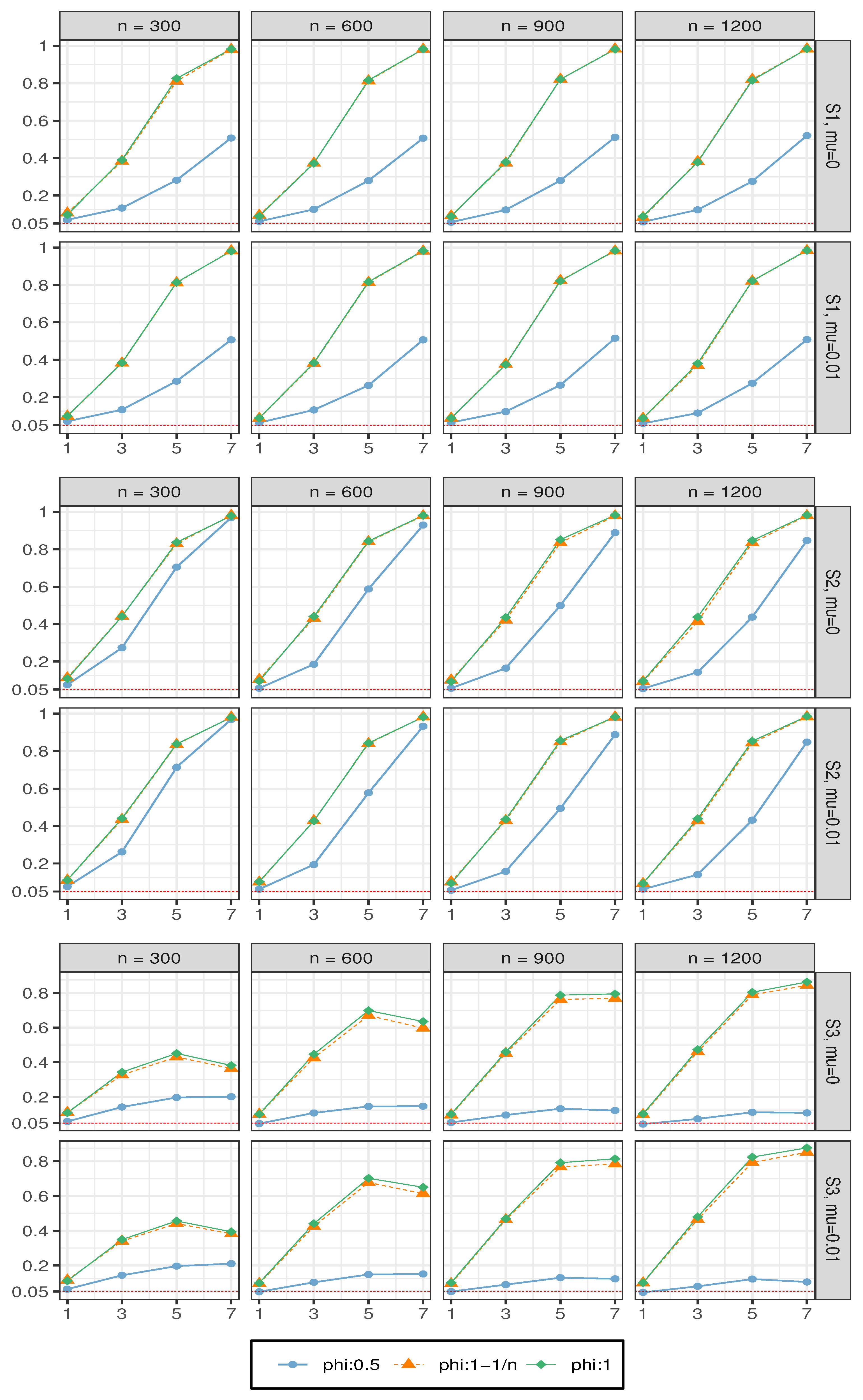

Figure 1 shows the power performance of the EL method. In S1 and S2, the power converges to 1 most slowly when $\{Y_t\}$ follows a stationary process; when $\{Y_t\}$ follows a near unit root process or a unit root process, the power converges quickly to 1 as d increases. In S3, when $\{Y_t\}$ follows a near unit root process or a unit root process, the power shows a slight downward tendency as d increases to 7. This implies that although the stationarity of $\{Y_t\}$ does not affect the order of the local alternative hypothesis, it does affect the power function of the EL method.

In the second part, we consider testing whether or not $\boldsymbol\beta$ is equal to some given $\boldsymbol\beta_0$. We simulate the following two settings:

(I): The null hypothesis against the local alternative hypothesis: , for some .

(II): The null hypothesis , against the local alternative hypothesis: , for some .

The other parameters are the same as those in the first part. The size (

) and power (

) performances are shown in

Figure 2. As expected, the patterns in Figure 2 are similar to those observed in the first part of the simulations.

The simulation results in the first and second parts show that the proposed EL method performs well both in specifying the AR error structure and in testing whether or not $\boldsymbol\beta$ is equal to some given $\boldsymbol\beta_0$, thereby confirming the theoretical result in Theorem 1. When the AR error structure is taken into account, accurate identification is crucial, because it affects the unit root test of the AR model. Therefore, in the third part, we conduct the following simulation to show the benefit of conducting a preliminary test.

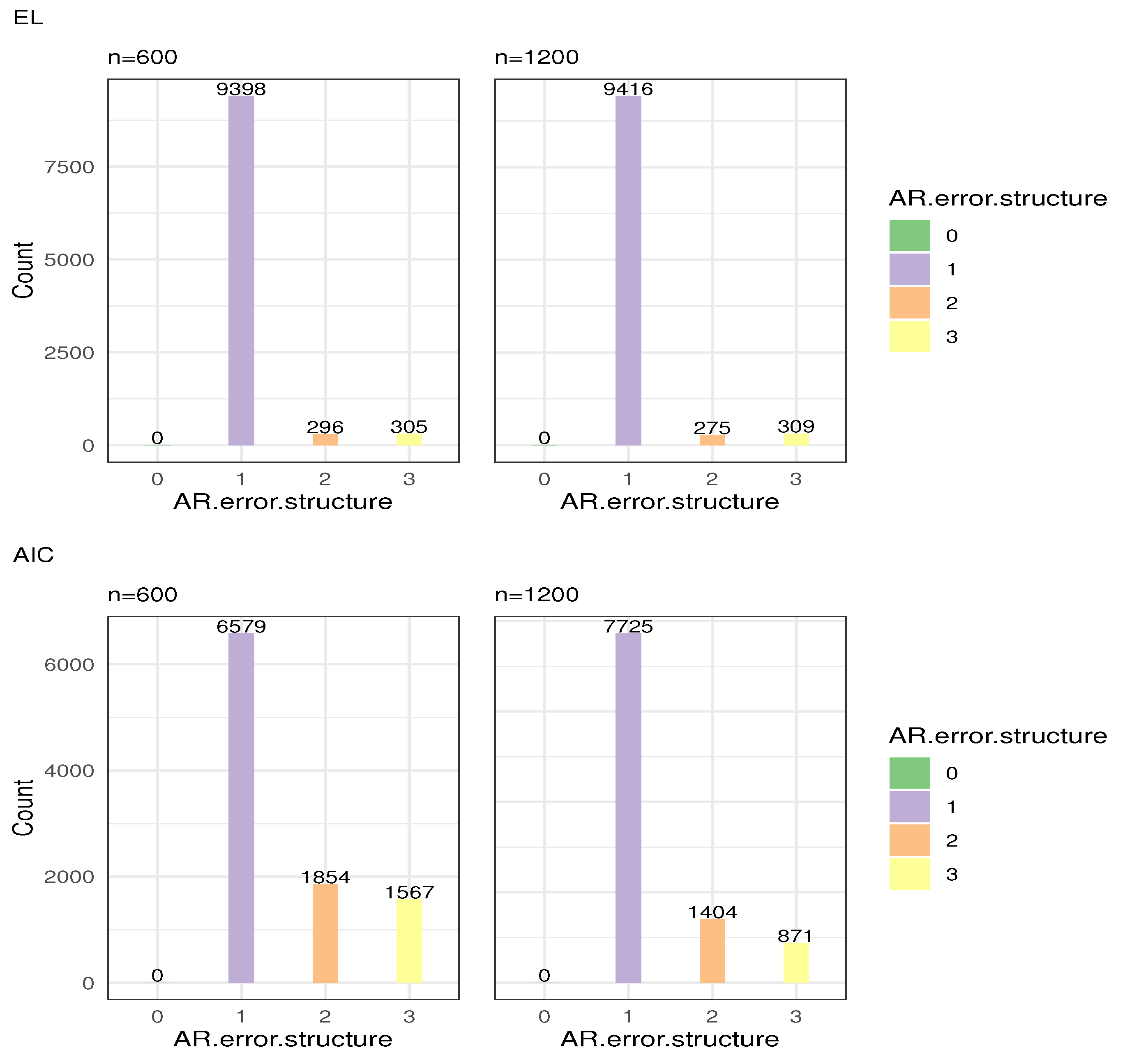

Step 1: We generate data from an AR model with AR(1) errors, where the parameters are

. We then use the EL and AIC methods to determine the order of the AR error. We consider sample sizes of 600 and 1200, repeat the experiment 10,000 times, and record how often each order is selected by the two methods. The results are shown in Figure 3, where the horizontal axis represents the order of the AR error and the vertical axis the number of times each order is selected. Figure 3 shows that, for both sample sizes, both methods detect serial correlation in the residuals. For the EL test with a sample size of 600, 9398 of the 10,000 experiments select the correct order, an error rate of only 6.02%; when the sample size increases to 1200, the error rate decreases to 5.84%. For the AIC, the error rate is 34.21% when the sample size is 600 and 22.75% when it is 1200. Compared with the AIC, the EL test clearly has an advantage in identifying the order of the correlated errors, which is consistent with the simulation results above.

Step 2: We use the method proposed in [13] to test for a unit root in an AR(1) model when the order of the AR error is correctly and incorrectly determined. Table 2 reports the probability of rejecting the unit root hypothesis when the data are generated from a near unit root process. The results show that when the true underlying structure of the AR error is misspecified, the test proposed in [13] loses power compared with the case in which this structure is correctly specified. This shows that the AR error structure should be tested carefully before conducting the unit root test if a more reliable unit root test result is desired.

To summarize, the proposed EL method has clear advantages in identifying the AR error structure, which is crucial for the subsequent real data analysis.

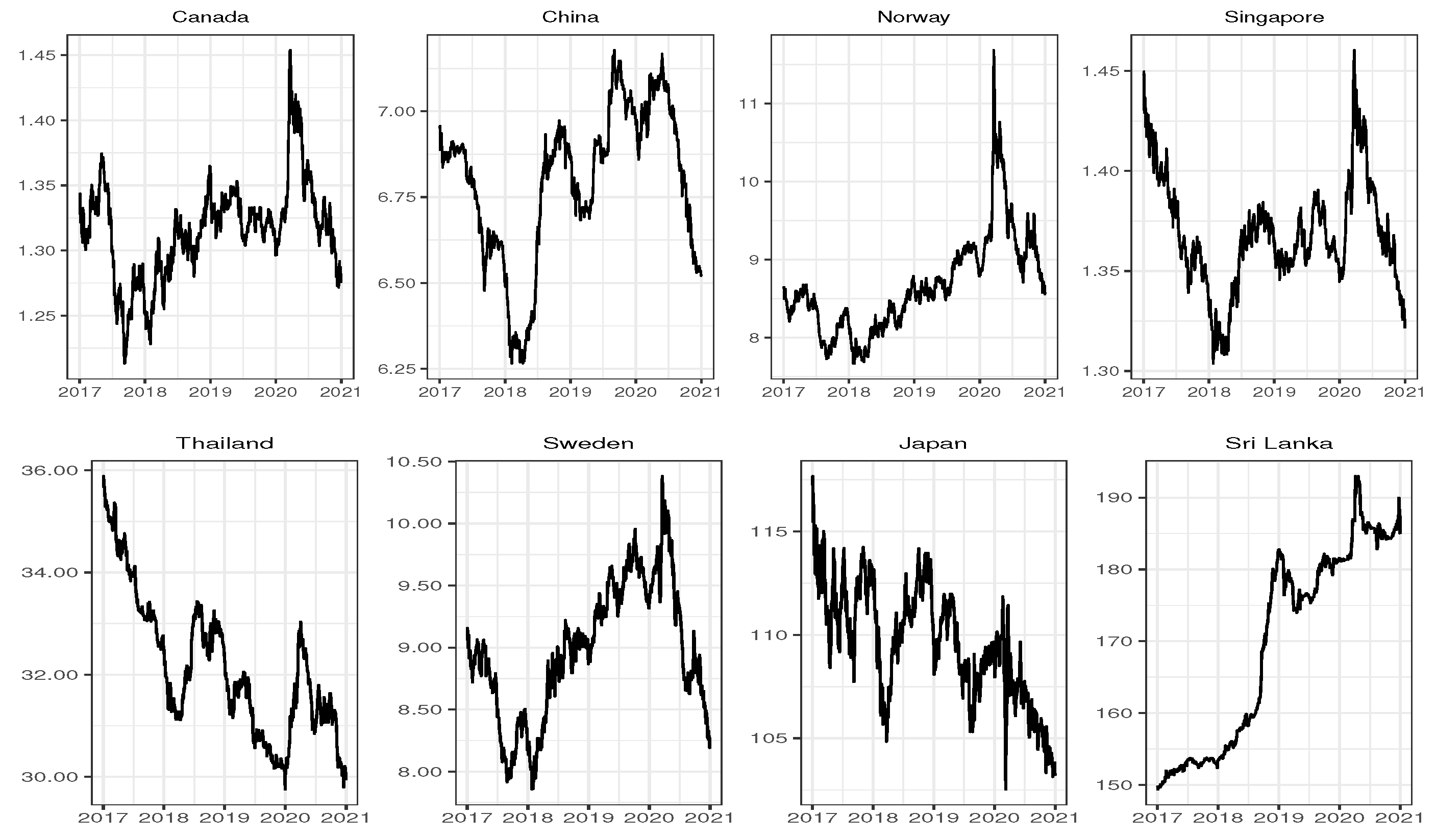

4. A Financial Real Data Application

In this section, we provide a real financial data example to explore the error structure of different exchange rate markets. We collected the exchange rates against the U.S. dollar of eight countries, including both developed and developing countries. The currencies of developed countries are the Canadian dollar (CAD), Norwegian krone (NKR), Singapore dollar (SGD), Swedish krona (SKR), and Japanese yen (JPY); those of developing countries are the Chinese yuan (CNY), Thai baht (THB), and Sri Lankan rupee (SRE). All data are downloaded from the FRED database (fred.stlouisfed.org). The sample consists of daily data from 2 January 2017 to 31 December 2020 (

). Their time series plots are provided in

Figure 4.

We report the least squares estimates of the unknown parameters $\mu$ and $\phi$, together with the testing results of the EL, LB, and AIC methods, where the LB and AIC procedures were applied to the residuals from the least squares fit. All results are listed in Table 3, in which the second and third columns are the estimated intercept and autoregressive coefficient, respectively; the fourth column is the order determined by the EL test; the fifth column is the p-value of the EL test; and the last two columns are the p-value of the LB test and the order determined by the AIC, respectively. The AIC indicates that most series have serial correlation, except for CNY and JPY, while the EL method indicates that only one country's data have AR errors, of order up to 2. Note that the AIC tends to select a higher order for the correlated errors in Cases (ii)–(iii), while for most series the estimated $\phi$ is very close to 1, i.e., near unit root. Thus, the testing results for this dataset appear to agree roughly with the observations in the simulations.
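The least squares estimates of $\mu$ and $\phi$ in Table 3, and the residuals on which the LB and AIC procedures are run, come from regressing $Y_t$ on $(1, Y_{t-1})$. A minimal Python sketch, assuming the series is already available as a NumPy array (the FRED download step is omitted, and `ols_ar1` is a hypothetical name):

```python
import numpy as np


def ols_ar1(y):
    """Least squares fit of Y_t = mu + phi * Y_{t-1} + U_t.

    Returns (mu_hat, phi_hat, residuals); the residuals estimate U_t for t = 1, ..., n-1.
    """
    y = np.asarray(y, dtype=float)
    X = np.column_stack([np.ones(y.size - 1), y[:-1]])  # regressors (1, Y_{t-1})
    coef, *_ = np.linalg.lstsq(X, y[1:], rcond=None)
    resid = y[1:] - X @ coef
    return coef[0], coef[1], resid
```

Because the regression includes an intercept, the residuals sum to zero up to rounding; they can then be passed to a serial correlation test or to an order selection rule.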