Accelerated Modified Tseng’s Extragradient Method for Solving Variational Inequality Problems in Hilbert Spaces

Abstract

1. Introduction

2. Preliminaries

- (i) A is called $L$-Lipschitz continuous with $L > 0$ if $\|Ax - Ay\| \le L\|x - y\|$ for all $x, y \in H$. If $L \in (0, 1)$, then A is called a contraction mapping. If $L = 1$, then A is called a nonexpansive mapping.

- (ii) A is called monotone if $\langle Ax - Ay, x - y \rangle \ge 0$ for all $x, y \in H$.

- (iii) A is called strictly monotone if, for any $x, y \in H$, $\langle Ax - Ay, x - y \rangle \ge 0$, and equality is possible only if $x = y$.

- (iv) A is called strongly monotone if, for any $x, y \in H$, $\langle Ax - Ay, x - y \rangle \ge \delta(\|x - y\|)$, where the nonnegative function $\delta$, defined for $t \ge 0$, satisfies $\delta(0) = 0$ and $\delta(t) > 0$ when $t > 0$.

- (v) A is called pseudomonotone if, for all $x, y \in H$, $\langle Ay, x - y \rangle \ge 0$ implies $\langle Ax, x - y \rangle \ge 0$.
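To make definitions (i), (ii), (iv) and (v) concrete, the following sketch checks them numerically for a hypothetical linear operator $A(x) = Mx$ on $\mathbb{R}^2$ with $M = \begin{pmatrix} 2 & 1 \\ -1 & 2 \end{pmatrix}$; this operator is chosen for illustration only and is not taken from the paper. Since $M^\top M = 5I$ and the symmetric part of $M$ is $2I$, one has $\|Ax - Ay\| = \sqrt{5}\,\|x - y\|$ and $\langle Ax - Ay, x - y \rangle = 2\|x - y\|^2$. Hence $A$ is $\sqrt{5}$-Lipschitz (neither a contraction nor nonexpansive) and strongly monotone with $\delta(t) = 2t^2$, so it is also strictly monotone, monotone and pseudomonotone. The helper names below are hypothetical; the code samples random pairs and reports the extreme ratios observed.

```python
import math
import random

# Hypothetical example operator A(x) = M x on R^2 (illustration only, not from the paper).
M = [[2.0, 1.0],
     [-1.0, 2.0]]

def apply(mat, x):
    """Matrix-vector product mat @ x for small dense lists."""
    return [sum(mat[i][j] * x[j] for j in range(len(x))) for i in range(len(mat))]

def inner(u, v):
    return sum(a * b for a, b in zip(u, v))

def norm(u):
    return math.sqrt(inner(u, u))

def sub(u, v):
    return [a - b for a, b in zip(u, v)]

def check_definitions(mat, trials=1000, seed=0):
    """Sample pairs (x, y) and return
    - the largest ||Ax - Ay|| / ||x - y|| seen (empirical Lipschitz ratio), and
    - the smallest <Ax - Ay, x - y> / ||x - y||^2 seen (empirical monotonicity modulus)."""
    rng = random.Random(seed)
    max_ratio = 0.0
    min_mono = math.inf
    for _ in range(trials):
        x = [rng.uniform(-10.0, 10.0) for _ in range(2)]
        y = [rng.uniform(-10.0, 10.0) for _ in range(2)]
        d = sub(x, y)
        nd = norm(d)
        if nd == 0.0:
            continue  # skip the (unlikely) case x == y
        Ad = sub(apply(mat, x), apply(mat, y))
        max_ratio = max(max_ratio, norm(Ad) / nd)
        min_mono = min(min_mono, inner(Ad, d) / nd ** 2)
    return max_ratio, min_mono

L_est, sigma_est = check_definitions(M)
print(f"max ||Ax-Ay||/||x-y||        = {L_est:.6f}  (sqrt(5) = {math.sqrt(5):.6f})")
print(f"min <Ax-Ay,x-y>/||x-y||^2    = {sigma_est:.6f}  (expected 2)")
```

For a general matrix the two ratios would only give empirical bounds; here they are constant because $M^\top M$ and the symmetric part of $M$ are both multiples of the identity.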

- (i)

- for all ;

- (ii)

- for all ;

- (iii)

- for all

3. Main Results

- Step 0: Given for some and satisfying the following conditions: choose the initial and set

- Step 1: Set the following: and compute the following. If then stop the computation. is a solution to the problem (VIP). Otherwise, proceed to Step 2.

- Step 2: Set the following: and compute the following: where Set and proceed to Step 1.
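Most formulas in the steps above (the parameter conditions, the step-size rule and the Step 2 update) did not survive extraction, so the following is only a hedged illustration of the general inertial Tseng structure, not the authors' accelerated scheme. It is a minimal sketch of a generic inertial Tseng extragradient iteration with a fixed step size $\lambda < 1/L$ and a constant inertial weight $\theta$. It computes $w_n = x_n + \theta(x_n - x_{n-1})$ and $y_n = P_C(w_n - \lambda A w_n)$, stops when $y_n = w_n$ (up to a tolerance), and otherwise sets $x_{n+1} = y_n - \lambda(Ay_n - Aw_n)$. The function names `inertial_tseng` and `project_box`, the box constraint $C = [-1, 1]^2$, the operator $A(x) = Mx + q$ with $M = \begin{pmatrix} 2 & 1 \\ -1 & 2 \end{pmatrix}$ and $q = (-0.75, 1)$, and the values $\lambda = 0.9/\sqrt{5}$ and $\theta = 0.3$ are all assumptions made for this example. Since $A$ is $\sqrt{5}$-Lipschitz and strongly monotone, the VIP has the unique solution $x^* = (0.5, -0.25)$, an interior point where $Ax^* = 0$.

```python
import math

def project_box(x, lo, hi):
    """Metric projection P_C onto the box C = [lo, hi]^n (componentwise clipping)."""
    return [min(max(xi, lo), hi) for xi in x]

def inertial_tseng(A, project, x0, x1, lam, theta, tol=1e-10, max_iter=1000):
    """Generic inertial Tseng extragradient iteration (illustrative sketch, hypothetical helper).

    A       : operator R^n -> R^n, assumed monotone and L-Lipschitz
    project : metric projection onto the feasible set C
    lam     : fixed step size, assumed 0 < lam < 1/L
    theta   : fixed inertial weight, assumed small and nonnegative
    Returns (approximate solution, number of iterations performed).
    """
    x_prev, x = list(x0), list(x1)
    for n in range(1, max_iter + 1):
        # Inertial extrapolation: w_n = x_n + theta * (x_n - x_{n-1})
        w = [xi + theta * (xi - xp) for xi, xp in zip(x, x_prev)]
        Aw = A(w)
        # Forward-backward step: y_n = P_C(w_n - lam * A w_n)
        y = project([wi - lam * ai for wi, ai in zip(w, Aw)])
        # Stopping test: if y_n = w_n, then y_n solves the VIP
        if math.sqrt(sum((yi - wi) ** 2 for yi, wi in zip(y, w))) < tol:
            return y, n
        Ay = A(y)
        # Tseng correction: x_{n+1} = y_n - lam * (A y_n - A w_n)
        x_prev, x = x, [yi - lam * (ayi - awi) for yi, ayi, awi in zip(y, Ay, Aw)]
    return x, max_iter

# Hypothetical test problem: A(x) = M x + q on C = [-1, 1]^2, solution x* = (0.5, -0.25).
M = [[2.0, 1.0], [-1.0, 2.0]]
q = [-0.75, 1.0]

def A(x):
    return [sum(M[i][j] * x[j] for j in range(2)) + q[i] for i in range(2)]

L = math.sqrt(5.0)  # Lipschitz constant of A, since M^T M = 5 I
sol, iters = inertial_tseng(A, lambda z: project_box(z, -1.0, 1.0),
                            x0=[-1.0, 1.0], x1=[-1.0, 1.0],
                            lam=0.9 / L, theta=0.3)
print(sol, iters)
```

Setting `theta=0` recovers the plain (non-inertial) Tseng method on the same toy problem, which gives a simple baseline for comparing the effect of the inertial term. Convergence theory for inertial methods generally imposes an upper bound on $\theta$, so the constant used here is deliberately small.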

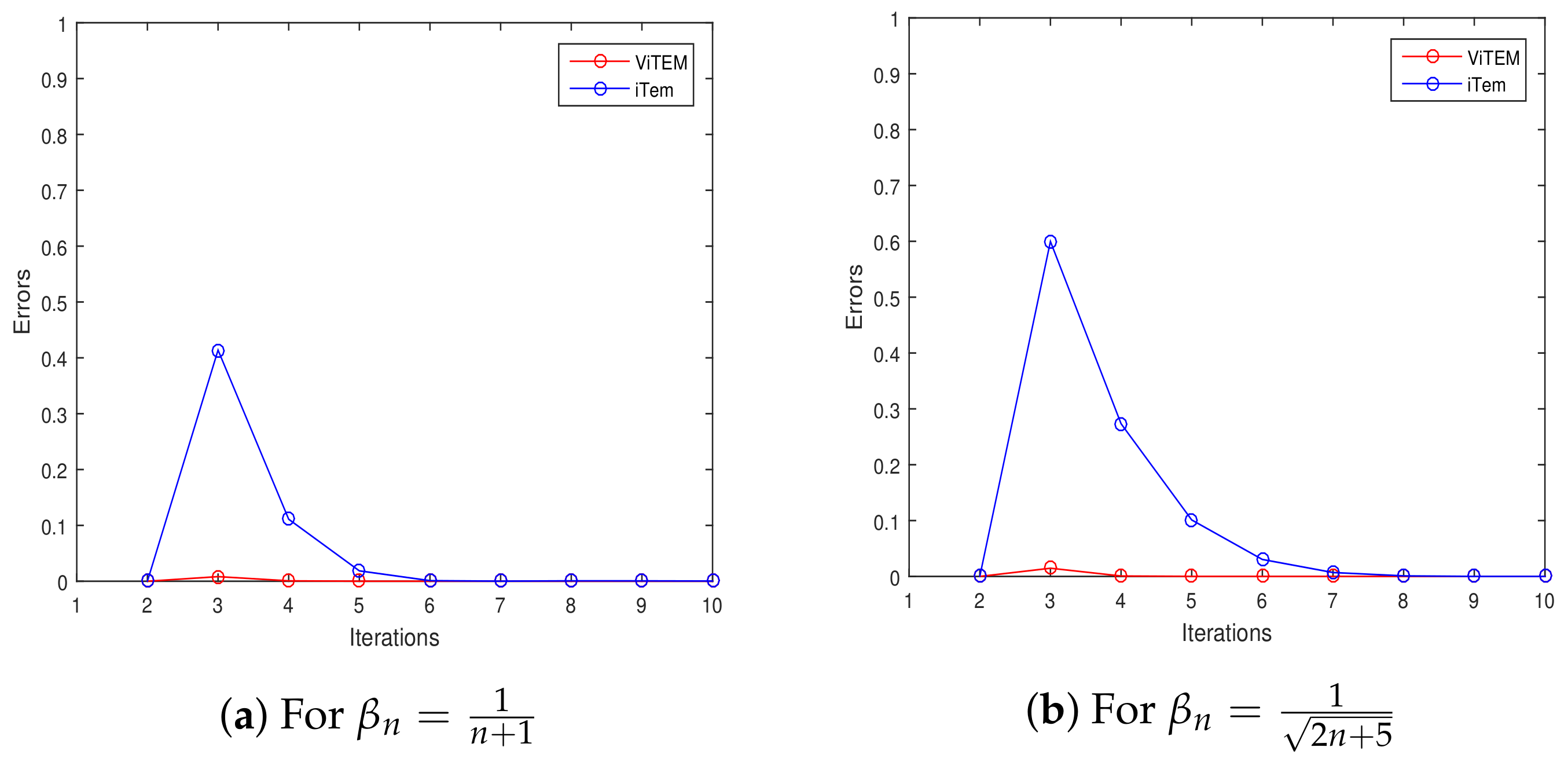

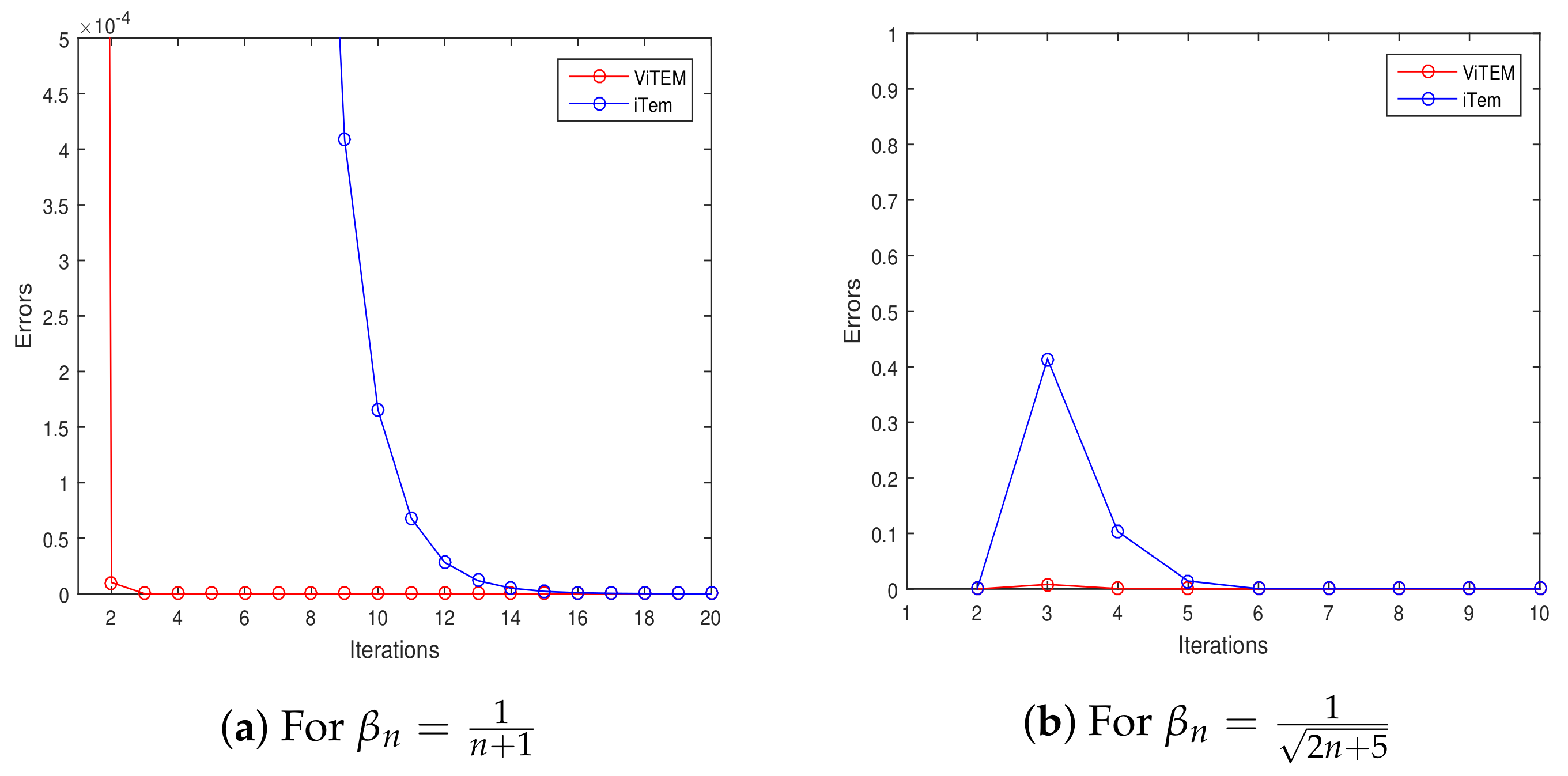

4. Numerical Illustrations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Algorithm | First setting: time (iterations) | Second setting: time (iterations) |
|---|---|---|
| ViTEM | 0.039508 s (iter = 5) | 0.039197 s (iter = 5) |
| iTEM | 0.040179 s (iter = 13) | 0.040681 s (iter = 15) |

| Algorithm | First setting: time (iterations) | Second setting: time (iterations) |
|---|---|---|
| ViTEM | 0.078728 s (iter = 3) | 0.073666 s (iter = 3) |
| iTEM | 0.081701 s (iter = 15) | 0.076256 s (iter = 15) |

| Algorithm | First setting: time (iterations) | Second setting: time (iterations) |
|---|---|---|
| ViTEM | 0.022461 s (iter = 4) | 0.190571 s (iter = 5) |
| iTEM | 0.032179 s (iter > 100) | 0.590528 s (iter > 100) |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Okeke, G.A.; Abbas, M.; De la Sen, M.; Iqbal, H. Accelerated Modified Tseng’s Extragradient Method for Solving Variational Inequality Problems in Hilbert Spaces. Axioms 2021, 10, 248. https://doi.org/10.3390/axioms10040248
