Efficient Multi-Modal Learning for Dual-Energy X-Ray Image-Based Low-Grade Copper Ore Classification

Abstract

1. Introduction

- We propose using DE-XRT to classify low-grade copper ores and waste, and we construct a dual-energy spectral image dataset of copper ores. Because the high-energy and low-energy spectral images differ, we treat them as distinct modalities and apply multi-modal learning.

- We develop a lightweight dual-tower network that integrates information from both energy spectra and uses a multi-layer KAN as the classifier. This architecture reduces computational cost and improves sorting accuracy.

- We curated a dataset of 31,057 dual-energy spectrum image pairs of copper ore and waste. On this dataset, the proposed method matches or exceeds the classification performance of mainstream networks while using only 1.32 M parameters.
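
To make the idea of a KAN classifier built on Chebyshev polynomials more concrete, the following is a minimal sketch of the forward pass of such a layer in plain Python. It is an illustration under assumptions, not the network used in this work: the `tanh` input squashing, the polynomial degree, the layer widths, the random coefficients, and the names `chebyshev_basis`, `cheby_kan_layer`, and `random_coeffs` are all hypothetical choices made for the example.

```python
import math
import random

def chebyshev_basis(x, degree):
    """Return [T_0(x), ..., T_degree(x)] via T_k = 2x*T_{k-1} - T_{k-2}."""
    values = [1.0, x]
    for _ in range(2, degree + 1):
        values.append(2.0 * x * values[-1] - values[-2])
    return values[: degree + 1]

def cheby_kan_layer(inputs, coeffs):
    """Forward pass of one KAN layer whose edge functions are Chebyshev series.

    coeffs[j][i][k] weights T_k on the edge from input i to output j, so
    output_j = sum_i sum_k coeffs[j][i][k] * T_k(tanh(input_i)).
    """
    degree = len(coeffs[0][0]) - 1
    # Assumption: squash inputs into [-1, 1], the natural Chebyshev domain.
    bases = [chebyshev_basis(math.tanh(x), degree) for x in inputs]
    return [
        sum(c * t for edge, basis in zip(row, bases) for c, t in zip(edge, basis))
        for row in coeffs
    ]

def random_coeffs(n_in, n_out, degree, rng):
    """Hypothetical initialisation; a trained model would learn these values."""
    return [[[rng.gauss(0.0, 0.1) for _ in range(degree + 1)]
             for _ in range(n_in)] for _ in range(n_out)]

rng = random.Random(0)
features = [0.3, -1.2, 0.8, 2.0]                  # stand-in for fused features
layer1 = random_coeffs(4, 3, degree=3, rng=rng)
layer2 = random_coeffs(3, 2, degree=3, rng=rng)   # two outputs: ore vs. waste
logits = cheby_kan_layer(cheby_kan_layer(features, layer1), layer2)
```

Stacking more calls to `cheby_kan_layer` gives a deeper classifier; each edge adds only `degree + 1` coefficients, which is one reason such a head can stay small.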

2. Related Works

2.1. Feature Engineering-Based Methods

2.2. Feature Learning-Based Methods

3. Methodology

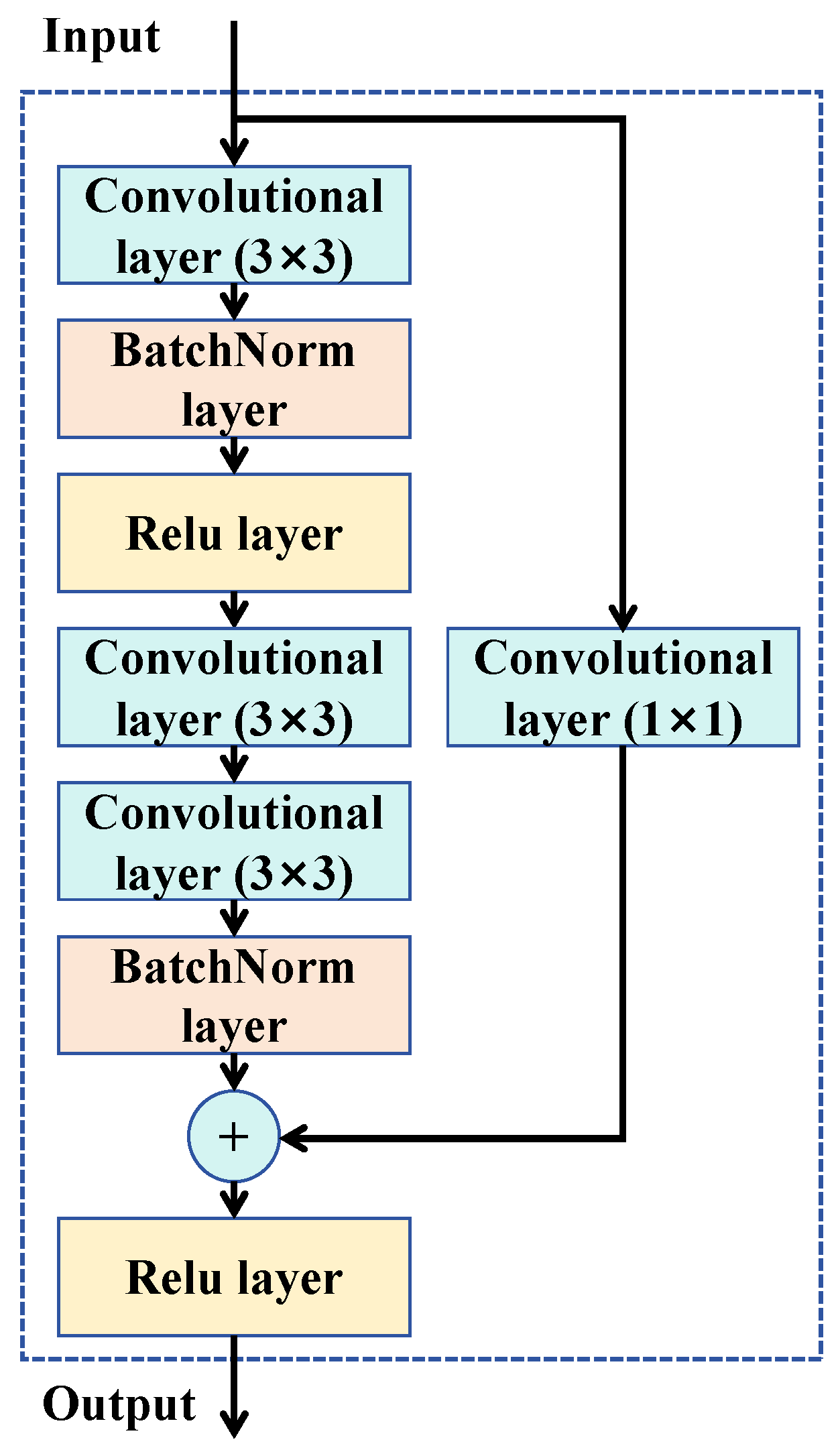

3.1. The Improved Residual Module

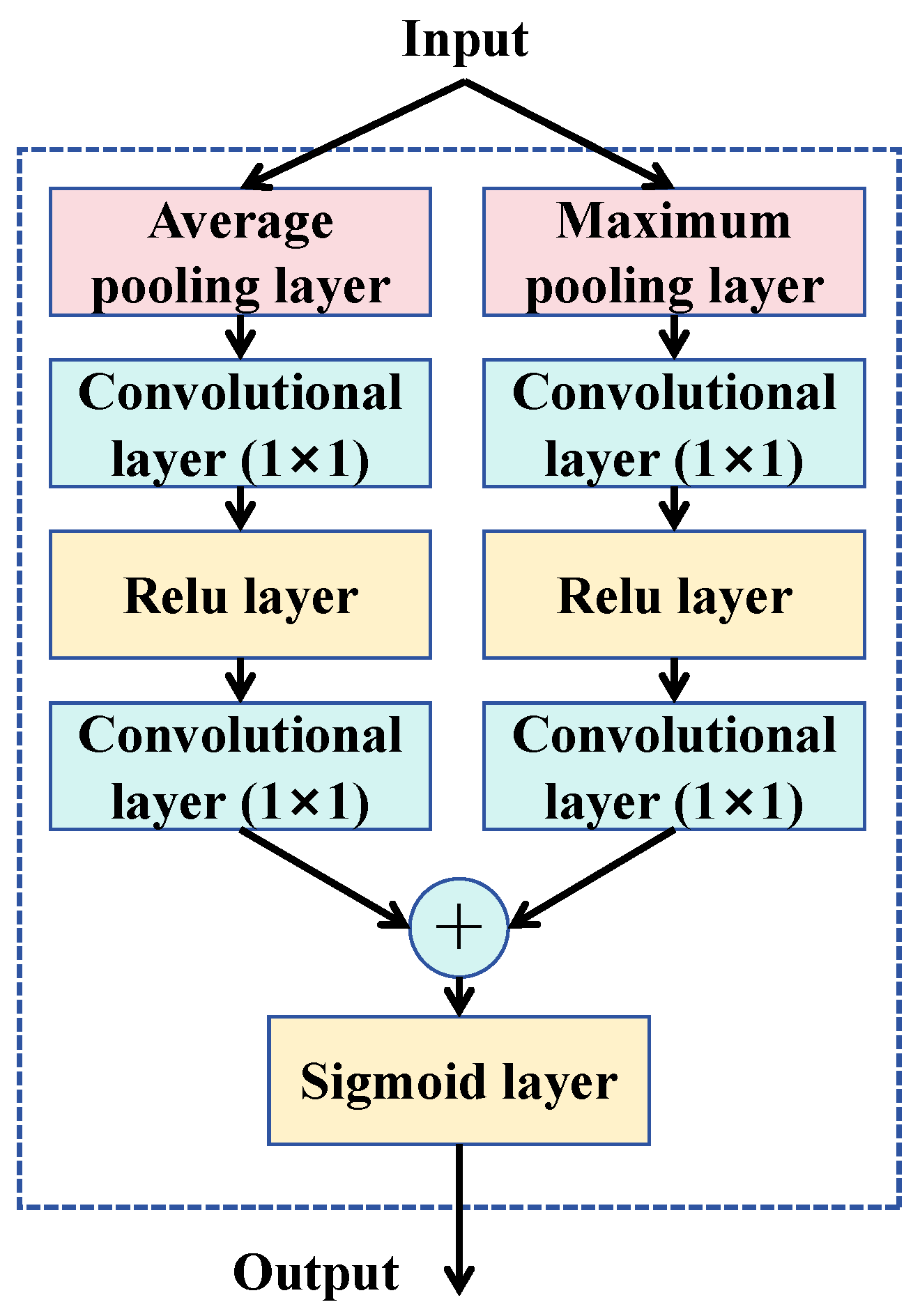

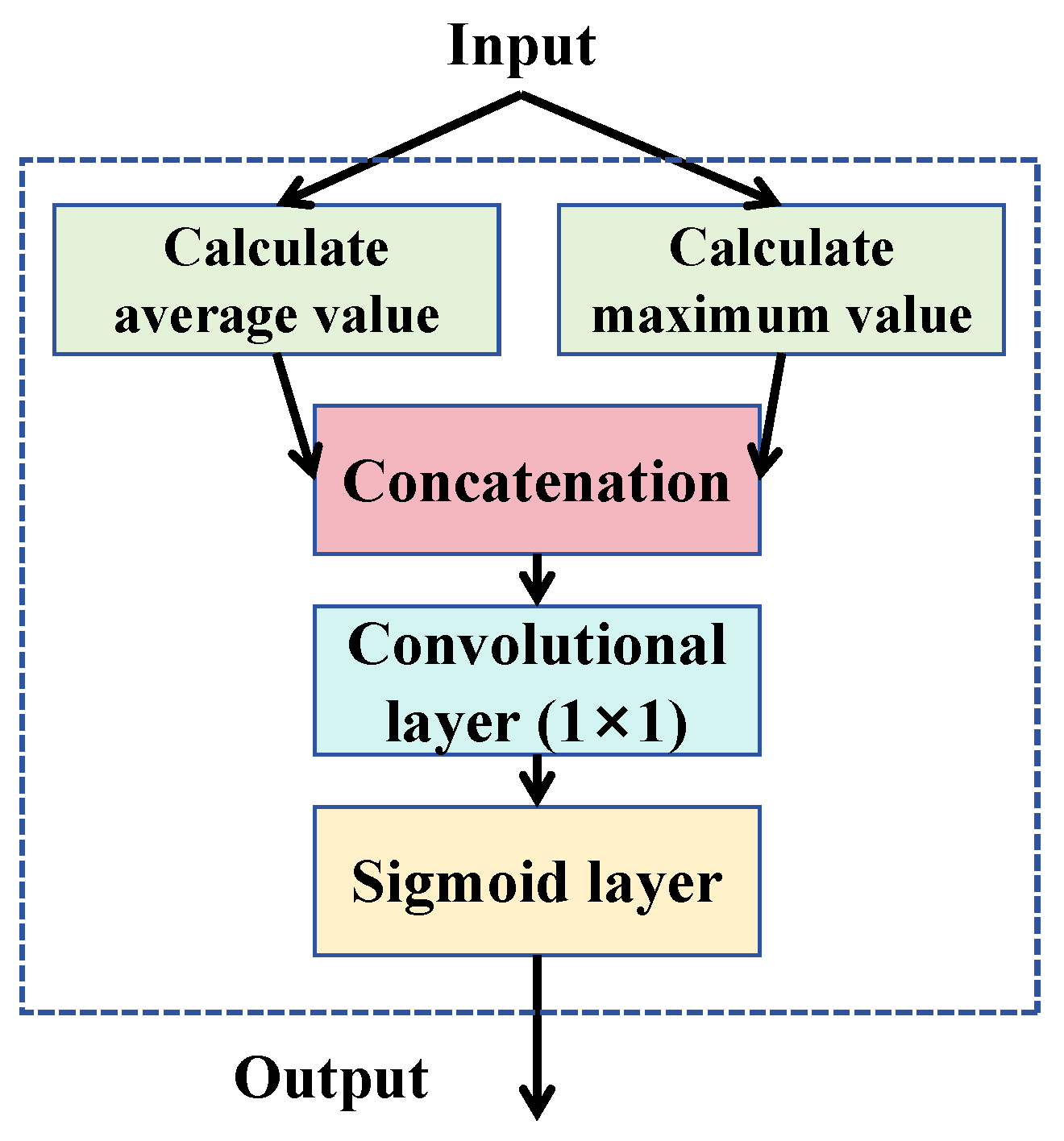

3.2. Attention Mechanism

3.3. Dual-Energy Spectrum Fusion

3.4. KAN-Based Classifier Utilizing Chebyshev Polynomials

4. Results and Discussion

4.1. Experimental Setting

4.2. Comparative Experimental Results

4.3. Ablation Studies

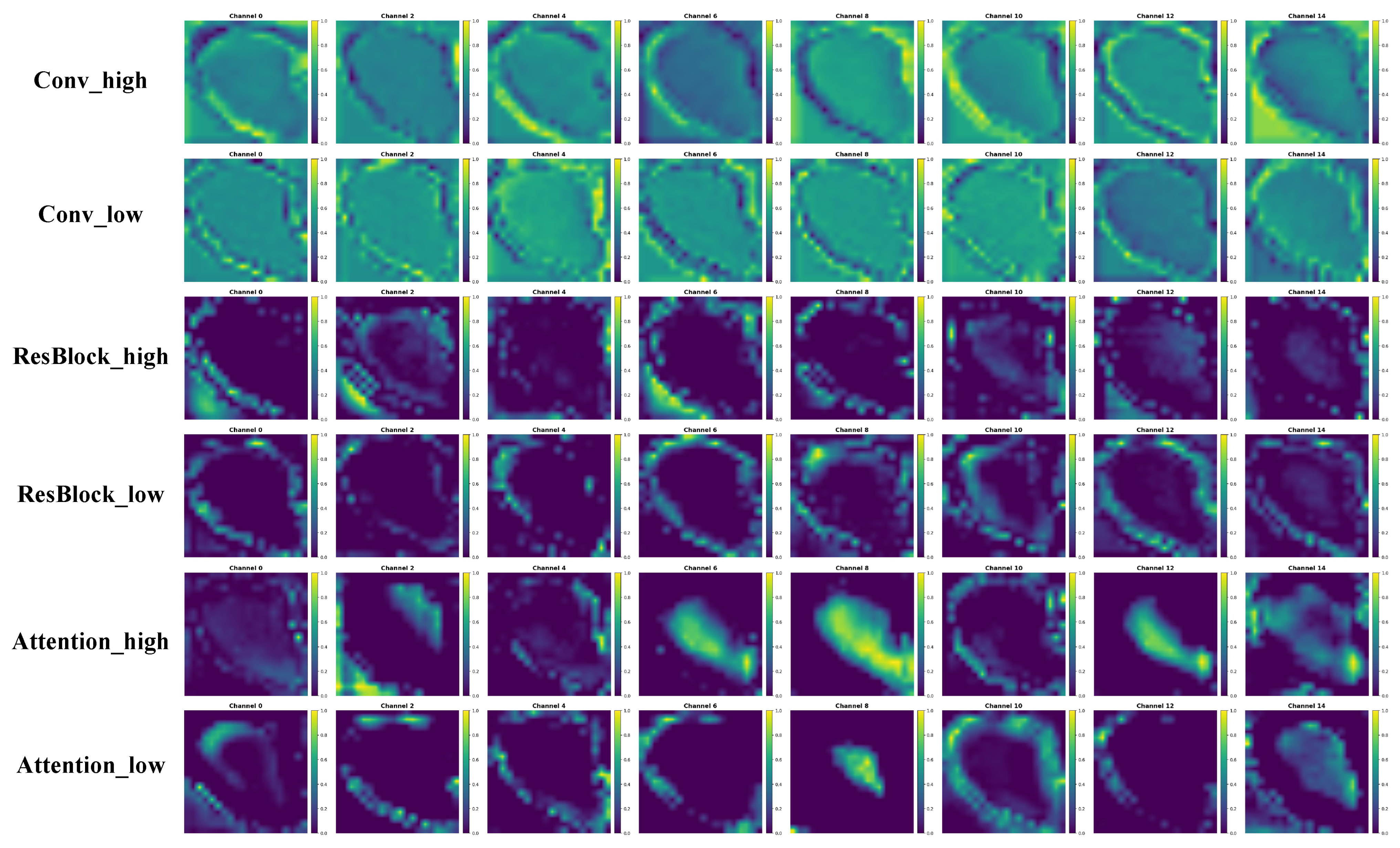

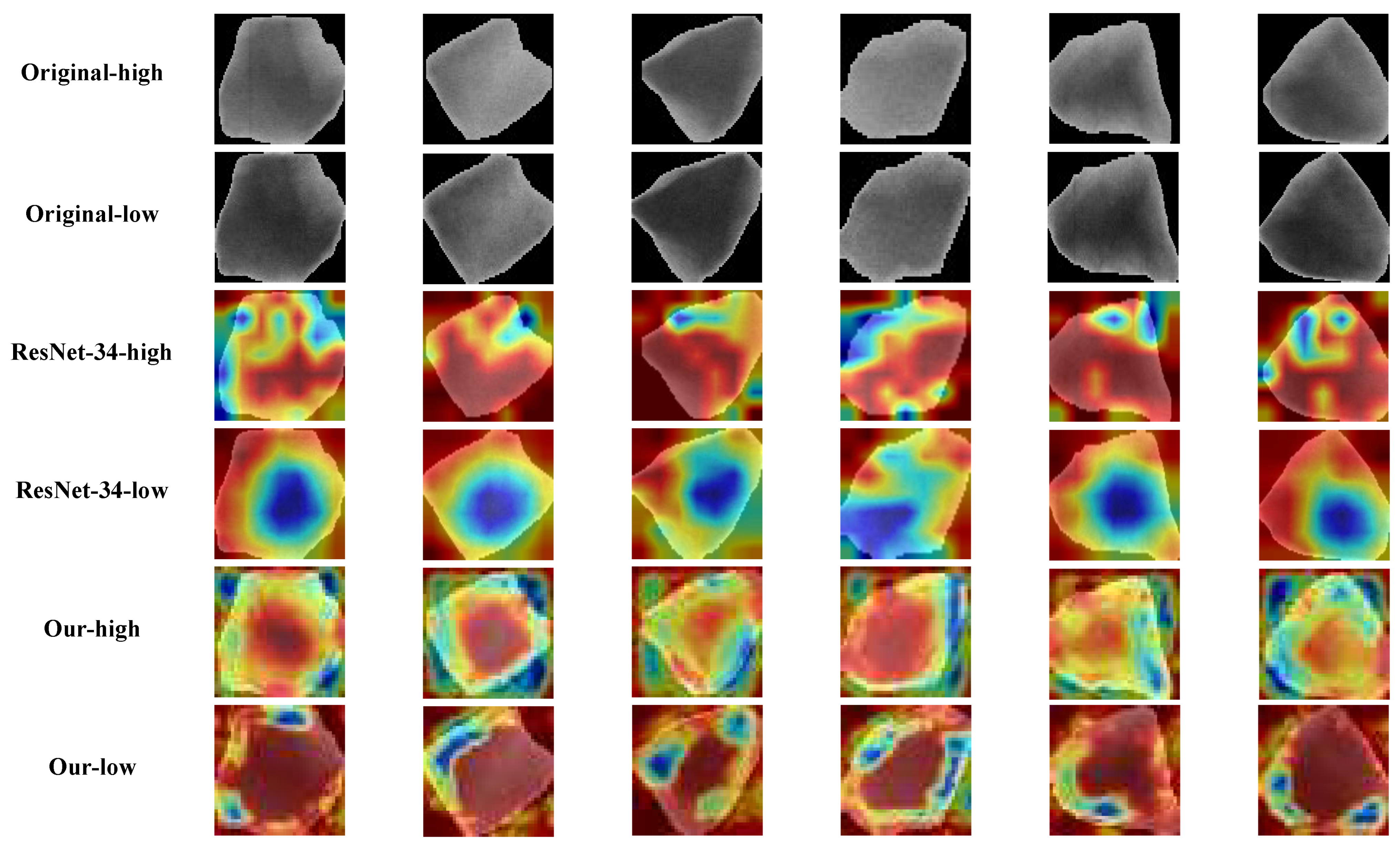

4.4. Visualization

5. Conclusions

- (1) The experimental results show that fusing dual-energy spectral image data improves the classification performance of the model. Compared with single-spectrum input, recall is slightly lower than with the low-energy spectrum alone, but the other four indicators increase by approximately 1 percentage point on average. Furthermore, our model achieves the best overall classification performance with only 1.32 M parameters (6.2% of ResNet-34). These results demonstrate the potential and effectiveness of combining dual-energy spectral images with multi-modal learning for sorting copper ore from waste rock.

- (2) The slightly poorer performance of the model on high-energy spectrum data might be caused by an unsuitable choice of energy spectrum. The strong penetration of high-energy X-rays may cause effective information to be lost from the image, which degrades classification. Because of data collection constraints, experiments with additional energy spectra have not been conducted. Nevertheless, the existing results show that multi-energy spectrum classification has great potential, and model performance is expected to improve further with a more appropriate choice of energy spectra.

- (3) Through extensive visualizations, we have gained an in-depth understanding of the classification mechanism of the model. The visualization results show that the model tends to classify using the internal structure of the ore rather than its external contour.

- (4) Although the classification method based on dual-energy spectral images is effective, its data collection is more difficult and more costly than that of the general SE-XRT method. Therefore, exploring simpler and more efficient network structures and modal fusion methods, as well as testing more effective energy spectra, are key directions for improving this method.
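
The roughly one-point average gain and the 6.2% parameter ratio quoted in conclusion (1) can be re-derived from the reported tables. The short check below does that arithmetic; the numbers are copied from the single- versus dual-spectrum ablation table and the comparison table, while the names `ablation` and `mean_gain` are only illustrative.

```python
# Mean metric values (%) copied from the single- vs dual-spectrum ablation table.
ablation = {
    "high": {"AUC": 84.24, "F1": 85.10, "Recall": 85.58, "Precision": 84.64, "Accuracy": 81.50},
    "low":  {"AUC": 84.79, "F1": 85.29, "Recall": 85.94, "Precision": 84.66, "Accuracy": 81.70},
    "dual": {"AUC": 85.26, "F1": 85.91, "Recall": 85.72, "Precision": 86.10, "Accuracy": 82.64},
}

def mean_gain(baseline, metrics=("AUC", "F1", "Precision", "Accuracy")):
    """Average gain, in percentage points, of dual-energy input over a baseline."""
    diffs = [ablation["dual"][m] - ablation[baseline][m] for m in metrics]
    return sum(diffs) / len(diffs)

gain_vs_high = mean_gain("high")   # about 1.11 points
gain_vs_low = mean_gain("low")     # about 0.87 points

# Recall is the one metric that drops relative to the low-energy spectrum.
recall_change_vs_low = ablation["dual"]["Recall"] - ablation["low"]["Recall"]

# Parameter counts (M) from the comparison table: proposed model vs. ResNet-34.
param_ratio = 1.32 / 21.28         # about 6.2%
```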

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Yin, S.h.; Chen, W.; Fan, X.l.; Liu, J.m.; Wu, L.B. Review and prospects of bioleaching in the Chinese mining industry. Int. J. Miner. Metall. Mater. 2021, 28, 1397–1412.

2. Northey, S.; Mohr, S.; Mudd, G.; Weng, Z.; Giurco, D. Modelling future copper ore grade decline based on a detailed assessment of copper resources and mining. Resour. Conserv. Recycl. 2014, 83, 190–201.

3. Li, L.; Pan, D.; Li, B.; Wu, Y.; Wang, H.; Gu, Y.; Zuo, T. Patterns and challenges in the copper industry in China. Resour. Conserv. Recycl. 2017, 127, 1–7.

4. Lu, H.; Qi, C.; Chen, Q.; Gan, D.; Xue, Z.; Hu, Y. A new procedure for recycling waste tailings as cemented paste backfill to underground stopes and open pits. J. Clean. Prod. 2018, 188, 601–612.

5. Kiventerä, J.; Perumal, P.; Yliniemi, J.; Illikainen, M. Mine tailings as a raw material in alkali activation: A review. Int. J. Miner. Metall. Mater. 2020, 27, 1009–1020.

6. Ebrahimi, M.; Abdolshah, M.; Abdolshah, S. Developing a computer vision method based on AHP and feature ranking for ores type detection. Appl. Soft Comput. 2016, 49, 179–188.

7. Shatwell, D.G.; Murray, V.; Barton, A. Real-time ore sorting using color and texture analysis. Int. J. Min. Sci. Technol. 2023, 33, 659–674.

8. Tuşa, L.; Kern, M.; Khodadadzadeh, M.; Blannin, R.; Gloaguen, R.; Gutzmer, J. Evaluating the performance of hyperspectral short-wave infrared sensors for the pre-sorting of complex ores using machine learning methods. Miner. Eng. 2020, 146, 106150.

9. Qiu, J.; Zhang, Y.; Fu, C.; Yang, Y.; Ye, Y.; Wang, R.; Tang, B. Study on photofluorescent uranium ore sorting based on deep learning. Miner. Eng. 2024, 206, 108523.

10. Kern, M.; Akushika, J.N.; Godinho, J.R.; Schmiedel, T.; Gutzmer, J. Integration of X-ray radiography and automated mineralogy data for the optimization of ore sorting routines. Miner. Eng. 2022, 186, 107739.

11. Xu, Q.H.; Liang, Z.A.; Duan, H.; Sun, Z.M.; Wu, W.X. The efficient utilization of low-grade scheelite with X-ray transmission sorting and mixed collectors. Tungsten 2023, 5, 570–580.

12. Fang, Z.; Song, S.; Wang, H.; Yan, H.; Lu, M.; Chen, S.; Li, S.; Liang, W. Mineral classification with X-ray absorption spectroscopy: A deep learning-based approach. Miner. Eng. 2024, 217, 108964.

13. Liu, Z.; Wang, Y.; Vaidya, S.; Ruehle, F.; Halverson, J.; Soljačić, M.; Hou, T.Y.; Tegmark, M. KAN: Kolmogorov-Arnold Networks. In Proceedings of the International Conference on Learning Representations (ICLR), Singapore, 24–28 April 2025.

14. Tessier, J.; Duchesne, C.; Bartolacci, G. A machine vision approach to on-line estimation of run-of-mine ore composition on conveyor belts. Miner. Eng. 2007, 20, 1129–1144.

15. Zhu, X.F.; Zhang, C.; Huang, X.W.; Song, W.L.; Lu, L.N.; Hu, Q.C.; Shao, Y.Q. Principal component analysis of mineral and element composition of ores from the Bayan Obo Nb-Fe-REE deposit: Implication for mineralization process and ore classification. Ore Geol. Rev. 2024, 167, 105972.

16. Chen, Y.; Jiang, C.; Hyyppa, J.; Qiu, S.; Wang, Z.; Tian, M.; Li, W.; Puttonen, E.; Zhou, H.; Feng, Z.; et al. Feasibility study of ore classification using active hyperspectral LiDAR. IEEE Geosci. Remote Sens. Lett. 2018, 15, 1785–1789.

17. Akbar, S.; Abdolmaleki, M.; Ghadernejad, S.; Esmaeili, K. Applying knowledge-based and data-driven methods to improve ore grade control of blast hole drill cuttings using hyperspectral imaging. Remote Sens. 2024, 16, 2823.

18. Windrim, L.; Melkumyan, A.; Murphy, R.J.; Chlingaryan, A.; Leung, R. Unsupervised ore/waste classification on open-cut mine faces using close-range hyperspectral data. Geosci. Front. 2023, 14, 101562.

19. Liu, Y.; Zhang, Z.; Liu, X.; Wang, L.; Xia, X. Performance evaluation of a deep learning based wet coal image classification. Miner. Eng. 2021, 171, 107126.

20. Liu, Y.; Zhang, Z.; Liu, X.; Lei, W.; Xia, X. Deep learning based mineral image classification combined with visual attention mechanism. IEEE Access 2021, 9, 98091–98109.

21. Liu, Y.; Zhang, Z.; Liu, X.; Wang, L.; Xia, X. Ore image classification based on small deep learning model: Evaluation and optimization of model depth, model structure and data size. Miner. Eng. 2021, 172, 107020.

22. Abdolmaleki, M.; Consens, M.; Esmaeili, K. Ore-Waste discrimination using supervised and unsupervised classification of hyperspectral images. Remote Sens. 2022, 14, 6386.

23. Chu, Y.; Luo, Y.; Chen, F.; Zhao, C.; Gong, T.; Wang, Y.; Guo, L.; Hong, M. Visualization and accuracy improvement of soil classification using laser-induced breakdown spectroscopy with deep learning. iScience 2023, 26, 106173.

24. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS), Long Beach, CA, USA, 4–9 December 2017.

25. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16×16 Words: Transformers for Image Recognition at Scale. In Proceedings of the International Conference on Learning Representations (ICLR), Vienna, Austria, 3–7 May 2021.

26. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer Using Shifted Windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021; pp. 10012–10022.

27. Liu, Y.; Wang, X.; Zhang, Z.; Deng, F. OreFormer: Ore sorting transformer based on ConvNet and visual attention. Nat. Resour. Res. 2024, 33, 521–538.

28. Zhou, W.; Wang, H.; Wan, Z. Ore image classification based on improved CNN. Comput. Electr. Eng. 2022, 99, 107819.

29. Liu, Y.; Wang, X.; Zhang, Z.; Deng, F. Deep learning based data augmentation for large-scale mineral image recognition and classification. Miner. Eng. 2023, 204, 108411.

30. Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial networks. Commun. ACM 2020, 63, 139–144.

31. Wang, Q.q.; Sun, L.; Cao, Y.; Wang, X.; Qiao, Y.; Xiang, M.t.; Liu, G.b.; Sun, W. Recovery of copper and cobalt from waste rock in Democratic Republic of Congo by gravity separation combined with flotation. Trans. Nonferrous Met. Soc. China 2025, 35, 602–612.

32. Iyakwari, S.; Glass, H.J.; Rollinson, G.K.; Kowalczuk, P.B. Application of near infrared sensors to preconcentration of hydrothermally-formed copper ore. Miner. Eng. 2016, 85, 148–167.

33. Liu, Z.; Kou, J.; Yan, Z.; Wang, P.; Liu, C.; Sun, C.; Shao, A.; Klein, B. Enhancing XRF sensor-based sorting of porphyritic copper ore using particle swarm optimization-support vector machine algorithm. Int. J. Min. Sci. Technol. 2024, 34, 545–556.

34. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

35. Dong, J.; Jiang, J.; Jiang, K.; Li, J.; Zhang, Y. Fast and Accurate Gigapixel Pathological Image Classification with Hierarchical Distillation Multi-Instance Learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 10–17 June 2025; pp. 30818–30828.

36. He, K.; Zhang, X.; Ren, S.; Sun, J. Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015.

37. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

38. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. In Proceedings of the International Conference on Learning Representations (ICLR), San Diego, CA, USA, 7–9 May 2015.

39. Howard, A.; Sandler, M.; Chen, B.; Wang, W.; Chen, L.C.; Tan, M.; Chu, G.; Vasudevan, V.; Zhu, Y.; Pang, R.; et al. Searching for MobileNetV3. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1314–1324.

40. Tan, M.; Le, Q. EfficientNetV2: Smaller Models and Faster Training. In Proceedings of the International Conference on Machine Learning (ICML), PMLR, Virtual Event, 18–24 July 2021; pp. 10096–10106.

41. Liu, Z.; Mao, H.; Wu, C.Y.; Feichtenhofer, C.; Darrell, T.; Xie, S. A ConvNet for the 2020s. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 11966–11976.

42. Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual explanations from deep networks via gradient-based localization. Int. J. Comput. Vis. 2020, 128, 336–359.

| Split | Ore | Waste | Total |
|---|---|---|---|
| train | 13,422 | 8317 | 21,739 |
| val | 2876 | 1782 | 4658 |
| test | 2877 | 1783 | 4660 |
| Total | 19,175 | 11,882 | 31,057 |
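
As a quick sanity check on the dataset table, the snippet below recomputes the totals, the share of samples in each split, and the ore fraction per split. The counts are copied from the table; the variable names are illustrative. The arithmetic suggests a split of roughly 70/15/15 with about 61.7% ore in every subset, which is what a class-stratified split would produce, although the table itself does not state how the split was made.

```python
# (ore, waste) image-pair counts per split, copied from the dataset table.
splits = {"train": (13422, 8317), "val": (2876, 1782), "test": (2877, 1783)}

total_ore = sum(ore for ore, _ in splits.values())
total_waste = sum(waste for _, waste in splits.values())
total = total_ore + total_waste

# Fraction of all pairs that falls into each split.
split_share = {name: (ore + waste) / total for name, (ore, waste) in splits.items()}

# Fraction of ore samples inside each split (class balance).
ore_share = {name: ore / (ore + waste) for name, (ore, waste) in splits.items()}

for name in splits:
    print(f"{name}: {split_share[name]:.1%} of data, {ore_share[name]:.1%} ore")
```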

| Model | AUC | F1 | Recall | Precision | Accuracy | Params (M) |
|---|---|---|---|---|---|---|
| AlexNet [37] | 85.81±0.17 | 86.24±0.16 | 85.36±0.43 | 87.10±0.47 | 83.18±0.22 | 14.57 |
| VGG-16 [38] | 85.59±0.07 | 86.21±0.11 | 85.32±0.26 | 87.12±0.14 | 83.15±0.12 | 134.27 |
| ResNet-34 [34] | 85.32±0.31 | 86.25±0.12 | 86.12±0.81 | 86.40±0.63 | 83.05±0.10 | 21.28 |
| MobileNet V3-small [39] | 85.30±0.19 | 85.94±0.28 | 85.48±0.45 | 86.40±0.51 | 82.73±0.36 | 1.52 |
| EfficientNet V2 [40] | 85.00±0.44 | 85.76±0.29 | 85.53±0.11 | 86.00±0.50 | 82.47±0.40 | 20.18 |
| ConvNeXt-Tiny [41] | 83.73±0.28 | 84.88±0.47 | 86.79±0.40 | 83.05±0.65 | 80.91±0.63 | 27.82 |
| ViT-Base [25] | 85.63±0.60 | 86.04±0.41 | 85.96±0.12 | 86.12±0.88 | 82.78±0.60 | 85.41 |
| Swin-Tiny [26] | 84.84±0.12 | 85.75±0.16 | 85.92±0.25 | 85.59±0.51 | 82.37±0.27 | 24.52 |
| Our Method | 85.90±0.09 | 86.28±0.09 | 85.46±0.18 | 87.13±0.31 | 83.23±0.15 | 1.32 |
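
The F1 column in the comparison table can be cross-checked against the precision and recall columns, since F1 is the harmonic mean of the two. The sketch below does this for the reported mean values; the function and variable names are illustrative. Small gaps are expected because the table reports averages over repeated runs, and the F1 of averaged precision and recall is not exactly the average of per-run F1 scores.

```python
def f1_from_precision_recall(precision, recall):
    """Harmonic mean of precision and recall (same units as the inputs)."""
    return 2.0 * precision * recall / (precision + recall)

# (precision, recall, reported F1) in percent, copied from the comparison table.
reported = {
    "AlexNet": (87.10, 85.36, 86.24),
    "VGG-16": (87.12, 85.32, 86.21),
    "ResNet-34": (86.40, 86.12, 86.25),
    "MobileNet V3-small": (86.40, 85.48, 85.94),
    "EfficientNet V2": (86.00, 85.53, 85.76),
    "ConvNeXt-Tiny": (83.05, 86.79, 84.88),
    "ViT-Base": (86.12, 85.96, 86.04),
    "Swin-Tiny": (85.59, 85.92, 85.75),
    "Our Method": (87.13, 85.46, 86.28),
}

# Absolute gap between the recomputed and the reported F1 for each model.
deviations = {
    model: abs(f1_from_precision_recall(p, r) - f1)
    for model, (p, r, f1) in reported.items()
}
largest_gap = max(deviations.values())
```

For every row the recomputed value agrees with the reported F1 to within a few hundredths of a point.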

| Dataset | AUC | F1 | Recall | Precision | Accuracy | Params (M) |
|---|---|---|---|---|---|---|
| High energy spectrum | 84.24±0.24 | 85.10±0.20 | 85.58±0.33 | 84.64±0.44 | 81.50±0.29 | 0.62 |
| Low energy spectrum | 84.79±0.40 | 85.29±0.50 | 85.94±0.11 | 84.66±0.29 | 81.70±0.44 | 0.62 |
| Dual-energy spectrum | 85.26±0.11 | 85.91±0.17 | 85.72±0.32 | 86.10±0.31 | 82.64±0.22 | 1.24 |

| Fusion Method | AUC | F1 | Recall | Precision | Accuracy | Params (M) |
|---|---|---|---|---|---|---|
| Data-level fusion | 85.04±0.16 | 85.16±0.64 | 87.74±1.39 | 82.78±2.28 | 81.11±1.22 | 0.62 |
| Decision-level fusion | 84.64±0.09 | 85.69±0.18 | 86.09±0.42 | 85.29±0.07 | 82.25±0.17 | 1.24 |
| Feature-level fusion | 85.23±0.29 | 85.82±0.08 | 86.53±0.80 | 85.12±0.92 | 82.34±0.28 | 1.26 |
| Hybrid fusion | 85.41±0.22 | 85.78±0.10 | 85.43±0.37 | 86.14±0.47 | 82.52±0.18 | 1.27 |
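
The fusion table names data-level, decision-level, and feature-level fusion without the surrounding definitions. The toy sketch below shows how these three terms are commonly understood in multi-modal learning; it is an assumption about the general pattern, not a reproduction of the networks evaluated here, and the hybrid variant is not sketched because its definition is not available. The functions `extract` and `classify`, the weights, and the pixel values are all hypothetical stand-ins.

```python
import math

def extract(pixels, scale=1.0):
    """Toy 'tower': maps an image (flat pixel list) to two features."""
    return [scale * sum(pixels) / len(pixels), scale * max(pixels)]

def classify(features, weights):
    """Toy classifier head: logistic score in (0, 1), read as P(ore)."""
    z = sum(w * f for w, f in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))

high = [0.2, 0.4, 0.6]   # hypothetical high-energy image
low = [0.1, 0.5, 0.9]    # hypothetical low-energy image

# Data-level fusion: merge the raw inputs, then run a single tower and head.
data_level = classify(extract(high + low), [0.5, -0.5])

# Feature-level fusion: one tower per spectrum, concatenate the features,
# then apply one shared head to the joint feature vector.
fused_features = extract(high) + extract(low)
feature_level = classify(fused_features, [0.5, -0.5, 0.5, -0.5])

# Decision-level fusion: a full tower-plus-head per spectrum, then average
# the two independent scores.
decision_level = 0.5 * (
    classify(extract(high), [0.5, -0.5]) + classify(extract(low), [0.5, -0.5])
)
```

Under this reading, data-level fusion needs only one tower while the other schemes need two, which is consistent with the 0.62 M versus roughly 1.24 M parameter counts in the table.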

| Classifier | Layers | AUC | F1 | Recall | Precision | Accuracy | Params (M) |
|---|---|---|---|---|---|---|---|
| MLP | 2 | 84.51±0.17 | 85.51±0.20 | 86.27±0.28 | 84.77±0.48 | 81.95±0.30 | 1.24 |
| MLP | 4 | 84.96±0.46 | 85.59±0.08 | 87.23±0.40 | 84.01±0.52 | 81.86±0.20 | 1.25 |
| MLP | 6 | 84.81±0.25 | 85.68±0.14 | 85.88±0.63 | 85.48±0.41 | 82.27±0.11 | 1.25 |
| KAN | 2 | 85.41±0.22 | 85.78±0.10 | 85.43±0.37 | 86.14±0.47 | 82.52±0.18 | 1.27 |
| KAN | 4 | 85.45±0.20 | 86.18±0.16 | 86.05±0.71 | 86.32±0.41 | 82.96±0.09 | 1.31 |
| KAN | 6 | 85.66±0.12 | 86.50±0.14 | 86.11±0.75 | 86.88±0.58 | 83.40±0.11 | 1.32 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Guo, X.; Min, X.; Liang, Y.; Tang, X.; Gao, Z. Efficient Multi-Modal Learning for Dual-Energy X-Ray Image-Based Low-Grade Copper Ore Classification. Minerals 2025, 15, 1150. https://doi.org/10.3390/min15111150
