Abstract

This study presents a domain-adapted retrieval-augmented generation (RAG) pipeline that integrates geological knowledge with large language models (LLMs) to support intelligent question answering in the metallogenic domain. Focusing on the Qin–Hang metallogenic belt in South China, we construct a bilingual question-answering (QA) corpus derived from 615 authoritative geological publications, covering topics such as regional tectonics, ore-forming processes, structural evolution, and mineral resources. Using the ChatGLM3-6B language model and the LangChain framework, we embed the corpus into a semantic vector database via Sentence-BERT and FAISS, enabling dynamic retrieval and grounded response generation. In a comparative evaluation using BLEU, precision, recall, and F1 metrics, the RAG-enhanced model outperforms baseline LLMs, including ChatGPT-4o, Bing, and Gemini, achieving an F1 score of 0.8689. The approach demonstrates high domain adaptability and reproducibility. All datasets and code are openly released to facilitate application in other metallogenic belts. This work illustrates the potential of LLM-based knowledge engineering to support digital geoscientific research and smart mining.

1. Introduction

With the rapid advancement of artificial intelligence, language models (LMs) have made significant strides in natural language processing (NLP); trained on large-scale data, they can now understand, generate, and process complex natural language text [1,2]. Recently, the continuous development of large language models (LLMs), such as GPT (Generative Pretrained Transformer) [3], BERT (Bidirectional Encoder Representations from Transformers) [4], Anthropic’s Claude [5], and LLaMA (Large Language Model Meta AI) [6], has driven rapid progress in NLP. Pretrained on extensive corpora, LLMs exhibit powerful general-purpose abilities in fields such as medicine [7,8], ecology [9], and civil engineering [10,11,12]. However, general-purpose LLMs, which rely on broad, nonspecific datasets, often lack the specialized knowledge required for complex tasks in specific fields [13,14,15,16]. As a result, these models may struggle with specialized questions, sometimes fabricating legal precedents [17], producing misinformation in news content [18], or even posing risks in clinical settings [19]. Without domain-specific knowledge, general LLMs frequently fall back on templated responses, limiting their ability to deliver accurate and contextually relevant answers [20].

To overcome these challenges, Domain-Specific Large Language Models (DSLLMs) have emerged. Unlike general-purpose models (e.g., ChatGPT, Bing, Google’s Gemini, and BERT), DSLLMs are designed and fine-tuned for specialized domains, incorporating domain-specific data to significantly enhance performance and reduce hallucinations (i.e., fabricated content) or irrelevant responses in complex tasks [21,22]. DSLLMs typically rely on three approaches: prompt engineering, fine-tuning, and retrieval-augmented generation (RAG). Prompt engineering improves output quality by refining input prompts [23], and studies on prompt engineering for large models cover areas such as biomedicine, education, and academia [24,25,26]. For example, Heston et al. (2023) [27] used prompt engineering to optimize AI-driven generative language models (GLMs) in medical education; Lee et al. (2024) [28] developed an automated question generation (AQG) system through prompt engineering for English-language education; and Giray (2023) [29] examined the role of prompt engineering in enhancing ChatGPT for academic writing. In addition, Velásquez-Henao et al. (2023) [30] explored prompt engineering to improve interactions with language models in engineering applications.

Fine-tuning further trains large models on labeled data from specific domains, enhancing performance on domain-specific tasks [31]. Examples include BloombergGPT [32,33] and FinGPT [34] in the financial sector, MedPaLM [35] in medicine, and HuatuoGPT [36] and BenTsao [37], which adapt LLMs to end-to-end medical consultations in Chinese. In the geosciences, models such as GeoGPT, SkyGPT, K2, and GeoGalactica specialize in geological tasks through fine-tuning. GeoGPT [38], for example, integrates geospatial data for diverse geospatial tasks, whereas SkyGPT [39] supports analysis and prediction in atmospheric science, climate change, and astronomy. Both K2 [40] and GeoGalactica [41] are trained on interdisciplinary datasets in geology, climate science, and remote sensing, serving fields such as mathematical geology, biogeosciences, and geochemistry.

Although prompt engineering and fine-tuning enhance domain-specific performance, these methods can lead to “overfitting,” reducing effectiveness in broader tasks. To address this, retrieval-augmented generation (RAG) has been widely adopted. RAG combines information retrieval with generation, allowing models to supplement generated content with external knowledge and reducing the likelihood of hallucinations [42]. Studies by Guu et al. (2020) [43], Gao et al. (2023) [44], and Fan et al. (2024) [45] demonstrate that combining dynamic retrieval with model pretraining addresses knowledge limitations and improves generation accuracy. For example, BloombergGPT uses RAG to access up-to-date financial information, enhancing accuracy and timeliness [46]. In medical dialogs, MedPaLM [47] applies RAG to improve responses to complex medical queries. RAG is also used in academic research, where SciBERT [48] leverages it to extract relevant literature content, generating research suggestions with improved relevance.

As a highly complex and data-intensive field, geology traditionally employs methods such as field surveys, geological mapping [49,50,51], numerical simulation [52,53], geophysical exploration [54,55], and remote sensing technology [56,57]. These methods provide the foundation for understanding geological phenomena. However, with the rapid growth of data volumes and the emergence of heterogeneous multisource data, traditional approaches struggle to address challenges related to multiscale problems and data integration [58]. Knowledge graphs, which offer a semantically structured approach for storing and reasoning with information, show significant application potential in this context. For example, Zhou et al. (2021) [59] constructed a knowledge graph for porphyry copper deposits, linking “earth systems, mineralization systems, exploration systems, and predictive evaluation systems,” to offer an intelligent solution for mineral resource forecasting. Zhang et al. (2024) [60] developed a knowledge graph focused on porphyry copper prospecting in the Qin–Hang metallogenic belt, integrating natural language processing and machine learning for automated knowledge extraction, reasoning, and visualization. This system provides efficient, accurate support for mineral prospecting. Zhang et al. (2024) [61] further developed an ontology library for mineral deposits via Neo4j, highlighting ontology’s role in data structuring and inferential analysis and addressing the challenges of semantic description and interpretability for multisource data.

Recently, intelligent dialog systems have given geological research new tools for rapidly integrating and retrieving multisource data. Key question-answering systems in this field include GeoDeepDive [62], GeoAI [63,64], and SeisBench [65]. GeoDeepDive [62] combines natural language processing (NLP) and machine learning to extract information from the geological literature, allowing researchers to locate information more efficiently. It is widely used across subfields: Maio et al. [66] employed GeoDeepDive for entity linking and uncertainty management in geological research, whereas Goring et al. [67] used GeoDeepDive workflows to identify ice-rafted debris (IRD) from the Pleistocene and Pliocene epochs. Additionally, Husson et al. [68] and Kumpf et al. [69] integrated GeoDeepDive with Macrostrat, advancing deep-time research and accelerating the identification of geological evidence for evaporites. GeoAI [70], by contrast, specializes in geological exploration and data analysis and combines GIS with deep learning models to address questions about geological features and resources; it plays a significant role in urban planning [70] and environmental protection [71]. SeisBench [72], an open-source deep learning tool for seismic data, integrates seismological data with NLP technologies to classify seismic waves and identify events, supporting applications such as earthquake event detection [73,74] and source parameter estimation [62,75]. Despite their strong performance in specific areas, these systems often lack broad interoperability, real-time processing capabilities, and data integration. For example, GeoDeepDive focuses primarily on extracting information from the geological literature, whereas GeoAI specializes in geological exploration and data analysis; both tend to work with static historical data and lack real-time analysis capabilities. SeisBench also faces challenges in processing large-scale, real-time data streams, especially in responding quickly to earthquake events. Through multimodal data integration, cross-domain processing, and enhanced generalization capabilities, domain-specific large models offer a promising approach to address these limitations, providing more flexible and comprehensive support for geological research [76].

To address these challenges, this study combines the ChatGLM3-6B model with the open-source LangChain framework to develop a domain-specific large language model for geology, using the Qin–Hang metallogenic belt as a case study. By integrating literature and database resources, the system enables efficient knowledge retrieval and automated processing of heterogeneous data, yielding an intelligent question-answering system that spans multidisciplinary knowledge and improves automated responses to questions about the Qin–Hang metallogenic belt. The study involves the following steps: (1) developing the model and application on the basis of ChatGLM3-6B and the LangChain framework; (2) constructing a comprehensive knowledge base covering the Qin–Hang metallogenic belt, including academic papers, geological reports, and books; and (3) evaluating and testing the system to optimize the performance of the intelligent question-answering system. This study provides a new tool for research on the Qin–Hang metallogenic belt, supporting the intelligent advancement of geological research in this region.

2. Related Research

2.1. Geological Background and Research Significance

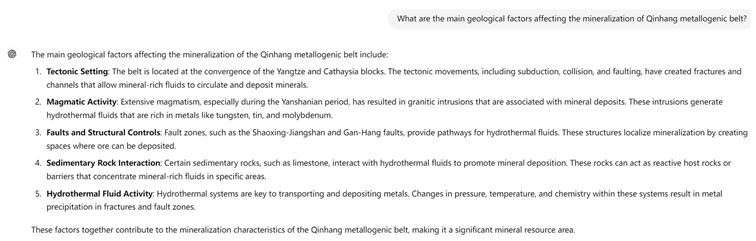

The Qin–Hang metallogenic belt, which is part of the South China metallogenic province, represents a plate junction zone formed by the Neoproterozoic collision of the Yangtze and Cathaysia ancient continental blocks. During the Mesozoic, it became the origin of the Yanshanian tectonic movement in South China, establishing itself as one of southeastern China’s most critical magmatic metallogenic belts [77,78]. This NE-trending belt spans approximately 2000 km from Qinzhou Bay in Guangxi through eastern Hunan and central Jiangxi to Hangzhou Bay in Zhejiang, with a width of 100–300 km and an overall arc-like shape [60]. Recent studies have recognized the Qin–Hang belt as a major and distinct polymetallic metallogenic zone in South China, featuring widely distributed copper, lead-zinc, tin, and tungsten deposits [79,80]. Geologically, it plays a pivotal role in South China’s tectonic framework, with unique characteristics that illuminate the region’s tectonic evolution. Analyzing its geological features and evolutionary history can support the assessment and prediction of potential geological hazards, such as earthquakes and landslides, providing a scientific basis for disaster risk mitigation [81]. The formation of this belt reflects the complexity of crustal movements and sedimentary environments and contributes valuable insights into the global Grenvillian orogeny, Rodinia supercontinent breakup, and Neoproterozoic metallogenic activities [79,82,83,84,85,86,87,88].

In geological exploration and research, however, challenges arise from scattered information, data heterogeneity, and the difficulty of integrating interdisciplinary knowledge. Data and findings related to the Qin–Hang belt are spread across various publications, databases, and exploration reports, making traditional retrieval methods inefficient and prone to omissions. Additionally, geological exploration involves diverse data types—such as geological maps, mineral distribution data, exploration records, and chemical analyses—which require significant manual processing and format conversion due to inconsistencies. Moreover, research on the Qin–Hang belt spans several disciplines, including mineralogy, geochemistry, and structural geology, making interdisciplinary data integration a complex, time-intensive task that hinders scientific progress.

As a result, developing a domain-specific large language model (DSLLM) for the Qin–Hang metallogenic belt has both academic and practical importance. By integrating extensive heterogeneous geological data and literature resources, such a model could significantly improve data processing efficiency and knowledge acquisition, streamlining traditional geological exploration workflows. This intelligent support can provide precise and efficient mineral resource assessments, drive further research on the Qin–Hang belt, and offer a scientific foundation for regional resource development.

To facilitate a better understanding of the spatial and structural characteristics of the Qin–Hang metallogenic belt, a simplified geological map illustrating the regional tectonic framework, major faults, and Yanshanian magmatic intrusions is provided in Figure 1.

Figure 1.

Simplified geological map of the Qin–Hang metallogenic belt.

2.2. Research on the ChatGLM3-6B Model

This study utilizes the ChatGLM3-6B model as its foundational framework. Developed by Tsinghua University’s Zhipu AI team and the Beijing Academy of Artificial Intelligence, ChatGLM3-6B is a third-generation bilingual pretrained model within the GLM series featuring 6 billion parameters. Despite its relatively modest parameter size, ChatGLM3-6B exhibits strong capabilities in understanding and generating Chinese text, as well as handling English, owing to its optimized architecture. These characteristics enable it to excel in cross-lingual tasks and manage complex scenarios across multiple languages [89].

Compared with its predecessors, ChatGLM3-6B retains their advantages in conversational fluency, fast response, and ease of deployment while further improving performance, particularly in environments with limited computing resources. Its compact and efficient design allows deployment both in the cloud and on local devices, making it suitable for applications ranging from personal inference tasks to enterprise-level localized services. ChatGLM3-6B is also optimized for complex interactions, including task-oriented dialog, open-domain question answering, and multiturn conversation. In practical dialog applications, the model’s robust context memory enables coherent multiturn exchanges. Across eight benchmark Chinese and English datasets, ChatGLM3-6B has shown significant improvements over earlier versions, demonstrating adaptability and robustness in bilingual settings. Its performance meets both academic and practical requirements for efficiency and reliability, and it is widely applicable to intelligent dialog, knowledge-based question answering, and text generation.

2.3. Overview of the LangChain Open-Source Framework

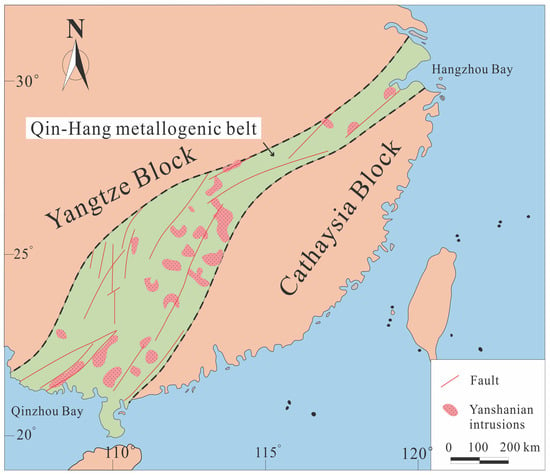

LangChain is an open-source framework designed to facilitate the development of applications based on large language models (LLMs). Key features include chain-based task execution, intelligent agents, memory mechanisms, prompt templates, and integration with external tools. These features work together to enable developers to rapidly build complex automated systems and versatile applications such as intelligent dialog systems, Q&A platforms, and information retrieval tools. As shown in Figure 2, the LangChain framework is structured into five layers: observability, deployments, cognitive architectures, integration components, and protocols. Each layer serves a distinct role in simplifying and supporting the development, integration, and deployment of LLM applications [90]. A detailed overview of each layer follows:

Figure 2.

LangChain framework [90].

- Observability Layer: At the top level, LangSmith provides tools to monitor and optimize application performance in real time. Debugging: Lets developers debug applications during development to quickly identify and resolve issues. Playground: An interactive testing platform for verifying application performance and behavior under realistic conditions. Evaluation: Tools for assessing performance and accuracy, helping improve response quality. Annotation: Supports data annotation and labeling for effective data management during testing and training. Monitoring: Real-time application monitoring to identify potential issues and support preventive maintenance. Together, these components give LangChain comprehensive debugging and monitoring capabilities, allowing developers to test, analyze, and improve application performance effectively.

- Deployments Layer: This layer comprises LangServe and Templates, which support application deployment and reuse. LangServe: Deploys chains as REST APIs, allowing LangChain applications to integrate seamlessly into broader systems. Templates: Preconfigured application templates that help developers quickly build and configure applications for common tasks. The deployment layer simplifies application integration and expansion, making it easier for developers to publish applications as REST APIs or to set up specific applications quickly from templates.

- Cognitive Architectures Layer: Comprising LangChain’s core modules, this layer is essential for building intelligent applications and includes the following. Chains: Support chain-based task execution, allowing developers to create workflows by linking operational steps. Agents: Intelligent task units that make decisions dynamically, choosing tools or executing specific tasks as required. Retrieval strategies: Methods for extracting relevant information from large datasets, as in knowledge-based Q&A. Together, these cognitive architectures give developers the tools to build complex, adaptable applications capable of automated decision-making and multistep tasks.

- Integration Components Layer: This layer contains the LangChain-Community module, which integrates components for model interaction and retrieval. Model I/O: Components for model calls, prompt templates, example selectors, and output parsing. Retrieval: Functions such as document loading, vector storage, and embedding models for precise information retrieval. Agent Tooling: Toolkits that enhance agent interactivity and data processing within applications. These components enable rapid setup and customization of applications that interact with LLMs, increasing flexibility and scalability.

- Protocol Layer: The LangChain Expression Language (LCEL) in this layer provides essential features such as parallelization, fault handling, and asynchronous execution, offering low-level support that ensures efficiency and scalability across the other modules.
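The composition idea behind chains and LCEL can be illustrated with a toy sketch in plain Python. The `Step` class, its `|` operator, and the three stages are hypothetical stand-ins written for illustration; they mimic the piping style of LCEL but are not LangChain’s actual `Runnable` classes, and the "LLM" stage simply appends a fixed string.

```python
from typing import Any, Callable


class Step:
    """Hypothetical pipeline stage (not LangChain's Runnable): wraps a callable
    so that stages can be chained with the `|` operator, LCEL-style."""

    def __init__(self, fn: Callable[[Any], Any]) -> None:
        self.fn = fn

    def __or__(self, other: "Step") -> "Step":
        # `a | b` builds a new stage that feeds a's output into b.
        return Step(lambda x: other.fn(self.fn(x)))

    def invoke(self, x: Any) -> Any:
        return self.fn(x)


# Toy stand-ins for a prompt template, an LLM call, and an output parser.
prompt = Step(lambda q: f"Question: {q}\nAnswer:")
fake_llm = Step(lambda p: p + " [model output]")  # a real chain would call an LLM here
parser = Step(lambda text: text.split("Answer:", 1)[1].strip())

chain = prompt | fake_llm | parser
print(chain.invoke("What controls mineralization in the Qin-Hang belt?"))  # -> [model output]
```

Each stage only has to accept the previous stage’s output, which is what lets prompt templates, models, retrievers, and parsers be swapped independently. LangChain’s real runnables layer batching, streaming, and asynchronous execution on top of this basic pattern.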

2.4. Overview of LangChain-Chatchat

LangChain-Chatchat is a local knowledge base Q&A application built on the LangChain framework and designed for offline use in Chinese-language scenarios. Leveraging LangChain and large language models (such as ChatGLM), it enables efficient information retrieval and Q&A in both Chinese and English. Because it supports custom local knowledge bases, users can perform Q&A without relying on online services. Its design is well suited to the Chinese context, flexibly handling both general inquiries and specialized queries. In addition, the project supports multiple open-source LLMs and embedding models, so the entire application can be built on an open-source stack and deployed privately under the user’s full control [91].

LangChain-Chatchat operates entirely offline, ensuring data privacy by enabling local deployment, which is especially useful for sensitive scenarios within internal corporate or organizational networks. Without relying on cloud services, LangChain-Chatchat completes data processing and storage locally, making it ideal for environments with strict privacy and security needs. With embedding models, LangChain-Chatchat converts knowledge base text into vector representations, enabling vector retrieval to quickly find answers most relevant to user queries. Large language models such as ChatGLM then generate coherent and natural responses. Users can import various document types (PDF, Word, Excel, etc.) into the knowledge base, creating rich local Q&A resources. By combining LangChain’s flexibility with ChatGLM’s generative capabilities, LangChain-Chatchat offers an efficient, secure, and easily deployable knowledge-based Q&A solution for local use.

3. Knowledge Base Construction

3.1. Technical Approach

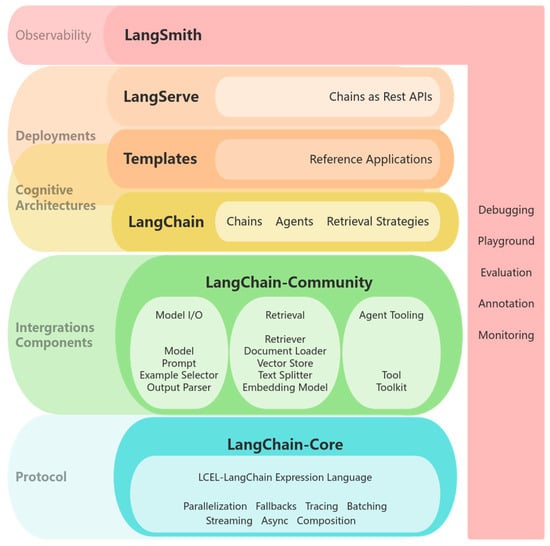

Retrieval-augmented generation (RAG) technology combines information retrieval with generative language models, enabling dialog systems to dynamically access external knowledge to generate more accurate and contextually relevant responses. Leveraging the LangChain framework, RAG facilitates the development of efficient and reliable Q&A systems by loading and processing local knowledge bases. The key steps are as follows (Figure 3) [92]:

Figure 3.

Structure of the RAG-based pipeline [92].

- Data Loading and Preprocessing: Files of various types in the local knowledge base (e.g., HTML, MD, JSON, CSV, PDF, and TXT) are parsed via an OCR parser to extract their content, which is converted into a standard text format for further processing. An unstructured data loader ensures compatibility with subsequent processing steps. The extracted text is then segmented according to predefined separators and chunk-length parameters using the RecursiveCharacterTextSplitter text splitter, which preserves logical coherence at the sentence or paragraph level.

- Text Vectorization: Each text segment is converted into a vector representation via the bge-large-zh-v1.5 embedding model to capture its semantic features. This process encodes each segment as a high-dimensional vector, which is stored in a vector database (Faiss) to facilitate similarity-based retrieval. Text vectorization not only retains semantic content but also enables efficient similarity searches through vector operations.

- Vector Indexing for Retrieval: All text vectors are stored in the Faiss database to construct a vector index, enabling approximate nearest neighbor (ANN) searches. When a user submits a question, the system can quickly identify similar text segments within the vector space. To optimize retrieval speed, the system chooses an appropriate indexing structure (e.g., HNSW or IVF) according to the size of the knowledge base and the use case, allowing rapid, accurate retrieval of relevant information from large datasets.

- Query Vectorization and Retrieval: Upon receiving a user query, the system first vectorizes the question. It then matches this query vector with stored vectors in the database to retrieve the top-K most relevant text segments. These retrieved segments serve as context, forming a “question + context” prompt that is fed into the generative language model to enhance response relevance.

- Answer Generation: The ChatGLM3-6B generative model processes the “question + context” prompt, synthesizing a response that draws on both the model’s intrinsic knowledge and the retrieved content. By accessing up-to-date external information from the knowledge base, the RAG approach enhances response accuracy and relevance, allowing the model to generate answers that are better tailored to complex user queries.
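The five steps above can be condensed into a minimal, dependency-free sketch of the retrieve-then-prompt loop. It illustrates the technique under stated assumptions and is not the system’s implementation: `recursive_split` is a simplified imitation of recursive character splitting, bag-of-words term counts stand in for bge-large-zh-v1.5 embeddings, exact brute-force cosine search stands in for a Faiss ANN index such as HNSW or IVF, and the toy documents, function names, and prompt wording are all hypothetical.

```python
import math
import re
from collections import Counter


def recursive_split(text, chunk_size=120, separators=("\n\n", "\n", ". ", " ")):
    """Simplified recursive character splitting: try coarse separators first,
    merge small pieces up to chunk_size, and recurse into oversized pieces."""
    if len(text) <= chunk_size:
        return [text] if text.strip() else []
    for sep in separators:
        if sep not in text:
            continue
        chunks, buf = [], ""
        for piece in text.split(sep):
            candidate = buf + sep + piece if buf else piece
            if len(candidate) <= chunk_size:
                buf = candidate  # merge small neighbouring pieces
                continue
            if buf:
                chunks.append(buf)
            if len(piece) > chunk_size:
                chunks.extend(recursive_split(piece, chunk_size, separators))
                buf = ""
            else:
                buf = piece
        if buf:
            chunks.append(buf)
        return [c for c in chunks if c.strip()]
    # No separator left: fall back to fixed-width cuts.
    return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]


def embed(text):
    """Stand-in 'embedding': bag-of-words term counts (hypothetical, not bge)."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a if t in b)
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def retrieve(query, chunks, vectors, k=2):
    """Exact top-k search by cosine similarity (Faiss would use an ANN index)."""
    q = embed(query)
    ranked = sorted(range(len(chunks)), key=lambda i: cosine(q, vectors[i]), reverse=True)
    return [chunks[i] for i in ranked[:k]]


def build_prompt(query, context_chunks):
    """Assemble the 'question + context' prompt passed to the generator."""
    context = "\n".join(f"- {c}" for c in context_chunks)
    return f"Answer using only the context below.\nContext:\n{context}\nQuestion: {query}\nAnswer:"


# Hypothetical toy knowledge base.
corpus = (
    "The Qin-Hang belt formed where the Yangtze and Cathaysia blocks collided in the Neoproterozoic.\n\n"
    "The belt hosts widely distributed copper, lead-zinc, tin, and tungsten deposits.\n\n"
    "Retrieval-augmented generation grounds model answers in retrieved text."
)
chunks = recursive_split(corpus, chunk_size=120)
vectors = [embed(c) for c in chunks]  # stands in for the Faiss index
query = "Where are tin and tungsten deposits?"
print(build_prompt(query, retrieve(query, chunks, vectors, k=1)))
```

Because the `\w+` tokenizer treats an unbroken run of Chinese characters as a single token, this sketch is only meaningful for English text; a real deployment relies on the embedding model to handle Chinese.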

3.2. Data Collection and Preprocessing

A total of 615 publications on the Qin–Hang metallogenic belt were collected from various academic platforms and publishers: 610 research papers and 5 specialized books. Of the papers, 213 were obtained from the China National Knowledge Infrastructure (CNKI) database, 240 from Google Scholar, 7 from the VIP database, 27 from MDPI, 118 from Elsevier journals, and 5 from Springer journals.
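The per-source counts listed above can be tallied as a quick consistency check: the papers sum to 610, and adding the 5 books gives 615 publications in total.

```python
# Publication counts per source, as reported in Section 3.2.
papers_by_source = {
    "CNKI": 213,
    "Google Scholar": 240,
    "VIP": 7,
    "MDPI": 27,
    "Elsevier": 118,
    "Springer": 5,
}
books = 5

total_papers = sum(papers_by_source.values())
print(total_papers, total_papers + books)  # -> 610 615
```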

4. Analysis and Evaluation of Intelligent Dialog Results

4.1. Dialog Interface

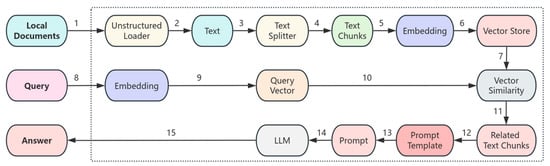

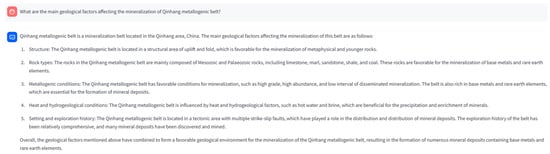

As shown in Figure 4, when a researcher asks on the dialog interface, “What are the main geological factors affecting the mineralization of the Qin–Hang metallogenic belt?”, the system first vectorizes the query and, using RAG, retrieves the most relevant content segments from the knowledge base. These segments serve as contextual information that, combined with the user’s question, is fed into the generative model to produce an answer describing the geological factors that influence mineralization in the Qin–Hang belt. Alongside the response, the interface displays the background literature and research reports that were retrieved, giving researchers insight into the sources and rationale behind the answer. This not only enhances the response’s credibility but also offers researchers additional reference material for a more thorough understanding of the geological features and mineralization processes of the Qin–Hang belt. Such transparency improves the user experience and supports further research and learning in geology.

Figure 4.

Dialog page (with knowledge base).

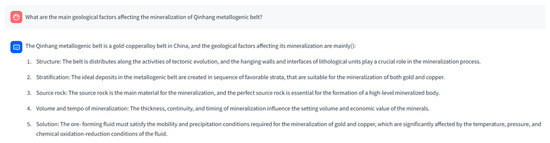

As shown in Figure 5, when the knowledge base is not utilized, the base model, ChatGLM3-6B, directly generates the response. In this case, the model relies solely on general pretrained knowledge without drawing on specialized geological information. While the base model provides broad knowledge coverage, it often lacks the specificity and depth required for specialized fields such as the Qin–Hang metallogenic belt. In contrast, integrating RAG technology with a knowledge base retrieval mechanism significantly improves the accuracy and professionalism of responses, as well as their credibility. This comparison highlights that combining RAG technology with a specialized knowledge base is essential for generating high-quality, expert-level answers, providing researchers with valuable reference information.

Figure 5.

Dialog page (base model only).

4.2. Comparative Analysis of Dialog Responses

The RAG-enhanced ChatGLM3-6B knowledge base system shows a clear advantage in domain expertise when generating responses about the Qin–Hang metallogenic belt, as shown in Figure 3. It uses specialized terminology and offers detailed perspectives on the belt’s structural characteristics, rock types, and mineralization conditions. For example, it highlights the significance of the uplifted and folded zones within the belt, which are critical structural features linked to geological conditions favorable for mineralization. Additionally, ChatGLM3-6B lists the main rock types essential for understanding the belt’s foundational geology, giving a focused answer that presents concise, relevant information for users with some background knowledge in the field.

ChatGPT-4o [93], as shown in Table 1, also performs well, giving a clear and organized explanation of the tectonic environment, magmatic activity, and hydrothermal processes. It provides comprehensive coverage of geological factors, especially in explaining how structural intersections affect mineralizing fluids, and presents them in a logical, coherent structure. Its clarity and logical flow make ChatGPT-4o particularly suitable for users seeking a broad understanding of mineralization without deep domain knowledge.

Table 1.

Answers generated by ChatGPT-4o and Bing.

By comparison, Bing [94] covers the essential geological factors but remains relatively introductory from a professional perspective, as shown in Table 1. Although it introduces geophysical elements, such as gravity and magnetic anomalies, which add a distinctive perspective, its content lacks the depth seen in ChatGLM3-6B and ChatGPT-4o, particularly in explaining key geological processes and mineralization mechanisms. Bing’s response is therefore better suited as an introductory overview and may lack the depth required by professional users.

In summary, the ChatGLM3-6B RAG model excels at delivering specialized, domain-specific information, making it an effective knowledge support tool for the Qin–Hang metallogenic belt. ChatGPT-4o performs well in terms of clarity and logical structure, making it well suited to users looking for a comprehensive, general overview. Bing provides a basic overview of this domain, covering essential points but with limited depth.

4.3. Evaluation

4.3.1. Introduction to the BLEU Evaluation Method

This study uses the BLEU (bilingual evaluation understudy) scoring method [95] to compare the Qin–Hang metallogenic belt knowledge content generated by five large language models (ChatGLM3-6B, ChatGLM3-6B RAG, ChatGPT-4o [93], Bing [94], and Gemini [96]). BLEU is an automated evaluation metric based on n-gram matching that measures the similarity between generated and reference texts. BLEU scoring involves the following key steps:

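n-gram Matching: BLEU segments both the generated and reference texts into n-grams and counts the occurrences of each n-gram in both texts, taking the minimum of the two counts (clipping) as the number of matches.

n-gram Precision Calculation: BLEU calculates precision for 1- to 4-gram matches using the n-gram precision $P_n$ defined in Equation (1):

$$P_n = \frac{\sum_{g} \min\left(\mathrm{count}_{\mathrm{gen}}(g),\ \mathrm{count}_{\mathrm{ref}}(g)\right)}{\sum_{g} \mathrm{count}_{\mathrm{gen}}(g)} \tag{1}$$

Brevity penalty (BP): To discourage excessively short responses, BLEU applies a brevity penalty when the generated text is shorter than the reference text, calculated as shown in Equation (2):

$$BP = \begin{cases} 1, & c > r \\ e^{\,1 - r/c}, & c \le r \end{cases} \tag{2}$$

BLEU Score Calculation: Combining the n-gram precisions and the brevity penalty, the BLEU score is calculated as shown in Equation (3):

$$\mathrm{BLEU} = BP \cdot \exp\left(\sum_{n=1}^{N} \frac{1}{N} \log P_n\right) \tag{3}$$

where

$P_n$: Precision for n-grams of order $n$;

$c$: Length (in tokens) of the generated text;

$r$: Length (in tokens) of the reference text;

$BP$: Brevity penalty;

$N$: Maximum n-gram order, typically set to 4;

$g$: An individual n-gram in the generated text;

$\mathrm{count}_{\mathrm{gen}}(g)$: Count of n-gram $g$ in the generated text;

$\mathrm{count}_{\mathrm{ref}}(g)$: Count of n-gram $g$ in the reference text.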
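A compact implementation of Equations (1) to (3) may help make the computation concrete. This is a sketch for a single generated text and a single reference; whitespace tokenization, uniform weights of 1/N, and the absence of smoothing are assumptions, since the text does not state which BLEU implementation or tokenizer was used.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Count all n-grams of order n in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def modified_precision(gen, ref, n):
    """Equation (1): clipped n-gram matches divided by n-grams in the generated text."""
    gen_counts, ref_counts = ngrams(gen, n), ngrams(ref, n)
    total = sum(gen_counts.values())
    if total == 0:
        return 0.0
    matched = sum(min(cnt, ref_counts[g]) for g, cnt in gen_counts.items())
    return matched / total


def brevity_penalty(c, r):
    """Equation (2): penalize generated texts that are not longer than the reference."""
    if c == 0:
        return 0.0
    return 1.0 if c > r else math.exp(1 - r / c)


def bleu(generated, reference, max_n=4):
    """Equation (3) with uniform weights 1/N and no smoothing (assumption)."""
    gen, ref = generated.split(), reference.split()
    precisions = [modified_precision(gen, ref, n) for n in range(1, max_n + 1)]
    if min(precisions) == 0:
        return 0.0  # log(0) is undefined; unsmoothed BLEU is zero here
    log_avg = sum(math.log(p) for p in precisions) / max_n
    return brevity_penalty(len(gen), len(ref)) * math.exp(log_avg)


print(round(bleu("a b c d", "a b c d e f"), 4))  # -> 0.6065
```

Without smoothing, any n-gram order with no matches drives the score to zero, which is why short answers are often scored with a smoothed variant; for Chinese text, the tokens would typically be words from a segmenter or individual characters rather than whitespace-separated strings.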

4.3.2. Evaluation Metrics

In addition to BLEU, this study uses precision, recall, and the F1 score to evaluate the quality of model-generated content on the Qin–Hang metallogenic belt. Each metric captures a different aspect of generation quality:

Precision (Equation (4)) is the proportion of n-grams in the generated text that match the reference text, indicating accuracy:

$$\mathrm{Precision} = \frac{M}{N_g} \tag{4}$$

Recall (Equation (5)) measures the proportion of reference text content that is covered by the generated text, reflecting comprehensiveness:

$$\mathrm{Recall} = \frac{M}{N_r} \tag{5}$$

The F1 score (Equation (6)) balances precision and recall, providing an overall assessment of performance:

$$F_1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \tag{6}$$

where

$M$: number of matching n-grams between the generated text and the reference text;

$N_g$: total number of n-grams in the generated text;

$N_r$: total number of n-grams in the reference text;

Precision: the ratio of matched n-grams to the total n-grams in the generated text;

Recall: the ratio of matched n-grams to the total n-grams in the reference text;

F1 score: the harmonic mean of precision and recall, which balances the two metrics.
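The sketch below computes Equations (4)–(6) for one generated/reference pair. The n-gram order and the treatment of repeated n-grams are not specified here, so it assumes unigrams by default and counts $M$ with clipping (a multiset intersection), mirroring BLEU's matching step. The function name `overlap_prf1` is hypothetical.

```python
from collections import Counter


def ngram_counts(tokens, n):
    """Count every n-gram (as a tuple) in a list of tokens."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def overlap_prf1(gen, ref, n=1):
    """Return (precision, recall, F1) from clipped n-gram overlap."""
    gen_counts = ngram_counts(gen, n)
    ref_counts = ngram_counts(ref, n)
    m = sum((gen_counts & ref_counts).values())  # M: min of the two counts per n-gram
    n_g = sum(gen_counts.values())  # N_g: n-grams in the generated text
    n_r = sum(ref_counts.values())  # N_r: n-grams in the reference text
    precision = m / n_g if n_g else 0.0
    recall = m / n_r if n_r else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0  # avoid division by zero when nothing matches
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


if __name__ == "__main__":
    p, r, f = overlap_prf1("a b c d".split(), "a b e".split())
    print(p, r, round(f, 4))
```

For the generated text "a b c d" against the reference "a b e", $M = 2$, so precision is $2/4$, recall is $2/3$, and F1 is $4/7$.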

4.3.3. Evaluation Results

To provide a consistent standard for BLEU scoring, reference answers were created using verified content from Wikipedia, addressing core topics on the Qin–Hang metallogenic belt, such as geological background, structural features, resource distribution, mineralization mechanisms, and controlling factors, as shown in Table 2. These questions were reviewed and curated by experts to ensure comprehensive coverage of the key geological knowledge and mineralization rules.

Table 2.

Standard library of professional questions and reference answers.

Each model’s generated content was then evaluated sentence by sentence against the reference answers, and precision, recall, and F1 score were calculated. Scores range from 0 to 1, with higher scores indicating stronger similarity between the generated text and the reference answer, thus providing an automated measure of each model’s ability to generate accurate and relevant responses in this specialized field.
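How the sentence-level scores are combined into one value per model is not detailed here. One common choice, assumed in the hypothetical `macro_average` below, is the arithmetic mean over sentences. Averaging per-sentence F1 generally gives a different number from the harmonic mean of the averaged precision and recall, so the two procedures are not interchangeable.

```python
from statistics import mean


def macro_average(per_sentence):
    """Average (precision, recall, F1) triples over all evaluated sentences."""
    precisions, recalls, f1s = zip(*per_sentence)
    return mean(precisions), mean(recalls), mean(f1s)


def harmonic_f1(precision, recall):
    """F1 computed from a single precision/recall pair."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # Two sentences with mirrored precision/recall.
    scores = [(1.0, 0.5, harmonic_f1(1.0, 0.5)), (0.5, 1.0, harmonic_f1(0.5, 1.0))]
    p, r, f = macro_average(scores)
    print(p, r, f)  # mean of per-sentence F1
    print(harmonic_f1(p, r))  # F1 of the averaged precision and recall
```

In this toy case, the mean per-sentence F1 is $2/3$, whereas the F1 of the averaged precision and recall (both $0.75$) is $0.75$.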

Evaluation Results Analysis Based on the Professional Background of the Qin–Hang Metallogenic Belt (Table 3).

Table 3.

Assessment results.

Precision Comparison: The ChatGLM3-6B RAG model achieves the highest precision score (0.8663), indicating that integrating a specialized knowledge base for the Qin–Hang metallogenic belt improves the accuracy of responses, especially in describing structural features, mineralization mechanisms, and deposit characteristics. ChatGPT-4o and Bing follow with precision scores of 0.8507 and 0.8456, respectively, indicating strong accuracy for general-purpose models, although they lack the depth needed for highly specialized geological topics. Gemini scores 0.8152, higher than the base ChatGLM3-6B (0.7916) but still lower than the knowledge-enhanced model. The base model’s lower precision shows that general knowledge alone is insufficient for high-quality content in specialized geological contexts.

Recall Comparison: The ChatGLM3-6B RAG model’s recall of 0.8421 indicates broad coverage of essential Qin–Hang metallogenic belt knowledge, including geological background, deposit distribution, and mineralization processes, which is crucial for accurately representing the belt’s geological evolution. ChatGPT-4o achieves a comparable, slightly higher recall (0.8521), but without domain-specific data its responses lack specific support for detailed topics such as the precise stages of mineralization. Bing and Gemini score similarly (0.8145 and 0.8140), reflecting limited breadth in covering detailed geological structures and deposit types. The base ChatGLM3-6B model scores only 0.8022 in recall, underscoring the importance of a knowledge base for capturing detailed domain-specific information.

F1 Score Comparison: With an F1 score of 0.8535, the ChatGLM3-6B RAG model balances precision and recall effectively, using the knowledge base to provide accurate and complete responses on complex topics such as mineralization mechanisms, geological evolution, and resource distribution. ChatGPT-4o’s F1 score of 0.8514 indicates balanced responses, although they lack the depth of the knowledge-enhanced model. Bing and Gemini score 0.8298 and 0.8146, respectively, achieving a compromise between accuracy and coverage, but their lack of specialized knowledge limits their performance on complex geological questions. The base ChatGLM3-6B model has the lowest F1 score (0.7969), highlighting the difficulty of producing balanced, high-quality responses without a specialized knowledge base.

Overall, the ChatGLM3-6B RAG model achieves the highest precision and F1 score and a recall second only to ChatGPT-4o, performing particularly well on detailed questions concerning the geological background, deposit types, and mineralization mechanisms of the Qin–Hang belt. This underscores the effectiveness of a specialized knowledge base in enhancing response quality in professional domains. While ChatGPT-4o is stable as a general model, it lacks the detail needed for specialized geological topics. Bing and Gemini perform similarly but lack accuracy and coverage on complex topics such as mineralization processes and structural evolution. The base ChatGLM3-6B model scores lowest on all metrics, particularly compared with its knowledge-enhanced version, emphasizing the essential role of a knowledge base in achieving high accuracy and coverage in specialized fields.

5. Discussion

This study is an initial exploration into developing an intelligent conversational knowledge base for the Qin–Hang metallogenic belt with the ChatGLM3-6B large language model, focusing on the model’s performance when enhanced with a specialized knowledge base. Although this approach yielded notable improvements in generation quality, several limitations and areas for improvement were identified. First, the current Qin–Hang metallogenic belt knowledge base remains limited in scope; there is considerable potential to expand and refine it so that it covers the geological characteristics, mineralization processes, and regional evolution of the belt more comprehensively. Second, because the knowledge base relies mainly on publicly available data and literature, future involvement of domain experts could further improve its accuracy and relevance. Third, in terms of evaluation, this study used BLEU scores alongside precision, recall, and F1 scores to assess generation quality. While these automated metrics provide useful insight into content quality, they may not fully capture the depth of professional knowledge and practical applicability required in specialized domains. Incorporating additional evaluation methods, such as human expert assessment, could provide a more comprehensive evaluation of the model’s domain-specific performance.

In conclusion, building an intelligent knowledge base for the Qin–Hang metallogenic belt offers an effective pathway for improving the domain-specific performance of large language models. Structuring specialized knowledge and integrating it into the model substantially enhances generation quality and domain accuracy, which is especially important in fields such as geology that require advanced expertise [97]. Future research should focus on expanding the breadth and depth of the knowledge base and refining its integration with the generative model to increase its applicability in complex domains. Additionally, an automatically updated knowledge base could keep pace with ongoing geological research, ensuring that the generated content remains current and relevant. Further exploration of multimodal data, such as geological maps and remote sensing images, may also enrich the model’s interpretive and generative capabilities in handling complex geological information.

In light of the recent emergence of various geoscience-focused LLMs, such as GeoGPT [38], GeoGalactica [41], and K2 [40], this study provides a comparative analysis to better contextualize its contributions. As shown in Table 4, unlike existing systems that primarily rely on English-language corpora or structured knowledge graphs, our system introduces a bilingual (Chinese–English) domain-specific QA corpus centered on the Qin–Hang metallogenic belt. Leveraging the LangChain framework and the ChatGLM3-6B model, we implement a modular and reproducible retrieval-augmented generation (RAG) pipeline tailored for geological question answering.

Table 4.

A comparison of representative geoscience large language models in terms of architecture, language support, geographic focus, openness, QA capabilities, and evaluation.

In addition to its flexible architecture, the proposed system demonstrates notable performance gains over general-purpose models (e.g., GPT-4o, Bing, Gemini) in terms of domain-specific precision. Furthermore, its integration of source-grounded responses enhances interpretability and transparency, making it more aligned with the expectations of scientific reasoning. All source code, configuration files, and evaluation datasets have been fully open-sourced, facilitating reproducibility and broader adoption in academic and industrial settings.
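To illustrate the retrieve-then-generate step with source-grounded context, the sketch below ranks pre-embedded text chunks by cosine similarity and assembles a prompt that numbers each passage together with its source. It is a simplified stand-in, not the authors’ LangChain implementation: toy vectors replace Sentence-BERT embeddings, a linear scan replaces the FAISS index, and the chunk fields (`text`, `source`, `vec`), function names, and prompt wording are hypothetical. The resulting prompt would then be passed to the language model (ChatGLM3-6B in this study).

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def retrieve(query_vec, chunks, k=2):
    """Return the k chunks whose vectors are most similar to the query vector."""
    ranked = sorted(chunks, key=lambda c: cosine(query_vec, c["vec"]), reverse=True)
    return ranked[:k]


def build_prompt(question, retrieved):
    """Assemble a grounded prompt that numbers each passage and names its source."""
    context = "\n".join(
        f"[{i}] {c['text']} (source: {c['source']})" for i, c in enumerate(retrieved, 1)
    )
    return (
        "Answer using only the numbered passages below and cite them by number.\n"
        f"{context}\n\nQuestion: {question}\nAnswer:"
    )


if __name__ == "__main__":
    # Hypothetical chunks with toy 2-D embeddings.
    chunks = [
        {"text": "Passage on deposit types (hypothetical).", "source": "doc-1", "vec": [0.9, 0.1]},
        {"text": "Unrelated passage (hypothetical).", "source": "doc-2", "vec": [0.0, 1.0]},
    ]
    print(build_prompt("Which deposit types occur in the belt?", retrieve([1.0, 0.0], chunks, k=1)))
```

Keeping the source label next to each passage is what lets the generated answer be traced back to the underlying literature.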

Despite these contributions, several limitations remain. First, the current knowledge base is statically constructed, lacking mechanisms for automated updates from ongoing geological research. Second, while the corpus is built from public scientific literature, it currently lacks expert-validated ontologies or structured domain knowledge, which limits its ability to handle deep reasoning tasks involving geological semantics and terminology. Additionally, the system does not yet support multimodal inputs such as geological maps, stratigraphic sections, or remote sensing imagery—elements that are increasingly important in practical geological interpretation tasks.

Future work may focus on incorporating multimodal fusion between textual and visual modalities, integrating expert knowledge graphs into the pipeline, and testing the system’s adaptability to other metallogenic zones. These directions will further enhance the domain robustness, interpretability, and practical utility of AI-driven geological QA systems.

6. Conclusions

This study uses the ChatGLM3-6B large language model to construct a preliminary intelligent conversational knowledge base for the Qin–Hang metallogenic belt and evaluates its performance. By integrating retrieval-augmented generation (RAG), it investigates how a specialized knowledge base can enhance the effectiveness of large language models in a specific domain. The results indicate that ChatGLM3-6B augmented with the Qin–Hang metallogenic knowledge base achieves higher generation quality, with an F1 score of 0.8689, outperforming the base version without the knowledge base (0.7969) and other mainstream large language models, including ChatGPT-4o (0.8514), Bing (0.8298), and Gemini (0.8146). The RAG-enhanced ChatGLM3-6B model shows superior generation accuracy and strong content coverage for the Qin–Hang metallogenic belt, particularly for complex questions on mineralization mechanisms, geological background, and ore distribution. These findings suggest that combining a specialized knowledge base with RAG effectively addresses the limitations of general language models in domain-specific knowledge, improving the reliability and precision of generated responses.

Future work will focus on expanding the scope and depth of the knowledge base, refining the integration between the knowledge base and the language model, and incorporating evaluations from human experts for a more comprehensive assessment. These advancements aim to strengthen the model’s applicability in complex professional fields, laying a solid foundation for intelligent conversational systems in geological research and applications.

Author Contributions

Conceptualization, J.M. and Y.Z. (Yuqing Zhang); methodology, L.H.; software, Y.Z. (Yongzhang Zhou); validation, L.H., Q.Z. and J.M.; formal analysis, M.A.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research was financially supported by the National Key Research and Development Program of China (Grant No. 2022YFF0801201), the National Natural Science Foundation of China (Grant No. U1911202), and the Key-Area Research and Development Program of Guangdong Province (Grant No. 2020B1111370001).

Data Availability Statement

All data included in this study are available upon request by contacting the corresponding author.

Acknowledgments

We are grateful to anonymous reviewers for their constructive reviews on the manuscript, and to the editors for carefully revising the manuscript.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Zhou, Y.Z.; Xiao, F. Overview: A glimpse of the latest advances in artificial intelligence and big data geoscience research. Earth Sci. Front. 2024, 31, 1–6. [Google Scholar] [CrossRef]

- Liu, Y.; He, H.; Han, T.; Zhang, X.; Liu, M.; Tian, J.; Zhang, Y.; Wang, J.; Gao, X.; Zhong, T.; et al. Understanding LLMs: A Comprehensive Overview from Training to Inference. arXiv 2024, arXiv:2401.02038. [Google Scholar] [CrossRef]

- Liu, Y.; Han, T.; Ma, S.; Zhang, J.; Yang, Y.; Tian, J.; He, H.; Li, A.; He, M.; Liu, Z.; et al. Summary of chatgpt-related research and perspective towards the future of large language models. Meta-Radiology 2023, 1, 100017. [Google Scholar] [CrossRef]

- Boitel, E.; Mohasseb, A.; Haig, E. A Comparative Analysis of GPT-3 and BERT Models for Text-Based Emotion Recognition: Performance, Efficiency, and Robustness; UK Workshop on Computational Intelligence; Springer: Cham, Switzerland, 2023; pp. 567–579. [Google Scholar] [CrossRef]

- Anthropic, A.I. The Claude 3 Model Family: Opus, Sonnet, Haiku; 2024; Claude-3 Model Card; pp. 1–42. Available online: https://www-cdn.anthropic.com/de8ba9b01c9ab7cbabf5c33b80b7bbc618857627/Model_Card_Claude_3.pdf (accessed on 29 July 2025).

- Touvron, H.; Martin, L.; Stone, K.; Albert, P.; Almahairi, A.; Babaei, Y.; Scialom, T. Llama 2: Open foundation and fine-tuned chat models. arXiv 2023, arXiv:2307.09288. [Google Scholar] [CrossRef]

- Clusmann, J.; Kolbinger, F.R.; Muti, H.S. The future landscape of large language models in medicine. Commun. Med. 2023, 3, 141. [Google Scholar] [CrossRef] [PubMed]

- Karabacak, M.; Margetis, K. Embracing Large Language Models for Medical Applications: Opportunities and Challenges. Cureus 2023, 15, e39305. [Google Scholar] [CrossRef]

- Castro, A.; Pinto, J.; Reino, L.; Pipek, P.; Capinha, C. Large language models overcome the challenges of unstructured text data in ecology. bioRxiv 2024, 82, 102742. [Google Scholar] [CrossRef]

- Tan, S.Z.; Zheng, Z.; Lu, X.Z. Exploring and Discussion on the Application of Large Language Models in Construction Engineering. Ind. Constr. 2023, 53, 162–169. [Google Scholar] [CrossRef]

- Smetana, M.; Salles de Salles, L.; Sukharev, I.; Khazanovich, L. Highway Construction Safety Analysis Using Large Language Models. Appl. Sci. 2024, 14, 1352. [Google Scholar] [CrossRef]

- Dudhee, V.; Vukovic, V. How large language models and artificial intelligence are transforming civil engineering. Proc. Inst. Civil Eng. 2023, 176, 4. [Google Scholar] [CrossRef]

- Chen, Z.; Lin, M.; Wang, Z.; Zang, M.; Bai, Y. PreparedLLM: Effective pre-pretraining framework for domain-specific large language models. Big Earth Data 2024, 8, 649–672. [Google Scholar] [CrossRef]

- Lin, M.; Jin, M.; Li, J.; Bai, Y. GEOSatDB: Global civil earth observation satellite semantic database. Big Earth Data 2024, 8, 522–539. [Google Scholar] [CrossRef]

- Wang, D.; Tong, X.; Dai, C.; Guo, C.; Lei, Y.; Qiu, C.; Li, H.; Sun, Y. Voxel modeling and association of ubiquitous spatiotemporal information in natural language texts. Int. J. Digit. Earth 2023, 16, 868–890. [Google Scholar] [CrossRef]

- Augenstein, I.; Baldwin, T.; Cha, M.; Chakraborty, T.; Ciampaglia, G.L.; Corney, D.; Zagni, G. Factuality challenges in the era of large language models and opportunities for fact-checking. Nat. Mach. Intell. 2024, 6, 852–863. [Google Scholar] [CrossRef]

- Weiser, B.; Schweber, N. Lawyer who used ChatGPT faces penalty for made up citations. New York Times. 8 June 2023, p. 8. Available online: https://www.nytimes.com/2023/06/08/nyregion/lawyer-chatgpt-sanctions.html (accessed on 27 December 2023).

- Opdahl, A.L.; Tessem, B.; Dang-Nguyen, D.T.; Motta, E.; Setty, V.; Throndsen, E.; Trattner, C. Trustworthy journalism through AI. Data. Knowl. Eng. 2023, 146, 102182. [Google Scholar] [CrossRef]

- Shen, Y.; Heacock, L.; Elias, J.; Hentel, K.D.; Reig, B.; Shih, G.; Moy, L. ChatGPT and other large language models are double-edged swords. Radiology 2023, 307, e230163. [Google Scholar] [CrossRef]

- Farquhar, S.; Kossen, J.; Kuhn, L.; Gal, Y. Detecting hallucinations in large language models using semantic entropy. Nature 2024, 630, 625–630. [Google Scholar] [CrossRef]

- Ling, C.; Zhao, X.; Lu, J.; Deng, C.; Zheng, C.; Wang, J.; Zhao, L. Domain specialization as the key to make large language models disruptive: A comprehensive survey. arXiv 2023, arXiv:2305.18703. [Google Scholar] [CrossRef]

- Lu, R.S.; Lin, C.C.; Tsao, H.Y. Empowering Large Language Models to Leverage Domain-Specific Knowledge in E-Learning. Appl. Sci. 2024, 14, 5264. [Google Scholar] [CrossRef]

- Marvin, G.; Hellen, N.; Jjingo, D.; Nakatumba-Nabende, J. Prompt Engineering in Large Language Models. In Data Intelligence and Cognitive Informatics; Jacob, I.J., Piramuthu, S., Falkowski-Gilski, P., Eds.; ICDICI 2023; Algorithms for Intelligent Systems; Springer: Singapore, 2024. [Google Scholar] [CrossRef]

- Hu, Y.; Chen, Q.; Du, J.; Peng, X.; Keloth, V.K.; Zuo, X.; Xu, H. Improving large language models for clinical named entity recognition via prompt engineering. J. Am. Med. Inform. Assoc. 2024, 31, 1812–1820. [Google Scholar] [CrossRef]

- Hsueh, C.Y.; Zhang, Y.; Lu, Y.W.; Han, J.C.; Meesawad, W.; Tsai, R.T.H. NCU-IISR: Prompt Engineering on GPT-4 to Stove Biological Problems in BioASQ 11b Phase B. In Proceedings of the CLEF, Thessaloniki, Greece, 18–21 September 2023; pp. 114–121. Available online: https://api.semanticscholar.org/CorpusID:264441290 (accessed on 27 December 2023).

- Knoth, N.; Tolzin, A.; Janson, A.; Leimeister, J.M. AI literacy and its implications for prompt engineering strategies. Comput. Educ. Artif. Intell. 2024, 6, 100225. [Google Scholar] [CrossRef]

- Heston, T.F.; Khun, C. Prompt Engineering in Medical Education. Int. Med. Educ. 2023, 2, 198–205. [Google Scholar] [CrossRef]

- Lee, U.; Jung, H.; Jeon, Y.; Sohn, Y.; Hwang, W.; Moon, J.; Kim, H. Few-shot is enough: Exploring ChatGPT prompt engineering method for automatic question generation in english education. Educ. Inf. Technol. 2024, 29, 11483–11515. [Google Scholar] [CrossRef]

- Giray, L. Prompt Engineering with ChatGPT: A Guide for Academic Writers. Ann. Biomed. Eng. 2023, 51, 2629–2633. [Google Scholar] [CrossRef]

- Velásquez-Henao, J.D.; Franco-Cardona, C.J.; Cadavid-Higuita, L. Prompt Engineering: A methodology for optimizing interactions with AI-Language Models in the field of engineering. Dyna 2023, 90, 9–17. [Google Scholar] [CrossRef]

- Borzunov, A.; Ryabinin, M.; Chumachenko, A.; Baranchuk, D.; Dettmers, T.; Belkada, Y.; Raffel, C.A. Distributed inference and fine-tuning of large language models over the internet. Adv. Neural Inf. Process. Syst. 2024, 36, 12312–12331. [Google Scholar]

- Li, Y.; Wang, S.; Ding, H.; Chen, H. Large language models in finance: A survey. In Proceedings of the 4th ACM International Conference on AI in Finance, Brooklyn, NY, USA, 27–29 November 2023; pp. 374–382. [Google Scholar] [CrossRef]

- Wu, S.; Irsoy, O.; Lu, S.; Dabravolski, V.; Dredze, M.; Gehrmann, S.; Mann, G. Bloomberggpt: A large language model for finance. arXiv 2023, arXiv:2303.17564. [Google Scholar] [CrossRef]

- Liu, X.Y.; Wang, G.; Yang, H.; Zha, D. Fingpt: Democratizing internet-scale data for financial large language models. arXiv 2023, arXiv:2307.10485. [Google Scholar] [CrossRef]

- Singhal, K.; Tu, T.; Gottweis, J.; Sayres, R.; Wulczyn, E.; Hou, L.; Natarajan, V. Towards expert-level medical question answering with large language models. arXiv 2023, arXiv:2305.09617. [Google Scholar] [CrossRef] [PubMed]

- Zhang, H.; Chen, J.; Jiang, F.; Yu, F.; Chen, Z.; Li, J.; Chen, G.; Wu, X.; Zhang, Z.; Xiao, Q.; et al. HuatuoGPT, towards Taming Language Model to Be a Doctor. arXiv 2023, arXiv:2305.15075. [Google Scholar] [CrossRef]

- Wang, H.; Liu, C.; Xi, N.; Qiang, Z.; Zhao, S.; Qin, B.; Liu, T. HuaTuo: Tuning LLaMA Model with Chinese Medical Knowledge. arXiv 2023, arXiv:abs/2304.06975. [Google Scholar] [CrossRef]

- Zhang, Y.; Wei, C.; He, Z.; Yu, W. GeoGPT: An assistant for understanding and processing geospatial tasks. Int. J. Appl. Earth Obs. Geoinform. 2024, 131, 103976. [Google Scholar] [CrossRef]

- Nie, Y.; Zelikman, E.; Scott, A.; Paletta, Q.; Brandt, A. SkyGPT: Probabilistic ultra-short-term solar forecasting using synthetic sky images from physics-constrained VideoGPT. Adv. Appl. Energy. 2024, 14, 100172. [Google Scholar] [CrossRef]

- Deng, C.; Zhang, T.; He, Z.; Chen, Q.; Shi, Y.; Xu, Y.; He, J. K2: A foundation language model for geoscience knowledge understanding and utilization. In Proceedings of the 17th ACM International Conference on Web Search and Data Mining, Merida, Mexico, 4–8 March 2024; pp. 161–170. [Google Scholar] [CrossRef]

- Lin, Z.; Deng, C.; Zhou, L.; Zhang, T.; Xu, Y.; Xu, Y.; He, Z.; Shi, Y.; Dai, B.; Song, Y.; et al. GeoGalactica: A Scientific Large Language Model in Geoscience. arXiv 2023, arXiv:abs/2401.00434. [Google Scholar] [CrossRef]

- Asai, A.; Min, S.; Zhong, Z.; Chen, D. Retrieval-based language models and applications. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics, Toronto, ON, Canada, 7 July 2023; Volume 6, pp. 41–46. [Google Scholar] [CrossRef]

- Guu, K.; Lee, K.; Tung, Z.; Pasupat, P.; Chang, M. Retrieval augmented language model pre-training. In International Conference on Machine Learning; PMLR: New York, NY, USA, 2020; pp. 3929–3938. [Google Scholar]

- Gao, Y.; Xiong, Y.; Gao, X.; Jia, K.; Pan, J.; Bi, Y.; Wang, H. Retrieval-augmented generation for large language models: A survey. arXiv 2023, arXiv:2312.10997. [Google Scholar] [CrossRef]

- Fan, W.; Ding, Y.; Ning, L.; Wang, S.; Li, H.; Yin, D.; Li, Q. A survey on rag meeting llms: Towards retrieval-augmented large language models. In Proceedings of the 30th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, Barcelona, Spain, 25–29 August 2024; pp. 6491–6501. [Google Scholar] [CrossRef]

- Quinonez, C.; Meij, E. A new era of AI-assisted journalism at Bloomberg. AI Mag. 2024, 45, 187–199. [Google Scholar] [CrossRef]

- Qian, J.; Jin, Z.; Zhang, Q.; Cai, G.; Liu, B. A Liver Cancer Question-Answering System Based on Next-Generation Intelligence and the Large Model Med-PaLM 2. Int. J. Comput. Sci. Inf. Technol. 2024, 2, 28–35. [Google Scholar] [CrossRef]

- Iz, B.; Kyle, L.; Arman, C. SciBERT: A Pretrained Language Model for Scientific Text. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP): System Demonstrations, Hong Kong, China, 3–7 November 2019; pp. 3615–3620. [Google Scholar] [CrossRef]

- Chaminé, H.I.; Fernandes, I. The role of engineering geology mapping and GIS-based tools in geotechnical practice. In Advances on Testing and Experimentation in Civil Engineering; Springer: Cham, Switzerland, 2022; pp. 3–27. [Google Scholar] [CrossRef]

- Wang, C.; Wang, X.; Chen, J. Digital Geological Mapping to Facilitate Field Data Collection, Integration, and Map Production in Zhoukoudian, China. Appl. Sci. 2021, 11, 5041. [Google Scholar] [CrossRef]

- Cai, B.; Zhao, J.; Yu, X. A methodology for 3D geological mapping and implementation. Multimed. Tools Appl. 2019, 78, 28703–28713. [Google Scholar] [CrossRef]

- Chen, H.; Liu, H.; Shen, C.; Xie, W.; Liu, T.; Zhang, J.; Lu, J.; Li, Z.; Peng, Y. Research on Geological-Engineering Integration Numerical Simulation Based on EUR Maximization Objective. Energies 2024, 17, 3644. [Google Scholar] [CrossRef]

- Song, Y.; Yin, T.; Zhang, C.; Wang, N.; Hou, X. Application of sedimentary numerical simulation in sequence stratigraphy study. Arab. J. Geosci. 2020, 13, 267. [Google Scholar] [CrossRef]

- Pham, L.T.; Oliveira, S.P.; Le, C.V.A. Editorial for the Special Issue “Application of Geophysical Data Interpretation in Geological and Mineral Potential Mapping”. Minerals 2024, 14, 63. [Google Scholar] [CrossRef]

- Lai, J.; Su, Y.; Xiao, L.; Zhao, F.; Bai, T.; Li, Y.; Qin, Z. Application of geophysical well logs in solving geologic issues: Past, present and future prospect. Geosci. Front. 2024, 15, 101779. [Google Scholar] [CrossRef]

- Hdeid, O.M.; Morsli, Y.; Raji, M.; Baroudi, Z.; Adjour, M.; Nebagha, K.C.; Vall, I.B. Application of Remote Sensing and GIS in Mineral Alteration Mapping and Lineament Extraction Case of Oudiane Elkharoub (Requibat Shield, Northern of Mauritania). Open J. Geol. 2024, 14, 823–854. [Google Scholar] [CrossRef]

- Bety, A.K.; Hassan, M.M.; Salih, N.M.; Thannoun, R.G. The Application of Remote Sensing Techniques for Identification the Gypsum Rocks in the Qara Darbandi Anticline, Kurdistan Region of Iraq. Iraqi Geol. J. 2024, 57, 308–322. [Google Scholar] [CrossRef]

- Hofer, M.; Obraczka, D.; Saeedi, A.; Köpcke, H.; Rahm, E. Construction of Knowledge Graphs: Current State and Challenges. Information 2024, 15, 509. [Google Scholar] [CrossRef]

- Zhou, Y.Z.; Zhang, Q.L.; Huang, Y.J.; Yang, W.; Xiao, F.; Ji, J. Constructing knowledge graph for the porphyry copper deposit in the Qingzhou-Hangzhou Bay area: Insight into knowledge graph based mineral resource prediction and evaluation. Earth Sci. Front. 2021, 28, 9. [Google Scholar] [CrossRef]

- Zhang, Q.L.; Zhou, Y.Z.; Guo, L.X.; Yuan, G.Q.; Yu, P.P.; Wang, H.Y.; Zhu, B.B.; Han, F.; Long, S.Y. Intelligent application of knowledge graphs in mineral prospecting: A case study of porphyry copper deposits in the Qin-Hang metallogenic belt. Earth Sci. Front. 2024, 31, 7–15. [Google Scholar] [CrossRef]

- Zhang, Q.L.; Zhou, Y.Z.; Yu, P.P.; Wang, H.Y.; Han, F.; He, J.X. Ontology construction of multi-level ore deposit and its application in knowledge graph. Bull. Mineral. Petrol. Geochem. 2024, 43, 211–217. [Google Scholar] [CrossRef]

- Zhang, C.; Govindaraju, V.; Borchardt, J.; Foltz, T.; Ré, C.; Peters, S. GeoDeepDive: Statistical inference using familiar data-processing languages. In Proceedings of the 2013 ACM SIGMOD International Conference on Management of Data, New York, NY, USA, 23–28 June 2013; pp. 993–996. [Google Scholar] [CrossRef]

- Janowicz, K.; Gao, S.; McKenzie, G.; Hu, Y.; Bhaduri, B. GeoAI: Spatially explicit artificial intelligence techniques for geographic knowledge discovery and beyond. Int. J. Geogr. Inf. Sci. 2020, 34, 625–636. [Google Scholar] [CrossRef]

- Choi, Y. GeoAI: Integration of Artificial Intelligence, Machine Learning, and Deep Learning with GIS. Appl. Sci. 2023, 13, 3895. [Google Scholar] [CrossRef]

- Bornstein, T.; Lange, D.; Münchmeyer, J.; Woollam, J.; Rietbrock, A.; Barcheck, G.; Tilmann, F. PickBlue: Seismic phase picking for ocean bottom seismometers with deep learning. Earth Space Sci. 2024, 11, e2023EA003332. [Google Scholar] [CrossRef]

- Maio, R.; Arko, R.A.; Lehnert, K.; Ji, P. Entity Linking Leveraging the GeoDeepDive Cyberinfrastructure and Managing Uncertainty with Provenance; AGU Fall Meeting Abstract; American Geophysical Union: Washington, DC, USA, 2017; p. #IN33B-0118. Available online: https://ui.adsabs.harvard.edu/abs/2017AGUFMIN33B0118M (accessed on 27 December 2023).

- Goring, S.; Marsicek, J.; Ye, S.; Williams, J.W.; Meyers, S.; Peters, S.E.; Marcott, S. A Model Workflow for GeoDeepDive: Locating Pliocene and Pleistocene Ice-Rafted Debris. EarthArXiv 2021. [Google Scholar] [CrossRef]

- Husson, J.M.; Peters, S.E.; Ross, I.; Czaplewski, J.J. Macrostrat and GeoDeepDive: A platform for Geological Data Integration and Deep-time Research; AGU Fall Meeting Abstract; American Geophysical Union: Washington, DC, USA, 2016; p. IN23F-04. Available online: https://ui.adsabs.harvard.edu/abs/2016AGUFMIN23F..04H (accessed on 27 December 2023).

- Kumpf, B. Evaporites Through Phanerozoic Time: Using GeoDeepDive, Macrostrat, and Geochemical Modeling to Investigate and Model Changes in Seawater Chemistry. Ph.D. Thesis, University of Victoria, Victoria, BC, Canada, 2024. Available online: https://hdl.handle.net/1828/16395 (accessed on 27 December 2023).

- Liu, P.; Biljecki, F. A review of spatially-explicit GeoAI applications in Urban Geography. Int. J. Appl. Earth Obs. Geoinform. 2022, 112, 102936. [Google Scholar] [CrossRef]

- Li, W.; Hsu, C.-Y. GeoAI for Large-Scale Image Analysis and Machine Vision: Recent Progress of Artificial Intelligence in Geography. ISPRS Int. J. Geo-Inf. 2022, 11, 385. [Google Scholar] [CrossRef]

- Woollam, J.; Münchmeyer, J.; Tilmann, F.; Rietbrock, A.; Lange, D.; Bornstein, T.; Soto, H. SeisBench—A toolbox for machine learning in seismology. Seismol. Soc. Am. 2022, 93, 1695–1709. [Google Scholar] [CrossRef]

- Ramaneti, K.; Rajkumar, S. An Overview of Recent Advances and Applications of Machine Learning in Seismic Phase Picking. RearchGate 2022. [Google Scholar] [CrossRef]

- Pita-Sllim, O.; Chamberlain, C.J.; Townend, J.; Warren-Smith, E. Parametric testing of EQTransformer’s performance against a high-quality, manually picked catalog for reliable and accurate seismic phase picking. Seism. Rec. 2023, 3, 332–341. [Google Scholar] [CrossRef]

- Münchmeyer, J.; Saul, J.; Tilmann, F. Learning the deep and the shallow: Deep-learning-based depth phase picking and earthquake depth estimation. Seismol. Res. Lett. 2024, 95, 1543–1557. [Google Scholar] [CrossRef]

- Chen, H.L.; Chen, H.Z.; Han, K.F.; Zhu, G.X.; Zhao, Y.C.; Du, Y. Domain-Specific Foundation-Model Customization: Theoretical Foundation and Key Technology. J. Data Acquis. Process. 2024, 39, 524–546. [Google Scholar] [CrossRef]

- He, J.X.; Zhang, Q.L.; Xu, Y.T.; Liu, Y.Q.; Wang, W.X.; Zhou, Y.Z.; Yu, P.P. Research progress of Qinzhou—Hangzhou metallogenic belt—Analysed from CiteSpace community discovery. Geol. Rev. 2023, 69, 1919–1927. [Google Scholar] [CrossRef]

- Shi, D.; Fan, S.; Li, G.; Zhu, Y.; Yan, Q.; Jia, M.; Faisal, M. Genesis of Yongping copper deposit in the Qin-Hang Metallogenic Belt, SE China: Insights from sulfide geochemistry and sulfur isotopic data. Ore Geol. Rev. 2024, 173, 106231.

- Duan, R.C.; Jiang, S.Y. Fluid Inclusions and H-O-C-S-Pb Isotope Studies of the Xinmin Cu-Au-Ag Polymetallic Deposit in the Qinzhou-Hangzhou Metallogenic Belt, South China: Constraints on Fluid Origin and Evolution. Geofluids 2021, 1, 5171579.

- Wang, J.; Wang, J.; Zhang, X.; Zhang, J.; Liu, A.; Jiang, M.; Bai, X. Geology, geochronology, and stable isotopes of the Triassic Tianjingshan orogenic gold deposit, China: Implications for ore genesis of the Qinzhou Bay–Hangzhou Bay metallogenic belt. Ore Geol. Rev. 2021, 131, 103952.

- Zhou, B.; Yan, C.; Zhan, Y.; Sun, X.; Li, S.; Wen, X.; Huang, M. Deep electrical structures of Qinzhou-Fangcheng Junction Zone in Guangxi and seismogenic environment of the 1936 Lingshan M 6¾ earthquake. Sci. China Earth Sci. 2024, 67, 584–603.

- Xu, D.M.; Qu, Z.Y.; Long, W.G.; Zhang, K.; Wang, L.; Zhou, D.; Huang, H. Research History and Current Situation of Qinzhou-Hangzhou Metallogenic Belt, South China. Geol. Mineral. Resour. South China 2012, 28, 277.

- Wang, G.; Fang, H.; Qiu, G.; Pei, F.; He, M.; Du, B.; Peng, Y. Three-dimensional magnetotelluric imaging of the Eastern Qinhang Belt between the Yangtze Block and Cathaysia Block: Implications for lithospheric architecture and associated metallogenesis. Ore Geol. Rev. 2023, 158, 105490.

- Liu, Y.; Lu, Q.; Farquharson, C.; Yan, J. Metallogenic Mechanism of the North Qinhang Belt, South China, from Gravity and Magnetic Inversions. In Proceedings of the 25th European Meeting of Environmental and Engineering Geophysics, The Hague, The Netherlands, 8–12 September 2019; Volume 1, pp. 1–5.

- Zheng, M.; Xu, T.; Lü, Q.; Lin, J.; Huang, M.; Bai, Z.; Badal, J. Upper crustal structure beneath the Qin-Hang and Wuyishan metallogenic belts in Southeast China as revealed by a joint active and passive seismic experiment. Geophys. J. Int. 2023, 232, 190–200.

- Zhang, D.; Li, F.; He, X.L. Mesozoic tectonic deformation and its rock/ore-control mechanism in the important metallogenic belts in South China. J. Geomech. 2021, 27, 497–528.

- Song, C.Z.; Li, J.H.; Yan, J.Y.; Wang, Y.Y.; Liu, Z.D.; Yuan, F.; Li, Z.W. A tentative discussion on some tectonic problems in the east of South China continent. Geol. China 2019, 46, 704–722.

- Zhang, Y.Q.; Xu, Y.; Yan, J.Y.; Xu, Z.W.; Zhao, J.H. Crustal thickness, properties and its relations to mineralization in the southeastern part of South China: Constraint from the teleseismic receiver functions. Geol. China 2019, 46, 723–736.

- Zeng, T.G.; Xu, B.; Wang, B.; Zhang, C.; Yin, D.; Rojas, D.; Feng, G.; Zhao, H.; Lai, H.; Yu, H.; et al. ChatGLM: A Family of Large Language Models from GLM-130B to GLM-4 All Tools. arXiv 2024, arXiv:2406.12793.

- Topsakal, O.; Akinci, T.C. Creating large language model applications utilizing LangChain: A primer on developing LLM apps fast. Int. Conf. Appl. Eng. Nat. Sci. 2023, 1, 1050–1056.

- Wang, Z.; Liu, J.; Zhang, S.; Yang, Y. Poisoned LangChain: Jailbreak LLMs by LangChain. arXiv 2024, arXiv:2406.18122.

- Jeong, S.; Baek, J.; Cho, S.; Hwang, S.J.; Park, J.C. Adaptive-RAG: Learning to adapt retrieval-augmented large language models through question complexity. arXiv 2024, arXiv:2403.14403.

- Massey, P.A.; Montgomery, C.; Zhang, A.S. Comparison of ChatGPT-3.5, ChatGPT-4, and orthopaedic resident performance on orthopaedic assessment examinations. JAAOS-J. Am. Acad. Orthop. Surg. 2023, 31, 1173–1179.

- Crawford, J.; Cowling, M.; Ashton-Hay, S.; Kelder, J.-A.; Middleton, R.; Wilson, G. Artificial Intelligence and authorship editor policy: ChatGPT, Bard Bing, and beyond. J. Univ. Teach. Learn. Pract. 2023, 20, 1.

- Reiter, E. A structured review of the validity of BLEU. Comput. Linguist. 2018, 44, 393–401.

- Imran, M.; Almusharraf, N. Google Gemini as a next generation AI educational tool: A review of emerging educational technology. Smart Learn. Environ. 2024, 11, 22.

- Zhou, Y.Z.; Zhang, L.J.; Zhang, A.D.; Wang, J. Bigdata Mining & Machine Learning in Geoscience; Sun Yat-sen University Press: Zhuhai, China, 2018.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).