Abstract

Recent advancements in image processing technology have benefited fields such as image, document, and video production. However, the negative implications of these advancements have also grown, with document image manipulation becoming a prominent issue. Document image manipulation involves the forgery or alteration of documents such as receipts, invoices, certificates, and confirmations, and the use of such manipulated documents can cause significant economic and social disruption. To prevent these problems, various methods for detecting forged document images are being researched, with recent proposals focusing on deep learning techniques. An essential aspect of using deep learning to detect manipulated documents is enhancing the distinctive features of document images before inputting them into a model, which is crucial for achieving high accuracy. One important characteristic of document images is their inherent symmetrical patterns, such as consistent text alignment, structural balance, and uniform pixel distribution. This study investigates document forgery detection through a symmetry-aware approach and, based on the results of three general-purpose CNN models, proposes a new image enhancement technique, LTHE (Log-Transform Histogram Equalization), that enhances the characteristics of document images to achieve high accuracy in deep learning-based forgery detection. By focusing on the symmetric structures found in document layouts and pixel distributions, LTHE enhances feature extraction in deep learning-based models: it increases low pixel values through log transformation and increases image contrast through histogram equalization, making the features of the image more prominent.
Experimental results show that the proposed LTHE technique achieves higher accuracy than other enhancement methods, indicating its potential to aid the future development of deep learning-based forgery detection algorithms.

1. Introduction

Symmetry plays a critical role in document layouts, where it is often expressed through text alignment, font style, and formatting. Forgery disrupts this inherent symmetry, creating detectable anomalies. This study addresses such disruptions by integrating symmetry-aware techniques with image processing for deep learning-based forgery detection. Using document images to manage important information offers several advantages for information transmission and management: it overcomes the limitations of time and space and conserves the resources required for document printing. For these reasons, many companies and government agencies store documents as image files and distribute them when necessary to manage information more efficiently. However, with recent advancements in image editing technology, complex forgeries and alterations of document images have become more frequent, and these can cause economic and social disruption.

Among the forgery techniques commonly used, copy-move and insertion are particularly prevalent. Copy-move involves copying a text image from one part of a document and pasting it into another location intended for manipulation. Insertion entails typing new text into the area to be manipulated, with the font and font size matched to the original. Splicing is a technique in which external content is cut and pasted into the area intended for manipulation and integrated seamlessly into the document [1].

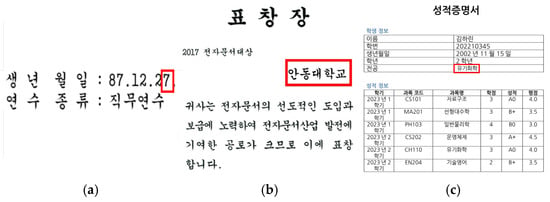

As shown in Figure 1, it is very difficult to detect manipulated areas without assistance. This is because, unlike general image manipulation, document image manipulation has several key characteristics [2].

Figure 1.

Images intentionally forged for research purposes. (a) Copy-move method. (b) Insertion method. (c) Splicing method.

First, there is concealment: various factors can create a visual appearance that is difficult to detect, such as small size, similar colors, fonts, orientation, and background. Second, there are minimal traces: text usually appears on the surface of an object and, due to simpler environmental factors compared to general images, manipulation leaves fewer traces. Third, there is high variability: text is composed of characters and the scale of the manipulated text can vary widely, from individual characters to entire paragraphs.

For these reasons, various methods for detecting forged document images are being researched, with a recent focus on deep learning technologies. In [3], a Visual Perception Head (VPH) was designed to extract visual domain features from an image, and a Frequency Perception Head (FPH) was created to extract frequency domain features using Discrete Cosine Transform (DCT) coefficients. These features were concatenated using a Multi-Modality Transformer, after which a Multi-View Iterative Decoder was applied to identify forged regions. Convolutional layers were used at every stage of the process, which contributed to its high performance. In [4], an image was first processed using DCT and divided into positive and negative regions, each of which was reconstructed into a separate image. To enhance the characteristics of these images more effectively, Canny and Sobel operators were applied, and a CNN model was then constructed that used the enhanced features as input to classify the final results. In [5], the Error Level Analysis (ELA) technique was used to identify regions suspected of forgery, and these regions were then analyzed using a CNN to determine whether an image had been tampered with. In [2], an image was preprocessed using block-wise DCT, noise extraction, and ELA to detect manipulation artifacts. These transformed features were then processed by a Dual Stream Extractor (DSE) and fused using the Gated Cross-neighborhood Attention Fusion (GCNF) module before being fed into the model for tampered region prediction.

As the studies above illustrate, deep learning-based detection techniques generally emphasize or extract image features and use the resulting data as input to a deep learning model. While the architecture of the model is important, one of the most effective ways to improve deep learning performance is to train the model with high-quality data. For this reason, various image processing techniques, such as DCT, Canny, and ELA, have been applied to emphasize the characteristics of forged regions in document images. However, when images processed with these techniques are used as input data for different deep learning models, consistent performance improvements are difficult to achieve. In fact, the experiments conducted in this study show that applying previously published image processing algorithms to enhance input features does not achieve consistently high classification accuracy across various CNN models.

To address this gap, this study proposes a novel image processing technique, LTHE (Log-Transform Histogram Equalization), designed to amplify subtle pixel changes in forged document images and thereby enhance feature extraction for deep learning models. LTHE is evaluated with foundational CNN models, including DenseNet121 [6], ResNet50 [7], and EfficientNetB0 [8], and its effectiveness is demonstrated across these architectures. The method combines a logarithmic transformation with histogram equalization to enhance contrast in the darker regions of document images, which is highly relevant to forgery detection. By amplifying the subtle pixel-level changes that occur during forgery, this dual enhancement approach provides a preprocessing technique that improves the ability of deep learning models to distinguish forged from non-forged images.

In the experiments, images processed with LTHE and with other image processing algorithms were used as input data for the DenseNet121, ResNet50, and EfficientNetB0 models. The results show that the proposed LTHE method achieves the highest classification performance. This study is therefore expected to contribute to improving the performance of various deep learning-based document image forgery detection methods.

2. Related Work

2.1. Document Image Forgery Techniques

Document image forgery techniques commonly involve methods such as copy-move, splicing, and insertion.

- Copy-move: Copy-move forgeries are performed by copying one or more regions of an image and pasting them in the same image in different locations [9]. The goal is often to duplicate or cover certain content in the document, making it appear as if the manipulated section is an original part of the image.

- Splicing: Splicing forgeries copy and paste parts of one image onto another, merging the two to create a new image [10]. In the context of document forgery, this involves inserting text, signatures, or other elements from one document into another, creating a falsified document that appears legitimate.

- Insertion: Words are altered using software tools to add characters in the appropriate places, according to the forger’s needs [11]. This technique is often used in cases where it is difficult to find the required characters within the document, such as in languages like Korean, which have a large variety of characters.
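As a minimal illustration of how copy-move differs from splicing, the sketch below simulates both on 8-bit grayscale images held as NumPy arrays. The function names `copy_move` and `splice` and their rectangular-region interface are hypothetical; they are not the procedure used to build the dataset in this study. Insertion is omitted because it requires rendering new text in a matching font.

```python
import numpy as np


def copy_move(img, src, dst, size):
    """Copy a (height, width) block from `src` to `dst` within the SAME image.

    `src` and `dst` are (row, col) top-left corners; a new array is returned.
    """
    h, w = size
    (sr, sc), (dr, dc) = src, dst
    out = img.copy()
    # Read from the untouched original so overlapping regions copy correctly.
    out[dr:dr + h, dc:dc + w] = img[sr:sr + h, sc:sc + w]
    return out


def splice(target, donor, src, dst, size):
    """Paste a block cut from a DIFFERENT image (`donor`) into `target`."""
    h, w = size
    (sr, sc), (dr, dc) = src, dst
    out = target.copy()
    out[dr:dr + h, dc:dc + w] = donor[sr:sr + h, sc:sc + w]
    return out


# A white "page" with one dark 4 x 4 "glyph", duplicated elsewhere on the page.
page = np.full((32, 32), 255, dtype=np.uint8)
page[4:8, 4:8] = 0
forged = copy_move(page, src=(4, 4), dst=(20, 20), size=(4, 4))
```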

2.2. Optical Character Recognition (OCR)

OCR, or Optical Character Recognition, refers to the process of electronically converting handwritten, typewritten, or printed text into a machine-readable format. It is used extensively to recognize and search for text in electronic documents, as well as to make text on websites accessible. This OCR technology has a wide range of applications across various industries, including invoice image processing, the legal industry, banking, and healthcare. Additionally, OCR is widely used in many other fields, such as Captcha recognition, institutional repositories and digital libraries, optical music recognition performed without human intervention, automatic license plate recognition, and handwriting recognition [12]. Widely used OCR tools include PaddleOCR, Tesseract OCR, and Google Cloud Vision OCR.

2.3. Image Processing Methods

2.3.1. DCT

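DCT (Discrete Cosine Transform) attempts to decorrelate image data. After decorrelation, each transform coefficient can be encoded independently without losing compression efficiency. The efficacy of a transformation scheme can be gauged directly by its ability to pack input data into as few coefficients as possible, which allows the quantizer to discard coefficients with relatively small amplitudes without introducing visual distortion in the reconstructed image. DCT exhibits excellent energy compaction for highly correlated images. For an M × N image $f(x,y)$, the 2-dimensional DCT is given by Equation (1), as follows [13]:

$$F(u,v) = \alpha(u)\,\alpha(v) \sum_{x=0}^{M-1} \sum_{y=0}^{N-1} f(x,y) \cos\left[\frac{(2x+1)u\pi}{2M}\right] \cos\left[\frac{(2y+1)v\pi}{2N}\right] \tag{1}$$

where $F(u,v)$ is the DCT coefficient at frequency $(u,v)$, $\alpha(u) = \sqrt{1/M}$ for $u = 0$ and $\alpha(u) = \sqrt{2/M}$ otherwise, and $\alpha(v)$ is defined analogously with $N$.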

In the field of document image forgery detection, DCT is used to emphasize or detect the characteristics of manipulated regions. DCT converts an image into the frequency domain, separating it into low-frequency and high-frequency components that represent different features of the image. For example, low-frequency components reflect the overall structure or background of an image, while high-frequency components highlight edges and fine details.

In manipulated images, subtle distortions or changes may occur at the pixel level compared with the original image. These changes might not be visible to the naked eye, but they become more apparent when the image is transformed into the frequency domain using DCT. Specifically, manipulation may distort the original patterns or structures of an image, leading to changes in the DCT coefficients. For this reason, DCT is widely used in document image forgery detection to identify manipulated regions [4].
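The 2-dimensional DCT of Equation (1) can be written compactly as a product with an orthonormal DCT-II basis matrix, assuming the orthonormal scaling of $\alpha(\cdot)$ given above. The NumPy sketch below computes the transform and its inverse and illustrates the energy compaction property on a smooth 8 × 8 block. The function names `dct_matrix`, `dct2`, and `idct2` are illustrative and are not taken from [4] or [13].

```python
import numpy as np


def dct_matrix(n):
    """Orthonormal DCT-II basis: C[u, x] = alpha(u) * cos((2x + 1) * u * pi / (2n))."""
    u = np.arange(n)[:, None]
    x = np.arange(n)[None, :]
    alpha = np.where(u == 0, np.sqrt(1.0 / n), np.sqrt(2.0 / n))
    return alpha * np.cos((2 * x + 1) * u * np.pi / (2 * n))


def dct2(block):
    """2-D DCT of an M x N array, computed as F = C_M f C_N^T."""
    m, n = block.shape
    return dct_matrix(m) @ block.astype(np.float64) @ dct_matrix(n).T


def idct2(coeffs):
    """Inverse 2-D DCT; the basis is orthonormal, so its inverse is its transpose."""
    m, n = coeffs.shape
    return dct_matrix(m).T @ coeffs @ dct_matrix(n)


# A smooth 8 x 8 ramp: almost all of its energy lands in the lowest frequencies.
ramp = 4.0 * np.add.outer(np.arange(8), np.arange(8))
coeffs = dct2(ramp)
low_freq_share = (coeffs[:2, :2] ** 2).sum() / (coeffs ** 2).sum()  # close to 1
```

Because a smooth block concentrates its energy in a few low-frequency coefficients, a local edit that breaks that smoothness would tend to show up as changes in the higher-frequency coefficients, which is the property the detection methods above exploit.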

2.3.2. CLAHE

CLAHE (Contrast Limited Adaptive Histogram Equalization) is a variant of histogram equalization used to enhance image contrast. It divides an image into small blocks and applies histogram equalization to each block individually, allowing contrast to be adjusted precisely in different regions of the image. A key feature of CLAHE is its contrast-limiting function, which prevents excessive contrast enhancement by clipping the histogram at a specified limit. The counts that exceed this limit are redistributed among the histogram bins, which keeps the increase in contrast under control and produces a more balanced and realistic result. The steps for applying CLAHE to an image are as follows [14].

Step 1: Prepare the input image and retrieve all the input parameters used in the enhancement process, such as the number of regions in the row and column directions, dynamic range (number of bins used in the histogram transform function), clip limit, and distribution parameter type.

Step 2: Divide the original image into regions and preprocess these inputs.

Step 3: Apply the process to each tile (contextual region).

Step 4: Generate a gray-level map and a clipped histogram. In the contextual region, the number of pixels is divided equally between each gray level.

Step 5: Interpolate the gray-level mappings to create an enhanced image. In this process, a four-tile cluster is used in the mapping so that the map tiles partially overlap in the image region. Each pixel is mapped with the four surrounding tile mappings, and the four results are interpolated to obtain the enhanced pixel value. This is repeated over the entire image.
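The steps above can be sketched in NumPy as follows. This is an illustrative re-implementation under stated assumptions, not the code of [14]: it takes an 8-bit grayscale image whose height and width are divisible by the tile grid, spreads clipped counts evenly over all 256 bins, and expresses the clip limit through a hypothetical `clip_factor` parameter as a multiple of the mean bin count. In practice, a library routine such as OpenCV's `cv2.createCLAHE(clipLimit=..., tileGridSize=...)` would normally be used instead.

```python
import numpy as np


def _tile_lut(tile, clip_limit):
    """Steps 3-4: clip one tile's histogram and turn its CDF into a gray-level map."""
    hist = np.bincount(tile.ravel(), minlength=256).astype(np.float64)
    excess = np.maximum(hist - clip_limit, 0.0).sum()
    hist = np.minimum(hist, clip_limit) + excess / 256.0  # spread excess over all bins
    cdf = np.cumsum(hist)
    return np.round(cdf / cdf[-1] * 255.0)


def clahe(img, grid=(8, 8), clip_factor=2.0):
    """Minimal CLAHE for a 2-D uint8 image whose size is divisible by `grid`."""
    h, w = img.shape
    m, n = grid
    if h % m or w % n:
        raise ValueError("this sketch assumes the grid divides the image exactly")
    th, tw = h // m, w // n
    clip_limit = max(clip_factor * th * tw / 256.0, 1.0)  # assumed relative clip limit
    luts = np.empty((m, n, 256))
    for i in range(m):
        for j in range(n):
            luts[i, j] = _tile_lut(img[i * th:(i + 1) * th, j * tw:(j + 1) * tw], clip_limit)

    # Step 5: express each pixel position in units of tile centres, then blend the
    # maps of the four nearest tiles bilinearly (edges fall back to the nearest tile).
    ys = (np.arange(h) + 0.5) / th - 0.5
    xs = (np.arange(w) + 0.5) / tw - 0.5
    y0 = np.clip(np.floor(ys).astype(int), 0, m - 1)
    x0 = np.clip(np.floor(xs).astype(int), 0, n - 1)
    y1, x1 = np.minimum(y0 + 1, m - 1), np.minimum(x0 + 1, n - 1)
    wy = np.clip(ys - y0, 0.0, 1.0)[:, None]
    wx = np.clip(xs - x0, 0.0, 1.0)[None, :]
    y0, y1, x0, x1 = y0[:, None], y1[:, None], x0[None, :], x1[None, :]
    v = img.astype(np.intp)
    top = (1 - wx) * luts[y0, x0, v] + wx * luts[y0, x1, v]
    bottom = (1 - wx) * luts[y1, x0, v] + wx * luts[y1, x1, v]
    return np.round((1 - wy) * top + wy * bottom).astype(np.uint8)
```

Because each pixel blends the maps of up to four tiles, this interpolation avoids visible seams at tile borders; it is exactly the step that the tile-based equalization of LTHE in Section 3.2 omits.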

In the field of document image forgery detection, CLAHE is used on forged images to enhance and extract key points from manipulated areas. The method proposed in [15] suggests using CLAHE before the key point extraction procedure to improve performance by increasing the number of key points in low-contrast regions. Experimental results indicate that the number of key points extracted from the forged regions increases after applying the CLAHE algorithm.

2.3.3. ELA

ELA (Error Level Analysis) is one of the techniques used to detect whether a digital image has been manipulated. ELA determines whether an image has been altered by checking whether the compression errors (or losses) in each part of the image are at the same level. This is especially relevant for JPEG images where, in general, the compression level of the entire image should be consistent. ELA identifies areas within an image that have different levels of compression. If a specific area of an image shows a different error level, it is likely an indication of a change or manipulation.

ELA highlights differences in JPEG compression error: uniform areas of color, such as a solid blue sky or a white wall, typically produce lower ELA values (darker regions) than high-contrast edges. A region whose error level departs from that of similar content elsewhere in the image can therefore indicate manipulation, which makes ELA an effective tool for detecting whether an image has been manipulated [5].
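The ELA procedure described above is commonly implemented by re-saving an image as JPEG at a known quality and amplifying the per-pixel difference. The sketch below assumes Pillow and NumPy are available; the quality of 90 and amplification factor of 10 are illustrative choices rather than parameters reported in [5], and `error_level_analysis` is a hypothetical helper name.

```python
import io

import numpy as np
from PIL import Image


def error_level_analysis(img, quality=90, scale=10):
    """Amplified absolute difference between an image and its JPEG re-save."""
    original = img.convert("RGB")
    buffer = io.BytesIO()
    original.save(buffer, format="JPEG", quality=quality)  # re-compress at a known quality
    buffer.seek(0)
    resaved = Image.open(buffer).convert("RGB")
    diff = np.abs(np.asarray(original, dtype=np.int16) - np.asarray(resaved, dtype=np.int16))
    return np.clip(diff * scale, 0, 255).astype(np.uint8)  # brighter = larger error level


# A flat gray page with one high-detail patch: the detailed content should show a
# much higher error level than the uniform background, as described above.
canvas = np.full((64, 64, 3), 128, dtype=np.uint8)
rng = np.random.default_rng(0)
canvas[16:32, 16:32] = rng.integers(0, 256, size=(16, 16, 3)).astype(np.uint8)
ela = error_level_analysis(Image.fromarray(canvas))
```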

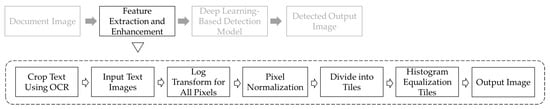

3. Log-Transform Histogram Equalization

We propose an algorithm called LTHE (Log-Transform Histogram Equalization) that enhances subtle changes in the forged regions of an image, making the image more suitable as input data for deep learning models and ultimately improving their performance in detecting forged document images. The proposed LTHE technique amplifies symmetric patterns within document images, emphasizing pixel value changes in text regions that maintain or disrupt symmetry during manipulation. LTHE first applies a logarithmic transformation to an image to enhance subtle pixel value changes in text regions, and then uses histogram equalization to increase the contrast of the log-transformed image, further highlighting the differences between forged and non-forged regions. Within the overall process of deep learning-based detection methods, the proposed algorithm focuses on feature extraction and enhancement. The complete process is illustrated in Figure 2.

Figure 2.

The proposed feature extraction and enhancement steps for deep learning-based detection methods.

3.1. Log Transform

The first step of the algorithm is to apply a logarithmic transformation to the input image. Logarithmic transformation is widely used in image processing, especially for images with a wide range of contrast. It is a nonlinear transformation, performed by applying a logarithmic function to the pixel intensity values of the original image. Its key property is that it expands the range of low intensity values while compressing the range of high intensity values. This adjustment makes details in darker areas of an image more visible while suppressing excessive brightness in lighter areas. The logarithmic transformation is expressed as follows:

$$g(x,y) = \log\bigl(1 + f(x,y)\bigr) \tag{2}$$

In the original grayscale image, the pixel intensity at a specific point is denoted as $f(x,y)$, where $x$ and $y$ represent the coordinates of the pixel within the image. After the logarithmic transformation is applied, the resulting pixel value at the same coordinates is denoted as $g(x,y)$; it reflects the modified intensity after the logarithmic adjustment. A value of 1 is added before the logarithm is taken because the logarithm of zero is undefined: without it, pixels with an original intensity of zero could not be transformed, whereas with it every transformed value is non-negative. (The base of the logarithm only scales the result, which the subsequent normalization removes.) Normalization is then performed to convert the pixel values into the range from 0 to 255, as given in Equation (3):

$$g_{\text{norm}}(x,y) = \frac{g(x,y) - g_{\min}}{g_{\max} - g_{\min}} \times 255 \tag{3}$$

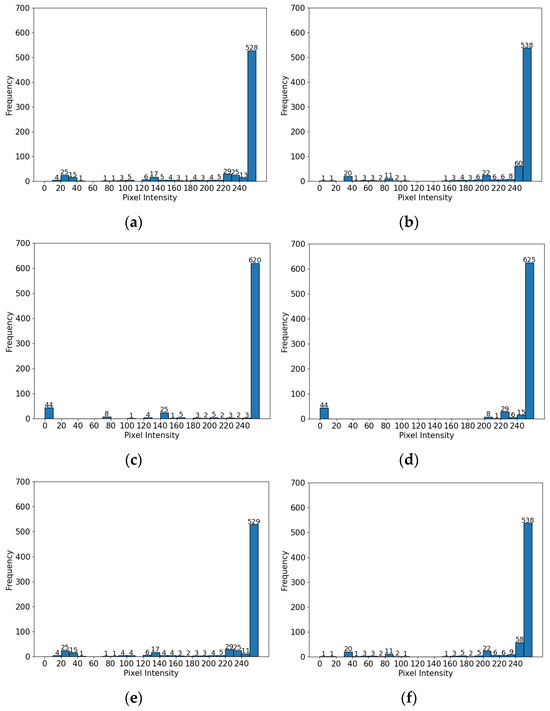

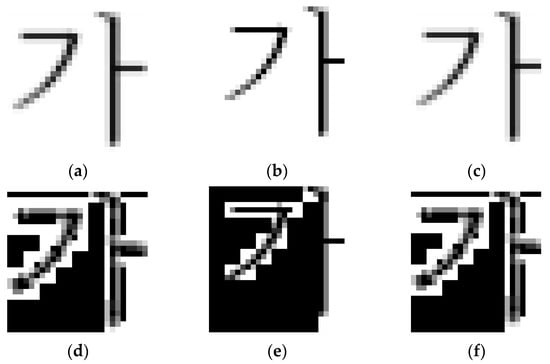

In Equation (3), $g_{\min}$ refers to the minimum pixel intensity value in the logarithmically transformed image, while $g_{\max}$ denotes the maximum pixel intensity value in the same image. This normalization step scales the pixel values of the logarithmically transformed image to the 0 to 255 range, which is essential for proper display and further processing of the image. The final output is a normalized image in which the details enhanced by the logarithmic transformation are clearly visible and represented within the standard grayscale intensity range. Figure 3 presents the histogram results of the logarithmic transformation after forgery using the insertion and copy-move methods on the same text.

Figure 3.

The logarithmic transformation results. (a) A histogram of the original image. (b) A histogram of the original image after applying log transformation. (c) A histogram of the forged image using the insertion method. (d) A histogram of the forged image using the insertion method after applying log transformation. (e) A histogram of the forged image using the copy-move method. (f) A histogram of the forged image using the copy-move method after applying log transformation.

As seen in Figure 3, the log-transformed image shows a decrease in the frequency of low pixel values and an increase in the frequency of higher pixel values. Since log transformation effectively enhances lower pixel values more than higher ones, it increases the value of relatively less-dark pixels in dark pixel regions, making them appear brighter. This result can effectively amplify the differences between original and forged images.
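The log transform and normalization of this subsection can be sketched in NumPy as follows, assuming an 8-bit grayscale input. `log_transform` is a hypothetical name, and the guard for a completely flat image is an addition of this sketch, since the normalization is undefined when the minimum and maximum are equal.

```python
import numpy as np


def log_transform(gray):
    """g = log(1 + f), then min-max normalization of g to the range [0, 255]."""
    g = np.log1p(gray.astype(np.float64))  # log(1 + f) is defined (and >= 0) even when f = 0
    g_min, g_max = g.min(), g.max()
    if g_max == g_min:  # guard added for this sketch: a flat image cannot be normalized
        return np.zeros(gray.shape, dtype=np.uint8)
    return np.round((g - g_min) / (g_max - g_min) * 255.0).astype(np.uint8)


# A dark value of 10 is lifted to about 43% of the output range.
example = log_transform(np.array([[0, 10, 255]], dtype=np.uint8))  # [[0, 110, 255]]
```

In the example, a dark pixel value of 10 (about 4% of the input range) is mapped to 110, while 0 and 255 keep their positions, which is the expansion of low intensities visible in the histograms of Figure 3.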

3.2. Histogram Equalization

Following the logarithmic transformation, the image undergoes tile-based histogram equalization in which the histogram of each tile is limited (clipped). This stage is critical for enhancing local contrast within small regions, or tiles, of the image.

The process begins by dividing the input image into smaller, non-overlapping regions based on a specified grid size (m × n), where m and n represent the number of regions along the height and width of the image, respectively. This division allows each region to be processed locally, so that specific areas of the image are enhanced independently and local contrast is improved. The height and width of each tile, $t_h$ and $t_w$, are calculated using Equation (4), as follows:

$$t_h = \left\lfloor \frac{h}{m} \right\rfloor, \qquad t_w = \left\lfloor \frac{w}{n} \right\rfloor \tag{4}$$

The size of each tile is determined by the dimensions of the original image, denoted as h (height) and w (width), and by the grid size, m and n. If h and w are not perfectly divisible by m and n, respectively, the tiles in the last row and column are made slightly larger so that they include the remaining pixels, ensuring that the entire image is covered without leaving any region unprocessed. Once the image is divided into tiles, each tile undergoes histogram equalization, a process designed to enhance contrast within a local region of an image. Before equalization, the histogram of each tile is clipped at a clipping limit $\beta$, which is set to 1 in this study. Although a clipping limit of 1 can produce extreme contrast that makes an image appear unnatural, it amplifies the differences between forged and non-forged regions, thereby enhancing detection capability. The clipping operation is expressed in Equation (5):

$$\hat{H}_k(v) = \min\bigl(H_k(v),\, \beta\bigr) \tag{5}$$

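Here, $H_k(v)$ represents the original histogram value for intensity level $v$ within the $k$-th tile, and $\hat{H}_k(v)$ is the clipped histogram value of that tile. The excess pixels removed by clipping within each tile are then redistributed across all intensity levels of the tile, as shown in Equation (6).

$$\tilde{H}_k(v) = \hat{H}_k(v) + \frac{1}{256} \sum_{i=0}^{255} \bigl(H_k(i) - \hat{H}_k(i)\bigr) \tag{6}$$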

In Equation (6), $\tilde{H}_k(v)$ represents the redistributed histogram value of the $k$-th tile. Once histogram redistribution is complete, the redistributed histogram of each tile is converted into a Cumulative Distribution Function (CDF), which gives the cumulative frequency of pixel intensities up to a specific intensity level within that tile. This is represented in Equation (7).

$$\mathrm{CDF}_k(v) = \sum_{i=0}^{v} \tilde{H}_k(i) \tag{7}$$

Here, $\tilde{H}_k(i)$ represents the redistributed histogram value and $v$ is the specific pixel intensity value. The CDF is calculated by summing the histogram values from 0 up to $v$; it is small for low intensity values and increases as the intensity value becomes higher. Finally, the original pixel values of the image are transformed into new values using the CDF calculated for each tile, as given in Equation (8). This is a crucial step in enhancing the image's contrast.

$$p' = \operatorname{round}\left(\frac{\mathrm{CDF}_k(p) - \mathrm{CDF}_k^{\min}}{N_k - \mathrm{CDF}_k^{\min}} \times 255\right) \tag{8}$$

In Equation (8), $p$ represents the original pixel intensity value, $\mathrm{CDF}_k(p)$ is the CDF value for intensity $p$ in the $k$-th tile, $\mathrm{CDF}_k^{\min}$ is the first non-zero CDF value, and $N_k$ is the total number of pixels in tile $k$. Equation (8) normalizes the CDF values within each tile so that they start from 0, and the normalized value is then multiplied by 255 to obtain the new pixel intensity $p'$ within the tile. This process expands the pixel intensity values within each tile to the range 0 to 255, maximizing local contrast.
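A NumPy sketch of the tile-based equalization in Equations (4) to (8) follows, together with a wrapper that chains it after the log transform to form the full LTHE pipeline. It is an illustrative reconstruction, not the authors' code: the function names are hypothetical, excess counts are assumed to be spread evenly over all 256 intensity levels, and because the paper does not state the unit of its clipping limit, the hypothetical `clip_factor` parameter is assumed to be a multiple of the mean bin count (OpenCV's CLAHE scales its `clipLimit` in a similar way). As in the text, tile boundaries are not interpolated.

```python
import numpy as np


def tile_histogram_equalization(img, grid=(8, 8), clip_factor=1.0):
    """Clipped histogram equalization applied independently to each tile.

    img: 2-D uint8 array. grid: (m, n) tiles along height and width.
    clip_factor: ASSUMED unit for the clipping limit beta, expressed as a
    multiple of the mean bin count (tile pixels / 256).
    """
    h, w = img.shape
    m, n = grid
    if h < m or w < n:
        raise ValueError("image must have at least one pixel per tile")
    th, tw = h // m, w // n  # tile height and width (floor division)
    out = np.empty_like(img)
    for i in range(m):
        for j in range(n):
            # Tiles in the last row/column absorb any leftover pixels.
            r0, r1 = i * th, (h if i == m - 1 else (i + 1) * th)
            c0, c1 = j * tw, (w if j == n - 1 else (j + 1) * tw)
            tile = img[r0:r1, c0:c1]
            n_k = tile.size
            hist = np.bincount(tile.ravel(), minlength=256).astype(np.float64)
            beta = max(clip_factor * n_k / 256.0, 1.0)
            clipped = np.minimum(hist, beta)                         # clip at beta
            redistributed = clipped + (n_k - clipped.sum()) / 256.0  # spread excess evenly
            cdf = np.cumsum(redistributed)                           # per-tile CDF
            cdf_min = cdf[np.flatnonzero(cdf)[0]]                    # first non-zero CDF value
            if n_k - cdf_min <= 0:  # single-intensity tile with no clipping: nothing to stretch
                out[r0:r1, c0:c1] = tile
                continue
            lut = np.round((cdf - cdf_min) / (n_k - cdf_min) * 255.0)
            out[r0:r1, c0:c1] = np.clip(lut, 0, 255).astype(np.uint8)[tile]
    return out


def lthe(gray, grid=(8, 8), clip_factor=1.0):
    """Log transform, min-max normalization to [0, 255], then tile-based equalization."""
    g = np.log1p(gray.astype(np.float64))
    span = g.max() - g.min()
    g = np.zeros_like(g) if span == 0 else (g - g.min()) / span * 255.0
    return tile_histogram_equalization(np.round(g).astype(np.uint8), grid, clip_factor)
```

The default `grid=(8, 8)` and `clip_factor=1.0` mirror the tile grid and clipping limit reported in Section 4, under the unit assumption stated above.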

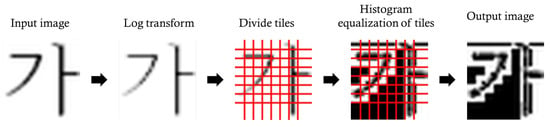

The overall process of LTHE is illustrated in Figure 4 and the results of applying histogram equalization to the log-transformed image are shown in Figure 5. Histogram equalization was performed on a per-tile basis; since the boundaries between tiles were not interpolated, the image might appear somewhat unnatural. However, this method effectively emphasizes subtle pixel variations which, in turn, highlight the characteristics of forged images. When an enhanced image is used as input for a deep learning-based detection method, it can lead to higher classification accuracy.

Figure 4.

A process diagram of the proposed LTHE method.

Figure 5.

The results after applying LTHE. (a) The non-processed original image. (b) The forged image using the insertion method. (c) The forged image using the copy-move method. (d) The LTHE-applied original image. (e) The LTHE-applied forged image using the insertion method. (f) The LTHE-applied forged image using the copy-move method.

4. Experimental Results

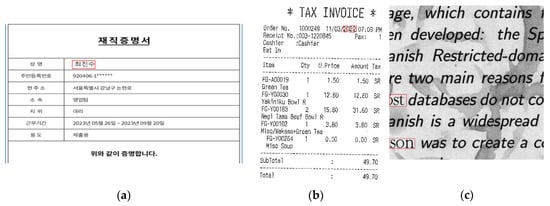

For the experiments, a custom dataset was created consisting of original images and images forged with the insertion and copy-move techniques. The dataset was then processed with various image processing techniques, including ELA [2], Canny [4], DCT with positive region extraction, DCT with negative region extraction [4], histogram equalization [5], CLAHE [16], Sauvola [3], and the proposed LTHE method, producing a separate dataset for each technique. These datasets were used to train classification models based on widely used general-purpose CNN architectures, namely DenseNet121, ResNet50, and EfficientNetB0, and the resulting models were evaluated on a custom test dataset, the ICPR 2018 Fraud Contest dataset, and the DocTamper dataset [3]. In addition, the processing cost of each image processing algorithm was measured to assess its applicability to real-world document forgery detection systems. The training dataset was created using Korean text images and consists of 3940 original images, 3938 images forged with the insertion method, and 4044 images forged with the copy-move method. Examples of the results of applying the various image processing techniques to these images are shown in Table 1. The custom test dataset consists of images intentionally manipulated for research purposes. The ICPR 2018 Fraud Contest dataset contains receipt images forged using techniques such as copy-move, splicing, and insertion, and the DocTamper dataset consists of English-language document images, such as contracts and invoices, forged using the copy-move and splicing methods. Examples of these images are shown in Figure 6.

Table 1.

Example images showing the results of image processing.

Figure 6.

The three datasets used for performance evaluation, with forged regions indicated by red boxes. (a) The custom test dataset. (b) The ICPR 2018 Fraud Contest dataset. (c) The DocTamper dataset.

As shown in Table 1, the dataset of 11,922 text images, generated by applying each image processing algorithm, was used to train the DenseNet121, ResNet50, and EfficientNetB0 models. The evaluation results on the custom dataset are presented in Table 2, those on the ICPR 2018 Fraud Contest dataset in Table 3, and those on the DocTamper dataset in Table 4. Classification accuracy and the Macro F1 score were used as evaluation metrics. For each test, the data were cropped into text units using OCR and processed with eight image processing methods, including the LTHE method proposed in this paper, and the processed images were then evaluated for forgery using DenseNet121, ResNet50, and EfficientNetB0. For the histogram equalization step of LTHE, the clipping limit was set to 1 to create stronger contrast. Inspection of the processed images showed that the changes in images processed with (2, 2) and (4, 4) tile grids were less pronounced, which led to lower classification accuracy. The tile grid was therefore set to (8, 8) and the clipping limit to 1, balancing the algorithm's performance and computational cost. As seen in Table 2, Table 3, and Table 4, the proposed LTHE method achieves the highest classification accuracy and F1 score.
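For reference, the two metrics can be computed as sketched below: accuracy is the fraction of correct predictions, and the Macro F1 score is the unweighted mean of the per-class F1 scores, so each class counts equally regardless of its size. The function name and the three-class label set in the example (original, insertion, copy-move) are assumptions for illustration; the paper does not state whether its classifiers were binary or multi-class. In practice, scikit-learn's `accuracy_score` and `f1_score(..., average="macro")` compute the same quantities.

```python
def accuracy_and_macro_f1(y_true, y_pred):
    """Return (accuracy, macro F1) for two equal-length sequences of labels."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")
    pairs = list(zip(y_true, y_pred))
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    f1_scores = []
    for label in sorted(set(y_true) | set(y_pred)):
        tp = sum(t == label and p == label for t, p in pairs)
        fp = sum(t != label and p == label for t, p in pairs)
        fn = sum(t == label and p != label for t, p in pairs)
        # F1 = 2TP / (2TP + FP + FN), the harmonic mean of precision and recall;
        # the denominator is positive because every label occurs at least once.
        f1_scores.append(2 * tp / (2 * tp + fp + fn))
    return accuracy, sum(f1_scores) / len(f1_scores)


y_true = ["original", "original", "insertion", "insertion", "copy-move", "copy-move"]
y_pred = ["original", "insertion", "insertion", "insertion", "copy-move", "original"]
acc, macro_f1 = accuracy_and_macro_f1(y_true, y_pred)  # acc = 4/6, macro_f1 ≈ 0.656
```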

Table 2.

The evaluation results of the image processing algorithms on the custom dataset using deep learning models.

Table 3.

The evaluation results of the image processing algorithms on the ICPR 2018 Fraud Contest dataset using deep learning models.

Table 4.

The evaluation results of the image processing algorithms on the DocTamper dataset using deep learning models.

As seen in Table 2, Table 3, and Table 4, preprocessing images with the proposed LTHE method achieved high classification accuracy and F1 scores with general-purpose deep learning models. Inputting LTHE-preprocessed images into a deep learning-based document forgery detection system is therefore expected to yield relatively higher classification accuracy. The differences in performance among the models can be related to their architectures. DenseNet121 propagates information efficiently through dense connections between layers, which makes it capable of capturing complex patterns in processed document images [6]. ResNet50 uses a deep network structure with residual connections that alleviate the vanishing gradient problem during training, allowing it to learn deep features [7]. EfficientNetB0 balances model size and performance, optimizing the trade-off between accuracy and computational efficiency [8]. Despite these architectural differences, DenseNet121 demonstrated the most stable and reliable performance in classifying original and manipulated documents. This can be attributed to DenseNet121's efficient feature propagation, which typically yields better performance when sufficient training data are available. Therefore, when LTHE-processed images are used as input data, a model based on DenseNet121 would be the most suitable choice.

If this technique is to be applied to real-world document image manipulation detection, the processing cost of each algorithm also needs to be carefully considered. Therefore, the processing time and memory cost for each algorithm were calculated and the results are presented in Table 5.

Table 5.

The per-image processing time and memory cost of each algorithm.

As seen in Table 5, the processing time cost of the LTHE technique proposed in this paper is the highest, but its memory cost is the second lowest. In order for the LTHE algorithm to be used in real-world document forgery detection, it is necessary to develop and apply methods to reduce processing time costs.
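The paper does not describe how the per-image costs in Table 5 were measured. One possible harness, using only the Python standard library, is sketched below: `time.perf_counter` for wall-clock time and `tracemalloc` for peak traced memory. The `measure_cost` name and the two-pass design are assumptions of this sketch. Note that `tracemalloc` only tracks memory allocated through Python's allocators, so memory allocated internally by native libraries may not be counted.

```python
import time
import tracemalloc


def measure_cost(process, images, repeats=3):
    """Return (mean seconds per image, peak traced bytes for a single image)."""
    # Timing pass, with tracing off because tracemalloc slows allocation down.
    start = time.perf_counter()
    for _ in range(repeats):
        for image in images:
            process(image)
    seconds_per_image = (time.perf_counter() - start) / (repeats * len(images))

    # Memory pass: trace each image separately and keep the largest peak.
    peak_bytes = 0
    for image in images:
        tracemalloc.start()
        try:
            process(image)
            peak_bytes = max(peak_bytes, tracemalloc.get_traced_memory()[1])
        finally:
            tracemalloc.stop()
    return seconds_per_image, peak_bytes


# Stand-in workload: building a list whose size depends on the "image".
per_image, peak = measure_cost(lambda n: [0] * n, [10_000, 20_000])
```

Separating the timing pass from the memory pass keeps the tracing overhead out of the time measurement, at the cost of processing every image one extra time.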

5. Conclusions

In this paper, we proposed a new image processing method, LTHE (Log-Transform Histogram Equalization), to enhance the characteristics of forged images in deep learning-based document image forgery detection. By incorporating symmetry into document forgery detection, this study advances the field and offers techniques for recognizing the asymmetric patterns caused by manipulation. The proposed LTHE technique increases pixel values in dark areas through logarithmic transformation and enhances image contrast through histogram equalization, thereby highlighting the features of forged images. Experimental results show that LTHE achieves higher classification accuracy and F1 scores than the other image processing techniques in forgery detection with the DenseNet121, ResNet50, and EfficientNetB0 models. However, its high processing cost may limit its scalability when handling large-scale datasets. In addition, inputting preprocessed images into general-purpose deep learning models does not always guarantee high accuracy in document forgery detection. Therefore, in addition to the proposed method, further research is needed on deep learning models specifically optimized for document forgery detection. In future work, we plan to develop deep learning-based document image forgery detection methods using the proposed LTHE technique, with the aim of building a more sophisticated forgery detection system that can be applied to various document types and languages.

Author Contributions

Conceptualization, Y.-Y.B. and K.-H.J.; validation, K.-H.J. and D.-J.C.; formal analysis, Y.-Y.B. and K.-H.J.; writing—original draft preparation, Y.-Y.B. and K.-H.J.; writing—review and editing, D.-J.C. and K.-H.J. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by a Research Grant from Andong National University (Gyeongkuk National University).

Data Availability Statement

The datasets used in this paper are publicly available; their sources are cited in the References section.

Acknowledgments

We thank the anonymous reviewers for their valuable suggestions that improved the quality of this article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Wang, W.; Dong, J.; Tan, T. Exploring DCT Coefficient Quantization Effect for Image Tampering Localization. In Proceedings of the 2011 IEEE International Workshop on Information Forensics and Security, Iguacu Falls, Brazil, 29 November–2 December 2011; pp. 1–6.

- Luo, D.; Liu, Y.; Yang, R.; Liu, X.; Zeng, J.; Zhou, Y.; Bai, X. Toward Real Text Manipulation Detection: New Dataset and New Solution. Pattern Recognit. 2025, 157, 110828.

- Qu, C.; Liu, J.; Zhang, H.; Chen, Y.; Wu, Y.; Li, X.; Yang, F. Towards Robust Tampered Text Detection in Document Image: New Dataset and New Solution. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 5937–5946.

- Nandanwar, L.; Shivakumara, P.; Pal, U. A New Method for Detecting Altered Text in Document Images. In Pattern Recognition and Artificial Intelligence; Springer: Cham, Switzerland, 2020; Volume 12068.

- Balabantaray, B.; Gnaneshwar, C.; Yadav, S.; Singh, M. Analysis of Image Forgery Detection Using Convolutional Neural Network. Int. J. Appl. Syst. Stud. 2022, 9, 240–260.

- Huang, G.; Liu, Z.; van der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 2017; pp. 2261–2269.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Tan, M.; Le, Q.V. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. arXiv 2019, arXiv:1905.11946.

- Zanardelli, M.; Guerrini, F.; Leonardi, R.; Adami, N. Image Forgery Detection: A Survey of Recent Deep-Learning Approaches. Multimed. Tools Appl. 2022, 82, 17521–17566.

- Bi, X.; Wei, Y.; Xiao, B.; Li, W. RRU-Net: The Ringed Residual U-Net for Image Splicing Forgery Detection. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Long Beach, CA, USA, 16–17 June 2019; pp. 30–39.

- Gornale, S.S.; Patil, G.; Benne, R. Document Image Forgery Detection Using RGB Color Channel. Trans. Eng. Comput. Sci. 2022, 10, 1–14.

- Singh, A.; Bacchuwar, K.; Bhasin, A. A Survey of OCR Applications. Int. J. Mach. Learn. Comput. 2012, 2, 314–318.

- Khayam, S.A. The Discrete Cosine Transform (DCT): Theory and Application. Course Notes Dep. Electr. Comput. Eng. 2003. Available online: https://api.semanticscholar.org/CorpusID:2593654 (accessed on 7 December 2024).

- Yadav, G.; Maheshwari, S.; Agarwal, A. Contrast Limited Adaptive Histogram Equalization Based Enhancement for Real Time Video System. In Proceedings of the 2014 International Conference on Advances in Computing, Communications and Informatics (ICACCI), Delhi, India, 24–27 September 2014; pp. 2392–2397.

- Tahaoglu, G.; Ulutaş, G.; Ustubioglu, B.; Nabiyev, V.V. Improved Copy Move Forgery Detection Method via L*a*b* Color Space and Enhanced Localization Technique. Multimed. Tools Appl. 2021, 80, 23419–23456.

- Pakala, S.; Mantri, M.B.P.; Kumar, M.N. Forgery Detection in Medical Image and Enhancement Using Modified CLAHE Method. J. Surv. Fish. Sci. 2023, 10, 1930–1937.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).