A Joint Extraction Model of Multiple Chinese Emergency Event–Event Relations Based on Weighted Double Consistency Constraint Learning

Abstract

1. Introduction

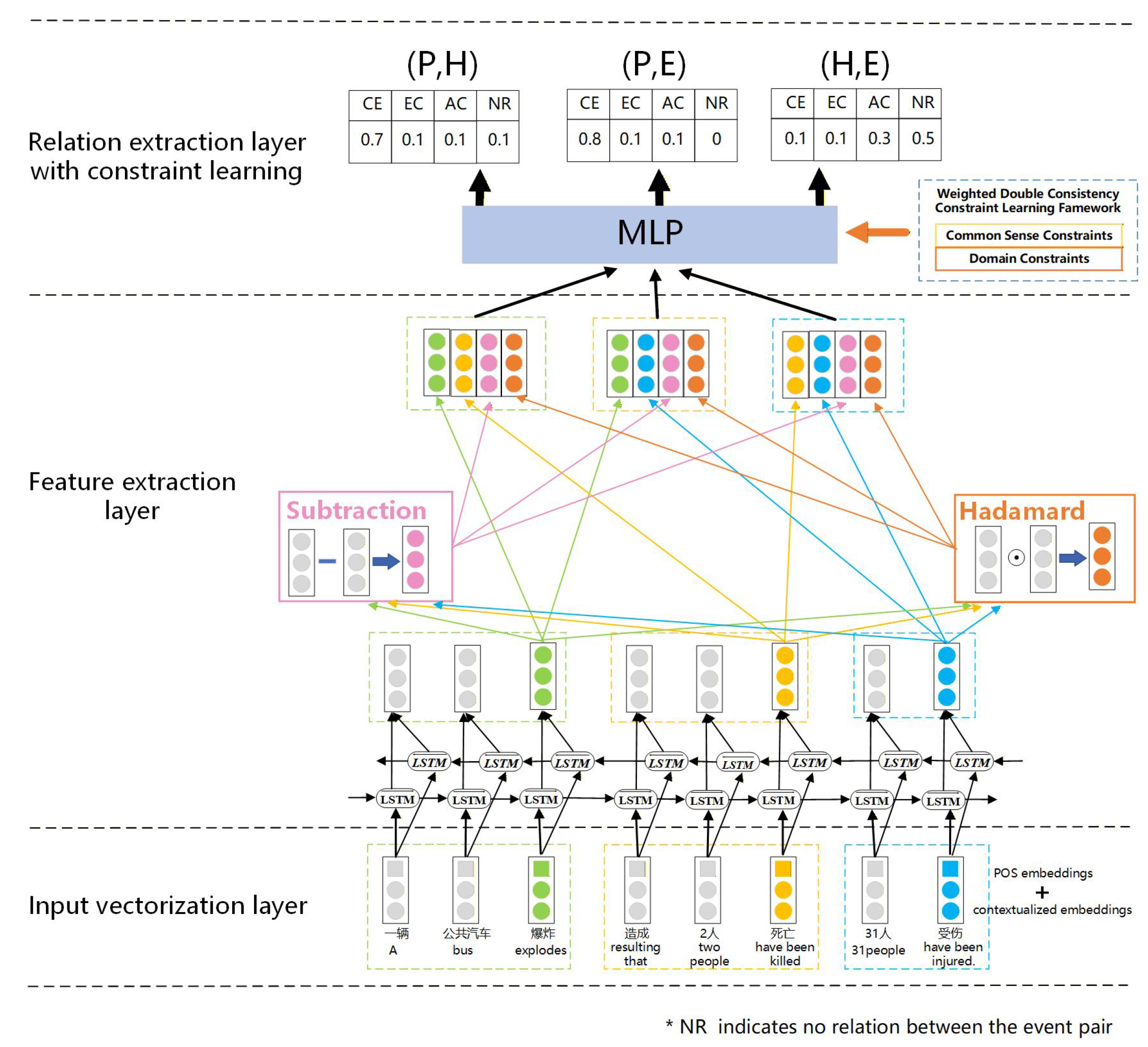

- Firstly, this paper proposes a joint extraction model for multiple Chinese event–event relations based on heterogeneous deep neural networks. By concatenating contextualized embeddings with POS embeddings and encoding them via Bi-LSTM, we aim to capture richer syntactic and semantic features of event pairs; we expect this design to yield more accurate relation predictions, especially when labeled data is limited.

- Secondly, this paper proposes a weighted double consistency constraint learning framework to integrate prior knowledge into neural network models. Both common sense and domain consistency constraints are defined and transformed into differentiable learning objectives with dynamic weights. We hypothesize that this regularization mechanism will guide the model toward logically consistent predictions, thereby improving its generalization capability in few-shot settings.

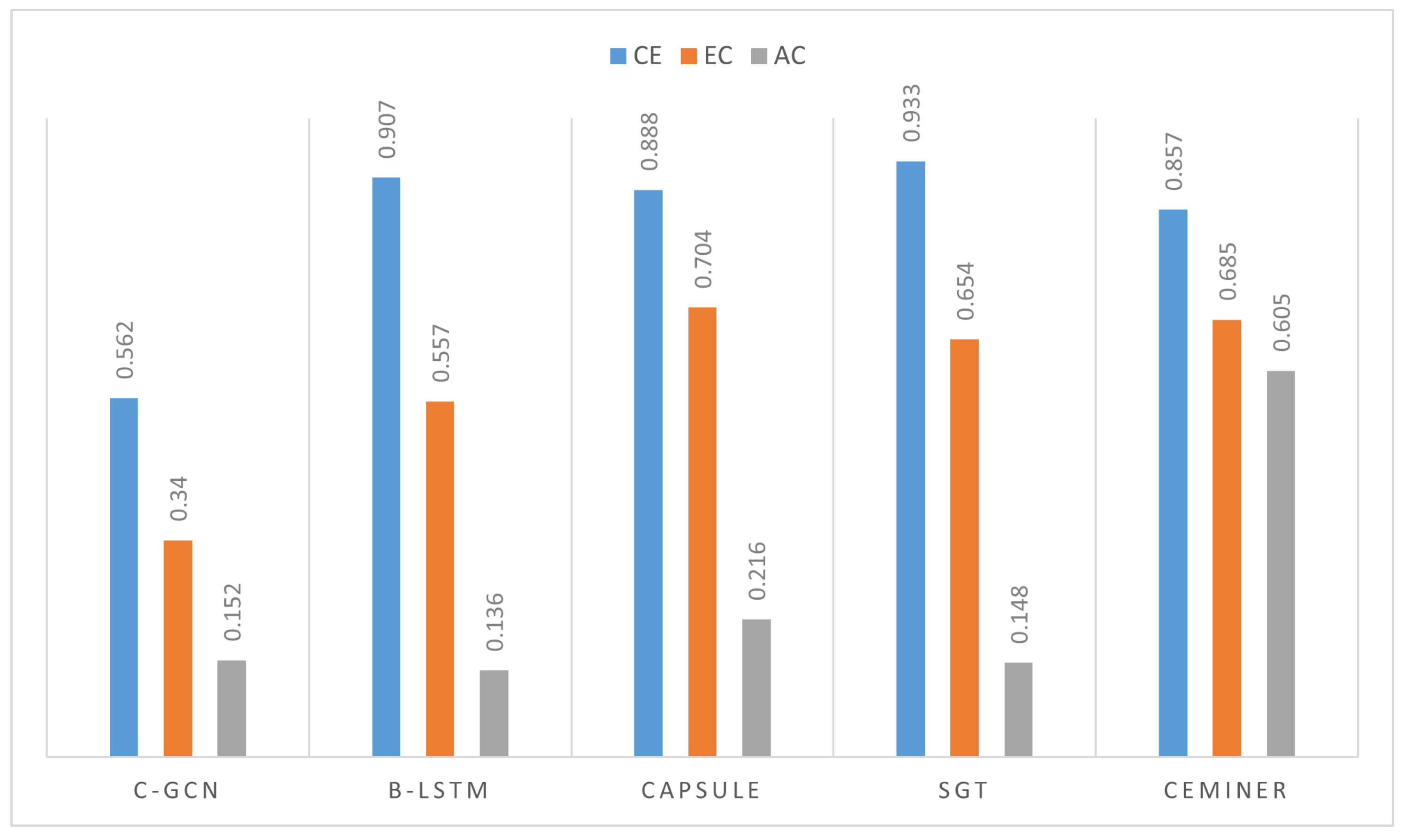

- Finally, experiments were conducted on the open CEC corpus. The results show that the proposed CERMiner outperforms existing state-of-the-art models in the joint extraction of multiple Chinese emergency event–event relations under low-resource conditions, achieving the highest precision (0.848) and F1 score (0.782) among the compared models.
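The first contribution describes concatenating contextualized embeddings with POS embeddings and encoding the result with a Bi-LSTM. The pure-Python sketch below illustrates only the shape of that pipeline. The dimensions, the random untrained weights, the random stand-in inputs, and the choice to represent an event pair by concatenating the hidden states at two hypothetical trigger positions are all illustrative assumptions, not CERMiner's actual configuration; a real model would be built with a deep learning framework.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def matvec(m, v):
    return [sum(w * x for w, x in zip(row, v)) for row in m]

def rand_matrix(rng, rows, cols, scale=0.1):
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]

class LSTMCell:
    """One LSTM direction with randomly initialised (untrained) weights."""

    def __init__(self, input_dim, hidden_dim, rng):
        self.hidden_dim = hidden_dim
        # One (W, U, b) triple per gate: input, forget, output, candidate.
        self.params = {
            gate: (rand_matrix(rng, hidden_dim, input_dim),
                   rand_matrix(rng, hidden_dim, hidden_dim),
                   [0.0] * hidden_dim)
            for gate in ("i", "f", "o", "g")
        }

    def _gate(self, gate, x, h):
        W, U, b = self.params[gate]
        return [a + c + d for a, c, d in zip(matvec(W, x), matvec(U, h), b)]

    def run(self, xs):
        h = [0.0] * self.hidden_dim
        c = [0.0] * self.hidden_dim
        outputs = []
        for x in xs:
            i = [sigmoid(z) for z in self._gate("i", x, h)]
            f = [sigmoid(z) for z in self._gate("f", x, h)]
            o = [sigmoid(z) for z in self._gate("o", x, h)]
            g = [math.tanh(z) for z in self._gate("g", x, h)]
            c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
            h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
            outputs.append(h)
        return outputs

def bilstm(xs, fwd, bwd):
    """Concatenate forward and backward states for every token."""
    forward = fwd.run(xs)
    backward = bwd.run(xs[::-1])[::-1]  # re-align to the original token order
    return [hf + hb for hf, hb in zip(forward, backward)]

rng = random.Random(0)
CTX_DIM, POS_DIM, HIDDEN = 8, 3, 4  # hypothetical sizes
# Hypothetical stand-ins for 5 tokens: a contextual vector and a POS embedding each.
ctx = [[rng.uniform(-1, 1) for _ in range(CTX_DIM)] for _ in range(5)]
pos = [[rng.uniform(-1, 1) for _ in range(POS_DIM)] for _ in range(5)]
tokens = [c + p for c, p in zip(ctx, pos)]  # per-token concatenation
fwd = LSTMCell(CTX_DIM + POS_DIM, HIDDEN, rng)
bwd = LSTMCell(CTX_DIM + POS_DIM, HIDDEN, rng)
states = bilstm(tokens, fwd, bwd)
e1, e2 = 1, 3  # hypothetical trigger positions of the two events
pair_feature = states[e1] + states[e2]
print(len(states), len(states[0]), len(pair_feature))
```

Each token state has twice the hidden size because the two directions are concatenated; the pair feature would then be passed to a classifier such as the MLP named in the ablation study.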

2. Related Work

2.1. Event–Event Relation Extraction

2.2. Few-Shot Learning on Information Extraction

3. Methods

3.1. Dataset

3.2. The Overall Framework

3.2.1. Input Vectorization Layer

3.2.2. Feature Extraction Layer

3.2.3. Relation Extraction Layer

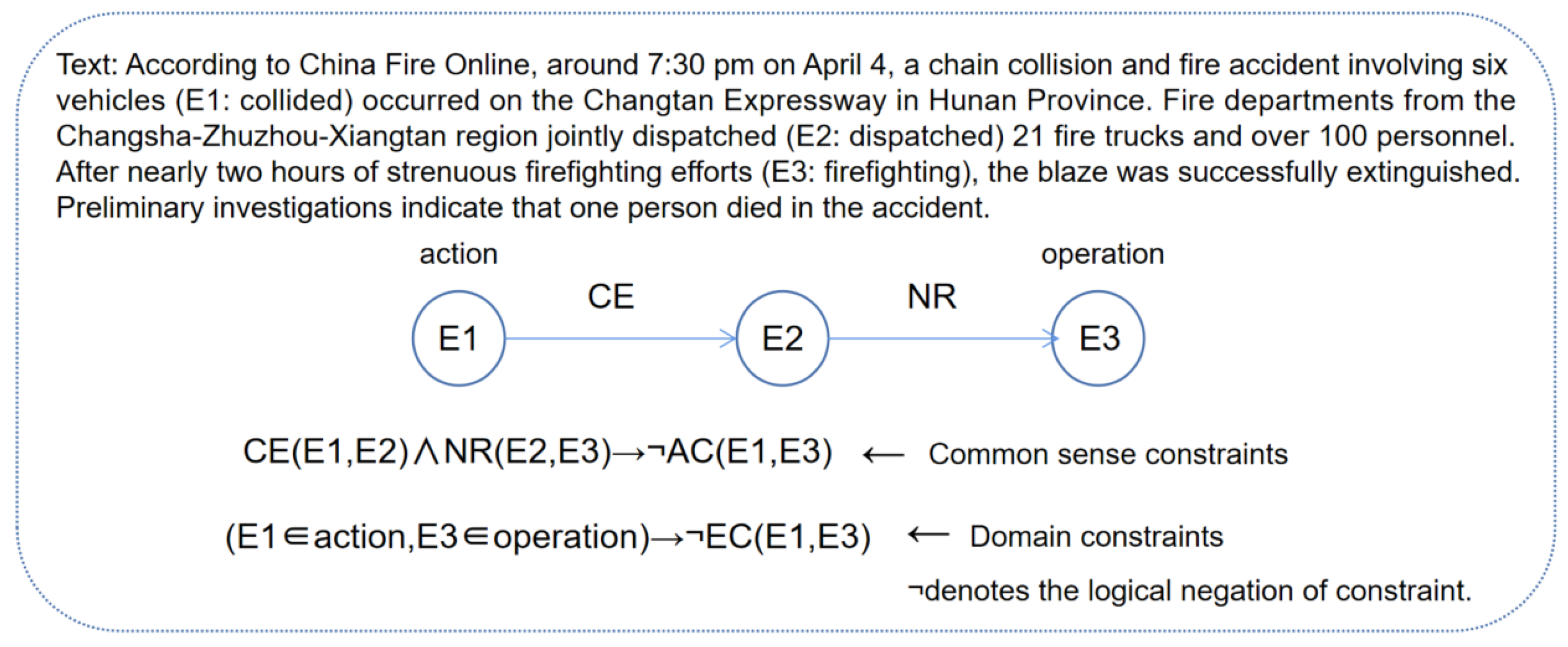

3.3. Weighted Double Consistency Constraints

3.3.1. Common Sense Constraints

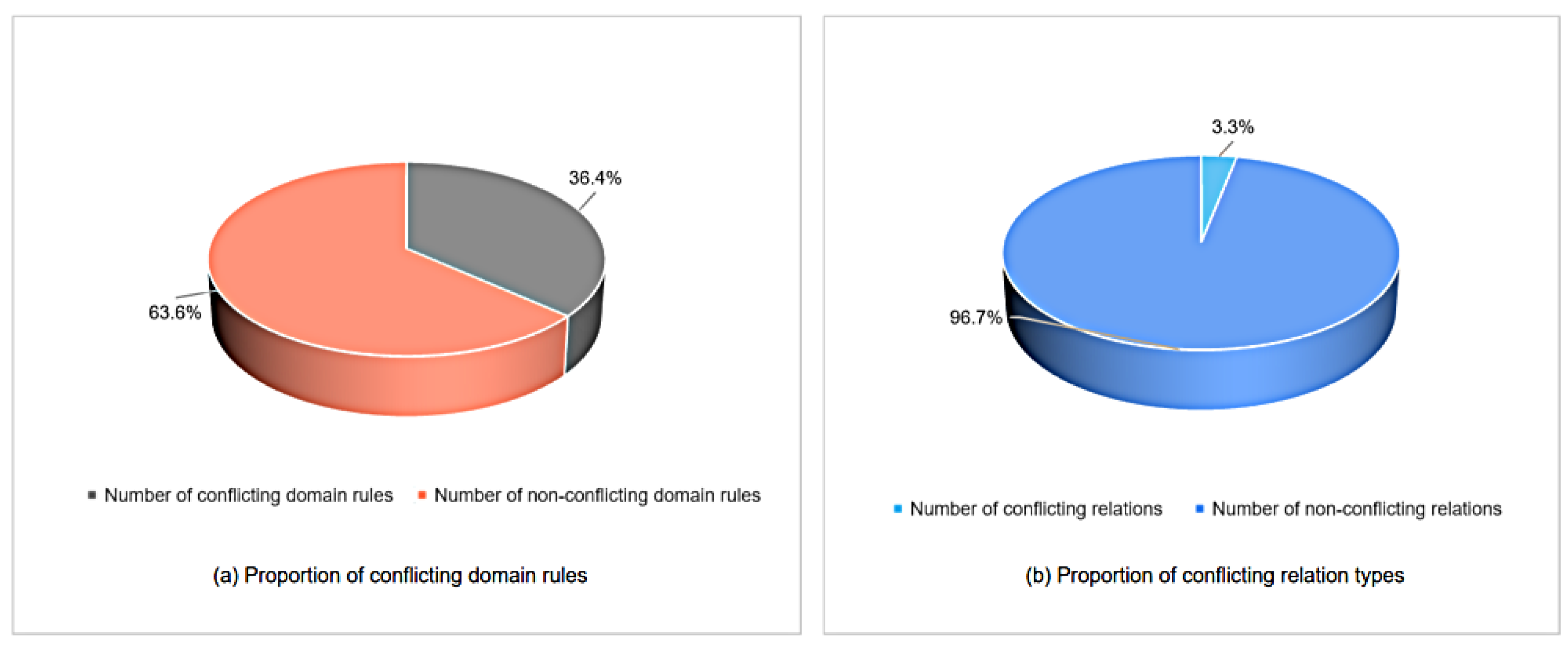
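Turning a logical constraint into a differentiable objective can be sketched as follows. This is a minimal illustration, similar in spirit to the log-space conversion used by Wang et al. [38], and not necessarily the exact formulation of CERMiner: the premises of a rule such as r1(e1, e2) ∧ r2(e2, e3) → ¬r3(e1, e3) are combined with a product t-norm, and a hinge penalizes the case where the premises are more probable than the conclusion. The function names and the probabilities in the usage lines are hypothetical.

```python
import math

EPS = 1e-12  # keeps log() finite when a probability is exactly 0 or 1

def hinge(x):
    """|x|_0 = max(x, 0): only a positive value counts as a violation."""
    return max(x, 0.0)

def implication_loss(premise_probs, conclusion_prob, negated=False):
    """Soft penalty for the rule (A1 and A2 and ...) -> C, or -> not C if negated.

    With a product t-norm the premises hold jointly with probability prod(p_i);
    in log space that is sum(log p_i). The rule is treated as satisfied when this
    does not exceed log P(C) (or log(1 - P(C)) for a negated conclusion)."""
    lhs = sum(math.log(max(p, EPS)) for p in premise_probs)
    target = 1.0 - conclusion_prob if negated else conclusion_prob
    return hinge(lhs - math.log(max(target, EPS)))

# Hypothetical predicted probabilities for r1(e1, e2) and r2(e2, e3).
p_r1, p_r2 = 0.9, 0.8
consistent = implication_loss([p_r1, p_r2], 0.1, negated=True)  # r3 unlikely: rule holds
violated = implication_loss([p_r1, p_r2], 0.7, negated=True)    # r3 likely: rule broken
print(consistent, round(violated, 4))
```

In a trained model the probabilities would be tensors produced by the relation classifier, so the same expression would be differentiable end to end.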

3.3.2. Domain Constraints

- Type inferring consistency

- Type excluding consistency
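The two domain constraint types can be illustrated with the event-type table at the end of this document. The sketch below rests on an assumed reading of that table, labeled here as hypothetical: rows give the type of the first event and columns the type of the second; plain labels form the permitted set (type inferring consistency) and labels prefixed with "¬" are excluded (type excluding consistency). Only three cells are encoded, and the loss form (negative log of the probability mass on permitted labels, plus negative log of one minus each excluded label's probability) is an illustrative choice, not the paper's stated formula.

```python
import math

EPS = 1e-12

# Hypothetical encoding of three cells from the domain-constraint table.
DOMAIN_TABLE = {
    ("Emergency", "StateChange"): "CE, NR, ¬AC",
    ("StateChange", "Action"): "EC, NR",
    ("Movement", "Operation"): "¬EC",
}

def parse_cell(cell):
    """Split a table cell into permitted labels and excluded ("¬") labels."""
    allowed, excluded = set(), set()
    for item in (s.strip() for s in cell.split(",")):
        if item.startswith("¬"):
            excluded.add(item[1:])
        elif item:
            allowed.add(item)
    return allowed, excluded

def domain_loss(type_pair, probs):
    """probs maps each relation label to its predicted probability for the event pair."""
    cell = DOMAIN_TABLE.get(type_pair)
    if cell is None:  # "-" in the table: no constraint for this type pair
        return 0.0
    allowed, excluded = parse_cell(cell)
    loss = 0.0
    if allowed:  # type inferring: probability mass should fall on permitted labels
        loss -= math.log(max(sum(probs[r] for r in allowed), EPS))
    for r in excluded:  # type excluding: each excluded label should be improbable
        loss -= math.log(max(1.0 - probs[r], EPS))
    return loss

probs = {"CE": 0.1, "EC": 0.6, "AC": 0.1, "NR": 0.2}  # hypothetical prediction
print(round(domain_loss(("StateChange", "Action"), probs), 4))
print(round(domain_loss(("Movement", "Operation"), probs), 4))
```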

3.3.3. Weighted Joint Learning Objective Loss

- (1)

- Common sense constraints logical formulas

- (2)

- Domain constraints logical formulas
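The weighted joint objective combines the relation-classification loss with the two constraint losses under dynamic weights. The paper's own weighting rule is not reproduced here; as a clearly swapped-in stand-in, the sketch uses the Dynamic Weight Average (DWA) rule from multi-task learning, which gives more weight to loss terms that are decreasing slowly. The loss names, the temperature, and the loss histories are hypothetical. When too little history is available the weights fall back to 1, matching the fixed weights used in the ablation variants.

```python
import math

EPS = 1e-12

def dwa_weights(history, temperature=2.0):
    """Dynamic Weight Average, used here only as a stand-in weighting rule.

    history maps each loss name to its per-epoch average values (latest last).
    The returned weights sum to the number of loss terms."""
    names = list(history)
    if any(len(history[n]) < 2 for n in names):
        return {n: 1.0 for n in names}  # too little history: equal weights of 1
    # A ratio near or above 1 means the term is decreasing slowly, so it gets more weight.
    ratios = {n: history[n][-1] / max(history[n][-2], EPS) for n in names}
    exps = {n: math.exp(ratios[n] / temperature) for n in names}
    z = sum(exps.values())
    return {n: len(names) * exps[n] / z for n in names}

def joint_loss(current, weights):
    """Weighted sum of the current loss terms."""
    return sum(weights[n] * current[n] for n in current)

history = {  # hypothetical per-epoch average losses
    "relation": [1.0, 0.8],
    "common_sense": [0.5, 0.5],
    "domain": [0.4, 0.2],
}
w = dwa_weights(history)
print({n: round(v, 3) for n, v in w.items()})
print(round(joint_loss({"relation": 0.7, "common_sense": 0.5, "domain": 0.2}, w), 3))
```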

4. Experiment

4.1. Baselines

- C-GCN (Contextualized Graph Convolutional Networks) [43]: This model extracted entity-centric representations for robust relation prediction. It employed an extended graph convolutional network (GCN) to encode the dependency structures of sentences, augmenting the input information and thereby enhancing the model’s learning capability with limited data.

- B-LSTM (BERT-LSTM) [44]: This model employed BERT to encode sentences and their constraint information for relation extraction. A key phrase extraction network was constructed to obtain key phrase features of the context, and a global gating mechanism transferred contextual information to the current phrase representation to enrich the representation of the phrase itself. Augmenting the input information in this way improves the model’s learning capability with limited data.

- Capsule (Attention-Based Capsule Networks with Dynamic Routing) [45]: This model applied a capsule network with an attention mechanism to few-shot relation extraction. Unlike traditional networks that rely on implicit statistical learning, capsule networks with dynamic routing model features explicitly at the component level, which allows them to learn generalizable feature patterns stably from limited data.

- SGT (Syntax-guided Graph Transformer network) [46]: This model extracted temporal relations between events with a syntax-guided Graph Transformer network. Adding syntax-guided attention to the Graph Transformer implements prior knowledge-guided feature extraction, yielding an enhanced contextual representation of event mentions that captures both local and global dependencies between events. The model’s learning capability with limited data was consequently improved.

4.2. Metric

4.3. Implementations

5. Results

6. Ablation Study

- Bi-LSTM+MLP (BM): This model removes the weighted double consistency constraint learning from CERMiner.

- Bi-LSTM+MLP+Common sense constraints (BM-CC): This model removes all domain constraints from CERMiner. The dynamic weight adjustment mechanism is also removed. All weights of loss terms are set to 1.

- Bi-LSTM+MLP+Domain constraints (BM-DC): This model removes all common sense constraints from CERMiner. The dynamic weight adjustment mechanism is also removed. All weights of loss terms are set to 1.

- Bi-LSTM+MLP+Common sense constraints+Domain constraints (BM-CC-DC): This model contains both common sense constraints and domain constraints, but the dynamic weight adjustment mechanism is removed.
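The five configurations differ only in which loss terms are active and whether their weights are dynamic. The sketch below encodes that description as a small configuration table; the `Variant` class, the loss names, and the example values are hypothetical, and the dynamic weights are simply passed in rather than computed.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Variant:
    common_sense: bool     # include the common sense constraint loss
    domain: bool           # include the domain constraint loss
    dynamic_weights: bool  # False -> every active loss term gets weight 1

VARIANTS = {
    "BM": Variant(False, False, False),
    "BM-CC": Variant(True, False, False),
    "BM-DC": Variant(False, True, False),
    "BM-CC-DC": Variant(True, True, False),
    "CERMiner": Variant(True, True, True),
}

def total_loss(variant, losses, dynamic=None):
    """losses holds 'relation', 'common_sense' and 'domain'; dynamic holds optional weights."""
    active = ["relation"]
    if variant.common_sense:
        active.append("common_sense")
    if variant.domain:
        active.append("domain")
    weights = dynamic if (variant.dynamic_weights and dynamic) else {}
    return sum(weights.get(name, 1.0) * losses[name] for name in active)

losses = {"relation": 0.5, "common_sense": 0.2, "domain": 0.1}  # hypothetical values
for name, variant in VARIANTS.items():
    print(name, round(total_loss(variant, losses), 3))
```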

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Shen, H.; Shi, J.; Zhang, Y. CrowdEIM: Crowdsourcing emergency information management tasks to the mobile social media users. Int. J. Disaster Risk Reduct. 2021, 54, 102024.

- Kuai, H.; Huang, J.X.; Tao, X.; Pasi, G.; Yao, Y.; Liu, J.; Zhong, N. Web intelligence (WI) 3.0: In search of a better-connected world to create a future intelligent society. Artif. Intell. Rev. 2025, 58, 265.

- Zhang, B.; Li, L.; Song, D.; Zhao, Y. Biomedical event causal relation extraction based on a knowledge-guided hierarchical graph network. Soft Comput. 2023, 27, 17369–17386.

- Yao, H.-R.; Breitfeller, L.; Naik, A.; Zhou, C.; Rose, C. Distilling Multi-Scale Knowledge for Event Temporal Relation Extraction. In Proceedings of the 33rd ACM International Conference on Information and Knowledge Management (CIKM ’24), Boise, ID, USA, 21–25 October 2024.

- Yong, S.J.; Dong, K.; Sun, A. DOCoR: Document-level OpenIE with Coreference Resolution. In Proceedings of the Sixteenth ACM International Conference on Web Search and Data Mining, Kyoto, Japan, 12–16 February 2024.

- Zhang, M.; Qian, T.; Liu, B. Exploit feature and relation hierarchy for relation extraction. IEEE/ACM Trans. Audio Speech Lang. Process. 2022, 30, 917–930.

- Han, X.; Wang, J. Earthquake Information Extraction and Comparison from Different Sources Based on Web Text. ISPRS Int. J. Geo-Inf. 2019, 252, 2220–9964.

- Xiao, H.; Zheng, S.; Chen, X.Y. Temporal Relationship Extraction of Conflict Events in Open Source Military Journalism. In Proceedings of the 2023 2nd International Conference on Artificial Intelligence and Computer Information Technology (AICIT), Yichang, China, 15–17 September 2023.

- Qiu, J.; Sun, L. A Joint Graph Neural Model for Chinese Domain Event and Relation Extraction with Character-Word Fusion. In Proceedings of the 2024 10th International Conference on Computer and Communications (ICCC), Chengdu, China, 13–16 December 2024.

- Prasad, R.; Dinesh, N.; Lee, A.; Miltsakaki, E.; Robaldo, L.; Joshi, A.; Webber, B. The Penn Discourse TreeBank 2.0. In Proceedings of the Sixth International Conference on Language Resources and Evaluation (LREC’08), Marrakech, Morocco, 28–30 March 2008.

- Khoo, C.S.G.; Kornfilt, J.; Oddy, R.N.; Myaeng, S.H. Automatic Extraction of Cause-Effect Information from Newspaper Text Without Knowledge-based Inferencing. Lit. Linguist. Comput. 1998, 13, 77–186.

- Nichols, M. Efficient Pattern Search in Large, Partial-Order Data Sets. Ph.D. Thesis, University of Waterloo, Waterloo, ON, Canada, 2008.

- Shen, J.; Wu, Z.; Lei, D.; Shang, J.; Ren, X.; Han, J. SetExpan: Corpus-Based Set Expansion via Context Feature Selection and Rank Ensemble. arXiv 2019, arXiv:1910.08192.

- Chang, D.-S.; Choi, K.-S. Causal relation extraction using cue phrase and lexical pair probabilities. In Proceedings of the First International Joint Conference on Natural Language Processing, Hainan Island, China, 22–24 March 2004.

- Girju, R.; Beamer, B.; Rozovskaya, A.; Fister, A.; Bhat, S. A knowledge-rich approach to identifying semantic relations between nominals. Inf. Process. Manag. 2010, 46, 589–610.

- Liu, C.; Sun, W.; Chao, W.; Che, W. Convolution Neural Network for Relation Extraction. In Advanced Data Mining and Applications; Motoda, H., Wu, Z., Cao, L., Zaiane, O., Yao, M., Wang, W., Eds.; Springer: Berlin/Heidelberg, Germany, 2013; pp. 231–242.

- Xu, X.; Gao, T.; Wang, Y.; Xuan, X. Event Temporal Relation Extraction with Attention Mechanism and Graph Neural Network. Tsinghua Sci. Technol. 2022, 27, 79–90.

- Li, T.; Wang, Z. LDRC: Long-tail Distantly Supervised Relation Extraction via Contrastive Learning. In Proceedings of the 2023 7th International Conference on Machine Learning and Soft Computing, Chongqing, China, 5–7 January 2023.

- Man, H.; Ngo, N.T.; Van, L.N.; Nguyen, T.H. Selecting Optimal Context Sentences for Event-Event Relation Extraction. Proc. AAAI Conf. Artif. Intell. 2025, 36, 11058–11066.

- El-allaly, E.-d.; Sarrouti, M.; En-Nahnahi, N. An attentive joint model with transformer-based weighted graph convolutional network for extracting adverse drug event relation. J. Biomed. Inform. 2022, 125, 103968.

- Huang, P.; Zhao, X.; Hu, M.; Tan, Z.; Xiao, W. Logic Induced High-Order Reasoning Network for Event-Event Relation Extraction. Proc. AAAI Conf. Artif. Intell. 2025, 39, 24141–24149.

- Chen, M.; Cao, Y.; Zhang, Y.; Liu, Z. CHEER: Centrality-aware High-order Event Reasoning Network for Document-level Event Causality Identification. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics, Toronto, ON, Canada, 9–14 July 2023.

- Li, P.; Zhu, Q.; Zhou, G.; Wang, H. Global Inference to Chinese Temporal Relation Extraction. In Proceedings of the COLING 2016, the 26th International Conference on Computational Linguistics: Technical Papers, Osaka, Japan, 11–16 December 2016.

- Zhu, G.; Huang, X.; Yang, R.; Sun, R. Relationship Extraction Method for Urban Rail Transit Operation Emergencies Records. IEEE Trans. Intell. Veh. 2023, 8, 520–530.

- Wan, Q.; Wan, C.; Xiao, K.; Hu, R.; Liu, D.; Liu, X. CFERE: Multi-type Chinese financial event relation extraction. Inf. Sci. 2023, 630, 119–134.

- Pan, X.; Wang, P.; Jia, S.E.A. Multi-contrast learning-guided lightweight few-shot learning scheme for predicting breast cancer molecular subtypes. Med. Biol. Eng. Comput. 2024, 62, 1601–1613.

- Miao, W.; Huang, K.; Xu, Z.; Zhang, J.; Geng, J.; Jiang, W. Pseudo-label meta-learner in semi-supervised few-shot learning for remote sensing image scene classification. Appl. Intell. 2024, 54, 9864–9880.

- Schwartz, E.; Karlinsky, L.; Feris, R.; Giryes, R.; Bronstein, A. Baby steps towards few-shot learning with multiple semantics. Pattern Recognit. Lett. 2022, 160, 142–147.

- Hou, J.; Li, X.; Zhu, R.; Zhu, C.; Wei, Z.; Zhang, C. A Neural Relation Extraction Model for Distant Supervision in Counter-Terrorism Scenario. IEEE Access 2020, 8, 225088–225096.

- Yang, S.; Song, D. FPC: Fine-tuning with Prompt Curriculum for Relation Extraction. In Proceedings of the 2nd Conference of the Asia-Pacific Chapter of the Association for Computational Linguistics and the 12th International Joint Conference on Natural Language Processing, Online Only, 20–23 November 2022.

- Chen, Y. A transfer learning model with multi-source domains for biomedical event trigger extraction. BMC Genom. 2021, 22, 31.

- Zhang, Z.; Parulian, N.; Ji, H.; Elsayed, A.; Myers, S.; Palmer, M. Fine-grained Information Extraction from Biomedical Literature based on Knowledge-enriched Abstract Meaning Representation. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing, Online, 1–6 August 2021.

- Yuan, L.; Cai, Y.; Huang, J. Few-Shot Joint Multimodal Entity-Relation Extraction via Knowledge-Enhanced Cross-modal Prompt Model. In Proceedings of the 32nd ACM International Conference on Multimedia, Melbourne, Australia, 28 October–1 November 2024.

- Li, L.; Xiang, Y.; Hao, J. Biomedical event causal relation extraction with deep knowledge fusion and Roberta-based data augmentation. Methods 2024, 231, 8–14.

- Giunchiglia, E.; Stoian, M.C.; Lukasiewicz, T. Deep Learning with Logical Constraints. arXiv 2022, arXiv:2205.00523.

- Hoernle, N.; Karampatsis, R.M.; Belle, V.; Gal, K. MultiplexNet: Towards Fully Satisfied Logical Constraints in Neural Networks. Proc. AAAI Conf. Artif. Intell. 2022, 36, 5700–5709.

- Daniele, A. Knowledge Enhanced Neural Networks for Relational Domains. In PRICAI 2019: Trends in Artificial Intelligence, Proceedings of the Pacific Rim International Conference on Artificial Intelligence, Cuvu, Yanuka Island, Fiji, 26 August 2019; Dovier, A., Montanari, A., Orlandini, A., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 542–554.

- Wang, H.; Chen, M.; Zhang, H.; Roth, D. Joint Constrained Learning for Event-Event Relation Extraction. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online, 6–20 November 2020; Webber, B., Cohn, T., He, Y., Liu, Y., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020; pp. 696–706.

- Liu, Z.; Huang, M.; Zhou, W.; Zhong, Z.; Fu, J.; Shan, J.; Zhi, H. Research on Event-oriented Ontology Model. Comput. Sci. 2009, 36, 189–192+199.

- Sun, R.; Guo, S.; Ji, D.H. Topic Representation Integrated with Event Knowledge. Chin. J. Comput. 2017, 40, 791–804.

- Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; Levy, O.; Lewis, M.; Zettlemoyer, L.; Stoyanov, V. RoBERTa: A Robustly Optimized BERT Pretraining Approach. arXiv 2019, arXiv:1907.11692.

- Chen, Z.; Badrinarayanan, V.; Lee, C.-Y.; Rabinovich, A. GradNorm: Gradient Normalization for Adaptive Loss Balancing in Deep Multitask Networks. arXiv 2017, arXiv:1711.02257.

- Zhang, Y.; Qi, P.; Manning, C.D. Graph Convolution over Pruned Dependency Trees Improves Relation Extraction. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 2–4 November 2018; Riloff, E., Chiang, D., Hockenmaier, J., Tsujii, J., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2018; pp. 2205–2215.

- Xu, S.; Sun, S.; Zhang, Z.; Xu, F.; Liu, J. BERT gated multi-window attention network for relation extraction. Neurocomputing 2022, 492, 516–529.

- Zhang, N.; Deng, S.; Sun, Z.; Chen, X.; Zhang, W.; Chen, H. Attention-Based Capsule Networks with Dynamic Routing for Relation Extraction. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 2–4 November 2018; Riloff, E., Chiang, D., Hockenmaier, J., Tsujii, J., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2018; pp. 986–992.

- Zhang, S.; Ning, Q.; Huang, L. Extracting Temporal Event Relation with Syntax-guided Graph Transformer. In Findings of the Association for Computational Linguistics: NAACL 2022; Carpuat, M., Marneffe, M.-C., Meza Ruiz, I.V., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2022; pp. 379–390.

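| Relation of (e1, e2) | Relation of (e2, e3) | Constraint on (e1, e3) |
|---|---|---|
| CE | CE | − |
| CE | EC | − |
| CE | AC | ¬AC |
| CE | NR | ¬AC |
| EC | CE | − |
| EC | EC | − |
| EC | AC | − |
| EC | NR | ¬CE |
| AC | CE | NR |
| AC | EC | − |
| AC | AC | − |
| AC | NR | NR |
| NR | CE | − |
| NR | EC | ¬CE |
| NR | AC | NR |
| NR | NR | − |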
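The common sense table above can also be used to check hard label predictions for logical consistency. The sketch below assumes, as a hypothetical reading, that the first two columns give the relations of (e1, e2) and (e2, e3), the third column constrains (e1, e3), a plain label must hold, a "¬" label must not hold, and "−" means no constraint (those rows are omitted). The dictionary reproduces the constrained rows of the table; the function names and the example predictions are hypothetical.

```python
from itertools import permutations

# (relation of (e1, e2), relation of (e2, e3)) -> constraint on (e1, e3)
TRANSITIVITY = {
    ("CE", "AC"): "¬AC",
    ("CE", "NR"): "¬AC",
    ("EC", "NR"): "¬CE",
    ("AC", "CE"): "NR",
    ("AC", "NR"): "NR",
    ("NR", "EC"): "¬CE",
    ("NR", "AC"): "NR",
}

def is_consistent(r12, r23, r13):
    """True if the label of (e1, e3) respects the table entry for (r12, r23)."""
    rule = TRANSITIVITY.get((r12, r23))
    if rule is None:
        return True
    if rule.startswith("¬"):
        return r13 != rule[1:]
    return r13 == rule

def violations(pred):
    """pred maps ordered event pairs to predicted labels; returns violating triples."""
    events = sorted({e for pair in pred for e in pair})
    found = []
    for a, b, c in permutations(events, 3):
        if (a, b) in pred and (b, c) in pred and (a, c) in pred:
            if not is_consistent(pred[(a, b)], pred[(b, c)], pred[(a, c)]):
                found.append((a, b, c))
    return found

pred = {("e1", "e2"): "AC", ("e2", "e3"): "CE", ("e1", "e3"): "CE"}  # hypothetical
print(violations(pred))
```

Here AC followed by CE requires NR between e1 and e3, so the predicted CE is reported as a violation.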

| | Emergency | Movement | StateChange | Statement | Perception | Action | Operation |
|---|---|---|---|---|---|---|---|
| Emergency | - | ¬EC | CE, NR, ¬AC | - | - | - | - |
| Movement | - | - | - | - | - | - | ¬EC |
| StateChange | EC, NR, ¬AC | - | - | - | - | EC, NR | - |
| Statement | CE, NR | CE, NR | - | - | AC, NR | - | AC, NR |
| Perception | - | - | - | - | - | - | - |
| Action | - | - | - | - | - | - | ¬EC |
| Operation | - | - | - | - | - | - | AC, NR, ¬EC |

| Metric | C-GCN | B-LSTM | Capsule | SGT | CERMiner |
|---|---|---|---|---|---|
| P | 0.575 | 0.664 | 0.745 | 0.808 | 0.848 |
| R | 0.382 | 0.631 | 0.695 | 0.733 | 0.727 |
| F1 | 0.458 | 0.641 | 0.717 | 0.765 | 0.782 |

| Model | P | R | F1 |
|---|---|---|---|
| BM | 0.825 | 0.702 | 0.759 |
| BM-DC | 0.840 | 0.715 | 0.772 |
| BM-CC | 0.839 | 0.720 | 0.775 |
| BM-CC-DC | 0.826 | 0.717 | 0.768 |
| CERMiner | 0.848 | 0.727 | 0.782 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, J.; Tang, Z.; Ma, L.; Zhang, Z.; Yang, H. A Joint Extraction Model of Multiple Chinese Emergency Event–Event Relations Based on Weighted Double Consistency Constraint Learning. Symmetry 2025, 17, 1910. https://doi.org/10.3390/sym17111910

Chen J, Tang Z, Ma L, Zhang Z, Yang H. A Joint Extraction Model of Multiple Chinese Emergency Event–Event Relations Based on Weighted Double Consistency Constraint Learning. Symmetry. 2025; 17(11):1910. https://doi.org/10.3390/sym17111910

Chicago/Turabian Style: Chen, Jianhui, Zhiyi Tang, Lianfang Ma, Zitong Zhang, and Haonan Yang. 2025. "A Joint Extraction Model of Multiple Chinese Emergency Event–Event Relations Based on Weighted Double Consistency Constraint Learning" Symmetry 17, no. 11: 1910. https://doi.org/10.3390/sym17111910

APA Style: Chen, J., Tang, Z., Ma, L., Zhang, Z., & Yang, H. (2025). A Joint Extraction Model of Multiple Chinese Emergency Event–Event Relations Based on Weighted Double Consistency Constraint Learning. Symmetry, 17(11), 1910. https://doi.org/10.3390/sym17111910