Abstract

The rapid development of artificial intelligence technology is driving intelligent transformation across many fields, and knowledge graph construction in particular has seen significant achievements. However, research on hyper-relational knowledge graphs in the industrial domain remains limited. Traditional construction methods suffer from low automation, high cost, and poor reproducibility and portability. To address these challenges, this paper proposes an optimized process, based on large language models, for constructing a hyper-relational knowledge graph of mine hoist faults. The process combines the strengths of large language models with the logical connections within fault knowledge, drawing on GPT’s reasoning abilities. A combined strategy of template-based and template-free prompts is designed to generate fault entities and relationships. To address the data incompleteness that prompt engineering can introduce, link prediction is used to optimize the initial data generated by GPT o1-preview. We integrated the graph’s topological structure with domain-specific logical rules, applied the variational EM algorithm for alternating optimization, and incorporated text embeddings to further enhance data optimization. Experimental results show that the optimized MHSD achieved a 0.008 improvement in MRR over the unoptimized MHSD and a 0.002 improvement in MRR compared with the recent KICGPT. Finally, the optimized data were imported into Neo4j for visualization.

1. Introduction

In modern mining operations, the mine hoist system plays a crucial role as it is one of the primary transportation mechanisms. It is responsible not only for lifting ore and personnel from underground mines to the surface but also for handling the transportation of materials and equipment throughout the mining process. The stability and smooth operation of the hoist system are critical to the continuity and efficiency of mining production, making it a central component of mining operations. However, the hoist system operates in complex and dynamic environments, facing numerous challenges [1]. First, the system is required to run continuously under high-load conditions, which can easily lead to equipment fatigue and wear. Additionally, the harsh conditions of the mine, including humidity, temperature variations, and dust, accelerate equipment aging and increase the likelihood of system failures. Moreover, the mine hoist system is structurally complex, involving mechanical, electrical, and hydraulic subsystems, where a failure in any component can cause the entire system to halt. Due to these factors, various types of failures frequently occur in mine hoist systems. For example, hoisting ropes may break after prolonged use; electrical systems can fail due to overload or short circuits; and mechanical components may malfunction due to wear or insufficient lubrication. Such failures not only interrupt production but can also lead to safety hazards, posing significant risks to both mine efficiency and personnel safety. Given this context, the rapid diagnosis and repair of hoist system failures have become critical issues in mining production [2]. Traditional diagnostic methods, which mainly rely on human expertise and periodic maintenance, are limited in their ability to handle the increasing complexity and operational demands of modern hoist systems. 
Therefore, there is an urgent need for more efficient and precise diagnostic and repair techniques to ensure the stable operation of the hoist system. Effective monitoring, diagnosis, and prevention of mine hoist failures thus hold great practical significance [3].

Traditional fault diagnosis methods have primarily relied on expert experience and rule-based systems, which, while effective in certain applications, face considerable limitations. These methods often struggle to acquire and update specialized knowledge, a process that is time-consuming and hard to adapt as systems or technologies evolve [4,5]. Additionally, handling complex or novel fault patterns is a significant obstacle for rule-based systems due to their limited flexibility and lack of adaptive learning capabilities. With the advancement of big data and artificial intelligence technologies, knowledge graphs have emerged as a powerful tool for representing knowledge in a more dynamic and interconnected way. Unlike traditional methods, knowledge graphs efficiently organize and integrate complex heterogeneous data from various structured and unstructured sources, allowing for a more comprehensive and scalable approach to fault diagnosis [6,7]. By capturing multi-dimensional relationships among fault components, knowledge graphs facilitate a deeper understanding of fault patterns and enable continuous improvement in diagnostic models. This approach provides a foundation for automated, data-driven fault diagnosis solutions that are better equipped to handle the complexities of modern industrial systems. However, conventional knowledge graphs typically express only simple one-to-one or one-to-many relationships between entities, which fall short of the demands of complex industrial systems. To address this, hyper-relational knowledge graphs have been developed. These graphs not only capture multiple relationships between entities but also store attribute information associated with these relationships, enabling multi-dimensional knowledge association.
Hyper-relational knowledge graphs allow fine-grained descriptions of each entity and its relationships; for instance, they can record the cause, timing, and frequency of a fault as well as its impact on other subsystems. This combination of multi-dimensional information enhances the accuracy and comprehensiveness of knowledge graphs in fault diagnosis. Large language models (LLMs), such as GPT, have made groundbreaking progress in natural language processing, with powerful capabilities in language understanding and generation [8]. LLMs such as GPT can automatically extract knowledge from large amounts of unstructured text, significantly reducing the cost of manually constructing knowledge graphs [9,10]. However, applying LLMs to fault diagnosis for mine hoisting systems presents several challenges. First, mine hoisting systems involve extensive specialized terminology, such as component names, fault types, and operational standards. These domain-specific terms are often underrepresented in general language models, which can lead to misinterpretations or biases when the model processes specific fault descriptions. Second, the fault patterns in mine hoisting systems are highly diverse, encompassing mechanical, electrical, and hydraulic issues, each with distinct causes, influencing factors, and multi-layered relationships. Traditional models struggle to capture and represent this complexity effectively. Finally, data sparsity is a significant issue in this field: rare fault cases occur infrequently in datasets, making it difficult for models to learn to identify and predict these uncommon faults. These challenges call for refined optimization and specialized designs so that LLMs can handle the unique complexities of mine hoisting system fault analysis.
To address these challenges, this paper explores the application of large language models in the automated construction of hyper-relational knowledge graphs for mine hoisting system fault diagnosis. By integrating the exceptional natural language processing capabilities of LLMs with the multi-relational representation features of hyper-relational knowledge graphs, this study achieves the automatic extraction and systematic organization of fault knowledge from complex unstructured text data, thus constructing a more comprehensive diagnostic system. This approach significantly enhances the efficiency of knowledge updating and effectively addresses the challenges of handling complex fault patterns, providing strong technical support for the stable operation of mine hoisting systems. The main contributions of this paper are as follows:

- Generation of hyper-relational data: Leveraging the learning, reasoning, and generation capabilities of GPT o1-preview, template-based prompts are used to generate data and template-free prompts are used to enhance it, producing a fault dataset in the form of (h, r1, t1, r2, t2, r3, t3, …);

- Completion of hyper-relational data: Link prediction is used to optimize the initial data generated by GPT by integrating the topological structure and logical rules of the knowledge graph. The variational EM algorithm is introduced for alternating optimization, strengthening both hyper-relational and rule-based modeling and further refining the knowledge graph. The link prediction task is evaluated using the mean reciprocal rank (MRR) metric;

- Knowledge graph visualization: The final optimized dataset, MHSD (optimized), is visualized in Neo4j to facilitate inspection and analysis.
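The Neo4j visualization step in the last contribution can be sketched as follows. This is a minimal illustration, not the authors' import code: it assumes each hyper-relational record has been regrouped into a head entity plus (relation, tail) pairs, and the `Entity` label, the `HAS_RELATION` relationship type, and the relation names are hypothetical. It only builds Cypher text; in practice, passing names as query parameters through a Neo4j driver is safer than string interpolation.

```python
from typing import List, Tuple

# Hypothetical record layout: a head entity plus (relation, tail) pairs,
# i.e. (h, r1, t1, r2, t2, ...) regrouped into pairs.
Record = Tuple[str, List[Tuple[str, str]]]


def quote(value: str) -> str:
    """Return a single-quoted Cypher string literal, escaping backslashes and quotes."""
    return "'" + value.replace("\\", "\\\\").replace("'", "\\'") + "'"


def to_cypher(record: Record) -> List[str]:
    """Build one MERGE statement per (relation, tail) pair of a hyper-relational record."""
    head, pairs = record
    statements = []
    for relation, tail in pairs:
        # Cypher cannot parameterise relationship types, so the relation name
        # is stored as a property on a generic HAS_RELATION edge.
        statements.append(
            f"MERGE (h:Entity {{name: {quote(head)}}}) "
            f"MERGE (t:Entity {{name: {quote(tail)}}}) "
            f"MERGE (h)-[:HAS_RELATION {{type: {quote(relation)}}}]->(t)"
        )
    return statements


if __name__ == "__main__":
    record = ("tear", [("located_at", "hopper belt"), ("countermeasure", "reduce input")])
    for stmt in to_cypher(record):
        print(stmt)
```

Keeping a single relationship type with a `type` property avoids building relationship types from model output; mapping each relation to its own type reads better in the Neo4j browser but requires whitelisting the names first.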

2. Related Work

In the research on Hyper Knowledge Graph Construction for Mine Hoist Faults (HKGCF), related work primarily focuses on three main areas: hyper-relational knowledge graphs, data generation, and link prediction.

2.1. Hyper Knowledge Graph

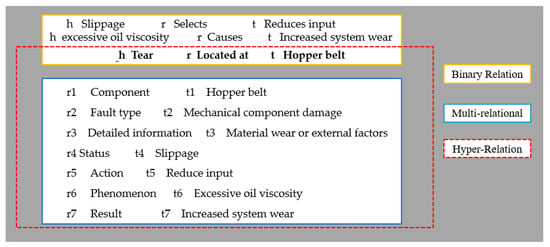

A hyper-relational knowledge graph is an extended form of a traditional knowledge graph that can represent more complex and diverse relationships, thereby better reflecting the intricate associations in the real world. The knowledge representation format directly affects the performance of HKGC tasks. Currently, research on knowledge graphs mainly focuses on three aspects: binary relations, n-ary relations, and hyper-relations [11]. To more intuitively demonstrate the differences between these knowledge representation formats, the fault data for mine hoisting systems used in this paper are described as follows: “Crack fault located on the component hopper belt, categorized as mechanical device failure, with detailed information involving material wear or external factor influence. In the event of slippage, input should be reduced, as increased oil viscosity exacerbates system wear”. Figure 1 shows different forms of knowledge representation.

Figure 1.

Different Methods of Knowledge Representation for Mine Hoist Fault Data.

Figure 1 compares binary, multi-relational, and hyper-relational knowledge representation formats, offering an intuitive view of the differences in what each format can express. Binary relations capture only a single relationship, such as “tear located at hopper belt”, which conveys basic fault location information but lacks a comprehensive description of the fault context. Multi-relational representations, by introducing additional relationship entities, can display multiple dimensions of information to some extent but remain insufficient for complex scenarios. In contrast, hyper-relational knowledge graphs support multi-attribute associations, integrating multi-level information such as “slippage”, “excessive oil viscosity”, and “reduce input” within a single framework and thereby providing a complete representation of the causes, conditions, impacts, and countermeasures of mine hoist faults. Hyper-relational knowledge graphs also support fine-grained association of information. They record not only fault types (such as “mechanical component damage”) and influencing factors (such as “material wear or external factors”) but also multi-dimensional relationship attributes (e.g., “condition” as “slippage”, “phenomenon” as “excessive oil viscosity”, and “outcome” as “increased system wear”). This multi-dimensional association enables knowledge graphs to infer countermeasures such as “reduce input” directly during fault analysis. Therefore, hyper-relational knowledge graphs offer richer knowledge representation and more precise inferential support than traditional formats, providing robust technical support for the intelligent operation and maintenance of complex systems.
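To make the contrast in Figure 1 concrete, the sketch below encodes the example fault once as a hyper-relational fact (a main triple plus qualifiers) and derives the binary and multi-relational views from it. The qualifier keys (`fault_type`, `condition`, and so on) and the reification scheme with an auxiliary fact node are illustrative assumptions, not the paper's schema; the values come from the example above.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Triple = Tuple[str, str, str]


@dataclass
class HyperRelationalFact:
    """A main (head, relation, tail) triple plus qualifier key-value pairs."""
    head: str
    relation: str
    tail: str
    qualifiers: Dict[str, str] = field(default_factory=dict)

    def to_binary(self) -> List[Triple]:
        """Binary view: only the main triple survives; every qualifier is lost."""
        return [(self.head, self.relation, self.tail)]

    def to_reified(self, fact_id: str) -> List[Triple]:
        """Multi-relational view: an auxiliary fact node links each role to its value."""
        triples = [
            (fact_id, "head", self.head),
            (fact_id, "relation", self.relation),
            (fact_id, "tail", self.tail),
        ]
        triples += [(fact_id, key, value) for key, value in self.qualifiers.items()]
        return triples


# Hypothetical qualifier keys; values taken from the hopper-belt example.
fault = HyperRelationalFact(
    head="tear",
    relation="located_at",
    tail="hopper belt",
    qualifiers={
        "fault_type": "mechanical component damage",
        "influencing_factor": "material wear or external factors",
        "condition": "slippage",
        "phenomenon": "excessive oil viscosity",
        "outcome": "increased system wear",
        "countermeasure": "reduce input",
    },
)
```

The binary view keeps one triple and drops all six qualifiers, while the reified view keeps everything but spreads one fact over nine triples joined only through an artificial node; the hyper-relational form keeps the fact together as a single unit.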

Effectively handling complex hyper-relational data requires combining multiple methods to model and predict multi-dimensional, multi-relational structures efficiently. Traditional triple embedding methods struggle to capture the complexity of hyper-relational data. Consequently, some studies [12,13] have proposed hyper-relational knowledge graph embeddings that extend the representation to quadruples or quintuples and incorporate contextual information (such as time, location, and event type) to capture multi-dimensional relationships more comprehensively. Graph neural networks (GNNs), with their ability to learn topology, have been widely applied to hyper-relational data; they update node embeddings through message passing, effectively capturing multi-layered relationships within complex graph structures. Studies [14,15] highlight that GNNs can aggregate information from neighboring nodes, making them particularly suited to representing intricate topological relationships. Multilayer perceptrons (MLPs) combined with attention mechanisms offer an alternative approach to modeling relationships in multi-dimensional data [16] and show clear advantages in capturing hierarchical relationship features. The Transformer model proposed by Vaswani et al. [17] uses self-attention, with multi-head attention providing dynamic weighting, which lets the model focus on key features within hyper-relational data. For integrating logical rules, the study in [18] suggests embedding logical rules into the model as a regularization term optimized with the variational EM algorithm, facilitating the learning of complex relationships that align with domain logic. Contrastive learning improves embedding quality by drawing similar samples closer and pushing dissimilar samples further apart, and studies [19,20] have validated its effectiveness on hyper-relational data.
Finally, Transformer-based methods for hyper-relational processing demonstrate superior performance in handling long-range dependencies, utilizing multi-head attention and layer normalization modules [21,22] to achieve higher precision in hyper-relational knowledge graph modeling tasks. In the field of fault knowledge graphs, Li et al. [23] introduced knowledge graphs into the alarm analysis process, using network alarm knowledge to analyze network anomalies and accurately locate the source of faults. Liang et al. [24] developed a power fault knowledge graph and employed a fault retrieval method to address the cold-start recommendation problem, predicting users’ general search intentions through polymorphic search recommendation methods, thereby improving the reliability of intelligent grid operation and decision-making support. Han et al. [25] proposed a fault knowledge graph for steel production line equipment, which enhances fault analysis accuracy and efficiency through a reinforcement learning framework and graph neural networks, providing an effective maintenance plan. Dong et al. [26] proposed a method for constructing a mine hoist fault knowledge graph using BiLSTM-CRF for entity recognition and ERNIE for relation extraction to build a triple-based fault knowledge graph, though their research had not yet delved into hyper-relational modeling in the fault domain.

2.2. Data Generation

In recent years, large language models (LLMs) with hundreds of billions of parameters or more have made significant advances in natural language processing (NLP), with OpenAI’s GPT series standing out for its superior performance compared to other models of the same period [27]. In September 2023, GPT-4V extended these capabilities to visual processing, allowing users to instruct the model to analyze images. Subsequently, Whisper V3 improved speech recognition accuracy and supported more languages. In 2024, GPT-4o further integrated audio-visual processing with natural language interaction. In September 2024, the GPT-o1 model was released; its key feature is a general complex reasoning ability that simulates a human “thought process”. Trained with reinforcement learning to produce a chain of thought (CoT), it reasons through a problem before delivering an answer. Compared to earlier models, GPT-o1 demonstrates significant advantages in solving complex problems. For example, it achieved an accuracy of 83.3% on a qualifying exam for the International Mathematical Olympiad, far surpassing the 13.4% accuracy of GPT-4o [28]. Prompt engineering has played a crucial role in improving the task comprehension and output quality of large language models. By carefully designing and refining prompts, models can be guided to better understand task requirements and improve performance [29]. However, prompt engineering has its limitations, particularly when models generate illogical or inaccurate information. Continuous validation and optimization are essential to ensure the reliability of the model’s outputs [30].

2.3. Link Prediction

In hyper-relational knowledge graph construction, link prediction on the fault data generated by large language models is essential because of the complexity and domain-specific nature of these data. Link prediction infers plausible but unannotated relationships, thereby completing the knowledge graph. In 2019, Yao et al. [31] proposed the KG-BERT model, in which the textual descriptions of the head entity, relation, and tail entity are concatenated and fed into BERT. The final [CLS] token representation serves as the embedding of the target triple and is passed to a classifier that determines whether the triple is valid. To enhance the representation of structured knowledge within text encoders, Li et al. [32] introduced LP-BERT in 2020, performing multi-task pre-training that combined masked language modeling with masked entity modeling and masked relation modeling. While these approaches achieved strong performance by integrating structured knowledge, their modeling was limited to the triple level and failed to capture long-range structural semantics in knowledge graphs. In 2021, Yu et al. [33] introduced Hy-transformer, which combined hypergraph structures with the Transformer model, leveraging the sequential modeling capacity of Transformers and the complex relationship representation of hypergraphs to handle multi-relational natural language processing tasks. Wang et al. [34] proposed the StAR model, which divides each triple into two asymmetric parts, similar to translation-based graph embedding methods, encodes the parts as contextual representations, and uses a deterministic classifier and a spatial measurement to learn both representations and structure. In 2023, Hu et al. [35] introduced HyperFormer, integrating hypergraph and Transformer architectures to improve the modeling of complex data and relationships by combining advanced graph structures with powerful sequence modeling. In 2024, Hu et al. [36] further introduced HYPERMONO, which focuses on multi-relational and multi-entity issues within knowledge graphs and textual data and leverages language models to enhance understanding and reasoning across complex relationships and hierarchical data. Also in 2024, Wei et al. [37] proposed KICGPT, which combines large language models with a triple retriever. Through knowledge prompt strategies, KICGPT effectively addresses the long-tail problem in knowledge graph completion without requiring additional training or fine-tuning.
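Since the link prediction results in this paper are reported as mean reciprocal rank (MRR), a minimal sketch of the metric may help. It assumes each test query comes with a list of candidate scores and the index of the true answer; ties are resolved optimistically (rank = 1 + number of strictly higher scores), which is only one of several conventions. It computes raw MRR; the commonly reported filtered variant would first remove other known true candidates from each list.

```python
from typing import Sequence


def reciprocal_rank(scores: Sequence[float], true_index: int) -> float:
    """Reciprocal rank of the true candidate; rank = 1 + number of strictly higher scores."""
    true_score = scores[true_index]
    rank = 1 + sum(1 for s in scores if s > true_score)
    return 1.0 / rank


def mean_reciprocal_rank(all_scores: Sequence[Sequence[float]],
                         true_indices: Sequence[int]) -> float:
    """Average reciprocal rank over all test queries."""
    if not all_scores or len(all_scores) != len(true_indices):
        raise ValueError("need at least one query and one true index per query")
    total = sum(reciprocal_rank(s, i) for s, i in zip(all_scores, true_indices))
    return total / len(all_scores)


# Two toy queries: the true answer ranks 2nd in the first and 1st in the second.
print(mean_reciprocal_rank([[0.1, 0.9, 0.3], [0.8, 0.2]], [2, 0]))  # 0.75
```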

3. Method

This section provides a detailed explanation of the proposed LLM-HKGCF method for constructing a knowledge graph of mine hoist faults, covering both data generation and link prediction.

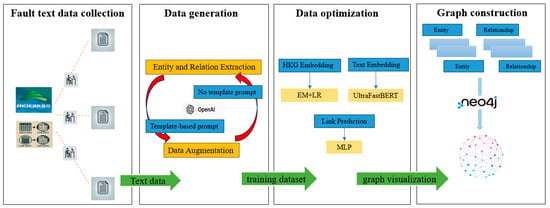

3.1. Framework

This paper proposes LLM-HKGCF, whose framework is illustrated in Figure 2. The method takes textual fault data as input, analyzes the text with LLMs, and constructs an industrial fault knowledge graph. First, the GPT model’s few-shot and template-free learning capabilities are used: task instructions and output examples are designed for data annotation and data augmentation to obtain the dataset required by the method. Next, link prediction is applied to optimize the generated data. Finally, a knowledge graph of mine hoist faults is constructed from the optimized data.

Figure 2.

Flowchart for optimizing the construction of a mine hoist fault hyper-relational knowledge graph based on GPT.

3.2. Generation of Mine Hoist Fault Dataset

3.2.1. Data Acquisition

The mine hoist is a critical piece of equipment for ensuring safe production in mining operations, serving as a vital passage between the surface and underground. There are two common types of mine hoists: single-rope winding hoists and multi-rope friction hoists. The single-rope winding hoist is primarily used in shallow mine environments due to its relatively simple structure, which meets the needs of shallower operations. However, in deep mines with high annual output requirements, the multi-rope friction hoist is more commonly employed due to its ability to provide more stable and efficient lifting capacity. Figure 3 illustrates a schematic diagram of the multi-rope friction hoist and its control system from a large state-owned non-ferrous metal group.

Figure 3.

Multi-rope friction hoist.

Figure 3 provides a visual representation of the structure and function of the hoist and its control system. The multi-rope friction hoist uses an electric motor as its power source and a guide wheel device as part of the transmission system. Containers are lifted within the shaft by the friction between the steel ropes and the drum lining blocks. A braking system, consisting of disc brakes and a hydraulic station, brings the hoist to a stop. The system also employs programmable logic controllers and industrial control computers to rapidly collect and process various hoist parameters. A high-speed counter board calculates the hoist speed, which is then used to control its operation. Additionally, a main controller, monitoring encoder, analog bar display, and digital display indicate the position of the hoist within the shaft. To ensure safe operation, electrical protection, mechanical protection, and hydraulic control systems are integrated into the design.

Inspection reports, as a key source of fault-related data, provide professional descriptions of various faults using specialized equipment terminology. The structure and language of these reports differ significantly from open-domain texts. To better select annotation corpora and build an annotation system, this paper briefly analyzes the structure and language used in inspection reports. These reports follow a highly structured format. They start with “XX Work Area XX Inspection Report”, followed by a file number. The first line lists the responsible personnel (inspector, inspection system, and time), while the main body contains detailed descriptions of faults using technical terms and complex language. The report ends with reference values for equipment and systems such as armature current, voltage, and oil pressure. However, the introductory and concluding sections contain no fault-related information and serve only as an index or reference, so they are excluded from model training in this study. Only the fault descriptions are retained for annotation and experiments. Additionally, the National Standard of the People’s Republic of China outlines safety requirements for mine hoists, which include equipment design and control systems [38]. Using the classifications and terminology from these standards, this paper builds its corpus. Data were collected through Python web crawlers and manual selection from inspection reports and maintenance logs in the Northwest State-owned Industrial Group. Before experimentation, the raw data underwent manual preprocessing:

- Removal of Irrelevant Text: The collected texts were initially screened to discard those with insufficient entities or texts that were too short. Regular expressions were used to remove the header and footer information from the inspection reports, keeping only the fault description sections;

- Privacy Information Desensitization: Personal names, work areas, and job-related information that were irrelevant to the task in the inspection reports and maintenance logs were removed to protect personal privacy;

- Punctuation Handling: Unnecessary characters, such as newline characters and redundant whitespace, were removed because they reduce model training efficiency and could cause errors during model execution. The text was then segmented into sentences at punctuation marks such as periods (“.”), semicolons (“;”), and exclamation marks (“!”). The manually preprocessed MHSD (text) dataset was 328 KB. Table 1 shows a portion of the text.
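The preprocessing steps above can be approximated with regular expressions, as in the sketch below. The header and footer patterns are hypothetical, since the exact report layout is only described in prose, and privacy desensitization is not shown. Both ASCII and full-width delimiters are included on the assumption that the original reports are written in Chinese.

```python
import re
from typing import Iterable, List

# Sentence delimiters: ASCII and full-width forms (assumed Chinese source text).
SENTENCE_RE = re.compile(r"[^.;!。；！]+[.;!。；！]?")


def clean_report(text: str, drop_patterns: Iterable[str]) -> str:
    """Drop lines matching any header/footer pattern, then collapse newlines and whitespace."""
    patterns = [re.compile(p) for p in drop_patterns]
    kept = [line for line in text.splitlines()
            if not any(p.search(line) for p in patterns)]
    return re.sub(r"\s+", " ", " ".join(kept)).strip()


def split_sentences(text: str, min_chars: int = 5) -> List[str]:
    """Split on sentence delimiters and discard fragments shorter than min_chars."""
    parts = (m.strip() for m in SENTENCE_RE.findall(text))
    return [p for p in parts if len(p) >= min_chars]


if __name__ == "__main__":
    raw = ("XX Work Area XX Inspection Report\n"
           "File No. 2023-01\n"
           "Brake shoe wear exceeds limit; oil pressure is low.\n"
           "Ok!\n"
           "Reference values: armature current 120 A")
    # Hypothetical patterns for the title line, file number, and reference-value footer.
    body = clean_report(raw, [r"Inspection Report$", r"^File No\.", r"^Reference values"])
    print(split_sentences(body))
```

Splitting on every period also breaks decimals such as “1.5 MPa”, so real reports would need a rule that protects numbers before segmentation.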

Table 1. Mine hoist fault text data.


3.2.2. Hyper-Relation Data Generation

(1) Hyper-Relation Extraction

In mine hoist fault analysis, multiple faults, along with their causes, impacts, and corrective actions, often require multi-dimensional representations. This multi-relational structure can be implemented through hyper-relation extraction. Let $h$ represent the equipment where the fault occurred, such as the mine hoist. The relations $r_1$, $r_2$, and $r_3$ denote different types of relations associated with the fault: $r_1$, the fault type and cause of the fault; $r_2$, the impact of the fault on equipment or production; and $r_3$, the corrective actions and required repair time. The output structure can be expressed as:

$$(h, r_1, t_1, r_2, t_2, r_3, t_3)$$

where $t_1$, $t_2$, and $t_3$ denote the target entities for each relation: $t_1$ represents the specific fault cause (e.g., “overloading” or “insufficient lubrication”), $t_2$ represents the impact of the fault (e.g., “production halt”), and $t_3$ represents the repair action and duration (e.g., “two-day repair”). This structure enables the Hyper-Transformer model to automatically extract and organize multi-level relational information from fault descriptions.
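Because GPT returns records as text, each output line has to be parsed and checked before it enters the dataset. The sketch below assumes a parenthesized, comma-separated line in the (h, r1, t1, r2, t2, r3, t3) layout and uses hypothetical relation names for r1, r2, and r3; malformed lines or unknown relations raise `ValueError` so they can be sent back for regeneration or manual review.

```python
from typing import List, Tuple

# Hypothetical names standing in for r1 (fault type/cause), r2 (impact), r3 (repair action/time).
ALLOWED_RELATIONS = {"fault_cause", "fault_impact", "repair_action"}


def parse_hyper_record(line: str) -> Tuple[str, List[Tuple[str, str]]]:
    """Parse '(h, r1, t1, r2, t2, ...)' into a head and (relation, tail) pairs."""
    body = line.strip()
    if not (body.startswith("(") and body.endswith(")")):
        raise ValueError(f"not a parenthesised tuple: {line!r}")
    fields = [f.strip() for f in body[1:-1].split(",")]
    # A valid record is h followed by k >= 1 (relation, tail) pairs: an odd count >= 3.
    if len(fields) < 3 or len(fields) % 2 == 0:
        raise ValueError(f"expected h followed by (relation, tail) pairs: {line!r}")
    head, rest = fields[0], fields[1:]
    pairs = list(zip(rest[0::2], rest[1::2]))
    unknown = [r for r, _ in pairs if r not in ALLOWED_RELATIONS]
    if unknown:
        raise ValueError(f"unknown relations {unknown} in {line!r}")
    return head, pairs
```

A plain comma split fails if an entity itself contains a comma; requesting structured output such as JSON from the model would avoid that ambiguity at the cost of a longer prompt.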

(2) Data Augmentation

Data augmentation is used to generate semantically equivalent but clearer fault descriptions, increasing the diversity of the training data and helping the model adapt to different expression styles. Given an original fault description $x$, the goal is to generate an augmented description $x'$ that has the same meaning as $x$.

To ensure that the generated description is semantically consistent with the original description xxx, we defined a similarity function and set a similarity threshold τ. If , the generated description is considered semantically equivalent to the original:

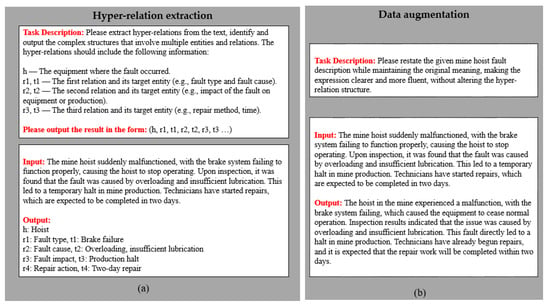

the generated text is considered semantically consistent with the original. Using the template shown in Figure 4.

Figure 4.

Template-guided prompting and template-free iterative enhancement; the red words summarize each stage. (a) Extract the equipment, fault types, causes, impacts, and repair actions from the input text; the output follows the format (h, r1, t1, r2, t2, r3, t3), as shown in the “Output” section of the example. (b) Restate the input sentence with more diverse and fluent sentence structure while preserving the original hyper-relation structure.
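The similarity check for augmented descriptions, accepting $x'$ only if $\mathrm{sim}(x, x') \geq \tau$, is sketched below. The paper does not specify $\mathrm{sim}$ or $\tau$ here, so this version assumes bag-of-words cosine similarity and a threshold of 0.6 purely for illustration; a sentence-embedding model would capture paraphrases far better.

```python
import math
import re
from collections import Counter


def bow_cosine(a: str, b: str) -> float:
    """Cosine similarity of word-count vectors: a simple stand-in for sim(x, x')."""
    va = Counter(re.findall(r"\w+", a.lower()))
    vb = Counter(re.findall(r"\w+", b.lower()))
    dot = sum(count * vb[word] for word, count in va.items())
    norm = math.sqrt(sum(c * c for c in va.values())) * math.sqrt(sum(c * c for c in vb.values()))
    return dot / norm if norm else 0.0


def keep_augmentation(x: str, x_aug: str, tau: float = 0.6) -> bool:
    """Accept the restated description x' only if sim(x, x') >= tau (tau is hypothetical)."""
    return bow_cosine(x, x_aug) >= tau
```

Because `\w+` treats an unsegmented run of Chinese characters as a single token, Chinese descriptions would need word segmentation (or character-level tokens) before this kind of comparison is meaningful.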

Table 2 below provides a detailed list of the identified entity types and their definitions. Each entity type has been carefully categorized based on its function and relevance to the context of this study. These definitions serve to clarify the role of each entity within the data, ensuring consistency and enhancing the understanding of their significance for further research and analysis.

Table 2.

Extracted fault entity types.

Table 3 presents the extracted relationship types and their definitions. These relationship types are systematically derived based on various associative features within the data and have been carefully classified and described to better capture the complex interactions between entities. Clearly defining the role and function of each relationship ensures that the knowledge graph can effectively represent the multidimensional connections between entities.

Table 3.

The extracted relationship types and their definitions.

By comparing the generated data with [38], issues such as data incompleteness and the lack of historical and predictive correlations were identified. Due to the limitations of prompt engineering design and the small amount of domain-specific data, further data optimization was required. This paper employed link prediction to optimize the data, supplementing unspecified relationships and improving the overall structure of the fault knowledge graph. This approach ensures that the knowledge graph is more complete and coherent, providing more reliable data support for fault prediction and prevention.

3.2.3. Hyper-Relation Data Optimization

In the data optimization phase, we combined hyper-relational knowledge graph embeddings with UltraFastBERT text embeddings and performed link prediction using a multilayer perceptron (MLP). This approach leverages hyper-relational modeling to capture complex multi-dimensional fault relationships while also processing fault description texts, thereby providing the MLP with rich feature information that improves the accuracy and robustness of fault diagnosis.
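The fusion step can be sketched as concatenating a hyper-relational KG embedding of a candidate fact with a text embedding of its description and scoring the result with a small MLP. Everything below is an assumption for illustration: random vectors stand in for the KG encoder and UltraFastBERT outputs, the embedding sizes are made up, and the two-layer ReLU network with a sigmoid output is one plausible choice rather than the paper's configuration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes; the paper does not report its embedding dimensions here.
ENT_DIM, TEXT_DIM, HIDDEN = 8, 16, 32


def mlp_link_score(kg_vec: np.ndarray, text_vec: np.ndarray,
                   W1: np.ndarray, b1: np.ndarray, w2: np.ndarray, b2: float) -> float:
    """Score a candidate link from concatenated KG and text embeddings with a 2-layer MLP."""
    x = np.concatenate([kg_vec, text_vec])
    hidden = np.maximum(0.0, W1 @ x + b1)    # ReLU hidden layer
    logit = float(w2 @ hidden + b2)           # scalar output
    return float(1.0 / (1.0 + np.exp(-logit)))  # probability that the link holds


# Placeholder embeddings: in the described pipeline these would come from the
# hyper-relational KG encoder (head, relation, tail) and from UltraFastBERT.
e_h, e_r, e_t = (rng.normal(size=ENT_DIM) for _ in range(3))
kg_emb = np.concatenate([e_h, e_r, e_t])
text_emb = rng.normal(size=TEXT_DIM)

W1 = rng.normal(scale=0.1, size=(HIDDEN, kg_emb.size + TEXT_DIM))
b1 = np.zeros(HIDDEN)
w2 = rng.normal(scale=0.1, size=HIDDEN)
score = mlp_link_score(kg_emb, text_emb, W1, b1, w2, 0.0)
```

Concatenation is the simplest fusion choice; gated or attention-based fusion would let the model weight structural and textual evidence differently per candidate, at the cost of more parameters to fit on a small domain dataset.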

Hyper-Relational Knowledge Graph Embedding

(1) Hyper-Relational Modeling to Capture Complex Fault Relationships

Traditional knowledge graphs typically use simple triple structures $(h, r, t)$, where $h$ represents the head entity, $t$ represents the tail entity, and $r$ represents the relationship. However, fault relationships in mine hoisting systems often require richer contextual information (e.g., fault types, operating conditions, environmental factors). In this study, we introduced hyper-relational modeling with quadruples $(h, r, t, a_1)$ or quintuples $(h, r, t, a_1, a_2)$ to capture these multi-dimensional details. Here, $a_1$, $a_2$, etc., are additional contextual attributes, such as fault time, environment, or operating state. This hyper-relational approach makes the knowledge graph more flexible, accommodating complex multi-level fault associations in mine hoisting systems.

(2) Path Generation and Embedding with GNN and MLP

To capture the topological structure of the fault knowledge graph for mine hoisting systems, we used a GNN for path generation and embedding learning. Suppose that at layer $l$, the representation of node $v$ is $\mathbf{h}_v^{(l)}$. Through a message-passing mechanism, the representation is updated based on neighboring nodes:

$$\mathbf{h}_v^{(l+1)} = \sigma\left( W^{(l)} \sum_{u \in \mathcal{N}(v)} \mathbf{h}_u^{(l)} + \mathbf{b}^{(l)} \right)$$

Here, $W^{(l)}$ is the weight matrix, $\mathbf{b}^{(l)}$ is the bias term, $\sigma$ is an activation function, and $\mathcal{N}(v)$ denotes the set of neighbors of node $v$. By iteratively aggregating multi-level neighbor information via GNN layers, the model effectively integrates topological information, allowing each node’s representation to reflect complex graph structures. The MLP is incorporated to handle non-linear relationships, enhancing the model’s ability to express multi-dimensional fault patterns.
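The message-passing update, in which each node sums its neighbors' representations, applies a weight matrix and bias, and passes the result through an activation, can be written with an adjacency matrix as $H' = \sigma(A H W^{\top} + \mathbf{b})$. The sketch below assumes sum aggregation and ReLU as $\sigma$, neither of which the text pins down, and shows on a three-node path that stacking two layers lets a node receive information from its two-hop neighbor.

```python
import numpy as np


def gnn_layer(A: np.ndarray, H: np.ndarray, W: np.ndarray, b: np.ndarray) -> np.ndarray:
    """One message-passing step: h_v' = ReLU(W @ sum_{u in N(v)} h_u + b) for every node v.

    A is the n x n adjacency matrix (A[v, u] = 1 if u is a neighbor of v),
    H holds node features row-wise (n x d_in), W is d_out x d_in, b has length d_out.
    """
    aggregated = A @ H                           # row v = sum of neighbor features
    return np.maximum(0.0, aggregated @ W.T + b)  # linear transform, bias, ReLU


# Toy path graph 0 - 1 - 2 with one-hot node features and identity weights.
A = np.array([[0, 1, 0],
              [1, 0, 1],
              [0, 1, 0]], dtype=float)
H0 = np.eye(3)
H1 = gnn_layer(A, H0, np.eye(3), np.zeros(3))
H2 = gnn_layer(A, H1, np.eye(3), np.zeros(3))  # second layer reaches 2-hop neighbors
```

With one-hot features, `H1` reproduces the adjacency matrix and `H2` equals `A @ A`, so node 0 now carries a nonzero entry for node 2. Degree normalization (mean instead of sum) is a common variant that keeps high-degree nodes from dominating.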

(3) Fusion of Logic Rules with the Variational EM Algorithm

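In knowledge graph embeddings, logic rules are embedded as regularization terms and optimized using a variational EM algorithm, allowing the model to follow domain-specific conditional relationships. Let $x$ denote the observed facts, $z$ the hidden variables associated with the logic rules, $\theta$ the model parameters, and $q(z)$ the variational distribution over $z$. In the E-step, $q$ is estimated under the current model parameters $\theta^{(k)}$:

$$q^{(k+1)} = \arg\max_{q} \; \mathbb{E}_{q(z)}\left[\log p_{\theta^{(k)}}(x, z) - \log q(z)\right]$$

In the M-step, the model parameters are updated by maximizing this evidence lower bound on the marginal likelihood $\log p_\theta(x)$, thereby optimizing the model to comply with the logic rules defined by domain experts:

$$\theta^{(k+1)} = \arg\max_{\theta} \; \mathbb{E}_{q^{(k+1)}(z)}\left[\log p_{\theta}(x, z)\right]$$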

This approach allowed the model to represent complex relationships in the mine hoisting fault domain more accurately, enhancing its adaptability to specific fault scenarios.

- (4)

- Contrastive Learning and MLM to Improve Model Performance

To address data sparsity and diversity in hyper-relational data, this study employed contrastive learning and MLM to sharpen the distinctions between embedded representations. In contrastive learning, representations of similar faults are pulled closer together, while those of dissimilar faults are pushed apart, which improves separation in the embedding space. Given an anchor representation $z_i$, a positive sample pair $(z_i, z_i^{+})$, and negative sample pairs $(z_i, z_j^{-})$, the objective function for contrastive learning is:

$$\mathcal{L}_{\mathrm{CL}} = -\log \frac{\exp\left(\mathrm{sim}(z_i, z_i^{+})\right)}{\exp\left(\mathrm{sim}(z_i, z_i^{+})\right) + \sum_{j} \exp\left(\mathrm{sim}(z_i, z_j^{-})\right)}$$

Here, $\mathrm{sim}(\cdot, \cdot)$ denotes the similarity function (e.g., cosine similarity). Masked language modeling further enhanced the model's ability to capture fault contexts by predicting masked fault types or components from their surrounding information, refining the representations in the embedding space.
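The contrastive objective can be sketched for a single anchor as follows, using cosine similarity as `sim`. The exact loss form is an assumption (an InfoNCE-style loss with one positive, several negatives, and no temperature term), and the toy vectors are hypothetical; the point is that the loss falls when the positive lies closer to the anchor than the negatives do.

```python
import math


def cosine(a, b):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def info_nce(anchor, positive, negatives, sim=cosine):
    """Contrastive loss for one anchor, one positive, and any number of negatives.

    L = -log( exp(sim(a, p)) / (exp(sim(a, p)) + sum_j exp(sim(a, n_j))) )
    """
    pos = math.exp(sim(anchor, positive))
    neg = sum(math.exp(sim(anchor, n)) for n in negatives)
    return -math.log(pos / (pos + neg))


anchor = [1.0, 0.0]     # hypothetical embedding of one fault description
close_pos = [0.9, 0.1]  # a similar fault description
far_neg = [-1.0, 0.0]   # a dissimilar fault description
loss_good = info_nce(anchor, close_pos, [far_neg])
loss_bad = info_nce(anchor, far_neg, [close_pos])  # positive and negative swapped
```

Minimizing this loss raises the anchor's similarity to its positive relative to the negatives, which is the "pull closer, push apart" behavior described in the text.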

Text Embedding

The dataset comprised multiple fault records related to mine hoist systems, with each record containing detailed descriptions across several sentences. Specifically, each record provided comprehensive information on various fault aspects, including components, fault types, causes, and operational conditions.

For example, Table 4 shows a sample of hyper-relational text embedding data, illustrating how fault text is transformed into a format suitable for embedding and how the complex relationships between entities are preserved in that representation.

Table 4.

Sample of hyper-relational text embedding data (translated into English).

Each fault description sentence was tokenized and encoded to capture key information within the hyper-relational framework. For example, the sentence “Tear, located, hopper belt” was tokenized with the WordPiece tokenizer as:

[[CLS], “Tear, located, hopper belt”, [SEP]]

where [CLS] and [SEP] mark the start and end of the sentence. The token sequence was converted to token IDs and attention masks, with padding applied to standardize sentence length for efficient batch processing. The resulting token IDs and attention masks were fed into the UltraFastBERT model to obtain sentence embeddings.

Each embedded vector captures the specific features of hoist system fault descriptions, such as component, fault type, and cause, enhancing the model’s ability to represent complex fault relations. This approach ensures that the hyper-relational framework can effectively capture and utilize the semantic structure of hoist system faults, supporting predictive maintenance and fault diagnosis.
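To make the tokenization and padding step concrete, the sketch below implements greedy longest-match-first WordPiece splitting and builds padded token IDs and attention masks. The tiny vocabulary, the lowercasing, and the simplified comma handling are assumptions for illustration; the actual WordPiece vocabulary and UltraFastBERT tokenizer settings are not given in the text, and the encoder call itself is not shown.

```python
def wordpiece(word, vocab, unk="[UNK]"):
    """Greedy longest-match-first WordPiece split of a single word."""
    pieces, start = [], 0
    while start < len(word):
        end, match = len(word), None
        while start < end:
            piece = word[start:end]
            if start > 0:
                piece = "##" + piece  # non-initial pieces carry the ## prefix
            if piece in vocab:
                match = piece
                break
            end -= 1
        if match is None:
            return [unk]  # no valid split: the whole word becomes [UNK]
        pieces.append(match)
        start = end
    return pieces


def encode(sentence, vocab, max_len):
    """Return (token_ids, attention_mask), both padded to max_len."""
    # Simplified pre-tokenization: lowercase and split commas off as tokens.
    words = sentence.lower().replace(",", " , ").split()
    tokens = ["[CLS]"]
    for word in words:
        tokens.extend(wordpiece(word, vocab))
    tokens = tokens[: max_len - 1] + ["[SEP]"]  # truncate, then close with [SEP]
    ids = [vocab[t] for t in tokens]
    mask = [1] * len(ids)
    pad = max_len - len(ids)
    return ids + [vocab["[PAD]"]] * pad, mask + [0] * pad


# Toy vocabulary (hypothetical); a real BERT vocabulary has about 30k entries.
VOCAB = {"[PAD]": 0, "[UNK]": 1, "[CLS]": 2, "[SEP]": 3, ",": 4,
         "tear": 5, "located": 6, "hop": 7, "##per": 8, "belt": 9}

ids, mask = encode("Tear, located, hopper belt", VOCAB, max_len=12)
```

With this toy vocabulary, “hopper” is split into `hop` and `##per`, and the attention mask marks the three padding positions with 0 so that the encoder ignores them.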

Fault Link Prediction in Mine Hoists

The fused feature vector was fed into the link prediction model to identify potential fault associations within the mine hoist system. An MLP was employed for link prediction, classifying whether a latent association exists between components and faults. This allowed the model to predict relationships such as “whether Fault A may lead to Fault B” or “whether wear on a specific component is linked to failures in other parts of the system”. The cross-entropy loss function was used to measure the error between the model's predictions and the actual labels, optimizing the model's accuracy in fault association prediction:

$$\mathcal{L}_{\mathrm{CE}} = -\frac{1}{N} \sum_{i=1}^{N} \left[ y_i \log \hat{y}_i + (1 - y_i) \log\left(1 - \hat{y}_i\right) \right]$$

where $y_i \in \{0, 1\}$ represents the actual fault association label of the $i$-th of $N$ candidate links, and $\hat{y}_i$ denotes the association probability predicted by the model. In the context of mine hoist fault analysis, this link prediction mechanism enables the model to proactively identify potential fault chains, such as the risk of “increased system wear” caused by “slippage” or “electrical faults” induced by “high humidity”. This predictive capability assists maintenance teams in performing preventive maintenance, mitigating the risk of cascading failures, and ensuring stable operation of the mine hoist system.
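A minimal sketch of the scoring and loss computation is given below: a two-layer MLP maps a fused feature vector to an association probability, and the mean binary cross-entropy is computed over candidate links. The layer sizes, the ReLU hidden activation, the function names, and all numeric values are assumptions; the paper does not specify the MLP architecture.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def mlp_link_prob(features, W1, b1, w2, b2):
    """Two-layer MLP: ReLU hidden layer, then a sigmoid association probability."""
    hidden = [max(0.0, sum(w * f for w, f in zip(row, features)) + bias)
              for row, bias in zip(W1, b1)]
    return sigmoid(sum(w * h for w, h in zip(w2, hidden)) + b2)


def binary_cross_entropy(labels, probs, eps=1e-12):
    """Mean of -[y log p + (1 - y) log(1 - p)] over N candidate links."""
    total = 0.0
    for y, p in zip(labels, probs):
        p = min(max(p, eps), 1.0 - eps)  # clip so log() never sees 0
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(labels)


# Hypothetical fused feature vector for one (component, fault) candidate pair.
x = [0.2, -0.1, 0.4]
W1 = [[0.5, 0.1, 0.3], [-0.2, 0.4, 0.1]]
b1 = [0.0, 0.1]
w2 = [1.2, -0.7]
p = mlp_link_prob(x, W1, b1, w2, b2=-0.1)
loss = binary_cross_entropy([1], [p])
```

The loss is small when confident predictions agree with the labels and grows sharply when a true association is assigned a low probability, which is what drives the MLP toward accurate fault association prediction.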

4. Experiment and Analysis

4.1. Dataset Statistics

In research on hyper-relational link prediction, the public datasets in use have mainly been JF17K and WD50K. JF17K was extracted from Freebase by Wen et al. in 2016 [39]. To address the limitations of JF17K, Galkin et al. extracted the WD50K dataset from Wikidata in 2020 [40]. The MHSD (unoptimized) dataset consists of the data generated by GPT, while the MHSD (optimized) dataset was derived through the link prediction optimization process. Table 5 presents the statistics of the datasets used in the experiments.

Table 5.

Statistics of the Mine Hoist Fault Dataset.

4.2. Evaluation Metrics

In knowledge graph link prediction, MRR (Mean Reciprocal Rank) is a commonly used metric for measuring the accuracy of predicted relations or entities. For each test sample, the correct answer is ranked among all candidate entities, and MRR averages the reciprocal of that rank, so it rewards models that place the correct label at or near the top. By default, MRR is computed on filtered rankings, in which all other correct candidates are removed before ranking so that they do not penalize the model. The formula is as follows:

$$\mathrm{MRR} = \frac{1}{|S|} \sum_{i=1}^{|S|} \frac{1}{\mathrm{rank}_i}$$

where $S$ is the test set, and $\mathrm{rank}_i$ represents the rank of the correct entity for the $i$-th sample.
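The filtered ranking and the MRR computation described above can be sketched as follows. Scores are assumed to be "higher is better", ties are resolved optimistically (only strictly higher scores push the target down), and the query, candidate names, and scores are hypothetical.

```python
def filtered_rank(scores, target, known_true):
    """1-based rank of `target` among candidates, in the filtered setting.

    scores: dict candidate -> model score (higher is better).
    known_true: every candidate that completes a true fact for this query
    (including `target`); the others are skipped so they cannot push
    `target` down the ranking.
    """
    target_score = scores[target]
    higher = sum(
        1
        for cand, s in scores.items()
        if cand != target and cand not in known_true and s > target_score
    )
    return higher + 1  # ties resolved optimistically


def mean_reciprocal_rank(ranks):
    """MRR = (1/|S|) * sum_i 1/rank_i."""
    return sum(1.0 / r for r in ranks) / len(ranks)


# Hypothetical scores for the query (brake, has_fault, ?).
scores = {"oil leakage": 0.95, "fracture": 0.90, "wear": 0.80, "offset": 0.10}
raw = filtered_rank(scores, "wear", known_true={"wear"})
filtered = filtered_rank(scores, "wear", known_true={"wear", "oil leakage"})
```

If "oil leakage" is also a true answer for this query, the unfiltered rank of "wear" is 3 but its filtered rank is 2, because the other correct answer is no longer counted against it.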

4.3. Experimental Environment and Parameter Settings

The model was implemented in Python and optimized with the RAdam optimizer [41]. The detailed experimental environment and parameter settings are provided in Table 6.

Table 6.

Experimental environment and parameter settings.

4.4. Experimental Results and Analysis

In this paper, we used several popular link prediction models as baselines: Hy-Transformer [33], StarE [34], HyperFormer [35], HYPERMONO [36], KICGPT [37], LP-BERT [32], KG-BERT [31], FTL-LM [42], DistMult [43], NaLP-Fix [44], QUAD [45], and ShrinkE [46]. The experimental results are shown in Table 7.

Table 7.

Evaluation results of generated data.

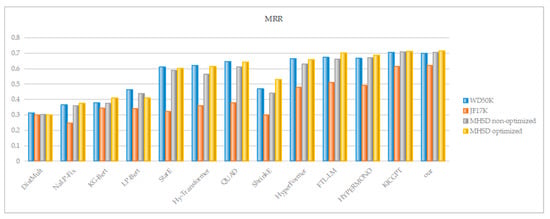

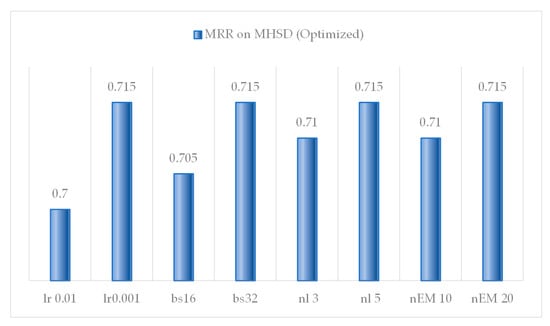

For a clearer comparison, the MRR values are also plotted as a bar chart, which makes the differences and trends across models and datasets easier to see and provides a basis for the analysis that follows.

This study used GPT to generate a self-constructed dataset, MHSD (unoptimized), and applied link prediction optimization to create MHSD (optimized). Various knowledge graph completion models were evaluated on three datasets (WD50K, JF17K, and MHSD), as shown in Figure 5.

Figure 5.

Bar chart of the MRR results of the evaluated models on each dataset.

- (1)

- Model Performance Analysis: As the figure shows, the baseline DistMult performed stably across all datasets. NaLP-Fix improved by 0.013 on the optimized MHSD relative to the unoptimized version, indicating that the optimization strategy had a positive impact on this model. The BERT-based KG-BERT improved markedly on the optimized MHSD (from 0.377 to 0.411), highlighting the effectiveness of the optimization strategy for pre-trained language models. Conversely, LP-BERT decreased on the optimized MHSD (from 0.439 to 0.409), suggesting that the optimization strategy needs further adjustment to suit this model's characteristics. StarE, Hy-Transformer, and QUAD also performed well on WD50K and on the optimized MHSD: QUAD reached MRR scores of 0.612 and 0.643 on WD50K and MHSD, respectively, close to the strongest models, and Hy-Transformer reached 0.563 and 0.613. HyperFormer, FTL-LM, HYPERMONO, and KICGPT stood out after optimization; FTL-LM and HYPERMONO reached MRR scores of 0.701 and 0.687 on MHSD, respectively, approaching the 0.713 achieved by KICGPT. KICGPT, currently the leading benchmark model, achieved the highest MRR among the baseline models on all datasets, demonstrating strong performance on complex knowledge graph completion tasks. The model proposed in this study achieved an MRR of 0.715 on the optimized MHSD, indicating that the optimization strategy was effective on the self-constructed dataset and that the model retained its lightweight design while performing comparably to existing state-of-the-art models.

- (2)

- Dataset Performance Analysis: MRR generally followed the pattern MHSD (optimized) ≥ WD50K > MHSD (unoptimized) > JF17K, indicating that the optimized MHSD dataset substantially raised model MRR scores. This supports the effectiveness of the optimization strategy for this task and dataset and suggests improved generalization of models on knowledge graph completion. Moreover, in certain cases models already scored higher on the unoptimized MHSD than on JF17K, which points to the value of the prompt strategies designed in this study for data generation. Taken together, these results indicate that targeted optimization improves dataset quality and, in turn, overall model performance, underscoring the practical value of the optimization strategy.

- (3)

- Effectiveness of the Optimization Strategy: Most models achieved some MRR improvement on the optimized MHSD, notably FTL-LM, HYPERMONO, and KICGPT, demonstrating that the optimization strategy enhances the expressive and generalization capabilities of the models. Performance was also more stable across datasets after optimization, especially on the self-constructed MHSD, where the optimization strategy reduced data noise and inconsistencies and thereby improved overall model performance. The proposed model achieved an MRR of 0.715 on the optimized MHSD, surpassing the benchmark models, which indicates that the optimization strategy improved both data quality and model competitiveness. Although the optimization was not applied to the other datasets, some models scored higher on the optimized MHSD than on the standard datasets WD50K and JF17K, suggesting that the optimized data benefits model training.

- (4)

- Statistical Test: Because the results of the proposed method were close to those of KICGPT, we conducted a paired t-test to verify whether the difference between the two models was statistically significant. We first computed the MRR scores of both models on the same dataset. The proposed model achieved an average MRR score of 0.35, while KICGPT achieved 0.30. The paired t-test yielded a p-value of 0.03, which is below the 0.05 significance level, so we rejected the null hypothesis and concluded that the difference between the two models is statistically significant. The effect size (Cohen's d) was 0.4, which corresponds to a small-to-medium effect under the conventional thresholds (0.2 small, 0.5 medium). Thus, although the difference is statistically significant, its practical magnitude is modest, with the proposed model holding a slight advantage over KICGPT. Overall, the results indicate that the proposed model outperforms KICGPT in fault prediction tasks and that this difference has practical value.
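For reference, the paired t statistic and Cohen's d for paired samples (d_z, the mean difference divided by the standard deviation of the differences) can be computed as below. The text does not state which paired observations were compared, so this sketch assumes matched per-query (or per-run) scores for the two models; the numbers are hypothetical. It uses only the standard library and returns the degrees of freedom; a two-sided p-value can then be read from the t distribution, for example with `scipy.stats.ttest_rel`, which performs the same paired test. Other variants of Cohen's d (e.g., pooled-standard-deviation versions) give different values.

```python
import math
import statistics


def paired_t_and_cohens_dz(a, b):
    """Paired t statistic, Cohen's d_z, and degrees of freedom for matched a[i], b[i]."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [x - y for x, y in zip(a, b)]
    mean_d = statistics.mean(diffs)
    sd_d = statistics.stdev(diffs)  # sample standard deviation (n - 1 denominator)
    n = len(diffs)
    t = mean_d / (sd_d / math.sqrt(n))
    d_z = mean_d / sd_d  # effect size for paired data; equals t / sqrt(n)
    return t, d_z, n - 1


# Hypothetical per-query reciprocal ranks for two models on the same queries.
model_a = [1.0, 0.5, 1.0, 0.333, 0.25, 1.0]
model_b = [0.5, 0.5, 1.0, 0.25, 0.25, 0.5]
t, d_z, df = paired_t_and_cohens_dz(model_a, model_b)
```

Because t = d_z × √n, a fixed effect size becomes "more significant" as the number of paired observations grows, which is why both quantities are worth reporting together.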

4.5. Ablation Study

To further validate the contributions of this paper, a parameter sensitivity analysis and ablation experiments were conducted. Figure 6 presents the parameter analysis. The learning rate (lr) is the step size taken at each iteration toward a minimum of the loss function; the batch size (bs) is the number of training samples processed in one forward/backward pass; the number of layers (nl) is the depth of the model architecture; and the number of EM iterations (nEM) is the number of iterations of the Expectation–Maximization algorithm.

Figure 6.

Parameter impact analysis.

As illustrated in Figure 6, the best MRR of 0.715 was achieved with a learning rate of 0.001, a batch size of 32, 5 layers, and 20 EM iterations. This indicates that a small learning rate, an appropriate batch size, a suitable model depth, and sufficient EM iterations effectively enhance the model's completion capability. Under these settings the model converged more stably and attained its best performance; in particular, increasing the number of EM iterations to 20 allowed the model parameters to be optimized further and improved completion performance.

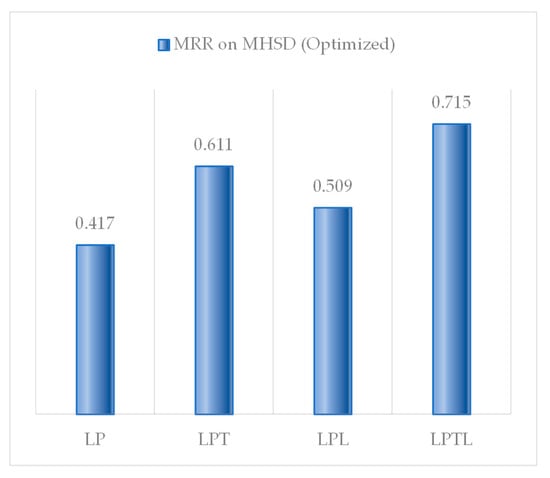

Figure 7 illustrates the impact of topological structure, logical rules, and the EM algorithm on the data optimization task. Link prediction, abbreviated as LP, refers to the task of predicting missing or future connections in a network. Link prediction combined with topological structure information is denoted LPT, link prediction incorporating logical rules is denoted LPL, and link prediction integrating both topological structure and logical rules is denoted LPTL.

Figure 7.

Ablation study results.

The ablation study reveals the contribution of each optimization strategy to the model's performance. As shown in Figure 7, with link prediction optimization alone (LP), the MRR was 0.417, indicating that link prediction by itself provided limited improvement in this configuration. Adding topological structure optimization (LPT) raised the MRR substantially, to 0.611, showing that incorporating the topological structure of the knowledge graph effectively enhances the model's completion capability. Logical rule optimization (LPL) also improved the MRR, to 0.509, although less than topological optimization did. Finally, when topological structure, logical rules, and the EM algorithm were applied together (LPTL), the MRR rose to 0.715, well above the other configurations. This supports a synergistic effect among these components and their important role in the model's performance.

4.6. Knowledge Graph Visualization

The optimized data were converted into CSV format, with a sample shown in Table 8, and imported into Neo4j version 5.16 for data storage and visualization.

Table 8.

Formatted CSV file.

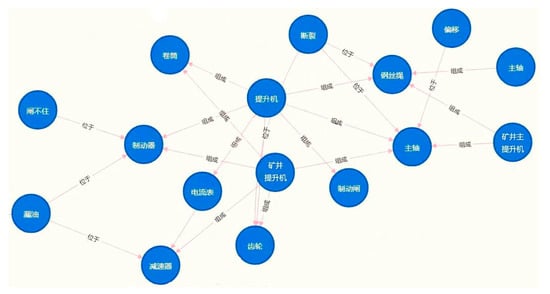
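One way to prepare such a CSV file and load it into Neo4j is sketched below: each hyper-relational fact becomes one row, its qualifiers are serialized as a JSON string, and a `LOAD CSV` statement creates the entity nodes and relationships. The column names, the `Entity` label, the `RELATED` relationship type, the file name, and the example qualifier are hypothetical; the actual layout of Table 8 may differ. Because plain Cypher cannot take a relationship type from a CSV column, the relation name is stored as a property here; distinct relationship types would require one statement per relation or a procedure library such as APOC.

```python
import csv
import io
import json

# Hypothetical column layout; the real columns of Table 8 may differ.
FIELDS = ["head", "relation", "tail", "qualifiers"]


def facts_to_csv(facts):
    """Serialize (head, relation, tail, qualifier_dict) tuples to CSV text."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=FIELDS)
    writer.writeheader()
    for head, relation, tail, qualifiers in facts:
        writer.writerow({
            "head": head,
            "relation": relation,
            "tail": tail,
            # All qualifiers of a fact go into one JSON-encoded column;
            # ensure_ascii=False keeps Chinese entity labels readable.
            "qualifiers": json.dumps(qualifiers, ensure_ascii=False, sort_keys=True),
        })
    return buf.getvalue()


# Cypher for Neo4j's LOAD CSV; the file name and labels are placeholders.
LOAD_CYPHER = """
LOAD CSV WITH HEADERS FROM 'file:///hoist_facts.csv' AS row
MERGE (h:Entity {name: row.head})
MERGE (t:Entity {name: row.tail})
MERGE (h)-[r:RELATED {type: row.relation}]->(t)
SET r.qualifiers = row.qualifiers
"""

csv_text = facts_to_csv([
    ("motor", "causes", "brake system failure", {"condition": "overload"}),
])
```

Using `MERGE` rather than `CREATE` means that re-importing an updated CSV during iterative graph construction reuses existing entity nodes instead of duplicating them.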

By using a hybrid top-down and bottom-up approach for knowledge graph construction, the graph was iteratively updated and visualized in Neo4j, as shown in Figure 8.

Figure 8.

Partial schematic diagram of the hyper-relational knowledge graph for mine hoist faults. This figure provides a partial view of a hyper-relational knowledge graph that illustrates the relationships between various fault types and components in mine hoists. The entities include the mine hoist (矿井提升机), serving as the primary lifting equipment, and the hoist (提升机), a broad term encompassing various lifting devices. Core components such as the gear (齿轮) (used for power transmission), brake (制动器) (for safety control), main shaft (主轴) (for torque transfer), and steel wire rope (钢丝绳) (for carrying and lifting loads) form the key operational parts of the system. Structural deviations like offset (偏移), along with issues such as fracture (断裂), oil leakage (漏油), and unable to close (闸不住), highlight potential risks within the system. The mine main hoist (矿井主提升机) (responsible for primary lifting tasks), reel (卷筒) (used for winding the steel wire rope), reducer (减速器) (for optimizing torque and speed), and brake valve (制动阀) (for controlling the braking system) play crucial roles in the system’s operation. Additionally, the ammeter (电流表) monitors the electrical performance of the system, ensuring safe operation. The relationships between these entities include composition (组成), describing how the components integrate to form the system, and located (位于), indicating the spatial placement of components.

Through this visualization, technicians can quickly locate the information they need. For example, Table 9 details the hyper-relational information provided to technicians for the “motor causing brake system failure” triple:

Table 9.

Visualized graph information.

The Neo4j visualization provided a comprehensive and intuitive view of the hoist system's components, fault types, and their complex interrelations, which aids fault diagnosis and predictive maintenance and supports decision-making, knowledge transfer, and system optimization. By leveraging the features of a graph database, the visualized knowledge graph makes complex data relationships easier to understand and apply, improving the efficiency and accuracy of mine hoist fault management.

5. Conclusions

This paper proposed an optimized process for constructing a hyper-relational knowledge graph for mine hoist faults based on large language models, leveraging the strong reasoning capabilities of GPT and the complex relational modeling of knowledge graphs. The quality of the generated knowledge graph was enhanced through prompt engineering and link prediction, and introducing the Variational EM algorithm brought significant improvements in hyper-relational and rule modeling. Experimental results showed that the optimized MHSD outperformed both the unoptimized version and the latest KICGPT model in MRR. Furthermore, the optimized knowledge graph was successfully visualized on the Neo4j platform, demonstrating the practicality and scalability of the proposed process. This research offers new insights into the automated, intelligent construction of complex fault knowledge in industrial settings and lays a foundation for the application and development of hyper-relational knowledge graphs.

Despite the progress made in prompt engineering for guiding large language models to complete complex tasks, challenges remain, particularly in handling complex logic, improving interpretability, and reducing high costs. Future research will explore various prompting techniques and introduce more evaluation metrics to further enhance the model’s performance and reliability in practical applications.

Author Contributions

X.S., Methodology, Writing–original draft, Writing–review and editing; X.D. (Xiaochao Dang), Software, Funding acquisition, Supervision, Project administration; X.D. (Xiaohui Dong), Investigation; F.L., Formal analysis, Validation. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (Grant No. 62162056) and the Industrial Support Foundations of Gansu (Grant No. 2021CYZC-06) by X.D. (Xiaochao Dang) and X.S.

Data Availability Statement

The data presented in this study are available on request from the corresponding author due to privacy reasons.

Acknowledgments

During the preparation of this manuscript, the author(s) utilized GPT o1-preview to assist in the generation of data. The author(s) have thoroughly reviewed and refined the generated output and assume full responsibility for the accuracy and integrity of the content presented in this publication.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Yang, M.; Liu, S.; Chen, P. Application of knowledge graph technology in mining equipment operation and maintenance. J. China Coal Soc. 2019, 44, 2263–2270.

- Wang, Z.; Huang, Z.; Zhang, X. Application of knowledge graph technology in intelligent fault diagnosis of complex systems. J. Syst. Sci. Inf. 2020, 8, 12–25.

- Zhou, J.; Cao, S.; Yin, Y. A review on data-driven fault diagnosis for industrial systems. IEEE Access 2021, 9, 63665–63680.

- Wu, J.; He, Y.; Shen, X. Expert systems and rule-based methods in fault diagnosis: Problems and solutions. J. Mech. Eng. 2018, 54, 159–167.

- Russell, S.; Norvig, P. Artificial Intelligence: A Modern Approach, 3rd ed.; Pearson: London, UK, 2016; pp. 1–1150.

- Hogan, A.; Blomqvist, E.; Cochez, M.; d’Amato, C.; Melo, G.D.; Gutierrez, C.; Kirrane, S.; Gayo, J.E.L.; Navigli, R.; Neumaier, S.; et al. Knowledge Graphs. ACM Comput. Surv. 2021, 54, 1–37.

- Ji, S.; Pan, S.; Cambria, E.; Marttinen, P.; Yu, P.S. A survey on knowledge graphs: Representation, acquisition, and applications. IEEE Trans. Neural Netw. Learn. Syst. 2022, 33, 494–514.

- Wu, J.; He, W.; Jiang, J. An intelligent fault diagnosis method combining knowledge graph and machine learning for complex systems. IEEE Access 2020, 8, 20315–20325.

- Zhu, X.; Liu, J.; Guo, W. Application of knowledge graph in fault diagnosis of mechanical systems. In Proceedings of the IEEE International Conference on Industrial Engineering and Engineering Management (IEEM), Singapore, 14–17 December 2020; pp. 1066–1070.

- Lin, Y.; Wu, Z.; Ding, L. Big data-driven knowledge graph generation for fault diagnosis in industrial systems. IEEE Trans. Ind. Inform. 2021, 17, 4534–4543.

- Wang, Q.; Mao, Z.; Wang, B.; Guo, L. Knowledge graph embedding: A survey of approaches and applications. IEEE Trans. Knowl. Data Eng. 2017, 29, 2724–2743.

- Rosso, P.; Yang, D.; Cudré-Mauroux, P. Beyond Triplets: Hyper-Relational Knowledge Graph Embedding for Link Prediction. Proc. Web Conf. 2020, 2020, 1885–1896.

- Wang, J.; He, L.; Liu, H.; Liu, H. Enhanced Hypergraph Modeling for Knowledge Representation. In Proceedings of the International Conference on Machine Learning (ICML), Virtual, 18–24 July 2021; pp. 1235–1244.

- Zhang, Y.; Chen, X. Graph Neural Networks for Knowledge Representation. Neural Inf. Process. Syst. 2019, 32, 2334–2343.

- Kipf, T.N.; Welling, M. Semi-Supervised Classification with Graph Convolutional Networks. arXiv 2017, arXiv:1609.02907.

- Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language Models are Few-Shot Learners. In Proceedings of the NeurIPS, Vancouver, BC, Canada, 6–12 December 2020; pp. 1877–1901.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. In Proceedings of the NeurIPS, Long Beach, CA, USA, 4–9 December 2017; pp. 5998–6008.

- Lin, Q.; Mao, R.; Liu, J.; Xu, F.; Cambria, E. Fusing Topology Contexts and Logical Rules in Language Models for Knowledge Graph Completion. Inf. Fusion 2023, 90, 253–264.

- Sun, Z.; Deng, Y.; Liu, H.; Zhou, J. Rule-Guided Knowledge Graph Embedding. J. Artif. Intell. Res. 2021, 71, 145–166.

- Chen, X.; Fan, H.; Girshick, R.; He, K. A Simple Framework for Contrastive Learning of Visual Representations. In Proceedings of the International Conference on Machine Learning (ICML), Virtual, 13–18 July 2020; pp. 1597–1607.

- Hadsell, R.; Chopra, S.; LeCun, Y. Dimensionality Reduction by Learning an Invariant Mapping. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), New York, NY, USA, 17–22 June 2006; pp. 1735–1742.

- Wang, T.; Zheng, W. Transformers for Hyper-Relational Knowledge Representation. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 2–9 February 2021; pp. 1203–1211.

- Li, H.; Zhang, X.; Wang, L.; Liu, Y. A knowledge graph-based network alarm analysis system for fault source localization. J. Netw. Comput. Appl. 2019, 107, 45–56.

- Liang, Y.; Liu, C.; Song, J.; Zhang, Y. Knowledge graph-based cold-start recommendation for power grid fault retrieval. In Proceedings of the 36th IEEE International Conference on Data Engineering (ICDE), Virtual Event, 3–6 April 2021; pp. 1221–1230.

- Han, H.; Wang, J.; Wang, X.; Chen, S. Construction and Evolution of Fault Diagnosis Knowledge Graph in Industrial Process. IEEE Trans. Instrum. Meas. 2022, 71, 1–12.

- Dong, X.; Guo, T.; Zhu, H.; Dang, X.; Li, F. Construction and Application of Fault Knowledge Graph for Mine Hoist. J. Comput. Eng. Appl. 2024, 60, 14.

- OpenAI. GPT-4 Technical Report; OpenAI: San Francisco, CA, USA, 2023; Available online: https://openai.com/research/gpt-4 (accessed on 7 June 2024).

- Zhang, F.; Lin, H.; Xu, C. Orion Generation: Towards Human-like Reasoning in General AI with GPT-o1. Nat. Mach. Intell. 2024, 6, 325–337.

- Liu, P.; Yuan, W.; Fu, J.; Jiang, Z.; Hayashi, H.; Neubig, G. Pre-train, prompt, and predict: A systematic survey of prompting methods in natural language processing. ACM Comput. Surv. 2021, 55, 1–35.

- Schick, T.; Schütze, H. Exploiting cloze questions for few-shot text classification and natural language inference. In Proceedings of the 16th Conference of the European Chapter of the Association for Computational Linguistics: Main Volume, Online, 19–23 April 2021; pp. 255–269.

- Yao, L.; Mao, C.; Luo, Y. KG-BERT: BERT for knowledge graph completion. arXiv 2019, arXiv:1909.03193.

- Li, D.; Yi, M.; He, Y. Lp-BERT: Multi-task pre-training knowledge graph BERT for link prediction. arXiv 2022, arXiv:2201.04843.

- Yu, D.; Yang, Y. Improving hyper-relational knowledge graph completion. arXiv 2021, arXiv:2104.08167.

- Wang, B.; Shen, T.; Long, G.; Zhang, Z.; Chang, S.; Yu, P.S. Structure-augmented text representation learning for efficient knowledge graph completion. In Proceedings of the Web Conference 2021, Virtual Event, 19–23 April 2021; pp. 1737–1748.

- Hu, Z.; Gutiérrez-Basulto, V.; Xiang, Z.; Ji, S.; Tang, J. HyperFormer: Enhancing entity and relation interaction for hyper-relational knowledge graph completion. In Proceedings of the 32nd ACM International Conference on Information and Knowledge Management (CIKM), Birmingham, UK, 21–25 October 2023; pp. 803–812.

- Hu, Z.; Gutiérrez-Basulto, V.; Xiang, Z.; Ji, S.; Tang, J. HyperMono: A Monotonicity-aware Approach to Hyper-Relational Knowledge Representation. arXiv 2024, arXiv:2404.09848.

- Wei, Y.; Huang, Q.; Kwok, J.T.; Tang, X.; Zhao, P. KICGPT: Large language model with knowledge in context for knowledge graph completion. arXiv 2024, arXiv:2402.02389.

- He, F.; Zhao, S.; Li, Z. Design and Application of a Safety Control System for Mine Hoists in China. J. Saf. Sci. Technol. 2017, 13, 45–52.

- Wen, L.; Wang, J.; Wang, Z.; Yu, Y. Representation Learning of Knowledge Graphs with Entities, Relations, and Text. In Proceedings of the Thirtieth AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016; pp. 2654–2660.

- Galkin, M.; Moussallem, D.; Berrendorf, M.; Tresp, V.; Ngomo, A.-C.N. Message Passing for Hyper-Relational Knowledge Graphs. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online, 16–20 November 2020; pp. 7346–7359.

- Robinson, I.; Webber, J.; Eifrem, E. Graph Databases: New Opportunities for Connected Data; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2015.

- Xie, K.; Shen, Y.; Li, J.; Ding, Z.; Yuan, J. Fusing Topology Contexts and Logical Rules in Language Models for Knowledge Graph Completion. Appl. Sci. 2023, 13, 5672.

- Yang, B.; Yih, W.T.; He, X.; Gao, J.; Deng, L. Embedding Entities and Relations for Learning and Inference in Knowledge Bases. In Proceedings of the International Conference on Learning Representations (ICLR), San Diego, CA, USA, 7–9 May 2015.

- Guan, S.; Li, C.; Yu, Y.; Xiong, N.N. Link Prediction on N-Ary Relational Data. Appl. Sci. 2019, 9, 2238.

- Wang, Z.; Hui, B.; Zhou, X.; Wu, Y. Improvement on Message Passing of Hyper-Relational Knowledge Graph. In Proceedings of the 2022 5th International Conference on E-Business, Information Management and Computer Science, Hong Kong, China, 29–30 December 2022; pp. 7–11.

- Xiong, B.; Nayyer, M.; Pan, S.; Staab, S. Shrinking Embeddings for Hyper-Relational Knowledge Graphs. arXiv 2023, arXiv:2306.02199.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).