1. Introduction

Visual servoing control, which uses visual feedback to guide the motion of a manipulator’s end-effector, has been widely used in robotics [1]. This approach enhances the manipulator’s versatility and extends its applicability to unstructured environments.

Image-based visual servoing (IBVS) directly controls the manipulator using image feature errors. One class of IBVS approaches realizes visual tracking by using global image information directly for error regulation in the loop [2]. For example, Collewet et al. [3] use photometric information to achieve visual servoing tasks without image feature extraction; however, this method struggles with diffuse reflection targets. Other types of global image information, such as histograms [4] and Gaussian mixtures [5], have also been used.

Another important class of IBVS methods is the point feature-based method. Because point feature-based IBVS methods are simple to design, they have been widely used in robotic arm visual servoing tasks. Calibration is complex and camera distortion degrades accuracy, so uncalibrated image-based visual servoing (UIBVS) methods, which do not require precise calibration of the camera’s intrinsic and extrinsic parameters, have been studied in depth [6,7,8,9,10]. The relationship between the motion of feature points in the vision space and the motion of the robot in its workspace can be represented by the image Jacobian matrix. This matrix depends on depth information and on the camera’s intrinsic and extrinsic parameters. Therefore, for the UIBVS problem, the control performance depends strongly on the accuracy of the image Jacobian matrix estimate. However, the underlying transformation between the pose of the end-effector and the image coordinates of the feature points is nonlinear. Since the image Jacobian matrix is only a linearized representation of this transformation, it introduces modeling uncertainties. Moreover, because the process and measurement noise are unknown, accurately estimating the image Jacobian matrix remains a major challenge in the UIBVS problem.

Currently, most UIBVS methods [11,12,13,14] focus on kinematic-based control, which tracks the desired image feature points by controlling joint velocities. These methods offer simple controller design and high computational efficiency. However, they require the robotic manipulator to follow the commanded velocities in real time, which is often impractical [15]. Furthermore, they do not consider joint torque constraints, which can degrade performance.

Dynamic-based control methods have been well studied in robotic control, but they are not widely used in UIBVS. One problem is that the dynamic model of the robot is nonlinear. This leads to a nonlinear controller with high computational complexity, which makes real-time visual tracking difficult. In addition, uncertainties arise from inaccurate or unknown parameters of the robotic manipulator, such as joint inertia and mass, and designing a controller for a system with unknown dynamics also compromises control precision. To tackle this problem, data-driven methods [16,17], which do not require knowledge of the analytical model, have recently been studied. However, practical constraints such as joint torque constraints and camera field-of-view constraints must also be taken into account, which poses additional challenges for dynamic-based UIBVS control methods.

Motivated by the above discussion, in this paper we propose a novel ARKF-KMPC algorithm for UIBVS control. The ARKF is used to estimate the image Jacobian matrix when both the camera’s intrinsic and extrinsic parameters are unknown. This eliminates laborious camera calibration procedures and enhances the system’s robustness against camera disturbances. The Koopman operator is introduced to construct a linear dynamic model of the manipulator, and an MPC controller is used to solve the UIBVS problem with state and control constraints. The main contributions of this paper can be summarized as follows:

To address the IBVS problem in the presence of uncalibrated camera parameters, unknown dynamics, and state and control constraints, we propose an ARKF-KMPC approach in which the UIBVS controller is designed at the dynamic level. Compared to the kinematic-based IBVS methods [18,19], the proposed method is robust against model uncertainties and avoids the performance degradation caused by control torque constraints. In contrast to other dynamic-based UIBVS methods, the proposed approach does not require a dynamic model and achieves real-time control performance.

The proposed ARKF-KMPC approach effectively handles the camera calibration issue. The ARKF is used to estimate the image Jacobian matrix online when both the camera’s intrinsic and extrinsic parameters are unknown. This eliminates laborious camera calibration procedures and improves robustness against camera disturbances.

To the best of our knowledge, this is the first time a data-driven control strategy has been introduced for the UIBVS problem. The unknown nonlinear dynamic model is learned via Koopman operator theory, which yields a linear Koopman prediction model. Consequently, with a symmetric quadratic cost function, the proposed approach can use quadratic programming (QP) for online optimization. This significantly reduces the computational cost and makes real-time dynamic-based UIBVS control feasible. Simulation results further show that the computation time of the proposed approach is comparable to that of kinematic-based methods.

This paper is organized as follows.

Section 2 introduces the related work of the paper.

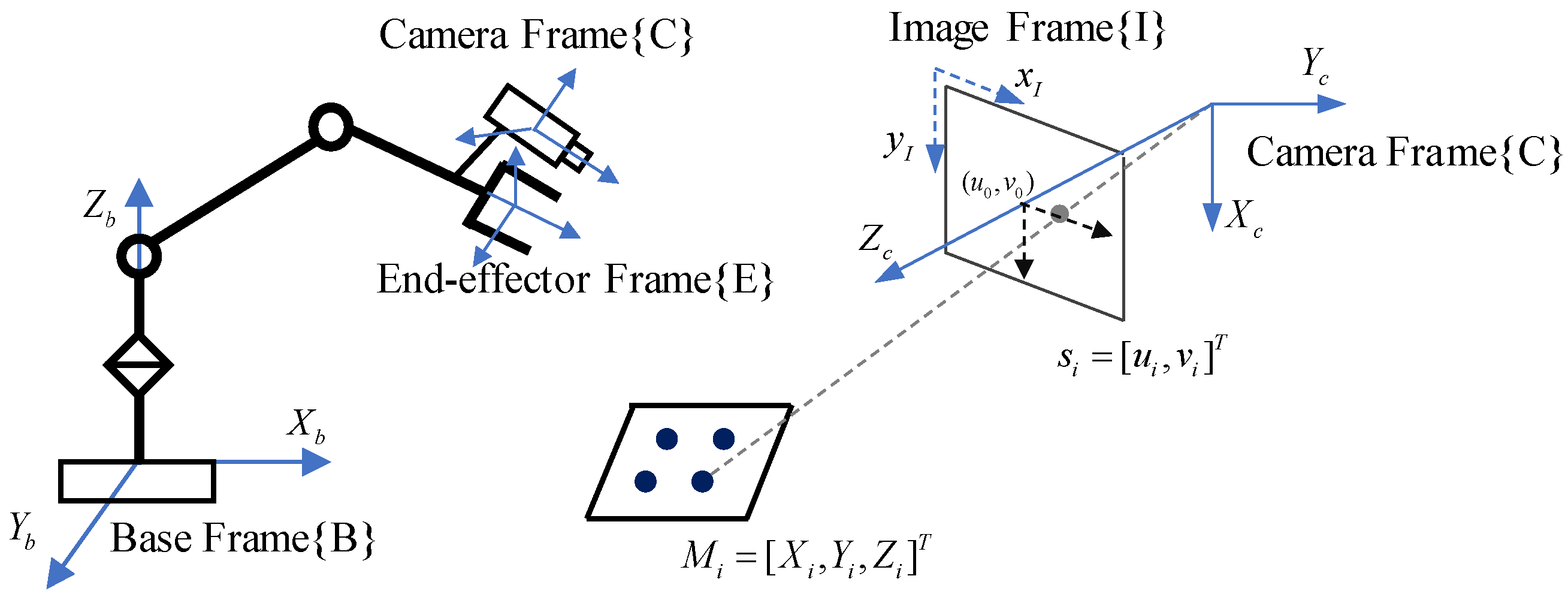

Section 3 introduces the mathematical model of the eye-in-hand camera configuration UIBVS system.

Section 4 proposes the ARKF-KMPC algorithm for UIBVS. Simulations and comparisons are presented in

Section 5, and conclusions are drawn in

Section 6.

2. Related Work

UIBVS designs the control law based on the image Jacobian matrix. However, because of the nonlinearity of the robot and the uncertainties in the camera parameters, deriving the image Jacobian matrix analytically is nontrivial. Recently, different methods have been proposed to improve the accuracy and robustness of image Jacobian matrix estimation. These include the Kalman filter [20], robust Kalman filter [21], extended Kalman filter [22], particle filter [23], and neural networks [24].

The Kalman filter was first introduced into image Jacobian matrix estimation by Qian et al. [20]. They constructed a dynamic system whose state variables were the elements of the image Jacobian matrix, and then employed a Kalman–Bucy filter to estimate these state variables online. The resulting filter was robust to system noise and external disturbances. Zhong et al. [21] proposed a UIBVS scheme based on a robust Kalman filter combined with Elman neural network (ENN) learning. The relationship between the vision space and the robotic workspace was learned by an ENN, and a robust KF was then used to refine the ENN learning result. Wang et al. [25] proposed estimating the total Jacobian matrix with an unscented particle filter, which makes full use of the feature measurements and therefore yields more accurate estimates. Gong et al. [23] proposed a geometric particle filter for visual tracking and grasping of moving targets.

These methods have advanced research on UIBVS. However, the actual nonlinear transformation differs from the linearized image Jacobian matrix, so the process noise of the image Jacobian estimation model is in fact unknown. How to improve the estimation accuracy without sacrificing real-time performance therefore remains an open problem.

Once the image Jacobian matrix is estimated, different control strategies can be used to address the UIBVS problem. For example, Chaumette et al. [11] designed a PD controller based on the pseudo-inverse of the image Jacobian matrix. Siradjuddin et al. [12] designed a distributed PD controller for a 7-degree-of-freedom (DOF) robot manipulator to track a moving target. A reinforcement learning algorithm was proposed in [14] to adaptively tune the proportional gain. To address unknown dynamics, He et al. [26] proposed an eye-in-hand visual servoing control scheme based on the input mapping method, which directly uses past input–output data to design the feedback control law. The above approaches can be used for online control; however, they do not take the state and control constraints of the UIBVS system into account.

Model predictive control (MPC) is another way to handle constraints in control systems [27,28,29]. At each sampling instant, MPC solves a finite-horizon open-loop optimization problem online and applies the first element of the resulting control sequence. The process is then repeated at the next sampling instant. MPC handles state and input constraints efficiently and can be implemented in real time. Consequently, MPC has been widely used in robotic control, and several MPC-based UIBVS methods have been developed. For example, Qiu et al. [18] proposed an adaptive MPC scheme in which a modified disturbance observer estimates the unknown camera intrinsic and extrinsic parameters, the unknown depth parameters, and external disturbances. He et al. [19] proposed a synthetic robust MPC method with input mapping for the UIBVS problem under system constraints. However, these methods are designed using only the kinematics of the robot.

Among dynamic-based MPC methods for the UIBVS system, Qiu et al. [1] proposed an MPC controller based on an identification algorithm and a sliding mode observer. The unknown model parameters are estimated by the parameter identification algorithm, and the sliding mode observer is designed to estimate the joint velocities of the IBVS system. Wu et al. [30] introduced a two-layer MPC scheme incorporating an extended state observer to address parameter uncertainties. Two MPC controllers are designed sequentially, one at the kinematic level and one at the dynamic level, so two optimal control problems must be solved online. As a result, the controller is complicated to design, particularly when tuning its parameters. In addition, the performance of the dynamic controller depends strongly on that of the kinematic controller. Moreover, both of these dynamic-based MPC methods rely on the nonlinear dynamic model of the robot. They therefore require solving a nonlinear optimization problem, which is difficult to do in real time.

Recently, data-driven MPC has been proposed to address unknown model parameters, using input–output data to learn the system model. For example, Gaussian processes (GPs) [31] and neural networks [32] have been employed to learn prediction models offline, followed by online MPC. However, online updates of GP models require keeping track of all measurement data, which may be computationally infeasible. Additionally, the nonlinearity of neural network models makes real-time implementation of the controller difficult.

Koopman operator theory provides a data-driven solution by constructing a linear model of a nonlinear dynamical system. This enables linear control methods such as the linear quadratic regulator (LQR) and linear MPC [33]. For instance, Zhu et al. [34] proposed a Koopman MPC (KMPC) approach for trajectory tracking control of an omnidirectional mobile manipulator. Bruder et al. [35] designed MPC controllers for a pneumatic soft robot arm via the Koopman operator. They demonstrated that the KMPC controllers outperform an MPC controller based on the linearized model, making accurate linear control of nonlinear systems achievable. However, to the best of the authors’ knowledge, few data-driven MPC strategies have been considered for solving IBVS problems.

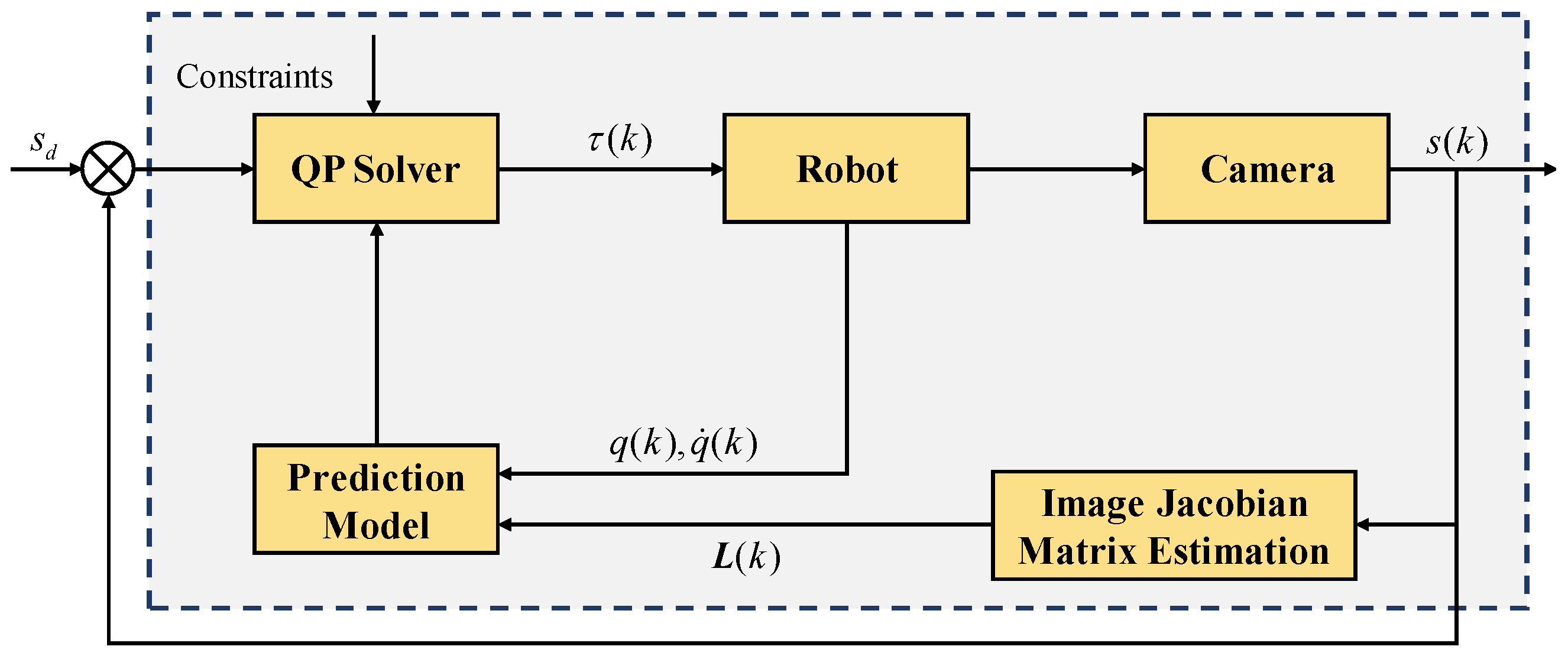

4. ARKF-KMPC Algorithm for UIBVS

In this section, we propose a novel ARKF-KMPC algorithm for solving the UIBVS problem. The ARKF is introduced to estimate the image Jacobian matrix. The Koopman operator is then used to approximate the manipulator’s dynamic model, and the MPC controller is designed for the linear UIBVS model under state and control constraints. The framework of the uncalibrated visual servoing control system is shown in Figure 2.

4.1. Image Jacobian Matrix Estimation

Since the intrinsic and extrinsic parameters of the camera are unknown or varying, the ARKF algorithm [38] is used to estimate the image Jacobian matrix online. An augmented state vector is formed by collecting the row elements of the image Jacobian matrix, and the system observation vector is the variation of the image features. The image Jacobian matrix can then be estimated via a discrete-time linear system [20]. In this system, a measurement matrix relates the state to the observation, and the state and observation equations are corrupted by process noise and measurement noise, respectively.

The measurement matrix is constructed from the pose change of the camera (end-effector) between consecutive time steps.
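The measurement matrix itself is not reproduced above. Assume, however, the usual first-order relation in which the feature change approximately equals the image Jacobian times the pose change. If the rows of the Jacobian are stacked into the state, the measurement matrix becomes block-diagonal, with the transposed pose change repeated once per image-feature coordinate. The following Python sketch assumes row-major stacking and uses the hypothetical helper name `measurement_matrix`. It illustrates the idea and is not the paper's exact definition.

```python
import numpy as np

def measurement_matrix(dr: np.ndarray, m: int) -> np.ndarray:
    """Build H_k so that H_k @ x equals J @ dr (assumed observation model).

    Assumes x stacks the m rows of the (m x n) image Jacobian J in row-major
    order, i.e. x = J.reshape(-1), and dr is the n-vector pose change of the
    camera between consecutive samples.
    """
    dr = np.asarray(dr, dtype=float).reshape(1, -1)   # 1 x n row vector
    return np.kron(np.eye(m), dr)                      # m x (m*n), block-diagonal

# Usage: a hypothetical 4 x 2 Jacobian (two feature points, 2-DOF motion)
rng = np.random.default_rng(0)
J = rng.normal(size=(4, 2))
dr = np.array([0.01, -0.02])
H = measurement_matrix(dr, m=4)
x = J.reshape(-1)          # stacked rows of J
ds = H @ x                 # predicted feature change
```

Each block row of `H` takes the inner product of one Jacobian row with the pose change, so `H @ x` reproduces `J @ dr` without forming `J` explicitly.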

The state and covariance prediction equations are given in (10). They propagate the state estimate and the error covariance matrix from the previous time step, and the covariance matrix of the process noise enters the covariance prediction.

The errors of the state and measurement at time k are then defined. In addition, we use the covariance matrix of the measurement noise, the error covariance matrix of the predicted state vector, and an adaptive factor that adjusts the weight given to the predicted state.

Applying the least squares principle yields the estimator of the adaptive filter, which is expressed in terms of an adaptive gain matrix, together with the posterior error covariance matrix of the estimated state vector.
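As a hedged illustration of the estimator and posterior covariance, the sketch below assumes an adaptive filter form in which the predicted error covariance is divided by the adaptive factor before the gain is computed. With an adaptive factor of 1, this recovers the standard Kalman update. A smaller factor reduces the weight of the prediction relative to the new measurement. The function name `arkf_update` and the small floor on the factor are assumptions of this sketch, not details taken from the paper.

```python
import numpy as np

def arkf_update(x_pred, P_pred, z, H, R, alpha):
    """One adaptively weighted measurement update (assumed form).

    The predicted covariance is inflated by 1/alpha: alpha = 1 gives the
    standard Kalman update, alpha < 1 down-weights the prediction.
    """
    alpha = max(float(alpha), 1e-6)                 # guard against division by zero
    P_bar = P_pred / alpha                          # down-weighted prediction covariance
    S = H @ P_bar @ H.T + R                         # innovation covariance
    K = np.linalg.solve(S, H @ P_bar).T             # gain K = P_bar H^T S^{-1}
    x_post = x_pred + K @ (z - H @ x_pred)          # posterior state estimate
    P_post = (np.eye(len(x_pred)) - K @ H) @ P_bar  # posterior error covariance
    return x_post, P_post

# Usage: scalar-like toy case with identity matrices
x_pred = np.zeros(2)
P_pred = np.eye(2)
H = np.eye(2)
R = np.eye(2)
z = np.array([1.0, 2.0])
x_kf, P_kf = arkf_update(x_pred, P_pred, z, H, R, alpha=1.0)  # standard KF
x_ad, _ = arkf_update(x_pred, P_pred, z, H, R, alpha=0.1)     # prediction down-weighted
```

With `alpha=0.1` the estimate moves much closer to the measurement than with `alpha=1.0`. This is the qualitative behavior that Remark 1 attributes to the ARKF.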

Furthermore, the adaptive factor is constructed from the prediction residual so that it is tuned automatically.

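Define the prediction residual vector as the difference between the measured variation of the image features and its prediction from the predicted state. The theoretical covariance matrix of this residual is computed from the predicted error covariance and the measurement noise covariance. The estimated covariance matrix is computed from the N most recent residuals, where N is often chosen as 1 in practice. To detect whether the filter diverges, a statistic for determining the adaptive factor is constructed by comparing the theoretical covariance matrix of the residual with the estimated one. An adaptive factor function of this statistic is then constructed, with two thresholds as design parameters.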
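A three-segment function of a divergence statistic is one widely used way to build such an adaptive factor. The sketch below assumes that form and takes the statistic as the square root of the ratio between the traces of the estimated and theoretical residual covariance matrices. The thresholds `c0 = 1.0` and `c1 = 3.0` are illustrative values only, and the exact function used in the paper may differ.

```python
import numpy as np

def adaptive_factor(V_window, H, P_pred, R, c0=1.0, c1=3.0):
    """Three-segment adaptive factor (sketch of an assumed form).

    V_window: array of shape (N, m) with the latest N prediction residuals
              V = z - H @ x_pred (N = 1 is common in practice).
    """
    V_window = np.atleast_2d(V_window)
    C_theory = H @ P_pred @ H.T + R                      # theoretical residual covariance
    C_est = V_window.T @ V_window / V_window.shape[0]    # windowed estimate
    dV = np.sqrt(np.trace(C_est) / np.trace(C_theory))   # divergence statistic
    if dV <= c0:
        return 1.0                                       # prediction fully trusted
    if dV <= c1:
        return (c0 / dV) * ((c1 - dV) / (c1 - c0)) ** 2  # smooth down-weighting
    return 0.0                                           # prediction discarded

# Usage: small, moderate, and large residuals against the same covariances
H = np.eye(2)
P_pred = np.eye(2)
R = np.eye(2)
a_small = adaptive_factor(np.array([0.5, 0.5]), H, P_pred, R)
a_mid = adaptive_factor(np.array([2.0, 2.0]), H, P_pred, R)
a_big = adaptive_factor(np.array([10.0, 10.0]), H, P_pred, R)
```

A factor of 0 cannot be used directly in a gain that divides by it. Implementations therefore typically floor it at a small positive value.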

The ARKF algorithm for the image Jacobian matrix estimation is summarized in Algorithm 1.

| Algorithm 1 ARKF Algorithm. |

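Input: initial values and design parameters of the filter.

Output: estimated image Jacobian matrix.

for each time step k do
Step 1. Predict the state and error covariance via (10).
Step 2. Calculate the adaptive factor via (16)–(20), using the variation of the image features and the measurement matrix defined in (9).
Step 3. Update the posterior state and error covariance via (13)–(15).
Step 4. Reorganize the estimated state vector into the image Jacobian matrix.
end for |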
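Putting the pieces together, Algorithm 1 can be sketched as the loop below. The sketch makes several assumptions: a random-walk model for the stacked Jacobian rows, the block-diagonal measurement matrix, the three-segment adaptive factor with N = 1, and the covariance-inflation update. These choices, the synthetic data, and the function name `arkf_estimate_jacobian` are illustrative and do not reflect the paper's settings.

```python
import numpy as np

def arkf_estimate_jacobian(dr_seq, ds_seq, m, n, Q, R, c0=1.0, c1=3.0):
    """Sketch of Algorithm 1 under assumed models.

    State model: x_k = x_{k-1} + w_k (stacked rows of J, random walk).
    Observation: ds_k = H_k x_k + v_k with H_k = kron(I_m, dr_k^T).
    """
    x = np.zeros(m * n)                        # initial stacked-row estimate
    P = np.eye(m * n)                          # initial error covariance
    for dr, ds in zip(dr_seq, ds_seq):
        # Step 1: prediction under the random-walk model
        x_pred, P_pred = x, P + Q
        H = np.kron(np.eye(m), dr.reshape(1, -1))
        # Step 2: adaptive factor from the current residual (N = 1)
        V = ds - H @ x_pred
        C = H @ P_pred @ H.T + R
        dV = np.sqrt((V @ V) / np.trace(C))
        if dV <= c0:
            alpha = 1.0
        elif dV <= c1:
            alpha = (c0 / dV) * ((c1 - dV) / (c1 - c0)) ** 2
        else:
            alpha = 1e-6                       # floor instead of 0 to avoid 1/0
        # Step 3: update with the prediction covariance inflated by 1/alpha
        P_bar = P_pred / alpha
        S = H @ P_bar @ H.T + R
        K = np.linalg.solve(S, H @ P_bar).T
        x = x_pred + K @ V
        P = (np.eye(m * n) - K @ H) @ P_bar
    # Step 4: reorganize the stacked rows into the m x n Jacobian
    return x.reshape(m, n)

# Usage with synthetic data from a hypothetical constant Jacobian
rng = np.random.default_rng(1)
m, n = 4, 2                                    # 2 feature points (u, v), 2-DOF motion
J_true = rng.normal(size=(m, n))
dr_seq = rng.normal(scale=0.05, size=(300, n))
ds_seq = [J_true @ dr + rng.normal(scale=1e-4, size=m) for dr in dr_seq]
J_hat = arkf_estimate_jacobian(dr_seq, ds_seq, m, n,
                               Q=1e-6 * np.eye(m * n), R=1e-8 * np.eye(m))
```

With small measurement noise and a constant (hypothetical) Jacobian, the estimate settles close to the true matrix. In the actual UIBVS loop the Jacobian changes with the robot configuration, which is one reason to keep the process noise covariance nonzero.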

Remark 1. Because the visual servoing system of the robotic manipulator is nonlinear, using the KF to estimate the image Jacobian matrix requires that the manipulator not move too fast; otherwise, the linear model (8a) is no longer valid. Furthermore, the process and measurement noise are not known a priori and may not be Gaussian white noise. From (12), the ARKF can adjust the weight of the prediction information. Estimating the image Jacobian matrix via the ARKF can therefore provide more accurate results [38].

4.2. Koopman Operator for Dynamical System

To handle an unknown system dynamic model, we propose a data-driven modeling approach based on Koopman theory. We briefly summarize the procedure for constructing linear dynamical systems via Koopman operators; for further details, the reader may refer to [39,40].

Consider the discrete-time nonlinear dynamical system (21). Its state evolves in a non-empty compact set, and its state transition map is assumed to be a locally Lipschitz continuous nonlinear function.

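Define a real-valued observable function of the state x, and consider the space of such observables. Koopman theory maps the nonlinear system (21) to a linear system through an infinite-dimensional linear operator, the Koopman operator. This operator acts on an observable by composing it with the system dynamics: applying it to an observable evaluated at the current state yields the same observable evaluated at the next state.

The Koopman operator is therefore a linear, infinite-dimensional operator that propagates observables to the next time step. In other words, it describes the nonlinear dynamics linearly and without loss of accuracy, at the cost of working in an infinite-dimensional space of observables.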
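A small example makes this concrete. Take the illustrative map below, which is not the manipulator model. For this map, the three observables x1, x2, and x1² span a Koopman-invariant subspace, so a 3 × 3 matrix propagates them exactly. For general systems no finite set of observables has this property, which is why an approximation is needed.

```python
import numpy as np

a, b, c = 0.9, 0.5, 0.3      # illustrative parameters

def f(x):
    """Nonlinear map: x1+ = a*x1, x2+ = b*x2 + c*x1**2."""
    return np.array([a * x[0], b * x[1] + c * x[0] ** 2])

def psi(x):
    """Observables spanning a Koopman-invariant subspace for f."""
    return np.array([x[0], x[1], x[0] ** 2])

# Finite-dimensional Koopman matrix acting on psi (exact for this map)
K = np.array([[a, 0.0, 0.0],
              [0.0, b, c],
              [0.0, 0.0, a ** 2]])

x = np.array([1.0, -0.5])
z = psi(x)
for _ in range(20):
    x = f(x)        # nonlinear propagation of the state
    z = K @ z       # linear propagation of the observables
```

After 20 steps the linearly propagated observables still equal the observables of the nonlinearly propagated state. Their first two entries are the state itself.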

Since the Koopman operator is infinite-dimensional, a data-driven algorithm, extended dynamic mode decomposition (EDMD), is used to approximate it with a finite-dimensional operator learned from collected data. A set of observables lifts the system from the original state space to a higher-dimensional space. The procedure is summarized as follows.

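First, we select a finite number of observables and stack them into a vector-valued lifting function. We collect P snapshot pairs, each consisting of a state and its successor, and construct the lifted data matrices by applying the lifting function to every snapshot. Then, the approximate Koopman operator can be calculated by solving a least-squares problem formulated with the Euclidean vector norm [41].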
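The EDMD least-squares step can be sketched with NumPy's pseudoinverse. Stack the lifted snapshots column-wise. The minimizer of the summed squared one-step errors in the lifted space is then the matrix of lifted successors multiplied by the pseudoinverse of the lifted-state matrix. The block reuses the illustrative system from the previous example, redefined here so that it runs on its own. EDMD recovers the exact Koopman matrix in this case because the chosen observables span an invariant subspace.

```python
import numpy as np

rng = np.random.default_rng(0)
a, b, c = 0.9, 0.5, 0.3

def f(x):
    return np.array([a * x[0], b * x[1] + c * x[0] ** 2])

def psi(x):
    return np.array([x[0], x[1], x[0] ** 2])

P = 200
xs = rng.uniform(-1.0, 1.0, size=(P, 2))       # snapshot states x_j
X = np.column_stack([psi(x) for x in xs])      # lifted states, N x P
Y = np.column_stack([psi(f(x)) for x in xs])   # lifted successors, N x P

# Least squares: minimize sum_j ||psi(y_j) - K psi(x_j)||^2  ->  K = Y X^+
K_edmd = Y @ np.linalg.pinv(X)

K_true = np.array([[a, 0.0, 0.0],
                   [0.0, b, c],
                   [0.0, 0.0, a ** 2]])
```

For the manipulator, the observables do not span an invariant subspace, so the same formula gives only a best-fit approximation.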

Next, we generalize Koopman theory to the controlled system (26), in which the control input at time step k also enters the dynamics. An extended state containing both the system state and the control input is defined, and the lifted data matrices are extended with the corresponding input data. The finite-dimensional Koopman operator for the controlled system can then be obtained as the solution to the optimization problem (28).

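Denote the linear Koopman model of (26) as (29). In this model, the lifted state evolves linearly under the control input, and the predicted value of the original state is obtained from the lifted state through an output matrix. Problem (28) is then equivalent to a linear least-squares problem in the state and input matrices of (29). Its analytical solution is expressed with the Moore–Penrose pseudoinverse of the data matrix.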

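The output matrix is obtained by solving a separate least-squares problem that maps the lifted states back to the original states, and its solution is likewise given in closed form. Furthermore, suppose the observable functions are chosen to contain the original state, i.e., the first components of the lifting function are the state itself. The output matrix then reduces to a constant selection matrix that picks out these components.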
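For the controlled case, a minimal sketch of the closed-form solutions is shown below. It assumes the common formulation in which the lifted successors are regressed on the stacked lifted states and inputs, and the original states are regressed on the lifted states to obtain the output matrix. The toy system is hypothetical and is constructed so that the lifted model is exact. Because the observables contain the original state as their first entries, the identified output matrix comes out as a selection matrix.

```python
import numpy as np

rng = np.random.default_rng(1)
a, b, c = 0.9, 0.5, 0.3

def f(x, u):
    """Hypothetical controlled system: the input enters the x2 equation."""
    return np.array([a * x[0], b * x[1] + c * x[0] ** 2 + u[0]])

def psi(x):
    """Observables that contain the original state as their first entries."""
    return np.array([x[0], x[1], x[0] ** 2])

P = 300
xs = rng.uniform(-1.0, 1.0, size=(P, 2))
us = rng.normal(size=(P, 1))
Xs = xs.T                                                     # original states, n x P
Z = np.column_stack([psi(x) for x in xs])                     # lifted states
Zp = np.column_stack([psi(f(x, u)) for x, u in zip(xs, us)])  # lifted successors
U = us.T                                                      # inputs, m x P

# [A B] = Zp [Z; U]^+  (least-squares solution of the lifted regression)
AB = Zp @ np.linalg.pinv(np.vstack([Z, U]))
A, B = AB[:, :Z.shape[0]], AB[:, Z.shape[0]:]
# C = Xs Z^+ maps lifted states back to the original state
C = Xs @ np.linalg.pinv(Z)
```

Here `C` equals `[I 0]`, so in practice it can be fixed rather than estimated once the observables include the state.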

The Koopman linear system identification algorithm is summarized in Algorithm 2.

| Algorithm 2 Koopman Linear System Identification. |

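Input: training data and observable functions.

Output: the system, input, and output matrices of the linear Koopman model.

Step 1. Collect P snapshot pairs of states and successor states, together with the applied control inputs.
Step 2. Choose observable functions and construct the lifted data matrices via (25) and (28).
Step 3. Identify the linear Koopman model (31) via (32) and (34). |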
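Algorithm 2 leaves the observable functions as a design choice. Section 5 uses the state itself together with thin plate spline radial basis functions whose center points are uniformly distributed in an interval. The sketch below assumes the common thin plate spline kernel r² log r, set to 0 at r = 0. The function name, the 4-dimensional state (two joint angles and two joint velocities), and the 20 random centers are illustrative assumptions.

```python
import numpy as np

def thin_plate_lift(x, centers):
    """Lift x to [x; r_i^2 log r_i] with r_i = ||x - c_i|| (sketch).

    The original state is kept as the first entries; phi(0) is defined as 0.
    """
    x = np.asarray(x, dtype=float)
    r = np.linalg.norm(centers - x, axis=1)              # distances to centers
    with np.errstate(divide="ignore", invalid="ignore"):
        phi = np.where(r > 0, r ** 2 * np.log(r), 0.0)   # thin plate spline values
    return np.concatenate([x, phi])

# Usage: 20 hypothetical centers drawn uniformly in [-1, 1]^4
rng = np.random.default_rng(2)
centers = rng.uniform(-1.0, 1.0, size=(20, 4))
x = np.array([0.1, -0.2, 0.0, 0.3])
z = thin_plate_lift(x, centers)                 # lifted dimension 4 + 20
z_c = thin_plate_lift(centers[0], centers)      # state located at a center
```

The lifted dimension here is 24. As noted in Section 5.1, the number of centers (and hence the lifted dimension) is a design parameter tuned by trial and error.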

Remark 2. To train the Koopman model, we collect P input–state data pairs satisfying (26); the data need not be collected at consecutive time steps. The dimension of the lifted state space is a design parameter and should be selected according to the precision requirements. The observable functions can be chosen as basis functions, such as Gaussian kernel functions, polyharmonic splines, and thin plate splines, or as neural networks.

Remark 3. A standard algorithm [39] is used to realize the Koopman model (29); modifications of the algorithm are beyond the scope of this research. Recently, different algorithms have been proposed to improve the estimation accuracy of the model (29). For example, deep neural networks have been used to learn the observable functions [42], and methods for constructing a stable Koopman model have been studied in [43]. Stability analysis has been discussed in [43,44].

4.3. KMPC for UIBVS

Consider the nonlinear dynamic model of the manipulator (7b) and (7c), whose state takes the place of the generic state x in Section 4.2. We choose observable functions that contain the original state, as in (34). The linear Koopman model (29) can then be constructed: the first part of the lifted state is the original state, and the remaining part collects the other lifted states. The overall linear UIBVS prediction model is given by (36) and (37), written in terms of an extended state vector and the corresponding extended predicted state.

In UIBVS, the image features should remain within the image plane. The visibility constraints are therefore defined by the lower and upper image boundaries.

The control input constraints are defined by the minimum and maximum control input torques, together with the minimum and maximum control input increments.
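To pass the torque and torque-increment bounds to a QP solver, they are usually stacked over the prediction horizon into a single linear inequality on the stacked input sequence. The sketch below assumes that increments are defined relative to the previously applied input. The visibility constraints are also linear in the stacked inputs once the prediction model is linear, so they could be stacked the same way. They are omitted here because they depend on the model matrices. The function name `input_constraints` is hypothetical.

```python
import numpy as np

def input_constraints(Np, u_min, u_max, du_min, du_max, u_prev):
    """Stack torque and torque-increment bounds as G @ U <= h (sketch).

    U = [u_0; ...; u_{Np-1}] stacks the Np future inputs (m entries each);
    increments are du_i = u_i - u_{i-1}, with u_{-1} = u_prev.
    """
    m = len(u_prev)
    I = np.eye(Np * m)
    D = I - np.eye(Np * m, k=-m)          # block differences u_i - u_{i-1} (u_0 alone in block 0)
    e = np.zeros(Np * m)
    e[:m] = u_prev                        # so that D @ U - e gives all increments
    G = np.vstack([I, -I, D, -D])
    h = np.concatenate([
        np.tile(u_max, Np), -np.tile(u_min, Np),
        np.tile(du_max, Np) + e, -np.tile(du_min, Np) - e,
    ])
    return G, h

# Usage: 2 joints, horizon 3, torque bounds +/-5, increment bounds +/-1
G, h = input_constraints(3, np.array([-5.0, -5.0]), np.array([5.0, 5.0]),
                         np.array([-1.0, -1.0]), np.array([1.0, 1.0]), np.zeros(2))
U_ok = np.array([0.5, 0.5, 1.0, 1.0, 1.5, 1.5])    # increments of 0.5
U_bad = np.array([2.0, 0.0, 2.0, 0.0, 2.0, 0.0])   # first increment of 2 is too large
```

The resulting pair `G`, `h` is the form most QP solvers accept for general linear inequality constraints.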

Define the quadratic cost function at sampling time k over the prediction horizon, with three weighting matrices. The cost is evaluated on two kinds of terms. The first is the predicted image feature points at each future time instant, obtained from the prediction model (36) given the current state. The second is the inputs applied at the corresponding time instants.

The objective of UIBVS is to track the desired feature points, and the optimization problem is formulated as (41). At each sampling time, the optimal input sequence is calculated by solving this open-loop finite-horizon optimal control problem. The first element of the sequence is taken as the optimal control input and applied to the robot, and the process is repeated at the next sampling instant. The cost function is symmetric and quadratic, the prediction model (36) is linear, and the constraints are linear in the inputs. The optimal control problem is therefore a quadratic program and is solved with a quadratic programming (QP) algorithm.
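The QP structure can be illustrated with a condensed formulation. Predictions over the horizon are written as a free response plus a matrix times the stacked inputs, which turns the quadratic cost into a quadratic function of the inputs alone. The sketch below assumes a generic lifted linear model, a stage cost on output tracking error and input magnitude, and only box bounds on the inputs. For self-containment, it handles the bounds with projected gradient descent. The paper's problem (41), which also has increment and visibility constraints, would instead be passed to a general QP solver. The double-integrator model and all weights are illustrative.

```python
import numpy as np

def condensed_qp(A, B, C, z0, y_ref, Np, Qw, Rw):
    """Return H, g so that the cost equals 0.5*U'HU + g'U + const (sketch).

    Cost: sum_{i=1..Np} ||C z_i - y_ref||_Qw^2 + sum_{i=0..Np-1} ||u_i||_Rw^2,
    with z_{i+1} = A z_i + B u_i and U = [u_0; ...; u_{Np-1}].
    """
    nz, m = B.shape
    # Free response: stacked A^1 z0, ..., A^Np z0
    Phi = np.vstack([np.linalg.matrix_power(A, i) for i in range(1, Np + 1)])
    # Forced response: block (i, j) maps u_j to z_{i+1}
    Gam = np.zeros((Np * nz, Np * m))
    for i in range(Np):
        for j in range(i + 1):
            Gam[i * nz:(i + 1) * nz, j * m:(j + 1) * m] = np.linalg.matrix_power(A, i - j) @ B
    Cbar = np.kron(np.eye(Np), C)
    Qbar = np.kron(np.eye(Np), Qw)
    Rbar = np.kron(np.eye(Np), Rw)
    E = Cbar @ Gam                                  # output sensitivity to U
    free = Cbar @ Phi @ z0 - np.tile(y_ref, Np)     # tracking error if U = 0
    H = 2.0 * (E.T @ Qbar @ E + Rbar)
    g = 2.0 * E.T @ Qbar @ free
    return H, g

def solve_box_qp(H, g, lb, ub, iters=2000):
    """Projected gradient descent for min 0.5 U'HU + g'U s.t. lb <= U <= ub."""
    step = 1.0 / np.linalg.eigvalsh(H).max()        # 1/L step size
    U = np.clip(np.zeros_like(g), lb, ub)
    for _ in range(iters):
        U = np.clip(U - step * (H @ U + g), lb, ub) # gradient step, then project
    return U

# Illustrative double-integrator stand-in for a lifted model (not the paper's)
A = np.array([[1.0, 0.1], [0.0, 1.0]])
B = np.array([[0.0], [0.1]])
C = np.array([[1.0, 0.0]])
H, g = condensed_qp(A, B, C, z0=np.zeros(2), y_ref=np.array([1.0]),
                    Np=5, Qw=np.eye(1), Rw=0.1 * np.eye(1))
U_free = solve_box_qp(H, g, -1e3, 1e3)    # bounds inactive
U_tight = solve_box_qp(H, g, -0.2, 0.2)   # torque-like bounds active
u_apply = U_tight[:1]                     # receding horizon: apply the first input only
```

Only the first input of the optimized sequence (here `u_apply`) would be applied before the problem is re-solved at the next sampling instant.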

Remark 4. Compared to conventional visual servoing control methods [7,12,45], MPC is well suited to problems with state and control constraints. Furthermore, in contrast to the approaches in [18,30], the proposed method directly controls the joint torques of the robot. This can improve control accuracy and robustness to external disturbances, so the control system adapts better to complex working environments. Additionally, the symmetric cost function and the Koopman MPC strategy used in this paper result in a linearly constrained quadratic optimization problem, which can be solved via QP. This notably increases the computation speed, so that real-time requirements are met, and substantially enhances the practical applicability of the proposed method. The proposed ARKF-KMPC algorithm for UIBVS is summarized in Algorithm 3.

| Algorithm 3 ARKF-KMPC Algorithm. |

Input: prediction horizon and cost matrices.

Output: control input.

Step 1. Koopman linear system identification: identify the matrices of the Koopman linear model via Algorithm 2.
Step 2. for each time step k do
a. Image Jacobian matrix estimation: estimate the image Jacobian matrix via Algorithm 1, and update the corresponding terms in (37).
b. KMPC optimization: solve (41) via QP to find the optimal input.
c. Apply the optimal input to the UIBVS system.
end for |

5. Simulation Results

We test the proposed ARKF-KMPC method on a 2-DOF robotic manipulator whose parameters are listed in Table 1. The inertia matrix, the centripetal and Coriolis matrix, and the gravitational torque vector are defined in Appendix B [30].

Simulations are carried out with unknown dynamics and unknown camera intrinsic parameters. The actual intrinsic parameters of the camera (the focal length, in m, and the principal point coordinates, in pixels) and the image resolution are fixed in the simulation but are not available to the controller. The sampling time is 0.01 s, and the simulations are conducted in MATLAB on a 2.6 GHz Intel Core i5 processor.

The root mean squared deviation (RMSD) is used as a general accuracy measure. It quantifies, over all time steps k, the deviation between an actual state vector of any dimension at time step k and the corresponding predicted state vector.
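The RMSD formula itself is not reproduced here, so the sketch below assumes the common definition: the square root of the mean, over time steps, of the squared Euclidean norm of the state error. Some authors additionally divide by the state dimension, which only rescales the value.

```python
import numpy as np

def rmsd(X_true, X_pred):
    """RMSD between trajectories of shape (T, n) (assumed definition).

    Computes sqrt(mean_k ||x_k - xhat_k||^2); dividing additionally by the
    state dimension n is an alternative convention.
    """
    err = np.asarray(X_true, float) - np.asarray(X_pred, float)
    return float(np.sqrt(np.mean(np.sum(err ** 2, axis=1))))

# Usage: two time steps of a 2-dimensional state
X_true = np.zeros((2, 2))
X_pred = np.array([[3.0, 4.0], [0.0, 0.0]])
value = rmsd(X_true, X_pred)      # sqrt((25 + 0) / 2)
```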

5.1. Verification of the Model Accuracy

First, we test the prediction accuracy of the Koopman model.

Training of the Koopman model. Data are collected over 1000 trajectories, with 100 snapshots in each trajectory. The control input for each trajectory is generated from a two-dimensional Gaussian distribution with zero mean and a prescribed covariance. The initial condition for each trajectory is drawn from a uniform distribution over a prescribed set. The observable functions are chosen as the state itself together with thin plate spline radial basis functions, whose center points are uniformly distributed in a given interval. The total number of observables determines the dimension of the lifted state.

In Table 2, we compare the prediction performance of Koopman models with different lifted-state dimensions over a short, a medium, and a long (2 s) prediction duration. For each model, a Monte Carlo simulation with 1000 randomly generated initial conditions is conducted. Table 2 reports the RMSDs over the entire prediction horizon as a function of the lifted-state dimension.

Note that the prediction error does not necessarily decrease as the lifted-state dimension increases, and the prediction performance differs among the short, medium, and long durations. For example, the dimension that gives the best prediction performance for the short duration performs the worst for the long duration. This design parameter should therefore be tuned by trial and error.

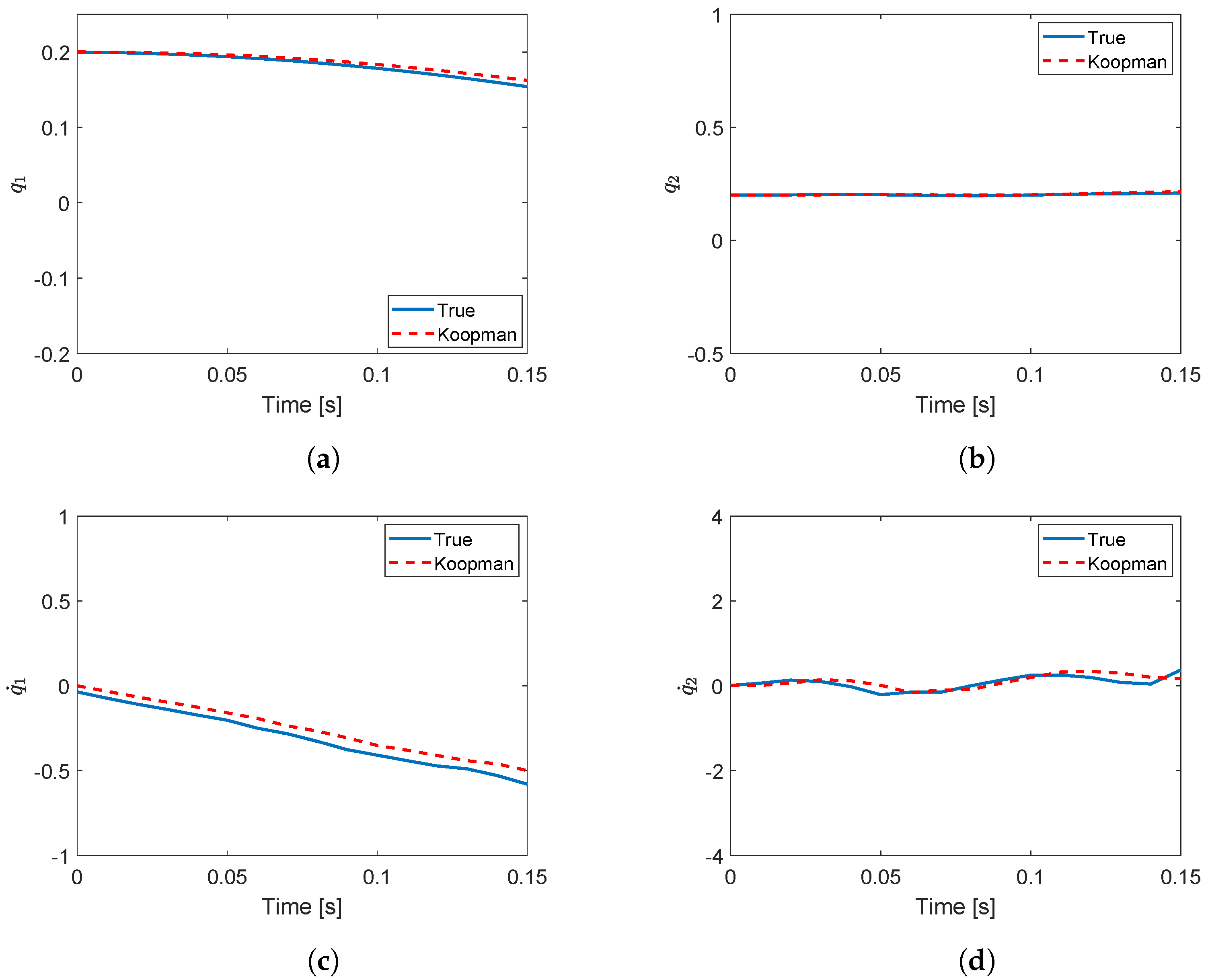

Testing of the Koopman model. In Figure 3, we compare the predictions of the original nonlinear dynamic model (7b) and (7c) with those of the proposed Koopman model, using the selected lifted-state dimension, over a duration of 0.15 s. The Koopman model predictions are quite accurate.

5.2. Verification of the Control Performance of the Model

Next, we test the control performance of the proposed ARKF-KMPC method. The visibility constraints bound the image coordinates of the feature points, and the control input constraints bound the joint torques and their increments. The control objective is to move four feature points from their initial positions to desired positions on the image plane, with an error tolerance of four pixels. The initial and desired positions of the four feature points on the image plane are specified in pixels. For image Jacobian matrix estimation, the weighting matrices and the two design parameters of the adaptive factor are fixed, and the three weighting matrices of the MPC controller are likewise fixed. The prediction horizon is selected to balance computation time and control performance, and the dimension of the Koopman model is fixed as well.

Figure 4 shows the simulation results for the 2-DOF robotic manipulator. Figure 4a shows the error curve between the four actual feature points and the desired ones. Figure 4b presents the 2-D trajectories of the feature points on the image plane, which show that the robotic manipulator reaches the desired position successfully. The control input torques of the joints are shown in Figure 4c; the joint torques and their increments always remain within the constraints. The joint angles of the 2-DOF manipulator, shown in Figure 4d, change smoothly. The average optimization time per time step comfortably meets the real-time requirement.

Furthermore, we conducted 100 Monte Carlo simulations for models with different lifted-state dimensions. Table 3 presents the average final control errors for the different models. These results further corroborate the effectiveness of the proposed control algorithm.

We also compare the proposed method with a controller that uses the original nonlinear dynamic model. With that controller, the image feature points converge to the desired ones with a smaller error. However, the average computation time is much longer, because a nonlinear optimization problem must be solved with fmincon at each step. This makes the approach unsuitable for real applications.

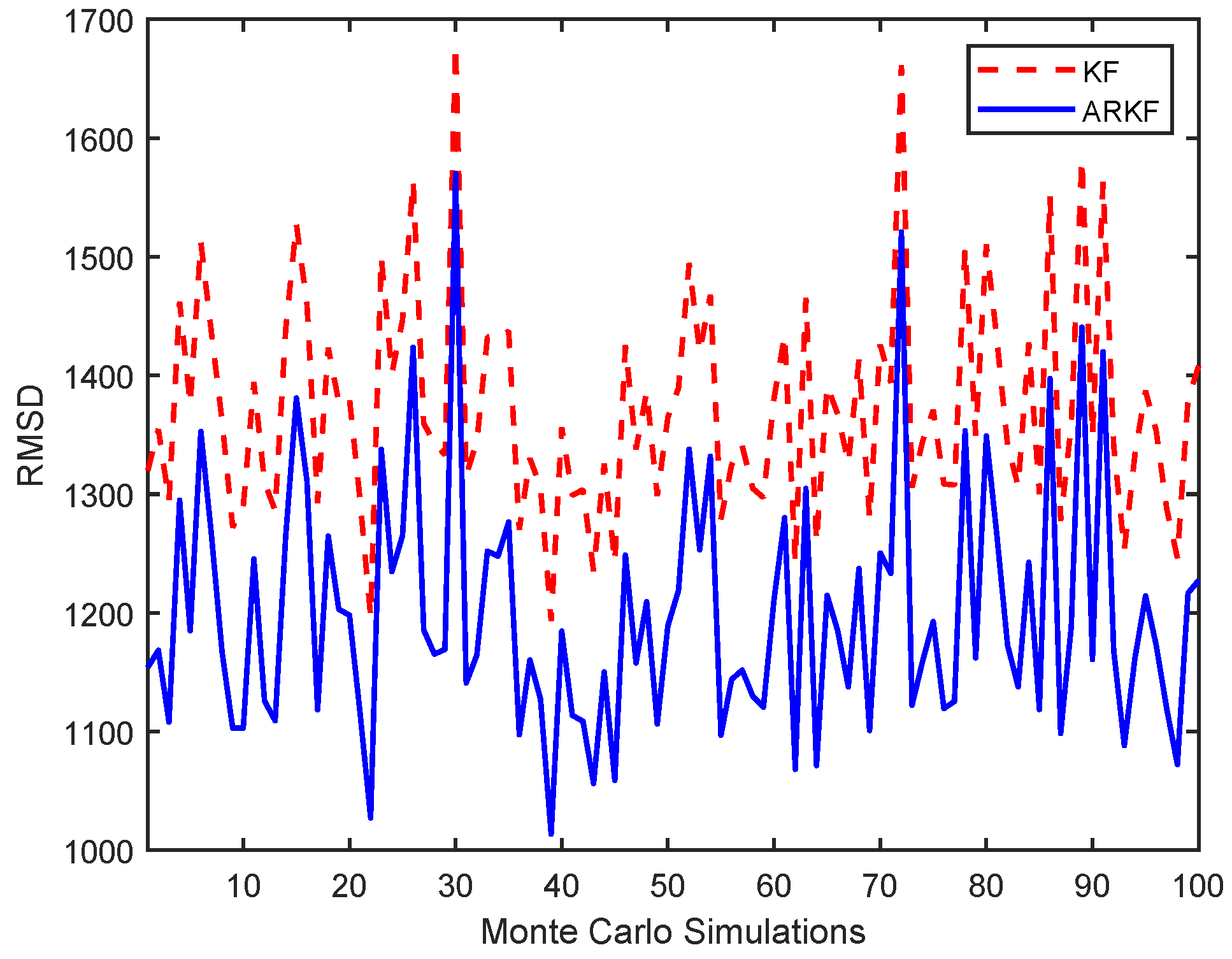

Furthermore, to validate the proposed ARKF algorithm for image Jacobian matrix estimation, we compare the RMSD between the estimated and the actual image Jacobian matrices obtained with the KF and with the ARKF. We perform 100 Monte Carlo simulations. In each simulation, the control inputs are randomly generated from a two-dimensional Gaussian distribution with zero mean and a prescribed covariance, over a simulation time of 6 s. The average RMSD over the 100 Monte Carlo simulations is 1.3716 with the KF algorithm and 1.1995 with the ARKF. The RMSDs of all 100 Monte Carlo simulations for both algorithms are presented in Figure 5.

It can be seen that, compared to the KF method, applying the ARKF for estimating the image Jacobian matrix effectively reduces the estimation error, enabling the system to perform visual servoing tasks accurately.

5.3. Comparisons with Related Work

To further demonstrate the advantages of the proposed algorithm, a comparative analysis against three related methods is conducted, as detailed in Table 4. For all methods, the kinematic-based MPC is solved using QP, and all design parameters are tuned for best performance.

Method (a) designs the MPC controller considering only the kinematics of the manipulator, which gives the fastest convergence rate. Its average computation time is about 0.0005 s per time step, the lowest among the four algorithms. However, its final image error is 7.3696 pixels, which does not satisfy the control performance requirement.

Methods (b) and (c) both design two separate controllers, one for the kinematics and one for the dynamics. A kinematic-based MPC controller optimizes the joint angular velocities. At the dynamic level, a PD controller (Method (b)) or an MPC controller (Method (c)) tracks the desired joint angular velocities. Position control with the PD controller is feasible without sacrificing much computation time, and the final image error of Method (b) is comparable to that of Method (a); however, the PD controller does not consider the joint torque constraints. Method (c), on the other hand, must solve a nonlinear optimization problem at each time step. Its average computation time is around 1.5778 s, so it cannot be implemented in real time. Moreover, the control performance of both Methods (b) and (c) depends strongly on the kinematic-based MPC controller, and selecting their design parameters is also challenging.

Table 4 therefore shows that the proposed ARKF-KMPC method, which designs the dynamic-based MPC controller directly, achieves the best performance. With a linear Koopman prediction model and a symmetric quadratic cost function, only a linearly constrained QP is solved at each time step. As a result, the computation time of the proposed approach is comparable to that of the kinematic-based MPC controller and significantly lower than that of Method (c). Moreover, the proposed approach is data-driven and does not need the actual dynamic model, which makes it robust to model uncertainties.

6. Conclusions

In this paper, we propose an ARKF-KMPC scheme for the data-driven control of constrained UIBVS systems. First, when the intrinsic and extrinsic parameters of the camera are unknown, the ARKF with an adaptive factor is used to estimate the image Jacobian matrix online. Then, the KMPC strategy is proposed to solve the UIBVS problem at the dynamic level. To address unknown dynamics, a linear Koopman prediction model is constructed offline from input–output data. This yields a linearly constrained quadratic optimal control problem that is solved online via QP. The proposed UIBVS strategy is robust to imprecise camera calibration and unknown dynamics, and it takes state and control constraints into account. Numerical simulations demonstrate that the proposed approach outperforms kinematic-based control approaches. Its computation time is comparable to that of the kinematic-based control approach and significantly lower than that of dynamic-based control approaches. A remaining limitation is that estimating the image Jacobian matrix via the ARKF with a linear discrete-time system model requires that the robotic manipulator not move too fast. This may limit the applicability of the proposed approach, and constructing a more realistic model for estimating the image Jacobian matrix could be studied in future work. In addition, the proposed approach has so far been validated only in simulation, and future work will include testing it with data collected from real experiments.