1. Introduction

Down syndrome (DS) is currently the most frequent genetic cause of intellectual disability and congenital malformations [1,2]. In addition, owing to an extra copy of chromosome 21, these individuals present heart disease, cognitive impairment, characteristic physical features, and a flattened facial appearance [1,2,3]. Among other physical characteristics, certain distinctive facial features are typically associated with DS, such as slanting eyes, Brushfield spots in the iris, round faces, abnormal outer ears, and flat nasal bridges [4].

People’s emotions are best communicated through their non-verbal behavior (gestures, body posture, tone of voice) [5]. Emotions support cognitive processes to improve social interactions. Communication is a more complex process in people with DS, given that speech results from cognitive, affective, and social elements. The linguistic activity of these people follows a pattern of execution similar to that of people with typical development (TD), although the delay progressively increases as the intellectual functions become more complex [6]. In people with disabilities, the control, regulation, knowledge, and expression of emotions can improve their quality of life [7]. Therapies are one of the daily activities that people with DS carry out. Within Clinical Psychology, emotional activation, its intensity, and the processing of emotions constitute decisive elements in the success of a therapy [8].

Existing studies that relate artificial intelligence techniques to DS focus mainly on detecting the syndrome. One detection method is performed invasively through a puncture in the mother’s abdomen between weeks 15 and 20 of gestation; however, being an invasive method, it carries a probability of losing the fetus. Other methods work non-invasively, such as analyzing images of the fetus [9].

Computer-aided tools based on machine learning can currently recognize facial features in people with genetic syndromes by considering the shape and size of the face [3,10]. In addition, these tools detect and extract relevant facial points to calculate measurements from images [9,10,11]. Moreover, image processing and machine learning techniques facilitate recognizing facial dysmorphic features associated with genetic factors [10,12]. For instance, a method to detect DS in photographs of children uses a graphical method from facial points and a neural network for classification [10,13]. Zhao [14] proposed a restricted hierarchical local model based on the analysis of independent components to detect DS based on facial photographs through the combination of texture and geometric information. An SVM classifier was used to distinguish between normal and abnormal cases using a sample of 48 images and achieved an efficiency of 97.90%.

Burçin and Vasif used the LBP descriptor to identify DS in images of faces based on 107 samples and achieved an efficiency of 95.30% [15]. Saraydemir used the Gabor wavelet transform to extract features for recognizing dysmorphic faces, and K-nearest neighbor (K-NN) and SVM classifiers on a training set of 30 samples, achieving an efficiency of 97.34% [10,14,15,16,17]. Due to subjects’ privacy and sensitivity, only a small number of studies have used a DS dataset. These studies detect the syndrome from characteristics of the skull or face, or from clinical factors typically associated with DS [3]. In addition, processing images of the faces of people with DS with computational tools opens new lines of study, such as the recognition of their facial expressions using artificial intelligence, which has been studied very little to date.

There are two approaches to measuring facial expressions: one based on messages and another based on signs [11,18,19,20]. The first involves labeling the expression with basic emotional categories, such as “happiness”, “disgust”, or “sadness”. However, expressions do not frequently occur in their typical forms, and the messages usually hide social themes. Therefore, the sign-based approach is considered the best alternative, given that it objectively describes changes in the face configuration during an expression rather than interpreting its meaning. Within this approach, the most-used signs [18,20,21] are those belonging to the Facial Action Coding System (FACS), which breaks down facial expressions into small, anatomically based components called Action Units (AUs) [10,12,13,14,15,16,17,18,22]. Because investigations of people with DS focus on detecting the syndrome, many of them are based on facial characteristics or some other part of the body [3,9,10,11,12,13].

Advances in artificial intelligence for facial expression recognition (FER) to identify emotions have not been applied to people with DS, especially in uncontrolled settings. This study is part of a research project with people with DS who attend particular education institutions. The project aims to support the daily activities within the therapies they perform, in which they interact with others, taking into account that emotions constitute a support base for defining the achievements of people with DS within the treatment.

In the phase presented in this article, different artificial intelligence techniques and statistical analyses are evaluated to define the facial characteristics of this group of people [4] when they express their basic emotions. Since no studies have been carried out in this field, the objective is to obtain the facial characteristics of this group of people. The analysis is based on facial measurements such as FACS and on machine learning and deep learning techniques. This applied research is expected to support the activities that this group of people carries out in their day-to-day life, emotions being a fundamental pillar in the performance of the activities of all human beings.

People with DS during this pandemic carried out many of their activities through videoconferences. However, with the progressive return to face-to-face activities, it is even more important to analyze how they feel emotionally as additional support to stimulation sessions. For this reason, in this work, different machine learning and deep learning techniques are evaluated and compared for the automatic recognition of emotions (happiness, anger, surprise, sadness, and neutrality), focusing on people with DS, using the intensity values of their micro-expressions through a dataset of this group of people.

In this work, Section 1 shows a compilation of research on people with DS using machine learning and deep learning techniques. Section 2 shows relevant studies based on the field of FER to identify emotions and related works to recognize emotions based on these artificial intelligence techniques, as well as a bibliographic compilation on the system of facial action codes and the main characteristics of the Action Units in people with TD. Section 3 shows the methodology applied in this research. Section 4 presents the results obtained. Finally, Section 5 presents the conclusions and discussion.

3. Materials and Methods

The structure of the face of people with DS has unique characteristics, as presented in the literature [1,2,3,4], and their attitude to different situations generates emotions whose facial expressions could differ from those of people with TD. For this reason, this article first investigates the structure of the expressions of people with DS, studied through typical expressions such as anger, happiness, sadness, surprise, and neutrality, and then evaluates the performance of artificial intelligence techniques in classifying these expressions automatically.

A quantitative experimental methodology will be used based on the literature review described in Section 2. In addition, the data will be obtained by developing a dataset of images of faces of people with DS classified as anger, happiness, sadness, surprise, and neutral.

As mentioned in Section 2.4, the emotions identified through FER can be evaluated through the behavior of specific micro-expressions, defined as Action Units, so this technique will be used to obtain the most relevant AUs for each emotion of people with DS based on the samples in the created dataset. The Action Units are obtained through the OpenFace software [36], which characterizes the micro-expressions in photographs and videos through each Action Unit’s activation and intensity levels. From these results, a statistical evaluation based on the activation values and the intensity averages of each Action Unit yields the most relevant micro-expressions for each emotion of people with DS.

Subsequently, the performance of artificial intelligence methods in classifying emotions is compared using supervised machine learning and deep learning techniques. For the machine learning techniques, it is first necessary to define the relevant features of the image dataset, which is done based on the intensity levels of the Action Units. For deep learning, the classified images of the dataset are used directly.

Several machine learning techniques are used, but the ones that obtained the best results were SVM, KNN, and an ensemble method. These were chosen taking into account Zago’s research [25] on the techniques used for people with TD; as in that research, SVM presented the best result for FER in people with DS.

For deep learning, convolutional neural network techniques based on the Xception architecture are used; they were selected for their efficiency, precision, and low complexity, which are explained in Section 3.4.

The results will be presented based on statistical graphs showing the intensity levels of each Action Unit for each emotion of people with DS. In addition, confusion matrices are given for recognizing emotions through artificial intelligence techniques, where the percentage of success in the recognition of each emotion can be observed.

3.1. Dataset

A dataset of images of faces of people with DS was created and classified according to anger, happiness, sadness, surprise, and neutrality. The images were freely obtained on the Internet and were saved in JPG format, with a matrix size of 227 × 227 × 3. Each image in the created dataset (Dataset-IMG) corresponded to a different individual; that is, the dataset contained 555 images of different people with DS, distributed as follows: 100 images of anger, 120 of happiness, 100 of sadness, 115 of surprise, and 120 neutral. An example of four individuals per emotion is shown in Table 5.

These images were preprocessed, as detailed in Section 3.2, to obtain a dataset based on the Action Unit intensities for each emotion studied, called the Dataset-AU. Table 6 presents a random sample of the intensity values of the AUs of 5 people with DS; that is, for each individual or sample, a set of the intensity values of the 18 AUs studied was obtained, and the displayed values represent the preprocessed dataset generated for each emotion.

3.2. Evaluation of Action Units for People with Down Syndrome

This section shows the analysis carried out to recognize emotions from the facial features of people with DS. The analysis of facial expressions was based on the intensity levels of Action Units 1, 2, 4, 5, 6, 7, 9, 10, 12, 14, 15, 17, 20, 23, 25, 26, 28, and 45, since these represent the upper part (eyes and eyebrows) and the lower part (mouth) of the face, which are fundamental to defining emotions [37] according to the classification shown in Table 1. This work used OpenFace to detect Action Units, an open-source tool whose Python and Torch implementation performs facial recognition with deep neural networks [36]. OpenFace detects the Action Units that are present in emotions, as well as their level of intensity. The emotions analyzed in this research were happiness, anger, surprise, sadness, and neutral expressions.

The images of the dataset mentioned in Section 3.1 were processed using OpenFace to generate the structured data presented in Table 6. This table shows the emotions studied in the first column; the following columns give, for each sample, the intensity values of the AUs analyzed in each emotion.

The data in Table 6 are the basis of the Dataset-AU, which represents the features of the labeled face images and is evaluated by a machine learning system.

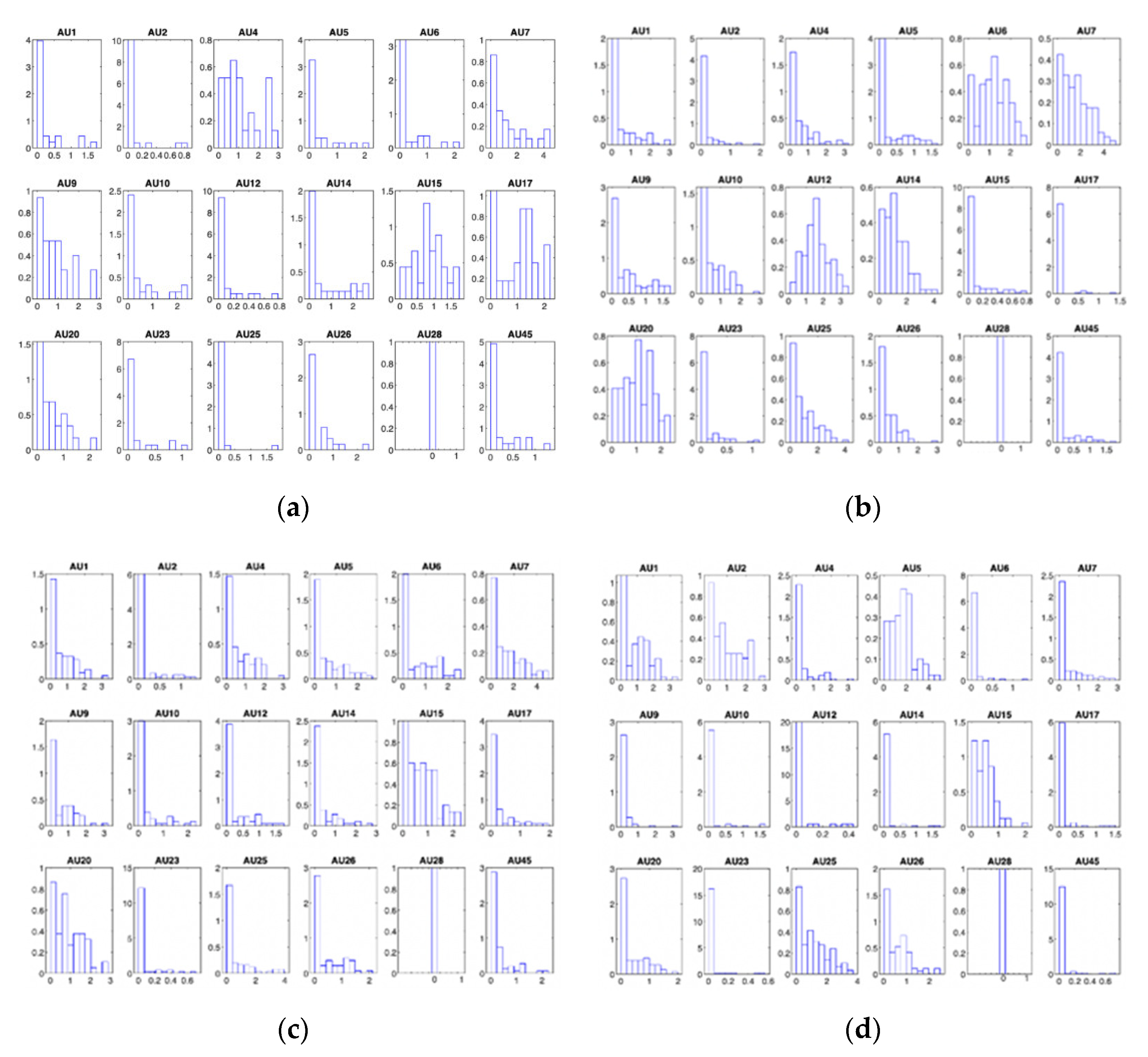

To analyze the behavior of the Action Units within each emotion, Figure 1 shows the normalized histograms for each emotion, according to the number of samples and the bin size. These histograms are derived from the AU intensities obtained with OpenFace in the information acquisition phase.

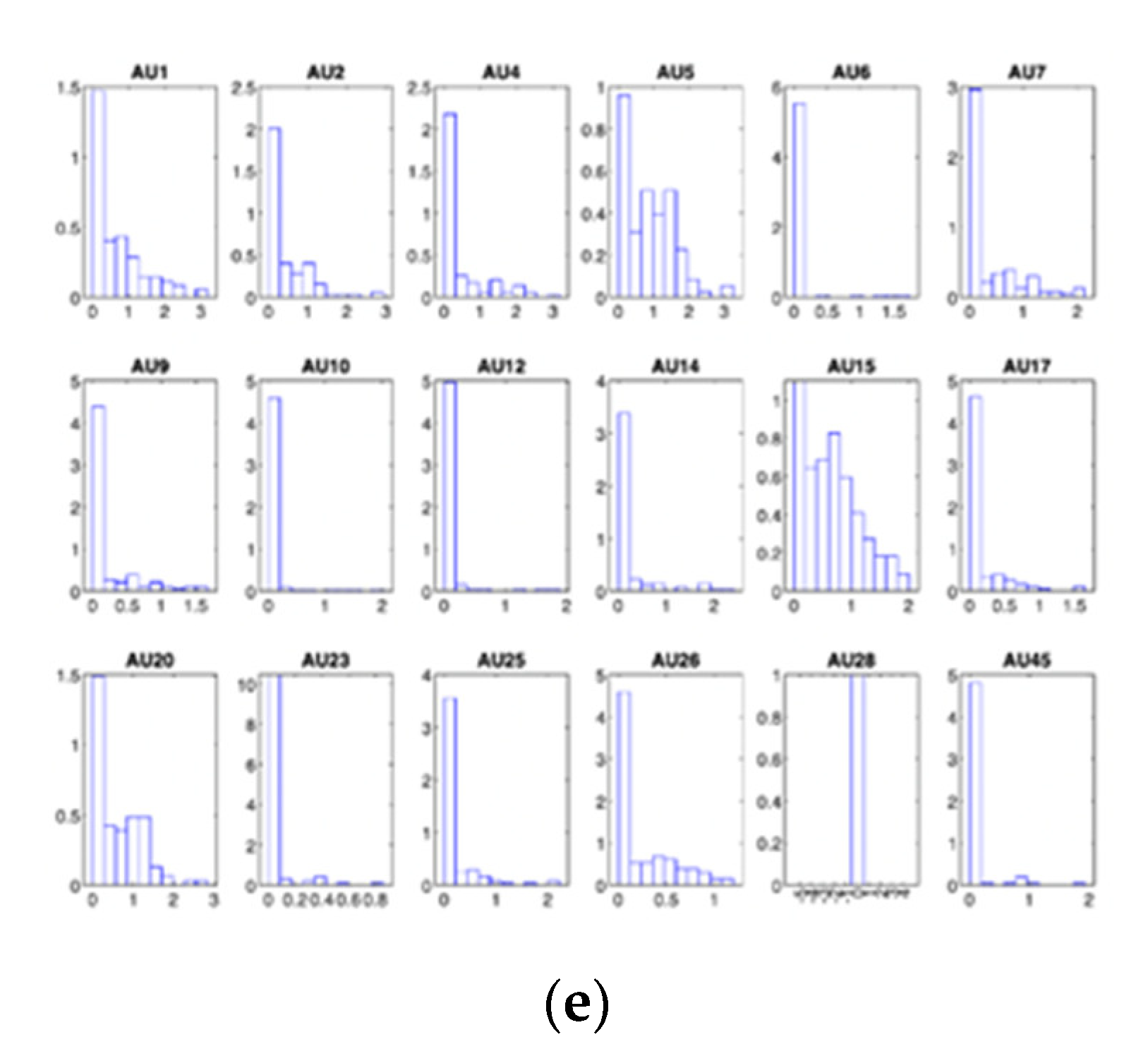

Figure 1 shows the degree of contribution of each AU within the emotion studied. This analysis yielded Figure 2, which takes the distributions presented in Figure 1 and gives the mean values of the intensity levels of the AUs for each emotion. For example, for happiness, AUs 6 and 12 approximated a normal distribution, while AUs 1 and 2 showed an exponential distribution. Figure 2 thus presents the characteristic emotion for each Action Unit.
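The step from per-sample intensities to a normalized histogram and a mean value can be sketched as follows. This is only an illustration under stated assumptions: the intensity range of 0 to 5, the bin width of 0.5, and the sample values are hypothetical and are not taken from the Dataset-AU.

```python
from statistics import mean


def relative_histogram(values, bin_width=0.5, max_value=5.0):
    """Return the relative frequency of `values` in equal-width bins.

    Assumes intensities lie in [0, max_value]; both the range and the
    bin width are illustrative choices, not the settings of the study.
    """
    if not values:
        raise ValueError("at least one intensity value is required")
    n_bins = int(round(max_value / bin_width))
    counts = [0] * n_bins
    for v in values:
        # Clamp the index so that v == max_value falls into the last bin.
        idx = min(int(v / bin_width), n_bins - 1)
        counts[idx] += 1
    total = len(values)
    return [c / total for c in counts]


# Hypothetical AU12 intensities for a few "happiness" samples.
au12_happiness = [2.1, 2.6, 3.0, 2.4, 1.9, 2.8]

hist = relative_histogram(au12_happiness)   # relative frequencies per bin
au12_mean = mean(au12_happiness)            # mean intensity for this AU and emotion
```

Repeating this for every AU and every emotion would give one histogram per pair, as in Figure 1, and one mean value per pair, as in Figure 2.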

The statistical analysis used the Action Units of the 555 images (Dataset-IMG) of the different people with DS described in Section 3.1. The highest mean value was chosen for each AU in Figure 2.

The highest mean value in Figure 2 represents a particular emotion; for example, the mean intensities of AUs 1, 2, 5, 25, and 26 were higher for surprise than for the other emotions. Sadness, however, had only AU45 as a representative value, and even this value was low, approximately 80% lower than AU5 for surprise (the AU with the highest intensity value within the analysis performed). The neutral emotion did not present the greatest intensity in any analyzed AU, so the second-greatest intensity in each Action Unit was taken as the analysis criterion. In a neutral expression, AUs should not be activated, as no facial expression is being made. However, these Action Units are active due to the anatomical features of the eyes of people with DS.

Table 7 was obtained from the analysis of Figure 2 by taking, for each analyzed AU, the emotions with the highest mean values, which is why they are considered the most representative of people with DS. Figure 2 also shows that the mean values of AU 23 were less than 10% of the highest mean values, so its intensities were not representative compared to those of the other AUs.
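The selection rule described above, ranking the emotions by mean intensity within each AU, can be sketched as follows. The mean values in the example are hypothetical placeholders, not the values behind Figure 2 or Table 7, and the function name is introduced only for this illustration.

```python
def rank_emotions_per_au(mean_intensity):
    """Map each AU to its emotions sorted by descending mean intensity."""
    ranking = {}
    for au, per_emotion in mean_intensity.items():
        ranking[au] = sorted(per_emotion, key=per_emotion.get, reverse=True)
    return ranking


# Hypothetical mean intensities per AU and emotion (illustrative only).
mean_intensity = {
    "AU05": {"anger": 0.3, "happiness": 0.2, "neutral": 0.4,
             "sadness": 0.1, "surprise": 1.5},
    "AU12": {"anger": 0.2, "happiness": 2.4, "neutral": 0.6,
             "sadness": 0.1, "surprise": 0.5},
}

ranking = rank_emotions_per_au(mean_intensity)
# Emotion with the highest mean for each AU.
top = {au: order[0] for au, order in ranking.items()}
# Second-highest emotion, the criterion the text applies to the neutral class.
runner_up = {au: order[1] for au, order in ranking.items()}
```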

3.3. Machine Learning Analysis in People with Down Syndrome

Various supervised machine learning techniques are used to classify the emotions of the Dataset-AU (described in Section 3.2), which contains the intensity levels of the AUs of people with DS. In [27,38], machine learning techniques are presented and developed for emotion recognition in people with TD. In this research, the performance of the techniques presented in [27,38] was analyzed for people with DS; the best results found are shown in Figure 3, Figure 4 and Figure 5 and were obtained with the techniques described below:

- Support Vector Machines (SVM) [27,38] based on a linear kernel.
- K-Nearest Neighbor (KNN) [27,39].
- Ensemble of subspace discriminant classifiers based on the AdaBoost method [39] with the Decision Tree learning technique.
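To make the classification setting concrete, the following minimal sketch applies a nearest-neighbor rule to AU-intensity vectors. It is not the configuration used in the study: the Euclidean distance, the value k = 3, the two-feature vectors, and all sample values are assumptions chosen only for illustration.

```python
from collections import Counter
from math import dist


def knn_predict(train_x, train_y, query, k=3):
    """Predict the label of `query` by majority vote among its k nearest
    training vectors (Euclidean distance; k and metric are assumptions)."""
    neighbors = sorted(
        zip(train_x, train_y), key=lambda pair: dist(pair[0], query)
    )[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]


# Hypothetical feature vectors: (AU04 intensity, AU12 intensity).
train_x = [(0.1, 2.5), (0.2, 2.8), (0.0, 2.2),
           (2.0, 0.1), (1.8, 0.3), (2.3, 0.0)]
train_y = ["happiness", "happiness", "happiness",
           "anger", "anger", "anger"]

pred_a = knn_predict(train_x, train_y, (0.3, 2.4))
pred_b = knn_predict(train_x, train_y, (1.9, 0.2))
```

In the Dataset-AU, each vector would hold the 18 AU intensities of one image rather than two values.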

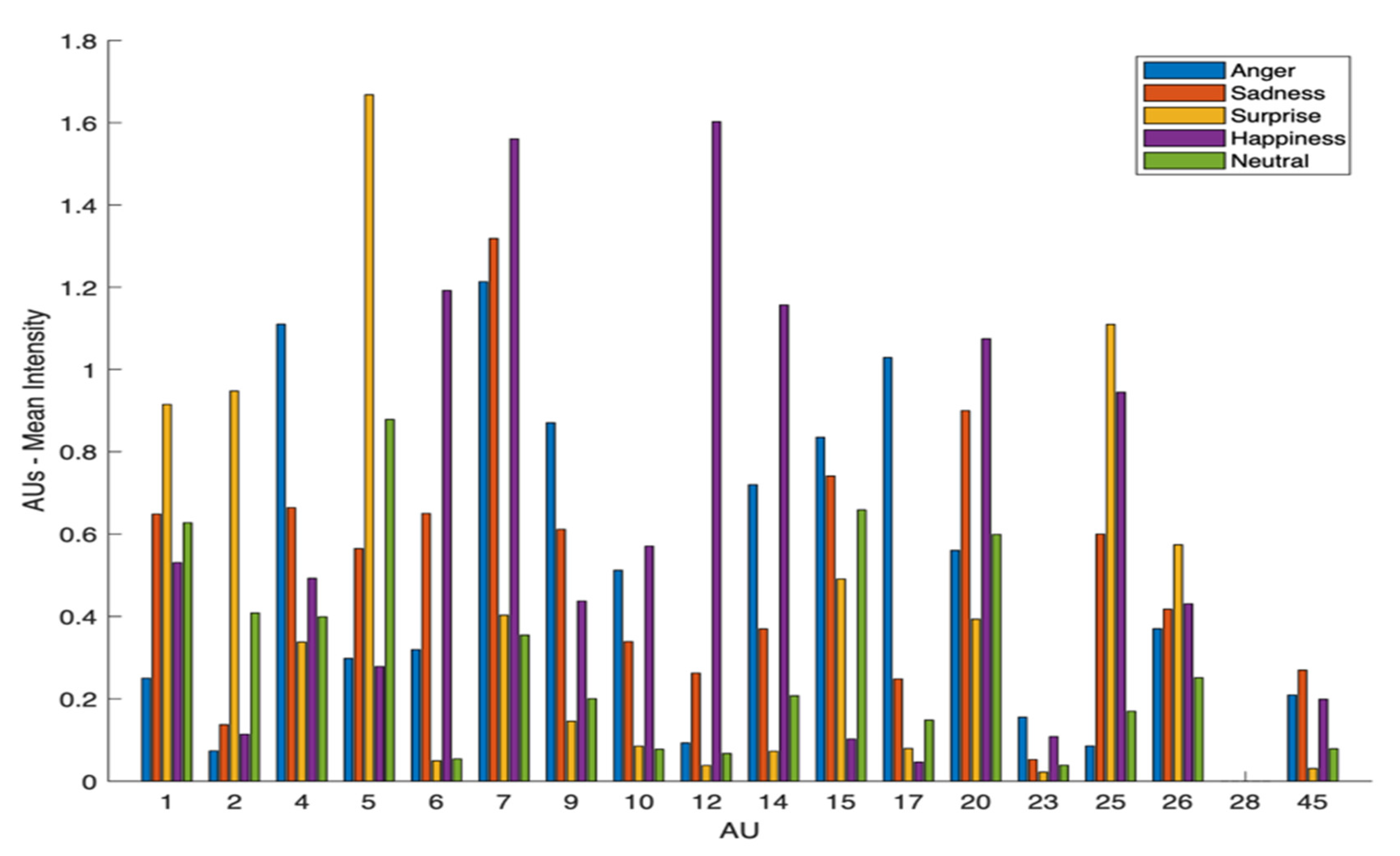

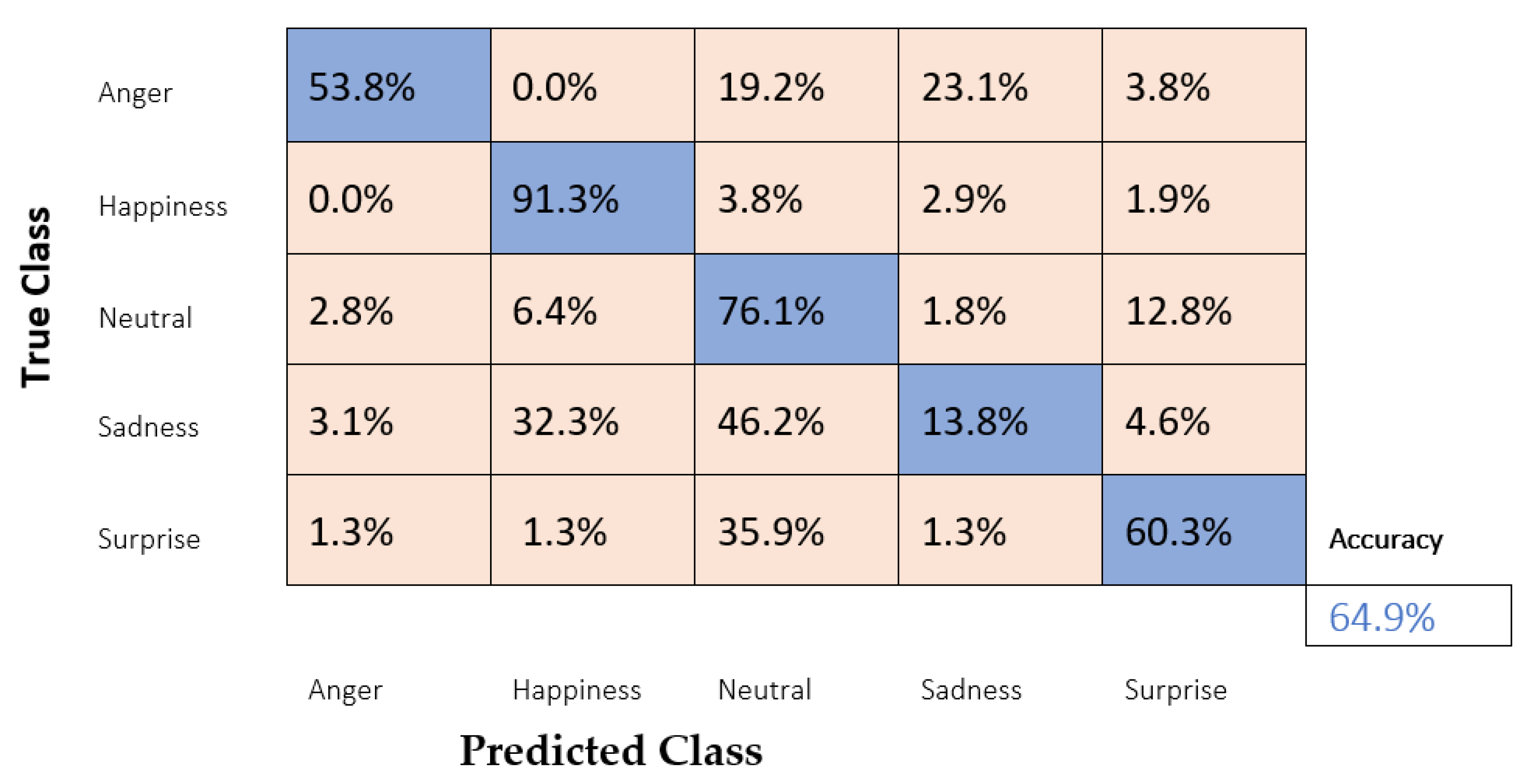

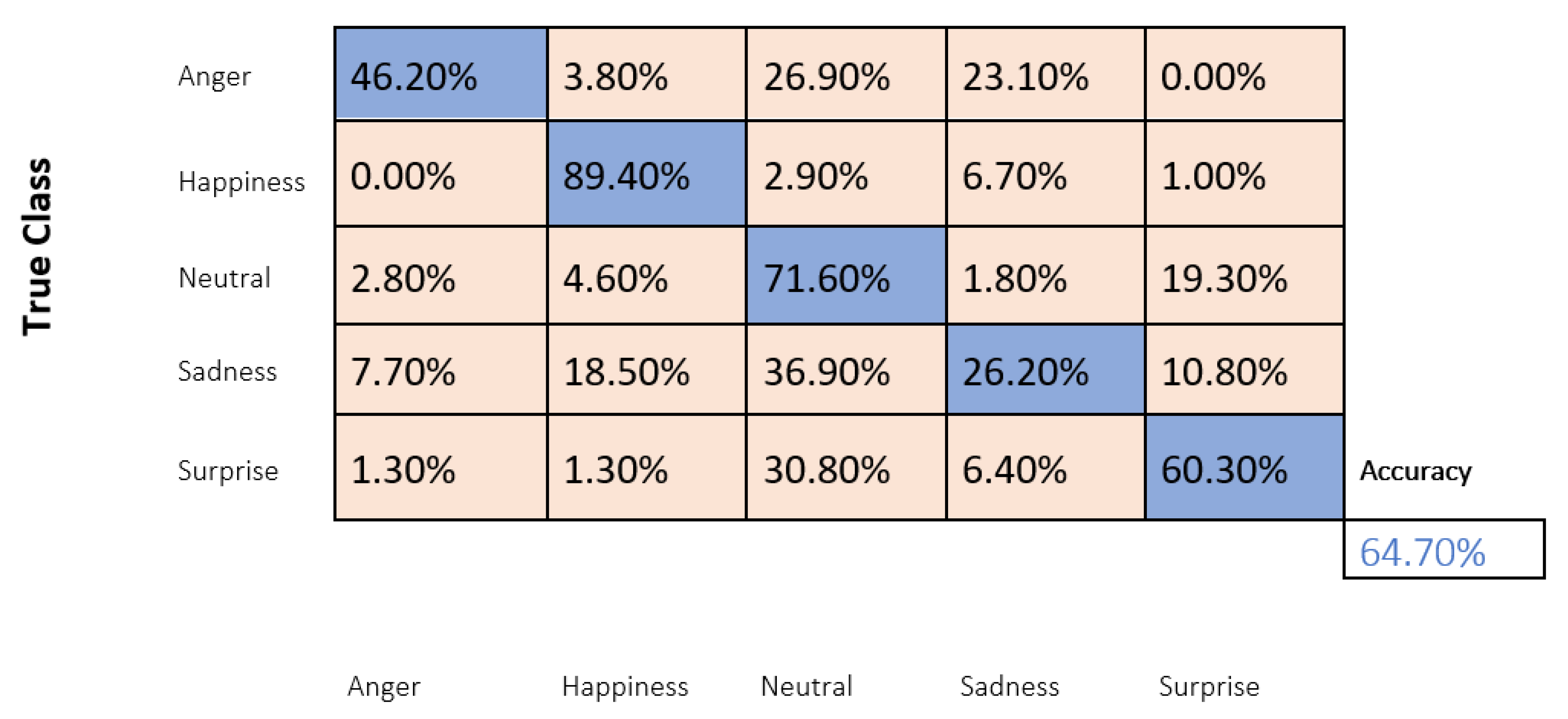

The results for the Dataset-AU obtained with these learning techniques are presented in Figure 3, Figure 4 and Figure 5 as confusion matrices. Each matrix shows the True Positive Rates (TPR) on its diagonal together with the False Negative Rates (FNR). The accuracy values of the three applied techniques were 66.2% for SVM, 64.9% for KNN, and 64.7% for the ensemble technique. Accuracy is a metric that evaluates classification models as the number of correctly classified samples over the total number of samples.
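The relation between a confusion matrix, the per-class TPR and FNR, and the overall accuracy can be sketched as follows. The counts are hypothetical and cover only three classes; they are not the values of Figure 3, Figure 4 or Figure 5.

```python
def per_class_rates(confusion, labels):
    """Compute TPR and FNR per class.

    `confusion[i][j]` is the number of samples of true class i
    predicted as class j (rows = true class, columns = predicted class).
    """
    rates = {}
    for i, label in enumerate(labels):
        row_total = sum(confusion[i])
        tpr = confusion[i][i] / row_total
        rates[label] = {"TPR": tpr, "FNR": 1.0 - tpr}
    return rates


def accuracy(confusion):
    """Correctly classified samples over the total number of samples."""
    correct = sum(confusion[i][i] for i in range(len(confusion)))
    total = sum(sum(row) for row in confusion)
    return correct / total


# Hypothetical counts for three emotions (illustrative only).
labels = ["happiness", "sadness", "surprise"]
confusion = [
    [9, 0, 1],
    [3, 4, 3],
    [1, 1, 8],
]

rates = per_class_rates(confusion, labels)
overall = accuracy(confusion)
```

A class such as the hypothetical sadness row above can have a low TPR while the overall accuracy remains moderate, which is the pattern discussed for anger and sadness.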

In Figure 3, Figure 4 and Figure 5, anger and sadness have low TPRs: for sadness, 30.8% (SVM), 13.8% (KNN), and 26.2% (Ensemble); for anger, 42.3% (SVM), 53.8% (KNN), and 46.2% (Ensemble). Other emotions, such as happiness, reached values greater than 82%.

Because emotions such as sadness and anger have low predictive values, it is necessary to analyze these cases. Furthermore, it must be considered that studies on people with DS have shown their difficulty in expressing negative emotions such as fear, sadness, and anger [35]. For this reason, the next step was to evaluate deep learning techniques to determine whether they improve the prediction of these emotions.

3.4. Deep Learning Analysis in People with Down Syndrome

The machine learning techniques used in Section 3.3 reached an accuracy of at most 66.2% and performed worst on sadness, with a TPR of at most 30.8%. This section discusses the use of deep neural networks (DNNs) to predict the emotions of people with DS in order to improve on these results.

To choose a technique, we investigated how DNN architectures such as AlexNet, VGG, ResNet, Xception, and SqueezeNet are used to recognize the facial expressions of people with TD [40,41,42,43], since there are still no studies focused on people with DS. Bianco [44] evaluated several DNN architectures in terms of accuracy, computational complexity, and memory usage. The experiment by Bianco [44] compared these indices on two machines with different computational capacities.

Table 8, based on the analysis carried out by Bianco [44], allows one to compare the techniques used for the recognition of the facial expressions of people with TD. The first column presents the architectures analyzed. The second column shows the accuracy; the lowest, 57%, was that of AlexNet, and the best was that of Xception, with 79%. The third column is the computational complexity based on the number of operations. SqueezeNet and Xception were the least complex systems, with 5 M and 10 M (M stands for millions of parameters), respectively. In contrast, the most complex system was VGG with 150 M. The fourth column shows the relationship between accuracy and the number of parameters, that is, efficiency, with Xception being the architecture that works best with 47%. Finally, the fifth column gives the number of frames processed per second (FPS); it is essential to analyze this point together with the accuracy, that is:

- ResNet renders between 70–300 FPS with a maximum accuracy of 73%.
- Xception processes 160 FPS, with an accuracy of 79%.
- AlexNet renders around 800 FPS with a 57% accuracy.

It is worth pointing out that, according to this analysis, Xception had a higher efficiency than the other systems; its model is not complex, and it works at a rate of 160 FPS, which is why this architecture was chosen for this investigation [44].

Xception’s CNN architecture is slightly different from the typical CNN model because fully connected layers are not used at the end. In addition, residual modules modify the desired mapping between subsequent layers, so that the learned features become the difference between the desired feature map and the original one.
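The residual mapping described above can be written compactly. The symbols are introduced only for this illustration and do not appear in the original text: $x$ is the input feature map of a module, $H(x)$ is the desired mapping, and $F(x)$ is what the stacked layers actually learn.

```latex
F(x) = H(x) - x
\quad\Longrightarrow\quad
H(x) = F(x) + x
```

Under this formulation the module only has to learn the residual $F(x)$, and the skip connection adds the input $x$ back to its output.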

Within the Xception architecture family, there is the mini-Xception architecture; as Arriaga [43] mentioned, mini-Xception reduces the number of parameters compared to Xception. The architecture combines the removal of the fully connected layer with the inclusion of depthwise separable convolutions and residual modules. Finally, the architectures are trained with the Adam optimizer.
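One reason depthwise separable convolutions reduce the number of parameters can be checked with a short calculation. The sketch below uses the standard parameter counts for a convolution with a square kernel and ignores bias terms; the kernel size and channel counts are arbitrary example values, not the layer sizes of mini-Xception.

```python
def standard_conv_params(kernel, c_in, c_out):
    """Weights of a standard convolution: one kernel x kernel x c_in
    filter per output channel (biases ignored)."""
    return kernel * kernel * c_in * c_out


def separable_conv_params(kernel, c_in, c_out):
    """Weights of a depthwise separable convolution: one kernel x kernel
    filter per input channel, followed by a 1 x 1 pointwise convolution
    that mixes channels (biases ignored)."""
    depthwise = kernel * kernel * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise


# Arbitrary example layer: 3 x 3 kernel, 64 input and 128 output channels.
standard = standard_conv_params(3, 64, 128)
separable = separable_conv_params(3, 64, 128)
reduction = standard / separable
```

For this example layer the separable form needs roughly eight times fewer weights than the standard form.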

In [45,46,47], the mini-Xception architecture for people with TD was evaluated using the FER2013 dataset containing 35,887 images of emotions such as happiness, anger, sadness, surprise, neutral, disgust, and fear. This dataset was used to recognize emotions, achieving an accuracy of up to 95%.

Mini-Xception was used with transfer learning in this work, considering that it was already trained with another dataset (FER2013) for emotion recognition. In our case, we took the dataset collected from people with DS, called Dataset-IMG (images) and described in Section 3.1. These data entered the mini-Xception architecture already trained with FER2013 as a block of test data. Then, the system returned the recognized emotion based on the highest prediction; that is, the emotion with the highest prediction value was the one the architecture reported as recognized.
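The decision rule in the last sentence, returning the emotion with the highest prediction value, can be sketched as follows. The score values and the function name are hypothetical; in practice the scores would come from the output layer of the pre-trained network.

```python
def recognize_emotion(scores):
    """Return the emotion label with the highest prediction value."""
    return max(scores, key=scores.get)


# Hypothetical prediction values for one image (illustrative only).
scores = {
    "anger": 0.05,
    "happiness": 0.70,
    "neutral": 0.10,
    "sadness": 0.05,
    "surprise": 0.10,
}

recognized = recognize_emotion(scores)
```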

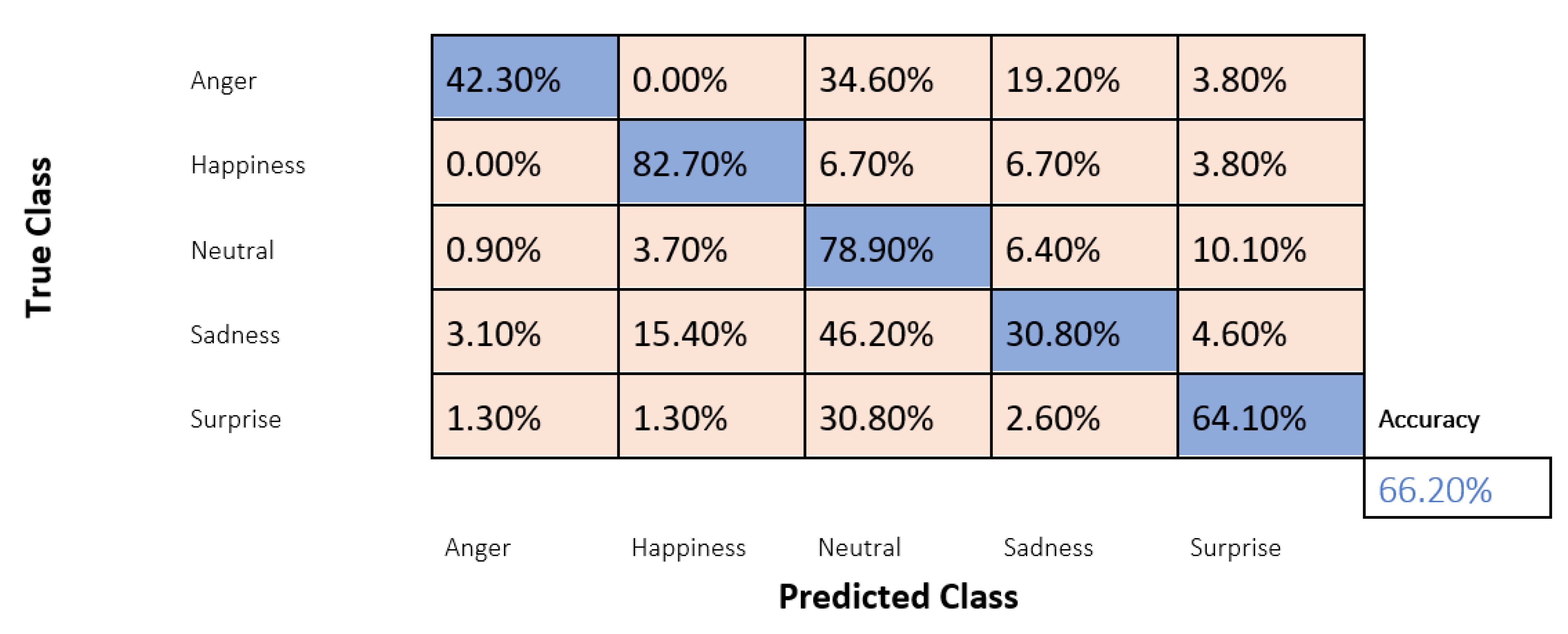

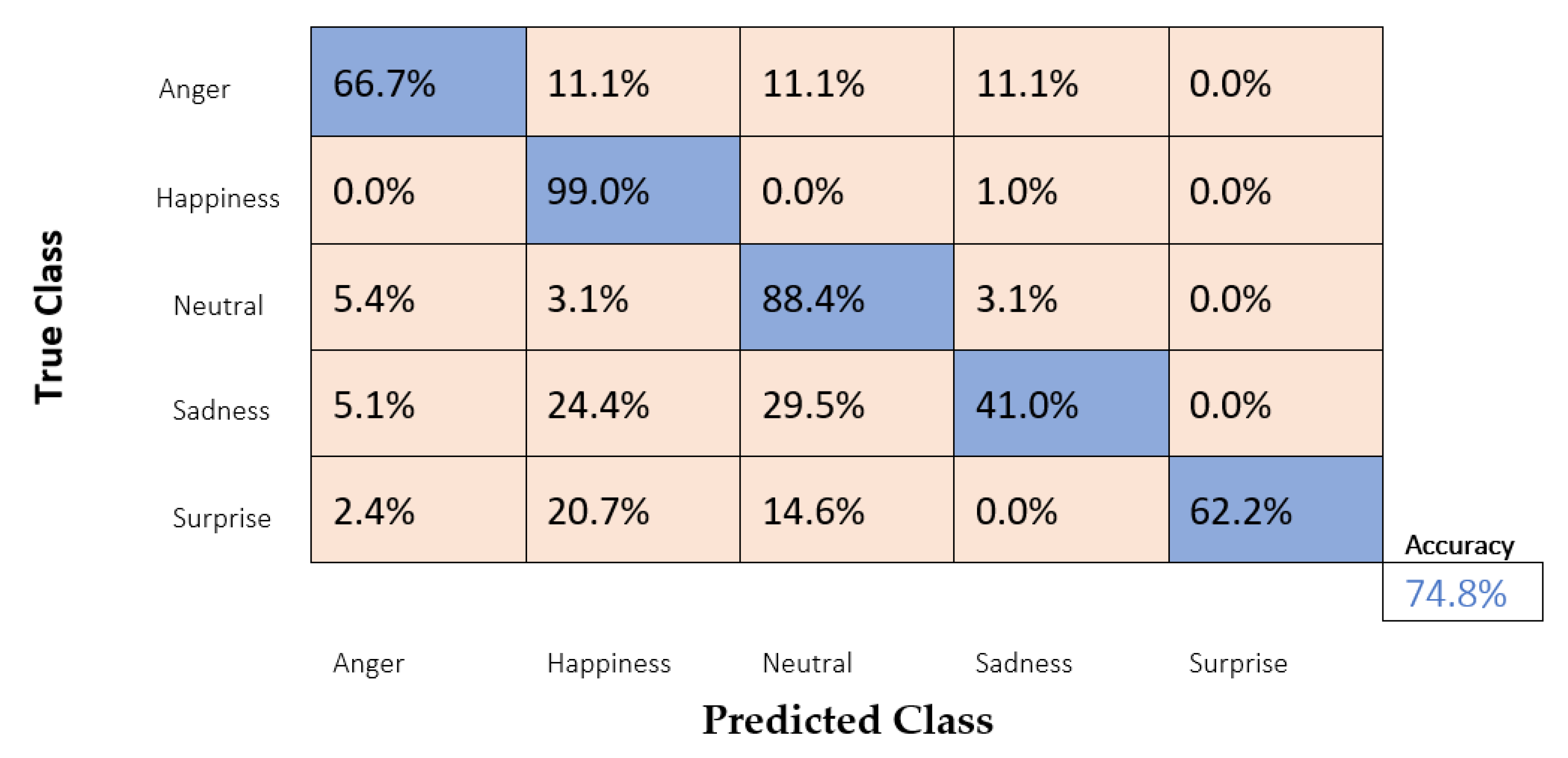

Figure 6 shows the confusion matrix obtained with this architecture, with the average accuracy over all the emotions improving to 74.8%. For sadness, an accuracy of 41% was achieved.

4. Results Analysis

Based on the dataset created with faces of people with DS for the five facial expressions (happiness, sadness, surprise, anger, and neutral), the intensities of the Action Units of the micro-expressions that define each emotion were obtained. The behavior of these intensities was evaluated through histograms of relative frequencies. Their level of activation was presented through the mean values of each Action Unit per emotion, which made it possible to define the most relevant Action Units for each emotion of people with DS; the results are summarized in Table 7. Table 7 also shows a high correlation between the Action Units that represent sadness and anger, especially AUs 4, 9, 15, and 17, which indicated from the beginning that these emotions were likely to be confused. In contrast, happiness and surprise were less correlated with the other emotions, which indicated a high probability of differentiating them.

The intensities of the Action Units for all the facial expressions obtained became the main input characteristics to work with machine learning techniques.

Based on the analysis summarized in Table 7 of Section 3.2, the AUs for each emotion of people with DS were selected according to the most relevant mean intensity values obtained from the Dataset-IMG, with the aim of finding the technique that gives the best accuracy when identifying the emotions of people with DS. The results of evaluating the machine learning and deep learning techniques described in Section 3.3 and Section 3.4 were first extracted as confusion matrices and then consolidated in Table 9. Based on the TPR, the emotion with the highest index was happiness, with values between 82.7% and 99%, using all the samples of a single class and applying the deep learning technique. On the other hand, the surprise emotion obtained a better TPR with machine learning techniques, reaching 64.7% in an analysis that depended exclusively on the intensity levels of the AUs.

Sadness achieved the lowest TPR, 13.8%, with the machine learning techniques, since its mean intensity values were not relevant, and reached a maximum of 41% with deep learning. In Table 7, the active AUs had greater relevance for the happiness emotion. Anger presented its best TPR of 53.8% with machine learning techniques and 66.7% with deep learning techniques.

The neutral expression using machine learning was close to 78.9%, reaching 88.4% with the deep learning approach.

The use of transfer learning allowed for the reuse of the Mini-Xception architecture previously trained with the FER2013 dataset. The dataset described in Section 3.1, made up of images of faces of people with DS and called Dataset-IMG (images), was used. These data entered the already-trained system (Mini-Xception) as a test data block. This architecture had already been tested in previous works to recognize emotions.

5. Conclusions and Discussion

This paper analyzed the typical facial features of people with DS at the moment they express their emotions, through the Action Units identified with the statistical analysis. The facial characteristics of the emotions of people with DS were evaluated using various artificial intelligence techniques, which were chosen and analyzed based on the results given in previous works for FER. To meet these objectives, a dataset of faces of this group of people was first created and classified according to the emotions of anger, happiness, sadness, surprise, and neutrality.

To obtain the characteristics of the micro-expressions that occur in the emotions of this target group, the intensity values of the Action Units defined in the structure of the FACS were obtained using the OpenFace software.

The intensity of all the Action Units described in Section 3.2 was obtained for each emotion. Additionally, the Action Units that presented the highest mean intensity levels for each emotion were selected as the most representative, and their behavior was analyzed through histograms of relative frequencies. From these results, it was deduced that happiness and surprise are less correlated with the other emotions, which increases the probability of differentiating and classifying them. On the other hand, anger and sadness are highly correlated, which leads to a higher level of confusion between these emotions.

The Action Units obtained were used as features for machine learning training, where SVM presented the best performance, with an accuracy of 66.2%, whereas works in the literature for people with TD report an accuracy greater than 80% [27,38]. KNN, however, classified the emotions of happiness and anger with a higher TPR.

In addition, the images of the collected database were evaluated through transfer learning based on a convolutional neural network (Mini-Xception) applied to images of people with DS, obtaining an accuracy of 74.8%, whereas works carried out with this architecture on databases of people with TD obtained an accuracy of 66% [45]. In [47], the authors analyzed emotion recognition in real time with an accuracy of around 80.77%, and Behera [46] used the Mini-Xception architecture and time series analysis, obtaining a maximum recognition percentage of 40%. Happiness was recognized with more than 90% accuracy for people with both DS and TD. The results showed a similar performance in accuracy for both groups of people.

Based on the obtained results, this proposal opens a research field that considers this group of people, who require support tools in their daily activities due to their physical and cognitive characteristics. This work constitutes a starting point for proposing algorithms, based on the characteristics of this group of people, that assess their emotions in real time within daily activities such as stimulation sessions. The algorithms developed will support the automatic recognition of emotions both in person and in activities carried out through videoconferences; that is, this study will be applied to virtual and face-to-face activities.

As the objective of future research is also to work with people with DS who attend institutions, it will be essential to include the images obtained from the stimulation sessions in the image dataset and to retrain the techniques evaluated in this article to improve the accuracy of the classification of emotions with people with DS and to even work on particular cases. Finally, the analysis of micro-expression behavior also leaves the option of generating synthetic samples based on their relative frequency histogram structured as probability density functions, which allows the recreation of different types of gestures that reflect the emotions typical of people with DS.