Abstract

In this paper, we propose a stochastic gradient descent algorithm, called stochastic gradient descent method-based generalized pinball support vector machine (SG-GPSVM), to solve data classification problems. This approach was developed by replacing the hinge loss function in the conventional support vector machine (SVM) with a generalized pinball loss function. We show that SG-GPSVM is convergent and that it approximates the conventional generalized pinball support vector machine (GPSVM). Further, the symmetric kernel method was adopted to evaluate the performance of SG-GPSVM as a nonlinear classifier. Our suggested algorithm surpasses existing methods in terms of noise insensitivity, resampling stability, and accuracy for large-scale data scenarios, according to the experimental results.

1. Introduction

Support vector machine (SVM) is a popular supervised binary classification algorithm based on statistical learning theory. Initially proposed by Vapnik [1,2,3], it has been gaining more and more attention. There have been many algorithmic and modeling variations of it, and it is a powerful pattern classification tool that has had many applications in various fields during recent years, including face detection [4,5], text categorization [6], electroencephalogram signal classification [7], financial regression [8,9,10,11], image retrieval [12], remote sensing [13], feature extraction [14], etc.

The concept of the conventional SVM is to find an optimal hyperplane that results in the greatest separation of the different categories of observations. The conventional SVM uses the well-known hinge loss function; however, it is sensitive to noise, especially noise around the decision boundary, and is not stable in resampling [15]. As a result, many researchers have proposed new SVM methods by changing the loss function. The SVM model with the pinball loss function (Pin-SVM) was proposed by Huang [16] to treat noise sensitivity and instability in resampling. The outcome is less sensitive to noise and is related to the quantile distance. On the other hand, Pin-SVM cannot achieve sparsity. To achieve sparsity, a modified $\varepsilon$-insensitive zone for Pin-SVM was proposed. This method does not consider the patterns that lie in the insensitive zone while building the classifier, and its formulation requires the value of $\varepsilon$ to be specified beforehand; therefore, a bad choice may affect its performance. Motivated by these developments, Rastogi [17] recently proposed the modified $(\varepsilon_1, \varepsilon_2)$-insensitive zone support vector machine. This method is an extension of existing loss functions that accounts for noise sensitivity and resampling stability.

However, practical problems require processing large-scale datasets, while the existing solvers are not computationally efficient. Since the generalized pinball loss SVM (GPSVM) still requires solving a large quadratic programming problem (QPP), in the primal as well as in the dual formulation, these techniques have difficulty handling large-scale problems [18,19]. Different solving methods have been proposed for large-scale SVM problems. Optimization techniques such as sequential minimal optimization (SMO) [20], successive over-relaxation (SOR) [21], and the dual coordinate descent method (DCD) [22] have been proposed to solve the SVM problem and the dual problem of SVM. However, these methods necessitate computing the inverse of large matrices. Consequently, the dual solutions of SVM cannot be effectively applied to large-scale problems. Moreover, a main challenge for the conventional SVM is its high computational complexity in the number of training samples, i.e., $O(m^3)$, where m is the total number of training samples. As a result, the stochastic gradient descent algorithm (SGD) [23,24] has been proposed to solve the primal problem of SVM. As an application of the stochastic subgradient descent method, Pegasos [25] obtained an $\varepsilon$-accuracy solution for the primal problem in $\tilde{O}(1/\varepsilon)$ iterations with the cost per iteration being $O(n)$, where n is the feature dimension. This technique partitions a large-scale problem into a series of subproblems by stochastic sampling with a suitable size. The SGD for SVM has been shown to be the fastest method among the SVM-type classifiers for large-scale problems [23,26,27,28].

In order to overcome the above-mentioned limitations on large-scale problems, and inspired by the studies of SVM and the generalized pinball loss function, we propose a novel stochastic subgradient descent method with generalized pinball support vector machine (SG-GPSVM). The proposed technique is an efficient method for real-world datasets, especially large-scale ones. Furthermore, we prove theorems that guarantee the approximation and convergence of our proposed algorithm. Finally, the experimental results show that the proposed SG-GPSVM approach outperforms the existing approaches in terms of accuracy and that it may converge faster and more efficiently than the conventional SVM. The results also show that our proposed SG-GPSVM is noise insensitive, is more stable in resampling, and can handle large-scale problems.

The structure of the paper is as follows. Section 2 outlines the background. SG-GPSVM is proposed in Section 3, which includes both linear and nonlinear cases. Section 4 analyzes the convergence of SG-GPSVM and its relation to GPSVM. In Section 5, experiments on artificial datasets and on benchmark datasets available in the UCI Machine Learning Repository [29], with different noises, are conducted to verify the effectiveness of our SG-GPSVM against two other algorithms, i.e., conventional SVM and Pegasos [25]. Finally, the conclusion is given in Section 6.

2. Related Work and Background

The purpose of this section is to review related methods for binary classification problems. This section contains some notations and definitions that are used throughout the paper. Let us consider a binary classification problem of m data points in the n-dimensional Euclidean space $\mathbb{R}^n$. Here, we denote the set of training samples by $S = \{(x_i, y_i)\}_{i=1}^{m}$, where $x_i \in \mathbb{R}^n$ is a sample with a label $y_i \in \{-1, 1\}$. Below, we give a brief outline of several related methods.

Support Vector Machine

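The SVM model consists of maximizing the distance between the two bounding hyperplanes that bound the classes, so the SVM model is generally formulated as a convex quadratic programming problem. Let $\|\cdot\|$ denote the Euclidean norm, or two-norm, of a vector in $\mathbb{R}^n$. Given a training set $\{(x_i, y_i)\}_{i=1}^{m}$, the strategy of SVM is to find the maximum margin separating hyperplane $w^\top x + b = 0$ between two classes by solving the following problem:

$$\min_{w,b,\xi}\ \frac{1}{2}\|w\|^2 + C\sum_{i=1}^{m}\xi_i \quad \text{s.t.}\quad y_i(w^\top x_i + b) \ge 1 - \xi_i,\quad \xi_i \ge 0,\quad i = 1,\dots,m, \tag{1}$$

where C is a penalty parameter and $\xi_i$ are the slack variables. By introducing the Lagrangian multipliers $\alpha_i$, we derive its dual QPP as follows:

$$\min_{\alpha}\ \frac{1}{2}\sum_{i=1}^{m}\sum_{j=1}^{m}\alpha_i\alpha_j y_i y_j x_i^\top x_j - \sum_{i=1}^{m}\alpha_i \quad \text{s.t.}\quad \sum_{i=1}^{m} y_i\alpha_i = 0,\quad 0 \le \alpha_i \le C,\quad i = 1,\dots,m. \tag{2}$$

After optimizing this dual QPP, we obtain the following decision function:

$$f(x) = \operatorname{sgn}\Big(\sum_{i=1}^{m}\alpha_i^{*} y_i x_i^\top x + b^{*}\Big),\qquad b^{*} = \frac{1}{N_{SV}}\sum_{j:\,0<\alpha_j^{*}<C}\Big(y_j - \sum_{i=1}^{m}\alpha_i^{*} y_i x_i^\top x_j\Big), \tag{3}$$

where $\alpha^{*}$ is the solution of the dual problem (Equation (2)) and $N_{SV}$ represents the number of support vectors satisfying $0 < \alpha_j^{*} < C$. In fact, the SVM problem (Equation (1)) can be rewritten as the following unconstrained optimization problem [30]:

$$\min_{w,b}\ \frac{1}{2}\|w\|^2 + C\sum_{i=1}^{m} L_{\text{hinge}}\big(1 - y_i(w^\top x_i + b)\big), \tag{4}$$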

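where $L_{\text{hinge}}(u) = \max\{0, u\}$ is the so-called hinge loss function. This loss is related to the shortest distance between the sets, and the corresponding classifier is therefore sensitive to noise and unstable in resampling [31]. To address the noise sensitivity, Huang [16] replaced the hinge loss function with the pinball loss function in the SVM classifier (Pin-SVM). This model also penalizes correctly classified samples, which is evident from the pinball loss function, defined as follows:

$$L_{\tau}(u) = \begin{cases} u, & u \ge 0,\\ -\tau u, & u < 0,\end{cases} \tag{5}$$

where $u = 1 - y(w^\top x + b)$ and $\tau \in [0, 1]$. Although the pinball loss function achieves noise insensitivity, in the process, it cannot achieve sparsity. This is because the pinball loss function’s subgradient is nonzero almost everywhere. Therefore, to achieve sparsity, in the same publication, Huang [16] proposed the $\varepsilon$-insensitive pinball loss function, which is insensitive to noise and stable in resampling. The $\varepsilon$-insensitive pinball loss function is defined by:

$$L_{\tau}^{\varepsilon}(u) = \begin{cases} u - \varepsilon, & u > \varepsilon,\\ 0, & -\varepsilon/\tau \le u \le \varepsilon,\\ -\tau u - \varepsilon, & u < -\varepsilon/\tau,\end{cases} \tag{6}$$

where $\tau$ and $\varepsilon$ are user-defined parameters. On the other hand, the $\varepsilon$-insensitive pinball loss function necessitates the selection of an ideal parameter. Rastogi [17] introduced the $(\varepsilon_1, \varepsilon_2)$-insensitive zone pinball loss function, also known as the generalized pinball loss function. The generalized pinball loss function is defined by:

$$L_{\tau_1,\tau_2}^{\varepsilon_1,\varepsilon_2}(u) = \begin{cases} \tau_1 u - \varepsilon_1, & u > \varepsilon_1/\tau_1,\\ 0, & -\varepsilon_2/\tau_2 \le u \le \varepsilon_1/\tau_1,\\ -\tau_2 u - \varepsilon_2, & u < -\varepsilon_2/\tau_2,\end{cases} \tag{7}$$

where $\tau_1, \tau_2, \varepsilon_1, \varepsilon_2$ are user-defined parameters. After employing the generalized pinball loss function instead of the hinge loss function in problem (1), we obtain the following optimization problem:

$$\min_{w,b}\ \frac{1}{2}\|w\|^2 + C\sum_{i=1}^{m} L_{\tau_1,\tau_2}^{\varepsilon_1,\varepsilon_2}\big(1 - y_i(w^\top x_i + b)\big). \tag{8}$$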

By introducing the Lagrangian multipliers , we derive its dual QPP as follows:

A new data point is classified as positive or negative according to the final decision function:

where and are the solution to the dual problem (Equation (9)).
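The loss functions reviewed in this section can be compared numerically. The following is a minimal NumPy sketch, assuming the standard max-form of each loss with the argument u = 1 - y(w·x + b); the function names and the parameter values in the usage note are illustrative and are not taken from the original experiments.

```python
import numpy as np


def hinge_loss(u):
    """Hinge loss max{0, u}, where u = 1 - y * (w @ x + b)."""
    u = np.asarray(u, dtype=float)
    return np.maximum(0.0, u)


def pinball_loss(u, tau):
    """Pinball loss: u for u >= 0 and -tau * u for u < 0 (tau >= 0)."""
    u = np.asarray(u, dtype=float)
    return np.maximum(u, -tau * u)


def eps_insensitive_pinball_loss(u, tau, eps):
    """Pinball loss with a zero zone on [-eps / tau, eps]."""
    u = np.asarray(u, dtype=float)
    return np.maximum.reduce([u - eps, np.zeros_like(u), -tau * u - eps])


def generalized_pinball_loss(u, tau1, tau2, eps1, eps2):
    """Generalized pinball loss with a zero zone on [-eps2 / tau2, eps1 / tau1]."""
    u = np.asarray(u, dtype=float)
    return np.maximum.reduce(
        [tau1 * u - eps1, np.zeros_like(u), -tau2 * u - eps2]
    )
```

For example, with the illustrative values tau1 = 1, tau2 = 0.5, and eps1 = eps2 = 0.5, `generalized_pinball_loss` is zero for every u in [-1, 0.5], whereas `pinball_loss` is zero only at u = 0, which is the sparsity difference discussed above.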

In the next section, we go over the core of our proposed technique and present its pseudocode.

3. Proposed Stochastic Subgradient Generalized Pinball Support Vector Machine

In this section, we apply the stochastic subgradient approach to our SG-GPSVM formulation, which is based on the generalized pinball loss function. Our SG-GPSVM can be used in both linear and nonlinear cases.

3.1. Linear Case

Following the method of formulating SVM problems (discussed in Equation (4)), we incorporate the generalized pinball loss function (Equation (7)) in the objective function to obtain the convex unconstrained minimization problem:

where $w$ and $b$ are the weight vector and bias, respectively, and $C$ is the penalty parameter. To apply the stochastic subgradient approach, at each iteration t, we propose a more general method that uses k samples, where $1 \le k \le m$. We choose a subset $A_t$ of the training set with $|A_t| = k$, where the k samples are drawn uniformly at random. Let us consider a model of stochastic optimization problems. Let $(w_t, b_t)$ denote the current hyperplane iterate, and we obtain an approximate objective function:

When $k = m$, at each iteration t, the approximate objective function is the original objective function in problem (11). Let $\nabla_t$ be a subgradient of the approximate objective function associated with the minibatch index set $A_t$ at the point $(w_t, b_t)$, that is:

where:

With the above notations and the existence of , can be written as:

Then, is updated using a step size of . Additionally, a new sample z is predicted by:

The above steps can be outlined as Algorithm 1.

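Algorithm 1. SG-GPSVM.

Input: training samples, positive parameters, and a tolerance.

1: Set the initial iterate $(w_1, b_1)$ to zero;

2: while the stopping criterion given by the tolerance is not met do

3: Choose a minibatch $A_t$ of k training samples uniformly at random.

4: Compute the stochastic subgradient using Equation (15).

5: Update the iterate $(w_t, b_t)$ with the current step size.

6: Set $t = t + 1$.

7: end

Output: Optimal hyperplane parameters $(w, b)$.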
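The steps of Algorithm 1 can be sketched in NumPy as follows. This is a minimal sketch under stated assumptions rather than the exact procedure of the paper: it assumes the objective (1/2)(‖w‖² + b²) + (C/k) Σ L(1 - y_i(w·x_i + b)) over the minibatch, the step size 1/t, and a fixed iteration budget `n_iter` in place of the tolerance test. The function names and all default values are hypothetical.

```python
import numpy as np


def gpinball_subgrad(u, tau1, tau2, eps1, eps2):
    """One subgradient of the generalized pinball loss at each entry of u."""
    g = np.zeros_like(u, dtype=float)
    g[u > eps1 / tau1] = tau1      # right linear branch
    g[u < -eps2 / tau2] = -tau2    # left linear branch
    return g                       # zero inside the insensitive zone


def sg_gpsvm_fit(X, y, C=1.0, tau1=1.0, tau2=0.5, eps1=0.1, eps2=0.1,
                 k=16, n_iter=2000, seed=0):
    """Minibatch stochastic subgradient descent (assumed objective, see text)."""
    rng = np.random.default_rng(seed)
    m, n = X.shape
    w, b = np.zeros(n), 0.0
    for t in range(1, n_iter + 1):
        idx = rng.choice(m, size=min(k, m), replace=False)  # minibatch A_t
        Xb, yb = X[idx], y[idx]
        u = 1.0 - yb * (Xb @ w + b)
        s = gpinball_subgrad(u, tau1, tau2, eps1, eps2)
        # Chain rule: d u_i / d w = -y_i x_i and d u_i / d b = -y_i.
        grad_w = w - (C / len(idx)) * (Xb.T @ (s * yb))
        grad_b = b - (C / len(idx)) * np.sum(s * yb)
        eta = 1.0 / t                                       # assumed step size
        w = w - eta * grad_w
        b = b - eta * grad_b
    return w, b


def sg_gpsvm_predict(X, w, b):
    """Label a sample by the sign of w @ x + b."""
    return np.where(X @ w + b >= 0, 1, -1)
```

A typical call is `w, b = sg_gpsvm_fit(X, y)` followed by `sg_gpsvm_predict(X_new, w, b)`, with labels in {-1, 1}. Each iteration touches only k samples, so the per-iteration cost is independent of m.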

3.2. Nonlinear Case

The support vector machine has the advantage that it can be employed with symmetric kernels rather than with direct access to the feature vectors x; that is, instead of considering predictors that are linear functions of the training samples x themselves, predictors that are linear functions of some implicit mapping $\varphi(x)$ of the instances are considered. In order to extend the linear SG-GPSVM to the nonlinear case by a symmetric kernel trick [32,33], the symmetric-kernel-generated surfaces are considered instead of hyperplanes and are given by:

Then, the primal problem for the nonlinear SG-GPSVM is as follows:

where $\varphi$ is a nonlinear mapping function, which maps x into a higher-dimensional feature space. To apply the stochastic subgradient approach, at each iteration t, we propose a more general method that uses k samples, where $1 \le k \le m$. We choose a subset $A_t$ of the training set with $|A_t| = k$, where the k samples are drawn uniformly at random. Consider an approximate objective function:

Then, consider the subgradient of the above approximate objective, and let be the subgradient of f at , that is:

where is defined in Equation (14), and similar to the linear case, exists. Then, can be written as:

Then, update using a step size of . Additionally, a new sample x can be predicted by:

The above steps can be outlined as Algorithm 2.

Algorithm 2. Nonlinear SG-GPSVM.

Input: training samples, positive parameters, and a tolerance.

1: Set the initial iterate to zero;

2: while the stopping criterion given by the tolerance is not met do

3: Choose a minibatch $A_t$ of k training samples uniformly at random.

4: Compute the stochastic subgradient using Equation (20).

5: Update the iterate with the current step size.

6: Set $t = t + 1$.

7: end

Output: Optimal hyperplane parameters.

However, the mapping $\varphi$ is never specified explicitly, but rather through a symmetric kernel operator $K(x, x') = \langle \varphi(x), \varphi(x') \rangle$ yielding the inner products after the mapping $\varphi$.
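Because only inner products are needed, the nonlinear update can be carried out on kernel expansion coefficients. The sketch below assumes that the weight vector is represented as w = Σ_j β_j φ(x_j), that the objective is (1/2)(‖w‖² + b²) plus the minibatch generalized pinball loss, and that the step size is 1/t; the coefficient vector `beta`, the function names, and the defaults are hypothetical and may differ from the formulation used for Algorithm 2.

```python
import numpy as np


def rbf_kernel(A, B, gamma=1.0):
    """Symmetric RBF kernel: K[i, j] = exp(-gamma * ||A[i] - B[j]||^2)."""
    sq = (np.sum(A ** 2, axis=1)[:, None]
          + np.sum(B ** 2, axis=1)[None, :]
          - 2.0 * A @ B.T)
    return np.exp(-gamma * np.maximum(sq, 0.0))


def kernel_sg_gpsvm_fit(K, y, C=1.0, tau1=1.0, tau2=0.5, eps1=0.1, eps2=0.1,
                        k=16, n_iter=1000, seed=0):
    """Stochastic subgradient descent on the coefficients of w in feature space."""
    rng = np.random.default_rng(seed)
    m = K.shape[0]
    beta, b = np.zeros(m), 0.0
    for t in range(1, n_iter + 1):
        idx = rng.choice(m, size=min(k, m), replace=False)
        # <w, phi(x_i)> = sum_j beta_j K(x_j, x_i) for the sampled rows.
        u = 1.0 - y[idx] * (K[idx] @ beta + b)
        s = np.where(u > eps1 / tau1, tau1,
                     np.where(u < -eps2 / tau2, -tau2, 0.0))
        eta = 1.0 / t                                   # assumed step size
        scale = eta * C / len(idx)
        # w <- (1 - eta) w + eta (C / k) sum_i s_i y_i phi(x_i)
        beta *= (1.0 - eta)
        beta[idx] += scale * s * y[idx]
        b = (1.0 - eta) * b + scale * np.sum(s * y[idx])
    return beta, b


def kernel_predict(K_new, beta, b):
    """K_new[i, j] = K(new sample i, training sample j)."""
    return np.where(K_new @ beta + b >= 0, 1, -1)
```

New samples are labeled with `kernel_predict(rbf_kernel(X_new, X_train, gamma), beta, b)`. The full m-by-m kernel matrix is precomputed here for brevity, which is only practical for moderate m.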

4. Convergence Analysis

In this section, we analyze the convergence of the proposed SG-GPSVM model. For convenience, we only consider the optimization problem (Equation (11)); the conclusions for the nonlinear algorithm can be obtained similarly. The iterate and the step size are updated by:

i.e.,

To prove the convergence of Algorithm 1, we first consider the boundedness of the iterates. In fact, we have the following lemma.

Lemma 1.

Proof of Lemma 1.

Equation (22) can be rewritten as:

where , I is the identity matrix, and . For , is positive definite, and the largest eigenvalue of is equal to . From Equation (23), we have:

where:

For any ,

Therefore,

Next, consider . In Case I for , we have:

and in Case II, for , we have:

From Case I and Case II, we have:

Thus,

Let be the largest norm of the samples in the dataset and:

that is . For ,

□
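A boundedness property of this kind can be checked numerically. The sketch below is an illustration under assumptions, not a restatement of Lemma 1: it assumes the update u_{t+1} = (1 - 1/t) u_t + (1/t) g_t for the stacked iterate u_t = (w_t, b_t), where g_t is the minibatch loss term. Under that assumed update, every iterate is a convex combination of vectors whose norm is at most C · max(τ1, τ2) · max_i ‖(x_i, 1)‖, and the code tracks the largest norm observed against this bound. All names and defaults are hypothetical.

```python
import numpy as np


def max_iterate_norm(X, y, C=1.0, tau1=1.0, tau2=0.5, eps1=0.1, eps2=0.1,
                     k=8, n_iter=500, seed=0):
    """Return (largest ||(w_t, b_t)|| observed, bound implied by the assumed update)."""
    rng = np.random.default_rng(seed)
    Z = np.hstack([X, np.ones((X.shape[0], 1))])   # augmented samples (x_i, 1)
    u_vec = np.zeros(Z.shape[1])                    # stacked iterate (w_t, b_t)
    bound = C * max(tau1, tau2) * np.max(np.linalg.norm(Z, axis=1))
    largest = 0.0
    for t in range(1, n_iter + 1):
        idx = rng.choice(len(y), size=min(k, len(y)), replace=False)
        r = 1.0 - y[idx] * (Z[idx] @ u_vec)
        s = np.where(r > eps1 / tau1, tau1,
                     np.where(r < -eps2 / tau2, -tau2, 0.0))
        g = (C / len(idx)) * (Z[idx].T @ (s * y[idx]))  # ||g|| <= bound
        u_vec = (1.0 - 1.0 / t) * u_vec + (1.0 / t) * g
        largest = max(largest, float(np.linalg.norm(u_vec)))
    return largest, bound
```

Calling `largest, bound = max_iterate_norm(X, y)` on any dataset with labels in {-1, 1} should give `largest <= bound`, because the triangle inequality applies to each convex combination.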

Using Lemma 1, the following theorem establishes the convergence property of Algorithm 1.

Theorem 1.

Proof of Theorem 1.

Note that an infinite series of vectors is convergent if its norm series is convergent [34]. Therefore, the following limit exists:

The following theorem gives the relation between GPSVM and our proposed SG-GPSVM.

Theorem 2.

Proof of Theorem 2.

By the definition of , we have:

As f is convex and is the subgradient of f at , we have:

By summing over to T and dividing by T, we obtain the following inequality:

Since , we have:

Summing over to T, we obtain:

Multiplying Equation (41) by , we have:

With Theorem 2, we showed that in a random iteration T, the resulting expected error is bounded by , and the above theorem provides the approximations of by , that is the average instantaneous objective of SG-GPSVM correlates with the objective of GPSVM.

5. Numerical Experiments

In this section, to demonstrate the validity of our proposed SG-GPSVM, we compare SG-GPSVM with the conventional SVM [35] and Pegasos [25] using artificial datasets and datasets from the UCI Machine Learning Repository [29] with noises of different variances. All experiments were performed with Python 3.6.3 on a Windows 8 machine with an Intel i5 Processor 2.50 GHz with 4 GB RAM.

5.1. Artificial Datasets

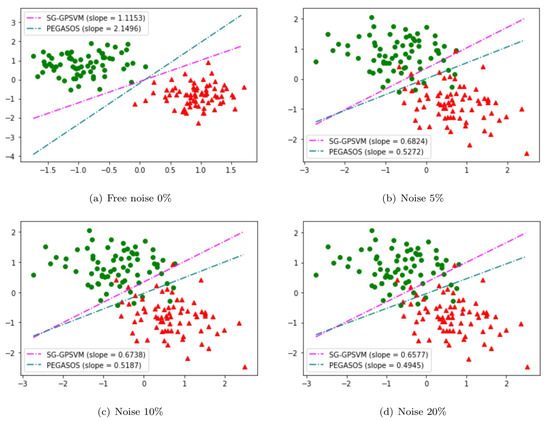

We conducted experiments on a two-dimensional example, for which the samples came from two Gaussian distributions with equal probability: and where and . We added noise to the dataset. The labels of the noise points were selected from $\{-1, 1\}$ with equal probabilities. Each noisy sample was drawn from a Gaussian distribution where and . This noise affects the labels around the boundary. The level of noise was controlled by the ratio of the noisy data in the training set, denoted by r. The value of r was fixed at $r = 0$ (i.e., noise-free), , and . All the results of the two algorithms, SG-GPSVM and Pegasos, were derived using the two-dimensional dataset shown in Figure 1. Here, green circles denote samples from Class 1 and red triangles represent samples from Class $-1$. From Figure 1, we can see that the noisy samples affect the labels around the decision boundary. As we increase the amount of noise from to , the hyperplanes of Pegasos start deviating from the ideal slope of 2.14, whereas the deviation in the slopes of the hyperplanes is significantly smaller for our SG-GPSVM. This implies that our proposed algorithm is insensitive to noise around the decision boundary.
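A dataset of this type can be generated along the following lines. This is a sketch only: the class means, the covariance matrices, and the choice to overwrite a fraction r of the samples with noisy points are hypothetical placeholders, since the distribution parameters of the original experiment are not reproduced here.

```python
import numpy as np


def make_noisy_gaussians(m=200, r=0.1, seed=0):
    """Two Gaussian classes in 2D, with a fraction r of samples replaced by
    boundary noise whose labels are drawn from {-1, 1} with equal probability."""
    rng = np.random.default_rng(seed)
    # Hypothetical parameters, chosen only for illustration.
    mu_pos, mu_neg = np.array([0.5, -3.0]), np.array([-0.5, 3.0])
    cov = np.array([[0.2, 0.0], [0.0, 3.0]])
    noise_cov = np.array([[1.0, -0.8], [-0.8, 1.0]])

    y = rng.choice([-1, 1], size=m)                  # equal class probability
    pos = rng.multivariate_normal(mu_pos, cov, size=m)
    neg = rng.multivariate_normal(mu_neg, cov, size=m)
    X = np.where((y == 1)[:, None], pos, neg)

    n_noise = int(round(r * m))                      # r = 0 means noise-free
    noise_idx = rng.choice(m, size=n_noise, replace=False)
    if n_noise > 0:
        X[noise_idx] = rng.multivariate_normal(np.zeros(2), noise_cov, size=n_noise)
        y[noise_idx] = rng.choice([-1, 1], size=n_noise)
    return X, y, noise_idx
```

Calling `make_noisy_gaussians(r=0.0)` gives the noise-free case, and larger r places more randomly labeled points around the boundary between the two classes.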

Figure 1. SG-GPSVM and Pegasos on a noisy artificial dataset. The four panels demonstrate the noise-insensitive properties of our proposed SG-GPSVM compared to Pegasos when we have: (a) (noise free); (b) ; (c) ; (d) .

5.2. UCI Datasets

We also performed experiments on 11 benchmark datasets available in the UCI Machine Learning Repository. Table 1 lists the datasets and their descriptions. For the benchmarks, we compared SG-GPSVM with two other SVM-based classical classifiers: conventional SVM and Pegasos. Among these SVM models, Pegasos and SG-GPSVM have extra hyperparameters, in addition to the hyperparameter C. The performance of different algorithms depends on the choices of the parameters [33,36,37]. All the hyperparameters were chosen through the grid search method and manual adjustment. The 10-fold cross-validation evaluation method was also employed. In each algorithm, the optimal parameter C was searched from set , and other parameters were searched from set . Further, the kernel method was adopted to evaluate the performance of SG-GPSVM as a nonlinear classifier, where the RBF kernel was adopted. The parameter of the RBF kernel is , searched from set . The results of the numerical experiments are shown in Table 2, and the optimal parameters used in Table 2 are summarized in Table 3.
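The selection protocol described above (grid search scored by 10-fold cross-validation) can be sketched as follows. The search sets are not reproduced here, so the grid in the usage note is a hypothetical placeholder, and a regularized least-squares classifier (`fit_rls`, `predict_rls`) stands in for the SVM-type models only to keep the example short and self-contained.

```python
import numpy as np


def kfold_indices(m, n_folds=10, seed=0):
    """Split a random permutation of range(m) into n_folds nearly equal parts."""
    rng = np.random.default_rng(seed)
    return np.array_split(rng.permutation(m), n_folds)


def cv_accuracy(fit, predict, X, y, params, n_folds=10, seed=0):
    """Mean held-out accuracy of one parameter setting over the folds."""
    folds = kfold_indices(len(y), n_folds, seed)
    scores = []
    for i, test_idx in enumerate(folds):
        train_idx = np.concatenate([f for j, f in enumerate(folds) if j != i])
        model = fit(X[train_idx], y[train_idx], **params)
        scores.append(np.mean(predict(model, X[test_idx]) == y[test_idx]))
    return float(np.mean(scores))


def grid_search(fit, predict, X, y, grid, n_folds=10):
    """Return the parameter dict with the best cross-validated accuracy."""
    best_params, best_score = None, -1.0
    for params in grid:
        score = cv_accuracy(fit, predict, X, y, params, n_folds)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def fit_rls(X, y, C=1.0):
    """Stand-in model (not an SVM): regularized least squares on (x, 1)."""
    Z = np.hstack([X, np.ones((len(X), 1))])
    return np.linalg.solve(Z.T @ Z + np.eye(Z.shape[1]) / C, Z.T @ y)


def predict_rls(model, X):
    Z = np.hstack([X, np.ones((len(X), 1))])
    return np.where(Z @ model >= 0, 1, -1)
```

With a hypothetical grid such as `[{"C": c} for c in (0.01, 0.1, 1.0, 10.0, 100.0)]`, the call `grid_search(fit_rls, predict_rls, X, y, grid)` returns the selected setting and its mean accuracy. Any model exposing the same `fit` and `predict` signatures can be substituted, and additional loss parameters can be added to each dictionary in the grid.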

Table 1. Description of the UCI datasets.

Table 2. Accuracy obtained on the UCI datasets using the linear and nonlinear (RBF) kernel.

Table 3. The optimal parameters of conventional SVM, Pegasos, SG-GPSVM, and SG-GPSVM with the RBF kernel.

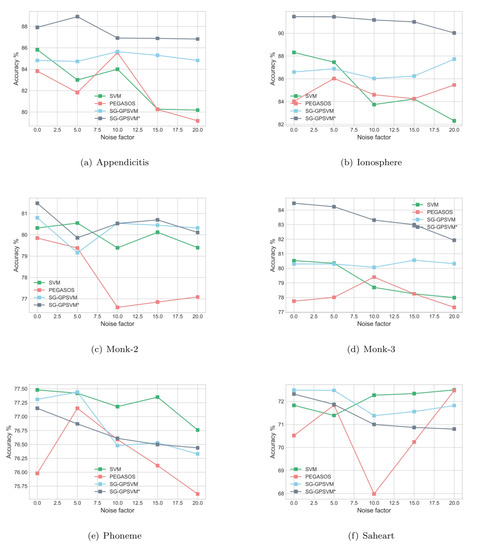

Table 2 shows the results for a linear and nonlinear kernel on six different UCI datasets by applying conventional SVM, Pegasos, and our proposed SG-GPSVM. From the experimental outcomes, it can be seen that the classification performance of SG-GPSVM is better than that of the conventional SVM and Pegasos on most of the datasets in terms of accuracy. From Table 2, our proposed SG-GPSVM yielded the best prediction accuracy on four of six datasets. In addition, when we varied the number of noisy samples from (noise-free) to , our proposed SG-GPSVM exhibited better classification accuracy and stability than the other algorithms no matter the noise factor value, as shown in Figure 2. On the Appendicitis dataset, when the noise factor increased, the accuracy of the conventional SVM and Pegasos fluctuated greatly. On the contrary, the classification accuracies of our proposed SG-GPSVM were stable. Similarly, on the other datasets, such as Monk-3 and Appendicitis, the accuracies of the conventional SVM and Pegasos not only varied greatly, but they also performed worse than our proposed algorithm. This demonstrates that our proposed SG-GPSVM is relatively stable, regardless of the noise factor. In terms of computational time, our proposed algorithm cost nearly the same as Pegasos. The computational time of Pegasos and our proposed SG-GPSVM on the largest dataset was longer than the computational time of the conventional SVM on the smaller datasets.

Figure 2. The accuracy of four algorithms with different noise factors on six datasets: (a) Appendicitis; (b) Ionosphere; (c) Monk-2; (d) Monk-3; (e) Phoneme; (f) Saheart.

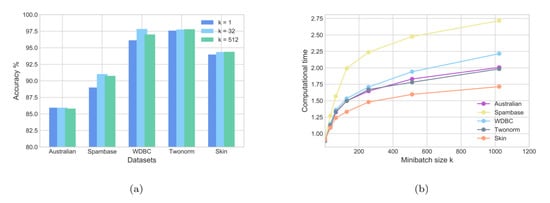

The experimental results of three different minibatches for SG-GPSVM and the accuracy and computational time results of the methods are illustrated in Figure 3. Subfigure (a) shows how the accuracy changed in terms of the batch size, and the curves in Subfigure (b) show how the computational time changed in terms of the batch size. We can see that when the size of the minibatch increased, the accuracy increased, and the computational time increased approximately linearly when the batch size increased. The datasets Spambase and WDBC were taken into consideration. It is evident that if the minibatch size k is small enough, is more accurate than . However, when using a large minibatch size of , the accuracy decreased and the computational time increased. This suggests that in the scenarios in which we care more about the accuracy, we should choose a relatively medium batch size (it does not need to be large).

Figure 3. Classification results on the UCI datasets with minibatch SG-GPSVM. (a) Accuracy of minibatch SG-GPSVM. (b) Computational time of minibatch SG-GPSVM.

5.3. Large-Scale Dataset

In order to validate the classification efficiency of SG-GPSVM, we compared our method with the two most related methods (SVM and Pegasos) on four large-scale UCI datasets. We show that our SG-GPSVM is capable of solving large-scale problems. Note that the results of this section were obtained on a machine equipped with an Intel CPU E5-2658 v3 at 2.20 GHz and 256 GB RAM running the Ubuntu Linux operating system. The scikit-learn package was used for the conventional SVM [35]. The large-scale datasets are presented in Table 4.

Table 4. The details of the large-scale datasets.

For the four large-scale datasets, we compare the performance of SG-GPSVM against the conventional SVM and Pegasos in Table 5. The reduced kernel [38] was employed in the nonlinear situation, and the kernel size was set to 100. From the results in Table 5, SG-GPSVM outperformed the conventional SVM and Pegasos on three out of the four datasets in terms of accuracy. The best ones are highlighted in bold. However, SG-GPSVM’s accuracy on some datasets, such as Credit card, was not the best. On the Skin dataset, the conventional SVM performed much worse than SG-GPSVM and Pegasos, and it was not possible to use the conventional SVM on the Kddcup and Susy datasets due to the high memory requirements. This was because SVM requires the complete training set to be stored in the main memory. On the Credit card dataset, our proposed SG-GPSVM took almost the same amount of time as the conventional SVM and Pegasos. Our suggested approach, SG-GPSVM, took almost the same amount of time as Pegasos on the Kddcup and Susy datasets.
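The reduced kernel idea [38] replaces the full m-by-m kernel matrix with an m-by-r rectangular one built from a random subset of r training samples (r = 100 in the setting above). A minimal NumPy sketch follows; the RBF form, the function name, and the defaults for `gamma` and `seed` are assumptions made for illustration.

```python
import numpy as np


def reduced_rbf_kernel(X, basis_size=100, gamma=1.0, seed=0):
    """Rectangular RBF kernel between all samples and a random subset of them.

    Returns K of shape (m, r) with K[i, j] = exp(-gamma * ||X[i] - B[j]||^2),
    where B = X[basis_idx] and r = min(basis_size, m), together with basis_idx.
    """
    rng = np.random.default_rng(seed)
    m = X.shape[0]
    basis_idx = rng.choice(m, size=min(basis_size, m), replace=False)
    B = X[basis_idx]
    sq = (np.sum(X ** 2, axis=1)[:, None]
          + np.sum(B ** 2, axis=1)[None, :]
          - 2.0 * X @ B.T)
    # Clamp tiny negative values caused by floating-point cancellation.
    return np.exp(-gamma * np.maximum(sq, 0.0)), basis_idx
```

Storage drops from O(m²) to O(m·r), which is what makes the nonlinear case feasible on datasets that do not fit a full kernel matrix in memory. The same `basis_idx` must be reused to build the kernel rows of test samples.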

Table 5. Classification results on the large-scale UCI datasets.

6. Conclusions

In this paper, we used the generalized pinball loss function in SVM to perform classification and proposed the SG-GPSVM classifier, which adapts the stochastic subgradient descent method to the generalized pinball SVM. Compared to the hinge loss SVM and Pegasos, the major advantage of our proposed method is that SG-GPSVM is less sensitive to noise, especially the feature noise around the decision boundary. In addition, we investigated the convergence of SG-GPSVM and the theoretical approximation between GPSVM and SG-GPSVM. The validity of our proposed SG-GPSVM was demonstrated by numerical experiments on artificial datasets and datasets from UCI with noises of different variances. The experimental results showed that SG-GPSVM outperformed the existing classifiers in terms of accuracy on most of the datasets and that it has a significant advantage in handling large-scale classification problems. The results suggest that the SG-GPSVM approach is a strong candidate for solving binary classification problems.

In further work, we would like to consider applications of SG-GPSVM to activity recognition datasets and image retrieval datasets, and we also plan to improve our approach to deal with multicategory classification scenarios.

Author Contributions

Conceptualization, W.P. and R.W.; writing—original draft, W.P.; writing—review and editing, W.P. and R.W. Both authors read and agreed to the published version of the manuscript.

Funding

This research was funded by the NSRF and NU, Thailand, with Grant Number R2564B024.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The authors would like to thank the referees for careful reading and constructive comments. This research is partially supported by the Development and Promotion of the Gifted in Science and Technology Project and Naresuan University.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Vapnik, V.N. The Nature of Statistical Learning Theory; Springer: New York, NY, USA, 1995.

- Vapnik, V.N. Statistical Learning Theory; Wiley: New York, NY, USA, 1998.

- Vapnik, V.N. An overview of statistical learning theory. IEEE Trans. Neural Netw. 1999, 10, 988–999.

- Colmenarez, A.J.; Huang, T.S. Face Detection With Information-Based Maximum Discrimination. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Juan, PR, USA, 17–19 June 1997; pp. 782–787.

- Osuna, E.; Freund, R.; Girosi, F. Training support vector machines: An application to face detection. In Proceedings of the IEEE Computer Vision and Pattern Recognition, San Juan, PR, USA, 17–19 June 1997.

- Joachims, T.; Nédellec, C.; Rouveirol, C. Text categorization with support vector machines: Learning with many relevant features. In Proceedings of the European Conference on Machine Learning, Chemnitz, Germany, 21–23 April 1998; pp. 137–142.

- Richhariya, B.; Tanveer, M. EEG signal classification using universum support vector machine. Expert Syst. Appl. 2018, 106, 169–182.

- Mukherjee, S.; Osuna, E.; Girosi, F. Nonlinear prediction of chaotic time series using a support vector machine. In Proceedings of the 1997 IEEE Workshop on Neural Networks for Signal Processing, Amelia Island, FL, USA, 24–26 September 1997.

- Ince, H.; Trafalis, T.B. Support vector machine for regression and applications to financial forecasting. In Proceedings of the International Joint Conference on Neural Networks (IEEE-INNS-ENNS), Como, Italy, 27 July 2000.

- Huang, Z.; Chen, H.; Hsu, C.-J.; Chen, W.-H.; Wu, S. Credit rating analysis with support vector machines and neural networks: A market comparative study. Decis. Support Syst. 2004, 37, 543–558.

- Khemchandani, R.; Jayadeva; Chandra, S. Regularized least squares fuzzy support vector regression for financial time series forecasting. Expert Syst. Appl. 2009, 36, 132–138.

- Tao, D.; Tang, X.; Li, X.; Wu, X. Asymmetric bagging and random subspace for support vector machines-based relevance feedback in image retrieval. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 28, 1088–1099.

- Pal, M.; Mather, P. Support vector machines for classification in remote sensing. Int. J. Remote Sens. 2005, 26, 1007–1011.

- Li, Y.Q.; Guan, C.T. Joint feature re-extraction and classification using an iterative semi-supervised support vector machine algorithm. Mach. Learn. 2008, 71, 33–53.

- Bi, J.; Zhang, T. Support vector classification with input data uncertainty. In Proceedings of the Neural Information Processing Systems, Vancouver, BC, Canada, 13–18 December 2004.

- Huang, X.; Shi, L.; Suykens, J.A.K. Support vector machine classifier with pinball loss. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 984–997.

- Rastogi, R.; Pal, A.; Chandra, S. Generalized pinball loss SVMs. Neurocomputing 2018, 322, 151–165.

- Ñanculef, R.; Frandi, E.; Sartori, C.; Allende, H. A Novel Frank-Wolfe Algorithm. Analysis and Applications to Large-Scale SVM Training. Inf. Sci. 2014, 285, 66–99.

- Xu, J.; Xu, C.; Zou, B.; Tang, Y.Y.; Peng, J.; You, X. New incremental learning algorithm with support vector machines. IEEE Trans. Syst. Man Cybern. Syst. 2018, 49, 2230–2241.

- Platt, J. Fast training of support vector machines using sequential minimal optimization. In Advances in Kernel Methods: Support Vector Learning; MIT Press: Cambridge, MA, USA, 1999; pp. 185–208.

- Mangasarian, O.; Musicant, D. Successive overrelaxation for support vector machines. IEEE Trans. Neural Netw. 1999, 10, 1032–1037.

- Fan, R.; Chang, K.; Hsieh, C.; Wang, X.; Lin, C. LIBLINEAR: A library for large linear classification. J. Mach. Learn. Res. 2008, 9, 1871–1874.

- Zhang, T. Solving large-scale linear prediction problems using stochastic gradient descent algorithms. In Proceedings of the International Conference on Machine Learning, Banff, AB, Canada, 4–8 July 2004.

- Xu, W. Towards optimal one pass large-scale learning with averaged stochastic gradient descent. arXiv 2011, arXiv:1107.2490.

- Shalev-Shwartz, S.; Singer, Y.; Srebro, N.; Cotter, A. Pegasos: Primal estimated subgradient solver for SVM. Math. Program. 2011, 127, 3–30.

- Alencar, M.; Oliveira, D.J. Online learning early skip decision method for the HEVC inter process using the SVM-based Pegasos algorithm. Electron. Lett. 2016, 52, 1227–1229.

- Reyes-Ortiz, J.; Oneto, L.; Anguita, D. Big data analytics in the cloud: Spark on Hadoop vs MPI/OpenMP on Beowulf. Procedia Comput. Sci. 2015, 53, 121–130.

- Sopyla, K.; Drozda, P. Stochastic gradient descent with Barzilai-Borwein update step for SVM. Inf. Sci. 2015, 316, 218–233.

- Dua, D.; Taniskidou, E.K. UCI Machine Learning Repository; School of Information and Computer Science, University of California: Irvine, CA, USA, 2019; Available online: http://archive.ics.uci.edu/ml (accessed on 24 September 2018).

- Shalev-Shwartz, S.; Ben-David, S. Understanding Machine Learning: From Theory to Algorithms; Cambridge University Press: Cambridge, UK, 2014; 207p.

- Xu, Y.; Yang, Z.; Pan, X. A novel twin support-vector machine with pinball loss. IEEE Trans. Neural Netw. Learn. Syst. 2017, 28, 359–370.

- Shao, Y.; Zhang, C.; Wang, X.; Deng, N. Improvements on twin support vector machines. IEEE Trans. Neural Netw. 2011, 22, 962–968.

- Khemchandani, R.; Jayadeva; Chandra, S. Optimal kernel selection in twin support vector machines. Optim. Lett. 2009, 3, 77–88.

- Rudin, W. Principles of Mathematical Analysis, 3rd ed.; McGraw-Hill: New York, NY, USA, 1964.

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

- Cristianini, N.; Shawe-Taylor, J. An Introduction to Support Vector Machines and Other Kernel-Based Learning Methods; Cambridge University Press: Cambridge, UK, 2000.

- Shawe-Taylor, J.; Cristianini, N. Kernel Methods for Pattern Analysis; Cambridge University Press: Cambridge, UK, 2004.

- Lee, Y.; Mangasarian, O. RSVM: Reduced support vector machines. In Proceedings of the First SIAM International Conference on Data Mining, Chicago, IL, USA, 5–7 April 2001.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).