Fingerprint Classification Based on Deep Learning Approaches: Experimental Findings and Comparisons

Abstract

1. Introduction

- a thorough analysis of three pre-existing CNN-based architectures for fingerprint classification (into four, five, and eight classes), which can improve fingerprint recognition when integrated into an AFIS;

- considerable number of tests performed, using two distinct large-scale fingerprint databases, aimed to acquire further insights into performance achieved on different databases with heterogeneous characteristics;

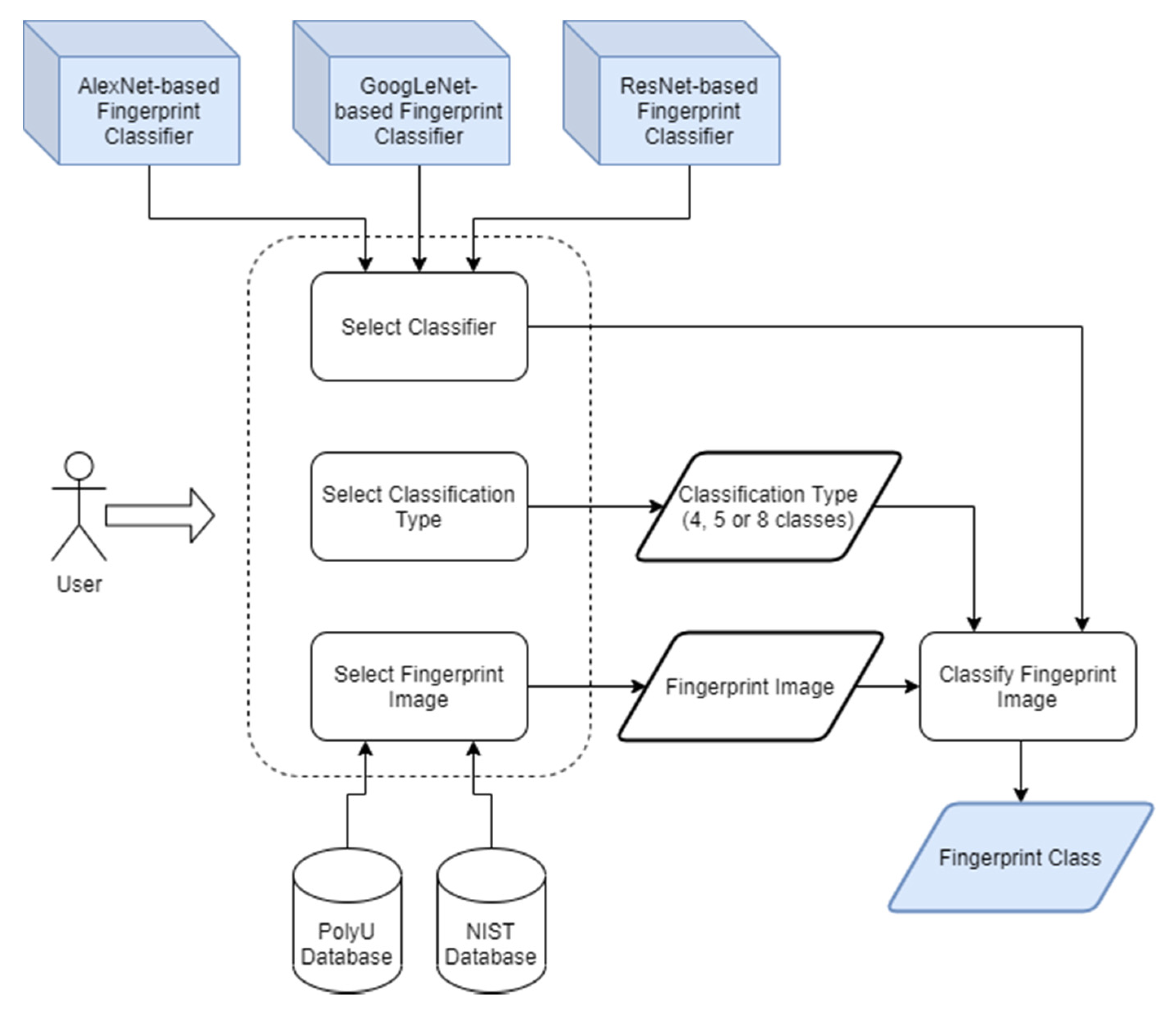

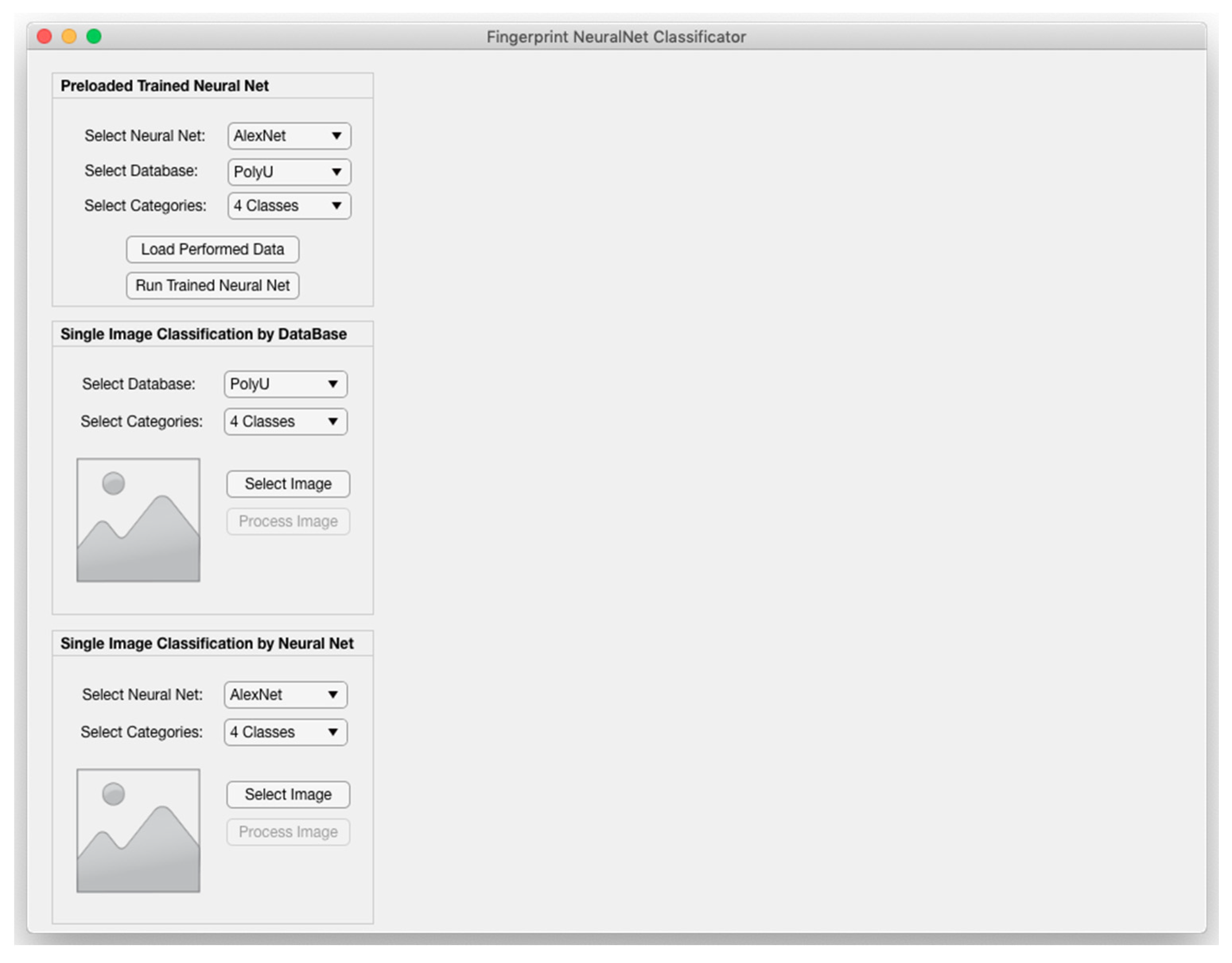

- a user-friendly tool offering an immediate interaction and performance assessment via a GUI;

- statistical validation of the obtained findings and comparisons by means of the McNemar test.

2. Fingerprint Classification

2.1. Types of Fingerprint Classification

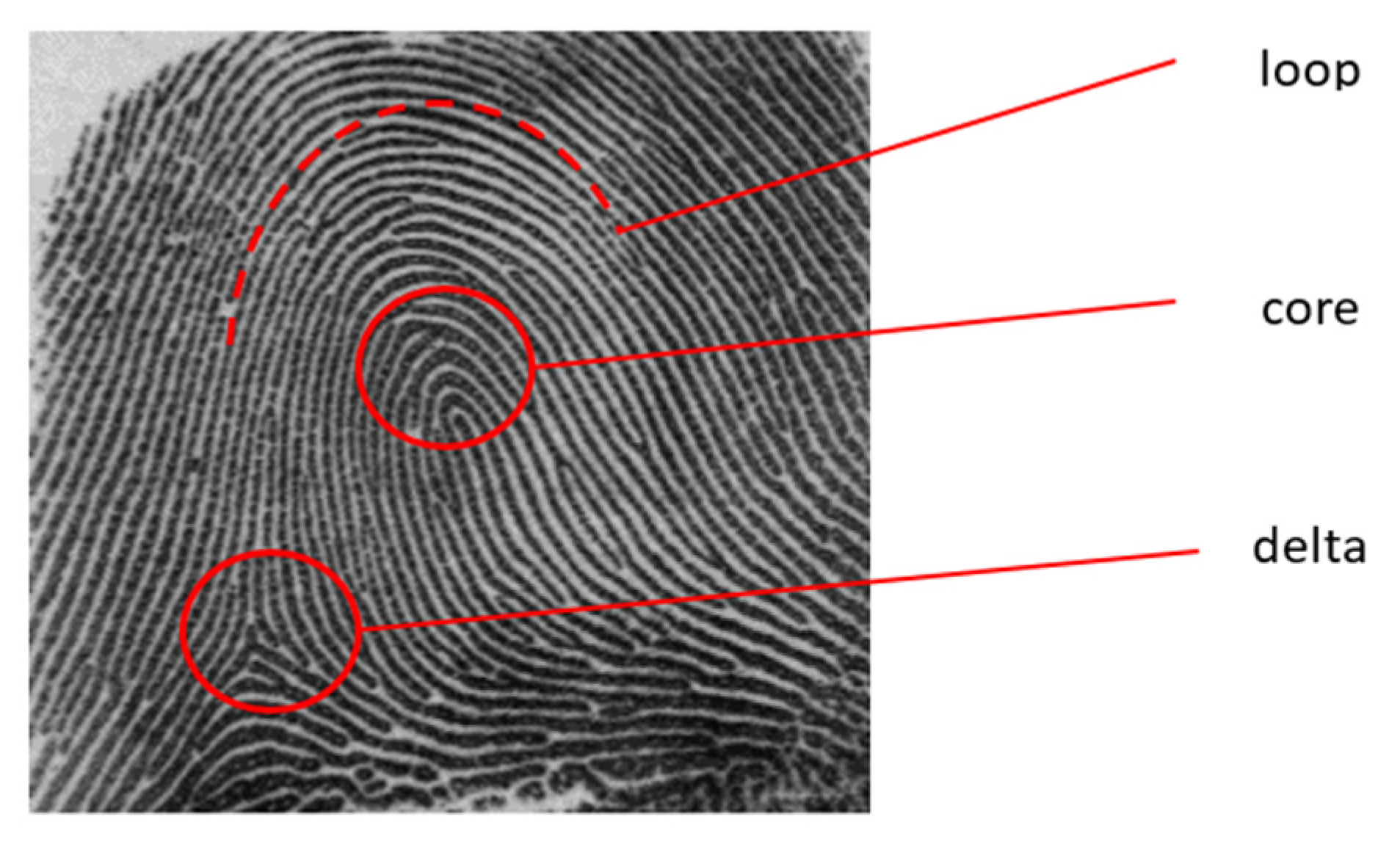

2.2. Fundamental Elements for Fingerprint Classification

- Core: approximately coincides with the center of the ridge pattern. A fingerprint typically has a single core point. In practice, it is the point of greatest curvature on the innermost ridge forming the spiral.

- Delta: a divergence point, marking where two ridges that run almost parallel separate. The delta is also defined as the point at the center of a triangular region, usually found in the lower right or left corner, where ridges converge from different directions.

- Loop: characterized by several ridges that cross the imaginary line between the core point and the delta point, thus forming a “U” pattern; the ridges of a loop recurve and return approximately in the direction from which they originated, and a loop has exactly one core and one delta.
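In the singularity-based literature, cores and deltas are commonly located with the Poincaré index of the ridge orientation field; this is a standard technique, not necessarily the one used in this work. The minimal pure-Python sketch below assumes ridge orientations given in radians modulo π, sampled counter-clockwise on a closed curve around the candidate point; the helper names (`poincare_index`, `sample_circle`, `classify_singularity`) and the synthetic fields are illustrative. A core yields an index of about +1/2, a delta about −1/2, and the center of a whorl about +1.

```python
import math
from typing import Callable, List


def _wrap(delta: float) -> float:
    """Map an orientation difference into (-pi/2, pi/2]; ridge orientations are defined modulo pi."""
    while delta > math.pi / 2:
        delta -= math.pi
    while delta <= -math.pi / 2:
        delta += math.pi
    return delta


def poincare_index(orientations: List[float]) -> float:
    """Poincaré index of a closed, counter-clockwise sequence of ridge orientations (radians)."""
    n = len(orientations)
    total = sum(_wrap(orientations[(k + 1) % n] - orientations[k]) for k in range(n))
    return total / (2 * math.pi)


def sample_circle(field: Callable[[float, float], float],
                  cx: float, cy: float, radius: float, n: int = 16) -> List[float]:
    """Sample a (hypothetical) orientation field counter-clockwise on a circle around (cx, cy)."""
    return [field(cx + radius * math.cos(2 * math.pi * k / n),
                  cy + radius * math.sin(2 * math.pi * k / n)) % math.pi
            for k in range(n)]


def classify_singularity(index: float, tol: float = 0.1) -> str:
    """Label a point from its Poincaré index: core ~ +1/2, delta ~ -1/2, whorl ~ +1."""
    for label, target in (("core", 0.5), ("delta", -0.5), ("whorl", 1.0)):
        if abs(index - target) < tol:
            return label
    return "none"
```

For example, the synthetic field `lambda x, y: 0.5 * math.atan2(y, x)` rotates by half a turn around the origin and is labeled a core, while `lambda x, y: math.atan2(y, x) + math.pi / 2` describes concentric ridges and is labeled a whorl. Real orientation fields are noisy, so in practice the index is usually computed on a smoothed, block-wise field.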

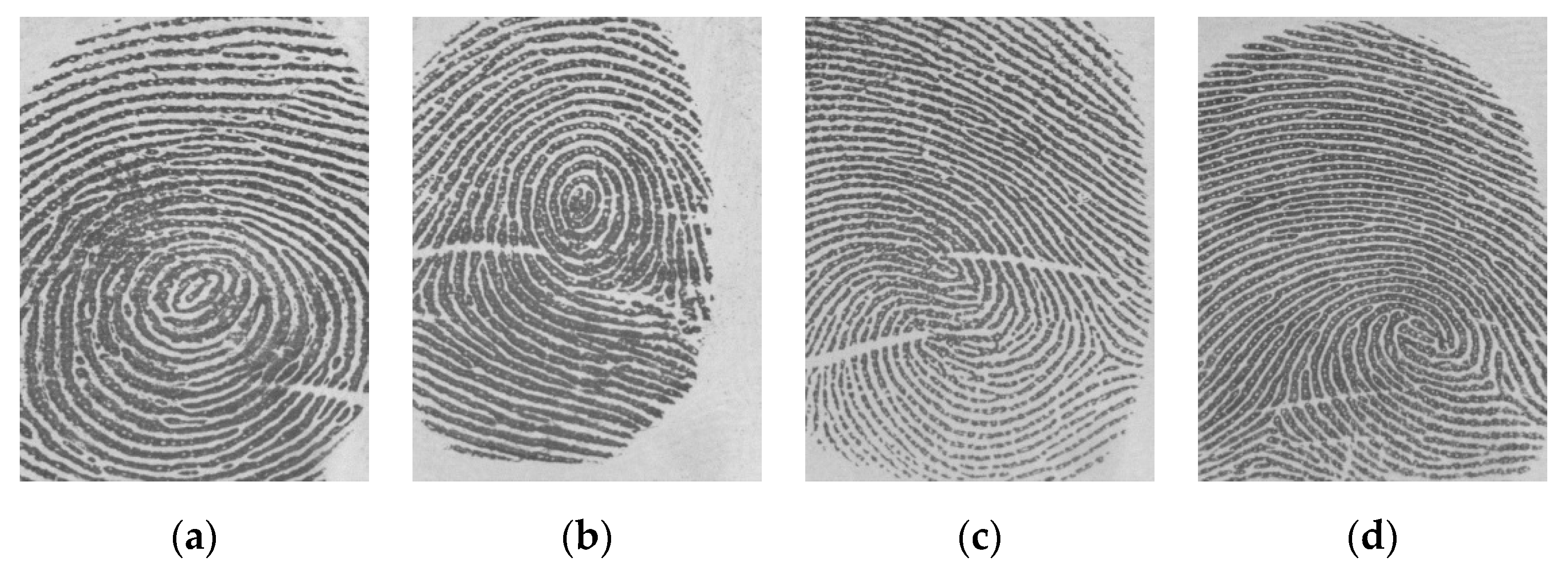

2.3. Fingerprint Classes

- Arch: the ridges enter from one side, rise to form a small protuberance, and exit from the opposite side. Arches have neither loops nor deltas.

- Tented Arch: an arch that has at least one highly curved ridge, together with a loop and a delta, is classified as a Tented Arch.

- Left Loop: the ridges enter from the left, recurve, and return towards the left. Left loops have a loop and a delta (the core is positioned to the left of the delta).

- Right Loop: one or more ridges enter from the right side, recurve, and exit from the same side. Right loops have a loop and a delta (the core is positioned to the right of the delta).

- Whorl: characterized by the presence of at least two delta points and by ridges that tend to form a circular pattern. Fingerprints of this class have at least one ridge that makes a complete 360° turn around the fingerprint center.

- Plain Whorl: an imaginary line is drawn joining the two delta points; if this line touches or crosses at least one of the circular ridges in the inner pattern area, the fingerprint belongs to the Plain subclass.

- Central Pocket Whorl: again, an imaginary line is drawn joining the two identified delta points; if it does not touch or cross any of the circular ridges in the inner pattern area, the fingerprint belongs to the Central Pocket subclass.

- Double Loop Whorl: when two distinct loops that meet can be identified, the fingerprint belongs to the Double Loop subclass.

- Accidental Loop Whorl: this category includes all the fingerprints that contain more than two deltas and all those that do not clearly belong to any of the three previous subclasses.
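The five top-level class descriptions above can be read as a simple decision rule over singular points. The sketch below encodes only those five classes and assumes that singular points (with x growing to the right) and a high-curvature flag have already been extracted by some upstream step; the `SingularPoints` type and its fields are hypothetical, and this work classifies fingerprints with CNNs rather than with hand-written rules like these.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class SingularPoints:
    """Hypothetical output of an upstream singular-point extractor."""
    core_xs: List[float] = field(default_factory=list)   # x coordinates of detected cores
    delta_xs: List[float] = field(default_factory=list)  # x coordinates of detected deltas
    high_curvature_ridge: bool = False                    # at least one strongly curved ridge


def classify_five(sp: SingularPoints) -> str:
    """Map singular points to one of the five top-level classes described in the text."""
    if len(sp.delta_xs) >= 2:
        return "Whorl"                      # at least two deltas
    if not sp.core_xs and not sp.delta_xs:
        return "Arch"                       # neither loops nor deltas
    if len(sp.core_xs) == 1 and len(sp.delta_xs) == 1:
        if sp.high_curvature_ridge:
            return "Tented Arch"            # loop + delta + highly curved ridge
        # Core left of the delta -> Left Loop; otherwise Right Loop.
        return "Left Loop" if sp.core_xs[0] < sp.delta_xs[0] else "Right Loop"
    return "Unclassified"                   # configurations the descriptions do not cover
```

Rules of this kind are brittle when a delta lies outside the captured area or singular points are missed, which is one motivation for learning the classes directly from the image.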

2.4. Fingerprint Classification Using Deep Learning Approaches

3. Related Work

3.1. Approaches Based on Heuristics/Singularity Points

3.2. Structure/Morphology-Based Approaches

3.3. Neural- and CNN-Based Approaches

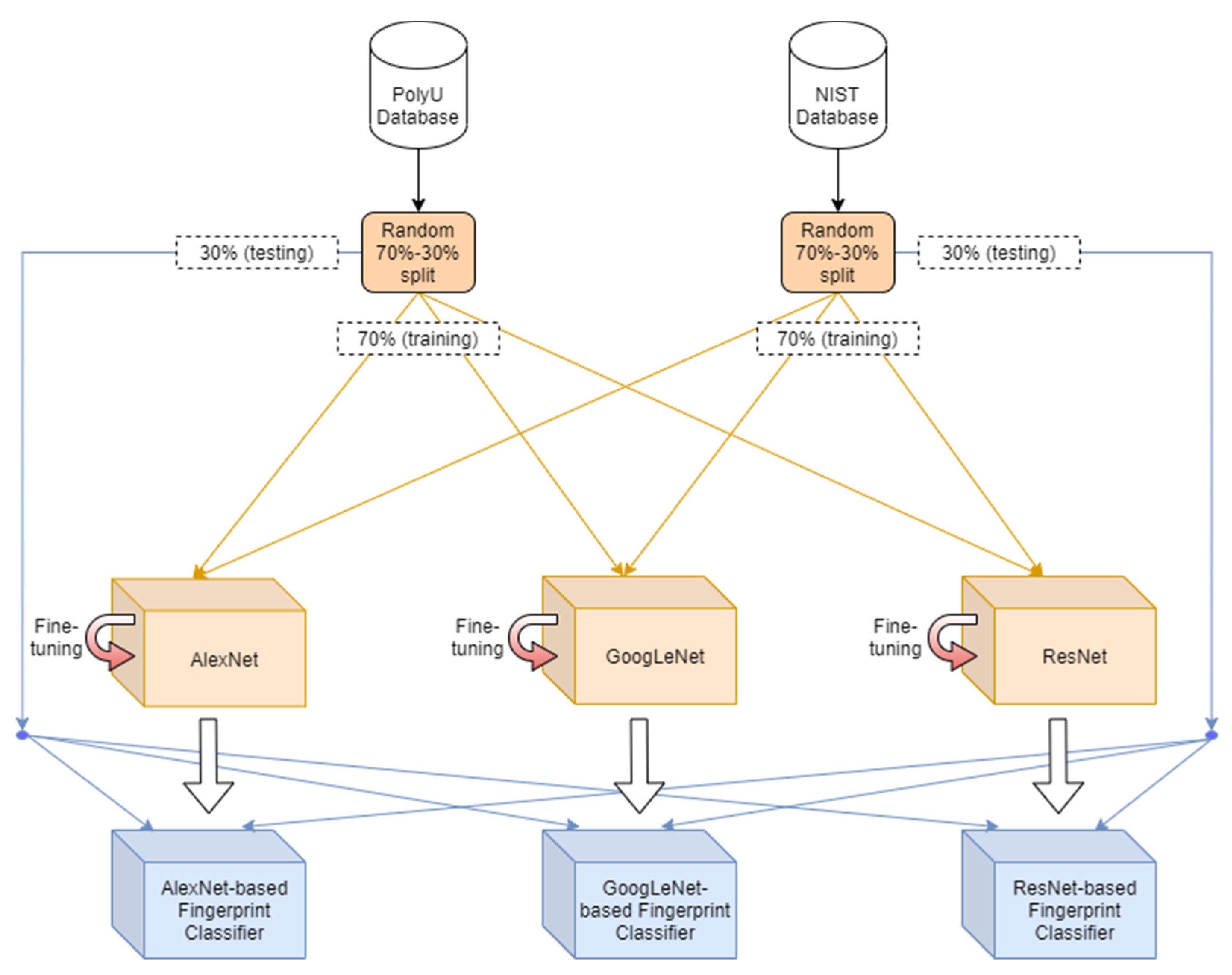

4. Materials and Methods

4.1. Fingerprint Databases

- PolyU [10]: fingerprint database acquired from September 2014 to February 2016 at “The Hong Kong Polytechnic University”. The fingerprints come from 300 different individuals; the database contains 1800 images in JPG format, in 8-bit grayscale, with an image size of 320 × 240 pixels. The images are of very high quality, as they are acquired with a contactless technique that does not require the finger to touch the sensor.

- NIST [11]: the NIST database contains 2000 pairs of 512 × 512 8-bit grayscale fingerprint images. The fingerprints are labeled with five classes: each image is associated with a text file that provides the corresponding class (along with other information, such as gender and date of acquisition). The database consists of 400 pairs of fingerprints per class, stored in PNG format.
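Because each NIST image comes with a text file carrying its class, labels can be collected by parsing those files. The sketch below assumes a line of the form `Class: X` with the single-letter codes A, L, R, T, and W for the five classes; both the line format and the letter codes are assumptions to verify against the actual files. It also checks the class balance stated above (400 pairs, that is 800 images, per class).

```python
import re
from collections import Counter
from typing import Dict, Iterable

# Assumed mapping from single-letter codes to class names.
CLASS_CODES: Dict[str, str] = {
    "A": "Arch", "L": "Left Loop", "R": "Right Loop", "T": "Tented Arch", "W": "Whorl",
}
_CLASS_LINE = re.compile(r"^\s*Class:\s*([A-Z])\s*$", re.MULTILINE)


def parse_class(metadata: str) -> str:
    """Return the class name from the text of one (assumed-format) metadata file."""
    match = _CLASS_LINE.search(metadata)
    if match is None or match.group(1) not in CLASS_CODES:
        raise ValueError("no recognizable 'Class:' line")
    return CLASS_CODES[match.group(1)]


def is_balanced(labels: Iterable[str], per_class: int = 800) -> bool:
    """True if each of the five classes has exactly `per_class` images."""
    counts = Counter(labels)
    return (set(counts) == set(CLASS_CODES.values())
            and all(c == per_class for c in counts.values()))
```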

4.2. Investigated Deep Learning Architectures

4.2.1. AlexNet

4.2.2. GoogLeNet

4.2.3. ResNet

4.3. Implementation Details: Fine-Tuning

4.3.1. Fingerprint Image Pre-Processing

4.3.2. CNN Architecture Adaptations

4.3.3. Development Environment

4.4. Experimental Setup

4.5. Fingerprint Classification Tool

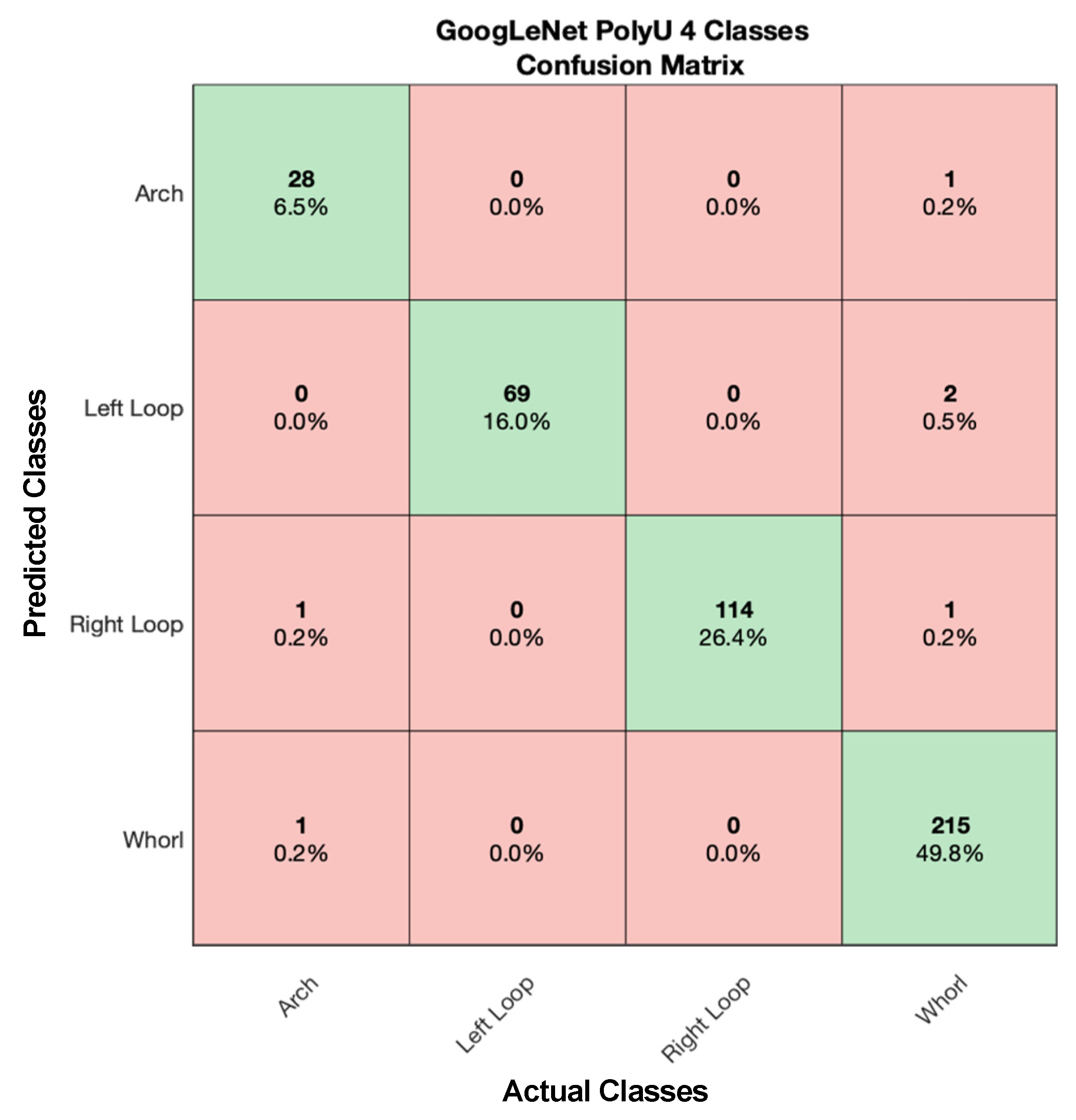

5. Experimental Results

5.1. Classification Evaluation

5.2. Training Times

6. Discussion and Conclusions

- AlexNet and ResNet achieved equivalent performance in the four- and five-class classifications on the NIST database, significantly outperforming GoogLeNet;

- AlexNet performed significantly better than the other two architectures in the eight-class classification on the NIST database;

- all the investigated CNN-based architectures achieved comparable performance on high-quality images (such as those of the PolyU database).

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Conti, V.; Vitello, G.; Sorbello, F.; Vitabile, S. An Advanced Technique for User Identification Using Partial Fingerprint. In Proceedings of the 2013 Seventh International Conference on Complex, Intelligent, and Software Intensive Systems, Taichung, Taiwan, 3–5 July 2013; pp. 236–242.

2. Adler, A.; Schuckers, S. Biometric vulnerabilities, overview. In Encyclopedia of Biometrics; Li, S.Z., Jain, A., Eds.; Springer: Boston, MA, USA, 2009.

3. Nguyen, H.T. Fingerprints Classification through Image Analysis and Machine Learning Method. Algorithms 2019, 12, 241.

4. Peralta, D.; Triguero, I.; García, S.; Saeys, Y.; Benitez, J.M.; Herrera, F. On the use of convolutional neural networks for robust classification of multiple fingerprint captures. Int. J. Intell. Syst. 2018, 33, 213–230.

5. Sundararajan, K.; Woodard, D.L. Deep Learning for Biometrics: A Survey. ACM Comput. Surv. 2018, 51, 1–34.

6. Militello, C.; Rundo, L.; Minafra, L.; Cammarata, F.P.; Calvaruso, M.; Conti, V.; Russo, G. MF2C3: Multi-feature Fuzzy clustering to enhance cell colony detection in automated clonogenic assay evaluation. Symmetry 2020, 12, 773.

7. Shadrina, E.; Vol’pert, Y. Functional asymmetry and fingerprint features of left-handed and right-handed young Yakuts (Mongoloid race, north-eastern Siberia). Symmetry 2018, 10, 728.

8. Nilsson, K.; Bigun, J. Using linear symmetry features as a pre-processing step for fingerprint images. In Lecture Notes in Computer Science; Springer: Berlin, Germany, 2001; pp. 247–252. ISBN 9783540422167.

9. Le, T.H.; Van, H.T. Fingerprint reference point detection for image retrieval based on symmetry and variation. Pattern Recognit. 2012, 45, 3360–3372.

10. The Hong Kong Polytechnic University: 3D Fingerprint Images Database Version 2.0. Available online: http://www4.comp.polyu.edu.hk/~csajaykr/fingerprint.htm (accessed on 22 January 2021).

11. NIST Biometric Special Databases and Software. Available online: https://www.nist.gov/itl/iad/image-group/resources/biometric-special-databases-and-software (accessed on 22 January 2021).

12. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

13. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

14. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 21–23 June 2016; pp. 770–778.

15. Jian, W.; Zhou, Y.; Liu, H. Lightweight convolutional neural network based on singularity ROI for fingerprint classification. IEEE Access 2020, 8, 54554–54563.

16. Grzybowski, A.; Pietrzak, K. Jan Evangelista Purkynje (1787–1869): First to describe fingerprints. Clin. Dermatol. 2015, 33, 117–121.

17. Galton, F. Finger Prints; Macmillan and Co.: London, UK, 1892.

18. Galton, F. Decipherment of Blurred Finger Prints. In Finger Prints; Macmillan and Co.: London, UK, 1893.

19. International Biometric Group: The Henry Classification System. Available online: http://static.ibgweb.com/HenryFingerprintClassification.pdf (accessed on 22 January 2021).

20. The Science of Fingerprints: Classification and Uses; United States Federal Bureau of Investigation: Washington, DC, USA, 1985.

21. Zabala-Blanco, D.; Mora, M.; Barrientos, R.J.; Hernández-García, R.; Naranjo-Torres, J. Fingerprint Classification through Standard and Weighted Extreme Learning Machines. Appl. Sci. 2020, 10, 4125.

22. Bigun, J. Fingerprint Features. In Encyclopedia of Biometrics; Li, S.Z., Jain, A., Eds.; Springer: Boston, MA, USA, 2009; pp. 465–473. ISBN 9780387730035.

23. Wegstein, J.H. An Automated Fingerprint Identification System; National Institute of Standards and Technology: Gaithersburg, MD, USA, 1982.

24. Vitabile, S.; Conti, V.; Lentini, G.; Sorbello, F. An Intelligent Sensor for Fingerprint Recognition. In International Conference on Embedded and Ubiquitous Computing; Springer: Berlin, Germany, 2005; Volume 3824, pp. 27–36.

25. Militello, C.; Conti, V.; Vitabile, S.; Sorbello, F. A novel embedded fingerprints authentication system based on singularity points. In Proceedings of the CISIS 2008: 2nd International Conference on Complex, Intelligent and Software Intensive Systems, Barcelona, Spain, 4–7 March 2008; pp. 72–78.

26. Win, K.N.; Li, K.; Chen, J.; Fournier-Viger, F.; Li, K. Fingerprint classification and identification algorithms for criminal investigation: A survey. Future Gener. Comput. Syst. 2020, 110, 758–771.

27. Hu, J.; Xie, M. Fingerprint classification based on genetic programming. In Proceedings of the 2010 2nd International Conference on Computer Engineering and Technology, Chengdu, China, 16–18 April 2010; Volume 6, pp. 193–196.

28. Rundo, L.; Tangherloni, A.; Cazzaniga, P.; Nobile, M.S.; Russo, G.; Gilardi, M.C.; Vitabile, S.; Mauri, G.; Besozzi, D.; Militello, C. A novel framework for MR image segmentation and quantification by using MedGA. Comput. Methods Programs Biomed. 2019, 176, 159–172.

29. Alias, N.A.; Radzi, N.H.M. Fingerprint Classification using Support Vector Machine. In Proceedings of the 5th ICT International Student Project Conference, Bangkok, Thailand, 27–28 May 2016.

30. Saini, M.K.; Saini, J.S.; Sharma, S. Moment based Wavelet Filter Design for Fingerprint Classification. In Proceedings of the 2013 International Conference on Signal Processing and Communication, Noida, India, 12–14 December 2013; pp. 267–270.

31. Li, X.; Wang, G.; Lu, X. Neural Network Based Automatic Fingerprints Classification Algorithm. In Proceedings of the 2010 International Conference of Information Science and Management Engineering, Xi’an, China, 7–8 August 2010; Volume 1, pp. 94–96.

32. Turky, A.M.; Ahmad, M.S. The use of SOM for fingerprint classification. In Proceedings of the 2010 International Conference on Information Retrieval Knowledge Management, Shah Alam, Malaysia, 17–18 March 2010; pp. 287–290.

33. Saeed, F.; Hussain, M.; Aboalsamh, H.A. Classification of Live Scanned Fingerprints using Dense SIFT based Ridge Orientation Features. In Proceedings of the 2018 1st International Conference on Computer Applications Information Security, Riyadh, Saudi Arabia, 4–5 April 2018; pp. 1–4.

34. Wang, X.; Wang, F.; Fan, J.; Wang, J. Fingerprint classification based on continuous orientation field and singular points. In Proceedings of the 2009 IEEE International Conference on Intelligent Computing and Intelligent Systems, Shanghai, China, 20–22 November 2009; Volume 4, pp. 189–193.

35. Biometric Systems Lab: FVC2002: Fingerprint Verification Competition. Available online: http://bias.csr.unibo.it/fvc2002/ (accessed on 22 January 2021).

36. Biometric Systems Lab: FVC2004: Fingerprint Verification Competition. Available online: http://bias.csr.unibo.it/fvc2004/ (accessed on 22 January 2021).

37. Neurotechnology Company: Sample Fingerprint Databases. Available online: http://www.neurotechnologija.com/download.html (accessed on 22 January 2021).

38. Conti, V.; Militello, C.; Vitabile, S.; Sorbello, F. Introducing Pseudo-Singularity Points for Efficient Fingerprints Classification and Recognition. In Proceedings of the 2010 International Conference on Complex, Intelligent and Software Intensive Systems, Krakow, Poland, 15–18 February 2010; pp. 368–375.

39. Biometric Systems Lab: FVC2000: Fingerprint Verification Competition. Available online: http://bias.csr.unibo.it/fvc2000/ (accessed on 22 January 2021).

40. Wang, W.; Li, J.; Chen, W. Fingerprint Classification Using Improved Directional Field and Fuzzy Wavelet Neural Network. In Proceedings of the 2006 6th World Congress on Intelligent Control and Automation, Dalian, China, 21–23 June 2006; Volume 2, pp. 9961–9964.

41. Nain, N.; Bhadviya, B.; Gautam, B.; Kumar, D.; Deepak, B.M. A Fast Fingerprint Classification Algorithm by Tracing Ridge-Flow Patterns. In Proceedings of the 2008 IEEE International Conference on Signal Image Technology and Internet Based Systems, Bali, Indonesia, 30 November–30 December 2008; pp. 235–238.

42. Borra, S.R.; Jagadeeswar Reddy, G.; Sreenivasa Reddy, E. Classification of fingerprint images with the aid of morphological operation and AGNN classifier. Appl. Comput. Inform. 2018, 14, 166–176.

43. Saeed, F.; Hussain, M.; Aboalsamh, H.A. Classification of Live Scanned Fingerprints using Histogram of Gradient Descriptor. In Proceedings of the 2018 21st Saudi Computer Society National Computer Conference, Riyadh, Saudi Arabia, 25–26 April 2018; pp. 1–5.

44. Özbayoğlu, A.M. Unsupervised Fingerprint Classification with Directional Flow Filtering. In Proceedings of the 2019 1st International Informatics and Software Engineering Conference, Ankara, Turkey, 6–7 November 2019; pp. 1–4.

45. Wang, R.; Han, C.; Guo, T. A novel fingerprint classification method based on deep learning. In Proceedings of the 2016 23rd International Conference on Pattern Recognition, Cancun, Mexico, 4–8 December 2016; pp. 931–936.

46. Listyalina, L.; Mustiadi, I. Accurate and Low-cost Fingerprint Classification via Transfer Learning. In Proceedings of the 2019 5th International Conference on Science in Information Technology, Yogyakarta, Indonesia, 23–24 October 2019; pp. 27–32.

47. Hamdi, D.E.; Elouedi, I.; Fathallah, A.; Nguyuen, M.K.; Hamouda, A. Combining Fingerprints and their Radon Transform as Input to Deep Learning for a Fingerprint Classification Task. In Proceedings of the 2018 15th International Conference on Control, Automation, Robotics and Vision, Singapore, 18–21 November 2018; pp. 1448–1453.

48. Ge, S.; Bai, C.; Liu, Y.; Liu, Y.; Zhao, T. Deep and discriminative feature learning for fingerprint classification. In Proceedings of the 2017 3rd IEEE International Conference on Computer and Communications, Chengdu, China, 13–16 December 2017; pp. 1942–1946.

49. Michelsanti, D.; Ene, A.-D.; Guichi, Y.; Stef, R.; Nasrollahi, K.; Moeslund, T.B. Fast Fingerprint Classification with Deep Neural Networks; Scitepress: Setúbal, Portugal, 2017; pp. 202–209.

50. Pandya, B.; Cosma, G.; Alani, A.A.; Taherkhani, A.; Bharadi, V.; McGinnity, T.M. Fingerprint classification using a deep convolutional neural network. In Proceedings of the 2018 4th International Conference on Information Management, Oxford, UK, 25–27 May 2018; pp. 86–91.

51. Conti, V.; Rundo, L.; Militello, C.; Mauri, G.; Vitabile, S. Resource-Efficient Hardware Implementation of a Neural-based Node for Automatic Fingerprint Classification. J. Wirel. Mob. Netw. Ubiquitous Comput. Dependable Appl. ISYOU 2017, 8, 19–36.

52. LeCun, Y.; Boser, B.; Denker, J.S.; Henderson, D.; Howard, R.E.; Hubbard, W.; Jackel, L.D. Backpropagation Applied to Handwritten Zip Code Recognition. Neural Comput. 1989, 1, 541–551.

53. Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet Large Scale Visual Recognition Challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

54. McNemar, Q. Note on the sampling error of the difference between correlated proportions or percentages. Psychometrika 1947, 12, 153–157.

55. Westfall, P.H.; Troendle, J.F.; Pennello, G. Multiple McNemar tests. Biometrics 2010, 66, 1185–1191.

56. Yamasaki, T.; Honma, T.; Aizawa, K. Efficient optimization of convolutional neural networks using particle swarm optimization. In Proceedings of the IEEE Third International Conference on Multimedia Big Data, Laguna Hills, CA, USA, 19–21 April 2017; pp. 70–73.

57. Han, S.; Meng, Z.; Li, Z.; O’Reilly, J.; Cai, J.; Wang, X.; Tong, Y. Optimizing filter size in convolutional neural networks for facial action unit recognition. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 5070–5078.

58. Tang, Y.; Gao, F.; Feng, J. Latent fingerprint minutia extraction using fully convolutional network. In Proceedings of the 2017 IEEE International Joint Conference on Biometrics, Denver, CO, USA, 1–4 October 2017; pp. 117–123.

59. Huang, X.; Qian, P.; Liu, M. Latent fingerprint image enhancement based on progressive generative adversarial network. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 3481–3489.

60. Xu, H.; Li, C.; Rahaman, M.M.; Yao, Y.; Li, Z.; Zhang, J.; Kulwa, F.; Zhao, X.; Qi, S.; Teng, Y. An enhanced framework of generative adversarial networks (EF-GANs) for environmental microorganism image augmentation with limited rotation-invariant training data. IEEE Access 2020, 8, 187455–187469.

61. Han, C.; Rundo, L.; Araki, R.; Nagano, Y.; Furukawa, Y.; Mauri, G.; Nakayama, H.; Hayashi, H. Combining noise-to-image and image-to-image GANs: Brain MR image augmentation for tumor detection. IEEE Access 2019, 7, 156966–156977.

62. Goodfellow, I.; McDaniel, P.; Papernot, N. Making machine learning robust against adversarial inputs. Commun. ACM 2018, 61, 56–66.

63. Biggio, B.; Fumera, G.; Russu, P.; Didaci, L.; Roli, F. Adversarial biometric recognition: A review on biometric system security from the adversarial machine-learning perspective. IEEE Signal Process. Mag. 2015, 32, 31–41.

64. won Kwon, H.; Nam, J.W.; Kim, J.; Lee, Y.K. Generative adversarial attacks on fingerprint recognition systems. In Proceedings of the 2021 International Conference on Information Networking, Jeju Island, Korea, 13–16 January 2021; pp. 483–485.

| Database | Fingerprint Images | People | Image Size (Pixels) | Image Format |
|---|---|---|---|---|
| PolyU | 1800 | 300 | 320 × 240 | BMP (8 bit) |
| NIST | 4000 | N.A. | 512 × 512 | PNG (8 bit) |

| CNN | Year | Input Size | Layers |
|---|---|---|---|
| AlexNet | 2012 | 227 × 227 × 3 | 25 |
| GoogLeNet | 2014 | 224 × 224 × 3 | 144 |
| ResNet | 2015 | 224 × 224 × 3 | 177 |
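The input sizes above imply that the 8-bit grayscale fingerprints (320 × 240 or 512 × 512 pixels) must be resized and given three channels before being fed to the networks. One simple way to do this, sketched below in pure Python, is nearest-neighbor resizing followed by replicating the gray channel three times; the interpolation method and the channel replication are assumptions, not details reported here (in MATLAB, for instance, `augmentedImageDatastore` offers a `'gray2rgb'` color pre-processing option).

```python
from typing import List

Gray = List[List[int]]         # rows of 8-bit gray levels
RGB = List[List[List[int]]]    # rows of [r, g, b] pixels


def resize_nearest(img: Gray, out_h: int, out_w: int) -> Gray:
    """Nearest-neighbor resize of a 2-D grayscale image (aspect ratio is not preserved)."""
    in_h, in_w = len(img), len(img[0])
    return [[img[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
            for r in range(out_h)]


def gray_to_rgb(img: Gray) -> RGB:
    """Replicate the single gray channel into three identical channels."""
    return [[[v, v, v] for v in row] for row in img]


def to_network_input(img: Gray, side: int) -> RGB:
    """Resize to side x side and make 3 channels: side=227 (AlexNet) or 224 (GoogLeNet, ResNet)."""
    return gray_to_rgb(resize_nearest(img, side, side))
```

Note that squeezing a 320 × 240 PolyU image into a square input slightly distorts the ridge geometry; cropping or padding to a square first is a common alternative.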

| CNN | Original Layers | Adapted Layers |
|---|---|---|
| AlexNet (25 layers); no layers frozen | #23: Fully Connected, 1000 fully connected layer; #25: Classification Output, crossentropyex with “tench” and 999 other classes | #23: Fully Connected, 4 fully connected layer; #25: Classification Output, crossentropyex |
| GoogLeNet (144 layers); layers 1–10 frozen | #142 “loss3-classifier”: Fully Connected, 1000 fully connected layer; #143 “prob”: Softmax; #144 “output”: Classification Output, crossentropyex with “tench” and 999 other classes | #142 “fc”: Fully Connected, 4 fully connected layer; #143 “softmax”: Softmax; #144 “classoutput”: Classification Output, crossentropyex |
| ResNet (177 layers); layers 1–110 frozen | #175 “fc1000”: Fully Connected, 1000 fully connected layer; #176 “fc1000_softmax”: Softmax; #177 “ClassificationLayer_fc1000”: Classification Output, crossentropyex with “tench” and 999 other classes | #175 “fc”: Fully Connected, 4 fully connected layer; #176 “softmax”: Softmax; #177 “classoutput”: Classification Output, crossentropyex |
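The adaptations in the table amount to replacing the 1000-class ImageNet head with a K-class one (K = 4, 5, or 8) and freezing a prefix of the network. The sketch below models this on a hypothetical list of layer descriptors rather than on a real deep learning framework; the `Layer` type and its `frozen` flag are illustrative, and the feature sizes feeding the new fully connected layer (4096 for AlexNet, 1024 for GoogLeNet, 2048 for the 177-layer ResNet, i.e., ResNet-50) are stated as assumptions for the parameter count.

```python
from dataclasses import dataclass, replace
from typing import List


@dataclass(frozen=True)
class Layer:
    index: int            # 1-based position, as in the table
    name: str
    kind: str             # e.g. "input", "fc", "softmax", "classoutput"
    units: int = 0        # output units for fully connected layers
    frozen: bool = False  # True = weights are not updated during fine-tuning


def adapt_for_fingerprints(layers: List[Layer], num_classes: int, freeze_until: int) -> List[Layer]:
    """Freeze layers 1..freeze_until and resize the last fully connected layer to num_classes."""
    last_fc = max(layer.index for layer in layers if layer.kind == "fc")
    adapted = []
    for layer in layers:
        new = replace(layer, frozen=layer.index <= freeze_until)
        if layer.index == last_fc:
            # New, randomly initialized K-class head replacing the 1000-class one.
            new = replace(new, name="fc", units=num_classes)
        adapted.append(new)
    return adapted


def head_parameters(in_features: int, num_classes: int) -> int:
    """Weights plus biases of the new fully connected layer."""
    return in_features * num_classes + num_classes
```

With `freeze_until=0` this reproduces the AlexNet setting (no frozen layers), while 10 and 110 correspond to GoogLeNet and ResNet; under the assumed 2048 input features, a four-class ResNet head has 2048 × 4 + 4 = 8196 parameters.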

| Learning Parameter | AlexNet | GoogLeNet | ResNet |
|---|---|---|---|
| Mini-Batch Size | 64 | 36 | 64 |
| Max Epochs | 26 | 20 | 16 |
| Initial Learning Rate | 0.001 | 0.001 | 0.001 |
| Validation Frequency | 3 | 3 | 3 |
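To make these settings concrete, the sketch below derives the optimization schedule they imply for a given training-set size. It assumes MATLAB-style semantics, where the validation frequency is counted in iterations and samples that do not fill a final mini-batch are skipped in each epoch; the training-set size in the example is hypothetical, since split sizes are not given here.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class TrainingConfig:
    mini_batch_size: int
    max_epochs: int
    initial_learn_rate: float
    validation_frequency: int  # assumed to be measured in iterations


# Values from the table above.
CONFIGS: Dict[str, TrainingConfig] = {
    "AlexNet": TrainingConfig(64, 26, 1e-3, 3),
    "GoogLeNet": TrainingConfig(36, 20, 1e-3, 3),
    "ResNet": TrainingConfig(64, 16, 1e-3, 3),
}


def schedule(cfg: TrainingConfig, n_train: int) -> Dict[str, int]:
    """Iterations per epoch, total iterations, and approximate number of validation passes."""
    per_epoch = n_train // cfg.mini_batch_size  # incomplete final mini-batch dropped
    total = per_epoch * cfg.max_epochs
    return {"iterations_per_epoch": per_epoch,
            "total_iterations": total,
            "validations": total // cfg.validation_frequency}
```

For a hypothetical 1000 training images, AlexNet would run 15 iterations per epoch and 390 in total, validating about every 3 iterations.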

| Database | Classification Type | AlexNet | GoogLeNet | ResNet |
|---|---|---|---|---|
| NIST | four-class | 96.85 | 93.90 | 96.57 |
| NIST | five-class | 96.05 | 91.55 | 95.37 |
| NIST | eight-class | 93.75 | 92.07 | 92.71 |
| PolyU | four-class | 99.51 | 99.51 | 99.65 |
| PolyU | five-class | 99.79 | 99.79 | 99.51 |
| PolyU | eight-class | 99.51 | 99.58 | 99.31 |

| Database | Classification Type | AlexNet vs. GoogLeNet | AlexNet vs. ResNet | GoogLeNet vs. ResNet |
|---|---|---|---|---|
| NIST | four-class | 5.512 × 10⁻¹⁴ | 0.3659 | 2.254 × 10⁻¹¹ |
| NIST | five-class | 2.545 × 10⁻²⁰ | 0.0672 | 4.123 × 10⁻¹⁵ |
| NIST | eight-class | 9.099 × 10⁻⁴ | 0.0254 | 0.2020 |
| PolyU | four-class | 1.0 | 1.0 | 1.0 |
| PolyU | five-class | 1.0 | 0.3750 | 0.3750 |
| PolyU | eight-class | 0.9090 | 0.9090 | 0.9053 |
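The pairwise comparisons above rest on the McNemar test, which looks only at the test images on which two classifiers disagree in correctness: b images classified correctly by the first network but not by the second, and c the opposite. The sketch below shows the exact (binomial) two-sided variant and the continuity-corrected chi-square variant; which variant, and which handling of multiple comparisons, produced the values above is not stated here, so this is illustrative only.

```python
import math
from typing import Sequence, Tuple


def discordant_counts(y_true: Sequence[int], pred_a: Sequence[int],
                      pred_b: Sequence[int]) -> Tuple[int, int]:
    """b: A right and B wrong; c: A wrong and B right."""
    b = sum(1 for t, a, p in zip(y_true, pred_a, pred_b) if a == t and p != t)
    c = sum(1 for t, a, p in zip(y_true, pred_a, pred_b) if a != t and p == t)
    return b, c


def mcnemar_exact(b: int, c: int) -> float:
    """Two-sided exact p-value: binomial test of min(b, c) under Binomial(b + c, 1/2)."""
    n = b + c
    if n == 0:
        return 1.0
    tail = sum(math.comb(n, k) for k in range(min(b, c) + 1)) / 2 ** n
    return min(1.0, 2 * tail)


def mcnemar_chi2(b: int, c: int) -> float:
    """Asymptotic p-value with continuity correction (chi-square, 1 degree of freedom)."""
    if b + c == 0:
        return 1.0
    stat = max(0, abs(b - c) - 1) ** 2 / (b + c)
    # Survival function of chi-square(1): P(X > s) = erfc(sqrt(s / 2)).
    return math.erfc(math.sqrt(stat / 2))
```

For instance, 1 versus 4 discordant images gives an exact p-value of 0.375; agreements between the two networks (both right or both wrong) do not enter the test at all, which is why nearly identical accuracies on PolyU yield p-values close to 1.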

| Classification Type | AlexNet | GoogLeNet | ResNet |
|---|---|---|---|
| four-class | 98.18 | 96.71 | 98.11 |
| five-class | 97.92 | 95.67 | 97.44 |
| eight-class | 96.63 | 95.83 | 96.00 |

| Database | AlexNet | GoogLeNet | ResNet |
|---|---|---|---|
| NIST | 95.55 | 92.51 | 94.88 |
| PolyU | 99.61 | 99.63 | 99.49 |
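The two summary tables above appear to be arithmetic means of the per-database values reported earlier: averaged over the two databases for each classification type, and over the three classification types for each database. The sketch below recomputes them from those values; last-digit differences (for example, 96.01 versus 96.00 for ResNet in the eight-class case) are presumably due to rounding of the underlying results.

```python
from statistics import mean
from typing import Dict

Table = Dict[str, Dict[str, Dict[str, float]]]

# Per-database values from the earlier results table.
RESULTS: Table = {
    "NIST": {
        "four-class": {"AlexNet": 96.85, "GoogLeNet": 93.90, "ResNet": 96.57},
        "five-class": {"AlexNet": 96.05, "GoogLeNet": 91.55, "ResNet": 95.37},
        "eight-class": {"AlexNet": 93.75, "GoogLeNet": 92.07, "ResNet": 92.71},
    },
    "PolyU": {
        "four-class": {"AlexNet": 99.51, "GoogLeNet": 99.51, "ResNet": 99.65},
        "five-class": {"AlexNet": 99.79, "GoogLeNet": 99.79, "ResNet": 99.51},
        "eight-class": {"AlexNet": 99.51, "GoogLeNet": 99.58, "ResNet": 99.31},
    },
}
CNNS = ("AlexNet", "GoogLeNet", "ResNet")


def mean_by_task(results: Table) -> Dict[str, Dict[str, float]]:
    """Average over databases for each classification type."""
    tasks = next(iter(results.values())).keys()
    return {t: {m: mean(results[db][t][m] for db in results) for m in CNNS} for t in tasks}


def mean_by_database(results: Table) -> Dict[str, Dict[str, float]]:
    """Average over classification types for each database."""
    return {db: {m: mean(row[m] for row in tasks.values()) for m in CNNS}
            for db, tasks in results.items()}
```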

| Database | Classification Type | AlexNet | GoogLeNet | ResNet |
|---|---|---|---|---|
| NIST | four-class | 02:17:50 | 07:57:01 | 07:58:11 |
| NIST | five-class | 02:16:17 | 07:56:23 | 07:54:52 |
| NIST | eight-class | 02:16:06 | 07:57:59 | 07:52:04 |
| PolyU | four-class | 00:29:16 | 01:23:25 | 01:23:42 |
| PolyU | five-class | 00:29:01 | 01:23:26 | 01:23:09 |
| PolyU | eight-class | 00:33:56 | 01:23:24 | 01:03:10 |
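Assuming the training times are in hours:minutes:seconds, the sketch below converts them to seconds and computes how much longer GoogLeNet and ResNet took than AlexNet. On NIST, both took roughly 3.5 times as long as AlexNet in every configuration.

```python
from typing import Dict


def to_seconds(hms: str) -> int:
    """Convert an 'hh:mm:ss' string to seconds."""
    h, m, s = (int(part) for part in hms.split(":"))
    return h * 3600 + m * 60 + s


# NIST training times from the table above.
NIST_TIMES: Dict[str, Dict[str, str]] = {
    "four-class": {"AlexNet": "02:17:50", "GoogLeNet": "07:57:01", "ResNet": "07:58:11"},
    "five-class": {"AlexNet": "02:16:17", "GoogLeNet": "07:56:23", "ResNet": "07:54:52"},
    "eight-class": {"AlexNet": "02:16:06", "GoogLeNet": "07:57:59", "ResNet": "07:52:04"},
}


def slowdown_vs_alexnet(times: Dict[str, Dict[str, str]]) -> Dict[str, Dict[str, float]]:
    """Ratio of each network's training time to AlexNet's, per classification type."""
    out = {}
    for task, row in times.items():
        base = to_seconds(row["AlexNet"])
        out[task] = {net: to_seconds(t) / base for net, t in row.items() if net != "AlexNet"}
    return out
```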

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Militello, C.; Rundo, L.; Vitabile, S.; Conti, V. Fingerprint Classification Based on Deep Learning Approaches: Experimental Findings and Comparisons. Symmetry 2021, 13, 750. https://doi.org/10.3390/sym13050750
