1. Introduction

Pedestrian detection is the key technology of intelligent transportation [

1,

2,

3]. In addition, the core technologies included in pedestrian detection are also indispensable for other applications, such as, robotics, video surveillance and behavior prediction [

4,

5,

6]. In recent years, researchers have proposed many different pedestrian detection methods and successfully applied them in commercial and military fields [

7,

8,

9,

10,

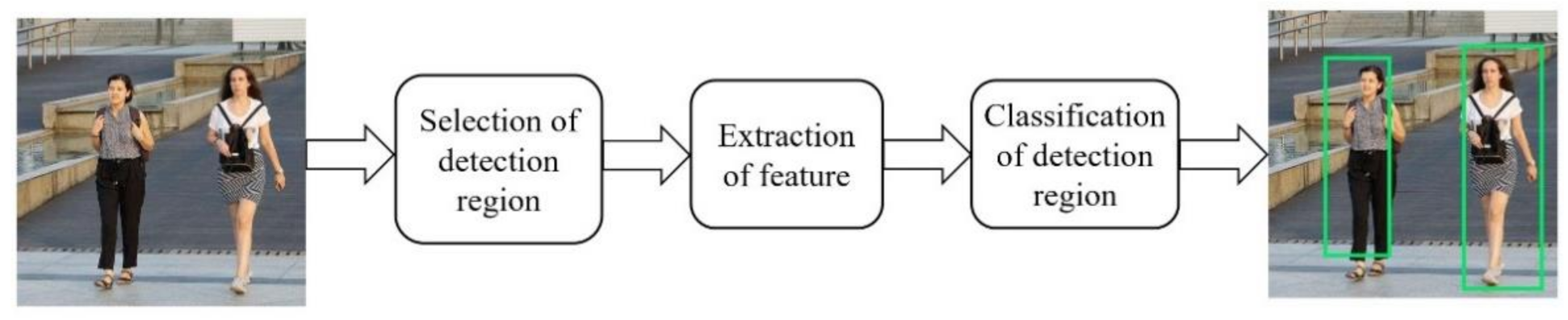

11]. Feature based pedestrian detection method is the mainstream method at present. Although they are different in the processing of raw data and the training of classifier, they basically follow the similar path as shown in

Figure 1. The input of this path is the original image in the form of pixel representation, while the output includes a set of rectangular borders with different sizes. Each rectangular border corresponds to a pedestrian identified in the image. A typical pedestrian detection scheme mainly includes three steps: selection of detection region, extraction of feature and classification of detection region.

In the stage of selection of detection region, the input is usually the original image, and the output is a group of regions with different sizes and ratios. Sliding window method is the simplest method among all the region selection algorithms. It can be used to obtain regions with multiple proportions and aspect ratios. As the number of candidate regions has a great influence on the speed of the whole pedestrian detection system, more complicated approaches analyze the original images in advance to filter out the regions in which no target objects are believed to contain, and therefore the number of candidate regions to be tested is reduced.

For the extraction of features, the input is the candidate region that may or may not contain a pedestrian, and the output is a feature vector in the form of real-valued or binary-valued. The criteria of which features should be extracted are whether they can classify pedestrians and non-pedestrians. Feature extraction can be clustered as single and multifeature extraction, respectively. Single features typically include HOG (histogram of oriented gradient) [

12], LBP (local binary pattern) [

13] and Haar-like [

14], while the representatives of multifeature are HOG-LBP [

15], HOG-Harr-like [

16] and HOG-SIFT (scale-invariant feature transform) [

17,

18].

In the stage of classification of detection region, the main task is to identify whether there is a human shape in the candidate region. The feature vector obtained in the feature extraction stage for a candidate detection region is input into the classifier, and a binary label is output after classification calculation to indicate whether the area is positive (that means containing pedestrian) or negative (that means not containing pedestrian). The classical classifier comprises SVM (support vector machine) [

19,

20], AdaBoost [

21,

22] and CNN (convolutional neural network) [

23,

24].

Compared to the methods of multi-component combination, the implementation process and structure of feature-based methods of pedestrian detection is relatively simple. When using different feature methods, it does not need to change the original architecture and consequently guarantees better portability. The critical steps of the feature-based pedestrian detection pipeline mentioned above are feature extraction and region classification. Therefore, a novel and efficient feature extraction algorithm is essential, and which inspires the origin of this paper.

HOG [

12] is widely considered as one of the best features to obtain edge or local shape information. It has achieved great success in target recognition and detection [

25,

26,

27]. In [

28], Zhu et al. integrate the cascade rejections method to accelerate the HOG extraction process in human detection. In [

29], HOG-DOT algorithm with L1 normalization technique and SVM is used. Although this method can get good TPR (true positive rate), its FPR (false positive rate) is very high. HOG using discrete wavelet transform is proposed in [

30], but its detection rate is not high, only 85.12%. In [

31], selective gradient self-similarity (SGSS) feature is applied for feature extraction with HOG. The addition of SGSS significantly improves the accuracy of pedestrian detection, and the detection ability of cascade structure based on AdaBoost is better than linear SVM or HIKSVM.

It should be noted that the performance of HOG is poor in the background clustered with noisy edges. In such a situation, LBP [

32] can play a very good complementary role. LBP feature has been widely used in different applications and has presented satisfactory performance in face recognition. It is a very effective feature to distinguish images because of its invariance to monotonic gray level changes and high efficiency of computation.

Based on the above reasons, it is natural to think that the combination of edge/local shape information with texture information can capture the appearance of pedestrians more efficiently. In [

15], aiming at the problem of partial occlusion, a feature descriptor based on serial fusion of HOG and LBP features is proposed. Although the detection rate has been improved, the detection efficiency is sacrificed owing to the increase of dimension. In [

33], Jiang et al. also use concatenation method to combine HOG and LBP features to for a new feature vector, and then send it to XGBoost (eXtreme Gradient Boosting) classifier. Therefore, the problem of sacrificing detection efficiency to improve detection accuracy still exists. In the feature fusion of pedestrian detection, the current mainstream method is to concatenate several features in series. This approach may raise two problems. First, serial feature concatenation leads to an extreme increase of the number of features for the whole image being processed and consequently affects the processing speed. Secondly, the processing method of concatenating features does not consider the possible interaction between features and the possible impact of this interaction on the final classification decision. Therefore, the current feature fusion based on concatenation cannot be regarded as the feature fusion in a strict sense.

In this paper, Choquet integral based on the fuzzy measure is applied to realize the parallel fusion of HOG and LBP feature descriptors. This methodology is expected to improve the detection accuracy without increasing the feature dimension. The Choquet integral based on fuzzy measure is a very effective feature fusion method. When it is applied to the fusion problem, the fuzzy measure in the integral can well reflect the importance of each feature to the fusion target and the influence of the interaction among features on the fusion target. At the same time, it may help us to mine the possible interaction between different pedestrian descriptors, which has a positive research significance for the development of pedestrian detection technology.

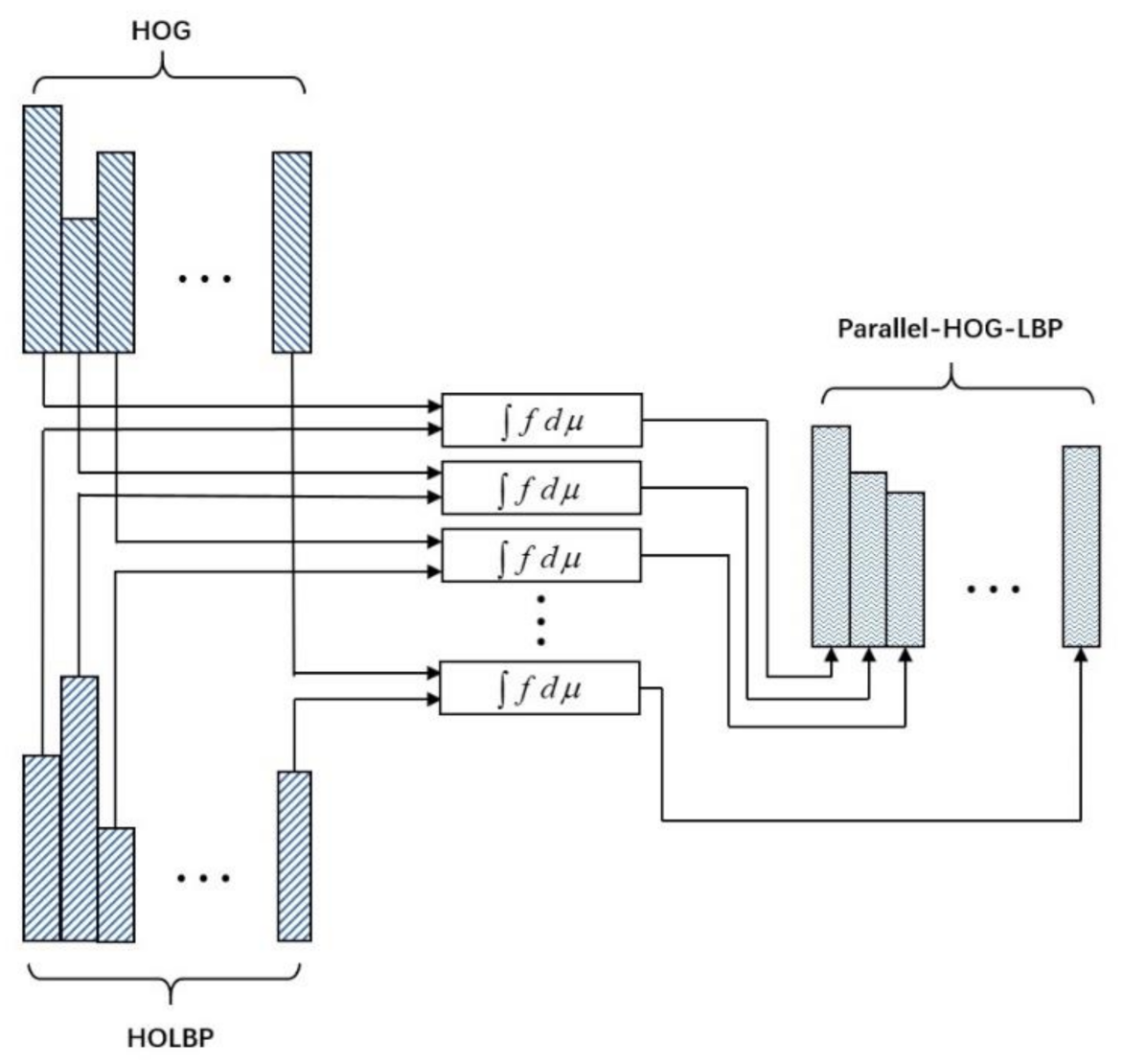

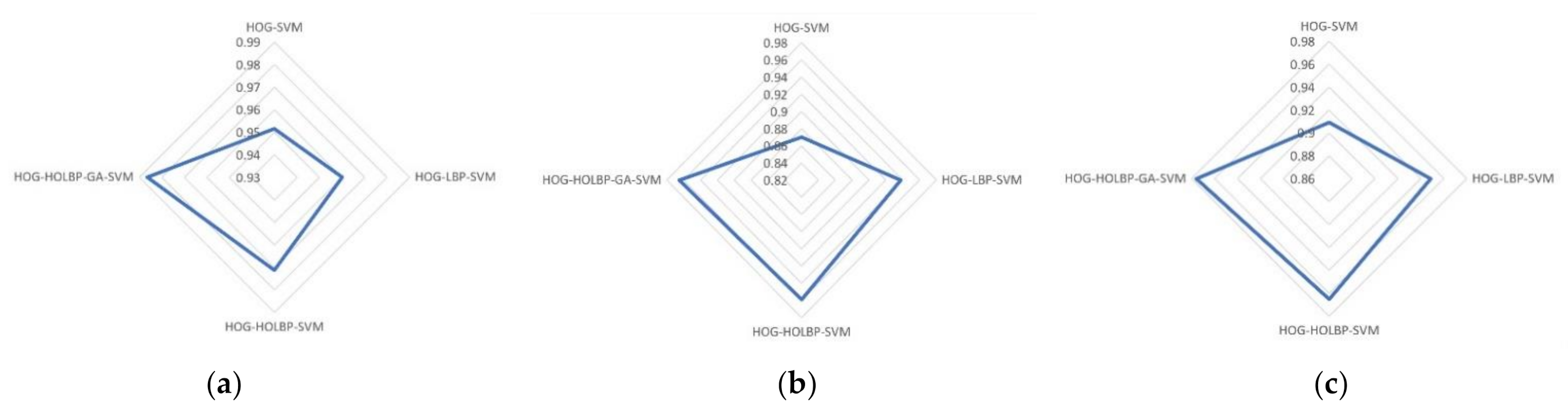

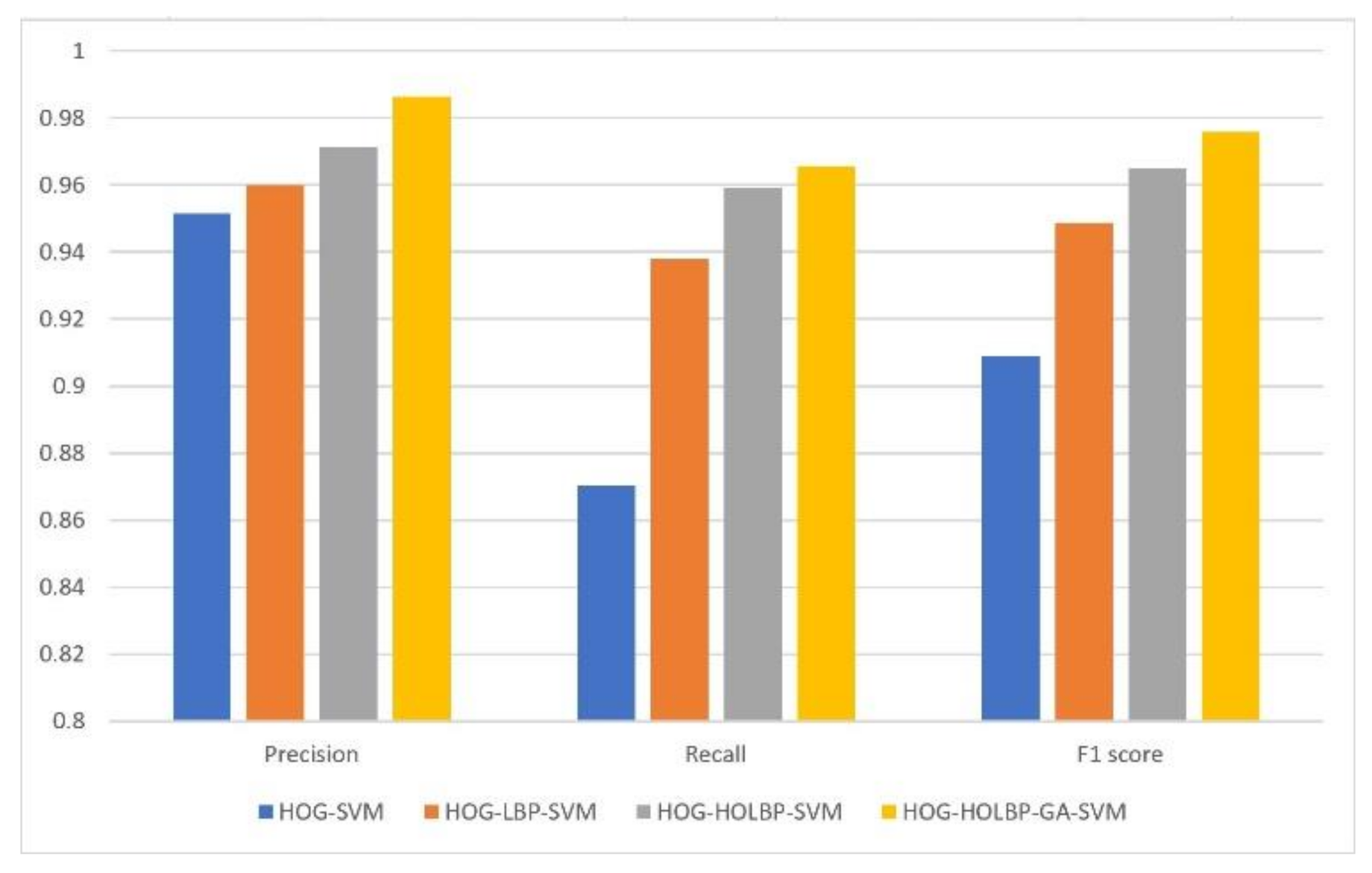

The procedure of pedestrian detection based on the parallel fusion of HOG and LBP features is demonstrated in

Figure 2. Here, HOG features and histogram of LBP descriptors of each cell are extracted from original image, respectively. They are parallelly fused by Choquet integral with its internal parameters, i.e., values of fuzzy measure, being optimized by a genetic algorithm. This fusion results in a new set of features, called parallel-HOG-HOLBP (histogram of gradient—histogram of local binary patterns), which is consequently transmitted to SVM for classification.

The intervention of Choquet integral makes the two features (HOG and LBP) merge in parallel. The resulting feature, parallel-HOG-HOLBP, not only contains the original advantages of HOG and LBP, but also avoids the unavoidable dimension disaster in traditional serial fusion. Genetic algorithm is used to optimize the interval coefficients of fuzzy measure in Choquet integral. It is a more rational way to retrieve these parameters through a global optimization algorithm compared to through trial and error method. We designed a series of experiments to verify the effectiveness of the proposed method. The experimental results show that the proposed method has better comprehensive performance than the existing methods.

The organizational structure of this paper is as follows. In

Section 2, typical features used in this paper are presented. Aggregation of feature fusion based on Choquet integral is introduced in

Section 3. An adaptive algorithm based on genetic algorithm is implemented in

Section 4 to optimize the internal coefficients of fuzzy measure in Choquet integral. In

Section 5, experimental results and analysis are demonstrated. Finally,

Section 6 summarizes and prospects this paper.

4. Pedestrian Detection Framework with Parameters Retrieved by Genetic Algorithm

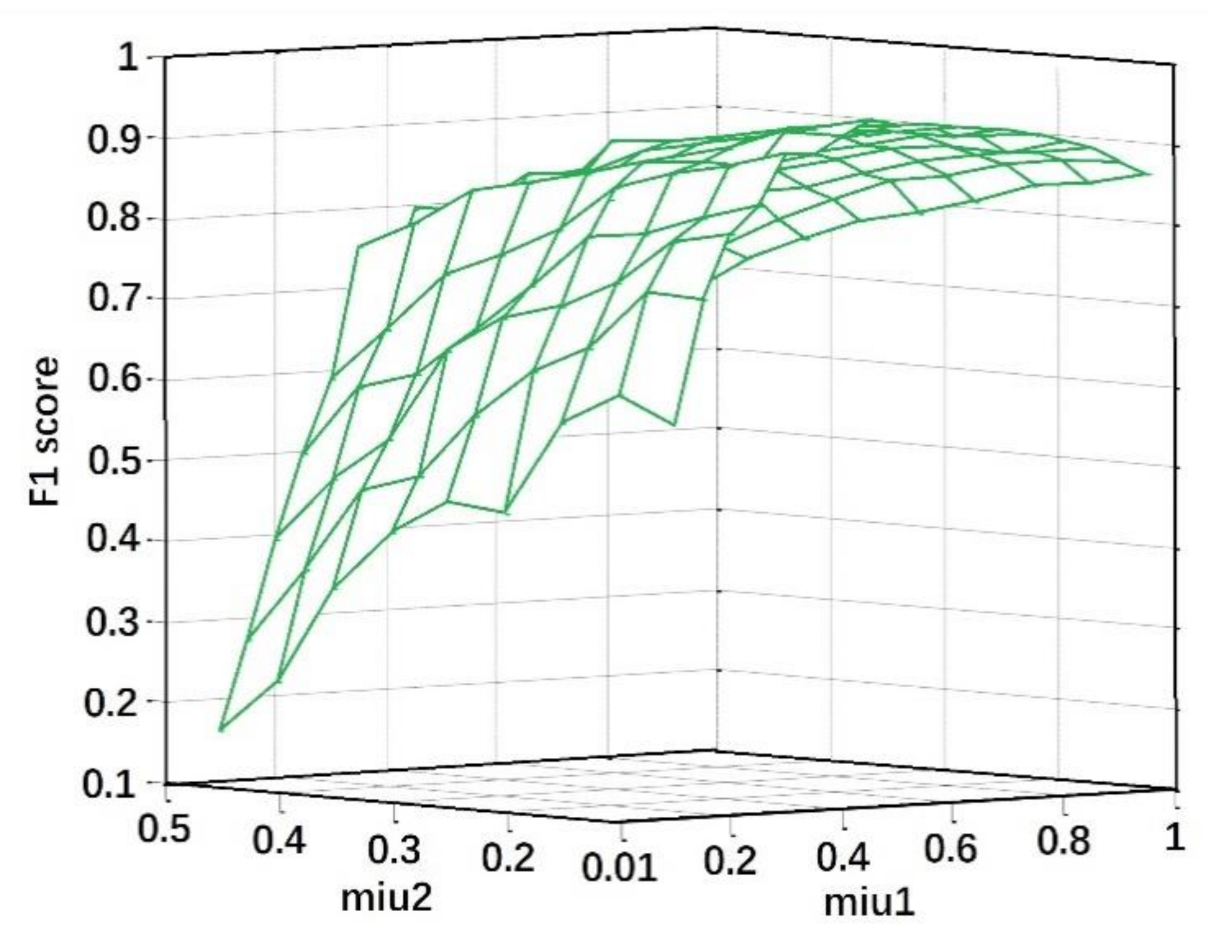

To accomplish the parallel feature fusion between HOG and HOLBP via Choquet integral based on signed fuzzy measure, a series of interval parameters, i.e., , and , need to be retrieved. Of course, we can use trial and error method to predict the values of these parameters. However, a more scientific way is to retrieve these parameters through a global optimization algorithm.

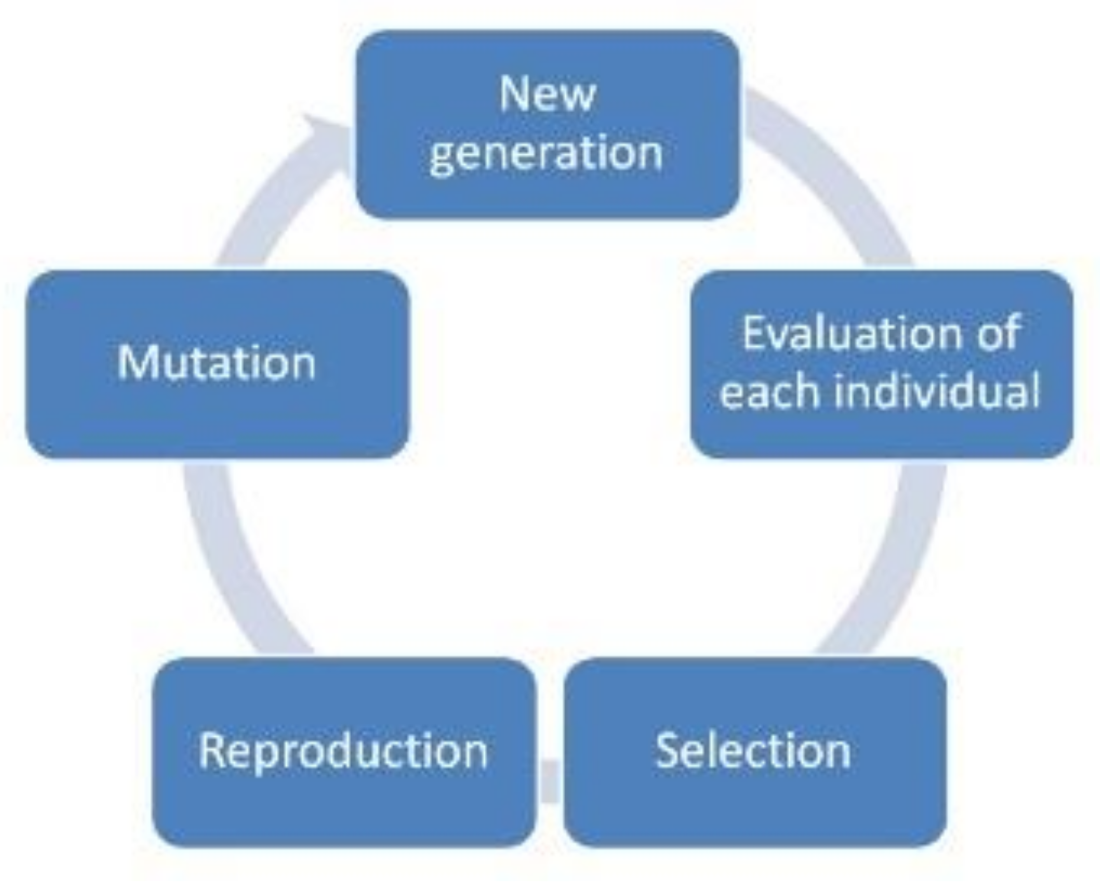

As shown in

Figure 7, genetic algorithm is an adaptive optimization algorithm which can ensure global search. It includes the process of initialization of new generation, evaluation of each individual of population, selection, reproduction (crossover), and mutation.

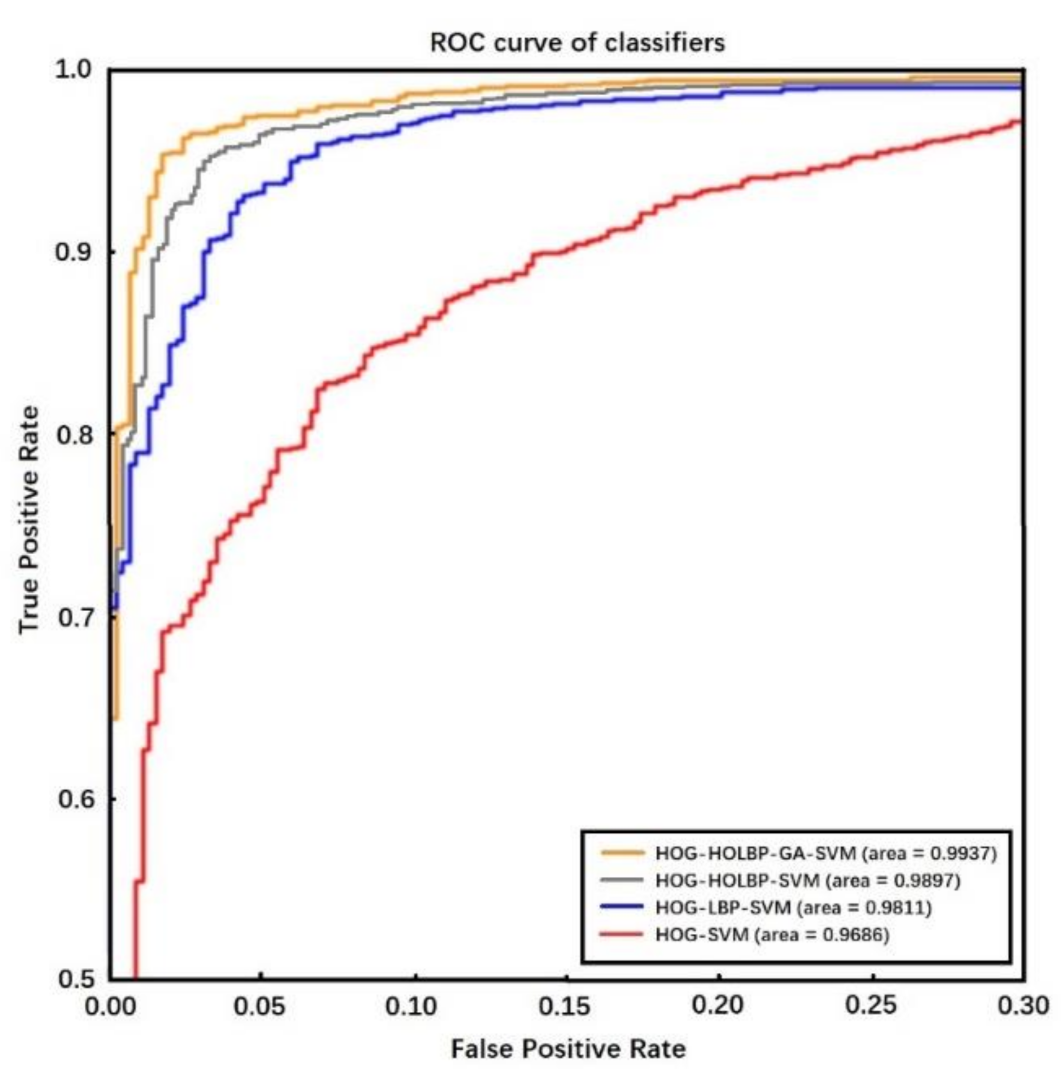

4.1. Parameters Retrieving under Genetic Algorithm Framework

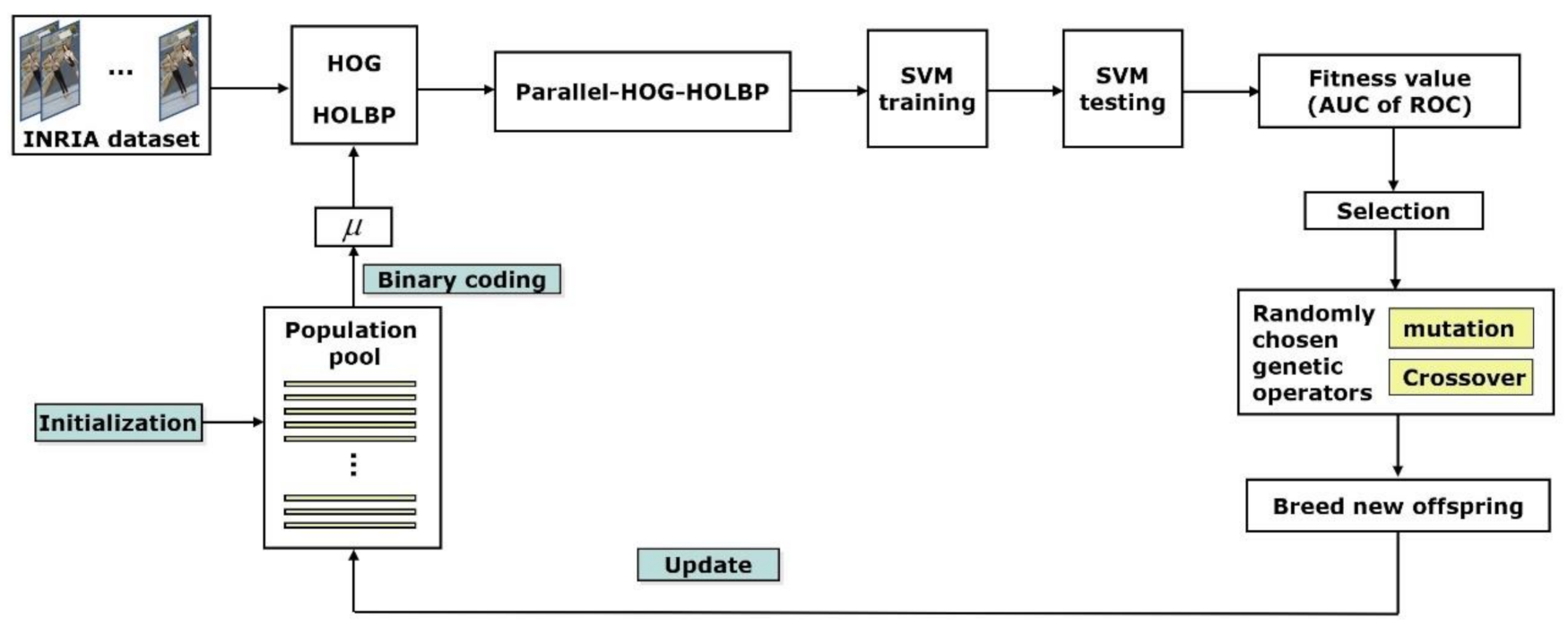

In the genetic algorithm of parameter retrieving, each individual of a chromosome represents a set of signed fuzzy measures. Due to binary coding, each chromosome is composed of 30 genes (10 genes corresponding to a parameter to be optimized). The value of each gene is a binary number. Each chromosome is decoded as three real values between 0 and 1, corresponding to the normalized value of , and . The fitness value of each chromosome is evaluated by the AUC (area under curve) of ROC (receiver operating characteristic) curve. Since the probability that a chromosome in a population can be selected to generate offspring depends on its fitness value, the pedestrian detection parameter optimization algorithm based on genetic algorithm takes the maximum AUC as the criterion to optimize.

Figure 8 shows the process diagram of parameter retrieving process based on a GA structure under the application of pedestrian detection. The algorithm starts with a randomly generated initialization population. Each individual of chromosome in the population is decoded into a set of values, which is actually a representation of a specific signed fuzzy measure. Typical HOG feature extraction and HOLBP feature construction presented in

Section 2 are performed. The two sets of features are fused by the Choquet integral with respect to the specific signed fuzzy measure represented by the corresponding individual chromosome in the current population. The fusion results are a new set of features, called parallel-HOG-HOLBP, which is consequently transmitted to SVM for classification. The same process is done for each sliding window of the images in INRIA data set [

36]. AUC is calculated from the ROC curve which is constructed for each individual of the population.

The value of AUC is used to evaluate the fitness value of the chromosome being considered. Then, a tournament selection is conducted. Better individuals (with higher AUC values) have more opportunities to perform several randomly chosen genetic operators to produce offspring. The population is updated by the newly created offspring. This process is repeated until the number of individuals generated exceeds the preset maximum size of population. In the process of program iteration, in order to keep the global search space, some special operations are used when the best fitness value remains unchanged for successive generations (the default value is 20). The individuals in the original population are divided into three parts according to the ascending order of fitness value. The excellent individuals in the first part are all retained, the individuals in the second part produce new offspring by random mutation, and the individuals in the third part are randomly replaced by the new individuals produced by previous genetic operations. As a result, the population is updated and the program continues to iterate.

4.2. Classifier Training

Each chromosome corresponds to a signed fuzzy measure. Based on each signed fuzzy measure, a Choquet integral fuses HOG and LBP features in a candidate image, and the generated parallel-HOG-HOLBP features are sent to an SVM classifier to evaluate the performance of the chromosome according to the classification results on a set of testing images.

Based on the principle of structural risk minimization, support vector machine has a very powerful ability in dealing with nonlinear problems. The algorithm uses learning samples to find an optimal hyperplane in high-dimensional space, so as to separate different samples from two groups. First, the parallel-HOG-HOLBP features of positive and negative samples are calculated as input of the SVM classifier. Then the final decision function is calculated as

where

is a nonlinear mapping from the input space to a high-dimensional feature space.

is optimal in the sense of maximizing the distance between the hyper-plane and the nearest point

. The following equation is usually used to solve optimization problem mentioned above.

where

is a slack variable, which corresponds to the vertical distance from each wrongly classified sample point to the corresponding boundary hyperplane. Parameter

is the penalty coefficient. The larger this parameter is, the more severe the penalty is.

In this paper, INRIA data set [

36] is selected as the training set to train SVM classifier, because INRIA data set is a benchmark data set which is widely used in pedestrian detection. We extract positive samples from INRIA training set according to the pedestrian coordinates marked in the dataset, and construct negative samples from the training set by randomly cropping. After extraction, the training set being used consists of 2416 positive samples and 12,180 negative samples from the INRIA dataset. The parameters of SVM classifier are shown in

Table 1.

4.3. Classifier Training and Evaluation Criterion

To evaluate the classifier constructed by each chromosome in the current iteration, 1126 positive samples and 453 negative samples are extracted from the INRIA testing set. For each chromosome, a confusion matrix is summarized by four indicators, as shown in

Figure 9. The indicators represent four situations:

The actual value is true, and the classifier assigns it to be positive (True Positive = TP);

The actual value is true, and the classifier assigns it to be negative (False Negative = FN);

The actual value is false, and the classifier assigns it to be positive (False Positive = FP);

The actual value is false, and the classifier assigns it to be negative (False Negative = TN).

Three new indicators are sequentially calculated. They are

and

Here, indicator precision and indicator recall describe the classifier’s correct predictions as a percentage of all results, where indicator F1 Score takes into account that the destination of optimization is to find the best combination of precision and recall. In our algorithm, indicator F1 Score is utilized as the fitness value of each chromosome in iterations.