1. Introduction

Advances in technology and growing social demand have driven rapid progress in virtual reality (VR) applications. VR content, also called 360° or omnidirectional content, covers 360° horizontally and 180° vertically. Such content is spherical in nature and lets the user navigate a captured real-world scene in every direction. Many VR devices have recently become available, including Oculus Rift, HTC VIVE, Sony PlayStation VR, and Samsung GearVR. An immersive visual experience calls for a high resolution and a high frame rate (e.g., 8K at 90 frames per second), so the content files tend to be very large. This requires extensive storage and bandwidth resources and causes transmission delays, and 360° content distribution is expected to push current storage and network capacities to their limits. Most head-mounted displays (HMDs) currently on the market provide up to ultra-high-definition (UHD) display resolution. As these devices usually provide a 110° field of view, 4K resolution is widely accepted as the minimum functional resolution for the full 360° planar signal. However, HMDs with 4K or 8K display resolution are already appearing on the market. Thus, 360° planar signals with 12K or higher resolution will soon have to be stored and transmitted efficiently [1].
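The resolution figures above follow from simple proportionality. As a rough sketch, assume a planar signal whose width spans 360° uniformly (as at the ERP equator) and nominal widths of 3840, 7680, and 11520 pixels for 4K, 8K, and 12K. These widths and the helper name `viewport_width_px` are illustrative assumptions, not values taken from the text. The number of source pixels that fall inside a 110° field of view can then be estimated as follows:

```python
# Nominal planar widths in pixels (assumed values for 4K, 8K, and 12K).
PLANAR_WIDTHS = {"4K": 3840, "8K": 7680, "12K": 11520}


def viewport_width_px(planar_width: int, fov_deg: float = 110.0) -> float:
    """Horizontal source pixels covering fov_deg, assuming the planar
    width spans 360 degrees uniformly (true along the ERP equator)."""
    return planar_width * fov_deg / 360.0


for name, width in PLANAR_WIDTHS.items():
    px = viewport_width_px(width)
    print(f"{name}: about {px:.0f} px across a 110 degree view")
```

Under these assumptions, a 4K planar signal supplies only about 1173 pixels across the viewport, while a 12K signal supplies about 3520, which illustrates why the planar resolution has to grow much faster than the display resolution.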

For a 360° view and an immersive experience, the demand for high-resolution 360° content is high, and improving the compression efficiency of such content is therefore urgent. However, there is no coding technique dedicated to 360° content, so mapping the spherical content to a 2D plane is the common choice. The Joint Video Exploration Team (JVET) of ITU-T SG16 WP3 and ISO/IEC JTC1/SC29/WG11 also suggested using existing coding systems for efficient compression, such as the H.264/AVC video coding standard [2] and the high-efficiency video coding (HEVC) standard [3]. This activity significantly affects the development of VR applications. Several 360° coding methods built on existing coding standards have been proposed in this context, and they can be categorized into three groups. The first is projection-based coding [4], which projects the spherical content onto a two-dimensional plane. The second is optimization-based coding [5], which improves the coding efficiency based on the features of the content. The third is region-of-interest (ROI)-based coding [5], which improves the coding efficiency by transmitting the portion of the content that corresponds to the user's ROI in high quality and the rest in lower quality.

The JVET was established in October 2015 to explore recent advances and create a future coding standard called Versatile Video Coding (VVC), or H.266. The essential consideration is to find an optimal way to compress this content efficiently. The JVET invited interested parties from the industry, and many formats have been proposed so far. These formats are commonly criticized for the artifacts introduced by the format conversion. Each format has its own merits and demerits, and different formats may introduce different artifacts [4], such as redundant samples, shape distortion, and discontinuous boundaries, which reduce the coding efficiency. Some areas are oversampled in these formats, which causes the encoder to waste many bits. Thus, various mapping methods with less distortion and fewer wasted pixels have been proposed [6,7,8,9].

Many companies are developing more efficient 360° video and image compression methods, delivery systems, and products. Some preliminary 360° image and video services are already provided on several major image and video platforms, such as Facebook and YouTube. Typical formats include equirectangular projection (ERP), which is the most widely used format because it is intuitive and easy to generate [10], and cubemap projection (CMP), which maps the scene onto the six faces of a cube and rearranges and packs the data for conversion into the coding geometry [11]. Whichever format is used, the encoding is not performed in the spherical domain, and an inverse mapping must be applied at the display side to convert back to the display geometry. So far, thirteen formats have been proposed: ACP, AEP, CISP, CMP, EAC, equal-area projection (EAP), ECP, ERP, HEC, octahedron projection (OHP), RSP, segmented sphere projection (SSP), and truncated square pyramid (TSP). The JVET is investigating the effects of these formats on the coding performance. It is also common in the industry to support multiple formats, as they provide a more personalized experience to users. Taking these industry needs into account, the omnidirectional media format (OMAF) standard supports the cubemap format. These formats may be useful for efficient compression and for use in the gaming industry and 360° services such as maps, museum tours, and architectural scenes.
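To make the forward and inverse mapping for ERP concrete, the following is a minimal sketch rather than the conversion used by any particular tool. It assumes pixel centers at half-integer offsets, longitude in [-π, π) increasing with the column index, and latitude +π/2 at the top row. These conventions and all function names are assumptions made for illustration. The last helper shows the oversampling discussed above: a row at latitude φ holds the same number of samples as the equator but covers a circle that is shorter by a factor of cos φ.

```python
import math


def erp_pixel_to_sphere(i, j, width, height):
    """Map ERP pixel centre (column i, row j) to (longitude, latitude) in radians."""
    lon = ((i + 0.5) / width - 0.5) * 2.0 * math.pi   # range [-pi, pi)
    lat = (0.5 - (j + 0.5) / height) * math.pi        # +pi/2 at top, -pi/2 at bottom
    return lon, lat


def sphere_to_erp_pixel(lon, lat, width, height):
    """Inverse mapping: (longitude, latitude) back to fractional pixel coordinates."""
    i = (lon / (2.0 * math.pi) + 0.5) * width - 0.5
    j = (0.5 - lat / math.pi) * height - 0.5
    return i, j


def erp_horizontal_oversampling(j, height):
    """Factor by which row j is oversampled relative to the equator: 1 / cos(lat)."""
    _, lat = erp_pixel_to_sphere(0, j, 1, height)
    return 1.0 / math.cos(lat)
```

With these conventions, a row at 60° latitude carries twice as many samples per unit of arc length as the equator, and the factor grows without bound toward the poles.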

To assess the performance of these formats and compression technologies for 360° content, or to develop new algorithms, it is essential to evaluate the existing methods accurately. This study focuses on the effect of the projection format on coding efficiency and provides an overview of a quality evaluation framework for various 360° image formats. The framework generates the various 360° image formats through format conversion, which is the primary aspect of the proposed framework. Quality can be measured at different points in the processing workflow using different objective quality metrics (OQMs), and the results are analyzed to evaluate how the various formats and codecs affect these metrics.

Standardization activities that rank these formats by quality performance for a future image coding standard are limited. The numerous formats proposed so far complicate the choice of configuration for businesses and researchers, so evidence is needed for selecting the best format from among the state-of-the-art formats. The main contributions can be summarized as follows:

We aim to find the coding efficiency of various formats for the 360° image when the ERP image is given.

We evaluate JPEG and JPEG 2000 as widely used image coding standards in two subsampling modes (4:4:4 and 4:2:0).

We consider the downsampled ERP as the ground truth image in our evaluation. Format conversion is applied to the downsampled ERP to find the coding gain of other formats.

We consider the recently proposed formats of equi-angular cubemap (EAC), equatorial cylindrical projection (ECP), and hybrid equi-angular cubemap (HEC).

We evaluate outcomes using quality metrics designed for 360° content and conventional 2D metrics and analyze their effects.

We evaluate both codec-level distortions and end-to-end (E2E) distortions.

We choose the image sizes recommended by a common test condition (CTC) of JVET [12].
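The two subsampling modes named in the contributions differ in how many chroma samples the codec has to represent. As a small illustration, the sketch below counts the luma and chroma samples of a YCbCr image in each mode. The function name and the 4096 × 2048 example size are assumptions chosen for the example, not the sizes used in the experiments.

```python
def samples_per_image(width, height, mode):
    """Total luma + chroma samples of a YCbCr image for a subsampling mode."""
    # (horizontal, vertical) chroma decimation factors for each mode
    factors = {"4:4:4": (1, 1), "4:2:0": (2, 2)}
    fx, fy = factors[mode]
    luma = width * height
    chroma_w = -(-width // fx)    # ceiling division
    chroma_h = -(-height // fy)
    return luma + 2 * chroma_w * chroma_h


full = samples_per_image(4096, 2048, "4:4:4")
sub = samples_per_image(4096, 2048, "4:2:0")
print(full, sub, sub / full)
```

For even image dimensions, 4:2:0 halves the total sample count relative to 4:4:4, so the two modes place quite different loads on the encoder before any quantization takes place.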

The remainder of this paper is organized as follows. Section 2 introduces a brief overview of projection formats, objective quality metrics, and related works. Section 3 presents our proposed framework for the evaluation of 360° image projection formats. Section 4 presents the experimental setup, datasets, and evaluation results, followed by a discussion. Finally, Section 5 concludes the paper with future research directions.

2. Related Works

A testing procedure on the exploration of coding efficiency of different formats for videos (especially) is carried out in [

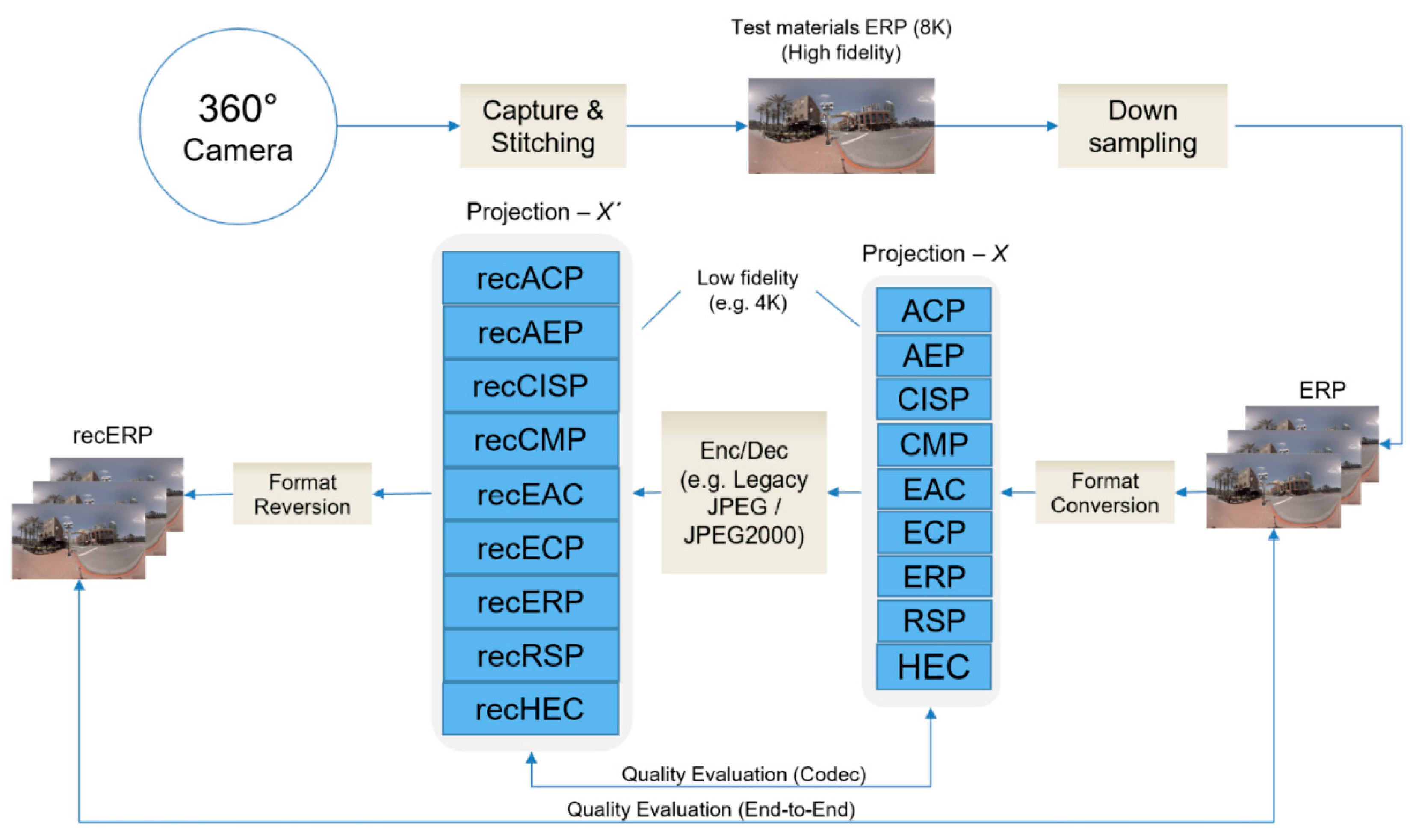

13]. The framework is based on the coding performance of projection formats, as shown in

Figure 1. Before encoding the image or video, it passes through steps A to F. First, the content is captured by covering the scene in all directions via an array of cameras, and the content is stitched to achieve the spherical view in high resolution. The content is projected on a two-dimensional plane (also known as “conversion to coding geometry”) and packed to use the generic image or video coding frameworks. The content is encoded and then decoded, and an inverse operation is performed, shown as F’ to A’. Quality metrics designed for 360° are used at two levels, i.e., for codec distortion measurement between F and F’, and for E2E distortion measurement between B and B’. More detail on the projection formats is preferentially referred to in [

4,

14,

15].
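The two measurement points described above can be illustrated with a toy one-dimensional pipeline. This is only a sketch under loud assumptions: decimation by two stands in for the conversion to coding geometry, uniform quantization stands in for the encoder and decoder, and sample repetition stands in for the inverse conversion. None of these stand-ins, nor the function names, come from the framework in [13].

```python
def mse(a, b):
    """Mean squared error between two equal-length sequences."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def to_coding_geometry(signal):
    """Stand-in for format conversion: keep every second sample."""
    return signal[::2]


def from_coding_geometry(signal):
    """Stand-in for inverse conversion: repeat each sample twice."""
    return [s for s in signal for _ in range(2)]


def toy_codec(signal, step=8):
    """Stand-in for encode + decode: uniform quantisation with the given step."""
    return [step * round(s / step) for s in signal]


source = [float((7 * n) % 50) for n in range(64)]   # point B (input signal)
converted = to_coding_geometry(source)               # point F
decoded = toy_codec(converted)                       # point F'
reconstructed = from_coding_geometry(decoded)        # point B'

codec_mse = mse(converted, decoded)     # codec-level distortion (F vs F')
e2e_mse = mse(source, reconstructed)    # end-to-end distortion (B vs B')
print(codec_mse, e2e_mse)
```

The codec-level figure only reflects the quantization error, whereas the E2E figure also includes whatever was lost in the forward and inverse conversion, which is why the two levels can rank formats differently.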

A projection-based coding technique projects the spherical content onto a 2D plane and uses a generic coding standard for the subsequent compression. Projection formats introduced in the JVET activities include adjusted cubemap projection (ACP), adjusted equal-area projection (AEP), CMP, EAC, ECP, ERP, rotated sphere projection (RSP), compact icosahedron projection (CISP), and HEC. HEC was originally called the modified cubemap projection (MCP) but was renamed because it is based on the EAC format. ERP and CMP are well-known formats in the industry. ERP maps the sphere to a 2D rectangle by stretching the pixels in the latitude direction, which has a severe effect on bandwidth consumption around the north and south poles of the sphere. In contrast, CMP maps the sphere onto the six faces of a cube, which mitigates the geometry distortions caused by scaling. Therefore, CMP can achieve a higher coding efficiency than ERP.

ACP [16], EAC [17], and HEC [18,19] are modified versions of CMP. Mapping the sphere onto the cube faces gives the edges of each face more pixels than its center, and this oversampling problem is resolved in ACP and EAC. HEC builds on EAC to provide an even more uniform sampling. EAP was proposed to solve the oversampling problem in ERP and guarantees that an area in the 2D plane is equal to the corresponding area on the original sphere [20]. AEP [21], a modification of the conventional EAP, replaced EAP to improve the compression performance and avoid the visual quality problem around the poles caused by the conventional EAP format. ERP and EAP are the two earliest formats and were derived from map projections; compression efficiency was therefore not a key factor in their design [4].

ECP is a modified version of the cylindrical projection format [22]. It has no distortion along the equator, but distortion increases rapidly toward the poles. Unlike ERP, it preserves the scale of vertical objects, e.g., buildings, which is important for architectural scenes. CISP was proposed by Samsung and is a patch-based format that reduces oversampling at the cost of discontinuous boundaries [4]. RSP was proposed by GoPro Inc. [4] to improve coding efficiency; in RSP, the original sphere is rotated before projection. This format unfolds the sphere under two different rotation angles and stitches the two parts together like the surface of a baseball.
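The edge oversampling of CMP mentioned above, and the way EAC removes it, can be checked numerically along the center line of a single cube face. The sketch assumes a face coordinate u in [-1, 1]: for CMP, u is the tangent of the viewing angle, and for EAC, u is proportional to the viewing angle. It is a one-dimensional illustration with assumed function names, not an implementation of either format.

```python
import math


def cmp_angle(u):
    """CMP: face coordinate u in [-1, 1] is the tangent of the view angle."""
    return math.atan(u)


def eac_angle(u):
    """EAC: face coordinate u in [-1, 1] is proportional to the view angle."""
    return u * math.pi / 4.0


def angular_step(angle_fn, u, du=1e-6):
    """Angle (radians) covered per unit of face coordinate around u."""
    return (angle_fn(u + du) - angle_fn(u - du)) / (2.0 * du)


def edge_to_centre_density(angle_fn):
    """Ratio of sample density at the face edge to that at the face centre."""
    # Density is samples per angle, i.e. the inverse of the angular step.
    return angular_step(angle_fn, 0.0) / angular_step(angle_fn, 1.0 - 1e-6)


print(edge_to_centre_density(cmp_angle))  # close to 2
print(edge_to_centre_density(eac_angle))  # close to 1
```

Along this line, CMP places about twice as many samples per degree at the face edge as at the face center, while the equi-angular mapping keeps the density constant.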

An evaluation of 360° videos using projection-based coding techniques was carried out by Z. Chen et al. [4]. They reviewed a number of formats and quality evaluation methods and outlined several directions for omnidirectional video research. Different formats introduce different artifacts, such as redundant samples, shape distortions, and discontinuous boundaries, which reduce the coding efficiency. It is reported that ACP outperforms the other formats as far as the coding efficiency of the HEVC test model (HM)/HEVC [23] is concerned. Recently, two new formats, ECP and HEC, have been proposed by Qualcomm and MediaTek, and it is reported in the JVET that HEC shows better coding efficiency because it has a more uniform sampling [19]. A scalable ROI-based 360° coding technique reported in the literature can save approximately 75% of the average bitrate with no significant decrease in quality. The viewer's point of view (POV) is encoded in high quality and the non-POV area is encoded in low quality, based on the ERP format, which leads to high bitrate savings [5].

Quality assessment is a critical issue for 360° content. Because the content is rendered on the sphere after decoding for viewing, the traditional peak signal-to-noise ratio (PSNR) does not reflect the actual quality. Many experiments indicate that the structural similarity index (SSIM) is more consistent with subjective quality evaluation than PSNR [24,25]. Applying global OQMs such as PSNR and SSIM directly in the planar domain is straightforward; however, they give the same importance to all parts of the 360° content, which differs from classical images in this respect. Different parts of 360° content have different viewing probabilities, and probably different importance [26]. A good OQM should correlate well with the distortion perceived by a human [9]. A study on quality metrics for 360° content reports that PSNR-related quality measures correlate well with the subjective quality [27]. Weighted spherical PSNR (WS-PSNR) was introduced by Sun et al. [28]. It does not require remapping from the plane; instead, it weights the distortion of each sample according to the sampling rate of the projection at that position.
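As a minimal sketch of this weighting idea for the ERP format, the code below weights each row by the cosine of its latitude, which is the commonly used ERP weight, and computes a weighted PSNR on a single channel. Images are represented as plain lists of rows, and the function names and the 8-bit peak value are assumptions made for the example. It is not the reference implementation of [28].

```python
import math


def erp_weight(j, height):
    """Weight of ERP row j, proportional to the spherical area its pixels cover."""
    return math.cos((j + 0.5 - height / 2.0) * math.pi / height)


def ws_psnr_erp(ref, test, max_value=255.0):
    """Weighted PSNR (dB) for two equal-sized single-channel ERP images."""
    height = len(ref)
    weighted_error = 0.0
    weight_sum = 0.0
    for j in range(height):
        w = erp_weight(j, height)
        for r, t in zip(ref[j], test[j]):
            weighted_error += w * (r - t) ** 2
            weight_sum += w
    wmse = weighted_error / weight_sum
    if wmse == 0.0:
        return float("inf")
    return 10.0 * math.log10(max_value ** 2 / wmse)
```

With this weighting, an error placed in a row near a pole lowers the score less than the same error placed near the equator, which matches the smaller spherical area that polar rows represent.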

Meanwhile, Zakharchenko et al. introduced Craster parabolic projection PSNR (CPP-PSNR), a metric that remaps the 360° content to the Craster parabolic projection before computing PSNR in order to compare different geometrical representations [29]. The metric can be used for cross-format quality measurement, e.g., when the reference is 8K and the test image is 4K or in a different format. However, no correlation analysis with subjective scores is provided for the proposed metric. In addition, E. Upenik et al. [30] reported that quality metrics designed for 360° content do not outperform metrics designed for conventional 2D images, such as SSIM and visual information fidelity (VIF). Another metric, the spherical structural similarity (SSSIM) index [31], was designed especially for 360° content and attempts to measure the quality on the basis of the standard SSIM. Investigations have also been conducted into the observation that subjects consistently prefer looking at the center of the front region of 360° content [32]. However, dependencies on the content of the video still exist. Two subjective and two objective metrics were proposed for assessing the quality, named overall difference mean opinion score, vectorized difference mean opinion score, non-content based PSNR, and CPP-PSNR. In general, all of the above metrics aim to address the problems of the traditional metrics.

Today, a vital obstacle to the development of perceptually optimized OQMs is the lack of a common 360° quality dataset. A study on the subjective quality evaluation of compressed VR images reported that the multiscale SSIM (MS-SSIM) and analysis of distortion distribution SSIM (ADD-SSIM) models correlate highly with human visual perception [33]. A subjective 360° content database is provided in [34] for quality assessment (QA) in coding applications, based on subjective ratings and a differential mean opinion score (DMOS). Another 360° content database, which includes 16 source images and 528 compressed ones, is provided in [35] and can be used to facilitate future research on coding applications.

5. Conclusions

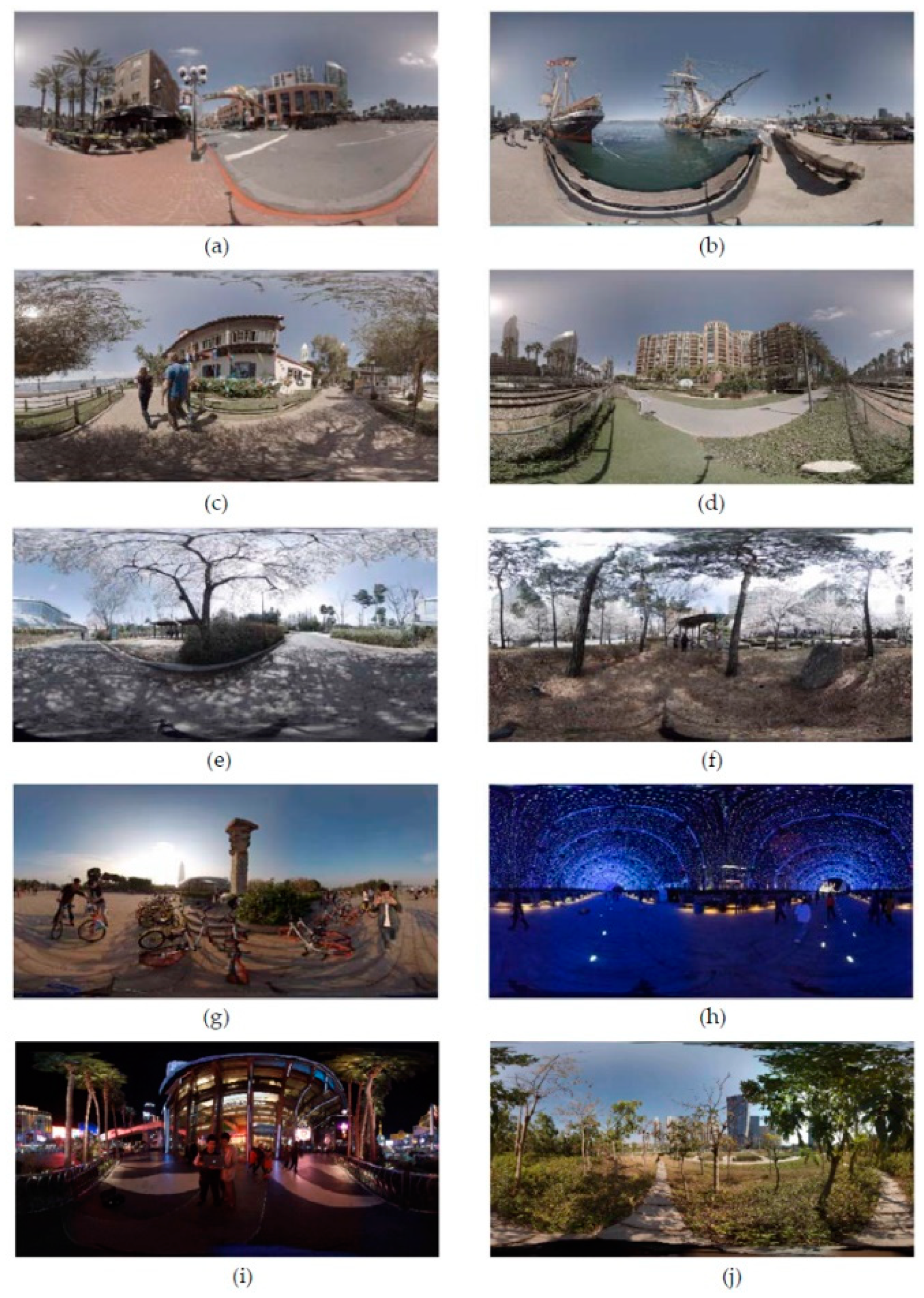

This study evaluated 360° image projection formats adapted as input to the legacy JPEG and JPEG 2000 codecs when a ground truth image is given. The various formats are generated from the ERP format. Nine state-of-the-art formats were selected for our evaluation: ACP, AEP, CISP, CMP, EAC, ECP, ERP, RSP, and HEC. We propose an evaluation framework that reduces the bias toward the ERP format and employs six OQMs: WS-PSNR, PSNR, SSIM, VIF, ADD-SSIM, and PSIM. The selected sample images have attributes that expose differences in perceptual image quality. We conclude that the ERP format, which is currently commonly used in the industry, is not well suited in terms of coding performance. HEC and ECP were found to be the best of the selected state-of-the-art formats and showed a significant gain over the ERP format at the codec level. However, the OQMs still contradict one another when distortion is measured at the E2E level.

Currently, the evaluation has been conducted for still images. In the future, we plan to focus on the following three research directions.

- (1) Extending our research to videos should prove interesting.

- (2) Designing a quality metric for 360° content that correlates well with the distortion perceived by a human would be interesting for many researchers.

- (3) It would also be a valuable contribution to the community to have a comprehensive set of subjective tests. The subjective tests can be carried out in two directions: the distortion introduced by the format conversion step that adapts the content to the input of an existing coding standard, and the distortion introduced by the codec.