A Smart System for Low-Light Image Enhancement with Color Constancy and Detail Manipulation in Complex Light Environments

Abstract

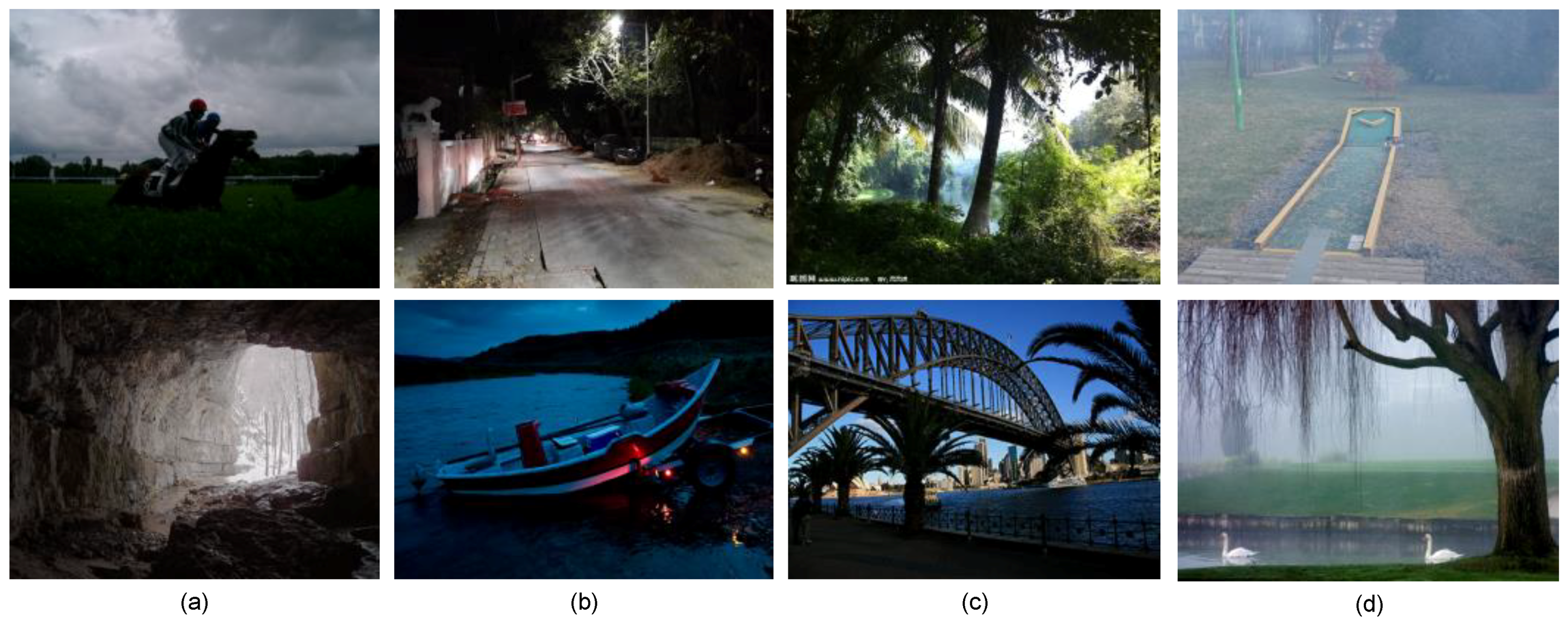

1. Introduction

2. Literature Review

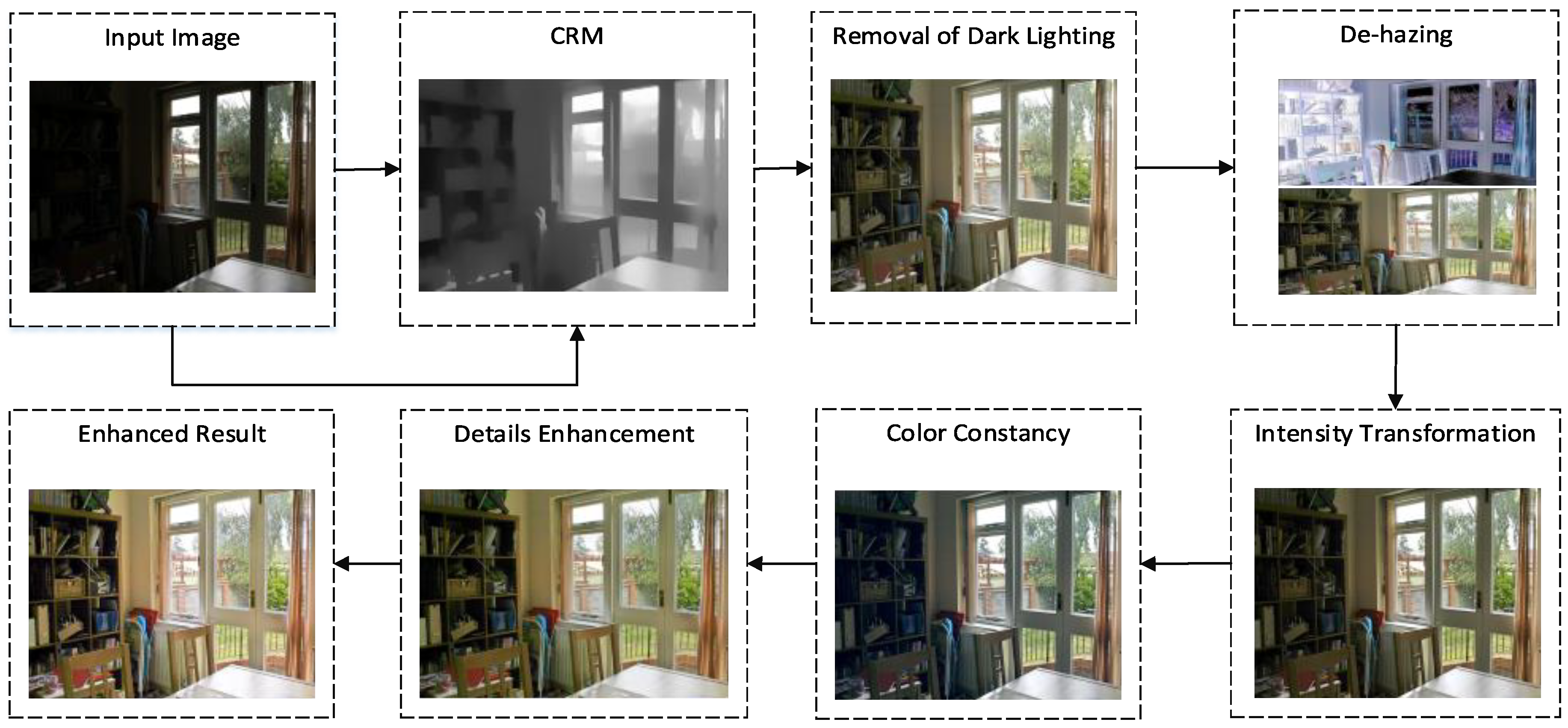

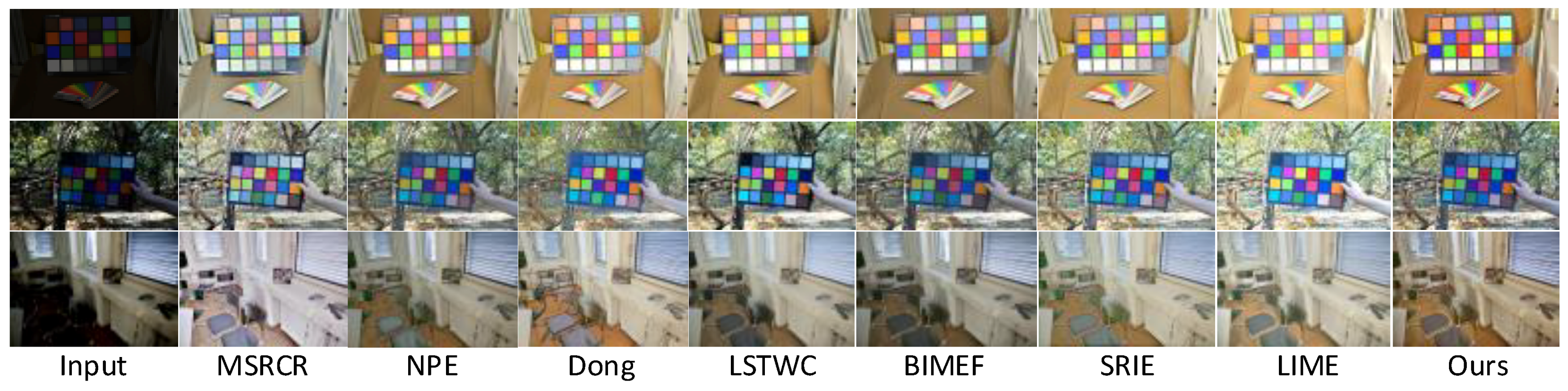

3. Proposed Framework

3.1. Camera Response Model
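
Section 3.1 is built on a camera response model, but its formulation is not reproduced in this text. As an illustration only, the sketch below implements the Beta-Gamma brightness transform associated with Ying et al. (listed in the References): g(P, k) = βP^γ with β = exp(b(1 - k^a)) and γ = k^a, where P is a normalized pixel value and k an exposure ratio. The constants a = -0.3293 and b = 1.1258 are assumed from that line of work, `btf` is a hypothetical name, and the model actually used in this paper may differ.

```python
import numpy as np

# Beta-Gamma model constants: assumed values from Ying et al., not confirmed
# as the ones used in this paper.
A_PARAM = -0.3293
B_PARAM = 1.1258


def btf(image: np.ndarray, k: float, a: float = A_PARAM, b: float = B_PARAM) -> np.ndarray:
    """Brightness transform g(P, k) = beta * P**gamma for P in [0, 1].

    gamma = k**a and beta = exp(b * (1 - gamma)); k > 1 simulates a longer exposure.
    """
    if k <= 0:
        raise ValueError("exposure ratio k must be positive")
    gamma = k ** a
    beta = np.exp(b * (1.0 - gamma))
    out = beta * np.power(image, gamma)
    return np.clip(out, 0.0, 1.0)  # keep the result a valid intensity


if __name__ == "__main__":
    dark = np.array([[0.05, 0.10], [0.20, 0.40]])
    for k in (1.0, 2.0, 4.0):
        print(k, np.round(btf(dark, k), 3))
```

With k = 1 the transform reduces to the identity (γ = 1, β = 1); larger k lifts dark pixels more strongly, and clipping keeps the output in [0, 1].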

3.2. Dehazing and Intensity Transformation
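
Section 3.2 pairs dehazing with an intensity transformation. A related idea from Dong et al. (listed in the References) is that an inverted low-light image looks similar to a hazy one, so dehazing 1 - I and inverting the result back brightens the image. The sketch below illustrates that idea using the dark channel prior of He et al. for the dehazing step. The patch size, ω = 0.8, t0 = 0.1, the top-0.1% rule for the atmospheric light (with a per-channel maximum as a simplification), and all function names are assumptions, not this paper's implementation.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def dark_channel(img: np.ndarray, patch: int = 15) -> np.ndarray:
    """Minimum over RGB and over a patch x patch neighborhood (patch must be odd)."""
    if patch % 2 == 0:
        raise ValueError("patch size must be odd")
    min_rgb = img.min(axis=2)
    padded = np.pad(min_rgb, patch // 2, mode="edge")
    return sliding_window_view(padded, (patch, patch)).min(axis=(-2, -1))


def atmospheric_light(img: np.ndarray, dark: np.ndarray, top: float = 0.001) -> np.ndarray:
    """Per-channel maximum over the brightest `top` fraction of dark-channel pixels."""
    n = max(1, int(dark.size * top))
    idx = np.argsort(dark.ravel())[-n:]
    return img.reshape(-1, 3)[idx].max(axis=0)


def dehaze(img: np.ndarray, omega: float = 0.8, t0: float = 0.1, patch: int = 15) -> np.ndarray:
    """Dark-channel-prior recovery J = (I - A) / max(t, t0) + A."""
    A = np.maximum(atmospheric_light(img, dark_channel(img, patch)), 1e-6)
    t = 1.0 - omega * dark_channel(img / A, patch)  # transmission estimate
    t = np.maximum(t, t0)[..., None]
    return np.clip((img - A) / t + A, 0.0, 1.0)


def enhance_low_light(img: np.ndarray, **kwargs) -> np.ndarray:
    """Invert, dehaze, invert back (float RGB image in [0, 1])."""
    return 1.0 - dehaze(1.0 - img, **kwargs)


if __name__ == "__main__":
    scene = np.full((20, 20, 3), 0.1)
    scene[8:12, 8:12] = 0.3  # a slightly brighter object in a dark scene
    result = enhance_low_light(scene, patch=3)
    print(scene.mean(), result.mean())
```

A uniform region whose inverted value equals the estimated atmospheric light is left unchanged, while structure against it is lifted; practical pipelines usually also refine the transmission map before recovery.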

3.3. Color Constancy
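
Section 3.3 concerns color constancy, and the References include Buchsbaum's spatial processor model, the origin of the gray-world assumption. The sketch below shows that classic baseline: it assumes the average scene reflectance is achromatic and rescales each channel so the channel means become equal. It is a generic illustration rather than necessarily the correction applied in this paper, and `gray_world` is a hypothetical name.

```python
import numpy as np


def gray_world(img: np.ndarray, eps: float = 1e-6) -> np.ndarray:
    """Gray-world white balance for a float RGB image in [0, 1], shape (H, W, 3).

    Each channel is scaled until its mean equals the mean over all three channels.
    """
    channel_means = img.reshape(-1, 3).mean(axis=0)
    target = channel_means.mean()
    gains = target / np.maximum(channel_means, eps)  # eps avoids division by zero
    return np.clip(img * gains, 0.0, 1.0)


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    # Synthetic image with a warm cast: red strongest, blue weakest.
    cast = rng.uniform(0.0, 0.5, size=(16, 16, 3)) * np.array([1.0, 0.8, 0.5])
    balanced = gray_world(cast)
    print(cast.reshape(-1, 3).mean(axis=0), balanced.reshape(-1, 3).mean(axis=0))
```

Gray world is only a baseline: it fails on scenes dominated by a single color, and when clipping occurs the channel means are equalized only approximately.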

3.4. Details Enhancement
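
Section 3.4 concerns detail enhancement, and the References include the multiscale detail enhancement of Fattal et al. The sketch below shows only the general base/detail decomposition idea: the image is smoothed at increasing Gaussian scales, the differences between successive levels form detail layers, and those layers are amplified before being added back to the smoothest level. The scales and gains are assumptions, plain Gaussian blurs stand in for the edge-aware filtering such methods normally use, and the function names are hypothetical.

```python
import numpy as np


def gaussian_kernel(sigma: float) -> np.ndarray:
    """Normalized 1-D Gaussian kernel with radius 3 * sigma."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=float)
    kernel = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return kernel / kernel.sum()


def blur(channel: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian blur of a 2-D array with edge padding."""
    kernel = gaussian_kernel(sigma)
    r = len(kernel) // 2
    padded = np.pad(channel, r, mode="edge")

    def conv(v: np.ndarray) -> np.ndarray:
        return np.convolve(v, kernel, mode="valid")  # 'valid' trims the padding

    rows = np.apply_along_axis(conv, 1, padded)
    return np.apply_along_axis(conv, 0, rows)


def enhance_details(img, sigmas=(1.0, 2.0, 4.0), gains=(1.5, 1.3, 1.1)):
    """Boost fine-to-coarse detail layers of a float image in [0, 1].

    Works on (H, W) arrays; (H, W, C) arrays are processed per channel.
    """
    if len(sigmas) != len(gains):
        raise ValueError("need one gain per scale")
    if img.ndim == 3:
        return np.stack([enhance_details(img[..., c], sigmas, gains)
                         for c in range(img.shape[2])], axis=-1)
    layers = [img] + [blur(img, s) for s in sigmas]  # progressively smoother
    base = layers[-1]
    # Detail layer i is the difference between successive smoothing levels.
    details = [layers[i] - layers[i + 1] for i in range(len(sigmas))]
    out = base + sum(g * d for g, d in zip(gains, details))
    return np.clip(out, 0.0, 1.0)
```

With every gain set to 1 the detail layers telescope back to the input, so gains above 1 control how strongly each scale is sharpened; plain Gaussian smoothing can produce halos at strong edges, which edge-aware filters are designed to reduce.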

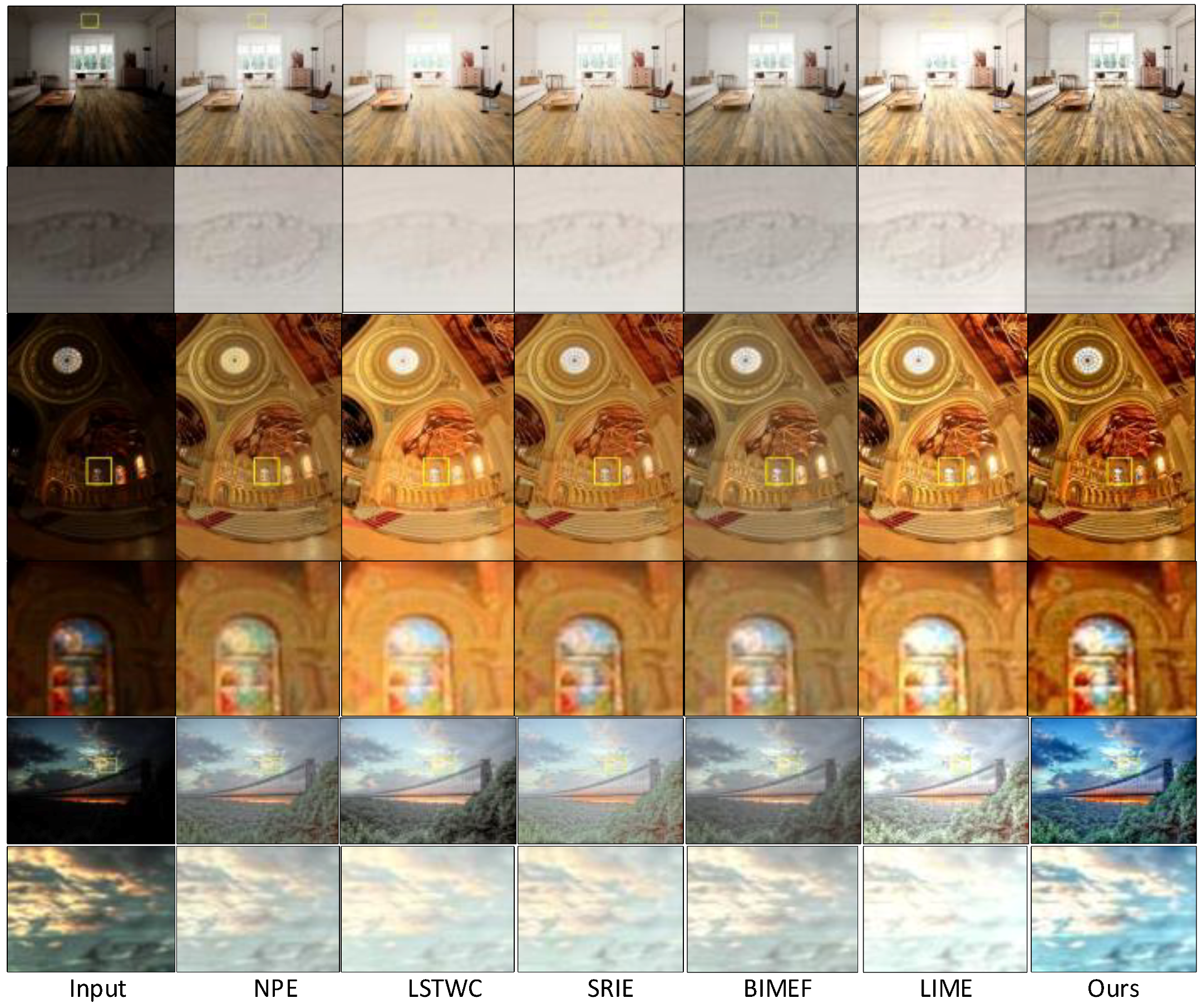

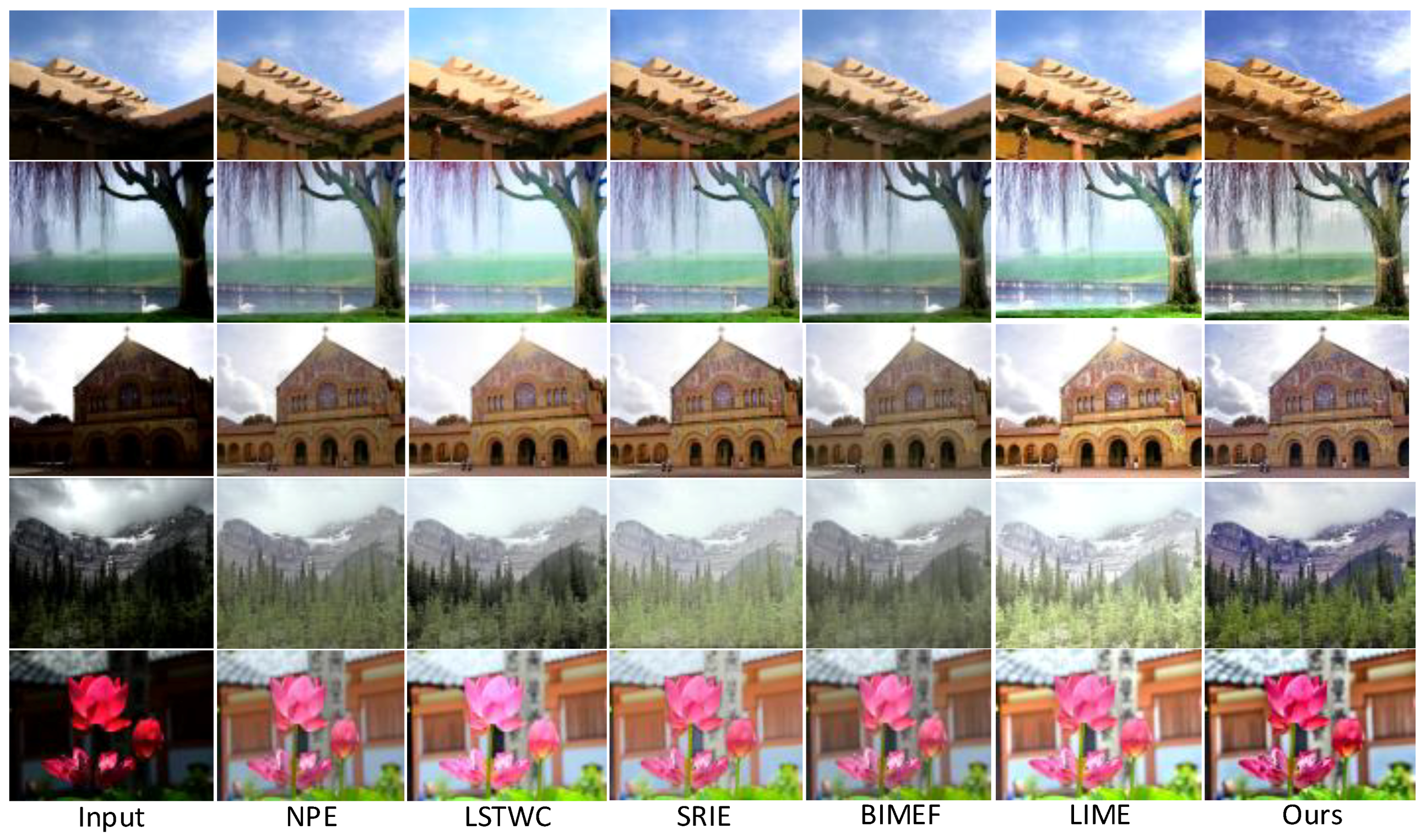

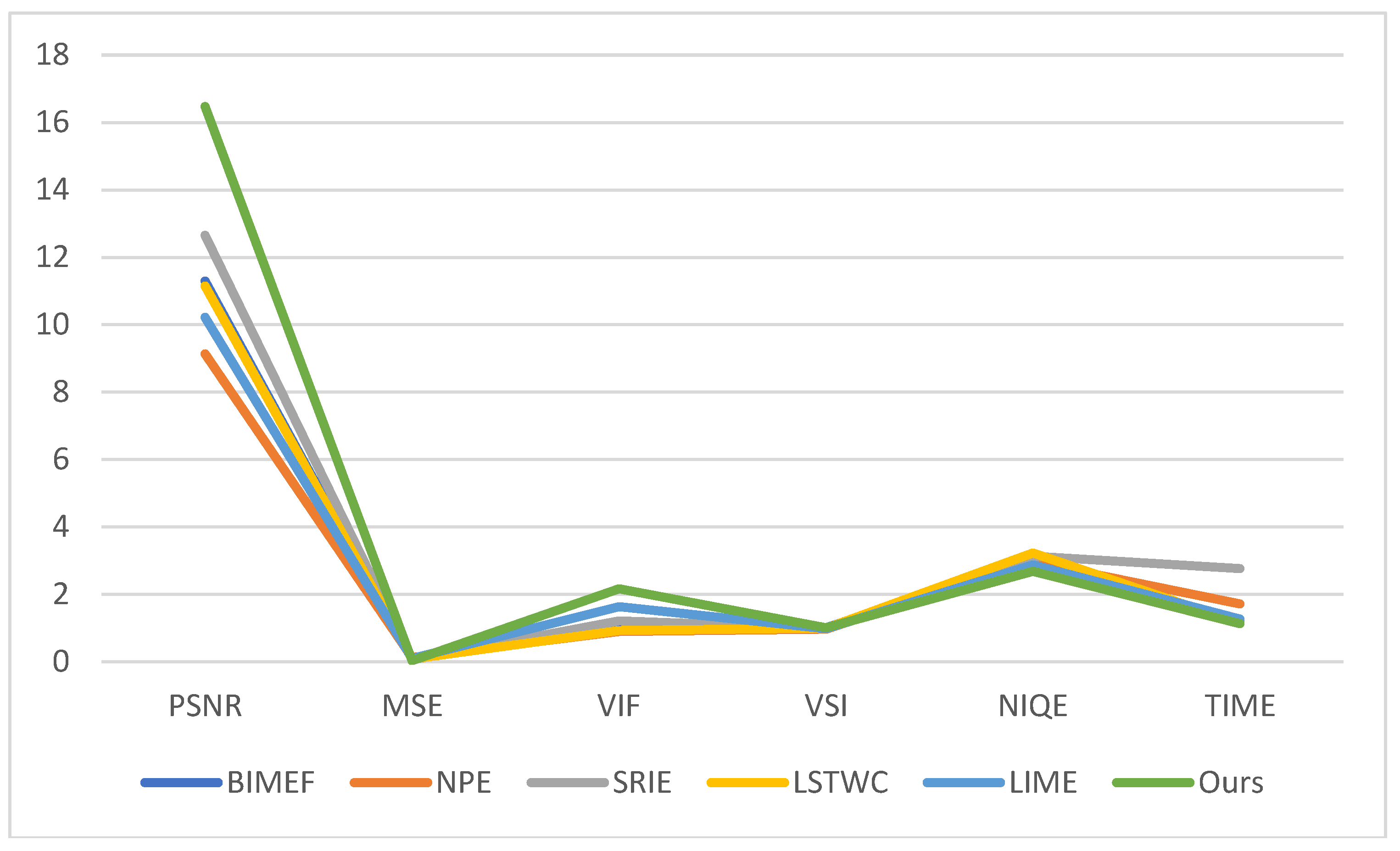

4. Evaluation and Results

4.1. Light Distortion
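
Section 4.1 assesses light distortion. A common measure for this is the lightness order error (LOE) introduced by Wang et al. with the NPE method (listed in the References): for each pixel x, RD(x) counts the pixels y whose lightness order relative to x differs between the original and the enhanced image, and LOE is the mean of RD(x). The sketch below follows that definition with lightness taken as the per-pixel maximum over RGB; whether the paper uses exactly this protocol, the stride-based subsampling, and the function name are assumptions.

```python
import numpy as np


def lightness_order_error(original: np.ndarray, enhanced: np.ndarray, step: int = 8) -> float:
    """Lightness order error (LOE) between two RGB images of equal shape (H, W, 3).

    Lower is better: 0 means the relative lightness order of every sampled pixel
    pair is preserved. `step` subsamples both images to keep the pairwise
    comparison small (an assumption of this sketch).
    """
    if original.shape != enhanced.shape:
        raise ValueError("images must have the same shape")
    # Lightness L(x): maximum over the color channels, then subsampled.
    light = original.max(axis=2)[::step, ::step].ravel()
    light_e = enhanced.max(axis=2)[::step, ::step].ravel()
    # U(p, q) = 1 when p >= q, evaluated for every ordered pixel pair.
    order = light[:, None] >= light[None, :]
    order_e = light_e[:, None] >= light_e[None, :]
    # RD(x) counts pairs whose order flips; LOE is the mean of RD over x.
    rd = np.logical_xor(order, order_e).sum(axis=1)
    return float(rd.mean())
```

Because the count grows with the number of sampled pixels, LOE values are only comparable when every method is evaluated with the same sampling.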

4.2. Color Distortion

4.3. Running Time Comparison

5. Conclusions and Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Guo, X.; Li, Y.; Ling, H. LIME: Low-light image enhancement via illumination map estimation. IEEE Trans. Image Process. 2017, 26, 982–993.

- Zhu, H.; Chan, F.H.; Lam, F.K. Image contrast enhancement by constrained local histogram equalization. Comput. Vis. Image Underst. 1999, 73, 281–290.

- Bernardin, K.; Stiefelhagen, R. Evaluating multiple object tracking performance: The CLEAR MOT metrics. J. Image Video Process. 2008, 2008, 1.

- Jin, L.; Satoh, S.; Sakauchi, M. A novel adaptive image enhancement algorithm for face detection. In Proceedings of the 17th International Conference on Pattern Recognition, ICPR 2004, Cambridge, UK, 26 August 2004; pp. 843–848.

- Leyvand, T.; Cohen-Or, D.; Dror, G.; Lischinski, D. Data-driven enhancement of facial attractiveness. ACM Trans. Gr. (TOG) 2008, 27, 38.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Ghita, O.; Whelan, P.F. A new GVF-based image enhancement formulation for use in the presence of mixed noise. Pattern Recognit. 2010, 43, 2646–2658.

- Panetta, K.; Agaian, S.; Zhou, Y.; Wharton, E.J. Parameterized logarithmic framework for image enhancement. IEEE Trans. Syst. Man Cybern. Part B (Cybern.) 2011, 41, 460–473.

- Stark, J.A. Adaptive image contrast enhancement using generalizations of histogram equalization. IEEE Trans. Image Process. 2000, 9, 889–896.

- Huang, S.-C.; Cheng, F.-C.; Chiu, Y.-S. Efficient contrast enhancement using adaptive gamma correction with weighting distribution. IEEE Trans. Image Process. 2013, 22, 1032–1041.

- Pizer, S.M.; Amburn, E.P.; Austin, J.D.; Cromartie, R.; Geselowitz, A.; Greer, T.; ter Haar Romeny, B.; Zimmerman, J.B.; Zuiderveld, K. Adaptive histogram equalization and its variations. Comput. Vis. Gr. Image Process. 1987, 39, 355–368.

- Reza, A.M. Realization of the contrast limited adaptive histogram equalization (CLAHE) for real-time image enhancement. J. VLSI Signal Process. Syst. Signal Image Video Technol. 2004, 38, 35–44.

- Chen, S.-D.; Ramli, A.R. Minimum mean brightness error bi-histogram equalization in contrast enhancement. IEEE Trans. Consum. Electron. 2003, 49, 1310–1319.

- Land, E.H. The retinex theory of color vision. Sci. Am. 1977, 237, 108–129.

- Liu, J.-P.; Zhao, Y.-M.; Hu, F.-Q. A nonlinear image enhancement algorithm based on single scale retinex. J.-Shanghai Jiaotong Univ.-Chin. Ed. 2007, 41, 685–688.

- Jobson, D.J.; Rahman, Z.-U.; Woodell, G.A. A multiscale retinex for bridging the gap between color images and the human observation of scenes. IEEE Trans. Image Process. 1997, 6, 965–976.

- Dong, X.; Pang, Y.A.; Wen, J.G. Fast efficient algorithm for enhancement of low lighting video. In Proceedings of the 2011 IEEE International Conference on Multimedia and Expo, Barcelona, Spain, 11–15 July 2011.

- Fu, X.; Zeng, D.; Huang, Y.; Zhang, X.P.; Ding, X. A weighted variational model for simultaneous reflectance and illumination estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2782–2790.

- Lee, J.Y.; Sunkavalli, K.; Lin, Z.; Shen, X.; So Kweon, I. Automatic content-aware color and tone stylization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2470–2478.

- Bhutada, G.G.; Anand, R.S.; Saxena, S.C. Edge preserved image enhancement using adaptive fusion of images denoised by wavelet and curvelet transform. Dig. Signal Process. 2011, 21, 118–130.

- Zha, X.-Q.; Luo, J.-P.; Jiang, S.-T.; Wang, J.-H. Enhancement of polysaccharide production in suspension cultures of protocorm-like bodies from Dendrobium huoshanense by optimization of medium compositions and feeding of sucrose. Process Biochem. 2007, 42, 344–351.

- Matin, F.; Jung, Y.; Park, K.-H. Multiscale Retinex Algorithm with tuned parameter by Particle Swarm Optimization. Korea Inst. Commun. Sci. Proc. Symp. Korean Inst. Commun. Inf. Sci. 2017, 6, 1636.

- Lin, H.; Shi, Z. Multi-scale retinex improvement for nighttime image enhancement. Optik-Int. J. Light Electron Opt. 2014, 125, 7143–7148.

- Song, J.; Zhang, L.; Shen, P.; Peng, X.; Zhu, G. Single low-light image enhancement using luminance map. In Proceedings of the Chinese Conference on Pattern Recognition, Chengdu, China, 5–7 November 2016; pp. 101–110.

- Tai, Y.-W.; Chen, X.; Kim, S.; Kim, S.J.; Li, F.; Yang, J.; Yu, J.; Matsushita, Y.; Brown, M.S. Nonlinear camera response functions and image deblurring: Theoretical analysis and practice. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 2498–2512.

- Huo, Y.; Zhang, X. Single image-based HDR image generation with camera response function estimation. IET Image Process. 2017, 11, 1317–1324.

- Ying, Z.; Li, G.; Ren, Y.; Wang, R.; Wang, W. A New Image Contrast Enhancement Algorithm Using Exposure Fusion Framework. In Proceedings of the International Conference on Computer Analysis of Images and Patterns, Ystad, Sweden, 22–24 August 2017; pp. 36–46.

- Qian, X.; Wang, Y.; Wang, B. Fast color contrast enhancement method for color night vision. Infrared Phys. Technol. 2012, 55, 122–129.

- Raju, G.; Nair, M.S. A fast and efficient color image enhancement method based on fuzzy-logic and histogram. AEU-Int. J. Electron. Commun. 2014, 68, 237–243.

- Hao, S.; Feng, Z.; Guo, Y. Low-light image enhancement with a refined illumination map. Multimed. Tools Appl. 2017, 77, 29639–29650.

- Guo, H.; Zhang, G.; Mei, C.; Zhang, D.; Song, X. Color enhancement algorithm for low-quality image based on gamma mapping. In Proceedings of the Sixth International Conference on Electronics and Information Engineering, Dalian, China, 3 December 2015; p. 97941X.

- Provenzi, E.; Caselles, V. A wavelet perspective on variational perceptually-inspired color enhancement. Int. J. Comput. Vis. 2014, 106, 153–171.

- Fu, X.; Zeng, D.; Huang, Y.; Liao, Y.; Ding, X.; Paisley, J. A fusion-based enhancing method for weakly illuminated images. Signal Process. 2016, 129, 82–96.

- Mann, S. Comparametric equations with practical applications in quantigraphic image processing. IEEE Trans. Image Process. 2000, 9, 1389–1406.

- He, K.; Sun, J.; Tang, X. Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 2341–2353.

- Buchsbaum, G. A spatial processor model for object colour perception. J. Frankl. Inst. 1980, 310, 1–26.

- Fattal, R.; Agrawala, M.; Rusinkiewicz, S. Multiscale shape and detail enhancement from multi-light image collections. In Proceedings of the ACM Transactions on Graphics (TOG), San Diego, CA, USA, 5–9 August 2007; Volume 26, p. 51.

- Cheng, D.; Prasad, D.K.; Brown, M.S. Illuminant estimation for color constancy: Why spatial-domain methods work and the role of the color distribution. JOSA A 2014, 31, 1049–1058.

- Lynch, S.; Drew, M.; Finlayson, G. Colour constancy from both sides of the shadow edge. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Sydney, NSW, Australia, 2–8 December 2013; pp. 899–906.

- Ma, K.; Zeng, K.; Wang, Z. Perceptual quality assessment for multi-exposure image fusion. IEEE Trans. Image Process. 2015, 24, 3345–3356.

- Vonikakis, V.; Andreadis, I.; Gasteratos, A. Fast centre–surround contrast modification. IET Image Process. 2008, 2, 19–34.

- Petro, A.B.; Sbert, C.; Morel, J.-M. Multiscale retinex. Image Process. On Line 2014, 71–88.

- Wang, S.; Zheng, J.; Hu, H.-M.; Li, B. Naturalness preserved enhancement algorithm for non-uniform illumination images. IEEE Trans. Image Process. 2013, 22, 3538–3548.

- Ying, Z.; Li, G.; Gao, W. A bio-inspired multi-exposure fusion framework for low-light image enhancement. arXiv, 2017; arXiv:1711.00591.

- Łoza, A.; Bull, D.R.; Hill, P.R.; Achim, A.M. Automatic contrast enhancement of low-light images based on local statistics of wavelet coefficients. Dig. Signal Process. 2013, 23, 1856–1866.

- Han, Y.; Cai, Y.; Cao, Y.; Xu, X. A new image fusion performance metric based on visual information fidelity. Inf. Fusion 2013, 14, 127–135.

- Zhang, L.; Shen, Y.; Li, H. VSI: A visual saliency-induced index for perceptual image quality assessment. IEEE Trans. Image Process. 2014, 23, 4270–4281.

- Mittal, A.; Soundararajan, R.; Bovik, A.C. Making a Completely Blind Image Quality Analyzer. IEEE Signal Process. Lett. 2013, 20, 209–212.

| Methods | PSNR | MSE | VIF | VSI | NIQE | TIME |
|---|---|---|---|---|---|---|
| BIMEF | 11.29358 | 0.074241 | 1.07210 | 0.998304 | 3.0815 | 1.179365 |
| NPE | 9.132491 | 0.12211 | 0.891493 | 0.96469 | 2.9666 | 1.717044 |
| SRIE | 12.6508 | 0.054315 | 1.20474 | 0.985432 | 3.1348 | 2.765191 |
| LSTWC | 11.14675 | 0.076794 | 0.91805 | 0.985332 | 3.2217 | 1.14674 |
| LIME | 10.21465 | 0.095178 | 1.630941 | 0.979886 | 2.8744 | 1.269965 |
| Ours | 16.47228 | 0.028364 | 2.166639 | 0.99922 | 2.6730 | 1.128813 |
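
The PSNR and MSE columns of the comparison table are related by PSNR = 10 log10(MAX^2 / MSE). The helper below makes that relation explicit under the assumption that intensities are normalized to [0, 1] (MAX = 1), which matches the scale of the listed MSE values; the function names are hypothetical.

```python
import math

import numpy as np


def image_mse(reference: np.ndarray, test: np.ndarray) -> float:
    """Mean squared error between two images with values in [0, 1]."""
    diff = reference.astype(float) - test.astype(float)
    return float(np.mean(diff ** 2))


def psnr_from_mse(mse: float, max_value: float = 1.0) -> float:
    """PSNR in dB; max_value = 1.0 assumes intensities normalized to [0, 1]."""
    if mse <= 0.0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


def mse_from_psnr(psnr: float, max_value: float = 1.0) -> float:
    """Inverse of psnr_from_mse."""
    return max_value ** 2 / 10.0 ** (psnr / 10.0)


if __name__ == "__main__":
    # MSE values as listed in the comparison table.
    reported_mse = {"BIMEF": 0.074241, "NPE": 0.12211, "SRIE": 0.054315,
                    "LSTWC": 0.076794, "LIME": 0.095178, "Ours": 0.028364}
    for method, mse in reported_mse.items():
        print(f"{method}: {psnr_from_mse(mse):.4f} dB")
```

For the five baseline rows this reproduces the listed PSNR to within rounding; for the listed MSE of 0.028364 of the proposed method it gives approximately 15.47 dB.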

| Methods | NUS | UEA | VV | NPE | LIME | MEF |
|---|---|---|---|---|---|---|
| MSRCR | 3034 | 1578 | 2728 | 1881 | 1829 | 1678 |
| NPE | 411 | 689 | 817 | 639 | 1468 | 1146 |
| Dong | 711 | 1337 | 848 | 1021 | 1239 | 1057 |
| LSTWC | 991 | 881 | 1011 | 994 | 1189 | 979 |
| BIMEF | 789 | 754 | 864 | 912 | 790 | 1021 |
| SRIE | 414 | 657 | 560 | 530 | 819 | 747 |
| LIME | 1428 | 960 | 1168 | 1090 | 1317 | 1063 |
| Ours | 420 | 488 | 423 | 501 | 492 | 337 |

| Dataset | MSRCR | NPE | Dong | SRIE | LIME | LSTWC | BIMEF | Ours |
|---|---|---|---|---|---|---|---|---|
| UEA | 26.93 | 19.59 | 21.60 | 23.70 | 26.18 | 20.99 | 21.23 | 17.99 |
| NUS | 22.04 | 19.89 | 24.51 | 18.55 | 27.79 | 21.55 | 19.66 | 16.89 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Rahman, Z.; Aamir, M.; Pu, Y.-F.; Ullah, F.; Dai, Q. A Smart System for Low-Light Image Enhancement with Color Constancy and Detail Manipulation in Complex Light Environments. Symmetry 2018, 10, 718. https://doi.org/10.3390/sym10120718
