Vegetation Classification and Extraction of Urban Green Spaces Within the Fifth Ring Road of Beijing Based on YOLO v8

Abstract

1. Introduction

2. Materials and Methods

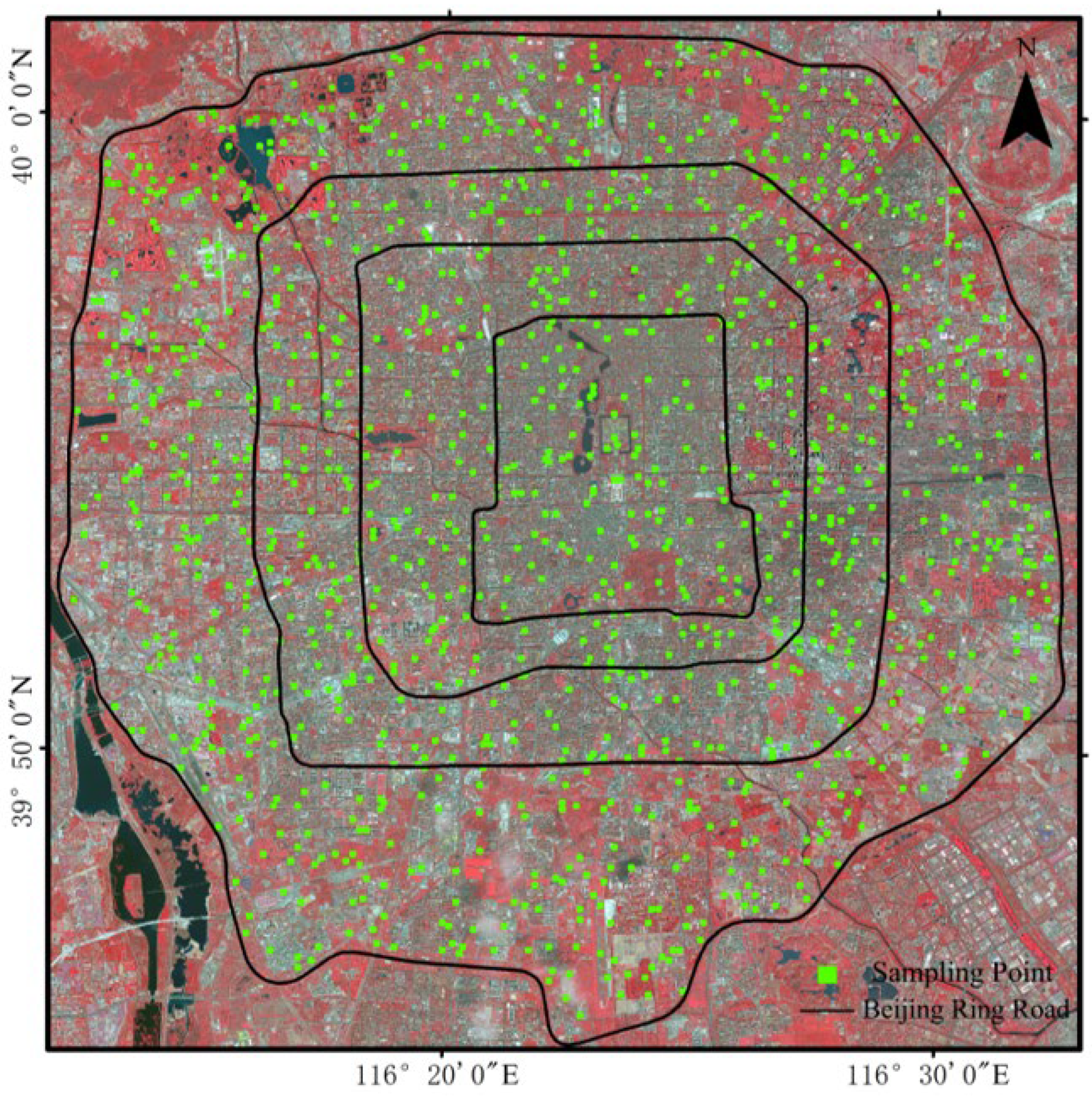

2.1. Study Area

2.2. Data Sources and Data Processing

2.2.1. Satellite Images

2.2.2. Data Processing

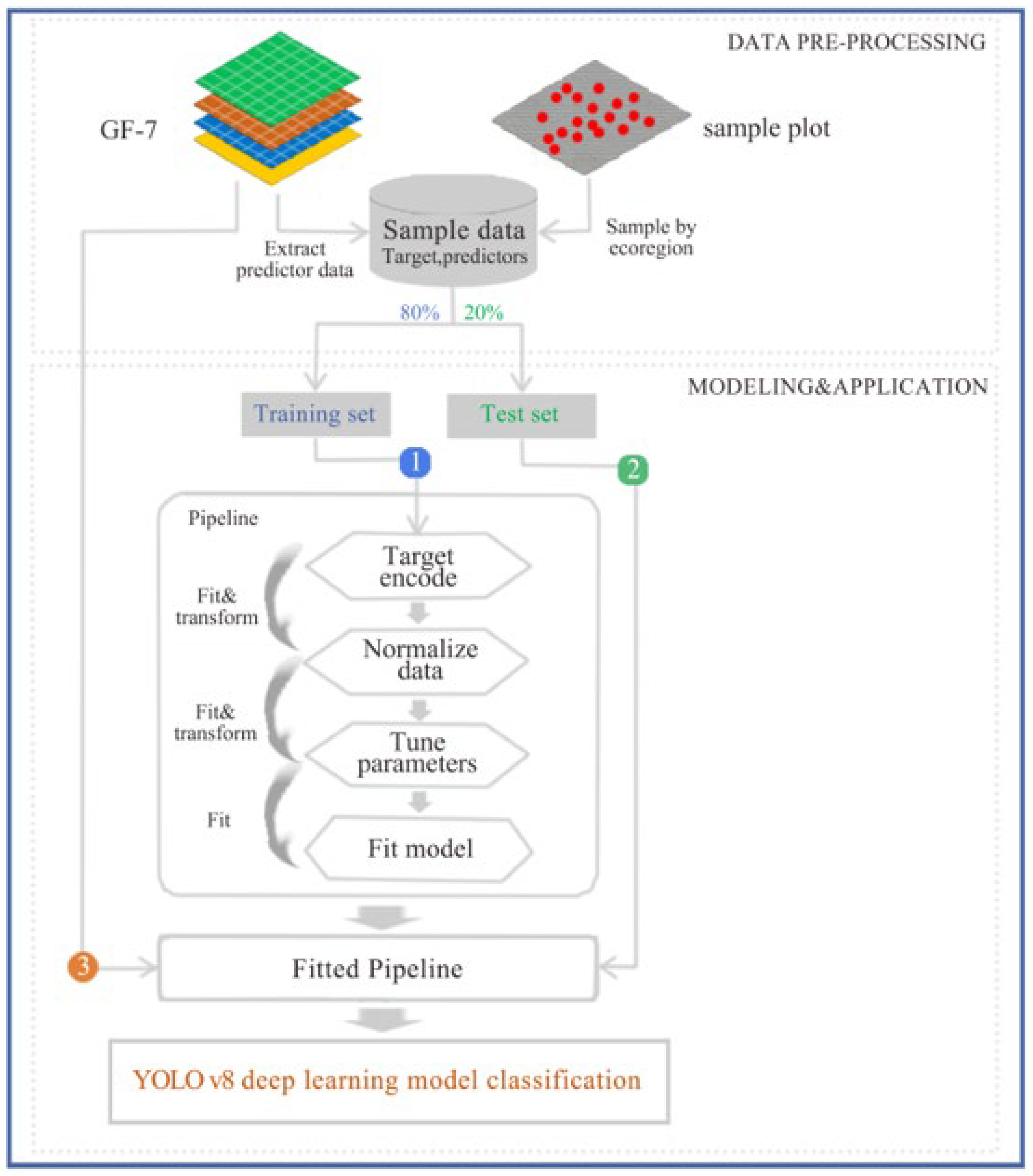

2.3. Methods

2.3.1. YOLO v8 Deep Learning Model

2.3.2. Support Vector Machine

2.3.3. Maximum Likelihood Classification

2.3.4. Evaluation Metrics

3. Results

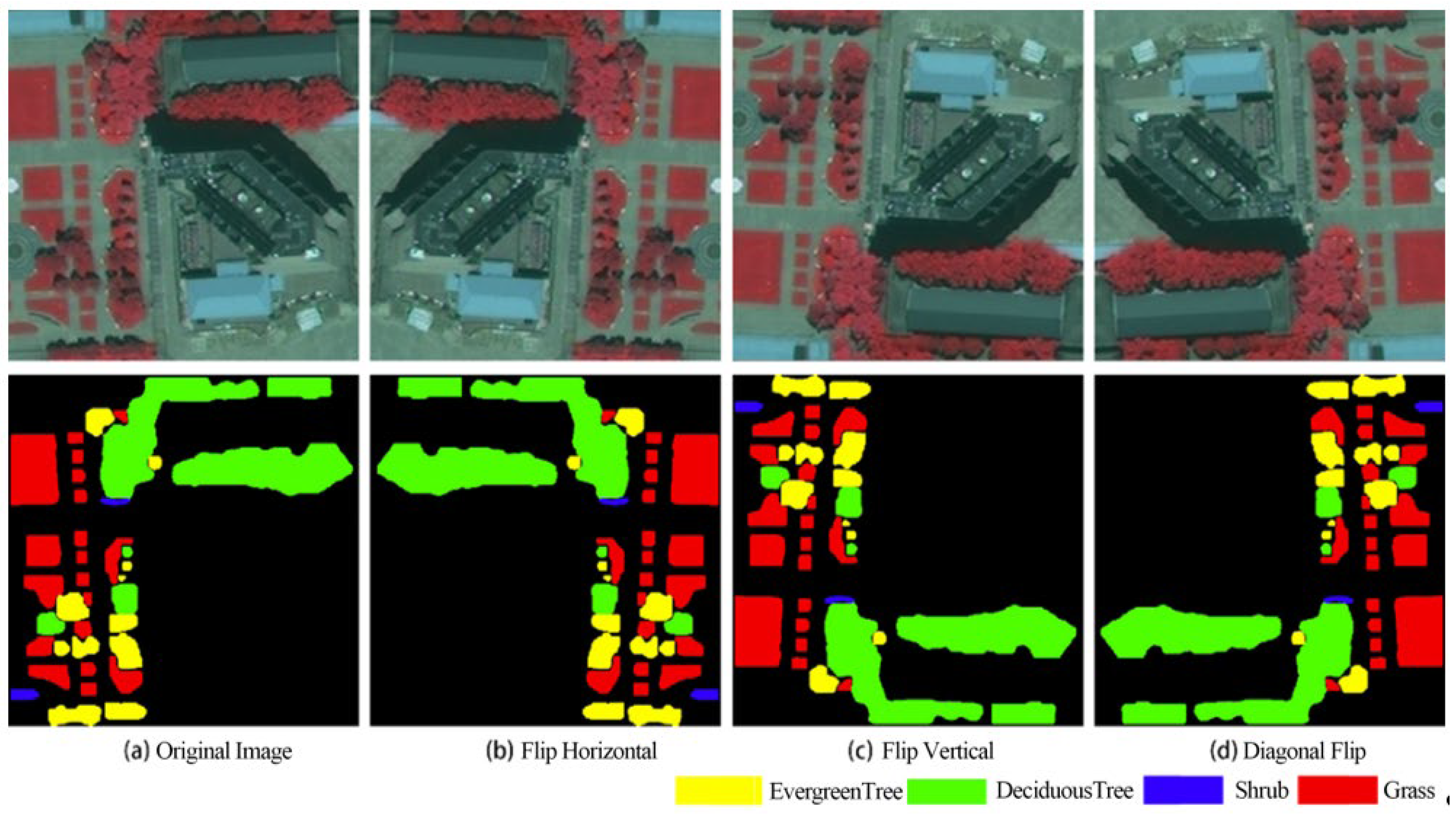

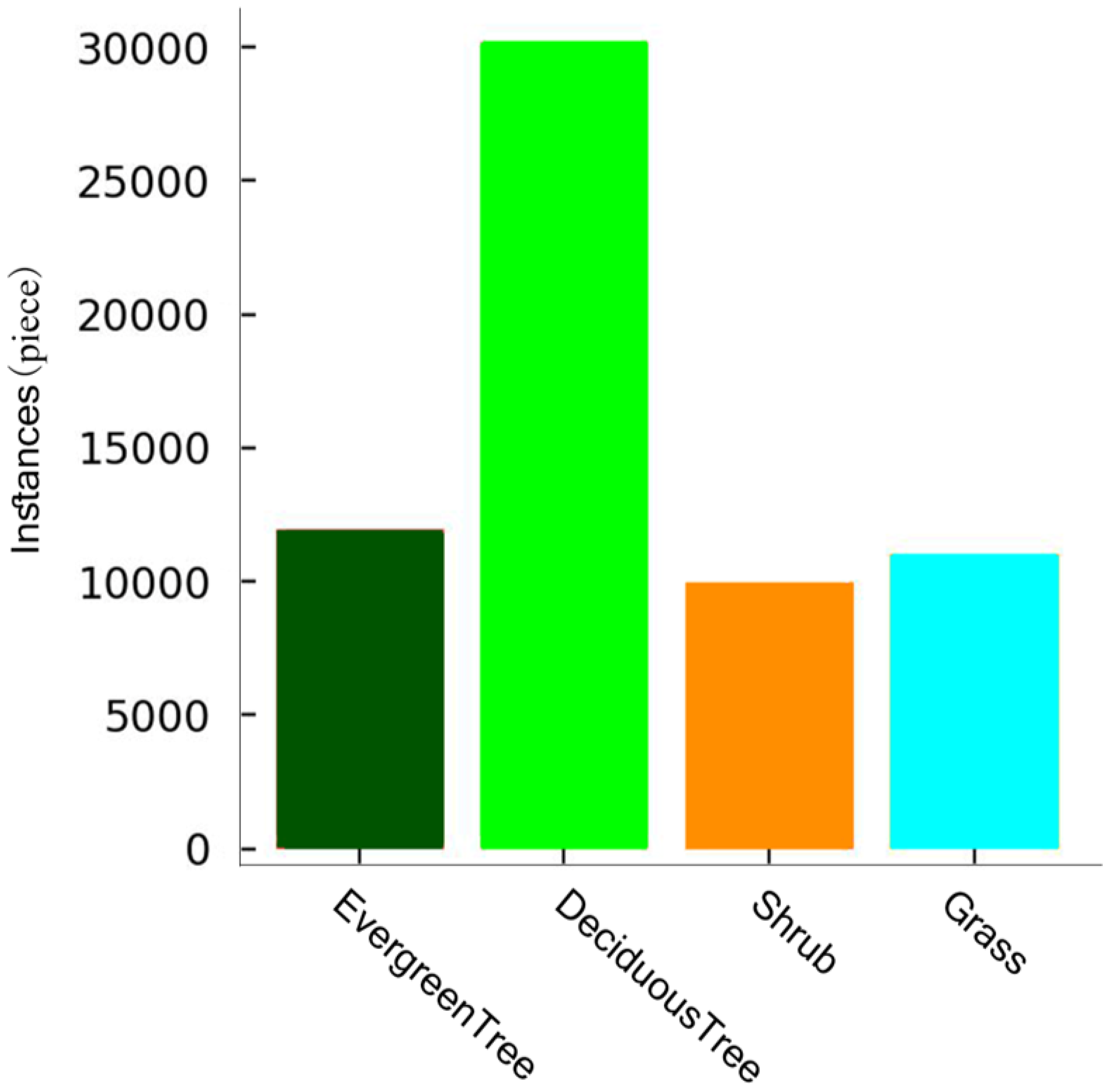

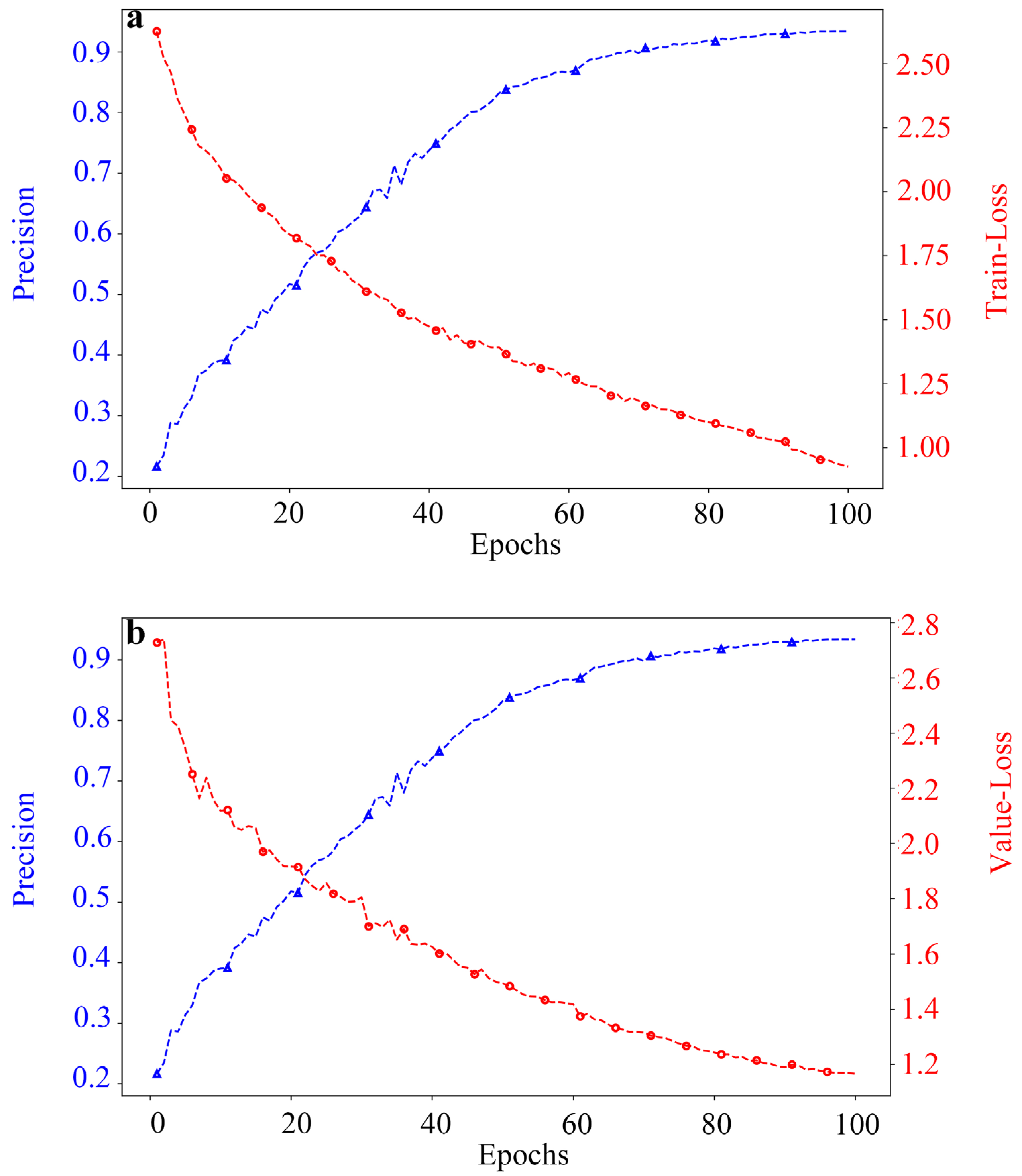

3.1. Training of Deep Learning Models

3.2. Accuracy Evaluation of the Green Space Classification Model Within the Fifth Ring Road

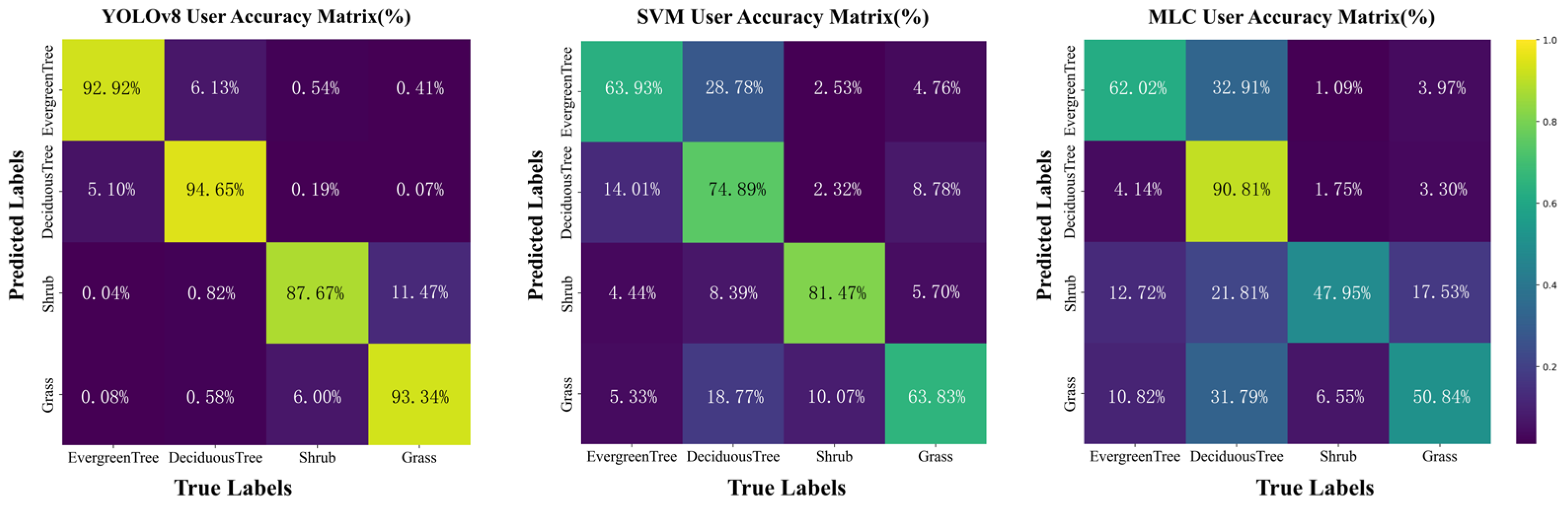

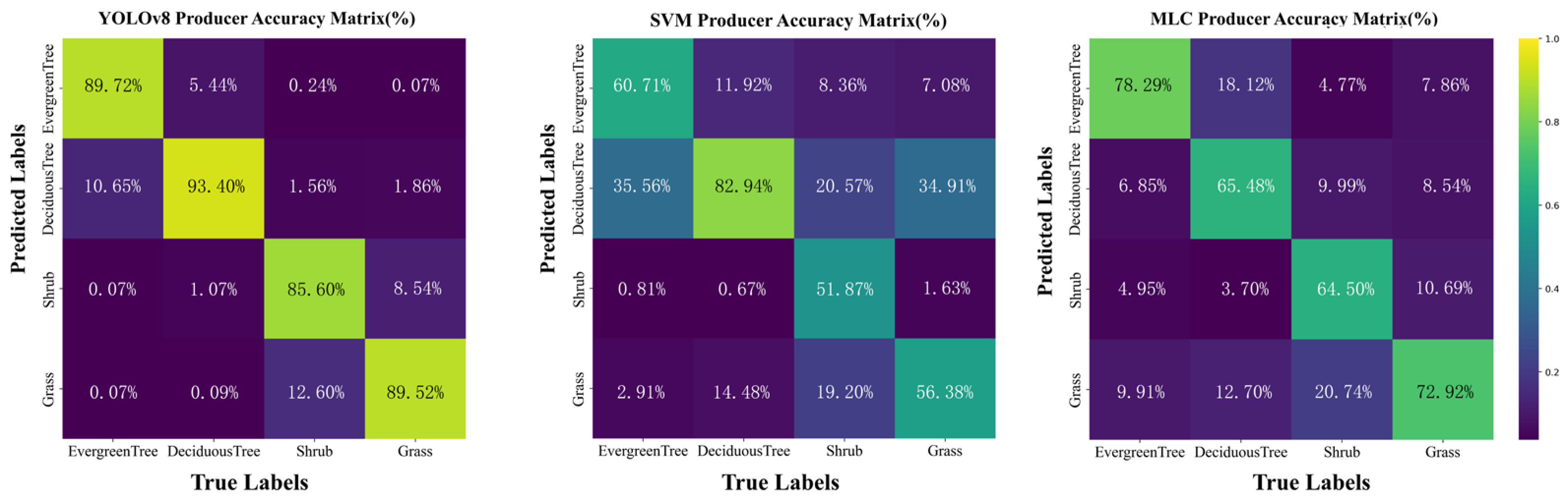

3.2.1. Comparison of Model Classification Accuracy

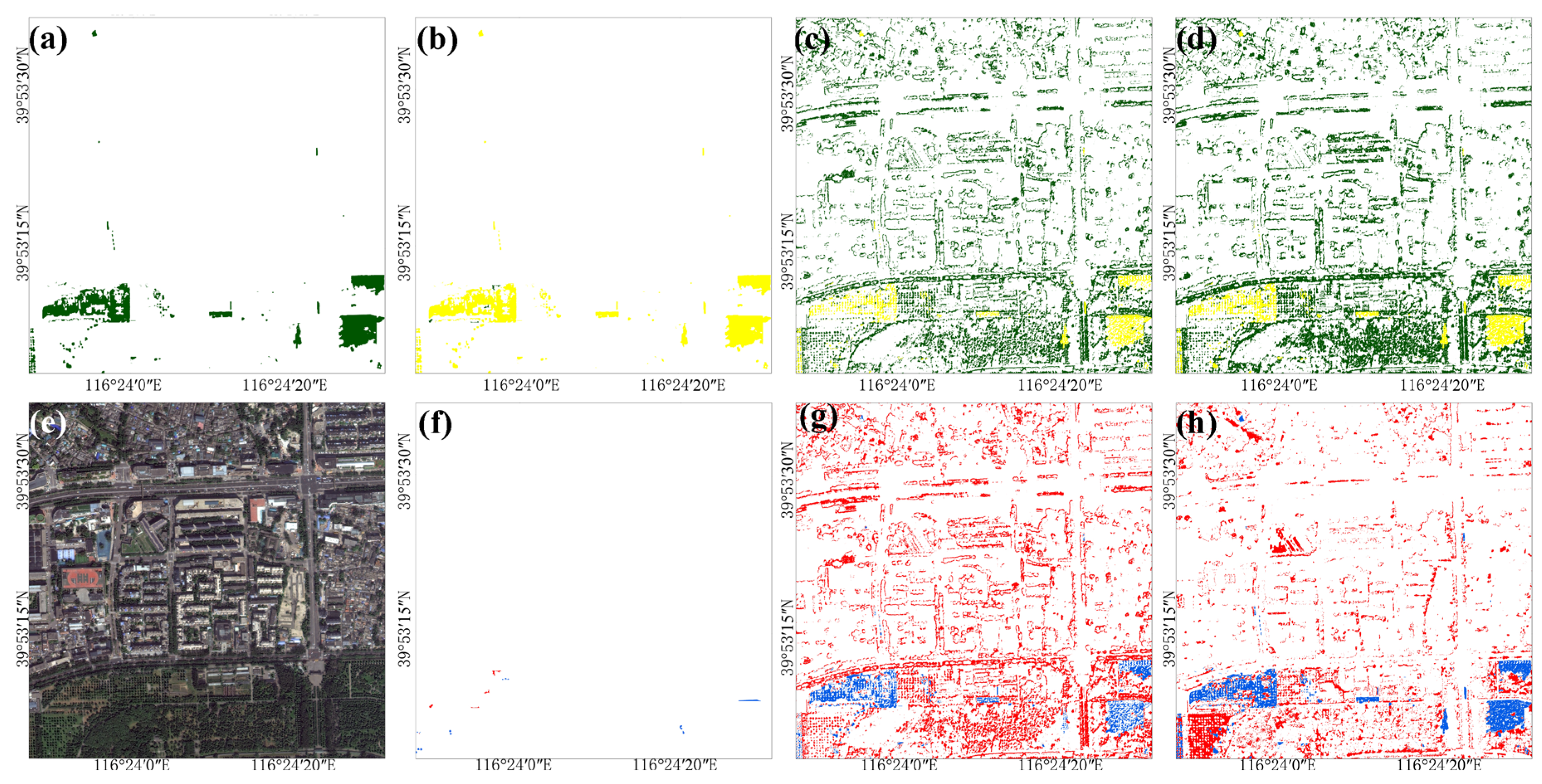

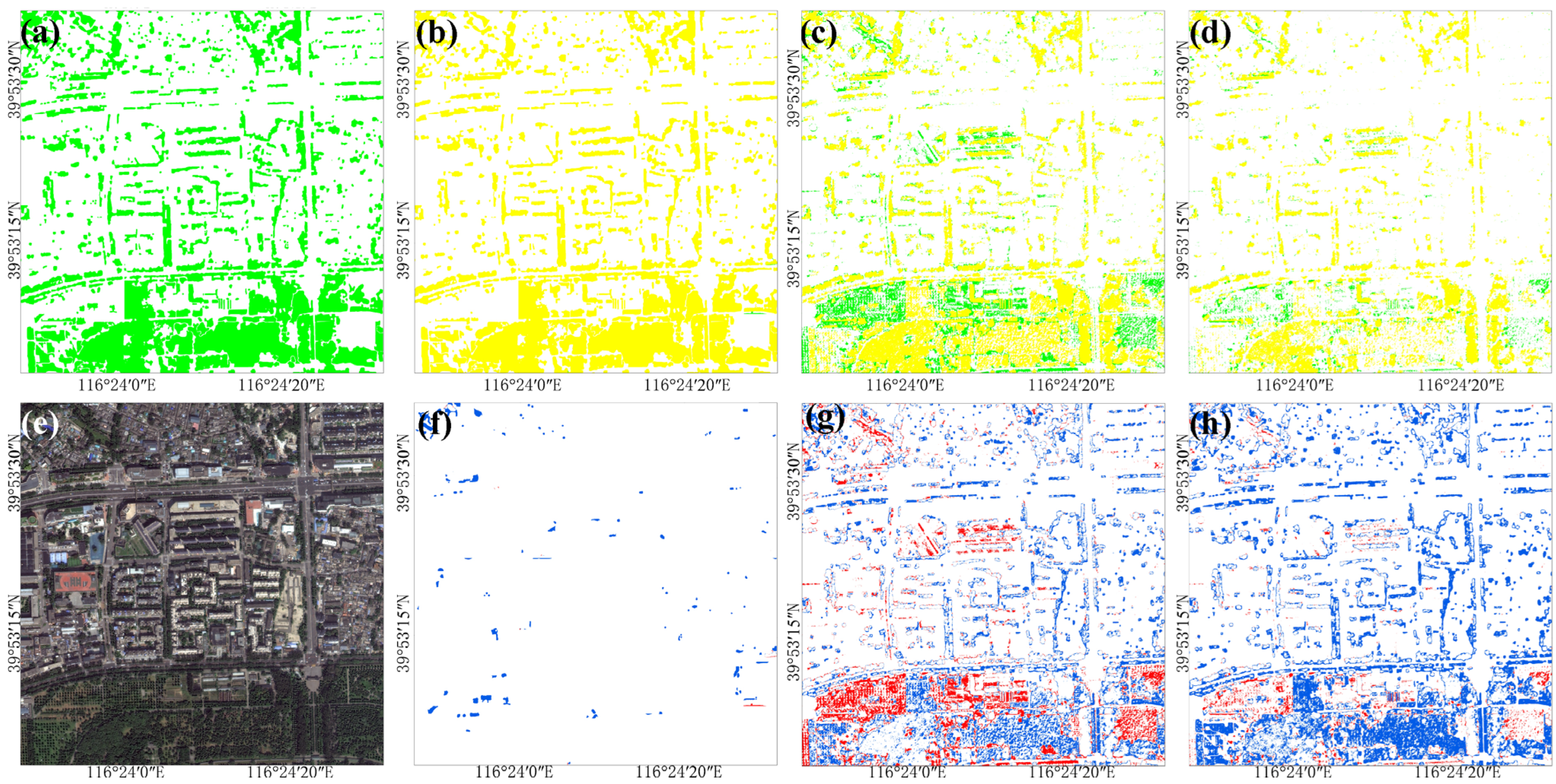

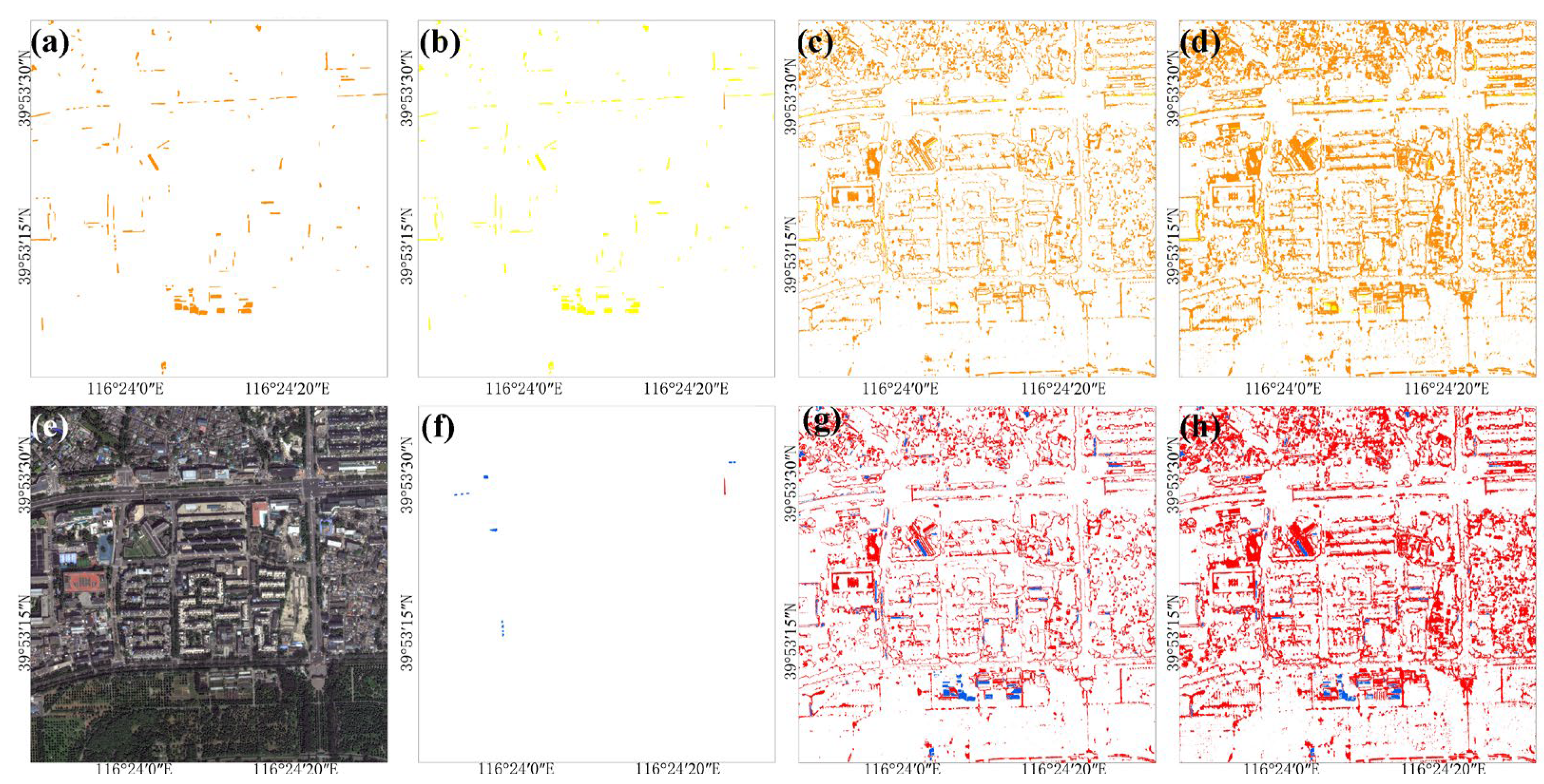

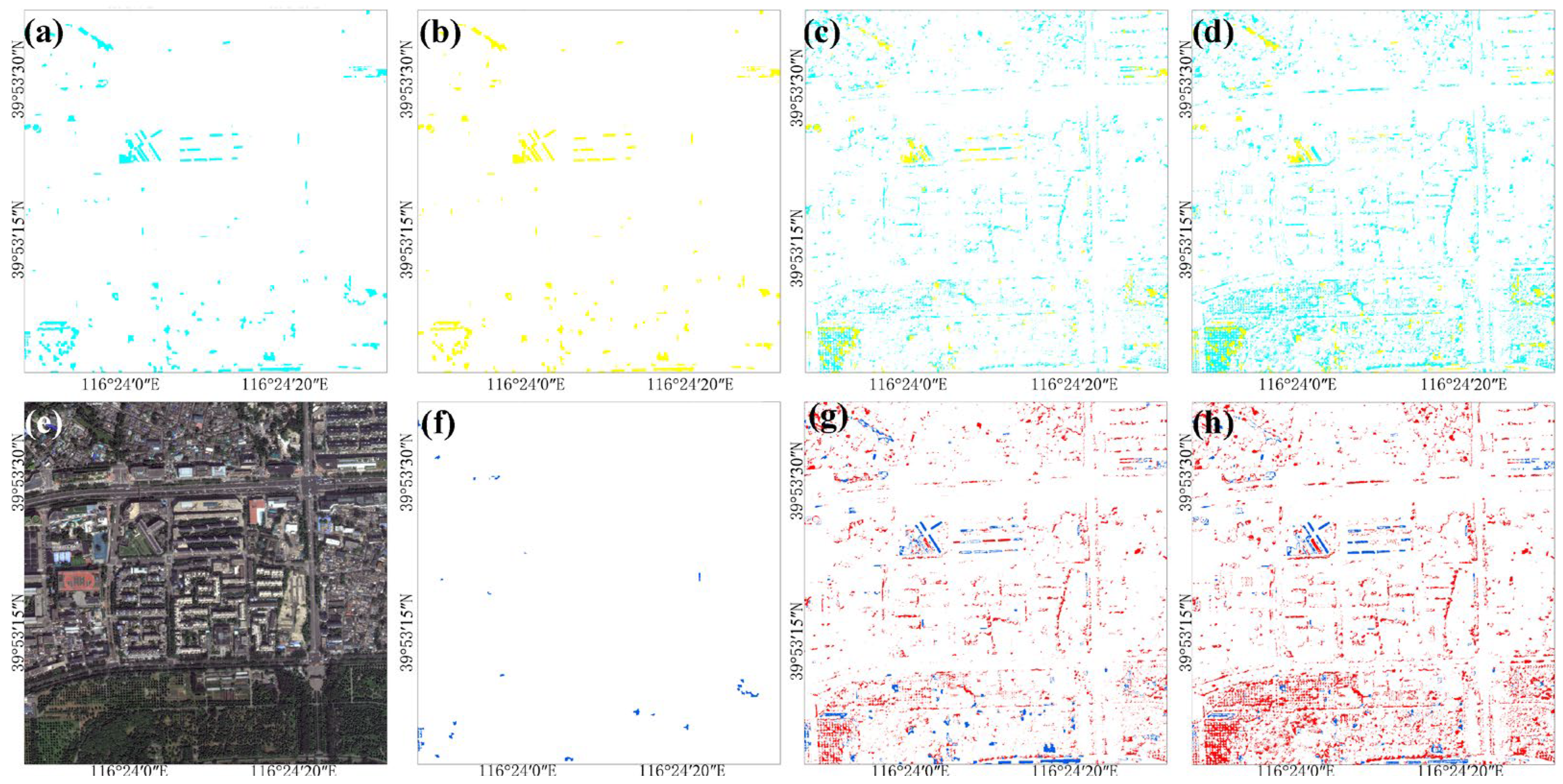

3.2.2. Comparison of Model Classification Results

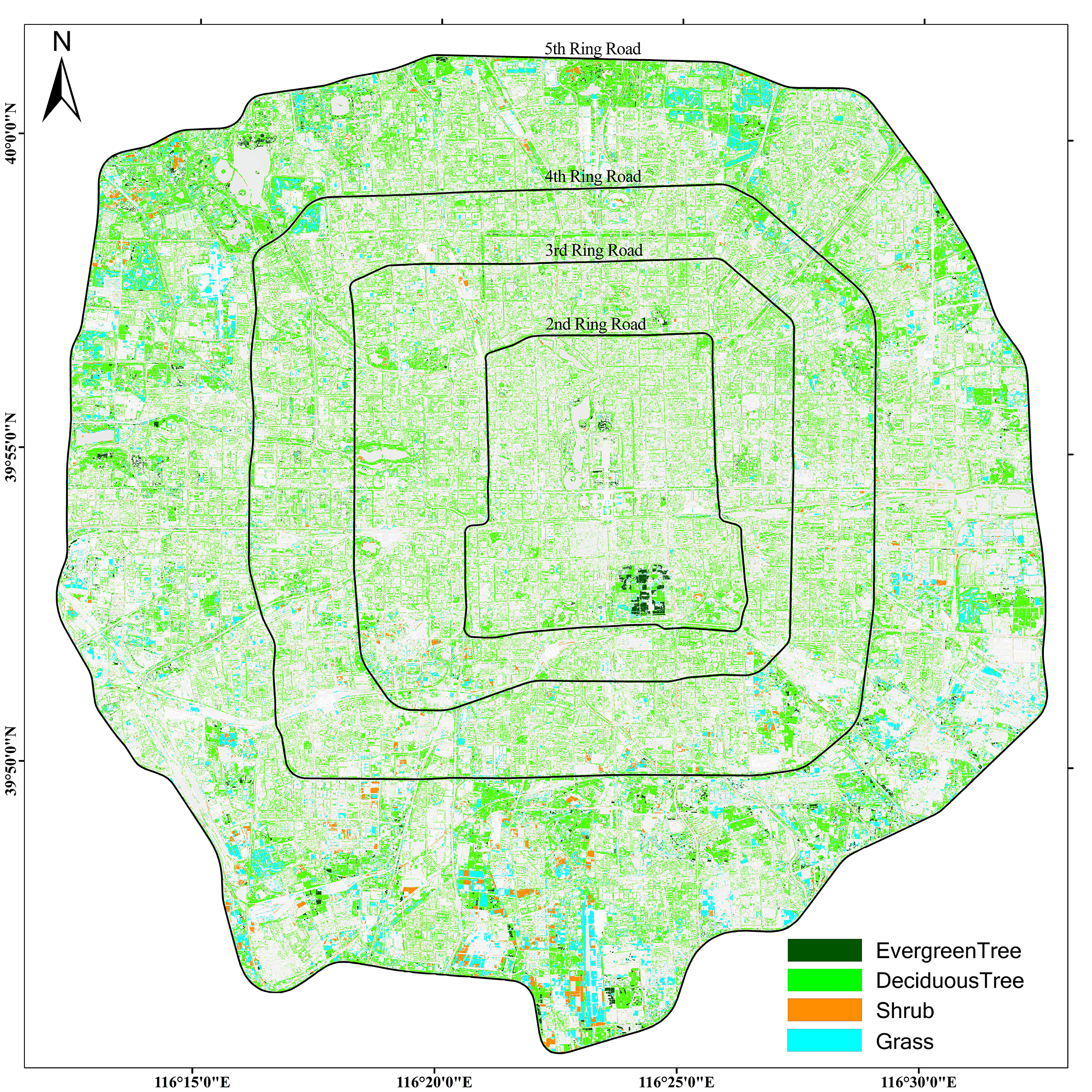

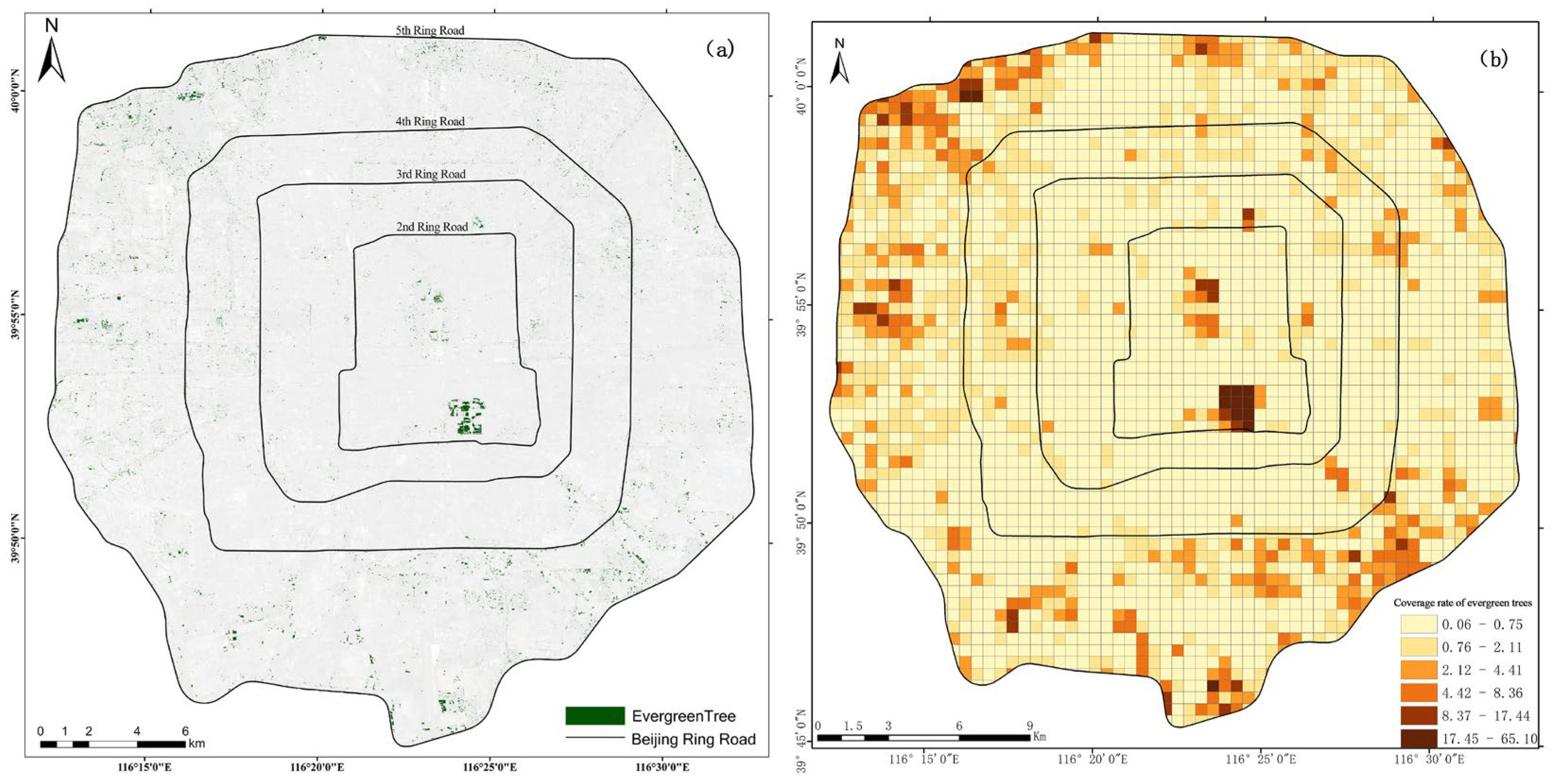

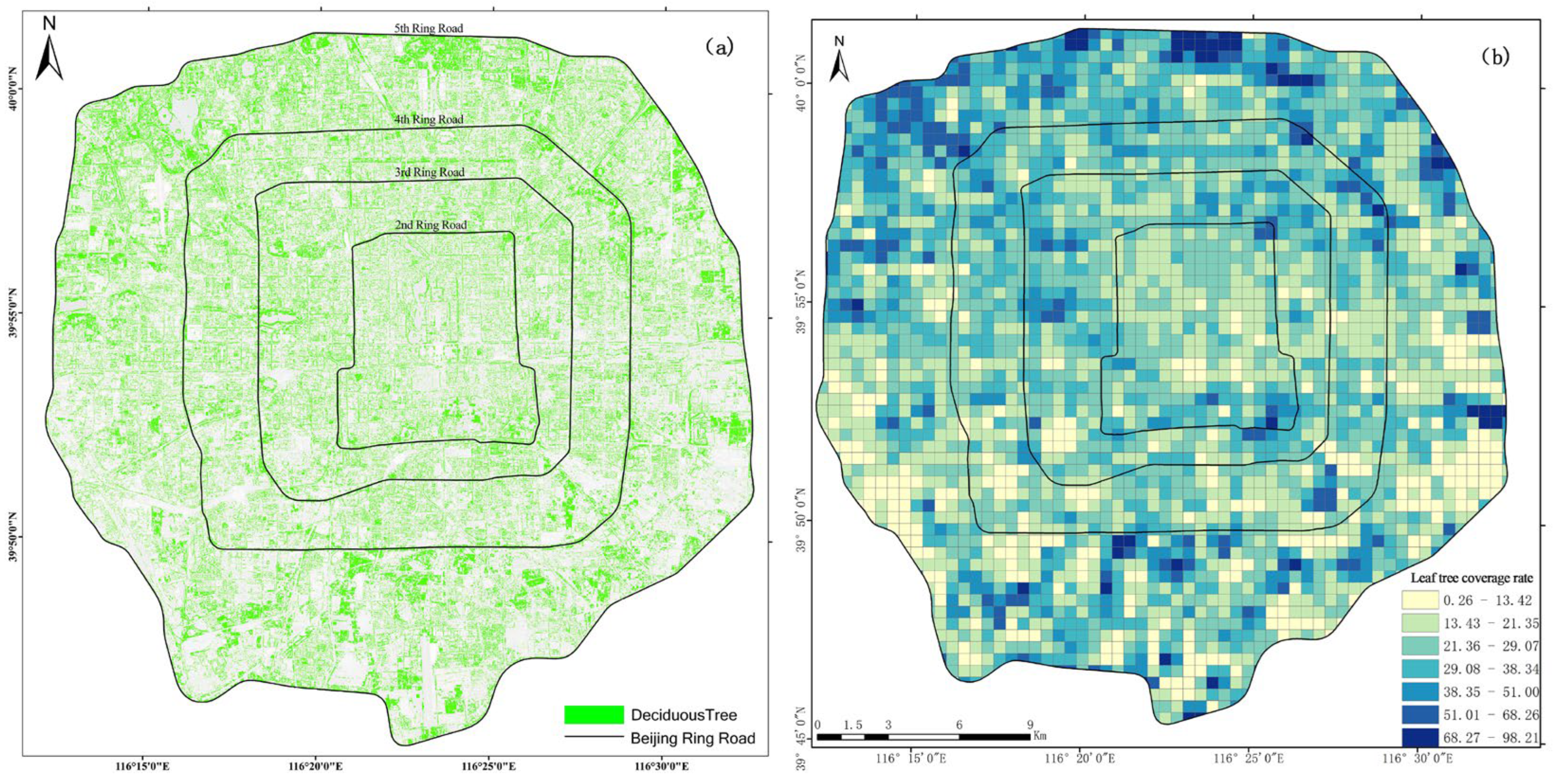

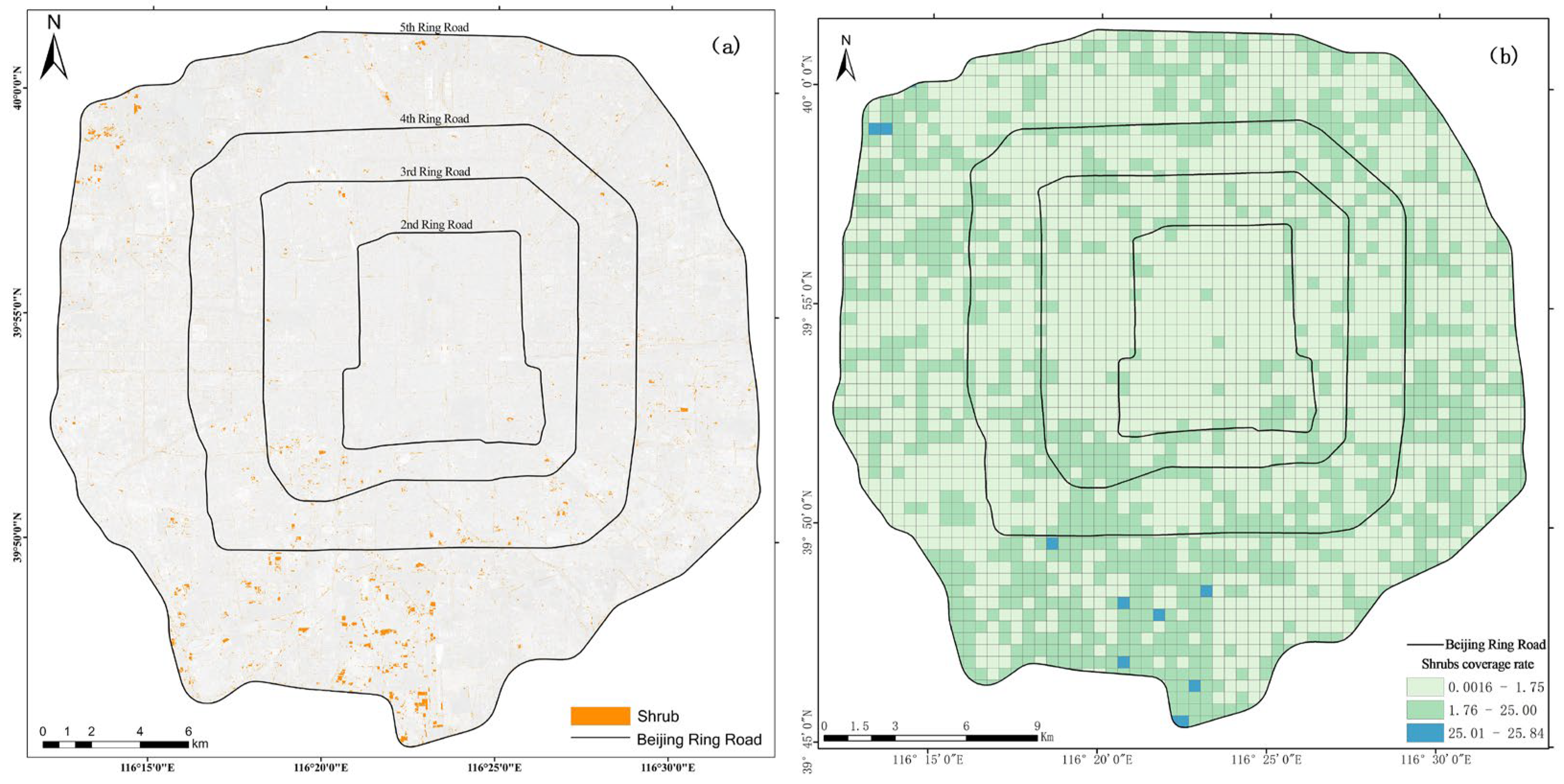

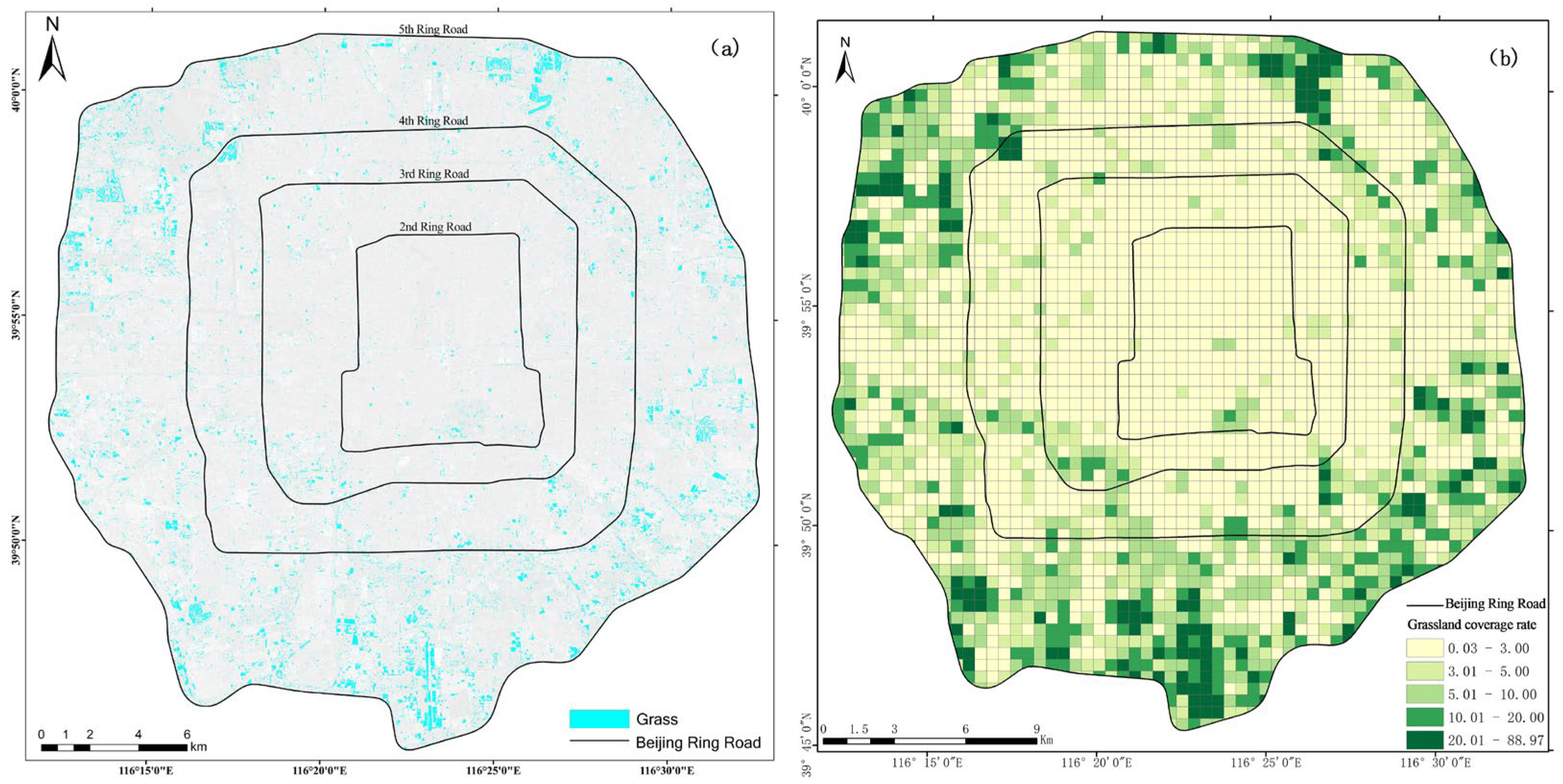

3.3. Spatial Analysis of Green Space Classification Within the Fifth Ring Road

4. Discussion

4.1. Precision Analysis of Deep Learning Models

4.2. Factors Affecting the Accuracy of Deep Learning Models

4.3. Classification Differences Between Deep Learning Models and Traditional Models

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Green Space Classification Accuracy Evaluation Index | Calculation Formula |
|---|---|
| OA | |
| Kappa | |
| UA | |
| PA | |
| F1 score | |
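The formula column of the table above did not survive extraction. As a minimal sketch, the five indices can be computed from a confusion matrix using their standard textbook definitions; these may differ in notation or averaging from the formulas the authors used. The function name `accuracy_metrics`, the dictionary keys, and the 2×2 toy matrix are hypothetical and are not data from the study.

```python
def accuracy_metrics(cm):
    """Standard accuracy indices from a square confusion matrix.

    Assumption: cm[i][j] is the number of samples whose reference class is i
    and whose predicted class is j.
    """
    k = len(cm)
    n = sum(sum(row) for row in cm)
    ref_totals = [sum(cm[i]) for i in range(k)]                        # row sums
    pred_totals = [sum(cm[i][j] for i in range(k)) for j in range(k)]  # column sums
    correct = [cm[i][i] for i in range(k)]

    # Overall accuracy: share of correctly classified samples.
    oa = sum(correct) / n
    # Expected chance agreement, then Cohen's kappa.
    pe = sum(ref_totals[i] * pred_totals[i] for i in range(k)) / (n * n)
    kappa = (oa - pe) / (1 - pe)
    # Producer's accuracy (per class): correct / reference total.
    pa = [correct[i] / ref_totals[i] if ref_totals[i] else 0.0 for i in range(k)]
    # User's accuracy (per class): correct / predicted total.
    ua = [correct[i] / pred_totals[i] if pred_totals[i] else 0.0 for i in range(k)]
    # Per-class F1: harmonic mean of UA and PA.
    f1 = [2 * u * p / (u + p) if (u + p) else 0.0 for u, p in zip(ua, pa)]
    return {"oa": oa, "kappa": kappa, "ua": ua, "pa": pa, "f1": f1}


# Hypothetical two-class toy matrix, for illustration only.
example = accuracy_metrics([[40, 10], [5, 45]])
print(example)
```

Whether a single F1 value per model is a macro average, a micro average, or a class-weighted average of the per-class scores is not recoverable from the table, so the sketch returns per-class values and leaves the aggregation open.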

| Classification Model | Overall Classification Accuracy (%) | Kappa Coefficient | F1 Score |
|---|---|---|---|
| YOLO v8 | 89.60 | 0.798 | 0.860 |
| SVM | 71.53 | 0.523 | 0.715 |
| MLC | 69.57 | 0.551 | 0.696 |
| YOLO v8 (no augmentation) | 42.19 | 0.092 | 0.422 |

| Vegetation Type | YOLO v8 | SVM | MLC |
|---|---|---|---|
| Evergreen trees | 92.67% | 46.27% | 61.14% |
| Deciduous trees | 95.69% | 63.30% | 40.73% |
| Shrubs | 89.08% | 25.27% | 47.88% |
| Grassland | 89.06% | 46.74% | 55.82% |

| Research Area | Evergreen Trees (km²) | Deciduous Trees (km²) | Shrubs (km²) | Grasslands (km²) |
|---|---|---|---|---|
| Within the Second Ring Road | 0.89 | 12.42 | 0.54 | 0.64 |
| Between the Second and Third Ring Roads | 0.34 | 20.46 | 1.37 | 1.45 |
| Between the Third and Fourth Ring Roads | 0.74 | 30.02 | 2.39 | 3.50 |
| Between the Fourth and Fifth Ring Roads | 4.26 | 80.08 | 7.96 | 21.29 |
| Total (within the Fifth Ring Road) | 6.23 | 142.97 | 12.26 | 26.88 |
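The totals row can be cross-checked by summing the four ring zones, and the same sums give each vegetation type's share of the mapped green space. The following is a small sketch using only the values in the table above; the variable and function names are illustrative. Note that the deciduous column sums to 142.98 km² from the rounded zone values, against the reported 142.97 km², a difference that is consistent with rounding.

```python
# Areas in km², copied from the table above (zone order: inside the Second
# Ring Road, second to third, third to fourth, fourth to fifth).
AREAS_KM2 = {
    "evergreen": [0.89, 0.34, 0.74, 4.26],
    "deciduous": [12.42, 20.46, 30.02, 80.08],
    "shrubs": [0.54, 1.37, 2.39, 7.96],
    "grassland": [0.64, 1.45, 3.50, 21.29],
}


def totals_by_type(areas):
    """Sum the zone areas for each vegetation type, rounded to 2 decimals."""
    return {veg: round(sum(zones), 2) for veg, zones in areas.items()}


def shares_by_type(totals):
    """Fraction of the total mapped green space taken by each type."""
    grand_total = sum(totals.values())
    return {veg: area / grand_total for veg, area in totals.items()}


totals = totals_by_type(AREAS_KM2)
shares = shares_by_type(totals)
for veg in totals:
    print(f"{veg}: {totals[veg]:.2f} km2 ({shares[veg]:.1%})")
```

Under these sums, deciduous trees account for roughly three quarters of the classified green space within the Fifth Ring Road.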

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, B.; Xu, X.; Duan, Y.; Wang, H.; Liu, X.; Sun, Y.; Zhao, N.; Li, S.; Lu, S. Vegetation Classification and Extraction of Urban Green Spaces Within the Fifth Ring Road of Beijing Based on YOLO v8. Land 2025, 14, 2005. https://doi.org/10.3390/land14102005