Abstract

Rural road networks are vital for rural development, yet narrow alleys and occluded segments remain underrepresented in digital maps due to irregular morphology, spectral ambiguity, and limited model generalization. Traditional segmentation models struggle to balance local detail preservation and long-range dependency modeling, prioritizing either local features or global context alone. Hypothesizing that integrating hierarchical local features and global context will mitigate these limitations, this study aims to accurately segment such rural roads by proposing R-SWTNet, a context-aware U-Net-based framework, and constructing the SQVillages dataset. R-SWTNet integrates ResNet34 for hierarchical feature extraction, Swin Transformer for long-range dependency modeling, ASPP for multi-scale context fusion, and CAM-Residual blocks for channel-wise attention. The SQVillages dataset, built from multi-source remote sensing imagery, includes 18 diverse villages with adaptive augmentation to mitigate class imbalance. Experimental results show R-SWTNet achieves a validation IoU of 54.88% and F1-score of 70.87%, outperforming U-Net and Swin-UNet, and with less overfitting than R-Net and D-LinkNet. Its lightweight variant supports edge deployment, enabling on-site road management. This work provides a data-driven tool for infrastructure planning under China’s Rural Revitalization Strategy, with potential scalability to global unstructured rural road scenes.

1. Introduction

1.1. Background

Rural road networks serve as critical lifelines for rural development, underpinning economic growth, social equity, and sustainable livelihoods globally. Research has demonstrated that well-connected road infrastructure directly enhances rural household income by facilitating access to markets, healthcare, and education [1]. However, a significant portion of the global rural population remains disconnected from formal road networks [2], perpetuating poverty cycles by limiting mobility and resource access [3].

China, the largest developing country in the world, is undergoing rapid urbanization [4]. By the end of 2024, its urbanization rate had surpassed 67% [5]. Nevertheless, China continues to invest in rural areas by prioritizing rural infrastructure development under its “Rural Revitalization Strategy” [6,7], aiming to construct safe, convenient, and efficient rural road systems. By the end of 2024, the total length of rural roads in China exceeded 4.64 million kilometers [8], a 19.5% increase since 2014 [9] and a milestone that has garnered international attention as a model for developing countries. This expansion, however, has intensified the demand for intelligent tools to support rural road identification, mapping, and management [10].

Rural roads present unique challenges for automated extraction from remote sensing imagery, distinct from their urban counterparts. Notably, narrow alleys within and between rural settlements—vital for daily mobility and social interaction—are consistently absent from mainstream commercial maps (e.g., Amap, OpenStreetMap) and public geographic databases. Their small width, irregular morphology, blurred spectral boundaries, and frequent occlusion by vegetation or buildings [11] make them particularly difficult to identify using traditional segmentation models like the original U-Net. Compounding this issue, most state-of-the-art road segmentation models are validated on well-structured, homogeneous road networks in Europe and North America (e.g., the Massachusetts dataset [12]), exhibiting limited generalizability to the highly heterogeneous, unplanned rural road networks of developing countries.

China’s dense and complex rural road networks, characterized by diverse landscapes (e.g., polders, hillsides, riverine villages) and mixed infrastructure types (paved/unpaved roads, alleys), thus present an ideal testbed for advancing road extraction technologies. Addressing the gaps in automated segmentation of such networks is critical to supporting data-driven rural planning and infrastructure management.

1.2. Related Applications

Road extraction is one of the major application scenarios of semantic segmentation, in which deep learning plays an important role. Starting with AlexNet [13], convolutional neural networks (CNNs) have laid a solid foundation for follow-up studies. In 2015, CNNs were first applied to pixel-wise labeling [14]. By introducing residual connections, ResNet [15] significantly alleviates the vanishing-gradient problem of deep CNNs and provides a new prototype for complex feature extraction. MobileNet [16], on the other hand, uses depthwise separable convolution (DSC) [17], making it possible to deploy deep learning models on mobile devices.

In subsequent studies, CNNs were adapted from classification to segmentation tasks. Aoki and Saito [18] used a CNN to recognize buildings and roads in aerial imagery by adding a fully connected layer to resize the output vector. This layer was later shown to be equivalent to a convolution layer in the FCN (Fully Convolutional Network) [19], whose structure contains no fully connected layers, making the segmentation of high-resolution aerial imagery possible [20].

To address FCN’s drawbacks of coarse upsampling, blurred details, and relatively low efficiency, DeconvNet [21], SegNet [22], and UNet [23] were introduced. Their encoder–decoder structure has become a milestone in semantic segmentation and is nearly indispensable in recent research. Meanwhile, the DeepLab series widened the receptive field of FCN by introducing ASPP (Atrous Spatial Pyramid Pooling) [24] to capture the multi-scale context of images.

Building on these backbones and structures, a series of improvements followed. By combining ResNet with the UNet structure, ResUNet was introduced to extract roads from aerial images [25]. By adding attention gates to the UNet structure, Oktay et al. established a model that learned to focus on target structures of varying shapes and sizes by suppressing irrelevant regions in an input image while highlighting salient features useful for a specific task [26]. By re-designing skip connections and using deep supervision, UNet++ achieved an average IoU gain of more than 3 points over UNet [27]. Inspired by the “Encoder-Decoder” structure, Wang et al. established RD-Net to segment unstructured roads, improving overall accuracy [28].

The rise of Transformer architecture [29] has brought about a paradigm shift. The ViT (Vision Transformer) model has become one of the most important models in image processing [30], which later led to the Swin Transformer [31], where a SW-MSA (shifted window Multi-head Self-Attention) mechanism was brought to life, reducing overall resource cost. Researchers tried to combine the transformer architecture with other state-of-the-art models and achieved inspiring results. By adding transformer blocks into different sections of the UNet structure, TransUNet [32] and Swin-UNet were invented to capture long-range dependencies of biomedical scenarios [33] and aerial imagery scenarios [34] via shifted window self-attention.

The segmentation of aerial images, especially roads, with the demand of maintaining long-range dependencies calls for a more integrated and complex design of the models. Thus, it has become a trend to merge multiple structures to achieve well-optimized segmentation results. DPIF-Net [35] takes multiple measures like transformers and ASPP to merge global and local information, while Res_ASPP_UNet++ [36] uses depthwise separable convolution and ASPP to reduce model parameters. Sloan et al. [37] used multiple methods to map remote roads using satellite imagery. Yang et al. [38] designed R-MSFNet to extract roads of rural Xiong’an New Area using a self-built dataset and the DeepGlobe dataset.

1.3. Research Objectives

As most state-of-the-art models are trained and validated on well-structured road networks in Europe and North America, they exhibit significant limitations in generalizing to the unstructured, heterogeneous landscapes prevalent in rural China. To address the aforementioned challenges, this study aims to develop a robust deep learning framework for accurate segmentation of rural streets and alleys. The specific research objectives are as follows:

First, to propose a context-aware U-Net-based model optimized for rural road segmentation. This model will integrate advanced context-aware mechanisms (e.g., multi-scale feature fusion, long-range dependency modeling) to enhance segmentation accuracy in complex rural scenes. Key targets include mitigating blurred boundaries, severe occlusions, and varying road textures—issues that traditional U-Net and its variants struggle to resolve. By combining hierarchical feature extraction with adaptive attention mechanisms, the model will improve the representation of fine-grained rural road features.

Second, to enhance the model’s capacity to identify narrow rural alleys. These critical pathways are systematically missing from existing maps due to their small scale and ambiguous spectral signatures. To achieve this, the study will optimize dataset construction by integrating multi-source data (high-resolution satellite and aerial imagery) and refining labeling protocols, ensuring that narrow alleys are accurately labeled and distinguishable from non-road features.

These objectives collectively address the technical and practical gaps in rural road segmentation, with the goal of supporting intelligent infrastructure planning under China’s Rural Revitalization Strategy.

2. Methodology

2.1. Dataset Preparation

2.1.1. Overview of Study Region

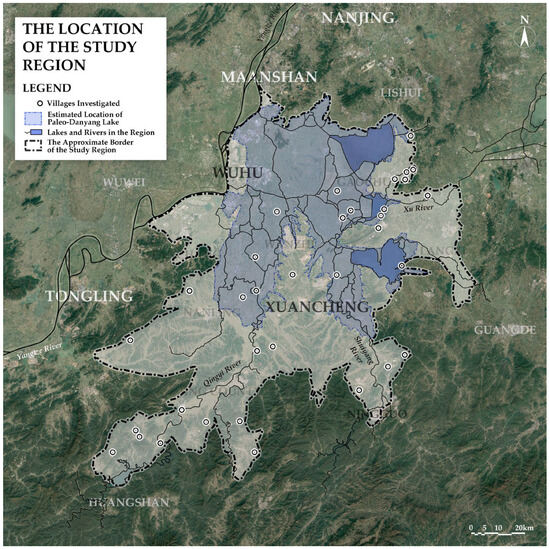

This study focuses on the Shuiyang River–Qingyi River basin and the paleo-Danyang Lake region, situated along the border of Jiangsu and Anhui Provinces in eastern China (Figure 1). This transprovincial area is hydrologically and ecologically distinct, characterized by a complex interplay of rivers, lakes, and anthropogenically modified landscapes, making it a representative testbed for investigating rural road networks in diverse and unstructured environments.

Figure 1. The location of the study region.

The region’s hydrological framework is dominated by the Shuiyang and Qingyi Rivers, which originate from the northern slopes of the Huangshan Mountains (Anhui Province). Flowing northward, these rivers traverse municipal administrative divisions, including Huangshan, Xuancheng, Wuhu (Anhui), and Nanjing (Jiangsu), collectively shaping the basin’s drainage network and influencing rural settlement patterns. Central to this system is the paleo-Danyang Lake, a historical lacustrine depression formed by upstream sedimentation and blockage of inflows to the Yangtze River [39]. Over centuries of natural infilling and anthropogenic land reclamation [40], this ancient lake has fragmented into a mosaic of smaller water bodies, including Shijiu Lake, Gucheng Lake, and Nanyi Lake. These are interconnected by an intricate network of natural rivers and artificial canals, giving rise to extensive polders—low-lying agricultural tracts enclosed by dykes to mitigate flooding—which define the region’s landscape.

The study area exhibits remarkable rural settlement diversity, with villages distributed across three primary geomorphic types: polder villages, river-side villages, and plain villages. Polder villages cluster within dyke-enclosed areas, adapting to irregular reclaimed land boundaries, resulting in winding, narrow alleys and road networks that follow topographic gradients; river-side villages align along watercourses featuring linear road networks and frequent intersections with canals, posing challenges for segmentation due to spectral similarity between water and road surfaces; plain villages are located on flat alluvial plains with more regular layouts and mixed land uses (residential, agricultural, and public spaces) that complicate feature discrimination.

Within the study area, rural development exhibits diverse stage characteristics of “original-transitional-activated”: there are traditional villages preserving the original form of “built along ridges, lanes following polders”; transitional resettlement communities formed under the guidance of the “Three Zones and Three Lines” policy; and homestay villages revitalized through “culture+tourism” updates. This “multi-stage coexistence” pattern not only retains rural historical memory but also reflects rural adaptation attempts to modern needs in the urbanization process, further demonstrating the regenerative value of “cultural activation” for traditional spaces, thus providing a vivid case for exploring the spatial integration of tradition and modernity.

The study area is located in the triangular intersection area of Wu Culture (southern Jiangsu and northern Zhejiang), Huizhou Culture (Huangshan-Wuhu), and Lianghuai Culture (the region between the Yangtze and Huai Rivers). The core of the study area (e.g., Gaochun in Jiangsu, Xuanzhou in Anhui) belongs to the Xuanzhou subgroup of Wu Dialect, serving as the “Xin’an Cultural Sub-region” [41] on the western edge of Wu Culture; the study area connects to the Huangshan Mountains in the south and borders the Jianghuai Plain in the north. The intersection of the three cultures has ultimately formed a “pluralistic unity” cultural identity in rural settlements. This cultural pattern is not only reflected in dialects, customs, and architectural styles but also profoundly influences the spatial form and social structure of rural settlements.

This heterogeneity of spaces, construction, and culture mirrors the complexity of rural road networks across eastern China. Critically, the coexistence of narrow, unstructured alleys, occluded or shaded pathways, and mixed surface types provides a rigorous test for developing context-aware segmentation models. By capturing such diversity, the dataset derived from this region is intended to support model generalization to other rural contexts.

2.1.2. Data Source and Labeling

The dataset construction relies on multi-source data to ensure the accuracy and diversity of rural road features. Primary data sources include the following: (1) satellite imagery retrieved via the official API of Tianditu [42], a national geospatial platform developed by the National Geomatics Center of China, ensuring high-precision georeferencing; (2) processed aerial imagery, obtained by exporting orthomosaics from oblique photography surveys of selected villages, providing sub-meter resolution details critical for distinguishing narrow alleys and occluded pathways.

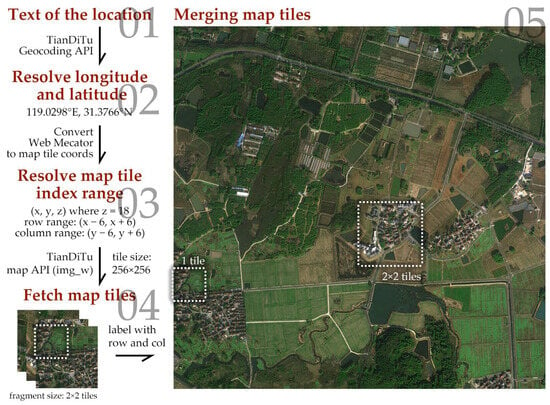

After on-site investigations and landscape diversity assessments, more than 30 natural villages [43] were selected as dataset candidates, distributed across the Shuiyang River–Qingyi River basin and paleo-Danyang Lake region (Figure 1). This selection ensures coverage of diverse rural typologies (polders, plains, river-side villages) and infrastructure conditions (traditional villages, resettlement communities), mirroring the complexity of rural road networks in eastern China. For each village, a square satellite image centered on the settlement was fetched from Tianditu, with a size of 3072 × 3072 pixels (covering ~1.8 km × 1.8 km). This image is a mosaic of 144 tiles at zoom level 18, balancing spatial coverage and local detail (Figure 2).

Figure 2. Fetching satellite images (source of the satellite image: Tianditu API).

Images are loaded from the Tianditu API through a workflow that starts from the village names. In Tianditu, villages are treated as POIs (points of interest), and their longitude and latitude can be acquired through the geocoding API. The longitude and latitude are then converted into tile indices in the form of a triple (x, y, z), where z is the zoom level. The tiles surrounding the center tile (x, y) at zoom level z are then downloaded through the Tianditu map API. Each acquired image is 3072 × 3072 pixels, a mosaic of 12 × 12 = 144 tiles of 256 × 256 pixels each.
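The conversion from a geocoded point to tile indices can be sketched as follows. This is a minimal illustration that assumes the standard spherical Web Mercator (XYZ) tiling scheme; if the imagery is requested in a geographic (latitude/longitude) tile matrix, the formula differs. The function names and the sample coordinates are hypothetical, and no Tianditu request is made.

```python
import math


def lonlat_to_tile(lon_deg, lat_deg, zoom):
    """Convert longitude/latitude to tile indices (x, y, z).

    Assumes the spherical Web Mercator (XYZ) tiling scheme.
    """
    n = 2 ** zoom  # number of tiles along each axis at this zoom level
    x = int((lon_deg + 180.0) / 360.0 * n)
    lat = math.radians(lat_deg)
    y = int((1.0 - math.asinh(math.tan(lat)) / math.pi) / 2.0 * n)
    # Clamp so that points on the eastern or southern edge stay in range.
    return min(x, n - 1), min(y, n - 1), zoom


def tile_grid(center_x, center_y, zoom, tiles_per_side=12):
    """List the tiles of a square mosaic around the centre tile, row by row."""
    half = tiles_per_side // 2
    return [
        (center_x - half + col, center_y - half + row, zoom)
        for row in range(tiles_per_side)
        for col in range(tiles_per_side)
    ]


# Hypothetical coordinates for illustration only (not a village of the dataset).
x, y, z = lonlat_to_tile(118.9, 31.3, 18)
tiles = tile_grid(x, y, z)
mosaic_side = 12 * 256  # side length of the stitched image in pixels
```

With 12 tiles of 256 pixels per side, the stitched mosaic has the 3072-pixel side length stated above.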

Semantic segmentation requires pixel-wise alignment between images and masks. To address the ambiguity of rural road boundaries, a dual-source labeling strategy was adopted: (1) manually labeling binary masks with a size of 3072 × 3072 pixels, where public spaces (roads, squares, parking lots) are labeled white; (2) cross-validating labels with high-resolution aerial imagery, which reveals fine-grained details indistinct in satellite imagery. This multi-source fusion reduces labeling errors caused by spectral confusion, particularly for unpaved or shaded rural pathways.

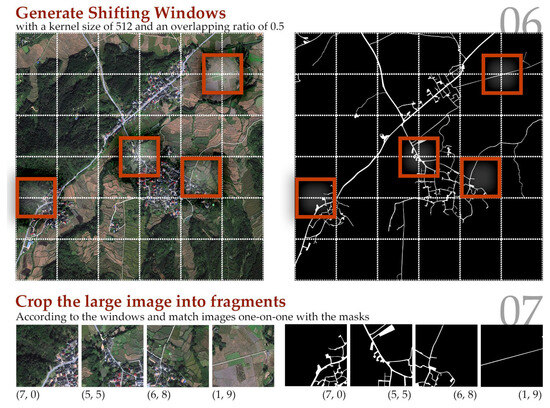

To adapt to the model input size (512 × 512 pixels) and augment training diversity, large satellite images and their corresponding masks were cropped using a shifting window strategy (Figure 3). The window size was set to 512 × 512 pixels with a stride of 256 pixels (50% overlap), giving (3072 − 512)/256 + 1 = 11 window positions per axis and thus 121 overlapping sub-images per satellite image. This cropping strategy balances local feature integrity (e.g., preserving continuous alley segments) and dataset size, avoiding over-reliance on large homogeneous regions.
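With a 512-pixel window and a 256-pixel stride, a 3072-pixel side yields (3072 − 512)/256 + 1 = 11 window positions per axis, hence 11 × 11 = 121 crops per image. The following sketch enumerates those positions in plain Python; the function names are illustrative, and the small 6 × 6 stand-in image only keeps the demonstration cheap.

```python
def window_offsets(image_size=3072, window=512, stride=256):
    """Top-left corners of all full windows, scanned row by row."""
    starts = range(0, image_size - window + 1, stride)
    return [(top, left) for top in starts for left in starts]


def crop(image, top, left, window=512):
    """Cut one window out of an image stored as a list of pixel rows."""
    return [row[left:left + window] for row in image[top:top + window]]


offsets = window_offsets()  # 121 positions for the settings in the text

# Toy stand-in: 6 x 6 "image", window 2, stride 1 (50% overlap as well).
toy = [[r * 6 + c for c in range(6)] for r in range(6)]
toy_crops = [crop(toy, top, left, window=2)
             for top, left in window_offsets(6, 2, 1)]
```

The same offsets must be applied to an image and its mask so that the pairs stay pixel-aligned.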

Figure 3. Large image cropping and dataset generation (source of the satellite image: Tianditu API).

The cropped sub-images and masks form the backbone of the dataset, which is further split into training, validation, and test sets to ensure model generalization. This structured pipeline from multi-source data acquisition to precision labeling and adaptive cropping lays a robust foundation for training context-aware rural road segmentation models.

2.1.3. Dataset Outline

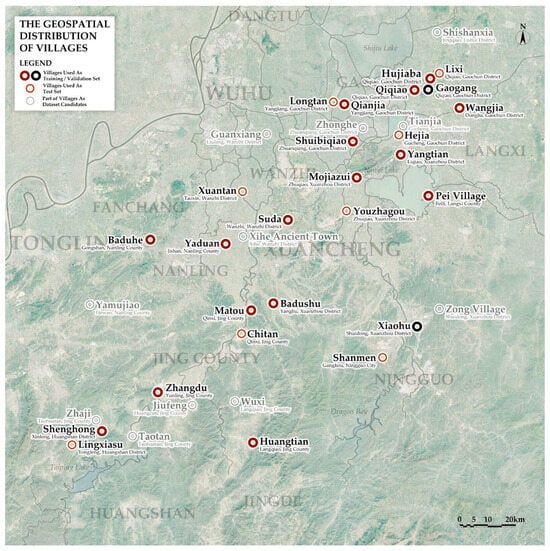

To ensure the dataset captures the heterogeneous characteristics of rural road networks, 18 natural villages were selected from over 30 candidates to form the SQVillages dataset, with their geographic distribution visualized in Figure 4. This selection prioritizes diversity in geomorphic types and infrastructure conditions across the study region, comprising 2178 cropped image–mask pairs (512 × 512 pixels) derived from the shifting window strategy detailed in Section 2.1.2, with 121 image–mask pairs for each village.

Figure 4. The geospatial distribution of villages.

The dataset is split into training and validation sets using a village-level stratified strategy. In Figure 4, the training set is labeled as dark red bold rings, the validation set as black bold rings, the test set as red rings, and the rest, which stands for dataset candidates, as gray thin rings. Of the 18 villages selected in the SQVillages dataset, the validation set exclusively uses 242 samples from Gaogang Village (GG) and Xiaohu Village (XH), while the remaining 1936 samples form the training set. This separation ensures the validation set represents an independent rural scene, avoiding data leakage. The proportion of the training set in SQVillages is 88.9%, which falls within the commonly used range of 85% to 90% for small datasets.

Training samples undergo adaptive augmentation: random rotations (−90° to 90°), random horizontal flips, and color jitter (brightness and contrast enhancement level 0.3, hue enhancement level 0.1) to simulate varying lighting and spectral conditions. Each image is normalized using the channel-wise mean (0.485, 0.456, 0.406) and standard deviation (0.229, 0.224, 0.225) of the ImageNet dataset [44], aligning with pre-trained model initialization. Augmentation is applied three times per training sample, expanding the training set to 1936 × 3 = 5808 samples, which is sufficient to train deep models while preserving rural road feature diversity. The basic information of the villages in the SQVillages dataset is listed in Table 1, where the villages used as the validation set are shown in italics.
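The village-level split and the resulting sample counts can be checked with a short sketch. Only the codes GG and XH come from the text; the other 16 village codes are placeholders, and the helper name is hypothetical.

```python
def split_by_village(samples, val_villages):
    """Split (village, crop_index) samples so no village appears in both sets."""
    train = [s for s in samples if s[0] not in val_villages]
    val = [s for s in samples if s[0] in val_villages]
    return train, val


# GG and XH are the validation villages; "V00".."V15" are placeholder codes.
villages = ["GG", "XH"] + [f"V{i:02d}" for i in range(16)]
samples = [(village, idx) for village in villages for idx in range(121)]

train, val = split_by_village(samples, {"GG", "XH"})
train_share = len(train) / len(samples)  # about 0.889
augmented_size = 3 * len(train)          # three augmented draws per sample
```

Splitting by village rather than by crop matters here because neighbouring crops overlap by 50%, so a crop-level split would place nearly identical pixels in both sets.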

Table 1. The basic information of the villages in the SQVillages dataset.

To rigorously evaluate model generalization to unseen rural scenes, eight additional villages from the same region (excluded from SQVillages) were selected as the independent test set, ensuring a comprehensive assessment of model robustness, as shown in Table 2. Test set preprocessing follows the same pipeline as the training data (512 × 512 sliding windows, georeferencing) but with no data augmentation applied to reflect real-world inference conditions. This consistency in preprocessing ensures fair comparison, while the exclusion of test villages from training guarantees unbiased evaluation of the model’s ability to segment narrow alleys, occluded roads, and complex rural topologies in novel environments.

Table 2. Information about the villages used for testing.

2.2. Network Architecture

2.2.1. General Structure

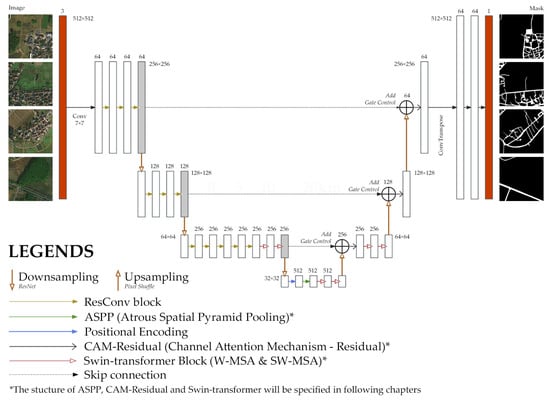

The R-SWTNet network structure (Figure 5) adopts an encoder–decoder architecture optimized for rural road segmentation, building on the classic UNet framework with three core components (encoders, a bottleneck block, and decoders), each modified to address the complexity of rural road features. The detailed parameters of the network are listed in Table 3.

Figure 5. The structure of R-SWTNet.

Table 3. The parameters of R-SWTNet.

The encoder performs hierarchical feature extraction via four downsampling blocks, progressively reducing spatial dimensions while enriching semantic information. It uses ResNet34 as the backbone, with early blocks extracting low-level features (textures, edges) through consecutive convolution layers, and Swin Transformer modules integrated gradually to model long-range spatial dependencies, which is critical for connecting occluded or fragmented road segments. By combining local feature refinement (via ResNet) and contextual correlation (via Transformer), the encoder captures multi-scale road characteristics, from narrow alley edges to large-scale network topology.

The bottleneck block deepens semantic integration by merging high-level encoder features. It first downsamples features via patch merging, then employs a positional encoding block and an ASPP module to aggregate multi-scale context, enabling the model to adapt to varying road widths and occlusions. Two subsequent Swin Transformer blocks further strengthen global feature correlation, ensuring coherent representation of rural road networks despite their unstructured layout.

The decoder module reconstructs high-resolution segmentation maps by reversing downsampling, leveraging skip connections to fuse encoder-derived multi-scale features. Each decoder block upsamples features via pixel shuffle and gated skip connections and refines the fused map using CAM-Residual blocks—these enhance channel-wise attention to critical road features while suppressing noise. Selective integration of Transformer modules in later decoder stages further optimizes boundary precision, ensuring accurate recovery of narrow alleys and blurred edges. The final output is a binary segmentation mask generated via a 1 × 1 convolution with sigmoid activation, classifying road pixels from background.

This architecture integrates ResNet34’s hierarchical feature extraction, Swin Transformer’s long-range dependency modeling, ASPP’s multi-scale context fusion, and CAM-Residual’s channel-wise attention, collectively enhancing the model’s ability to capture blurred boundaries, occluded segments, and narrow alleys in rural scenes.

2.2.2. The Structure of Swin Transformer Module

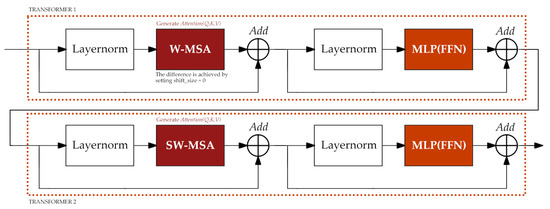

The Swin Transformer module in R-SWTNet is a computationally optimized adaptation of the Swin Transformer architecture (Figure 6), designed to enhance the network’s capacity for capturing long-range dependencies and global context, which is critical for segmenting rural roads with fragmented or occluded segments. The module comprises two consecutive transformer blocks, which work synergistically to balance local detail preservation and cross-region information interaction.

Figure 6. The Swin Transformer structure.

The first block employs a Window Multi-head Self-Attention (W-MSA) mechanism, partitioning the feature map into non-overlapping local windows and confining self-attention computations within these windows to reduce computational complexity. By anchoring attention to local regions, W-MSA prioritizes fine-grained details such as road edges, texture variations, and small-scale occlusions, ensuring precise modeling of subtle rural road features without the cost of global attention.

The second block introduces a Shifted Window Multi-head Self-Attention (SW-MSA) mechanism, which shifts the window partition relative to the previous layer so that each new window straddles the boundaries of several windows of the preceding block. This design enables cross-window information communication: features from neighboring windows (e.g., a partially occluded road segment in one window and its continuation in an adjacent window) can interact, effectively expanding the model’s receptive field. Importantly, the sequential arrangement of W-MSA and SW-MSA ensures local features are first stabilized before integrating contextual cues from neighboring regions, preventing noise from diluting critical details (e.g., distinguishing a narrow alley from surrounding field ridges).
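The effect of shifting the window partition can be illustrated on a toy feature map. The sketch below only computes which attention window each position falls into; the 8 × 8 map, window size 4, and shift 2 are hypothetical values, and the attention mask that the Swin Transformer uses to keep wrapped-around positions from attending to each other is omitted.

```python
def window_ids(height, width, window, shift=0):
    """Map every position of a feature map to the id of its attention window.

    shift=0 gives the regular partition (W-MSA); shift>0 mimics the cyclic
    shift applied before partitioning in shifted-window attention (SW-MSA).
    """
    windows_per_row = width // window
    ids = []
    for r in range(height):
        rolled_r = (r - shift) % height
        ids.append([
            (rolled_r // window) * windows_per_row
            + ((c - shift) % width) // window
            for c in range(width)
        ])
    return ids


regular = window_ids(8, 8, window=4)
shifted = window_ids(8, 8, window=4, shift=2)

# Positions (0, 3) and (0, 4) are direct neighbours on the feature map.
same_window_regular = regular[0][3] == regular[0][4]  # False: window border
same_window_shifted = shifted[0][3] == shifted[0][4]  # True: border removed
```

Two neighbouring positions that are separated by a window border in the first block share a window in the second, which is how information crosses the border after the two blocks are applied in sequence.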

The self-attention operation within each block is computed as:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^{T}}{\sqrt{d_k}}\right)V$$

where Q, K, and V represent query, key, and value matrices, respectively, and $d_k$ denotes the dimension of the query/key vectors.
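As an illustration of the scaled dot-product attention described above, the following dependency-free sketch operates on small lists of token vectors. It is a simplification: a practical implementation would use a tensor library and multiple heads, and the Swin Transformer additionally adds a learned relative position bias inside the softmax, which is left out here.

```python
import math


def softmax(row):
    """Numerically stable softmax over one list of scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q, K, V):
    """Scaled dot-product attention for lists of query/key/value vectors."""
    d = len(K[0])  # dimension of the query/key vectors
    scores = [
        [sum(q * k for q, k in zip(q_vec, k_vec)) / math.sqrt(d) for k_vec in K]
        for q_vec in Q
    ]
    weights = [softmax(row) for row in scores]
    out = [
        [sum(w * v_vec[j] for w, v_vec in zip(w_row, V))
         for j in range(len(V[0]))]
        for w_row in weights
    ]
    return out, weights


# Toy tokens inside one window (values are arbitrary).
Q = [[1.0, 0.0], [0.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0]]
V = [[1.0, 2.0], [3.0, 4.0]]
out, weights = attention(Q, K, V)
```

Each row of `weights` sums to one, so every output token is a convex combination of the value vectors within its window.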

The shifted window strategy enriches inter-window information interaction, enabling the model to aggregate context from adjacent regions and adaptively adjust feature weights based on semantic relevance (e.g., emphasizing distant but structurally continuous road segments). When deployed in R-SWTNet’s encoders, this module outperforms conventional transformer blocks by balancing three key advantages: (1) capturing long-range dependencies to connect fragmented road segments (e.g., roads interrupted by buildings or trees); (2) preserving multi-scale features, from fine-grained alley edges (low-level) to large-scale network topology (high-level); and (3) maintaining computational efficiency, as window-based attention reduces resource consumption compared to global self-attention.
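The efficiency argument can be made concrete with the complexity estimates given in the original Swin Transformer paper [31]: for an h × w feature map with C channels and window size M, global self-attention costs about 4hwC² + 2(hw)²C operations, whereas window-based attention costs about 4hwC² + 2M²hwC. The sketch below evaluates both; the feature-map size, channel count, and window size are illustrative values, not the R-SWTNet settings.

```python
def global_msa_cost(h, w, c):
    """Approximate operation count of global multi-head self-attention."""
    return 4 * h * w * c ** 2 + 2 * (h * w) ** 2 * c


def window_msa_cost(h, w, c, m):
    """Approximate operation count of window attention with m x m windows."""
    return 4 * h * w * c ** 2 + 2 * m ** 2 * h * w * c


# Illustrative setting: 64 x 64 feature map, 128 channels, 8 x 8 windows.
full = global_msa_cost(64, 64, 128)
windowed = window_msa_cost(64, 64, 128, 8)
saving = full / windowed  # how many times cheaper the windowed variant is
```

The quadratic term in hw is replaced by a term that is linear in hw, so the gap widens as the feature map grows.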

By deploying these transformer blocks at different depths of the encoder, R-SWTNet adaptively models long-range dependencies across varying feature scales: shallow layers focus on short-range context (e.g., local road texture coherence), while deeper layers capture long-range relationships (e.g., connectivity between distant road segments). This hierarchical adaptation enhances the model’s ability to interpret complex rural road topologies, particularly in scenarios with severe occlusions or narrow, winding alleys—ultimately improving segmentation accuracy and robustness for unstructured rural road networks.

2.2.3. The Structure of Atrous Spatial Pyramid Pooling

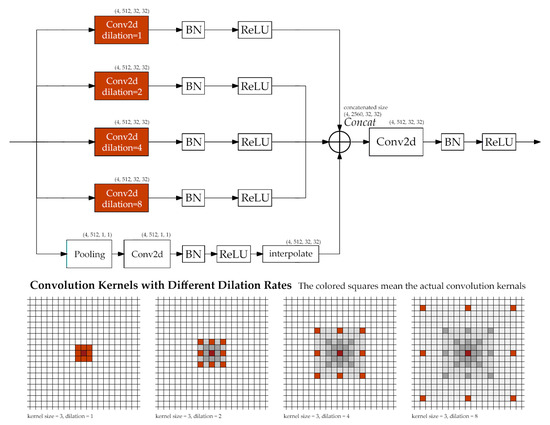

The Atrous Spatial Pyramid Pooling (ASPP) block (Figure 7) in R-SWTNet is a critical component in the bottleneck layer, designed to enrich high-level features with multi-scale contextual information—essential for capturing rural roads of varying widths (from narrow alleys to main roads) and mitigating the impact of occlusions.

Figure 7. The ASPP structure.

The ASPP block consists of multiple parallel convolutional branches that process the input feature map at distinct scales, then concatenate their outputs to aggregate multi-scale context. By leveraging atrous convolution with varying dilation rates, ASPP effectively captures context at multiple scales: small dilation rates focus on local details like narrow alley edges, while larger rates capture broader contextual cues such as the spatial relationship between a road and surrounding buildings or vegetation. The global average pooling branch further enhances the model’s awareness of “road-like” regions in complex rural scenes, where roads may be fragmented by trees or occluded by houses. Together, these mechanisms enable the bottleneck layer to synthesize rich, multi-scale context, which is critical for accurately segmenting rural roads with blurred boundaries, irregular morphologies, and diverse widths.
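The role of the dilation rate can be seen in a one-dimensional toy version of ASPP. A k-tap kernel with dilation r covers k + (k − 1)(r − 1) positions, so larger rates see wider context at the same parameter cost. The rates (1, 2, 4), the averaging kernel, and the function names below are assumptions made for illustration; the rates actually used in R-SWTNet are not restated here.

```python
def effective_kernel(kernel, dilation):
    """Number of positions spanned by a dilated (atrous) kernel."""
    return kernel + (kernel - 1) * (dilation - 1)


def dilated_conv1d(signal, weights, dilation):
    """Zero-padded, same-length 1D atrous convolution with an odd kernel."""
    k = len(weights)
    out = []
    for i in range(len(signal)):
        acc = 0.0
        for j, wgt in enumerate(weights):
            idx = i + (j - k // 2) * dilation
            if 0 <= idx < len(signal):
                acc += wgt * signal[idx]
        out.append(acc)
    return out


def aspp_1d(signal, rates=(1, 2, 4)):
    """Parallel atrous branches plus a global-average branch, concatenated."""
    branches = [dilated_conv1d(signal, [1 / 3] * 3, r) for r in rates]
    pooled = sum(signal) / len(signal)
    branches.append([pooled] * len(signal))  # broadcast the global context
    return [list(features) for features in zip(*branches)]


# A single "road" response at position 3 of an 8-sample signal.
features = aspp_1d([0.0, 0.0, 0.0, 3.0, 0.0, 0.0, 0.0, 0.0])
```

Position 7 is four samples away from the response at position 3: the rate-1 branch sees nothing there, while the rate-4 branch does, which mirrors how larger rates relate a pixel to more distant context.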

In the R-SWTNet architecture, the ASPP block processes high-level features from the encoder’s deepest layer (after patch merging), enriching them with multi-scale context before passing to subsequent Swin Transformer blocks. This ensures that the decoder receives feature maps encoding both fine-grained details and global structure, ultimately improving the model’s ability to reconstruct continuous, accurate road networks in unstructured rural environments.

2.2.4. The Structure of CAM-Residual Block

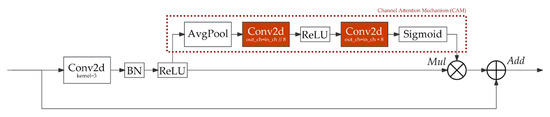

The CAM-Residual (Channel Attention Mechanism-Residual) block is a key component in R-SWTNet’s decoders, designed to enhance feature refinement while maintaining computational efficiency (Figure 8). It embeds a channel attention mechanism within residual connections, enabling adaptive emphasis on critical road features while suppressing irrelevant background noise.

Figure 8. The CAM-Residual structure.

Structurally, the block processes concatenated features from encoders and skip connections: first, residual paths preserve low-level details to mitigate gradient vanishing during training; second, the channel attention module, which replaces traditional fully connected layers with lightweight convolutions, dynamically weights feature channels based on their contribution to road segmentation, reducing parameter complexity. This dual design strengthens the model’s ability to discern intricate topological details.
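The combination of channel attention and a residual path can be sketched in a few lines. This is not the authors' exact block: it assumes a squeeze-and-excitation style gate in which the two lightweight 1 × 1 convolutions act on globally pooled channel descriptors (where they reduce to matrix-vector products), it omits the spatial convolutions of the residual branch, and the weights are fixed toy values rather than learned parameters.

```python
import math


def channel_gates(features, w_reduce, w_expand):
    """Per-channel gates in (0, 1) from globally pooled channel statistics."""
    pooled = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in features]
    # First 1x1 convolution followed by ReLU.
    hidden = [max(0.0, sum(w * p for w, p in zip(row, pooled)))
              for row in w_reduce]
    # Second 1x1 convolution followed by sigmoid.
    return [1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
            for row in w_expand]


def cam_residual(features, w_reduce, w_expand):
    """Re-weight channels and add the input back through an identity path."""
    gates = channel_gates(features, w_reduce, w_expand)
    return [[[v + g * v for v in row] for row in ch]
            for ch, g in zip(features, gates)]


# Two 2 x 2 channels with toy weights (one hidden unit).
x = [[[1.0, 1.0], [1.0, 1.0]], [[2.0, 2.0], [2.0, 2.0]]]
w_reduce = [[1.0, 0.0]]
w_expand = [[0.0], [10.0]]
gates = channel_gates(x, w_reduce, w_expand)
y = cam_residual(x, w_reduce, w_expand)
```

Because the input is added back unchanged, a gate close to zero suppresses a channel's refinement without cutting the gradient path through the block.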

In rural road segmentation, CAM-Residual addresses two core challenges: (1) enhancing discriminability between spectrally similar features via channel-wise weighting; (2) stabilizing training for imbalanced datasets (fewer road pixels) by preserving gradient flow through residual paths. By refining decoder features, it ensures accurate reconstruction of rural road planar morphology, particularly for narrow, occluded alleys critical to village mobility networks.

2.3. Loss Function

2.3.1. Focal Loss

Binary cross-entropy loss (BCE loss) is one of the most popular loss functions in binary classification scenarios. The CE of a pixel with a predicted value of p and a true label of y is as follows:

$$\mathrm{CE}(p, y) = \begin{cases} -\log(p), & y = 1 \\ -\log(1 - p), & \text{otherwise} \end{cases}$$

If we assign a parameter $p_t$ as

$$p_t = \begin{cases} p, & y = 1 \\ 1 - p, & \text{otherwise} \end{cases}$$

then the CE value can be simplified to

$$\mathrm{CE}(p_t) = -\log(p_t)$$

In tasks where the foreground and background are imbalanced, focal loss [47] is more suitable than cross-entropy. In Table 1, the foreground ratio of each village stays below 10%, indicating the applicability of focal loss. Compared with cross-entropy, focal loss introduces factors $\alpha_t$ and $\gamma$ in the form of

$$\mathrm{FL}(p_t) = -\alpha_t (1 - p_t)^{\gamma} \log(p_t)$$

where

$$\alpha_t = \begin{cases} \alpha, & y = 1 \\ 1 - \alpha, & \text{otherwise} \end{cases}$$

Generally, the value of $\alpha$ stays around 0.75, and $\gamma$ is often set to 2. By introducing these two factors, the easy-to-classify samples are less important, and the hard-to-classify ones are amplified, forcing models to focus on underrepresented classes. This adaptability ensures better recognition of hard-to-classify cases, balancing performance across all categories and enhancing model robustness in imbalanced datasets. The focal loss of a whole image is the arithmetic mean of $\mathrm{FL}(p_t)$ over all pixels.
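A per-pixel version of focal loss, following the standard definition in [47], can be sketched as below. The defaults mirror the values quoted in the text (a class weight of 0.75 for road pixels and a focusing exponent of 2); the function names and the small clamp that guards the logarithm are illustrative additions.

```python
import math


def focal_loss_pixel(p, y, alpha=0.75, gamma=2.0, eps=1e-8):
    """Focal loss of one pixel with predicted road probability p and label y."""
    p_t = p if y == 1 else 1.0 - p               # probability of the true class
    alpha_t = alpha if y == 1 else 1.0 - alpha   # class-balancing weight
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(max(p_t, eps))


def focal_loss_image(probs, labels, alpha=0.75, gamma=2.0):
    """Arithmetic mean of the per-pixel focal loss over a flattened image."""
    losses = [focal_loss_pixel(p, y, alpha, gamma)
              for p, y in zip(probs, labels)]
    return sum(losses) / len(losses)


easy = focal_loss_pixel(0.9, 1)  # confident and correct road pixel
hard = focal_loss_pixel(0.1, 1)  # road pixel the model almost misses
image_loss = focal_loss_image([0.9, 0.1, 0.2, 0.05], [1, 1, 0, 0])
```

With an exponent of 2, the easy pixel is scaled by (1 − 0.9)² = 0.01 and the hard one by (1 − 0.1)² = 0.81, so the hard pixel dominates the mean far more than under plain cross-entropy.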

2.3.2. Tversky Loss

Tversky loss [48], an extension of the Tversky index, is optimized for imbalanced segmentation tasks by introducing adjustable weights for false positives (FP) and false negatives (FN). This makes it particularly effective for rural road segmentation, where narrow alleys (small targets) and class imbalance (fewer road pixels) demand precise control over error penalties. Unlike Dice loss (which treats FP and FN equally), Tversky loss balances these errors via the hyperparameters α and β, enabling targeted optimization for rural road challenges such as under-segmentation of narrow paths or over-segmentation of background clutter.

The Tversky loss for a binary segmentation task is defined as:

$$\mathrm{TL} = 1 - \frac{TP + \varepsilon}{TP + \alpha\, FP + \beta\, FN + \varepsilon}$$

where TP (true positives), FP (false positives), and FN (false negatives) form the confusion matrix; $\varepsilon$ (often $1 \times 10^{-8}$) avoids division by zero; α and β weight FP and FN, respectively. For rural roads, we set α = 0.3 and β = 0.7 to prioritize reducing FN, which is critical for capturing narrow alleys and maintaining road continuity.

This design enhances sensitivity to small, sparse structures: by amplifying FN penalties (β = 0.7), the model focuses on recovering fragmented road segments, while controlled FP weighting (α = 0.3) balances precision. Compared with Dice loss [49] (which corresponds to the special case α = β = 0.5 and therefore weights FP and FN equally), Tversky loss can be tuned to rural road characteristics, ensuring both continuity of major roads and preservation of narrow alley details, which is critical for reconstructing complete village mobility networks.
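
The asymmetry between FN and FP penalties can be checked numerically. The sketch below is a plain-Python illustration that assumes a "soft" Tversky loss computed from per-pixel probabilities, with the smoothing constant placed only in the denominator. The names `tversky_index` and `tversky_loss` and the example counts are hypothetical and are not taken from the authors' code.

```python
def tversky_index(tp, fp, fn, alpha=0.3, beta=0.7, eps=1e-8):
    """Tversky index from (possibly soft) TP, FP, and FN counts."""
    return tp / (tp + alpha * fp + beta * fn + eps)


def tversky_loss(probs, labels, alpha=0.3, beta=0.7, eps=1e-8):
    """Soft Tversky loss over flat lists of probabilities and 0/1 labels."""
    tp = sum(p * y for p, y in zip(probs, labels))
    fp = sum(p * (1 - y) for p, y in zip(probs, labels))
    fn = sum((1 - p) * y for p, y in zip(probs, labels))
    return 1.0 - tversky_index(tp, fp, fn, alpha, beta, eps)


# Same number of true positives, two errors of one type at a time.
loss_two_misses = 1.0 - tversky_index(tp=8, fp=0, fn=2)
loss_two_false_alarms = 1.0 - tversky_index(tp=8, fp=2, fn=0)
print(f"two misses: {loss_two_misses:.4f}, two false alarms: {loss_two_false_alarms:.4f}")
```

With α = 0.3 and β = 0.7, two missed road pixels cost more than two false alarms, which is the behaviour the text relies on for recovering narrow alleys.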

2.3.3. Dynamic Hybrid Loss

To address the dual challenges of class imbalance (fewer road pixels) and fine-grained structure preservation (narrow alleys) in rural road segmentation, we propose a Dynamic Hybrid Loss (DHLoss) that adaptively combines Focal loss and Tversky loss via a task-aware weight scheduler. This design leverages the strengths of both losses: Tversky loss optimizes spatial overlap for small targets, while Focal loss prioritizes hard-to-classify pixels, ensuring robust segmentation of complex rural scenes.

DHLoss is formulated as:

$$\mathrm{DHLoss} = \lambda\,\mathrm{FL} + (1 - \lambda)\,\mathrm{TL}$$

In this expression, $\lambda$ is the dynamic ratio, which increases epoch-wise over the first several warm-up epochs from 0 to $\lambda_{\max}$ (generally ranging from 0.5 to 0.7). In the early stages of training, DHLoss therefore consists mainly of Tversky loss, which focuses on maximizing spatial overlap between predicted and ground-truth road regions. This stabilizes initial convergence by prioritizing the recovery of large-scale road topology and mitigating false negatives (FN) for narrow alleys. As training progresses, Focal loss gradually gains weight, emphasizing hard-to-classify pixels (e.g., blurred alley boundaries, segments occluded by vegetation). By down-weighting easy samples and up-weighting hard samples (e.g., road-edge pixels confused with field ridges), Focal loss refines boundary precision and reduces false positives (FP) from background clutter, complementing Tversky loss’s focus on spatial overlap.

This dynamic combination ensures both initial topology capture and late-stage detail optimization: Tversky loss establishes a baseline road network structure, avoiding under-segmentation of narrow alleys, while focal loss sharpens boundary definition and suppresses background interference, enhancing overall segmentation fidelity. For rural road scenes—characterized by imbalanced data, small targets, and ambiguous boundaries—DHLoss balances global structural integrity and local detail accuracy, outperforming single-loss strategies (e.g., Focal or Tversky alone) in both quantitative metrics (IoU, F1-score) and visual continuity of segmented roads.
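
The weight scheduling described in this subsection can be sketched as follows. This is a minimal illustration under stated assumptions: the focal weight rises linearly with the epoch index (the text only says it increases epoch-wise), the warm-up lasts 10 epochs, the final weight is 0.6 (inside the stated 0.5 to 0.7 range), and epochs are counted from 0. The names `focal_weight` and `dynamic_hybrid_loss` are hypothetical.

```python
def focal_weight(epoch, warmup_epochs=10, lam_max=0.6):
    """Weight of the focal term; assumed to ramp linearly from 0 to lam_max."""
    if warmup_epochs <= 0:
        return lam_max
    return lam_max * min(epoch / warmup_epochs, 1.0)


def dynamic_hybrid_loss(focal, tversky, epoch, warmup_epochs=10, lam_max=0.6):
    """Blend precomputed focal and Tversky loss values for the given epoch."""
    lam = focal_weight(epoch, warmup_epochs, lam_max)
    return lam * focal + (1.0 - lam) * tversky


# Early training is dominated by the Tversky term, later training is mixed.
for epoch in (0, 5, 10, 40):
    print(epoch, round(focal_weight(epoch), 2))
```

Because the two weights always sum to 1, the overall loss scale stays comparable across epochs, which keeps the warm-up from interacting with the learning rate schedule.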

3. Experiment and Results

3.1. Optimization and Learning Rate Management

In deep learning-based segmentation tasks, the choice of optimizer and learning rate scheduling directly impacts model convergence, generalization, and training stability, which is particularly critical for complex rural road scenes with imbalanced data and fine-grained features. This study employs an optimization pipeline that combines the AdamW optimizer [50,51] with a sequential learning rate scheduler, complemented by memory-efficient training strategies, to enable stable training of the R-SWTNet architecture.

The AdamW optimizer is selected for its adaptive gradient descent capabilities and effective weight decay regularization, configured with a base learning rate of $1 \times 10^{-4}$, weight decay of $1 \times 10^{-4}$, and batch size of 4. To balance training stability and convergence speed, the learning rate is dynamically adjusted via a two-stage sequential scheduler: (1) A linear warm-up phase [52] over the first 10 epochs, starting from 0.1× the base rate ($1 \times 10^{-5}$) and gradually increasing to the full base rate. This mitigates unstable early training caused by large initial gradients. (2) A cosine annealing scheduler with warm restarts [53], which oscillates the learning rate between the base value and a minimum of $1 \times 10^{-6}$, with an initial cycle length of 15 epochs that doubles after each restart ($T_{\mathrm{mult}}$ = 2). This strategy encourages exploration of the loss landscape, facilitating convergence to flat minima [54], which is critical for robust generalization [55] to unseen rural road scenes.
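
The resulting learning rate curve can be reproduced with a few lines of plain Python. The sketch below assumes per-epoch updates, epochs counted from 0, and the usual cosine form from [53], lr = lr_min + 0.5 (lr_base − lr_min)(1 + cos(π T_cur / T_i)). The function name `learning_rate` and its argument names are illustrative. In PyTorch, a comparable schedule would typically be composed from `LinearLR`, `CosineAnnealingWarmRestarts`, and `SequentialLR` in `torch.optim.lr_scheduler`, although the text does not confirm which classes were used.

```python
import math


def learning_rate(epoch, base_lr=1e-4, min_lr=1e-6, warmup_epochs=10,
                  warmup_start=0.1, t_0=15, t_mult=2):
    """Learning rate at a given epoch: linear warm-up, then cosine restarts."""
    if epoch < warmup_epochs:
        # Linear ramp from warmup_start * base_lr up to base_lr.
        frac = epoch / warmup_epochs
        return base_lr * (warmup_start + (1.0 - warmup_start) * frac)
    # Locate the position inside the current cosine cycle.
    t_cur = epoch - warmup_epochs
    t_i = t_0
    while t_cur >= t_i:
        t_cur -= t_i
        t_i *= t_mult  # each cycle is t_mult times longer than the last
    cosine = 1.0 + math.cos(math.pi * t_cur / t_i)
    return min_lr + 0.5 * (base_lr - min_lr) * cosine


# Under these assumptions, restarts fall at epochs 25 and 55.
for epoch in (0, 5, 10, 24, 25, 54, 55):
    print(epoch, f"{learning_rate(epoch):.2e}")
```

With a 10-epoch warm-up and cycles of 15, 30, and 60 epochs, a 100-epoch run ends partway through the third cycle rather than at a cycle minimum.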

To accommodate larger batch sizes under computational constraints, the learning rate is scaled proportionally to the square root of the batch size (e.g., doubling the batch size increases the learning rate by a factor of $\sqrt{2}$), a conservative scaling strategy that avoids overfitting compared with linear scaling. Additionally, memory efficiency is enhanced via gradient checkpointing and mixed-precision training (using autocast for 16-bit floating-point operations), enabling training with larger batch sizes without compromising model capacity.
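
As a worked example of the square-root rule, the short sketch below rescales the base learning rate when the batch size changes. The helper name `scaled_lr` is hypothetical, and the reference batch size of 4 is taken from the optimizer configuration above.

```python
import math


def scaled_lr(base_lr, base_batch, new_batch):
    """Square-root scaling: lr grows with sqrt(new_batch / base_batch)."""
    return base_lr * math.sqrt(new_batch / base_batch)


# Doubling the batch multiplies the rate by sqrt(2); quadrupling doubles it.
print(scaled_lr(1e-4, 4, 8))
print(scaled_lr(1e-4, 4, 16))
```

Under linear scaling the second case would quadruple the learning rate instead of doubling it, which is why the square-root rule is the more conservative of the two.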

This integrated optimization framework balances three key objectives: (1) adaptive gradient updates via AdamW to handle imbalanced rural road data; (2) stable convergence via warm-up and cosine annealing to avoid local minima; and (3) memory-efficient training via checkpointing and mixed precision to support larger batch sizes, ultimately accelerating convergence and improving model robustness.

3.2. Evaluation Metrics

To comprehensively assess the performance of rural road segmentation models, we select four core metrics: Precision, Recall, Intersection over Union (IoU), and F1-score. These metrics are tailored to address the unique challenges of rural road scenes, including class imbalance (fewer road pixels), narrow target preservation (alleys < 3 m), and boundary ambiguity (blurred edges from occlusions).

Precision measures the proportion of predicted road pixels that are truly road, so it reflects how well background clutter (e.g., field ridges or dry riverbeds) is suppressed and penalizes false positives that arise from spectral similarity between roads and non-road features. Recall measures the proportion of true road pixels that the model recovers, so it reflects how well narrow alleys (<3 m) and occluded segments are captured and penalizes false negatives that break the continuity of rural road networks. IoU, the primary spatial metric, quantifies overlap between predicted and ground-truth road regions, explicitly reflecting the model’s capacity to maintain spatial integrity (e.g., avoiding fragmented segmentation of winding paths). F1-score, the harmonic mean of Precision and Recall, balances these two metrics to counteract class imbalance (road pixels < 5% of total) and prevents bias toward under-segmentation (high Precision but missed narrow lanes) or over-segmentation (high Recall but excessive background misclassifications). Together, these metrics validate the model’s robustness across rural-specific scenarios, from fine-grained edge preservation to large-scale topological consistency.
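
For reference, the four metrics can be computed from pixel counts as in the sketch below. It assumes binary masks flattened to lists of 0/1 values and uses the standard definitions Precision = TP/(TP + FP), Recall = TP/(TP + FN), IoU = TP/(TP + FP + FN), and F1 = 2·Precision·Recall/(Precision + Recall). The function name `segmentation_metrics` and the toy masks are illustrative only.

```python
def segmentation_metrics(pred, truth):
    """Precision, Recall, IoU, and F1 for flat binary masks (road = 1)."""
    tp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 1)
    fp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 0)
    fn = sum(1 for p, t in zip(pred, truth) if p == 0 and t == 1)
    # Guard against empty denominators on masks without any road pixels.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"precision": precision, "recall": recall, "iou": iou, "f1": f1}


# Toy masks: two hits, one false alarm, one missed road pixel.
pred = [1, 1, 1, 0, 0, 0, 0, 0]
truth = [1, 1, 0, 1, 0, 0, 0, 0]
metrics = segmentation_metrics(pred, truth)
print(metrics)
```

When both are computed from the same pooled counts, F1 and IoU are linked by F1 = 2·IoU/(1 + IoU). An IoU of 54.88% corresponds to an F1-score of about 70.87%, which is consistent with the pair of validation values reported for R-SWTNet.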

3.3. Comparative Experiment

3.3.1. Experiment Design

To systematically evaluate the performance of the proposed R-SWTNet architecture, comparative experiments were conducted against five benchmark models, spanning classic, transformer-based, residual-enhanced, and lightweight variants (Table 4).

Table 4. The basic information of the models.

All models were trained under identical experimental conditions to ensure fair comparison: 100 epochs with a batch size of 16, early stopping monitored on the validation set to prevent overfitting, and the same optimization pipeline (as detailed in Section 3.1). Input images were standardized to 512 × 512 pixels, and data augmentation was applied uniformly to all models.

To investigate the impact of lightweight design on rural road segmentation, two DSC-based variants were constructed by replacing all standard convolutional layers in the ResNet34 backbone blocks with depthwise separable convolutions. This modification decouples spatial and channel-wise operations, drastically reducing parameter counts. These variants enabled analysis of the gains and trade-offs between computational efficiency and segmentation accuracy, critical for deploying models in resource-constrained rural environments.

By spanning architectural spectrums—from vanilla U-Net to hybrid transformer convolutional models, and from full-capacity ResNet to lightweight DSC variants—this experimental design isolates the contributions of key components in R-SWTNet: residual feature extraction (ResNet34), long-range dependency modeling (Swin Transformer), multi-scale context fusion (ASPP), and channel-wise attention (CAM-Residual). Collectively, these comparisons validate whether the proposed architecture achieves superior performance by synergistically integrating these mechanisms, rather than relying on isolated innovations.

3.3.2. Experiment Results

The quantitative performance of all models is summarized in Table 5, which reveals distinct disparities in segmentation accuracy and parameter efficiency across architectural paradigms. Of all the trained models, R-Net, D-LinkNet, and R-SWTNet converge around epoch 35–40, after which the metrics on the validation set struggle to improve, while the others take more time to converge.

Table 5. The quantitative metrics of the models.

Overall, the baseline U-Net exhibits the lowest performance across all metrics despite having the second-highest parameter count, with validation IoU and F1-score values significantly below those of other models, confirming its inadequacy for complex rural road segmentation. In contrast, transformer-based and residual-enhanced models demonstrate marked improvements, though their performance varies with architectural design.

Swin-UNet, representing pure transformer architectures, achieves higher training precision and recall than U-Net but shows a substantial drop in validation metrics, particularly in IoU and F1-score, indicating limited generalization to unseen rural scenes. Residual-based models, by comparison, perform consistently better: R-Net (ResNet34 backbone) outperforms Swin-UNet in both training and validation metrics, with validation IoU and F1-score exceeding those of Swin-UNet by notable margins. R-Net, D-LinkNet, and the proposed R-SWTNet share similar validation metrics and FLOPs, yet R-SWTNet shows the least overfitting despite having the most parameters. Because models with more parameters are generally more prone to overfitting, this seemingly contradictory result indicates the superiority of R-SWTNet.

Lightweight variants with depthwise separable convolutions (DSC) exhibit clear parameter efficiency trade-offs. R-Net-DSC and R-SWTNet-DSC reduce parameter counts compared with their non-DSC counterparts. However, this parameter reduction is accompanied by consistent drops in both training and validation metrics, with validation IoU decreasing by 5–6 percentage points for both variants and a slight increase in overfitting.

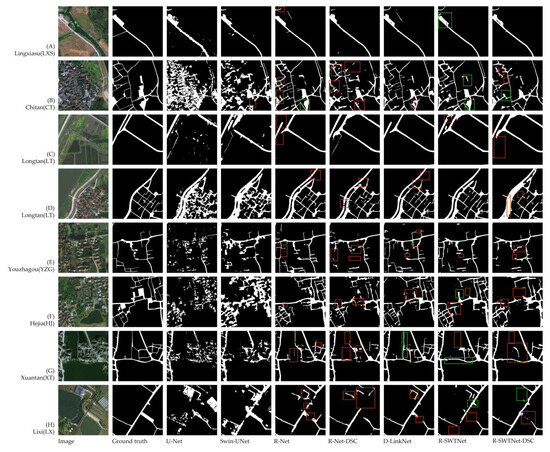

The actual segmentation results vary across models, with visual patterns in Figure 9 corroborating the quantitative trends in Table 5. Visually, the U-Net model struggles to distinguish rural roads from background features, often misclassifying buildings as roads, a tendency rooted in its architectural bias toward large, high-contrast regions. U-Net’s encoder–decoder structure prioritizes local, block-like features and loses fine-grained details during downsampling, failing to capture narrow alleys or occluded segments. This results in blurred boundaries and incomplete road networks, aligning with its low validation IoU (22.76%). In low-occlusion scenarios (e.g., scenarios A, C, and H), the U-Net model performs better, but is still far from optimal.

Figure 9. Comparison of different models on the test set.

The pure transformer-based Swin-UNet also suffers from misclassifications. Unlike U-Net, Swin-UNet recognizes semantics mostly correctly, but because it lacks local feature extraction capability, the foreground boundaries remain blurred. We experimented with replacing the original 4× downsampling in the first layer with 2× downsampling, but this led to a massive increase in training cost. In contrast, residual-based models exhibit clearer segmentation. R-Net (ResNet34 backbone) outperforms Swin-UNet visually, with sharper road boundaries and fewer false positives, confirming residual connections’ role in stabilizing local feature extraction.

The proposed R-SWTNet demonstrates incremental but critical visual improvements over benchmarks. It achieves the best balance of local and global features. On close inspection, the pure CNN-based models, such as R-Net, D-LinkNet, and R-Net-DSC, show many spurs and small white dots in their segmentation results, while R-SWTNet, thanks to its transformer-based encoder3 and bottleneck, greatly reduces them. Moreover, in scenario F, R-SWTNet performs best in segmenting the playground in the middle, though the result is still far from optimal. In addition, the comparison of D-LinkNet and R-SWTNet is consistent with the metrics in Table 5. In scenarios B, D, E, and F, the alleys recognized by R-SWTNet are slightly wider than those recognized by D-LinkNet, which agrees with the difference in Precision and Recall on the validation set.

Lightweight DSC variants (R-Net-DSC, R-SWTNet-DSC) exhibit noticeable visual degradation, particularly in scenario B. In scenario B, R-Net-DSC fails to identify the Chitan Old Town parking lot, a distinct white square in high-resolution imagery. Their non-DSC counterparts (R-Net, R-SWTNet), on the contrary, both segment it correctly. The degradation reflects reduced feature extraction capacity from parameter compression, aligning with the quantitative IoU drops (5–6 percentage points), confirming DSC’s trade-off between efficiency and representational power for complex rural scenes.

4. Discussion

4.1. Necessity of Transformer-CNN Conjunction

For rural road segmentation, addressing occlusion and discontinuous road segments hinges on effectively modeling long-range dependencies—a capability inherently limited in vanilla U-Net architectures. Two primary strategies exist to mitigate this limitation: data augmentation and architectural enhancement. Among these, architectural innovations have proven most impactful for capturing the complex topological relationships of rural road networks.

Transformers are indispensable for segmenting occluded rural roads, where long-range contextual reasoning is critical. Unlike CNNs, which rely on local receptive fields and implicitly construct connections between distant pixels with extended receptive fields by stacking multiple layers, transformers dynamically and explicitly weight semantic relevance across an entire image. For instance, the hierarchical SW-MSA mechanism in the Swin Transformer progressively builds global context by correlating disconnected segments across non-overlapping windows, enabling coherent reconstruction of occluded roads (Figure 9). This explains the drastic performance gap between transformer-equipped models (Swin-UNet, R-SWTNet) and the baseline U-Net, whose convolutional inductive bias prioritizes local patterns, leading to fragmented segmentation of rural alley networks.

Despite their superior ability to model long-range dependencies, transformer mechanisms exhibit inherent limitations in local feature granularity and training convergence for pixel-wise segmentation tasks. These drawbacks necessitate hybrid architectures that merge the strengths of both paradigms: CNNs for local feature precision, and transformers for long-range contextual reasoning. The proposed R-SWTNet exemplifies this synergy by integrating ResNet34 and Swin Transformer modules. ResNet34’s hierarchical convolution layers efficiently extract low-level features and preserve spatial resolution, mitigating the “local blur” issue of pure transformers. Meanwhile, Swin Transformer blocks model long-range dependencies, correlating fragmented road segments across distant image regions. This hybrid design outperforms pure transformer architectures and pure CNN architectures, achieving higher validation IoUs or lower overfitting. For rural road segmentation—where both narrow alleys (local) and network continuity (global) are critical—such hybrid integration transitions from architectural choice to necessity.

4.2. The Gains and Trade-Offs of DSC Module

Depthwise Separable Convolution (DSC) factorizes a standard convolution into a depthwise (spatial) convolution and a pointwise (channel) convolution. This decoupling of spatial and channel-wise operations drastically reduces both parameters and FLOPs.
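
The size of the saving follows directly from the factorization. For a k × k kernel with C_in input and C_out output channels, a standard convolution has k²·C_in·C_out weights, whereas the depthwise plus pointwise pair has k²·C_in + C_in·C_out, a ratio of 1/C_out + 1/k². The sketch below evaluates this for one hypothetical layer (3 × 3 kernel, 64 channels in and out, biases ignored). The helper names are illustrative, and the layer shape is an assumption rather than a specific layer of R-SWTNet.

```python
def conv_params(c_in, c_out, k=3):
    """Weights in a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def dsc_params(c_in, c_out, k=3):
    """Weights in a depthwise k x k conv followed by a 1 x 1 pointwise conv."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 conv that mixes channels
    return depthwise + pointwise


standard = conv_params(64, 64)
separable = dsc_params(64, 64)
print(standard, separable, round(separable / standard, 4))
```

For 3 × 3 kernels the ratio approaches 1/9 as the channel count grows, so the relative saving is largest in the widest layers of a backbone.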

However, this parameter compression introduces critical trade-offs. Quantitatively, DSC variants exhibit consistent performance degradation: R-SWTNet-DSC’s validation IoU drops by 5.76 percentage points (from 54.88% to 49.12%), and R-Net-DSC’s validation IoU decreases by 5.10 percentage points (from 53.15% to 48.05%). Visually, DSC models fail to segment fine-grained rural features due to reduced model capacity, creating a “shallow representation bottleneck”.

Training dynamics further highlight DSC limitations. DSC-equipped models (e.g., R-Net-DSC) converge more slowly than their standard convolution counterparts, partly because DSC replaces ResNet34’s pre-trained kernels with randomly initialized depthwise layers, disrupting transfer learning benefits.

For rural road segmentation, DSC’s trade-offs are context-dependent: it offers a feasible compromise for resource-constrained edge devices (e.g., on-site mapping with limited memory), but its accuracy loss makes it unsuitable for precision-critical tasks (e.g., narrow alley preservation). R-SWTNet’s non-DSC variant thus remains preferable for most rural applications, balancing performance and efficiency without sacrificing fine-grained feature extraction.

4.3. Labeling Noise and Training Ambiguities

Labeling noise and training ambiguities collectively undermine the robustness of rural road segmentation models, stemming from the intrinsic complexity of rural road semantics and limitations in remote sensing data. Rural roads lack standardized definitions, unlike their urban counterparts, which have clear functional boundaries and hardened surfaces. This semantic fuzziness is exacerbated by morphological diversity: pathways may be unpaved, grass-covered, or barely distinguishable from adjacent features like field ridges or footpaths. When narrowed, their spectral signatures in satellite imagery converge with the surrounding terrain. For example, a sunlit earth alley and a dry field ridge often exhibit near-identical reflectance under midday light, making spectral cues alone insufficient for reliable classification.

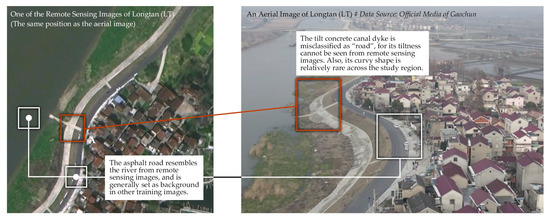

Compounding this, manual labeling introduces subjective errors due to inconsistent criteria for defining “roads”. Annotators may label a 1.5 m wide compacted path as “foreground” (due to frequent pedestrian use) or “background” (due to lack of vehicular traffic), creating conflicting labels for identical features. Such noise propagates through training: models learn unstable patterns when locally similar receptive fields (e.g., a 2 m wide alley vs. a 2 m wide ridge) have contradictory labels, leading to erratic segmentation. For instance, scenario D (LT) in Figure 9 shows a canal dyke misclassified as a road (Figure 10), likely because the concrete dyke and the road are spectrally similar and have similar curvatures, yet carry opposite labels.

Figure 10. The ambiguity of Longtan (LT).

Training ambiguities further amplify these issues, driven by satellite imagery with relatively low resolution that homogenizes inner-village textures, blurring subtle topological details (e.g., alley edges vs. courtyard walls). This forces models to rely on global context over local features, increasing vulnerability to labeling errors. For example, squares and parking lots are labeled “foreground” (road), while courtyards are “background”, but their spectral similarity and blurred boundaries (e.g., walls indistinct in satellite imagery) make this distinction unreliable. As shown in scenario H of Figure 9, none of the models clearly segments the “open space” at the road junction—a failure rooted in training data, where such spaces were inconsistently labeled, leaving the model to “speculate” based on incomplete cues.

These intertwined challenges—labeling noise (semantic fuzziness and subjective annotation) and training ambiguity (feature confusion from low resolution and inconsistent labels)—create a bottleneck for rural road segmentation. Models trained under such conditions struggle to balance recall for narrow alleys and precision for background clutter, highlighting the need for multi-source validation (e.g., cross-referencing satellite and aerial imagery) and expanded datasets with diverse “open space” semantics to reduce reliance on unstable spectral cues.

5. Conclusions

This study addresses the critical challenge of accurately segmenting unstructured rural roads and narrow alleys using deep learning, proposing a context-aware framework and dataset to advance rural road extraction technology. By integrating hierarchical feature extraction, long-range dependency modeling, and multi-scale context fusion, the research provides a robust solution for data-driven rural planning under China’s Rural Revitalization Strategy.

5.1. Context-Aware Hybrid Architecture: Advancing Rural Road Segmentation

The proposed R-SWTNet architecture achieves superior segmentation performance by synergistically integrating ResNet34, Swin Transformer, ASPP, and CAM-Residual modules. ResNet34’s hierarchical convolutions extract low-level features (textures, edges) with high spatial resolution, while the Swin Transformer models long-range dependencies via shifted window attention, correlating fragmented road segments across distant image regions. The ASPP module enriches multi-scale contexts to adapt to varying road widths, and CAM-Residual blocks refine decoder features by suppressing background noise and emphasizing critical road boundaries.

Quantitatively, R-SWTNet outperforms benchmark models on the SQVillages dataset: it achieves a validation IoU of 54.88% and F1-score of 70.87% (Table 5), exceeding U-Net (22.76% IoU) and Swin-UNet (38.73% IoU), and is slightly superior to R-Net (53.15% IoU) and D-LinkNet (54.64% IoU), with remarkably lower overfitting. Visually, it demonstrates superior capability in segmenting narrow alleys and maintaining road continuity, confirming its robustness (Figure 9). This hybrid design resolves the limitations of pure CNNs (poor long-range reasoning) and pure transformers (local detail loss), establishing a new paradigm for unstructured rural road segmentation.

5.2. SQVillages Dataset: Enabling Robust Model Generalization

To address the scarcity of rural-specific road datasets, this study constructs the SQVillages dataset, integrating multi-source remote sensing data (satellite and aerial imagery) and adaptive augmentation. The dataset includes 18 villages across diverse geomorphic types (polders, plains, river-side villages) and infrastructure conditions (traditional villages, resettlement communities).

Key innovations of SQVillages include:

- (1) Multi-source labeling (cross-validating satellite imagery with sub-meter aerial orthomosaics to reduce spectral confusion errors);

- (2) Village-level stratified splitting (isolating validation/test sets by village to avoid data leakage).

This dataset ensures model generalization to unseen rural scenes, as evidenced by R-SWTNet’s consistent performance across training and validation sets.
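
The village-level splitting in item (2) can be illustrated with a short sketch. It assumes each image tile is tagged with the village it was cropped from, and it assigns whole villages to a split so that no village contributes tiles to more than one set. The function name `split_by_village`, the placeholder village names, and the tile counts are hypothetical and do not reflect the actual SQVillages partition.

```python
def split_by_village(tiles, val_villages, test_villages):
    """Assign every (village, tile_id) pair to a split by its village."""
    val_villages, test_villages = set(val_villages), set(test_villages)
    if val_villages & test_villages:
        raise ValueError("a village cannot be in both validation and test sets")
    splits = {"train": [], "val": [], "test": []}
    for village, tile_id in tiles:
        if village in test_villages:
            splits["test"].append((village, tile_id))
        elif village in val_villages:
            splits["val"].append((village, tile_id))
        else:
            splits["train"].append((village, tile_id))
    return splits


# Hypothetical tiles: three per village, identified by (village, index).
names = ("village_a", "village_b", "village_c", "village_d")
tiles = [(name, i) for name in names for i in range(3)]
splits = split_by_village(tiles, val_villages=["village_c"],
                          test_villages=["village_d"])
villages_per_split = {key: {v for v, _ in items} for key, items in splits.items()}
print(villages_per_split)
```

Splitting at the tile level instead would let neighbouring crops of the same alley land in both training and validation sets, which is the leakage that the village-level rule is meant to prevent.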

5.3. Limitations and Future Work

Despite the promising results, this study has several limitations. First, the SQVillages dataset, while diverse, is geographically focused on eastern China, and model performance on villages in vastly different landscapes (e.g., high-altitude plateaus or deserts) remains unexplored. Second, the computational cost of the full R-SWTNet model may hinder its deployment on extremely resource-constrained edge devices.

These limitations point to clear directions for future research. Our immediate next steps include:

- (1) Expanding the SQVillages dataset to incorporate villages from western China’s mountainous regions to test model generalization further;

- (2) Exploring more advanced model compression and quantization techniques to enhance deployment feasibility without significant accuracy loss;

- (3) Investigating semi-supervised learning paradigms to reduce the heavy reliance on costly pixel-wise annotations.

In conclusion, this study achieved its initial aim of developing a robust deep learning framework for segmenting rural streets and alleys in China. By addressing both architectural innovation and dataset construction, we have advanced the state-of-the-art in rural road extraction and laid a foundation for intelligent rural infrastructure management.

Author Contributions

Conceptualization, J.W., X.L. and H.Z.; network construction, J.W.; aerial images acquisition, J.W., X.X., Y.Z. and Y.C.; aerial images processing, J.Y., X.X. and Y.Z.; satellite imagery acquisition, J.W. and J.Y.; dataset preparation, J.Y.; model training and testing, J.W.; writing—original draft preparation, J.W.; writing—review and editing, J.Y. and Y.C.; visualization, J.W., J.Y. and X.X.; supervision, X.L. and H.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The datasets presented in this article are not readily available because the data are part of an ongoing study. Requests to access the datasets should be directed to the first author.

Acknowledgments

During the preparation of this manuscript/study, the author(s) used deepseek-R1-0528 and doubao-seed-1.6-thinking for the purposes of refining texts. The authors have reviewed and edited the output and take full responsibility for the content of this publication.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The most commonly used abbreviations of network structures are listed as follows:

| Abbreviation | Definition |
| --- | --- |
| CNN | Convolutional Neural Network |
| DSC | Depthwise Separable Convolution |
| FCN | Fully Convolutional Network |
| ASPP | Atrous Spatial Pyramid Pooling |
| IoU | Intersection over Union |
| W-MSA | Window Multi-head Self-Attention |
| SW-MSA | Shifted Window Multi-head Self-Attention |

The specific definitions of the listed networks, such as AlexNet, DeconvNet, and SegNet, can be found in the corresponding literature.

Note

| 1 | The official API of Tianditu can be found at http://lbs.tianditu.gov.cn/server/MapService.html (accessed from 14 June to 7 August 2025). The API is accessed using a Python 3.6 script. |

References

- Zhang, H.; Dong, W.; Fang, X. Road construction and rural household income: Empirical evidence from village road paving in China. Financ. Res. Lett. 2023, 51, 103460. [Google Scholar] [CrossRef]

- World Bank Group. Measuring Rural Access: Using New Technologies; World Bank Group: Washington, DC, USA, 2016; Available online: https://openknowledge.worldbank.org/entities/publication/ba2e6b4d-ea2e-58f0-b54e-326c902169ba (accessed on 30 August 2025).

- Tian, Z.; Xin, Y.; Lin, Y. Do roads help rural populations escape poverty? new evidence from Chinese survey data. Appl. Econ. 2025, 1–14. [Google Scholar] [CrossRef]

- Morán Uriel, J.; Camerin, F.; Córdoba Hernández, R. Urban Horizons in China: Challenges and Opportunities for Community Intervention in a Country Marked by the Heihe-Tengchong Line. In Diversity as Catalyst: Economic Growth and Urban Resilience in Global Cityscapes; Urban Sustainability; Siew, G., Allam, Z., Cheshmehzangi, A., Eds.; Springer: Singapore, 2024. [Google Scholar] [CrossRef]

- National Bureau of Statistics of China; Wang, P.P. Total Population Decline Narrowed, Population Quality Continued to Improve Report; National Bureau of Statistics of China: Beijing, China, 2025. Available online: https://www.stats.gov.cn/xxgk/jd/sjjd2020/202501/t20250117_1958337.html (accessed on 26 August 2025). (In Chinese)

- The Central Committee of the Communist Party of China (CPC); The State Council. Rural Revitalization Strategy Plan (2018–2022) Report; The State Council of the People’s Republic of China: Beijing, China, 2018. Available online: http://www.gov.cn/zhengce/2018-09/26/content_5325534.htm (accessed on 11 February 2021). (In Chinese)

- The Central Committee of the Communist Party of China (CPC); The State Council. Comprehensive Rural Revitalization Plan (2024–2027) Report; The State Council of the People’s Republic of China: Beijing, China, 2025. Available online: https://www.gov.cn/zhengce/202501/content_7000493.htm (accessed on 2 March 2025). (In Chinese)

- Ministry of Transport of the People’s Republic of China. 2024 Statistical Bulletin of the Transportation Industry; Ministry of Transport: Beijing, China, 2024. Available online: https://xxgk.mot.gov.cn/2020/jigou/zhghs/202506/t20250610_4170228.html (accessed on 7 August 2025). (In Chinese)

- Ministry of Transport of the People’s Republic of China. 2014 Statistical Bulletin of the Transportation Industry; Ministry of Transport: Beijing, China, 2014. Available online: https://www.gov.cn/xinwen/2015-04/30/content_2855735.htm (accessed on 7 August 2025). (In Chinese)

- Cai, H.; Yuan, S.; Yang, K.; Wang, F.; Sheng, G. Method for Rural Road Verification and Its Application Based on Chinese High Resolution Remote Sensing Image. Bull. Surv. Mapp. 2020, 03, 91–95, (In Chinese with English Abstract). [Google Scholar] [CrossRef]

- He, H.; Fan, J.; Chen, W.; Zhou, Y.; Zhang, P.; Yu, X. Extraction of Shaded Roads in High-Resolution Remote Sensing Imagery based on Brightness Compensation. J. Geo-Inf. Sci. 2020, 22, 258–267, (In Chinese with English Abstract). [Google Scholar] [CrossRef]

- Mnih, V. Machine Learning for Aerial Image Labeling; University of Toronto: Toronto, ON, Canada, 2013. [Google Scholar]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Advances in Neural Information Processing Systems; Pereira, F., Burges, C.J., Bottou, L., Weinberger, K.Q., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2012; Volume 25. [Google Scholar]

- Paisitkriangkrai, S.; Sherrah, J.; Janney, P.; Van-Den Hengel, A. Effective semantic pixel labelling with convolutional networks and Conditional Random Fields. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Boston, MA, USA, 7–12 June 2015; pp. 36–43. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar]

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861. [Google Scholar] [CrossRef]

- Chollet, F. Xception: Deep Learning with Depthwise Separable Convolutions. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1800–1807. [Google Scholar]

- Aoki, Y.; Saito, S. Building and road detection from large aerial imagery. In Proceedings of the SPIE—Image Processing: Machine Vision Applications VIII, San Francisco, CA, USA, 27 February 2015; SPIE: Bellingham, WA, USA, 2015; Volume 9405, p. 94050K. [Google Scholar] [CrossRef]

- Shelhamer, E.; Long, J.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3431–3440. [Google Scholar]

- Sherrah, J. Fully Convolutional Networks for Dense Semantic Labelling of High-Resolution Aerial Imagery. arXiv 2016, arXiv:1606.02585.

- Noh, H.; Hong, S.; Han, B. Learning Deconvolution Network for Semantic Segmentation. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 1520–1528.

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015, Munich, Germany, 5–9 October 2015; Navab, N., Hornegger, J., Wells, W.M., Frangi, A.F., Eds.; Springer International Publishing: Cham, Switzerland, 2015; pp. 234–241.

- Chen, L.-C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848.

- Zhang, Z.; Liu, Q.; Wang, Y. Road Extraction by Deep Residual U-Net. IEEE Geosci. Remote Sens. Lett. 2018, 15, 749–753.

- Oktay, O.; Schlemper, J.; Le Folgoc, L.; Lee, M.J.; Heinrich, M.P.; Misawa, K.; Mori, K.; McDonagh, S.G.; Hammerla, N.Y.; Kainz, B.; et al. Attention U-Net: Learning Where to Look for the Pancreas. arXiv 2018, arXiv:1804.03999.

- Zhou, Z.; Rahman Siddiquee, M.M.; Tajbakhsh, N.; Liang, J. UNet++: A Nested U-Net Architecture for Medical Image Segmentation. In Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support; Stoyanov, D., Taylor, Z., Carneiro, G., Syeda-Mahmood, T., Martel, A., Maier-Hein, L., Tavares, J.M.R.S., Bradley, A., Papa, J.P., Belagiannis, V., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 3–11.

- Wang, R.; Pan, F.; An, Q.; Diao, Q.; Feng, X. Aerial Unstructured Road Segmentation Based on Deep Convolution Neural Network. In Proceedings of the 2019 Chinese Control Conference (CCC), Guangzhou, China, 27–30 July 2019; pp. 8494–8500.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is All you Need. In Advances in Neural Information Processing Systems; Guyon, I., Von Luxburg, U., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2017; Volume 30.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16 × 16 Words: Transformers for Image Recognition at Scale. arXiv 2020, arXiv:2010.11929.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer using Shifted Windows. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 9992–10002.

- Chen, J.; Lu, Y.; Yu, Q.; Luo, X.; Adeli, E.; Wang, Y.; Lu, L.; Yuille, A.L.; Zhou, Y. TransUNet: Transformers Make Strong Encoders for Medical Image Segmentation. arXiv 2021, arXiv:2102.04306.

- Cao, H.; Wang, Y.; Chen, J.; Jiang, D.; Zhang, X.; Tian, Q.; Wang, M. Swin-Unet: Unet-like Pure Transformer for Medical Image Segmentation. In Proceedings of the ECCV 2022 Workshops, Tel Aviv, Israel, 23–27 October 2022.

- Ge, C.; Nie, Y.; Kong, F.; Xu, X. Improving Road Extraction for Autonomous Driving Using Swin Transformer Unet. In Proceedings of the 2022 IEEE 25th International Conference on Intelligent Transportation Systems (ITSC), Macau, China, 8–12 October 2022; pp. 1216–1221.

- Sun, Y.; Gu, X.; Zhou, X.; Yang, J.; Shen, W.; Cheng, Y.; Zhang, J.M.; Chen, Y. DPIF-Net: A dual path network for rural road extraction based on the fusion of global and local information. PeerJ Comput. Sci. 2024, 10, e2079.

- Lyu, S.; Li, J.; A, X.; Yang, C.; Yang, R.; Shang, X. Res_ASPP_UNet++: Building an extraction network from remote sensing imagery combining depthwise separable convolution with atrous spatial pyramid pooling. Natl. Remote Sens. Bull. 2023, 27, 502–519. (In Chinese).

- Sloan, S.; Talkhani, R.R.; Huang, T.; Engert, J.; Laurance, W.F. Mapping Remote Roads Using Artificial Intelligence and Satellite Imagery. Remote Sens. 2024, 16, 839.

- Yang, N.; Di, W.; Wang, Q.; Liu, W.; Feng, T.; Tian, X. Rural Road Extraction in Xiong’an New Area of China Based on the RC-MSFNet Network Model. Sensors 2024, 24, 6672.

- Chen, Z.; Yuan, F.; Zhang, J.; Shen, S.; Li, X.; Li, X.; Huang, M.; Jowitt, S.M. Paleomagnetic evidence for the Gothenburg geomagnetic excursion during the Pleistocene–Holocene transition recorded in the Paleo-Danyang Lake, eastern China. J. Asian Earth Sci. 2020, 201, 104140.

- Hu, X.; Wu, L.; Zhuang, Y.; Wang, X.; Ma, C.; Li, L.; Guan, H.; Lu, S.; Luo, W.; Xu, Z. Evolution of the historical polder landscape in the ancient Danyang wetland, lower Yangtze River, China, during the last 3000 years. J. Geogr. Sci. 2024, 34, 2053–2073.

- Li, M.; Rui, Y.; Wang, C.X. Spatial Distribution and Influencing Factors of Traditional Villages: A Case Study of the Wuyue Cultural Region. Resour. Environ. Yangtze Basin 2018, 27, 1693–1702. Available online: https://yangtzebasin.whlib.ac.cn/CN/Y2018/V27/I08/1693 (accessed on 30 August 2025). (In Chinese).

- Dong, G.; Mou, X.; Zhang, H.; Li, R.; Wu, H.; Jiang, J.; Li, F.; Yu, W. Browsing target extraction and spatiotemporal preference mining from the complex virtual trajectories. Int. J. Appl. Earth Obs. Geoinf. 2024, 129, 103819.

- Kang, T. Administrative Village: Nationalization Governance of Rural Society. In Handbook of Essential Keywords for Understanding Rural China; Jinhai, L., Ed.; Springer Nature: Singapore, 2024; pp. 83–101.

- Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Zhang, H.; Wu, R.; Chen, H.; Cui, W.; Wu, J.; Yang, J. Symbiosis of the “Old” and the “New” in the Renovation of Existing Rural Buildings: Taking the Practice of Residential Renovation in Gaogang Village, Nanjing City as an Example. Urban Des. 2024, 2024, 54–61. Available online: https://d.wanfangdata.com.cn/periodical/qk_f3eb0e481c6441a6a4e4642f4aebfe4a (accessed on 30 August 2025). (In Chinese with English Abstract).

- Yuan, M.; Zhang, H. The Practical Case of Gaogang in Rural Revitalization. World Archit. 2021, 43–47+127. (In Chinese with English Abstract).

- Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2999–3007.

- Salehi, S.S.M.; Erdogmus, D.; Gholipour, A. Tversky Loss Function for Image Segmentation Using 3D Fully Convolutional Deep Networks. Lect. Notes Comput. Sci. 2017, 10541, 379–387.

- Li, X.; Sun, X.; Meng, Y.; Liang, J.; Wu, F.; Li, J. Dice Loss for Data-imbalanced NLP Tasks. arXiv 2019, arXiv:1911.02855.

- Loshchilov, I.; Hutter, F. Fixing Weight Decay Regularization in Adam. arXiv 2017, arXiv:1711.05101.

- Loshchilov, I.; Hutter, F. Decoupled Weight Decay Regularization. In Proceedings of the 7th International Conference on Learning Representations, ICLR 2019, New Orleans, LA, USA, 6–9 May 2019.

- Goyal, P.; Dollár, P.; Girshick, R.B.; Noordhuis, P.; Wesolowski, L.; Kyrola, A.; Tulloch, A.; Jia, Y.; He, K. Accurate, Large Minibatch SGD: Training ImageNet in 1 Hour. arXiv 2017, arXiv:1706.02677.

- Loshchilov, I.; Hutter, F. SGDR: Stochastic Gradient Descent with Warm Restarts. arXiv 2016, arXiv:1608.03983.

- Huang, G.; Li, Y.; Pleiss, G.; Liu, Z.; Hopcroft, J.E.; Weinberger, K.Q. Snapshot Ensembles: Train 1, get M for free. arXiv 2017, arXiv:1704.00109.

- Hochreiter, S.; Schmidhuber, J. Flat Minima. Neural Comput. 1997, 9, 1–42.

- Zhou, L.; Zhang, C.; Wu, M. D-LinkNet: LinkNet with Pretrained Encoder and Dilated Convolution for High Resolution Satellite Imagery Road Extraction. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018; pp. 192–1924.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).