Corn Land Extraction Based on Integrating Optical and SAR Remote Sensing Images

Abstract

1. Introduction

2. Materials and Methods

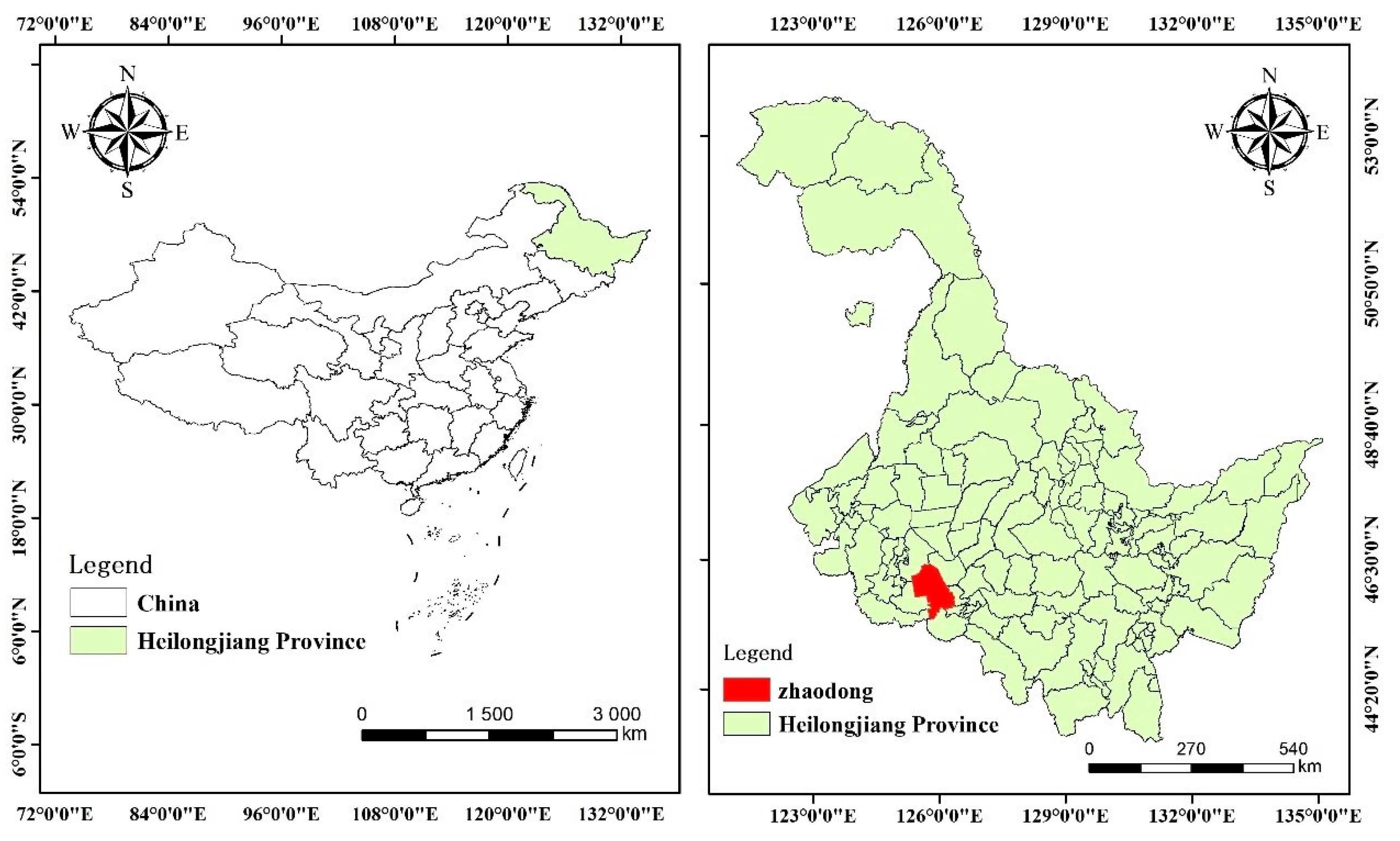

2.1. Study Area

2.2. Data Preparation

2.2.1. Remote Sensing Data Acquisition

2.2.2. Ground Data Acquisition

2.2.3. Remote Sensing Data Preprocessing

2.3. Research Methods

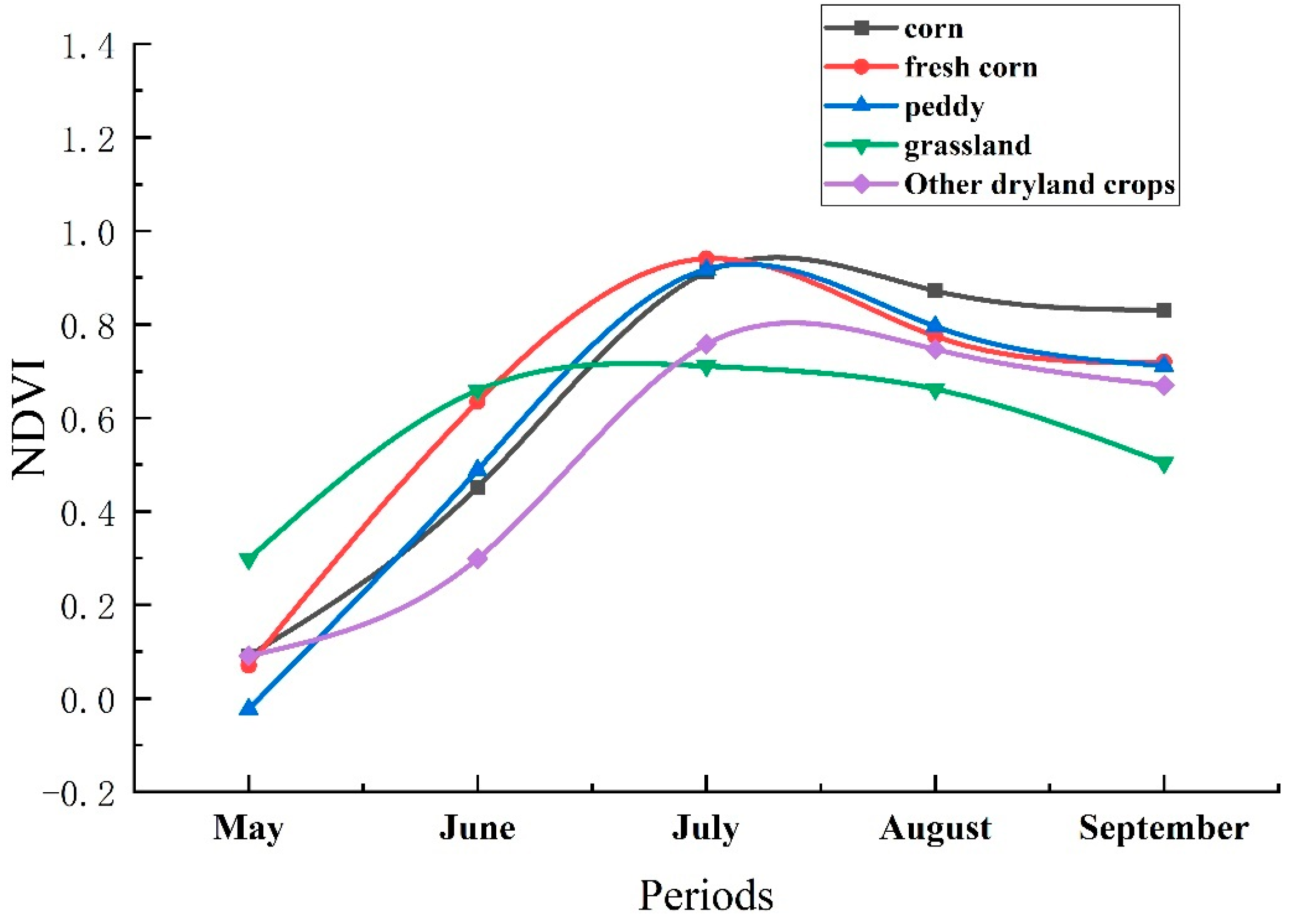

2.3.1. Vegetation Index

2.3.2. SAR Polarization Feature

2.3.3. Classification Methods

2.3.4. Classification Scene Design

2.4. Accuracy Evaluation

3. Results

3.1. Corn Field Extraction Accuracy in Different Time Series of GF-6/GF-3 Images

3.2. Influence of SAR Images on Corn Field Extraction Accuracy

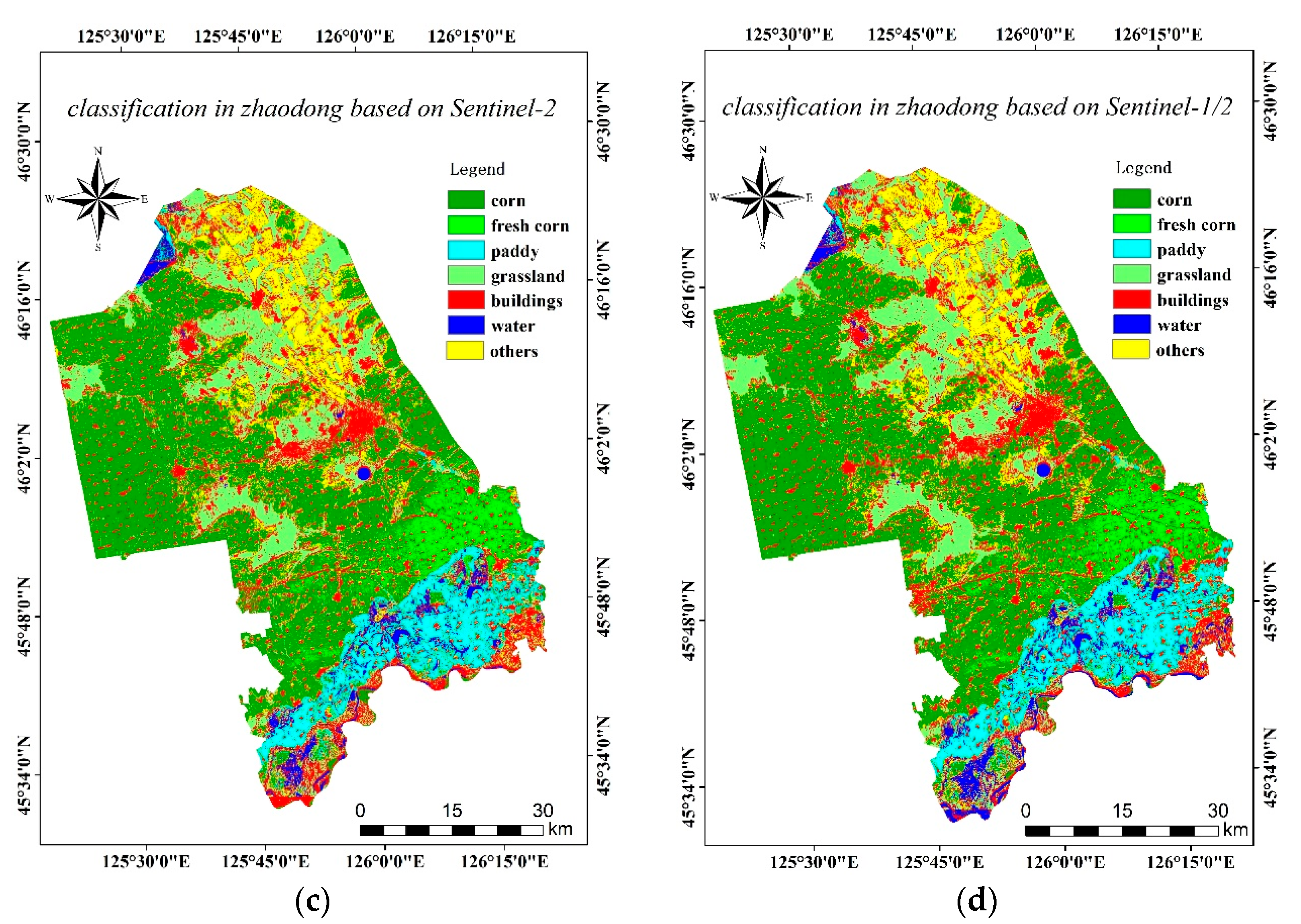

3.3. Comparison of Classification Accuracy between GF and Sentinel in Time Series Images Combined with Optical and SAR

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Wavelength Band | Spatial Resolution | Center Wavelength (nm) |
|---|---|---|
| B2 (Blue) | 10 m | 490 |
| B3 (Green) | 10 m | 560 |
| B4 (Red) | 10 m | 665 |
| B8 (NIR) | 10 m | 842 |
| B5 (Red edge) | 20 m | 705 |
| B6 (Red edge) | 20 m | 740 |
| B7 (Edge of the NIR plateau) | 20 m | 783 |
| B8a (Narrow NIR) | 20 m | 865 |
| B11 (SWIR) | 20 m | 1610 |
| B12 (SWIR) | 20 m | 2190 |
| B1 (Coastal aerosol) | 60 m | 443 |
| B9 (Water vapor) | 60 m | 945 |

| | May | June | July | August | September |
|---|---|---|---|---|---|
| Corn growth period | Seedling stage | Jointing stage | Heading stage | Milk ripening stage | Maturation stage |
| GF images | GF-6 | GF-3 | GF-6 | GF-6 | GF-6 |
| Sentinel images | Sentinel-2 | Sentinel-2 | Sentinel-1 | Sentinel-1 | Sentinel-2 |

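| Index Name | Formula |
|---|---|
| Normalized Difference Vegetation Index (NDVI) | $\mathrm{NDVI} = \frac{\rho_{NIR} - \rho_{R}}{\rho_{NIR} + \rho_{R}}$ |
| Ratio Vegetation Index (RVI) | $\mathrm{RVI} = \frac{\rho_{NIR}}{\rho_{R}}$ |
| Difference Vegetation Index (DVI) | $\mathrm{DVI} = \rho_{NIR} - \rho_{R}$ |
| Chlorophyll Index (CIgreen) | $\mathrm{CI}_{green} = \frac{\rho_{NIR}}{\rho_{G}} - 1$ |

The formulas follow the standard definitions of these indices; $\rho_{NIR}$, $\rho_{R}$, and $\rho_{G}$ denote reflectance in the near-infrared, red, and green bands.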
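
The four indices in the table above can be computed per pixel from the near-infrared, red, and green reflectances. The following is a minimal sketch that assumes the standard band-ratio definitions of NDVI, RVI, DVI, and CIgreen; the function names, the zero-denominator handling, and the sample reflectances are illustrative assumptions and are not taken from the article.

```python
import math


def _safe_div(numerator, denominator):
    """Divide two floats, returning NaN when the denominator is zero."""
    return numerator / denominator if denominator != 0 else math.nan


def vegetation_indices(nir, red, green):
    """Compute the four indices for one pixel from band reflectances.

    nir, red, green: surface reflectance values (hypothetical inputs,
    typically in the range 0-1).
    """
    return {
        "NDVI": _safe_div(nir - red, nir + red),   # (NIR - R) / (NIR + R)
        "RVI": _safe_div(nir, red),                # NIR / R
        "DVI": nir - red,                          # NIR - R
        "CIgreen": _safe_div(nir, green) - 1.0,    # NIR / G - 1
    }


# Illustrative reflectances for a vegetated pixel (made-up values).
indices = vegetation_indices(nir=0.5, red=0.1, green=0.2)
for name, value in indices.items():
    print(f"{name}: {value:.4f}")
```

In a time-series scene, the same function would be applied to each acquisition month and the resulting values stacked as classifier features.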

| Scene | Feature Combination | Data | Algorithm |
|---|---|---|---|
| S1 | May + spectral features (NDVI, RVI, DVI, CIgreen) | GF-6 | RF |
| S2 | July + spectral features (NDVI, RVI, DVI, CIgreen) | GF-6 | RF |
| S3 | August + spectral features (NDVI, RVI, DVI, CIgreen) | GF-6 | RF |
| S4 | September + spectral features (NDVI, RVI, DVI, CIgreen) | GF-6 | RF |
| S5 | May, June, July + spectral features (NDVI, RVI, DVI, CIgreen) + HH, HV | GF-6/GF-3 | RF |
| S6 | July, August, September + spectral features (NDVI, RVI, DVI, CIgreen) | GF-6 | RF |
| S7 | May, July, August, September + spectral features (NDVI, RVI, DVI, CIgreen) | GF-6 | RF |
| S8 | May, June, July, August, September + spectral features (NDVI, RVI, DVI, CIgreen) + HH, HV | GF-6/GF-3 | RF |
| S9 | May, June, July + spectral features (NDVI, RVI, DVI, CIgreen) + VV, VH | Sentinel-1/2 | RF |
| S10 | July, August, September + spectral features (NDVI, RVI, DVI, CIgreen) + VV, VH | Sentinel-1/2 | RF |
| S11 | May, June, September + spectral features (NDVI, RVI, DVI, CIgreen) | Sentinel-1 | RF |
| S12 | May, June, July, August, September + spectral features (NDVI, RVI, DVI, CIgreen) + VV, VH | Sentinel-1/2 | RF |

| Scene | Months | Data | Overall Accuracy (OA)/% | Kappa | Corn PA/% | Corn UA/% |
|---|---|---|---|---|---|---|
| S1 | 5 | GF-6 | 78.61 | 0.7251 | 55.42 | 52.68 |
| S2 | 7 | GF-6 | 86.01 | 0.8210 | 82.40 | 89.74 |
| S3 | 8 | GF-6 | 89.16 | 0.8599 | 86.10 | 91.72 |
| S4 | 9 | GF-6 | 86.95 | 0.8312 | 81.95 | 93.05 |
| S5 | 5,6,7 | GF-6/3 | 91.10 | 0.8853 | 86.83 | 85.86 |
| S6 | 7,8,9 | GF-6 | 89.54 | 0.8654 | 89.26 | 93.07 |
| S7 | 5,7,8,9 | GF-6 | 93.09 | 0.9108 | 85.23 | 92.69 |
| S8 | 5,6,7,8,9 | GF-6/3 | 93.37 | 0.9143 | 89.39 | 91.97 |

| Scene | Months | Data | Overall Accuracy (OA)/% | Kappa | Corn PA/% | Corn UA/% |
|---|---|---|---|---|---|---|
| S5 | 5,6,7 | GF-6/3 | 90.58 | 0.8786 | 84.66 | 85.58 |
| S6 | 7,8,9 | GF-6 | 89.54 | 0.8654 | 89.26 | 93.07 |
| S8 | 5,6,7,8,9 | GF-6/3 | 93.37 | 0.9143 | 89.39 | 91.97 |
| S9 | 5,6,7 | Sentinel-2/1 | 83.79 | 0.7931 | 75.69 | 79.58 |
| S10 | 7,8,9 | Sentinel-2/1 | 87.44 | 0.8373 | 86.82 | 87.08 |
| S12 | 5,6,7,8,9 | Sentinel-2/1 | 88.32 | 0.8505 | 86.49 | 89.87 |
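
The overall accuracy (OA), Kappa coefficient, producer's accuracy (PA), and user's accuracy (UA) reported in the tables above are conventionally derived from a confusion matrix. The sketch below illustrates that computation under stated assumptions: rows are taken as reference classes and columns as predicted classes, the function name is hypothetical, and the two-class matrix is made-up data rather than the article's validation samples. It also assumes every class has at least one reference and one predicted sample, so no denominator is zero.

```python
def accuracy_metrics(confusion, target=0):
    """Compute OA, kappa, and PA/UA of one class from a confusion matrix.

    confusion[i][j] counts samples whose reference class is i and whose
    predicted class is j (this row/column convention is an assumption).
    """
    n_classes = len(confusion)
    total = sum(sum(row) for row in confusion)
    row_sums = [sum(row) for row in confusion]
    col_sums = [sum(confusion[i][j] for i in range(n_classes))
                for j in range(n_classes)]
    correct = sum(confusion[k][k] for k in range(n_classes))

    overall = correct / total                       # observed agreement
    expected = sum(r * c for r, c in zip(row_sums, col_sums)) / (total * total)
    kappa = (overall - expected) / (1.0 - expected)  # chance-corrected agreement
    producers = confusion[target][target] / row_sums[target]  # omission side
    users = confusion[target][target] / col_sums[target]      # commission side
    return {"OA": overall, "Kappa": kappa, "PA": producers, "UA": users}


# Made-up two-class example: class 0 = corn, class 1 = everything else.
example = [[50, 10],
           [5, 35]]
metrics = accuracy_metrics(example, target=0)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

The function returns fractions; multiplying OA, PA, and UA by 100 gives the percentage form used in the tables.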


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Meng, H.; Li, C.; Liu, Y.; Gong, Y.; He, W.; Zou, M. Corn Land Extraction Based on Integrating Optical and SAR Remote Sensing Images. Land 2023, 12, 398. https://doi.org/10.3390/land12020398
