A Method for Improving the Efficiency and Effectiveness of Automatic Image Analysis of Water Pipes

Abstract

1. Introduction

2. Methods

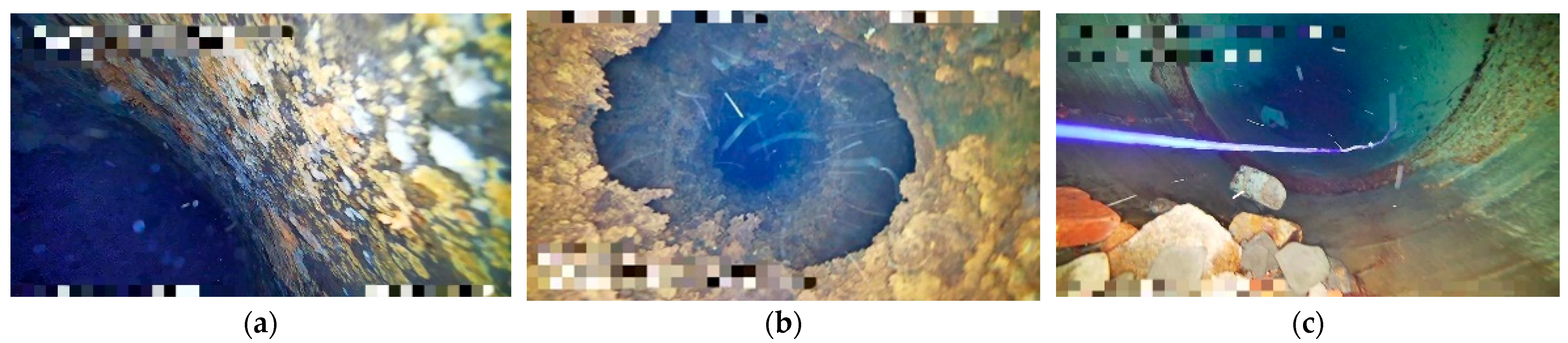

2.1. Data Collection

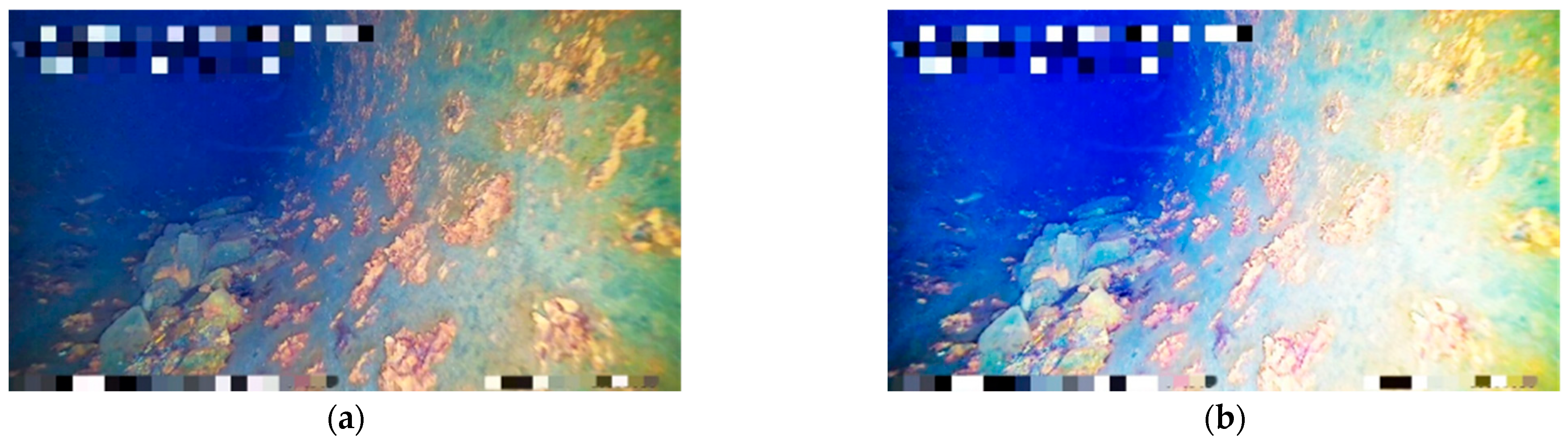

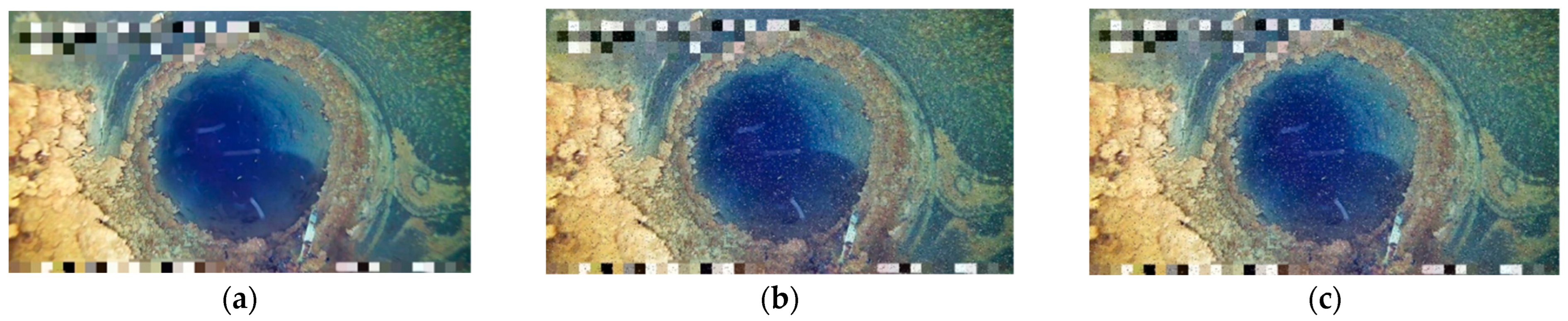

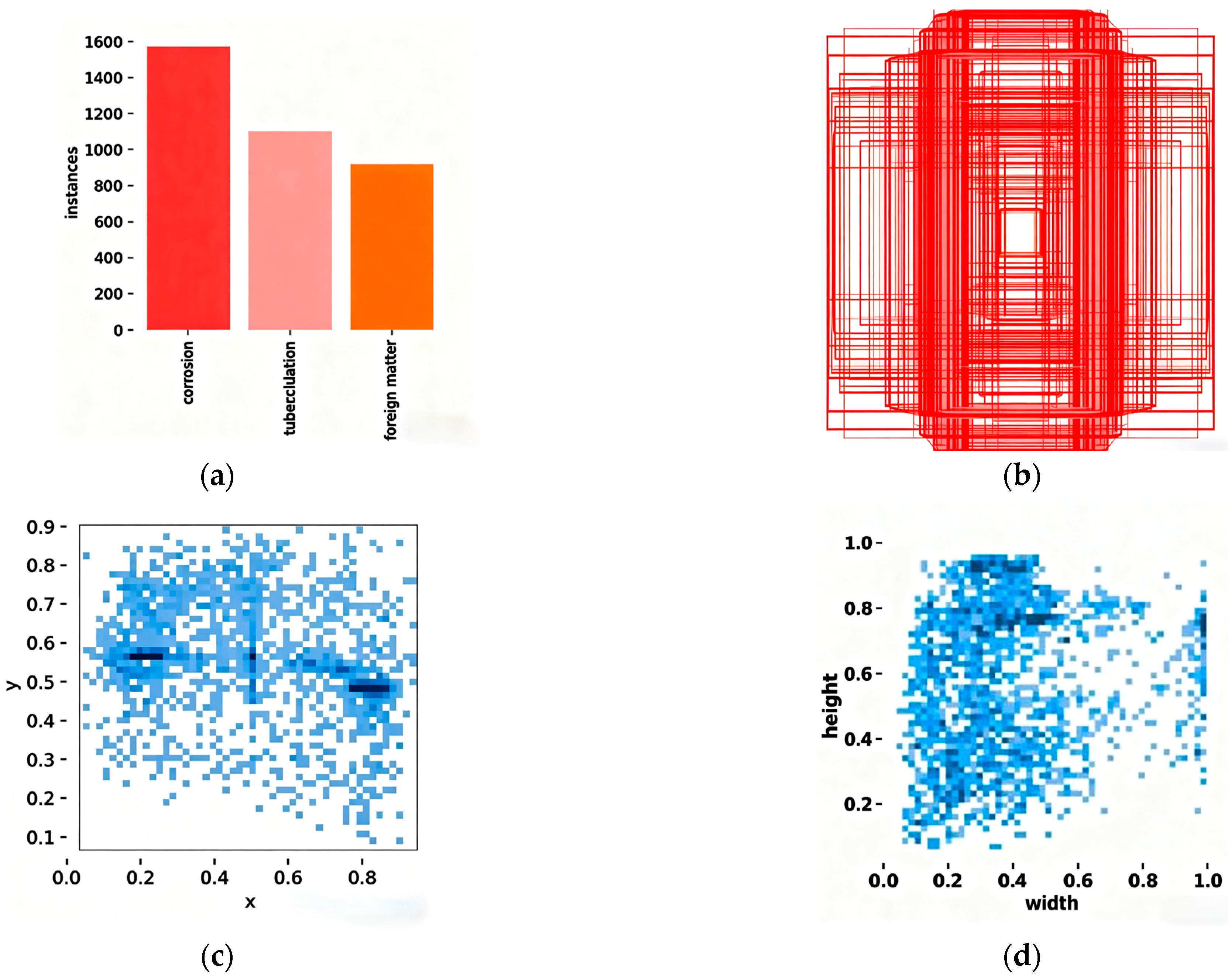

2.2. Image Enhancement and Annotation

2.2.1. Image Enhancement

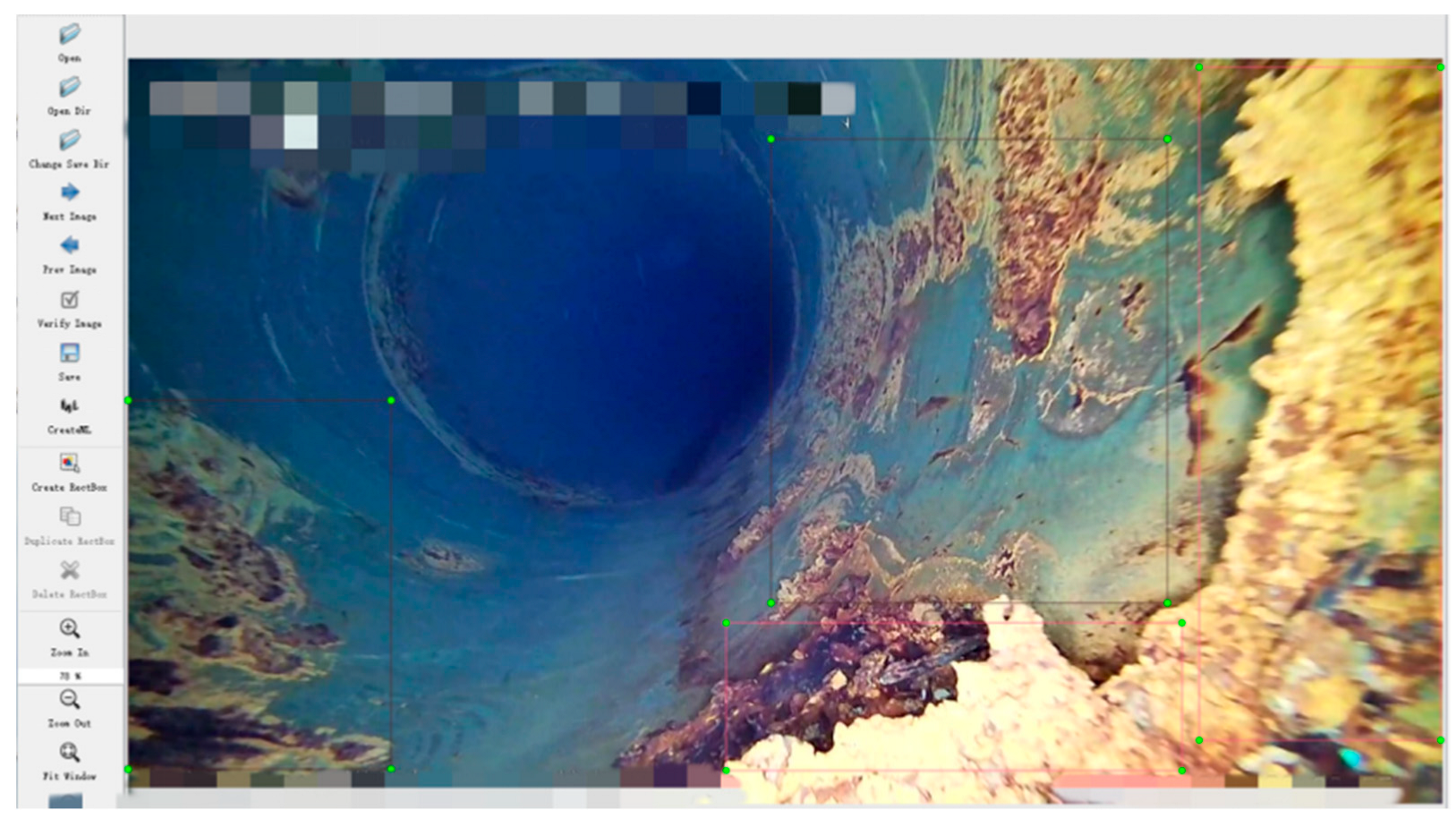

2.2.2. Dataset Partitioning and Labeling

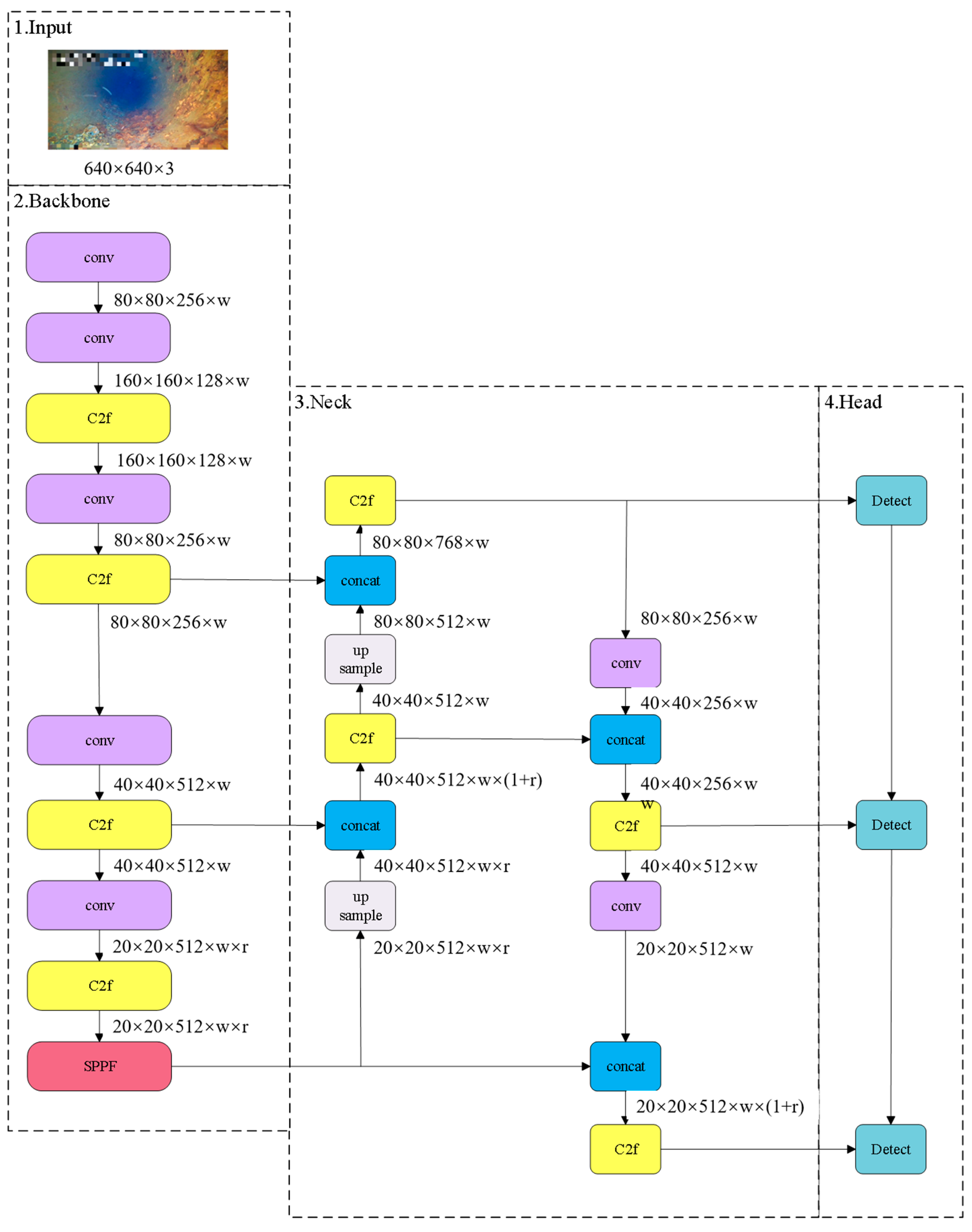

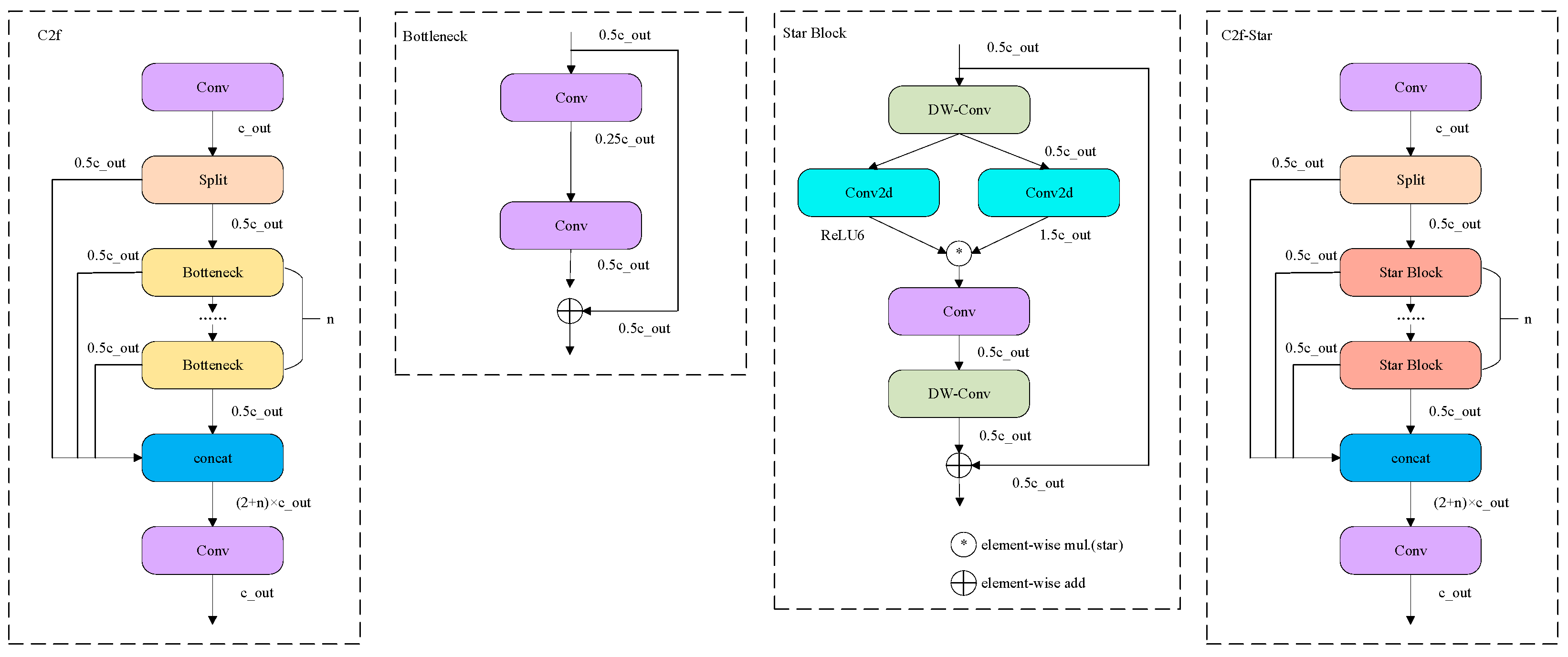

2.3. YOLOv8 Architecture

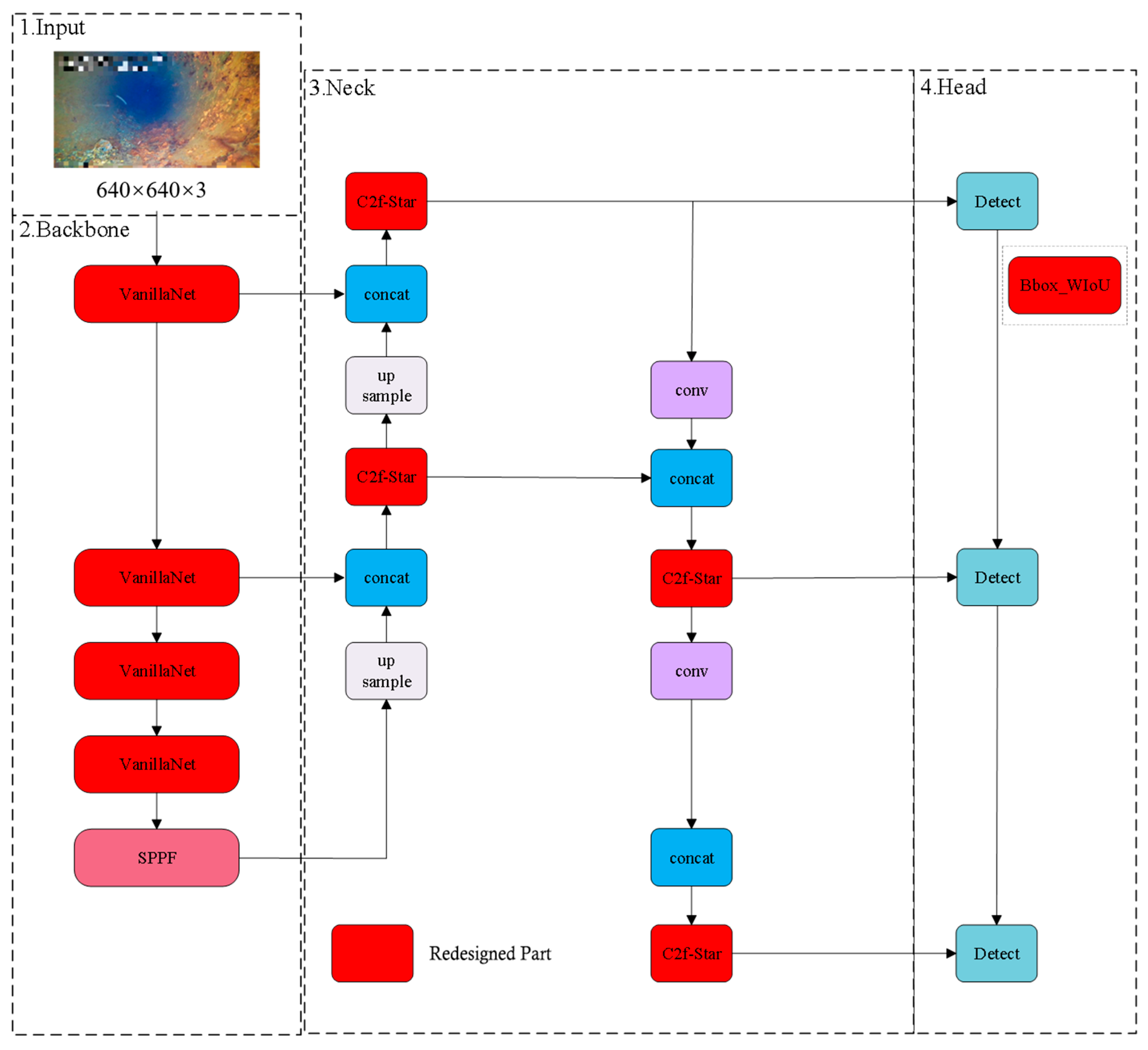

2.4. Improved YOLOv8 Model

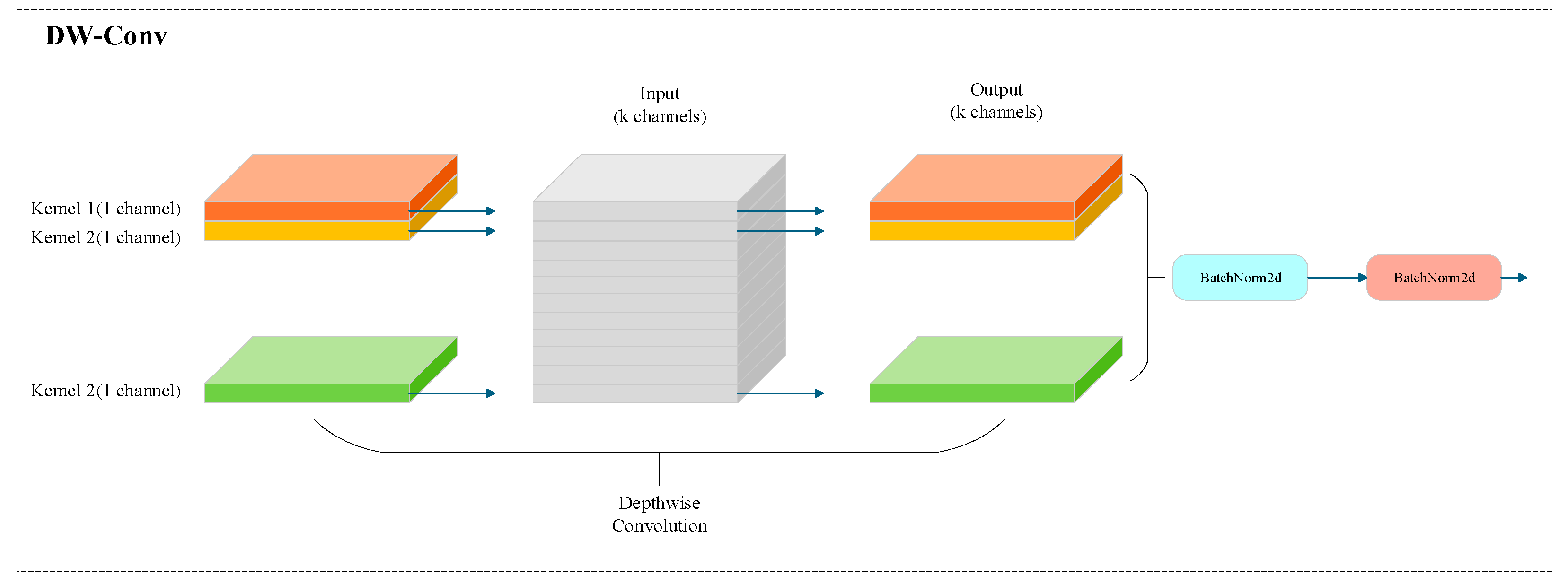

2.4.1. Lightweight Improvement Based on VanillaNet

2.4.2. Neck Improvement Based on C2f-Star

2.4.3. WIoU-Based Loss Function Improvement

2.5. Test Setup

2.6. Model Evaluation Method

3. Results

3.1. Evaluation of Data Augmentation Experiment Results

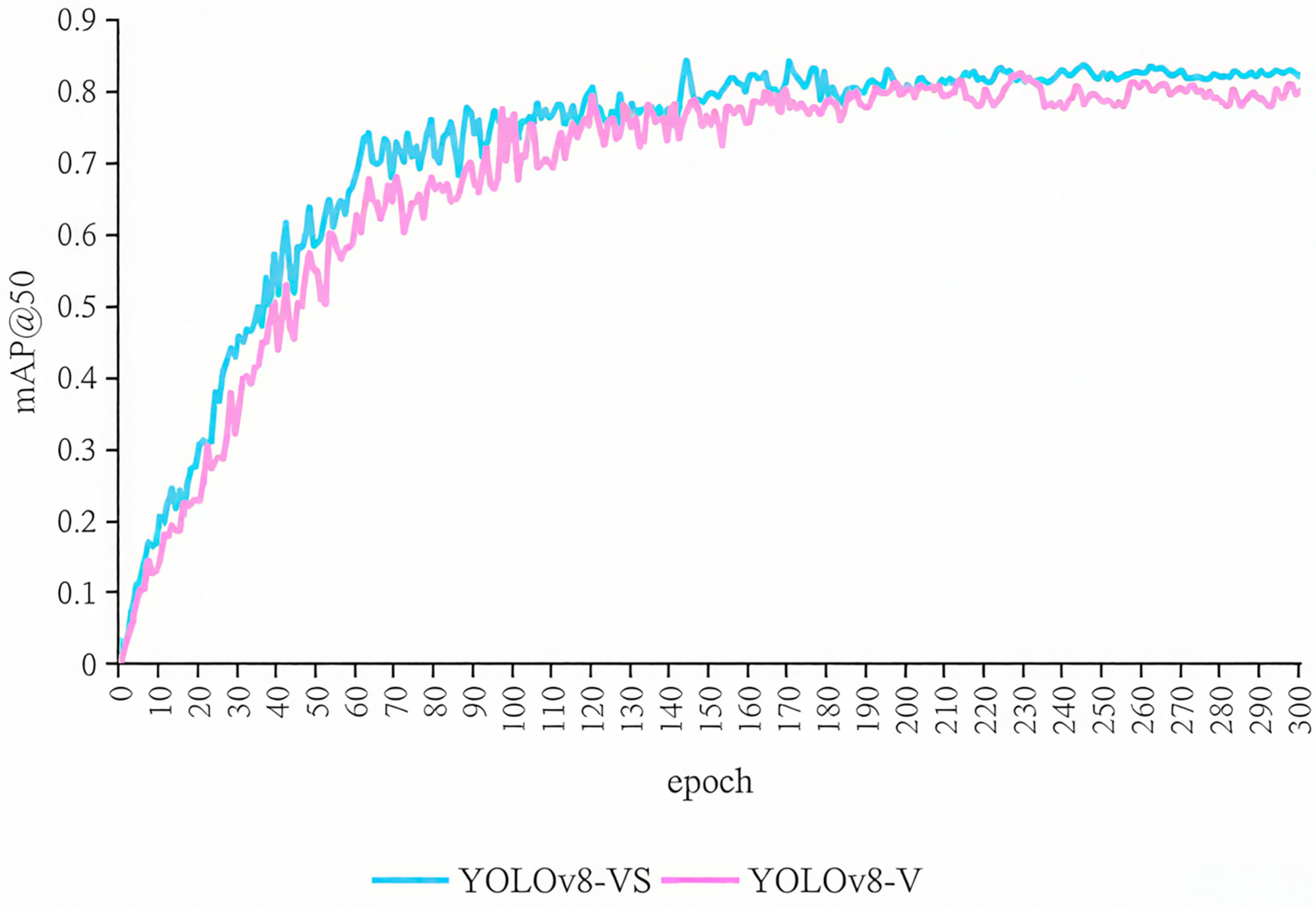

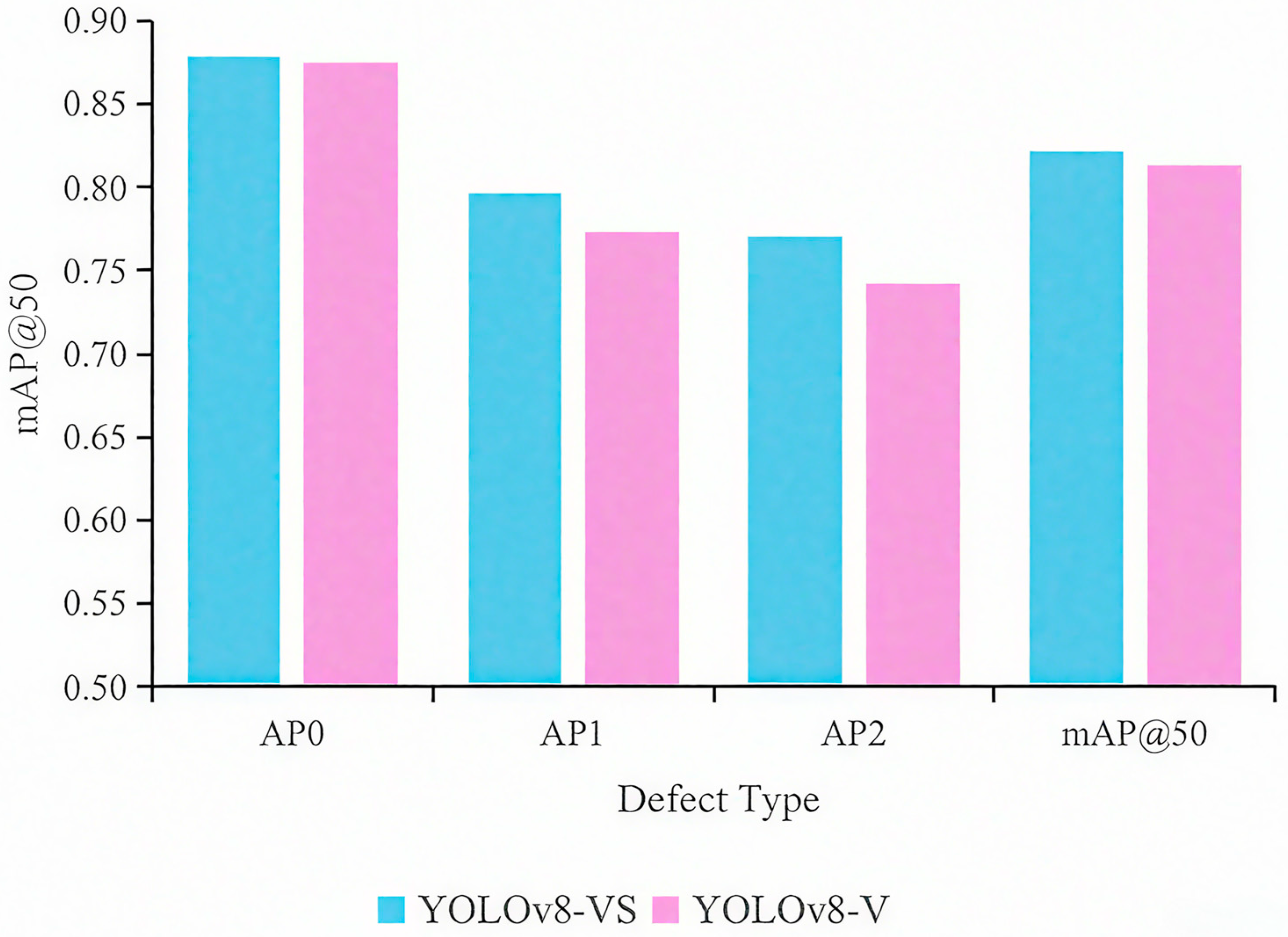

3.2. Test Results of the Lightweight Improvement Based on VanillaNet

3.3. Neck Improvement Test Results Based on C2f-Star

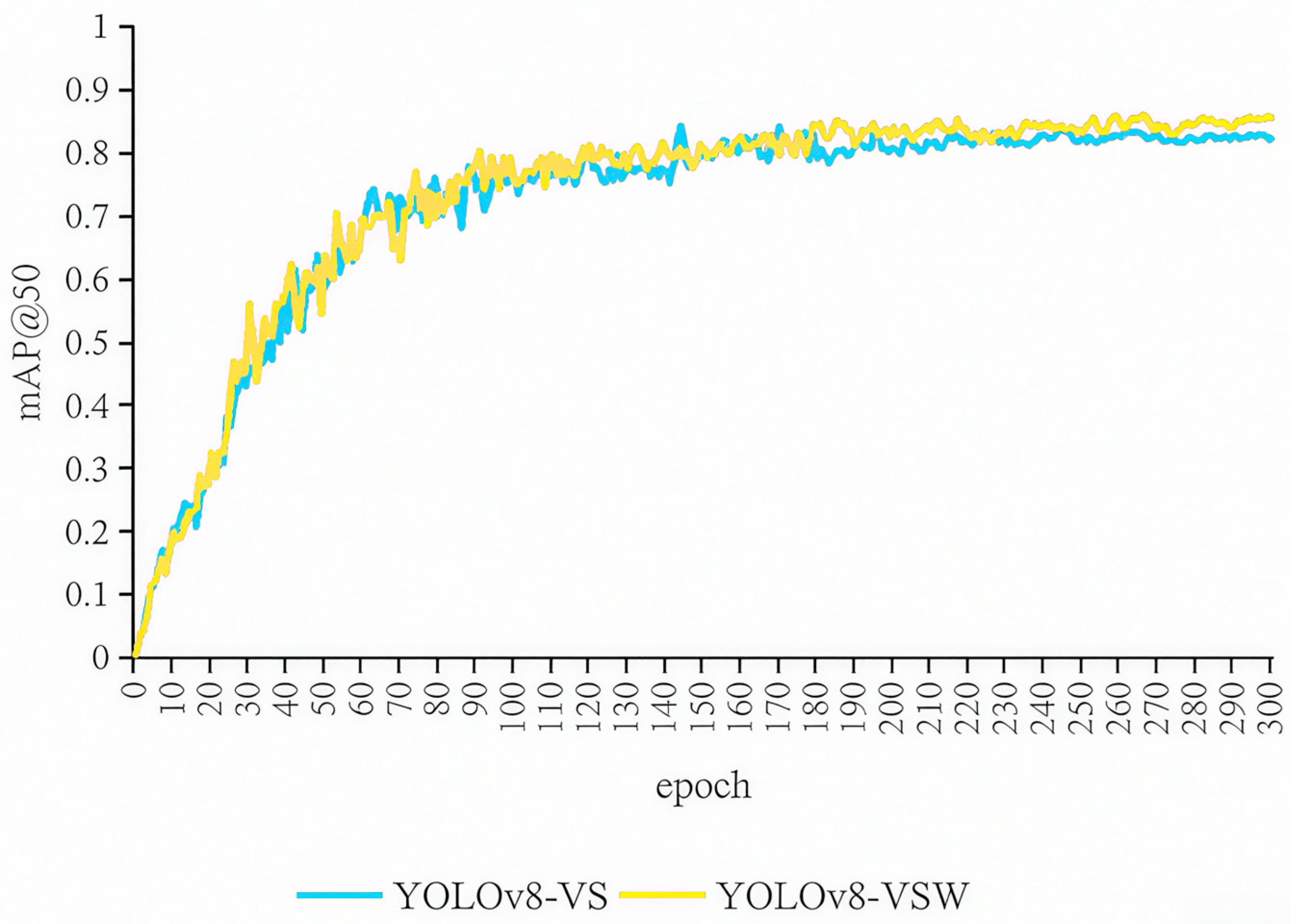

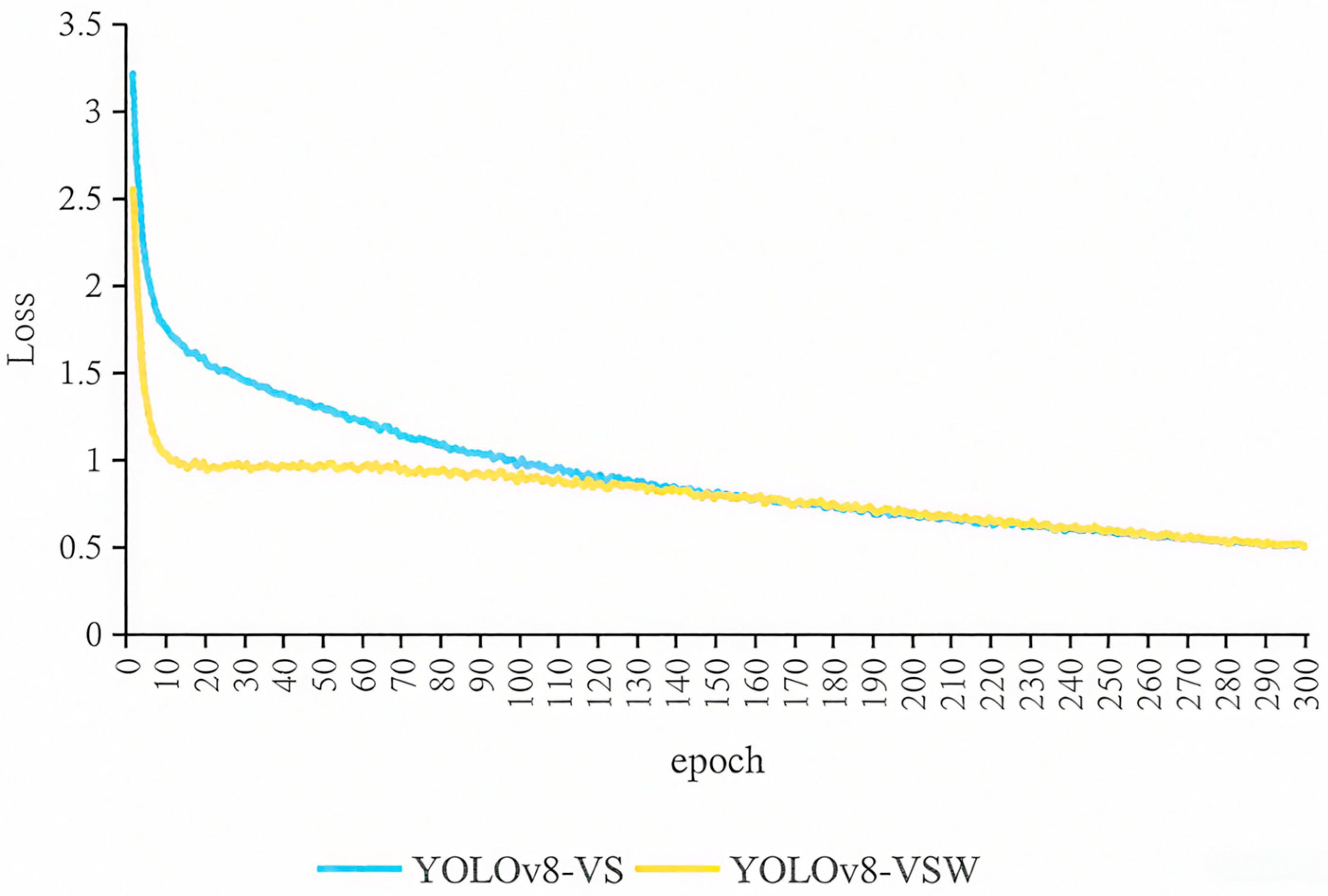

3.4. Experimental Results of Loss Function Improvement Based on WIoU

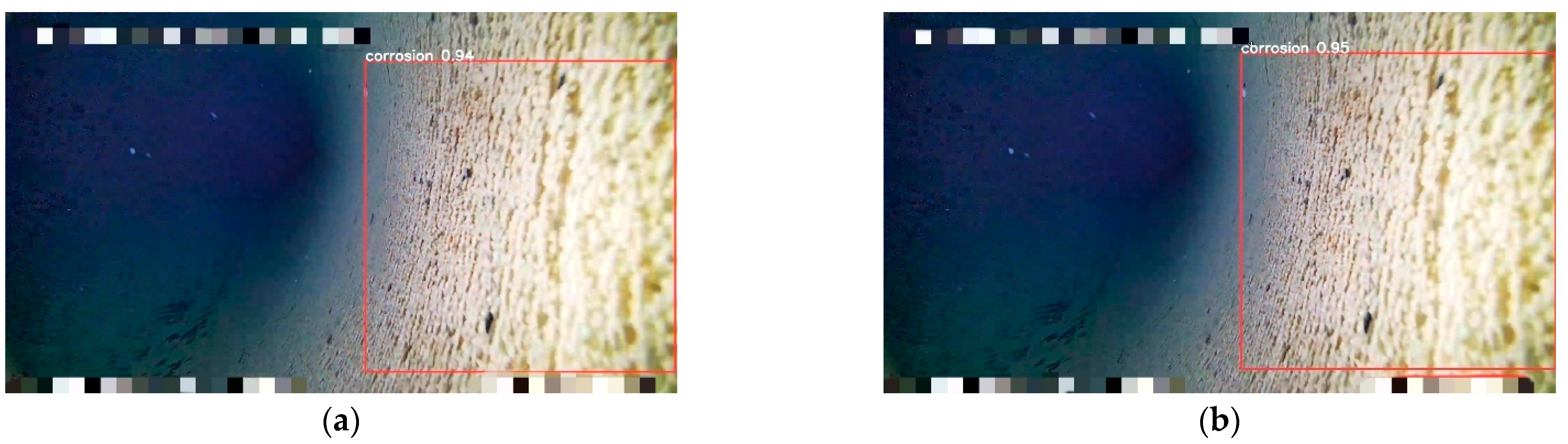

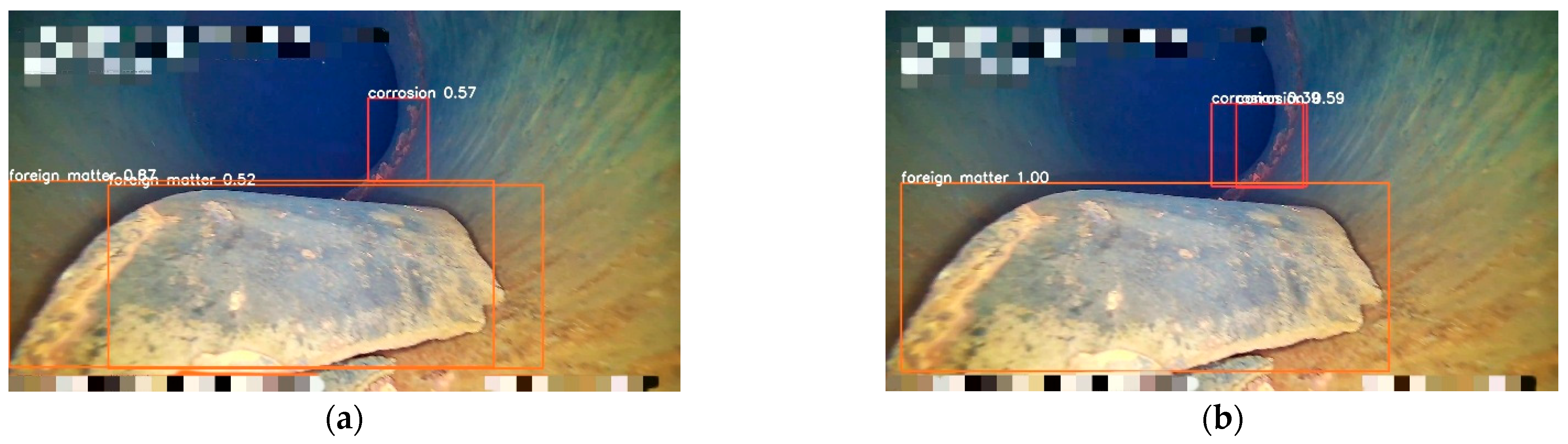

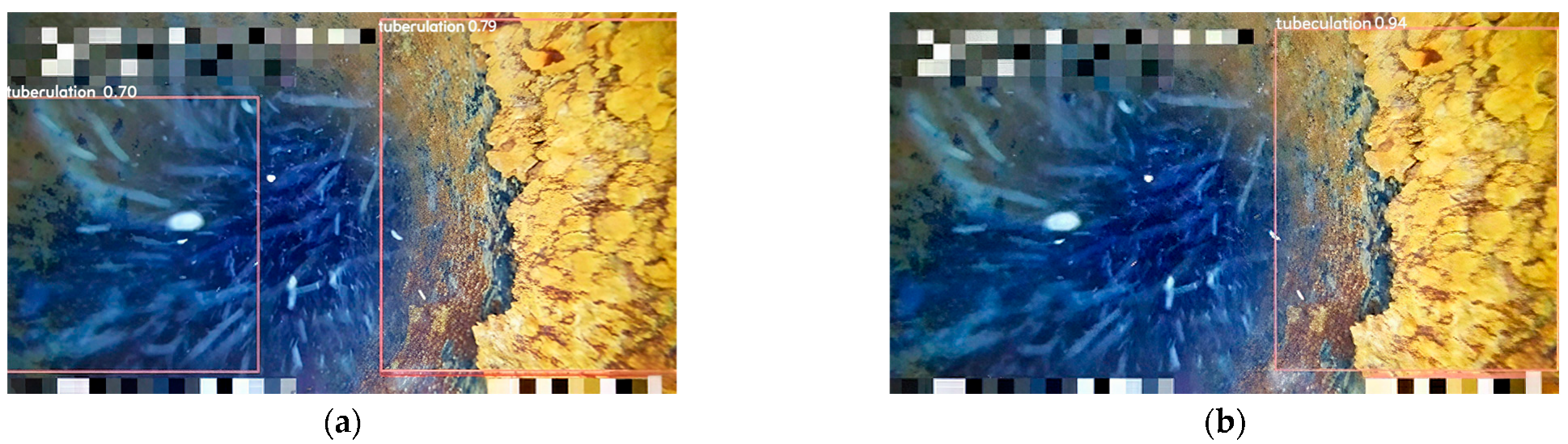

4. Case Verification and Discussion

4.1. Case Verification

4.2. Discussion

5. Conclusions

- (1) A specialized dataset of defects in in-service water supply pipelines was constructed. A combined data augmentation strategy, comprising photometric transformations, affine transformations, and noise injection, was shown to mitigate the data scarcity and class imbalance inherent in this domain.

- (2) Qualitative analysis revealed the typical failure modes of the baseline YOLOv8 model in this context: a tendency toward false-positive (FP) errors on complex backgrounds, and localization inaccuracies such as redundant bounding boxes for a single target. This shows that general-purpose object detection models struggle to adapt to the challenging environment inside a pipeline without targeted modifications.

- (3) The proposed YOLOv8-VSW architecture enhances the model's information processing on three levels: the VanillaNet backbone simplifies feature extraction to focus on key defect patterns; the C2f-Star neck improves multi-scale feature fusion through high-dimensional implicit space interaction, boosting accuracy for irregular targets such as tuberculation; and the WIoUv3 loss function dynamically adjusts gradients according to sample quality, improving model performance and training stability.

- (4) Ablation studies quantified the impact of each module. Their contributions to the mAP@50 improvement rank as follows: VanillaNet > WIoUv3 > C2f-Star. Backbone replacement provided the largest gain, 1.8 percentage points, suggesting that an efficient, task-specific top-level design is critical for baseline model performance.

- (5) The final YOLOv8-VSW model achieved an mAP@50 of 83.5% on the test set while reducing the parameter count by 38.7% relative to the baseline. This result confirms that accuracy and efficiency were optimized together, meeting the requirements for real-time, automated inspection.
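
The statement in conclusion (3) that WIoUv3 adjusts gradients according to sample quality can be made concrete with a small scalar sketch. It follows the commonly published form of Wise-IoU v3: an IoU loss scaled by a center-distance term and by a non-monotonic focusing coefficient computed from an "outlier degree". The function names, the `(x1, y1, x2, y2)` box layout, and the values `alpha=1.9` and `delta=3.0` are assumptions made for illustration; the source does not state which settings the authors used.

```python
import math


def iou_xyxy(a, b):
    """IoU of two valid boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


def wiou_v3(pred, target, mean_iou_loss, alpha=1.9, delta=3.0):
    """Scalar Wise-IoU v3 loss for one predicted/target box pair.

    mean_iou_loss is the running mean of the plain IoU loss over recent
    samples (maintained by the caller). alpha and delta are assumed values.
    In a real training loop the enclosing-box size and the outlier degree
    are treated as constants (detached from the gradient).
    """
    l_iou = 1.0 - iou_xyxy(pred, target)

    # Width and height of the smallest box enclosing both boxes.
    wg = max(pred[2], target[2]) - min(pred[0], target[0])
    hg = max(pred[3], target[3]) - min(pred[1], target[1])

    # Offset between the two box centers.
    dx = (pred[0] + pred[2]) / 2 - (target[0] + target[2]) / 2
    dy = (pred[1] + pred[3]) / 2 - (target[1] + target[3]) / 2

    # Distance-based attention term (WIoU v1).
    r_wiou = math.exp((dx * dx + dy * dy) / (wg * wg + hg * hg))

    # Outlier degree: how poor this sample is relative to the running mean.
    beta = l_iou / mean_iou_loss

    # Non-monotonic focusing coefficient: small for very good and very poor
    # samples, largest for samples of intermediate quality.
    r = beta / (delta * alpha ** (beta - delta))
    return r * r_wiou * l_iou


if __name__ == "__main__":
    pred, target = (0.0, 0.0, 2.0, 2.0), (1.0, 0.0, 3.0, 2.0)
    for mean_loss in (0.2, 0.5, 0.9):
        print(mean_loss, round(wiou_v3(pred, target, mean_loss), 4))
```

Because the coefficient `r` depends on the running mean of the IoU loss, the same box pair receives a different weight as training progresses, which is the dynamic behavior the conclusion refers to.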

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CCTV | closed-circuit television |
| GPR | ground penetrating radar |
| CV | computer vision |
| DL | deep learning |
| CNNs | convolutional neural networks |
| mAP | mean average precision |
| YOLO | You Only Look Once |
| CSP | cross-stage partial |
| SPPF | spatial pyramid pooling-fast |
| PANet | path aggregation network |
| DW-Conv | depth-wise separable convolutions |
| CIoU | Complete-IoU |
| WIoU | Wise-IoU |
| GFLOPs | gigaFLOPs |
| FPS | frames per second |
| AP | average precision |
| P-R | precision-recall |
| FLOPs | floating-point operations (measure of computational complexity) |


| Water Supply Pipeline Defect Category | Number of Images | Corresponding Label |
|---|---|---|
| corrosion | 1184 | 0 |
| tuberculation | 794 | 1 |
| foreign matter | 144 | 2 |

| Class ID | Center_x | Center_y | Width | Height |
|---|---|---|---|---|
| 0 | 0.906611 | 0.462500 | 0.183631 | 0.902778 |
| 0 | 0.628279 | 0.855556 | 0.346800 | 0.198148 |
| 1 | 0.639822 | 0.418519 | 0.301679 | 0.622222 |
| 1 | 0.100210 | 0.705093 | 0.199370 | 0.495370 |
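
The label rows above store each box as a class ID followed by a center point and a size, all normalized to the image dimensions. The sketch below shows how one such row could be converted to pixel corner coordinates. The function name and the 1920 × 1080 image size are hypothetical (the source does not state the frame resolution); the class names come from the defect category table.

```python
# Class IDs as listed in the defect category table.
CLASS_NAMES = {0: "corrosion", 1: "tuberculation", 2: "foreign matter"}


def yolo_to_pixel_box(label_line, img_w, img_h):
    """Convert 'class cx cy w h' (normalized) to (class_id, (x1, y1, x2, y2)) in pixels."""
    parts = label_line.split()
    class_id = int(parts[0])
    cx, cy, w, h = (float(v) for v in parts[1:5])
    x1 = (cx - w / 2) * img_w
    y1 = (cy - h / 2) * img_h
    x2 = (cx + w / 2) * img_w
    y2 = (cy + h / 2) * img_h
    return class_id, (x1, y1, x2, y2)


if __name__ == "__main__":
    # First row of the label table; the image size is an assumed example value.
    line = "0 0.906611 0.462500 0.183631 0.902778"
    class_id, box = yolo_to_pixel_box(line, img_w=1920, img_h=1080)
    print(CLASS_NAMES[class_id], [round(v, 1) for v in box])
```

Keeping the labels normalized means the same annotation file remains valid when frames are resized to the network input size.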

| Configuration | Test Environment | Model Version |
|---|---|---|
| Hardware environment | CPU | Intel Core i9-14900KF |
| | GPU | NVIDIA RTX 4080 |
| | Video memory | 16 GB |
| | Memory | 64 GB |
| Software environment | Operating system | Windows 11 |
| | Programming language | Python 3.8.20 |
| | Development environment | PyCharm |
| | DL framework | PyTorch 2.4.1 |
| | CUDA version | 12.1 |

| Hyperparameter | Value |
|---|---|
| Input image size | 640 × 640 |
| Batch size | 32 |
| Initial learning rate | 0.01 |
| Optimizer | SGD |
| Weight decay | 0.0005 |
| Epochs | 300 |
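
For readers who want to reproduce a comparable run, the hyperparameters above can be collected into a single configuration. The sketch below uses the argument names of the Ultralytics training interface (`imgsz`, `batch`, `lr0`, `optimizer`, `weight_decay`, `epochs`); that the authors trained with Ultralytics is an assumption, and the file names in the commented call are hypothetical.

```python
# Hyperparameters copied from the table above, keyed by Ultralytics-style
# argument names (the choice of toolkit is an assumption, not stated in the source).
TRAIN_ARGS = {
    "imgsz": 640,            # input image size, 640 x 640
    "batch": 32,             # batch size
    "lr0": 0.01,             # initial learning rate
    "optimizer": "SGD",      # optimizer
    "weight_decay": 0.0005,  # weight decay term
    "epochs": 300,           # number of training epochs
}


def describe(args):
    """Return a one-line, human-readable summary of the training settings."""
    return ", ".join(f"{key}={value}" for key, value in args.items())


if __name__ == "__main__":
    print(describe(TRAIN_ARGS))
    # With the ultralytics package installed, a run could be started like this
    # (model and dataset file names are hypothetical placeholders):
    #   from ultralytics import YOLO
    #   YOLO("yolov8s.yaml").train(data="pipe_defects.yaml", **TRAIN_ARGS)
```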

| Image Enhancement | Corrosion | Tuberculation | Foreign Matter |
|---|---|---|---|
| Number of images before augmentation | 1122 | 732 | 82 |
| Number of images after augmentation | 1184 | 794 | 144 |
| mAP@50 before augmentation/% | 76.2 | 75.4 | 62.3 |
| mAP@50 after augmentation/% | 80.4 | 79.9 | 73.8 |
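
The per-class effect of augmentation is easier to read as differences. The short calculation below uses only the numbers in the table above; the variable and function names are illustrative. It shows that each class received the same number of additional images, so the relative growth is largest for the rarest class, which is also the class with the largest mAP@50 gain.

```python
CLASSES = ("corrosion", "tuberculation", "foreign matter")

# Values copied from the augmentation table above.
COUNT_BEFORE = {"corrosion": 1122, "tuberculation": 732, "foreign matter": 82}
COUNT_AFTER = {"corrosion": 1184, "tuberculation": 794, "foreign matter": 144}
MAP50_BEFORE = {"corrosion": 76.2, "tuberculation": 75.4, "foreign matter": 62.3}
MAP50_AFTER = {"corrosion": 80.4, "tuberculation": 79.9, "foreign matter": 73.8}


def augmentation_summary():
    """Per class: images added, relative growth (%), and mAP@50 gain (points)."""
    summary = {}
    for name in CLASSES:
        added = COUNT_AFTER[name] - COUNT_BEFORE[name]
        summary[name] = {
            "added": added,
            "growth_pct": round(100.0 * added / COUNT_BEFORE[name], 1),
            "map50_gain": round(MAP50_AFTER[name] - MAP50_BEFORE[name], 1),
        }
    return summary


if __name__ == "__main__":
    for name, row in augmentation_summary().items():
        print(name, row)
```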

| Model | Recall/% | mAP@50/% | mAP@50–95/% | Parameter Count/10⁶ | GFLOPs | FPS/Hz |
|---|---|---|---|---|---|---|
| YOLOv8 | 75.0 | 79.5 | 62.2 | 11.13 | 23.4 | 526.2 |
| YOLOv8-V | 74.2 | 81.3 | 65.2 | 6.54 | 13.5 | 611.4 |

| Model | Recall/% | mAP@50/% | mAP@50–95/% | Parameter Count/10⁶ | GFLOPs | FPS/Hz |
|---|---|---|---|---|---|---|
| YOLOv8 | 75.0 | 79.5 | 62.2 | 11.13 | 23.4 | 526.2 |
| YOLOv8-V | 74.2 | 81.3 | 65.2 | 6.54 | 13.5 | 611.4 |
| YOLOv8-VSW | 79.2 | 83.5 | 66.6 | 6.82 | 14.3 | 603.8 |
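
The headline figures in the conclusions (a 1.8-point mAP@50 gain from the backbone replacement and a 38.7% parameter reduction for the final model) can be checked directly against the comparison table above. The sketch below recomputes them; the dictionary keys and function names are illustrative, the final row is keyed by the model name used in the conclusions (YOLOv8-VSW), and the FLOPs column is assumed to be in GFLOPs.

```python
# Values copied from the comparison table above.
RESULTS = {
    "YOLOv8": {"map50": 79.5, "params_m": 11.13, "gflops": 23.4, "fps": 526.2},
    "YOLOv8-V": {"map50": 81.3, "params_m": 6.54, "gflops": 13.5, "fps": 611.4},
    "YOLOv8-VSW": {"map50": 83.5, "params_m": 6.82, "gflops": 14.3, "fps": 603.8},
}


def relative_change(new, base):
    """Percentage change of `new` relative to `base`."""
    return (new - base) / base * 100.0


def compare(model, baseline="YOLOv8"):
    """Accuracy gain in points, and cost and speed changes in percent, vs. the baseline."""
    m, b = RESULTS[model], RESULTS[baseline]
    return {
        "map50_points": round(m["map50"] - b["map50"], 1),
        "params_pct": round(relative_change(m["params_m"], b["params_m"]), 1),
        "gflops_pct": round(relative_change(m["gflops"], b["gflops"]), 1),
        "fps_pct": round(relative_change(m["fps"], b["fps"]), 1),
    }


if __name__ == "__main__":
    for name in ("YOLOv8-V", "YOLOv8-VSW"):
        print(name, compare(name))
```

The comparison also shows the trade-off between the two improved variants: the final model gives back a small part of the backbone's parameter and speed savings in exchange for higher recall and mAP@50.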

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, Q.; Lu, L.; Liu, S.; Hu, Q.; Zhong, G.; Su, Z.; Xu, S. A Method for Improving the Efficiency and Effectiveness of Automatic Image Analysis of Water Pipes. Water 2025, 17, 2781. https://doi.org/10.3390/w17182781
