Prediction of Dam Inflow in the River Basin Through Representative Hydrographs and Auto-Setting Artificial Neural Network

Abstract

1. Introduction

2. Methodology

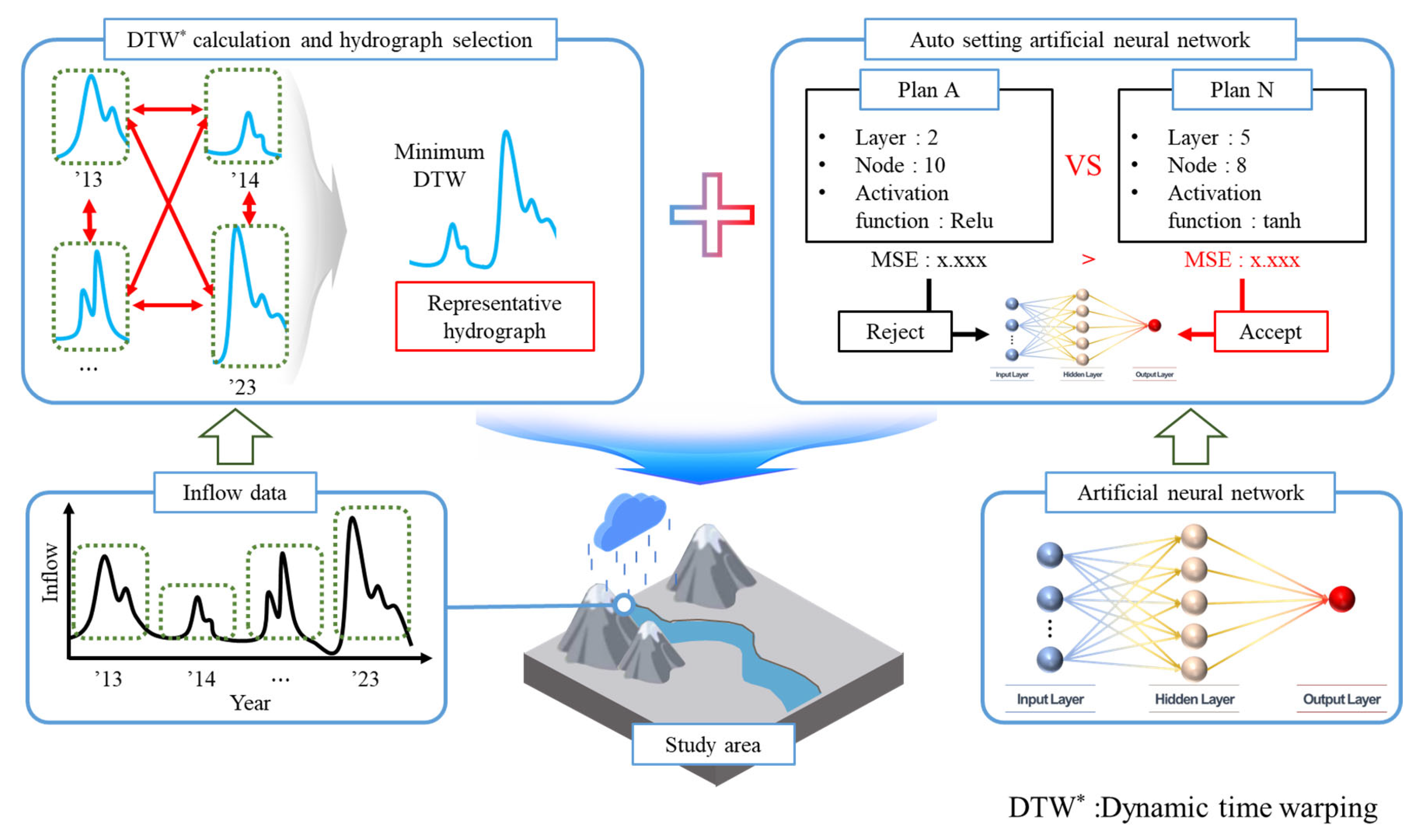

2.1. Overview

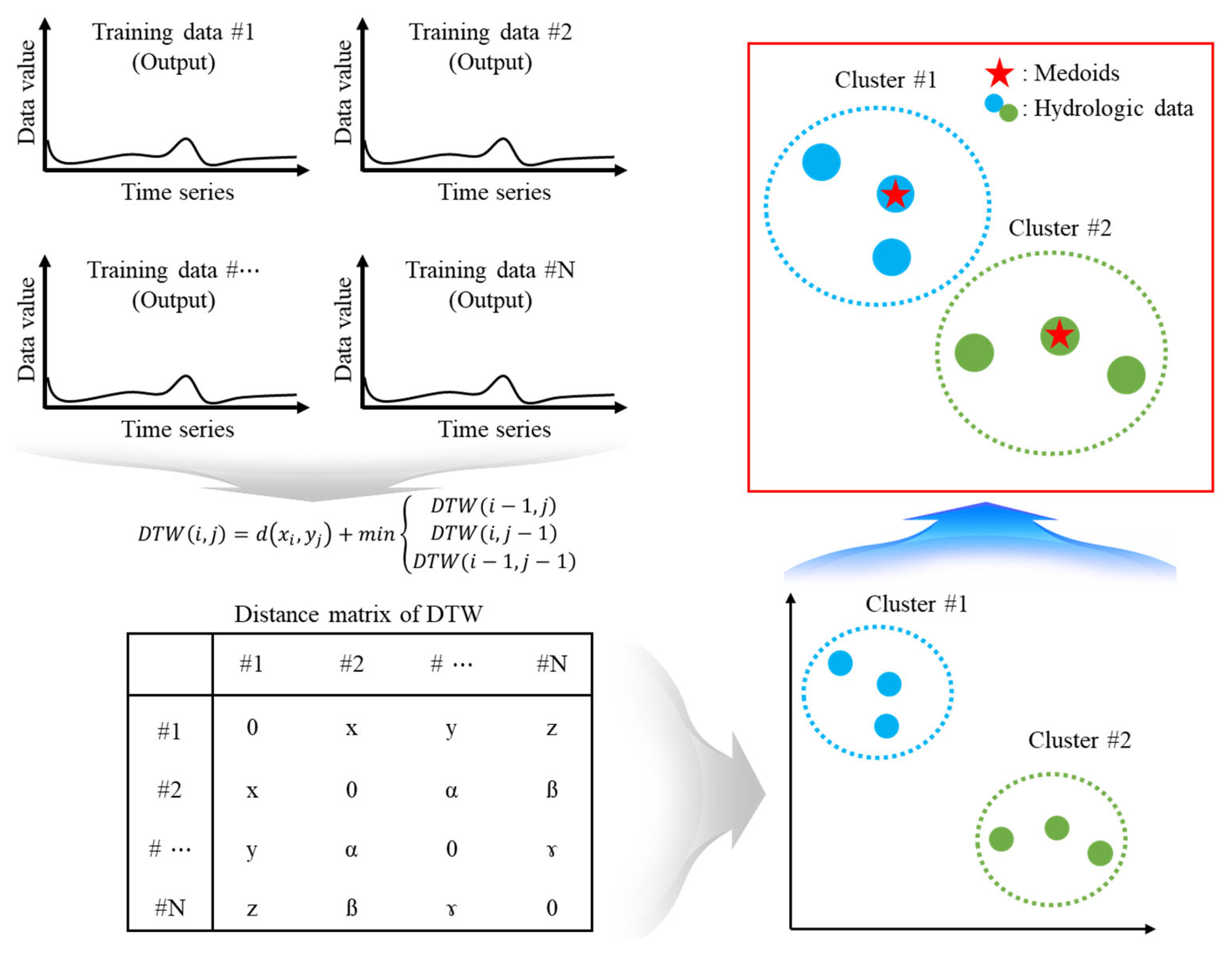

2.2. Representative Hydrograph Extraction Technique

1. Establishment of full-period data for the target basin;
2. Division of the data by year;
3. Calculation of the mutual (pairwise) DTW distances between annual events, using the yearly data from step 2;
4. Selection of the center points (medoids) using DTW-based K-medoids clustering;
5. Selection of data based on the center points.
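The five steps can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: DTW uses an absolute-difference local cost with no warping window, K-medoids uses a simple alternating (assign, then update) scheme with a farthest-first initialisation, and the function names and the six toy "annual hydrographs" are hypothetical.

```python
from typing import List, Sequence, Tuple


def dtw_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Classic DTW: absolute-difference local cost, no warping window."""
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j] = minimal cumulative cost of aligning a[:i] with b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(a[i - 1] - b[j - 1])
            cost[i][j] = local + min(cost[i - 1][j],      # a advances, b repeats
                                     cost[i][j - 1],      # b advances, a repeats
                                     cost[i - 1][j - 1])  # both advance
    return cost[n][m]


def pairwise_dtw(series: List[Sequence[float]]) -> List[List[float]]:
    """Step 3: symmetric matrix of DTW distances between annual hydrographs."""
    k = len(series)
    dist = [[0.0] * k for _ in range(k)]
    for i in range(k):
        for j in range(i + 1, k):
            dist[i][j] = dist[j][i] = dtw_distance(series[i], series[j])
    return dist


def _assign(dist: List[List[float]], medoids: List[int]) -> List[int]:
    """Label each year with the index of its nearest medoid."""
    return [min(range(len(medoids)), key=lambda c: dist[p][medoids[c]])
            for p in range(len(dist))]


def k_medoids(dist: List[List[float]], k: int,
              max_iter: int = 100) -> Tuple[List[int], List[int]]:
    """Step 4: alternating K-medoids on a precomputed distance matrix."""
    n = len(dist)
    # Farthest-first initialisation (an assumption): start from the most
    # central year, then add the year farthest from every chosen medoid.
    medoids = [min(range(n), key=lambda i: sum(dist[i]))]
    while len(medoids) < k:
        medoids.append(max(range(n),
                           key=lambda i: min(dist[i][m] for m in medoids)))
    for _ in range(max_iter):
        labels = _assign(dist, medoids)
        updated = []
        for c in range(k):
            members = [p for p in range(n) if labels[p] == c] or [medoids[c]]
            # New medoid: the member with the smallest total distance to its cluster.
            updated.append(min(members,
                               key=lambda q: sum(dist[q][p] for p in members)))
        if updated == medoids:
            break
        medoids = updated
    return medoids, _assign(dist, medoids)


# Toy annual hydrographs (hypothetical values): three wet years, three dry years.
years = [
    [5, 20, 80, 40, 10], [6, 25, 90, 35, 12], [4, 18, 70, 45, 9],
    [2, 4, 9, 5, 3], [1, 5, 10, 4, 2], [2, 3, 8, 6, 3],
]
medoids, labels = k_medoids(pairwise_dtw(years), k=2)
# Step 5: the medoid years serve as the representative hydrographs.
representatives = [years[m] for m in medoids]
```

In this toy case the wet and dry years fall into separate clusters, and each medoid is the year whose hydrograph is, in the DTW sense, closest to the other years of its group. Because DTW allows one point to match several points of the other series, hydrographs whose peaks are slightly shifted in time can still count as similar.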

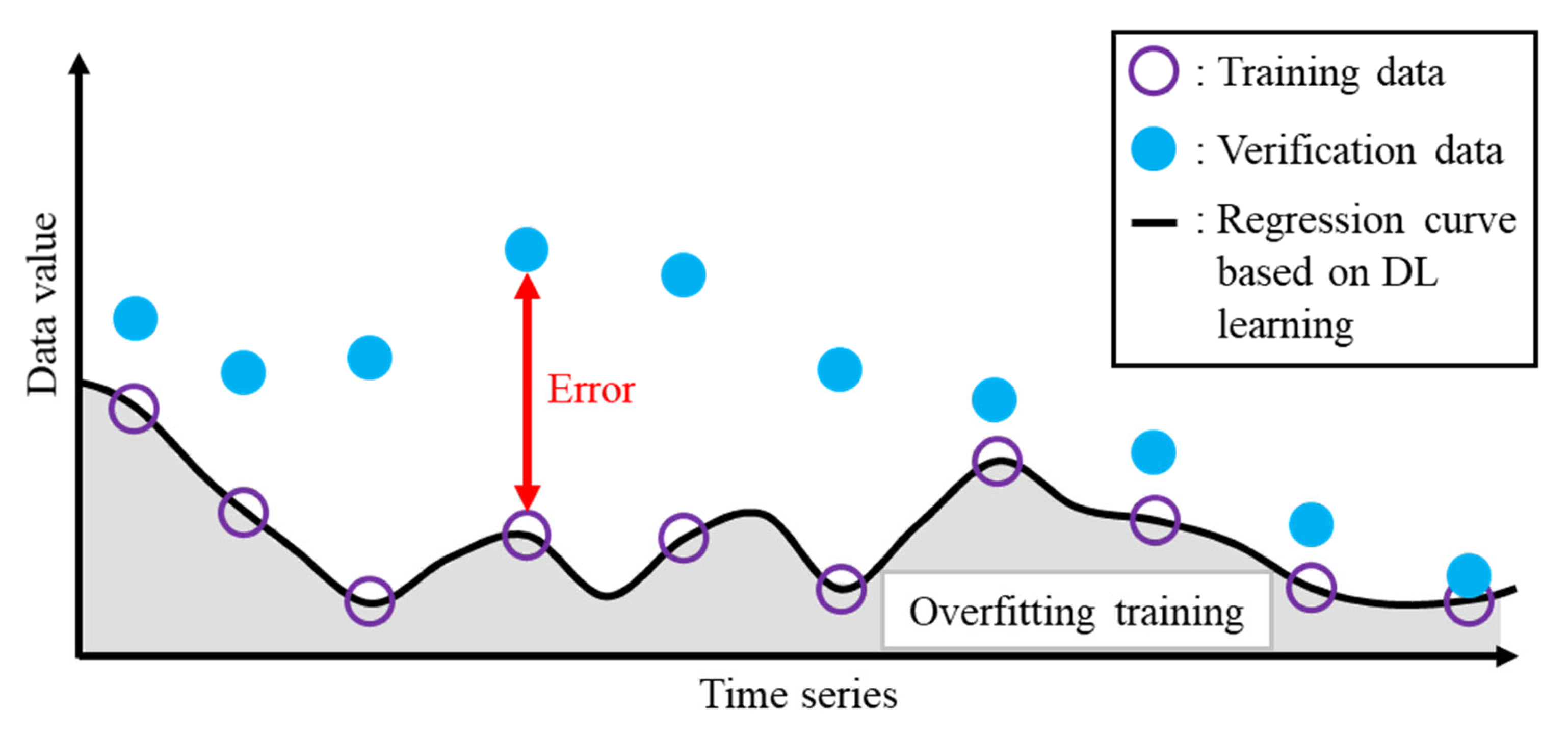

2.3. Auto Setting Artificial Neural Network

3. Results

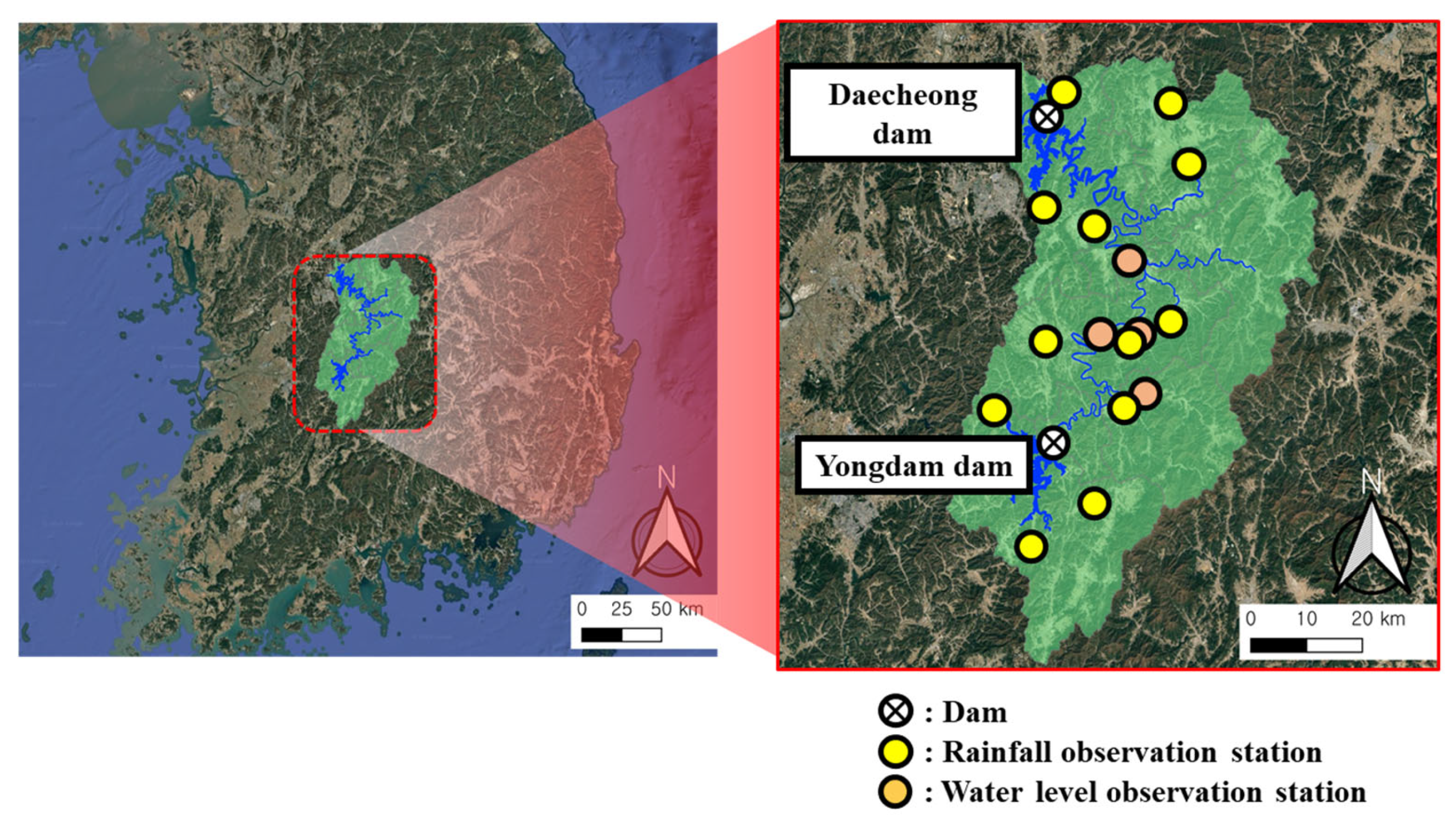

3.1. Study Area

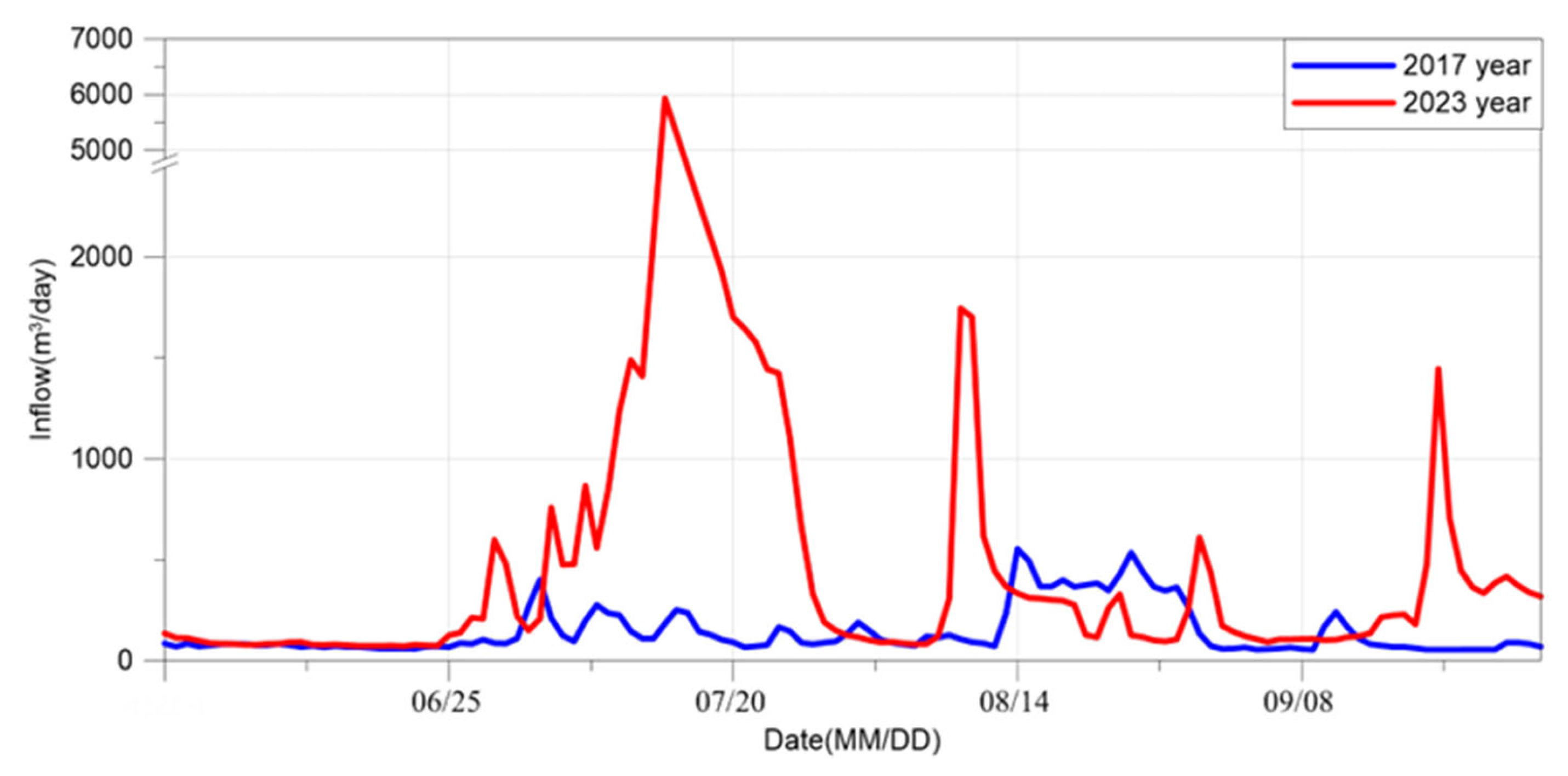

3.2. Collection of Hydrological Data and Application of RHET

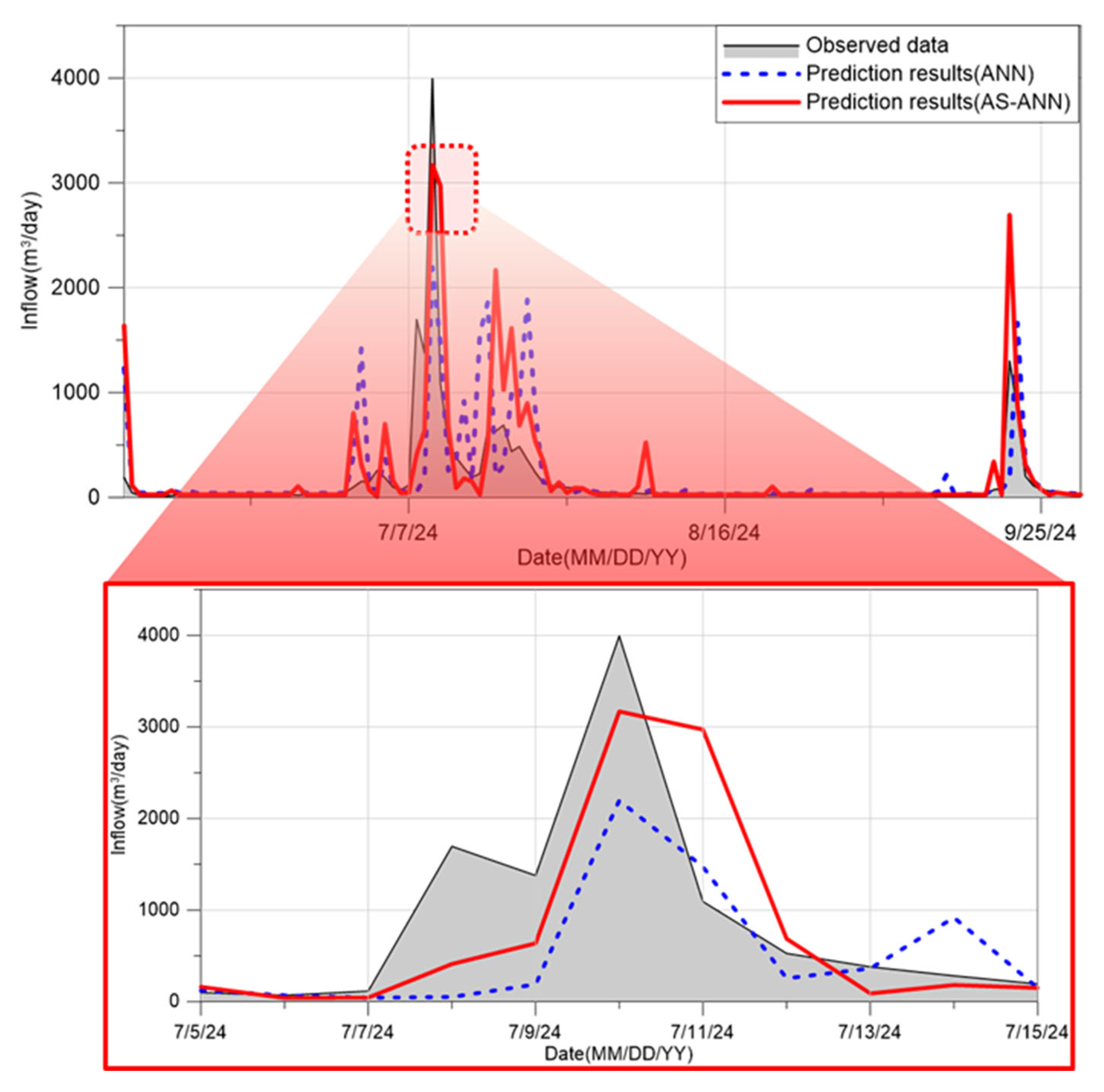

3.3. Verification and Prediction Results Analysis of RHET-Based AS-ANN

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Category | Parameter | Description |
|---|---|---|
| Structure parameter | Hidden node | A node in a hidden layer; the number of hidden nodes is one of the variables that determine the complexity of DL during learning and prediction. |
| | Hidden layer | A layer containing hidden nodes, added between the input and output layers to enable nonlinear, high-accuracy learning in DL. |
| Internal operator | Activation function | An operator that determines whether information is passed on as it is transmitted from node to node. |
| | Optimizer | An operator that searches for the weights and biases that minimize the error on the training data during DL training. |
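To make the two internal operators concrete, here is a minimal Python sketch under simplifying assumptions. A single neuron applies an activation function (ReLU, tanh, or sigmoid, the three candidates used in the search procedure) to its weighted input, and one plain stochastic gradient descent (SGD) step stands in for the optimizer on a one-variable linear model with squared error. The helper names and toy data are hypothetical; Adam and Nadam add adaptive moment estimates on top of this basic update and are not shown.

```python
import math
from typing import Callable, Sequence, Tuple


def relu(z: float) -> float:
    return max(0.0, z)


def tanh(z: float) -> float:
    return math.tanh(z)


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def neuron(x: Sequence[float], w: Sequence[float], b: float,
           activation: Callable[[float], float]) -> float:
    """Weighted sum plus bias, passed through the activation function."""
    return activation(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sgd_step(w: float, b: float, x: float, y: float,
             lr: float) -> Tuple[float, float]:
    """One SGD update for y_hat = w*x + b with squared error (y_hat - y)**2."""
    err = (w * x + b) - y
    # Gradients: d/dw = 2*err*x, d/db = 2*err; step against them.
    return w - lr * 2 * err * x, b - lr * 2 * err


# Toy usage: recover y = 2x + 1 from four noise-free samples.
w, b = 0.0, 0.0
for _ in range(2000):
    for x in (0.0, 1.0, 2.0, 3.0):
        w, b = sgd_step(w, b, x, 2 * x + 1, lr=0.05)
```

The optimizer only ever sees the error and its gradient; the activation function shapes what the network can represent. That is why the two are listed separately as internal operators.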

Input: input data (X), output data (Y). Output: the (layer, node, activation, optimizer) combination with the lowest RMSE.

Step 1: Determine the optimal number of nodes.

- For node = 1 to max_node:
  - For r = 1 to R (number of repeats): build an ANN with one hidden layer of size `node` and activation = ReLU, then compute the RMSE on the validation set.
  - Compute the average RMSE for this node.
  - If the RMSE has decreased 4 times and increased once: break.
- Select the node with the lowest average RMSE → best_node.

Step 2: Determine the optimal number of layers.

- For layer = 1 to max_layer:
  - For r = 1 to R: build an ANN with `layer` hidden layers of best_node nodes each and activation = ReLU, then compute the RMSE on the validation set.
  - Compute the average RMSE for this layer.
  - If the RMSE has decreased 4 times and increased once: break.
- Select the layer with the lowest average RMSE → best_layer.

Step 3: Search for the best activation function and optimizer.

- For activation in {ReLU, tanh, sigmoid}:
  - For optimizer in {Adam, Nadam, SGD}:
    - For r = 1 to R: build an ANN with best_layer hidden layers of best_node nodes each, using the given activation and optimizer, then compute the RMSE on the validation set.
    - Compute the average RMSE for this combination.
- Select the (activation, optimizer) pair with the lowest average RMSE → best_act, best_opt.

Return: best_layer, best_node, best_act, best_opt.
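The search logic of this procedure can be sketched in Python, assuming that model training is hidden behind a caller-supplied `evaluate(config)` function that trains one network and returns its validation RMSE (a hypothetical hook; in practice it might wrap a Keras or PyTorch model). Two further assumptions are flagged in the comments: Adam is used as the optimizer in Steps 1 and 2, which the pseudocode leaves unspecified, and "decreased 4 times and increased once" is read as "stop at the first increase after at least four decreases". The toy evaluator is invented purely to exercise the loop.

```python
from typing import Callable, Dict, Optional, Sequence, Tuple

# (hidden layers, nodes per layer, activation, optimizer)
Config = Tuple[int, int, str, str]


def mean_rmse(evaluate: Callable[[Config], float], cfg: Config, repeats: int) -> float:
    """Average validation RMSE over `repeats` independent trainings of one config."""
    return sum(evaluate(cfg) for _ in range(repeats)) / repeats


def scan_size(score: Callable[[int], float], max_size: int, patience: int = 4) -> int:
    """Steps 1 and 2: scan sizes 1..max_size, return the size with the lowest RMSE.

    Assumed reading of the stopping rule: stop at the first RMSE increase
    after the RMSE has decreased at least `patience` times.
    """
    results: Dict[int, float] = {}
    decreases = 0
    prev: Optional[float] = None
    for size in range(1, max_size + 1):
        rmse = score(size)
        results[size] = rmse
        if prev is not None:
            if rmse < prev:
                decreases += 1
            elif rmse > prev and decreases >= patience:
                break
        prev = rmse
    return min(results, key=lambda s: results[s])


def auto_set(evaluate: Callable[[Config], float], max_node: int, max_layer: int,
             repeats: int = 3,
             activations: Sequence[str] = ("relu", "tanh", "sigmoid"),
             optimizers: Sequence[str] = ("adam", "nadam", "sgd")) -> Config:
    # Step 1: one hidden layer with ReLU; using Adam here is an assumption.
    best_node = scan_size(
        lambda n: mean_rmse(evaluate, (1, n, "relu", "adam"), repeats), max_node)
    # Step 2: vary the number of hidden layers, each with best_node nodes.
    best_layer = scan_size(
        lambda d: mean_rmse(evaluate, (d, best_node, "relu", "adam"), repeats), max_layer)
    # Step 3: full grid over activation x optimizer for the chosen structure.
    best_act, best_opt = min(
        ((a, o) for a in activations for o in optimizers),
        key=lambda ao: mean_rmse(evaluate, (best_layer, best_node, ao[0], ao[1]), repeats))
    return best_layer, best_node, best_act, best_opt


# Hypothetical stand-in for "train an ANN and return its validation RMSE".
def toy_rmse(cfg: Config) -> float:
    layers, nodes, act, opt = cfg
    return ((nodes - 6) ** 2 + (layers - 3) ** 2
            + {"relu": 0, "tanh": 1, "sigmoid": 2}[act]
            + {"adam": 1, "nadam": 0, "sgd": 3}[opt])


print(auto_set(toy_rmse, max_node=20, max_layer=6))  # (3, 6, 'relu', 'nadam')
```

Because nodes, layers, and operators are fixed one after another rather than searched jointly, this greedy scheme evaluates far fewer networks than a full grid, at the possible cost of missing interactions between the parameters.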

| Category (Number of Input Data) | Input Data |
|---|---|
| Dam discharge (1) | Yongdam Dam |
| Water level data (4) | Sangyegyo, Yangganggyo, Choganggyo, Yeouigyo |
| Rainfall data (12) | Boeun, Cheongnamdae, Secheon, Okcheon, Geumsan, Jucheon, Jinan, Cheongsan, Yeongdong, Gagok, Muju, Donghyang |

| Parameter | ANN | AS-ANN |
|---|---|---|
| Number of hidden nodes | 10 | Auto setting |
| Number of hidden layers | 5 | Auto setting |
| Optimizer | Adam | Auto setting |
| Activation function | ReLU | Auto setting |
| Epochs | 1200 | 1200 |

| Method | Min RMSE (m³/day) | Max RMSE (m³/day) | Average RMSE (m³/day) |
|---|---|---|---|
| ANN | 467.03 | 583.14 | 520.74 |
| AS-ANN | 199.52 | 644.50 | 276.67 |

| Method | Difference in Peak Inflow (m³/day) |
|---|---|
| ANN | 1784.55 |
| AS-ANN | 1358.14 |
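The two error measures in these tables can be computed from observed and predicted inflow series with a few lines of Python. This is a sketch under assumptions: RMSE uses the standard definition, and the peak-inflow difference is taken as the absolute difference between the observed and predicted maxima, which the tables do not define explicitly. The function names are hypothetical, and the final line only applies simple arithmetic to the average RMSE values reported in the first RMSE table.

```python
import math
from typing import Sequence


def rmse(observed: Sequence[float], predicted: Sequence[float]) -> float:
    """Root-mean-square error between two equal-length series."""
    if len(observed) != len(predicted) or not observed:
        raise ValueError("series must be non-empty and of equal length")
    squared = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    return math.sqrt(squared / len(observed))


def peak_difference(observed: Sequence[float], predicted: Sequence[float]) -> float:
    """Assumed definition: |max(observed) - max(predicted)|."""
    return abs(max(observed) - max(predicted))


def relative_reduction(baseline: float, improved: float) -> float:
    """Fractional reduction of an error metric relative to a baseline."""
    return (baseline - improved) / baseline


# Average RMSE from the first results table: ANN 520.74, AS-ANN 276.67.
print(round(relative_reduction(520.74, 276.67), 3))  # 0.469
```

On these averages, AS-ANN's RMSE is roughly 47% lower than that of the fixed-structure ANN.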

| Method | Min RMSE (m³/day) | Max RMSE (m³/day) | Average RMSE (m³/day) |
|---|---|---|---|
| ANN | 401.27 | 655.73 | 537.45 |
| AS-ANN | 348.24 | 492.63 | 403.02 |

| Method | Difference in Peak Inflow (m³/day) |
|---|---|
| ANN | 1797.21 |
| AS-ANN | 823.94 |

| Method | NOR |
|---|---|
| ANN | 729 |
| AS-ANN | 108 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ryu, Y.M.; Lee, E.H. Prediction of Dam Inflow in the River Basin Through Representative Hydrographs and Auto-Setting Artificial Neural Network. Water 2025, 17, 2689. https://doi.org/10.3390/w17182689
