A Performance Comparison Study on Climate Prediction in Weifang City Using Different Deep Learning Models

Abstract

1. Introduction

- (1) Wavelet transform is introduced to determine the input variables of the deep learning models.

- (2) By analyzing the performance of different deep learning models, CNN, LSTM, and GRU are used to form a hybrid model.

- (3) CNN-LSTM-GRU can simultaneously focus on both local and global information of the climate change time series, improving its ability to recognize complex patterns in the climate change time series.

- (4) The proposed hybrid CNN-LSTM-GRU model has higher prediction accuracy than a single method.

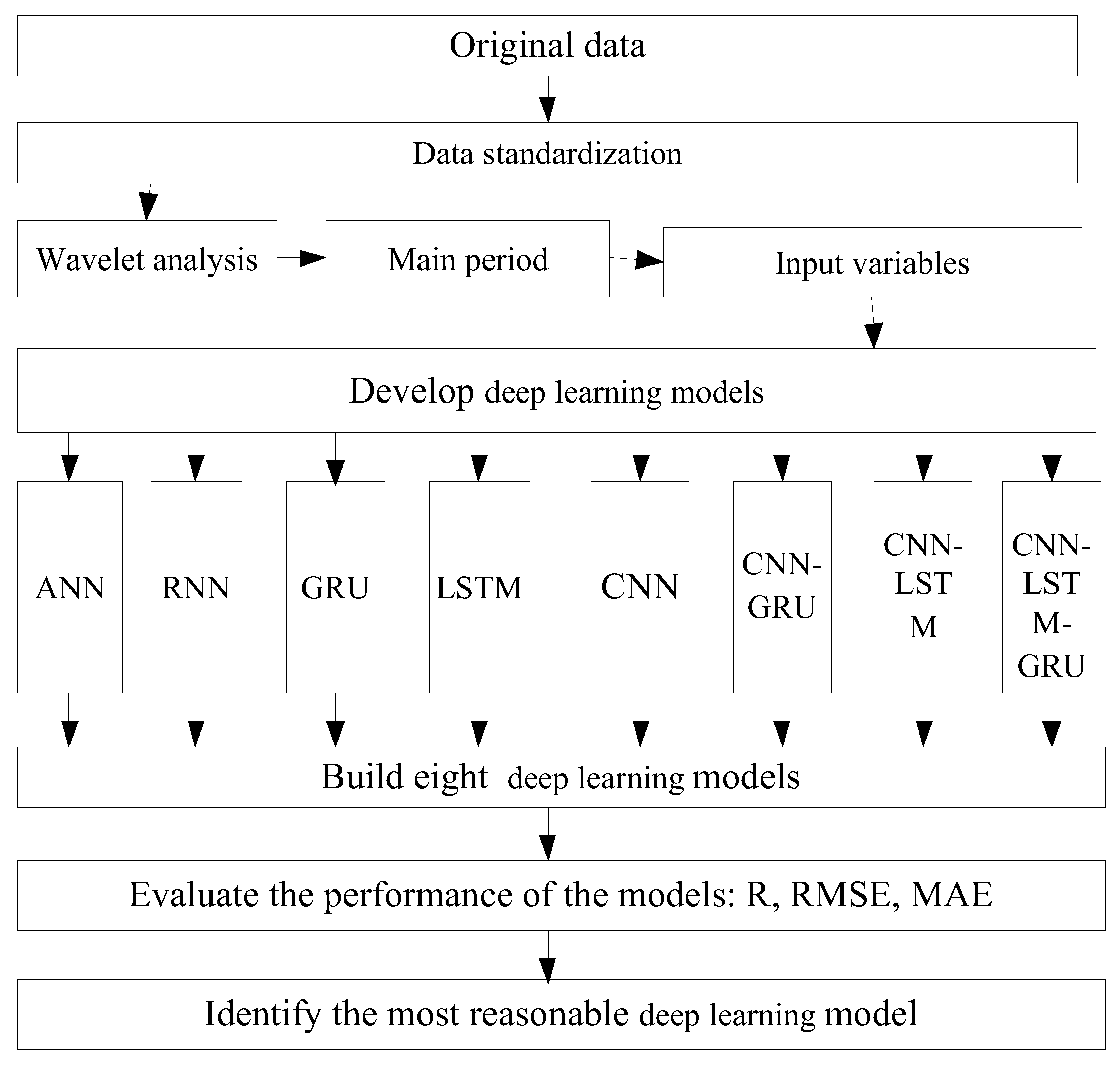

2. Materials and Methods

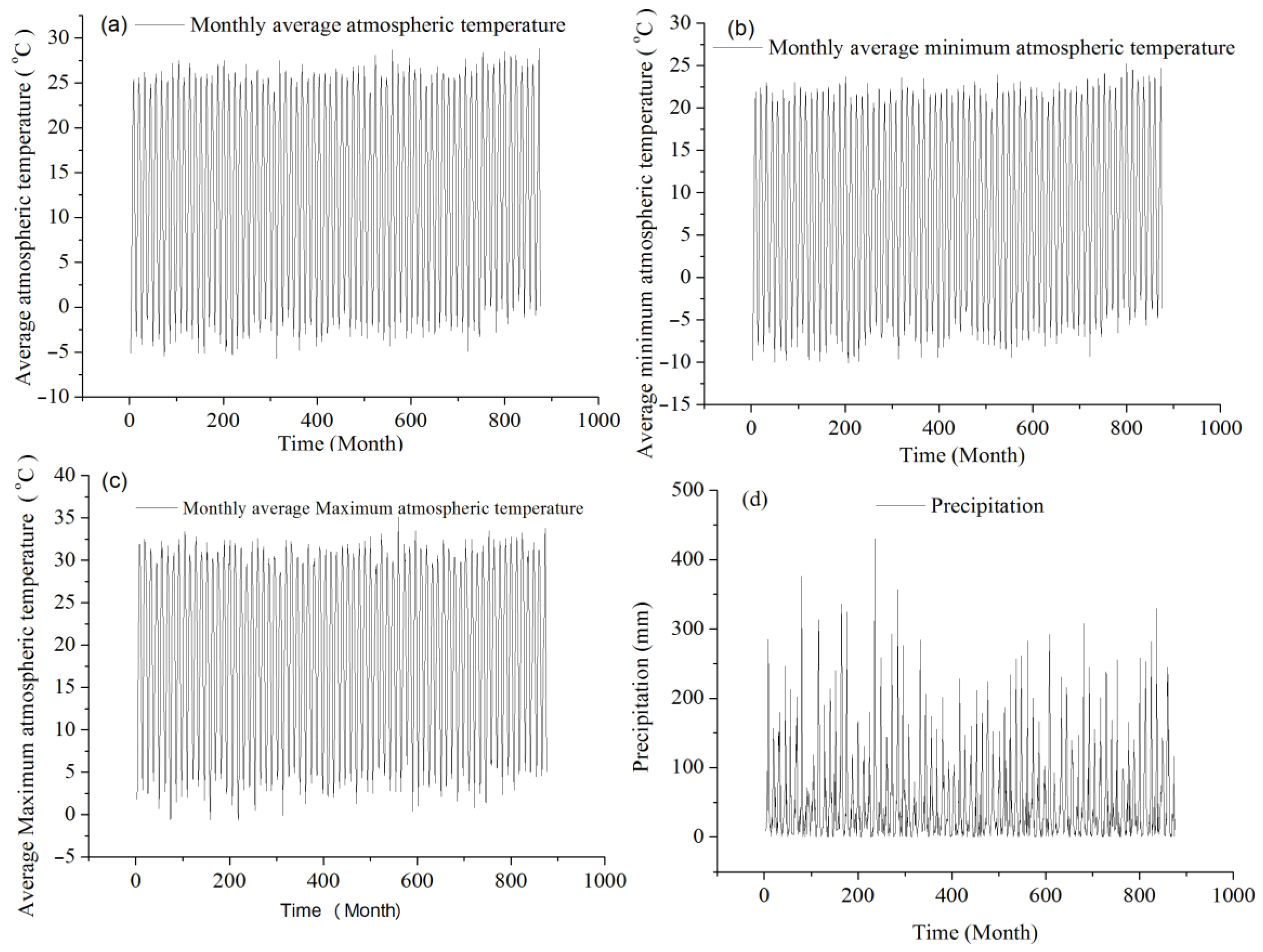

2.1. Study Area and Data

2.2. Data Standardization

2.3. Artificial Neural Network (ANN)

2.4. Recurrent Neural Network (RNN)

2.5. Long Short-Term Memory (LSTM)

2.6. Gated Recurrent Unit (GRU)

2.7. Convolutional Neural Network (CNN)

2.8. Hybrid Model

2.9. Model Evaluation Indicators
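The result tables report four indicators for each model: R, RMSE, MAE, and MAPE (%). As a minimal sketch of how these could be computed, assuming R denotes the Pearson correlation coefficient between observed and predicted values and that the other three follow their standard definitions, the function below uses only the Python standard library. The function name `evaluate` and the sample values are hypothetical and not taken from the study.

```python
import math


def evaluate(observed, predicted):
    """Return R, RMSE, MAE and MAPE (%) for two equal-length sequences."""
    if len(observed) != len(predicted) or not observed:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(observed)
    errors = [p - o for o, p in zip(observed, predicted)]

    # Root mean square error and mean absolute error
    rmse = math.sqrt(sum(e * e for e in errors) / n)
    mae = sum(abs(e) for e in errors) / n

    # MAPE divides by the observation, so it is undefined if any observation is zero
    if any(o == 0 for o in observed):
        mape = float("nan")
    else:
        mape = 100.0 * sum(abs(e / o) for e, o in zip(errors, observed)) / n

    # Pearson correlation coefficient (assumed meaning of R)
    mean_o = sum(observed) / n
    mean_p = sum(predicted) / n
    cov = sum((o - mean_o) * (p - mean_p) for o, p in zip(observed, predicted))
    var_o = sum((o - mean_o) ** 2 for o in observed)
    var_p = sum((p - mean_p) ** 2 for p in predicted)
    r = cov / math.sqrt(var_o * var_p) if var_o > 0 and var_p > 0 else float("nan")

    return {"R": r, "RMSE": rmse, "MAE": mae, "MAPE": mape}


# Hypothetical values, for illustration only
metrics = evaluate([10.0, 20.0, 30.0, 40.0], [12.0, 18.0, 33.0, 37.0])
```

Because MAPE divides each error by the observed value, it can become very large when observations are close to zero, which is worth keeping in mind when comparing MAPE across variables.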

2.10. Cross-Validation

3. Results

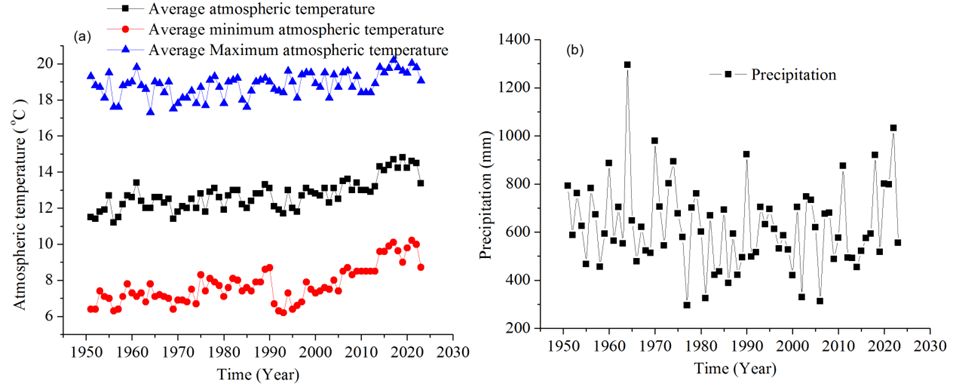

3.1. Annual Climate Change in Weifang City

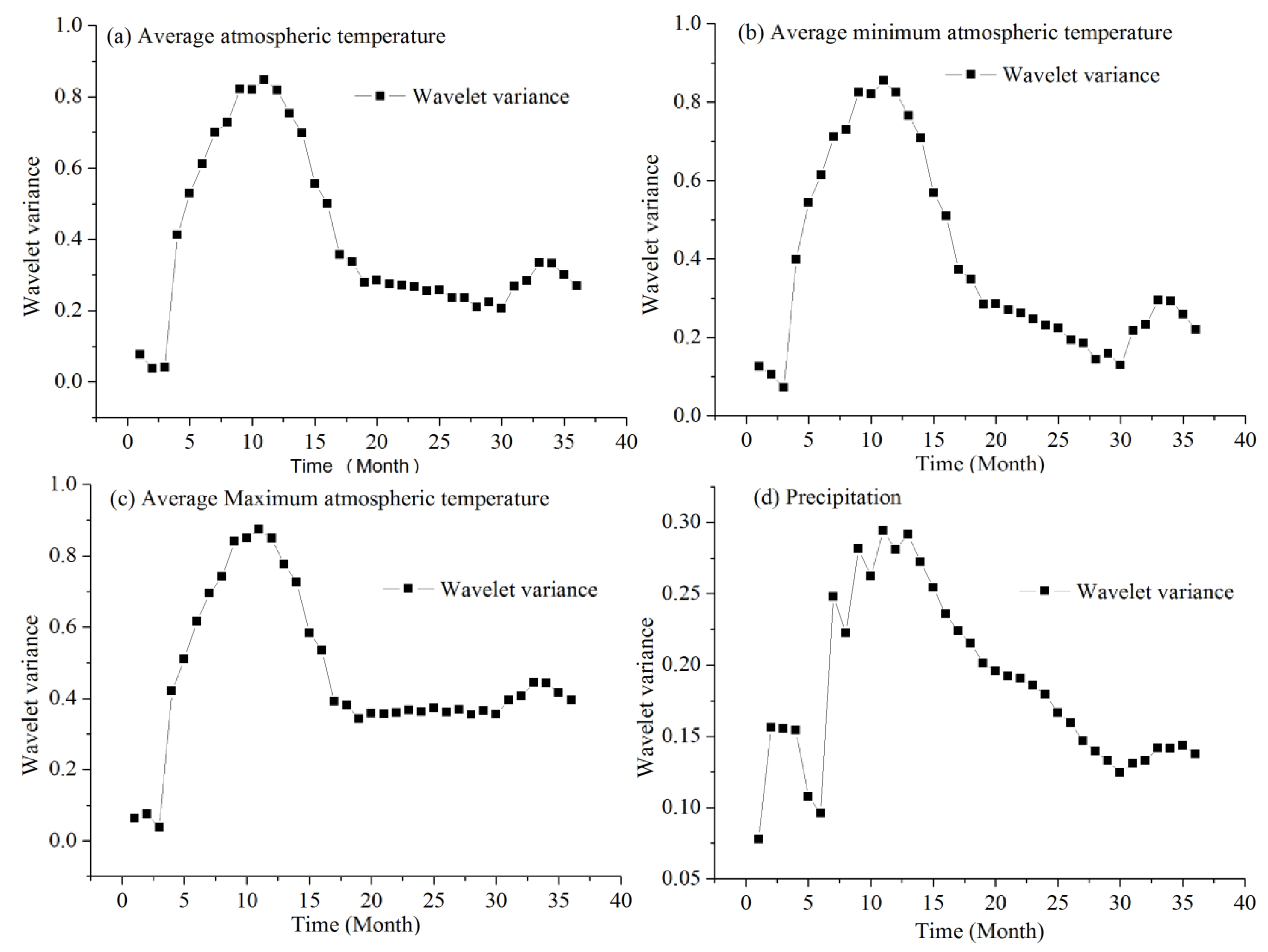

3.2. The Cyclicality of Climate Change

3.3. Hyperparameter Information of the Deep Learning Models

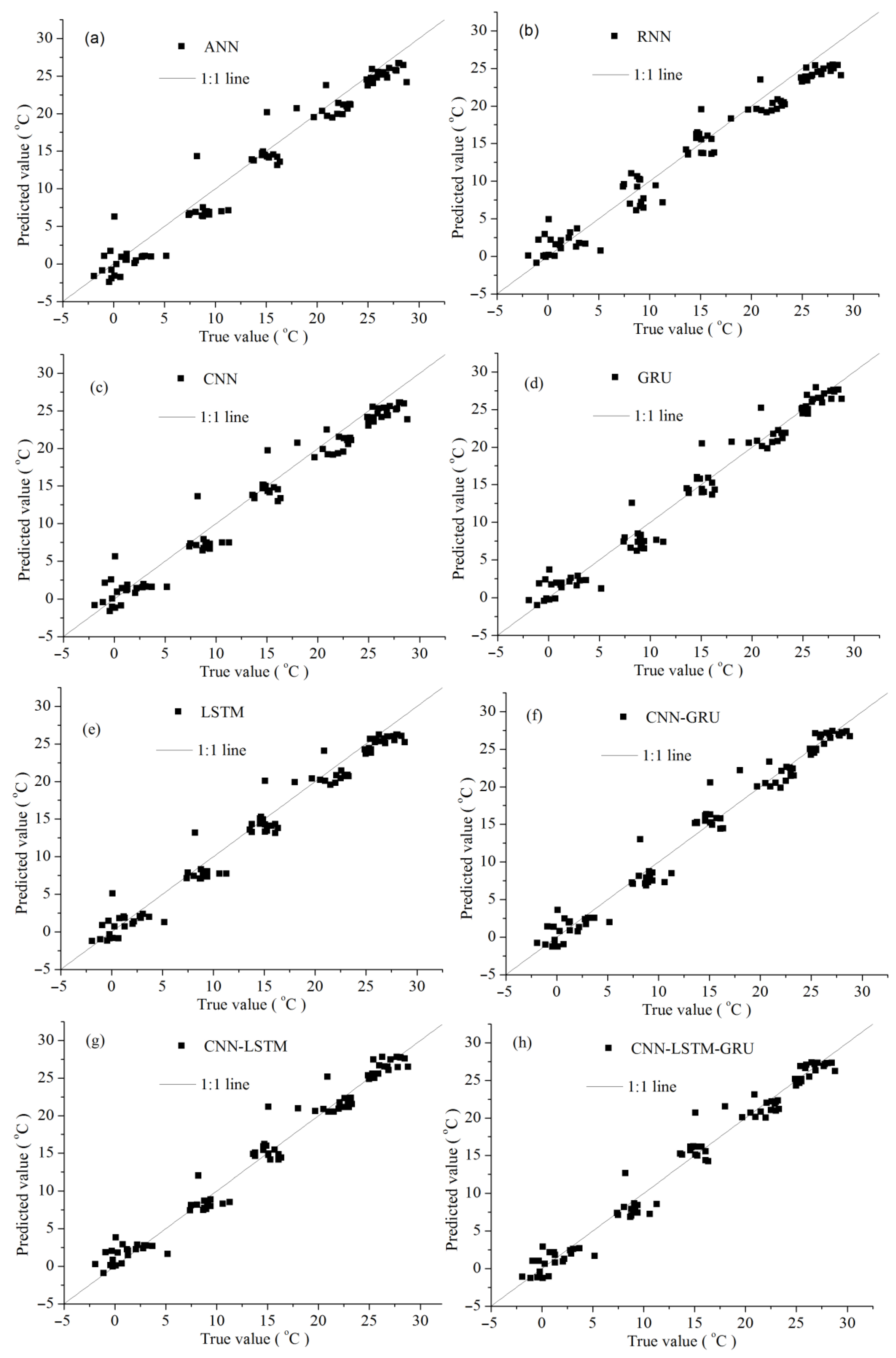

3.4. Prediction of MAAT in Weifang City

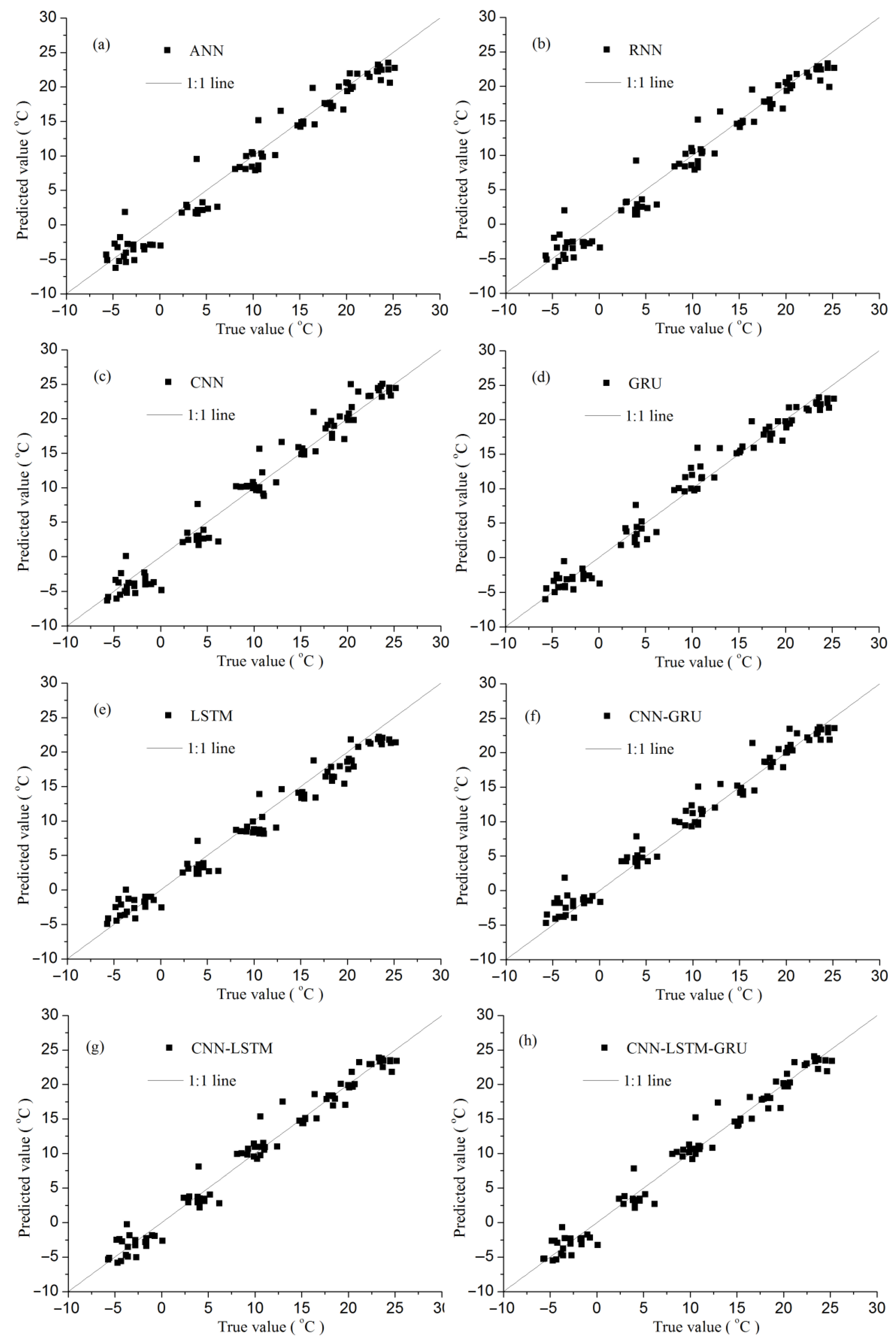

3.5. Prediction of MAMINAT in Weifang City

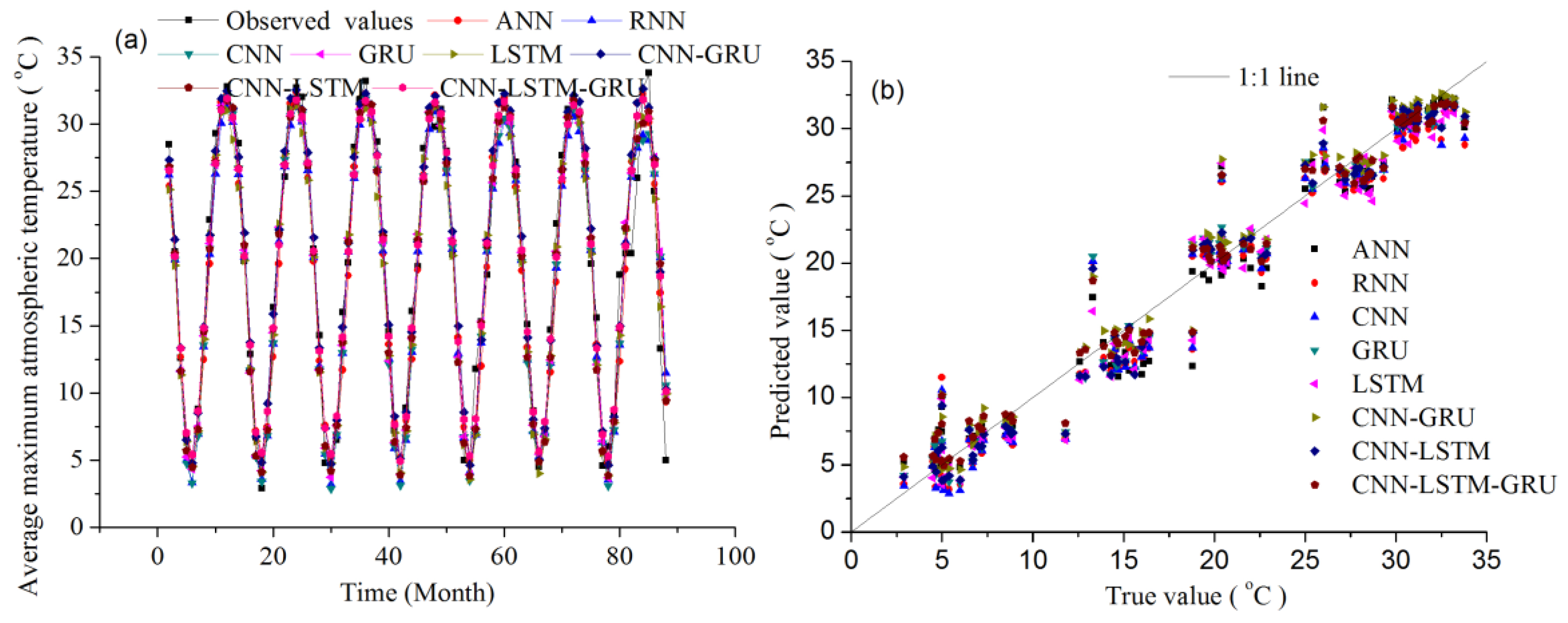

3.6. Prediction of MAMAXAT in Weifang City

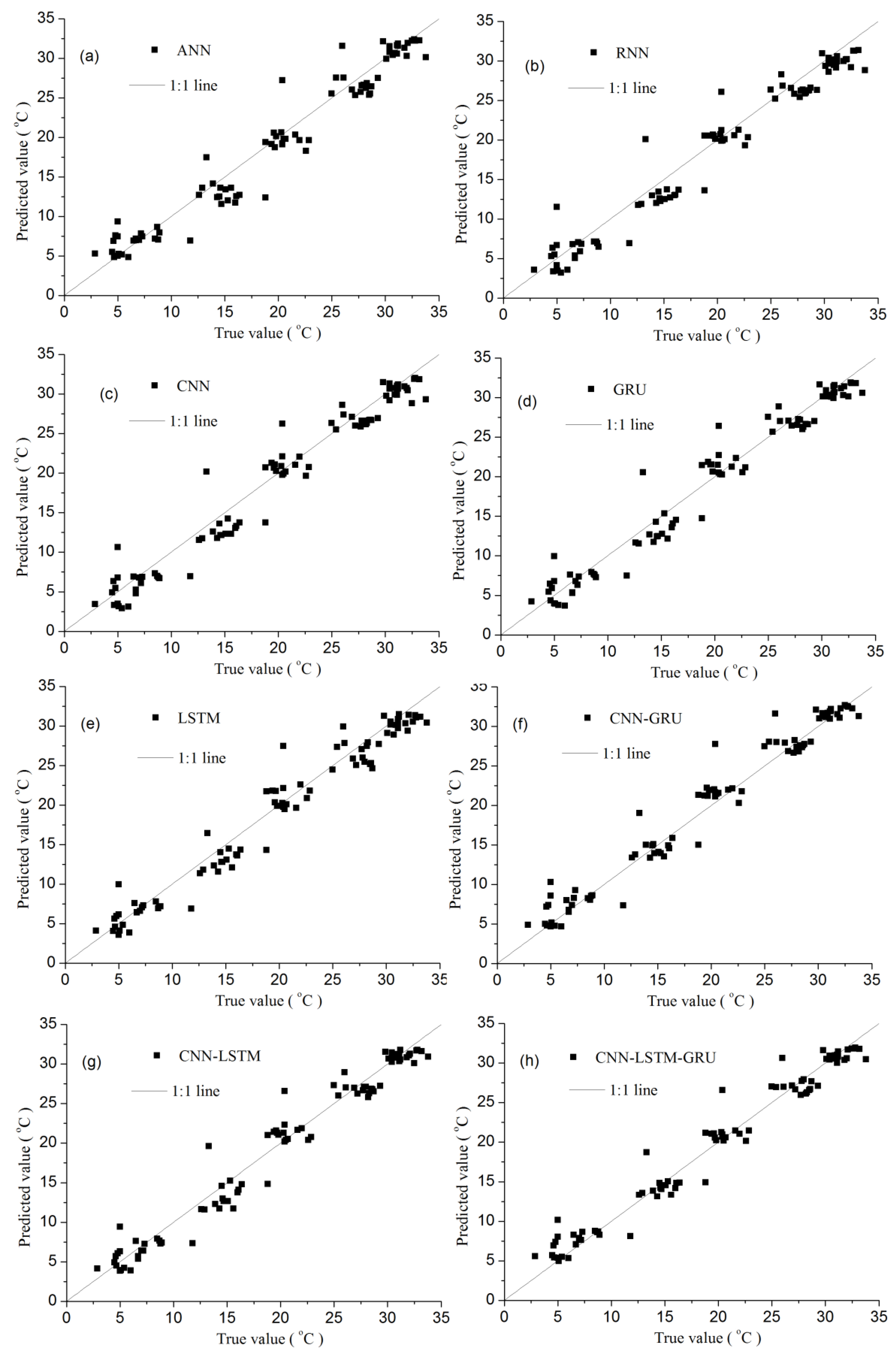

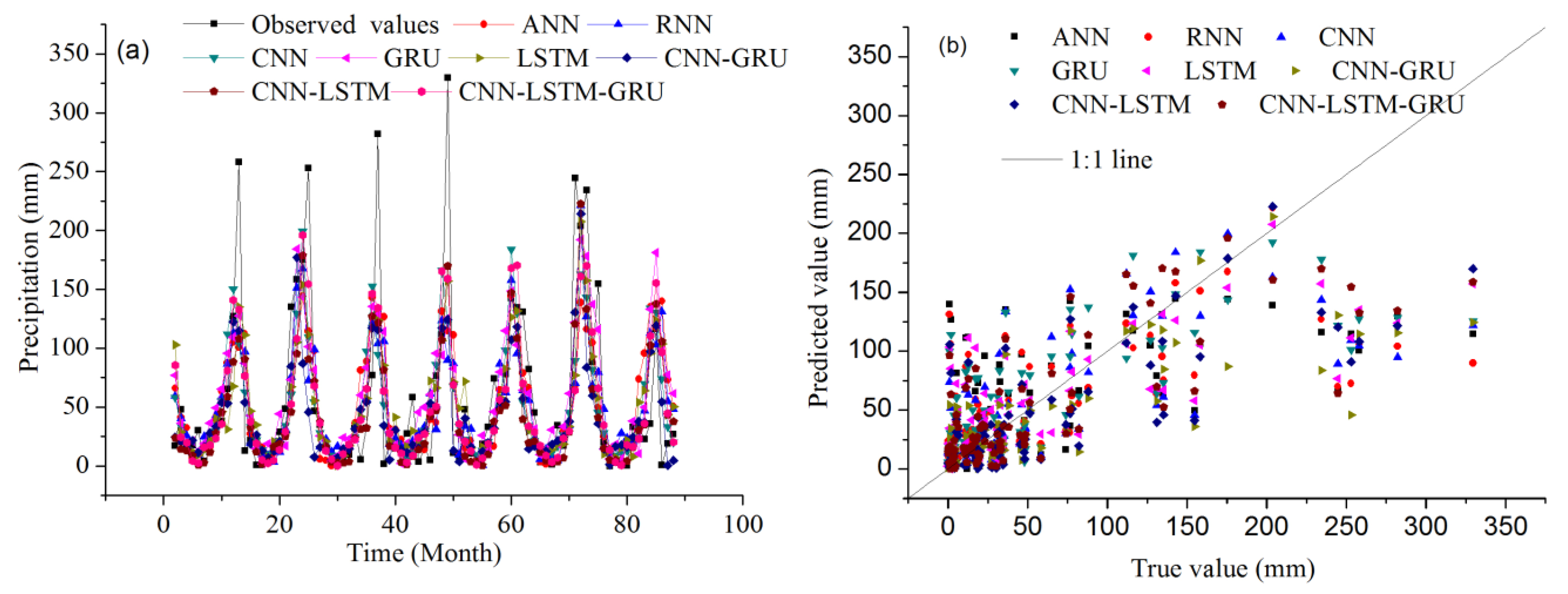

3.7. Prediction of Monthly Precipitation (MP) in Weifang City

4. Discussions

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Models | Inputs | HL | Units of HL | Outputs | AF | LR | BS | Epochs | Optimizer | KS | MP | CF |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| ANN | 11 | 2 | 5 | 1 | logsig-purelin | 0.005 | 12 | 200 | | | | |
| RNN | 11 | 2 | 50 | 1 | tansig-purelin | 0.005 | 12 | 200 | Adam | | | |
| CNN | 11 | 9 | 50 | 1 | Relu | 0.005 | 12 | 200 | Adam | 3 | 2 | 12-12 |
| GRU | 11 | 2 | 50 | 1 | tanh-sigmoid | 0.005 | 12 | 200 | Adam | | | |
| LSTM | 11 | 2 | 50 | 1 | tanh-sigmoid | 0.005 | 12 | 200 | Adam | | | |
| CNN-GRU | 11 | 12 | 50 | 1 | Relu | 0.005 | 12 | 200 | Adam | 3 | 2 | 12-12 |
| CNN-LSTM | 11 | 12 | 50 | 1 | Relu | 0.005 | 12 | 200 | Adam | 3 | 2 | 12-12 |
| CNN-LSTM-GRU | 11 | 14 | 50 | 1 | Relu | 0.005 | 12 | 200 | Adam | 3 | 2 | 12-12 |
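The hyperparameter table lists 11 inputs and 1 output for every model. One common way to build such samples from a monthly series is a sliding window, sketched below under the assumption that the 11 inputs are the 11 preceding values of the series. The study states that the wavelet transform is used to determine the input variables, so the actual inputs may not be consecutive lags; the function name `make_windows` and the sample series are hypothetical.

```python
def make_windows(series, n_inputs=11):
    """Split a 1-D series into (inputs, target) pairs using a sliding window.

    Each sample uses n_inputs consecutive values as inputs and the value
    that immediately follows them as the single output.
    """
    if len(series) <= n_inputs:
        raise ValueError("series must be longer than n_inputs")
    samples = []
    for start in range(len(series) - n_inputs):
        window = list(series[start:start + n_inputs])
        target = series[start + n_inputs]
        samples.append((window, target))
    return samples


# Hypothetical 24-month series, used only for illustration
monthly_values = [float(i) for i in range(24)]
pairs = make_windows(monthly_values)
```

With 24 values and a window of 11, this yields 13 samples; the resulting pairs can then be divided chronologically into the training, verification, and predicting phases that the result tables report.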

| Models | R (Training) | R (Verification) | R (Predicting) | RMSE (Training) | RMSE (Verification) | RMSE (Predicting) | MAE (Training) | MAE (Verification) | MAE (Predicting) | MAPE % (Training) | MAPE % (Verification) | MAPE % (Predicting) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| ANN | 0.9902 | 0.9813 | 0.9821 | 1.5857 | 1.8953 | 2.1184 | 1.3970 | 1.6114 | 1.7801 | 38.8151 | 39.2472 | 59.6989 |
| RNN | 0.9908 | 0.9893 | 0.9824 | 1.5707 | 1.8571 | 2.1060 | 1.3927 | 1.5646 | 1.7751 | 37.4104 | 38.2629 | 57.2946 |
| CNN | 0.9918 | 0.9901 | 0.9834 | 1.4071 | 1.8470 | 2.0518 | 1.3889 | 1.5502 | 1.6774 | 37.0679 | 38.2391 | 50.4916 |
| GRU | 0.9921 | 0.9905 | 0.9852 | 1.3976 | 1.4629 | 1.8914 | 1.1084 | 1.2018 | 1.5429 | 36.7256 | 36.2439 | 49.1905 |
| LSTM | 0.9922 | 0.9911 | 0.9859 | 1.3823 | 1.4596 | 1.8314 | 1.0989 | 1.1984 | 1.4753 | 36.7081 | 34.8983 | 48.2297 |
| CNN-GRU | 0.9923 | 0.9915 | 0.9870 | 1.3207 | 1.3832 | 1.5834 | 1.0946 | 1.0814 | 1.2041 | 35.5082 | 32.5118 | 48.1806 |
| CNN-LSTM | 0.9926 | 0.9916 | 0.9873 | 1.3185 | 1.3792 | 1.5560 | 1.0545 | 1.0797 | 1.1918 | 35.4328 | 28.3677 | 39.4255 |
| CNN-LSTM-GRU | 0.9929 | 0.9919 | 0.9879 | 1.2239 | 1.3277 | 1.5347 | 0.9635 | 1.0161 | 1.1830 | 32.1069 | 22.1625 | 37.7203 |

| Models | R (Training) | R (Verification) | R (Predicting) | RMSE (Training) | RMSE (Verification) | RMSE (Predicting) | MAE (Training) | MAE (Verification) | MAE (Predicting) | MAPE % (Training) | MAPE % (Verification) | MAPE % (Predicting) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| ANN | 0.9906 | 0.9910 | 0.9828 | 1.5542 | 1.4529 | 1.9446 | 1.3511 | 1.1593 | 1.5581 | 45.7389 | 43.3909 | 38.6852 |
| RNN | 0.9910 | 0.9911 | 0.9830 | 1.5457 | 1.4482 | 1.8898 | 1.3274 | 1.1264 | 1.4666 | 45.6283 | 42.4281 | 38.6794 |
| CNN | 0.9912 | 0.9913 | 0.9859 | 1.5261 | 1.4253 | 1.8885 | 1.3011 | 1.1032 | 1.4533 | 41.3898 | 37.0634 | 37.5265 |
| GRU | 0.9916 | 0.9918 | 0.9863 | 1.4877 | 1.3867 | 1.8878 | 1.2005 | 1.0780 | 1.2493 | 41.3382 | 36.7026 | 36.2668 |
| LSTM | 0.9916 | 0.9925 | 0.9873 | 1.4280 | 1.3769 | 1.8863 | 1.1519 | 1.0774 | 1.1757 | 41.1811 | 33.6287 | 33.3576 |
| CNN-GRU | 0.9916 | 0.9928 | 0.9878 | 1.3592 | 1.3711 | 1.6135 | 1.1438 | 1.0763 | 1.1606 | 40.6552 | 32.6522 | 32.3939 |
| CNN-LSTM | 0.9922 | 0.9928 | 0.9881 | 1.2531 | 1.2420 | 1.5104 | 0.9980 | 0.9748 | 1.1519 | 39.2689 | 31.7472 | 31.9745 |
| CNN-LSTM-GRU | 0.9925 | 0.9930 | 0.9886 | 1.2483 | 1.2345 | 1.4856 | 0.9954 | 0.9668 | 1.1218 | 39.1492 | 30.8418 | 20.9646 |

| Models | R (Training) | R (Verification) | R (Predicting) | RMSE (Training) | RMSE (Verification) | RMSE (Predicting) | MAE (Training) | MAE (Verification) | MAE (Predicting) | MAPE % (Training) | MAPE % (Verification) | MAPE % (Predicting) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| ANN | 0.9842 | 0.9819 | 0.9754 | 2.3794 | 1.9897 | 2.2885 | 1.7839 | 1.5853 | 1.7891 | 46.1894 | 45.2416 | 43.0432 |
| RNN | 0.9866 | 0.9861 | 0.9779 | 2.2872 | 1.9883 | 2.2274 | 1.7633 | 1.5741 | 1.7861 | 45.7685 | 44.5697 | 41.9481 |
| CNN | 0.9868 | 0.9862 | 0.9783 | 2.2753 | 1.9872 | 2.1555 | 1.7537 | 1.5639 | 1.6930 | 44.4984 | 43.2180 | 47.3248 |
| GRU | 0.9871 | 0.9864 | 0.9795 | 2.2601 | 1.9869 | 2.0988 | 1.7425 | 1.5531 | 1.5928 | 43.5614 | 42.4237 | 43.3839 |
| LSTM | 0.9872 | 0.9865 | 0.9798 | 2.1722 | 1.9780 | 2.0417 | 1.7343 | 1.5483 | 1.5896 | 42.8722 | 36.7567 | 42.1758 |
| CNN-GRU | 0.9874 | 0.9866 | 0.9813 | 2.0807 | 1.9651 | 1.9183 | 1.6934 | 1.5440 | 1.5597 | 41.0262 | 30.1028 | 41.7519 |
| CNN-LSTM | 0.9875 | 0.9868 | 0.9816 | 1.9755 | 1.9308 | 1.8956 | 1.5315 | 1.5279 | 1.4592 | 39.4491 | 29.8837 | 31.0140 |
| CNN-LSTM-GRU | 0.9877 | 0.9869 | 0.9832 | 1.8660 | 1.8900 | 1.7843 | 1.4418 | 1.4996 | 1.2523 | 38.1749 | 23.7538 | 10.5160 |

| Models | R (Training) | R (Verification) | R (Predicting) | RMSE (Training) | RMSE (Verification) | RMSE (Predicting) | MAE (Training) | MAE (Verification) | MAE (Predicting) | MAPE % (Training) | MAPE % (Verification) | MAPE % (Predicting) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| ANN | 0.6642 | 0.6687 | 0.6069 | 49.3483 | 43.9792 | 58.6085 | 30.9600 | 30.8499 | 37.3071 | 50.6661 | 67.8641 | 58.5394 |
| RNN | 0.6928 | 0.6769 | 0.6366 | 47.6149 | 43.8190 | 57.0347 | 30.3719 | 30.4301 | 34.7349 | 49.8734 | 66.8581 | 57.9923 |
| CNN | 0.7104 | 0.7017 | 0.6992 | 44.8526 | 41.8625 | 52.9328 | 28.9693 | 28.7527 | 32.6053 | 47.9215 | 59.0610 | 55.4945 |
| GRU | 0.7118 | 0.7018 | 0.7169 | 44.7503 | 41.6705 | 52.4547 | 28.8234 | 28.6599 | 32.1501 | 46.9836 | 45.4873 | 55.3527 |
| LSTM | 0.7121 | 0.7095 | 0.7468 | 44.6990 | 41.5458 | 52.2456 | 28.7508 | 27.3973 | 31.9676 | 45.1820 | 43.7496 | 54.9684 |
| CNN-GRU | 0.7136 | 0.7100 | 0.7530 | 44.5125 | 41.4811 | 52.1634 | 28.7298 | 27.3589 | 31.8863 | 41.2146 | 42.6950 | 49.3238 |
| CNN-LSTM | 0.7269 | 0.7181 | 0.7617 | 44.3821 | 41.3459 | 50.1189 | 28.3015 | 27.2776 | 31.1853 | 38.7615 | 41.7658 | 48.9628 |
| CNN-LSTM-GRU | 0.7412 | 0.7343 | 0.7629 | 44.3618 | 41.2949 | 48.0323 | 28.2407 | 26.9292 | 31.1680 | 33.7869 | 41.4275 | 44.7220 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Guo, Q.; He, Z.; Wang, Z.; Qiao, S.; Zhu, J.; Chen, J. A Performance Comparison Study on Climate Prediction in Weifang City Using Different Deep Learning Models. Water 2024, 16, 2870. https://doi.org/10.3390/w16192870
