Abstract

In the traditional surface irrigation system of Vega Baja del Segura (Spain), large amounts of floating waste accumulate at certain points of the river, irrigation channels and drainage ditches, causing malfunctions of the irrigation network and raising social problems related to the origins of the waste. This work proposes a standardized and quick methodology to characterize the floating waste in order to detect changes in its amount and composition. A dataset of 477 images of floating plastic items in different environments was created and used to train an algorithm based on YOLOv5s. The mean Average Precision of the trained algorithm was 96.9%, and the detection speed was 81.7 ms per image. Overhead photographs were taken with an unmanned aerial vehicle at strategic points of the river and channels, and the automatic count of floating objects in them was compared with a manual count. Both methods showed good agreement, confirming that water bottles were the most abundant type of floating waste (95%). The automatic count reduced the required time and eliminated human bias in the image analysis of the floating waste. This procedure can be used to test the reach of corrective measures implemented by local authorities to prevent floating waste in the river.

1. Introduction

The presence of plastic waste in freshwater is an increasing environmental issue. Large debris can obstruct water flow, increasing flood risk in urban areas and affecting local infrastructure, causing economic losses. Plastic debris accumulates on riverbanks, potentially affecting tourism or real estate value. Dams, water inlets, sluices and canals can act as accumulation zones for macroplastics. A significant issue is the aesthetic pollution and loss of recreational value of these water bodies. Visible waste degrades the public perception of rivers and lakes, reducing their appeal for recreational activities such as swimming, fishing and tourism, which can have an adverse economic impact on local communities [1,2,3].

Plastic waste in aquatic ecosystems is currently of great concern because of its abundance in rivers and oceans [4] and its persistence there [3]. Several studies have established that the main source of marine plastic pollution is land-based, due to littering, plastic bag usage and solid waste disposal [5], and rivers are the link connecting land surfaces to the seas and oceans. Since there is evidence that not all the plastic that enters a river is released into the ocean [3], there is growing interest in characterizing the flow of macroplastic residues in rivers to identify and design measures to reduce global plastic pollution [6].

Surface irrigation systems in traditionally irrigated river plains extend the water flow network, increasing the likelihood of land-based waste reaching the oceans. In these surface irrigation systems, the effect of floating woody debris in channels and reservoirs has been studied because debris blocks the water flow and increases the reference head on flow control structures [7]. Automated trash rack removal systems have been used to protect automated gates in canal automation projects [8], but the occurrence of floating plastic waste in irrigation channels has not been reported in the scientific literature.

In the Segura River and the traditional surface irrigation system of Vega Baja del Segura, in southeast Spain, which dates from the 13th century and is still in use, a large amount of floating waste accumulates at certain points, causing problems in the distribution of irrigation water in channels and drainage ditches [9]. The floating debris found in the river and irrigation network has varied origins: predominantly plant waste from the river itself, such as reeds, as well as waste of anthropogenic origin [10]. The specific land-based sources of plastic waste in the Segura River include domestic activities, agricultural practices and industrial processes. Among the items identified, plastic water bottles are the most significant contributor, comprising 74.2% of the waste. Containers of domestic origin, such as food containers and hygiene products, also play a significant role, alongside agricultural waste such as bottles and cans of phytosanitary products [9,10].

Besides impairment to the functioning of infrastructure, floating waste causes economic damage to farmers because they must face the cost of removal of floating waste, or the fines imposed by the river authority if they do not keep their channels clean and allow the discharge of floating material into the river [9].

Local authorities have proposed awareness campaigns aimed at different sectors of the population as an effective measure to prevent plastic waste from reaching the Segura River. However, for these campaigns to be effective, the sector of the population they target must be defined. Knowing the type of plastic found in the irrigation channels can reveal whether this waste is predominantly of agricultural origin, in which case the campaigns should be addressed to farmers, or results from the daily activities of the general population.

Quantifying and characterizing floating debris has been attempted using different approaches, such as software developed to quantify large amounts of waste in the Pacific Ocean [11], a deep learning-based methodology to detect floating macroplastics in river surface images in Jakarta [12], and APLASTIC-Q, an algorithm based on convolutional neural networks (CNN) that classifies (PLD-CNN) and quantifies (PLQ-CNN) litter items in classes such as water bottles, Styrofoam, canisters, cartons, bowls, shoes, polystyrene packaging, cups, textiles and small or large carry bags on beaches and in riverine systems in Cambodia [13]. Recent works have already applied YOLO to detect plastics in water [14] and compared different configurations of YOLOv5 for detecting bottles and cartons in images taken with unmanned surface vehicles [14,15].

Advances in deep-learning methods have boosted research in object detection, giving rise to a wide range of approaches based on deep neural networks. Traditional two-stage methods, such as the region-based convolutional neural network (R-CNN) family of algorithms [16,17,18], are thus distinguished from representative single-stage methods, such as the YOLO family of algorithms [14,15,19].

The introduction of the region-based convolutional neural network (R-CNN) by Girshick et al. in 2014 [20] marked a milestone in the development of deep learning-based object detection methods. Although methods like R-CNN have demonstrated high accuracy, they are perceived as slow [21]. Unlike two-stage methods, YOLO does not need to generate candidate regions; instead, it divides the image into a grid of cells, each predicting bounding boxes, and detects objects in a single step, thus streamlining the process [14]. In a study on garbage detection in water, Faster R-CNN performed unsatisfactorily because it was less accurate than YOLOv5 [14]. According to [15], YOLOv5 is currently the first widely adopted single-stage detector that outperforms two-stage detectors. The better performance of YOLOv5 has been documented in challenging environments, such as the automatic identification of filaments, protozoa, spherical flocs and open flocs in microscopic images from wastewater treatment plants, where YOLOv5 achieved an mAP of 67.0%, outperforming Faster R-CNN by 15% [21], and the identification of four biological categories in underwater conditions, where YOLOv5s, the lightest model with the fewest parameters, obtained an mAP of 84.9% [15]. According to several authors [14,15,21], YOLOv5s-based methodologies outperformed other deep-learning methods such as Faster R-CNN in similar applications, particularly in challenging environments. YOLOv5s is noted for its higher mean Average Precision (mAP) and faster detection speed, making it more suitable for real-time applications than two-stage detectors like R-CNN.
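To make the grid idea concrete, the following minimal Python sketch (an illustration, not YOLOv5 source code) computes which grid cell is responsible for an object whose bounding-box center falls at a given pixel position. It assumes the simplified rule of the original YOLO formulation, in which only the cell containing the center predicts the object; YOLOv5's actual target assignment is more elaborate (it also uses neighboring cells and anchor-shape matching), which this sketch omits. The strides 8, 16 and 32 correspond to YOLOv5's three detection scales, 1024 pixels is the training image size used later in this study, and the box center is hypothetical.

```python
def grid_size(img_size: int, stride: int) -> int:
    """Number of cells along each side of a square feature map."""
    return img_size // stride


def responsible_cell(x_center: float, y_center: float, stride: int) -> tuple[int, int]:
    """(column, row) of the grid cell that contains the box center (pixel coordinates)."""
    return int(x_center // stride), int(y_center // stride)


if __name__ == "__main__":
    img_size = 1024  # input size used for training in this study
    for stride in (8, 16, 32):  # YOLOv5's three detection scales (P3, P4, P5)
        n = grid_size(img_size, stride)
        cell = responsible_cell(500.0, 300.0, stride)  # hypothetical box center
        print(f"stride {stride}: {n}x{n} grid, center falls in cell {cell}")
```

Coarser grids (larger strides) cover more of the image per cell and suit larger objects, while the fine 8-pixel grid gives small bottles a cell of their own.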

Despite the growing body of work on image detection for litter quantification in the environment, most studies detect isolated waste items against a smooth water surface [11,14], or detect floating waste with video cameras mounted on bridges [12] or surface vehicles [22]. No scientific work has addressed the detection of clustered floating waste, such as the waste that accumulates at booms and retaining structures of irrigation networks, using the YOLOv5 algorithm, perhaps because one of the main limitations of YOLOv5 is its potential difficulty in accurately detecting small or overlapping objects in cluttered environments, which are common in floating waste scenarios.

The objective of this work is to propose a standardized and quick methodology to count and characterize the floating waste accumulated in the irrigation system to detect changes in its amount and composition. This objective will be attained by training an algorithm to automatically detect the different residues for their classification and quantification through image analysis using YOLOv5s, testing its performance on previous images counted manually and applying the model to overhead photographs taken with an unmanned aerial vehicle (UAV) at various strategic points of the Segura River and irrigation channels in the Vega Baja. The aim of the process is to eliminate the subjective component of manual quantification and characterization of floating waste to accurately describe the problem’s magnitude.

While the study itself might not directly solve the problem of floating debris, its objective is to improve the detection and monitoring of such debris using UAVs and deep-learning techniques. This tool is devised to enhance data acquisition and analysis and to improve the understanding of floating waste accumulation patterns, which could then inform targeted cleanup efforts and policymaking. The tool developed in this work will be made publicly available to impartially evaluate the effectiveness of the potential measures put in place by public authorities to solve the problem of floating debris accumulation in the river and the irrigation networks.

2. Materials and Methods

2.1. Description of the Study Area

The study took place in the Vega Baja region, which is located at the southern end of the province of Alicante, in the Valencian Community, Spain, along the system formed by the Segura River in its last section, and the irrigation and drainage channels that distribute water in the traditional irrigation area of Vega Baja (Figure 1 top).

Figure 1.

Location of the study area in the southern Alicante province (top) and location of the sampling sites along the river, irrigation channels (acequias) and drainage ditches (azarbes) (bottom).

The traditional irrigation of the Vega Baja del Segura has a unique set of hydraulic works that can be classified into two large groups: the dams that take the irrigation water directly from the river and the aqueducts that distribute the water to the traditional irrigated lands through an extensive and complex branched and hierarchical network of irrigation channels and drainage ditches [23]. A thorough description of the site can be found in Rocamora et al. [9].

2.2. Training of the Model

In previous work [9], the waste found in the Segura River and the surrounding irrigation network consisted mainly of plastic water bottles (74.2%), followed by containers of domestic origin such as food containers and personal hygiene and cleaning products (14.9%), and containers of agricultural origin, namely bottles and cans of phytosanitary products (3.8%). Based on this information, we trained the algorithm to identify the following types of waste:

- Different-size plastic water bottles.

- Bleach bottles.

- Detergent bottles.

- Drums of chemical products from agricultural activity.

- Game balls.

2.2.1. Dataset

A carefully curated and diverse dataset was prepared to improve the detection accuracy of YOLOv5. The dataset of 477 images was created by combining images from a previous study conducted in 2019 with additional images of the target object classes taken under the conditions of this study. The selection criteria focused on the types of plastic waste most commonly found in the Segura River and its irrigation network: water bottles, detergent bottles, bleach bottles, agricultural drums and game balls. Of these images, 430 were labelled for training (90%) and 47 for model validation (10%). The dataset is partly available at the Rediumh repository (https://hdl.handle.net/11000/32965, accessed on 25 July 2024), which contains the images taken by the authors.
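The 90/10 partition described above can be reproduced with a few lines of standard-library Python. This is a minimal sketch that assumes a random split with a fixed seed; the source does not state how the 47 validation images were chosen, and the file names below are hypothetical.

```python
import random


def split_dataset(image_names, val_fraction=0.1, seed=0):
    """Shuffle image names reproducibly and split them into (train, val) lists."""
    names = sorted(image_names)          # sort first so the result does not depend on input order
    rng = random.Random(seed)            # local RNG: does not disturb the global random state
    rng.shuffle(names)
    n_val = int(len(names) * val_fraction)  # floor, so 477 images give 47 for validation
    return names[n_val:], names[:n_val]


if __name__ == "__main__":
    images = [f"img_{i:03d}.jpg" for i in range(477)]  # hypothetical file names
    train, val = split_dataset(images)
    print(len(train), len(val))  # 430 47
```

In the usual YOLOv5 layout, each image's .txt label file (same file stem) has to be moved into the matching labels folder alongside its image.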

2.2.2. Software

To process the images, several computer programs were used in sequence. The software used to label the images and carry out the model training was the following:

Makesense (https://www.makesense.ai/index.html, accessed on 25 July 2024): a free online image-labelling tool that does not require any installation. Makesense was used to label the different types of waste identified in all 477 images. After labelling, the annotations were exported in YOLO format.
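For reference, the YOLO format stores one text file per image, with one line per object: a zero-based class index followed by the box center x, center y, width and height, all normalized by the image width and height. The sketch below converts such a line back to pixel corner coordinates. The class order in CLASSES is an assumption that mirrors the label list given in Section 2.2.3, not the authors' exported file.

```python
# Hypothetical class order, assumed to follow the label list used in Makesense.
CLASSES = ["water bottle", "detergent bottle", "bleach bottle", "agricultural drum", "ball"]


def yolo_line_to_box(line: str, img_w: int, img_h: int):
    """Convert 'cls xc yc w h' (normalized) to (class name, x1, y1, x2, y2) in pixels."""
    cls, xc, yc, w, h = line.split()[:5]  # ignore any extra column such as a confidence
    xc, w = float(xc) * img_w, float(w) * img_w
    yc, h = float(yc) * img_h, float(h) * img_h
    return CLASSES[int(cls)], xc - w / 2, yc - h / 2, xc + w / 2, yc + h / 2


if __name__ == "__main__":
    # A 200 x 100 px box centered in a 1000 x 500 px image, labelled as class 0.
    print(yolo_line_to_box("0 0.5 0.5 0.2 0.2", 1000, 500))
```

Because coordinates are normalized, the same label file remains valid when the image is resized for training.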

YOLOv5 (https://pytorch.org/hub/ultralytics_yolov5/, accessed on 25 July 2024) [19]: a single-stage, regression-based object detection network. This model reformulates object detection as a regression problem, allowing for high processing speed. Its architecture is made up of three main components: backbone, neck and head. The backbone module extracts features from the input image using Focus, BottleneckCSP (cross stage partial network) and SPP (spatial pyramid pooling) modules and transmits them to the neck module; the SPP module mainly increases the receptive field of the network and captures features at different scales [24,25]. The neck module generates a feature pyramid based on PANet (Path Aggregation Network), improving the ability to detect objects at different scales by bidirectionally fusing low-level spatial features with high-level semantic features. The head module produces the detection boxes, indicating the category, coordinates and confidence, by applying anchor boxes to the multiscale feature maps generated by the neck module. There are four versions of the YOLOv5 model, YOLOv5s, YOLOv5m, YOLOv5l and YOLOv5x, which differ in network depth and width.
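As a worked illustration of what the head produces, the sketch below counts the raw candidate boxes predicted for one image before confidence filtering and non-maximum suppression. It assumes the default YOLOv5 configuration of three detection scales (strides 8, 16 and 32) with three anchor boxes per grid cell, each anchor outputting 5 + nc values (four box coordinates, one objectness confidence and nc class scores); nc = 5 is the number of waste classes in this study and 1024 pixels is the training image size.

```python
def head_output(img_size: int, nc: int, strides=(8, 16, 32), anchors_per_cell=3):
    """Return per-scale grid sizes, values per anchor and the total number of raw predictions."""
    values_per_anchor = 5 + nc  # x, y, w, h, objectness + one score per class
    grids = [img_size // s for s in strides]
    total = sum(g * g * anchors_per_cell for g in grids)
    return grids, values_per_anchor, total


if __name__ == "__main__":
    grids, per_anchor, total = head_output(img_size=1024, nc=5)
    print(grids, per_anchor, total)  # [128, 64, 32] 10 64512
```

Almost all of these tens of thousands of candidates are discarded by the confidence threshold and non-maximum suppression, leaving only the final bounding boxes.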

Google Colaboratory (https://colab.research.google.com/, accessed on 25 July 2024): a product of Google Research that allows any user to write and execute arbitrary Python code in the browser. It is especially suitable for machine learning, data analysis and education tasks. From a more technical standpoint, Colaboratory is a zero-configuration hosted Jupyter notebook service that provides access to computing resources such as GPUs.

Jupyter Notebook (https://jupyter.org/, accessed on 25 July 2024) documents combine executable Python code with text that can include paragraphs, links, images, etc. The text is often written using the Multi Markdown notation [26]. The notebooks contained the code and images that determined the model's behavior.

Python (https://www.python.org/, accessed on 25 July 2024) is a programming language created by Guido van Rossum in 1991 [27]. It is high-level and interpreted, with special emphasis on code readability. It uses dynamic typing and is a multi-paradigm language, supporting object-oriented, structured and functional programming, among other paradigms. It is extensible: it consists of a core to which modules and libraries can be added as required.

Sublime Text (https://www.sublimetext.com/, accessed on 25 July 2024) (© Sublime HQ Pty Ltd., Woollahra, Sydney) is a text editor for writing code in almost any file format. Sublime Text was used to create and edit the text documents necessary for training the YOLOv5 model. Specifically, it was used to define the number of classes, the names of these classes, and the paths to the training and validation folders.

2.2.3. Procedure

The first step was to label the images with Makesense, using the following labels: water bottle, detergent bottle, bleach bottle, agricultural drum, and ball. The labelled images were then divided into two folders: one called “train”, with the images used to train the algorithm, and one called “val”, with the images used to validate the training. Finally, the images and their labels were exported in YOLO format for use with YOLOv5 in Google Colab.

The next step was to create a text document with Sublime Text, where we wrote the number of classes, the name of these classes and the address of our “train” and “val” folders.
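The text document described above corresponds to the dataset configuration file read by YOLOv5, conventionally a YAML file with the keys train, val, nc (number of classes) and names. The helper below is a hypothetical sketch that builds this text from Python instead of a text editor; the folder paths are placeholders, not the paths used by the authors, and the class order is assumed to follow the Makesense labels.

```python
CLASSES = ["water bottle", "detergent bottle", "bleach bottle", "agricultural drum", "ball"]


def make_data_yaml(train_dir: str, val_dir: str, classes: list[str]) -> str:
    """Build the YAML text describing the dataset for YOLOv5 training."""
    names = ", ".join(f"'{c}'" for c in classes)  # quoted because the names contain spaces
    return (
        f"train: {train_dir}\n"
        f"val: {val_dir}\n"
        f"nc: {len(classes)}\n"
        f"names: [{names}]\n"
    )


if __name__ == "__main__":
    print(make_data_yaml("/content/dataset/train", "/content/dataset/val", CLASSES))
```

The returned text can be saved under any name ending in .yaml and passed to YOLOv5 training through its --data argument.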

YOLOv5 was downloaded in a Google Colaboratory notebook. The abovementioned “train” and “val” folders were added, together with the text document containing the addresses of these folders. The image size, 1024 pixels, and the number of epochs, 126, were specified in a new cell. These parameters were chosen based on a balance between processing efficiency and accuracy. Image size is the resolution, in pixels along the longest side, to which each image is resized before being passed through the network: a larger value preserves more detail, which helps in detecting small objects, at the cost of more memory and computation. The number of epochs is a hyperparameter that defines the number of times the learning algorithm works through the entire training dataset; in one epoch, each sample in the training dataset has had a chance to update the internal model parameters. The number of epochs is traditionally large, often hundreds or thousands, allowing the learning algorithm to run until the model error has been sufficiently minimized [28]. The number of epochs was selected to ensure that the model had sufficient exposure to the training data to minimize error; at epoch 126, the model had stopped improving and its performance had stabilized. Once the training cell was executed, a folder was created with the validation and prediction results, together with a folder of graphs analyzing the effectiveness of the trained algorithm.
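To illustrate what the image-size setting means in practice, the sketch below computes how a photograph is scaled and padded to a 1024-pixel input. It assumes a simplified, YOLOv5-style letterbox (preserve the aspect ratio, scale the longest side to the target size, pad the shorter side to a square) and an 8000 x 6000-pixel frame, which is an assumed layout for the camera's 48 MP sensor; YOLOv5's inference pipeline may pad only to a multiple of the stride rather than to a full square. In the YOLOv5 repository, the training settings are passed to train.py as arguments, e.g. `--img 1024 --batch-size 2 --epochs 126`.

```python
def letterbox_shape(width: int, height: int, target: int = 1024):
    """Scale (width, height) so the longest side equals target; return new size, scale, padding."""
    scale = target / max(width, height)
    new_w, new_h = round(width * scale), round(height * scale)
    pad_w, pad_h = target - new_w, target - new_h  # total padding needed to reach a square input
    return (new_w, new_h), scale, (pad_w, pad_h)


if __name__ == "__main__":
    size, scale, pad = letterbox_shape(8000, 6000)  # assumed 48 MP frame
    print(size, scale, pad)  # (1024, 768) 0.128 (0, 256)
```

Under this assumption, each network input pixel summarizes about 7.8 x 7.8 original pixels (1 / 0.128), which shows why small or partly hidden bottles become harder to detect when the image size is reduced.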

2.3. Model Assessment

To verify the efficiency of the model, indicators such as precision, recall, mAP (mean Average Precision), detection speed and F1 score were used for evaluation in this study [29].

Precision (Equation (1)) is the proportion of true positive samples among all samples predicted as positive by the model.

$$P = \frac{TP}{TP + FP} \tag{1}$$

where TP is the number of true positive cases, and FP is the number of false positive cases.

Recall (Equation (2)) indicates the proportion of actual positive samples that are correctly predicted as positive by the model.

$$R = \frac{TP}{TP + FN} \tag{2}$$

where TP is as in Equation (1), and FN is the number of false negative cases.

Typically, there is a trade-off between precision and recall: when one increases, the other tends to decrease. To balance the effects of precision and recall and evaluate a model more comprehensively, AP (Average Precision) can be used as a comprehensive evaluation index.

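The value of AP (Equation (3)) is the area under the precision-recall (PR) curve, a higher value meaning better model performance. The mAP is the mean of the AP values over the N object classes.

$$AP = \int_{0}^{1} P(R)\,dR \tag{3}$$

$$mAP = \frac{1}{N}\sum_{i=1}^{N} AP_i$$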
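Equation (3) is usually evaluated numerically. The sketch below builds a precision-recall curve for one class from detections ranked by confidence, then integrates it with all-point interpolation (precision made non-increasing before summing rectangle areas), as in the PASCAL VOC protocol. It is an illustrative implementation under these assumptions, not the code used in this study: YOLOv5's own evaluation uses a 101-point interpolated variant, so values can differ slightly. Whether a detection is a true positive is assumed to have been decided beforehand by IoU matching, and the detections in the example are hypothetical.

```python
def pr_curve(detections, n_ground_truth):
    """Cumulative recall and precision for (confidence, is_true_positive) detections of one class."""
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for _, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / n_ground_truth)
        precisions.append(tp / (tp + fp))
    return recalls, precisions


def average_precision(recalls, precisions):
    """Area under the PR curve with all-point interpolation (PASCAL VOC style)."""
    r = [0.0, *recalls, 1.0]
    p = [0.0, *precisions, 0.0]
    # Precision envelope: make precision non-increasing as recall grows.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Sum rectangle areas; steps where recall does not change contribute zero.
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


if __name__ == "__main__":
    dets = [(0.9, True), (0.8, False), (0.7, True)]  # hypothetical detections, 2 labelled objects
    rec, prec = pr_curve(dets, n_ground_truth=2)
    print(f"AP = {average_precision(rec, prec):.3f}")
```

The mAP then follows as the mean of the per-class AP values, e.g. `sum(aps) / len(aps)`.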

The F1 score (Equation (4)) is the harmonic mean of the precision (Equation (1)) and recall (Equation (2)) of the classification model.

$$F_1 = \frac{2 \times P \times R}{P + R} \tag{4}$$
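Equations (1), (2) and (4) translate directly into code. As a consistency check, the sketch below recomputes the F1 score from the precision (92.04%) and recall (97.07%) reported in Section 3.1; the result, about 94.49%, is consistent with the reported 94.48% given rounding of the inputs. The TP, FP and FN counts in the second example are hypothetical.

```python
def precision(tp: int, fp: int) -> float:
    return tp / (tp + fp)  # Equation (1)


def recall(tp: int, fn: int) -> float:
    return tp / (tp + fn)  # Equation (2)


def f1_score(p: float, r: float) -> float:
    return 2 * p * r / (p + r)  # Equation (4): harmonic mean of precision and recall


if __name__ == "__main__":
    print(f"F1 from reported P and R: {f1_score(0.9204, 0.9707):.2%}")
    p, r = precision(90, 10), recall(90, 5)  # hypothetical counts
    print(f"P = {p:.3f}, R = {r:.3f}, F1 = {f1_score(p, r):.3f}")
```

Because F1 is a harmonic mean, it stays close to the lower of the two values, so a model cannot obtain a high F1 by maximizing only precision or only recall.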

2.4. Image Acquisition

The images were obtained with a UAV, an Autel EVO 2 quadrotor. The UAV specifications are as follows: weight: 1127 g, wheelbase: 397 mm, removable 7100 mAh 11.55 V batteries, maximum flight time: 40 min, maximum horizontal flight speed: 20 m/s, maximum flight distance: 25 km. Camera specifications: effective pixels: 48 MP, field of view: 79° and lens dimensions: 25.6 mm. The Autel EVO 2 quadrotor was chosen for its high-resolution camera, long flight time, and significant range. These features made it suitable for capturing detailed images over large areas, ensuring comprehensive coverage of the waste accumulation sites along the Segura River and its irrigation channels.

To define the image acquisition sites, we kept the same garbage accumulation sites along the river and the irrigation channel network described in Rocamora et al. [9]. The most relevant accumulation sites were determined in a survey of the river layout along the Vega Baja, excluding those in urban areas, where regulations do not allow photographic flights. Regarding the accumulation sites in the channels, those indicated by the managers of the irrigation communities as most problematic were selected. Additional sites were included in this study because the Segura River Basin Authority (CHS) installed new retention booms in 2022, intended to capture floating waste more effectively.

Accumulation sites surveyed in this study are described in the following list:

- Point 1 (ID1): Azud de las Norias,

- Point 2 (ID2): Boom at the Town Mill,

In 2022, a new sampling point was added due to a new retention boom at the entrance to the city of Orihuela. This site is identified in the present work as ID2.a to maintain order in the direction of the river flow.

- Point 2.a (ID2.a): Boom at the entrance of Orihuela,

- Point 3 (ID3): Boom in Almoradí,

- Point 4 (ID4): Azud de Alfeitamí,

- Point 5 (ID5): Sluices on the inlet of the irrigation community of Riegos de Levante,

- Point 6 (ID6): Acequia de Callosa, siphon at the crossing with Acequia Vieja de Almoradí,

- Point 7 (ID7): Azarbe de Cebadas, siphon at the crossing with drainage channels del Convenio,

- Point 8 (ID8): Guardamar floating screen.

In 2022, waste retention booms came into operation in the mouths of drainage channels discharging near point 8. The new booms were also considered as additional evaluation points for the present study, and are numbered as ID8.a, ID8.b, ID8.c, ID8.d and ID8.e.

These eight sites can be seen in Figure 1 (bottom) numbered along the river flow.

Flight plans were programmed for each sampling point, with specific take-off and landing sites. In this study, three flights were carried out at each of the selected points along the river and channels, on 28 July, 8 September and 31 October 2022. The flights were made at heights of 5 m, 10 m and 20 m, between 10:00 and 14:00 CET, under clear sky conditions, ensuring clear and consistent image capture across the sampling points. The UAV's camera took the aerial images in a zenithal view, and the number of photos at each point varied between one and nine, depending on the extent of the waste accumulation in the river.
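Flight height sets both the ground area covered by each photograph and the ground size of a pixel, which in turn limits how small an object the detector can resolve. The worked sketch below assumes a zenithal view over flat water, that the 79° field of view quoted in Section 2.4 is the diagonal field of view, and an 8000 x 6000-pixel frame for the 48 MP sensor; these are assumptions for illustration, not values reported by the authors.

```python
import math


def footprint_and_gsd(height_m: float, fov_diag_deg: float = 79.0,
                      width_px: int = 8000, height_px: int = 6000):
    """Ground footprint (m x m) and ground sampling distance (cm/pixel) for a zenithal photo."""
    diag_ground = 2 * height_m * math.tan(math.radians(fov_diag_deg / 2))
    diag_px = math.hypot(width_px, height_px)
    gsd_m = diag_ground / diag_px  # metres of ground covered by one pixel
    return (width_px * gsd_m, height_px * gsd_m), gsd_m * 100


if __name__ == "__main__":
    for h in (5, 10, 20):  # flight heights used in this study
        (fw, fh), gsd_cm = footprint_and_gsd(h)
        print(f"{h:>2} m: {fw:.1f} m x {fh:.1f} m, {gsd_cm:.2f} cm/pixel")
```

Under these assumptions, a photograph taken at 20 m covers roughly 26 m x 20 m at about 0.33 cm per pixel; once the image is downscaled to a 1024-pixel network input, each input pixel covers about 2.6 cm, which is still well below the size of a water bottle.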

A total of 61 images, including all sampling dates and points, were analyzed using the algorithm trained in Section 2.2. The count results for each image were transferred to an Excel spreadsheet for further analysis.
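The transfer of per-image counts to a spreadsheet can be automated. The sketch below assumes that detections were saved with YOLOv5's detect.py using the --save-txt option, which writes one YOLO-format .txt file per image with the class index first on each line (by default under runs/detect/exp/labels); images without detections may have no file. It tallies objects per class and per image into a CSV file that Excel can open, with a final row of class percentages. The class order and paths are assumptions for illustration.

```python
import csv
from collections import Counter
from pathlib import Path

CLASSES = ["water bottle", "detergent bottle", "bleach bottle", "agricultural drum", "ball"]


def count_labels(label_dir: str) -> dict[str, Counter]:
    """Count detected objects per class for every .txt label file in label_dir."""
    counts = {}
    for path in sorted(Path(label_dir).glob("*.txt")):
        lines = [ln for ln in path.read_text().splitlines() if ln.strip()]
        counts[path.stem] = Counter(CLASSES[int(ln.split()[0])] for ln in lines)
    return counts


def write_csv(counts: dict[str, Counter], out_csv: str) -> None:
    """One row per image and one column per class, plus a final row of class percentages."""
    totals = Counter()
    with open(out_csv, "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(["image", *CLASSES])
        for image, c in counts.items():
            writer.writerow([image, *(c[name] for name in CLASSES)])
            totals.update(c)
        grand = sum(totals.values()) or 1  # avoid division by zero when nothing was detected
        writer.writerow(["% of total", *(round(100 * totals[n] / grand, 2) for n in CLASSES)])
```

A typical call would be `write_csv(count_labels("runs/detect/exp/labels"), "counts.csv")`, adjusting the folder to wherever detect.py saved its results.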

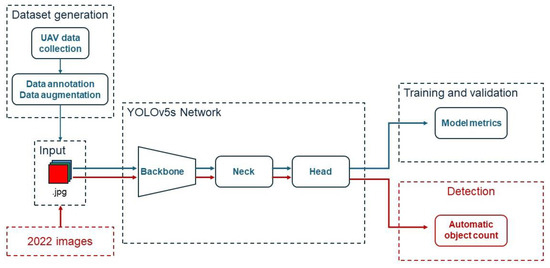

Figure 2 depicts the conceptual framework of the methodology used to train and validate the model and to automatically detect the objects in a dataset acquired in the same environment during 2022.

Figure 2.

Conceptual framework of the object detection methodology. Blue color indicates the model training and validation, and red color indicates the process of object detection on the new images obtained in 2022.

2.5. Comparison of Automatic and Manual Count

To test the performance of the model, we manually marked and counted the objects appearing in the 61 images analyzed with the model, with the help of the ImageJ 1.54g application [30]. We recorded the counting results by date and object class, as well as the time spent on the process.

The agreement between both methods was assessed using the methodology proposed by Bland and Altman [31], by studying the mean difference and constructing limits of agreement.
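The Bland-Altman quantities can be computed with the Python standard library. In this sketch, the difference is taken as manual minus automatic count, so a positive bias means that the model counts fewer objects, matching the sign convention used in Section 3.3; the 95% limits of agreement are the bias plus or minus 1.96 sample standard deviations of the differences. The paired counts in the example are hypothetical.

```python
from statistics import mean, stdev


def bland_altman(manual, automatic):
    """Bias and 95% limits of agreement for paired counts (difference = manual - automatic)."""
    if len(manual) != len(automatic):
        raise ValueError("manual and automatic counts must be paired")
    diffs = [m - a for m, a in zip(manual, automatic)]
    bias = mean(diffs)
    sd = stdev(diffs)  # sample standard deviation of the differences
    return bias, bias - 1.96 * sd, bias + 1.96 * sd


if __name__ == "__main__":
    manual = [10, 20, 30, 40]  # hypothetical paired counts
    automatic = [8, 19, 27, 36]
    bias, lower, upper = bland_altman(manual, automatic)
    print(f"bias = {bias:.2f}, limits of agreement = [{lower:.2f}, {upper:.2f}]")
```

Working backwards, the limits reported for water bottles (−32.25 to 55.05) are centered on the reported bias of 11.4 and imply a standard deviation of the differences of about 22.3 (43.65 / 1.96).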

3. Results

3.1. Results of the Model Calibration and Performance Assessment

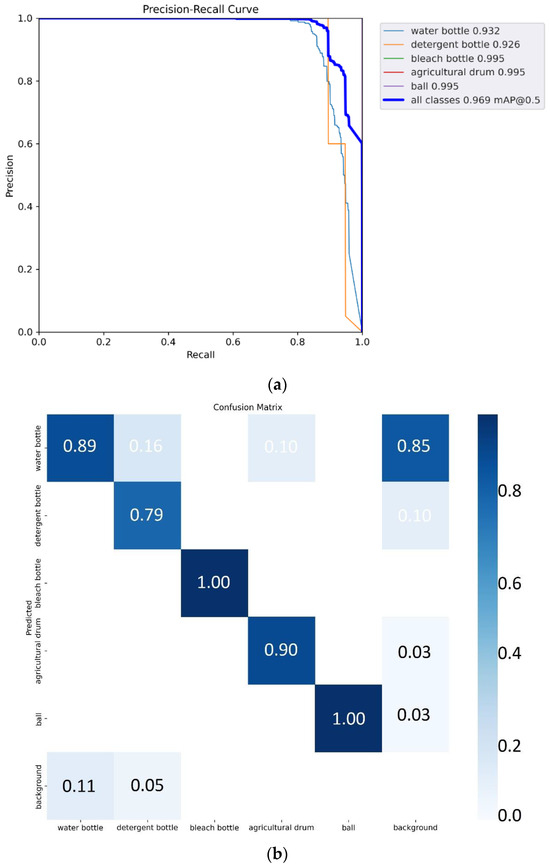

Model training was run for 126 epochs. The model's performance was validated using precision, recall, mean Average Precision (mAP) and the F1 score, as stated in Section 2.3. These metrics were continuously monitored over the 126 epochs to ensure that the model was learning effectively and improving its accuracy in detecting and classifying the different types of waste. Figure 3a shows how the mAP@0.5 improved as training progressed: a rapid increase at the beginning was followed by a gradual stabilization, and by around 126 epochs the value had plateaued at an mAP@0.5 of 0.96, indicating that the algorithm had reached its maximum performance for this metric. Figure 3b shows the mAP averaged over intersection over union (IoU) thresholds from 0.5 to 0.95. It increased steadily until around 120 epochs, where it stabilized at about 0.73, indicating that the algorithm had reached its maximum performance for this more stringent metric. Figure 3c illustrates the precision of the algorithm during training. Initially, the precision fluctuated significantly, but as training progressed, the fluctuations decreased and the precision stabilized around 0.95 from 120 epochs onwards. Continuing training beyond this point therefore did not provide significant additional improvements in these metrics. A batch size of 2 was used because it produced a good confusion matrix, and a higher batch size would have consumed more computing time.

Figure 3.

Precision graphs: (a) mAP@0.5, (b) mAP@0.5 to 0.95, (c) precision.
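The thresholds in mAP@0.5 and mAP@0.5:0.95 refer to the intersection over union (IoU) between a predicted box and a labelled box: under the usual matching rule, a detection counts as a true positive only if its IoU with a labelled box of the same class reaches the threshold. mAP@0.5:0.95 averages the mAP over the ten thresholds 0.50, 0.55, ..., 0.95, which is why it is lower (0.73) than mAP@0.5 (0.96). A minimal sketch of the IoU computation and the threshold sweep follows; the boxes are hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    return inter / (area_a + area_b - inter)


# The ten IoU thresholds averaged in mAP@0.5:0.95.
THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]

if __name__ == "__main__":
    truth = (100, 100, 200, 200)  # labelled bottle (hypothetical)
    pred = (110, 110, 210, 210)   # slightly shifted prediction
    v = iou(truth, pred)
    passed = [t for t in THRESHOLDS if v >= t]
    print(f"IoU = {v:.3f}; counts as a true positive at thresholds {passed}")
```

A prediction shifted by only 10 pixels already fails the stricter thresholds, which explains why the mAP@0.5:0.95 curve plateaus well below the mAP@0.5 curve.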

Model performance can be assessed by average precision, precision, recall, test time and F1 score. In this experiment, a confidence threshold of 0.5 was set, and precision, recall and F1 score were derived on this basis. The results obtained for this model were as follows: training time of 3 h 7 min; precision of 92.04%; recall of 97.07%; mAP of 96.90% and an F1 score of 94.48%.

Because the objective of this work was to identify the different residues quickly and accurately, the mAP (96.90%) and the detection speed (81.7 ms per image) were used as the main evaluation metrics; the higher the mAP value, the better the detection result.

Running the training cell also produced the confusion matrix (Figure 4b), which allowed us to visualize the performance of the supervised learning algorithm. Each column of the matrix represents the number of predictions of each class, while each row represents the real class of each object; that is, it shows which types of successes and errors the model made when learning from the data [32]. In the present case, the probability that the detected objects were actually those objects was high (89%), but the background behaved unusually: it was confused with water bottles up to 85% of the time. This result might have been due to the fact that the analyzed photos were cropped to show only the waste layer, not the water on which it floats, and beneath this upper waste layer there were always more plastic water bottles.

Figure 4.

The model’s precision–recall curve (a) and confusion matrix (b).
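To clarify how a background entry arises in the confusion matrix, the sketch below builds a row-normalized matrix from matched (real class, predicted class) pairs, following the convention stated above (rows are real classes, columns are predictions). It assumes that a prediction matching no labelled object is recorded with the real class "background" and that a labelled object missed by the model is recorded with the predicted class "background". Under this convention, the background row describes false positives only, so a value of 0.85 there would mean that 85% of spurious detections were labelled as water bottles. The pairs below are hypothetical.

```python
from collections import Counter

LABELS = ["water bottle", "detergent bottle", "bleach bottle",
          "agricultural drum", "ball", "background"]


def confusion_matrix(pairs, labels=LABELS):
    """Row-normalized matrix: rows are real classes, columns are predicted classes."""
    counts = Counter(pairs)
    matrix = {}
    for real in labels:
        row_total = sum(counts[(real, pred)] for pred in labels)
        matrix[real] = {
            pred: counts[(real, pred)] / row_total if row_total else 0.0
            for pred in labels
        }
    return matrix


if __name__ == "__main__":
    pairs = (
        [("water bottle", "water bottle")] * 9
        + [("water bottle", "background")]      # missed bottle (false negative)
        + [("background", "water bottle")] * 3  # spurious bottles (false positives)
        + [("background", "ball")]              # spurious ball
    )
    m = confusion_matrix(pairs)
    print(m["water bottle"]["water bottle"], m["background"]["water bottle"])  # 0.9 0.75
```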

3.2. Automatic Count of Floating Waste

The algorithm was applied to the images captured throughout 2022 at the 11 sampling points defined in Section 2, Materials and Methods. A total of 61 images were analyzed in the results cell, leading to the automatic counting of each one of them. The YOLO algorithm performed the identification and automatic count of residues on the analyzed images by means of bounding boxes (Figure 5).

Figure 5.

Automatic characterization of waste at sampling point ID6 (top), and point ID8.b (bottom). Date 8 September 2022.

The results of the automatic count of floating objects are presented in Table 1. For each sampling point (in lines), the total of the detected objects is presented for each class (in columns). The results obtained by manual counting using the ImageJ application are also shown in Table 1 along with the automatic ones.

Table 1.

Count of objects detected with the algorithm and with the manual count.

According to the manual count, the most common objects were water bottles, with a proportion of 96%, followed by detergent bottles (2.07%) and bleach bottles (1.12%), while the least numerous were agricultural drums (0.6%) and balls (0.3%). This pattern of class frequencies was found at all sampling points and on all dates. The number of elements fluctuated at some points, showing that there was no continuous accumulation. This was due to cleaning tasks carried out from time to time and to the dynamics of the river and irrigation ditches.

Comparing the two techniques, the automatic count of water bottles was always equal to or lower than the manual count. For the other object classes, the differences between the two counts were more variable.

3.3. Comparison of Automatic Count with Manual Count

The time required for the manual counting of 61 images with the ImageJ application was 7 h and 34 min.

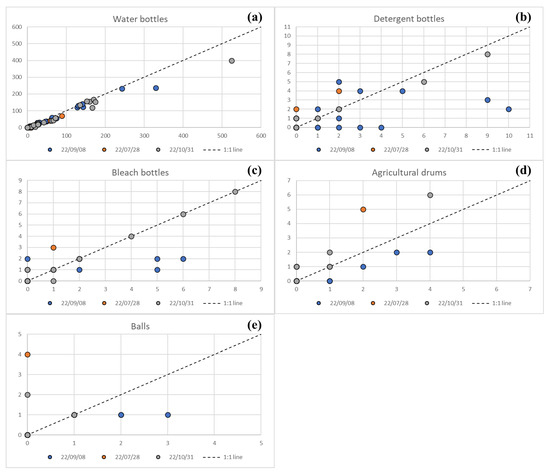

Figure 6 compares the number of objects counted manually with the number counted by the trained algorithm. Each scatter diagram corresponds to one object class, and each point pairs the manual count, taken as reference, on the x-axis with the algorithm count on the y-axis. The axes have been scaled for each object class according to the counted quantities, so that the differences between the two measurements can be seen graphically.

Figure 6.

Comparison between manual (X axes) and automated (Y axes) count for water bottles (a), detergent bottles (b), bleach bottles (c), agricultural drums (d) and balls (e).

Agreement between the manual and automatic object counts was analyzed by calculating the bias and limits of agreement for each object class [31]. For water bottles, we found a bias of 11.4 and limits of agreement from −32.25 to 55.05 units (Figure 6a); for detergent bottles, a bias of 0.28 and limits from −3.22 to 3.78 units (Figure 6b); for bleach bottles, a bias of 0.12 and limits from −1.92 to 2.16 units (Figure 6c); for agricultural drums, a bias of −0.06 and limits from −1.51 to 1.39 units (Figure 6d); and for balls, a bias of −0.32 and limits from −4.44 to 3.8 units (Figure 6e).

For water bottles, detergent bottles and bleach bottles, the model tended to count fewer objects than the manual count (positive bias); conversely, it tended to count more agricultural drums and balls than the manual count. For water bottles, the bias seemed to increase with the number of objects counted, probably because of the stacking of bottles, which could be distinguished in the manual count but not in the automatic one.

4. Discussion

Given the limited availability of models for detecting plastic waste accumulated in inland aquatic environments using UAV images, we introduced this model to improve and facilitate the identification and characterization of plastic waste, in order to expedite cleaning tasks and support the faster application of measures to reduce this problem. The results of this study show excellent performance in computing time and waste identification. The model worked well for both isolated and grouped residues, which were accurately detected.

Our proposed algorithm achieved an mAP50 of 96.9% and a detection speed of 81.7 ms per image. Compared with other research works, such as [14] with an mAP50 of 98.1%, [15] with an mAP50 of 82.9%, and [22] with an mAP50 of 76.12%, we can conclude that our approach provided strong results in detection accuracy combined with a short detection time per image, bearing in mind that these studies used different datasets and imaging conditions.

The algorithm developed in this work could classify different types of plastic containers: water bottles, bottles of hygiene and cleaning products, and cans of chemical products used in agriculture. This distinction among types of plastic bottles is an improvement over the algorithm of Wolf et al. [13], since the type of bottle can indicate its origin. However, our model produces false detections in the background of the images, identifying them as plastic bottles. Water bottles are the most difficult to characterize because they are found in large numbers and often appear stacked together, which alters their apparent shape. In contrast, bleach bottles and drums are easier to identify because they have a characteristic shape and color and are not found in large quantities.

The methodology used in this study, particularly the use of UAVs and YOLOv5 for object detection, could be adapted for monitoring other types of environmental issues, such as wildlife populations, vegetation health, or the spread of pollutants. The key advantage is the ability to cover large areas quickly and analyze the data with minimal human bias.

The automatic counts obtained in this work on three different dates at floating waste accumulation sites agree with those reported by Rocamora et al. [9] in the same environment, who found that water bottles were the most numerous class of waste, whereas bottles and cans of phytosanitary products represented only 3.8% of the elements counted at the sampling points. In our study, over three measurement days and more than eight measuring sites, 95% of the floating items were plastic water bottles (96% by manual count), and only 0.8% were agricultural drums (0.6% by manual count).
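Composition figures such as these are obtained by pooling the detections from all images and dividing each class count by the total. The Python sketch below shows that step. It assumes that each image's detections are available as (class name, confidence) pairs. With YOLOv5's PyTorch Hub interface, for example, such pairs can be read from the `name` and `confidence` columns of `results.pandas().xyxy[0]`. The class labels and the confidence cut-off `min_conf` are hypothetical, not the settings used in this study.

```python
from collections import Counter
from itertools import chain


def class_shares(images, min_conf=0.25):
    """Pool detections from several images and return each class's share (%).

    images: iterable of per-image lists of (class_name, confidence) pairs.
    min_conf: hypothetical confidence cut-off; lower detections are ignored.
    """
    counts = Counter(
        name for name, conf in chain.from_iterable(images) if conf >= min_conf
    )
    total = sum(counts.values())
    if total == 0:
        return {}
    return {name: 100.0 * n / total for name, n in counts.items()}


# Hypothetical detections from two images (class names are illustrative).
image_a = [("water_bottle", 0.91)] * 12 + [("agricultural_drum", 0.80)]
image_b = [("water_bottle", 0.88)] * 7 + [("water_bottle", 0.10)]
shares = class_shares([image_a, image_b])
# → {'water_bottle': 95.0, 'agricultural_drum': 5.0}
```

The low-confidence detection in `image_b` is dropped, so the shares are computed over 20 objects. The choice of cut-off therefore directly affects the reported composition.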

When comparing the algorithm with the manual count, the time spent on each methodology must be highlighted. Manual counting of 61 images with the ImageJ application took 7 h and 34 min, whereas automatic counting of the same 61 images took 42 s. There is therefore a clear reduction in the time required for the analysis, even when the training time of 3 h and 7 min, which is needed only once, is taken into account.
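A short worked version of this arithmetic, in Python, shows the size of the saving using only the times reported above. The per-image figures and the break-even point assume that both methods scale linearly with the number of images. That is an assumption made for illustration, not something measured in this study.

```python
import math

N_IMAGES = 61
MANUAL_S = 7 * 3600 + 34 * 60   # manual count with ImageJ: 7 h 34 min
AUTO_S = 42                     # automatic count of the same images: 42 s
TRAIN_S = 3 * 3600 + 7 * 60     # one-off training: 3 h 7 min

manual_per_image = MANUAL_S / N_IMAGES   # about 446.6 s (about 7.4 min)
auto_per_image = AUTO_S / N_IMAGES       # about 0.69 s
speedup = MANUAL_S / AUTO_S              # about 649 times faster

# Fraction of the manual time still needed if training is charged to this batch.
training_included = (TRAIN_S + AUTO_S) / MANUAL_S   # about 0.41

# Assuming constant per-image times: number of images after which the
# one-off training time has been recovered.
break_even_images = math.ceil(TRAIN_S / (manual_per_image - auto_per_image))  # 26
```

Even when the full training time is charged to these 61 images, the automatic route needs about 41% of the manual time. Under the linear assumption, training pays for itself after about 26 images.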

In addition to reducing working time, the automatic count avoids the subjectivity and fatigue that affect the person carrying out the manual count.

The algorithm agreed well with the manual count by a trained observer for water, detergent and bleach bottles and for agricultural drums. The only exception was playing balls, which were found in very small numbers.

With the data obtained, it was possible to monitor the presence of floating waste throughout the study period at each of the points where images were taken. This methodology therefore allows more continuous monitoring, with results available almost in real time; the only limitation is imposed by the UAV flights needed to take the images.

According to the results of this study, the most abundant objects cannot be attributed exclusively to any one sector of the population, since many of them, such as cleaning product bottles and most of the water bottles, may be of domestic origin. The presence of this type of waste may be explained by the scattered housing in the area and by some individuals dumping their garbage into the river or the irrigation network. The waste also includes a small proportion of agricultural drums, despite the obligation to dispose of them through an integrated container management system. This differentiated count helps to determine the origin of the accumulations in the river and, therefore, to implement measures such as awareness campaigns to reduce the problem over time. Owing to its speed and objectivity, the methodology proposed in this work can be a useful tool for evaluating public policies aimed at eliminating plastic contamination in traditional irrigation networks.

The main contributions of this work are as follows:

- (a) Application in a specific environmental context: This study focuses on the irrigation system of the Vega Baja region in Spain, a specific and understudied environment where floating plastic waste presents unique challenges. The complexity of this environment, including varying water levels, debris types, and narrow irrigation channels, required existing models to be adapted for effective waste identification.

- (b) Comprehensive dataset and longitudinal study: This work used a dataset spanning several years, with images captured in 2019 and 2022. This allowed us to evaluate the model's performance across different seasons and environmental conditions, providing insight into its robustness over time and across accumulations of varying amount and composition.

- (c) Practical application and impact: Our study emphasizes the practical application of this methodology to a real-world situation. Local authorities in Vega Baja can integrate our findings into their waste reduction strategies, which illustrates the practical value of our work in preventing blockages and supporting public health initiatives.

Future research could extend the set of detected classes, for instance to include Styrofoam trays, and pay more attention to distinguishing stacked objects at accumulation sites. Testing the model at other locations could also improve its robustness. Another potential enhancement would be to integrate other sensors (e.g., thermal or multispectral cameras) on the UAV to gather additional data that could improve detection accuracy under varied environmental conditions.

Author Contributions

Conceptualization, C.R. and H.P.; methodology, C.R. and H.P.; software, A.M.C.-A.; validation, A.M.C.-A.; resources and visualization, data curation, formal analysis, writing—original draft preparation, A.M.C.-A.; writing—review and editing, H.P. and C.R. All authors have read and agreed to the published version of the manuscript.

Funding

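This study was funded by two research grants from Miguel Hernández University: 53ND0007RP Caracterización de residuos flotantes en ríos y canales mediante inteligencia artificial (Characterization of floating waste in rivers and channels using artificial intelligence), and 79SH0010RP Análisis de imágenes en agricultura de precisión para cultivos de interés local (Image analysis in precision agriculture for crops of local interest).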

Data Availability Statement

The dataset generated to train the YOLOv5s model in this article is deposited in the Universidad Miguel Hernandez of Elche repository at the handle https://hdl.handle.net/11000/32965 (accessed on 18 September 2024).

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

1. Bellasi, A.; Binda, G.; Pozzi, A.; Galafassi, S.; Volta, P.; Bettinetti, R. Microplastic Contamination in Freshwater Environments: A Review, Focusing on Interactions with Sediments and Benthic Organisms. Environments 2020, 7, 30.

2. van Emmerik, T.; Schwarz, A. Plastic debris in rivers. WIREs Water 2020, 7, e1398.

3. van Emmerik, T.; Mellink, Y.; Hauk, R.; Waldschläger, K.; Schreyers, L. Rivers as Plastic Reservoirs. Front. Water 2022, 3, 786936.

4. Hafeez, S.; Wong, M.S.; Abbas, S.; Kwok, C.Y.T.; Nichol, J.; Lee, K.H.; Tang, D.; Pun, L. Detection and Monitoring of Marine Pollution Using Remote Sensing Technologies. In Monitoring of Marine Pollution; IntechOpen: London, UK, 2019.

5. Derraik, J.G.B. The pollution of the marine environment by plastic debris: A review. Mar. Pollut. Bull. 2002, 44, 842–852.

6. Hurley, R.; Braaten, H.F.V.; Nizzetto, L.; Steindal, E.H.; Lin, Y.; Clayer, F.; van Emmerik, T.; Buenaventura, N.T.; Eidsvoll, D.P.; Økelsrud, A.; et al. Measuring riverine macroplastic: Methods, harmonisation, and quality control. Water Res. 2023, 235, 119902.

7. Vaughn, T.; Crookston, B.M.; Pfister, M. Floating Woody Debris: Blocking Sensitivity of Labyrinth Weirs in Channel and Reservoir Applications. J. Hydraul. Eng. 2021, 147, 06021016.

8. Stringam, B.L.; Gill, T.; Sauer, B. Integration of irrigation district personnel with canal automation projects. Irrig. Sci. 2016, 34, 33–40.

9. Rocamora, C.; Puerto, H.; Abadía, R.; Brugarolas, M.; Martínez-Carrasco, L.; Cordero, J. Floating Debris in the Low Segura River Basin (Spain): Avoiding Litter through the Irrigation Network. Water 2021, 13, 1074.

10. Abadía, R.; Brugarolas, M.; Rocamora, C.; Martínez-Carrasco, L.; Puerto, H.; Cordero, J. Causes, consequences and solutions to the problem of floating solid waste in the Segura River and its irrigation channels, in the district of Vega Baja (Alicante, Spain). In Proceedings of the 5th International Congress on Water, Waste and Energy Management (WWEM-19), Paris, France, 22–24 July 2019; p. 178.

11. de Vries, R.; Egger, M.; Mani, T.; Lebreton, L. Quantifying floating plastic debris at sea using vessel-based optical data and artificial intelligence. Remote Sens. 2021, 13, 3401.

12. van Lieshout, C.; van Oeveren, K.; van Emmerik, T.; Postma, E. Automated River Plastic Monitoring Using Deep Learning and Cameras. Earth Space Sci. 2020, 7, e2019EA000960.

13. Wolf, M.; van den Berg, K.; Garaba, S.P.; Gnann, N.; Sattler, K.; Stahl, F.; Zielinski, O. Machine learning for aquatic plastic litter detection, classification and quantification (APLASTIC-Q). Environ. Res. Lett. 2020, 15, 114042.

14. Zhang, J.; Jiang, F.; Chen, Y.; Wu, W.; Wu, Q. A water surface garbage recognition method based on transfer learning and image enhancement. Results Eng. 2023, 19, 101340.

15. Li, Y.; Wang, R.; Gao, D.; Liu, Z. A Floating-Waste-Detection Method for Unmanned Surface Vehicle Based on Feature Fusion and Enhancement. J. Mar. Sci. Eng. 2023, 11, 2234.

16. He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial Pyramid Pooling in Deep Convolutional Networks for Visual Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.

17. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

18. Cai, Z.; Vasconcelos, N. Cascade R-CNN: Delving into High Quality Object Detection. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.

19. Tian, Z.; Huang, J.; Yang, Y.; Nie, W. KCFS-YOLOv5: A High-Precision Detection Method for Object Detection in Aerial Remote Sensing Images. Appl. Sci. 2023, 13, 649.

20. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014.

21. Inbar, O.; Shahar, M.; Gidron, J.; Cohen, I.; Menashe, O.; Avisar, D. Analyzing the secondary wastewater-treatment process using Faster R-CNN and YOLOv5 object detection algorithms. J. Clean. Prod. 2023, 416, 137913.

22. Lin, F.; Hou, T.; Jin, Q.; You, A. Improved Yolo based detection algorithm for floating debris in waterway. Entropy 2021, 23, 1111.

23. Trapote Jaume, A.; Roca Roca, J.F.; Melgarejo Moreno, J. Azudes y acueductos del sistema de riego tradicional de la Vega Baja del Segura (Alicante, España). Investig. Geográficas 2015, 63, 143–160.

24. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

25. Yao, J.; Qi, J.; Zhang, J.; Shao, H.; Yang, J.; Li, X. A real-time detection algorithm for kiwifruit defects based on yolov5. Electronics 2021, 10, 1711.

26. Penney, F.T. MultiMarkdown User's Guide. Version 6.6.0. Available online: https://fletcherpenney.net/multimarkdown/ (accessed on 15 March 2024).

27. Van Rossum, G.; Drake, F.L. Python 3 Reference Manual; CreateSpace: Scotts Valley, CA, USA, 2009.

28. Brownlee, J. Difference Between a Batch and an Epoch in a Neural Network. Available online: https://machinelearningmastery.com/difference-between-a-batch-and-an-epoch/ (accessed on 19 July 2024).

29. Olson, D.L.; Delen, D. Performance Evaluation for Predictive Modeling. In Advanced Data Mining Techniques; Springer: Berlin/Heidelberg, Germany, 2008.

30. US National Institutes of Health. ImageJ. Available online: https://imagej.net/ij/index.html (accessed on 19 July 2024).

31. Bland, J.M.; Altman, D.G. Statistical methods for assessing agreement between two methods of clinical measurement. Lancet 1986, 327, 307–310.

32. Arce, J.B. La Matriz de Confusión y Sus Métricas. Available online: https://www.juanbarrios.com/la-matriz-de-confusion-y-sus-metricas/ (accessed on 15 November 2022).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).