1. Introduction

In the realm of computer vision, while extensive exploration of land has been conducted, our understanding of oceans and lakes remains relatively limited due to the formidable challenges posed by underwater exploration. Scientists strive to fathom the underwater world using various technological methods, such as underwater optical imaging techniques [

1], UAI (underwater acoustic imaging), and Quantum LiDAR technology [

2]. The acquisition and imaging of underwater optical images hold significant potential applications across diverse fields, including oceanographic research, underwater resource exploration, mapping submarine topography, and underwater archaeology [

3]. However, natural factors like light scattering and absorption in water significantly impact image visibility and contrast, often leading to captured images falling short of meeting stringent quality requirements for other application scenarios.

At the beginning of the 21st century, researchers conducted extensive studies on underwater image restoration focused on physical models. However, the intricate nature of underwater physical environments posed significant challenges to accurate modeling, thereby limiting the effectiveness of these physical model-based approaches. Consequently, researchers have explored image enhancement techniques that do not rely on physical models. These methodologies have introduced novel perspectives for underwater image processing and have, to a certain extent, enhanced the quality of underwater images [

4]. Nevertheless, as technology continues to advance and applications deepen, there is still a need to explore more advanced and effective methods for processing underwater images to meet higher demands for image quality and clarity in practical applications.

In recent years, the field of computer vision, particularly image processing, has witnessed significant breakthroughs attributed to neural networks. In 2020, Ho et al. [

5] introduced the Denoising Diffusion Probabilistic Model (DDPM), which has garnered widespread attention. The model has demonstrated a remarkable performance in various aspects, including the denoising, restoration, super-resolution, generation, and enhancement of images. As a latent variable model, DDPM builds a bridge between the data distribution and simpler distributions, such as Gaussian distribution, progressively transforming data into pure Gaussian noise. This process follows the principles of the Markov process and involves reverse data reconstruction and training across a weighted variational boundary, ultimately enabling the generation of high-quality synthetic images. The successful deployment of DDPM not only pioneers new methodologies in the realm of computer vision but also provides a potent tool for image-processing tasks, thereby propelling further advancements in this field.

Building upon prior research, we have observed that conventional underwater image enhancement tasks frequently encounter challenges, such as unbalanced color distribution, inadequate super-resolution effects, and constraints in enhancing individual underwater images [

6]. Inspired by these challenges, this paper proposes a novel underwater image enhancement method based on the Denoising Diffusion Probabilistic Model, aimed at addressing these issues. Underwater image enhancement has stricter requirements for color, saturation, contrast, and resolution compared to general image enhancement. The objectives of this experiment are not only to achieve super-resolution of underwater images but also to significantly enhance the overall quality of the images while preserving key features, ultimately producing high-quality images that meet human visual perception standards. This method is expected to overcome the shortcomings of traditional underwater image enhancement techniques, offering new perspectives and tools for research and applications in related fields.

Our main contributions are summarized as follows:

We introduced a denoising neural network framework based on the probabilistic diffusion model, which has shown remarkable performance in enhancing the resolution and color restoration of low-quality underwater images. When compared with other models performing the same tasks, the model proposed in this paper demonstrated superior competitive strength.

We propose an innovative dual-layer attention mechanism. Specifically, the first layer of attention focuses on the interrelationships of spatial locations, effectively capturing spatial correlations in the input data. This helps the model better understand and utilize feature information from different positions. The second layer implements inter-channel attention weighting by adaptively learning attention coefficients to weight the input channel features. This allows the model to more accurately focus on important channel features, thereby enhancing the effectiveness of feature expression and optimizing image enhancement results. Compared to the baseline model, the PSNR index improved by 32.2%, and the SSIM index increased by 7.9%. The UIQM is improved by 17.4% compared to the input image.

We adopted a sub-pixel interpolation upsampling strategy, utilizing a 1.25× scale factor to augment the number of sub-pixels, in conjunction with bilinear interpolation. This strategy preserves the details of the image while preventing the generated images from becoming overly smooth. This approach significantly enhanced the image resolution. Compared to models not using this strategy, the restored images showed a 23.39% improvement in the PSNR and a 7.3% increase in the SSIM.

2. Related Work

2.1. Underwater Image Super-Resolution

Contrasted with atmospheric optical imaging, underwater optical imaging is constrained by its distinctive imaging environment, frequently resulting in a less-than-optimal image quality. This is primarily due to various noise interferences present in underwater environments, such as scattering, absorption, and background light, which collectively degrade the overall image quality. More complexly, the propagation of visible light in water is wavelength-dependent, leading to underwater images often displaying bluish-green hues, significantly reduced contrast, and distorted colors, making it difficult to reveal more image details. Hence, accomplishing accurate, swift, and precise restoration of details and features within such intricate environments continues to pose a pressing challenge for ensuing underwater tasks.

To address the aforementioned complex issues in underwater imaging, researchers have proposed numerous underwater image enhancement and restoration methods, which can be broadly classified into two major categories: physical model-based restoration methods and non-physical model-based image enhancement methods.

The underwater image restoration methods based on physical models are committed to simulating the degradation process of underwater images through mathematical modeling and then estimating model parameters to restore clear underwater images [

7]. These methods must fully consider the physical laws of underwater imaging, including factors such as light absorption, scattering, and color distortion. For instance, there is the self-tuning underwater image restoration filter based on the Jaffe–McGlamery model [

8], as well as algorithms that estimate scene depth to eliminate the effects of light scattering in underwater images [

9]. Although these methods have improved the quality of underwater images to a certain extent, there are still some limitations. This is because existing modeling methods are unable to fully reproduce the imaging process of underwater images, making precise modeling difficult.

Non-physical model approaches focus on directly improving the visual quality of underwater images through image-processing techniques, rather than relying on mathematical models of underwater optical imaging. These methods usually do not involve complex calculations of physical parameters but rather adjust the pixel values of the image directly to enhance image quality. In early research, researchers often directly applied traditional image enhancement methods to underwater images. Jung et al. [

10] proposed an adaptive joint tri-directional filter (AJTF) that could enhance the clarity of images and depth maps. Wang S. H. et al. [

11] focused on processing images under uneven illumination conditions. With the increasing demand for innovation in underwater image enhancement techniques, more and more image enhancement methods that can adapt to underwater conditions have been proposed. Among underwater image enhancement, color distortion is a particularly prominent issue. In 2014, Kan et al. [

12] utilized the water absorption spectrum to estimate changes in tristimulus values and proposed a color-restoration method based on the water absorption spectrum. Additionally, in 2015, Li et al. [

13] successfully improved the contrast, brightness, color, and visibility of underwater images by comprehensively utilizing methods such as color compensation, histogram equalization, saturation, and intensity stretching. Although these methods have improved the visual quality of underwater images to a certain extent, they lack generalizability. However, optimistically, these methods provide more reliable data support for subsequent underwater image analysis and applications.

In recent years, learning-based data-driven methods have achieved significant progress in the field of underwater image enhancement. In 2017, Wang et al. [

14] proposed the UIE-Net end-to-end underwater image enhancement network based on the attenuation model, which utilized a simple convolutional neural network to estimate the transmission image and the attenuation coefficients of three channels, achieving the effect of image color enhancement. In 2017, Li et al. proposed a generative adversarial network named CycleGAN [

15], which generated a model capable of performing multiple types of image-to-image transformations through adversarial training. Based on this, Li et al. proposed WaterGAN [

16] the following year, a two-stage algorithm using two fully convolutional networks, which split underwater image enhancement into two sub-tasks: synthesizing relative depth maps and color restoration. These models effectively improved the quality of underwater images through generative adversarial methods. However, these methods did not comprehensively address the practical application needs of underwater images. Early research focused on restoring image colors, while the most widely used GAN-based methods in recent years often have issues such as uncontrollable output results and severe image degradation. Our proposed method can effectively address these issues.

2.2. Diffusion Model

Since the advent of diffusion models based on non-physical models in 2020, a large number of research works have emerged in the field of computer vision adopting this novel modeling method. The distinctiveness of this model lies in its construction of a Markov chain that links a sample,

, with a pure Gaussian distribution,

(a simple distribution), thereby facilitating a Markov process. Transitions of this chain are learned to reverse a diffusion process, which is a Markov chain that gradually adds noise to the data in the opposite direction of sampling until the signal is destroyed [

5]. By studying the reverse process of this chain, the parameterization of a relatively simple neural network can be achieved.

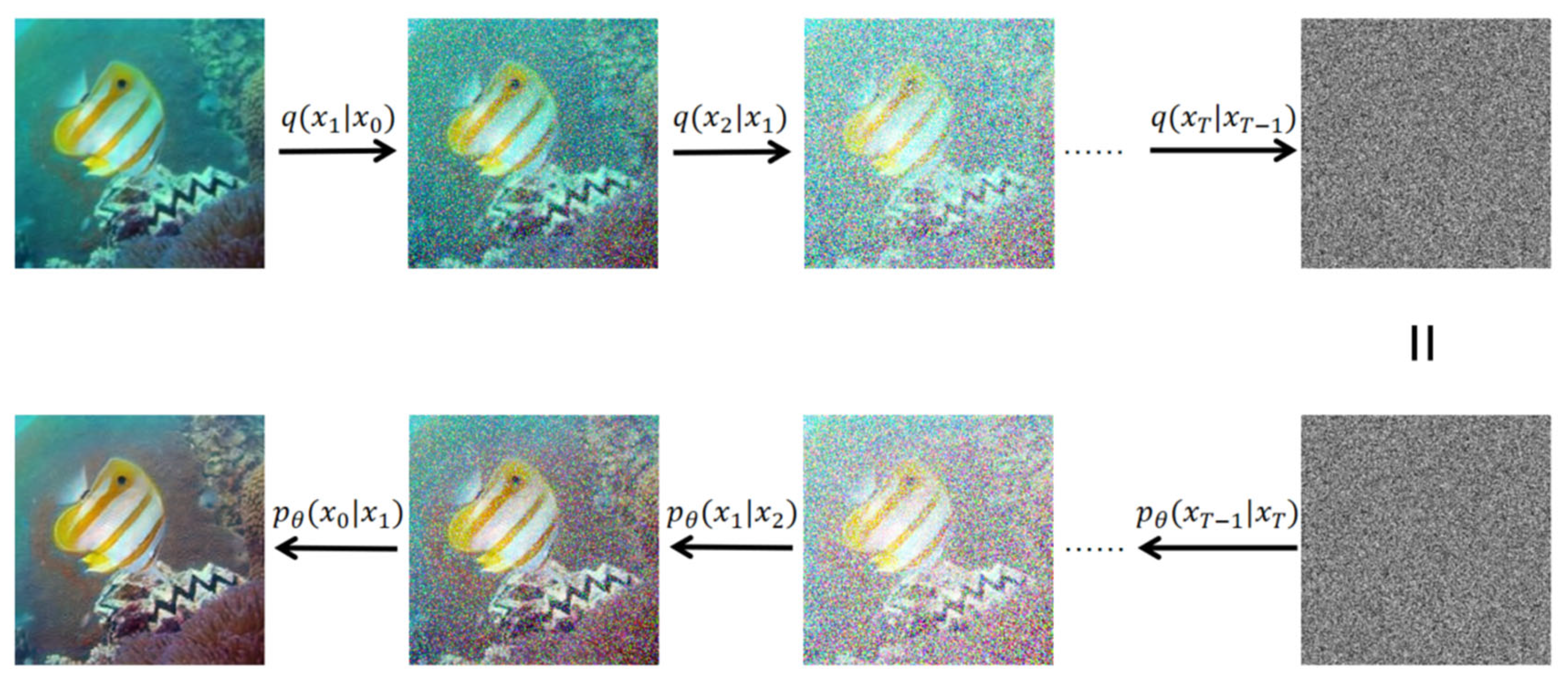

Figure 1 clearly demonstrates the process of adding noise and denoising in the diffusion model. In this process, the gradual addition of noise to the sample is known as the forward process,

, while the process of progressively denoising from pure Gaussian noise is termed the unknown reverse process,

. To approximate this reverse process, neural networks are employed with the minimization of cross-entropy as the training objective, specifically defined by Equation (1):

When the trained model, , closely approximates the true distribution, , it can be inferred that also closely approximates . As previously mentioned, the forward process can be considered a Markov process; hence, its conditional probability, , follows a Gaussian distribution, specifically expressed as , where ranges from 1 to . Here, denotes a Gaussian distribution with mean, , and variance, , and its probability density function is . In diffusion models, is typically chosen such that , with representing the variance of the Gaussian distribution, where is the identity matrix. Here, is a scalar hyperparameter that describes the magnitude of variance, i.e., the intensity of the “Gaussian noise”. Additionally, is the mean of this conditional probability, where . Consequently, can be viewed as a linear combination of the random variable, , and a standard normal distribution, : .

Given that the objective function is the variational lower bound under Markov conditions, the objective function can be expressed using Bayes’ theorem as Equation (2). The terms

, and

are further specified in Equations (2)–(5), respectively, where

. Based on these, Equation (6) is subsequently derived.

Since the state at time, , is pure Gaussian noise, and does not depend on model parameters, can be ignored in the derivation process. Utilizing Bayes’ theorem, the posterior distribution can be represented through and as follows: . Here, and .

3. Methodology

In image enhancement tasks based on diffusion models, the input, , typically consists of the original low-resolution image, , with the corresponding high-quality image, , serving as the reference output. This design establishes a clear supervised-learning framework, clearly defining the model’s learning objective—the mapping process from the low-quality image, , to the high-quality image, .

For the specific task of underwater image enhancement, which this paper focuses on, it is essential not only to enhance the image details but also to improve the coloration. Therefore, the low-quality original underwater image, , is used as the model’s input, , with the corresponding high-quality underwater image, , selected as the reference output, . During the data-preprocessing stage, the Bicubic interpolation method is intentionally used to process low-quality original underwater images. Compared to the general resize method, this approach can more effectively maintain the details and clarity of the image, significantly reducing distortion and aliasing effects. The implementation of this preprocessing strategy aims to enhance the model’s ability to perform super-resolution on low-resolution images, thereby further improving the results of underwater image enhancement.

The approach employed in this study is based on an improved denoising probabilistic model, which holds more distinct advantages compared to the underwater image enhancement methods based on physical models and deep learning mentioned in

Section 2.1. Physical model methods are prone to being influenced by changes in imaging conditions, while GAN methods based on deep learning require a delicate balance between the generator and discriminator during the training process, making their training process less stable. In contrast, the DDPM boasts a simple loss design that provides sufficient theoretical support for training. Moreover, the Markov chain process formed during the DDPM training process means that the current state is only related to the previous moment, making the training process more stable. However, in practical applications, image degradation issues also seem to plague traditional denoising probabilistic models. To address this issue, this study introduces a dual-layer attention mechanism into the model, a strategy that considers both spatial and channel information, enabling it to better capture local and global image features. Additionally, the unique sub-pixel interpolation upsampling strategy of this study can significantly reduce image degradation during the upsampling phase.

3.1. Dual-Layer Attention Mechanism

Similar to the traditional DDPM [

5], the network structure in this experiment employs a modified U-Net model as a crucial component of the generative network to address the dual challenges of underwater image enhancement and denoising. Given the outstanding performance of U-Net in image segmentation and other related tasks, specific modifications have been made to adapt it to the unique requirements of underwater image enhancement.

Due to the distinctive conditions of underwater environments, such as scattering and light attenuation, the network input is specially designed to concatenate and along dimension 1 and embed timestep information to enhance the network’s perception of the temporal dimension. Initially, the network processes the input data through a convolutional layer to extract fundamental feature information. This is followed by a series of residual blocks, each containing multiple convolutional layers, normalization operations, and residual connections. This structure not only aids in capturing more advanced features within the images but also mitigates the issue of gradient vanishing to some extent, thereby improving the training performance of the network. Additionally, the output channel numbers of each residual block are flexibly adjusted according to the specific parameters of the network design, meeting the needs of different levels of feature extraction. During the downsampling phase, a single-layer attention module is introduced, which helps the model focus more on the key information within the image, enhancing attention to specific regions. Conversely, the upsampling block employs a structure symmetrical to the downsampling block, gradually enlarging the feature maps to restore the processed features to the same dimensions as the original input image, and outputs the enhanced or denoised result.

The middle block, as a crucial component connecting the encoder and decoder in the U-Net structure, receives feature maps output from the encoder as input. Through a series of processing operations, it extracts more advanced and abstract feature information. This provides valuable information for the decoder to reconstruct the original input more accurately. During the processing of the input feature maps, an initial integration of features takes place, merging low-level and high-level features, which not only aids in improving feature representation but also promotes gradient flow during the training process. Due to the uniqueness of underwater image enhancement, special attention needs to be paid to the dependencies between image features and multi-channel color spaces. Thus, a dual-layer attention mechanism is introduced in the middle block. This dual-layer attention mechanism consists of two key components: the Attention Block and the Attention Branch.

Figure 2a demonstrates the structure of a multi-head attention mechanism. The input,

x, first undergoes normalization and then generates queries, keys, and values. These generated QKVs are reshaped to fit the multi-head attention computation. After being processed by the attention layer, the results are passed through the projection output layer and added to the residual connection, ultimately producing an output feature map with the same shape as the input.

Figure 2b demonstrates a convolutional neural network branch structure with an attention mechanism. The input,

x, first undergoes feature extraction through a 3 × 3 convolutional layer and then is processed by a Leaky ReLU activation function for non-linear transformation. Next, it passes through a 1 × 1 convolutional layer to change the number of channels and is then normalized by the sigmoid function to generate attention coefficients. The input,

x, also passes through another 3 × 3 convolutional layer, and its output is element-wise multiplied with the attention coefficients. Finally, the result is further processed by another 3 × 3 convolutional layer, producing an output feature map with the same shape as the input. The Attention Block focuses on enhancing the model’s feature-representation capability by performing adaptive spatial attention operations in the middle layers. The Attention Branch, based on the learned attention weights, selectively enhances relevant features in the input data, further boosting the model’s perceptual abilities. This specialized dual-layer attention structure works collaboratively to capture complex relationships within the input data, effectively utilizing feature information across different scales, thereby achieving more accurate and comprehensive performance enhancements in underwater image enhancement tasks.

3.2. Improved DDPM

The loss function of the traditional denoising probability model DDPM is based on the optimization of the negative log probability ELBO, expressed as follows:

where

denotes the expectation,

denotes the

divergence,

is the probability density function of the reverse process,

is the probability density function of the forward process,

represents the state at time

, and

is the total number of time steps. To simplify calculations, the loss function is often simplified to

. However, Nichol et al. [

17] pointed out that, in the loss function of DDPM [

5], the variance term,

, is treated as a fixed constant rather than a learnable parameter, which actually limits the model’s expressive capacity. Therefore, to enhance the model’s flexibility and performance, a penalty term,

, is added when computing the loss, optimizing the variational lower bound, thereby making the variance a learnable term. When

, the variational lower bound is the negative log likelihood,

. When

, the variational lower bound is the

divergence between the predicted

and the true

:

. Thus, the variational lower bound can be represented as

. Through this approach, a composite loss function is constructed, as shown in Equation (8).

In this framework,

is responsible for capturing the main distributional characteristics of the data, while

serves as a penalty term, aiding the model in learning finer details and the intrinsic structure of the data.

is the weight coefficient, and in IDDPM [

17],

is recommended. Compared to directly optimizing the log-likelihood, this hybrid learning objective offers significant advantages. It not only retains

as the primary source of the loss, ensuring that the model can stably learn the main features of the data, but also enhances the model’s fine perception of the data distribution through the introduction of

, thereby improving the convergence speed and effectiveness during model training.

When training the denoising probability model, the core objective is to find a function, , that minimizes the discrepancy between the predicted image, , and the actual high-resolution image, , while preventing the model from overfitting. This process can be described through the following optimization problem formulation: . Here, represents the loss function, which measures the discrepancy between the model prediction, , and the actual ; is a regularization term to prevent overfitting during training; and is a hyperparameter used to adjust the relative weight between the loss function and the regularization term, aiming to achieve an optimal balance between model performance and generalization ability.

3.3. Sub-Pixel Interpolation Upsampling Strategy

Historically, researchers often treated super-resolution tasks with different scaling factors as independent tasks, designing and training separate models for each scaling factor [

18]. However, this strategy was not only inefficient but also limited the versatility and flexibility of the models. To overcome this limitation, Hu et al. [

19] introduced the Meta-SR method, which enables super-resolution processing for arbitrary scaling factors, including non-integer values, through a single model. Inspired by this, Lim et al. [

6] further enhanced super-resolution performance by scaling up the model size. Motivated by these studies, we explored the impact of different scaling factors on super-resolution tasks and discovered through experiments that models exhibit varying super-resolution effects when faced with different scaling factors.

Diffusion models fundamentally involve the precise manipulation of individual pixels in the original input image, aiming to minimize the discrepancy between the predicted and the real images. The relationship between the original noise size and the expected output shape is critical in the model’s upsampling process. When the noise size is less than or equal to the expected output, the limited number of pixels restricts the insertion of details, making it difficult to display more intricacies. Conversely, when the noise size exceeds the expected output, the increased number of sub-pixels provides more room for detail insertion. However, an increase in sub-pixel quantity does not always result in better outcomes. Experiments show that interpolation at different scaling factors yields varied results. If an inappropriate interpolation method is chosen, interpolating among a large number of sub-pixels might prevent effective connectivity between adjacent pixels, leading to an overly smooth image lacking in edge information between instances. In such cases, the final generated image does not appear to have undergone effective super-resolution processing. Therefore, after balancing image details, clarity, and naturalness through experimental comparisons, we chose a 1.25× scaling factor for the upsampling size. This scaling factor effectively preserves image details, while avoiding excessive sharpening, achieving a good balance between clarity and naturalness.

Simultaneously, the interpolation method used in the upsampling process is crucial for the impact on image edge details. In the traditional U-Net structure handling super-resolution tasks, the upsampling block often utilizes nearest-neighbor interpolation. This method is simple and efficient, as it directly assigns the value of each pixel in the target image to the value of the closest pixel in the source image. However, a significant drawback of this method is that it can lead to jagged edges, particularly evident when images are enlarged. To overcome the deficiencies of nearest-neighbor interpolation, this paper opts for bilinear interpolation. Based on the concept of linear interpolation [

20], bilinear interpolation calculates the weighted average of four neighboring pixels to determine the value of a new pixel. This method is more refined and better preserves the edge details of images. As illustrated in

Figure 3, which shows a schematic of bilinear interpolation, assuming the values of four adjacent pixels are known, bilinear interpolation precisely calculates the value of the sub-pixel,

.

Initially, linear interpolation is performed in the

, resulting in the following two formulas:

Subsequently, linear interpolation is carried out in the

, resulting in the following:

The expressions for

and

previously obtained are substituted into the above formulas to ultimately derive the following:

where

represents the weight of each integer point, namely, the bilinear interpolation kernel.

Consequently, for any non-integer point, , on the feature map, , its value can be calculated using bilinear interpolation, expressed as . Here, the coordinates of the non-integer point, , are , while represents the four adjacent integer values, , on the feature map . The bilinear interpolation kernel function, , measures the correlation between the integer point, , and the non-integer point, . The function represents the distance weight between the diagonal integer points and the target point, , ensuring that the interpolation process considers only adjacent integer points and that the weights decrease as the distance increases.

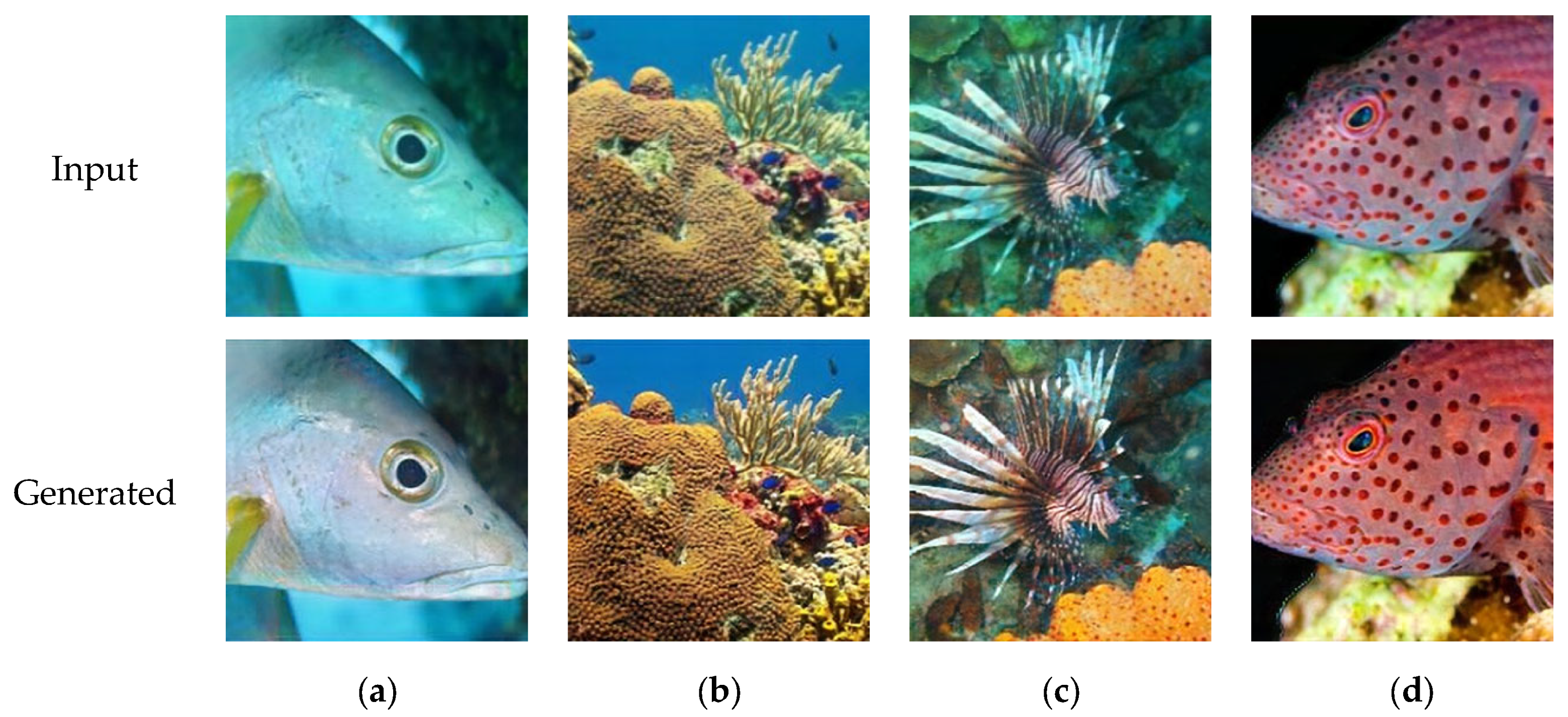

5. Conclusions

This paper introduces an innovative underwater image enhancement model based on a denoising neural network using probabilistic diffusion, combined with a composite loss diffusion model for underwater image enhancement applications. The proposed model effectively generates enhanced images from low-quality underwater inputs, supporting both single and batch image processing. The experimental results demonstrate that the model excels in the core sub-tasks of underwater image super-resolution and color correction. The dual-layer attention mechanism introduced in this study plays a crucial role in color correction, outperforming traditional single-layer attention mechanisms in handling complex color variations in underwater images, significantly enhancing color restoration and detail retention. Furthermore, we explored the impact of sub-pixel completion strategies during the upsampling process on super-resolution outcomes. Our experiments confirm that combining bilinear interpolation with a 1.25 scaling factor significantly mitigates the overly “conservative” issue of sub-pixel completion strategies, enabling our model to achieve super-resolution effects more rapidly and thereby enhancing the visual quality of underwater images.

Looking forward, we aim to explore the possibility of jointly processing multiple scales of super-resolution tasks within a single network, based on the research presented in this paper. This would contribute to the development of a more efficient and flexible underwater image enhancement system, providing stronger technical support for practical applications. We believe that through continuous research and innovation, we can advance underwater image enhancement technologies, contributing further to the research and applications in related fields.