Abstract

The Ground Penetrating Radar (GPR) method is commonly used for earth dam disease detection. However, the interpretation of GPR images of earth dam disease still relies mainly on human judgment, which may lead to misjudgments and omissions, especially in long-distance earth dam disease detection. To address this challenge, the You Only Look Once v5s (YOLOv5s) algorithm is employed for GPR image recognition. The YOLOv5s neural network model has advantages over traditional convolutional neural network models in object detection speed and accuracy. In this study, an earth dam disease detection model was established based on YOLOv5s. Raw images from actual earth dam disease detection and GPR forward simulation images were used as the initial dataset, which was expanded with data augmentation techniques. The LabelImg annotation tool was used to classify and label earth dam diseases, creating an object detection dataset that contains earth dam disease features, and the model was trained on this dataset. The results indicate that the total loss of the model trained on the custom dataset first decreases and then stabilizes, with no sign of overfitting, indicating good generalizability. The YOLOv5s-based earth dam disease detection model achieved average precisions of 96.0%, 95.5%, and 93.9% for voids, seepage, and loosening, respectively. These results suggest that the YOLOv5s-based model may be an effective tool for intelligent GPR image recognition of earth dam disease.

1. Introduction

Earth dams are an essential component of hydraulic engineering infrastructure. Their safe operation bears directly on the safety of people’s lives and property, as well as on national economic development. Under natural hazards such as earthquakes and floods, earth dams may develop various diseases, including voids, seepage, and loosening. These diseases can damage the dam body and may even lead to serious disasters. Therefore, timely and accurate detection and assessment of the health status of earth dams are crucial for their safe operation.

The detection of earth dam diseases relies primarily on geophysical methods such as Ground Penetrating Radar (GPR) [1,2,3,4], Electromagnetic Profiling (EMP) [1,5], and Electrical Resistivity Tomography (ERT) [6,7,8,9]. Compared with other conventional subsurface detection methods, GPR offers fast detection, high precision, a wide detection range, and non-destructiveness [10]. Just as atmospheric radar is used to detect precipitation categories [11], GPR has been widely used in earth dam disease detection, where it provides abundant image information. The basic principle of GPR is as follows: the electromagnetic wave emitted by the GPR antenna passes through the dam surface into the dam body, and at each interface between media with different electrical properties part of the wave is reflected, with a response that depends on the conditions at that interface. The receiving antenna records these responses, forming a radar image that reflects the distribution of media inside the dam body. From the radar image, one can judge whether the dam contains voids, leakage, loosened zones, or other diseases. Loperte et al. [9] proposed a method combining geophysical techniques such as GPR and ERT with thermal-infrared mapping and geotechnical in-situ measurements, and applied it to the diagnosis of a rock-fill dam. Dong et al. [12] developed a computational model of earth dams with shallow diseases using the finite-difference time-domain (FDTD) method with Perfectly Matched Layer (PML) boundary conditions. They simulated the propagation of GPR electromagnetic waves in earth dams and obtained forward simulation profiles, which provide a theoretical basis for using GPR to evaluate the effect of polymer grouting repairs of earth dams.
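The interface response described above can be made concrete with the standard low-loss approximations used in GPR work: the wave velocity is $v = c/\sqrt{\varepsilon_r}$, a reflector at two-way travel time $t$ lies at depth $d = vt/2$, and the normal-incidence amplitude reflection coefficient from medium 1 into medium 2 is $R = (\sqrt{\varepsilon_1} - \sqrt{\varepsilon_2})/(\sqrt{\varepsilon_1} + \sqrt{\varepsilon_2})$. The Python sketch below evaluates these expressions. The relative permittivities (9 for compacted fill, 1 for an air void, 25 for a water-saturated zone, 6 for loosened soil) are assumed illustrative values, not data from this study.

```python
import math

# Speed of light in vacuum in metres per nanosecond (GPR time axes are in ns).
C_M_PER_NS = 0.299792458


def wave_velocity(eps_r: float) -> float:
    """Radar-wave velocity in a low-loss, non-magnetic medium: v = c / sqrt(eps_r)."""
    return C_M_PER_NS / math.sqrt(eps_r)


def reflection_coefficient(eps_1: float, eps_2: float) -> float:
    """Normal-incidence amplitude reflection coefficient for a wave travelling
    from medium 1 into medium 2 (low-loss, non-magnetic media)."""
    n1, n2 = math.sqrt(eps_1), math.sqrt(eps_2)
    return (n1 - n2) / (n1 + n2)


def depth_from_two_way_time(twt_ns: float, eps_r: float) -> float:
    """Reflector depth from the two-way travel time: d = v * t / 2."""
    return wave_velocity(eps_r) * twt_ns / 2.0


if __name__ == "__main__":
    EPS_FILL = 9.0  # assumed relative permittivity of compacted dam fill
    # Assumed, illustrative permittivities of anomalous zones inside the fill.
    targets = {"air-filled void": 1.0, "water-saturated zone": 25.0, "loosened soil": 6.0}
    for name, eps in targets.items():
        print(f"{name:22s} R = {reflection_coefficient(EPS_FILL, eps):+.3f}")
    print(f"depth of a reflector at 40 ns: {depth_from_two_way_time(40.0, EPS_FILL):.2f} m")
```

With these assumed values, an air-filled void gives R = +0.5, a water-saturated zone gives R = -0.25 (a reflection of reversed polarity), and the loosened zone gives only about +0.10, i.e., a much weaker reflection.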
However, the major challenge is that GPR image data usually require interpretation and analysis by experienced experts, which is inefficient and costly and may introduce subjective bias. In long-distance earth dam disease detection in particular, there is a risk of misjudgments and omissions. Manual interpretation therefore struggles to meet the needs of large-scale, efficient earth dam disease detection.

In recent years, with the rapid development of computer vision, image recognition techniques based on convolutional neural networks have achieved significant results in many fields [13,14,15]. For instance, Haque et al. [16] collected images of litchi leaf samples and used a Convolutional Neural Network (CNN) model for target detection and recognition, achieving high accuracy in identifying leaf blight, leaf mite, and red rust diseases of litchi leaves. The YOLO series of algorithms [17,18,19,20,21,22,23], in particular, has been widely applied to object detection tasks because of its efficiency and accuracy. For example, Kurdthongmee et al. [24] used the YOLOv3 framework to train a detector for nine classes of objects; compared with other methods, their approach achieved high-accuracy pupil localization while improving the detection speed by 2.8 times. Zhu et al. [23] proposed an improved YOLOv5 algorithm, TPH-YOLOv5, for detecting targets in drone-captured scenes. Yan et al. [25] implemented apple target detection for apple-picking robots using a modified YOLOv5s algorithm. Wang et al. [26] combined the Vision Transformer (ViT) with YOLOv5 to improve the speed and accuracy of asphalt pavement crack detection. Sozzi et al. [27] performed automatic grape bunch detection in white grape varieties using YOLO algorithms and compared different YOLO versions.

To intelligently identify and classify GPR images of earth dam disease, this study establishes an earth dam disease detection model based on the YOLOv5s algorithm. Raw images from actual earth dam disease detection and GPR forward simulation images are taken as the initial dataset, which is enriched through data augmentation operations such as rotation and flipping. An object detection dataset containing earth dam disease features is then built with the LabelImg annotation tool, and the earth dam disease detection model is trained and tested on this dataset.

2. YOLOv5s Algorithm

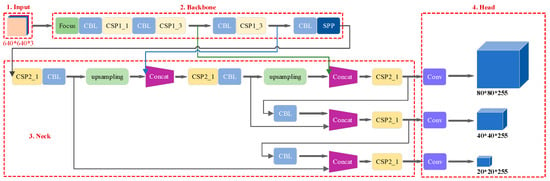

The YOLOv5s network model comprises four components: Input, Backbone, Neck, and Head [28], as shown in Figure 1.

Figure 1. YOLOv5s network structure.

(1) Input

The input layer receives the images and performs the necessary preprocessing, which includes mosaic data augmentation, adaptive anchor box computation, and adaptive image resizing.

Mosaic data augmentation: Because large, medium, and small targets are unevenly distributed across the dataset, mosaic data augmentation randomly scales, crops, and arranges four images into a single mosaic image. This enriches the backgrounds and object scales seen during training and enhances the network’s robustness.
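As an illustration of the mosaic step, the sketch below places four images around a random centre point on a 2s*2s canvas and shifts their bounding boxes into canvas coordinates. It loosely follows the quadrant layout of the public YOLOv5 data loader but is a simplified version under stated assumptions: the random affine transform (including random scaling) and the crop back to s*s that YOLOv5 applies afterwards are omitted, and the function name `mosaic4` is hypothetical.

```python
import numpy as np


def mosaic4(images, boxes, s=640, center=None, rng=None, fill=114):
    """Combine four images into one 2s x 2s mosaic canvas.

    images: four H x W x 3 uint8 arrays, each already resized so that max(H, W) <= s.
    boxes:  four (N_i x 4) arrays of pixel boxes (x1, y1, x2, y2), one per image.
    center: optional (xc, yc) mosaic centre; drawn uniformly from [s/2, 3s/2) if omitted.
    Returns the canvas and all boxes shifted into canvas coordinates and clipped.
    """
    rng = np.random.default_rng() if rng is None else rng
    if center is None:
        xc, yc = (int(v) for v in rng.uniform(s // 2, 3 * s // 2, size=2))
    else:
        xc, yc = center
    canvas = np.full((2 * s, 2 * s, 3), fill, dtype=np.uint8)
    out_boxes = []
    for i, (img, b) in enumerate(zip(images, boxes)):
        h, w = img.shape[:2]
        if i == 0:    # top-left: the image's bottom-right corner sits on the centre
            x1a, y1a, x2a, y2a = max(xc - w, 0), max(yc - h, 0), xc, yc
            x1b, y1b = w - (x2a - x1a), h - (y2a - y1a)
        elif i == 1:  # top-right
            x1a, y1a, x2a, y2a = xc, max(yc - h, 0), min(xc + w, 2 * s), yc
            x1b, y1b = 0, h - (y2a - y1a)
        elif i == 2:  # bottom-left
            x1a, y1a, x2a, y2a = max(xc - w, 0), yc, xc, min(yc + h, 2 * s)
            x1b, y1b = w - (x2a - x1a), 0
        else:         # bottom-right
            x1a, y1a, x2a, y2a = xc, yc, min(xc + w, 2 * s), min(yc + h, 2 * s)
            x1b, y1b = 0, 0
        # Copy the visible part of the image into its canvas region (same size).
        canvas[y1a:y2a, x1a:x2a] = img[y1b:y1b + (y2a - y1a), x1b:x1b + (x2a - x1a)]
        # Shift boxes by the image-to-canvas offset, then clip to the canvas.
        dx, dy = x1a - x1b, y1a - y1b
        shifted = np.asarray(b, dtype=float).reshape(-1, 4) + [dx, dy, dx, dy]
        out_boxes.append(np.clip(shifted, 0, 2 * s))
    return canvas, np.concatenate(out_boxes, axis=0)
```

Degenerate boxes produced by clipping (objects cut off at the canvas edge) would normally be filtered out afterwards.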

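Adaptive anchor box computation: YOLOv5s predicts boxes relative to a set of anchor boxes, and during training the predicted boxes are compared with the actual (labeled) boxes. Before training, the fit between the initial anchor boxes and the labeled boxes of the dataset is checked; if the fit is poor, anchor sizes better suited to the dataset are recomputed from the labeled boxes. The network parameters are then updated iteratively based on the differences between the predicted and actual boxes.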
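The public YOLOv5 code performs this adaptation by checking the "best possible recall" of the current anchors, i.e., the fraction of labeled boxes whose width and height are both within a factor of 4 of some anchor, and by re-estimating the anchors with k-means clustering of the labeled box sizes when that recall is too low. The sketch below shows both pieces in simplified form: plain k-means in (width, height) space with a deterministic initialization and without the genetic refinement step YOLOv5 adds. The function names are hypothetical.

```python
import numpy as np


def kmeans_anchors(wh: np.ndarray, k: int = 9, iters: int = 300) -> np.ndarray:
    """Cluster labeled box sizes (N x 2 array of width, height in pixels) into k anchors.

    Plain Lloyd's k-means in (w, h) space, initialised with boxes taken at evenly
    spaced area quantiles so that the result is deterministic.
    """
    wh = np.asarray(wh, dtype=float)
    order = np.argsort(wh.prod(axis=1))
    centroids = wh[order[np.linspace(0, len(wh) - 1, k).astype(int)]].copy()
    for _ in range(iters):
        # Assign each box to its nearest centroid (Euclidean distance in w-h space).
        dist = np.linalg.norm(wh[:, None, :] - centroids[None, :, :], axis=2)
        assign = dist.argmin(axis=1)
        new = np.array([wh[assign == j].mean(axis=0) if np.any(assign == j) else centroids[j]
                        for j in range(k)])
        if np.allclose(new, centroids):
            break
        centroids = new
    # Sort from small to large area: small anchors go to the high-resolution head.
    return centroids[np.argsort(centroids.prod(axis=1))]


def best_possible_recall(wh: np.ndarray, anchors: np.ndarray, thr: float = 4.0) -> float:
    """Fraction of labeled boxes whose width and height ratios to at least one anchor
    both lie within [1/thr, thr]."""
    r = np.asarray(wh, float)[:, None, :] / np.asarray(anchors, float)[None, :, :]
    worst_side = np.minimum(r, 1.0 / r).min(axis=2)  # worst side ratio per (box, anchor)
    return float((worst_side.max(axis=1) > 1.0 / thr).mean())
```

With three detection scales and three anchors per scale, k = 9 anchors would be computed in practice.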

Adaptive image resizing: The width and height of input images to the YOLOv5 network model may differ, so each image is uniformly scaled to a standard size before being fed into the detection network for training. This is done with a letterbox function, which first calculates the scaling coefficients for width and height from the original and standard image sizes, then uses the smaller of the two so that the whole image fits without distortion, and finally pads the remaining border. The standard image size used in this study is 640*640*3.
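A minimal letterbox sketch is shown below, assuming an H*W*3 uint8 image. It uses nearest-neighbour index sampling in place of the interpolating resize (for example OpenCV's `cv2.resize`) that a real pipeline would use, and pads with the grey value 114 used by YOLOv5; the function name and return values are illustrative.

```python
import numpy as np


def letterbox(img: np.ndarray, new_size: int = 640, pad_value: int = 114):
    """Fit an H x W x C image inside new_size x new_size without distortion and pad
    the remaining border. Returns (padded image, scale, (left, top) padding)."""
    h, w = img.shape[:2]
    # Scaling coefficients for height and width; the smaller keeps the whole image in frame.
    r = min(new_size / h, new_size / w)
    nh, nw = round(h * r), round(w * r)
    # Nearest-neighbour resize by index sampling (stand-in for an interpolating resize).
    ys = np.minimum((np.arange(nh) / r).astype(int), h - 1)
    xs = np.minimum((np.arange(nw) / r).astype(int), w - 1)
    resized = img[ys][:, xs]
    # Centre the resized image on a grey canvas.
    top, left = (new_size - nh) // 2, (new_size - nw) // 2
    out = np.full((new_size, new_size) + img.shape[2:], pad_value, dtype=img.dtype)
    out[top:top + nh, left:left + nw] = resized
    return out, r, (left, top)
```

For a 1280*720 image, the two scaling coefficients are 640/1280 = 0.5 and 640/720 ≈ 0.89; the smaller one (0.5) is used, giving a 640*360 image padded with 140 rows above and below.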

(2) Backbone

The backbone is built from components of high-performing classification networks and extracts image features to form the convolutional feature maps used by later stages. The backbone of YOLOv5s includes the Focus structure, the CBL module, the CSP structure, and the SPP structure.

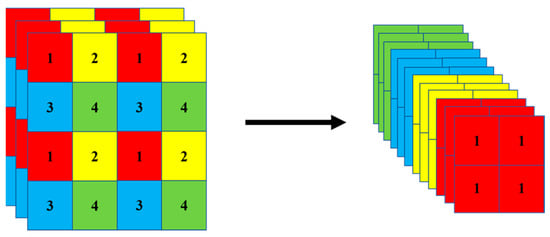

Focus structure: The Focus structure was introduced in YOLOv5, and its key operation is slicing. Figure 2 illustrates the slicing operation on a 4*4*3 image: every second pixel is sampled in each direction to produce four sub-images, which are concatenated along the channel dimension into a 2*2*12 feature map.

Figure 2. Slicing operation illustration.

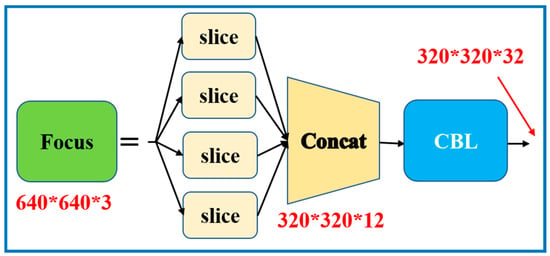

For YOLOv5s, the original input image is 640*640*3. In the Focus structure, the slicing operation divides it into four slices of 320*320*3 each, which are concatenated along the channel dimension into a 320*320*12 feature map. A convolution with 32 kernels, batch normalization, and the leaky ReLU activation function then transform this into a 320*320*32 feature map, which is passed to the next layer. The Focus structure is depicted in Figure 3.

Figure 3. Focus structure illustration.
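The slicing step can be written in a few lines of NumPy. This sketch works on a single H*W*C array (the YOLOv5 module itself operates on batched N*C*H*W tensors) and follows the slice order used in the YOLOv5 Focus module; the subsequent convolution with 32 kernels, batch normalization, and activation are not shown, and `focus_slice` is a hypothetical name.

```python
import numpy as np


def focus_slice(x: np.ndarray) -> np.ndarray:
    """Space-to-depth slicing of an H x W x C array (H, W even) into H/2 x W/2 x 4C.

    Each output pixel stacks the four pixels of a 2 x 2 block in the order
    (even row, even col), (odd row, even col), (even row, odd col), (odd row, odd col).
    """
    if x.shape[0] % 2 or x.shape[1] % 2:
        raise ValueError("height and width must be even")
    return np.concatenate(
        [x[0::2, 0::2], x[1::2, 0::2], x[0::2, 1::2], x[1::2, 1::2]], axis=-1
    )
```

For a 640*640*3 input, `focus_slice` returns a 320*320*12 array, and for the 4*4*3 example of Figure 2 it returns a 2*2*12 array; no pixel is lost or duplicated, only rearranged into channels.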

Convolution–Batch Normalization–Leaky ReLU (CBL) module: The CBL module consists of a convolution (Conv) layer, batch normalization (BN), and the leaky ReLU activation function. It serves as the basic feature-extraction unit of the network.

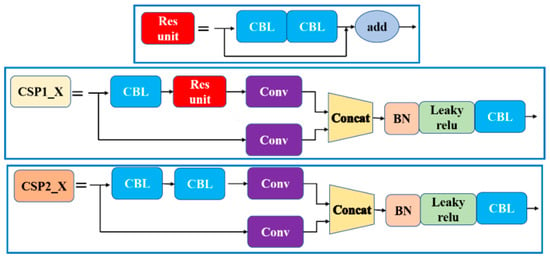

Cross Stage Partial (CSP) structure: The CSP structure splits the input feature map into two branches. One branch combines features between layers through the residual structure, and the two branch tensors are then concatenated along the channel dimension, so that the output feature map has the same spatial size as the input feature map. The YOLOv5s backbone uses the CSP1_X structure, and the neck uses the CSP2_X structure; both are shown in Figure 4.

Figure 4. CSP structure illustration.

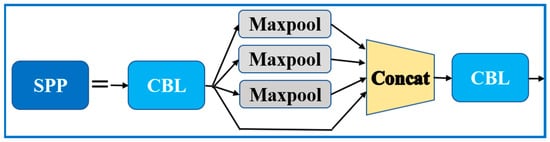

Spatial Pyramid Pooling (SPP) structure: The SPP structure was originally designed to convert feature maps of any size into a fixed-size feature vector; in YOLOv5s it fuses features pooled over regions of different sizes, as illustrated in Figure 5. The input to the SPP structure is a 20*20*512 feature map. Max-pooling with different kernel sizes extracts multi-scale features while preserving the 20*20 spatial size, and these features are concatenated along the depth dimension to produce a 20*20*1024 feature map. Finally, a convolution outputs a 20*20*512 feature map.

Figure 5. SPP structure illustration.
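The pooling-and-concatenation step can be sketched as below. In the YOLOv5 implementation, a 1*1 convolution first halves the 512 input channels to 256, the result is max-pooled with kernel sizes 5, 9, and 13 (stride 1, padding k//2, so the 20*20 size is kept), and the input and the three pooled maps are concatenated into 256*4 = 1024 channels before a final convolution restores 512. This NumPy sketch covers only the pooling and concatenation on an H*W*C array; the convolutions are omitted, and the function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def max_pool_same(x: np.ndarray, k: int) -> np.ndarray:
    """k x k max pooling with stride 1 and padding k // 2 on an H x W x C array,
    so the spatial size is unchanged (padding uses -inf, so it never wins the max)."""
    x = np.asarray(x, dtype=float)
    p = k // 2
    padded = np.pad(x, ((p, p), (p, p), (0, 0)), mode="constant", constant_values=-np.inf)
    windows = sliding_window_view(padded, (k, k), axis=(0, 1))  # H x W x C x k x k
    return windows.max(axis=(-2, -1))


def spp_concat(x: np.ndarray, kernels=(5, 9, 13)) -> np.ndarray:
    """Concatenate the input with its max-pooled versions along the channel axis."""
    return np.concatenate([x] + [max_pool_same(x, k) for k in kernels], axis=-1)
```

Applied to a 20*20*256 array, `spp_concat` returns a 20*20*1024 array, matching the concatenated feature map described above.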

(3) Neck

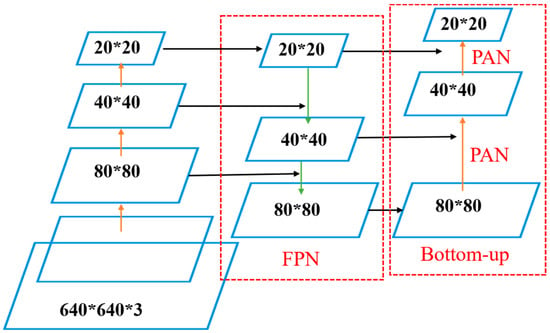

The neck network fuses feature maps from different levels and passes them to the head detection layers. It mainly consists of a Feature Pyramid Network (FPN) plus a Path Aggregation Network (PAN), as shown in Figure 6. Low-level feature maps mainly carry localization information, whereas high-level feature maps mainly carry semantic information. Through lateral connections, the FPN passes high-level semantic features from the top down, so that the fused feature maps carry both strong semantic and localization information, which improves the detection of small objects. The PAN adds a bottom-up path that passes low-level localization features upward: each layer takes the feature map of the previous layer as input, applies a convolution, and fuses the result with the feature map of the corresponding size, which strengthens localization at multiple scales. Fusing feature information from different levels in this way is therefore more conducive to feature extraction.

Figure 6. FPN + PAN structure illustration.

(4) Head

After convolution of the neck outputs, three detection heads are produced, with feature map sizes of 80*80*255, 40*40*255, and 20*20*255 (strides of 8, 16, and 32 for a 640*640 input); together they form the head. The channel depth of 255 corresponds to 3 anchors × (80 classes + 5) in the default 80-class configuration; for the three disease classes considered here it would be 3 × (3 + 5) = 24. The larger feature maps detect smaller objects, and the smaller feature maps detect larger objects. Because several prediction boxes may cover the same object, the YOLOv5s network model applies Non-Maximum Suppression (NMS) to remove redundant prediction boxes, so that each detected object is reported by a single box, which supports accurate detection when multiple objects are present.
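Greedy NMS, the standard form of this suppression step, can be sketched as follows: keep the highest-scoring box, discard the remaining boxes whose IoU with it exceeds a threshold, and repeat. The threshold of 0.45 is an assumption (it is the default IoU threshold of the YOLOv5 detection script), and the sketch handles a single class, whereas YOLOv5 performs NMS separately for each class.

```python
import numpy as np


def nms(boxes: np.ndarray, scores: np.ndarray, iou_thr: float = 0.45) -> list:
    """Greedy non-maximum suppression for one class.

    boxes: N x 4 array of (x1, y1, x2, y2); scores: N confidences.
    Returns indices of the kept boxes, highest score first.
    """
    x1, y1, x2, y2 = boxes.T
    areas = (x2 - x1) * (y2 - y1)
    order = np.argsort(scores)[::-1]
    keep = []
    while order.size > 0:
        i = order[0]
        keep.append(int(i))
        rest = order[1:]
        # Intersection of the current best box with all remaining boxes.
        iw = np.clip(np.minimum(x2[i], x2[rest]) - np.maximum(x1[i], x1[rest]), 0, None)
        ih = np.clip(np.minimum(y2[i], y2[rest]) - np.maximum(y1[i], y1[rest]), 0, None)
        inter = iw * ih
        iou = inter / (areas[i] + areas[rest] - inter)
        # Drop boxes overlapping the kept box too much; the rest compete in later rounds.
        order = rest[iou <= iou_thr]
    return keep
```

Two heavily overlapping predictions of the same void would thus collapse to the higher-scoring one, while a separate, non-overlapping prediction is kept.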

3. Construction of Earth Dam Disease Ground Penetrating Radar Dataset

3.1. Feature Data Acquisition

To conduct research on the recognition of earth dam disease images from GPR, based on the random medium model [29], GPR electrical models of earth dam diseases at different locations and sizes were established. Through GPR forward simulation, 300 images of earth dam diseases were obtained. This includes 180 images of single types of diseases and 120 combined images of two or more types of diseases, forming the earth dam disease dataset. Furthermore, to enhance the robustness of the target detection model and improve its usability, 200 GPR field images with minimal noise depicting individual earth dam diseases were selected from actual earth dam engineering data to enrich the earth dam disease dataset.

3.2. Data Augmentation

When training object detection models, a large amount of data is generally needed to prevent overfitting; in general, the larger and more diverse the dataset, the more robust the trained network. However, GPR images of earth dam diseases are difficult to obtain, so data augmentation is performed on existing samples. Data augmentation improves the generalization capability of convolutional neural networks and makes the learned convolutional features more robust to geometric transformations.

In this study, the dataset was augmented through techniques such as cropping, rotating, translating, flipping, HSV adjustments, noise addition, and cutout. Consequently, the initial dataset of 500 images was expanded to 3500 images, which includes 1400 GPR field images and 2100 simulated images. For the purpose of target detection training in this paper, the dataset was divided into training, testing, and validation sets at a ratio of 8:1:1, which respectively corresponds to 2800, 350, and 350 images.
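One way to realize the 8:1:1 split is sketched below. It assumes that each of the 500 source images contributes seven images to the augmented set (500 * 7 = 3500) and assigns all images derived from the same source image to the same subset, which keeps near-duplicate augmented copies out of both the training and test sets at once. This grouping is a design choice of the sketch rather than a documented detail of the study, and `grouped_split` is a hypothetical helper.

```python
import random


def grouped_split(groups, ratios=(8, 1, 1), seed=0):
    """Split images into train/test/val index lists by group id.

    groups: one group id per image (e.g. the index of the source image it was
    derived from). All images sharing a group id land in the same subset, and
    the ratios are applied to the number of groups.
    """
    unique = sorted(set(groups))
    random.Random(seed).shuffle(unique)
    total = sum(ratios)
    n_train = len(unique) * ratios[0] // total
    n_test = len(unique) * ratios[1] // total
    assignment = {}
    for k, g in enumerate(unique):
        assignment[g] = "train" if k < n_train else "test" if k < n_train + n_test else "val"
    split = {"train": [], "test": [], "val": []}
    for idx, g in enumerate(groups):
        split[assignment[g]].append(idx)
    return split
```

With 500 groups of 7 images, this yields 400/50/50 groups, i.e., 2800, 350, and 350 images.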

3.3. Data Annotation

Before training the model on the earth dam disease ground-penetrating radar dataset, the data must be annotated with labels containing position and category information. The LabelImg annotation tool was used for manual annotation, with annotations saved in the Pascal VOC XML format.

Both simulated GPR images and original field images were first manually annotated with disease labels in XML format. Data augmentation was then performed to obtain the corresponding augmented GPR images and their XML annotation files, and the GPR image dataset and the annotation dataset were integrated. Finally, the XML annotations were converted to the TXT annotation format required for training the YOLOv5 object detection model.
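The XML-to-TXT conversion can be sketched with Python's standard `xml.etree.ElementTree`. Each Pascal VOC box (xmin, ymin, xmax, ymax in pixels) becomes one YOLO line `class x_center y_center width height`, with coordinates normalized by the image width and height. The class names and their order in `CLASSES` are hypothetical placeholders for the three disease labels.

```python
import xml.etree.ElementTree as ET

# Hypothetical class names; their order defines the integer class ids in the TXT files.
CLASSES = ["void", "seepage", "loosening"]


def voc_to_yolo(xml_text: str, classes=CLASSES) -> list:
    """Convert one Pascal VOC annotation (XML string) into YOLO TXT lines:
    'class_id x_center y_center width height', all normalised to [0, 1]."""
    root = ET.fromstring(xml_text)
    size = root.find("size")
    img_w = float(size.find("width").text)
    img_h = float(size.find("height").text)
    lines = []
    for obj in root.iter("object"):
        cls_id = classes.index(obj.find("name").text)
        bb = obj.find("bndbox")
        xmin, ymin, xmax, ymax = (float(bb.find(t).text) for t in ("xmin", "ymin", "xmax", "ymax"))
        # Box centre and size, normalised by the image dimensions.
        xc, yc = (xmin + xmax) / 2 / img_w, (ymin + ymax) / 2 / img_h
        bw, bh = (xmax - xmin) / img_w, (ymax - ymin) / img_h
        lines.append(f"{cls_id} {xc:.6f} {yc:.6f} {bw:.6f} {bh:.6f}")
    return lines
```

Writing the returned lines to a `.txt` file with the same base name as the image gives the label file layout that YOLOv5 expects.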

4. Performance Evaluation Indicators

4.1. Evaluation Indicators

The ultimate goal of earth dam disease object detection is to identify and classify GPR images containing earth dam disease. Images are therefore divided into two categories: with disease and without disease. Positive samples are images containing earth dam disease, and negative samples are images without earth dam disease. True Positive (TP) is the number of correctly predicted positive samples, i.e., images that contain earth dam disease and are identified as diseased by the model. False Negative (FN) is the number of incorrectly predicted positive samples, i.e., images that contain earth dam disease but are judged disease-free by the model. False Positive (FP) is the number of incorrectly predicted negative samples, i.e., images without earth dam disease that the model judges as diseased. True Negative (TN) is the number of correctly predicted negative samples, i.e., images without earth dam disease that the model also recognizes as disease-free.

Based on the confusion matrix, the following metrics can be obtained for evaluating the detection performance of the object detection model. Accuracy evaluates only overall correctness. Higher precision and recall indicate better performance of the recognition model; in practice, however, precision and recall trade off against each other, so raising one usually lowers the other. To analyze the overall performance of the model, the average precision (AP) and mean average precision (mAP) are therefore introduced; a higher mAP indicates better model performance.

(1) Accuracy (Acc) is the proportion of all samples that the earth dam disease detection model predicts correctly. Although accuracy reflects overall correctness, it cannot effectively measure detection results when the samples are imbalanced.

$$\mathrm{Acc} = \frac{TP + TN}{TP + TN + FP + FN}$$

(2) Precision (Pre) is the proportion of samples identified as diseased that actually contain earth dam disease, i.e., the accuracy on predicted positive samples.

$$\mathrm{Pre} = \frac{TP}{TP + FP}$$

(3) Recall (Rec) is the proportion of actual disease samples that are correctly identified as earth dam disease.

$$\mathrm{Rec} = \frac{TP}{TP + FN}$$

(4) Average Precision (AP) is the area enclosed by the P-R curve of one category and the coordinate axes. Mean Average Precision (mAP) is the mean of the APs over all N categories.

$$\mathrm{AP} = \int_0^1 P(R)\,\mathrm{d}R, \qquad \mathrm{mAP} = \frac{1}{N}\sum_{i=1}^{N}\mathrm{AP}_i$$
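These definitions translate directly into code. The sketch below computes Acc, Pre, and Rec from confusion-matrix counts, and AP as the area under a P-R curve using all-point interpolation, in which precision is first replaced by its running maximum toward higher recall. The interpolation choice is an assumption: the YOLOv5 code by default samples the same precision envelope at 101 recall points, which can give slightly different values.

```python
import numpy as np


def accuracy(tp, fp, fn, tn):
    return (tp + tn) / (tp + fp + fn + tn)


def precision(tp, fp):
    return tp / (tp + fp)


def recall(tp, fn):
    return tp / (tp + fn)


def average_precision(rec, prec) -> float:
    """Area under a P-R curve given points ordered by increasing recall.

    All-point interpolation: precision is replaced by its running maximum from the
    right (the monotone envelope), then summed over the recall steps.
    """
    r = np.concatenate(([0.0], rec, [1.0]))
    p = np.concatenate(([1.0], prec, [0.0]))
    p = np.maximum.accumulate(p[::-1])[::-1]   # precision envelope
    steps = np.where(r[1:] != r[:-1])[0]        # indices where recall changes
    return float(np.sum((r[steps + 1] - r[steps]) * p[steps + 1]))


def mean_average_precision(ap_per_class) -> float:
    return float(np.mean(ap_per_class))
```

Averaging the three per-class APs reported in this study, `mean_average_precision([0.960, 0.955, 0.939])` gives about 0.9513, consistent with an overall value of 95.13%.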

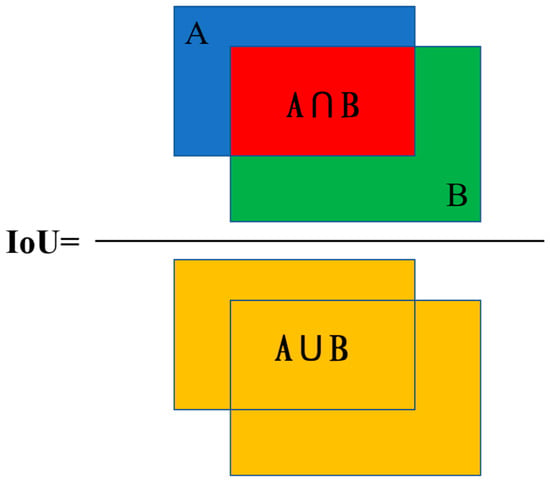

To evaluate recognition results, the localization accuracy of each prediction must also be judged when calculating the evaluation metrics. Intersection over Union (IoU) is used for this purpose: it is the ratio of the area of the intersection to the area of the union of the predicted bounding box $B_p$ and the ground-truth (labeled) box $B_{gt}$ [30], as shown in Figure 7.

$$\mathrm{IoU} = \frac{|B_p \cap B_{gt}|}{|B_p \cup B_{gt}|}$$

Figure 7. Schematic diagram of intersection over union (IoU).
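A direct implementation of the IoU definition for two axis-aligned boxes is sketched below. The (x1, y1, x2, y2) pixel format is an assumption; YOLO label files store normalized centre-width-height coordinates, which would first be converted.

```python
def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    # Width and height of the overlap region (zero if the boxes do not overlap).
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0
```

For example, boxes (0, 0, 2, 2) and (1, 0, 3, 2) overlap in an area of 2 and have a union of 6, so their IoU is 1/3.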

Let the overlap threshold be T. When IoU > T, the prediction is judged a positive sample and counted as correct (TP). When 0 < IoU ≤ T, the prediction overlaps the target but is localized too poorly, so it is counted as incorrect (FP). When IoU = 0, the prediction does not overlap the target at all and is also incorrect; the target it should have found is left undetected (FN).

The mean average precision (mAP) can be differentiated based on the value of the overlap threshold T. When the threshold T is set to 0.5, the mAP is also referred to as mAP0.5. There are also other metrics, such as mAP0.75, mAP0.9, and so on, where the numbers following mAP represent the corresponding overlap threshold value. In this paper, the overlap threshold T for the earth dam disease detection model is set to 0.5, i.e., mAP = mAP0.5.
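The threshold rule can be sketched for a single class as follows: predictions are visited in descending confidence, each is matched to the unmatched ground-truth box it overlaps most, and the match counts as a true positive only if the IoU exceeds T (0.5 here); all other predictions are false positives, and unmatched ground-truth boxes are false negatives. Cumulative precision and recall over the sorted predictions give the P-R curve points from which AP, and hence mAP0.5, is computed. The greedy matching and the helper names are simplifying assumptions, not the exact YOLOv5 evaluation code.

```python
import numpy as np


def iou_matrix(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Pairwise IoU between boxes a (N x 4) and b (M x 4) in (x1, y1, x2, y2) format."""
    iw = np.clip(np.minimum(a[:, None, 2], b[None, :, 2]) - np.maximum(a[:, None, 0], b[None, :, 0]), 0, None)
    ih = np.clip(np.minimum(a[:, None, 3], b[None, :, 3]) - np.maximum(a[:, None, 1], b[None, :, 1]), 0, None)
    inter = iw * ih
    area_a = (a[:, 2] - a[:, 0]) * (a[:, 3] - a[:, 1])
    area_b = (b[:, 2] - b[:, 0]) * (b[:, 3] - b[:, 1])
    return inter / (area_a[:, None] + area_b[None, :] - inter)


def match_at_threshold(pred_boxes, scores, gt_boxes, t=0.5):
    """Label predictions of one class as TP (True) or FP (False) at IoU threshold t.

    Returns the TP flags in descending-score order and the number of unmatched
    ground-truth boxes (false negatives). Each ground truth is matched at most once.
    """
    order = np.argsort(-np.asarray(scores, float))
    pred = np.asarray(pred_boxes, float).reshape(-1, 4)[order]
    gt = np.asarray(gt_boxes, float).reshape(-1, 4)
    ious = iou_matrix(pred, gt) if len(gt) else np.zeros((len(pred), 0))
    used = np.zeros(len(gt), dtype=bool)
    tp = np.zeros(len(pred), dtype=bool)
    for i in range(len(pred)):
        cand = np.where(~used)[0]
        if cand.size == 0:
            continue
        j = cand[np.argmax(ious[i, cand])]
        if ious[i, j] > t:  # overlap large enough: correct detection
            tp[i] = True
            used[j] = True
    return tp, int((~used).sum())


def pr_points(tp_flags, n_gt):
    """Cumulative recall and precision after each prediction (descending score)."""
    tp_cum = np.cumsum(tp_flags)
    fp_cum = np.cumsum(~tp_flags)
    return tp_cum / n_gt, tp_cum / (tp_cum + fp_cum)
```

A second prediction on an already matched target is counted as a false positive, which is how duplicate detections lower precision.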

4.2. Loss Function

The loss function of object detection models typically consists of two components: the classification loss and the bounding box regression loss. The loss function of the YOLOv5s algorithm comprises three parts: localization loss, classification loss, and confidence loss. The total loss is the sum of these three components:

$$L = L_{loc} + L_{cls} + L_{conf}$$

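The localization loss employs the CIoU loss function. The CIoU loss [31] is calculated as

$$L_{CIoU} = 1 - \mathrm{IoU} + \frac{\rho^2(b, b^{gt})}{c^2} + \alpha v,$$

$$v = \frac{4}{\pi^2}\left(\arctan\frac{w^{gt}}{h^{gt}} - \arctan\frac{w}{h}\right)^2, \qquad \alpha = \frac{v}{(1 - \mathrm{IoU}) + v},$$

where $b$ and $b^{gt}$ represent the prediction bounding box and the label bounding box, respectively; $w$ and $h$ represent the width and height of the prediction box; $w^{gt}$ and $h^{gt}$ represent the width and height of the label box; $\rho(b, b^{gt})$ is the Euclidean distance between the centers of the prediction box and the label box; $c$ is the diagonal length of the smallest box enclosing both boxes, i.e., the farthest distance between their boundaries; $\alpha$ is a weight parameter; and $v$ measures the consistency of the aspect ratios (width to height) of the two boxes.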
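For a single pair of boxes, the CIoU loss can be evaluated with the sketch below, assuming (x1, y1, x2, y2) coordinates; a small `eps` guards the divisions, including the case of identical boxes where both 1 - IoU and v are zero. YOLOv5 computes the same quantity on batched tensors, so this scalar version is only illustrative.

```python
import math


def ciou_loss(pred, target, eps=1e-9):
    """CIoU loss between two boxes (x1, y1, x2, y2): 1 - IoU + rho^2 / c^2 + alpha * v."""
    px1, py1, px2, py2 = pred
    tx1, ty1, tx2, ty2 = target
    w, h = px2 - px1, py2 - py1
    w_gt, h_gt = tx2 - tx1, ty2 - ty1
    # Plain IoU.
    iw = max(0.0, min(px2, tx2) - max(px1, tx1))
    ih = max(0.0, min(py2, ty2) - max(py1, ty1))
    inter = iw * ih
    iou = inter / (w * h + w_gt * h_gt - inter + eps)
    # Squared distance between the box centres (rho^2).
    rho2 = ((px1 + px2) / 2 - (tx1 + tx2) / 2) ** 2 + ((py1 + py2) / 2 - (ty1 + ty2) / 2) ** 2
    # Squared diagonal of the smallest box enclosing both boxes (c^2).
    c2 = (max(px2, tx2) - min(px1, tx1)) ** 2 + (max(py2, ty2) - min(py1, ty1)) ** 2 + eps
    # Aspect-ratio consistency term v and its trade-off weight alpha.
    v = (4 / math.pi ** 2) * (math.atan(w_gt / h_gt) - math.atan(w / h)) ** 2
    alpha = v / ((1 - iou) + v + eps)
    return 1 - iou + rho2 / c2 + alpha * v
```

For the boxes (0, 0, 2, 2) and (1, 0, 3, 2), IoU = 1/3, ρ² = 1, c² = 13, and v = 0, so the loss is 1 - 1/3 + 1/13 ≈ 0.744.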

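The classification loss and confidence loss both use the BCEWithLogitsLoss function, a binary cross-entropy loss applied to logits. They are calculated as

$$L_{conf} = -\sum_{i=0}^{K \times K}\sum_{j=0}^{M} I_{ij}^{obj}\left[\hat{C}_i \log C_i + (1 - \hat{C}_i)\log(1 - C_i)\right] - \lambda_{noobj}\sum_{i=0}^{K \times K}\sum_{j=0}^{M} I_{ij}^{noobj}\left[\hat{C}_i \log C_i + (1 - \hat{C}_i)\log(1 - C_i)\right]$$

$$L_{cls} = -\sum_{i=0}^{K \times K}\sum_{j=0}^{M} I_{ij}^{obj}\sum_{c \in classes}\left[\hat{p}_i(c)\log p_i(c) + (1 - \hat{p}_i(c))\log(1 - p_i(c))\right]$$

where K × K denotes the number of grid cells and M denotes the number of bounding boxes predicted per grid cell. $C_i = \mathrm{sigmoid}(c_i)$, where $c_i$ is the raw network output, and $C_i$ and $\hat{C}_i$ represent the confidence scores of the prediction box and the label box in the i-th grid cell, respectively. $p_i(c)$ is likewise obtained with the sigmoid function, and $p_i(c)$ and $\hat{p}_i(c)$ represent the predicted and true probabilities that the object in the i-th grid cell belongs to class c. $I_{ij}^{obj}$ indicates whether the j-th bounding box in the i-th grid cell is responsible for detecting an object of this class: its value is 1 if so and 0 otherwise, and $I_{ij}^{noobj} = 1 - I_{ij}^{obj}$. $\lambda_{noobj}$ is a hyperparameter that weights the confidence loss of boxes predicted in grid cells without an object; it is generally set to 0.5.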
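The binary cross-entropy on logits can be computed in the numerically stable form max(x, 0) - xy + log(1 + e^(-|x|)), which is algebraically equal to applying the sigmoid and then the log terms but does not overflow for large |x|. The sketch below also down-weights boxes without an object by 0.5, as described above. It is a simplified NumPy illustration of the formulation; the YOLOv5 code computes the loss with PyTorch's `torch.nn.BCEWithLogitsLoss` and differs in details such as averaging and weighting, so the numbers are not directly comparable. The function names are hypothetical.

```python
import numpy as np


def bce_with_logits(logits, targets):
    """Element-wise binary cross-entropy on raw logits, computed stably:
    max(x, 0) - x * y + log(1 + exp(-|x|))
    == -[y * log(sigmoid(x)) + (1 - y) * log(1 - sigmoid(x))]."""
    x = np.asarray(logits, float)
    y = np.asarray(targets, float)
    return np.maximum(x, 0) - x * y + np.log1p(np.exp(-np.abs(x)))


def confidence_loss(conf_logits, conf_targets, obj_mask, lambda_noobj=0.5):
    """Summed confidence loss: full weight for boxes responsible for an object,
    weight lambda_noobj for boxes without an object."""
    per_box = bce_with_logits(conf_logits, conf_targets)
    obj_mask = np.asarray(obj_mask, bool)
    return float(per_box[obj_mask].sum() + lambda_noobj * per_box[~obj_mask].sum())
```

The classification term uses the same `bce_with_logits` per class, summed only over boxes responsible for an object.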

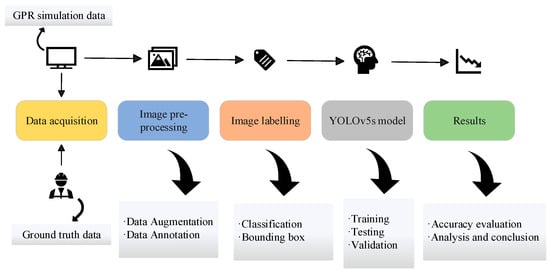

The methodology flowchart proposed in this paper is shown in Figure 8.

Figure 8. The methodology flowchart.

5. Model Training and Results Analysis

5.1. Training and Testing

The computing environment used in this study is as follows. The operating system is Ubuntu 18.04 LTS, and the CPU is an Intel(R) Xeon(R) Silver 4210 CPU @ 2.20GHz with 64 GB of RAM. The GPU is an NVIDIA GeForce RTX 2080Ti with 11 GB of VRAM. The CUDA version is v10.2.89, and the cuDNN version is v7.6.5. The deep learning framework is PyTorch with Python 3.8. The YOLOv5s model applied in this study has a depth multiple of 0.33 and a width multiple of 0.5. The image dataset first undergoes data augmentation at the input end and is then fed into the backbone network for feature extraction. Features are subsequently refined in the neck network, and finally, feature information at three different scales is passed to the prediction head module for predicting target categories and positions. The initial hyperparameters for training were an initial learning rate of 0.01, a maximum of 200 iterations, a batch size of 16, a weight decay coefficient of 0.0005, and a momentum factor of 0.937.
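The depth and width multiples can be illustrated with the scaling rules assumed here from the public YOLOv5 model parser: channel counts in the base configuration are multiplied by the width multiple and rounded up to a multiple of 8, and the number of repeated bottleneck blocks is multiplied by the depth multiple and rounded, with a minimum of 1. The function names below are hypothetical.

```python
import math


def make_divisible(x: float, divisor: int = 8) -> int:
    """Round a channel count up to the nearest multiple of `divisor`."""
    return math.ceil(x / divisor) * divisor


def scaled_channels(c: int, width_multiple: float = 0.5) -> int:
    """Output channels of a layer after applying the width multiple."""
    return make_divisible(c * width_multiple, 8)


def scaled_repeats(n: int, depth_multiple: float = 0.33) -> int:
    """Number of repeated bottleneck blocks after applying the depth multiple."""
    return max(round(n * depth_multiple), 1) if n > 1 else n
```

With the YOLOv5s multiples, a base Focus layer with 64 channels shrinks to 32 channels and the base 1024-channel SPP layer to 512 channels, consistent with the feature map sizes given in Section 2, while CSP blocks repeated 9 times are reduced to 3.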

5.2. Results Analysis

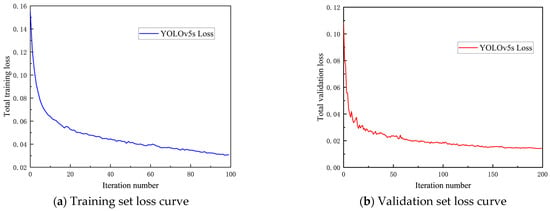

Figure 9 illustrates the changes in the overall loss during training and validation of the YOLOv5s object detection model on the dataset. As Figure 9a shows, the overall training loss decreases rapidly in the initial phase and then more slowly. Figure 9b shows that the validation loss stabilizes as the number of iterations increases. The trained YOLOv5s model shows no sign of overfitting, indicating that the model in this study has good generalization capability. The model converges relatively quickly because the earth dam disease dataset consists of forward simulation images and field images that were preselected for distinct features.

Figure 9. Overall loss function curve of the YOLOv5s model.

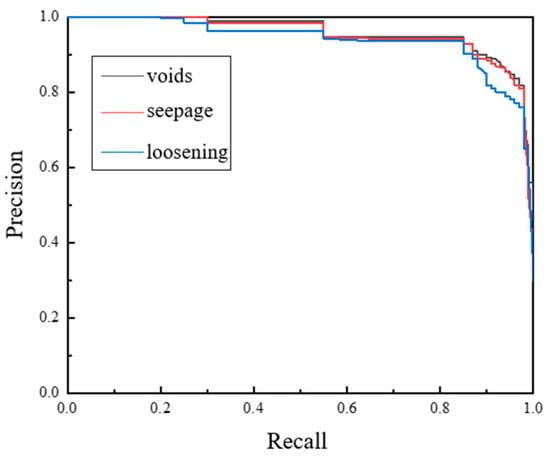

At a given IoU threshold, precision and recall are computed from the sample data as the confidence threshold varies, and these pairs form the P-R curve. In this study, an IoU threshold of 0.5 was selected, and the P-R curve obtained by training the YOLOv5s model on the earth dam disease dataset is shown in Figure 10.

Figure 10. P-R curve of the YOLOv5s model.

Figure 10 shows that the object detection model achieves relatively high precision and recall for voids and seepage in GPR images, whereas its recognition performance for loosening is somewhat lower. The average precision of the YOLOv5s object detection model is 96.0% for voids, 95.5% for seepage, and 93.9% for loosening, giving an overall mean average precision of 95.13%. This is because voids and seepage produce distinct reflected-wave features with few diffracted and refracted waves, so their overall waveforms are more regular and easier to identify. In contrast, loosened zones produce many diffracted and refracted waves and reflected waves of smaller amplitude, resulting in chaotic waveforms from which feature information is harder to extract, which makes identification more difficult.

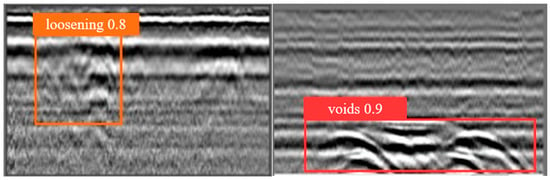

To further validate the actual detection performance of the YOLOv5s object detection model, it was evaluated on the 350 GPR images of earth dam disease in the test set. Sample test results, including the detection boxes and confidence scores, are shown in Figure 11.

Figure 11. Test results of the GPR images of earth dam disease.

6. Conclusions

An object detection model for ground-penetrating radar images of earth dam disease based on the YOLOv5s algorithm was established to intelligently identify and classify such images. Raw images from actual earth dam disease detection and GPR forward simulation images were used as the initial dataset, which was expanded through data augmentation. The LabelImg annotation tool was used to classify and label earth dam diseases, creating an object detection dataset that contains earth dam disease features, and the YOLOv5s model was trained on this dataset. The results indicate the following:

(1) When trained on the custom dataset, the YOLOv5s object detection model shows an initial decrease in the overall loss followed by stabilization, with no signs of overfitting, demonstrating good generalization capability.

(2) The average precision of the YOLOv5s object detection model differed among earth dam diseases: 96.0% for voids, 95.5% for seepage, and 93.9% for loosening.

(3) The YOLOv5s object detection model can identify and classify ground-penetrating radar images of earth dam disease, offering an effective method for intelligent detection of earth dam disease in practical engineering applications.

(4) Because many factors affect GPR field images, adding more GPR field images to train the object detection model is expected to improve its robustness and yield a more accurate recognition model.

Author Contributions

Conceptualization, B.X. and J.G.; methodology, B.X.; software, S.H.; validation, Y.L. and B.X.; formal analysis, J.C.; investigation, R.P.; resources, B.X.; data curation, S.H.; writing–original draft preparation, B.X.; writing–review and editing, J.G.; visualization, R.P.; supervision, J.G.; project administration, B.X.; funding acquisition, J.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Open Research Fund of Jiangxi Provincial Research Center on Hydraulic Structures with Grant No. 2021SKSG01, the Open Fund of State Key Laboratory of Coastal and Offshore Engineering, Dalian University of Technology with Grant No. LP2114, and the Open Fund of Guangxi Key Laboratory of Water Engineering Materials and Structures with Grant No. GXHRI-WEMS-2022-04.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

All data, models, and code generated or used during the study appear in the published article.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Gołębiowski, T.; Piwakowski, B.; Ćwiklik, M.; Bojarski, A. Application of Combined Geophysical Methods for the Examination of a Water Dam Subsoil. Water 2021, 13, 2981.

- Hu, S.; Lu, J.; Wang, G. Application analysis of detecting water-rich areas with ground-penetrating radar. Hydro-Sci. Eng. 2012, 6, 33–37.

- Gołębiowski, T.; Małysa, T. Application of GPR Method for Detection of Loose Zones in Flood Levee. E3S Web Conf. 2018, 30, 1008.

- Marcak, H.; Golebiowski, T. The use of GPR attributes to map a weak zone in a river dike. Explor. Geophys. 2014, 45, 125–133.

- Adamo, N.; Al-Ansari, N.; Sissakian, V.; Laue, J.; Knutsson, S. Geophysical Methods and their Applications in Dam Safety Monitoring. J. Earth Sci. Geotech. Eng. 2020, 11, 291–345.

- Martínez-Pagán, P.; Gómez-Ortiz, D.; Martín-Crespo, T.; Martín-Velázquez, S.; Martínez-Segura, M. Electrical Resistivity Imaging Applied to Tailings Ponds: An Overview. Mine Water Environ. 2021, 40, 285–297.

- Neyamadpour, A.; Abbasinia, M. Application of electrical resistivity tomography technique to delineate a structural failure in an embankment dam: Southwest of Iran. Arab. J. Geosci. 2019, 12, 420.

- Shin, S.; Park, S.; Kim, J.-H. Time-lapse electrical resistivity tomography characterization for piping detection in earthen dam model of a sandbox. J. Appl. Geophys. 2019, 170, 103834.

- Loperte, A.; Soldovieri, F.; Lapenna, V. Monte Cotugno Dam Monitoring by the Electrical Resistivity Tomography. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 5346–5351.

- Hui, L.; Haitao, M. Application of Ground Penetrating Radar in Dam Body Detection. Procedia Eng. 2011, 26, 1820–1826.

- Vonnisa, M.; Shimomai, T.; Hashiguchi, H.; Marzuki, M. Retrieval of Vertical Structure of Raindrop Size Distribution from Equatorial Atmosphere Radar and Boundary Layer Radar. Emerg. Sci. J. 2022, 6, 448–459.

- Dong, Z.; Xue, B.; Lei, J.; Zhao, X.; Gao, J. Study on Propagation Characteristics of Ground Penetrating Radar Wave in Dikes and Dams with Polymer Grouting Repair Using Finite-Difference Time-Domain with Perfectly Matched Layer Boundary Condition. Sustainability 2022, 14, 10293.

- Lin, T.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2999–3007.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 39, 1137–1149.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 779–788.

- Haque, I.; Alim, M.; Alam, M.; Nawshin, S.; Noori, S.R.H.; Habib, T. Analysis of Recognition Performance of Plant Leaf Diseases Based on Machine Vision Techniques. J. Human Earth Future 2022, 3, 129–137.

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6517–6525.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Bochkovskiy, A.; Wang, C.; Liao, H.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

- Jocher, G.R.; Stoken, A.; Borovec, J.I.; Chaurasia, A.; Changyu, L.; Hogan, A.; Hajek, J.; Diaconu, L.; Kwon, Y.; Defretin, Y.; et al. ultralytics/yolov5: v5.0—YOLOv5-P6 1280 models, AWS, Supervise.ly and YouTube integrations. 2021. Available online: https://api.semanticscholar.org/CorpusID:244964519 (accessed on 26 May 2022).

- Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A Single-Stage Object Detection Framework for Industrial Applications. arXiv 2022, arXiv:2209.02976.

- Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. YOLOv7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 7464–7475.

- Zhu, X.; Lyu, S.; Wang, X.; Zhao, Q. TPH-YOLOv5: Improved YOLOv5 Based on Transformer Prediction Head for Object Detection on Drone-captured Scenarios. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision Workshops (ICCVW 2021), Montreal, QC, Canada, 11–17 October 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 2778–2788.

- Kurdthongmee, W.; Kurdthongmee, P.; Suwannarat, K.; Kiplagat, J.K. A YOLO Detector Providing Fast and Accurate Pupil Center Estimation using Regions Surrounding a Pupil. Emerg. Sci. J. 2022, 6, 985–997.

- Yan, B.; Fan, P.; Lei, X.; Liu, Z.; Yang, F. A Real-Time Apple Targets Detection Method for Picking Robot Based on Improved YOLOv5. Remote Sens. 2021, 13, 1619.

- Wang, S.; Chen, X.; Dong, Q. Detection of Asphalt Pavement Cracks Based on Vision Transformer Improved YOLO V5. J. Transp. Eng. Part B Pavements 2023, 149, 04023004.

- Sozzi, M.; Cantalamessa, S.; Cogato, A.; Kayad, A.; Marinello, F. Automatic Bunch Detection in White Grape Varieties Using YOLOv3, YOLOv4, and YOLOv5 Deep Learning Algorithms. Agronomy 2022, 12, 319.

- Terven, J.; Cordova-Esparza, D. A Comprehensive Review of YOLO: From YOLOv1 to YOLOv8 and Beyond. arXiv 2023, arXiv:2304.00501.

- Jiang, Z.; Zeng, Z.; Li, J.; Liu, F.; Li, W. Simulation and analysis of GPR signal based on stochastic media model with an ellipsoidal autocorrelation function. J. Appl. Geophys. 2013, 99, 91–97.

- Rezatofighi, H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized Intersection Over union: A metric and a Loss for Bounding Box Regression. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2019), Long Beach, CA, USA, 15–20 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 658–666.

- Xue, J.; Cheng, F.; Li, Y.; Song, Y.; Mao, T. Detection of Farmland Obstacles Based on an Improved YOLOv5s Algorithm by Using CIoU and Anchor Box Scale Clustering. Sensors 2022, 22, 1790.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).