1. Introduction

Water is one of the most important materials on earth. With human industrial production, agricultural breeding, and daily activities, a large amount of sewage is discharged into the surrounding water environment, resulting in environmental pollution and a severe impact on water supply ecology and human health. Monitoring the water quality in time and obtaining the temporal-spatial variation characteristics of regional water pollutant concentration is of great significance for assessing the risk of water pollution and effectively preventing water pollution. Traditional water quality detection methods are costly, time-consuming, and laborious. The pollutant concentration obtained at the sampling point cannot reflect the distribution of pollutants in the whole region. Using remote sensing images can realize regional synchronous observation, obtain the overall distribution of contaminants, and provide timely and reliable prediction results for inland water quality monitoring, which is not available through traditional water quality detection methods.

Water quality monitoring based on remote sensing technology is an important research direction of environmental remote sensing, and NH

3-N is a valuable reference index for water pollution prevention and control. The concentration of NH

3-N in water is related to water eutrophication and suspended solids [

1]. Gong et al. found that the correlation coefficient of nitrogen was the highest at 404 nm and 447 nm [

2]. Wang et al. found that NH

3-N highly correlated with the red, green, and near-infrared (NIR) bands of the SPOT 5 satellite [

3]. Existing studies have shown that the relationship between NH

3-N concentration and reflection spectrum is the theoretical basis for applying remote sensing technology in water quality monitoring.

Current satellite data for remote sensing water quality monitoring include MODIS [

4,

5,

6,

7], MERIS [

8,

9], TM/ETM + [

10,

11], Worldview-2 [

12,

13], HJ-1 CCD [

14,

15], GF-1 [

16,

17], sentinel-2 [

18,

19], etc. Most of these studies focus on large water bodies such as lakes or offshore, and there are few studies on inland rivers, especially on small and medium-sized rivers. The inland river has higher requirements for the spatial resolution and returns period of the satellite, which is hard to meet with a single satellite sensor. Therefore, it is significant to study hyperspectral and high-resolution image fusion algorithms to improve the images’ spatial and temporal resolution for remote sensing monitoring inland rivers. Many works have used the existing Spatio-temporal fusion model for high-frequency remote sensing monitoring of ground feature changes [

20].

The Spatio-temporal fusion algorithm is based on ground features’ spectral changes in the high spatial resolution remote sensing image (MODIS). It fuses these changes into the remote sensing image with a high spatial resolution (OLI) to simulate the image with higher temporal resolution and better spatial resolution, which provides reference and image support for the study of temporal and spatial variations monitoring of surface features. The existing Spatio-temporal fusion algorithms can be classified into five types according to different principles: Spatio-temporal fusion algorithms based on spectral unmixing [

21,

22,

23], algorithms based on weight distribution [

24,

25,

26], Bayesian principle algorithms [

27,

28], feature learning algorithms [

29,

30,

31], and hybrid methods [

32,

33,

34]. The method based on spectral unmixing uses coarse pixels to estimate the value of fine pixels through spectral mixing theory. Niu et al. proposed STDFA (Spatial-Temporal Data Fusion Approach) based on this method [

22]. Unmixing-based methods have huge unmixing errors and lack in-class variation of ground objects. The weight-based algorithms include STARFM (Spatial and Temporal Adaptive Reflectance Fusion Model) [

24], ESTARFM (Enhanced Spatial and Temporal Adaptive Reflectance Fusion Model) [

26], and STAARCH (Spatial and Temporal Adaptive Algorithm for Mapping Reflectance Changes) [

25], etc. Weight-based methods use image information for weight assignment to estimate high-resolution pixel values, are invalid for heterogeneous changes, and the weight function based on experience lacks mobility. The method based on Bayesian estimation theory defines the relationship between the coarse image and the fusing image based on the Bayesian statistical principle, but the function establishment process is complex, and its performance in heterogeneous landscapes is unsatisfactory [

35]. For the learning-based method, machine learning is used to simulate the mapping relationship between high-resolution and low-resolution images so as to predict the fused image, such as SPSTFM (Sparse representation based Spatio-temporal Reflectance Fusion Model) proposed by Huang et al. [

29]. So far, dictionary pairing learning [

29], extreme learning [

36], random forest [

37], deep convolution neural network [

30], and artificial neural network [

38] have been used for Spatio-temporal data fusion. Although the fusion results are improved, the learning cost is high, the mobility is poor, and the spectral principle support is lacking support. The hybrid method integrates two or more methods in the first four categories, such as FSDAF (Flexible Spatiotemporal Data Fusion) proposed by Zhu et al., which combines unmixing, weighting function, and spatial interpolation methods to reduce the input of the image and enhance the prediction of heterogeneous changes [

32]. However, the performance is still not ideal, and it cannot meet the requirement of higher precision change monitoring.

To improve the poor performance in heterogeneous region prediction, we propose an improved Spatio-temporal data fusion model SR-FSDAF (super-resolution flexible spatiotemporal data fusion). Unlike deep learning techniques, which use high-cost training to enhance the accuracy of image fusion, it maintains the simplicity of FSDAF and uses fewer image pairs and less time. SR-FSDAF inherits the hybrid model of FSDAF and improves the thin-plate spline sampling method based on MODIS spectral information to extract spatial variation details and achieves better reconstruction results consistent with resampling purposes. SR-FSDAF can more accurately predict fine-resolution images of heterogeneous regions. Different from other multi-spectral and high-resolution images, OLI and MODIS were launched earlier and allowed free access to more historical image information. Some bands of MODIS are similar to those of OLI and have the band basis for image fusion. Therefore, SR-FSDAF was tested using MODIS and Landsat-8 OLI images and compared with other fusion methods such as STARFM. Then MODIS images, OLI images, and SR-FSDAF were applied to water quality monitoring in Xinyang City to improve the utilization of monitoring frequency and low-resolution images. The NH3-N inversion model was established based on the fused image band, and the NH3-N concentration in the Huaihe River Basin of the Xinyang area was analyzed. For NH3-N concentration inversion, we compared the accuracy of statistical regression model and random forest model, and adopted random forest model to further improve the accuracy of NH3-N concentration prediction.

2. Materials and Methods

2.1. FSDAF

Zhu et al. proposed FSDAF in 2016 [

32]. In the method, fine pixels are classified into different types. Temporal variation values of each type are roughly gained by calculating the changes of different classes of ground objects reflected in the pure pixels of MODIS. The thin plate spline sampling (TPS) is carried out on the MODIS image of the prediction date to obtain the rough spatial variation value of ground objects [

39]. TPS interpolates and resamples the data based on spatial correlation to preserve the local change information of the image. The two kinds of change values are given different weights by neighborhood information, which reduces the deviation of prediction results and has more stability and spatial continuity.

However, FSDAF’s prediction quality still declines much in the case of heterogeneous mutation. Based on the idea of FSDAF, this paper used efficient ESPCN (Efficient sub-pixel convolution neural network) [

40] to replace TPS for MODIS images, which is the main information source of spatial mutation prediction, and retain more texture information, so as to improve the prediction accuracy of spatial heterogeneity.

2.2. ESPCN

ESPCN inherits the idea of the super-resolution algorithm. In the network, the high-resolution image is down-sampled to the low-resolution image, and the convolutional neural network is used to learn the mapping relationship between the low-resolution and high-resolution images so as to realize the super-resolution reconstruction of the image.

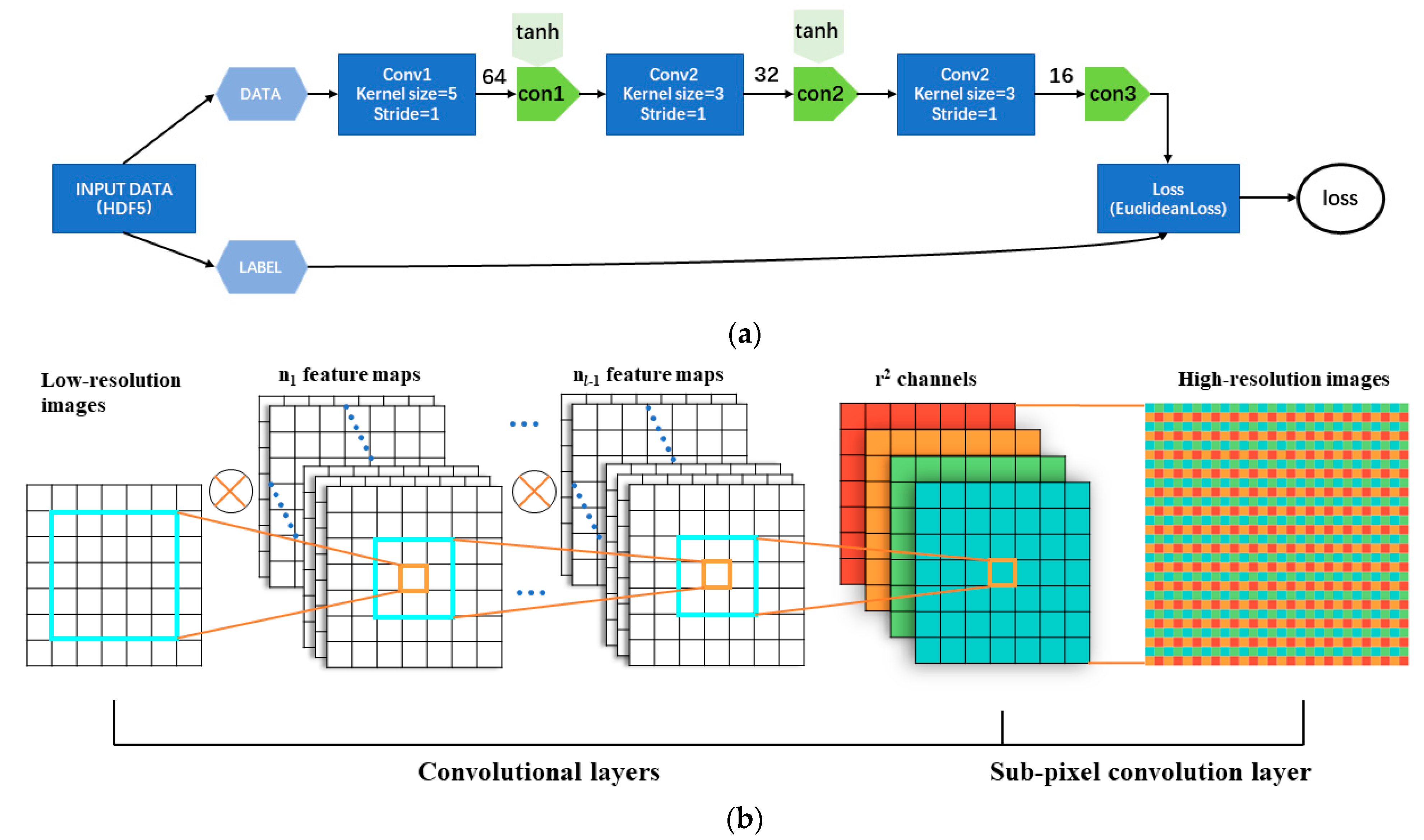

Figure 1 shows the framework and parameters of the ESPCN. ESPCN first applies the 3-layer convolutional neural network directly to low-resolution images to avoid experiencing an amplification before entering the network. After three convolution operations, the low-resolution image is mapped into a feature map with

channels, and

r is the upscaling factor. Finally, the high-resolution image is generated by a sub-pixel convolution layer. The sub-pixel convolutional layer enhances its memory for a position by periodically filtering functions, combining feature maps with channel

into high-resolution images.

The basic calculation of the network is shown in Equations (1)–(4). Equation (1) and Equation (2) follow the principle of convolution theory.

is the low-resolution image of the input network, is the high-resolution image learned by ESPCN, and is the convolution kernel function ( is the convolution layer). and are the weight and bias parameters of the convolution kernel, which are obtained by iterative learning of the network. is a nonlinear activation function. Equations (3) and (4) are function descriptions of the subpixel convolution part. is a periodic shuffle operator, which can reorder tensors of size to tensors of size .

Feeding the oversize image block to the ESPCN method will greatly affect the network effects; therefore, our paper reconstructed the input low-resolution MODIS image twice with the upsampling factor r = 4. The parameters of the network were set to = 3, () = (5, 64), () = (3, 32), and = 3, = 4. For the training samples of network learning, the image pairs are composed of the original Landsat image and the downsampled image to the 1/4 of the original image pair, and the 1/4 image and the further downsampled 1/4 low-resolution image pair. To avoid repetitive training of the original image pixels, the stride for extracting the sub-image blocks from the original image was , and the stride for extracting the sub-image blocks from the lower resolution image in the image pair was .

2.3. Improved Spatiotemporal Fusion Model SR-FSDAF

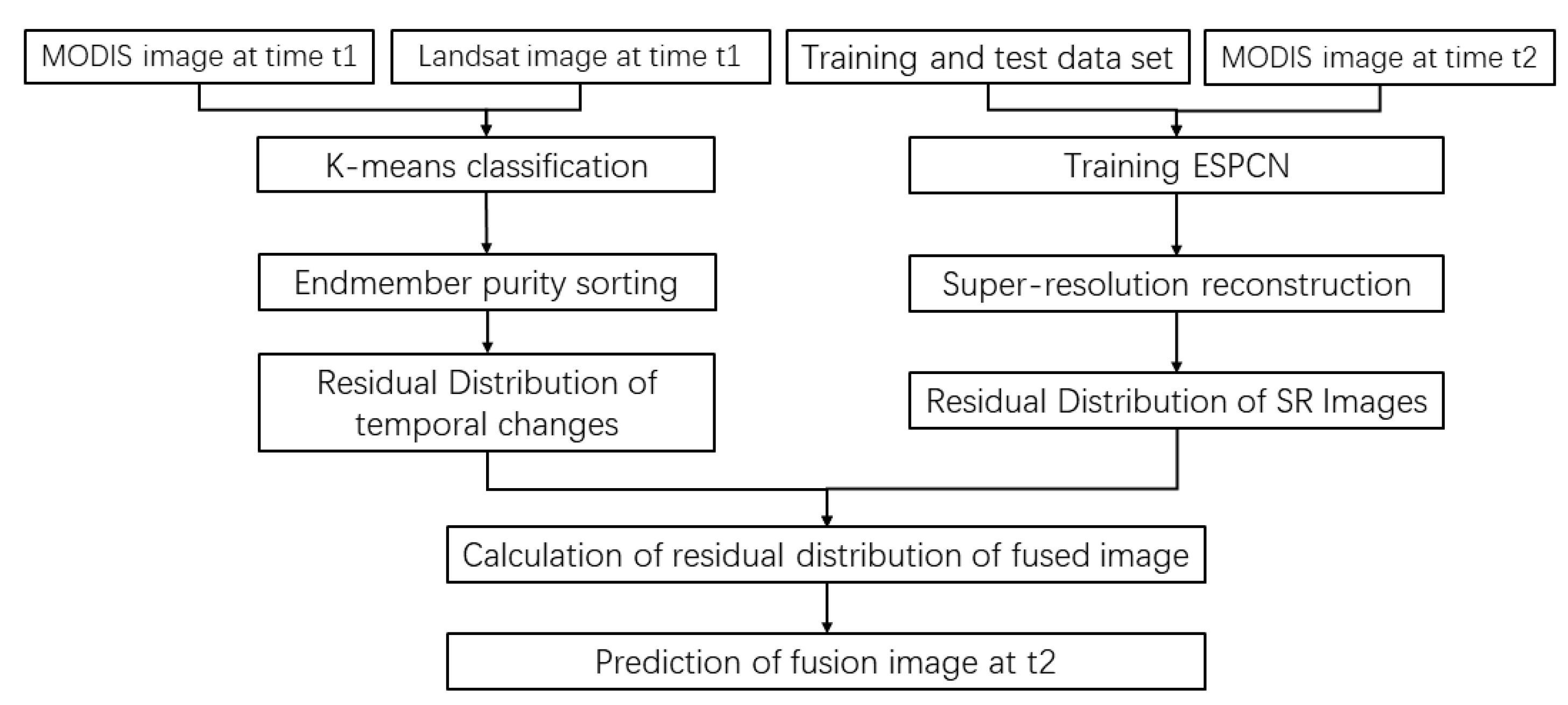

SR-FSDAF combines the super-resolution algorithm and the flexible Spatio-temporal data fusion model algorithm. The SR-FSDAF has six steps: The computing of MODIS pixel purity based on unsupervised classification, coarse estimation of the pixel temporal change, residual computing of pixel temporal changes, image reconstruction and spatial change prediction based on ESPCN super-resolution, residual distribution calculation, and enhancement and fusion based on neighborhood information. For the convenience of the description, the high spatial resolution Landsat image is defined as fine images, and the high temporal resolution MODIS image is expressed as coarse images. Landsat and MODIS images at and the MODIS image at t2 are used to predict Landsat images at t2.

Figure 2 shows the flow of the algorithm. The detailed principle of the algorithm refers to in FSDAF [

32] and ESPCN [

40]. The variables and definitions of the model are as follows:

—the pixel number of the corresponding Landsat-8 image in a MODIS pixel, which is a constant of 16;

—the coordinates of the ith MODIS pixel;

—MODIS pixel index;

—index of Landsat-8 pixels in a MODIS pixel index (: 1, 2, …, m);

, —Values of band on MODIS pixel at and ;

, —Values of the th Landsat-8 pixel in band at MODIS pixel at and ;

—the proportion of class c Landsat-8 pixels in the th MODIS pixel;

the change of the ith MODIS pixel from to at band ;

—changes of category pixels on the th band of Landsat-8 images from to , and is one of the classification results of ground objects.

2.3.1. Unsupervised Classification of Landsat Images at Time

Through image preprocessing, the spatial resolution of the MODIS image is 480 m. The spatial resolution of the Landsat image is 30 m. Therefore, the coverage area of one MODIS pixel is the same as that of 16 Landsat pixels. Based on this correspondence, we use the K-means algorithm to classify the Landsat images at time

t1 and set the number of clustering to four categories: water, farmland, buildings, and woodland.

represents the proportion of Landsat pixels of classification

c in 16 pixels (

c = 1, … 4). For calculation, see Formula (5).

is the number of Landsat pixels of classification c in 16 pixels.

The K-means method first calculates the initial mean of the categories uniformly distributed in the data space and then iterates with the principle of the shortest distance to aggregate the pixels into the nearest cluster. Recalculate the mean values of classes in each iteration and reclassify pixels with these mean values until the variance within classes meets the requirements.

2.3.2. Rough Estimation of Pixel Temporal Change

The second step is calculating the change of MODIS image reflectivity from

to

. The calculation formula shows in Equation (6).

According to the spectral unmixing principle, the spectral value of the MODIS pixel can be expressed by the weighted calculation results of the spectral reflectance value of various ground objects in the pixel. Therefore, the temporal variation of the MODIS pixel can also be calculated by the Formula (7). The

in the Formula (7) is variation values of Landsat class

objects on band

, which can be obtained by least squares inverse solution the Formula (7).

Sort the values of c-class ground objects in MODIS pixels from large to small. The larger the -value is, the higher the proportion of c-type ground objects in MODIS pixels is, and the purer the pixels are. According to the values, select n MODIS pixels from high to low to solve .

2.3.3. Residual Computing of Pixel Temporal Changes

Assuming that there is no spectral information mutation in the ground object, the prediction result of the fine-resolution image at time

is

. The calculation of the prediction result shows in Formula (8):

Usually, the spectral information of the ground objects will change between the two moments, and the resulting deviation defines as

. The calculation Formula shows in Formula (9), where

n is 16.

2.3.4. Image Reconstruction and Spatial Change Prediction Based on ESPCN Super-Resolution

Using the ESPCN network, MODIS images at time

are input to obtain higher resolution images

reconstructed by the super-resolution algorithm. The residual between the high-resolution image obtained by the ESPCN method and the true high-resolution image at

can express as

:

The ESPCN method directly applies the convolution layer to the coarse image to avoid the loss of detailed information. The sub-pixel convolution layer restores the feature map to the super-resolution image. The input MODIS images obtain a high-resolution image by three consecutive convolution operations and sub-pixel layer rearrangement. ESPCN learns the feature mapping relationship between coarse and fine images more comprehensively and can retain the spatial feature information of the input image.

2.3.5. Residual Distribution Calculation

This step uses the homogeneity index to assign weights to residual

and residual

to calculate the final residual. The difference between the two prediction results calculates as the difference residual

, which shows as Formula (11):

Use the 4 × 4 moving window to calculate the ratio

of the number of pixels consistent with the ground object category of the central fine-resolution pixel to the total number of pixels in the window as the homogeneity index. The calculation Formula is (12), where

is the central pixel of the moving window. When the pixel categories in the window are consistent, the value of

is 1, otherwise is 0.

Calculate the weight

according to the homogeneity index

(Formula (13)). The change degree of homogeneous pixels is determined based on the prediction of ESPCN super-resolution, and the change degree of heterogeneous pixels is determined based on the prediction of ESPCN super-resolution.

Normalize the

to obtain

(Formula(14)):

Calculate the residual distribution of the predicted fine resolution image based on the normalized weight

(Formula (15)). Then, calculate the change value of fine-resolution pixel

from time

to time

, as shown in Formula (16).

2.3.6. Enhancement and Fusion Based on Neighborhood Information

Use the neighborhood information to improve the prediction stability and reduce the block effect caused by calculation. For the fine-resolution image pixel

at time

,

n fine pixels with the same class and the least spectral difference with the

in the neighborhood are selected. The computation formula of spectral difference between the kth fine-resolution pixel and the similar neighborhood pixel is

, as shown in Formula (17).

The weight contribution of these similar pixels to the center pixel follows the distance principle (Formula (18)). The size of

w in

depends on the size of the neighborhood when taking 20 similar pixels. The farther the distance, the smaller the weight contribution value. After normalization, the calculation formula of weight

is (19):

After adding the neighborhood information, the final prediction image result is (20):

2.4. Inversion Models

In the process of remote sensing quantitative inversion, the accurate selection of characteristic bands is the basis for obtaining high inversion accuracy. We use the concentration the value of water quality parameter NH3-N to calculate the correlation coefficient between reflectivity and band combinations and select the band or band combination with high correlation as the modeling parameter.

The traditional statistical regression model (TSR) has been widely used in the inversion of water quality parameters [

41,

42].In this paper, the linear function, quadratic function, exponential function, and logarithmic function are used to construct the inversion model (

Table 1). The model with the highest inversion accuracy is selected as the model type of the traditional statistical regression model.

At the same time, this paper selects the random forest model with high learning efficiency as the machine learning algorithm to train and obtains the machine learning model for the back study of water quality parameters.

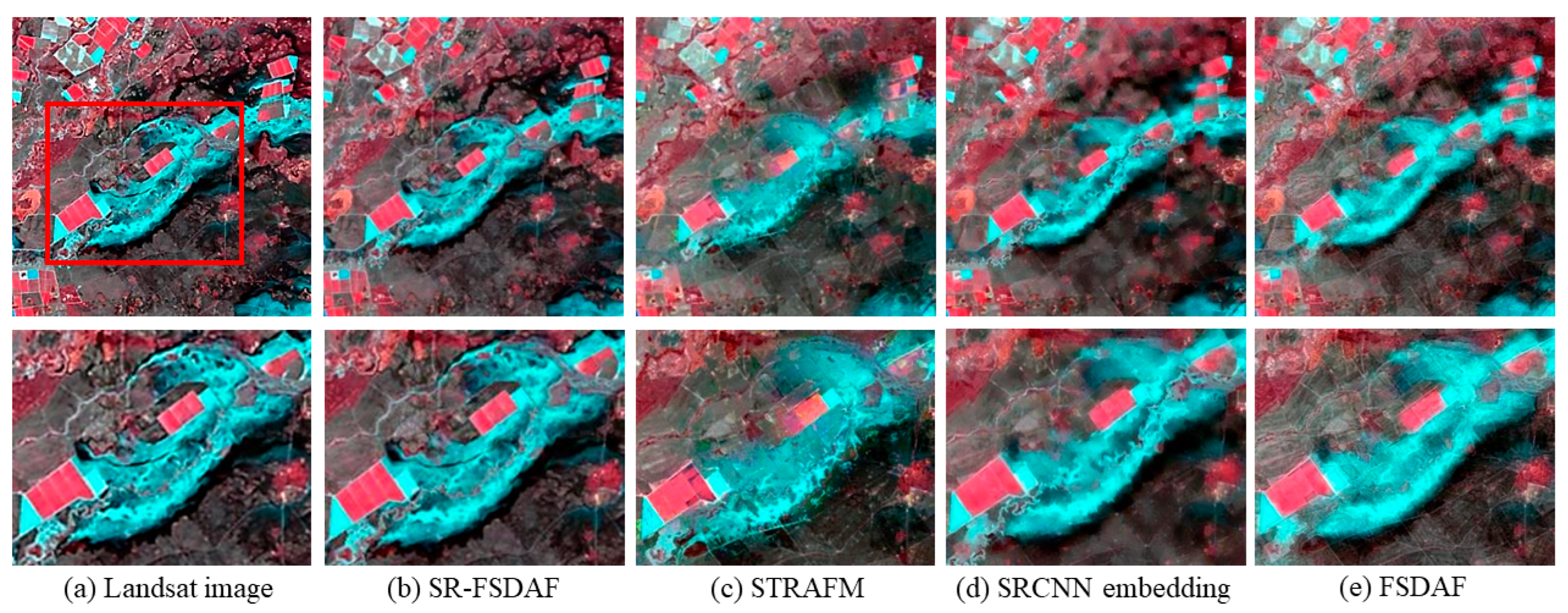

2.5. Evaluation Index

To evaluate the accuracy of the fusion method, this paper uses three algorithms: STARFM, FSDAF, and SRCNN embedding FSDAF as comparison methods. We analyze the experimental results from the qualitative evaluation and quantitative evaluation. The qualitative evaluation method compares the fusion results of different models at time with Landsat images at time . Through visual observation, we can determine whether the local details and the overall spectral difference are too large in the simulation reality. The quantitative evaluation method uses three evaluation indexes to comprehensively evaluate the overall structural similarity of the fused image, the degree of reflectivity reduction of the fusion image, and the spectral fidelity of the fusion image.

The overall structural similarity index is structural similarity SSIM, which is widely used to evaluate the linear relationship strength of two similar images. The calculation method shows in Formula (21):

and are the average values of the Landsat image and the fused image at time t2, respectively; and are the image variances of the two; and are non-0 constants used to ensure that the results are rational. The more similar the overall structure of the two images is, the closer the SSIM value is to 1.

The evaluation index of reflectivity reduction degree is the root mean square error RMSE, which reflects the simulation fusion results of pixel value reduction degree and detail information. The formula shows in (22).

is the Landsat true image, and

is the fusion image.

The evaluation index used for spectral fidelity is the spectral angle SAM (Spectral angle Mapper), which regards the single-pixel spectrum as a high-dimensional vector and calculates the vector angle of the spectral vector of the pixels in the same position of the two images. The smaller the value is, the more similar the spectrum between the pixels is. The specific angle calculation formula is as follows (23).

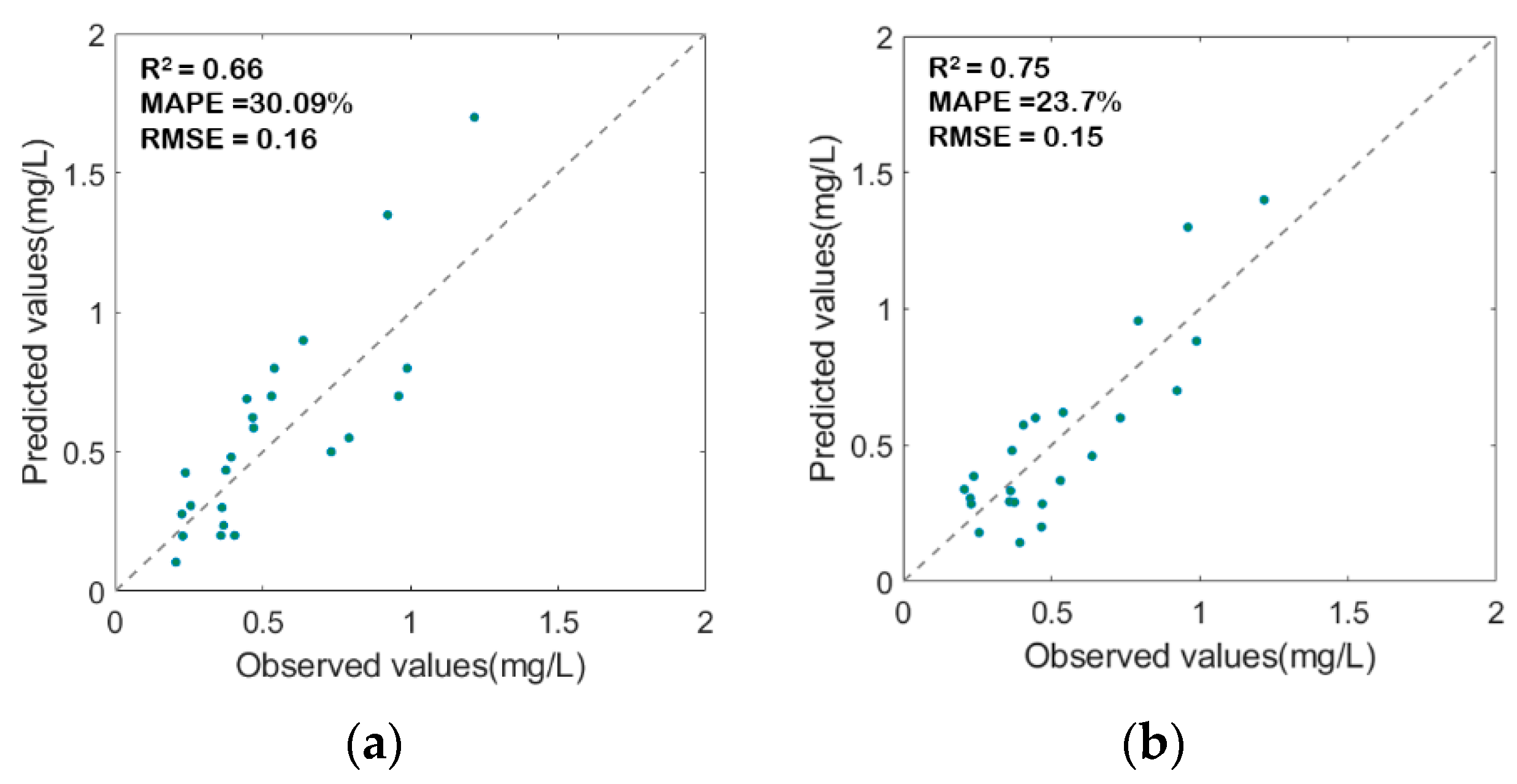

The accuracy of the inversion model was evaluated by a fitting coefficient (R

2), mean absolute percentage error (MAPE), and root mean square error (RMSE). The formula of MAPE shows in (24).

2.6. Study Area

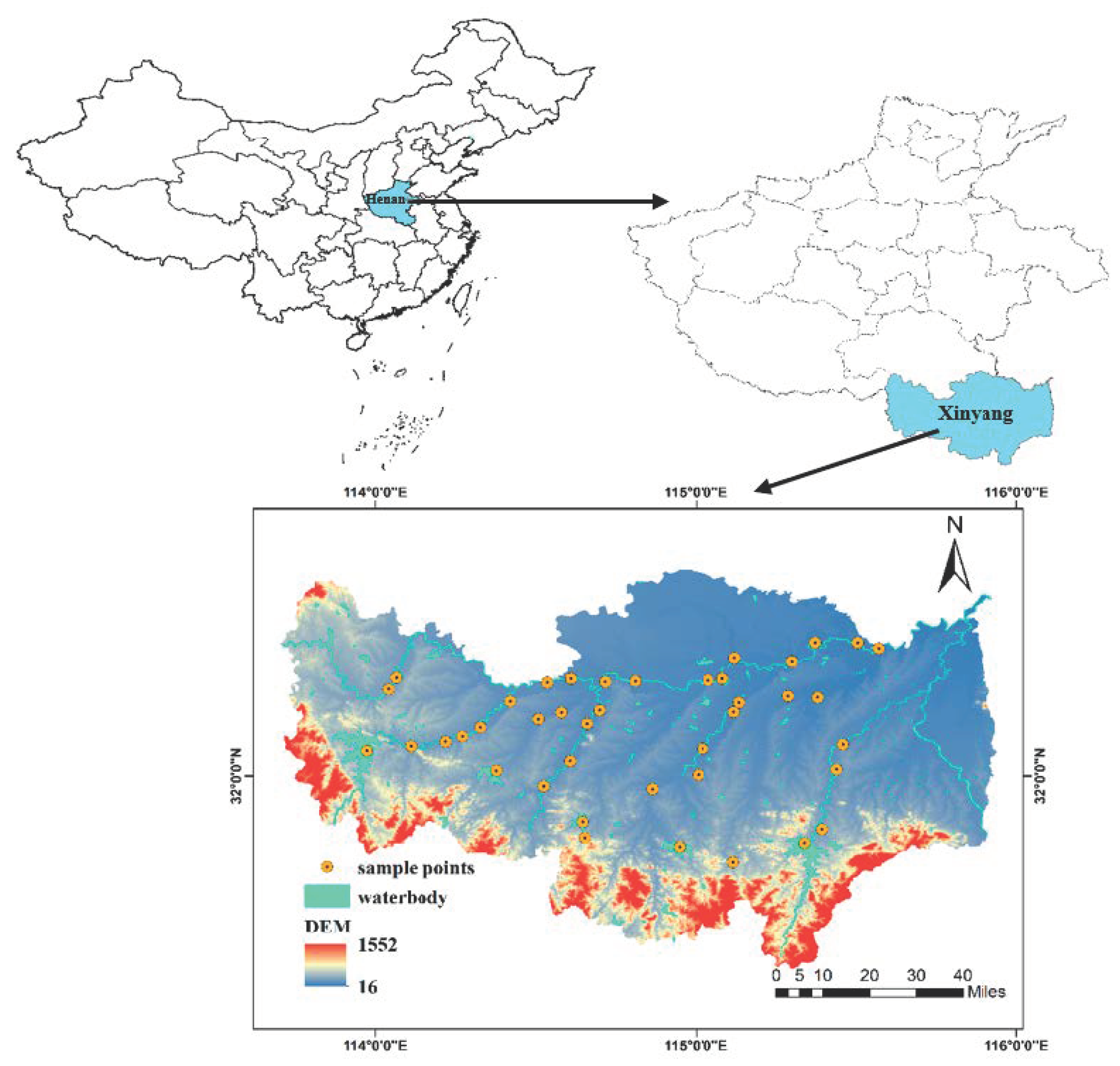

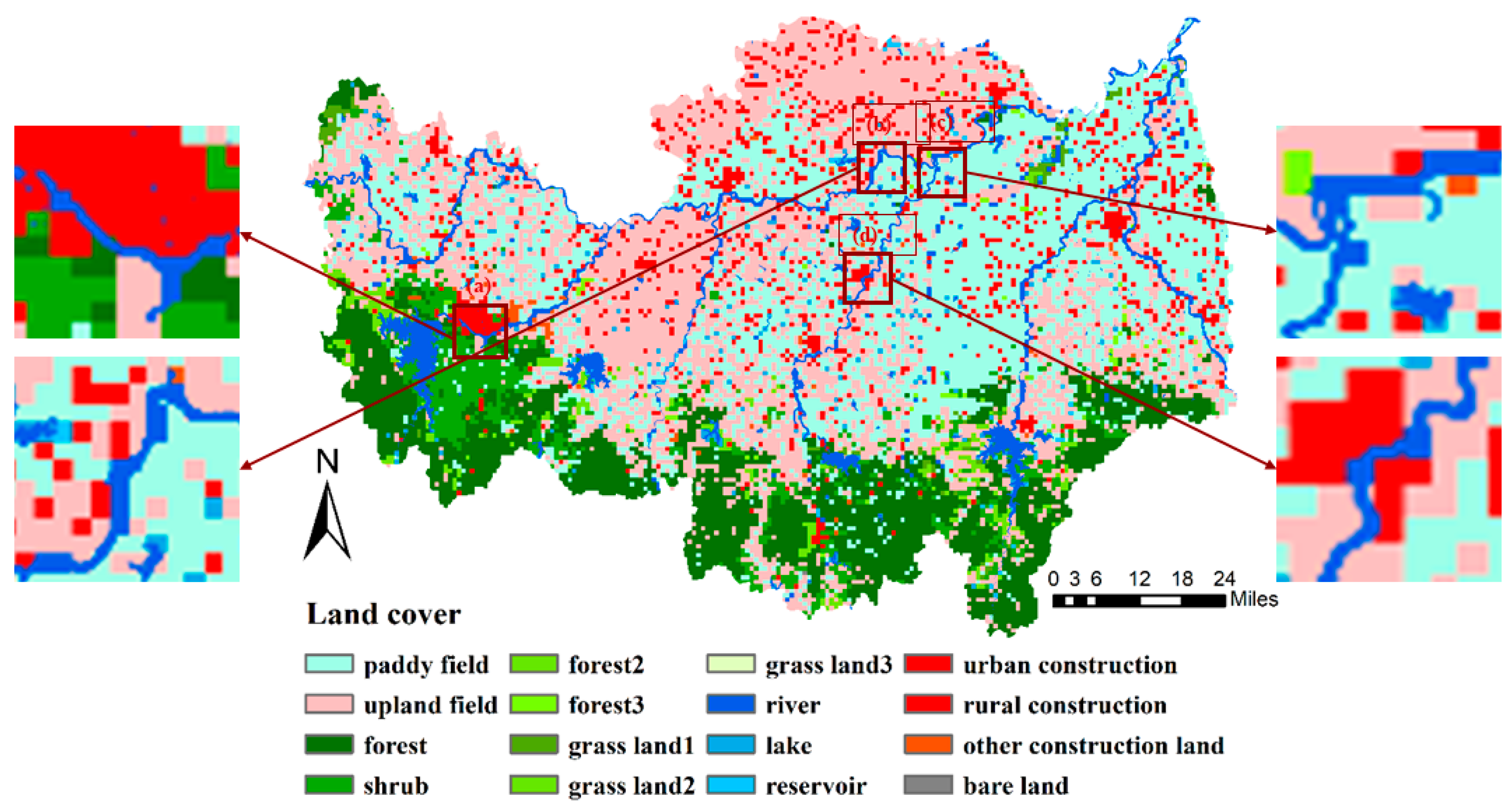

The study area is 113°45′ E~115°55′ E, 30°23′ N~32°27′ N in the Xinyang section of Huaihe River Basin. The study area is located on the boundary line between North and South China (Qinling–Huaihe Line), which belongs to the transition zone between subtropical and temperate monsoon climates and the transition zone between humid and semi-humid regions. The main tributaries in the region are shown in

Figure 2.

On 5 December 2016 and 1 January 2017, the concentrations of NH

3-N in 43 water samples were collected in the study area. The distribution of the measured sampling points and the location of the study area are shown in

Figure 3.

2.7. Landsat-8 OLI

The spatial resolution of Landsat-8 is 30 m, and the return visit period is 16 days, which is the fusion data source commonly used in the Spatio-temporal fusion algorithm. For the test of the fusion model, two sets of MODIS-Landsat image pairs are used in this paper.

The first group is the Landsat and MODIS image pairs of Xinyang City on 8 November 2017, and 24 November 2017. No heterogeneous mutation occurred during the period. The second group is the Landsat and MODIS image pairs on 26 November 2004, and 12 December 2004, in northern New South Wales, Australia, during which flood events in the region caused heterogeneous mutations.

NH

3-N inversion of remote sensing data selected less than 10% of the cloud Landsat-8 OLI data, image bands, and other specific information can be seen in the

Table 2. The selected Landsat-8 OLI images were preprocessed, such as atmospheric correction.

2.8. MODIS

MODIS has a spatial resolution of 500 m and a return visit period of 1 day, a commonly used fusion data source for Spatio-temporal fusion algorithms.

For the fusion model and NH

3-N inversion experiments, this paper uses MODIS daily surface reflectance data on the same date as the corresponding Landsat. The selected MODIS data has been preprocessed, and the MODIS is reprojected and resampled to 480 m to facilitate matching and calculation with Landsat image pixels with a spatial resolution of 16 m. The specific information of MODIS is shown in the

Table 3.

4. Discussion

The reflectance characteristics of different concentrations of water quality parameters in a specific wavelength range are the analytical basis for the quantitative inversion of water quality parameters using the spectral information of remote sensing images. Our study shows that the sensitive bands of NH3-N are Blue, Green, Red, and NIR, showing the combined frequency characteristics of nitrogen-containing functional groups.

In the actual research process, remote sensing inversion is completed by establishing effective connections between the point data obtained by field sampling and the surface data of remote sensing pixels with different spatial resolutions. The difference between the sampling and the satellite transit time, the limited water quantity of inland water, and the significant Spatio-temporal changes cause the error of inversion results. Use the Spatio-temporal fusion method to reduce the bias and reflect the change in water quality parameters better, which is the significance of this study. The time resolution of Landsat images is increased by using the Spatio-temporal algorithm and generating a series of high-frequency sequential images for water quality inversion.

The SR-FSDAF model has better visual effects and index results than the STARFM, SRCNN embedding model, and FSDAF in the case of non-heterogeneous mutation and heterogeneous mutation. For non-heterogeneous mutation images, RMSE, SSIM, and SAM of SR-FSDAF are 0.03 (mean), 0.976 (mean), and 3.417, respectively, and for heterogeneous mutation regions, RMSE, SSIM, and SAM are 0.021 (mean), 0.810 (mean) and 7.439, respectively.

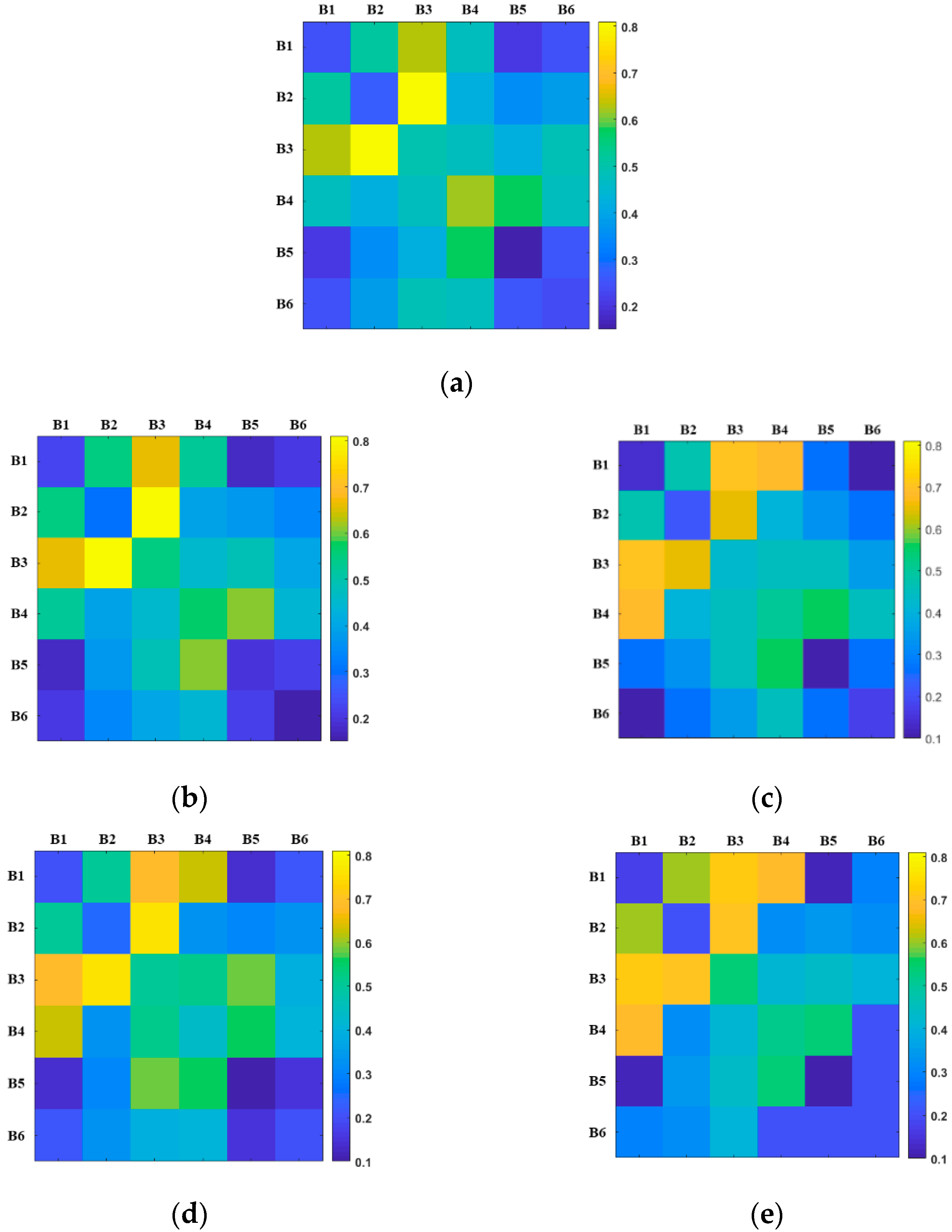

To prove the advantages of the SR-FSDAF fusion method for water quality monitoring, STARFM, SRCNN embedding model, FSDAF, and SR-FSDAF fusion image are used to calculate the correlation coefficient distribution of different band combinations and NH3-N. The most sensitive band combination and correlation of SR-FSDAF are highly consistent with Landsat-8 images. Therefore, the SR-FSDAF method can be used for quantitative inversion of water quality parameters.

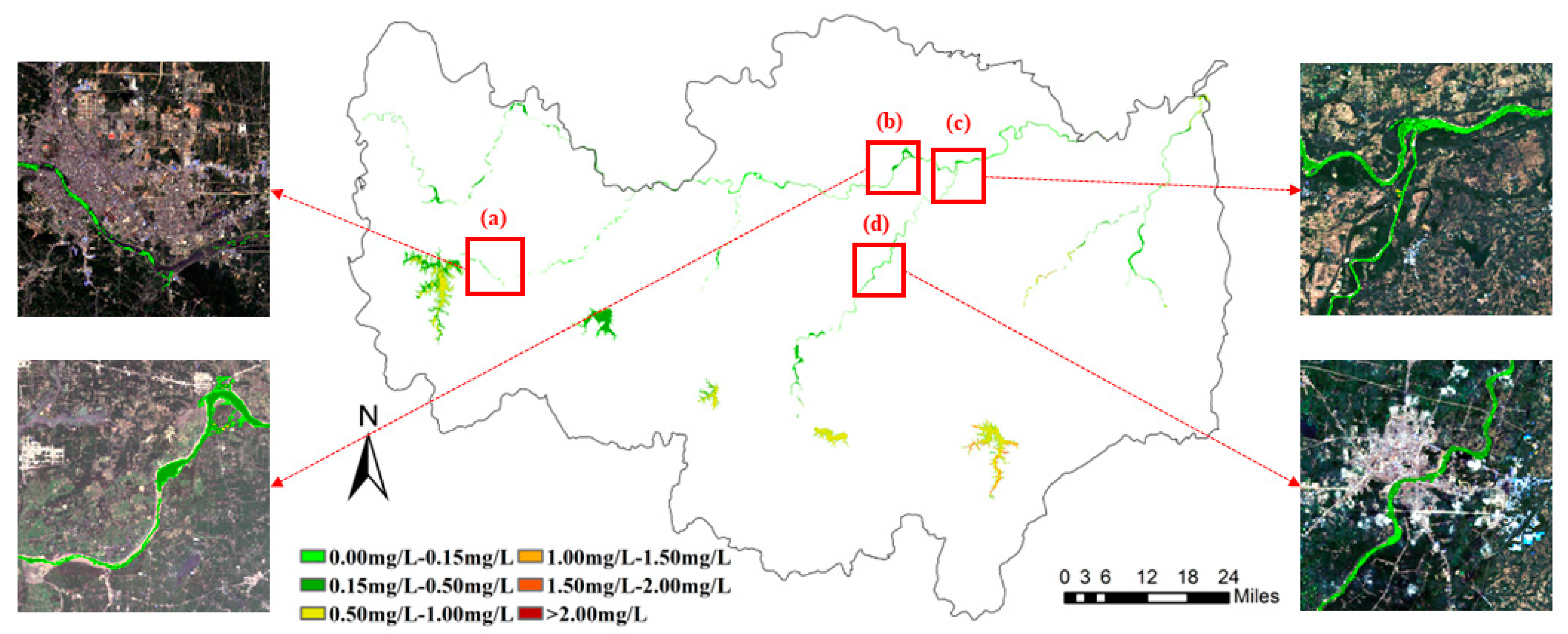

The change in water quality is closely related to the evolution of the surrounding environment. NH

3-N is an essential fertilizer for crop growth and a common component in industrial and domestic sewage. The concentration of NH

3-N in water is often affected by sewage discharge from human production and life and drugs and fertilizers used in agricultural activities. To further analyze the temporal and spatial variation characteristics of water quality in Xinyang City, we selected four regions (

Figure 9a–d). According to the 1 km-land use classification map of Xinyang City in 2017 (

Figure 10) and field survey, region (a) is the main industrial region. (b) and (c) are farmland on both sides of the river, mainly dry and paddy fields. The river in (d) passes through residential areas.

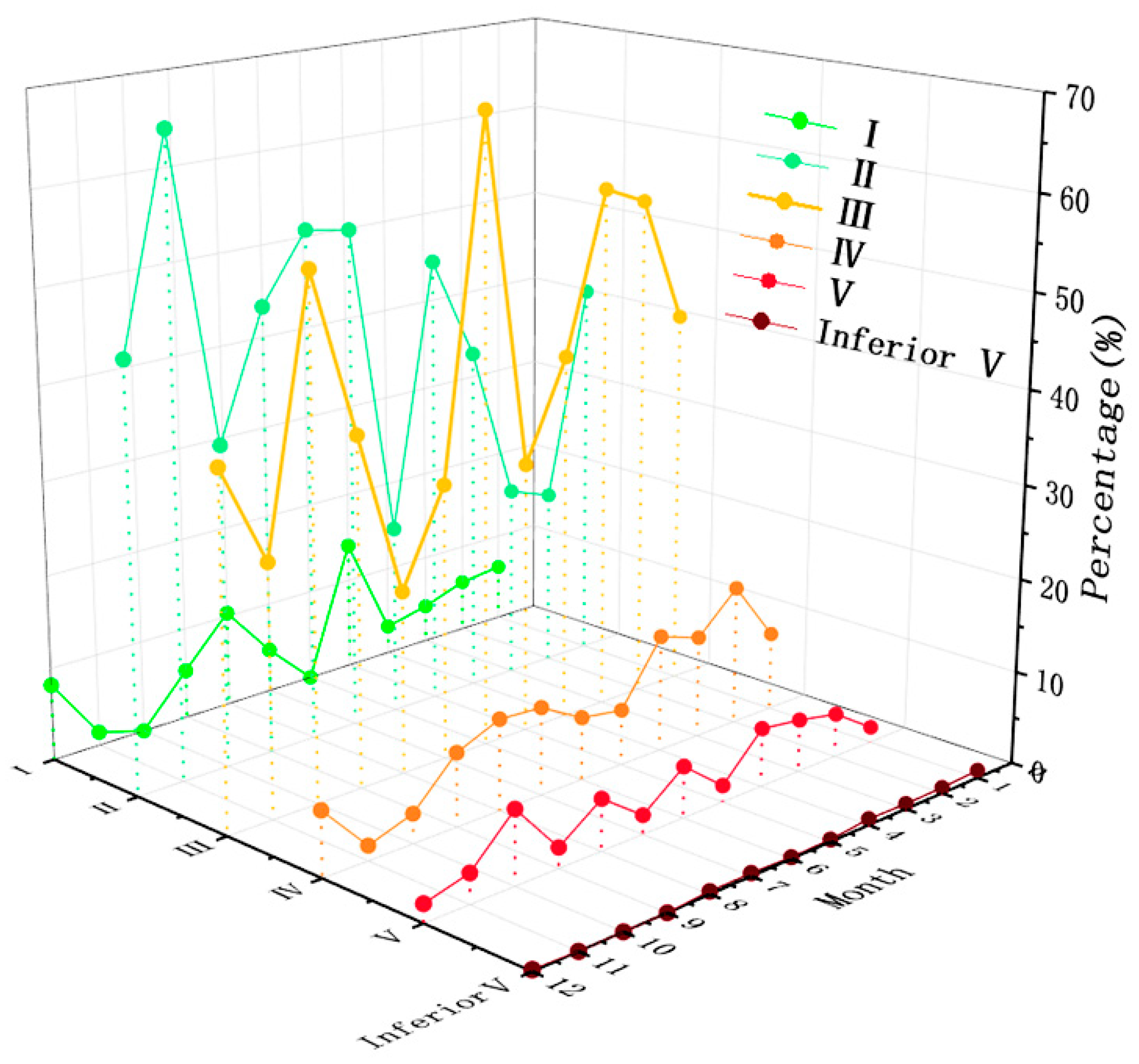

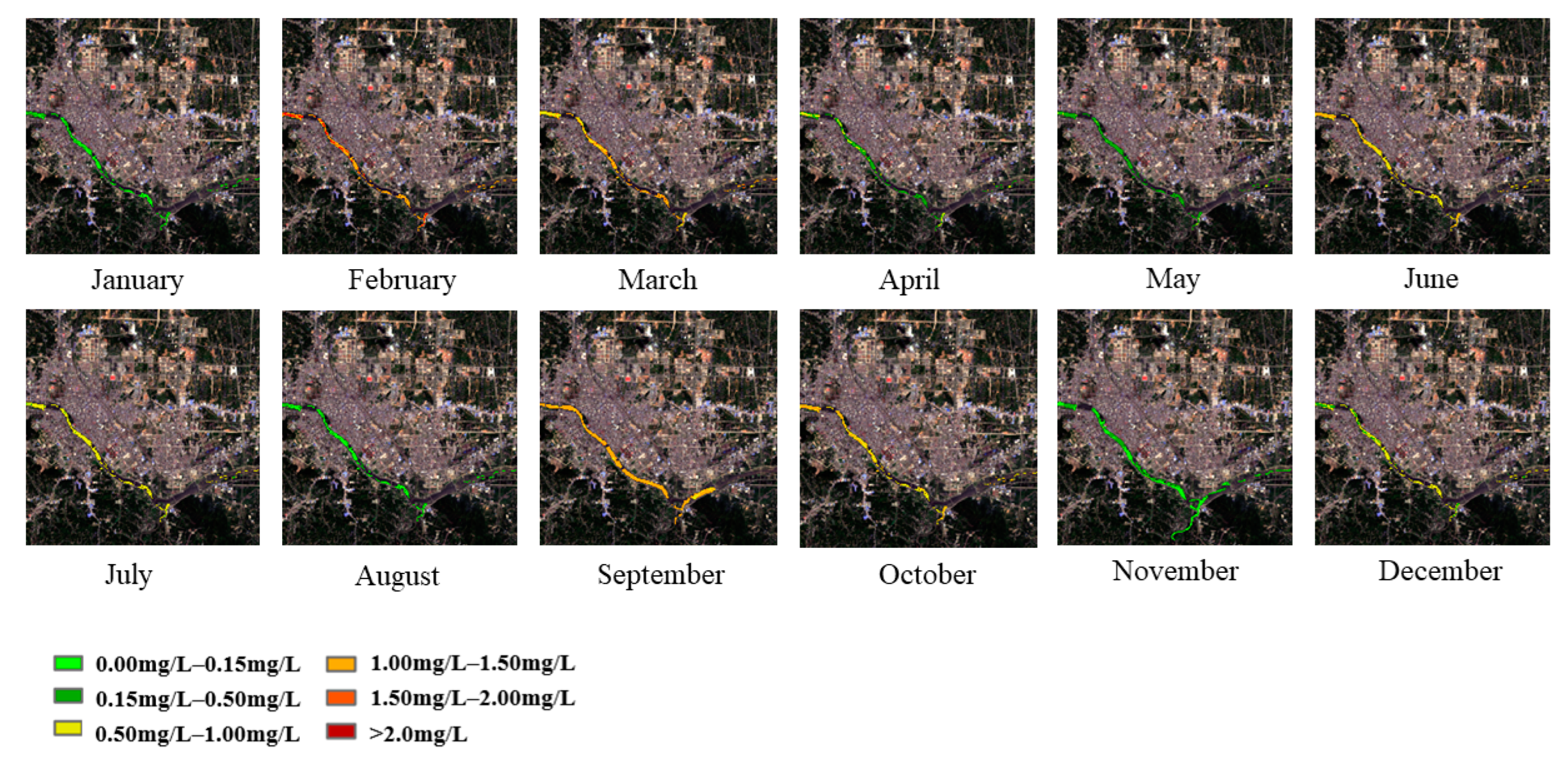

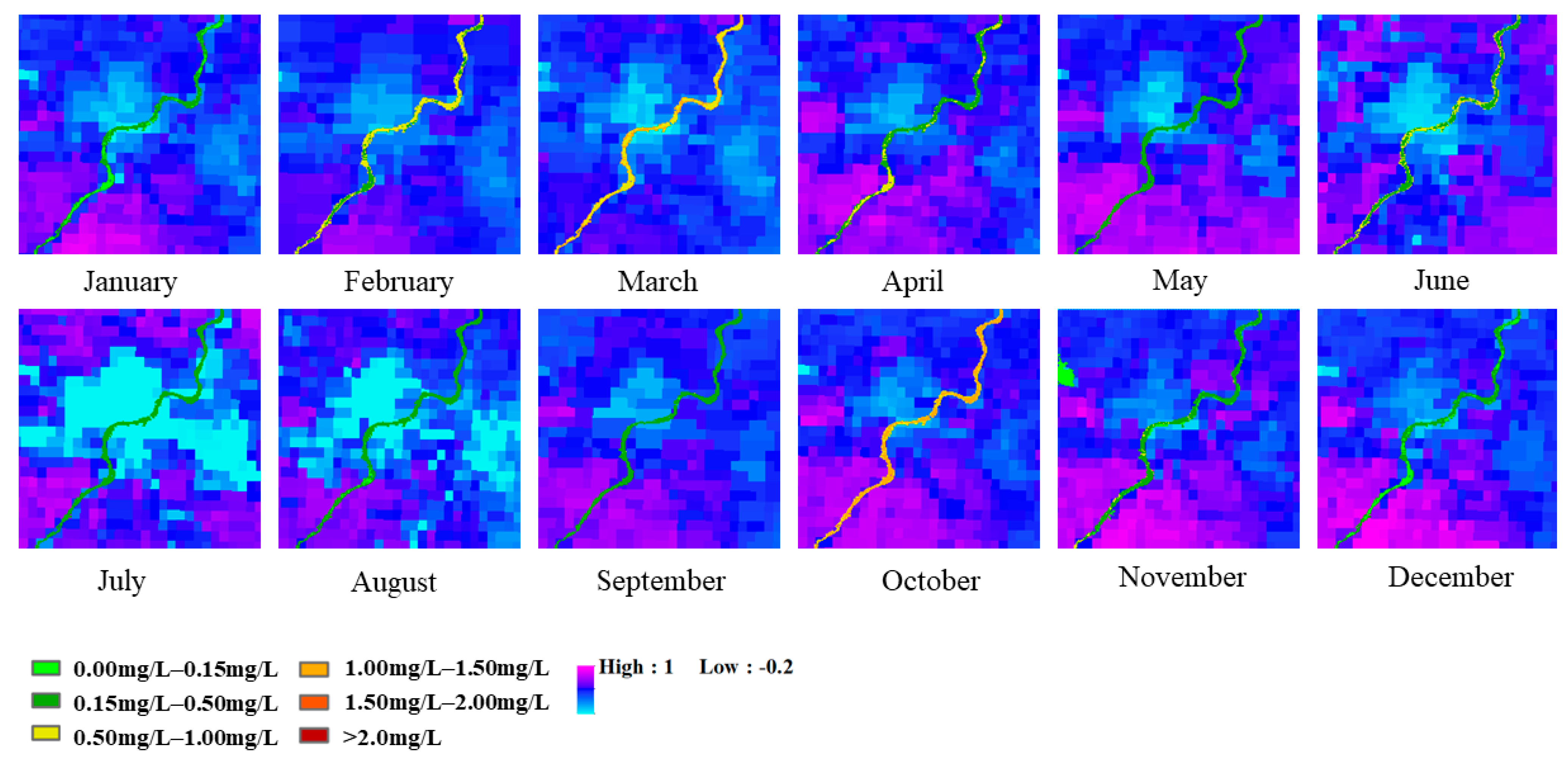

Figure 11,

Figure 12,

Figure 13 and

Figure 14 show the monthly NH

3-N concentration changes in the four regions.

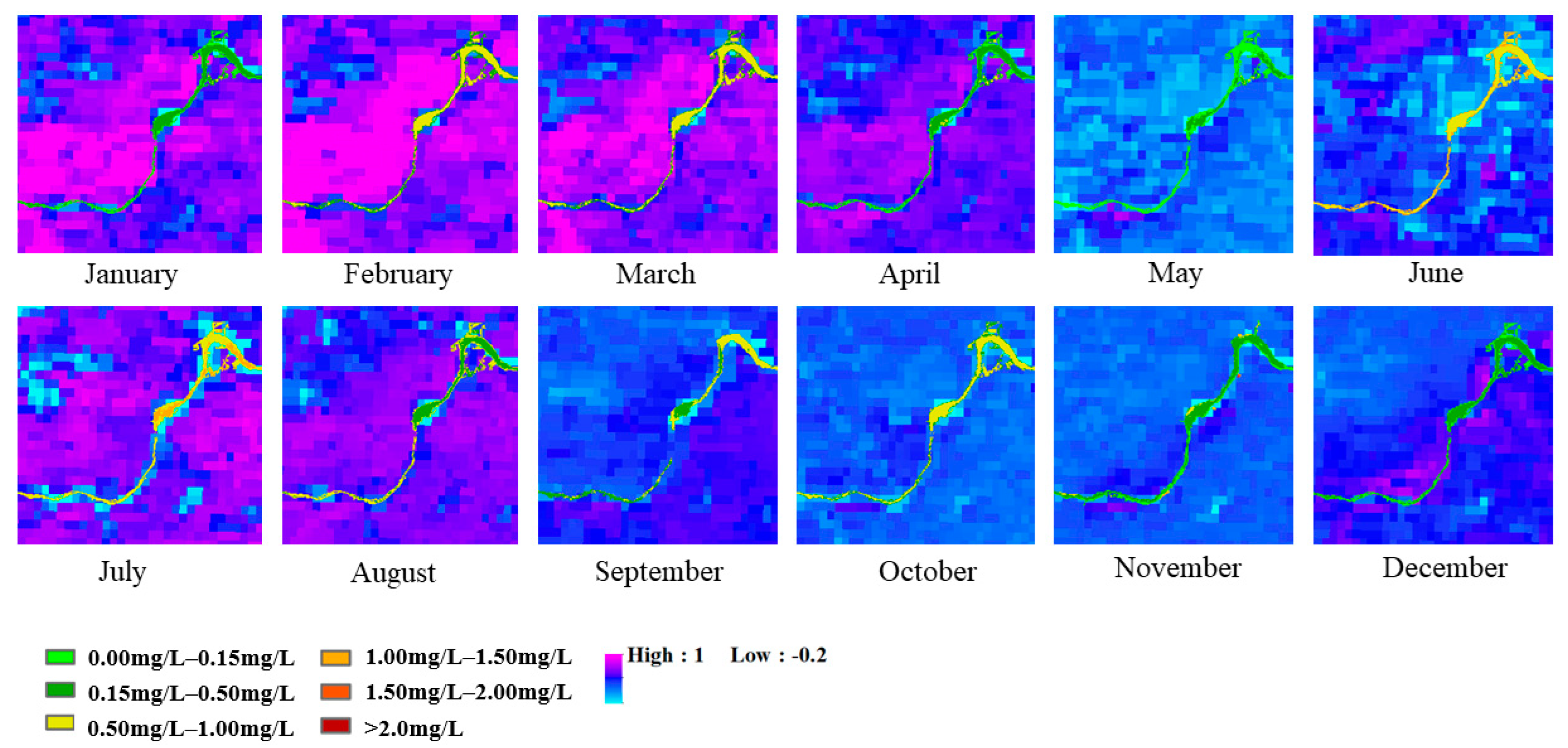

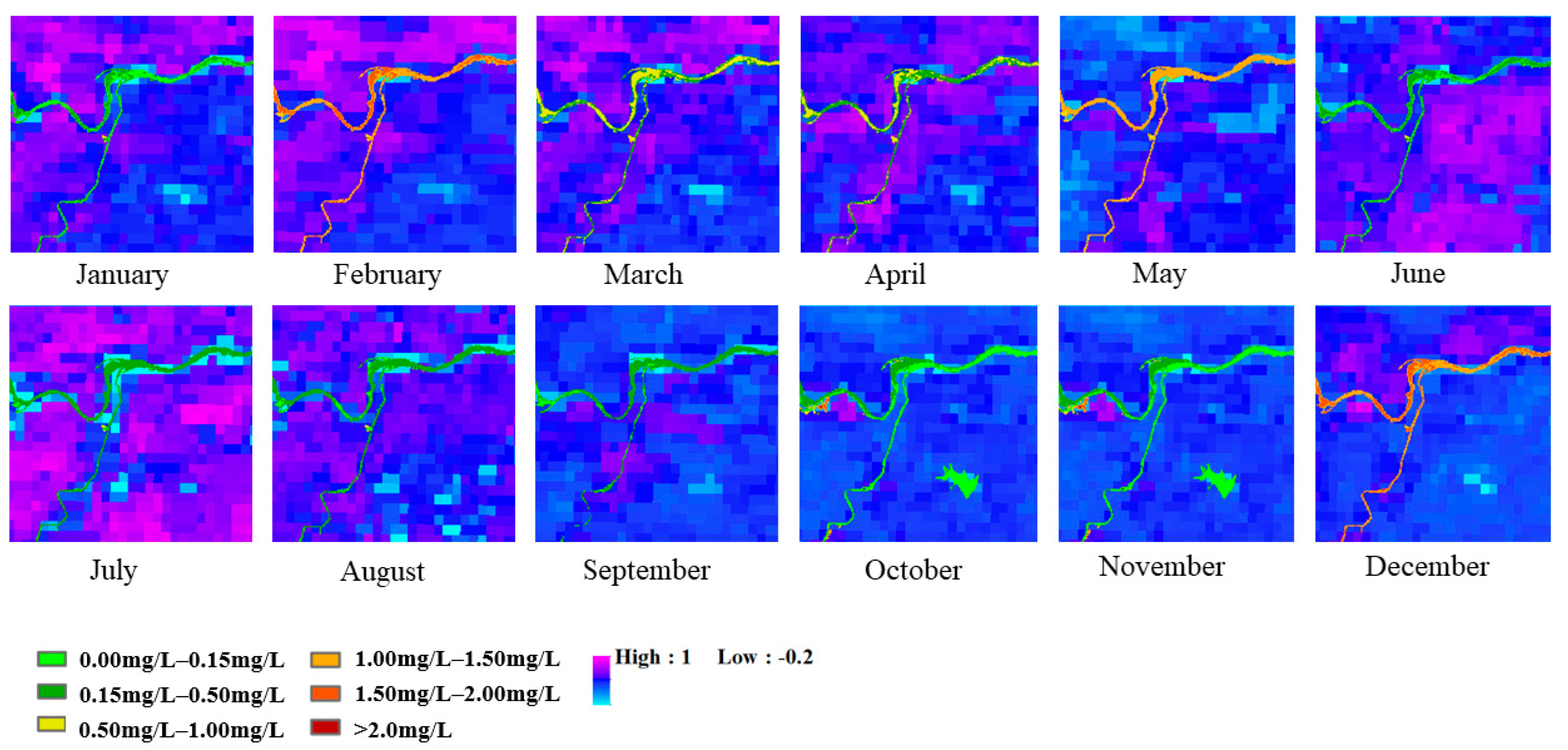

Figure 12,

Figure 13 and

Figure 14 contain more farmland, so we compare the NH

3-N concentration and NDVI results to verify its relationship with agricultural activities. According to the subtropical and temperate monsoon climate in the same period of rain and heat in Xinyang City, if there is no interference from human activities, the change of NH

3-N concentration is mainly affected by the amount of river water, which is higher in December to February and lower in June to August.

In

Figure 11, the river mainly flows through industrial production areas, where NH

3-N concentrations are highest in February, March, September, and October. NH

3-N concentration was low in January, May, August, and November. The NH

3-N concentration in this area did not show obvious seasonal variation and was mainly affected by industrial wastewater discharge.

Figure 12 is mainly farmland area. According to the land use data, the area is mainly dry land, and the main crop is wheat. It can be seen that during the growth period of wheat from January to April and the maturity period of wheat in July, the concentration of NH

3-H is higher due to the use of fertilizer.

The area in

Figure 13 is mainly a paddy field, planting crops for rice. During the rice growing season from February to July, the NH

3-N concentration in the river was higher.

Figure 14 shows the mixed area of residential land and farmland. The annual variation of NH

3-N concentration in this area is relatively gentle, and there is no obvious seasonal variation or agricultural production law, which belongs to the area affected by many factors.

Figure 12 and

Figure 13 show the partial interception of Huaihe River, which is the largest river in the Xinyang area with a large water volume and fast flow velocity. Due to the uneven distribution of water quality and the difference in flow velocity, the difference in NH

3-N concentration at the edge and center of the river is obvious.

Overall, during the rainy season, the NH

3-N concentration was diluted by rainwater, and the overall concentration was lower than in other periods; the concentration of NH

3-N was the highest in the dry season, and the concentration changed gently. In addition to the impact of human activities, during the agricultural production period from January to August, pollution, such as chemical fertilizers in farmland, will lead to the increase in NH

3-N concentration in water, which corresponds to the research results of other scholars [

20]. The irregular high concentration of NH

3-N in cities is mainly caused by industrial wastewater discharge.