1. Introduction

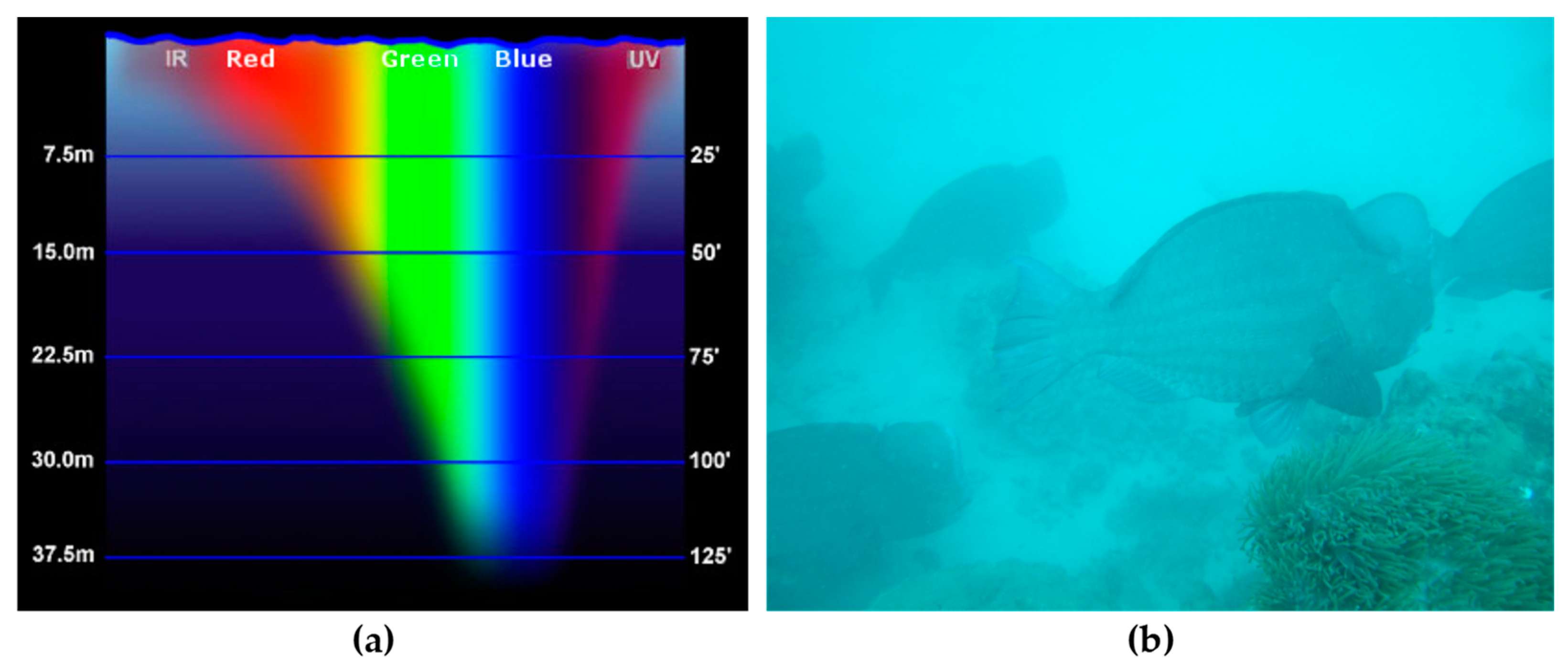

As with light propagating through air, underwater light propagation is affected by scattering and absorption. Underwater, however, both effects are far stronger: light attenuation coefficients in air are measured in inverse kilometers, whereas in an underwater environment they are measured in inverse meters [1]. Such severe light degradation creates significant challenges for imaging sensors attempting to acquire information about an underwater area of interest. Water is relatively transparent to the visible spectrum but strongly absorbs other wavelengths. The constituent wavelengths of the visible spectrum are absorbed at different rates, with longer wavelengths absorbed more rapidly. Light energy therefore decays quickly in water: even in the crystal-clear waters of the open ocean, less than 1% of the light energy remains at a depth of 150 m. Consequently, visibility is limited to about 20 m in the open ocean and to about 5 m in turbid coastal water, and no natural sunlight reaches depths below about 1 km. The amount of light within water is therefore always less than the amount above the water's surface, which leads to the poor visual quality of underwater images [2].

Underwater light is typically scarce for two unavoidable reasons: light loses intensity as it travels through water, and the likelihood of light being scattered within water is high. This shortage of light directly causes colour distortion and poor illumination of the underwater scene. The absorption of light energy and the random change in path of light beams travelling through a medium filled with suspended particles are two of the most detrimental effects on underwater image quality. The light energy that enters the water is rapidly absorbed and converted into other forms of energy, such as heat, which warms the water molecules and can cause them to evaporate. Some of the light energy is also consumed by microscopic plant-like organisms (phytoplankton) that use it for photosynthesis. This absorption reduces the true colour intensity of underwater objects. Light that is not absorbed by water molecules may not travel in a straight line but instead follows a random path resembling Brownian motion, owing to the suspended matter in the water. Water, particularly seawater, contains dissolved salts and organic and inorganic matter that reflect and deflect the light beam in new directions; some of this light may travel back to the surface and escape into the air [3].

The scattering of light in a liquid medium is further classified into two types. Forward scattering refers to the deflection of light that has been reflected by the object of interest on its way to the image sensor; this type of scattering typically makes an image appear blurry. Backscattering occurs when light reaches the image sensor without having been reflected by the object. This light carries no information about the object and merely degrades the contrast of the image.
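The "less than 1% at 150 m" figure can be turned into an effective attenuation coefficient. The sketch below assumes (this is our assumption, not a model stated in the text) that intensity decays exponentially with depth following the Beer–Lambert law, I(z) = I₀·exp(−K·z), with a single depth-averaged coefficient K. Real attenuation is wavelength dependent, so this single K is only a rough summary; the function names are illustrative.

```python
import math

def effective_attenuation(fraction_remaining: float, depth_m: float) -> float:
    """Depth-averaged attenuation coefficient K (in m^-1), assuming I(z) = I0 * exp(-K z)."""
    return -math.log(fraction_remaining) / depth_m

def fraction_at_depth(k_per_m: float, depth_m: float) -> float:
    """Fraction of the surface light energy left at depth_m under the same model."""
    return math.exp(-k_per_m * depth_m)

# 1% of the light energy remaining at 150 m (figure quoted in the text)
k = effective_attenuation(0.01, 150.0)
print(f"K = {k:.4f} m^-1")  # roughly 0.03 m^-1
print(f"fraction left at 20 m = {fraction_at_depth(k, 20.0):.2f}")
```

Under this single-coefficient model, roughly half of the surface light is already gone at the 20 m visibility limit mentioned for the open ocean.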

One way to improve underwater visibility is to introduce an artificial light source, though this solution brings its own complications [4]. Apart from the issues mentioned previously, such as light scattering and attenuation, artificial light tends to illuminate the scene non-uniformly, producing a bright spot at the center of the image surrounded by darker regions. Lighting equipment is also cumbersome and expensive, and it may require a constant supply of electricity, either from batteries or from a surface ship.

Consequently, an underwater imaging system is affected not only by low light, severely reduced visibility, diminished contrast, blurriness, and light artefacts, but also by a limited colour range and by random noise. The standard image processing techniques used for terrestrial image enhancement must therefore be modified or abandoned entirely, and new solutions must be developed. Improving the quality of underwater images can in turn improve image segmentation, feature extraction, and navigation algorithms for autonomous underwater vehicles. Offshore drilling platforms may also benefit from improved imagery when assessing the structural strength of the underwater sections of their rigs.

The absorption of light energy and dispersion in the medium contribute to the scattering effect, which may be forward or backward scattering, as shown in Figure 1. Forward scattering of light (also known as random dispersion) is stronger in water than in air, which further blurs the images. Backward scattering is the fraction of light that is reflected back to the camera by water molecules before it reaches the object of interest [5]. Backscattering gives the captured image a hazy appearance due to the superimposition of these reflections.

This paper analyzes underwater images. Absorption and scattering are further amplified by other elements, such as small floating particles and dissolved organic matter. Floating particles known as “marine snow” (highly variable in both nature and concentration) are one common factor that increases the effects of absorption and scattering. Artificial lighting used in underwater imaging is another source of blurring through scattering and absorption. Artificial lighting from point sources also illuminates the scene non-uniformly, often making the center of the image brighter than its edges.

This section discusses a few challenges in underwater imaging, such as light absorption and the effect of the inherent marine structure. White [6] suggests that marine structure strongly affects light reflection: the water either creates crinkle patterns in the light or disperses it, as shown in Figure 2. Water quality, such as the amount of dust suspended in the water [7], also affects how the water filters light. Moreover, the transmitted light is partly horizontally polarized and partly penetrates the water vertically. Vertical polarization makes objects appear less shiny, which in turn helps retain dark colors that would otherwise be washed out.

The density of the medium also plays a vital role in lowering the resolution of underwater images. Water is about 800 times denser than air, and light is partly reflected when it passes from air into water. As we move deeper into the sea, the amount of light entering the camera decreases, which makes deep-sea images look darker. Moreover, colors drop off systematically with depth as a function of wavelength: since each color corresponds to a band of wavelengths, color degradation increases with imaging depth.

Table 1 shows the wavelengths of the different colors in the spectrum. The loss of color can be explained by considering a beam of light entering the sea [8]. As light enters the denser medium (water), it is scattered. In photography, the part of this scattering in which rays are reflected back toward the light source is referred to as backscattering. Torres-Méndez and Dudek [9] report that at a depth of 3 m, red (the longest wavelength) vanishes entirely. At about 5 m, orange begins to fade; beyond 5 m, orange is completely lost and yellow becomes more vulnerable [10,11]. Yellow in turn fades at a depth of about 10 m, followed by green and purple further down. Thus, the shorter the wavelength of a color, the farther it travels, and the shortest surviving wavelengths come to dominate the image [12]. Blue light, among the shortest visible wavelengths, penetrates farthest, and the resulting dominance of blue gives the image low brightness.

This paper mainly aims to reconstruct underwater images degraded by poor lighting and by dust in deep water bodies. To remove noise, the image is passed through a trigonometric bilateral filter. The filtered image is then enhanced by applying contrast limited adaptive histogram equalization (CLAHE). The experimental analysis and results are discussed in the following sections.

Generally, an image taken underwater is degraded in quality. It loses the true tonal quality and contrast needed to distinguish the subject of the image. The situation becomes more difficult when neighboring objects differ only slightly in pixel intensity. This significantly complicates the extraction of finer details and degrades the performance of algorithms that extract information from images. There is therefore a pressing need to process underwater images so that they accurately represent their tonal details. Underwater imagery is used for a variety of purposes, including the investigation of aquatic life, the assessment of water quality, defense, and security, so images or videos acquired for these purposes must contain precise details.

The purpose of this research is to develop an improved enhancement technique that can operate in a variety of conditions and that uses a standard formulation for evaluating the quality of underwater images. In this work, a hybrid solution is proposed: an image enhancement function is learned by training a deep learning architecture on the outputs of image restoration techniques. A convolutional network is trained on a dataset of pairs of raw and restored images, after which it can produce restored images from degraded inputs. The results are compared with those of other image enhancement methods, with the restored images serving as the system's ground truth.

3. Proposed Method

Our method addresses two main issues in underwater images: noise caused by the scattering of light, and poor contrast caused by low lighting. Noise can be removed from an image in several ways. The most commonly used methods include filtering, anisotropic diffusion, nonlocal averaging of pixels, wavelet transforms, block-matching algorithms, and deep learning [38]. The dataset used for this experiment is taken from the underwater surveillance database of airis4D (airis4d.com, accessed on 22 May 2021). A common and effective way to remove noise is to convolve the image with filters, mainly low-pass filters; a low-pass filter is also called a blurring or smoothing filter. Filters are broadly of two types, linear and non-linear. A linear filter is considered more efficient for noise removal than a non-linear filter. A typical example of a linear filter is the Gaussian filter, whose kernel is the Gaussian function.
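As an illustration of the linear Gaussian filter described above, the following NumPy sketch builds a truncated, normalized Gaussian kernel and applies it to a grayscale image. The truncation radius int(4σ + 0.5) matches the filter-size rule quoted later in this section; the reflect padding and the function names are our assumptions, and this is not the authors' implementation.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def gaussian_kernel_2d(sigma: float) -> np.ndarray:
    """Normalized 2-D Gaussian kernel truncated at radius int(4*sigma + 0.5)."""
    radius = int(4 * sigma + 0.5)
    x = np.arange(-radius, radius + 1)
    g = np.exp(-x**2 / (2 * sigma**2))
    kernel = np.outer(g, g)           # separable: G(x, y) = g(x) * g(y)
    return kernel / kernel.sum()      # weights sum to 1, so flat regions are unchanged

def gaussian_filter(img: np.ndarray, sigma: float) -> np.ndarray:
    """Linear low-pass filter: every output pixel is a fixed weighted sum of inputs."""
    kernel = gaussian_kernel_2d(sigma)
    r = kernel.shape[0] // 2
    padded = np.pad(img.astype(float), r, mode="reflect")
    windows = sliding_window_view(padded, kernel.shape)  # shape (H, W, k, k)
    # The kernel is symmetric, so correlation and convolution coincide.
    return np.einsum("hwij,ij->hw", windows, kernel)
```

Because the weights do not depend on the image content, filtering 2a + 3b gives exactly 2·filter(a) + 3·filter(b); this superposition property is what makes the Gaussian filter linear, in contrast to the median and bilateral filters discussed next.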

The Gaussian filter has the advantage that its Fourier transform is also a Gaussian centered at zero frequency. It therefore acts as a low-pass filter, attenuating high-frequency components. A bilateral filter, on the other hand, is an edge-preserving filter that replaces each pixel value with a weighted average of the intensities of its neighboring pixels. The proposed method uses a bilateral filter with a Gaussian trigonometric (raised cosine) range kernel, which gives a computational complexity of O(N) and makes it more efficient than state-of-the-art bilateral filtering methods. A non-linear low-pass filter, by contrast, is a filter whose output is not a linear function of its input; such filters exist in both the continuous and the discrete domain. Examples of non-linear filters include median filters, rank-order filters, and Kushner–Stratonovich filters. Other low-pass filters include the Butterworth low-pass filter and the ideal low-pass filter. To remove dust from the high-pass filtered image, we introduce a convolutional neural network. To train the network, we create and use a synthetic dataset of 15,000 rainy/clean and dust/clean image pairs. The denoised high-pass filtered image is the resulting dust-free image. Finally, CLAHE is applied to suppress residual noise and improve the contrast of the image.
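The claim that a Gaussian's Fourier transform is again a Gaussian, and hence low-pass, can be checked numerically. This sketch (σ = 1, kernel radius 4 and a 64-point FFT are our choices) compares the magnitude response of a sampled Gaussian kernel with the continuous-domain prediction exp(−2π²σ²f²), where f is in cycles per sample.

```python
import numpy as np

sigma = 1.0
radius = int(4 * sigma + 0.5)
x = np.arange(-radius, radius + 1)
kernel = np.exp(-x**2 / (2 * sigma**2))
kernel /= kernel.sum()                      # unit gain at zero frequency (DC)

n_fft = 64
mag = np.abs(np.fft.fft(kernel, n=n_fft))   # abs() discards the linear phase from the kernel's offset
freqs = np.arange(n_fft) / n_fft            # frequency in cycles per sample

# Continuous-domain prediction: the transform of a Gaussian is a Gaussian.
predicted = np.exp(-2 * np.pi**2 * sigma**2 * freqs**2)

print("gain at DC:     ", round(float(mag[0]), 3))
print("gain at Nyquist:", round(float(mag[n_fft // 2]), 4))
```

At low frequencies the sampled response tracks the Gaussian prediction closely, while near the Nyquist frequency the gain falls to around one percent, which is the attenuation of high-frequency amplitudes referred to above.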

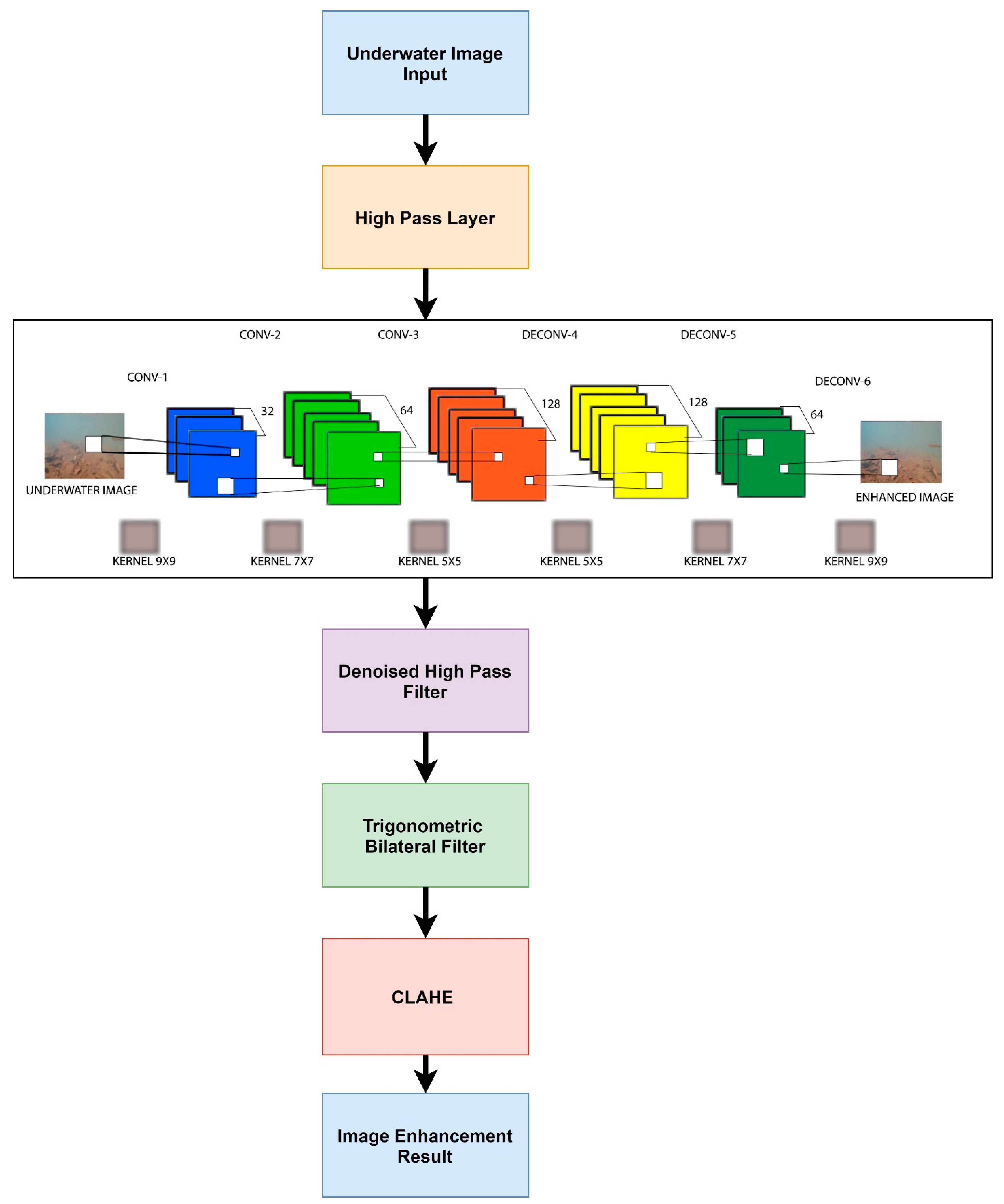

The proposed method uses deep learning to remove noise and haze from underwater images. It outperforms existing methods in terms of run-time complexity and the resolution of the reconstructed image. The computational complexity of the traditional bilateral filter is O(N²), whereas the trigonometric filter applied in the proposed method has a complexity of O(N), which makes it more efficient than state-of-the-art bilateral filtering methods. Noise in underwater images caused by particles such as dust, sediments, and haze is removed by passing the image through a convolutional neural network (CNN).

Figure 3 shows a schematic representation of the proposed framework. The input is first passed to a high-pass filter layer; to accomplish this, the image is first converted to the frequency domain. The high-pass filter sharpens the image, emphasizing its fine details through a different convolutional kernel. A CNN is then used to remove unwanted noise. The images considered for noise removal are mainly three-dimensional (height × width × color channels) and are fed to the CNN, which uses a low-pass filter for convolution. The size of the filter depends on the standard deviation σ (ideally σ = 1) and is calculated as 2 × int(4 × σ + 0.5) + 1, which gives a 9 × 9 filter for σ = 1. The filter is computed using the bilateral trigonometric method. The feature map produced by sliding the filter over the input image consists of the dot products between the filter and the filter-sized patches of the input image. In the proposed method, the network consists of 3 convolutional layers. The input to the first layer is an image of size 176 × 320 × 3. At each layer, the image is convolved with a 9 × 9 filter. The activation function is ReLU, which sets all negative values in the feature map to zero. The images are padded so that they retain their original size. The image is then passed through a bilateral denoising filter, which reduces noise through a blurring effect: it replaces the intensity of each pixel with a weighted average of its neighboring pixels, with weights based on a Gaussian distribution. The last phase of the network applies CLAHE, which restores the contrast lost during noise removal.
The resulting image is enhanced and noise-free. A detailed theory of the proposed method is given below.
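The layer arithmetic described above (filter size 2 × int(4σ + 0.5) + 1, three 9 × 9 convolutions with zero padding and ReLU on a 176 × 320 × 3 input) can be sketched in NumPy. The weights here are random placeholders, not the filters the paper derives with the bilateral trigonometric method, and the high-pass stage and CLAHE are omitted; all function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def filter_size(sigma: float = 1.0) -> int:
    """Filter-size rule from the text: 2 * int(4*sigma + 0.5) + 1 (gives 9 for sigma = 1)."""
    return 2 * int(4 * sigma + 0.5) + 1

def conv2d_same_relu(x: np.ndarray, weights: np.ndarray) -> np.ndarray:
    """One zero-padded ('same') convolution layer followed by ReLU.

    x: (H, W, C_in) feature map; weights: (k, k, C_in, C_out) with odd k.
    Padding by k // 2 on each side keeps the spatial size H x W unchanged.
    """
    k = weights.shape[0]
    r = k // 2
    padded = np.pad(x, ((r, r), (r, r), (0, 0)))                  # zero padding
    windows = sliding_window_view(padded, (k, k), axis=(0, 1))    # (H, W, C_in, k, k)
    out = np.einsum("hwcij,ijco->hwo", windows, weights)          # sliding dot products
    return np.maximum(out, 0.0)                                   # ReLU: negatives -> 0

def three_layer_net(img: np.ndarray, layer_weights: list) -> np.ndarray:
    x = img.astype(float)
    for w in layer_weights:
        x = conv2d_same_relu(x, w)
    return x

rng = np.random.default_rng(0)
k = filter_size(1.0)
weights = [rng.normal(scale=0.05, size=(k, k, 3, 3)) for _ in range(3)]  # placeholder weights
img = rng.random((176, 320, 3))
out = three_layer_net(img, weights)
print(out.shape)
```

The padding choice is what lets every layer preserve the 176 × 320 spatial size, so the output can be compared pixel by pixel with the input frame.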

We have used the method to enhance underwater surveillance videos and obtain clearer images for analysis. For this purpose, the video is first converted into frames, and a trigonometric noise removal function [39] is applied as described in Section 3. The frames are then enhanced using the CLAHE algorithm described in Section 3 and finally reassembled to obtain the output video.

3.1. Noise Removal

The noise present in the images is eliminated using a trigonometric filter, which applies trigonometric range kernels to a Gaussian bilateral filter. In addition to a spatial filter, the edge-preserving bilateral filter includes a range filter. The range filter restricts the averaging to nearby pixels whose intensity is equal or close to that of the pixel of interest, so that pixels on opposite sides of an edge are not averaged together.

Because the range filter operates on pixel intensities, it makes the averaging process non-linear and computationally intensive, especially when the spatial filter is large.
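To make the spatial and range terms concrete, here is a brute-force bilateral filter for a grayscale float image, with Gaussian spatial and range kernels. It is a reference sketch of the standard bilateral filter, not the paper's trigonometric variant; the truncation radius and edge padding are our assumptions. Its cost grows with the window area at every pixel, which is the computational burden mentioned above.

```python
import numpy as np

def bilateral_filter(img: np.ndarray, sigma_s: float, sigma_r: float) -> np.ndarray:
    """Brute-force bilateral filter for a 2-D grayscale float image.

    weight(p, q) = G_sigma_s(|p - q|) * G_sigma_r(I(q) - I(p)); the range term
    suppresses neighbours whose intensity differs strongly from the centre pixel.
    """
    radius = int(4 * sigma_s + 0.5)
    h, w = img.shape
    padded = np.pad(img.astype(float), radius, mode="edge")
    ys, xs = np.mgrid[-radius:radius + 1, -radius:radius + 1]
    spatial = np.exp(-(xs**2 + ys**2) / (2 * sigma_s**2))   # fixed spatial kernel
    out = np.empty((h, w))
    for i in range(h):
        for j in range(w):
            patch = padded[i:i + 2 * radius + 1, j:j + 2 * radius + 1]  # centred on (i, j)
            rng_w = np.exp(-(patch - img[i, j])**2 / (2 * sigma_r**2))  # range kernel
            weights = spatial * rng_w
            out[i, j] = np.sum(weights * patch) / np.sum(weights)       # normalized average
    return out
```

On a noisy step edge, pixels on the other side of the step receive a range weight close to zero, so the noise is averaged away within each side while the step itself is kept sharp.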

In this paper, we apply trigonometric range kernels to the Gaussian bilateral filter. The general trigonometric function has the form

$$f(s) = a_0 + a_1\cos(\gamma s) + \cdots + a_N\cos(N\gamma s).$$

This can be expressed in exponential form as

$$f(s) = \sum_{n=-N}^{N} c_n\, e^{\,j n \gamma s}, \qquad c_0 = a_0, \quad c_n = c_{-n} = \frac{a_n}{2} \;\; (n \ge 1).$$

The coefficients cₙ must be real and symmetric since f(s) is real and symmetric. The trigonometric kernel applied in the bilateral filter is based on the raised cosine kernel, f(s) = [cos(γs)]^N for −T ≤ s ≤ T. Moreover, f(s) must have some additional properties to qualify as a valid range kernel: it should be non-negative and monotonic (non-increasing in |s|), which ensures that it behaves like a spatial filter in a region of uniform intensity. The raised cosine preserves the properties of symmetry, non-negativity, and monotonicity. This is explained by Equation (2).
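The link between the raised cosine and the general series f(s) = a₀ + a₁cos(γs) + … + a_N cos(Nγs) can be made explicit with the binomial identity cos^N(t) = 2^(−N) Σₙ C(N, n) cos((2n − N)t). The sketch below computes the coefficients aₖ for a given N and checks symmetry, non-negativity, and monotonicity on [−T, T] with γ = π/(2T). The helper names and the choice N = 6, T = 1 are illustrative.

```python
import numpy as np
from math import comb

def raised_cosine_coeffs(N: int) -> np.ndarray:
    """Coefficients a_0..a_N such that cos(t)**N = sum_k a_k * cos(k t).

    Uses cos^N t = 2^-N * sum_n C(N, n) cos((2n - N) t); terms sharing the same
    |2n - N| are merged because cosine is even.
    """
    a = np.zeros(N + 1)
    for n in range(N + 1):
        a[abs(2 * n - N)] += comb(N, n) / 2.0**N
    return a

def trig_series(a: np.ndarray, gamma: float, s: np.ndarray) -> np.ndarray:
    """Evaluate f(s) = a_0 + a_1 cos(gamma s) + ... + a_N cos(N gamma s)."""
    k = np.arange(len(a))
    return np.cos(np.outer(s, k) * gamma) @ a

T = 1.0
gamma = np.pi / (2 * T)
N = 6
a = raised_cosine_coeffs(N)
s = np.linspace(-T, T, 401)
f = trig_series(a, gamma, s)
```

All coefficients are real and the series reproduces [cos(γs)]^N exactly, so the exponential-form coefficients cₙ = c₋ₙ = aₙ/2 are real and symmetric as required.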

A bilateral filter is a non-linear filter that smooths the image by reducing noise while preserving its sharp edges. The intensity of each pixel is replaced by a weighted average of the intensities of its neighboring pixels, with weights based on a Gaussian distribution.

A bilateral filter depends on two parameters, σs and σr: σs is the spatial parameter and σr is the range parameter. Gσr is the range Gaussian kernel, which smooths differences in intensity between neighboring pixels, and Gσs is the spatial Gaussian kernel, which smooths differences in pixel coordinates. As the range parameter σr increases, the range Gaussian Gσr widens and flattens, and the bilateral filter increasingly approximates a plain Gaussian convolution. Increasing the spatial parameter σs smooths larger features. In Equation (1), the intensity of pixels is represented by a function of a and y.

A bilateral filter has a major disadvantage: if the product of the weights computes to zero or close to zero, no smoothing or noise removal occurs. This is overcome by a Gaussian-based trigonometric filter that uses a raised cosine function with the properties of symmetry, non-negativity, and monotonicity. The bilateral trigonometric filter is defined as (3).

where

and ω is the normalization coefficient.

In our experiment, we assume that the intensity differences s between neighboring pixels lie in the interval [−T, T]. The range Gaussian Gσr can then be approximated by raised cosine kernels as (5):

$$G_{\sigma_r}(s) = \exp\!\left(-\frac{s^2}{2\sigma_r^2}\right) \approx \left[\cos\!\left(\frac{\gamma s}{\rho\sqrt{N}}\right)\right]^N,$$

for all −T ≤ s ≤ T, where γ = π/(2T) and ρ = γσr, with equality in the limit N → ∞.

Equation (3) leads to the important conclusion that the raised cosine kernel is positive and unimodal on [−T, T] (provided ρ√N ≥ 1) and that, as N increases, it approaches a Gaussian with variance σr². Here, T is the largest difference in intensity between a source pixel and its neighboring pixels, s ranges over these differences, γ = π/(2T), ρ = γσr is the normalized range parameter, and N is the order of the raised cosine kernel, that is, the number of cosine terms in f(s).
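The approximation above is what makes the filter fast. Below is a 1-D NumPy sketch in the spirit of the shiftable bilateral filter of Chaudhury, Sage, and Unser (our assumption about the formulation intended). Writing ω = γ/(ρ√N) = 1/(σr√N), the range kernel [cos(ωs)]^N expands binomially into N + 1 complex exponentials, so the bilateral filter becomes N + 1 ordinary spatial convolutions whose number does not depend on the window size. The sketch checks the result against a brute-force filter using the same raised cosine range kernel. Here `np.convolve` still costs O(window) per pixel; the constant-time-per-pixel behaviour requires a constant-time Gaussian (for example a recursive one), which is not implemented. All names are hypothetical.

```python
import numpy as np
from math import ceil, comb, pi

def spatial_gaussian(sigma_s: float) -> np.ndarray:
    r = int(4 * sigma_s + 0.5)
    x = np.arange(-r, r + 1)
    return np.exp(-x**2 / (2 * sigma_s**2))  # normalization cancels in num / den

def raised_cosine_params(sigma_r: float, T: float):
    """Smallest order N with rho*sqrt(N) >= 1 (kernel >= 0 on [-T, T]), and omega."""
    gamma = pi / (2 * T)
    rho = gamma * sigma_r
    N = max(1, ceil(1.0 / rho**2))
    return N, gamma / (rho * np.sqrt(N))

def bilateral_direct(f, sigma_s, sigma_r, T):
    """Reference filter: explicit weights k(x - y) * cos(omega * (f[y] - f[x]))**N."""
    N, omega = raised_cosine_params(sigma_r, T)
    k = spatial_gaussian(sigma_s)
    r = len(k) // 2
    out = np.empty(len(f))
    for x in range(len(f)):
        y = np.arange(max(0, x - r), min(len(f), x + r + 1))
        w = k[y - x + r] * np.cos(omega * (f[y] - f[x])) ** N
        out[x] = np.sum(w * f[y]) / np.sum(w)
    return out

def bilateral_shiftable(f, sigma_s, sigma_r, T):
    """Same filter via cos^N(t) = 2^-N sum_n C(N, n) exp(j (2n - N) t)."""
    N, omega = raised_cosine_params(sigma_r, T)
    k = spatial_gaussian(sigma_s)
    num = np.zeros(len(f), dtype=complex)
    den = np.zeros(len(f), dtype=complex)
    for n in range(N + 1):
        nu = (2 * n - N) * omega
        d = comb(N, n) / 2.0**N                  # binomial weight of this term
        h = np.exp(1j * nu * f)                  # exp(j nu f(y))
        num += d * np.conj(h) * np.convolve(h * f, k, mode="same")
        den += d * np.conj(h) * np.convolve(h, k, mode="same")
    return (num / den).real

rng = np.random.default_rng(0)
signal = np.r_[np.full(100, 0.2), np.full(100, 0.8)] + rng.normal(0, 0.03, 200)
signal = np.clip(signal, 0.0, 1.0)               # intensity differences stay in [-T, T], T = 1
fast = bilateral_shiftable(signal, 3.0, 0.2, 1.0)
slow = bilateral_direct(signal, 3.0, 0.2, 1.0)
```

Both functions implement the same filter, so they agree to floating-point precision, and the step between the two plateaus survives the smoothing.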

3.2. Contrast Limited Adaptive Histogram Equalization (CLAHE)

CLAHE is applied to tiles (small regions of the image) rather than to the whole image. Contrast is defined as the difference in luminance or color that makes an object distinguishable from other objects within the same field of view. Histogram equalization adjusts the intensities of an image to enhance its contrast. In adaptive histogram equalization (AHE), each region of the image has its own histogram, so each region is enhanced separately. This also redistributes the lightness values in the image. The major advantage of AHE is that it improves local contrast and edge sharpness in every region of the image.

AHE depends mainly on three properties. First, the size of the region: the larger the region, the lower the contrast, and vice versa. Second, the nature of histogram equalization: the new value of a pixel depends on its rank within its neighborhood. Third, AHE over-amplifies noise in large homogeneous regions of the image, because the histograms of these regions are highly peaked and the resulting transformation function is steep. A major disadvantage of AHE compared with CLAHE is that AHE works only on images with uniform fog, whereas CLAHE can be applied to images with both uniform and non-uniform fog. A second drawback of AHE is that it uses a cumulative function that works only on grayscale images, whereas CLAHE works on both color and grayscale images.

CLAHE depends mainly on three parameters: block size, number of histogram bins, and clip limit. The block size is the number of pixels considered in each block; for example, a block size of 8 × 8 covers 64 pixels. The block size significantly affects image contrast: smaller blocks enhance local contrast, whereas larger blocks enhance global contrast.

For a low-intensity image, the larger the clip limit, the brighter the image. This is because the clip limit makes the histogram of the image flatter. Similarly, the contrast of the image increases when the block size is large, as the dynamic range is higher.
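The role of the clip limit can be seen on a single tile. This NumPy sketch performs contrast-limited histogram equalization of one 8-bit tile: the histogram is clipped at a limit, the excess is spread uniformly over all bins, and the cumulative histogram becomes the intensity mapping. It omits the bilinear interpolation between neighbouring tile mappings that a full CLAHE implementation (for example OpenCV's `cv2.createCLAHE`) performs. Expressing the clip limit as a multiple of the average bin count is an assumed convention, and the function name is hypothetical.

```python
import numpy as np

def clip_limited_equalize_tile(tile: np.ndarray, clip_limit: float, n_bins: int = 256) -> np.ndarray:
    """Contrast-limited histogram equalization of ONE uint8 tile (no inter-tile interpolation).

    clip_limit is a multiple of the average bin count, tile.size / n_bins (assumed convention).
    """
    hist = np.bincount(tile.ravel(), minlength=n_bins).astype(float)
    limit = clip_limit * tile.size / n_bins
    excess = np.sum(np.maximum(hist - limit, 0.0))
    hist = np.minimum(hist, limit) + excess / n_bins     # clip, then redistribute the excess
    cdf = np.cumsum(hist)
    lut = np.round(cdf / cdf[-1] * (n_bins - 1))         # cumulative histogram -> mapping
    return lut.astype(np.uint8)[tile]

rng = np.random.default_rng(0)
tile = rng.integers(100, 121, size=(8, 8)).astype(np.uint8)   # low-contrast 8 x 8 tile
strong = clip_limited_equalize_tile(tile, clip_limit=1000.0)  # effectively no clipping (plain HE)
mild = clip_limited_equalize_tile(tile, clip_limit=2.0)       # strongly limited stretch
```

With a very large clip limit the tile's narrow intensity range is stretched almost to the full 0 to 255 scale; a small clip limit caps the slope of the mapping, so contrast, and with it noise, is amplified far less.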

Figure 4 shows the reconstruction of an image using the trigonometric filter. The first image (a) is the ground-truth image, taken from an underwater video clip. The SNR of Figure 4a is 12.92. The second image (b) is the image reconstructed by passing the noisy image through the bilateral trigonometric filter. The final image (c) is the contrast-enhanced image after applying CLAHE. The SNR of the reconstructed image is 20.38, which shows that the signal-to-noise ratio of the reconstructed image is higher than that of the image with salt-and-pepper (S&P) noise.

A similar example is shown in Figure 5, another frame from the same underwater clip. Here, the ground-truth image has an SNR of 47.85. Adding salt-and-pepper noise to the image increases the noise level and decreases the SNR to 15.23. Applying the proposed method to Figure 5b improves the signal strength, raising the SNR to 17.8.

3.3. Metrics Used

The quality of an image is measured using the following metrics: signal-to-noise ratio (SNR), mean squared error (MSE), underwater image quality measure (UIQM), and structural similarity index (SSIM).

The SNR and MSE of the reconstructed image and the ground-truth image were compared. The MSE of each target image (the noisy image and the reconstructed image) is calculated with respect to the reference (ground-truth) image. The SNR of the target image with respect to the reference image is calculated as shown in (6)

where

Here, vref refers to the reference (ground-truth) image and vcmp refers to the reconstructed or noisy image.
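Equation (6) itself is not reproduced in this text, so the sketch below assumes the common definition SNR = 10·log₁₀(Σ vref² / Σ (vref − vcmp)²) in decibels, together with the usual mean squared error. The argument names follow vref and vcmp; everything else is illustrative.

```python
import numpy as np

def mse(v_ref: np.ndarray, v_cmp: np.ndarray) -> float:
    """Mean squared error between the reference image and the compared image."""
    diff = v_ref.astype(float) - v_cmp.astype(float)
    return float(np.mean(diff ** 2))

def snr_db(v_ref: np.ndarray, v_cmp: np.ndarray) -> float:
    """SNR in dB, ASSUMING SNR = 10*log10(sum(v_ref^2) / sum((v_ref - v_cmp)^2))."""
    ref = v_ref.astype(float)
    noise = ref - v_cmp.astype(float)
    return float(10 * np.log10(np.sum(ref ** 2) / np.sum(noise ** 2)))

ref = np.full((4, 4), 100.0)
cmp_img = ref + 10.0
print(mse(ref, cmp_img), snr_db(ref, cmp_img))
```

A perfect reconstruction (vcmp identical to vref) makes the denominator zero, so the SNR is unbounded; in practice the comparison is only meaningful for imperfect reconstructions such as those in Table 2.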

The MSE values of the images are tabulated in Table 2. These images are different frames of underwater video clips. The table shows the error of the noisy image and of the reconstructed image with respect to the ground-truth image. The noisy image is generated by applying salt-and-pepper noise with a density of 0.1.
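The noisy inputs can be approximated with a short sketch. It assumes the common convention (used, for example, by MATLAB's `imnoise`) that a salt-and-pepper density d corrupts roughly d × (number of pixels) pixels, half set to black and half to white. The 8-bit grayscale input, the random seed, and the function name are our assumptions.

```python
import numpy as np

def add_salt_and_pepper(img: np.ndarray, density: float = 0.1, seed: int = 0) -> np.ndarray:
    """Corrupt about density * img.size pixels: half set to 0 (pepper), half to 255 (salt).

    Assumes an 8-bit grayscale image.
    """
    rng = np.random.default_rng(seed)
    noisy = img.copy()
    u = rng.random(img.shape)
    noisy[u < density / 2] = 0                          # pepper
    noisy[(u >= density / 2) & (u < density)] = 255     # salt
    return noisy

img = np.full((200, 200), 128, dtype=np.uint8)
noisy = add_salt_and_pepper(img, density=0.1)
print(np.mean(noisy != 128))   # close to 0.1
```

Raising `density` toward 1 reproduces the increasing noise levels used in Table 5; at density 1 every pixel is either black or white.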

Figure 6 plots the MSE of each noisy image alongside that of its corresponding reconstructed image.

The Structural Similarity Index (SSIM) measures the similarity between two images. It was introduced by the Laboratory for Image and Video Engineering at the University of Texas at Austin. Two key terms here are structural information and distortion, which are usually defined with respect to image composition. Structural information is the property of the object structure that is independent of contrast and brightness. Distortion arises from a combination of changes in structure, contrast, and brightness. Brightness is estimated using mean values, contrast using standard deviations, and structural similarity using covariance.

The SSIM of two images x and y can be calculated by:

$$\mathrm{SSIM}(x, y) = \frac{(2\mu_x\mu_y + c_1)(2\sigma_{xy} + c_2)}{(\mu_x^2 + \mu_y^2 + c_1)(\sigma_x^2 + \sigma_y^2 + c_2)}$$

In Equation (8), μx and μy are the averages of x and y, σx² and σy² are their variances, and σxy is the covariance of x and y. The constants c1 = (k1L)² and c2 = (k2L)² stabilize the division when the denominator is small, where L is the dynamic range of the pixel values. Generally, k1 = 0.01 and k2 = 0.03.
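Equation (8) translates directly into code. The sketch below evaluates it once over the whole image using global means, variances, and covariance. Note that the standard SSIM implementation (for example scikit-image's `skimage.metrics.structural_similarity`) instead computes these statistics in small local windows and averages the resulting map, so its values will differ; the function name here is hypothetical.

```python
import numpy as np

def ssim_global(x: np.ndarray, y: np.ndarray, L: float = 255.0,
                k1: float = 0.01, k2: float = 0.03) -> float:
    """Equation (8) evaluated once with whole-image statistics (single window)."""
    x = x.astype(float)
    y = y.astype(float)
    c1, c2 = (k1 * L) ** 2, (k2 * L) ** 2
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = np.mean((x - mu_x) * (y - mu_y))
    num = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    den = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(num / den)

rng = np.random.default_rng(0)
img = rng.integers(0, 256, size=(64, 64)).astype(np.uint8)
noisy = np.clip(img + rng.normal(0, 40, img.shape), 0, 255)
print(ssim_global(img, img), ssim_global(img, noisy))
```

Identical images score exactly 1, and any distortion of brightness, contrast, or structure lowers the score.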

The underwater image quality measure (UIQM) has also been used to quantify underwater image contrast, color quality, and sharpness. This umbrella measure consists of three parameters that assess attributes of underwater images: the underwater image sharpness measure (UISM), the underwater image colorfulness measure (UICM), and the underwater image contrast measure (UIConM). Each parameter appraises one aspect of the degraded underwater image. The overall quality measure for underwater images is then given by

$$\mathrm{UIQM} = c_1 \times \mathrm{UICM} + c_2 \times \mathrm{UISM} + c_3 \times \mathrm{UIConM}$$

Equation (9) combines all three attributes. The choice of the weights c1, c2, and c3 depends on the application. For example, UICM is given more weight in applications involving image color correction, whereas sharpness (UISM) and contrast (UIConM) matter more when enhancing image visibility. When two of the weights are set to zero, UIQM reduces to the single remaining attribute measure.
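As a sketch of Equation (9), the code below combines the three attributes with the weights c1 = 0.0282, c2 = 0.2953, c3 = 3.5753 that are commonly used in UIQM implementations following Panetta et al.; treat these as an assumption, since the text leaves the weights application dependent. It also sketches UICM on the opponent channels RG = R − G and YB = (R + G)/2 − B using α-trimmed means (α = 0.1) and the constants −0.0268 and 0.1586 from the same commonly used formulation, again an assumption to verify. UISM and UIConM require edge maps and block-wise contrast measures and are passed in as numbers rather than implemented.

```python
import numpy as np

# Weights commonly used in UIQM implementations (assumed; the text leaves them open).
C1, C2, C3 = 0.0282, 0.2953, 3.5753

def alpha_trimmed_mean(v: np.ndarray, alpha: float = 0.1) -> float:
    """Mean after discarding the lowest and highest alpha fraction of the sorted values."""
    v = np.sort(v.ravel())
    k = int(alpha * v.size)
    return float(v[k:v.size - k].mean())

def uicm(img_rgb: np.ndarray) -> float:
    """Colorfulness term on the opponent channels RG and YB (assumed constants)."""
    r, g, b = (img_rgb[..., i].astype(float) for i in range(3))
    rg, yb = r - g, (r + g) / 2 - b
    mu_rg, mu_yb = alpha_trimmed_mean(rg), alpha_trimmed_mean(yb)
    var_rg = np.mean((rg - mu_rg) ** 2)   # spread around the trimmed mean
    var_yb = np.mean((yb - mu_yb) ** 2)
    return float(-0.0268 * np.hypot(mu_rg, mu_yb) + 0.1586 * np.sqrt(var_rg + var_yb))

def uiqm(uicm_val: float, uism_val: float, uiconm_val: float,
         c1: float = C1, c2: float = C2, c3: float = C3) -> float:
    """Equation (9): weighted sum of the colorfulness, sharpness, and contrast terms."""
    return c1 * uicm_val + c2 * uism_val + c3 * uiconm_val
```

A perfectly gray image has zero opponent-channel response and therefore a UICM of zero; setting c2 = c3 = 0 reduces UIQM to a scaled colorfulness score, as described above.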

4. Discussions and Result Analysis

Underwater images contain disturbances caused mainly by noise due to the scattering of light and by poor contrast due to low lighting when the image is captured. The proposed method reduces these disturbances to a great extent by applying a trigonometric filter and CLAHE, yielding an enhanced, noise-free image. The results of the proposed method are compared using the SNR, SSIM, MSE, and UIQM of the images. The experimental results show that the proposed method performs better at removing haze-like and dust-like scatter.

In the experiments, the proposed method produces images with more contrast and clarity than the ground images. SNR, which characterizes image quality, is used as a metric to validate the images: the greater the SNR, the better the image quality.

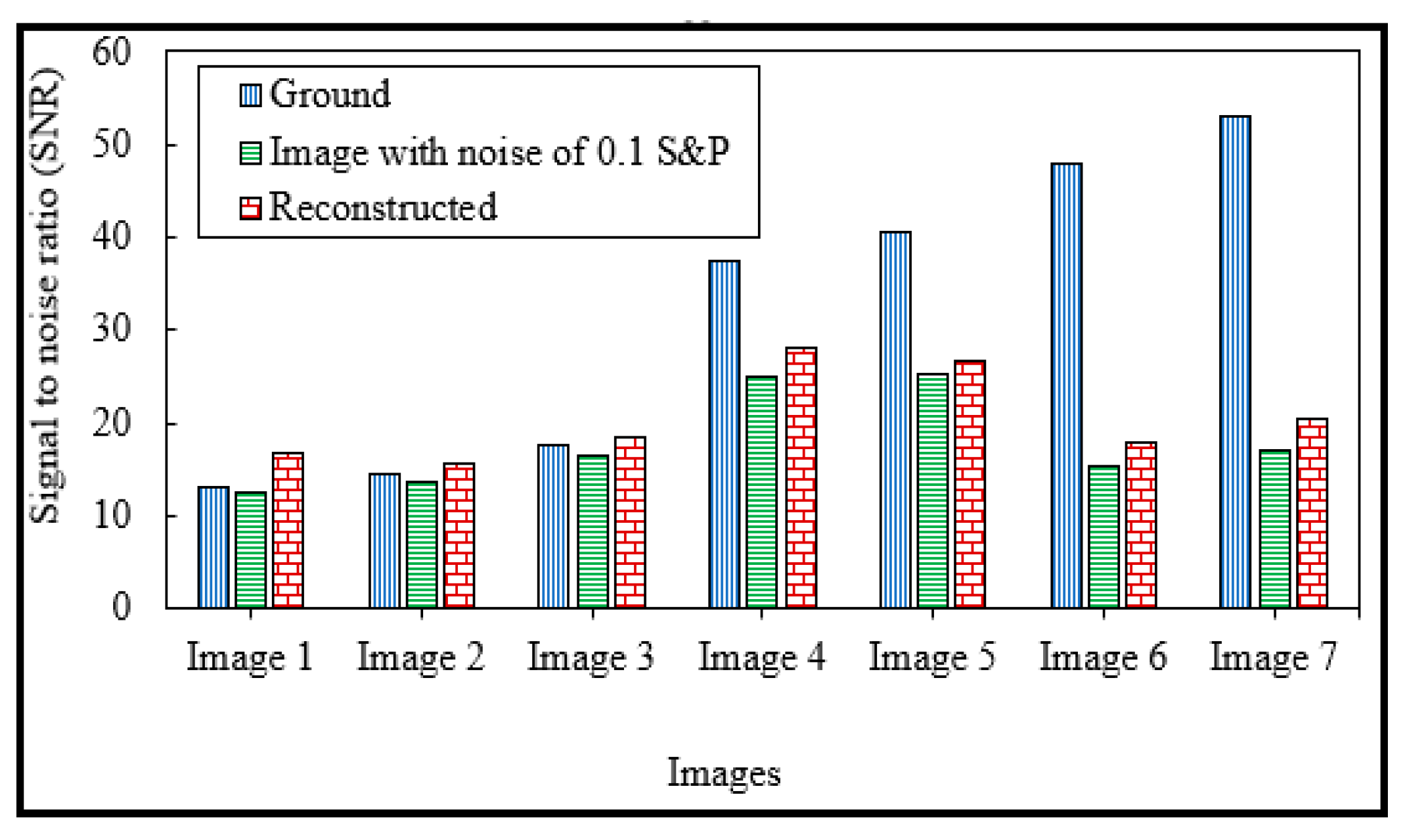

Table 3 provides the complete analysis of the SNR values of the ground image and of the image reconstructed by the proposed method using the trigonometric bilateral filter. The table shows that each test image has a stronger signal after reconstruction than in its noisy version: the SNR of every noisy image is lower than that of the corresponding reconstructed image, and the lower SNR reflects the added noise. The data in Table 3 also show that for images 1, 2, and 3, the SNR of the reconstructed image is greater than that of the ground image. For raw images with an SNR greater than 20, however, the SNR of the reconstructed image is substantially lower than that of the ground image, so the reconstruction does not reach the quality of the ground image.

Figure 7 plots the SNR comparison of the images: the blue line is the SNR of the ground image, the orange line is the SNR of the noisy image (salt-and-pepper noise with density 0.1), and the grey line is the SNR of each reconstructed image. The graph shows that the SNR of each noisy image is lower than that of the reconstructed image, indicating a higher noise content. For image 1, for example, the noisy image has an SNR of 12.4, whereas the image reconstructed by the proposed method has an SNR of 16.69. This increase reflects the effectiveness of the noise removal. Thus, the proposed method of noise removal using the trigonometric bilateral filter and contrast enhancement using CLAHE is effective.

The proposed method was compared with the existing algorithm of Li [37]. A comparison of MSE, SNR, and SSIM is shown in Table 4. A reconstructed image is closer to its ground image if it has a lower MSE and a higher PSNR, while a higher SSIM score means that the result is more similar to its reference image in structure and texture, since SSIM compares the perceived quality of two images.

Table 4 shows that the proposed method has a lower MSE and a higher PSNR than the state-of-the-art methods.

Table 5 shows image reconstruction under different levels of noise. The image is corrupted with salt-and-pepper noise, and the third column shows the corresponding image reconstructed by the proposed method. As the noise density increases from 0.1 to 1, the SNR of the noisy image decreases, indicating more noise than signal. The SNR of each reconstructed image is higher than that of its noisy input, which shows that the signal is recovered from the noisy images and demonstrates the effectiveness of our method.

Another inference from this experiment is that even as the noise level increases, the reconstructed image remains perceptually acceptable up to a point. Perception here refers to how an individual sees, interprets, and understands visual data: the pattern formed by the visual centers of the brain after an image reaches the eye, which may differ from person to person.

In our experiment, the perceived quality of the reconstructed image starts to decrease once the noise density exceeds 0.5 (S&P = 0.5). From Table 5, the perceived quality of the image reconstructed from 0.1 noise is higher than that of the images reconstructed from 0.2, 0.3, and so on. The proposed method can reconstruct images up to a noise density of 0.5; reconstructed images with noise densities above 0.6 have very low perceptual quality even though their signal strength is higher.

Table 6 shows the average run time required to remove noise from images using the proposed method and the existing algorithms of Li [37]. The statistics show that the proposed algorithm runs faster than the existing methods. The experiment was performed on images of different sizes, namely 1280 × 720, 640 × 480, and 500 × 500, and the proposed algorithm performed well at every size.

Table 7 shows the UIQM scores of images using the proposed method and the existing algorithms of Li [37]. The proposed method has a higher UIQM value than the existing methods for all image sizes tested.