Comparing Trends in Modeled and Observed Streamflows at Minimally Altered Basins in the United States

Abstract

1. Introduction

2. Data and Methods

2.1. Observed Data

2.2. Data from a Process-Based Model

2.3. Data from Statistical Transfer Models

2.4. Methods

3. Results

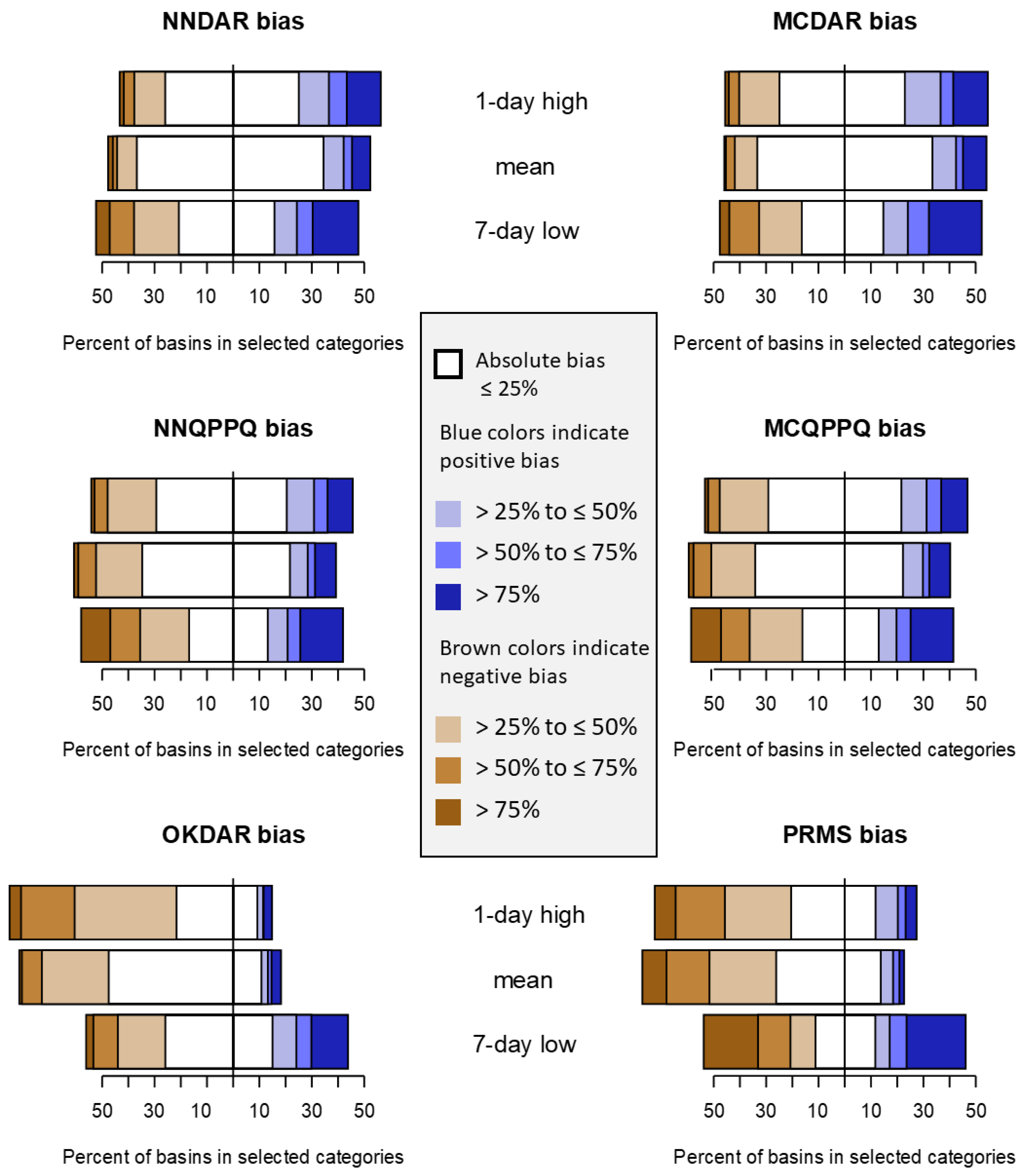

3.1. Overall Streamflow Metric Comparisons

3.2. Comparisons between Models

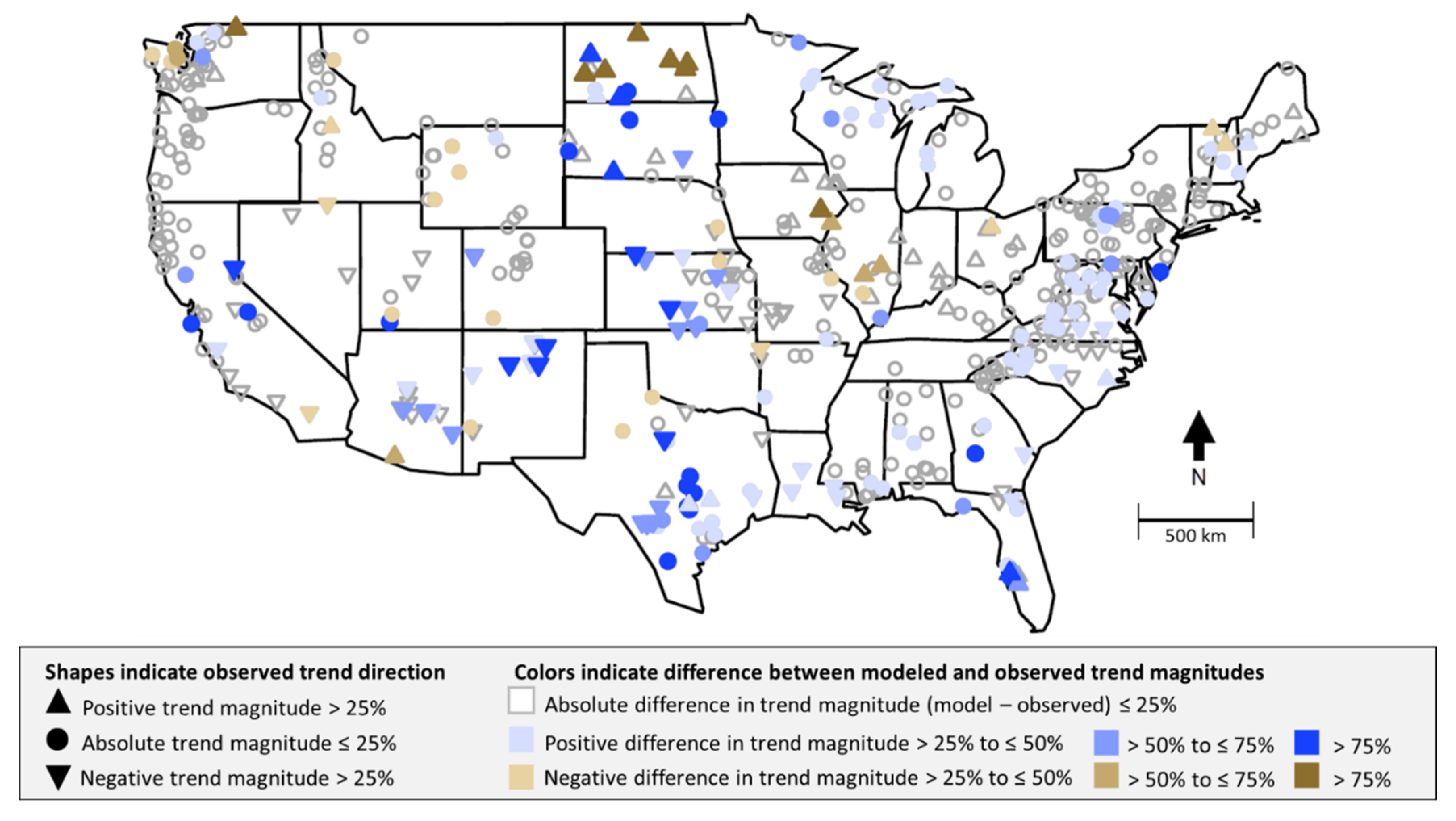

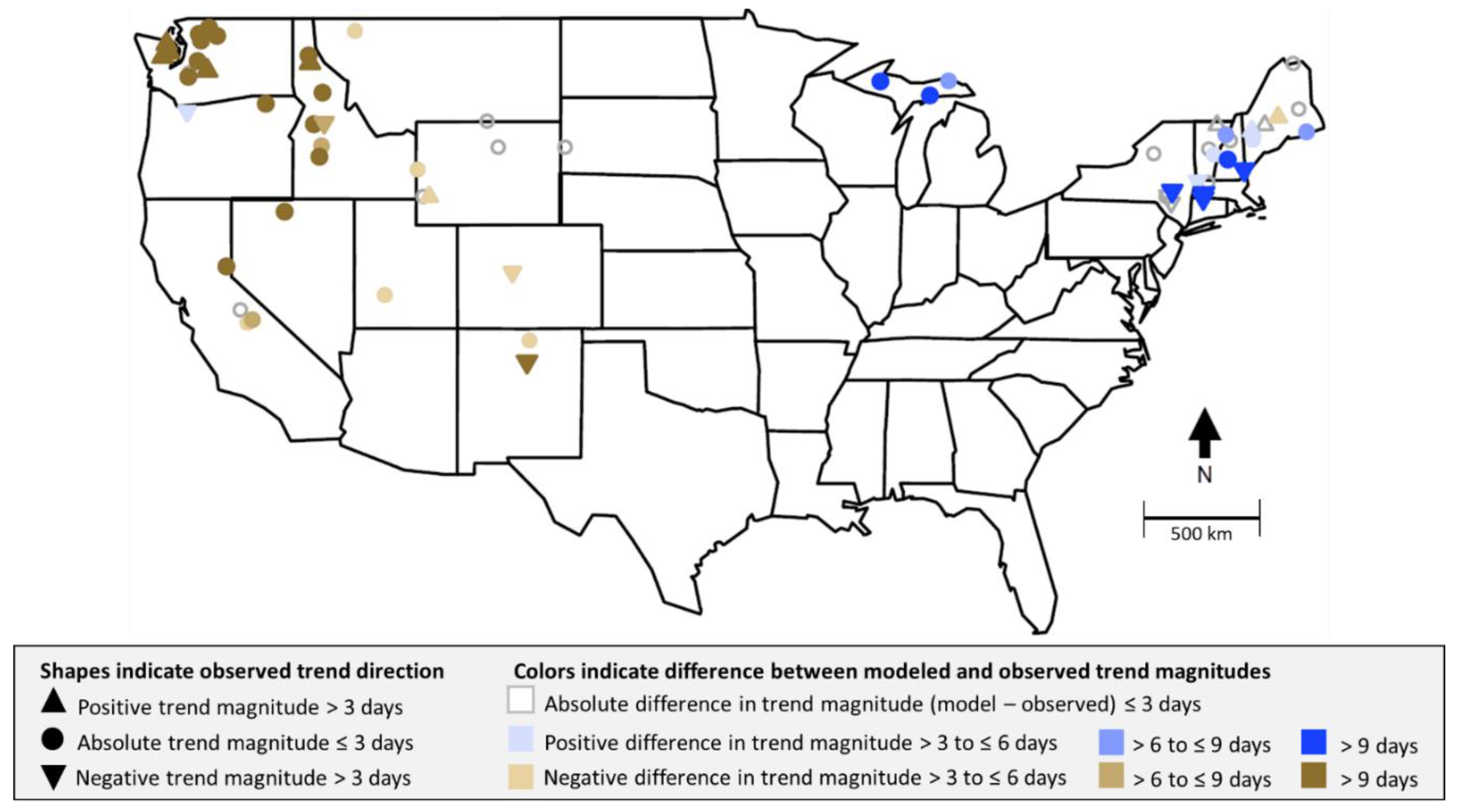

3.3. Geographic Distribution of Results

3.4. Correlations with Basin Characteristics

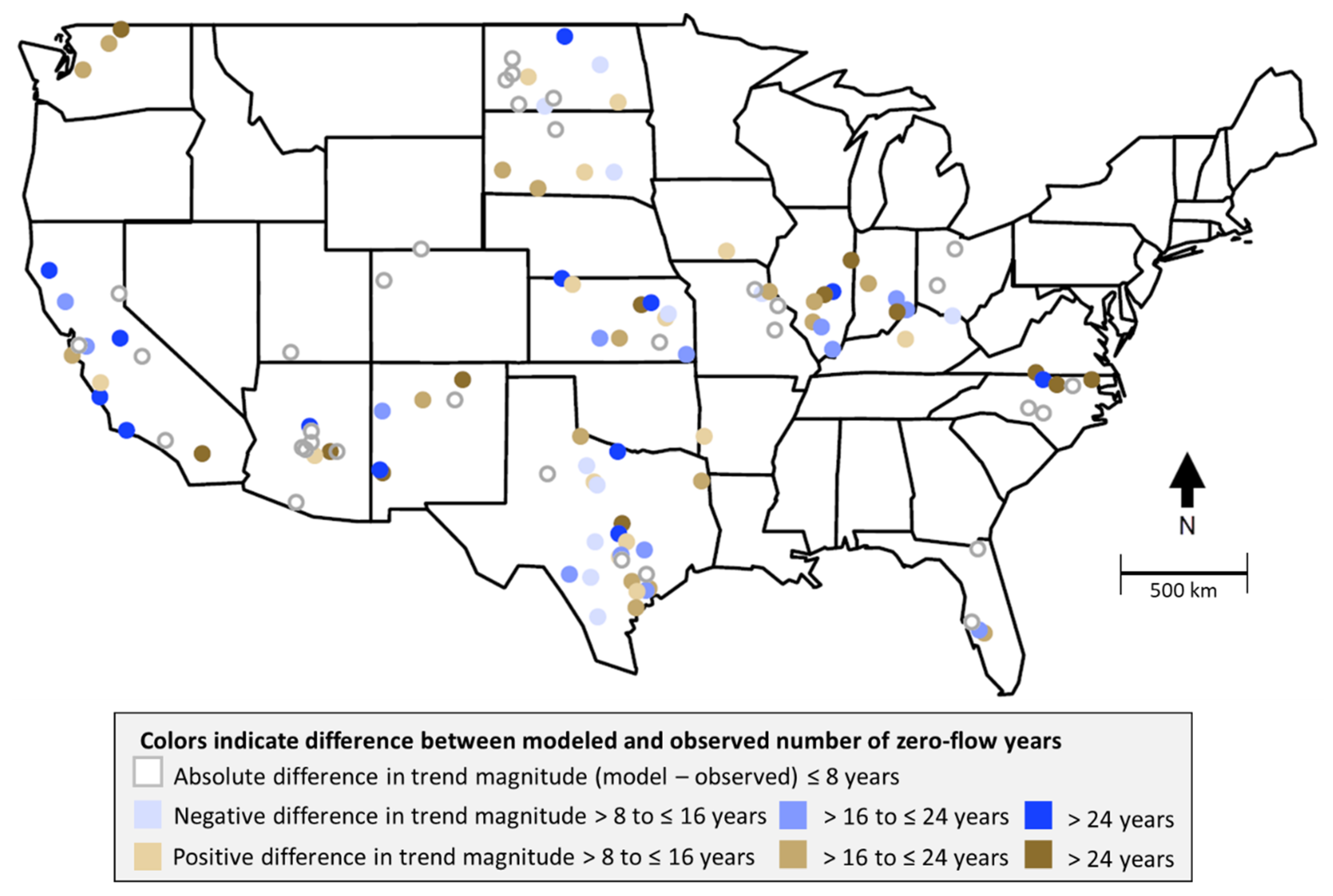

3.5. Streamflow Metric and Model Comparisons for Variables at Basins with a Substantial Number of Years with Zero Flows

4. Discussion

5. Summary

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Model | Kendall’s Tau (7-Day Low) | Kendall’s Tau (Mean) | Kendall’s Tau (1-Day High) | p-Value (7-Day Low) | p-Value (Mean) | p-Value (1-Day High) |
|---|---|---|---|---|---|---|
| NNDAR | −0.17 | −0.20 | −0.22 | 1.25 × 10⁻⁶ | 4.65 × 10⁻¹¹ | 1.23 × 10⁻¹³ |
| MCDAR | −0.28 | −0.21 | −0.27 | 3.24 × 10⁻¹⁶ | 1.09 × 10⁻¹² | 3.22 × 10⁻¹⁹ |
| NNQPPQ | −0.26 | −0.30 | −0.31 | 3.65 × 10⁻¹⁴ | 2.32 × 10⁻²³ | 1.33 × 10⁻²⁴ |
| MCQPPQ | −0.37 | −0.34 | −0.37 | 4.83 × 10⁻²⁸ | 4.77 × 10⁻³⁰ | 5.26 × 10⁻³⁵ |
| OKDAR | −0.30 | −0.22 | −0.25 | 3.38 × 10⁻¹⁸ | 6.24 × 10⁻¹⁴ | 2.08 × 10⁻¹⁷ |
| NHM-PRMS | −0.33 | −0.27 | −0.28 | 4.33 × 10⁻²² | 2.40 × 10⁻¹⁹ | 6.37 × 10⁻²¹ |
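The table reports Kendall’s tau and its p-value for each model (NNDAR, MCDAR, NNQPPQ, MCQPPQ, OKDAR, NHM-PRMS) and each streamflow metric (7-day low, mean, 1-day high). As a hedged illustration of how such a rank correlation and its two-sided p-value can be computed, the minimal sketch below implements Kendall’s tau with the large-sample normal approximation. It assumes no tied values in either series and applies a common continuity correction. The function name `kendall_tau` is hypothetical, and this is not necessarily the procedure or software the authors used; their handling of ties, for example, may differ.

```python
import math
from itertools import combinations


def kendall_tau(x, y):
    """Return (tau, two-sided p-value) for paired samples x and y.

    Sketch only: assumes no ties (so tau-a equals tau-b) and uses the
    normal approximation to the null distribution of Kendall's S.
    """
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("x and y must have equal length and n >= 3")

    # Kendall's S: number of concordant pairs minus discordant pairs.
    s = 0
    for i, j in combinations(range(n), 2):
        prod = (x[i] - x[j]) * (y[i] - y[j])
        if prod > 0:
            s += 1
        elif prod < 0:
            s -= 1

    n_pairs = n * (n - 1) // 2
    tau = s / n_pairs

    # Variance of S under the null hypothesis of independence (no ties).
    var_s = n * (n - 1) * (2 * n + 5) / 18

    # Continuity-corrected z score (assumed choice, common in trend tests).
    if s > 0:
        z = (s - 1) / math.sqrt(var_s)
    elif s < 0:
        z = (s + 1) / math.sqrt(var_s)
    else:
        z = 0.0

    # Two-sided p-value: 2 * (1 - Phi(|z|)) = erfc(|z| / sqrt(2)).
    p = math.erfc(abs(z) / math.sqrt(2))
    return tau, p


if __name__ == "__main__":
    # A perfectly reversed ordering gives tau = -1.0.
    tau, p = kendall_tau([1, 2, 3, 4, 5], [5, 4, 3, 2, 1])
    print(f"tau = {tau:.2f}, p = {p:.3g}")
```

With real data containing ties (for example, repeated zero flows), a tie-corrected variance of S would be needed; library routines such as SciPy’s `scipy.stats.kendalltau` handle that case.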

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Hodgkins, G.A.; Dudley, R.W.; Russell, A.M.; LaFontaine, J.H. Comparing Trends in Modeled and Observed Streamflows at Minimally Altered Basins in the United States. Water 2020, 12, 1728. https://doi.org/10.3390/w12061728
