1. Introduction

With increased solar forcing at the Earth’s surface in the afternoon, the air in the lower part of the atmosphere is heated from below, resulting in the formation of a Convective Boundary Layer (CBL). Turbulent processes inside the CBL span a wide range of scales, from the microscale (O(10 m)) to the mesoscale, and effectively mix the scalars and pollutants trapped within the boundary layer. Understanding microscale atmospheric boundary layer (ABL) processes is crucial for applications such as air quality modeling and the transport and dispersion of airborne agents or hazardous materials. With continuous advances in computational technology, the use of high-fidelity fluid dynamics models such as Large Eddy Simulation (LES) to model the local-scale ABL has become increasingly practical. Pioneering studies [1,2,3,4] used LES to reproduce atmospheric turbulence inside the ABL on grids with multiple points in the horizontal and vertical directions. Later studies (e.g., Moeng [5], Nieuwstadt et al. [6]) successfully reproduced the turbulent motions inside the CBL using the LES approach. However, most LES studies of the CBL were limited to idealized conditions, i.e., periodic boundary conditions, homogeneous surface properties and prescribed surface fluxes. This limits the applicability of LES to complex or real-world scenarios, where horizontal inhomogeneity makes the turbulent fluctuations at the inflow boundary differ from those at the outflow boundary [7]. One way to address this issue is to use the large-scale forcing from mesoscale numerical weather prediction (NWP) models to drive the LES, either by nesting the LES domain inside the NWP model or by providing boundary conditions to the LES at specified time steps. However, this methodology depends on the ability of the NWP model to downscale conditions effectively from the mesoscale to the microscale. The Advanced Research version of the Weather Research and Forecasting model (WRF-ARW, hereafter WRF) [8] is one such model that can handle downscaling from the mesoscale to the microscale. WRF can run multiple nested domains with different grid spacings simultaneously. Studies (e.g., Moeng et al. [7], Mirocha et al. [9], Kirkil et al. [10]) have used WRF-LES under idealized conditions for convective ABLs and showed that WRF-LES can capture most of the turbulent features occurring at the microscale. Talbot et al. [11], Chu et al. [12] and Cuchiara and Rappenglück [13] used the nesting approach in WRF to simulate a realistic ABL by downscaling from a grid spacing of 10 km (mesoscale) to 100 m (WRF-LES). Talbot et al. [11] and Cuchiara and Rappenglück [13] further refined the horizontal grid spacing to 50 m, whereas Chu et al. [12] showed that WRF-LES performs reasonably well at a grid spacing of 100 m.

The methodology of dynamic downscaling from the mesoscale to the microscale requires careful attention to several aspects: the type of parameterization used in mesoscale runs to characterize turbulence inside the boundary layer, the resolution at which the transition from mesoscale to microscale occurs, and the sub-grid model used in microscale runs. In a mesoscale model such as WRF, turbulence inside the ABL is fully parameterized using a 1-D Planetary Boundary Layer (PBL) scheme. In LES, the most energetic eddies are resolved explicitly, while eddies smaller than the grid size are parameterized by a sub-grid scale (SGS) model. Several studies [14,15,16] have shown that at resolutions between the mesoscale and the microscale, i.e., the transitional range referred to as the “grey zone” or “terra incognita” [17], neither 1-D PBL schemes nor LES-SGS models represent the turbulent features accurately. 1-D PBL schemes have shown acceptable performance in capturing the mean (smooth) flow characteristics at grey-zone resolutions [18,19] but perform poorly in capturing the fluctuations [11]. This poses an additional challenge when mesoscale simulations at grey-zone resolution are used to drive the LES: if the inflow conditions from grey-zone domains do not contain enough fluctuations to pass on to the LES, turbulence takes time to build up inside the LES domain. To mitigate this, Mirocha et al. [20] showed that nesting a fine LES inside a coarser LES is superior to nesting the fine LES directly inside the mesoscale domain. In this way, turbulence starts building up inside the coarser LES before it is passed on to the fine LES.
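To make the grey-zone argument concrete: the turbulence-modeling regime of a grid is often judged from the ratio of the horizontal grid spacing Δ to the size of the energy-containing eddies, which in a CBL scales with the boundary-layer depth z_i. The Python sketch below labels a grid as LES-like, grey zone or mesoscale from Δ/z_i. The cut-offs (0.2 and 1.0) are hypothetical values chosen only for illustration, not limits established in the cited studies, and z_i = 1000 m is an assumed example depth rather than a PSB1 measurement.

```python
# Illustrative classification of a horizontal grid spacing relative to the
# boundary-layer depth z_i. The thresholds are ASSUMED for illustration only;
# the grey zone has no sharp, universally accepted limits.

GREY_ZONE_LOWER = 0.2  # assumed: dx / z_i below this -> LES-like regime
GREY_ZONE_UPPER = 1.0  # assumed: dx / z_i above this -> 1-D PBL regime


def resolution_regime(dx_m: float, zi_m: float,
                      lower: float = GREY_ZONE_LOWER,
                      upper: float = GREY_ZONE_UPPER) -> str:
    """Return a coarse label for the turbulence-modeling regime of a grid."""
    if dx_m <= 0 or zi_m <= 0:
        raise ValueError("grid spacing and boundary-layer depth must be positive")
    ratio = dx_m / zi_m
    if ratio < lower:
        return "LES"
    if ratio > upper:
        return "mesoscale (1-D PBL)"
    return "grey zone"


if __name__ == "__main__":
    zi = 1000.0  # hypothetical convective boundary-layer depth in meters
    for dx in (1300.0, 450.0, 150.0):
        print(f"dx = {dx:6.0f} m -> {resolution_regime(dx, zi)}")
```

With these assumed values, the 1.3-km, 450-m and 150-m grids used in this study fall into the mesoscale, grey-zone and LES categories, respectively, which is consistent with treating the 450-m domain as a coarse LES that builds up turbulence for the 150-m domain. A different z_i or different cut-offs would shift the labels.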

To reduce the model bias at mesoscale level itself, nudging process is available in WRF [

21]. Observational nudging is the process of adjusting the model values towards the surface and upper-air observations. This is achieved by feeding the surface and sounding observational data available from Meteorological Assimilation Data Ingest System (MADIS) into a WRF program called as Objective Analysis (OBSGRID). MADIS, developed by National Oceanic Atmospheric Administration (NOAA) Earth System Research Laboratory, collects surface and upper air observational data from several sources, and performs quality control for the data that is accessible to public (

https://madis.ncep.noaa.gov). The initial and boundary conditions for the WRF model can be improved by subjecting the initial datasets to objective analysis process [

21]. Several studies [

22,

23,

24,

25,

26,

27,

28] have used different configurations for nudging at various levels and show that nudging has improved the model performance considerably. Lo et al. [

22] has observed that nudging improves the generation of realistic regional-scale patterns by the model. Otte [

23], Ngan et al. [

24], Gilliam et al. [

25] and Rogers et al. [

26] have observed the improvement in Air Quality predictions when the background meteorological fields were generated by nudging the available observations. Ngan et al. [

27] and Li et al. [

28] have observed that performing nudging even when using re-analyses datasets significantly improved the wind direction predictions that are crucial for atmospheric transport and dispersion. Another challenge of running the LES for realistic ABL is the lack of observations to validate the model performance in regards of mixing and stability parameters. As fine-scale LES runs are computationally expensive, the domain size is often limited to few kilometers (usually <5 km) and having high frequency observations at multiple heights within that range is only possible during tracer field experiments or field campaigns designed to study the effect of local atmospheric turbulence on tracer dispersion. Sagebrush tracer experiment [

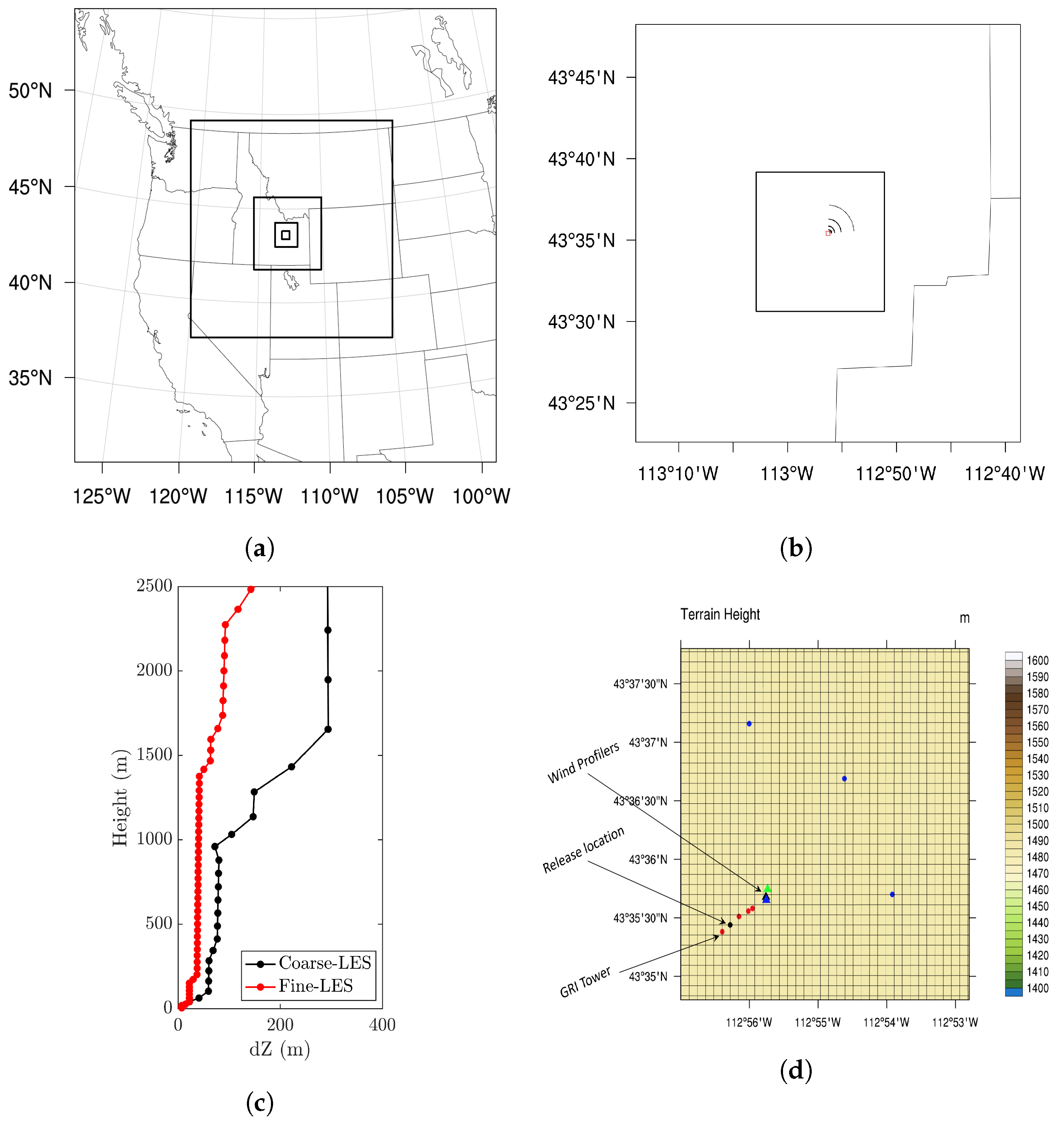

29] is one such campaign with database of turbulence and standard meteorological measurements spanning across a 3200-m domain and at multiple heights. During October 2013, the Field Research Division of the Air Resource Laboratory (ARLFRD) of NOAA conducted a tracer field experiment, which is designated as Project Sagebrush Phase 1 (PSB1, [

29]). Each day of the experiment conducted is designated as Intensive Observation Period (IOP) and five such IOPs were conducted. Out of five IOPs conducted, IOP3 conditions were near-neutral to neutral with calm winds during the morning hours and strong winds during the afternoon hours [

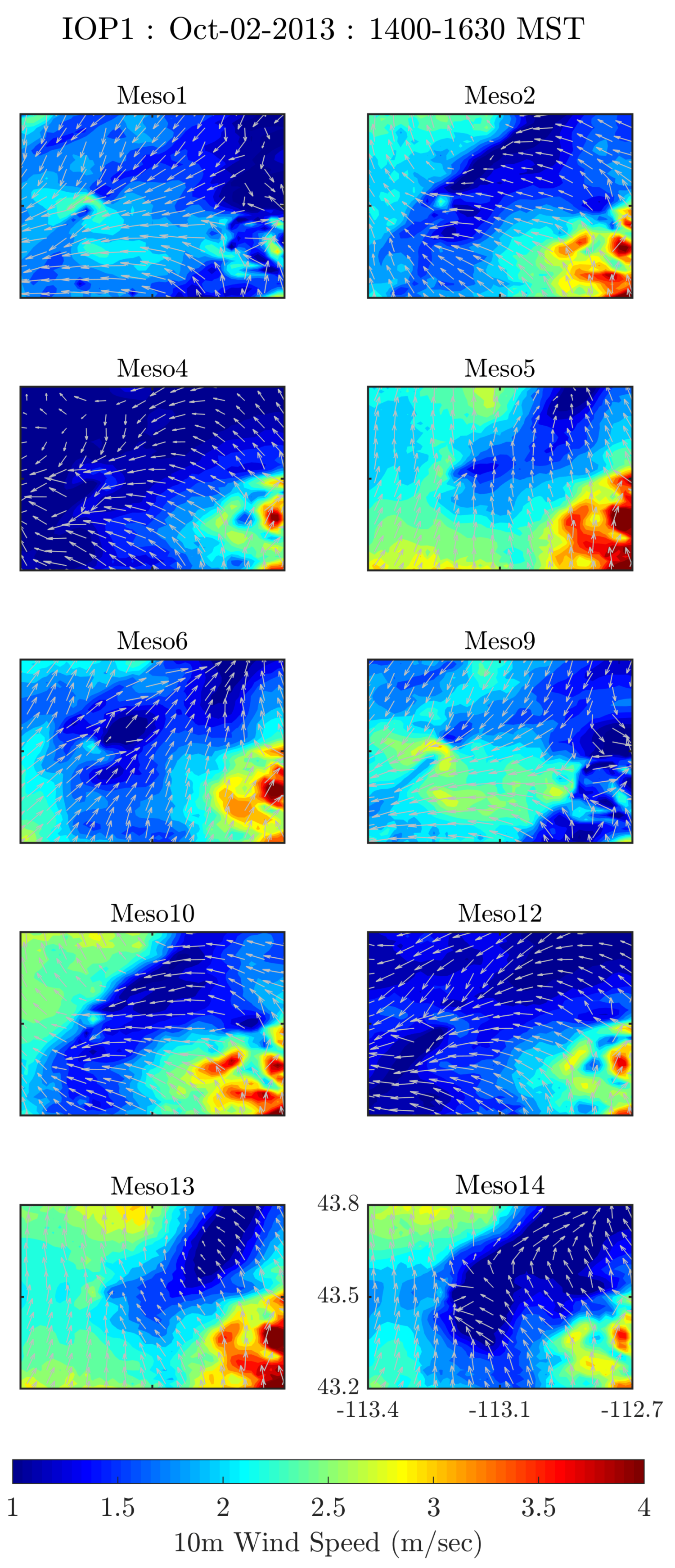

29]. All other IOPs have weakly-unstable to unstable atmospheric conditions. The day of IOP1 was mostly sunny with cirrostratus haze and weak winds (<2 ms

). Days of IOP2, IOP4 and IOP5 were mostly sunny with moderate to strong (2–6 ms

) southwesterly winds.
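Conceptually, nudging (Newtonian relaxation) adds a term to the prognostic equation of a model variable α that pulls it towards an observed or analyzed value α_obs: ∂α/∂t = F(α) + G·W·(α_obs − α), where F is the model's own tendency, G is a nudging coefficient (s⁻¹) and W is a weight between 0 and 1 that depends on the distance and time from the observation. The Python sketch below integrates this relaxation for a single scalar with forward Euler. It is a simplified illustration of the idea, not WRF's implementation; the value G = 3 × 10⁻⁴ s⁻¹, the weight, the time step and the example wind speeds are all assumptions.

```python
import math
from typing import Callable


def nudge(alpha0: float, alpha_obs: float, g_coef: float = 3e-4, weight: float = 1.0,
          dt: float = 60.0, n_steps: int = 60,
          tendency: Callable[[float], float] = lambda a: 0.0) -> float:
    """Forward-Euler integration of d(alpha)/dt = F(alpha) + G * W * (alpha_obs - alpha).

    g_coef (G, s^-1), weight (W, 0..1), dt (s) and n_steps are hypothetical
    illustration values, not the nudging settings used in this study.
    """
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must lie in [0, 1]")
    alpha = alpha0
    for _ in range(n_steps):
        # Physical tendency plus the relaxation towards the observation.
        alpha += dt * (tendency(alpha) + g_coef * weight * (alpha_obs - alpha))
    return alpha


if __name__ == "__main__":
    # Hypothetical example: a 2 m/s model wind relaxed towards a 5 m/s observation
    # for one hour (60 steps of 60 s) with no physical tendency (F = 0).
    nudged = nudge(2.0, 5.0)
    exact = 5.0 + (2.0 - 5.0) * math.exp(-3e-4 * 3600.0)
    print(f"Euler: {nudged:.3f} m/s, analytic: {exact:.3f} m/s")
```

With F = 0 the solution relaxes exponentially with an e-folding time of 1/(G·W), about 55 min for the assumed G, so the Euler result can be checked against the analytic value. The product G·W·Δt must stay below 1 for the explicit update to approach the observation without overshooting.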

While existing studies shed light on the uses and limitations of WRF-LES, to the authors’ knowledge no study has yet simulated detailed ABL conditions during a tracer field experiment at LES scales. The objectives of the present study were:

- (i) To simulate high-resolution ABL conditions during the Sagebrush Tracer Experiment Phase 1 using real-world dynamic downscaling in WRF-LES; and
- (ii) To assess the ability of the WRF model to produce outputs that can be used as input for LES-scale tracer simulations.

We evaluated model performance with different parameterizations in the mesoscale runs and different SGS models in the LES runs. The paper is structured as follows: Section 2 describes the methodology, including a short description of the Sagebrush tracer experiment and the WRF model configuration. Section 3 presents the sensitivity analysis of the mesoscale and microscale simulations along with the final LES results. Section 4 gives the summary and conclusions.

4. Summary and Conclusions

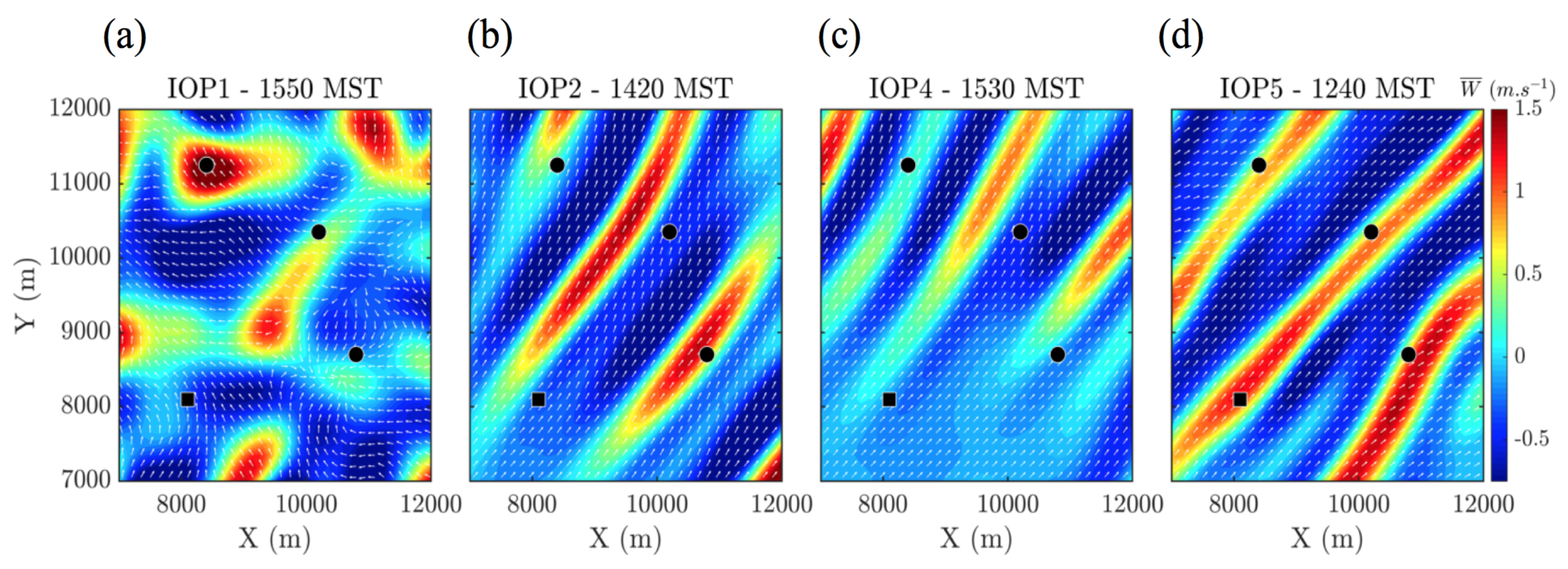

ABL conditions during Sagebrush tracer experiment were simulated at LES spatial and temporal scales using dynamic downscaling methodology in WRF. Five nested-mesoscale domains with grid resolutions ranging from 24 km to 1.3 km and two nested-LES domains with grid resolutions of 450 m and 150 m were simulated. Further reduction of grid resolution was not deemed necessary as the region covered is mostly flatland and does not have any features that can impact the wind field. Output from the mesoscale simulations was used to drive the LES. Sensitivity of WRF model performance to the initial and lateral boundary conditions, physics schemes and sub-grid scale models was evaluated. Mesoscale simulations were conducted with multiple PBL schemes, microphysics and surface layer schemes (

Table 2). Sensitivity analysis following the metrics explained in

Section 3.1 showed that NARR dataset with YSU PBL scheme coupled with Revised Monin–Obukhov surface layer scheme and Morrison-2nd-moment microphysics performed best. Model performance was improved with nudging the boundary fields through OA. Significant improvement was observed in wind direction predictions after the nudging (

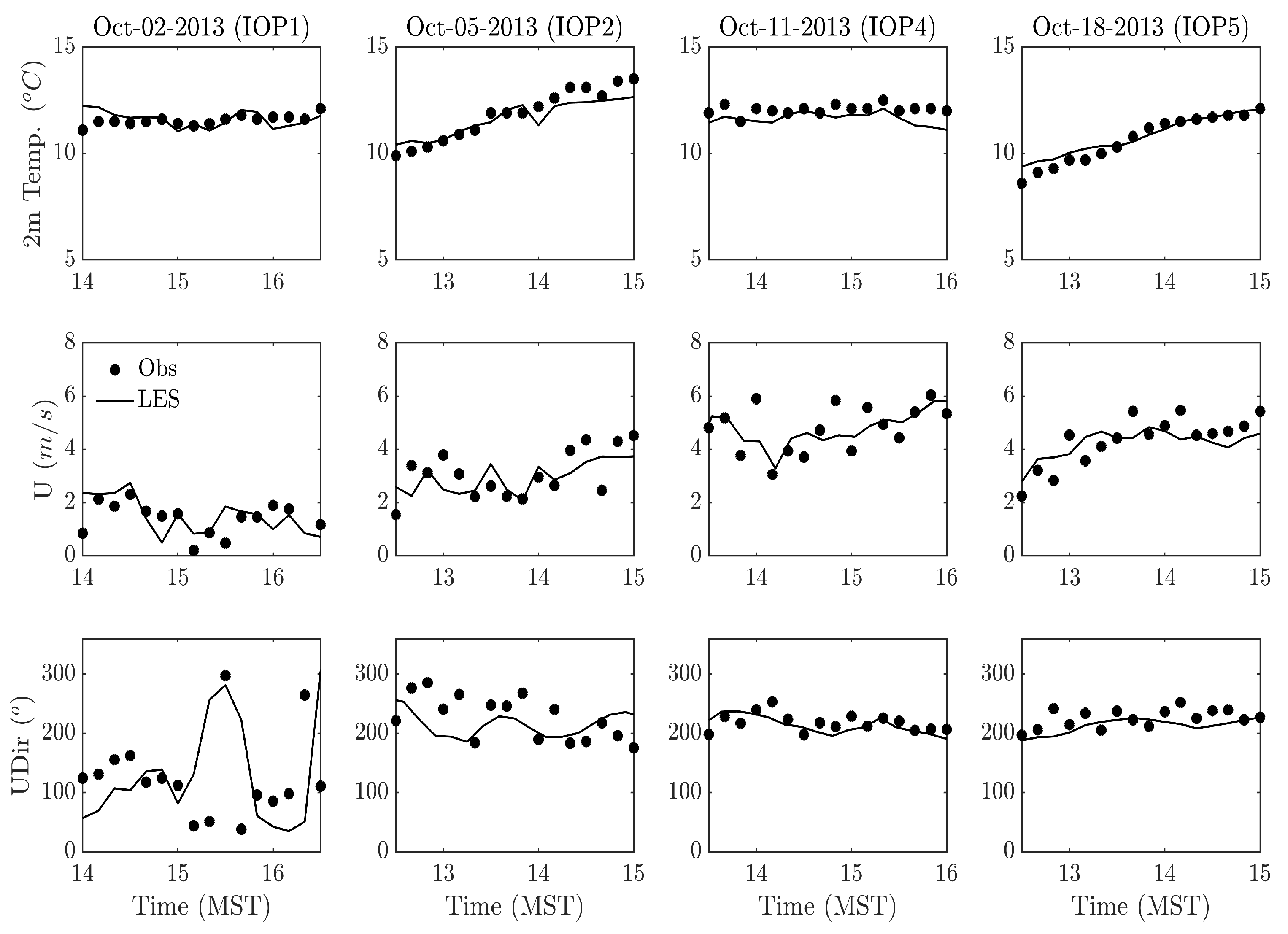

Table A3).
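Because wind direction is a circular variable, model–observation differences must be wrapped to [−180°, 180°) before error statistics are computed; otherwise a simulated 350° against an observed 10° would count as a 340° error instead of 20°. The Python sketch below computes bias, mean absolute error and RMSE on wrapped differences. It is an assumed, generic formulation for illustration; the metrics actually used in this study are those defined in Section 3.1, and the example pairs are hypothetical.

```python
import math
from typing import Dict, Sequence


def wind_dir_diff(model_deg: float, obs_deg: float) -> float:
    """Signed model-minus-observation difference wrapped to [-180, 180) degrees."""
    return (model_deg - obs_deg + 180.0) % 360.0 - 180.0


def wind_dir_metrics(model: Sequence[float], obs: Sequence[float]) -> Dict[str, float]:
    """Bias, MAE and RMSE of wind direction (degrees) using wrapped differences."""
    if len(model) != len(obs) or len(model) == 0:
        raise ValueError("model and obs must be non-empty and of equal length")
    diffs = [wind_dir_diff(m, o) for m, o in zip(model, obs)]
    n = len(diffs)
    return {
        "bias": sum(diffs) / n,
        "mae": sum(abs(d) for d in diffs) / n,
        "rmse": math.sqrt(sum(d * d for d in diffs) / n),
    }


if __name__ == "__main__":
    # Hypothetical model/observation pairs, two of which straddle north.
    print(wind_dir_metrics([350.0, 10.0, 200.0], [10.0, 350.0, 190.0]))
```

Wrapping is applied to each pair before averaging, so errors of opposite sign near north cancel in the bias but still contribute their true 20° magnitude to the MAE and RMSE.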

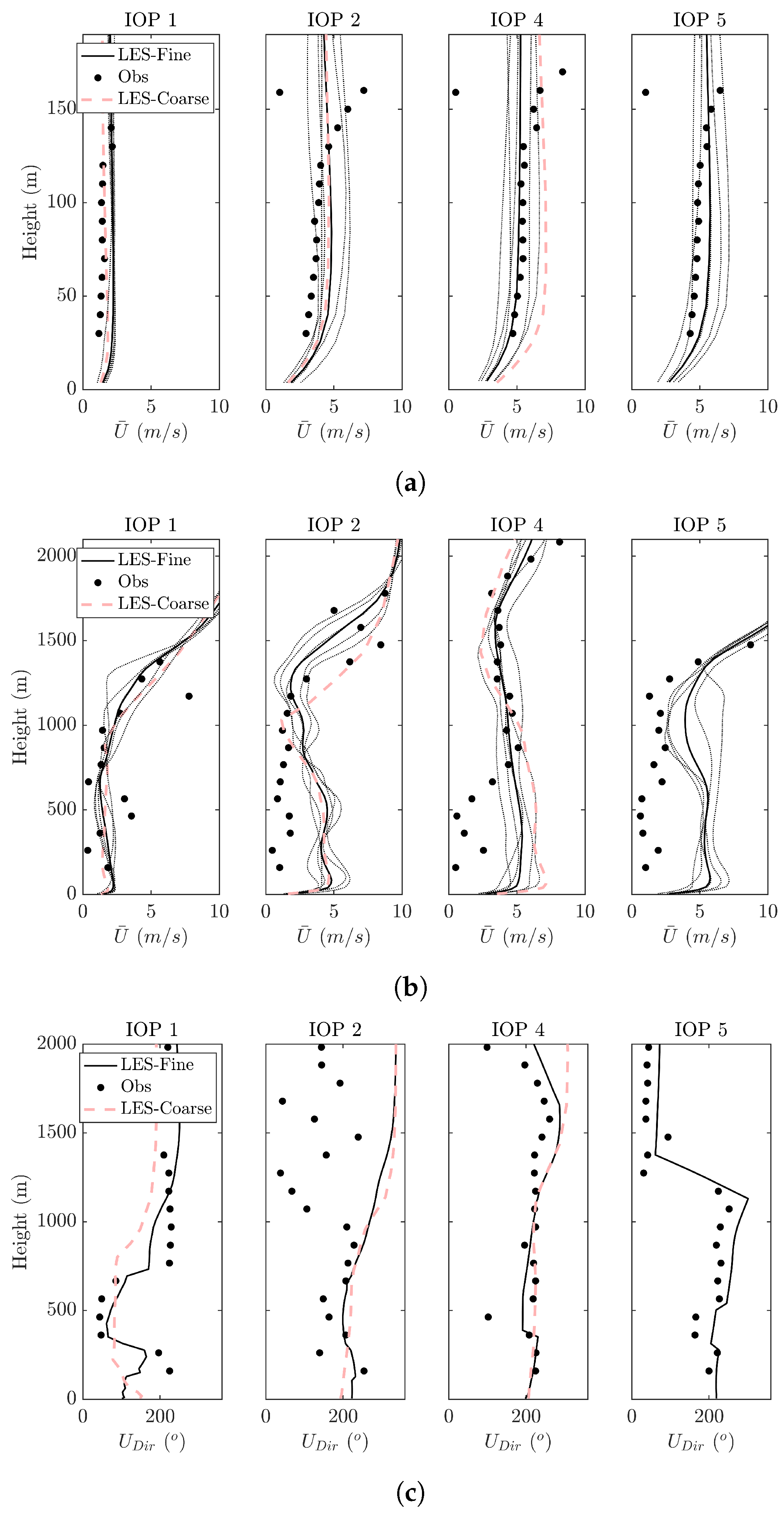

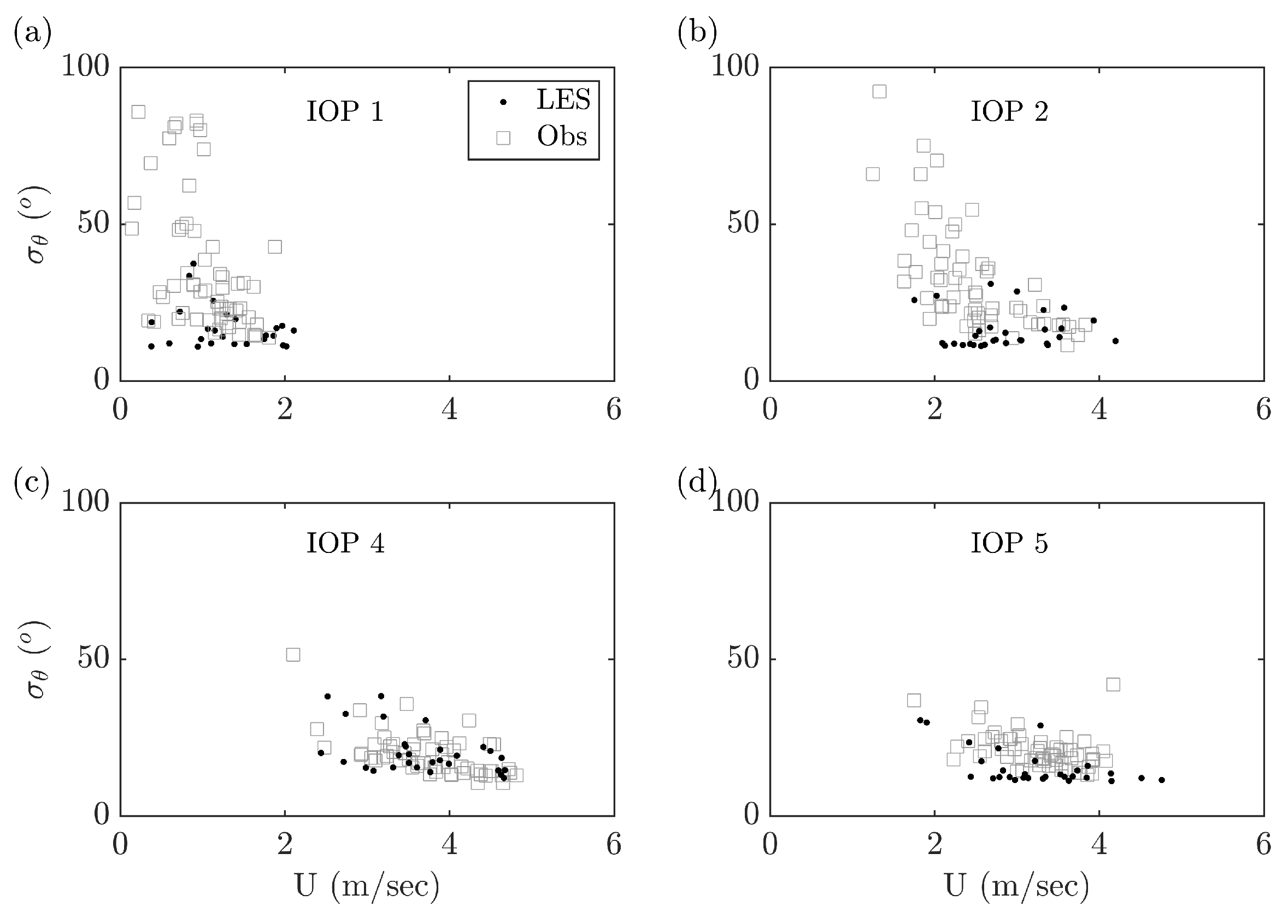

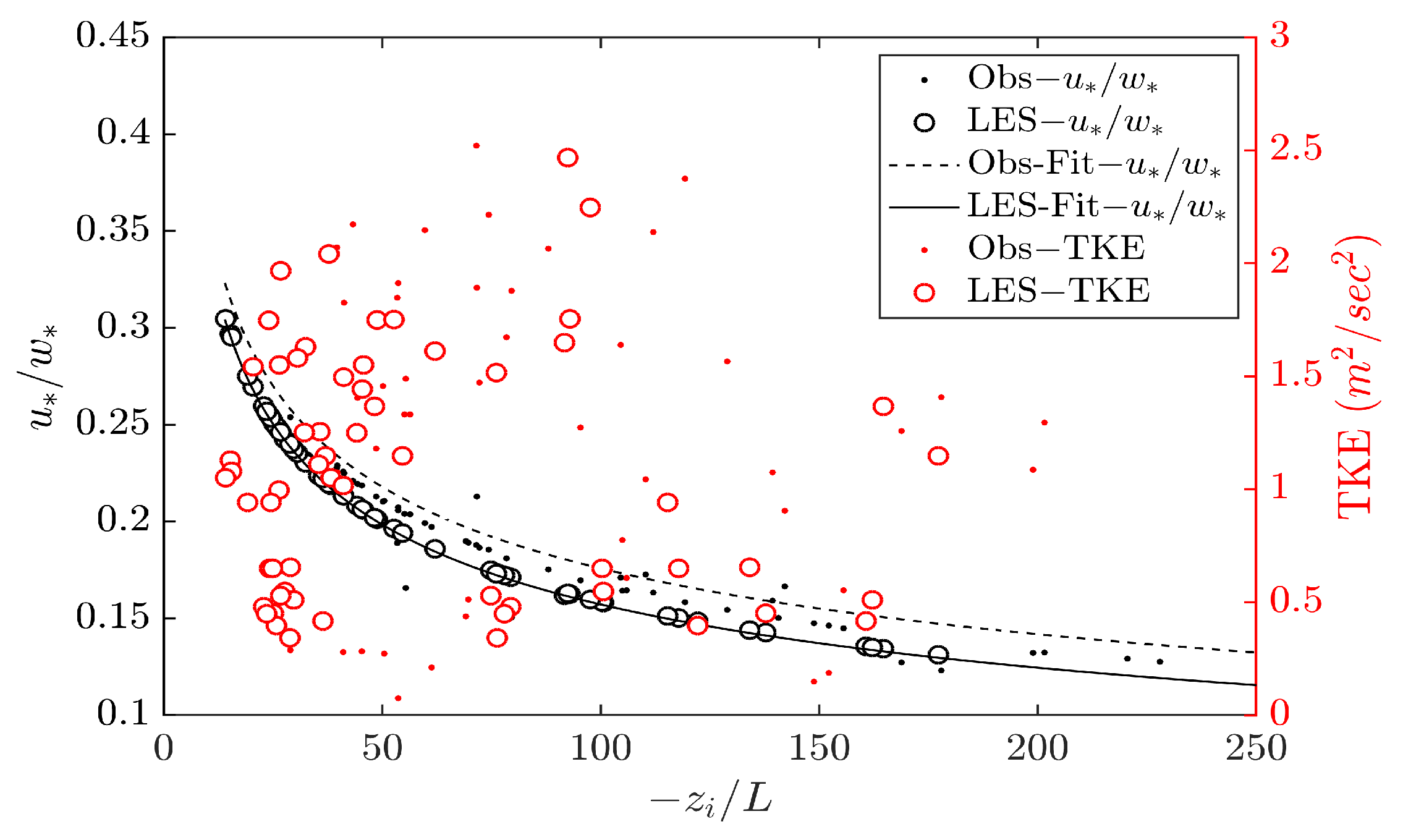

Although there exists no “thumb-rule” to use either mesoscale or LES at “terra-incognita” resolutions, we used LES at a grid resolution of 450-m, thus the turbulence started building up before the finest LES domain of 150-m resolution. Two vertical resolutions were tested inside the LES domains. Wind speed and wind direction profiles were compared against the observed values from SODAR and RASS during PSB1. Having 44 levels inside the boundary layer improved the model performance, especially with wind direction profiles (

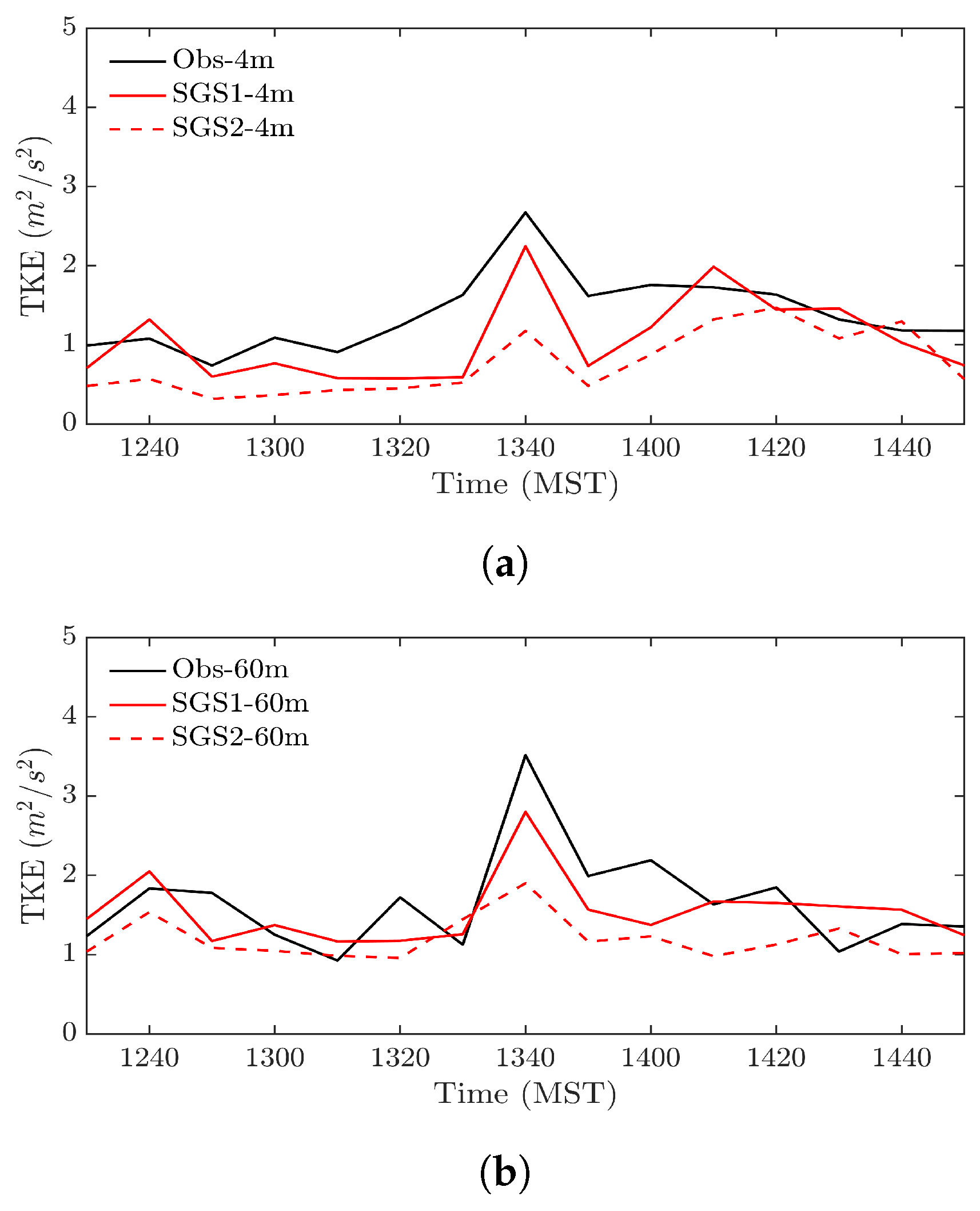

Figure 4c). Two SGS models (3D TKE and Smagorinsky) with NBA turned “on” were tested inside the LES domains. Comparing the time variation of TKE near surface (4-m) and at 60-m with the observed values during 2.5-h of PSB1 showed that both SGS models in WRF underestimated the TKE at 4-m. This might be avoided by using a dynamic sub-grid model, as suggested in previous studies [

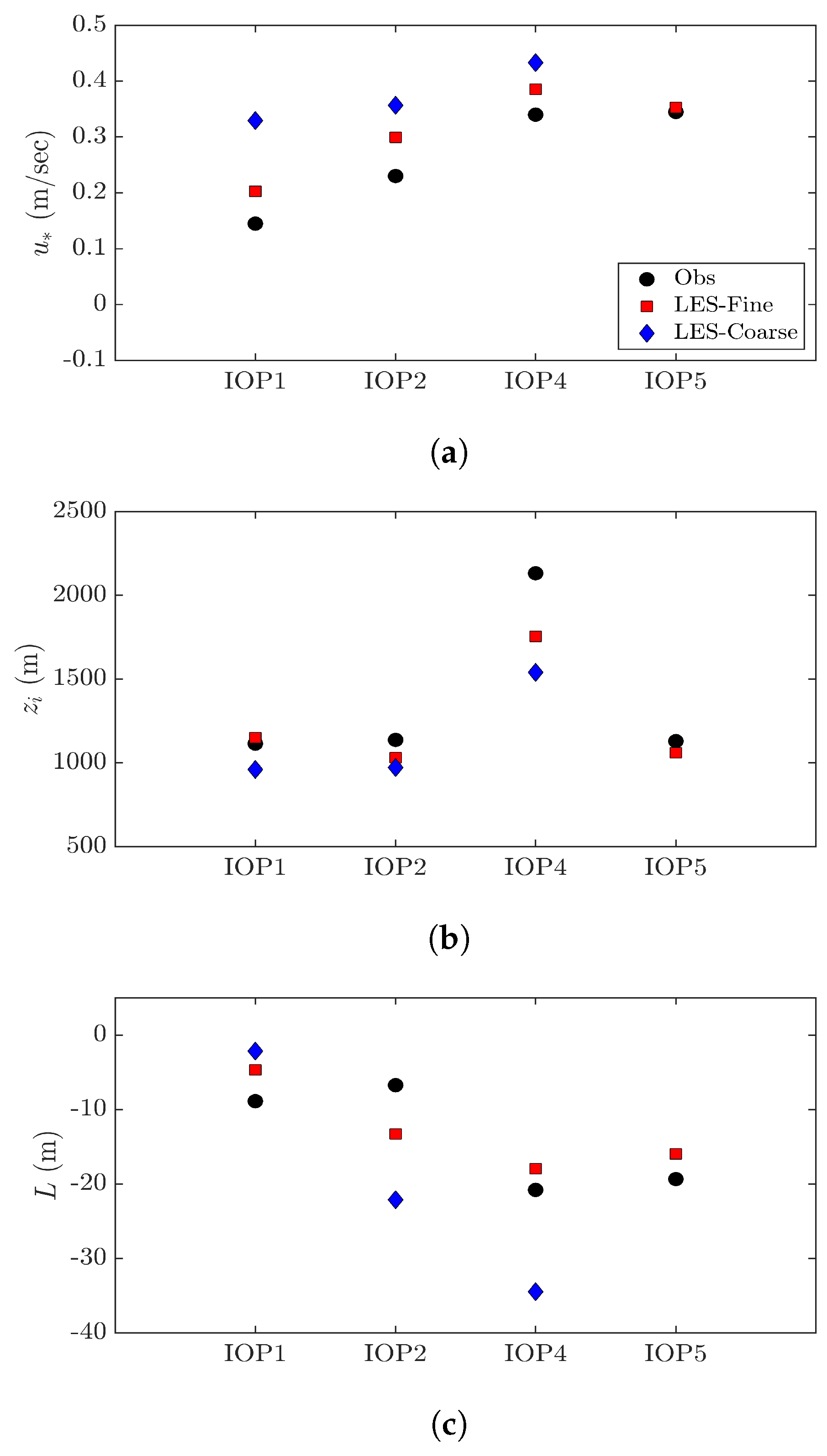

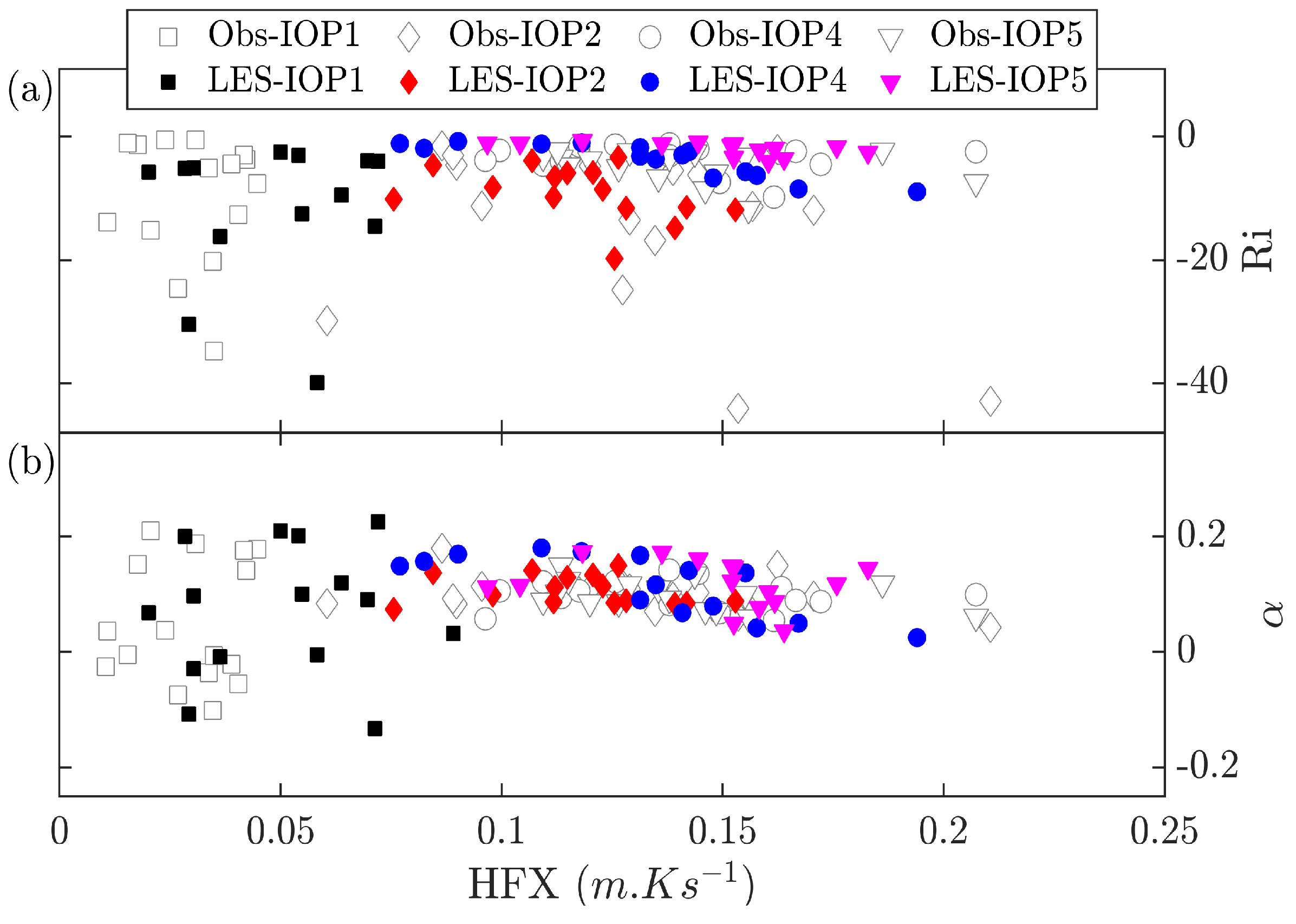

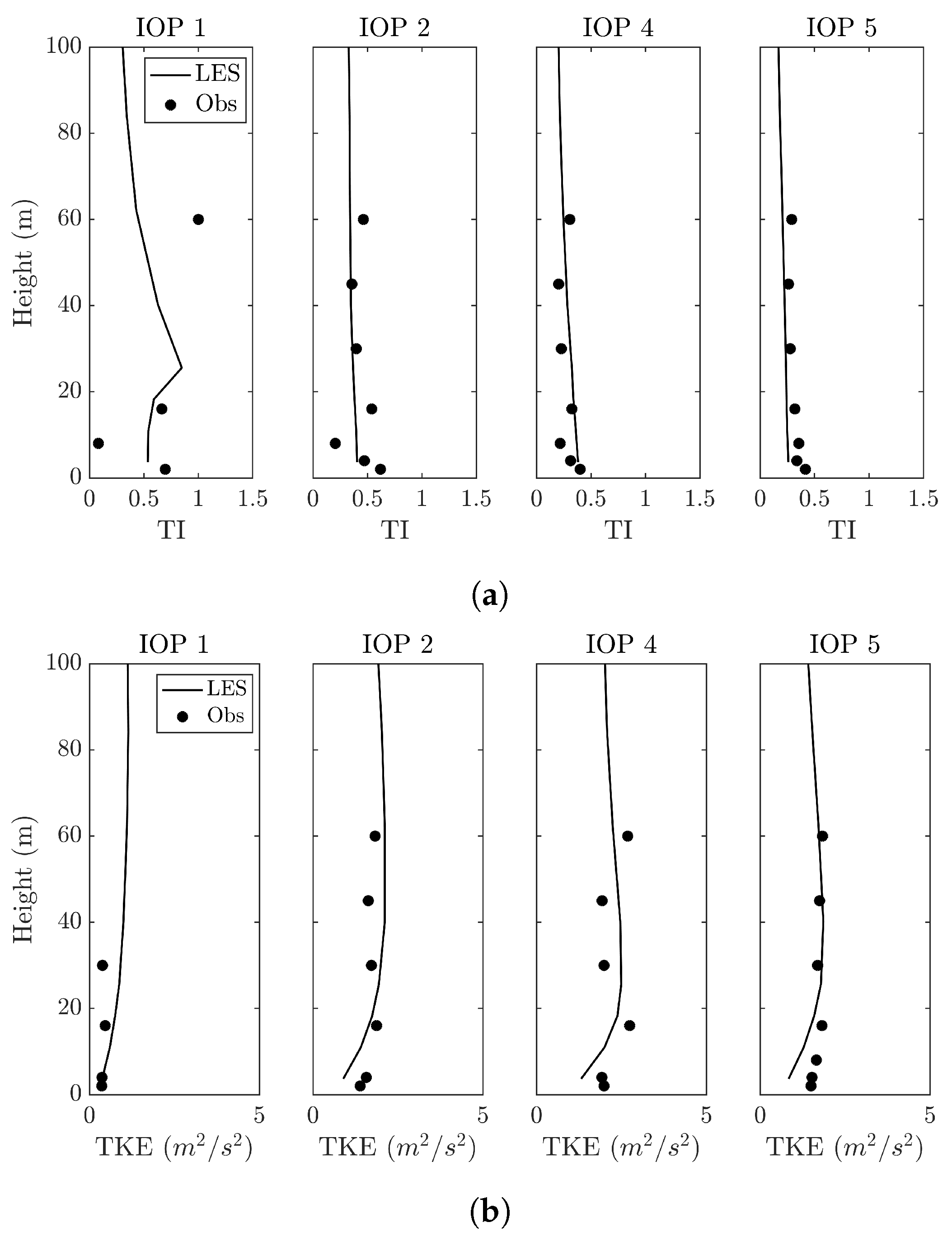

20].When using LES framework for dispersion applications, it is crucial to capture the mixing and stability conditions inside ABL. We compared the friction velocity, PBL height and Monin–Obukhov length against the measured values. Stability during the IOPs was estimated from Richardson number and shear exponent evaluated based on wind speed and temperatures between 4- and 60-m. IOPs 1 and 2 were unstable to weakly unstable, while IOPs 4 and 5 were consistently weakly unstable. Turbulence intensity and TKE profiles were well mixed 40-m above ground. Although rare, standard deviation of wind speed directions in the range of 70

–100

were observed during IOPs 1 and 2 for wind speeds less than 3-ms

and in the range of 10

–40

for IOPs 4 and 5. LES simulated wind direction standard deviations were underestimated for wind speeds less than 3-ms

and were in good agreement during IOPs 4 and 5. Wang and Jin [

56] also observed the not-so-satisfactory performance of WRF model for wind speeds smaller than 2.5-ms

in their mesoscale runs.
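The near-surface diagnostics compared above follow standard definitions: friction velocity u* = (⟨u′w′⟩² + ⟨v′w′⟩²)^¼, Obukhov length L = −u*³θ_v/(κ g ⟨w′θ_v′⟩), a bulk Richardson number between two levels, the power-law shear exponent α = ln(U₂/U₁)/ln(z₂/z₁), TKE = ½(σ_u² + σ_v² + σ_w²), and the Yamartino (1984) single-pass estimate of the wind-direction standard deviation σ_θ. The Python sketch below collects them. It is an assumed, generic formulation, not the processing code used for PSB1, and all input values are hypothetical. The bulk Richardson function expects potential temperatures, so air temperatures measured at 4 and 60 m would first need the dry-adiabatic correction of roughly 0.0098 K m⁻¹.

```python
import math
from typing import Sequence

KAPPA = 0.4   # von Karman constant (assumed value; 0.40-0.41 are common)
G = 9.81      # gravitational acceleration, m s^-2


def friction_velocity(uw: float, vw: float) -> float:
    """u* (m/s) from the kinematic momentum fluxes <u'w'> and <v'w'> (m^2 s^-2)."""
    return (uw ** 2 + vw ** 2) ** 0.25


def obukhov_length(u_star: float, theta_v: float, wthv: float) -> float:
    """Obukhov length L = -u*^3 theta_v / (kappa g <w'theta_v'>), in m (negative if unstable)."""
    if wthv == 0.0:
        return math.inf  # neutral limit
    return -u_star ** 3 * theta_v / (KAPPA * G * wthv)


def bulk_richardson(theta1: float, theta2: float, u1: float, u2: float,
                    z1: float, z2: float) -> float:
    """Bulk Richardson number between levels z1 < z2 from potential temperature (K) and wind speed."""
    du = u2 - u1
    if du == 0.0:
        raise ValueError("zero wind-speed difference: Ri_b undefined")
    theta_mean = 0.5 * (theta1 + theta2)
    return G * (theta2 - theta1) * (z2 - z1) / (theta_mean * du ** 2)


def shear_exponent(u1: float, u2: float, z1: float, z2: float) -> float:
    """Power-law exponent alpha in U(z2) / U(z1) = (z2 / z1) ** alpha."""
    return math.log(u2 / u1) / math.log(z2 / z1)


def tke(sigma_u: float, sigma_v: float, sigma_w: float) -> float:
    """Turbulence kinetic energy per unit mass (m^2 s^-2) from velocity standard deviations."""
    return 0.5 * (sigma_u ** 2 + sigma_v ** 2 + sigma_w ** 2)


def yamartino_sigma_theta(directions_deg: Sequence[float]) -> float:
    """Yamartino (1984) single-pass estimate of the wind-direction standard deviation, degrees."""
    rad = [math.radians(d) for d in directions_deg]
    sa = sum(math.sin(r) for r in rad) / len(rad)
    ca = sum(math.cos(r) for r in rad) / len(rad)
    # Clamp at zero to guard against tiny negative values from rounding.
    eps = math.sqrt(max(0.0, 1.0 - (sa ** 2 + ca ** 2)))
    return math.degrees(math.asin(eps) * (1.0 + (2.0 / math.sqrt(3.0) - 1.0) * eps ** 3))


if __name__ == "__main__":
    # Hypothetical 4-m / 60-m values for an unstable afternoon period.
    u_star = friction_velocity(-0.09, 0.0)
    print(f"u* = {u_star:.2f} m/s, L = {obukhov_length(u_star, 300.0, 0.15):.1f} m")
    print(f"Ri_b = {bulk_richardson(300.0, 299.5, 2.0, 4.0, 4.0, 60.0):.3f}")
    print(f"alpha = {shear_exponent(2.0, 4.0, 4.0, 60.0):.3f}")
    print(f"sigma_theta = {yamartino_sigma_theta([350.0, 10.0]):.1f} deg")
```

Negative L and Ri_b indicate unstable conditions. The Yamartino estimate handles directions that straddle north, so 350° and 10° give σ_θ of about 10° rather than the 170° a naive linear standard deviation would return.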

In summary, this study demonstrates the use of multiple nested domains to downscale real boundary conditions from mesoscale resolutions to LES scales. A detailed description of ABL conditions during the Sagebrush tracer experiment is given, including the parameters that are critical for tracer dispersion studies. With a proper selection of parameterizations, the WRF model can deliver the initial and boundary conditions required for LES runs. A future study will use the meteorological fields obtained from these ABL simulations to validate simulated tracer dispersion against the experimental measurements.