A Novel Method for the Recognition of Air Visibility Level Based on the Optimal Binary Tree Support Vector Machine

Abstract

1. Introduction

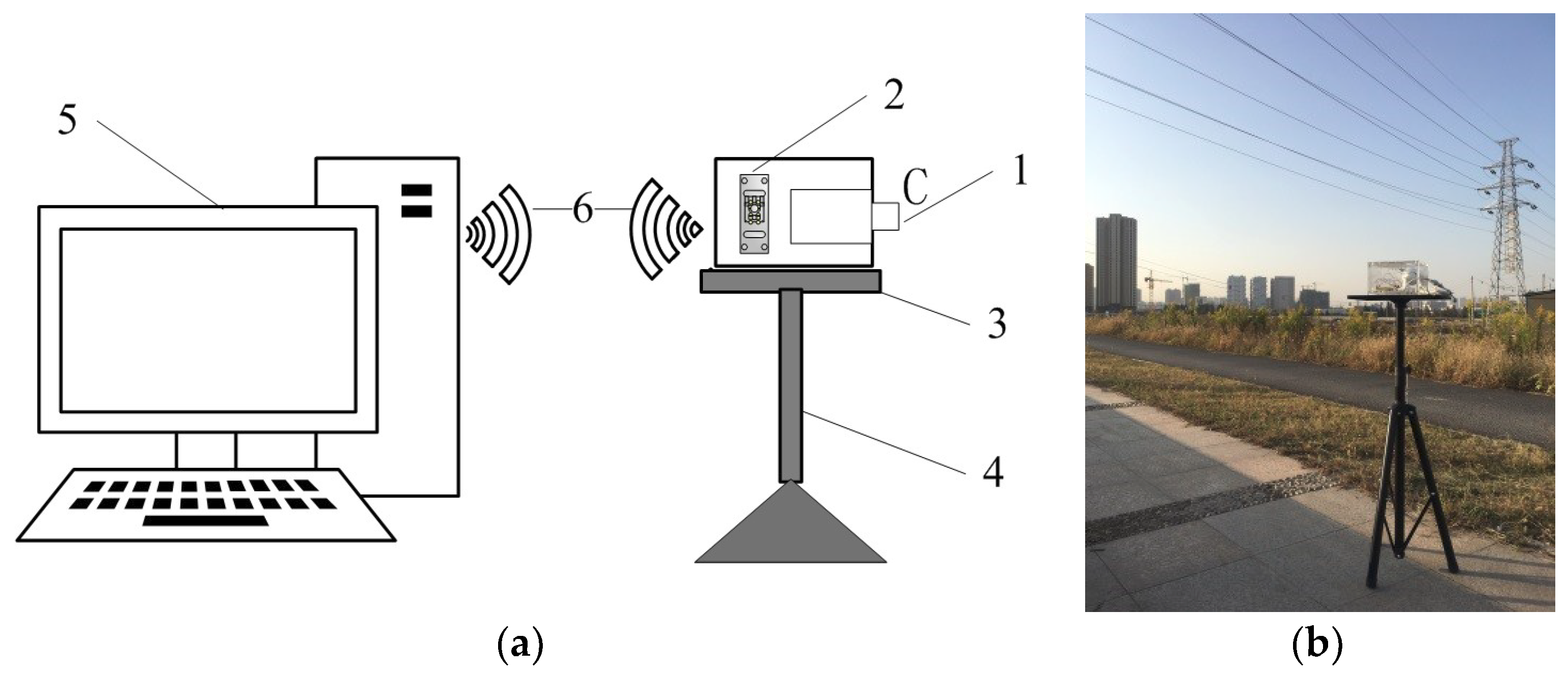

2. Materials and Methods

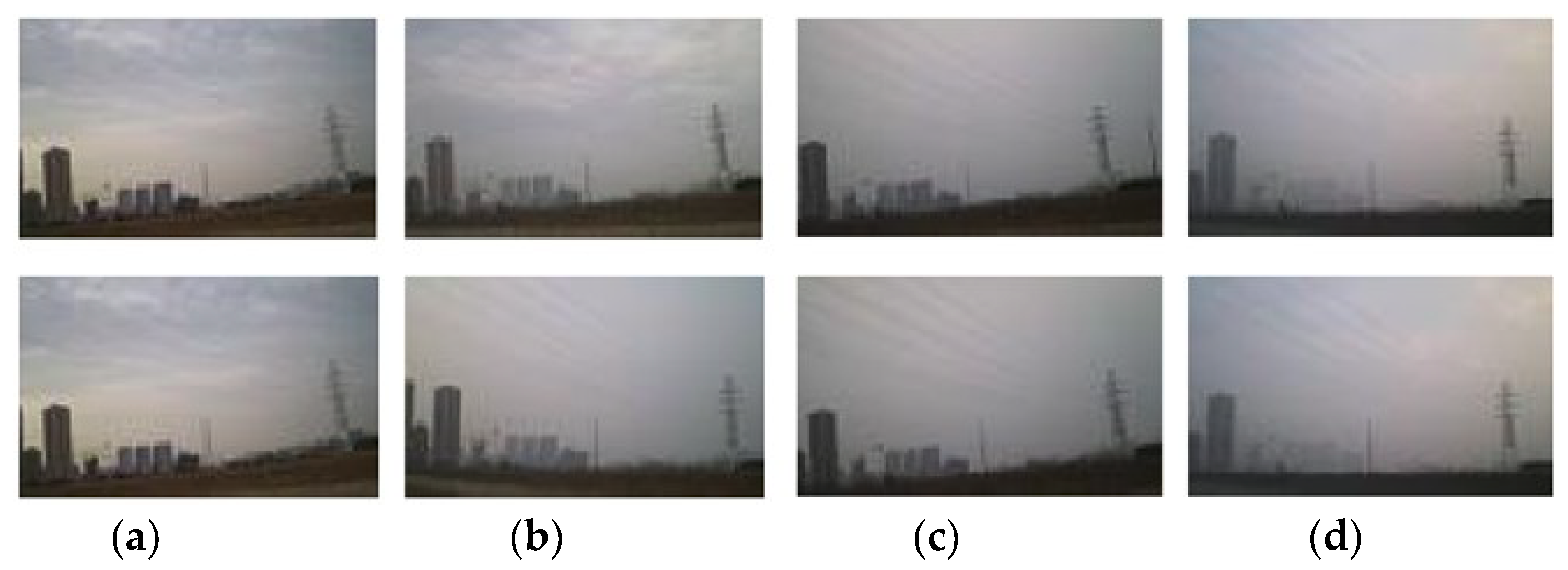

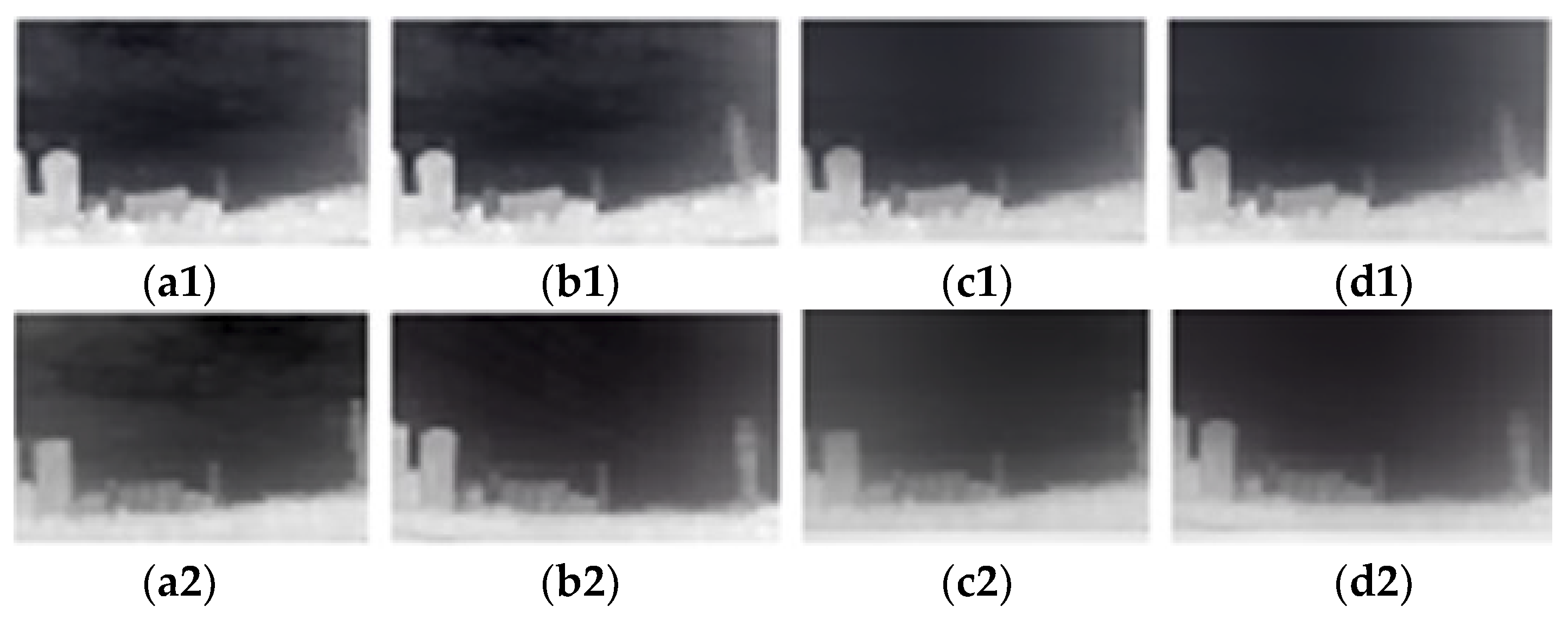

2.1. Materials

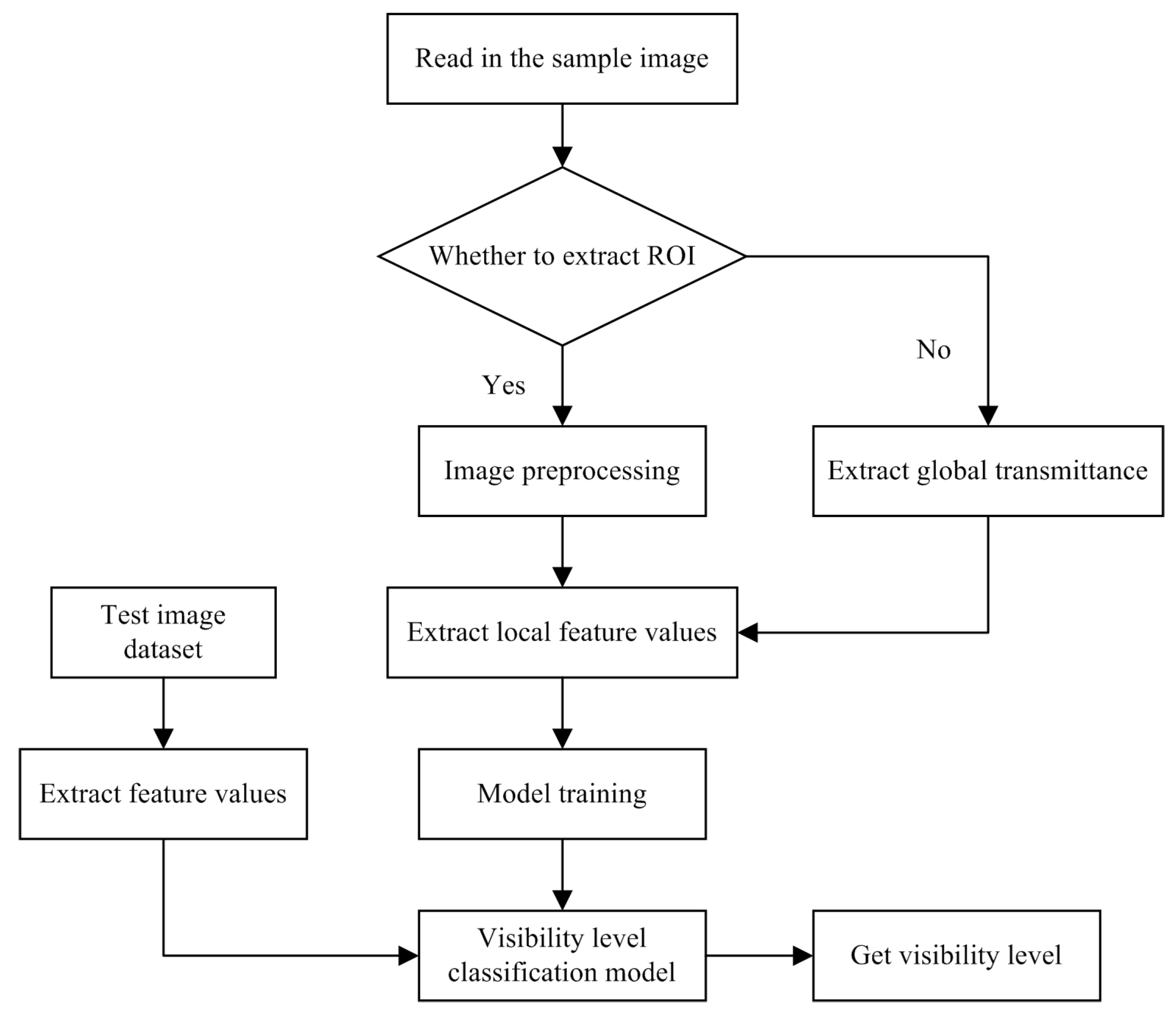

2.2. Visibility Recognition Method

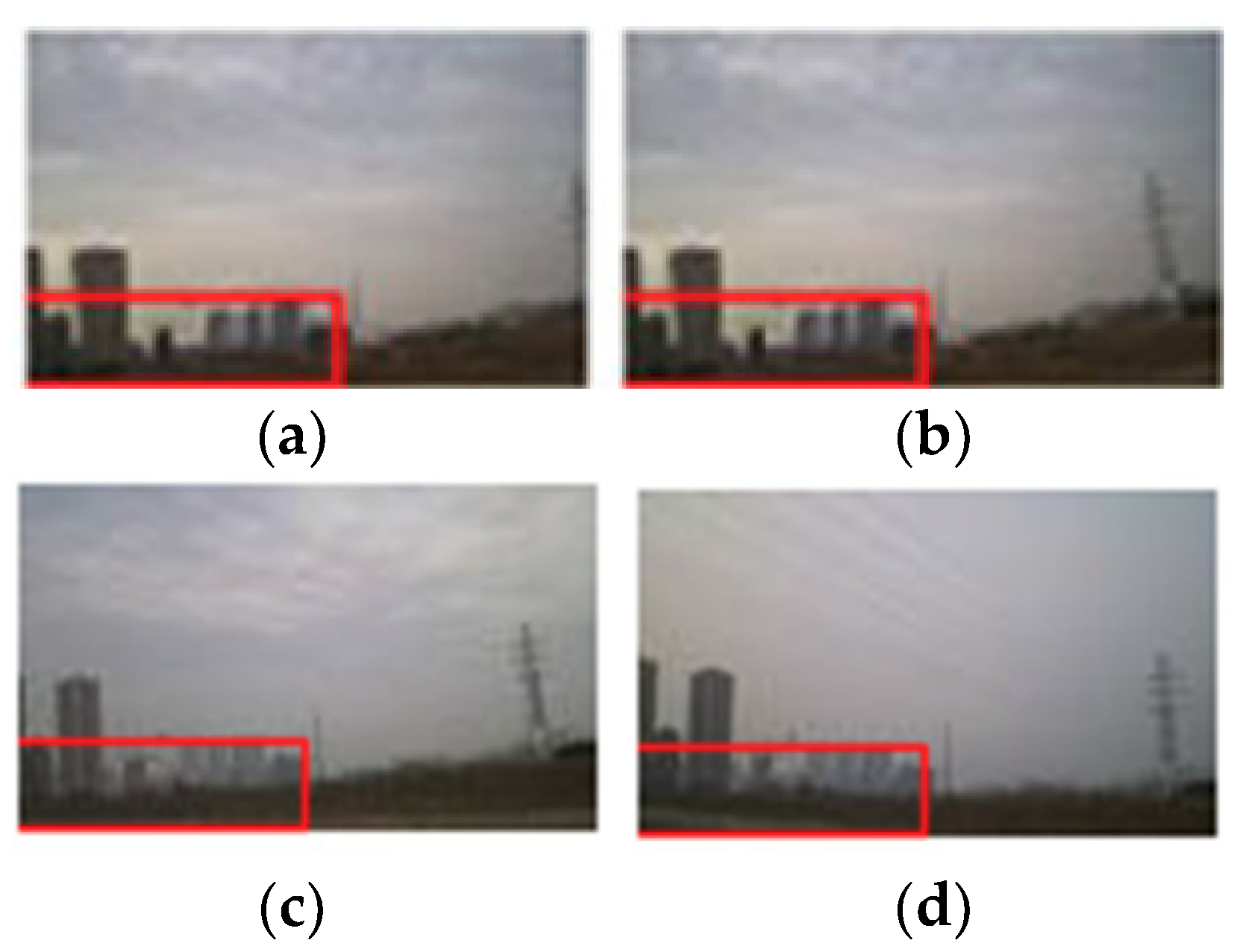

2.2.1. ROI Extraction

2.2.2. Image Preprocessing

2.2.2.1. Expansion and Erosion

2.2.2.2. Linear Contrast Stretch

2.2.3. Extraction of Feature Values

2.2.3.1. Calculation of Transmittance

- (1) Select the 0.1% of pixels with the largest luminance in the dark channel map and extract their corresponding values I in the input image.

- (2) Take the maximum of these values as the global atmospheric light value.
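Steps (1) and (2) can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' code. The image is assumed to be an RGB float array scaled to [0, 1]. The dark-channel patch size (15 × 15 by default) and ω = 0.95 are common choices from He et al.'s dark channel prior, not values given here. The maximum in step (2) is assumed to be taken per channel. The `transmission` helper uses the standard single-channel estimate t = 1 − ω · dark(I / A). This section does not state how the separate R, G, and B transmittance values are obtained. All function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def dark_channel(img: np.ndarray, patch: int = 15) -> np.ndarray:
    """Per-pixel minimum over R, G, B, followed by a patch x patch minimum filter.

    `patch` should be odd so the output has the same height and width as `img`.
    """
    min_rgb = img.min(axis=2)
    r = patch // 2
    padded = np.pad(min_rgb, r, mode="edge")  # edge padding keeps the output size
    windows = sliding_window_view(padded, (patch, patch))
    return windows.min(axis=(-2, -1))


def atmospheric_light(img: np.ndarray, dark: np.ndarray, top_fraction: float = 0.001) -> np.ndarray:
    """Steps (1)-(2): brightest 0.1% of the dark channel, then the maximum of their image values."""
    n = max(1, int(dark.size * top_fraction))
    idx = np.argsort(dark.ravel())[-n:]   # positions of the n brightest dark-channel pixels
    candidates = img.reshape(-1, 3)[idx]  # step (1): their values I in the input image
    return candidates.max(axis=0)         # step (2): maximum, taken per channel (assumption)


def transmission(img: np.ndarray, A: np.ndarray, patch: int = 15, omega: float = 0.95) -> np.ndarray:
    """Standard dark-channel-prior estimate t = 1 - omega * dark(I / A).

    omega = 0.95 is a common default (assumption), not a value from this paper.
    """
    return 1.0 - omega * dark_channel(img / A, patch)
```

Bright, haze-free regions push the dark channel up, so the selected candidates come from the haziest or brightest part of the scene; restricting the search to the top 0.1% is what keeps a single bright object from being mistaken for atmospheric light.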

- (1) The filtering result at pixel $i$ can be expressed as a weighted average:

  $$Q_i = \sum_{j} W_{ij}(H)\, p_j$$

  where $i$ and $j$ are pixel indices, $H$ is the guided image, $p$ is the image before filtering (here, the transmittance map), $Q$ is the filtered output, and $W_{ij}$ is a kernel function determined by the guided image $H$. This function is independent of the image $p$ to be processed.

- (2) The guided filter assumes a local linear model between the guided image $H$ and the filtered output $Q$ in a two-dimensional window:

  $$Q_i = a_k H_i + b_k, \quad \forall i \in \omega_k \tag{7}$$

  where $a_k$ and $b_k$ are the coefficients of the linear function when the window is centered at pixel $k$, and $\omega_k$ is the current processing window; the condition $\forall i \in \omega_k$ means that every pixel in the current window satisfies Equation (7).

- (3) The coefficients $a_k$ and $b_k$ of the fast guided filter are chosen to minimize the difference between the image before filtering, $p$, and the filtered output $Q$:

  $$E(a_k, b_k) = \sum_{i \in \omega_k} \left[ \left(a_k H_i + b_k - p_i\right)^2 + \epsilon a_k^2 \right]$$

  which gives

  $$a_k = \frac{\frac{1}{|\omega|}\sum_{i \in \omega_k} H_i p_i - \mu_k \bar{p}_k}{\sigma_k^2 + \epsilon}, \qquad b_k = \bar{p}_k - a_k \mu_k$$

  where $\mu_k$ and $\sigma_k^2$ are the mean and variance of the pixel intensity of $H$ in the window $\omega_k$, $|\omega|$ is the number of pixels in the window, $\bar{p}_k$ is the mean of $p$ over the pixels in the window, and $\epsilon$ is a small regularization constant that prevents $a_k$ from becoming too large.
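Steps (1) to (3) can be sketched with box filters, since every quantity in the coefficient formulas is a window mean. This minimal NumPy version is the plain guided filter. The fast guided filter runs the same steps on a subsampled copy of the guidance and input images and then upsamples the averaged coefficients; that subsampling is omitted here. Edge padding at the borders, the radius r = 8, and ε = 1e-3 are assumptions. The final averaging of the coefficients a and b over all windows that contain a pixel follows He et al.'s guided filter and is not stated above. The names `box_mean` and `guided_filter` are hypothetical.

```python
import numpy as np


def box_mean(x: np.ndarray, r: int) -> np.ndarray:
    """Mean over a (2r+1) x (2r+1) window centered on each pixel, via a summed-area table."""
    k = 2 * r + 1
    padded = np.pad(x, r, mode="edge")  # edge padding at the borders (assumption)
    # sat[i, j] = sum of padded[:i, :j]; the extra zero row/column makes window sums simple differences
    sat = np.pad(padded.cumsum(0).cumsum(1), ((1, 0), (1, 0)))
    window_sum = sat[k:, k:] - sat[:-k, k:] - sat[k:, :-k] + sat[:-k, :-k]
    return window_sum / (k * k)


def guided_filter(H: np.ndarray, p: np.ndarray, r: int = 8, eps: float = 1e-3) -> np.ndarray:
    """Filter p (e.g. a transmittance map) using the guidance image H."""
    mean_H = box_mean(H, r)                           # mu_k
    mean_p = box_mean(p, r)                           # p_bar_k
    var_H = box_mean(H * H, r) - mean_H ** 2          # sigma_k^2
    cov_Hp = box_mean(H * p, r) - mean_H * mean_p     # (1/|w|) sum H_i p_i - mu_k * p_bar_k
    a = cov_Hp / (var_H + eps)                        # a_k
    b = mean_p - a * mean_H                           # b_k
    # Each pixel lies in many windows: average their (a_k, b_k), then apply the linear model
    return box_mean(a, r) * H + box_mean(b, r)
```

Because the cost of each `box_mean` call does not depend on r, the filter runs in time linear in the number of pixels, which is the property that makes guided filtering attractive for refining transmittance maps.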

2.2.3.2. Edge Features

2.2.3.3. Extraction of Local Contrast

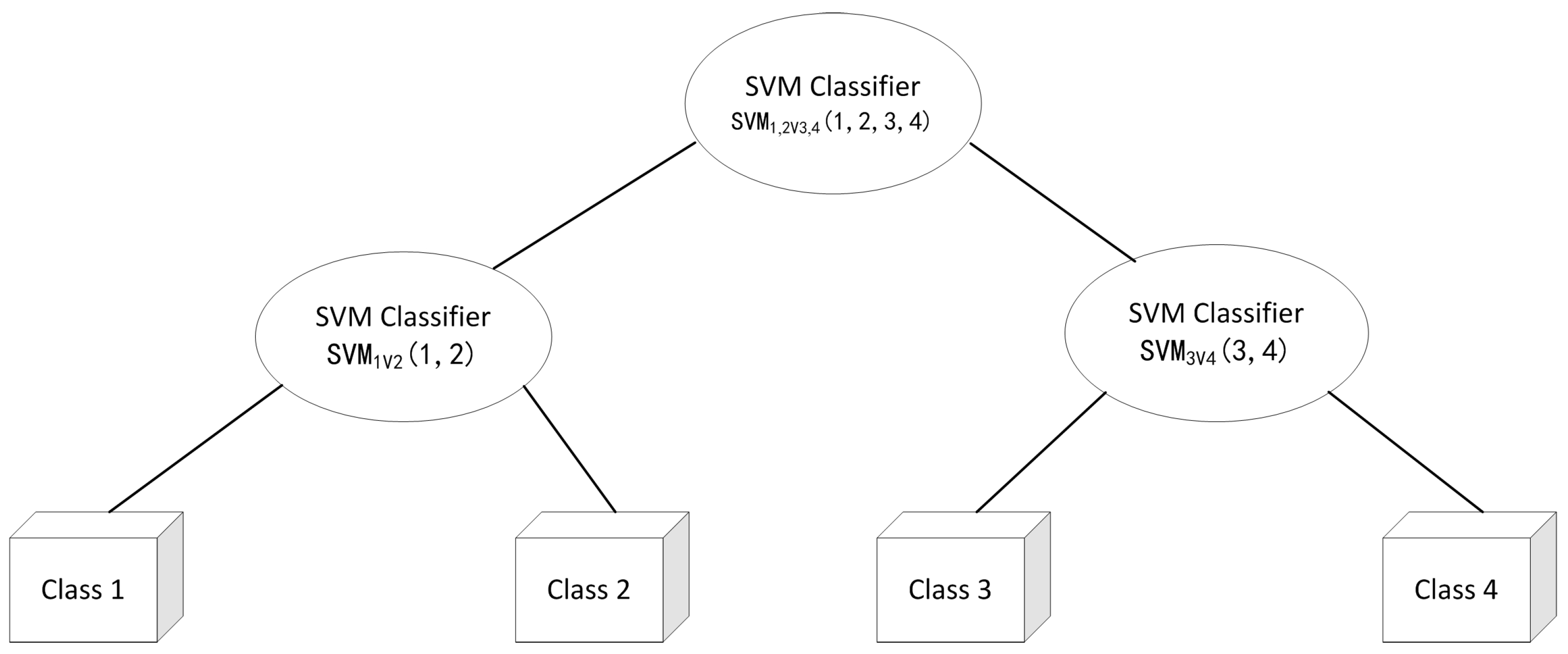

2.2.4. Multi-Classification Model Based on Binary Tree SVM

- Step 1: Extract the feature vectors of the images of the four visibility levels. Starting from root node 1, evaluate the SVM (serial number) and choose the next node according to its output value. If the visibility level of the image is Class 1 or Class 2, go to the left child node (serial number) of layer 2; if it is Class 3 or Class 4, go to the right child node (serial number) of layer 2.

- Step 2: At the left child node, evaluate the classifier (serial number). If its output is positive, the image belongs to Class 1; otherwise, it belongs to Class 2.

- Step 3: At the right child node, evaluate the classifier (serial number). If its output is positive, the image belongs to Class 3; otherwise, it belongs to Class 4.
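The three steps can be sketched in NumPy as follows. This is a minimal illustration, not the authors' implementation. The linear SVMs are trained with a Pegasos-style subgradient method on the hinge loss. That solver is swapped in for illustration; the kernel and solver used in the paper are not given here. A positive root output is assumed to mean "go left". The grouping {1, 2} vs {3, 4} is fixed rather than searched for as in an optimal binary tree. The names `train_linear_svm`, `decision`, and `BinaryTreeSVM` are hypothetical.

```python
import numpy as np


def train_linear_svm(X, y, lam=0.01, epochs=200, seed=0):
    """Linear SVM trained by Pegasos-style subgradient descent on the hinge loss.

    y must contain -1/+1 labels. A constant 1 feature is appended so the last
    weight acts as the bias (it is regularized too, a simplification).
    """
    rng = np.random.default_rng(seed)
    Xb = np.hstack([X, np.ones((len(X), 1))])
    w = np.zeros(Xb.shape[1])
    t = 0
    for _ in range(epochs):
        for i in rng.permutation(len(Xb)):
            t += 1
            eta = 1.0 / (lam * t)
            violates = y[i] * (Xb[i] @ w) < 1  # margin check uses the current w
            w *= 1.0 - eta * lam               # shrink step from the L2 regularizer
            if violates:
                w += eta * y[i] * Xb[i]        # hinge-loss subgradient step
    return w


def decision(w, X):
    """Signed SVM output w . [x, 1] for each row of X."""
    return np.hstack([X, np.ones((len(X), 1))]) @ w


class BinaryTreeSVM:
    """Three binary SVMs arranged as in Steps 1-3 (fixed grouping {1,2} vs {3,4})."""

    def fit(self, X, y):
        X, y = np.asarray(X, dtype=float), np.asarray(y)
        left = np.isin(y, [1, 2])
        self.w_root = train_linear_svm(X, np.where(left, 1, -1))                      # {1,2} vs {3,4}
        self.w_left = train_linear_svm(X[left], np.where(y[left] == 1, 1, -1))      # 1 vs 2
        self.w_right = train_linear_svm(X[~left], np.where(y[~left] == 3, 1, -1))   # 3 vs 4
        return self

    def predict(self, X):
        X = np.asarray(X, dtype=float)
        go_left = decision(self.w_root, X) > 0                      # Step 1
        left_cls = np.where(decision(self.w_left, X) > 0, 1, 2)     # Step 2
        right_cls = np.where(decision(self.w_right, X) > 0, 3, 4)   # Step 3
        # All three SVMs are evaluated for vectorization; keeping only the branch chosen
        # at the root gives the same result as walking the tree sample by sample.
        return np.where(go_left, left_cls, right_cls)
```

In practice a library SVM (for example, scikit-learn's `SVC`) would likely be used at each node; the routing logic in `predict` stays the same. Each image passes through only two classifiers, compared with three pairwise evaluations per class pair in a one-to-one scheme.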

3. Results and Discussion

3.1. Results of Visibility Level Recognition Based on the Proposed Method

3.2. Comparison between the Proposed Method and Traditional Methods

4. Conclusions

- (1) The ROI is extracted from the saliency map computed in the frequency domain of the image. Because this region is the most salient part of the image, it captures the image's key features, so the feature values extracted from the ROI are easy to distinguish.

- (2) The transmittance features extracted with the dark channel prior are computed separately for the R, G, and B channels, so they can reflect slight differences between air visibility levels. In addition, fast guided filtering is used to refine the transmittance map, which makes the transmittance features more distinguishable across visibility levels.

- (3) This paper constructs a model for recognizing air visibility levels based on the optimal binary tree SVM. Once the optimal binary tree is built and its three SVMs are computed, four air visibility levels can be recognized. With cross-validation, the recognition accuracies for good air quality, mild pollution, moderate pollution, and heavy pollution are 92.00%, 92.00%, 88.00%, and 100.00%, respectively, with an average recognition accuracy of 93.00%. The method therefore recognizes the four air visibility levels with relatively high accuracy.
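The average recognition accuracy in (3) is consistent with an unweighted mean of the four single-level accuracies (equal weighting of the levels is an assumption here, since the averaging rule is not stated):

$$\bar{A} = \frac{92.00 + 92.00 + 88.00 + 100.00}{4} = \frac{372.00}{4} = 93.00\%$$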

Author Contributions

Funding

Conflicts of Interest


| Levels of Visibility | Edge Features | Local Contrast | R Channel Transmittance | G Channel Transmittance | B Channel Transmittance |
|---|---|---|---|---|---|
| good air quality | 2009.631 | 0.002457 | 0.40 | 0.40 | 0.43 |
| mild pollution | 461.629 | 0.001429 | 0.39 | 0.39 | 0.40 |
| moderate pollution | 645.9079 | 0.001429 | 0.40 | 0.38 | 0.39 |
| heavy pollution | 730.431 | 0.002457 | 0.34 | 0.33 | 0.35 |
| good air quality | 2002.341 | 0.002457 | 0.40 | 0.40 | 0.42 |
| mild pollution | 460.9177 | 0.001314 | 0.37 | 0.36 | 0.37 |
| moderate pollution | 708.7448 | 0.001714 | 0.36 | 0.35 | 0.35 |
| heavy pollution | 731.2064 | 0.0024 | 0.37 | 0.34 | 0.33 |

| Classifier Recognition Accuracy | Good Air Quality | Heavy Pollution | Mild Pollution | Moderate Pollution |
|---|---|---|---|---|
| Classifier SVM1,2V3,4 recognition accuracy (%) | 96.00 | 100.00 | 96.00 | 92.00 |
| Classifier SVM1V2 recognition accuracy (%) | 92.00 | 100.00 | NA | NA |
| Classifier SVM3V4 recognition accuracy (%) | NA | NA | 92.00 | 88.00 |
| Single level recognition accuracy (%) | 92.00 | 100.00 | 92.00 | 88.00 |
| Average recognition accuracy (%) | 93.00 | | | |

| Method | Training Time (s) | Recognition Rate (%) |
|---|---|---|
| one-to-one SVM | 6.5 | 88.00 |
| one-to-many SVM | 6.7 | 90.00 |
| proposed method | 6.0 | 93.00 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zheng, N.; Luo, M.; Zou, X.; Qiu, X.; Lu, J.; Han, J.; Wang, S.; Wei, Y.; Zhang, S.; Yao, H. A Novel Method for the Recognition of Air Visibility Level Based on the Optimal Binary Tree Support Vector Machine. Atmosphere 2018, 9, 481. https://doi.org/10.3390/atmos9120481
