Meteorological Modeling Using the WRF-ARW Model for Grand Bay Intensive Studies of Atmospheric Mercury

Abstract

1. Introduction

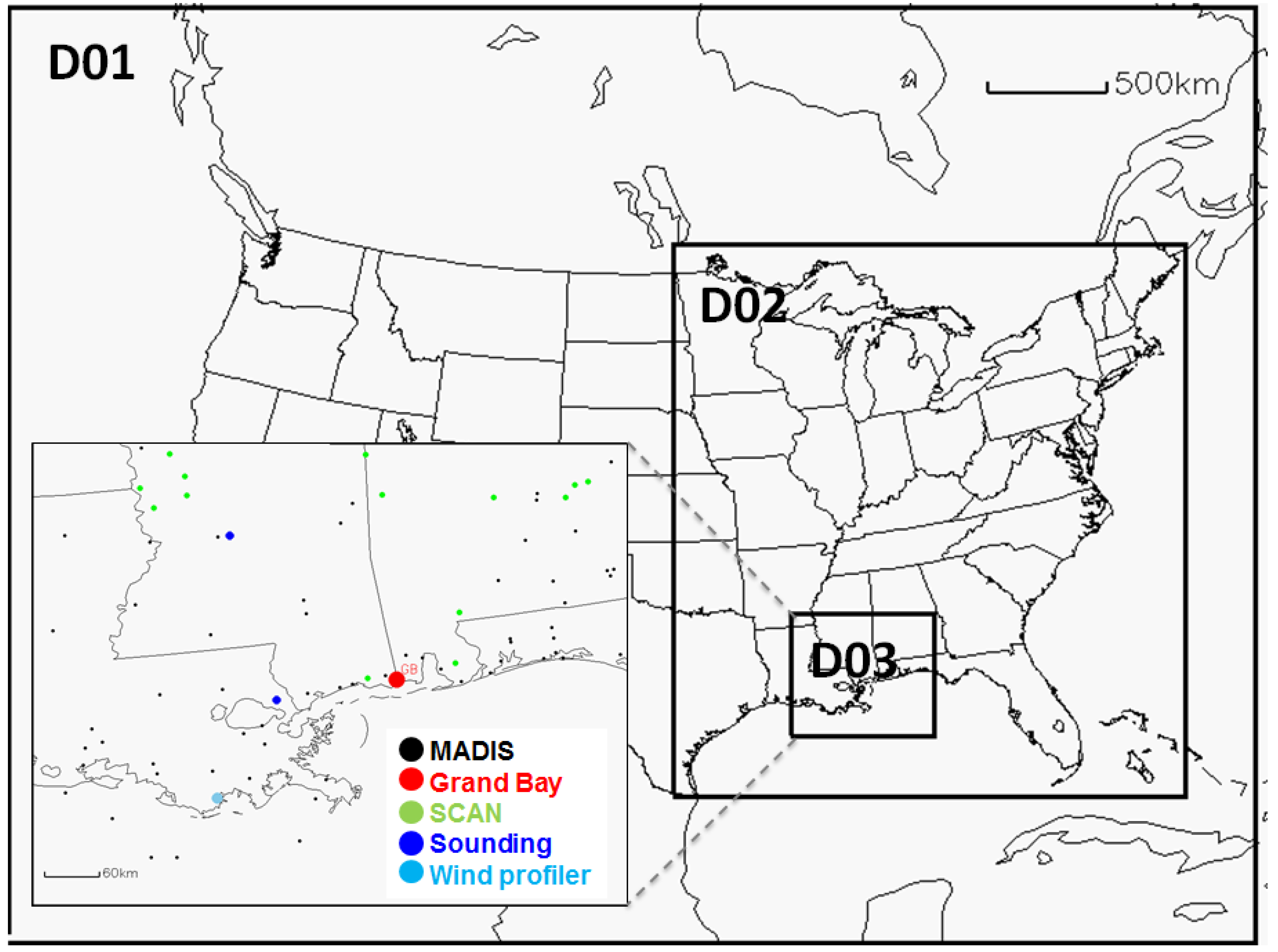

2. Experimental Section

2.1. Configuration of the WRF Model

2.2. WRF Simulation Designs

2.2.1. Reanalysis Data for WRF Model Initialization

2.2.2. Nudging Procedure

2.3. Observation Data for Model Evaluations

2.4. Backward Trajectory Analysis

2.5. Overview of Grand Bay Intensive Measurements Periods

3. Results and Discussion

3.1. Meteorological Modeling for Summer 2010

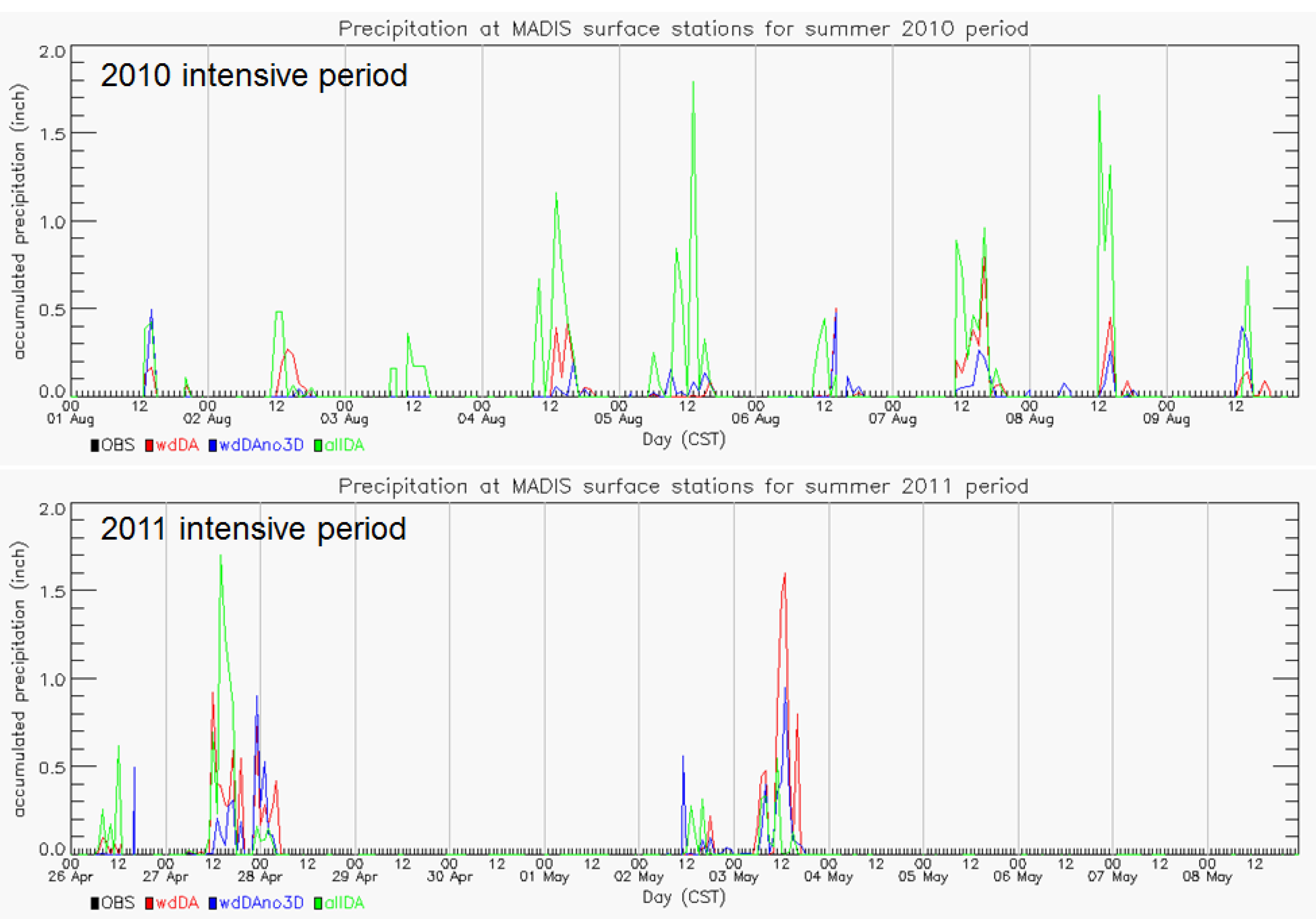

3.1.1. Regional Evaluations

| Variable | IC/LBC | Nudging | R | Bias | RMSE | MAE | SDE | IOA |
|---|---|---|---|---|---|---|---|---|
| Wind speed (m·s−1), 17,447 samples | WRF-NARR | allDA | 0.684 | −0.195 | 1.127 | 0.842 | 1.285 | 0.819 |
| | WRF-NARR | wdDAno3D | 0.617 | 0.022 | 1.222 | 0.938 | 1.554 | 0.783 |
| | WRF-NARR | wdDA | 0.716 | −0.223 | 1.049 | 0.797 | 1.176 | 0.831 |
| | WRF-GFS | wdDA | 0.756 | −0.339 | 1.009 | 0.757 | 1.038 | 0.842 |
| | WRF-NNRP | wdDA | 0.721 | −0.340 | 1.069 | 0.799 | 1.113 | 0.821 |
| | WRF-CFSR | wdDA | 0.738 | −0.333 | 1.037 | 0.777 | 1.078 | 0.831 |
| Wind direction (degree), 16,247 samples | WRF-NARR | allDA | 0.719 | −7.396 | 70.223 | 36.123 | 75.511 | 0.850 |
| | WRF-NARR | wdDAno3D | 0.665 | −6.122 | 76.290 | 42.114 | 84.132 | 0.821 |
| | WRF-NARR | wdDA | 0.731 | −5.565 | 68.692 | 35.011 | 74.529 | 0.857 |
| | WRF-GFS | wdDA | 0.765 | −5.504 | 65.571 | 30.246 | 69.867 | 0.878 |
| | WRF-NNRP | wdDA | 0.729 | −2.319 | 68.984 | 33.354 | 75.608 | 0.858 |
| | WRF-CFSR | wdDA | 0.744 | −5.693 | 67.782 | 32.612 | 72.710 | 0.866 |
| Temperature (°C), 25,585 samples | WRF-NARR | allDA | 0.940 | −0.097 | 1.225 | 0.869 | 1.444 | 0.966 |
| | WRF-NARR | wdDAno3D | 0.830 | −0.212 | 1.992 | 1.505 | 2.366 | 0.901 |
| | WRF-NARR | wdDA | 0.850 | 0.093 | 1.871 | 1.361 | 2.368 | 0.915 |
| | WRF-GFS | wdDA | 0.857 | −0.356 | 1.872 | 1.410 | 2.119 | 0.919 |
| | WRF-NNRP | wdDA | 0.853 | 0.171 | 1.864 | 1.353 | 2.401 | 0.853 |
| | WRF-CFSR | wdDA | 0.864 | −0.226 | 1.804 | 1.347 | 2.112 | 0.923 |
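The statistics reported in the table above (R, Bias, RMSE, MAE, IOA) can be illustrated with a minimal sketch. The definitions used here are the conventional ones (Pearson correlation, mean error, root mean square error, mean absolute error, and Willmott's index of agreement) and are an assumption, since the formulas are not reproduced in this section. SDE is omitted because its definition is not given here. The function names `wrap_direction_error` and `evaluation_stats` are hypothetical, and wrapping wind-direction differences into [−180°, 180°) is one common convention that may differ from the treatment used for the tabulated values.

```python
import math


def wrap_direction_error(diff_deg):
    """Wrap a wind-direction difference into [-180, 180) degrees."""
    return (diff_deg + 180.0) % 360.0 - 180.0


def evaluation_stats(model, obs, circular=False):
    """Return paired model-versus-observation statistics.

    model, obs: equal-length sequences of floats (paired samples).
    circular:   True for wind direction in degrees; errors are then wrapped,
                and R and IOA are skipped (set to None) in this sketch.
    """
    n = len(obs)
    errors = [m - o for m, o in zip(model, obs)]
    if circular:
        errors = [wrap_direction_error(e) for e in errors]

    stats = {
        "bias": sum(errors) / n,                          # mean error
        "rmse": math.sqrt(sum(e * e for e in errors) / n),
        "mae": sum(abs(e) for e in errors) / n,
        "r": None,
        "ioa": None,
    }
    if circular:
        return stats

    mean_m = sum(model) / n
    mean_o = sum(obs) / n
    cov = sum((m - mean_m) * (o - mean_o) for m, o in zip(model, obs))
    var_m = sum((m - mean_m) ** 2 for m in model)
    var_o = sum((o - mean_o) ** 2 for o in obs)
    stats["r"] = cov / math.sqrt(var_m * var_o)           # Pearson correlation

    # Willmott index of agreement (assumed definition):
    # 1 - sum((M - O)^2) / sum((|M - mean(O)| + |O - mean(O)|)^2)
    denom = sum((abs(m - mean_o) + abs(o - mean_o)) ** 2
                for m, o in zip(model, obs))
    stats["ioa"] = 1.0 - sum(e * e for e in errors) / denom
    return stats
```

For wind direction, a simulated 350° against an observed 10° gives an error of −20° rather than 340°, which keeps the bias and RMSE from being inflated by the 0°/360° discontinuity.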

| ICBC | Nudging | Wind Speed (m·s−1), 3806 samples | Wind Direction (degree), 3961 samples | Temperature (°C), 4158 samples | Relative Humidity (%), 4173 samples |
|---|---|---|---|---|---|
| WRF-NARR | allDA | 1.180 | 61.425 | 2.230 | 8.860 |
| WRF-NARR | wdDAno3D | 1.222 | 60.476 | 1.858 | 7.663 |
| WRF-NARR | wdDA | 1.171 | 59.629 | 2.482 | 8.912 |
| WRF-GFS | wdDA | 1.251 | 60.680 | 1.651 | 8.334 |
| WRF-NNRP | wdDA | 1.366 | 61.566 | 1.787 | 9.246 |
| WRF-CFSR | wdDA | 1.207 | 58.772 | 2.021 | 8.806 |

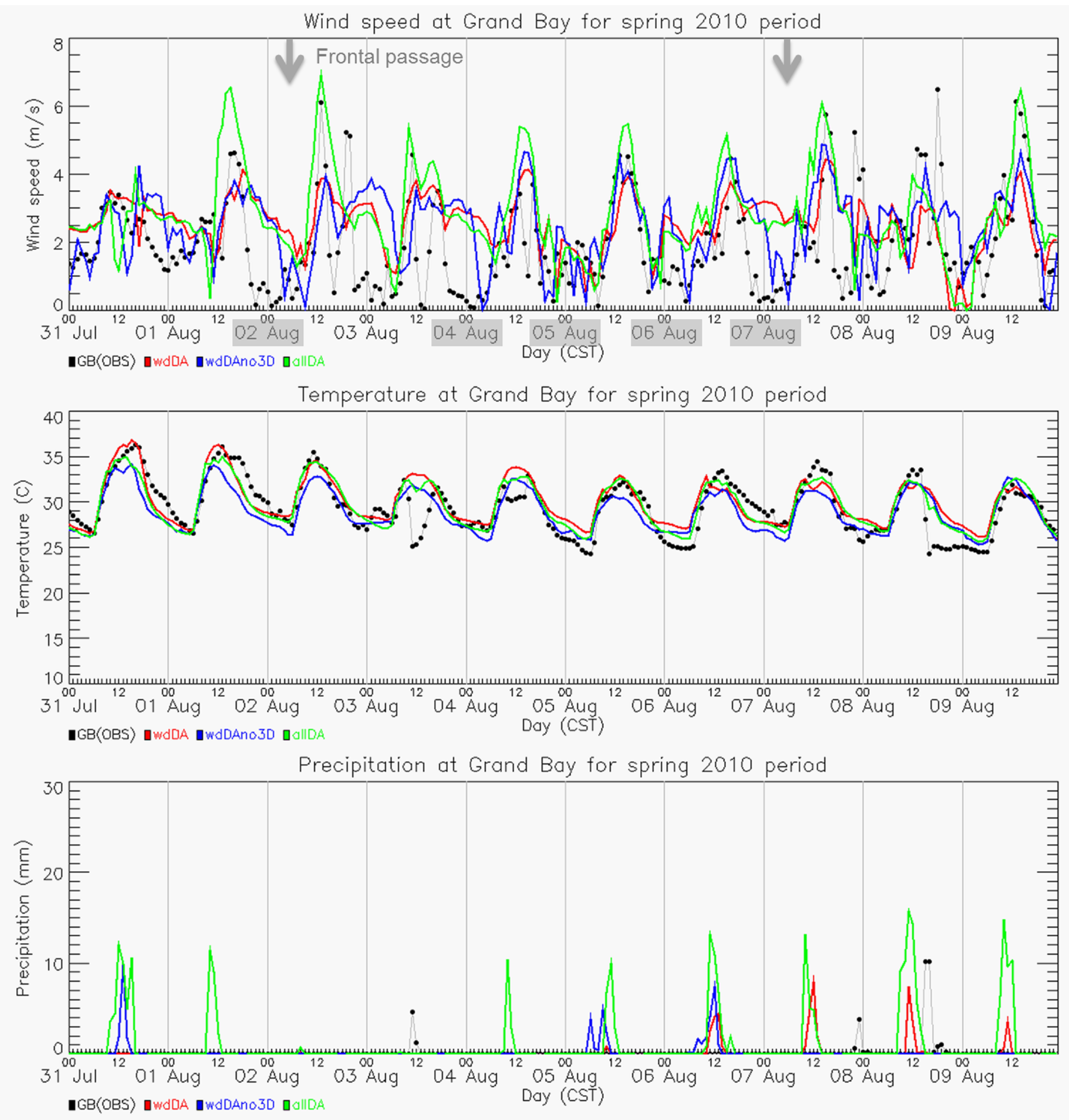

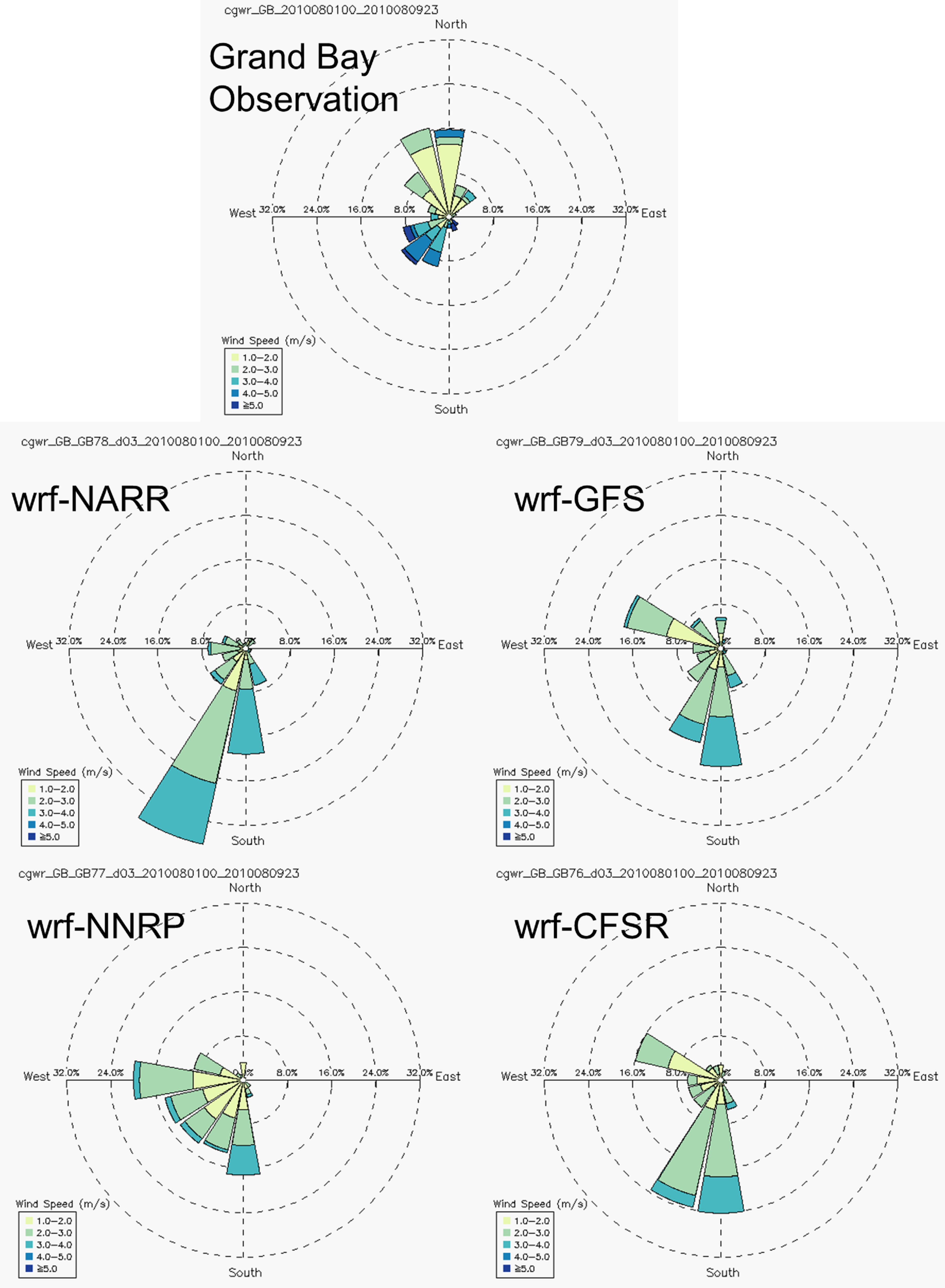

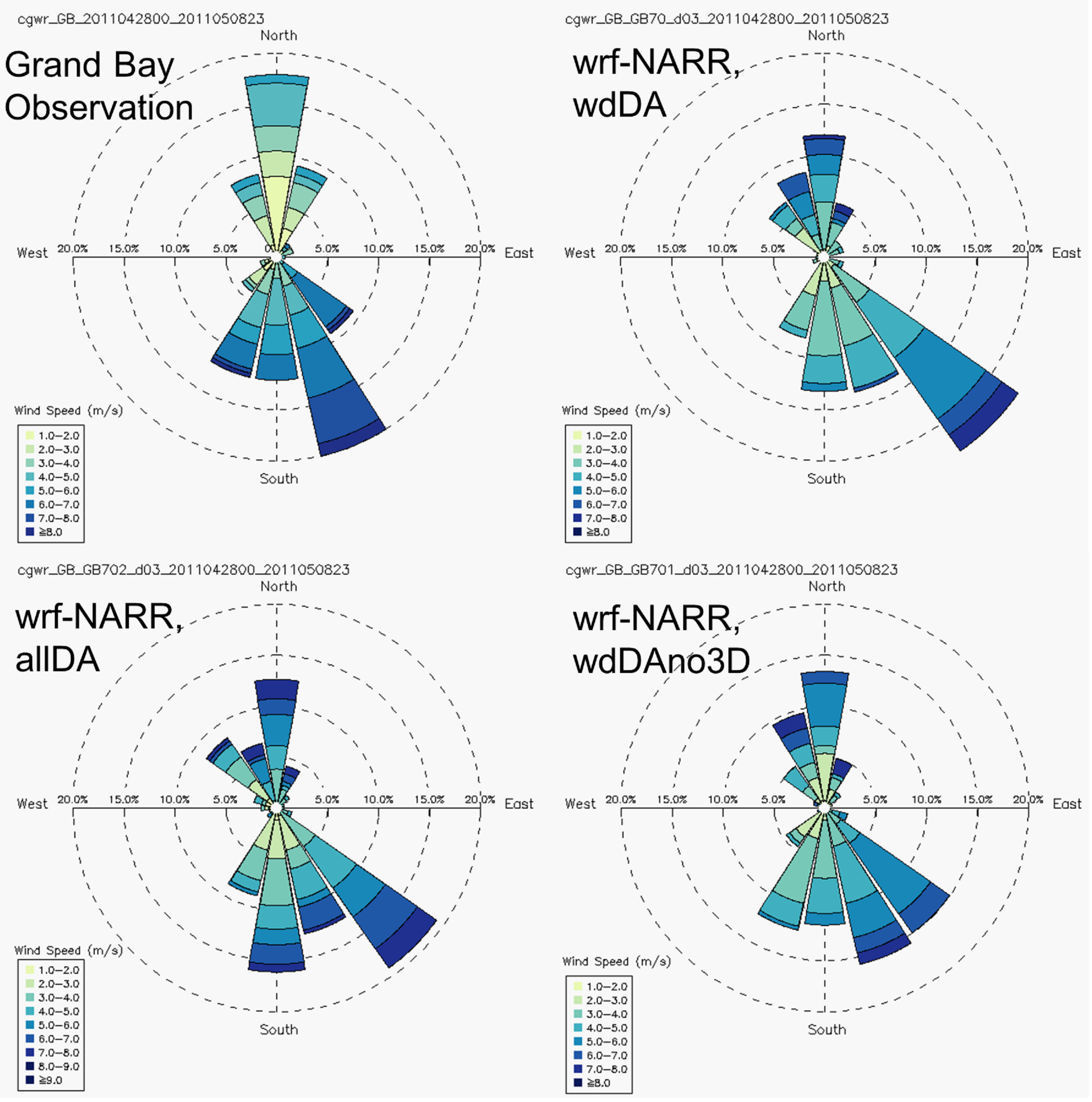

3.1.2. Grand Bay Station Analysis

| ICBC | Nudging | Wind Speed (m·s−1), 392 samples | Wind Direction (degree), 392 samples | Temperature (°C), 392 samples | Relative Humidity (%), 392 samples |
|---|---|---|---|---|---|
| WRF-NARR | allDA | 1.641 | 33.274 | 0.636 | 10.854 |
| WRF-NARR | wdDAno3D | 1.656 | 41.510 | 0.780 | 14.003 |
| WRF-NARR | wdDA | 1.613 | 32.391 | 0.652 | 11.254 |
| WRF-GFS | wdDA | 1.548 | 31.722 | 0.607 | 15.003 |
| WRF-NNRP | wdDA | 2.054 | 33.469 | 0.731 | 9.671 |
| WRF-CFSR | wdDA | 1.898 | 30.434 | 0.603 | 13.425 |

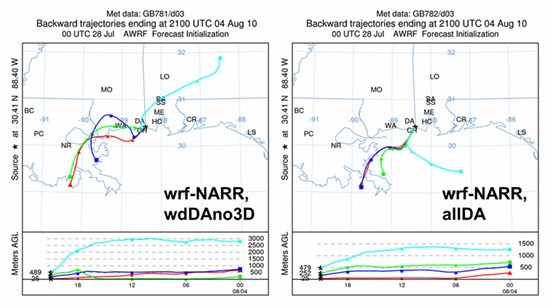

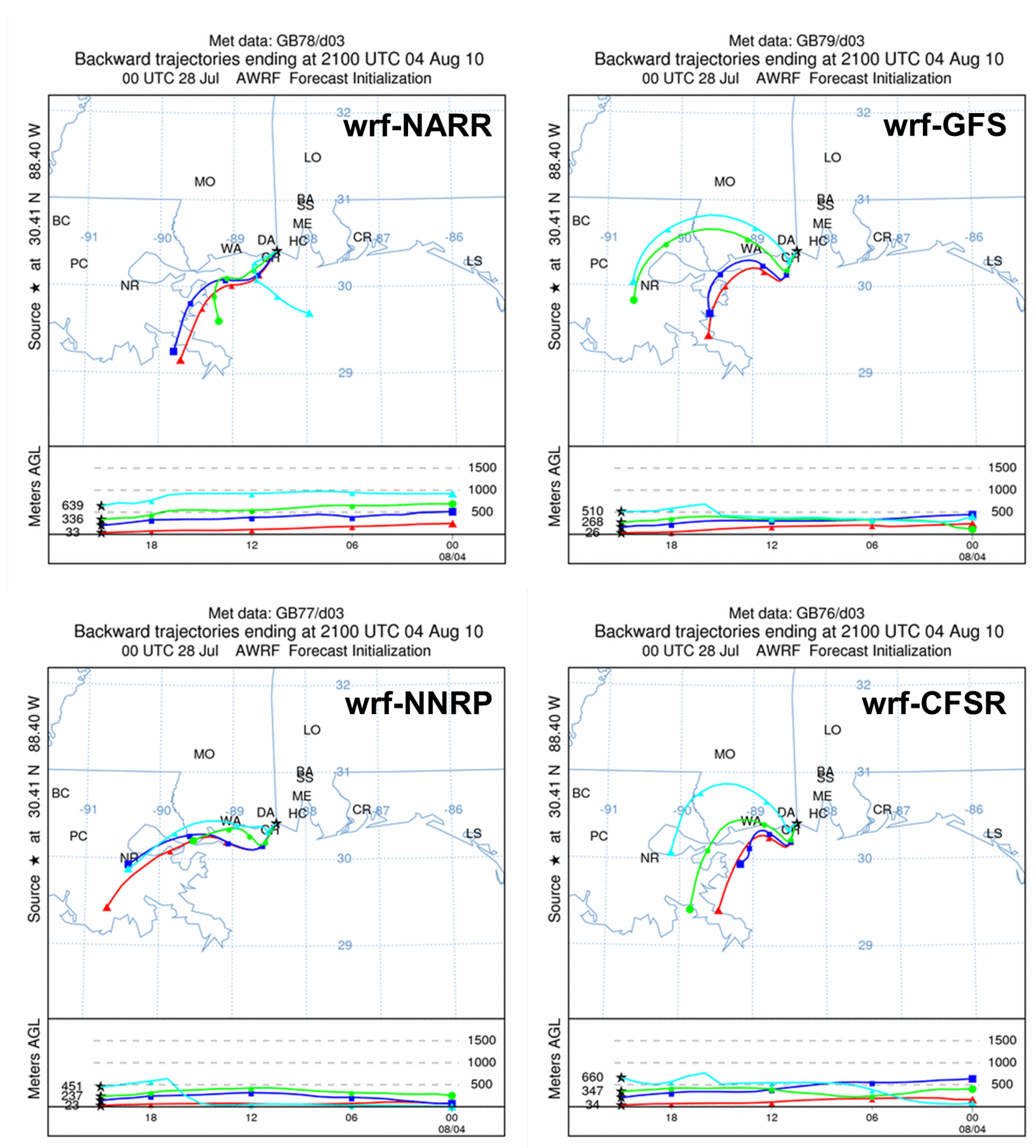

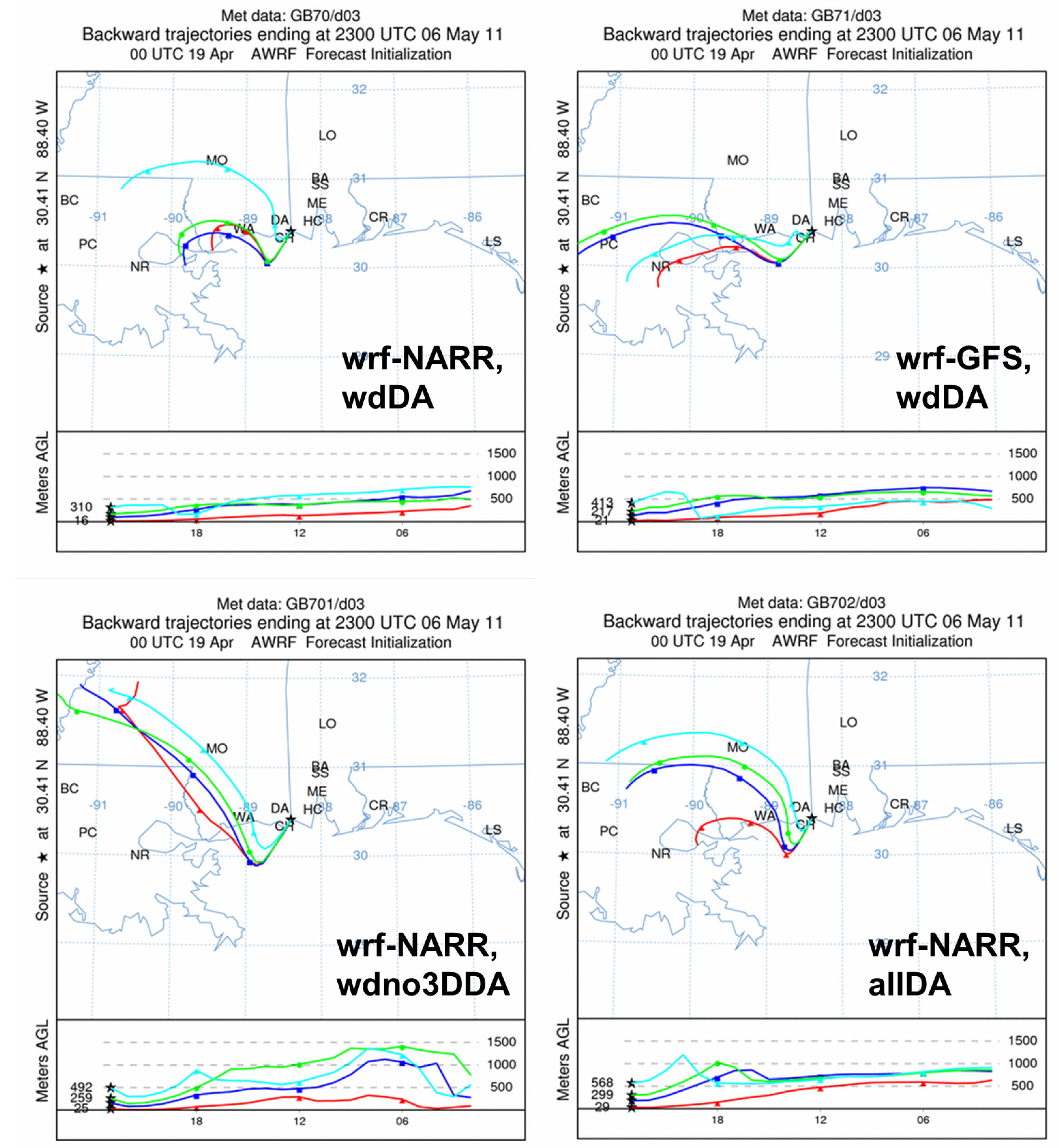

3.1.3. Backward Trajectory Analysis

3.2. Meteorological Modeling for Spring 2011

3.2.1. Regional Evaluations

3.2.2. Grand Bay Station Analysis

| Variable | IC/LBC | Nudging | R | Bias | RMSE | MAE | SDE | IOA |
|---|---|---|---|---|---|---|---|---|
| Wind speed (m·s−1), 2768 samples | WRF-NARR | allDA | 0.723 | −0.268 | 2.068 | 1.565 | 2.426 | 0.835 |
| | WRF-NARR | wdDAno3D | 0.725 | −0.407 | 2.070 | 1.571 | 2.340 | 0.819 |
| | WRF-NARR | wdDA | 0.738 | −0.401 | 2.030 | 1.546 | 2.296 | 0.832 |
| | WRF-GFS | wdDA | 0.656 | −1.339 | 2.617 | 1.966 | 2.335 | 0.627 |
| Wind direction (degree), 2834 samples | WRF-NARR | allDA | 0.513 | 2.688 | 75.448 | 35.057 | 84.320 | 0.744 |
| | WRF-NARR | wdDAno3D | 0.400 | 1.244 | 85.365 | 41.831 | 95.609 | 0.683 |
| | WRF-NARR | wdDA | 0.502 | 1.477 | 76.431 | 35.584 | 84.930 | 0.737 |
| | WRF-GFS | wdDA | 0.471 | 8.029 | 80.530 | 39.094 | 92.958 | 0.718 |
| Temperature (°C), 2844 samples | WRF-NARR | allDA | 0.922 | 0.466 | 2.262 | 1.816 | 3.179 | 0.957 |
| | WRF-NARR | wdDAno3D | 0.904 | 0.280 | 2.309 | 1.841 | 3.123 | 0.948 |
| | WRF-NARR | wdDA | 0.910 | 0.433 | 2.296 | 1.855 | 3.212 | 0.951 |
| | WRF-GFS | wdDA | 0.906 | −0.137 | 2.276 | 1.759 | 2.791 | 0.947 |
| RH (%), 2840 samples | WRF-NARR | allDA | 0.880 | 2.475 | 9.676 | 7.683 | 13.808 | 0.933 |
| | WRF-NARR | wdDAno3D | 0.838 | −0.389 | 12.237 | 9.520 | 15.263 | 0.908 |
| | WRF-NARR | wdDA | 0.847 | −1.357 | 12.399 | 9.649 | 14.855 | 0.909 |
| | WRF-GFS | wdDA | 0.837 | 4.237 | 11.879 | 9.414 | 17.593 | 0.901 |

| ICBC | Nudging | Wind Speed (m·s−1), 978 samples | Wind Direction (degree), 978 samples | Temperature (°C), 978 samples | Relative Humidity (%), 917 samples |
|---|---|---|---|---|---|
| WRF-NARR | allDA | 1.698 | 21.938 | 0.915 | 9.797 |
| WRF-NARR | wdDAno3D | 1.869 | 21.822 | 0.895 | 8.825 |
| WRF-NARR | wdDA | 1.683 | 22.059 | 0.838 | 8.575 |
| WRF-GFS | wdDA | 1.649 | 20.128 | 0.626 | 8.432 |

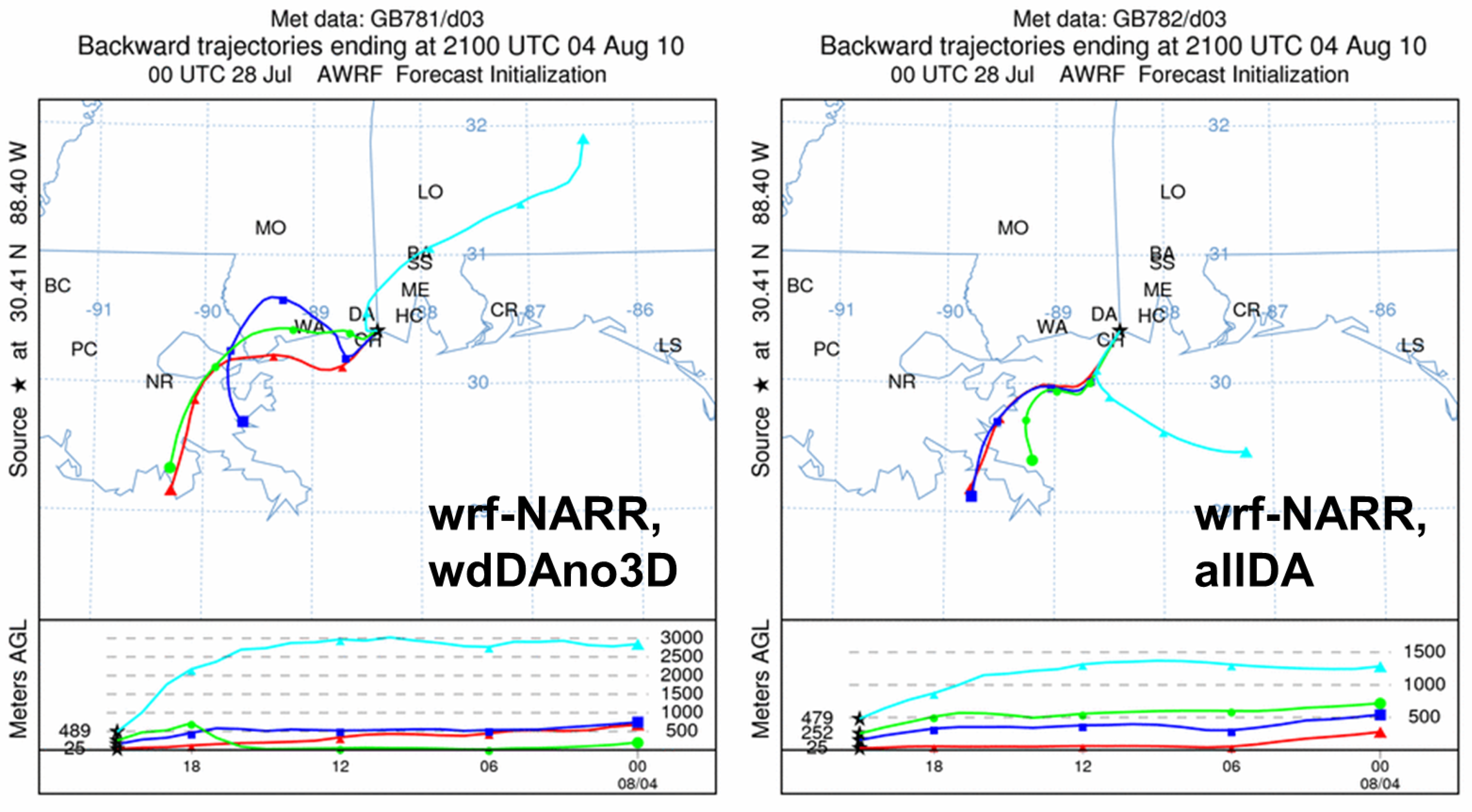

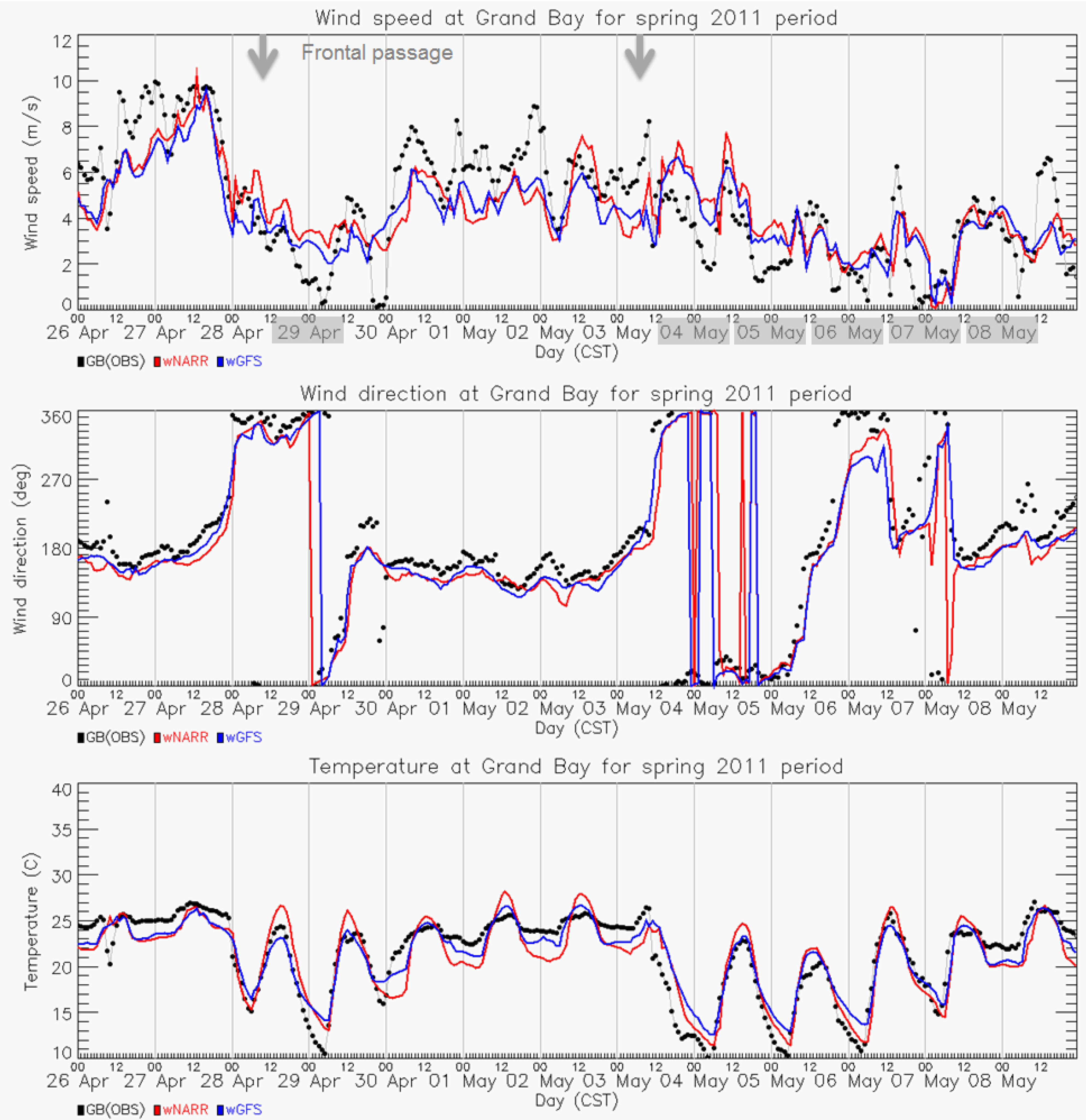

3.2.3. Backward Trajectory Analysis

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).


Ngan, F.; Cohen, M.; Luke, W.; Ren, X.; Draxler, R. Meteorological Modeling Using the WRF-ARW Model for Grand Bay Intensive Studies of Atmospheric Mercury. Atmosphere 2015, 6, 209-233. https://doi.org/10.3390/atmos6030209
