1. Introduction

Air pollution is a critical environmental challenge with far-reaching consequences for public health, ecological integrity, and socio-economic development [1,2]. As urbanization and industrialization intensify worldwide, the burden of airborne pollutants has become increasingly evident in both developed and developing regions. Pollutants such as fine particulate matter (PM2.5, PM10), nitrogen dioxide (NO2), and ground-level ozone (O3) contribute to a broad spectrum of health issues, ranging from respiratory and cardiovascular diseases to premature mortality [3,4,5]. These impacts are particularly severe for vulnerable groups, including children, the elderly, and those with preexisting health conditions. In addition to human health, air pollution affects agricultural yields [6], accelerates the deterioration of infrastructure [7,8], and contributes to climate change by altering atmospheric composition [9]. Addressing these multifaceted impacts requires not only mitigation strategies but also reliable tools for air quality forecasting to support early interventions and sustainable policy decisions.

Taiwan serves as a representative case of the broader challenges posed by air pollution. As a subtropical island with a dense population and extensive industrial and transportation activities, it faces persistent air quality issues that vary across regions and seasons [10,11]. In urban and industrial hubs such as Xitun District in Taichung City, air pollution stems from multiple sources, including vehicular emissions, industrial processes, and seasonal monsoonal winds that transport transboundary pollutants. During the winter months, atmospheric conditions (particularly temperature inversions) often trap pollutants near the surface, resulting in smog events and elevated public health risks [12]. Residents in these areas experience frequent fluctuations in air quality, creating a strong demand for accurate and timely forecasting systems. While traditional methods such as linear regression and autoregressive models have been applied to this task, they often fail to capture the nonlinear and dynamic characteristics of air pollution data [13]. Even classical machine learning algorithms such as support vector machines (SVM) and random forests (RF), though more flexible, struggle to model long-term temporal dependencies and the high variability of environmental conditions [14,15].

To address the limitations of both traditional and classical machine learning approaches, deep learning has emerged in recent years as a powerful alternative for environmental time series forecasting. Recurrent neural networks (RNNs), particularly long short-term memory (LSTM) and gated recurrent unit (GRU) architectures, are well-suited to capturing complex temporal dependencies in data [16,17]. These models can learn long-range patterns and nonlinear relationships without extensive manual feature engineering, making them especially suitable for air quality index (AQI) prediction [17,18]. However, one of the primary challenges in applying deep learning to real-world environmental datasets lies in the presence of noise, missing values, and non-stationary behavior, which can reduce model performance. To mitigate these issues, signal decomposition techniques such as empirical mode decomposition (EMD) and its improved variant, ensemble empirical mode decomposition (EEMD), have been employed to preprocess time series inputs [18]. These methods decompose complex signals into intrinsic mode functions (IMFs), enabling the models to learn from denoised and smoothed data representations. Despite these advancements, several research gaps remain. First, there is limited comparative analysis of how different decomposition methods, when integrated with deep learning architectures, affect AQI prediction accuracy [19]. Second, many existing studies rely on a limited number of pollutant variables, potentially omitting important environmental factors. Finally, while hybrid models have been proposed, few studies evaluate their robustness and generalizability using real-world multivariate air quality data from high-density urban environments.

To address existing gaps in air quality forecasting, this study investigates the following research questions:

Among long short-term memory (LSTM) and gated recurrent unit (GRU) architectures integrated with empirical mode decomposition (EMD) and ensemble empirical mode decomposition (EEMD), which hybrid model yields the highest predictive accuracy for air quality index (AQI) forecasting?

To what extent can deep learning models effectively process high-dimensional, nonlinear environmental datasets?

How can improved model accuracy support government agencies and enterprises in enhancing air pollution monitoring and control strategies?

Can accurate air quality index (AQI) predictions improve public health outcomes by enabling timely responses to pollution events?

To this end, we propose and evaluate a hybrid framework that combines EMD and EEMD with both LSTM and GRU models. Using a comprehensive dataset from Xitun District, including AQI and 18 pollutant and meteorological variables, we train and test various model configurations. Forecasting performance is evaluated using standard metrics: root mean squared error (RMSE), mean absolute error (MAE), mean absolute percentage error (MAPE), and the coefficient of determination (R²). The findings demonstrate that integrating signal decomposition techniques with deep learning models significantly improves AQI forecasting performance. Among all configurations, the GRU model combined with EEMD (EEMD-GRU) achieved the highest predictive accuracy, outperforming the other hybrid models in terms of RMSE, MAE, MAPE, and R². The EEMD preprocessing step effectively reduced data noise and improved stability, while the GRU model benefited from a simpler architecture and faster convergence compared to LSTM. By incorporating a wide range of environmental features, the proposed model captures intricate interactions influencing air quality in densely populated areas. These findings affirm the effectiveness of combining deep learning with signal decomposition for real-time AQI forecasting and suggest meaningful applications in public health alert systems and environmental policy planning. In addition, this study integrates automated hyperparameter optimization (Optuna) and statistical validation to further enhance robustness and ensure the reliability of the results.

The remainder of this paper is organized as follows:

Section 2 describes the study area and data sources.

Section 3 outlines the proposed methodology.

Section 4 presents the implementation and results.

Section 5 concludes the paper and discusses future research directions.

3. Methodology

This study selects Xitun District, Taichung City, Taiwan, as the research site due to its high population density, industrial presence, and traffic-related emissions. As home to the Central Taiwan Science Park and major transportation routes, Xitun experiences frequent air quality fluctuations [34], making it a representative urban area for AQI forecasting. The district’s established environmental monitoring stations provide high-quality, high-frequency data suitable for time series modeling. Accurate predictions in this context can help residents take preventive health measures and support government and industry efforts in formulating effective pollution control strategies.

3.1. Data Sources and Processing

3.1.1. Air Quality Index and Meteorological Data Collection

The air quality index (AQI) data used in this study were obtained from Taiwan’s Air Quality Monitoring Network, managed by the Ministry of Environment. The dataset covers Xitun District in Taichung City and spans the period from 1 January 2021 to 31 December 2023. The raw data were stored in Excel format, with each entry containing the following information:

Monitoring station name: The specific location where air quality measurements were recorded.

AQI value: The hourly air quality index, indicating the level of air pollution at a given time.

Date and time (datacreationdate): The timestamp of data generation, accurate to the hour.

Meteorological parameters used in this study are listed in Table 1. To analyze daily variations in air quality, the original hourly AQI data were aggregated into daily averages. The preprocessing included standardizing the timestamp format, extracting the date component, and grouping the data by day to calculate average AQI values. These steps were implemented using Python’s pandas library (version 2.2.2). The resulting daily dataset was exported in CSV format for use in subsequent modeling and analysis.
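The aggregation described above can be sketched in pandas as follows. This is a minimal illustration rather than the study's actual script: the column name `aqi`, the function name `hourly_to_daily`, the sample values, and the output path are assumptions, while `datacreationdate` is the timestamp field named in the text.

```python
import pandas as pd

def hourly_to_daily(df: pd.DataFrame) -> pd.DataFrame:
    """Aggregate hourly AQI records into daily mean values."""
    out = df.copy()
    # Standardize the timestamp format; unparseable entries become NaT and are dropped.
    out["datacreationdate"] = pd.to_datetime(out["datacreationdate"], errors="coerce")
    out = out.dropna(subset=["datacreationdate"])
    # Extract the date component and average the AQI within each day.
    out["date"] = out["datacreationdate"].dt.date
    daily = out.groupby("date", as_index=False)["aqi"].mean()
    return daily.rename(columns={"aqi": "aqi_daily_mean"})

# Tiny illustrative input (made-up values, not taken from the dataset).
hourly = pd.DataFrame({
    "datacreationdate": ["2021-01-01 00:00", "2021-01-01 01:00", "2021-01-02 00:00"],
    "aqi": [40, 60, 80],
})
daily = hourly_to_daily(hourly)
# daily.to_csv("aqi_daily.csv", index=False)  # hypothetical output path
```

Grouping on the extracted date keeps one row per calendar day, which is the shape the later modeling steps expect.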

3.1.2. Data Pre-Processing

Before model development, several pre-processing steps were conducted to ensure data consistency, accuracy, and suitability for analysis:

Missing value imputation: To handle missing values, linear interpolation, a standard method for time series data, was used. It estimates missing points from the adjacent known values, assuming a smooth trend between them, as shown in the equation below:

$$x_t = x_{t_1} + \frac{t - t_1}{t_2 - t_1}\left(x_{t_2} - x_{t_1}\right)$$

where $x_t$ is the imputed value at time $t$, and $x_{t_1}$ and $x_{t_2}$ are the known values at times $t_1$ and $t_2$, respectively.

Normalization and standardization: To address feature scale differences, Min-Max normalization was applied to the inputs of the deep learning models, rescaling them to the [0, 1] range:

$$x' = \frac{x - x_{\min}}{x_{\max} - x_{\min}}$$

where $x'$ is the normalized value, and $x_{\min}$ and $x_{\max}$ are the minimum and maximum of the feature. In contrast, Z-score standardization was used for models sensitive to feature variance, ensuring a mean of zero and a standard deviation of one:

$$z = \frac{x - \mu}{\sigma}$$

where $\mu$ and $\sigma$ represent the mean and standard deviation of feature $x$, respectively.

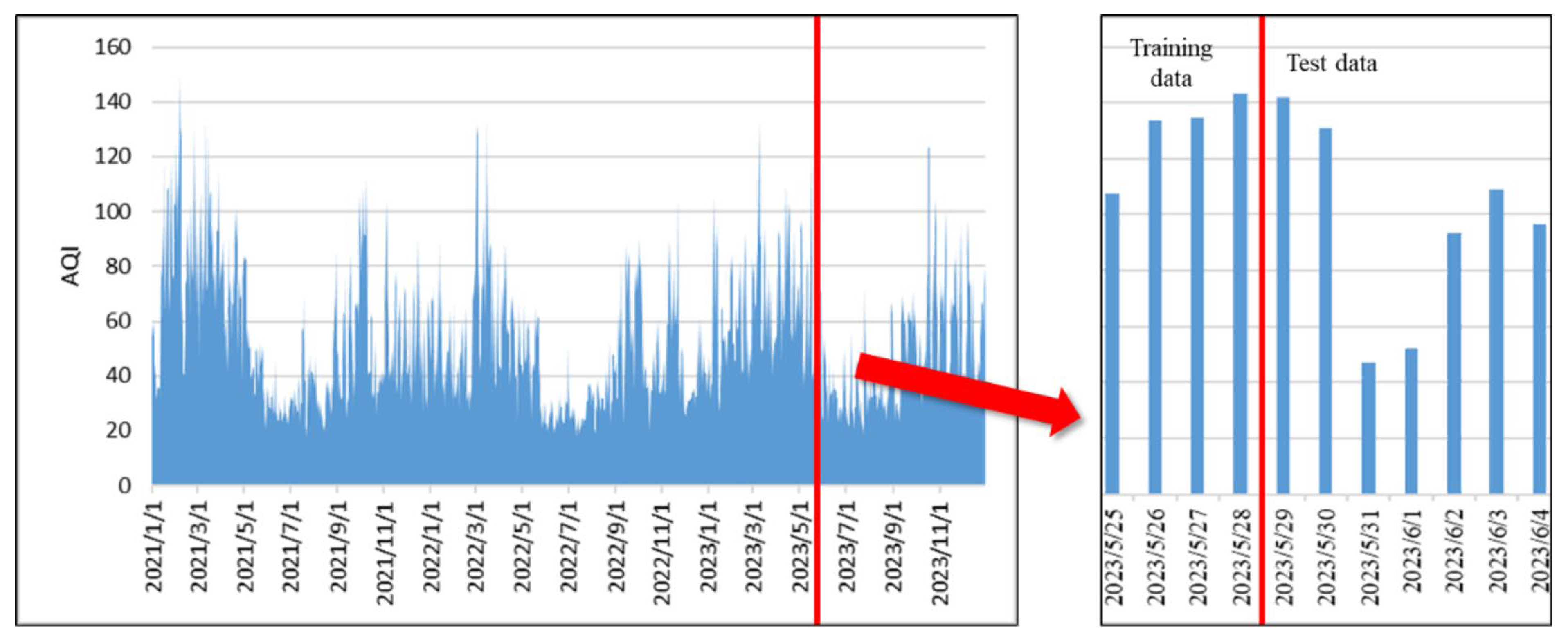

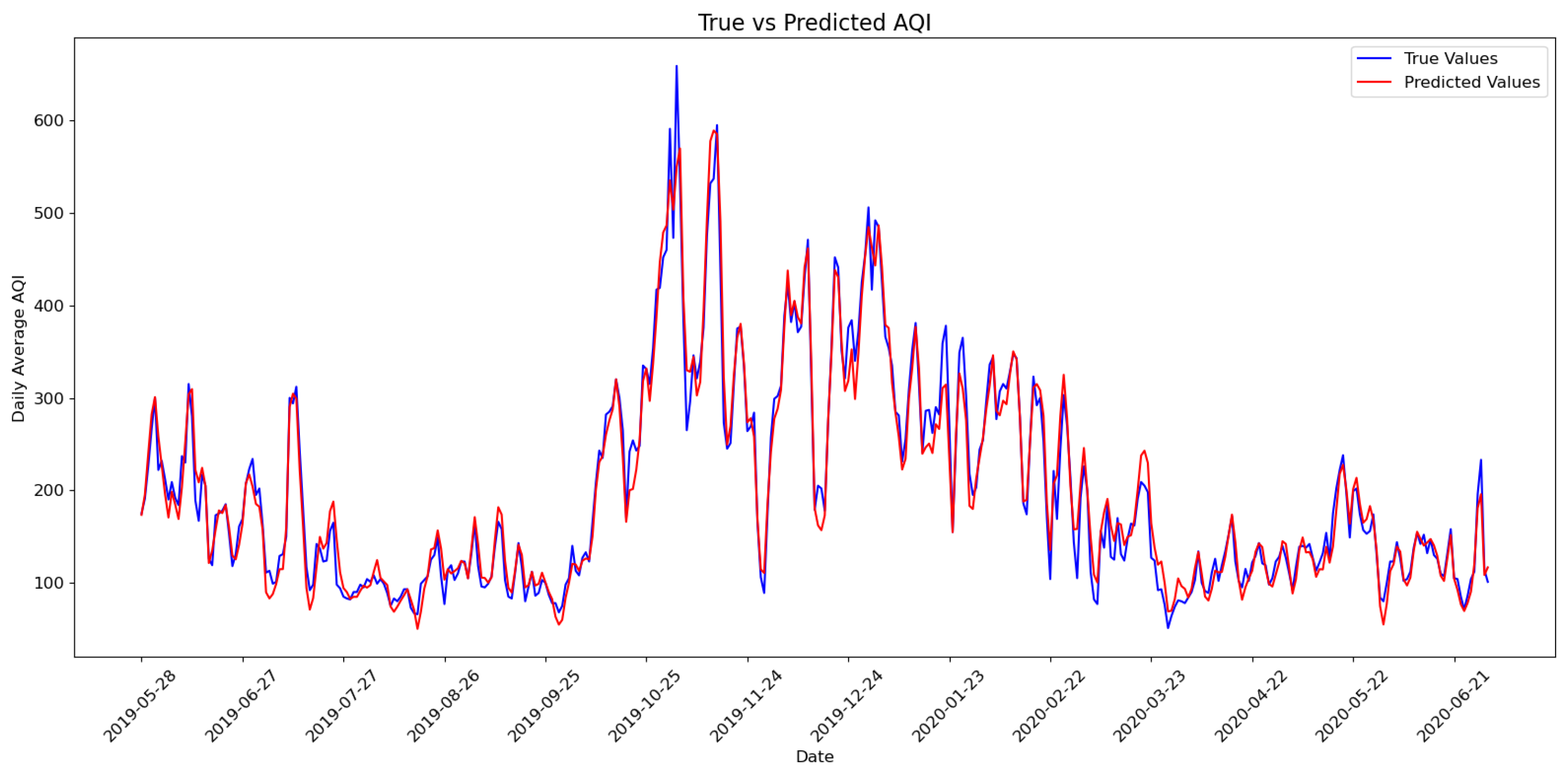

Data splitting: The dataset was split into training and testing sets in an 80:20 ratio to evaluate model performance on unseen data and reduce overfitting. The entire AQI time series used for model development is illustrated in Figure 1, which highlights both the full dataset (left) and the partitioning into training and testing sets (right), clearly marked by the red vertical line.

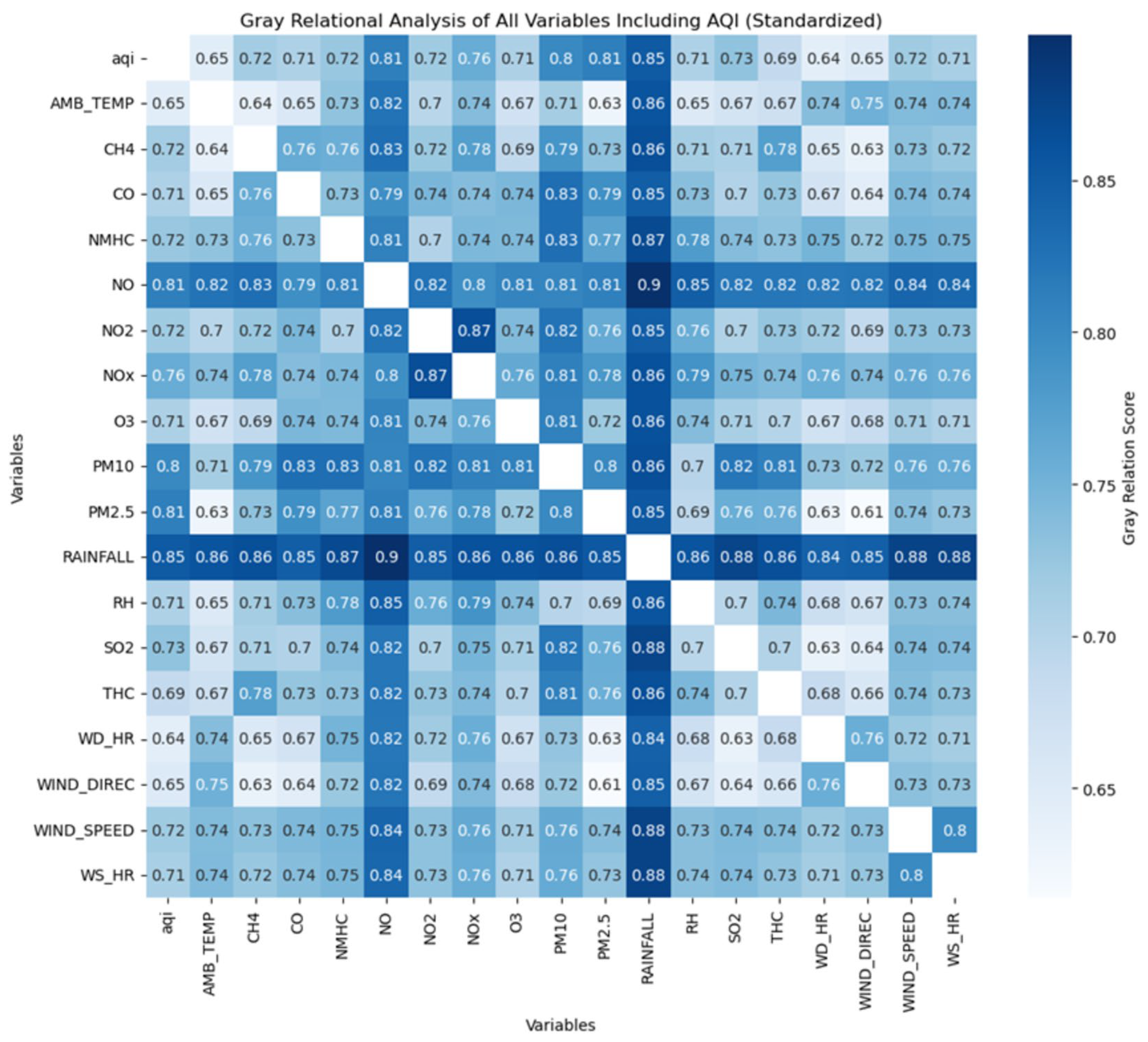
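The three pre-processing steps above (interpolation, scaling, and the 80:20 split) might be combined as in the sketch below. Two points are assumptions rather than details confirmed by the text: the split is taken chronologically, and the Min-Max statistics are computed on the training portion only so that no information from the test period leaks into scaling. The function names and the toy series are illustrative.

```python
import numpy as np
import pandas as pd

def preprocess(df: pd.DataFrame, train_ratio: float = 0.8):
    """Interpolate gaps, split chronologically, then Min-Max scale both parts."""
    # Linear interpolation between the neighbouring known values.
    filled = df.interpolate(method="linear", limit_direction="both")
    # Chronological split: the first 80% for training, the rest for testing.
    n_train = int(len(filled) * train_ratio)
    train, test = filled.iloc[:n_train], filled.iloc[n_train:]
    # Assumption: scaling statistics come from the training part only.
    lo, hi = train.min(), train.max()
    span = (hi - lo).replace(0, 1.0)  # guard against constant columns
    return (train - lo) / span, (test - lo) / span

def zscore(df: pd.DataFrame) -> pd.DataFrame:
    """Z-score standardization: zero mean and unit standard deviation per column."""
    return (df - df.mean()) / df.std(ddof=0)

# Toy series with one missing value (made-up numbers).
raw = pd.DataFrame({"aqi": [10.0, np.nan, 30.0, 40.0, 50.0]})
train_scaled, test_scaled = preprocess(raw)
```

With training-only statistics, test values can fall outside [0, 1]; that is expected and simply reflects conditions not seen during training.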

3.1.3. Gray Relational Analysis

Gray relational analysis (GRA) is a widely used method for quantifying the degree of association between variables, particularly effective in cases with limited data or high uncertainty. It is commonly applied in multi-factor analysis to identify variables that most strongly influence a target metric. In this study, GRA is applied to assess the correlation between various meteorological and pollutant variables and AQI in Xitun District. The analysis involves the following steps:

Research data: Environmental monitoring data from 2021 to 2023 were obtained from the Taiwan Air Quality Monitoring Network, including variables such as AQI, Ambient temperature (AMB_TEMP), CH4, CO, Non-methane hydrocarbons concentration (NMHC), NO, NO2, NOx, O3, PM10, PM2.5, RAINFALL, Relative humidity (RH), SO2, Total hydrocarbons concentration (THC), Horizontal component of wind speed (WD_HR), Wind direction (WIND_DIREC), Wind speed (WIND_SPEED), and Vertical component of wind speed (WS_HR).

Missing value imputation: Linear interpolation (as described in the data pre-processing section) was used to fill missing values, ensuring temporal continuity.

Data standardization: Variables were standardized using the Z-score method, consistent with the procedure outlined in the data pre-processing step.

GRA computation: The procedure includes:

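- (i) Gray relational coefficient: AQI is designated as the reference sequence ($x_0$), while the remaining variables serve as comparative sequences ($x_i$). The coefficient between AQI and each variable is calculated as:

$$\xi_i(k) = \frac{\Delta_{\min} + \rho\,\Delta_{\max}}{\Delta_i(k) + \rho\,\Delta_{\max}}$$

where $\Delta_i(k) = |x_0(k) - x_i(k)|$, $\Delta_{\min}$ and $\Delta_{\max}$ are the smallest and largest values of $\Delta_i(k)$ over all variables and time points, and $\rho$ is the distinguishing coefficient, typically set to 0.5.

- (ii) Average gray relational degree: The mean coefficient over all time points is computed as:

$$\gamma_i = \frac{1}{n}\sum_{k=1}^{n}\xi_i(k)$$

where $n$ is the number of time points and $\gamma_i$ reflects the overall strength of the relationship between variable $i$ and AQI.

Result visualization: Variables are ranked based on $\gamma_i$ values to identify key influencers of AQI. A heatmap was also generated using Seaborn (version 0.13.2) to visually interpret the correlation patterns between AQI and other variables.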
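The GRA computation can be illustrated with a short NumPy sketch. It follows the standard form of the gray relational coefficient with a distinguishing coefficient of 0.5; the function name and the toy sequences are assumptions made for illustration, and the inputs are assumed to be already standardized.

```python
import numpy as np

def gray_relational_degree(reference, comparisons, rho=0.5):
    """Average gray relational degree of each comparison column vs. the reference."""
    x0 = np.asarray(reference, dtype=float).reshape(-1, 1)  # shape (n, 1)
    xi = np.asarray(comparisons, dtype=float)               # shape (n, m)
    delta = np.abs(x0 - xi)                                 # absolute differences
    d_min, d_max = delta.min(), delta.max()                 # global extremes
    if d_max == 0:                                          # all sequences identical
        return np.ones(xi.shape[1])
    coef = (d_min + rho * d_max) / (delta + rho * d_max)    # relational coefficients
    return coef.mean(axis=0)                                # mean over time points

# Toy example: column 0 equals the reference, column 1 is its reverse.
ref = np.array([0.0, 1.0, 2.0, 3.0])
comp = np.column_stack([ref, ref[::-1]])
degrees = gray_relational_degree(ref, comp)
ranking = np.argsort(degrees)[::-1]  # variable indices, most related first
```

A degree of 1 means the sequence tracks the reference exactly; values closer to 0 indicate a weaker association.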

Although GRA provides a ranking of variable importance, all 18 variables were retained for model development. This decision was made to preserve potential interactions among meteorological and pollutant factors, which might be lost if only top-ranked features were selected. To further examine the effect of dimensionality reduction, we conducted preliminary experiments using principal component analysis (PCA). PCA transforms correlated variables into a smaller set of uncorrelated components while retaining most of the variance. The proportion of variance explained by the first eight components is summarized in Table 2. While PCA effectively reduced redundancy, predictive performance under all thresholds remained lower than the baseline EEMD-GRU model without PCA. The detailed forecasting results of these PCA experiments are presented in Section 4.2.4.
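As a rough illustration of how a variance threshold translates into a number of retained components, the sketch below computes explained-variance ratios from a singular value decomposition. The 0.95 threshold, the function names, and the synthetic data are assumptions; the thresholds actually tested are those reported in Section 4.2.4.

```python
import numpy as np

def pca_explained_variance(X):
    """Explained-variance ratio per principal component (X assumed standardized)."""
    Xc = X - X.mean(axis=0)
    s = np.linalg.svd(Xc, compute_uv=False)  # singular values, descending
    var = s ** 2 / (len(X) - 1)              # component variances
    return var / var.sum()

def n_components_for(X, threshold=0.95):
    """Smallest number of components whose cumulative variance reaches threshold."""
    ratio = pca_explained_variance(X)
    k = int(np.searchsorted(np.cumsum(ratio), threshold)) + 1
    return min(k, len(ratio))

# Synthetic data: two nearly collinear columns plus one independent column.
rng = np.random.default_rng(0)
base = rng.normal(size=(200, 1))
X_demo = np.hstack([base,
                    2 * base + 0.01 * rng.normal(size=(200, 1)),
                    rng.normal(size=(200, 1))])
X_demo = (X_demo - X_demo.mean(axis=0)) / X_demo.std(axis=0)
k_95 = n_components_for(X_demo, 0.95)
```

Here the two collinear columns collapse into a single component, so two components are enough to cover the variance of three variables.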

3.2. Model Construction

3.2.1. Long Short-Term Memory

Long short-term memory (LSTM) networks, proposed by Hochreiter and Schmidhuber [16], are a type of recurrent neural network designed to capture long-term dependencies and mitigate the vanishing gradient problem [35]. Through memory cells and gating mechanisms (namely the forget, input, and output gates), LSTMs regulate information flow across time steps, allowing them to retain relevant data and filter out noise. This makes them particularly effective in modeling sequential patterns such as seasonality and trends. LSTMs have demonstrated success across various domains, including speech recognition [3], stock price forecasting [36], and image analysis [37]. In air quality forecasting, they are well-suited for learning complex dependencies in time series pollutant and meteorological data. The internal computation of an LSTM cell involves the following set of equations:

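$$
\begin{aligned}
f_t &= \sigma\left(W_f x_t + U_f h_{t-1} + b_f\right) \\
i_t &= \sigma\left(W_i x_t + U_i h_{t-1} + b_i\right) \\
o_t &= \sigma\left(W_o x_t + U_o h_{t-1} + b_o\right) \\
\tilde{c}_t &= \tanh\left(W_c x_t + U_c h_{t-1} + b_c\right) \\
c_t &= f_t \circ c_{t-1} + i_t \circ \tilde{c}_t \\
h_t &= o_t \circ \tanh\left(c_t\right)
\end{aligned}
$$

In these equations, $x_t$ is the input vector at time $t$, and $h_{t-1}$ is the hidden state from the previous step. The vectors $f_t$, $i_t$, and $o_t$ represent the outputs of the forget, input, and output gates, respectively, with the sigmoid activation function σ(⋅) mapping values to the range (0, 1). The candidate memory content $\tilde{c}_t$, generated via the hyperbolic tangent function tanh(⋅), is used to update the internal cell state $c_t$, regulated by the gates. The final hidden state $h_t$ is computed from the activated cell state and the output gate. Matrices $W$ and $U$ are trainable weights for the input and hidden state, and $b$ are the bias terms. The operator ∘ denotes the element-wise (Hadamard) product.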
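To make the gate interactions concrete, a single LSTM time step can be written in NumPy as below. This is an illustrative sketch of the standard LSTM cell, not the trained model: the dictionary layout of the weights, the hidden size of 4, the 19 input features (AQI plus 18 variables), and the random initial values are all assumptions.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One LSTM time step; W, U, b are dicts keyed by 'f', 'i', 'o', 'c'."""
    f_t = sigmoid(W["f"] @ x_t + U["f"] @ h_prev + b["f"])      # forget gate
    i_t = sigmoid(W["i"] @ x_t + U["i"] @ h_prev + b["i"])      # input gate
    o_t = sigmoid(W["o"] @ x_t + U["o"] @ h_prev + b["o"])      # output gate
    c_tilde = np.tanh(W["c"] @ x_t + U["c"] @ h_prev + b["c"])  # candidate memory
    c_t = f_t * c_prev + i_t * c_tilde                          # cell state update
    h_t = o_t * np.tanh(c_t)                                    # new hidden state
    return h_t, c_t

# Run the cell over a 7-step window of random inputs (illustrative sizes).
rng = np.random.default_rng(0)
n_in, n_hidden = 19, 4
W = {k: rng.normal(scale=0.1, size=(n_hidden, n_in)) for k in "fioc"}
U = {k: rng.normal(scale=0.1, size=(n_hidden, n_hidden)) for k in "fioc"}
b = {k: np.zeros(n_hidden) for k in "fioc"}
h, c = np.zeros(n_hidden), np.zeros(n_hidden)
for x_t in rng.normal(size=(7, n_in)):
    h, c = lstm_step(x_t, h, c, W, U, b)
```

The final `h` is what a downstream dense layer would map to the next-day AQI in a full model.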

3.2.2. Gated Recurrent Unit

The gated recurrent unit (GRU), introduced by Cho et al. and empirically evaluated by Chung et al. [17], is a streamlined alternative to LSTM that preserves the ability to model long-term dependencies while reducing computational complexity. Unlike LSTM, GRU does not employ a separate memory cell; instead, it integrates the memory function directly within its hidden state through two gating mechanisms: the reset gate and the update gate. The reset gate $r_t$ controls the degree to which the previous hidden state $h_{t-1}$ contributes to the current candidate activation. The update gate $z_t$ determines the proportion of the previous hidden state that is retained versus replaced by the new candidate $\tilde{h}_t$. These mechanisms allow the model to effectively balance short- and long-term dependencies in sequential data. GRU is particularly advantageous in air quality prediction tasks where model efficiency, faster training, and reduced overfitting are essential [31], especially when working with noisy, multivariate environmental data. The computations within a GRU cell are expressed as follows:

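$$
\begin{aligned}
z_t &= \sigma\left(W_z \left[h_{t-1}, x_t\right]\right) \\
r_t &= \sigma\left(W_r \left[h_{t-1}, x_t\right]\right) \\
\tilde{h}_t &= \tanh\left(W_h \left[r_t * h_{t-1}, x_t\right]\right) \\
h_t &= \left(1 - z_t\right) * h_{t-1} + z_t * \tilde{h}_t
\end{aligned}
$$

Here, $x_t$ is the input, $h_{t-1}$ is the previous hidden state, and [] denotes concatenation. The operator ∗ indicates element-wise multiplication. Due to its simpler design, GRU often trains faster and performs well on smaller datasets, offering a practical trade-off between complexity and accuracy. Previous studies (e.g., [29]) have shown GRU’s effectiveness across various forecasting tasks.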
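A single GRU step can be sketched in NumPy in the same spirit. The sketch assumes the common bias-free formulation with concatenated inputs and the convention that the update gate weights the new candidate, i.e. h_t = (1 − z_t) ∗ h_{t−1} + z_t ∗ h̃_t; some libraries use the opposite convention. Weight shapes and values are illustrative.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def gru_step(x_t, h_prev, W_z, W_r, W_h):
    """One GRU time step using concatenated [h_prev, x_t] inputs (no bias terms)."""
    concat = np.concatenate([h_prev, x_t])
    z_t = sigmoid(W_z @ concat)                                   # update gate
    r_t = sigmoid(W_r @ concat)                                   # reset gate
    h_tilde = np.tanh(W_h @ np.concatenate([r_t * h_prev, x_t]))  # candidate state
    return (1.0 - z_t) * h_prev + z_t * h_tilde                   # blend old and new

# Illustrative sizes: 19 input features, 4 hidden units, 7 time steps.
rng = np.random.default_rng(0)
n_in, n_hidden = 19, 4
W_z, W_r, W_h = (rng.normal(scale=0.1, size=(n_hidden, n_hidden + n_in))
                 for _ in range(3))
h = np.zeros(n_hidden)
for x_t in rng.normal(size=(7, n_in)):
    h = gru_step(x_t, h, W_z, W_r, W_h)
```

Compared with the LSTM cell, there are three weight matrices instead of four and no separate cell state, which is where the lower parameter count comes from.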

3.2.3. Empirical Mode Decomposition

Empirical mode decomposition (EMD) is a data-driven technique designed to decompose nonlinear and non-stationary signals into intrinsic mode functions (IMFs). Unlike Fourier or wavelet transforms, EMD does not require predefined basis functions, making it well-suited for complex environmental datasets such as AQI time series. In this study, EMD is employed to extract multi-scale temporal features from AQI data, facilitating more efficient learning in LSTM and GRU models. The EMD process involves the following steps:

Extrema identification: Detect local maxima and minima from the original signal x(t).

Envelope construction: Interpolate extrema using cubic splines to form upper and lower envelopes.

Mean computation: Calculate the local mean m(t) as the average of the two envelopes.

IMF extraction: Compute the candidate IMF via:

$$h(t) = x(t) - m(t)$$

and apply the sifting process until the resulting $h(t)$ satisfies the IMF conditions: (1) the number of zero crossings and the number of extrema differ by at most one, and (2) the mean envelope is approximately zero. The accepted component is taken as the first IMF, $c_1(t)$.

Residue calculation: Subtract the IMF from the signal to compute the residue:

$$r_1(t) = x(t) - c_1(t)$$

Repeat the above steps on $r_1(t)$ to extract additional IMFs.

Final decomposition: The original signal is reconstructed as:

$$x(t) = \sum_{i=1}^{n} c_i(t) + r_n(t)$$

where each $c_i(t)$ reflects a different temporal scale in the AQI signal and $r_n(t)$ is the final residue. These components are used as model inputs to enhance forecasting accuracy.
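The sifting steps above can be sketched with NumPy and SciPy as follows. This is a simplified illustration, not the implementation used in the study: the envelopes are pinned to the signal at both endpoints to limit spline overshoot, sifting stops when the squared mean envelope is small relative to the candidate (threshold `tol`), and the function names, limits, and test signal are assumptions.

```python
import numpy as np
from scipy.interpolate import CubicSpline
from scipy.signal import argrelextrema

def mean_envelope(x):
    """Mean of the cubic-spline upper/lower envelopes, or None if too few extrema."""
    t = np.arange(len(x))
    maxima = argrelextrema(x, np.greater)[0]
    minima = argrelextrema(x, np.less)[0]
    if len(maxima) < 2 or len(minima) < 2:
        return None
    # Simplification: pin both envelopes to the signal at the two endpoints.
    up_idx = np.concatenate(([0], maxima, [len(x) - 1]))
    lo_idx = np.concatenate(([0], minima, [len(x) - 1]))
    upper = CubicSpline(up_idx, x[up_idx])(t)
    lower = CubicSpline(lo_idx, x[lo_idx])(t)
    return (upper + lower) / 2.0

def emd(x, max_imfs=10, max_sift=50, tol=0.2):
    """Decompose x into IMFs plus a residue by repeated sifting."""
    residue = np.asarray(x, dtype=float).copy()
    imfs = []
    for _ in range(max_imfs):
        if mean_envelope(residue) is None:  # residue is nearly monotonic: stop
            break
        h = residue.copy()
        for _ in range(max_sift):
            m = mean_envelope(h)
            if m is None:
                break
            sd = np.sum(m ** 2) / (np.sum(h ** 2) + 1e-12)
            h = h - m                       # candidate IMF: h(t) = x(t) - m(t)
            if sd < tol:                    # mean envelope is approximately zero
                break
        imfs.append(h)
        residue = residue - h               # r(t) = x(t) - c(t)
    return np.array(imfs), residue

# Synthetic test signal: fast and slow oscillations plus a linear trend.
t = np.linspace(0.0, 1.0, 400)
x = np.sin(2 * np.pi * 40 * t) + 0.5 * np.sin(2 * np.pi * 4 * t) + 2.0 * t
imfs, residue = emd(x)
```

By construction, summing the returned IMFs and the residue reproduces the input signal, which mirrors the final decomposition equation.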

3.2.4. Ensemble Empirical Mode Decomposition

Ensemble empirical mode decomposition (EEMD) is an improved variant of empirical mode decomposition (EMD) that addresses the issue of mode mixing—where components of different frequencies are inaccurately combined within or split across intrinsic mode functions (IMFs). This mixing can impair interpretability and reduce forecasting accuracy. EEMD mitigates this by adding Gaussian white noise to the original signal and repeatedly applying EMD to each perturbed version. In this study, the amplitude of added white noise was set to 0.2, and the ensemble size (number of iterations) was set to 100. The resulting IMFs are then averaged across 100 iterations to produce more stable and representative components. This process enhances the robustness of the decomposition and improves the separation of signal components across different temporal scales, which is particularly beneficial for air quality index (AQI) prediction.

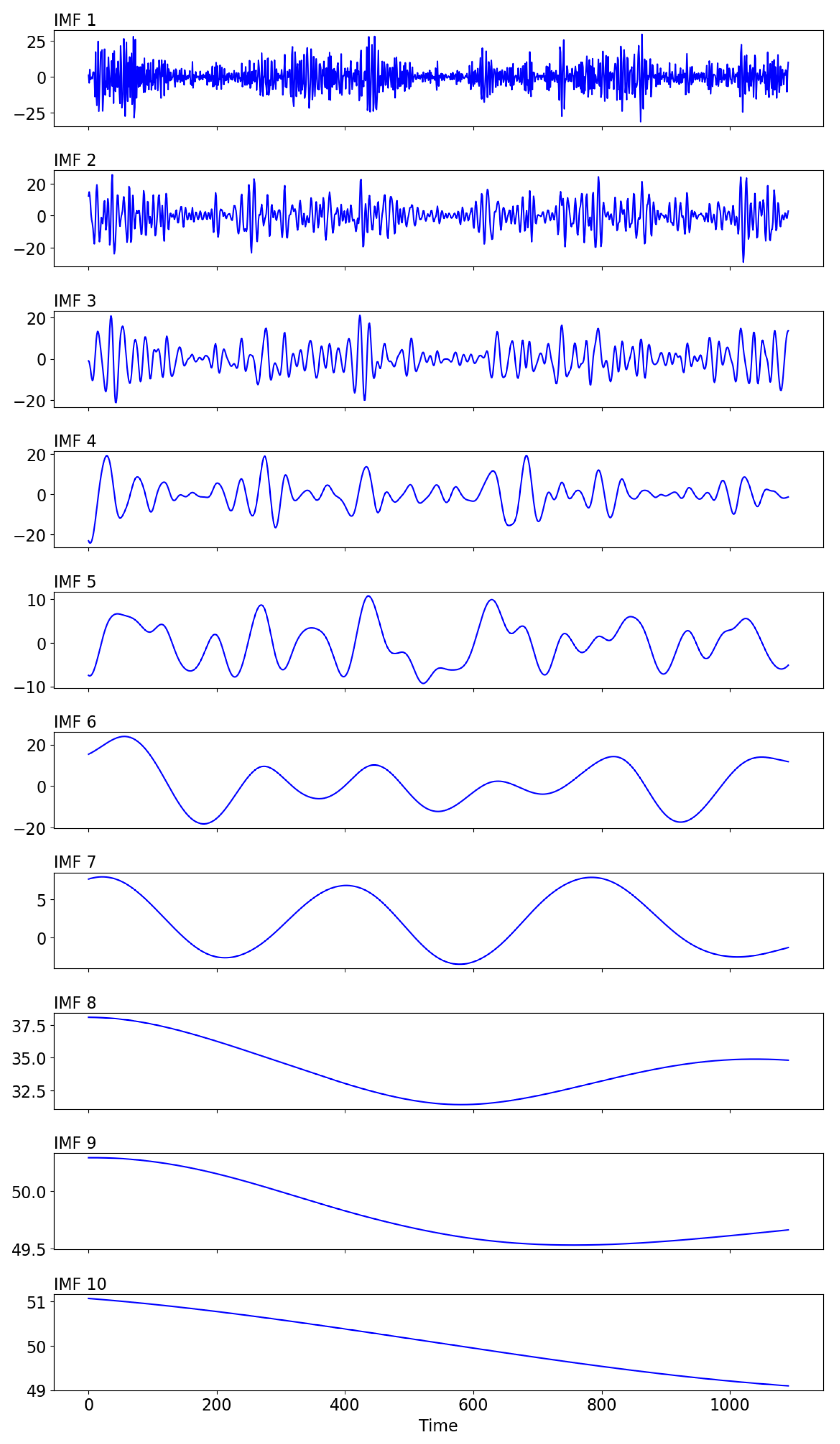

For the AQI time series, EEMD produced 10 IMFs and a residual. Among them, only the first three IMFs were selected as model inputs because they carry the main signal energy and effectively capture short- and mid-term variations. The later IMFs primarily reflect long-term slow trends or random noise, which contribute little to prediction accuracy and may increase model complexity or overfitting risk. This selection therefore preserves essential information, improves stability, and enhances computational efficiency.

Figure 2 illustrates the decomposition of AQI into 10 IMFs.

This multiscale decomposition allows deep learning models to better capture AQI’s nonlinear and non-stationary behavior.
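The ensemble procedure described in this section (add white noise, decompose, average over the ensemble) might look like the sketch below. Several choices are assumptions made to keep the example compact: the inner EMD uses a fixed number of sifting passes, the noise amplitude of 0.2 is interpreted as a fraction of the signal's standard deviation, IMFs are averaged by index, and rows are left as zeros when a trial yields fewer IMFs than requested. All function names are hypothetical.

```python
import numpy as np
from scipy.interpolate import CubicSpline
from scipy.signal import argrelextrema

def _sift_once(h):
    """Subtract the mean spline envelope once; None if h has too few extrema."""
    t = np.arange(len(h))
    mx = argrelextrema(h, np.greater)[0]
    mn = argrelextrema(h, np.less)[0]
    if len(mx) < 2 or len(mn) < 2:
        return None
    ends = [0, len(h) - 1]                      # pin envelopes at the endpoints
    up = np.sort(np.concatenate((mx, ends)))
    lo = np.sort(np.concatenate((mn, ends)))
    mean = (CubicSpline(up, h[up])(t) + CubicSpline(lo, h[lo])(t)) / 2.0
    return h - mean

def emd_fixed(x, n_imfs, n_sift=10):
    """EMD with a fixed number of sifting passes; returns n_imfs rows and a residue."""
    residue = np.asarray(x, dtype=float).copy()
    imfs = np.zeros((n_imfs, len(residue)))
    for k in range(n_imfs):
        h = _sift_once(residue)
        if h is None:                           # nothing left to extract
            break                               # remaining rows stay zero
        for _ in range(n_sift - 1):
            h_next = _sift_once(h)
            if h_next is None:
                break
            h = h_next
        imfs[k] = h
        residue = residue - h
    return imfs, residue

def eemd(x, n_imfs=3, noise_width=0.2, trials=100, seed=0):
    """Average the IMFs obtained from noise-perturbed copies of x."""
    x = np.asarray(x, dtype=float)
    rng = np.random.default_rng(seed)
    sigma = noise_width * np.std(x)             # assumption: relative to signal std
    total = np.zeros((n_imfs, len(x)))
    for _ in range(trials):
        noisy = x + rng.normal(0.0, sigma, size=len(x))
        imfs, _residue = emd_fixed(noisy, n_imfs)
        total += imfs                           # accumulate IMF k across trials
    return total / trials

# Synthetic signal standing in for the AQI series.
t = np.linspace(0.0, 1.0, 200)
x = np.sin(2 * np.pi * 20 * t) + 0.5 * np.sin(2 * np.pi * 3 * t) + t
imfs_eemd = eemd(x, n_imfs=3, noise_width=0.2, trials=100)
```

Because the added noise is independent across trials, it largely cancels in the average, while components that persist across trials are retained.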

3.2.5. Hyperparameter Optimization with Optuna

In deep learning, model performance is highly dependent on hyperparameter choices such as the number of layers, units, learning rate, and batch size. To improve predictive accuracy and stability, this study employed Optuna, an automated hyperparameter optimization framework, instead of conventional manual tuning or grid/random search [38]. Optuna applies Bayesian optimization with a Tree-structured Parzen Estimator (TPE), which dynamically adjusts the search strategy based on previous trial results. For the EEMD-GRU model, the search space was defined as follows:

First-layer GRU units: 32–256

Second-layer GRU units: 32–128

Dropout rates: 0.1–0.5

Learning rate: 1 × 10⁻⁵ to 1 × 10⁻² (log scale)

Batch size: 16, 32, 64

The objective function minimized the validation RMSE. A total of 20 trials were conducted, and the best-performing configuration was selected for the final model. The chosen parameters were then applied to retrain the EEMD-GRU model using the full training set. To prevent overfitting, an EarlyStopping strategy monitored validation loss and stopped training when no further improvement was observed.

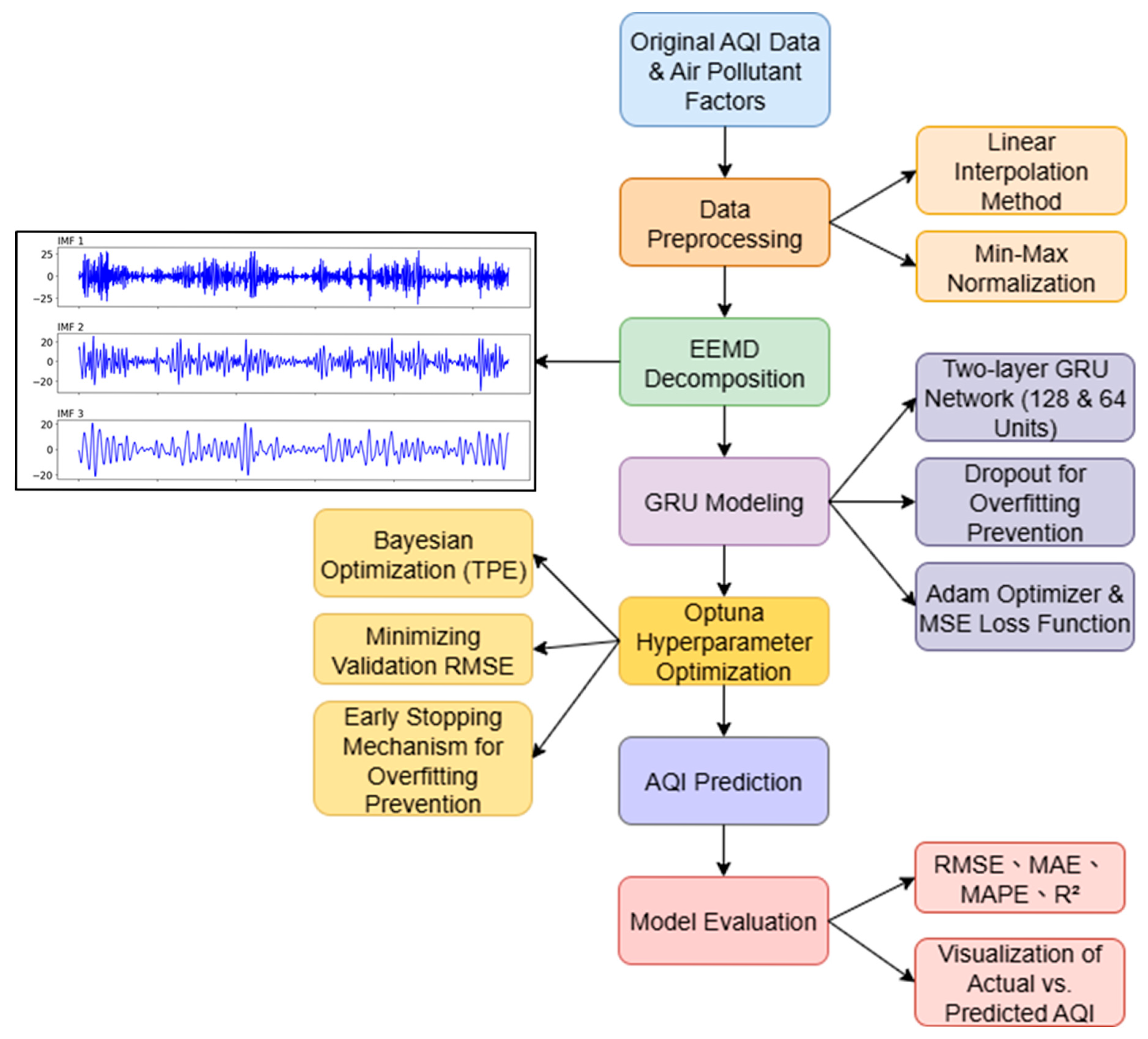

3.3. Proposed Hybrid EEMD-GRU Forecasting Framework

To improve AQI prediction accuracy, this study introduces a hybrid model combining EEMD with a GRU neural network, as depicted in Figure 3. The framework integrates multi-scale decomposition, temporal deep learning, and robust performance evaluation:

Data preprocessing: Daily air quality and meteorological variables were collected, including AMB_TEMP, CH4, CO, NMHC, NO, NO2, NOx, O3, PM10, PM2.5, RAINFALL, RH, SO2, THC, WD_HR, WIND_DIREC, WIND_SPEED, and WS_HR. AQI served as the target variable. Missing values were interpolated using the linear method, followed by Min-Max normalization. Each model input consisted of AQI and environmental data from the previous 7 days and was used to predict the AQI for the following day.

Ensemble empirical mode decomposition: The AQI time series was decomposed into 10 IMFs using EEMD with 100 iterations. Of these, the first three IMFs were selected to capture the dominant patterns, as they contain the main signal energy related to short- and mid-term AQI variations. Zero-padding was applied when fewer than three IMFs were generated, ensuring consistent input dimensions.

Gated recurrent unit architecture: A two-layer GRU network (128 and 64 units) was employed using tanh and sigmoid activations. Dropout (0.2) was applied to reduce overfitting. The model was trained with the Adam optimizer and MSE loss, with 20% of the data held out for testing.

Hyperparameter optimization with Optuna: To identify the best architecture and training configuration, we integrated the Optuna framework, which applies Bayesian optimization (TPE sampler) to minimize validation RMSE. The search space included:

first-layer GRU units (32–256),

second-layer GRU units (32–128),

dropout rates (0.1–0.5),

learning rate (1 × 10⁻⁵ to 1 × 10⁻², log sampling), and

batch size (16, 32, 64).

A total of 20 trials were conducted, with the best configuration applied to the final model. Early stopping monitored validation loss to prevent overfitting.

Air quality index forecasting: Model outputs were inverse-transformed to their original AQI scale. For multi-step forecasting, a recursive approach was used, feeding predictions back as future inputs.

Model evaluation: Forecast performance was measured using RMSE, MAE, MAPE, and R2, with results visualized through prediction plots and training curves.

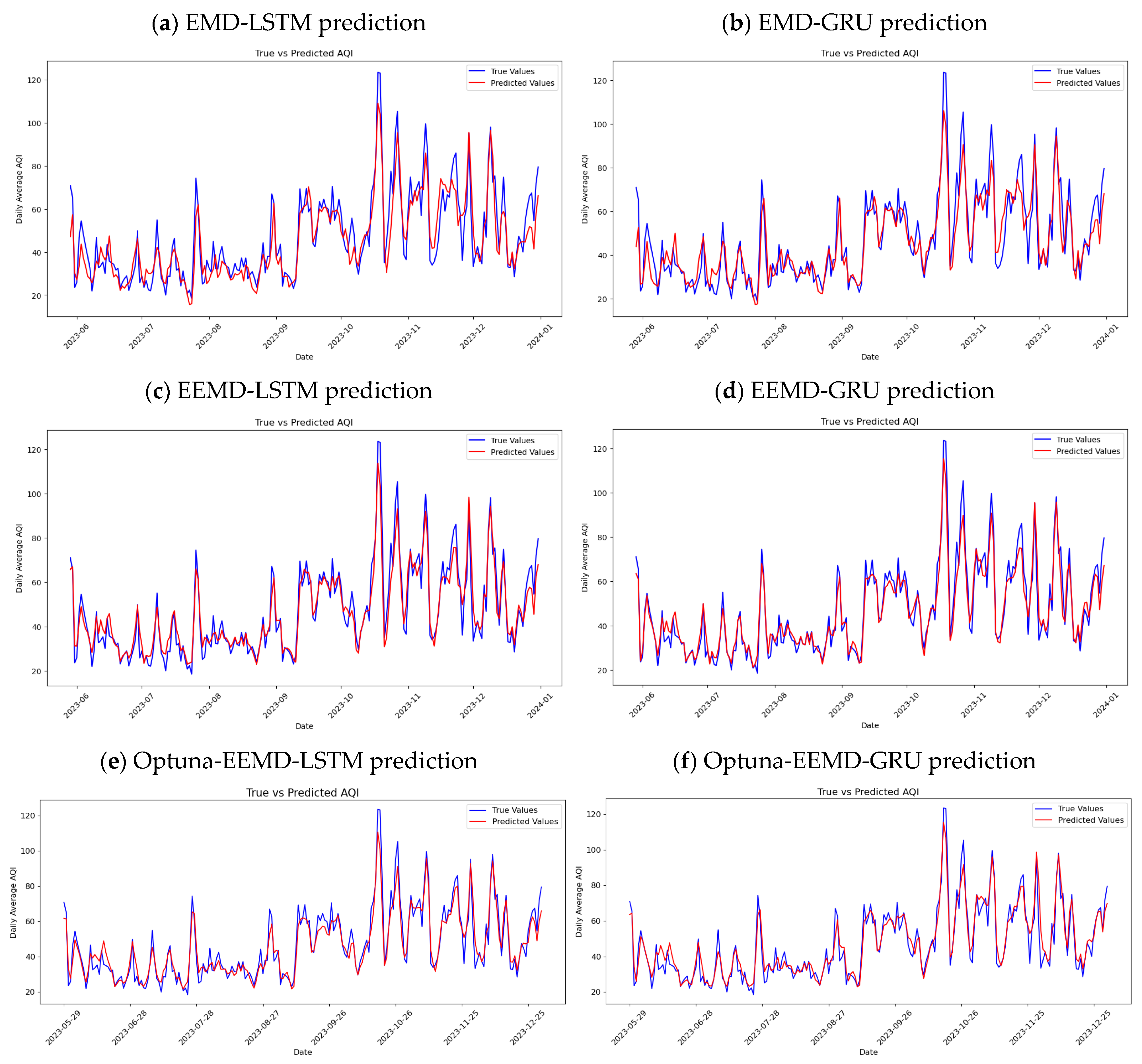
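The input construction implied by the framework (three zero-padded IMFs and a 7-day look-back window predicting the next day) can be sketched as below. How the IMFs are combined with the other variables is not spelled out in the text, so concatenating them as extra feature columns is an assumption, as are the function names and the synthetic arrays.

```python
import numpy as np

def pad_imfs(imfs, n_keep=3):
    """Keep the first n_keep IMFs, zero-padding if fewer were produced."""
    imfs = np.atleast_2d(np.asarray(imfs, dtype=float))
    out = np.zeros((n_keep, imfs.shape[1]))
    k = min(n_keep, imfs.shape[0])
    out[:k] = imfs[:k]
    return out

def make_windows(features, target, lookback=7):
    """Build (samples, lookback, n_features) inputs and next-day targets."""
    X, y = [], []
    for end in range(lookback, len(features)):
        X.append(features[end - lookback:end])  # previous `lookback` days
        y.append(target[end])                   # AQI of the following day
    return np.array(X), np.array(y)

# Synthetic stand-ins: 30 days, AQI plus 18 other variables, only two IMFs.
n_days = 30
aqi = np.arange(n_days, dtype=float)
others = np.ones((n_days, 18))
two_imfs = np.vstack([np.sin(np.arange(n_days)), np.cos(np.arange(n_days))])
imfs3 = pad_imfs(two_imfs, n_keep=3)            # third row is all zeros
features = np.column_stack([aqi, others, imfs3.T])
X, y = make_windows(features, aqi, lookback=7)
```

Each sample in `X` has shape (7, 22), matching the (time steps, features) layout that recurrent layers expect.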

To benchmark the effectiveness of the proposed EEMD-GRU model, a comparative framework was developed, integrating various model combinations including LSTM, GRU, and signal decomposition techniques (EMD and EEMD). As shown in Figure 4, this framework provides a comprehensive evaluation of how different model architectures and signal decomposition methods impact AQI forecasting performance, offering insights into optimal configurations for real-world environmental monitoring systems.

3.4. Model Evaluation

To assess forecasting performance, four standard metrics are employed: RMSE, MAE, MAPE, and R². Together, these metrics provide a comprehensive evaluation by capturing absolute, relative, and variance-based prediction errors.

Root mean squared error (RMSE) quantifies the square root of the average squared differences between predicted and actual values, emphasizing larger deviations and making it sensitive to outliers:

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}$$

where $y_i$ denotes the actual value, $\hat{y}_i$ the predicted value, and $n$ the total number of observations.

Mean absolute error (MAE) measures the average absolute difference between predictions and observations, offering a straightforward and interpretable assessment of error magnitude. The MAE is defined as:

$$\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|y_i - \hat{y}_i\right|$$

Mean absolute percentage error (MAPE) expresses errors as a percentage of actual values, facilitating comparisons across datasets with different scales. The MAPE is calculated as:

$$\mathrm{MAPE} = \frac{100\%}{n}\sum_{i=1}^{n}\left|\frac{y_i - \hat{y}_i}{y_i}\right|$$

Coefficient of determination (R²) evaluates the proportion of variance in the observed data explained by the model:

$$R^2 = 1 - \frac{\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)^2}$$

where $\bar{y}$ is the mean of the observed values.

This combination of evaluation metrics enables a comprehensive and robust comparison of model performance, supporting the identification of the most effective forecasting architecture for AQI prediction.
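For reference, the four metrics can be computed with a few lines of NumPy. The function name and the sample values are illustrative, and the MAPE line assumes that no actual value is zero.

```python
import numpy as np

def evaluate(y_true, y_pred):
    """Return RMSE, MAE, MAPE (in percent), and R2 for two equal-length sequences."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    err = y_true - y_pred
    rmse = np.sqrt(np.mean(err ** 2))
    mae = np.mean(np.abs(err))
    mape = 100.0 * np.mean(np.abs(err / y_true))  # assumes no zero actual values
    r2 = 1.0 - np.sum(err ** 2) / np.sum((y_true - y_true.mean()) ** 2)
    return {"RMSE": rmse, "MAE": mae, "MAPE": mape, "R2": r2}

# Made-up AQI values for illustration.
scores = evaluate([50, 100, 150], [55, 90, 150])
```

For these three points the errors are −5, 10, and 0, giving an MAE of 5 and an R² of 0.975.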

5. Conclusions and Future Scope

This study focused on forecasting the air quality index (AQI) by comparing the predictive performance of deep learning models, specifically long short-term memory (LSTM) and gated recurrent unit (GRU) architectures. Both models were implemented with and without integrated data decomposition techniques, including empirical mode decomposition (EMD) and ensemble empirical mode decomposition (EEMD). To ensure the robustness of model training under real-world conditions, comprehensive preprocessing—such as normalization, standardization, and interpolation of missing values—was applied.

Following model development, performance was assessed using multiple evaluation metrics: root mean squared error (RMSE), mean absolute error (MAE), mean absolute percentage error (MAPE), and the coefficient of determination (R²). Training loss curves were also examined to understand convergence behavior. Among the tested configurations, the GRU model integrated with EEMD (EEMD-GRU) demonstrated the best overall performance. The EEMD technique effectively decomposed the AQI time series into smoother components, which facilitated improved feature learning and reduced noise interference. Moreover, the GRU model exhibited greater computational efficiency than LSTM because of its simplified structure and fewer parameters, while still maintaining competitive accuracy.

This study contributes to the field in three key ways. First, it introduces a hybrid EEMD-GRU framework that significantly enhances predictive performance by improving input quality and learning stability. Second, the study incorporates 18 environmental and pollutant-related variables, far more than those considered in most prior studies, providing a more comprehensive understanding of AQI determinants and improving the model’s generalizability. Third, the proposed approach offers practical value for environmental monitoring and policy-making. It can serve as a real-time forecasting tool for governmental agencies and environmental institutions to track air quality trends and develop more targeted air pollution control strategies.

Despite these strengths, the study has certain limitations. While the model was validated using data from Xitun District (Taichung, Taiwan) and Delhi (India), its applicability to other geographic regions remains to be tested. Future research should validate the model across a wider range of urban settings to assess its generalizability. Moreover, while EEMD significantly improved performance, exploring alternative decomposition methods such as variational mode decomposition (VMD) or complete ensemble empirical mode decomposition with adaptive noise (CEEMDAN) could yield further enhancements. Investigating the synergistic effects of different preprocessing techniques may also lead to improved forecasting stability and broader application in environmental informatics.