Abstract

Accurately classifying complex clouds remains challenging because of the variety of cloud types and their distribution across multiple layers. In this paper, multi-band radiation data from the geostationary satellite Himawari-8 and the cloud classification product of the polar-orbiting satellite CloudSat from June to September 2018 are investigated. Based on sample sets matched between the two types of satellite data, a random forest (RF) algorithm was applied to train a model, and a retrieval method was developed for cloud classification. Using this method, the samples were classified as clear sky, low clouds, middle clouds, thin cirrus, thick cirrus, multi-layer clouds and deep convection (cumulonimbus) clouds. The results indicate that the average accuracy over all cloud types during the day is 88.4%, with misclassifications occurring mainly between low and middle clouds and between thick cirrus and cumulonimbus clouds. The average accuracy at night is 79.1%, with more misclassifications among middle clouds, multi-layer clouds and cumulonimbus clouds. Moreover, Typhoon Muifa (2022) was selected as a case study, and the cloud type (CLT) product of the FY-4A satellite was used to evaluate the classification method. In the cloud system of Typhoon Muifa, the cumulonimbus area identified by the method corresponded well with a mesoscale convective system (MCS). Compared with the FY-4A CLT product, the classification of ice-type (thick cirrus) and multi-layer clouds is effective: the locations, shapes and sizes of these two cloud types are similar in both.

1. Introduction

Clouds result from atmospheric motions and atmosphere–ocean interactions. Different kinds of clouds have different radiative characteristics, and this information reflects ongoing dynamical and thermal processes in the atmosphere [1]. Accurate cloud classification is important in many fields of research. In numerical simulations, cloud microphysical parameters derived from cloud classification results can improve parameterization schemes [2,3,4]. Cloud classification can also improve analyses of precipitation intensity and of humidity and temperature fields [5,6,7]. Additionally, identifying cloud types is crucial for the calculation of satellite-derived winds [8,9,10].

Satellites are important tools in cloud classification studies: they observe clouds from above without geographical limitations and continuously monitor global atmospheric motions and the evolution of clouds. Cloud classification studies based on satellite data usually rely on inversion (retrieval) methods, which derive the microphysical properties of a cloud from satellite-detected radiation by exploiting the different absorption and reflection properties of cloud particles at different wavelengths. Geostationary meteorological satellites can retrieve physical quantities such as cloud-top temperature, cloud texture and water vapor content, providing radiation information from the cloud top that can be used for cloud classification. Purbantoro et al. [11] used a split-window algorithm (SWA) with Himawari-8 data to detect and classify water and ice clouds and compared the unsupervised SWA classification results obtained with various band pairs over Japan. Liang et al. [12] used surface weather observations from the ECRA of the CDIAC and cloud retrievals from the International Satellite Cloud Climatology Project (ISCCP) to derive seasonal distributions of optical thickness and cloud-top pressure for each cloud type.

The vertical structure of a cloud is an important factor in accurate and reliable cloud classification. The polar-orbiting satellite CloudSat, part of the National Aeronautics and Space Administration (NASA) Earth Systems Science Pathfinder Program, carries a cloud profiling radar that detects cloud and precipitation particles and aims to provide observations of the vertical structure of cloud systems [13,14]. Combining geostationary and polar-orbiting satellite data can therefore reveal more of the structural and physical characteristics of clouds. Zhang et al. [15] used CloudSat cloud scenario products to develop a procedure for high-spatiotemporal-resolution cloud type classification of multi-spectral Himawari-8 data using maximum likelihood estimation (MLE) and random forest classification. Liu et al. [16] assessed cloud retrievals from Himawari-8 against those from CloudSat and CALIPSO and found that the retrieved cloud-top altitudes, optical thicknesses and effective particle radii are consistent with passive Moderate Resolution Imaging Spectroradiometer (MODIS) data. However, most studies share two problems. First, the spectral bands of the satellites are not fully exploited, and the radiative differences between bands have not been thoroughly explored. Second, data from polar-orbiting and geostationary satellites are not matched closely enough, which leads to misclassifications. To address these issues, this study focuses on matching data from the CloudSat and Himawari-8 satellites.

With the rapid development of computer science, machine learning (ML) methods such as fuzzy logic, neural networks, support vector machines (SVMs) and random forests (RFs) are increasingly applied in cloud research. Jiang et al. [17] developed an intelligent cloud classification method based on the U-Net network (CLP-CNN), whose cloud classification products agree with the Himawari-8 product at a rate of 84.4%. Li et al. [18] developed a deep-learning-based algorithm that uses deep neural networks (DNNs) to perform cloud detection, cloud phase classification and multi-layer cloud detection from multi-spectral observed radiances and simulated clear-sky radiances. Among these ML algorithms, the random forest is considered well suited to cloud detection and classification because it trains quickly, resists overfitting, does not require dimensionality reduction and has relatively simple parameter settings [19,20,21,22].

Validating the inversion results is an important part of cloud classification studies. Some researchers have used the standard ISCCP cloud type classification scheme [23,24]. Zhang [25] used the cloud classification product generated by the Clouds from AVHRR Extended System (CLAVR-x) of the National Oceanic and Atmospheric Administration (NOAA) as ground truth. Fengyun-4A (FY-4A), part of the new generation of Chinese geostationary meteorological satellites, has high spatial and temporal resolutions, and its cloud type (CLT) product can also be used to evaluate cloud classification results [26,27].

In this paper, we matched multi-band data from the Himawari-8/9 geostationary meteorological satellite with the CloudSat 2B-CLDCLASS cloud classification product for June to September 2018 and conducted an inversion study using the satellite radiation information and cloud classification labels. Satellite sample sets were used to train a random forest algorithm, yielding a new cloud classification method. To support classification during both daytime and nighttime, the method comprises two classifiers, which were evaluated with test sample sets. The method was then applied to Typhoon Muifa (2022), and the results were compared with the FY-4A CLT product.

2. Data and Methods

2.1. Satellite Data

2.1.1. CloudSat Satellite Data

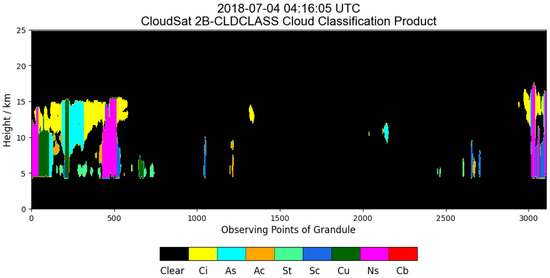

NASA launched the CloudSat satellite together with the Cloud-Aerosol Lidar and Infrared Pathfinder Satellite Observation (CALIPSO) on 28 April 2006. CloudSat carries a 94 GHz Cloud Profiling Radar (CPR) with about 1000 times the sensitivity of a standard weather radar [13]. The CPR records one vertical profile of the atmosphere every 0.16 s, during which the satellite advances about 1.1 km along its orbit. Each profile is divided into 125 vertical bins of 240 m, so each profile covers 30 km of the atmosphere [25]. This study used the CloudSat 2B-CLDCLASS product (ftp://ftp.cloudsat.cira.colostate.edu; accessed on 27 June 2023), which derives cloud classification information from vertical and horizontal cloud characteristics, precipitation occurrence, cloud temperature and radiation data. Eight cloud types [28], namely stratus (St), stratocumulus (Sc), cumulus (Cu), nimbostratus (Ns), altocumulus (Ac), altostratus (As), cumulonimbus (Cb) and cirrus (Ci), are identified using rules based on the vertical and horizontal extent of hydrometeor layers. An example of the vertical distribution of cloud types is shown in Figure 1.

Figure 1.

CloudSat 2B-CLDCLASS product at 04:16:05 on 4 July 2018.

2.1.2. Himawari-8/9 Satellite Data

Himawari-8 was part of a new generation of Japanese geostationary meteorological satellites. It was launched on 7 October 2014 and became operational on 7 July 2015; operations were transferred from Himawari-8 to Himawari-9 on 13 December 2022. Himawari-8/9 is located above the equator at 140.7° E, with a monitoring range of 60° N–60° S and 80° E–160° W. The satellite is three-axis stabilized and carries the Advanced Himawari Imager (AHI), which has 3 visible bands, 3 near-infrared bands and 10 infrared bands and provides high-resolution observations of the Earth system with spatial resolutions of 0.5–2 km and temporal resolutions of 0.5–10 min. The spectral bands of Himawari-8/9 are listed in Table 1. This study used Himawari-8/9 L1-level standard data obtained from the Japan Aerospace Exploration Agency (JAXA) P-Tree system (ftp://ftp.ptree.jaxa.jp; accessed on 27 June 2023).

Table 1.

Spectral band information for Himawari-8/9.

2.1.3. FY-4A Satellite Data

FengYun-4A (FY-4A) is part of a new generation of Chinese geostationary meteorological satellites. It was launched on 11 December 2016, became operational on 25 September 2017 and is located above the equator at 104.7° E. Its coverage largely overlaps that of Himawari-8/9, and it has 14 bands (3 visible, 3 near-infrared and 8 infrared) with spatial resolutions between 0.5 and 2 km and a temporal resolution of 15 min [29]. Because FY-4A has fewer spectral bands and a lower temporal resolution than Himawari-8/9, its data were not used to classify cloud types in this paper; instead, they were used to evaluate the cloud classification results.

The cloud type (CLT) product is a level 2 product of FY-4A that classifies clouds based on differences in their microphysical structures and thermodynamic properties, as represented in various spectral bands. The FY-4A CLT product can be downloaded from the data website of the China National Satellite Meteorological Center (NSMC) (http://satellite.nsmc.org.cn/portalsite/default.aspx; accessed on 27 June 2023). It distinguishes seven types: clear, water, super-cooled, mixed, ice (opaque or thick cirrus), cirrus (specifically thin cirrus) and overlap, and it has a temporal resolution of 3 h. In this paper, the FY-4A CLT product was used as the reference for evaluating the proposed cloud classification method. Because the cloud categories produced by the method differ from those of this product, the correspondence between them is explained in Section 3.3.

2.2. Methods

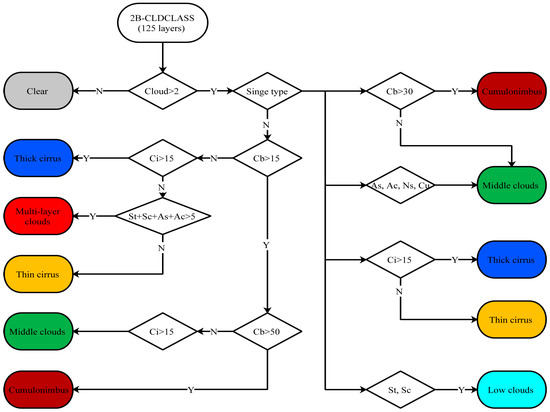

2.2.1. The Determination of CloudSat Cloud Types

To obtain training samples for cloud classification, the CloudSat 2B-CLDCLASS product had to be matched with the Himawari-8/9 satellite data. The CloudSat data consist of the locations of sub-satellite points and 125 layers of cloud type data above each of them. Himawari-8/9, however, observes only a two-dimensional image, so each sub-satellite point corresponds to a single set of spectral data. Therefore, the first step in matching was to compress the 125 layers into a single layer. This paper proposes a compression method that uses a decision tree to assign each sub-satellite point its most likely, representative cloud type (Figure 2).

Figure 2.

Flow chart of the decision tree for determining representative cloud type based on CloudSat 2B-CLDCLASS product.

(1) Samples with a total cloud thickness of less than 500 m (about 2 layers) were considered clear sky.

(2) Samples containing a single cloud type in all their vertical layers were represented by that type. Stratus and stratocumulus clouds were regarded as low clouds; altocumulus, altostratus, cumulus and nimbostratus clouds were regarded as middle clouds; and cirrus clouds were regarded as thick or thin cirrus when their thickness was greater or less than 3000 m (about 12.5 layers), respectively.

(3) For samples containing two or more cloud types in their vertical layers, the representative type was determined via the flow chart in Figure 2, which considers the multi-layer cloud type in addition to the single cloud types.
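The three rules can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: a profile is assumed to be a list of 125 per-bin labels (None for cloud-free bins), each bin is 240 m thick, Cb-only profiles are assumed to map to deep convection (rule (2) does not list Cb explicitly), and rule (3) is reduced to a single multi-layer label because the full flow chart of Figure 2 is not reproduced in the text. The function name `representative_type` is hypothetical.

```python
from typing import Optional, Sequence

BIN_THICKNESS_M = 240        # CloudSat CPR vertical bin size
CLEAR_MAX_M = 500            # rule (1): thinner than this -> clear sky
THICK_CIRRUS_MIN_M = 3000    # rule (2): cirrus thicker than this -> thick cirrus
LOW = {"St", "Sc"}
MIDDLE = {"Ac", "As", "Cu", "Ns"}


def representative_type(profile: Sequence[Optional[str]]) -> str:
    """Collapse one CloudSat profile (one label per bin) into a single class.

    Hypothetical helper; rule (3) is simplified (see lead-in).
    """
    cloudy = [label for label in profile if label is not None]
    thickness_m = len(cloudy) * BIN_THICKNESS_M

    # Rule (1): total cloud thickness below 500 m counts as clear sky.
    if thickness_m < CLEAR_MAX_M:
        return "clear"

    types = set(cloudy)
    if len(types) == 1:
        (only,) = types
        # Rule (2): a single cloud type throughout the column.
        if only in LOW:
            return "low"
        if only in MIDDLE:
            return "middle"
        if only == "Ci":
            return "thick_cirrus" if thickness_m > THICK_CIRRUS_MIN_M else "thin_cirrus"
        if only == "Cb":
            return "deep_convection"  # assumption: not stated explicitly in rule (2)
        raise ValueError(f"unexpected cloud type: {only}")

    # Rule (3), simplified: two or more types in the column.
    return "multi_layer"


# Example: 13 bins of cirrus (3120 m) above 112 cloud-free bins.
print(representative_type(["Ci"] * 13 + [None] * 112))  # thick_cirrus
```

Because 240 m bins never sum to exactly 3000 m, the thick/thin cirrus boundary falls between 12 bins (2880 m) and 13 bins (3120 m).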

2.2.2. Matching Satellite Data and Selecting Himawari-8/9 Spectral Bands

After the 125 layers of cloud types were compressed into a single type as described in Section 2.2.1, CloudSat 2B-CLDCLASS datasets were obtained in which each record pairs a longitude and latitude with one cloud type. The second step was temporal and spatial matching.

The research data cover June to September 2018 over 110–140° E and 10–40° N. Within this region, the CloudSat datasets have a spatial resolution of 0.002–0.003° in longitude and 0.01° in latitude, finer than the 0.05° resolution of the Himawari-8/9 data. Therefore, each CloudSat record was matched to the nearest Himawari-8/9 pixel. Because Himawari-8/9 full-disk data are updated every 10 min, the matching time error was kept within 5 min, and the spatial error was less than 0.05°.
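A minimal sketch of this nearest-neighbour matching, assuming that Himawari-8/9 full-disk scans start on the 10-min marks and that the 0.05° grid points lie at 110° E + i × 0.05° and 10° N + j × 0.05°. The grid origin and the helper names `nearest_scan` and `nearest_pixel` are hypothetical.

```python
from datetime import datetime, timedelta
from typing import Tuple

SCAN_INTERVAL_MIN = 10     # Himawari-8/9 full-disk update interval
GRID_STEP_DEG = 0.05       # Himawari-8/9 grid spacing used here
LON0, LAT0 = 110.0, 10.0   # hypothetical grid origin (south-west corner of study area)


def nearest_scan(t: datetime) -> datetime:
    """Return the full-disk scan time closest to t (error at most 5 min)."""
    hour_start = t.replace(minute=0, second=0, microsecond=0)
    minutes = (t - hour_start).total_seconds() / 60.0
    k = round(minutes / SCAN_INTERVAL_MIN)
    return hour_start + timedelta(minutes=k * SCAN_INTERVAL_MIN)


def nearest_pixel(lon: float, lat: float) -> Tuple[int, int]:
    """Return (row, col) of the grid point closest to a CloudSat footprint."""
    col = int(round((lon - LON0) / GRID_STEP_DEG))
    row = int(round((lat - LAT0) / GRID_STEP_DEG))
    return row, col


# Example: the CloudSat profile time shown in Figure 1.
print(nearest_scan(datetime(2018, 7, 4, 4, 16, 5)))  # 2018-07-04 04:20:00
print(nearest_pixel(110.12, 10.26))                  # (5, 2)
```

Snapping to the nearest grid point keeps the offset within half a grid step (0.025°) in each direction, which is within the 0.05° bound stated above.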

Clouds of different phases and types differ significantly across spectral bands. Middle and low clouds show a wide range of visible albedo and high or moderate infrared brightness temperatures. Cirrus clouds have lower brightness temperatures in both the water vapor and infrared bands. Deep convection clouds have high visible albedo and very low infrared brightness temperatures. The absorption of an ice cloud increases significantly from the water vapor band to the long-wave infrared bands of Himawari-8/9, whereas that of a water cloud hardly changes. Therefore, the D-value, i.e., the brightness temperature difference between two bands, can be used to distinguish ice clouds from water clouds and thin clouds from thick clouds. This paper used the reflectivity of one visible band (0.64 μm) and one near-infrared band (1.6 μm), the brightness temperatures of four infrared bands (3.9, 8.6, 11.2 and 12.3 μm) and one water vapor band (7.3 μm), and the D-values between these bands. After matching, the final training dataset contained, for each sample, its longitude, latitude and cloud type and the standardized feature values of the spectral bands listed in Table 2.
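The D-value and standardization steps can be sketched with NumPy. The text does not give the standardization formula; this sketch assumes min–max scaling to [0, 1] (in line with the normalized percentages reported in Section 3.1) using hypothetical fixed bounds, and it assumes that "band 14–10" means the band 14 brightness temperature minus the band 10 brightness temperature.

```python
import numpy as np


def d_value(tb_a, tb_b):
    """Brightness temperature difference (K) between two bands, e.g. band 14 - band 10."""
    return np.asarray(tb_a, dtype=float) - np.asarray(tb_b, dtype=float)


def min_max_normalize(x, lo, hi):
    """Scale x to [0, 1] with fixed bounds lo/hi, clipping values outside them."""
    x = np.asarray(x, dtype=float)
    return np.clip((x - lo) / (hi - lo), 0.0, 1.0)


# Hypothetical brightness temperatures (K) for a warm low cloud and a cold cloud top.
tb14 = np.array([290.0, 220.0])   # 11.2 um
tb10 = np.array([250.0, 215.0])   # 7.3 um
print(d_value(tb14, tb10))                     # 40 K and 5 K
print(min_max_normalize(tb14, 180.0, 320.0))   # roughly 0.79 and 0.29
```

In practice the bounds would be chosen per feature (for example from the training data range); fixed bounds keep the scaling identical between training and application.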

Table 2.

Spectral bands of Himawari-8/9 for cloud classification.

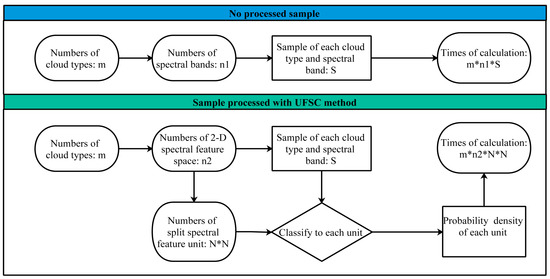

2.2.3. Unit Feature Space Classification

In a single spectral band, certain cloud types are easily confused because of similar radiative characteristics (e.g., both cirrus and low clouds can appear bright white in visible imagery). Two or more bands must therefore be combined to capture the characteristics of cloud particles of different sizes and phases and thereby distinguish different cloud types. However, as the number of bands (the training dimension) increases, the computational cost of the RF algorithm increases sharply.

To solve this problem, this paper applied a method called Unit Feature Space Classification (UFSC). UFSC is a pre-processing method that discretizes samples whose values are continuously distributed over a certain range. The bands in Table 2 were combined in pairs to build two-dimensional spectral feature spaces, each of which was divided into N × N feature units. The frequency (probability) density of each unit was calculated to obtain the spectral characteristics of each cloud type, and the feature value of each unit was input into the random forest model as a training value. Because each unit needs to be calculated only once, UFSC greatly improved the training speed of the random forest model (Figure 3). In addition, differences in spectral characteristics between cloud types can be clearly identified from the probability density distributions in the feature space, as discussed in detail in Sections 3.1.1 and 3.1.2.
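The discretization and density steps of UFSC can be sketched as below. This is an interpretation, not the authors' code. Features are assumed to be already normalized to [0, 1], `n_units` plays the role of N in the N × N grid, and the function names are hypothetical. The text does not specify how the per-unit values are then fed to the random forest, so the sketch stops at the per-class probability density of each unit.

```python
import numpy as np


def unit_indices(x, y, n_units):
    """Map normalized feature pairs in [0, 1] to integer unit indices on an n x n grid."""
    ix = np.clip((np.asarray(x, dtype=float) * n_units).astype(int), 0, n_units - 1)
    iy = np.clip((np.asarray(y, dtype=float) * n_units).astype(int), 0, n_units - 1)
    return ix, iy


def unit_density(x, y, n_units):
    """Probability density over the units: fraction of samples falling in each unit."""
    ix, iy = unit_indices(x, y, n_units)
    counts = np.zeros((n_units, n_units))
    np.add.at(counts, (ix, iy), 1.0)   # unbuffered add, so repeated units accumulate
    return counts / counts.sum()


def class_densities(x, y, labels, n_units):
    """One density grid per cloud type, analogous to the maps in Figures 5 and 6."""
    x, y, labels = np.asarray(x), np.asarray(y), np.asarray(labels)
    return {c: unit_density(x[labels == c], y[labels == c], n_units)
            for c in np.unique(labels)}


# Example: three samples in a 10 x 10 feature space.
dens = unit_density([0.05, 0.95, 1.0], [0.05, 0.95, 1.0], n_units=10)
print(dens[0, 0], dens[9, 9])  # about 0.333 and 0.667
```

A value of exactly 1.0 is clipped into the last unit so that the upper edge of the range is not lost.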

Figure 3.

Architectural diagram of the Unit Feature Space Classification (UFSC) method.

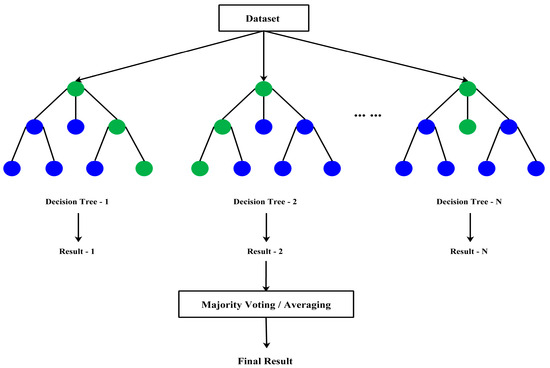

2.2.4. Random Forest Algorithm

The random forest (RF) algorithm evolved from the decision tree algorithm and is an ensemble learning method based on tree classifiers. An RF builds a set of decision trees, each trained on a bootstrap sample drawn from the original training data. Each tree votes for its most probable class, and the final output is the class that receives the most votes. A flow chart of the random forest algorithm is shown in Figure 4. The algorithm provides accurate estimates of variable importance, is robust to sparse and noisy data and is more stable than a support vector machine (SVM) algorithm.
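The two ingredients that distinguish a random forest from a single tree, bootstrap sampling and majority voting, can be sketched in plain Python. Tree induction itself is omitted, and the helper names are hypothetical.

```python
import random
from collections import Counter
from typing import List, Sequence


def bootstrap_indices(n_samples: int, rng: random.Random) -> List[int]:
    """Draw n_samples training indices with replacement (one bootstrap sample per tree)."""
    return [rng.randrange(n_samples) for _ in range(n_samples)]


def majority_vote(tree_predictions: Sequence[str]) -> str:
    """Return the class predicted by the most trees.

    Counter.most_common keeps first-seen order among equal counts, so ties go
    to the class that appears first.
    """
    return Counter(tree_predictions).most_common(1)[0][0]


rng = random.Random(0)
print(bootstrap_indices(5, rng))                         # five indices in 0..4, repeats allowed
print(majority_vote(["low", "middle", "low", "clear"]))  # low
```

Each tree sees a different bootstrap sample, so the trees make partly independent errors that the vote averages out.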

Figure 4.

Flow chart of the random forest algorithm. Green dots represent the most probable classification result voted for by the decision tree at each step, while blue dots represent the other results.

In this paper, the determination of cloud types is a classification problem. The RF algorithm resists overfitting and can process high-dimensional data efficiently. Based on the RF algorithm, we built two classifiers, one for daytime and one for nighttime cloud classification. The daytime classifier took as input the CloudSat cloud types and the spectral features of the seven bands in Table 2 (processed via the UFSC method), totaling 127,192 samples. The nighttime classifier used the same inputs except for the spectral features of band 03 and band 05, which rely on reflected sunlight and are therefore unavailable at night, totaling 72,934 samples.
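Routing a sample to the daytime or nighttime classifier and choosing its input bands can be sketched as follows. The band identifiers assume the standard AHI band numbering for the wavelengths given in Section 2.2.2 (band 03 = 0.64 μm, 05 = 1.6 μm, 07 = 3.9 μm, 10 = 7.3 μm, 11 = 8.6 μm, 14 = 11.2 μm, 15 = 12.3 μm). The D-value features of Table 2 are omitted for brevity, and the names are hypothetical.

```python
from typing import Dict, List, Tuple

# Assumed AHI band identifiers for the seven bands described in Section 2.2.2.
DAY_BANDS = ["B03", "B05", "B07", "B10", "B11", "B14", "B15"]
# Bands 03 and 05 measure reflected sunlight, so they are dropped at night.
NIGHT_BANDS = [b for b in DAY_BANDS if b not in ("B03", "B05")]


def is_daytime(utc_hour: int) -> bool:
    """Daytime is 00:00-09:59 UTC (08:00-17:59 local time) in this study."""
    return 0 <= utc_hour <= 9


def select_inputs(record: Dict[str, float], utc_hour: int) -> Tuple[str, List[float]]:
    """Return which classifier to use and the feature vector it expects."""
    if is_daytime(utc_hour):
        return "day", [record[b] for b in DAY_BANDS]
    return "night", [record[b] for b in NIGHT_BANDS]


sample = {b: 0.5 for b in DAY_BANDS}
print(select_inputs(sample, 4)[0], len(select_inputs(sample, 4)[1]))    # day 7
print(select_inputs(sample, 15)[0], len(select_inputs(sample, 15)[1]))  # night 5
```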

In this paper's RF model, the output comprises seven cloud types: clear sky, low clouds, middle clouds, thin cirrus clouds, thick cirrus clouds, multi-layer clouds and deep convection clouds. The model requires two parameters: the number of variables randomly selected at each node (M) and the number of decision trees (N). To balance the training speed and accuracy of the model, M was set to 2 and N to 500.
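With scikit-learn, the two parameters map directly onto `RandomForestClassifier`: M corresponds to `max_features` and N to `n_estimators`. The sketch below trains on random synthetic features purely to show the configuration; it is not the authors' code, and the data are placeholders.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier

CLOUD_TYPES = ["clear", "low", "middle", "thin_cirrus",
               "thick_cirrus", "multi_layer", "deep_convection"]


def build_classifier(seed: int = 0) -> RandomForestClassifier:
    # M = 2 candidate variables per split, N = 500 trees, as stated in the text.
    return RandomForestClassifier(n_estimators=500, max_features=2, random_state=seed)


# Synthetic placeholder data: 70 samples x 7 normalized features, 10 per class.
rng = np.random.default_rng(0)
X = rng.random((70, 7))
y = np.repeat(CLOUD_TYPES, 10)

clf = build_classifier().fit(X, y)
print(clf.predict(X[:3]))  # predicted cloud-type labels for the first three samples
```

A small M decorrelates the trees, and a large N stabilizes the vote; beyond a few hundred trees, accuracy usually changes little while training time grows linearly.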

3. Results

3.1. Spectral Features of Various Cloud Types

Solving the cloud classification problem in this paper requires analyzing how the spectral features of different cloud types differ across satellite bands. Using the UFSC method described in Section 2.2.3, several cloud types are analyzed in this section.

3.1.1. Daytime Spectral Features

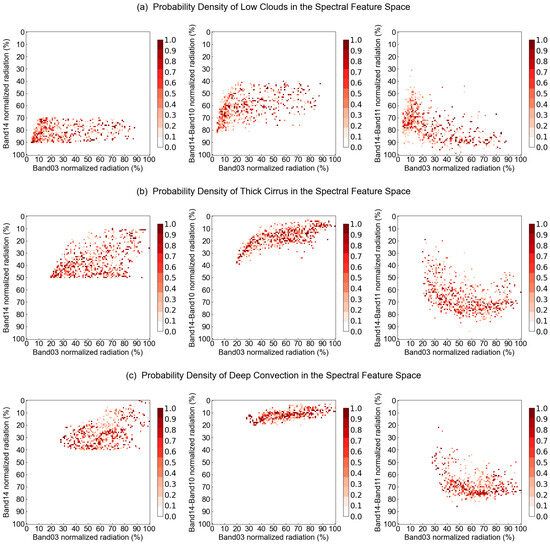

Considering the ability of different satellite bands to capture cloud particle characteristics, three daytime spectral feature spaces were built using band 03 and band 14, band 14–10 and band 14–11, where band 14–10 denotes the D-value (brightness temperature difference) between band 14 and band 10, and likewise for the other differences. The probability density distributions of selected cloud types are shown in Figure 5.

Figure 5.

Probability density distributions of low clouds (a), thick cirrus (b) and deep convection (c) in spectral feature space built by different band combinations.

- Low clouds have high brightness temperatures in band 14, with normalized values between 70% and 100% (Figure 5a). This clearly distinguishes them from thick cirrus and deep convection clouds, whose values are below 50% and 40%, respectively (Figure 5b,c). Such a marked difference between cloud types benefits sample training and classification.

- The reflectivity distribution of thick cirrus clouds overlaps with that of deep convection clouds, especially for normalized band 03 values between 60% and 80% (Figure 5b,c). This confusion arises because cirrus clouds are high clouds whose cloud-base heights are usually above 6 km; for a cirrus cloud thicker than 3 km, the cloud-top height may approach 10 km. Therefore, satellites easily misjudge such thick cirrus clouds as developing cumulonimbus clouds.

3.1.2. Nighttime Spectral Features

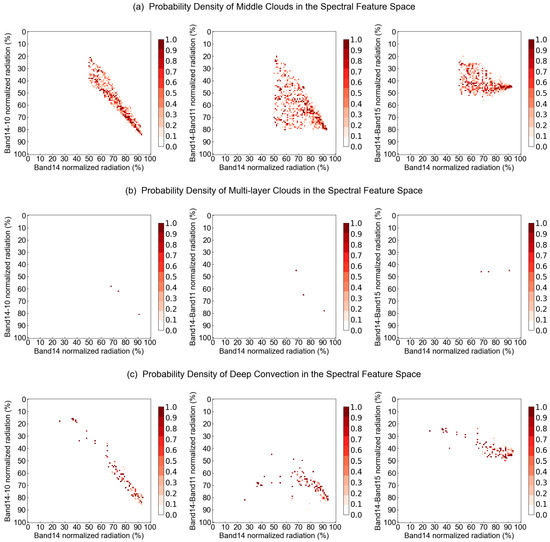

Similarly, three nighttime spectral feature spaces were built using band 14 and band 14–10, band 14–11 and band 14–15. The probability density distributions of selected cloud types are shown in Figure 6.

Figure 6.

Probability density distributions of middle clouds (a), multi-layer clouds (b) and deep convection (c) in spectral feature space built by different band combinations.

- For middle clouds, the probability density in the spectral space built from band 14 and band 14–10 is more concentrated than in the other two spaces (Figure 6a). For multi-layer and deep convection clouds, the distributions in the three feature spaces do not differ obviously (Figure 6b,c).

- Middle clouds span a wide range of normalized values, between 20% and 80%, in band 14–10 and band 14–11 (Figure 6a). Multi-layer clouds have values above 40% in all three D-value bands (Figure 6b). Deep convection clouds range between 10% and 90% in band 14–10, a wider range than in the other two spaces (Figure 6c). Compared with the daytime distributions, the differences between cloud types are less significant, indicating that visible band 03 makes an important contribution to cloud classification.

- In all three spaces, middle clouds and deep convection clouds have overlapping distributions (Figure 6a,c), an overlap that is most pronounced in band 14–10. This confusion arises because the differences in infrared D-values between these two cloud types are far smaller than their difference in visible reflectivity; this is also one reason why cloud classification is more difficult at night than during the day.

3.2. Results of the Cloud Classification

After processing via the UFSC method and training the RF model, the results must be evaluated. In this paper, the sample datasets were divided into a training set and a test set. The CloudSat 2B-CLDCLASS cloud types in the test set were used as truth values to evaluate the classifications that the RF model, trained on the training set, produced for the test set.

3.2.1. Daytime Results

Data from the 1st to the 25th of June, July, August and September 2018 were used as training sample sets, within which data from 00:00–09:59 UTC (local time 08:00–17:59) formed the daytime training set. Data from the 26th to the end of each month within the same time range formed the daytime test set. Table 3 gives the confusion matrix for the daytime cloud classifier: the types in the first row are the true values, i.e., the cloud types derived from the CloudSat profiles, and the types in the first column are the test values, i.e., the outputs of the daytime cloud classifier.
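The split by day of month and UTC hour can be expressed as a small helper. The name `assign_split` is hypothetical, and the sketch assumes each sample carries a UTC timestamp and that the day-of-month rule is applied to the UTC date.

```python
from datetime import datetime, timedelta
from typing import Tuple

LOCAL_OFFSET = timedelta(hours=8)  # local time used in the text is UTC+8


def assign_split(t_utc: datetime) -> Tuple[str, str]:
    """Return (period, subset) for a sample observed at t_utc.

    period: 'day' for 00:00-09:59 UTC, otherwise 'night'.
    subset: 'train' for the 1st-25th of each month, otherwise 'test'.
    """
    period = "day" if t_utc.hour < 10 else "night"
    subset = "train" if t_utc.day <= 25 else "test"
    return period, subset


t = datetime(2018, 7, 4, 4, 16, 5)
print(assign_split(t), t + LOCAL_OFFSET)  # ('day', 'train') 2018-07-04 12:16:05
```

Splitting by date rather than at random keeps neighbouring CloudSat footprints from the same overpass out of both sets at once, which would otherwise inflate test accuracy.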

Table 3.

Confusion matrix for cloud classification accuracies (%) of daytime classifier.

- During the daytime, the average accuracy of the classifier over all cloud types is 88.4%, and its accuracy for clear sky is 100%, indicating that the classifier can reliably determine the presence or absence of clouds and that clear-sky samples are not classified as cloudy. The accuracies for middle clouds and thin cirrus clouds exceed 95%, followed by cumulonimbus clouds at 87.5% and low clouds at 82.1%. Thick cirrus and multi-layer clouds have lower accuracies of 75% and 77.1%, respectively.

- Among the cloudy types, about 13.4% of low clouds are classified as middle clouds and another 3.6% as multi-layer clouds. Of the multi-layer clouds, 7.1% are classified as low clouds and another 15.8% as middle clouds. Some multi-layer clouds consist of low or middle clouds overlain by cirrus; if the upper cirrus layer is thin and highly transmissive, geostationary satellites receive more radiation from the middle and low clouds beneath it, resulting in classification confusion.

- Similar confusion exists between thick cirrus and cumulonimbus clouds: about 20% of thick cirrus clouds are classified as cumulonimbus clouds, while 12.5% of cumulonimbus clouds are classified as thick cirrus. Although the daytime classifier uses seven bands, thick cirrus clouds of certain thicknesses show spectral features similar to those of cumulonimbus clouds in both the visible and infrared bands, as noted in Section 3.1.1. Such ambiguous samples introduce noise into the training data fed to the random forest model, leading to erroneous classification results.
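The per-class accuracies in Table 3 are percentages of each true class, so they can be computed by normalizing each column of a count matrix. The text does not define "average accuracy"; the sketch assumes it is the unweighted mean of the per-class accuracies. The daytime values quoted above are compatible with this reading, but it remains an assumption, and the toy counts are hypothetical.

```python
import numpy as np


def per_class_accuracy(counts):
    """counts[i, j]: samples classified as class i whose CloudSat class is j.

    Rows are classifier outputs and columns are truth, matching the layout of
    Tables 3 and 4; each diagonal entry is divided by its column total.
    """
    counts = np.asarray(counts, dtype=float)
    return np.diag(counts) / counts.sum(axis=0)


def average_accuracy(counts):
    """Unweighted (macro) mean of the per-class accuracies (assumed definition)."""
    return float(per_class_accuracy(counts).mean())


# Hypothetical 3-class counts: columns are true clear, low and middle clouds.
counts = [[50, 0, 0],
          [0, 41, 3],
          [0, 9, 47]]
print(per_class_accuracy(counts))  # clear 1.0, low 0.82, middle 0.94
print(average_accuracy(counts))
```

A macro average weights every cloud type equally, so rare types such as thick cirrus influence the score as much as common ones.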

3.2.2. Nighttime Results

As in Section 3.2.1, data from the 1st to the 25th of June, July, August and September 2018 were used as training sample sets, within which data from 10:00–23:59 UTC (local time 18:00–07:59) formed the nighttime training set. Data from the 26th to the end of each month within the same time range formed the nighttime test set. Table 4 gives the confusion matrix for the nighttime cloud classifier; as in Table 3, the types in the first row are the true values, and those in the first column are the outputs of the nighttime cloud classifier.

Table 4.

Confusion matrix for cloud classification accuracies (%) of nighttime classifier.

- During the nighttime, the average accuracy of the classifier over all cloud types is 79.1%, which is 9.3 percentage points lower than that of the daytime classifier. Because visible bands are unavailable at night, the nighttime classifier relies instead on brightness temperature D-values between infrared bands, which cannot fully substitute for the visible band. Nevertheless, the accuracy for clear sky still reaches 100% at night, so the two classifiers can be combined to distinguish accurately between clear sky and cloudy conditions throughout the day.

- Among the cloudy types, about 14.7% of low clouds and 12.5% of middle clouds are classified as thick cirrus clouds, unlike the daytime confusion among low, middle and multi-layer clouds. This is also due to the unavailability of visible bands at night and to the fact that these three cloud types do not differ significantly in brightness temperature or in infrared D-values.

- As with the daytime classifier, the nighttime classifier also confuses thick cirrus and cumulonimbus clouds. About 18.3% of thick cirrus clouds are classified as cumulonimbus clouds, while 9.4% of cumulonimbus clouds are classified as thick cirrus. Compared with the daytime results (20% and 12.5%), the misclassification rates for both types are lower. This is because thick cirrus and cumulonimbus clouds differ significantly in their infrared D-values but hardly at all in the visible bands; removing band 03 therefore improves the accuracy for these two types.

3.3. Typhoon Case Study

In Section 3.2, test sets drawn from the samples were used to evaluate the daytime and nighttime classifiers. In addition, this paper selected Typhoon Muifa (2022) to test how well the two classifiers analyze typhoon clouds. The reference data for this comparison were the FY-4A CLT product. Note that the CLT product is retrieved based on the four visible light bands of FY-4A and represents only cloud-top types, whereas the results of this paper represent the types of entire cloud bodies. To facilitate the comparison, the two sets of results were mapped to common categories according to Table 5.

Table 5.

Rules for unifying the FY-4A CLT product and the cloud classification results.

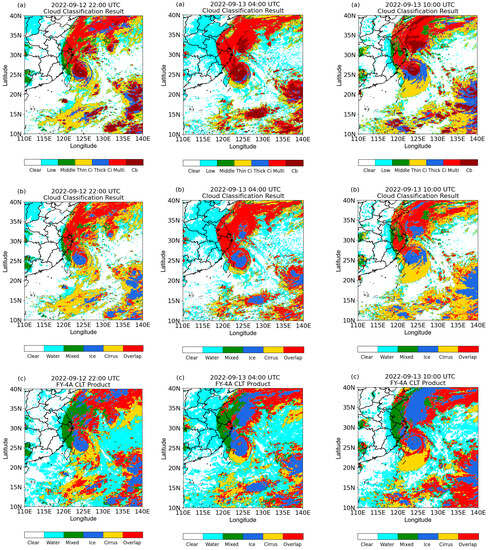

Typhoon Muifa formed on 8 September 2022, developed into a severe typhoon on 11 September and then moved north, reaching its peak intensity between 12 and 14 September. It continued northward while weakening and finally transitioned into an extratropical cyclone on 16 September. Typhoon Muifa lasted about nine days and made landfall in China four times, with intensities ranging from severe typhoon through typhoon to tropical storm. Figure 7 shows the cloud classification results of the daytime and nighttime classifiers without (a) and with (b) type unification, together with the FY-4A CLT product (c).

Figure 7.

Typhoon Muifa cloud classification results (a), result adjusted to CLT cloud types (b) and CLT product (c) at 22:00 on 12 September, and at 04:00 and 10:00 on 13 September.

- Using the daytime and nighttime cloud classifiers jointly, the cloud system types and features of Typhoon Muifa were analyzed (Figure 7a). The center of the typhoon was a clear-sky area, i.e., the eye. Surrounding it was the main body of the typhoon, which consisted mainly of cumulonimbus (deep convection) and multi-layer clouds. Several broad spiral cloud bands, composed of cirrus and multi-layer clouds, surrounded the main body. As the typhoon intensified, convection developed and the ascending motion inside the typhoon strengthened; consequently, the cumulonimbus area expanded and appeared as a mesoscale convective system (MCS) in the classification images. Because the FY-4A CLT product has no cumulonimbus category, the proposed method is better suited to analyzing the convective structure and evolution of a typhoon.

- The results adjusted to the CLT types (Figure 7b) were compared with the CLT product (Figure 7c) to evaluate the proposed cloud classifiers. The classification of ice-type (thick cirrus) and multi-layer clouds is effective, since the locations, shapes and sizes of these two cloud types are similar in both. For low, middle and thin cirrus clouds, the classifiers produced some misclassifications. These stem not only from errors in the sample sets or the random forest model but also from observation errors: Himawari-8/9 and FY-4A have different detectors and detection methods, which yield different radiance values in corresponding spectral bands and thus affect both the classifier results and the CLT product.

- Many pixels of the water type (low clouds) were classified as clear sky. One reason is the inter-satellite observation error described in the previous point; the other is that ocean and land were not classified separately in this paper. In visible bands, the ocean appears darker than the land; in addition, typhoons usually occur in summer, when the brightness temperature of the sea surface is lower than that of the land in infrared bands. Because of these two differences, the radiative features of low clouds over land and clear sky over the sea are very similar, which interferes with the training of the classifiers.
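The visual comparison between Figure 7b and Figure 7c can also be summarized pixel by pixel. The following Python sketch is illustrative only and is not the evaluation procedure used in this paper: it assumes both maps have already been resampled to a common grid and encoded with the same integer cloud-type codes, and it treats negative codes as missing pixels (both are assumptions). It counts co-located pixels for each pair of types and reports, for each CLT type, the fraction that the classifier labels identically.

```python
import numpy as np


def agreement_matrix(ours, ref, n_types):
    """Count co-located pixels: rows = reference (CLT) type, columns = our type."""
    ours = np.asarray(ours).ravel()
    ref = np.asarray(ref).ravel()
    # Assumption: negative codes mark missing or unclassified pixels.
    valid = (ours >= 0) & (ref >= 0)
    counts = np.zeros((n_types, n_types), dtype=np.int64)
    # Unbuffered add so repeated (row, col) pairs are all counted.
    np.add.at(counts, (ref[valid], ours[valid]), 1)
    return counts


def per_type_agreement(counts):
    """Fraction of each reference type labeled identically by our map (NaN if absent)."""
    totals = counts.sum(axis=1)
    with np.errstate(divide="ignore", invalid="ignore"):
        return np.diag(counts) / totals
```

Off-diagonal entries of the count matrix show which types are confused, for example water-type pixels that the classifier labels as clear sky.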

4. Discussion

In previous studies, sample labeling has been the main difficulty in supervised cloud classification. The labels of the training sets, i.e., the cloud types, were usually determined through a comprehensive analysis of the atmospheric circulation, weather systems and surface meteorological observations. In this paper, the cloud type information was extracted from CloudSat, taking advantage of satellite-based detection, and a method was proposed for compressing the 125 vertical layers of cloud types in the 2B-CLDCLASS product into a single layer. This method selects the most likely cloud type to represent each satellite footprint, thereby providing labels for the training samples. The results of this paper also indicate that this compression method improves the reliability of the samples.
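The compression step can be pictured with a short Python sketch. The exact rule for picking the "most likely" type is not restated in this section, so the sketch assumes it is the cloud type occupying the most cloudy bins of the 125-bin profile, with ties going to the type met first in input order. The integer codes (0 for a cloud-free bin) are hypothetical, and neither the identification of multi-layer profiles nor the mapping from 2B-CLDCLASS types to the seven classes of this paper is shown.

```python
from collections import Counter

CLEAR = 0  # hypothetical code for a cloud-free bin in a decoded 2B-CLDCLASS profile


def compress_profile(bin_types):
    """Collapse one vertical profile of per-bin cloud types into a single label.

    Assumption: the "most likely" type is the one filling the most cloudy bins.
    Counter.most_common keeps first-encountered order for equal counts, so ties
    go to the type that appears first in `bin_types`.
    """
    counts = Counter(t for t in bin_types if t != CLEAR)
    if not counts:
        return CLEAR  # no cloudy bin: label the footprint as clear sky
    return counts.most_common(1)[0][0]
```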

The RF algorithm has been widely used in cloud classification research and is considered effective [25,26]. However, the combined use of the UFSC method and an RF model has rarely been reported. The UFSC method is based on the principle of a cached lookup table and preprocesses the samples before training. It not only speeds up the RF model but also reveals the clusters of different cloud types through their probability density distributions in the spectral feature space, so that erroneous samples can be screened out, improving the accuracy of both the model and the classifiers.
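To illustrate the general screening idea described in this paragraph (this is not the UFSC method itself, whose details are given elsewhere in the paper), the Python sketch below builds, for each cloud type, a 2-D histogram over two spectral features. The histogram serves as a lookup table of that type's empirical probability density, and samples falling in sparsely populated bins of their own class are flagged as likely label errors. The bin count and the `min_frac` threshold are hypothetical.

```python
import numpy as np


def screen_samples(x, y, labels, bins=20, min_frac=0.01):
    """Return a boolean mask of samples lying in well-populated bins of their class.

    x, y: two spectral features per sample (e.g. a reflectance and a brightness
    temperature). Samples in bins holding less than `min_frac` of their class
    are dropped (hypothetical rule).
    """
    x, y, labels = map(np.asarray, (x, y, labels))
    # One shared grid so every class is looked up on the same bin edges.
    x_edges = np.linspace(x.min(), x.max(), bins + 1)
    y_edges = np.linspace(y.min(), y.max(), bins + 1)
    # Bin index of every sample; clip so the maximum value lands in the last bin.
    ix = np.clip(np.digitize(x, x_edges) - 1, 0, bins - 1)
    iy = np.clip(np.digitize(y, y_edges) - 1, 0, bins - 1)
    keep = np.zeros(labels.shape, dtype=bool)
    for c in np.unique(labels):
        in_c = labels == c
        table = np.zeros((bins, bins))
        np.add.at(table, (ix[in_c], iy[in_c]), 1)
        table /= in_c.sum()  # counts -> fraction of class-c samples per bin
        keep[in_c] = table[ix[in_c], iy[in_c]] >= min_frac
    return keep
```

Because the lookup table is computed once per class, each sample is screened with a single array lookup, which is the kind of saving a table-based preprocessing step offers before RF training.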

Cloud classification at night is more difficult than in the daytime. In this study, the split-window channels of Himawari-8/9 were used, and the brightness temperature differences (D-values) between infrared bands were taken as features. Among these, the D-values band 14–10, band 14–11 and band 14–15 contributed substantially to nighttime cloud classification, which is consistent with the results of Zhang [25]. The method enlarges the nighttime training sample, which reduces the nighttime error to a level close to that of the daytime results for several cloud types. Indeed, the classification accuracies of clear sky, middle clouds and thin cirrus clouds in the daytime and at night are numerically close and greater than 80% (Table 3 and Table 4). By combining the daytime and nighttime classifiers developed in this paper, cloud classification can be performed continuously throughout the day at a temporal resolution of up to 10 min. Such high-resolution cloud classification is important for the continuous monitoring of, and disaster prevention for, severe weather, especially short-term heavy precipitation.
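A minimal Python sketch of assembling the nighttime infrared features named in this paragraph. It assumes brightness temperatures are already available per band as arrays in kelvin, and it pairs band 14 itself with the three D-values; the full feature set used to train the nighttime classifier may contain more quantities.

```python
import numpy as np

# Band pairs for the D-values named in the text: B14-B10, B14-B11, B14-B15.
DIFF_PAIRS = [(14, 10), (14, 11), (14, 15)]


def night_features(bt):
    """Stack band 14 and the D-values into an (n_pixels, 4) feature matrix.

    bt: dict mapping Himawari band number -> array of brightness temperatures (K).
    """
    b14 = np.ravel(bt[14])
    d_values = [np.ravel(bt[i]) - np.ravel(bt[j]) for i, j in DIFF_PAIRS]
    return np.column_stack([b14] + d_values)
```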

A comparison between the cloud classification results and the FY-4A CLT product further supports the effectiveness of this method. In the case of Typhoon Muifa, most cloud types were classified accurately, except for low clouds over the ocean, which were difficult to distinguish owing to the limitations of satellite observation. Furthermore, unlike the CLT product, the classification in this study includes a cumulonimbus type, which is extremely important for monitoring the structure, evolution and strong convective zones of a typhoon.

Some issues remain. First, during morning and evening twilight, the results of the daytime and nighttime classifiers do not match exactly, which produces abrupt changes in the images of the cloud-type distribution. In the next step, we will attempt to use more spectral bands to train the model and to develop a classifier applicable to morning and evening twilight. We will also try to use FY-3E, a satellite in a dawn-dusk orbit, to resolve the inconsistent results at dawn and dusk and to improve all-day cloud classification. Second, in addition to random forest, state-of-the-art (SOTA) algorithms in cloud classification research include feedforward neural networks (FNNs) and convolutional neural networks (CNNs). Next, we will attempt to apply these deep learning algorithms and to add texture features to the spectral features of the training samples in order to improve cloud classification accuracy.
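The twilight mismatch arises wherever the output switches from one classifier to the other. The Python sketch below shows one simple way to combine the two classifiers and to measure how often they disagree in twilight. The solar zenith angle (SZA) thresholds are hypothetical, since the switching criterion is not stated in this section, and the SZA and the per-pixel labels of both classifiers are assumed to be precomputed on a common grid.

```python
import numpy as np

# Hypothetical solar zenith angle (SZA) thresholds in degrees; not taken from the paper.
SWITCH_SZA = 80.0             # below: daytime classifier; at or above: nighttime classifier
TWILIGHT_BAND = (70.0, 90.0)  # SZA range treated as twilight for diagnostics


def merge_day_night(sza, day_labels, night_labels):
    """Per-pixel hard switch between the two classifiers' outputs."""
    return np.where(np.asarray(sza) < SWITCH_SZA, day_labels, night_labels)


def twilight_disagreement(sza, day_labels, night_labels):
    """Fraction of twilight pixels where the two classifiers disagree (NaN if none)."""
    sza = np.asarray(sza)
    lo, hi = TWILIGHT_BAND
    band = (sza >= lo) & (sza < hi)
    if not band.any():
        return float("nan")
    day = np.asarray(day_labels)[band]
    night = np.asarray(night_labels)[band]
    return float(np.mean(day != night))
```

A high disagreement fraction in the twilight band corresponds to the abrupt changes in the cloud-type maps; a dedicated twilight classifier, as proposed, would replace the hard switch in `merge_day_night`.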

5. Conclusions

In this paper, Himawari-8/9 and CloudSat data from June to September 2018 were used to develop a cloud classification method based on a random forest algorithm, and the method was evaluated with test samples and a typhoon case. The main conclusions are as follows:

- Different cloud types have distinct probability density distributions in most geostationary satellite bands. During the daytime, the differences are most pronounced in the two-dimensional spectral space formed by visible band 03 and infrared band 14, whereas at night they are most pronounced in the spectral space formed by infrared band 14 and the D-value between bands 14 and 11.

- The daytime classifier has an accuracy of 88.4% for all cloud types; the accuracies for clear sky, middle clouds and thin cirrus clouds are above 95%, and those of the other types range from 75% to 87.5%. Of the low clouds, 17% are identified as multi-layer clouds, and 22.9% of the multi-layer clouds are identified as low or middle clouds. Thick cirrus and cumulonimbus clouds are mistaken for each other at rates of 20% and 12.5%, respectively. The nighttime classifier has an accuracy of 79.1% for all cloud types, 9.3 percentage points lower than the daytime accuracy. The accuracies for clear sky, thin cirrus clouds and multi-layer clouds are above 95%, while those of the other types range from 47.9% to 84.4%. Of the low and middle clouds, 14.7% and 12.5%, respectively, are identified as thin cirrus clouds. Thick cirrus and cumulonimbus clouds are mistaken for each other at rates of 18.3% and 9.4%, respectively. Compared with the daytime classifier, false positives are reduced.

- In the case of Typhoon Muifa, the center of the typhoon was clear sky, its main body consisted of cumulonimbus and multi-layer clouds, and the spiral cloud bands surrounding the main body consisted of cirrus and multi-layer clouds. The cumulonimbus area identified by the classifiers corresponded well with a mesoscale convective system (MCS). Compared with the FY-4A CLT product, the classification of ice-type (thick cirrus) and multi-layer clouds is effective, since the locations, shapes and sizes of these two cloud types agree closely. For low, middle and thin cirrus clouds, the classifiers produced some misclassifications. In addition, ocean and land were not classified separately, which led to confusion between low clouds over the ocean and clear sky.

- The cloud classification method developed in this study from multi-satellite data can distinguish cloud types both day and night and characterize continuous changes in cloud systems during weather processes; it shows good application value and merits further study. Part of the error in this study comes from observation errors caused by the different detection methods of the satellites, which affect data quality; the remainder comes from the spectral similarity of certain cloud types, which interferes with the random forest model. In future work, we will attempt to reduce errors from both sources and further improve the random forest model.

Author Contributions

Conceptualization, data collection and analysis, Y.W., C.H. and Z.W.; review, editing, supervision and funding acquisition, Z.D. and X.T. All authors have read and agreed to the published version of the manuscript.

Funding

This study was jointly supported by the National Major Projects on High-Resolution Earth Observation System (Grant No. 21-Y20B01-9001-19/22), the Chongqing Special Key Projects for Technological Innovation and Application Development (Grant No. CSTB2022TIAD-KPX0123) and the China Meteorological Administration Review and Summary Special Project (Grant No. FPZJ2023-107).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

All data can be accessed through the provided website.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Liu, Y.; Xia, J.; Shi, C.-X.; Hong, Y. An Improved Cloud Classification Algorithm for China’s FY-2C Multi-Channel Images Using Artificial Neural Network. Sensors 2009, 9, 5558–5579.

- Murakami, M.; Clark, T.L.; Hall, W.D. Numerical Simulations of Convective Snow Clouds over the Sea of Japan. J. Meteorol. Soc. Jpn. Ser. II 1994, 72, 43–62.

- Stratmann, F.; Kiselev, A.; Wurzler, S.; Wendisch, M.; Heintzenberg, J.; Charlson, R.J.; Diehl, K.; Wex, H.; Schmidt, S. Laboratory Studies and Numerical Simulations of Cloud Droplet Formation under Realistic Supersaturation Conditions. J. Atmos. Ocean. Technol. 2004, 21, 876–887.

- Stephens, G.L. Cloud Feedbacks in the Climate System: A Critical Review. J. Clim. 2005, 18, 237–273.

- Hong, Y.; Hsu, K.-L.; Sorooshian, S.; Gao, X. Precipitation Estimation from Remotely Sensed Imagery Using an Artificial Neural Network Cloud Classification System. J. Appl. Meteorol. 2004, 43, 1834–1853.

- Udelhofen, P.M.; Hartmann, D.L. Influence of tropical cloud systems on the relative humidity in the upper troposphere. J. Geophys. Res. Atmos. 1995, 100, 7423–7440.

- Inoue, T.; Kamahori, H. Statistical Relationship between ISCCP Cloud Type and Vertical Relative Humidity Profile. J. Meteorol. Soc. Jpn. Ser. II 2001, 79, 1243–1256.

- Schmetz, J.; Holmlund, K.; Hoffman, J.; Strauss, B.; Mason, B.; Gaertner, V.; Koch, A.; Van De Berg, L. Operational Cloud-Motion Winds from Meteosat Infrared Images. J. Appl. Meteorol. 1993, 32, 1206–1225.

- Sieglaff, J.M.; Cronce, L.M.; Feltz, W.F.; Bedka, K.M.; Pavolonis, M.J.; Heidinger, A.K. Nowcasting Convective Storm Initiation Using Satellite-Based Box-Averaged Cloud-Top Cooling and Cloud-Type Trends. J. Appl. Meteorol. Climatol. 2011, 50, 110–126.

- Snodgrass, E.R.; Di Girolamo, L.; Rauber, R.M. Precipitation Characteristics of Trade Wind Clouds during RICO Derived from Radar, Satellite, and Aircraft Measurements. J. Appl. Meteorol. Climatol. 2009, 48, 464–483.

- Purbantoro, B.; Aminuddin, J.; Manago, N.; Toyoshima, K.; Lagrosas, N.; Sumantyo, J.T.S.; Kuze, H. Comparison of Cloud Type Classification with Split Window Algorithm Based on Different Infrared Band Combinations of Himawari-8 Satellite. Adv. Remote Sens. 2018, 7, 218–234.

- Liang, P.; Chen, B.D.; Tang, X. Identification of cloud types over Tibetan Plateau by satellite remote sensing. Plateau Meteorol. 2010, 29, 268–277. (In Chinese)

- CloudSat Project. CloudSat Standard Data Products Handbook; CIRA, Colorado State University: Fort Collins, CO, USA, 2008; 5p. Available online: http://www.cloudsat.cira.colostate.edu/cloudsat_documentation/CloudSat_Data_Users_Handbook.pdf (accessed on 27 June 2023).

- Tanelli, S.; Durden, S.L.; Im, E.; Pak, K.S.; Reinke, D.G.; Partain, P.; Haynes, J.M.; Marchand, R.T. CloudSat’s Cloud Profiling Radar After Two Years in Orbit: Performance, Calibration, and Processing. IEEE Trans. Geosci. Remote Sens. 2008, 46, 3560–3573.

- Zhang, C.; Zhuge, X.; Yu, F. Development of a high spatiotemporal resolution cloud-type classification approach using Himawari-8 and CloudSat. Int. J. Remote Sens. 2019, 40, 6464–6481.

- Liu, C.; Chiu, C.; Lin, P.; Min, M. Comparison of Cloud-Top Property Retrievals from Advanced Himawari Imager, MODIS, CloudSat/CPR, CALIPSO/CALIOP, and Radiosonde. J. Geophys. Res. Atmos. 2020, 125, e2020JD032683.

- Jiang, Y.; Cheng, W.; Gao, F.; Zhang, S.; Wang, S.; Liu, C.; Liu, J. A Cloud Classification Method Based on a Convolutional Neural Network for FY-4A Satellites. Remote Sens. 2022, 14, 2314.

- Li, W.; Zhang, F.; Lin, H.; Chen, X.; Li, J.; Han, W. Cloud Detection and Classification Algorithms for Himawari-8 Imager Measurements Based on Deep Learning. IEEE Trans. Geosci. Remote Sens. 2022, 60, 3153129.

- Ghasemian, N.; Akhoondzadeh, M. Introducing two Random Forest based methods for cloud detection in remote sensing images. Adv. Space Res. 2018, 62, 288–303.

- Thampi, B.V.; Wong, T.; Lukashin, C.; Loeb, N.G. Determination of CERES TOA Fluxes Using Machine Learning Algorithms. Part I: Classification and Retrieval of CERES Cloudy and Clear Scenes. J. Atmos. Ocean. Technol. 2017, 34, 2329–2345.

- Tan, Z.; Huo, J.; Ma, S.; Han, D.; Wang, X.; Hu, S.; Yan, W. Estimating cloud base height from Himawari-8 based on a random forest algorithm. Int. J. Remote Sens. 2021, 42, 2485–2501.

- Tan, Z.; Ma, S.; Han, D.; Gao, D.; Yan, W. Estimation of cloud base height for FY-4A satellite based on random forest algorithm. J. Infrared Millim. Waves 2019, 38, 381–388. (In Chinese)

- Wang, S.-H.; Han, Z.-G.; Yao, Z.-G. Comparison of cloud amounts from ISCCP and CloudSat over China and its neighborhood. Chin. J. Atmos. Sci. 2010, 34, 767–779. (In Chinese)

- Wrenn, F. Documenting Hydrometeor Layer Occurrence within International Satellite Cloud Climatology Project-Defined Cloud Classifications Using CLOUDSAT and CALIPSO. Ph.D. Thesis, The University of Utah, Salt Lake City, UT, USA, 2012.

- Zhang, C.-W. The Cloud-Type Classification Research and Its Application for the New Generation Geostationary Satellite Himawari-8. Ph.D. Thesis, Nanjing University, Nanjing, China, 2019. (In Chinese)

- Yu, Z.; Ma, S.; Han, D.; Li, G.; Gao, D.; Yan, W. A cloud classification method based on random forest for FY-4A. Int. J. Remote Sens. 2021, 42, 3353–3379.

- Li, R.; Wang, G.; Zhou, R.; Zhang, J.; Liu, L. Seasonal Variation in Microphysical Characteristics of Precipitation at the Entrance of Water Vapor Channel in Yarlung Zangbo Grand Canyon. Remote Sens. 2022, 14, 3149.

- General Meteorological Standards and Recommended Practices. Technical Regulations, Volume I (WMO-No. 49), 2019 Edition. Available online: https://cloudatlas.wmo.int/en/clouds-definitions.html (accessed on 20 June 2023).

- FY-4A Satellite. Available online: http://www.nsmc.org.cn/nsmc/en/satellite/FY4A.html (accessed on 27 June 2023).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).