1. Introduction

Particulate matter (PM) refers to materials scattered throughout the atmosphere. PM with a diameter of 2.5 micrometers or less is defined as PM2.5 [1]. PM2.5 is readily absorbed during breathing owing to its small size and light weight. PM2.5 absorbed into the body can cause bronchitis, pneumonia, chronic obstructive pulmonary disease, heart disease, stroke, and respiratory diseases [2,3,4]. Therefore, many industrialized countries have made significant efforts to reduce the risk of PM2.5 exposure. The U.S. Environmental Protection Agency defines daily average PM2.5 concentrations of 35 µg/m³ or more as high concentrations and regulates daily average concentrations so that they do not exceed 12 µg/m³. The European Environment Agency regulates PM2.5 emissions when the daily average PM2.5 concentration exceeds 25 µg/m³. The South Korean Government defines high concentrations of PM2.5 as 36 µg/m³ or more. When the PM2.5 concentration is 75 µg/m³ or higher and lasts for 2 h, the government issues a PM2.5 alert and implements air pollution reduction measures. PM2.5 management requires continuous monitoring and accurate forecasting of PM2.5 concentrations. In particular, high-concentration PM2.5 is difficult to predict accurately because it arises from natural sources, such as yellow dust and wildfire ash, as well as non-natural sources, such as emissions from fossil fuel-powered power plants and factories and automobile exhaust. Much research is underway to address these issues.

PM2.5 forecast models are categorized by methodology into numerical modeling and data-driven forecasting [5,6,7]. Numerical modeling converts meteorological conditions, air pollution emissions, and traffic volumes into a numerical model for forecasting. Numerical modeling methods include chemical transport models [8], weather research and forecasting models [9], weather research and forecasting models coupled with chemistry [10], and weather research and forecasting community multi-scale air quality models [11]. The numerical approach is powerful for modeling air quality with detailed spatial and temporal resolution and complex chemical and physical processes. However, these methods require large amounts of meteorological information, air pollution emissions, and traffic data. Moreover, they have low forecasting performance owing to the enormous computational complexity of the process and the uncertainty of the meteorological factors caused by complex pollutant diffusion mechanisms [12]. Data-driven modeling analyses patterns and trends in time-series data to make forecasts. Traditional time-series forecasting methods include the autoregressive moving average (ARMA) model [13], autoregressive integrated moving average (ARIMA) model [14], and multiple linear regression (MLR) [15]. These methods are relatively simple and more intuitive than the numerical models. However, their performance is limited in PM2.5 forecasting because of the non-linearity between meteorological factors and air pollutants [16,17]. To overcome these shortcomings, models have been implemented using machine learning techniques, such as the multi-layer perceptron [18], recurrent neural network [19], decision trees [20], random forests [21], and support vector machines [22]. Following recent developments in hardware, convolutional neural networks [23,24], long short-term memory (LSTM) [7,24], gated recurrent unit (GRU) [24,25], and bidirectional long short-term memory (Bi-LSTM) [26] have been widely used to forecast PM2.5. In contrast to the models mentioned above, LSTM, GRU, and Bi-LSTM can leverage the learning results from the hidden layers and incorporate them into the current forecast. This characteristic has led to their widespread adoption in time-series forecasting.

Forecasting the PM2.5 concentration involves the selection of an appropriate model and careful consideration of which input variables to include in the analysis [27]. Input variable selection methods are categorized into filter methods, wrapper methods, and embedded methods [28]. Filter methods select variables according to statistical criteria that characterize the relationship between the input variables and the target variable. Filter methods such as the correlation coefficient [29] and chi-square test [30] are employed for input variable selection. They are typically applied to large datasets because they involve simple calculations and obtain results quickly. However, the resulting forecasting performance can be limited because such criteria consider only linear relationships with the target variable. Wrapper methods generate subsets of input variables, train a prediction model on each subset, and evaluate the model performance to select the best subset. Wrapper methods include recursive feature elimination [31] and stepwise regression [32]. Because these methods evaluate the forecasting model directly, the selected input variables are likely to increase its accuracy. However, they are computationally more expensive than the other methods. Consequently, they are difficult to use when there are many variables, because the number of candidate subsets is large and the model performance can vary significantly between subsets. Embedded methods calculate variable importance during model training and select only the helpful variables. Unlike wrapper methods, embedded methods have a relatively low computational cost. Moreover, they can be applied to both linear and non-linear models because no particular relationship in the data is assumed.
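The limitation of correlation-based filter methods noted above can be illustrated with a minimal sketch. The feature names, toy values, and the 0.3 threshold are hypothetical and are not taken from the study; the point is only that a variable with a strong non-linear dependence on the target can have a Pearson correlation of zero and is therefore discarded.

```python
from math import sqrt

def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant series carries no linear information
    return cov / sqrt(var_x * var_y)

def filter_select(features, target, threshold=0.3):
    """Keep the features whose |r| with the target meets the threshold."""
    return [name for name, values in features.items()
            if abs(pearson(values, target)) >= threshold]

# Hypothetical toy data, not taken from the study.
target = [10.0, 20.0, 30.0, 40.0, 50.0]
features = {
    "linear": [1.0, 2.0, 3.0, 4.0, 5.0],     # linear in the target
    "quadratic": [4.0, 1.0, 0.0, 1.0, 4.0],  # symmetric, non-linear dependence
}
selected = filter_select(features, target)
print(selected)  # only the linearly related feature survives the filter
```

An importance-based (embedded) selector would not be bound to this linear criterion, which is the motivation given above for preferring it when non-linearity matters.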

The effectiveness of PM2.5 forecasting models relies heavily on the distribution of the training data. When a model is trained with imbalanced data, it becomes biased towards the majority class. Training on data from minority classes is insufficient, resulting in incorrect predictions for those classes. Furthermore, since the model is biased toward the majority class, its generalization ability may suffer. These data imbalance issues can limit the model’s performance and reduce its reliability. Various methods have been proposed to solve this problem; they fall into sampling-based methods and cost-sensitive learning methods [33]. Sampling-based methods adjust the proportion of the data via data sampling and are divided into undersampling and oversampling. Undersampling uses only a portion of the majority class data to balance the ratio between the majority and minority classes; examples include Tomek links and cluster centroids. These methods are easier to scale than oversampling; however, they result in data loss because they reduce the existing data. Oversampling addresses data imbalance by augmenting the minority class data and includes synthetic minority oversampling, adaptive synthetic sampling, borderline synthetic minority oversampling techniques, and Kriging [34]. Unlike undersampling, oversampling does not result in data loss. However, because it replicates the data from minority classes, the model may overfit the training data and perform worse on the test data. Moreover, the generated data for minority classes may not resemble the existing data. When this occurs, the newly generated data act as noise, which may negatively affect the overall forecasting performance of the model. Cost-sensitive learning allocates greater weight to minority classes to improve their classification performance in imbalanced data. The advantage of this method is that it preserves existing data and does not result in information loss. Additionally, it avoids the loss of generalization ability caused by duplicated data.

The contributions of this study are summarized as follows:

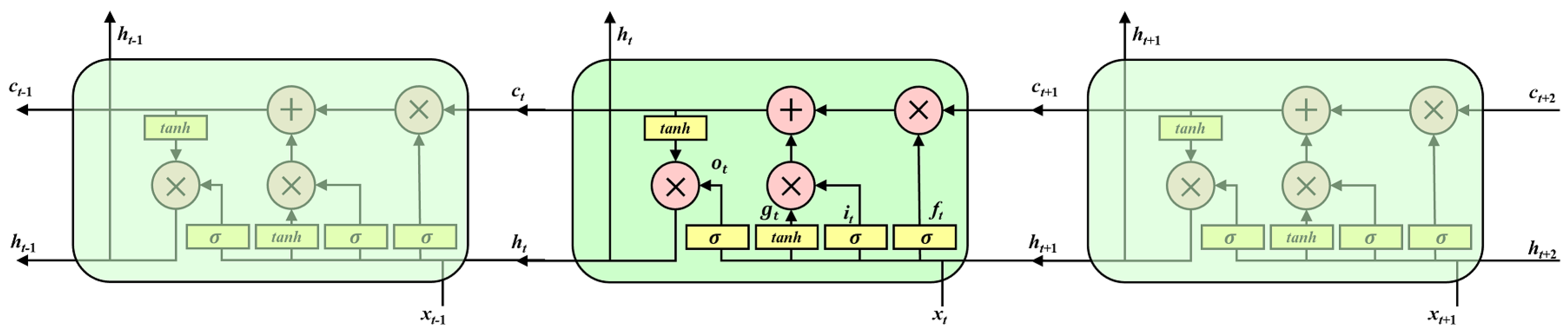

Traditional time-series forecasting methods, such as ARMA, ARIMA, and MLR, often have limited capabilities in accounting for non-linear relationships. Meanwhile, machine learning models, such as SVM and decision trees, cannot incorporate past time points during the forecasting process. In contrast, Bi-LSTM, a recurrent model that uses past output values as inputs to the hidden layer, can effectively address both limitations. Among recurrent methods, LSTM and GRU are unidirectional models that consider past output values in the hidden layer, and their prediction performance can deteriorate as the forecast horizon becomes longer. Bi-LSTM, which is trained bidirectionally, can reflect more information than unidirectional models.

Selection of input variables is necessary to forecast PM accurately. In general, the wrapper method, in which input variables are selected depending on experience, is time-consuming and computationally expensive. RF can select variables that are effective for prediction by calculating the importance of each variable. In particular, RF can reduce the time cost compared to wrapper methods that rely on heuristics. In addition, unlike the filter method, it is effective when implementing a PM concentration forecast model that accounts for non-linearity.

To address the data imbalance problem, the proposed method uses the weighting method, a cost-sensitive learning method applied during model training. Unlike the sampling method, which adjusts the proportion of data, the weighting method does not result in information loss. Accordingly, the approach counteracts the bias towards the majority class during model training, which is a common problem with imbalanced datasets.

The remainder of this paper is organized as follows. In Section 2, we provide detailed descriptions of the study site, the data used in this study, and the proposed method for forecasting PM2.5 concentration. The experimental results are presented in Section 3, where the performance of the proposed method is evaluated using various performance indices. Finally, Section 4 discusses the results and their implications, as well as conclusions drawn from this study.

3. Experiments and Results

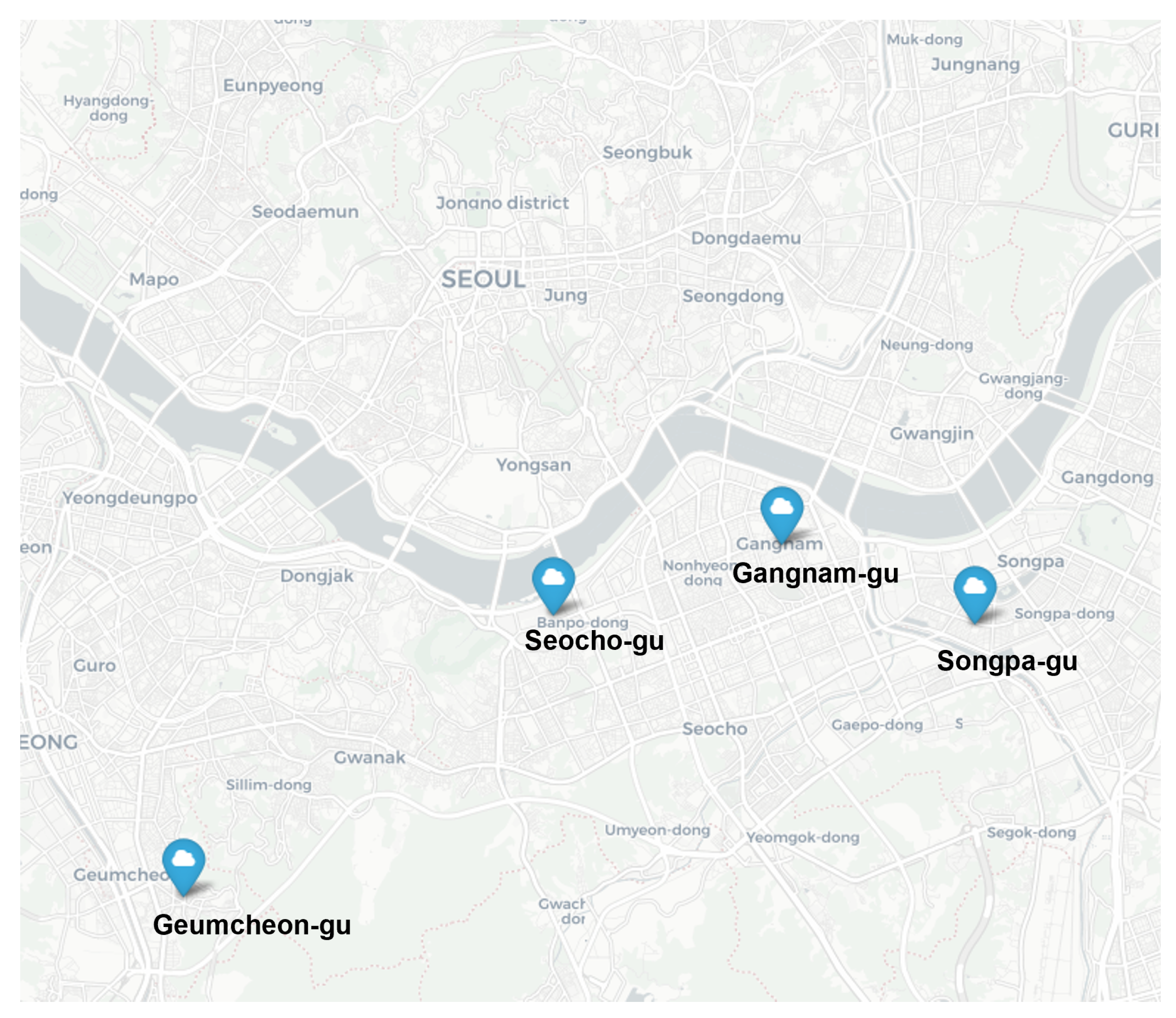

Air pollution data and meteorological data were used to forecast the PM concentration. Four years of data (2015–2018) were used: three years (2015–2017) for training and 2018 for testing. To evaluate the proposed model, we performed experiments for two cases. In case study 1, we selected input variables to reduce the complexity of the model and increase its comprehensibility, added weighting variables to address the data imbalance problem, and compared the forecasts with those of a model built without these steps. Case study 2 compares, by station, the performance of three deep learning models (LSTM, Bi-LSTM, and GRU) and conventional machine learning models (MLP, SVM, decision tree, and random forest) within the proposed method. In both case studies, to take past time points into account, the data from 23 h before the current time point (t − 23) up to the current time point (t) were used as the input to forecast the value one hour later (t + 1).
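The 24-hour input window and one-hour-ahead target described above can be sketched as follows. This is a minimal illustration with a hypothetical univariate series; the function name `make_windows` and its parameters are assumptions introduced here, and in a multivariate setting each element of the series would hold all selected input variables for that hour.

```python
def make_windows(series, lookback=24, horizon=1):
    """Split an hourly series into (input window, target) pairs.

    Each input holds `lookback` consecutive values, from t - (lookback - 1)
    to t, and the target is the value at t + horizon.
    """
    inputs, targets = [], []
    last_start = len(series) - lookback - horizon
    for start in range(last_start + 1):
        t = start + lookback - 1  # index of the current time point
        inputs.append(series[start:t + 1])
        targets.append(series[t + horizon])
    return inputs, targets

hourly = list(range(30))  # hypothetical stand-in for 30 hourly readings
X, y = make_windows(hourly)
print(len(X), len(X[0]), y[0])  # number of windows, window length, first target
```

With 30 readings, a lookback of 24, and a horizon of 1, only six complete windows exist, which shows how the first 24 hours of any period are consumed as context rather than forecast.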

3.1. Performance Index

To numerically compare the experimental results of the case studies, we used four performance indices for regression: root mean square error (RMSE), mean absolute error (MAE), relative root mean square error (RRMSE), and R². The RMSE is obtained by averaging the squared errors between the forecast and actual values and taking the square root of the result. The MAE is calculated as the mean of the absolute errors. The RRMSE is the RMSE between the forecasted and actual values divided by the average of the actual values. The lower the values of RMSE, MAE, and RRMSE, the better the forecasting performance. R² is an index that evaluates the extent to which the forecast describes the true value. R² is at most 1 and typically lies between 0 and 1; the closer it is to 1, the better the model describes the data. Equations (11)–(14) are used to determine RMSE, MAE, RRMSE, and R². In each of these formulas, ŷ_i refers to the i-th forecasted value, y_i refers to the i-th observed value, and ȳ denotes the mean of the observed values.

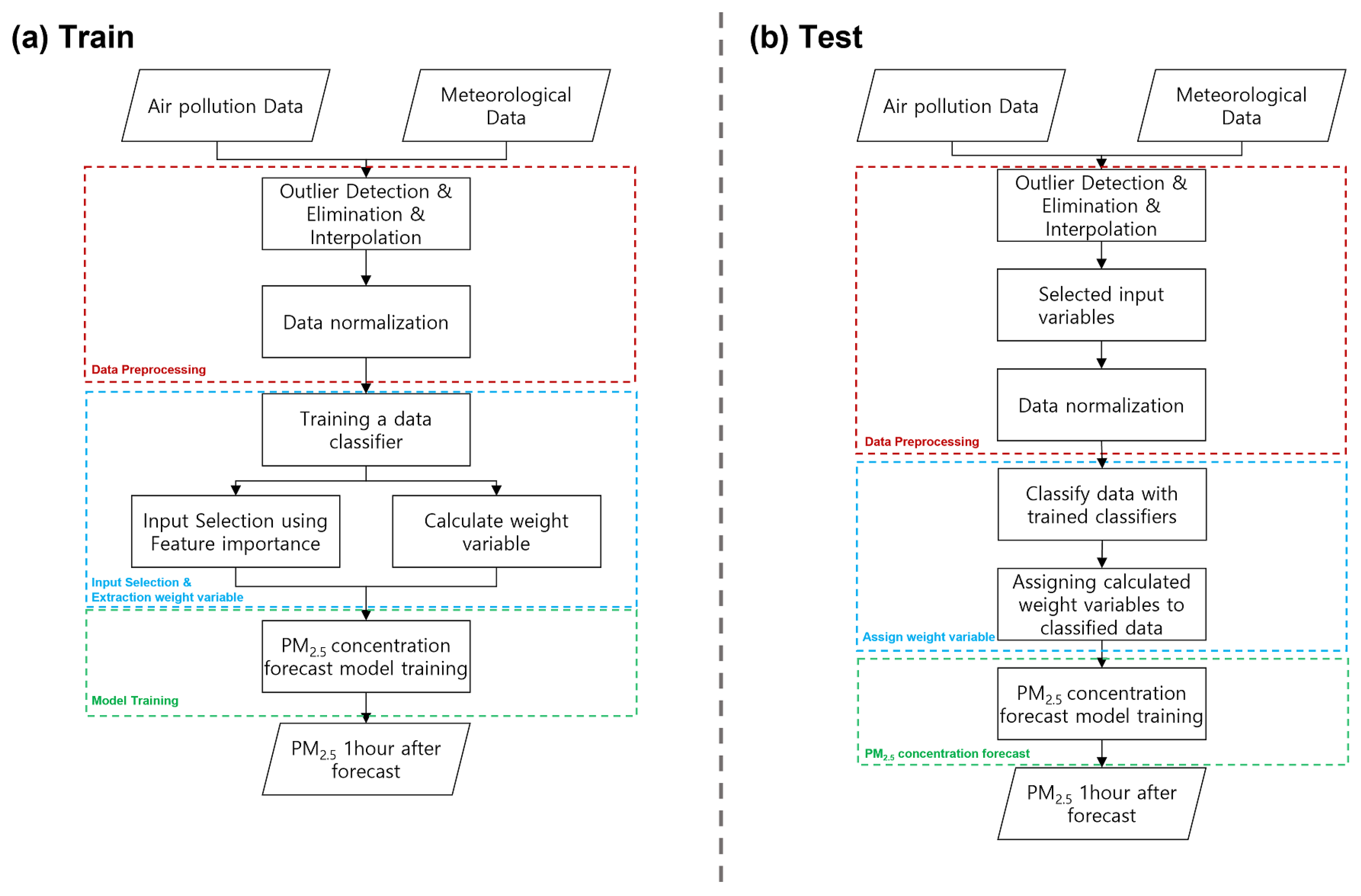
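As a hedged illustration of the four indices, the sketch below follows the verbal definitions given above. The R² expression used here (one minus the ratio of the residual sum of squares to the total sum of squares around the observed mean) is the common definition and is an assumption, since the paper's Equation (14) is not reproduced in this text; the sample values are hypothetical.

```python
from math import sqrt

def regression_metrics(observed, forecast):
    """Compute RMSE, MAE, RRMSE, and R2 for paired observed/forecast values."""
    n = len(observed)
    mean_obs = sum(observed) / n
    errors = [f - o for o, f in zip(observed, forecast)]
    ss_res = sum(e * e for e in errors)              # residual sum of squares
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    rmse = sqrt(ss_res / n)
    mae = sum(abs(e) for e in errors) / n
    rrmse = rmse / mean_obs                          # RMSE relative to the observed mean
    r2 = 1.0 - ss_res / ss_tot                       # assumed (common) definition
    return {"RMSE": rmse, "MAE": mae, "RRMSE": rrmse, "R2": r2}

# Hypothetical values, not taken from the study.
observed = [10.0, 20.0, 30.0, 40.0]
forecast = [12.0, 18.0, 33.0, 39.0]
metrics = regression_metrics(observed, forecast)
print(metrics)
```

Because RRMSE divides by the observed mean, it allows stations or concentration ranges with different typical levels to be compared on a common scale, which RMSE alone does not.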

3.2. Case Study 1: Comparing the Conventional Method with the Proposed Method

Case study 1 compares the proposed method with the conventional method. The conventional method forecasts using all the variables in the data. The proposed method uses a random forest to select the input variables, adds a weight variable to address the data imbalance, and uses Bi-LSTM to forecast the PM2.5 concentration. The feature importance values calculated using the random forest to select the input variables are shown in Table 11. In Table 11, PM2.5 has the highest value for all monitoring stations. PM10 is the second highest at all stations except Geumcheon-gu. Among the meteorological variables, the temperature had the highest value at all stations except Songpa-gu. The variables with non-zero importance in Table 11, together with the weight variable, were used as input variables for the forecasting model.

In addition, to assign weighting variables, the data must be classified by the PM2.5 class. Therefore, this paper performs the classification using a random forest. The input variables are the same as those selected earlier for the forecasting model. Since each monitoring station has a different data range and distribution, the stations were trained separately, and the input variables at time t were used to classify the PM2.5 class at time t. To compare the classification accuracy according to the selection of input variables, we conducted experiments before and after the selection of input variables.

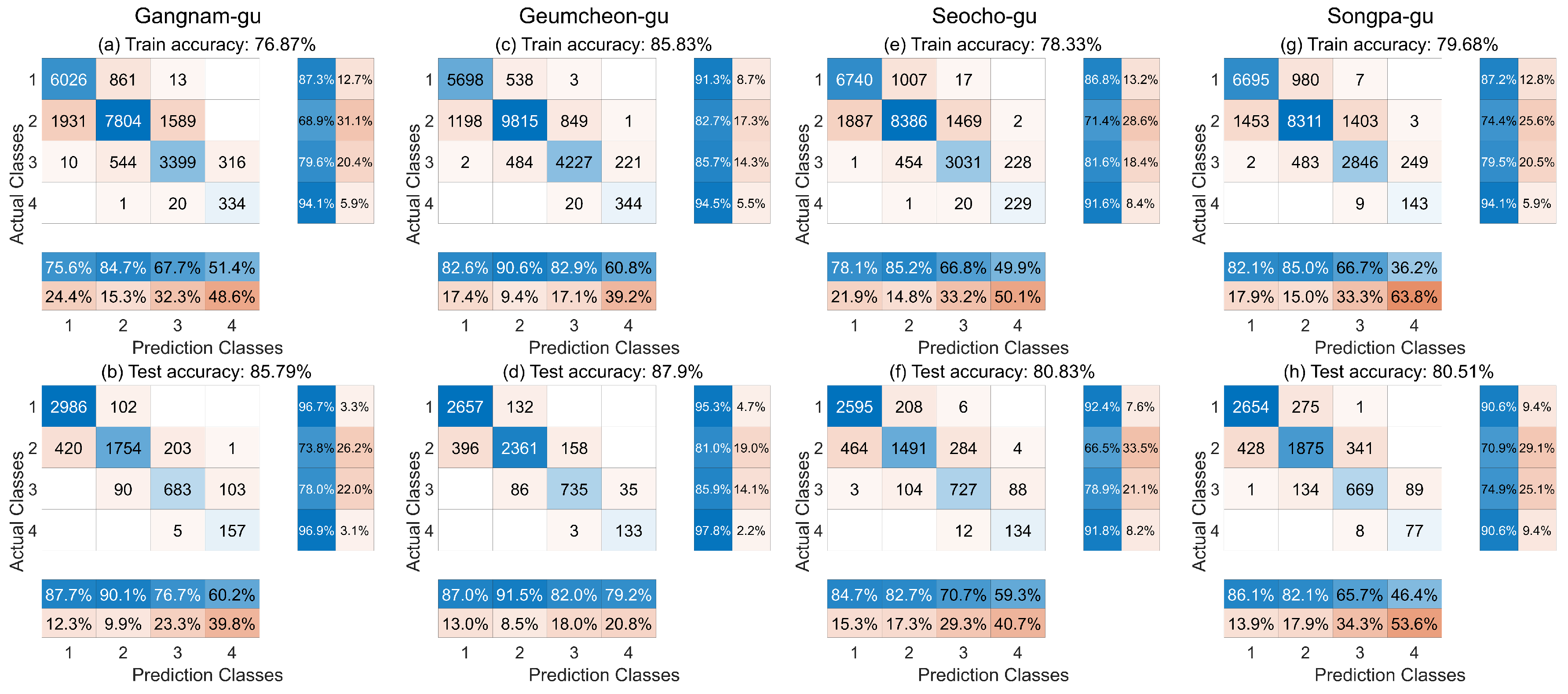

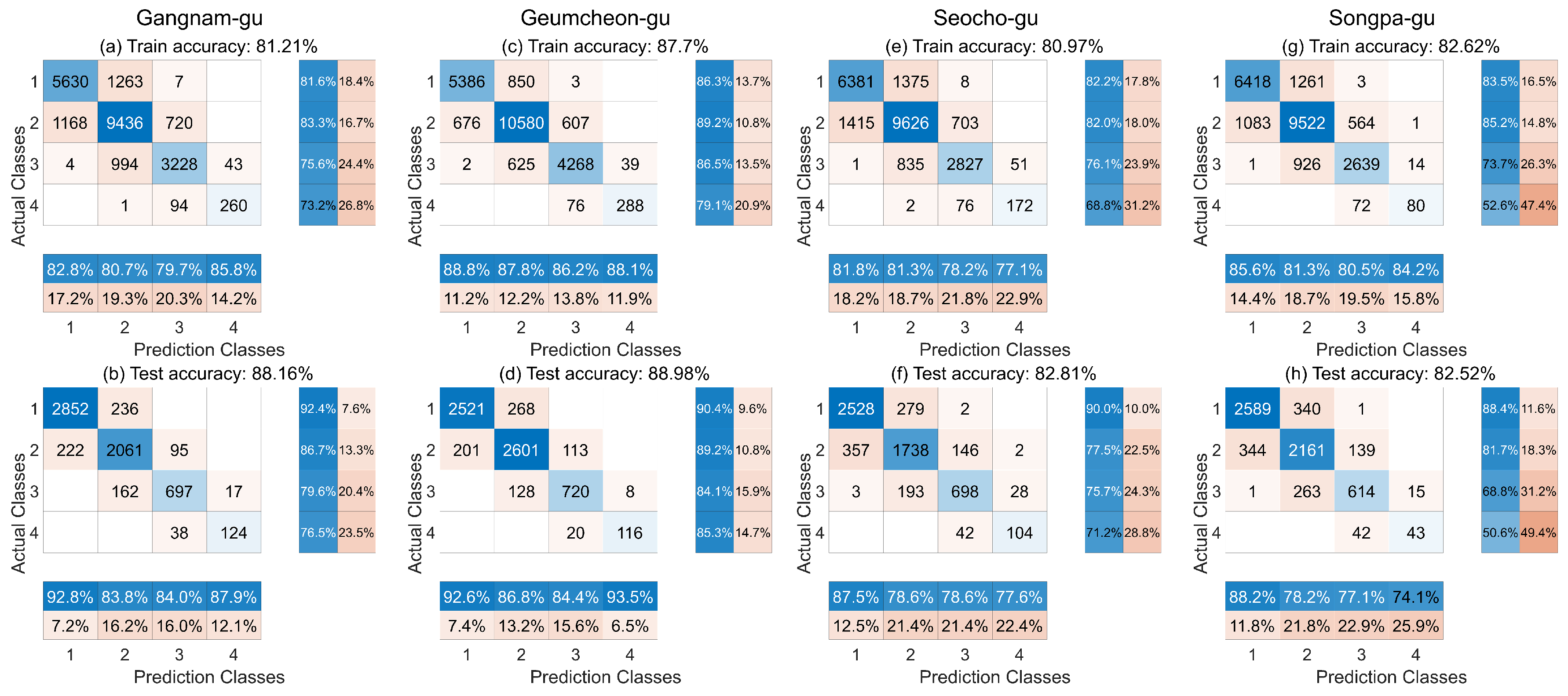

Figure 4 and Figure 5 show the confusion matrices before and after the selection, respectively. In each figure, (a) and (b) show the confusion matrices for Gangnam-gu, (c) and (d) for Geumcheon-gu, (e) and (f) for Seocho-gu, and (g) and (h) for Songpa-gu. Using input selection for data classification improved the classification accuracy on both the training and test data. The largest improvement was observed for Gangnam-gu, where the accuracy increased by 4.34%p in the training data and 2.37%p in the test data.
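For readers unfamiliar with the %p (percentage point) notation, the following sketch shows how classification accuracy is read off a confusion matrix and how an accuracy gain is expressed in percentage points. The 2×2 matrices are hypothetical and far smaller than the multi-class matrices in the figures.

```python
def accuracy(confusion):
    """Share of correctly classified samples: diagonal sum over total."""
    correct = sum(confusion[i][i] for i in range(len(confusion)))
    total = sum(sum(row) for row in confusion)
    return correct / total

# Hypothetical matrices (rows: actual class, columns: predicted class).
before = [[80, 20],
          [10, 90]]
after = [[85, 15],
         [5, 95]]

# A difference of two percentages is expressed in percentage points (%p).
gain_pp = (accuracy(after) - accuracy(before)) * 100
print(round(gain_pp, 2))
```

In this toy case the accuracy moves from 85% to 90%, a gain of about 5%p, which is a different quantity from the relative change of roughly 5.9%.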

The calculated weighting variables are shown in Table 12. In Table 12, the ’Worst’ class has the smallest share of the data and the largest weighting variable.
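The inverse relationship between class share and weight can be sketched as below. This text does not state the exact weighting formula, so the inverse-frequency rule used here is an assumption chosen only because it reproduces the stated pattern (the rarest class receives the largest weight). The class names other than ’Worst’ and all counts are hypothetical.

```python
from collections import Counter

def inverse_frequency_weights(labels):
    """Weight each class by n / (k * count), so rarer classes weigh more.

    n is the number of samples and k the number of classes. This rule is
    an assumption for illustration, not the formula confirmed by the paper.
    """
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {cls: n / (k * c) for cls, c in counts.items()}

# Hypothetical class distribution for one station.
labels = ["Good"] * 50 + ["Normal"] * 35 + ["Bad"] * 12 + ["Worst"] * 3
weights = inverse_frequency_weights(labels)

# One weight per sample, usable as an extra input column (the weight variable).
weight_variable = [weights[c] for c in labels]
print(weights)
```

Under this rule a perfectly balanced dataset would give every class a weight of 1, so values above 1 mark under-represented classes.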

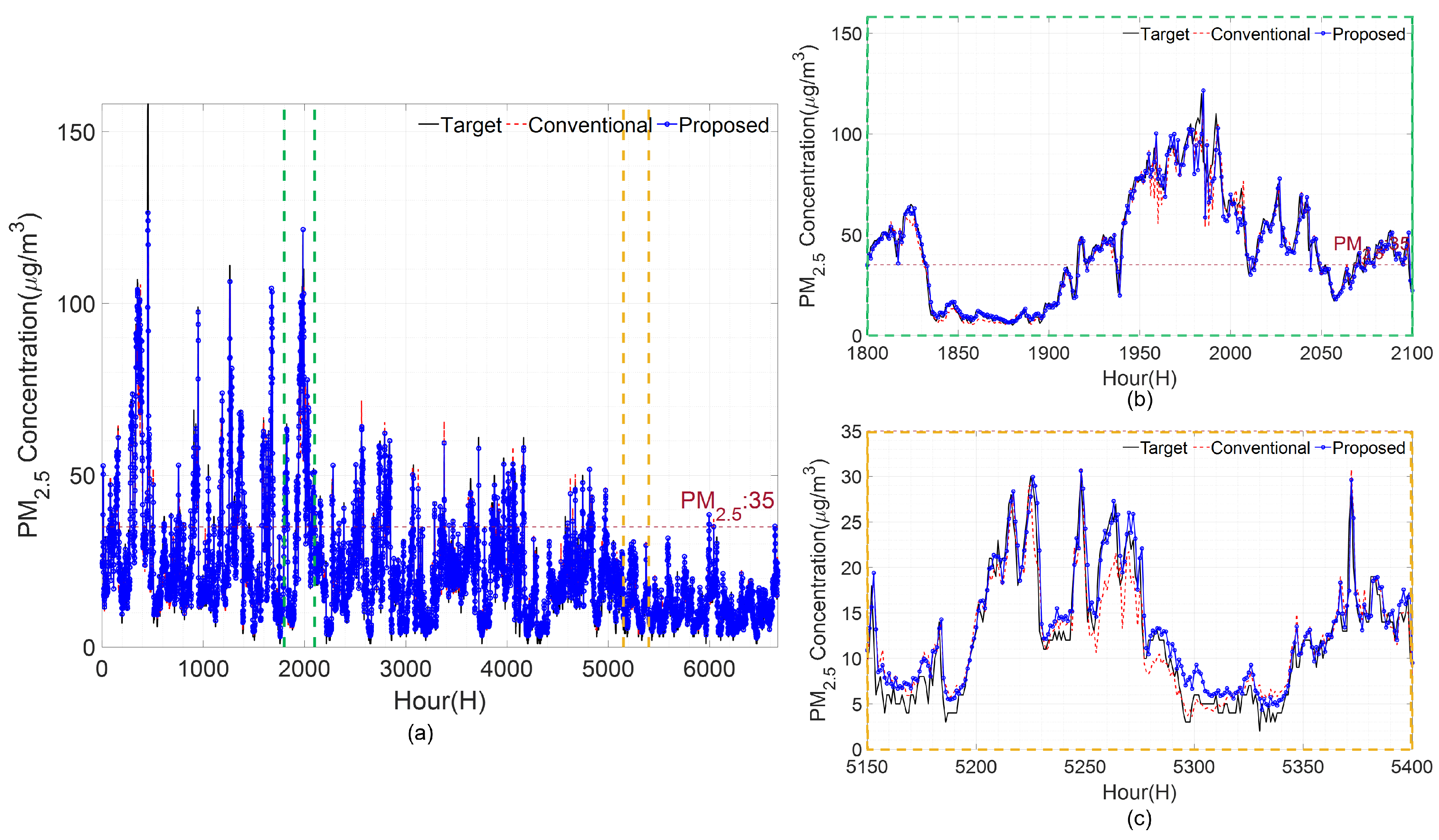

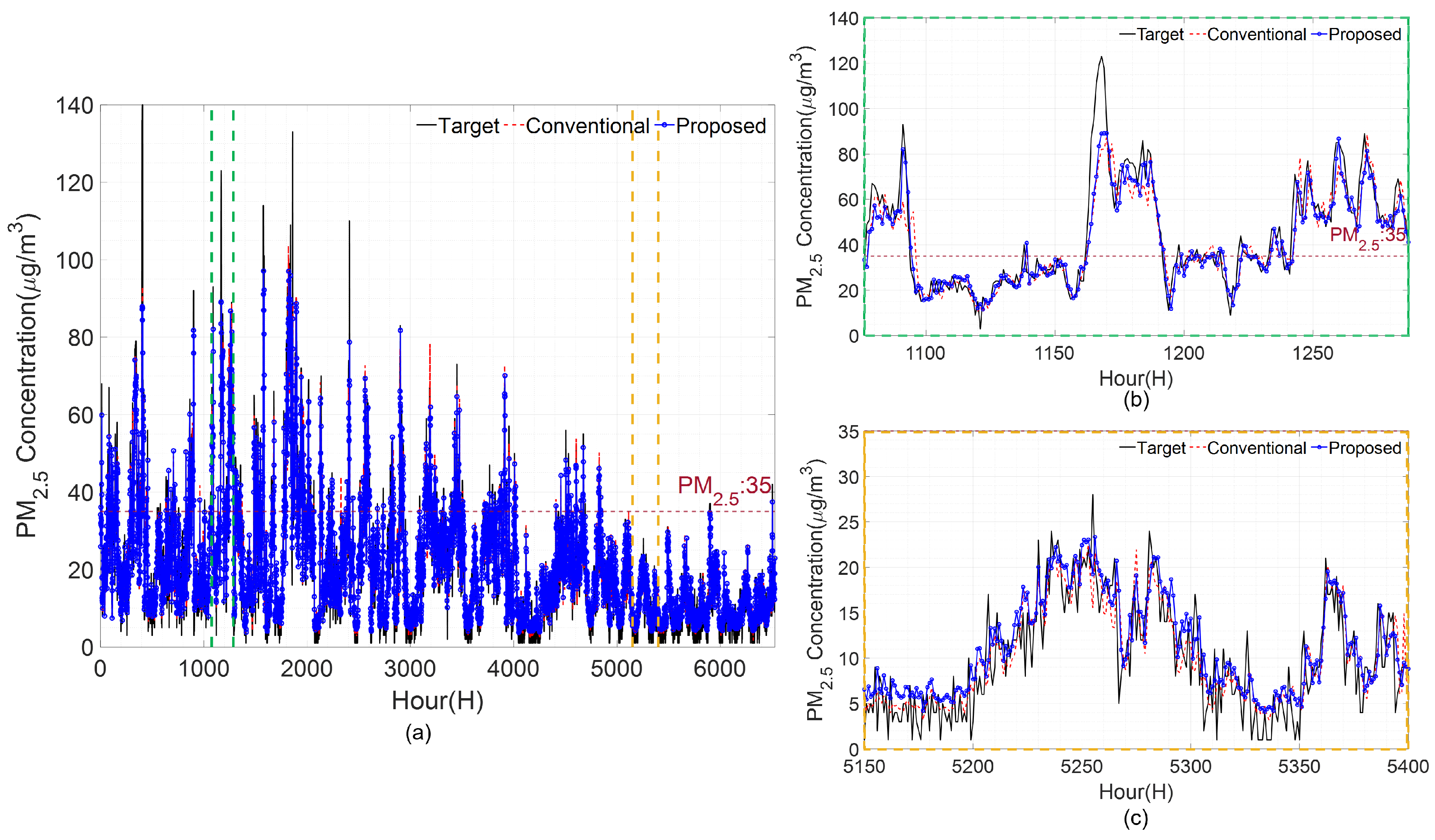

Figure 6, Figure 7, Figure 8 and Figure 9 show the forecast results of each station using the conventional and proposed methods. In each of these figures, (a) shows the test period; (b) shows the period between the two green dashed lines, which is a high-concentration section; and (c) shows the period between the two yellow dashed lines, which corresponds to a low-concentration section. The x-axis represents time, and the y-axis represents the concentration. The black solid line represents the actual PM2.5 concentration measured at each monitoring station, while the red dashed line represents the values forecasted using the conventional method. The blue circled line represents the values forecasted using the proposed method. The magenta dotted line indicates the PM2.5 concentration threshold of 35, which is the standard for high concentration.

Both the proposed and conventional methods under-forecast the PM2.5 concentration in Gangnam-gu. Between 445 and 450 h, the target value continues to increase while the forecast value of the conventional method decreases. The proposed method, however, follows the rising target value and therefore exhibits a lower forecasting error. In the low-concentration period (Figure 6c), the conventional method under-forecasts, and its forecast performance is visibly lower than that of the proposed method in the 5250–5300 h range. In the high-concentration section of Geumcheon-gu (Figure 7b), which spans from 1950 to 1980 h, the conventional method shows a sharp decrease in forecasted values, while the proposed method tracks the target PM2.5 concentrations. In Figure 7c, which shows the forecast result of the low-concentration section, the proposed method forecasts better than the conventional method. In the high-concentration section of Seocho-gu, both methods primarily under-forecast. The proposed method has a better forecast performance from 330 to 380 h, where the concentration is above 75 and changes rapidly. For the low-concentration section, the conventional method over-forecasts relative to the proposed method and has a lower forecast performance. For the high-concentration section of Songpa-gu, between 1090 and 1095 h, the conventional method does not forecast more than 60, while the proposed method forecasts up to 80, exhibiting a lower error. However, from 1165 to 1170 h, where the concentration is above 100, both methods have errors due to under-forecasting.

In panel (b) of Figure 6, Figure 7, Figure 8 and Figure 9, which shows the high-concentration range of each station, the conventional method under-forecasts relative to the proposed method because it is trained with a bias towards low concentrations, which form the majority class.

Table 13, Table 14, Table 15 and Table 16 list the RMSE, MAE, RRMSE and R² values of the conventional and proposed methods, respectively. The values in each table are averages over 10 repeated experiments. As listed in Table 13, the average RMSE of the proposed method is 0.2095 (3.98%p) lower than that of the conventional method. Specifically, in the high-concentration section, the proposed method is 0.3011 (3.21%p) lower than the conventional method. Notably, the Gangnam-gu forecast model shows the largest difference, with the proposed method improving on the conventional method by 0.3262 (6.88%p) for the overall RMSE and 0.534 (6.42%p) for the RMSE of the high-concentration section. Furthermore, even in the low-concentration section, the RMSE of the proposed method is on average 0.1931 (4.78%p) lower than that of the conventional method, with the largest difference of 0.38 (7.43%p) observed for Songpa-gu. Additionally, the performance improvement of the proposed method is more pronounced in the high-concentration range for the Geumcheon-gu and Seocho-gu stations.

Table 14 indicates that the proposed method has, on average, a 5.87% lower MAE than the conventional method. In the high-concentration section, the proposed method outperforms the conventional method by 4.36% on average across the stations. Moreover, the proposed method exhibits a 6.63% lower MAE than the conventional method in the low-concentration section. The largest differences in MAE between the proposed and conventional methods are observed for the station in Gangnam-gu, with reductions of 10.49, 8.65, and 11.29% for the entire test period, high-concentration section, and low-concentration section, respectively. Among the high-concentration sections, the station in Songpa-gu shows the largest difference of 0.0011 (0.11%p).

Table 15 shows that the RRMSE of the proposed method is, on average, 0.0097 (3.96%p) lower than that of the conventional method. In the high-concentration range, the proposed method is lower on average by 0.0056 (3.21%p), with the largest difference (0.0096, 6.46%p) in Gangnam-gu. In the low-concentration range, the proposed method is also lower than the conventional method by 0.013 (4.76%p), with the largest difference (0.0263, 7.42%p) in Seocho-gu.

Table 16 shows that the R² value of the proposed method is 0.0066 (0.72%p) higher than that of the conventional method. In the high-concentration range, the proposed method is higher by 0.0007 (0.07%p), with Songpa-gu showing the largest difference of 0.0011 (0.11%p). In the low-concentration range, the proposed method is also higher than the conventional method by 0.018 (2.23%p). In Seocho-gu in particular, the proposed method is higher than the conventional method by 0.0094 (1.06%p) over the test period and by 0.0398 (5.50%p) in the low-concentration range.
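The tables above report each comparison as an absolute difference followed by a percentage. The reported pairs appear consistent with a difference taken relative to the conventional method's value, but the exact formula is not stated in this text, so the sketch below is an assumption. The function name and the sample values are hypothetical.

```python
def relative_improvement(conventional, proposed, higher_is_better=False):
    """Return (absolute difference, difference as a percentage of conventional).

    For error indices (RMSE, MAE, RRMSE) a lower value is better, so the
    difference is conventional - proposed. For R2, pass higher_is_better=True.
    """
    if higher_is_better:
        diff = proposed - conventional
    else:
        diff = conventional - proposed
    return diff, diff / conventional * 100

# Hypothetical values, not taken from the tables.
rmse_diff, rmse_pct = relative_improvement(5.0, 4.8)
r2_diff, r2_pct = relative_improvement(0.90, 0.918, higher_is_better=True)
print(round(rmse_diff, 4), round(rmse_pct, 2))
print(round(r2_diff, 4), round(r2_pct, 2))
```

Reporting both numbers is useful because a small absolute change in R², which is already close to 1, can look negligible next to an RMSE change even when both describe the same forecasts.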

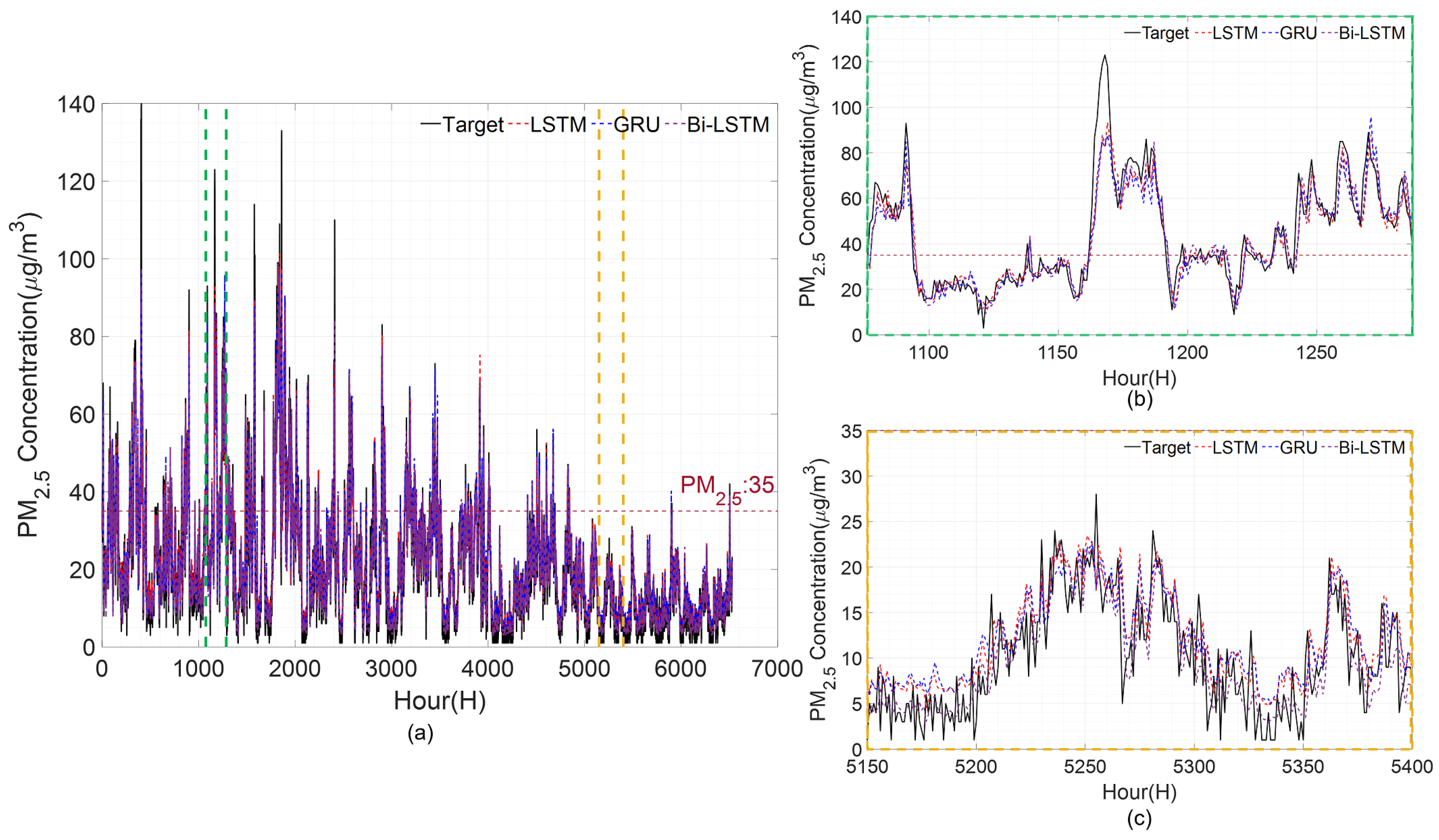

3.3. Case Study 2: Comparing the Deep Learning Model and Conventional Machine Learning

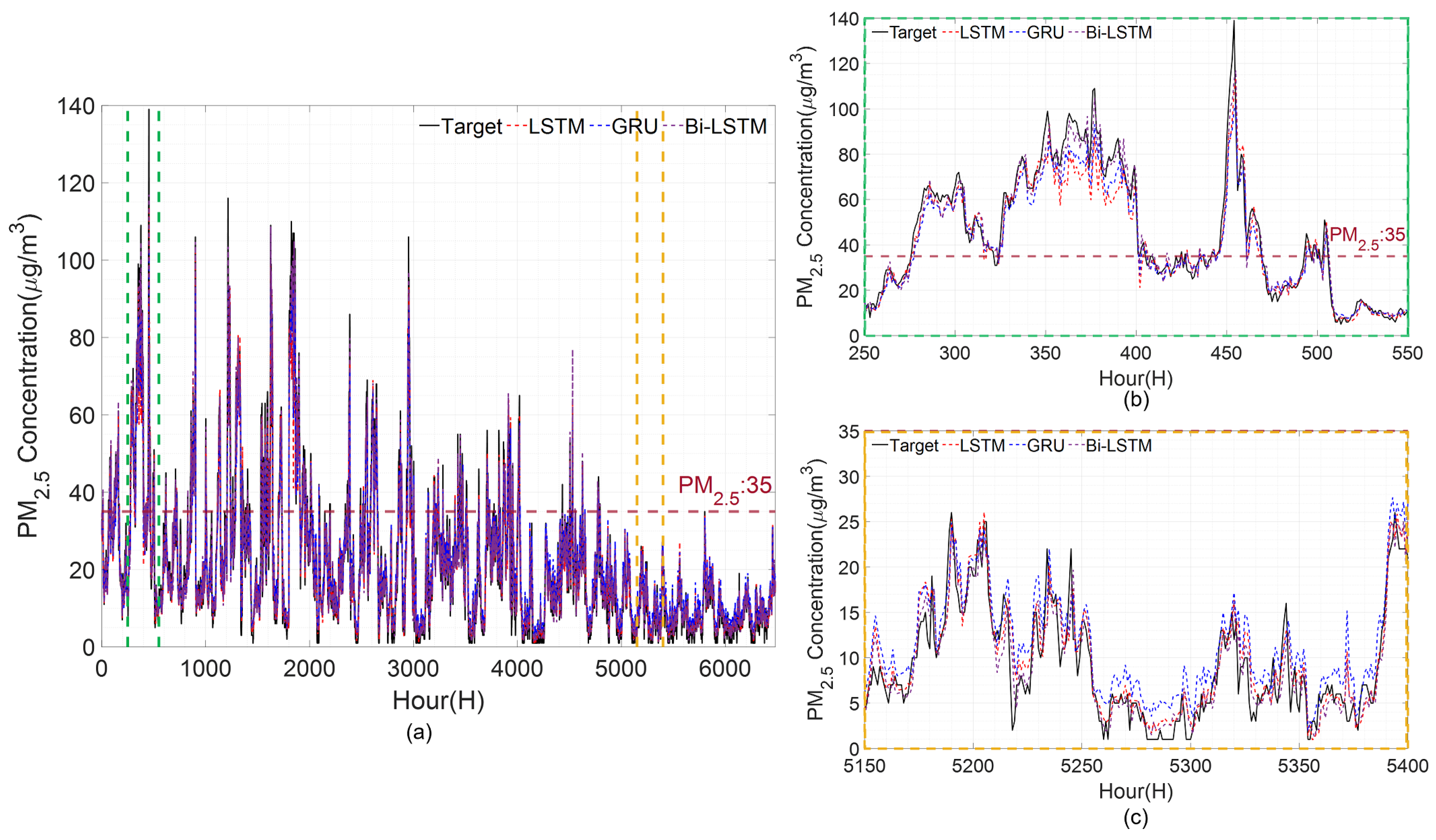

In case study 2, we evaluate the forecasting accuracy of PM2.5 concentrations using deep learning models (LSTM, GRU, and Bi-LSTM), which have superior forecasting performance, and conventionally used machine learning models (MLP, SVM, DT, and RF). The input variables of the models are those proposed in case study 1. The forecast results of the LSTM, GRU, and Bi-LSTM models for each station are illustrated in Figure 10, Figure 11, Figure 12 and Figure 13. The x-axis represents time, and the y-axis represents the PM2.5 concentration. The black line represents the actual values of each station, while the red and blue dashed lines indicate the forecasting results of the LSTM and GRU models, respectively. The forecast result of Bi-LSTM is indicated by the purple dashed line. The magenta dotted line marks a PM2.5 concentration of 35. In each figure, (a) represents the test period; (b) represents the period between the two green dashed lines, which is a high-concentration section; and (c) shows the period between the two yellow dashed lines, which is the low-concentration section.

Comparing the forecast performance of the models in case study 2, all models underestimate the actual PM2.5 concentration in the high-concentration section of Gangnam-gu shown in Figure 10b. However, the Bi-LSTM model provides the best forecasting performance in the intervals where the concentration is above 75, particularly between 350 and 390 h. In the low-concentration section, all models over-forecast. For the high-concentration section of Geumcheon-gu, the Bi-LSTM model performs better than the other two models during 1940–2010 h, where the concentration changes rapidly. On average, in the low-concentration section, Bi-LSTM over-forecasts, while LSTM and GRU under-forecast. Bi-LSTM exhibits better forecasting performance in the normal range, from 15 to 35. For PM2.5 concentrations between 0 and 15, LSTM exhibits the most accurate forecasting performance.

In the high-concentration section of Seocho-gu, all models under-forecast on average, and Bi-LSTM outperforms LSTM and GRU in the range of 330–400 h. All models over-forecast in the low-concentration section, and GRU exhibits the best performance in the range of 0–10, followed by Bi-LSTM. For Songpa-gu, GRU exhibits the best forecasting performance in the high-concentration section of 1080–1095 h, and LSTM exhibits the best forecast performance in the increasing section of 1240–1285 h, followed by Bi-LSTM. In the low-concentration section, LSTM and GRU over-forecast on average, while Bi-LSTM under-forecasts; Bi-LSTM exhibits the best overall forecasting performance in this section.

Table 17 and Table 18 list the performance indices for the PM2.5 concentration forecast results using the deep learning models. The numbers in Table 17 and Table 18 are the averages of the results from 10 replicates. In terms of RMSE, MAE, and RRMSE, Bi-LSTM performs better at all stations except Geumcheon-gu. Comparing the average RMSE, Bi-LSTM outperforms LSTM and GRU by 0.1405 (2.6977%p) and 0.15 (2.8748%p), respectively. Comparing the average MAE, Bi-LSTM outperforms LSTM and GRU by 0.0295 (0.844%p) and 0.1302 (3.6264%p), respectively. The RRMSE of Bi-LSTM is lower than that of LSTM and GRU by 0.0070 (2.8866%p) and 0.0074 (3.0266%p), respectively. For R², the LSTM performance is best for the Geumcheon-gu and Songpa-gu stations, whereas the Bi-LSTM performance is best for the Gangnam-gu and Seocho-gu stations. With respect to the average R², Bi-LSTM outperforms LSTM and GRU by 0.0029 (0.3196%p) and 0.0044 (0.4759%p), respectively.

Table 19 and Table 20 show the performance index values of the conventional machine learning models. Among the machine learning methods, RF shows the best performance on the indices. Bi-LSTM, the best performer among the deep learning models, was better than RF by 26.96% in RMSE, 32.56% in MAE, 5.02% in R², and 20.83% in RRMSE. To summarize case study 2, Bi-LSTM has the best performance regarding RMSE, MAE, RRMSE, and R² compared to the other deep learning and machine learning methods because it considers both directions of the sequence when making a forecast.

3.4. Discussion

This study aims to forecast, 1 h ahead, the concentration of PM2.5, which can harm the human body. The proposed method is conducted in two steps: (1) selection of appropriate input variables and weight assignment using random forest, and (2) forecasting of PM2.5 using Bi-LSTM. Appropriate input variables for forecasting were selected by calculating the importance of each variable using RF. However, the data are usually imbalanced, with categories that are not evenly represented. Imbalanced data can lead to bias and degrade predictive performance. To mitigate this problem, a weight variable was added according to the grade classified through RF and used as an input variable for the forecast. Finally, the PM2.5 concentration was forecasted by applying Bi-LSTM to the input and weight variables selected through RF. To validate the proposed method, two case studies were conducted at monitoring stations in South Korea. Case study 1 (Section 3.2) compares the prediction performance according to the selection of the input variables. Case study 2 (Section 3.3) compares the forecast performance between the deep learning and conventional machine learning methods. Experimental results confirm that the proposed method improves on conventional methods, such as LSTM, GRU, MLP, SVM, DT, and RF. In particular, the results show that prediction can be performed effectively even in the presence of data imbalance by assigning weights using RF. In future work, we will investigate multi-step-ahead forecasting strategies, such as recursive, direct, and multi-input multi-output, to perform long-term forecasting.
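Of the multi-step strategies named as future work, the recursive strategy is the simplest to sketch: a one-step-ahead model is applied repeatedly, and each forecast is fed back as the newest input. The code below is only an illustration of that idea under stated assumptions; `persistence_plus_trend` is a hypothetical placeholder standing in for a trained one-step model, and the data are synthetic.

```python
def recursive_forecast(one_step_model, history, steps, lookback=24):
    """Forecast `steps` hours ahead by feeding each forecast back as input."""
    window = list(history[-lookback:])
    forecasts = []
    for _ in range(steps):
        next_value = one_step_model(window)
        forecasts.append(next_value)
        window = window[1:] + [next_value]  # slide the window forward one hour
    return forecasts

def persistence_plus_trend(window):
    """Placeholder model: last value plus the most recent hourly change."""
    return window[-1] + (window[-1] - window[-2])

history = [float(v) for v in range(24)]  # hypothetical hourly readings 0..23
three_ahead = recursive_forecast(persistence_plus_trend, history, steps=3)
print(three_ahead)
```

Because later steps are computed from earlier forecasts rather than observations, errors can accumulate with the horizon; the direct and multi-input multi-output strategies trade this against training separate or multi-output models.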

4. Conclusions

As the incidence of disease caused by PM exposure increases, it is essential to forecast PM concentrations to prevent PM exposure. In this study, we proposed a method for forecasting PM 1 h ahead from imbalanced PM data. Appropriate input variables were selected through RF, which was then used to add weight variables to improve the prediction performance. Consequently, using RF reduces model complexity and improves the forecasting performance. Then, PM forecasting was performed using Bi-LSTM, one of the deep learning models. The number of nodes in the hidden layer was chosen through trial and error as the value yielding the smallest RMSE. The performance of the proposed method was verified through two case studies at four monitoring stations in Korea: forecasting performance according to preprocessing of input variables, and forecasting performance of deep learning versus machine learning. The experimental results showed that, compared with the conventional method, the proposed method improved RMSE by 3.98%, MAE by 5.87%, RRMSE by 3.96%, and R² by 0.72%. In particular, at high concentrations, the proposed method improved RMSE by 3.21%, MAE by 4.36%, R² by 0.07%, and RRMSE by 3.21%. In addition, the proposed method outperforms the other deep learning models on average by 2.79% in RMSE, 2.25% in MAE, 2.96% in RRMSE, and 0.40% in R². Furthermore, compared to machine learning, the proposed method improved RMSE by 27.38%, MAE by 27.57%, RRMSE by 27.60%, and R² by 7.71%.