Abstract

The Atlantic Niño/Niña, one of the dominant modes of interannual variability in the equatorial Atlantic, exerts a prominent influence on the Earth’s climate, but its previously reported prediction skill was unsatisfactory, limited to two to three months. By diagnosing the recently released North American Multimodel Ensemble (NMME) models, we find that the Atlantic Niño/Niña prediction skill has improved, with the multi-model ensemble (MME) reaching five months. The prediction skill is season-dependent: it shows a marked dip in boreal spring, suggesting that Atlantic Niño/Niña prediction suffers from a “spring predictability barrier” similar to that of ENSO. The prediction skill is higher for Atlantic Niña than for Atlantic Niño, and higher for forecasts initialized in the decaying phase than for those initialized in the developing phase. The amplitude bias of the predicted Atlantic Niño/Niña is primarily attributed to the amplitude bias in the annual cycle of the equatorial sea surface temperature (SST). The anomaly correlation coefficient scores of the Atlantic Niño/Niña depend, to a large extent, on the prediction skill of the Niño3.4 index in the preceding boreal winter, implying that a preceding ENSO event may strongly affect the development of the Atlantic Niño/Niña in the following boreal summer.

1. Introduction

The sea surface temperature (SST) in the tropics is one of the major sources of predictability, since tropical SST plays a central role in affecting global weather and climate through teleconnections [1,2,3,4,5]. A prominent interannual variation of tropical SST is the El Niño-Southern Oscillation (ENSO), which is well known for its far-reaching global effects. Likewise, SST variability in the equatorial Atlantic affects the weather and climate of the surrounding continents [6,7,8,9]. Previous studies have used diverse names for the interannual SST variability in the eastern equatorial Atlantic, e.g., the Atlantic coupled mode [10], Atlantic zonal mode [11], Atlantic El Niño mode [12], and Atlantic Niño [13,14]. For convenience, we use the terms Atlantic Niño and Atlantic Niña (hereafter Atlantic Niño/Niña) to denote anomalous warm and cold SST events in the eastern equatorial Atlantic, respectively. As pointed out by Zebiak [10], the features of Atlantic Niño and Niña resemble those of ENSO in the equatorial Pacific, and the coupled ocean-atmosphere processes involved in Atlantic variability are, to first order, similar to those of ENSO [4,15,16,17]. Nonetheless, in contrast to ENSO, Atlantic variability has some distinctive characteristics: it has a relatively small zonal scale and a relatively short life cycle, and it usually peaks in boreal early summer (June–July) [13,17,18].

Accurately predicting tropical SST anomalies at seasonal-to-interannual time scales has profound socio-economic consequences [4,13]. The predictability limit of oceanic SST anomalies is expected to be longer than that of atmospheric anomalies, because the memory of the upper ocean is longer than that of the atmosphere [2]. In this sense, predicting climate anomalies at seasonal-to-interannual time scales (i.e., at lead times of one or a few seasons) is predominantly a matter of SST prediction, whose skill may be traced back to the upper-ocean heat content. For instance, Wang et al. [19] constructed a physically based empirical model built primarily on SST anomalies and found that it performs well in predicting the western Pacific Subtropical High. Currently, the SST anomalies in the equatorial Pacific associated with the most prominent interannual variability, ENSO, can be successfully predicted three seasons ahead [5,20,21,22,23,24,25,26]. The SST anomalies in the equatorial Indian Ocean associated with the Indian Ocean Dipole (IOD) can be predicted three to four months in advance [27,28,29,30].

However, the prediction skill for SST anomalies in the equatorial Atlantic is unsatisfactory. In general, both statistical and dynamical models are used to predict SST anomalies, and previous studies show that, in either type of model, the prediction skill for SST anomalies in the core region of Atlantic Niño/Niña is restricted to short lead times. Wang and Chang [31] developed a linear stochastic climate model of intermediate complexity [32,33], which includes dynamics, thermodynamics and stochastic atmospheric forcing, to study SST prediction in the tropical Atlantic. In their statistical model, the prediction skill for SST anomalies in the equatorial Atlantic is restricted to two months, with errors increasing rapidly beyond two months. Li et al. [34] investigated the predictability of SST anomalies in the core region of Atlantic Niño/Niña using two statistical approaches, linear inverse modelling (LIM) and analogue forecasts based on coupled model data. In their statistical models, the prediction skill for these SST anomalies reaches three months at most, and only for forecasts started in January, April and September. In addition to statistical approaches, dynamical models have been applied to predict SST anomalies in the tropical Atlantic. Stockdale et al. [35] documented the prediction skill for SST anomalies in the nine-member European Centre for Medium-Range Weather Forecasts (ECMWF) model that participated in the Development of a European Multi-model Ensemble System for Seasonal to Interannual Prediction (DEMETER) project. They pointed out that, as measured by the correlation between observed and predicted SST anomalies, this model can predict the SST anomalies of Atlantic variability only two or nearly three months ahead. They also reported that even the multi-model ensemble (MME) of the DEMETER models does not show obvious improvement in prediction skill compared with the single ECMWF model. Hu and Huang [36] investigated the prediction skill of the NCEP Climate Forecast System (CFS) and found that its prediction skill for SST anomalies in the eastern equatorial Atlantic is restricted to about three months. Overall, both dynamical and statistical models still struggle to predict SST anomalies in the eastern equatorial Atlantic at relatively long lead times.

Recently, the North American Multimodel Ensemble (NMME) project [37,38] has provided seasonal-to-interannual prediction experiments with several state-of-the-art coupled models. Recent studies have investigated the predictability of the interannual SST variability associated with ENSO and the IOD [22,38,39,40,41], as well as the seasonal prediction skill of summer monsoons and its relationship with SST prediction (e.g., [42,43]). It has been found that the MME of the NMME models can predict ENSO three seasons ahead [22,38,39,40,44]. Newman and Sardeshmukh [45] even suggest that the NMME ensemble-mean skill in predicting SST anomalies in some regions of the tropical Indo-Pacific is only slightly lower than the potential skill estimated from the signal-to-noise ratio.

As for the SST anomalies in the Atlantic sector, a few studies [46,47] have examined the prediction skill for SST anomalies in the tropical North Atlantic Ocean (10° N–20° N, 85° W–20° W) and pointed out that the NMME models predict them more skillfully than previous coupled models. However, few studies have examined the NMME models’ prediction skill for SST anomalies in the equatorial Atlantic associated with Atlantic Niño/Niña. This motivates us to investigate the prediction skill of Atlantic Niño/Niña in the retrospective forecasts of the latest NMME models. Note that “prediction” and “forecast” are used synonymously throughout the rest of the paper.

Regarding forecast errors, previous studies suggested that, in addition to errors in model initialization, biases in the simulated mean state may greatly affect seasonal-to-interannual SST anomaly prediction in coupled models (e.g., [2,48]). A number of studies have pointed out that current coupled models show significant mean-state biases in the Atlantic sector. For instance, a warm bias in the mean SST of the eastern equatorial Atlantic is prevalent in coupled models [49,50,51]; it has been related to mean-state biases in the trade winds, alongshore winds and coastal upwelling [52], and/or attributed to unrealistic representations of cloud cover and the ocean mixed layer in coupled models [53]. Such mean-state biases, especially the warm SST bias, may affect the prediction of Atlantic variability, as suggested by Richter et al. [48]. Another argument is that the ability to predict ENSO may affect the prediction skill for SST anomalies associated with Atlantic Niño and Atlantic Niña, owing to the strong connection between ENSO and the SST anomalies in the Atlantic sector [36,52]. A recent study [54] found that most Atlantic Niño/Niña events are preconditioned in boreal spring by either the Atlantic meridional mode or Pacific SST conditions such as ENSO, whereas for some events with a relatively late onset there is no clear source of external forcing. This suggests that whether ENSO influences the Atlantic Niño/Niña in seasonal prediction systems needs to be examined. Therefore, based on the NMME models, this study investigates whether there are common factors responsible for the skill in predicting SST anomalies associated with Atlantic Niño/Niña.

The paper is organized as follows. Section 2 introduces the NMME models and the datasets used in this study. Section 3 presents an overview of the prediction skill of the Atlantic Niño/Niña, the seasonality of the prediction skill, and comparisons of the prediction skill between the developing and decaying phases and between warm and cold events. Section 4 presents the prediction biases in the mean state and investigates possible factors responsible for the forecast errors based on the multi-model results. Finally, a summary is given in Section 5.

2. Models and Data

The North American Multi-model Ensemble (NMME) project is a multi-model forecasting system composed of coupled models from modeling centers in the United States and Canada [37]. The analysis in this study is based on the forecasts from 13 coupled models that participated in NMME Phase 1 and Phase 2: CanCM4i, CanSIPSv2, CMC1-CanCM3, CMC2-CanCM4, GEM-NEMO, NASA-GEOSS2S, NCAR-CESM1 and NCEP-CFSv2 in Phase 1, and CanCM3, CanCM4, CCSM4, CESM1 and FLORB-01 in Phase 2. Table 1 briefly summarizes the model specifications, including the forecast length, lead time and ensemble size. The retrospective forecasts comprise at least 10 members, and the lead time ranges from 10 to 12 months. We focus on the common period 1980–2010 and analyze the prediction skill of each NMME model and of the MME. In general, the MME prediction skill is better than the skill of most individual models and than the averaged skill of all models [21,38]. When forecast skill is discussed without reference to a specific model, it refers to the skill of the MME of the 13 NMME models. For verification, the monthly SST dataset from HadISST [55] is used.

Table 1.

Description of the 13 NMME models used in this study. An asterisk denotes a model that provided output of zonal wind at 850 hPa.

Some potentially ambiguous expressions and details are clarified below. First, the terms “predict” and “forecast” are used synonymously in this study. Second, the “one-month-lead forecast” refers to the forecast for the starting month itself, averaged from the first day of that month, and the “two-month-lead forecast” refers to the forecast for the second month. For example, for forecasts initialized on 1 January 2000, the first monthly mean of the forecast (i.e., the average over 1–31 January 2000) is defined as the one-month-lead forecast, the second monthly mean (i.e., the average over 1–29 February 2000) as the two-month-lead forecast, and longer lead times are defined analogously. Third, “anomalies” are departures from the long-term annual cycle rather than from the annual mean. Specifically, for a given model we first obtain the annual cycle of SST at each lead time, since the annual cycle of SST in a given model may vary with lead time. For a given lead time, the annual cycle at that lead time is subtracted from the raw output to obtain the SST anomalies. In this way, both the anomaly field and the mean-state field (i.e., the annual cycle) are obtained. Finally, the “bias” denotes the deviation of a predictand from its observed counterpart (i.e., model forecast minus observation) and includes both an anomaly bias and a mean-state bias.
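As an illustration of the lead-dependent anomaly definition above, the following is a minimal Python/NumPy sketch, not the authors’ code. It assumes a hypothetical layout in which each row of the array is one forecast start and each column one lead, with column 0 being the one-month lead (the initialization month itself). Because a fixed start month and lead always verify the same calendar month, averaging over all years that share a start month gives the model’s annual-cycle value at that lead. The names `lead_dependent_anomalies` and `target_month` are illustrative.

```python
import numpy as np


def lead_dependent_anomalies(fcst: np.ndarray, start_months: np.ndarray) -> np.ndarray:
    """Subtract a lead-dependent climatology from the hindcasts of one model.

    fcst: (n_starts, n_leads) monthly-mean SST; column 0 is the one-month lead
          (the initialization month itself).
    start_months: (n_starts,) calendar month (1-12) of each forecast start.
    """
    anom = np.empty_like(fcst, dtype=float)
    for m in np.unique(start_months):
        rows = start_months == m
        # Mean over all years sharing this start month, separately at each lead:
        # the model's annual-cycle value for the month verified at that lead.
        clim = fcst[rows].mean(axis=0)
        anom[rows] = fcst[rows] - clim
    return anom


def target_month(start_month: int, lead: int) -> int:
    """Calendar month verified at `lead` (lead 1 = the start month)."""
    return (start_month - 1 + lead - 1) % 12 + 1
```

For example, `target_month(1, 2)` gives 2, matching the statement that a January start verifies February at two-month lead.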

This study applies an equally weighted multi-model averaging strategy to obtain the MME, since eleven of the thirteen models have ten members and only two models have more. In addition, the linear trend is not removed. A previous study [56] investigated the impact of the global warming trend on seasonal-to-interannual climate prediction and found that the impact of the SST warming trend is negligible for short-lead predictions; the linear trend needs to be considered only for long-lead predictions (more than one year). That study further pointed out that the impact of the SST warming trend on seasonal prediction is considerable over the extratropical oceans but marginal over the tropical oceans. Therefore, the SST linear trend is unlikely to influence the prediction skill of Atlantic Niño/Niña in this study. For the deterministic measures, previous studies [21,57,58,59] usually chose 0.6 or 0.5 as the cutoff value for the anomaly correlation coefficient, and one standard deviation of the oscillation itself, or a value slightly less than one standard deviation, as the threshold for the root-mean-square error.
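One reading of the equal-weighting strategy is sketched below; this is an assumption rather than a documented procedure. Each model’s members are first averaged, and the model means are then averaged with equal weight, so a model with more members does not dominate as it would if all members were pooled. The function name and the (members, time) layout are hypothetical.

```python
import numpy as np


def equal_weight_mme(model_members):
    """Equal-weighted multi-model ensemble mean (hypothetical helper).

    model_members: list with one array per model, each of shape
    (n_members, n_times); member counts may differ between models.
    """
    # Step 1: ensemble mean within each model.
    model_means = [np.asarray(m, dtype=float).mean(axis=0) for m in model_members]
    # Step 2: every model gets weight 1 / n_models, regardless of member count.
    return np.mean(model_means, axis=0)
```

With one 10-member model forecasting 0 and one 20-member model forecasting 1, this gives 0.5, whereas pooling all 30 members would give 2/3.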

3. Results

In this section, the prediction skill for SST anomalies in the eastern equatorial Atlantic in the forecasts of the 13 coupled models in NMME Phase 1 and Phase 2 is investigated in detail.

3.1. An Overview of the Atlantic Niño/Niña Prediction Skill in the Deterministic Sense

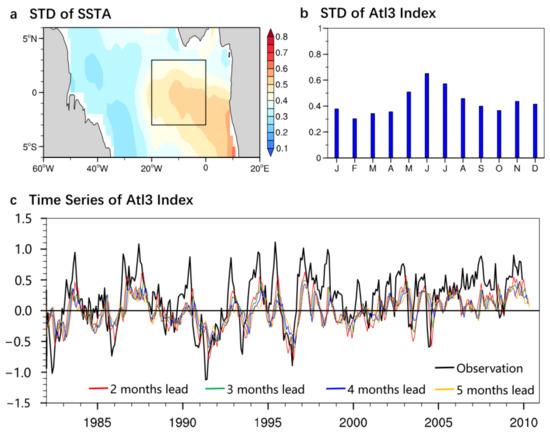

Figure 1a shows the standard deviation (STD) of SST anomalies in the equatorial Atlantic Ocean. In general, the STD of SST anomalies is large in the eastern equatorial Atlantic, with values reaching 0.5 K (Figure 1a). Previous studies (e.g., [10]) introduced an SST index, the Atl3 index, defined as the SST anomalies (SSTA) averaged over 3° S–3° N, 20° W–0°, to identify Atlantic Niño or Niña events. Analogous to the role of the Niño3.4 index (SSTA averaged over 5° S–5° N, 170° W–120° W) for ENSO, the Atl3 index is used to measure the variability of Atlantic Niño/Niña and to gauge its prediction skill. Previous studies found that Atlantic Niño/Niña usually develops in boreal spring, matures in boreal summer, and decays in the subsequent months. Consistently, the STD of the Atl3 index as a function of calendar month (Figure 1b) shows that Atlantic Niño/Niña tends to peak in boreal summer. Figure 1c shows the time series of the Atl3 index in the observation and in the MME forecasts of the NMME models. In general, the fluctuations of the Atl3 index in the two- and five-month-lead MME forecasts resemble those in the observation to some extent. An obvious bias is that the amplitude of Atlantic Niño/Niña is underestimated in the MME forecasts (Figure 1c). The amplitudes of the predicted Atlantic Niño/Niña in the two- to five-month-lead MME forecasts are listed in Table 2. The STD of the observed Atl3 index is 0.465 K, whereas the STD of the predicted Atl3 index is 0.296 K in the two-month-lead MME forecast. The underestimation worsens with increasing lead time, with the STD dropping to only 0.192 K in the five-month-lead forecast. This amplitude bias in predicting Atlantic Niño/Niña motivates the further exploration in Section 4.
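A minimal sketch of computing the Atl3 index and its calendar-month STD (as in Figure 1b) from a gridded anomaly field is given below. The cosine-of-latitude area weighting, the inclusive box edges, longitudes in degrees east from −180 to 180, and the absence of any land or missing-value handling are all assumptions for illustration, and the function names are hypothetical.

```python
import numpy as np


def atl3_index(sst_anom, lat, lon):
    """Area-weighted mean SST anomaly over 3S-3N, 20W-0 (Atl3 box).

    sst_anom: (n_times, n_lat, n_lon); lon in degrees east within -180..180.
    No NaN/land masking is done here (assumption).
    """
    lat = np.asarray(lat, dtype=float)
    lon = np.asarray(lon, dtype=float)
    lat_ok = (lat >= -3) & (lat <= 3)
    lon_ok = (lon >= -20) & (lon <= 0)
    box = sst_anom[:, lat_ok][:, :, lon_ok]
    # cos(latitude) weights, repeated along longitude.
    w = np.cos(np.deg2rad(lat[lat_ok]))[:, None] * np.ones(lon_ok.sum())
    return (box * w).sum(axis=(1, 2)) / w.sum()


def std_by_calendar_month(index, first_month=1):
    """STD of a monthly index for each calendar month (Jan..Dec)."""
    index = np.asarray(index, dtype=float)
    months = (np.arange(index.size) + first_month - 1) % 12
    return np.array([index[months == m].std() for m in range(12)])
```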

Figure 1.

(a) Standard deviation (STD) of observed SST anomalies in the tropical Atlantic. The black box denotes the Atl3 region (3° S–3° N, 20° W–0°), and the SST anomalies averaged over the Atl3 region define the Atl3 index. (b) STD of the Atl3 index as a function of calendar month. (c) Time series of the Atl3 index for the two-month-lead (red curve), three-month-lead (green curve), four-month-lead (blue curve) and five-month-lead (orange curve) forecasts and the observation (black curve).

Table 2.

The standard deviation of the observed Atl3 index and of the predicted Atl3 index in the MME. The time series of the predicted Atl3 index are shown as colored lines in Figure 1c.

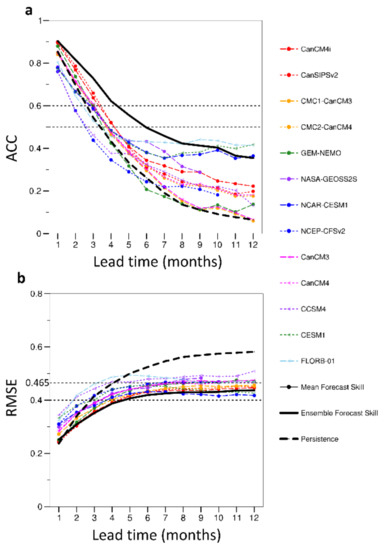

To quantitatively assess the prediction skill of Atlantic Niño/Niña, the anomaly correlation coefficient (ACC) and the root-mean-square error (RMSE) are analyzed. The ACC between the forecast and observed Atl3 index is displayed in Figure 2a. In most individual models, the ACC scores are above those of persistence, although a few models show poor ACC scores. With 0.6 as the cutoff value for ACC, the prediction skill of half of the NMME models reaches three months. Among the NMME models, CanCM4i and CanSIPSv2 show the best skill in predicting Atlantic Niño/Niña. The MME shows better prediction skill than any individual model: its prediction skill reaches 6 (more than 4) months when 0.5 (0.6) is chosen as the cutoff value for ACC.
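The ACC and skill-horizon logic can be sketched as follows. The sketch assumes that the ACC of an index at a fixed lead is the centered Pearson correlation across forecast years, and that a persistence forecast at lead L carries forward the observed anomaly L months before the target month (consistent with the one-month lead being the initialization month itself). The helper `skill_horizon`, which counts leads until the score first drops below a cutoff such as 0.5 or 0.6, is hypothetical.

```python
import numpy as np


def acc(fcst, obs):
    """Anomaly correlation coefficient (centered Pearson correlation)."""
    f = np.asarray(fcst, dtype=float)
    o = np.asarray(obs, dtype=float)
    f = f - f.mean()
    o = o - o.mean()
    return float((f * o).sum() / np.sqrt((f ** 2).sum() * (o ** 2).sum()))


def persistence_acc(obs, lead):
    """ACC of persisting the observed anomaly from `lead` months earlier."""
    obs = np.asarray(obs, dtype=float)
    return acc(obs[:-lead], obs[lead:])


def skill_horizon(acc_by_lead, cutoff):
    """Number of leading leads (1-based) whose ACC stays at or above cutoff."""
    for lead, score in enumerate(acc_by_lead, start=1):
        if score < cutoff:
            return lead - 1
    return len(acc_by_lead)
```

For a hypothetical ACC curve `[0.9, 0.7, 0.55, 0.4]`, the horizon is 2 months with a 0.6 cutoff and 3 months with a 0.5 cutoff, which is why the paper reports both values.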

Figure 2.

(a) Anomaly correlation coefficient (ACC, vertical axis) of the Atl3 index between the observation and the forecasts of the 13 models as a function of lead time (horizontal axis; unit: months). The black solid curve is the NMME multi-model ensemble (MME) forecast, the dashed black curve denotes persistence, and the colored dotted curves denote the individual models, as shown in the legend. (b) Same as (a), but for the root-mean-square error (RMSE, vertical axis) of the Atl3 index. Auxiliary lines in (b) mark 0.465 (one STD of the observed Atl3 index) and 0.4.

The RMSE of each model and of the MME is displayed in Figure 2b. In terms of RMSE, most models are capable of predicting the Atl3 index at 3-month lead with an RMSE below 0.4. In particular, four models (CanCM4i, CanSIPSv2, CMC1-CanCM3 and CMC2-CanCM4) have an RMSE below 0.4 at 4-month lead. When one STD of the observed Atl3 index (0.465) is chosen as the threshold (see the grey dashed line in Figure 2b), most models can predict the Atl3 index at 7-month lead or even 12-month lead. Likewise, the MME performs better than any individual model in terms of RMSE: its prediction skill reaches nearly five months when 0.4 is chosen as the threshold. Both the ACC and RMSE results demonstrate that the MME approach is an effective way to obtain better skill in predicting Atlantic Niño/Niña.
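The RMSE counterpart can be sketched in the same way; here skill is retained while the RMSE stays at or below a threshold such as 0.4 or one observed STD (0.465). Both function names are hypothetical.

```python
import numpy as np


def rmse(fcst, obs):
    """Root-mean-square error between forecast and observed anomalies."""
    f = np.asarray(fcst, dtype=float)
    o = np.asarray(obs, dtype=float)
    return float(np.sqrt(np.mean((f - o) ** 2)))


def rmse_horizon(rmse_by_lead, threshold):
    """Number of leading leads whose RMSE stays at or below threshold."""
    n = 0
    for r in rmse_by_lead:
        if r > threshold:
            break
        n += 1
    return n
```

For a hypothetical RMSE curve `[0.2, 0.35, 0.41, 0.45, 0.5]`, the horizon is 2 months with the 0.4 threshold and 4 months with the 0.465 threshold, illustrating how much the chosen threshold drives the reported skill.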

Previous studies have pointed out that the prediction skill of Atlantic Niño/Niña reaches around two months, or at most three months for some particular initial months, in either statistical models [31,34] or a dynamical model [35]. At the individual-model level, more than half of the NMME models exhibit slightly better prediction skill for Atlantic Niño/Niña than previous models, with CanCM4i and CanSIPSv2 showing the best skill. At the MME level, Stockdale et al. [35] showed that the skill of the MME forecast based on the DEMETER models reaches only 4 months with an RMSE below 0.4 (see Figure 10b in Stockdale et al. [35]), whereas our results show that the prediction skill of the MME of the NMME models reaches five months with an RMSE below 0.4. It is therefore encouraging that the current NMME models exhibit slight improvements in the prediction skill of Atlantic Niño/Niña compared with previous models.

3.2. Seasonal Dependence of the Atlantic Niño/Niña Prediction Skill

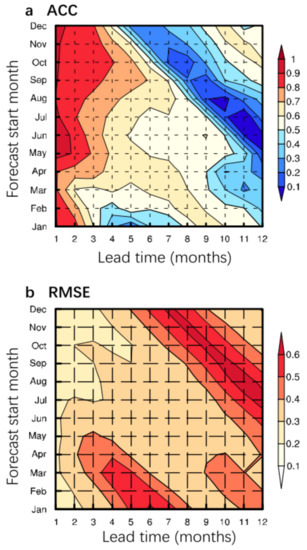

We further investigate the seasonal dependence of the Atlantic Niño/Niña prediction skill in this section. Figure 3 shows the MME ACC as a function of lead time (horizontal axis) and forecast start month (vertical axis). The ACC shows clear seasonality: forecasts starting in boreal summer (May, June, July and August) have higher skill than those starting in boreal winter (December, January and February). For forecasts starting in boreal summer, the ACC remains higher than 0.6 (0.5) through 6 (8–10) months lead, whereas for forecasts starting in boreal winter, it remains higher than 0.6 (0.5) only through 3–4 (2–4) months lead. Note that the MME ACC for forecasts starting in December, January, February and March rebounds at lead times of 7 to 10 months, following a quick decline in the first 3 months. We argue that this rebound is spurious and primarily attributable to error cancellation in the MME mean: the skill of forecasts starting in boreal winter drops sharply once the lead time exceeds 3 months, and at 7- to 10-month lead these forecasts are almost entirely wrong in every individual model (figure not shown). Such a skill rebound has also been noted in other seasonal prediction studies [21,27,59,60]. Decomposing the RMSE by forecast start month reveals similar seasonality: forecasts starting in boreal summer are more skillful than those starting in boreal winter. Therefore, our results show that the prediction skill of Atlantic Niño/Niña is season-dependent.
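A sketch of the start-month by lead ACC matrix behind Figure 3 follows. It assumes hypothetical arrays of shape (n_years, 12, n_leads) in which `obs[y, s, l]` is the observed Atl3 anomaly verifying `fcst[y, s, l]`; each cell is a correlation across years only.

```python
import numpy as np


def acc_matrix(fcst, obs):
    """ACC for every (start month, lead) pair, correlated across years.

    fcst, obs: arrays of shape (n_years, 12, n_leads), aligned so that
    obs[y, s, l] verifies fcst[y, s, l]. Returns shape (12, n_leads).
    """
    f = fcst - fcst.mean(axis=0)
    o = obs - obs.mean(axis=0)
    num = (f * o).sum(axis=0)
    den = np.sqrt((f ** 2).sum(axis=0) * (o ** 2).sum(axis=0))
    return num / den
```

Because each cell rests on only about 30 yearly pairs over 1980–2010, individual cells carry large sampling noise, which is one reason an isolated rebound at long leads should be read with caution.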

Figure 3.

(a) The ACC of the Atl3 index as a function of forecast start month (y-axis; all calendar months) and forecast lead time (x-axis). (b) Same as (a), but for the RMSE of the Atl3 index.

Based on the ACC and RMSE results, the prediction skill of Atlantic Niño/Niña generally exceeds 6 months for forecasts starting from May to November, but is limited to within 4 months for forecasts starting in boreal winter. This marked dip in prediction skill across boreal spring indicates that Atlantic Niño/Niña prediction in the NMME models suffers from a “spring predictability barrier”. Previous studies recognized that ENSO prediction skill also declines rapidly in boreal spring, and such a “spring predictability barrier” is widespread in dynamical model forecasts of ENSO [2,20,61,62]. For ENSO, a previous study attributed the spring predictability barrier largely to the lack of stochastic noise in the climate models [63]; by analogy, the spring predictability barrier in Atlantic Niño/Niña prediction may be partly due to the lack of stochastic noise forcing in the forecast models. The specific reasons for the spring predictability barrier in Atlantic Niño/Niña prediction are complicated and merit thorough investigation in the future.

3.3. Comparisons for the Atlantic Niño and Niña Prediction Skills

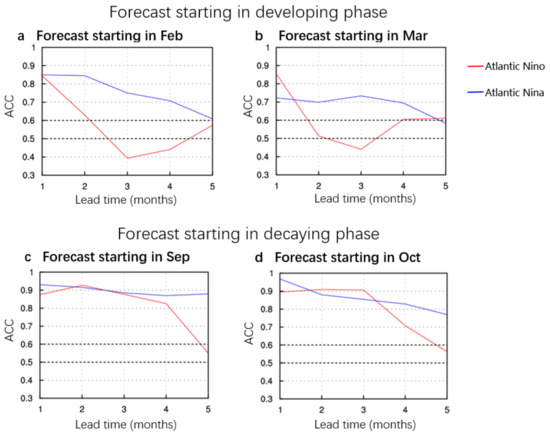

To characterize the prediction skill of Atlantic variability in more detail, we evaluate the forecast skill for Atlantic Niño and Atlantic Niña separately. We first selected seven Atlantic Niño events (1987, 1988, 1991, 1995, 1996, 1999, 2008) and six Atlantic Niña events (1982, 1983, 1992, 1994, 1997, 2005) in the observation. An Atlantic Niño (Niña) event is identified when the observed SST anomalies exceed one standard deviation (fall below minus one standard deviation) for at least two consecutive months. In general, most Atlantic Niño/Niña events are initiated in early boreal spring, peak in June–July, and decay in the following months. Figure 4 shows the MME ACC for the selected Atlantic Niño and Atlantic Niña events for forecasts starting in the developing phase (i.e., February and March) and the decaying phase (i.e., August and September). The forecasts starting in the decaying phase of Atlantic Niño (red lines in Figure 4c,d) have higher skill than those starting in the developing phase (red lines in Figure 4a,b). The same holds for Atlantic Niña (blue lines in Figure 4c,d versus blue lines in Figure 4a,b). The minimal forecast skill in the developing phase is consistent with the “spring predictability barrier” of Atlantic Niño/Niña prediction.
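The event criterion can be sketched as below. The sketch makes assumptions not stated in the text: the STD is taken over the whole monthly series (not per calendar month), the anomalies are compared with ±1 STD about zero, and an event is assigned to the calendar year of the month in which the two-month run is completed. The function name is hypothetical.

```python
import numpy as np


def select_events(index, years, n_consec=2):
    """Years with >= n_consec consecutive months above +1 STD (Nino)
    or below -1 STD (Nina) in a monthly anomaly index."""
    index = np.asarray(index, dtype=float)
    sd = index.std()

    def years_with_run(mask):
        hits, count = set(), 0
        for i, flag in enumerate(mask):
            count = count + 1 if flag else 0  # length of the current run
            if count >= n_consec:
                hits.add(int(years[i]))
        return sorted(hits)

    return years_with_run(index > sd), years_with_run(index < -sd)
```

A single month beyond the threshold does not qualify; the two-consecutive-month requirement filters out such short-lived excursions.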

Figure 4.

(a,b) The ACC of the Atl3 index as a function of lead time (x-axis) for forecasts starting in the developing phase: (a) February and (b) March. Red (blue) lines denote the selected Atlantic Niño (Atlantic Niña) events. (c,d) Same as (a,b), but for forecasts starting in the decaying phase: (c) September and (d) October.

We further compare the forecast skill for Atlantic Niño with that for Atlantic Niña. The forecast skill for Atlantic Niña is generally higher than that for Atlantic Niño (blue versus red lines in Figure 4), in both the developing and decaying phases.

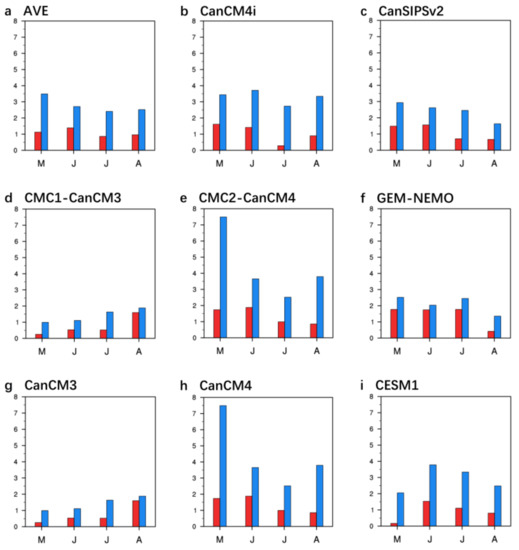

A preliminary analysis is conducted to explore the reasons for the contrasting prediction skill between Atlantic Niño and Atlantic Niña. Following recent studies (e.g., Larson and Kirtman [64]; Hu et al. [65]), we calculated the signal-to-noise ratio (SNR) of the 850-hPa zonal wind anomalies over the western equatorial Atlantic in the models. Owing to data availability, only the eight models that provided zonal wind output are used here. As shown in Figure 5, all models show that the SNR for Atlantic Niña prediction is clearly larger than that for Atlantic Niño prediction, suggesting that Atlantic Niña is more predictable than Atlantic Niño. This may partly explain why the prediction skill of Atlantic Niña is higher than that of Atlantic Niño in the NMME models. This result (i.e., a larger SNR for Atlantic Niña than for Atlantic Niño) still holds when forecasts at different lead times are used or when the equatorial Atlantic region used for the calculation is slightly altered (figures not shown).
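The exact SNR formula of [64,65] is not restated here, so the following sketch uses an assumed composite definition: the signal is the magnitude of the ensemble-mean anomaly averaged over the selected events, and the noise is the square root of the mean intra-ensemble variance. Both the definition and the function name are hypothetical.

```python
import numpy as np


def composite_snr(u_members):
    """Signal-to-noise ratio for a composite of events (assumed definition).

    u_members: array (n_events, n_members) of 850-hPa zonal-wind anomalies
    averaged over 3S-3N, 40W-20W for one target month and lead.
    """
    u_members = np.asarray(u_members, dtype=float)
    ens_mean = u_members.mean(axis=1)               # ensemble mean per event
    signal = abs(ens_mean.mean())                   # composite signal
    noise = np.sqrt(u_members.var(axis=1, ddof=1).mean())  # member spread
    return float(signal / noise)
```

Under this definition, two composites with the same ensemble-mean anomaly but different member spread differ in SNR, and the composite with the tighter spread is judged more predictable.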

Figure 5.

Signal-to-noise ratios (SNR) of zonal wind anomalies at 850 hPa averaged over the western equatorial Atlantic (3° S–3° N, 40° W–20° W) for (a) the average of eight models and (b–i) the individual models. The SNRs are derived from 3-month-lead forecasts, with the target months of May, June, July and August chosen for verification, respectively. Red (blue) bars are for the selected Atlantic Niño (Atlantic Niña) events.

3.4. Overall Probability Forecast Skill

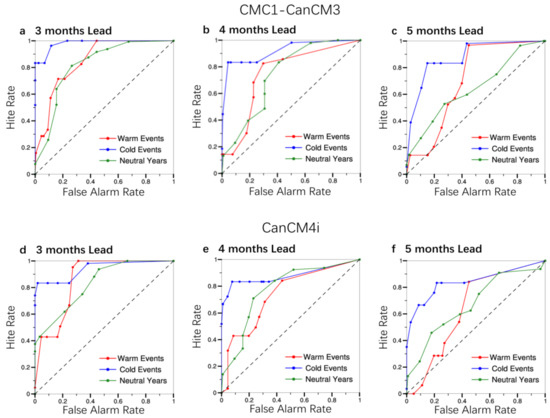

In addition to the deterministic measures, we further assess the prediction skill in a probabilistic sense, using the Brier skill score (BSS), the ranked probability skill score (RPSS) and the relative operating characteristic (ROC) to verify the probabilistic forecasts. In general, the probabilistic results are consistent with the deterministic ones. Two representative models (CMC1-CanCM3 and CanCM4i) are selected to present detailed results in the probabilistic sense.

3.4.1. BSS

The Brier score (BS) is a widely used measure of the accuracy of probability forecasts. It is the mean squared error between the forecast probability and the observed frequency. To define the BS, we first define the forecast probability for the ith forecast ($P_i$) as:

$$P_i = \frac{1}{M}\sum_{j=1}^{M} f_{i,j} \tag{1}$$

$$f_{i,j} = \begin{cases} 1, & \text{if member } j \text{ forecasts the event} \\ 0, & \text{otherwise} \end{cases} \tag{2}$$

where j (= 1, …, M) denotes the jth member of a given forecast model (here the total number of members M is 10), and i (= 1, …, N) denotes the ith probability forecast in a series of forecasts (here N = 29). For the ith forecast, $f_{i,j}$ takes the binary value 1 or 0, as given in Equation (2), and $P_i$ is the average of $f_{i,j}$ over all members. For the ith observation, the observed frequency ($O_i$) equals 1 or 0 depending on whether the event occurred:

$$O_i = \begin{cases} 1, & \text{if the event is observed} \\ 0, & \text{otherwise} \end{cases} \tag{3}$$

Then the BS is obtained as follows:

$$\mathrm{BS} = \frac{1}{N}\sum_{i=1}^{N}\left(P_i - O_i\right)^2 \tag{4}$$

To compare the BS with that of a reference forecast system ($\mathrm{BS}_{\mathrm{ref}}$), the Brier skill score (BSS) is defined as:

$$\mathrm{BSS} = 1 - \frac{\mathrm{BS}}{\mathrm{BS}_{\mathrm{ref}}} \tag{5}$$

where $\mathrm{BS}_{\mathrm{ref}}$ is the BS of the reference forecast, obtained as $\mathrm{BS}_{\mathrm{ref}} = \frac{1}{N}\sum_{i=1}^{N}(\bar{O} - O_i)^2$ with $\bar{O} = \frac{1}{N}\sum_{i=1}^{N} O_i$. That is, the observed frequency is taken as the climatological probability, so the BSS measures the skill of the probability forecast relative to a climatological forecast. A BSS greater than 0 indicates a skillful probability forecast, and a BSS of 1 indicates a perfect one.
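Equations (1)–(5) can be sketched in a few lines. The sketch assumes that an event is defined by a member exceeding (or falling below) a fixed threshold, for example ±1 STD of the Atl3 index; that threshold choice and the function names are illustrative. The BSS is undefined when the event never or always occurs in the verification period, because $\mathrm{BS}_{\mathrm{ref}}$ is then zero.

```python
import numpy as np


def member_probabilities(members, threshold, above=True):
    """P_i (Eqs. 1-2): fraction of members forecasting the event.

    members: (N forecasts, M members) of predicted Atl3 anomalies.
    """
    f = members > threshold if above else members < threshold  # f_ij in {0, 1}
    return f.mean(axis=1)


def brier_score(p, o):
    """Eq. (4): mean squared difference between P_i and O_i."""
    return float(np.mean((np.asarray(p) - np.asarray(o)) ** 2))


def brier_skill_score(p, o):
    """Eq. (5) with the observed frequency as climatological probability."""
    o = np.asarray(o, dtype=float)
    obar = o.mean()
    bs_ref = np.mean((obar - o) ** 2)
    return 1.0 - brier_score(p, o) / bs_ref
```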

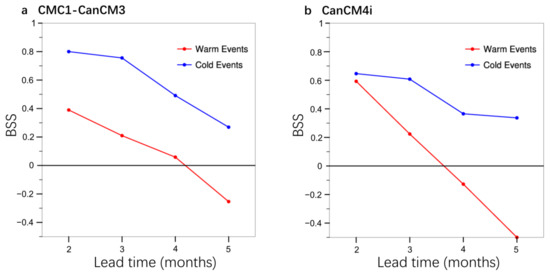

Using the BSS, we investigated the probabilistic prediction skill of Atlantic Niño/Niña in two representative models (CMC1-CanCM3 and CanCM4i) over the verification period (29 years). Each model has 10 members, and the ensemble members of a given model are weighted equally. Figure 6 shows the BSS for Atlantic Niño prediction (red) and Atlantic Niña prediction (blue) in CMC1-CanCM3 (Figure 6a) and CanCM4i (Figure 6b). In general, the BSS results reveal that the prediction skill for both Atlantic Niño and Atlantic Niña reaches four or even five months in these two models. Moreover, the probabilistic forecast skill for Atlantic Niña is superior to that for Atlantic Niño in both models. For instance, the BSS of Atlantic Niña is greater than 0.6 at 2- and 3-month lead and remains above 0.2 at 5-month lead, whereas the BSS of Atlantic Niño approaches 0 at 4-month lead. This finding that the prediction skill of Atlantic Niña is higher than that of Atlantic Niño agrees with the earlier results based on deterministic measures.

Figure 6.

(a) The BSS as a function of lead time (month) for Atlantic Niño prediction (red) and Atlantic Niña prediction (blue) in CMC1-CanCM3. (b) Same as (a), but for CanCM4i.

3.4.2. RPSS

The ranked probability skill score (RPSS) is another widely used measure for verifying probability forecasts. It is based on the ranked probability score (RPS) of a set of forecasts. For the ith forecast, the RPS is the sum of squared differences between the cumulative probabilities of the forecast and those of the corresponding observed outcome:

$$\mathrm{RPS}_i = \sum_{k=1}^{l}\left(CP_{k,i} - CO_{k,i}\right)^2, \qquad CP_{k,i} = \sum_{m=1}^{k} P_{m,i}, \qquad CO_{k,i} = \sum_{m=1}^{k} O_{m,i} \tag{6}$$

In this study, the years are grouped into three ordered categories (l = 3): Atlantic Niña year, neutral year, and Atlantic Niño year. For the ith forecast, $P_{k,i}$ is the forecast probability of the kth category (k = 1, …, l), obtained as in Equation (1). Similarly, $O_{k,i}$ is the observed frequency of the kth category, obtained as in Equation (3). The cumulative forecast probability $CP_{k,i}$ is then the sum of the forecast probabilities from the first category to the kth category, and the cumulative observed frequency $CO_{k,i}$ is the corresponding sum for the observed outcomes. The RPS of a single forecast is the sum of the squared differences over the three categories.

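The RPSS is then defined as

$$\mathrm{RPSS} = 1 - \frac{\overline{\mathrm{RPS}}_{\mathrm{fcst}}}{\overline{\mathrm{RPS}}_{\mathrm{ref}}}$$

where $\overline{\mathrm{RPS}}_{\mathrm{fcst}}$ and $\overline{\mathrm{RPS}}_{\mathrm{ref}}$ are the RPS of the forecasts being evaluated and of a reference forecast, respectively, averaged over multiple forecasts. For the reference forecast, the constant categorical probabilities Q = [0.31, 0.38, 0.31] are used for every forecast, following Tippett et al. [66]. As with the BSS, a skillful probabilistic forecast has a small RPS and a large RPSS.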
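A minimal sketch of the RPS and RPSS definitions above follows, using the reference probabilities Q = [0.31, 0.38, 0.31] quoted in the text. Because the RPS accumulates probabilities across categories, the sketch assumes the categories are ordered cold (Atlantic Niña), neutral, warm (Atlantic Niño); that ordering and the function names are our assumptions.

```python
import numpy as np

# Constant reference probabilities quoted in the text; assumed order: Nina, neutral, Nino.
Q_REF = np.array([0.31, 0.38, 0.31])


def rps(forecast_probs: np.ndarray, observed: np.ndarray) -> np.ndarray:
    """RPS of each forecast: sum over categories of squared cumulative differences.

    forecast_probs: (n_forecasts, n_categories), rows sum to 1.
    observed: (n_forecasts, n_categories), one-hot observed category.
    """
    cum_forecast = np.cumsum(forecast_probs, axis=1)
    cum_observed = np.cumsum(observed, axis=1)
    return np.sum((cum_forecast - cum_observed) ** 2, axis=1)


def rpss(forecast_probs: np.ndarray, observed: np.ndarray, q_ref: np.ndarray = Q_REF) -> float:
    """RPSS = 1 - mean RPS of the forecasts / mean RPS of the constant reference."""
    reference = np.broadcast_to(q_ref, forecast_probs.shape)
    return float(1.0 - rps(forecast_probs, observed).mean() / rps(reference, observed).mean())
```

A forecast identical to the reference probabilities scores an RPSS of 0, and a perfect categorical forecast scores 1.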

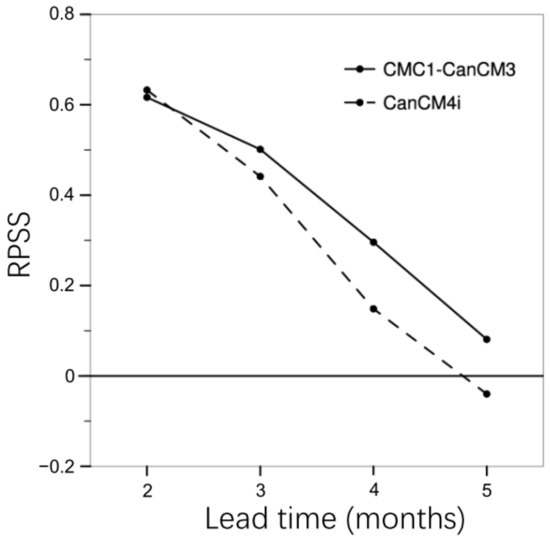

We next used the RPSS to measure the error between the probability forecasts and the observed frequencies of the Atl3 index, accumulated over the forecast categories. Three categories are used here, and the results are not sensitive to the number of categories; a previous study [66] likewise stressed that forecast skill does not depend strongly on the number of forecast categories. As shown in Figure 7, the RPSS exceeds 0.4 in both models at short lead times and then decreases with lead time, remaining at 0.15 for the 4-month-lead forecast in CanCM4i and at 0.1 for the 5-month-lead forecast in CMC1-CanCM3. From the perspective of the RPSS, the prediction skill therefore reaches 4 months for CanCM4i and 5 months for CMC1-CanCM3, which is generally consistent with the BSS results.

Figure 7.

The RPSS as a function of lead time (month) for CMC1-CanCM3 (solid line) and CanCM4i (dashed line).

3.4.3. ROC

Another commonly used method of verifying probability forecasts is the relative operating characteristic (ROC), applied here following Kirtman [67]. The ROC curve plots the hit rate (HR) against the false alarm rate (FAR) for probability forecasts defined by different numbers of ensemble members forecasting the event, where

$$\mathrm{HR} = \frac{O_1}{O_1 + O_2}, \qquad \mathrm{FAR} = \frac{NO_1}{NO_1 + NO_2}$$

Here O1 is the number of correct forecasts (hits) of the event and O2 is the number of misses; NO1 is the number of false alarms and NO2 is the number of correct rejections. HR is thus the fraction of observed events that were forewarned, and FAR is the fraction of nonevents for which a warning was nonetheless issued. An HR of one means that all occurrences of the event were correctly predicted, and an HR of zero means that none of them were. The FAR also ranges from zero to one, with zero indicating that no false alarms were issued. An ideal probabilistic forecast system would therefore have an HR of 1 and a FAR of 0.

The ROC curve consists of ten points (the models used here have ten members), running from the lower-left corner to the upper-right corner. The first point corresponds to ten out of ten ensemble members forecasting a particular event, and indicates how skillful the model is when all members consistently forecast the event to occur. The second point corresponds to nine out of ten members forecasting the event, and the remaining points along the curve vary analogously. If all the points on the ROC curve lie close to the diagonal, the probabilistic forecast system has no skill; if they cluster near the upper-left corner, the model has high probabilistic forecast skill.
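The HR and FAR definitions and the member-count points described above can be sketched as follows. The sketch assumes, as is common in ROC analysis, that the mth point uses the rule "at least m members forecast the event"; the function names are illustrative and not taken from the paper.

```python
import numpy as np


def hit_and_false_alarm_rates(warned: np.ndarray, occurred: np.ndarray) -> tuple[float, float]:
    """HR = O1 / (O1 + O2) and FAR = NO1 / (NO1 + NO2) from boolean arrays.

    Both events and nonevents must be present, otherwise a denominator is zero.
    """
    o1 = np.sum(warned & occurred)     # hits
    o2 = np.sum(~warned & occurred)    # misses
    no1 = np.sum(warned & ~occurred)   # false alarms
    no2 = np.sum(~warned & ~occurred)  # correct rejections
    return float(o1 / (o1 + o2)), float(no1 / (no1 + no2))


def roc_points(member_hits: np.ndarray, occurred: np.ndarray) -> list[tuple[float, float]]:
    """(FAR, HR) pairs, one per threshold 'at least m of n members forecast the event'.

    member_hits: boolean (n_forecasts, n_members); occurred: boolean (n_forecasts,).
    Points run from m = n (strictest, nearest the lower-left corner) down to m = 1.
    """
    counts = member_hits.sum(axis=1)
    points = []
    for m in range(member_hits.shape[1], 0, -1):
        hr, far = hit_and_false_alarm_rates(counts >= m, occurred)
        points.append((far, hr))
    return points
```

Lowering m issues more warnings, so both HR and FAR can only rise, which is why the points move from the lower-left toward the upper-right corner.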

Finally, we assessed the probabilistic forecast skill using ROC analysis. To reduce the influence of any exceptional member on the ROC curves, we recomputed the curves with a Monte Carlo technique, following Kirtman [67]: for a given model with ten members, we randomly selected nine of the ten members to calculate the ROC results, and then averaged the ROC results obtained from the different combinations. Figure 8 shows the ROC curves for the Atl3 hindcasts at lead times of three, four, and five months. In general, both models show considerable skill in predicting warm events (red curves), cold events (blue curves), and neutral conditions (green curves) at three-, four-, and five-month leads. At a three-month lead, all three ROC curves lie far from the diagonal in both models, indicating high hit rates and low false alarm rates. As the lead time increases, the ROC curves gradually approach the diagonal, indicating a drop in probabilistic forecast skill. For instance, at a five-month lead the curves for Atlantic Niño and neutral conditions lie close to the diagonal, indicating relatively low skill. Furthermore, the probabilistic forecast skill for Atlantic Niña (blue curves) is higher than that for Atlantic Niño (red curves) and neutral conditions (green curves), and this contrast holds at all three lead times analyzed here. The probabilistic forecast skill revealed by the ROC curves is thus generally consistent with the BSS and RPSS results.
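The member-subsampling step can be sketched as below, under the assumption that "different combinations" means all nine-member subsets; with ten members there are only ten such subsets, so this sketch enumerates them instead of sampling randomly. The block defines its own compact, hypothetical `roc_points` helper so that it runs on its own.

```python
from itertools import combinations

import numpy as np


def roc_points(member_hits: np.ndarray, occurred: np.ndarray) -> np.ndarray:
    """(FAR, HR) pairs for the rules 'at least m members forecast the event', m = n..1."""
    counts = member_hits.sum(axis=1)
    points = []
    for m in range(member_hits.shape[1], 0, -1):
        warned = counts >= m
        hr = np.sum(warned & occurred) / np.sum(occurred)      # hits / all events
        far = np.sum(warned & ~occurred) / np.sum(~occurred)   # false alarms / all nonevents
        points.append((far, hr))
    return np.array(points)


def subsampled_roc(member_hits: np.ndarray, occurred: np.ndarray, subset_size: int = 9) -> np.ndarray:
    """Average the ROC points over every subset of `subset_size` members."""
    n_members = member_hits.shape[1]
    curves = [
        roc_points(member_hits[:, list(subset)], occurred)
        for subset in combinations(range(n_members), subset_size)
    ]
    return np.mean(curves, axis=0)  # shape (subset_size, 2)
```

Averaging over subsets smooths out the effect of any single unusual member while keeping the same number of points per curve.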

Figure 8.

ROC curves of Atl3 index for a lead time of (a,d) three months, (b,e) four months and (c,f) five months from the model of (a–c) CMC1-CanCM3 and (d–f) CanCM4i. The Atlantic Niño (Atlantic Niña) events are denoted in red (blue) and the neutral conditions are in green.

Overall, the three methods (BSS, RPSS, and ROC) yield consistent results: the prediction skill of the two models reaches about four or even five months, and the prediction skill for Atlantic Niña is higher than that for Atlantic Niño. These probabilistic results agree well with the earlier findings based on deterministic metrics. Many previous studies (e.g., Wang et al. [21]; Liu et al. [68]) have pointed out that probabilistic and deterministic forecast skills have a monotonic, nonlinear relationship, so the results from the two approaches complement each other.

4. Possible Factors Responsible for the Atlantic Niño/Niña Forecast Errors

The analysis above has revealed the amplitude bias of the predicted Atlantic Niño/Niña and the ACC-based prediction skill of the NMME models. In this section, we investigate possible factors contributing to the Atlantic Niño/Niña forecast errors. Previous studies have pointed out that the background mean state is essential both for Atlantic Niño/Niña development in observations [13,15,69] and for Atlantic Niño/Niña simulation and prediction [49,70,71]. We therefore examine whether mean state biases contribute to the Atlantic Niño/Niña forecast errors. In addition, because the remote influence of ENSO on Atlantic SST variability is widely recognized [9,14,72], we examine whether the prediction skill of ENSO, or of the SST anomalies in the central-eastern equatorial Pacific, affects the forecast skill of Atlantic Niño/Niña. The factors determining forecast skill may well be model-dependent; our strategy here is to identify whether any common factors influence the forecast skill across the NMME models.

4.1. Mean State Biases in Equatorial Atlantic Sector

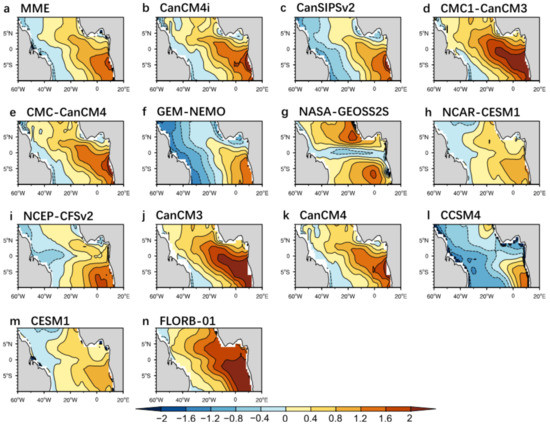

We first present the mean state and annual cycle biases in the equatorial Atlantic sector. Figure 9 shows the mean SST bias for the MME (Figure 9a) and for each individual model (Figure 9b–n). Almost all the NMME models (except NASA-GEOSS2S and CCSM4) share a common spatial pattern of mean SST bias. In particular, there is an obvious warm bias in the central-eastern equatorial Atlantic, which extends along the west coast of Africa; in the MME, the mean SST bias reaches around 1 °C east of 20° W. In contrast, a cold bias emerges along the western edge of the equatorial Atlantic basin, weakening the zonal gradient of mean SST across the equatorial Atlantic. Consistent with this mean SST bias, the MME shows weakened easterly trade winds and a reduced mean thermocline slope in the equatorial Atlantic (not shown).
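The zonal-gradient bias used later (Atl3 mean SST bias minus the bias over the western box 3° S–3° N, 40° W–20° W, as defined in the Figure 11 caption) can be computed with a cos-latitude weighted box average. This sketch assumes a regular latitude-longitude grid with longitudes from −180 to 180 and the commonly used Atl3 box (3° S–3° N, 20° W–0°), which we assume matches the paper's definition; neither the grid layout nor the weighting is specified in this section.

```python
import numpy as np

# Boxes as ((lat_min, lat_max), (lon_min, lon_max)); longitudes in degrees east, -180..180.
ATL3_BOX = ((-3.0, 3.0), (-20.0, 0.0))    # assumed conventional Atl3 definition
WEST_BOX = ((-3.0, 3.0), (-40.0, -20.0))  # western equatorial Atlantic box from the Figure 11 caption


def box_mean(field, lats, lons, lat_bounds, lon_bounds) -> float:
    """Cos-latitude weighted mean of a (lat, lon) field inside a lat-lon box (bounds inclusive)."""
    lat_mask = (lats >= lat_bounds[0]) & (lats <= lat_bounds[1])
    lon_mask = (lons >= lon_bounds[0]) & (lons <= lon_bounds[1])
    sub = field[np.ix_(lat_mask, lon_mask)]
    weights = np.broadcast_to(np.cos(np.deg2rad(lats[lat_mask]))[:, None], sub.shape)
    return float(np.sum(sub * weights) / np.sum(weights))


def zonal_gradient_bias(model_mean_sst, obs_mean_sst, lats, lons) -> float:
    """Mean SST bias over Atl3 minus mean SST bias over the western box."""
    bias = model_mean_sst - obs_mean_sst
    return box_mean(bias, lats, lons, *ATL3_BOX) - box_mean(bias, lats, lons, *WEST_BOX)
```

With the bias pattern described above (warm in the east, cold in the west), this quantity is positive, corresponding to a weakened east-west SST contrast.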

Figure 9.

The biases of the predicted mean SST for (a) the MME and (b–n) the individual models. The biases shown here are derived from the four-month-lead forecasts; the biases at other lead times have a similar spatial pattern, except that their magnitude increases with lead time.

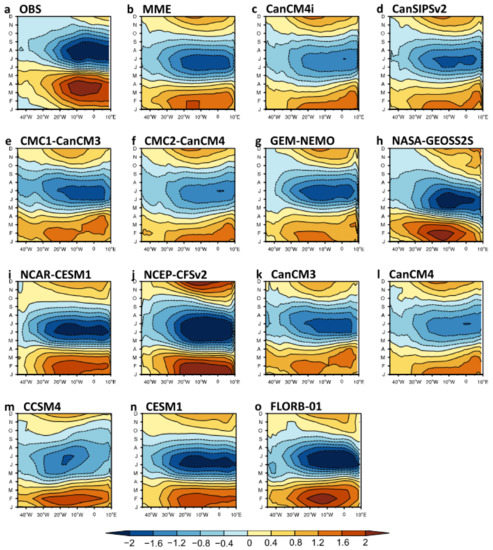

We also examined the systematic bias of the predicted annual cycle in the eastern equatorial Atlantic. Figure 10 shows the annual cycle of SST, with the annual mean removed. In the observations (Figure 10a), an annual period dominates the SST cycle in the eastern equatorial Atlantic, with a visible westward extension; the warmest (coldest) SST in the eastern equatorial Atlantic occurs in March–April (August), with a magnitude of more than 2 °C. In the forecasts, most NMME models underestimate the intensity of the SST annual cycle, while a few models (e.g., NCEP-CFSv2) overestimate it. In addition, the phase of the annual cycle tends to shift slightly earlier than in the observations. Previous studies (e.g., [2]) have suggested that biases in the amplitude of the SST annual cycle could influence ENSO prediction skill; we therefore focus next on the relationship between the amplitude bias of the SST annual cycle and the Atlantic Niño/Niña prediction skill.
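The annual cycle and its amplitude can be sketched as: average all Januaries, all Februaries, and so on, subtract the annual mean, and take the standard deviation of the resulting 12 values (the amplitude definition given in the Figure 13 caption). The sketch assumes an Atl3-averaged monthly series that starts in January and spans whole years, and uses the population standard deviation (ddof = 0); both are our assumptions.

```python
import numpy as np


def annual_cycle(monthly_sst) -> np.ndarray:
    """12-month climatology minus its annual mean (series must start in January, whole years)."""
    clim = np.asarray(monthly_sst, dtype=float).reshape(-1, 12).mean(axis=0)
    return clim - clim.mean()


def annual_cycle_amplitude(monthly_sst) -> float:
    """Standard deviation of the climatological annual cycle about the annual mean."""
    return float(np.std(annual_cycle(monthly_sst)))


def annual_cycle_amplitude_bias(model_sst, obs_sst) -> float:
    """Forecast amplitude minus observed amplitude; negative means a too-weak annual cycle."""
    return annual_cycle_amplitude(model_sst) - annual_cycle_amplitude(obs_sst)
```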

Figure 10.

Annual cycle of SST along the equatorial Atlantic (averaged over 3° S–3° N) for (a) the observations, (b) the MME and (c–o) the individual models. The annual cycle is the monthly mean SST minus the annual mean SST; for the MME and the individual models it is derived from the four-month-lead forecasts, and it resembles that at other lead times.

4.2. Factors Responsible for the Amplitude Bias of Atlantic Niño/Niña Prediction

Previous sections have shown that the forecasts have mean state biases, including a warm bias of mean SST in the eastern equatorial Atlantic, an underestimated zonal gradient of mean SST across the equatorial Atlantic, and an underestimated intensity of the annual cycle. We now examine whether these mean state biases are linked to the prediction biases across the NMME models.

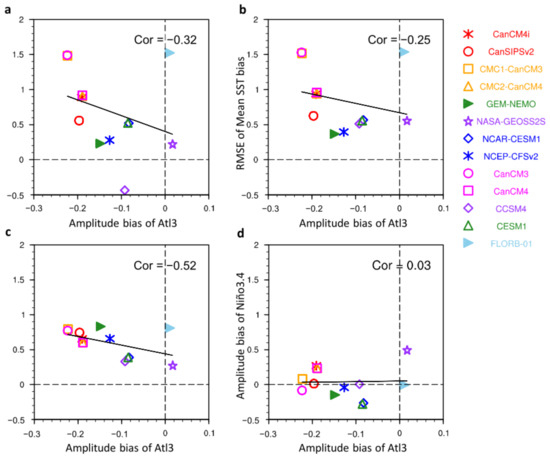

As shown above, underestimation of the predicted Atlantic Niño/Niña amplitude is prevalent in the NMME forecasts. Here, the difference between the STD of the predicted Atl3 index and the STD of the observed Atl3 index (model forecast minus observation) measures the amplitude bias in predicting Atlantic Niño/Niña. We first investigate the relationship between the mean SST biases and this amplitude bias. Figure 11a shows the scatter diagram between the amplitude bias and the mean SST bias averaged over the Atl3 region. The corresponding correlation coefficient is relatively low and does not reach the 95% confidence level (the critical value for the 95% confidence level is 0.55). Similarly, the correlation between the amplitude bias and the RMSE of mean SST biases over the Atl3 region is low and insignificant (Figure 11b). These results indicate that the mean SST bias in the eastern equatorial Atlantic may not be responsible for the amplitude bias. Furthermore, the amplitude bias is not significantly correlated with the bias of the zonal gradient of mean SST in the equatorial Atlantic at the 95% confidence level (Figure 11c). Analogous to the amplitude bias of the Atl3 index, we calculated the amplitude bias of the Niño3.4 index in the nine-month-lead forecasts of each model. The relationship between the amplitude biases of the predicted Atl3 and Niño3.4 indices is shown in Figure 11d; their low and insignificant correlation indicates that the amplitude bias in predicting ENSO is not responsible for the amplitude bias in predicting Atlantic Niño/Niña. Note that the Atl3 amplitude biases above are derived from the three-month-lead forecasts.

We have further calculated the results for other lead times (e.g., the five-month-lead forecasts shown in Figure 12) and found that the main conclusion holds. Nonetheless, these statistically insignificant results do not imply that the mean state bias has no impact on the prediction errors; its impact may instead be model-dependent.
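The 0.55 critical value quoted above is consistent with a two-tailed test on the Pearson correlation across 13 models (the number of individual-model panels in Figure 9), using r_crit = t_crit / sqrt(t_crit² + n − 2). The sketch below reproduces it with only the standard library by numerically integrating the Student's t density; treating n = 13 and a two-tailed test as the paper's setup is our assumption.

```python
import math


def t_cdf(x: float, df: int, steps: int = 2000) -> float:
    """Student's t CDF for x >= 0: 0.5 plus a Simpson's-rule integral of the pdf on [0, x]."""
    coef = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))

    def pdf(u: float) -> float:
        return coef * (1 + u * u / df) ** (-(df + 1) / 2)

    h = x / steps  # steps must be even for Simpson's rule
    total = pdf(0.0) + pdf(x)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * pdf(i * h)
    return 0.5 + total * h / 3


def t_critical(df: int, alpha: float = 0.05) -> float:
    """Two-tailed critical t value, found by bisection on the CDF."""
    target = 1 - alpha / 2
    lo, hi = 0.0, 20.0
    for _ in range(50):
        mid = (lo + hi) / 2
        if t_cdf(mid, df) < target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def r_critical(n_models: int, alpha: float = 0.05) -> float:
    """Smallest |r| significant at level alpha (two-tailed) for n_models data points."""
    df = n_models - 2
    t = t_critical(df, alpha)
    return t / math.sqrt(t * t + df)


print(round(r_critical(13), 3))  # prints 0.553
```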

Figure 11.

Scatter diagram showing the relationship between the amplitude bias of the predicted Atl3 index (x-axis) and (a) the mean SST bias averaged over the Atl3 region, (b) the RMSE of mean SST biases averaged over the Atl3 region, (c) the bias of the zonal gradient of mean SST, and (d) the amplitude bias of the predicted Niño3.4 index (i.e., SST anomalies averaged over 5° S–5° N, 170° W–120° W). The amplitude bias of the predicted Atl3 index is the STD of the predicted Atl3 index minus the STD of the observed Atl3 index (model forecast minus observation). The bias of the zonal gradient of mean SST is the mean SST bias averaged over the Atl3 region minus that in the western equatorial Atlantic (3° S–3° N, 40° W–20° W). The linear regression line is shown, and the correlation coefficient is given at the top right of each panel. The relationships shown here are derived from the three-month-lead forecasts and resemble those at other lead times.

Figure 12.

Same as Figure 11, except for five-month-lead forecasts.

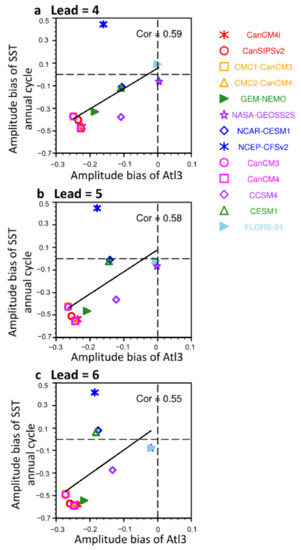

Our results suggest that the amplitude bias in predicting Atlantic Niño/Niña is associated with the amplitude bias of the SST annual cycle. As shown in Figure 13, the fidelity of the predicted Atlantic Niño/Niña amplitude is well correlated with the fidelity of the predicted SST annual cycle amplitude. The correlation coefficients between them for the four-, five-, and six-month-lead forecasts are 0.59, 0.58, and 0.55, respectively, which are significant at the 95% confidence level. Moreover, the inter-model correlation increases considerably when an outlier (the NCEP-CFSv2 forecasts) is excluded. Overall, our results indicate that the amplitude bias in predicting Atlantic Niño/Niña could be attributed to the systematic bias in predicting the amplitude of the SST annual cycle, possibly because the background mean state plays a vital role in modulating the amplitude of interannual variability. The underlying mechanism will be analyzed in detail in future work.
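The inter-model comparison behind Figure 13, including the effect of dropping an outlier model, amounts to a Pearson correlation across per-model amplitude biases. A minimal sketch follows; the function names are hypothetical, and the test values are made up to show the mechanics, not NMME results.

```python
import numpy as np


def amplitude_bias(forecast_index, observed_index) -> float:
    """STD of the predicted index minus STD of the observed index (forecast minus observation)."""
    return float(np.std(forecast_index) - np.std(observed_index))


def intermodel_correlation(x, y, exclude=None) -> float:
    """Pearson correlation across models, optionally dropping one model by its index."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    if exclude is not None:
        keep = np.arange(x.size) != exclude
        x, y = x[keep], y[keep]
    return float(np.corrcoef(x, y)[0, 1])
```

Here x would hold each model's Atl3 amplitude bias and y each model's annual-cycle amplitude bias; `exclude=k` removes the kth model before correlating.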

Figure 13.

Scatter diagram showing the relationship between the amplitude bias of the predicted Atl3 index (x-axis) and the amplitude bias of the SST annual cycle averaged over the Atl3 region (y-axis) for (a) four-month-lead, (b) five-month-lead, and (c) six-month-lead forecasts. Here the amplitude of the annual cycle is defined as its standard deviation about the annual mean, and the amplitude bias of the SST annual cycle is the forecast amplitude minus the observed amplitude. The linear regression line is shown, and the correlation coefficient is given at the top right of each panel.

4.3. Factors Responsible for the Prediction Skills Based on ACC

In terms of ACC, the MME forecast skill of Atlantic Niño/Niña extends to longer lead times than those reported in previous studies. However, the skill is limited to a lead time of around five months, and it does not overcome the aforementioned “spring predictability barrier”. It is therefore worth identifying the factors responsible for the ACC-based forecast skill.

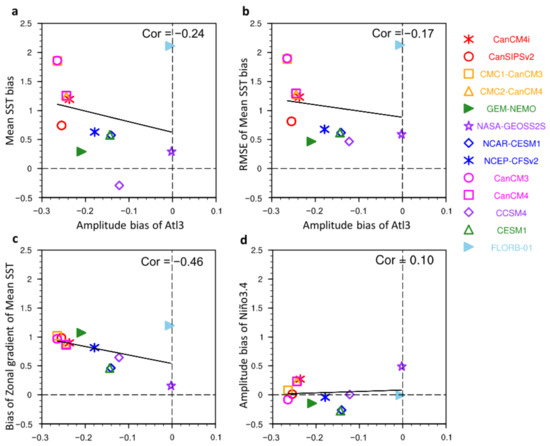

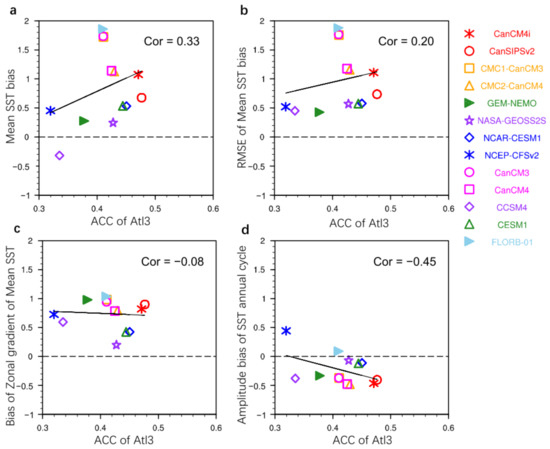

Again, because some factors may be model-dependent, we attempt to identify common factors from the multi-model forecast results. Figure 14a–d show scatter diagrams between the ACC of the Atlantic Niño/Niña forecasts and the mean SST bias averaged over the Atl3 region (Figure 14a), the RMSE of mean SST biases in the Atl3 region (Figure 14b), the bias of the zonal gradient of mean SST in the equatorial Atlantic (Figure 14c), and the amplitude bias of the SST annual cycle (Figure 14d). The corresponding correlation coefficients are relatively low and insignificant, indicating that the local mean SST bias, the bias of the zonal gradient of mean SST, and the bias of the SST annual cycle are not responsible for the ACC-based forecast skill.

Figure 14.

Scatter diagram showing the relationship between the ACC of the Atl3 index (x-axis) and (a) the mean SST bias averaged over the Atl3 region, (b) the RMSE of mean SST biases averaged over the Atl3 region, (c) the bias of the zonal gradient of mean SST, and (d) the amplitude bias of the SST annual cycle averaged over the Atl3 region. The linear regression line is shown, and the correlation coefficient is given at the top right of each panel. The relationships shown here are derived from the four-month-lead forecasts and resemble those at other lead times.

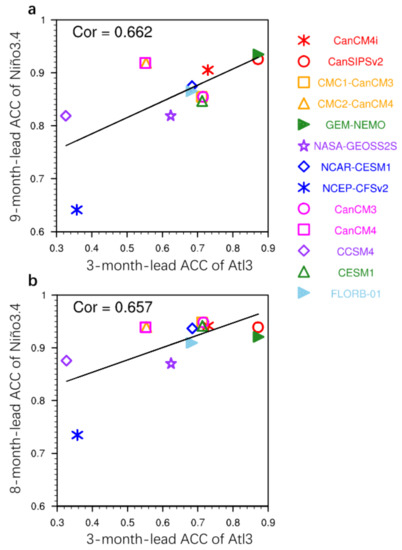

Since recent studies have suggested a close linkage between interannual variability in the tropical Pacific and that in the tropical Atlantic (e.g., [9,72]), we further investigate whether the prediction skill of ENSO affects the prediction skill of Atlantic Niño/Niña. The observed Atlantic Niño and Niña events and their selection criteria are described in Section 3.3. For a given model, we first calculated the forecast skill for all the selected Atlantic Niño and Niña events (e.g., the ACC of the June–July averaged Atl3 index in the April-initiated forecast is 0.73 in CanCM4i), and then calculated the forecast skill for the Niño3.4 index in the preceding boreal winter (e.g., the ACC of the preceding December–February averaged Niño3.4 index in the May- and June-initiated forecasts is 0.91 and 0.94, respectively, in CanCM4i). Finally, we obtained the relationship across the NMME models between the prediction skill of the Atlantic Niño/Niña events and the prediction skill of the preceding ENSO, i.e., of the preceding December–February averaged Niño3.4 index. As shown in Figure 15a, the correlation coefficient reaches 0.66, indicating a significant positive correlation between the Atlantic Niño/Niña prediction skill and the preceding ENSO prediction skill. Consistently, the eight-month-lead forecasts of the Niño3.4 index show the same relationship (Figure 15b). These results indicate that how well a model predicts Atlantic Niño/Niña may depend greatly on how well it predicts the preceding ENSO, or the SST anomalies in the central-eastern equatorial Pacific.
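The event-restricted ACC used for Figure 15 can be sketched as a centered (Pearson) anomaly correlation computed over the selected years only. Whether the paper uses the centered or uncentered form is not stated in this section, so the centered form is an assumption, as are the function names; the test values are made up.

```python
import numpy as np


def acc(forecast_anom, observed_anom) -> float:
    """Anomaly correlation coefficient in its centered (Pearson) form."""
    f = np.asarray(forecast_anom, dtype=float)
    o = np.asarray(observed_anom, dtype=float)
    f = f - f.mean()
    o = o - o.mean()
    return float(np.sum(f * o) / np.sqrt(np.sum(f * f) * np.sum(o * o)))


def acc_over_events(forecast_by_year, observed_by_year, event_years) -> float:
    """ACC restricted to selected event years.

    forecast_by_year / observed_by_year map year -> seasonal-mean anomaly, e.g. the
    June-July Atl3 index or the preceding December-February Nino3.4 index.
    """
    years = sorted(event_years)
    return acc([forecast_by_year[y] for y in years], [observed_by_year[y] for y in years])
```

Computing one such ACC for the Atl3 index and one for the preceding Niño3.4 index per model gives the pair of coordinates plotted for that model in Figure 15.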

Figure 15.

Scatter diagram showing (a) the relationship between the three-month-lead ACC of the Atl3 index (x-axis) and the nine-month-lead ACC of the Niño3.4 index (y-axis). To specifically examine the relationship between the prediction skills of Atlantic Niño/Niña and ENSO (or the SST anomalies in the central-eastern equatorial Pacific), the ACC here is calculated over the selected Atlantic Niño and Niña years, using the June–July averaged Atl3 index to verify the Atlantic Niño/Niña prediction skill and the preceding December–February averaged Niño3.4 index to verify the preceding ENSO prediction skill. (b) Same as (a), but for the eight-month-lead ACC of the Niño3.4 index. ACC results at other lead times generally show the same positive correlation.

It is worth mentioning that Hu et al. [36], using forecasts from a single dynamical model, may have been among the first to suggest that ENSO prediction skill influences the prediction skill of Atlantic variability. Our results based on the NMME models further support this view: the Atlantic Niño/Niña prediction skill, in particular the ACC, depends primarily on the preceding ENSO prediction skill. This result is consistent with recent studies (e.g., [3,14,73,74]) suggesting that ENSO can influence Atlantic Niño/Niña through interbasin interaction between the tropical Pacific and Atlantic.

5. Conclusions

Atlantic Niño/Niña is one of the dominant modes of interannual variability in the climate system and exerts great influence on the weather and climate of the surrounding continental regions. However, previous studies showed that the prediction skill for the SST anomalies associated with Atlantic Niño/Niña reaches only around two months, or at most three months. Using the recently released NMME forecasts, this study assessed the prediction skill of Atlantic Niño/Niña based on both deterministic and probabilistic measures and investigated the possible factors responsible for the forecast errors. The main conclusions are summarized below.

- (1) Almost all the NMME models underestimate the amplitude of Atlantic Niño/Niña, and the amplitude bias generally increases with lead time. For the majority of the individual NMME models, the prediction skill of Atlantic Niño/Niña reaches three months. Specifically, most of the models can predict Atlantic Niño/Niña at a three-month lead with an RMSE of less than 0.4, and four models (CanCM4i, CanSIPSv2, CMC1-CanCM3, and CMC2-CanCM4) keep the RMSE below 0.4 at a four-month lead. When one STD of the observed Atl3 index is chosen as the threshold, most of the models can predict the Atl3 index at a seven-month or even 12-month lead. When 0.6 is chosen as the ACC cutoff, the prediction skill of half of the NMME models reaches three months. Among the NMME models, CanCM4i and CanSIPSv2 show the best skill in predicting Atlantic Niño/Niña. Two representative models were selected to further assess the prediction skill in a probabilistic sense; the results of the probabilistic measures (BSS, RPSS, and ROC) agree with each other and are generally consistent with those of the deterministic measures.

- (2) The MME of the NMME models shows better prediction skill than any individual model. Specifically, the MME prediction skill reaches six (more than four) months when 0.5 (0.6) is chosen as the ACC cutoff. In terms of RMSE, the MME remains well below one STD of the observed Atl3 index even at a 12-month lead, and its prediction skill reaches nearly five months when 0.4 is chosen as the threshold. The prediction skill of the MME therefore reaches around five months, indicating that the multi-model ensemble is an effective approach for reducing forecast errors.

- (3) The prediction skill of Atlantic Niño/Niña shows clear seasonality. Both ACC and RMSE results show that the prediction skill generally exceeds six months for forecasts initiated from May to November, but is limited to within four months for forecasts initiated in boreal winter. As the prediction skill shows a marked dip across boreal spring, the prediction of Atlantic Niño/Niña in the NMME models appears to suffer from a “spring predictability barrier”.

- (4) More detailed assessments show that the prediction skill for Atlantic Niña is higher than that for Atlantic Niño, and that the prediction skill in the developing phase is better than that in the decaying phase. A preliminary analysis shows that, in all the models, the SNR for the Atlantic Niña prediction is clearly larger than that for the Atlantic Niño prediction, indicating that Atlantic Niña is more predictable than Atlantic Niño. This contrast in potential predictability, estimated by the SNR, may partly explain why Atlantic Niña is predicted more skillfully than Atlantic Niño in the NMME models.

- (5) Our further analysis shows that the amplitude bias of the predicted Atlantic Niño/Niña is primarily attributable to the amplitude bias of the SST annual cycle, whereas the mean state biases (e.g., the mean SST bias in the Atl3 region) and the amplitude bias of the Niño3.4 index are not common factors across the models. Generally speaking, a weak annual cycle of SST corresponds to underestimated Atlantic Niño/Niña variability, and vice versa. The underlying mechanism requires further investigation. In terms of ACC, the prediction skill for Atlantic Niño/Niña events depends, to a large extent, on the prediction skill for the preceding boreal winter (December–February) averaged Niño3.4 index (i.e., the preceding ENSO).
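The lead-time thresholds quoted in these conclusions (ACC cutoffs of 0.5 or 0.6, RMSE thresholds of 0.4 or one STD) turn a skill-versus-lead curve into a single skill horizon. A minimal sketch follows, assuming leads are numbered from one month and that the horizon ends at the first lead that fails the threshold; the paper may count leads or handle non-monotonic curves differently, and the function name is hypothetical.

```python
def skill_horizon(scores, threshold, higher_is_better=True):
    """Longest lead (months) up to which every score stays on the skillful side of threshold.

    scores[k] is the score at lead k + 1 months. Use higher_is_better=True for ACC
    (e.g. threshold 0.6) and higher_is_better=False for RMSE (e.g. threshold 0.4).
    """
    horizon = 0
    for lead, score in enumerate(scores, start=1):
        ok = score >= threshold if higher_is_better else score <= threshold
        if not ok:
            break
        horizon = lead
    return horizon
```

For example, an ACC curve of [0.9, 0.8, 0.7, 0.65, 0.55] gives a horizon of four months with a 0.6 cutoff.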

The factors affecting seasonal prediction skill are many and may be model-dependent. For instance, model formulation, such as the initialization method, model resolution, and physical parameterization schemes, can greatly influence the seasonal prediction skill of a given model. Our strategy in this study was therefore to identify common factors influencing the prediction skill of Atlantic Niño/Niña using multiple models from the latest NMME. Based on the multi-model results, the prediction skill of Atlantic Niño/Niña may benefit from a more realistic annual cycle in the eastern equatorial Atlantic sector and from improved ENSO prediction skill in dynamical models.

Author Contributions

Conceptualization, R.W. and L.C.; methodology, R.W.; software, R.W.; validation, R.W. and L.C.; formal analysis, R.W.; investigation, R.W., L.C. and J.-J.L.; resources, R.W.; data curation, R.W.; writing—original draft preparation, R.W. and L.C.; writing—review and editing, L.C., T.L. and J.-J.L.; visualization, R.W.; supervision, T.L.; project administration, L.C.; funding acquisition, L.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Publicly available datasets were analyzed in this study. The NMME model datasets can be obtained from https://www.cpc.ncep.noaa.gov/products/NMME/data.html (accessed on 31 May 2021), and the HadISST data can be obtained from https://www.metoffice.gov.uk/hadobs/hadisst/data/download.html (accessed on 31 May 2021).

Acknowledgments

This work was jointly supported by the National Key Research and Development Program on Monitoring, Early Warning and Prevention of Major Natural Disaster (2019YFC1510004, 2018YFC1506002), NSFC Grants (Nos. 42005020, 42088101, 41630423), NSF Grant AGS-2006553, and NSF of Jiangsu (BK20190781).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Li, X.; Xie, S.-P.; Gille, S.T.; Yoo, C. Atlantic-induced pan-tropical climate change over the past three decades. Nat. Clim. Chang. 2016, 6, 275–279.

- Jin, E.K.; Kinter, J.L.; Wang, B.; Park, C.-K.; Kang, I.-S.; Kirtman, B.P.; Kug, J.-S.; Kumar, A.; Luo, J.-J.; Schemm, J.; et al. Current status of ENSO prediction skill in coupled ocean–atmosphere models. Clim. Dyn. 2008, 31, 647–664.

- Wang, C.; Kucharski, F.; Barimalala, R.; Bracco, A. Teleconnections of the tropical Atlantic to the tropical Indian and Pacific Oceans: A review of recent findings. Meteorol. Z. 2009, 18, 445–454.

- Lübbecke, J.F.; Rodríguez-Fonseca, B.; Richter, I.; Martín-Rey, M.; Losada, T.; Polo, I.; Keenlyside, N.S. Equatorial Atlantic variability—Modes, mechanisms, and global teleconnections. Wiley Interdiscip. Rev. Clim. Chang. 2018, 9, e527.

- Tang, Y.; Zhang, R.-H.; Liu, T.; Duan, W.; Yang, D.; Zheng, F.; Ren, H.; Lian, T.; Gao, C.; Chen, D.; et al. Progress in ENSO prediction and predictability study. Natl. Sci. Rev. 2018, 5, 826–839.

- Nobre, P.; Shukla, J. Variations of Sea Surface Temperature, Wind Stress, and Rainfall over the Tropical Atlantic and South America. J. Clim. 1996, 9, 2464–2479.

- Folland, C.K.; Colman, A.W.; Rowell, D.P.; Davey, M.K. Predictability of Northeast Brazil Rainfall and Real-Time Forecast Skill, 1987–98. J. Clim. 2001, 14, 1937–1958.

- Giannini, A.; Saravanan, R.; Chang, P. The preconditioning role of Tropical Atlantic Variability in the development of the ENSO teleconnection: Implications for the prediction of Nordeste rainfall. Clim. Dyn. 2004, 22, 839–855.

- Wang, C. Three-ocean interactions and climate variability: A review and perspective. Clim. Dyn. 2019, 53, 5119–5136.

- Zebiak, S.E. Air–Sea Interaction in the Equatorial Atlantic Region. J. Clim. 1993, 6, 1567–1586.

- Murtugudde, R.G.; Beauchamp, J.; Busalacchi, A.J.; Ballabrera-Poy, J. Relationship between zonal and meridional modes in the tropical Atlantic. Geophys. Res. Lett. 2001, 28, 4463–4466.

- Polo, I.; Rodríguez-Fonseca, B.; Losada, T.; García-Serrano, J. Tropical Atlantic Variability Modes (1979–2002). Part I: Time-Evolving SST Modes Related to West African Rainfall. J. Clim. 2008, 21, 6457–6475.

- Xie, S.-P.; Carton, J.A.; Wang, C. Tropical Atlantic Variability: Patterns, Mechanisms, and Impacts. Large Igneous Prov. 2013, 147, 121–142.

- Chang, P.; Fang, Y.; Saravanan, R.; Ji, L.; Seidel, H. The cause of the fragile relationship between the Pacific El Niño and the Atlantic Niño. Nature 2006, 443, 324–328.

- Keenlyside, N.S.; Latif, M. Understanding Equatorial Atlantic Interannual Variability. J. Clim. 2007, 20, 131–142.

- Lübbecke, J.F.; McPhaden, M.J. On the Inconsistent Relationship between Pacific and Atlantic Niños. J. Clim. 2012, 25, 4294–4303.

- Richter, I.; Xie, S.-P.; Morioka, Y.; Doi, T.; Taguchi, B.; Behera, S. Phase locking of equatorial Atlantic variability through the seasonal migration of the ITCZ. Clim. Dyn. 2017, 48, 3615–3629.

- Carton, J.A.; Huang, B. Warm Events in the Tropical Atlantic. J. Phys. Oceanogr. 1994, 24, 888–903.

- Wang, B.; Xiang, B.; Lee, J.-Y. Subtropical High predictability establishes a promising way for monsoon and tropical storm predictions. Proc. Natl. Acad. Sci. USA 2013, 110, 2718–2722.

- Luo, J.-J.; Masson, S.; Behera, S.K.; Yamagata, T. Extended ENSO Predictions Using a Fully Coupled Ocean–Atmosphere Model. J. Clim. 2008, 21, 84–93.

- Wang, B.; Lee, J.-J.; Kang, I.-S.; Shukla, J.; Park, C.-K.; Kumar, A.; Schemm, J.; Cocke, S.; Kug, J.-S.; Luo, J.-J.; et al. Advance and prospectus of seasonal prediction: Assessment of the APCC/CliPAS 14-model ensemble retrospective seasonal prediction (1980–2004). Clim. Dyn. 2009, 33, 93–117.

- Kumar, A.; Hu, Z.-Z.; Jha, B.; Peng, P. Estimating ENSO predictability based on multi-model hindcasts. Clim. Dyn. 2017, 48, 39–51. [Google Scholar] [CrossRef]

- Zheng, F.; Zhu, J.; Wang, H.; Zhang, R.-H. Ensemble hindcasts of ENSO events over the past 120 years using a large number of ensembles. Adv. Atmospheric Sci. 2009, 26, 359–372. [Google Scholar] [CrossRef]

- Zheng, F.; Zhu, J. Improved ensemble-mean forecasting of ENSO events by a zero-mean stochastic error model of an intermediate coupled model. Clim. Dyn. 2016, 47, 3901–3915.

- Zhang, R.-H.; Yu, Y.; Song, Z.; Ren, H.-L.; Tang, Y.; Qiao, F.; Wu, T.; Gao, C.; Hu, J.; Tian, F.; et al. A review of progress in coupled ocean-atmosphere model developments for ENSO studies in China. J. Oceanol. Limnol. 2020, 38, 930–961.

- Zhang, R.-H.; Gao, C. The IOCAS intermediate coupled model (IOCAS ICM) and its real-time predictions of the 2015–2016 El Niño event. Sci. Bull. 2016, 61, 1061–1070.

- Luo, J.-J.; Masson, S.; Behera, S.; Yamagata, T. Experimental Forecasts of the Indian Ocean Dipole Using a Coupled OAGCM. J. Clim. 2007, 20, 2178–2190.

- Liu, H.; Tang, Y.; Chen, D.; Lian, T. Predictability of the Indian Ocean Dipole in the coupled models. Clim. Dyn. 2016, 48, 2005–2024.

- Tan, X.; Tang, Y.; Lian, T.; Zhang, S.; Liu, T.; Chen, D. Effects of Semistochastic Westerly Wind Bursts on ENSO Predictability. Geophys. Res. Lett. 2020, 47, 086828.

- Doi, T.; Storto, A.; Behera, S.K.; Navarra, A.; Yamagata, T. Improved Prediction of the Indian Ocean Dipole Mode by Use of Subsurface Ocean Observations. J. Clim. 2017, 30, 7953–7970.

- Wang, F.; Chang, P. A Linear Stability Analysis of Coupled Tropical Atlantic Variability. J. Clim. 2008, 21, 2421–2436.

- Thompson, C.J.; Battisti, D.S. A Linear Stochastic Dynamical Model of ENSO. Part I: Model Development. J. Clim. 2000, 13, 2818–2832.

- Thompson, C.J.; Battisti, D.S. A Linear Stochastic Dynamical Model of ENSO. Part II: Analysis. J. Clim. 2001, 14, 445–466.

- Li, X.; Bordbar, M.H.; Latif, M.; Park, W.; Harlaß, J. Monthly to seasonal prediction of tropical Atlantic sea surface temperature with statistical models constructed from observations and data from the Kiel Climate Model. Clim. Dyn. 2020, 54, 1829–1850.

- Stockdale, T.N.; Balmaseda, M.A.; Vidard, A. Tropical Atlantic SST Prediction with Coupled Ocean–Atmosphere GCMs. J. Clim. 2006, 19, 6047–6061.

- Hu, Z.-Z.; Huang, B. The Predictive Skill and the Most Predictable Pattern in the Tropical Atlantic: The Effect of ENSO. Mon. Weather Rev. 2007, 135, 1786–1806.

- Kirtman, B.P.; Min, D.; Infanti, J.M.; Kinter, J.L.; Paolino, D.A.; Zhang, Q.; van den Dool, H.; Saha, S.; Mendez, M.P.; Becker, E.; et al. The North American Multimodel Ensemble: Phase-1 Seasonal-to-Interannual Prediction; Phase-2 toward Developing Intraseasonal Prediction. Bull. Am. Meteorol. Soc. 2014, 95, 585–601.

- Becker, E.; van den Dool, H.; Zhang, Q. Predictability and Forecast Skill in NMME. J. Clim. 2014, 27, 5891–5906.

- Chen, L.-C.; van den Dool, H.; Becker, E.; Zhang, Q. ENSO Precipitation and Temperature Forecasts in the North American Multimodel Ensemble: Composite Analysis and Validation. J. Clim. 2017, 30, 1103–1125.

- Zhang, W.; Villarini, G.; Slater, L.; Vecchi, G.; Bradley, A. Improved ENSO Forecasting Using Bayesian Updating and the North American Multimodel Ensemble (NMME). J. Clim. 2017, 30, 9007–9025.

- Wu, Y.; Tang, Y. Seasonal predictability of the tropical Indian Ocean SST in the North American multimodel ensemble. Clim. Dyn. 2019, 53, 3361–3372.

- Pillai, P.A.; Rao, S.A.; Ramu, D.A.; Pradhan, M.; George, G. Seasonal prediction skill of Indian summer monsoon rainfall in NMME models and monsoon mission CFSv2. Int. J. Climatol. 2018, 38, e847–e861.

- Singh, B.; Cash, B.; Kinter, J.L., III. Indian summer monsoon variability forecasts in the North American multimodel ensemble. Clim. Dyn. 2019, 53, 7321–7334.

- Hua, L.; Su, J. Southeastern Pacific error leads to failed El Niño forecasts. Geophys. Res. Lett. 2020, 47, 088764.

- Newman, M.; Sardeshmukh, P.D. Are we near the predictability limit of tropical Indo-Pacific sea surface temperatures? Geophys. Res. Lett. 2017, 44, 8520–8529.

- Lee, D.E.; Chapman, D.; Henderson, N.; Chen, C.; Cane, M.A. Multilevel vector autoregressive prediction of sea surface temperature in the North Tropical Atlantic Ocean and the Caribbean Sea. Clim. Dyn. 2016, 47, 95–106.

- Harnos, D.S.; Schemm, J.-K.E.; Wang, H.; Finan, C.A. NMME-based hybrid prediction of Atlantic hurricane season activity. Clim. Dyn. 2017, 53, 7267–7285.

- Richter, I.; Doi, T.; Behera, S.K.; Keenlyside, N. On the link between mean state biases and prediction skill in the tropics: An atmospheric perspective. Clim. Dyn. 2018, 50, 3355–3374.

- Penland, C.; Matrosova, L. Prediction of Tropical Atlantic Sea Surface Temperatures Using Linear Inverse Modeling. J. Clim. 1998, 11, 483–496.

- Xu, Z.; Chang, P.; Richter, I.; Kim, W.; Tang, G. Diagnosing southeast tropical Atlantic SST and ocean circulation biases in the CMIP5 ensemble. Clim. Dyn. 2014, 43, 3123–3145.

- Richter, I.; Xie, S.-P.; Behera, S.K.; Doi, T.; Masumoto, Y. Equatorial Atlantic variability and its relation to mean state biases in CMIP5. Clim. Dyn. 2014, 42, 171–188.

- Huang, B.; Schopf, P.S.; Shukla, J. Intrinsic Ocean–Atmosphere Variability of the Tropical Atlantic Ocean. J. Clim. 2004, 17, 2058–2077.

- Exarchou, E.; Prodhomme, C.; Brodeau, L.; Guemas, V.; Doblas-Reyes, F. Origin of the warm eastern tropical Atlantic SST bias in a climate model. Clim. Dyn. 2017, 51, 1819–1840.

- Vallès-Casanova, I.; Lee, S.; Foltz, G.R.; Pelegrí, J.L. On the Spatiotemporal Diversity of Atlantic Niño and Associated Rainfall Variability Over West Africa and South America. Geophys. Res. Lett. 2020, 47, 087108.

- Rayner, N.A.; Parker, D.E.; Horton, E.B.; Folland, C.K.; Alexander, L.V.; Rowell, D.P.; Kent, E.; Kaplan, A. Global analyses of sea surface temperature, sea ice, and night marine air temperature since the late nineteenth century. J. Geophys. Res. Atmos. 2003, 108, 4407.

- Luo, J.-J.; Behera, S.K.; Masumoto, Y.; Yamagata, T. Impact of Global Ocean Surface Warming on Seasonal-to-Interannual Climate Prediction. J. Clim. 2011, 24, 1626–1646.

- Kirtman, B.P.; Huang, B.; Zhu, Z.; Schneider, E.K. Multi-seasonal prediction with a coupled tropical ocean–global atmosphere system. Mon. Weather Rev. 1997, 125, 789–808.

- Ham, Y.-G.; Kim, J.-H.; Luo, J.-J. Deep learning for multi-year ENSO forecasts. Nature 2019, 573, 568–572.

- Luo, J.-J.; Masson, S.; Behera, S.K.; Shingu, S.; Yamagata, T. Seasonal Climate Predictability in a Coupled OAGCM Using a Different Approach for Ensemble Forecasts. J. Clim. 2005, 18, 4474–4497.

- Zhao, S.; Stuecker, M.F.; Jin, F.-F.; Feng, J.; Ren, H.-L.; Zhang, W.; Li, J. Improved Predictability of the Indian Ocean Dipole Using a Stochastic Dynamical Model Compared to the North American Multimodel Ensemble Forecast. Weather Forecast. 2020, 35, 379–399.

- Latif, M.; Barnett, T.P.; Cane, M.A.; Flügel, M.; Graham, N.E.; von Storch, H.; Xu, J.-S.; Zebiak, S.E. A review of ENSO prediction studies. Clim. Dyn. 1994, 9, 167–179.

- Webster, P.J. The annual cycle and the predictability of the tropical coupled ocean-atmosphere system. Meteorol. Atmos. Phys. 1995, 56, 33–55.

- Lopez, H.; Kirtman, B.P. WWBs, ENSO predictability, the spring barrier and extreme events. J. Geophys. Res. Atmos. 2014, 119, 10–114.

- Larson, S.M.; Pegion, K. Do asymmetries in ENSO predictability arise from different recharged states? Clim. Dyn. 2019, 54, 1507–1522.

- Hu, Z.-Z.; Kumar, A.; Zhu, J. Dominant modes of ensemble mean signal and noise in seasonal forecasts of SST. Clim. Dyn. 2021, 56, 1251–1264.

- Tippett, M.K.; Ranganathan, M.; L’Heureux, M.; Barnston, A.G.; DelSole, T. Assessing probabilistic predictions of ENSO phase and intensity from the North American Multimodel Ensemble. Clim. Dyn. 2017, 53, 7497–7518.

- Kirtman, B.P. The COLA Anomaly Coupled Model: Ensemble ENSO Prediction. Mon. Weather Rev. 2003, 131, 2324–2341.

- Liu, T.; Tang, Y.; Yang, D.; Cheng, Y.; Song, X.; Hou, Z.; Shen, Z.; Gao, Y.; Wu, Y.; Li, X.; et al. The relationship among probabilistic, deterministic and potential skills in predicting the ENSO for the past 161 years. Clim. Dyn. 2019, 53, 6947–6960.

- Li, T.; Philander, S.G.H. On the Seasonal Cycle of the Equatorial Atlantic Ocean. J. Clim. 1997, 10, 813–817.

- Ding, H.; Keenlyside, N.; Latif, M.; Park, W.; Wahl, S. The impact of mean state errors on equatorial Atlantic interannual variability in a climate model. J. Geophys. Res. Oceans 2015, 120, 1133–1151.

- Lee, J.-Y.; Wang, B.; Kang, I.-S.; Shukla, J.; Kumar, A.; Kug, J.-S.; Schemm, J.K.E.; Luo, J.-J.; Yamagata, T.; Fu, X.; et al. How are seasonal prediction skills related to models’ performance on mean state and annual cycle? Clim. Dyn. 2010, 35, 267–283.

- Cai, W.; Wu, L.; Lengaigne, M.; Li, T.; McGregor, S.; Kug, J.-S.; Yu, J.-Y.; Stuecker, M.F.; Santoso, A.; Li, X.; et al. Pantropical climate interactions. Science 2019, 363, eaav4236.

- Wang, C. ENSO, Atlantic Climate Variability, and the Walker and Hadley Circulations. In The Hadley Circulation: Present, Past and Future; Springer: Dordrecht, The Netherlands, 2004; Volume 21, pp. 173–202.

- Wang, C. An overlooked feature of tropical climate: Inter-Pacific-Atlantic variability. Geophys. Res. Lett. 2006, 33, L12702.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).