1. Introduction

In the era of personalised healthcare, interpreting the effects of variants in human DNA has become increasingly important for informing treatment and predicting outcomes [1,2]. Machine learning, and deep learning models in particular, have become widely used to support this endeavour, as they are well suited to analysing the enormous amounts of genomic data produced by next-generation sequencing technologies [3,4,5].

Due to similarities in the structure of natural languages and genomic/proteomic sequences, natural language processing techniques have demonstrated significant applicability in bioinformatics [6,7]. Since the advent of the Transformer, large pretrained language models, known as biological foundation models, have become widespread for solving a variety of bioinformatics tasks. Among them, large language models (LLMs) have been widely used for variant effect prediction, achieving promising results in many cases. However, recent reviews of the field have identified key challenges that have yet to be addressed [8,9]. While LLMs have achieved good results on variants within the coding regions of the human genome, non-coding variants remain underexplored, and results on such problems are sub-optimal [10,11]. Furthermore, non-coding variant effect prediction requires longer-range context and hence longer DNA sequences as model input. However, because the computational complexity of the attention mechanism scales quadratically with sequence length, Transformer-based LLMs are highly inefficient for longer-context (1000+ bp) problems. Recent research has explored alternatives to the attention mechanism, such as Hyena and Mamba; however, models using these alternatives still produce unsatisfactory results on non-coding variant effect prediction [11,12,13]. Hence, Transformer-based LLMs remain the prevalent technology in the field.

As their name suggests, LLMs are defined by their immense scale, typically comprising anywhere from a dozen to several hundred layers and containing millions to billions of parameters. This is equally true for genomic LLMs; for instance, the widely used Nucleotide Transformer series [14] contains models with up to 2.5 billion parameters. Although genomic LLMs have been steadily increasing in size in pursuit of better modelling of biological information, recent research has shown that the relationship between the number of layers and the context captured by a model is not as straightforward as previously hypothesised [15,16]. In particular, a number of papers have reported that not all layers are equal, with different layers learning different amounts and types of information [17,18]. Crucially, multiple studies have shown that pruning (removing) some layers of a model drastically impacts the model’s performance on downstream tasks, whereas pruning others has a negligible effect [18,19,20]. The most important layers have been referred to as “cornerstone” layers [18], whose removal causes a “significant performance drop”.

While these studies have made significant strides in uncovering the inner workings of LLMs, they have focused on English-language models; comparable research is still lacking for genomic LLMs. As personalised medicine becomes more mainstream, it is crucial for the research community to work together to make models that are accurate, efficient, and unbiased. However, existing genomic LLMs often take days or weeks to train on multiple GPUs, making them inaccessible to researchers without high-performance computing infrastructure. Fully understanding the composition of LLMs and deciphering the impact of each layer will enable the creation of streamlined models that are more computationally efficient, broadly accessible, and suitable for deployment in clinical environments.

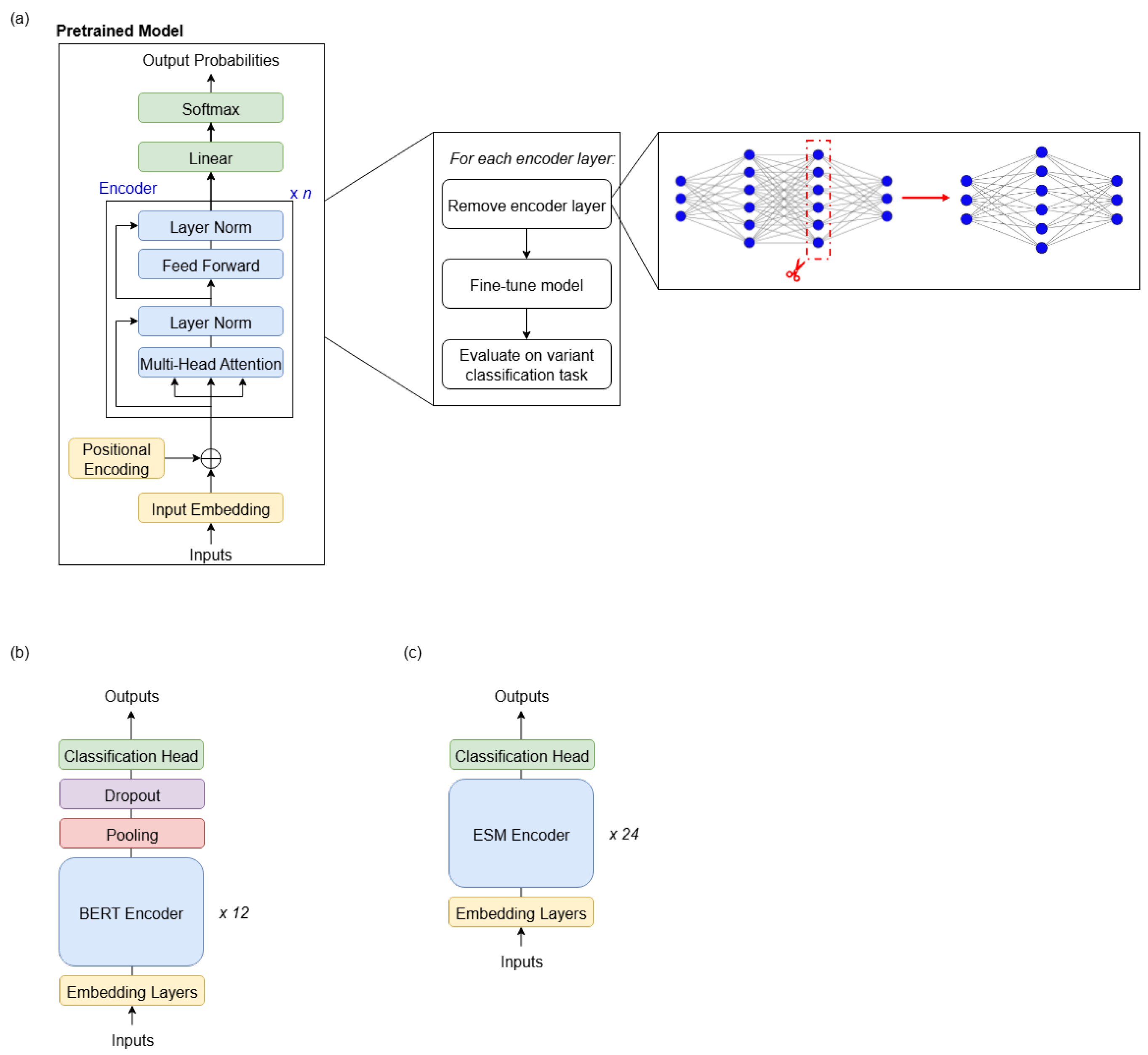

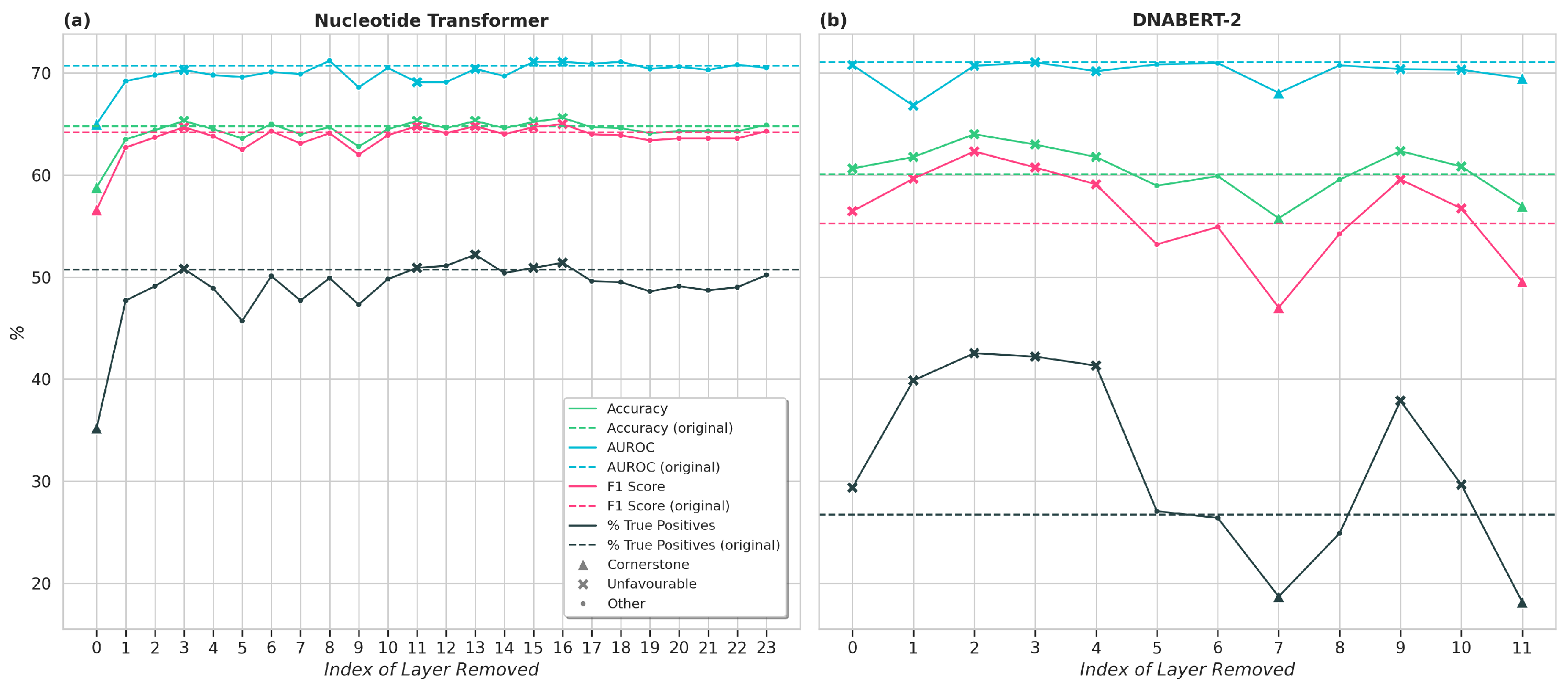

This study investigates the impact of different LLM layers on downstream performance by performing layer-wise ablation of two modern genomic LLMs, DNABERT-2 [21] and the Nucleotide Transformer [14]. Consistent with findings in natural language models, the results demonstrate that layer pruning can reduce fine-tuning time while maintaining or even improving model performance.

2. Materials and Methods

This study aims to support the production of efficient language models for the prediction of human non-coding DNA variants. The role and/or redundancy of different LLM layers in modelling DNA sequences is investigated by performing systematic layer-wise pruning of DNABERT-2 [21] and the Nucleotide Transformer [14], both of which have been widely used for DNA variant effect prediction tasks. In doing so, the study investigates whether structured pruning can preserve LLM performance while reducing fine-tuning and evaluation time, as has been demonstrated for natural language processing tasks [18,19,20]. The models are trained and evaluated on the relevant splits of the eQTL variant data derived from the Enformer paper [3]. The task explored is the binary classification of single-nucleotide variants as pathogenic or benign from a single DNA sequence input. The dataset details are summarised in Table 1.

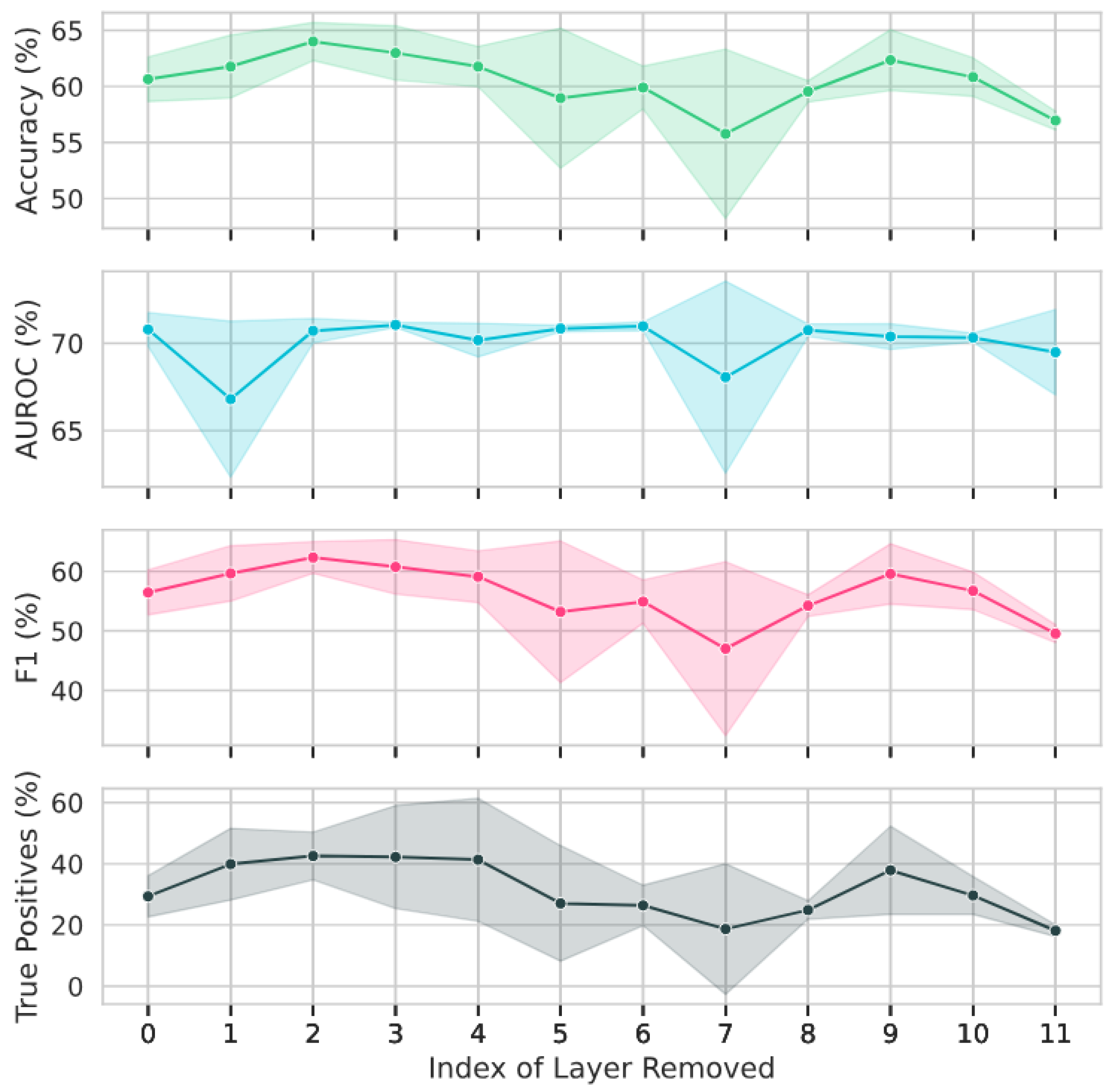

The methodology shown in Figure 1a was followed for both state-of-the-art DNA LLMs. In each experiment, a copy of the pretrained model is created and the target layer is removed. This copy is then fine-tuned on the 88,717 variant-centred DNA sequences in the training split of the eQTL dataset [3]. The initial goal was to repeat each experiment for each model three times, with different random seeds, on the same hardware. However, due to the time-consuming nature of the experiments, it was necessary to train the models on two different machines. Each DNABERT-2 model was trained until the validation loss converged, and each experiment was repeated three times with different random seeds. Training was performed on 2 × Quadro RTX 8000 GPUs, taking around 1 h per pruned model on average. The Nucleotide Transformer is much larger and hence has a significantly longer training time: training to convergence on 2 × NVIDIA TITAN Xp GPUs took approximately 19 h. Hence, due to time and resource constraints, it was not possible to train each version of this model to convergence three times with different random seeds. Instead, to keep the experiments manageable, each model was trained for 5 epochs, the minimal number of epochs needed to achieve an AUROC above 70% (i.e., substantially better than random) on the eQTL variant classification task (Table 1). This reduced the fine-tuning time for each model to approximately 7 h. Repeated runs with three different random seeds were performed for the original (un-pruned), cornerstone, and best models, and showed good agreement (Table A1, Table A2 and Table A3).
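The copy-then-remove step can be sketched in a framework-agnostic way. This is a minimal sketch, not the authors' code: it assumes the encoder exposes its layers as an ordered list, and `ToyEncoder`, `prune_layers` and `single_layer_ablations` are hypothetical names. In a PyTorch model the same idea would apply to the module list holding the encoder blocks (for Hugging Face BERT-style models this is typically `encoder.layer`), with the layer count in the model configuration updated to match.

```python
import copy
from dataclasses import dataclass, field
from typing import Iterable, Iterator, List, Tuple


@dataclass
class ToyEncoder:
    """Hypothetical stand-in for a pretrained encoder: an ordered stack of layers."""
    layers: List[str] = field(default_factory=list)


def prune_layers(model: ToyEncoder, drop: Iterable[int]) -> ToyEncoder:
    """Return a deep copy of `model` without the layers at the indices in `drop`.

    The pretrained model itself is never modified, so every ablation starts
    from the same weights.
    """
    drop = set(drop)
    pruned = copy.deepcopy(model)
    pruned.layers = [layer for i, layer in enumerate(pruned.layers) if i not in drop]
    return pruned


def single_layer_ablations(model: ToyEncoder) -> Iterator[Tuple[int, ToyEncoder]]:
    """Yield (removed_index, pruned_copy) once for every layer in the model."""
    for i in range(len(model.layers)):
        yield i, prune_layers(model, [i])


base = ToyEncoder(layers=[f"layer_{i}" for i in range(12)])  # illustrative depth
for removed, variant in single_layer_ablations(base):
    # In the real pipeline, `variant` would be fine-tuned and evaluated here,
    # once per random seed.
    assert f"layer_{removed}" not in variant.layers

print(len(base.layers), len(prune_layers(base, [0]).layers))  # 12 11
```

Deep-copying before removal keeps each ablation independent: removing layer 3 in one experiment cannot leak into the experiment that removes layer 4.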

Enformer [3] itself holds state-of-the-art performance on the eQTL dataset. However, technical constraints prevented the use of this model in the study. It is also important to note that the Enformer methodology differs from that described above: rather than fine-tuning the pretrained model, its embeddings are extracted and used as feature inputs for a random forest classifier. The figures quoted for this model are taken from the literature.
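For contrast with fine-tuning, the feature-extraction route can be sketched with scikit-learn. This is an illustrative sketch only: random vectors with an injected weak signal stand in for the extracted embeddings, and the array sizes, the number of trees and the feature construction are assumptions rather than the settings used in [3]. The helper `fake_embeddings` is hypothetical.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import roc_auc_score

rng = np.random.default_rng(0)
n_train, n_eval, dim = 400, 100, 32  # toy sizes, not the eQTL split sizes


def fake_embeddings(labels: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for frozen model embeddings: Gaussian noise with a
    label-dependent shift in the first feature."""
    x = rng.normal(size=(labels.size, dim))
    x[:, 0] += 1.5 * labels
    return x


y_train = rng.integers(0, 2, n_train)
y_eval = rng.integers(0, 2, n_eval)
X_train, X_eval = fake_embeddings(y_train), fake_embeddings(y_eval)

# The embedding model stays frozen; only the random forest is trained.
clf = RandomForestClassifier(n_estimators=200, random_state=0)
clf.fit(X_train, y_train)
scores = clf.predict_proba(X_eval)[:, 1]  # probability of the positive class
auroc = roc_auc_score(y_eval, scores)
print(f"AUROC on toy data: {auroc:.3f}")
```

The design trade-off is that only the lightweight classifier is trained, so no gradient ever flows into the large model; in exchange, the embeddings cannot adapt to the downstream task.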

Finally, the models were evaluated on the binary variant classification task across the 8846 variant-centred DNA sequences in the evaluation split of the eQTL dataset. Instructions for accessing the training and evaluation data are included in the Data Availability section of this paper. It should be noted that the training and evaluation datasets are both balanced, containing an equal number of samples in the positive and negative classes. The model architectures of DNABERT-2 and the Nucleotide Transformer are summarised in Figure 1b and Figure 1c, respectively, and key details are highlighted in Table 2. DNABERT-2 was pretrained on human and multispecies reference genomes. The variant of the Nucleotide Transformer used in this study was pretrained on the human reference genome only. Instructions for accessing the pretrained models are available in Appendix A.

As is standard in the field, the models were assessed on multiple metrics during evaluation, namely accuracy, AUROC, and F1 score. The rates of true and false positives and negatives were also recorded for each experiment.
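For reference, the metrics can be computed from scratch as follows. This is a minimal, dependency-free sketch with hypothetical function names; libraries such as scikit-learn provide equivalent, faster implementations. AUROC is computed here as the probability that a randomly chosen positive receives a higher score than a randomly chosen negative (ties counted as one half), which equals the area under the ROC curve. Because the evaluation split is balanced, accuracy is not inflated by a majority class.

```python
from typing import Sequence, Tuple


def confusion_counts(y_true: Sequence[int], y_pred: Sequence[int]) -> Tuple[int, int, int, int]:
    """Return (TP, FP, TN, FN) for binary labels, with 1 as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp, fp, tn, fn


def accuracy(y_true, y_pred) -> float:
    tp, fp, tn, fn = confusion_counts(y_true, y_pred)
    return (tp + tn) / (tp + fp + tn + fn)


def f1_score(y_true, y_pred) -> float:
    tp, fp, _, fn = confusion_counts(y_true, y_pred)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def tp_percentage(y_true, y_pred) -> float:
    """True positives as a percentage of all positive samples."""
    tp, _, _, fn = confusion_counts(y_true, y_pred)
    return 100.0 * tp / (tp + fn)


def auroc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """P(random positive scores above random negative), ties counted as 1/2.

    O(n_pos * n_neg) pairwise loop: fine for small examples only.
    """
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


y_true = [1, 1, 0, 0]
scores = [0.9, 0.4, 0.6, 0.1]
y_pred = [int(s >= 0.5) for s in scores]  # assumed 0.5 decision threshold
print(accuracy(y_true, y_pred), f1_score(y_true, y_pred), auroc(y_true, scores))  # 0.5 0.5 0.75
```

Note that AUROC uses the raw scores while accuracy, F1 and the true-positive percentage depend on the chosen decision threshold.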

For each model, “cornerstone” and “unfavourable” layers were identified via comparison to the baseline model (i.e., the original model with no layers removed). As per the definition in [18], cornerstone layers are those that significantly contribute to the model’s performance across all metrics. Ref. [18] quantifies this by selecting as cornerstone the layers whose removal causes the model’s performance to drop to random. However, as no such layers exist in the models used here, we instead define cornerstone layers as those whose removal results in all metrics being at least 5% worse than the baseline.
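The cornerstone rule can be expressed as a small predicate. This is a sketch under an explicit assumption: the text does not say whether "5% worse" is relative to the baseline value or 5 percentage points, so both readings are offered via a hypothetical `relative` flag. The metric values below are made up to exercise the rule and are not results from this study.

```python
from typing import Dict


def is_cornerstone(baseline: Dict[str, float], pruned: Dict[str, float],
                   drop: float = 0.05, relative: bool = True) -> bool:
    """True if removing a layer makes *every* metric at least `drop` worse.

    relative=True reads "5% worse" as 5% of the baseline value;
    relative=False reads it as 5 percentage points (metrics on a 0-1 scale).
    """
    for name, base_value in baseline.items():
        threshold = base_value * (1 - drop) if relative else base_value - drop
        if pruned[name] > threshold:
            return False  # at least one metric held up, so not a cornerstone
    return True


# Made-up numbers purely to exercise the rule (not results from this study).
baseline = {"accuracy": 0.80, "auroc": 0.85, "f1": 0.80}
after_removal = {"accuracy": 0.70, "auroc": 0.78, "f1": 0.72}
print(is_cornerstone(baseline, after_removal))  # True
```

Because the rule requires *all* metrics to degrade, a single metric that stays within the margin is enough to disqualify a layer.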

Unfavourable layers were identified as those that either had a negligible impact on the downstream prediction or actually made it worse: when individually removed, these layers resulted in all metrics being better than or equal to the baseline. A reduction within one standard deviation of the average metric observed across the three runs was considered equal in performance to the baseline. Hence, the criterion used was that at least three of the four conditions in (1) must be fulfilled. In these conditions, each metric is the mean recorded across three runs and is compared between the baseline (un-pruned) model and the model with a specific layer pruned. The percentage of true positives is calculated as the number of true positives over the total number of positive samples.
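One plausible reading of the unfavourable-layer test can be sketched as follows. It assumes each of the four conditions has the form $\bar{x}_p \geq \bar{x}_b - \sigma_b$, where $\bar{x}_p$ and $\bar{x}_b$ are the mean metric for the pruned and baseline models and $\sigma_b$ is the standard deviation of the baseline runs, applied to accuracy, AUROC, F1 score and true-positive percentage. This form and the names `is_unfavourable`, `baseline_runs` and `pruned_runs` are assumptions, not the authors' equation (1). The numbers are made up for illustration.

```python
from statistics import mean, stdev
from typing import Dict, List


def is_unfavourable(baseline_runs: Dict[str, List[float]],
                    pruned_runs: Dict[str, List[float]],
                    min_satisfied: int = 3) -> bool:
    """Assumed reading of the criterion: a metric counts as 'no worse' if the
    pruned mean is at least the baseline mean minus one baseline standard
    deviation; the layer is unfavourable if enough metrics qualify."""
    satisfied = 0
    for name, runs in baseline_runs.items():
        tolerance = stdev(runs)  # sample std; needs at least two baseline runs
        if mean(pruned_runs[name]) >= mean(runs) - tolerance:
            satisfied += 1
    return satisfied >= min_satisfied


# Made-up values for illustration only; a single pruned run is also accepted.
baseline_runs = {
    "accuracy": [0.70, 0.72, 0.71],
    "auroc": [0.78, 0.80, 0.79],
    "f1": [0.69, 0.71, 0.70],
    "tp_pct": [68.0, 72.0, 70.0],
}
pruned_runs = {"accuracy": [0.705], "auroc": [0.81], "f1": [0.695], "tp_pct": [60.0]}
print(is_unfavourable(baseline_runs, pruned_runs))  # True: 3 of 4 conditions hold
```

Accepting a single pruned run matters for the Nucleotide Transformer setting, where only the baseline, cornerstone and best models were repeated across seeds.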

Versions of the models with (a) all unfavourable layers removed and (b) all non-cornerstone layers removed were fine-tuned, evaluated on the downstream task, and compared to the baseline.
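Deriving the two combined variants from the per-layer labels is straightforward; the sketch below only computes which layer indices each variant drops. The function name, strategy strings and layer labels are hypothetical and do not reflect this study's findings.

```python
from typing import Iterable, List


def layers_to_remove(n_layers: int, cornerstone: Iterable[int],
                     unfavourable: Iterable[int], strategy: str) -> List[int]:
    """Indices (in original model order) to drop for the two combined variants."""
    if strategy == "drop_unfavourable":      # variant (a)
        return sorted(set(unfavourable))
    if strategy == "keep_cornerstone":       # variant (b)
        keep = set(cornerstone)
        return [i for i in range(n_layers) if i not in keep]
    raise ValueError(f"unknown strategy: {strategy!r}")


# Hypothetical layer labels for a 12-layer encoder (not this study's findings).
cornerstone = [0, 1]
unfavourable = [5, 8, 10]
print(layers_to_remove(12, cornerstone, unfavourable, "drop_unfavourable"))  # [5, 8, 10]
print(layers_to_remove(12, cornerstone, unfavourable, "keep_cornerstone"))   # [2, 3, 4, 5, 6, 7, 8, 9, 10, 11]
```

Because the indices refer to positions in the original model, the layers should be removed in a single pass (or in descending order); deleting them one at a time in ascending order would shift the remaining indices.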

4. Discussion and Conclusions

Consistent with [18], the layer-wise pruning experiments revealed the existence of “cornerstone” layers whose removal significantly degraded the models’ performance. When only the cornerstone layers were preserved, both models maintained results within 5% of the original model. For the Nucleotide Transformer, this approach significantly reduced the fine-tuning and evaluation time. For DNABERT-2, however, the fine-tuning time actually increased, as the model took longer to converge. This suggests that aggressive pruning does not translate directly into efficiency gains, and reflects findings from previous studies that very small models may face convergence issues [26]. Additionally, the decrease in performance on the evaluation task resulting from this aggressive pruning was not negligible, suggesting that non-cornerstone layers still play a key role in modelling the context required for the downstream task.

For both models investigated, multiple layers were identified whose removal resulted in similar or better performance on the evaluation task; these were termed “unfavourable” layers. When versions of both models with all unfavourable layers removed were fine-tuned, their performance on the evaluation task was maintained or improved, while training and evaluation times were significantly reduced. That performance can improve after layer removal suggests redundancy within the removed layers. Such behaviour has previously been observed in similar experiments on LLMs for natural language processing [27].

Although cornerstone and unfavourable layers were clearly present in both models, no consistent pattern was observed regarding which parts of the model were most relevant to the downstream task. This suggests that layer importance varies significantly with model architecture. While the layer-wise ablation here identified the most and least important layers, it is time-consuming and hence a sub-optimal method for estimating layer-wise importance with the aim of enhancing model efficiency. However, this work provides a basis for comparison with layer-wise importance estimation methods established in the natural language field [28,29], to test whether they are applicable to genomic language models.
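The cost of exhaustive ablation can be made concrete with a rough count. Assuming the 12 encoder layers of DNABERT-2's BERT-base-sized configuration, together with the three seeds and roughly 1 h per run reported in the Methods, single-layer ablation needs one fine-tuning run per layer and seed (baseline runs excluded):

$$
C = L \times S \times t \approx 12 \times 3 \times 1\,\mathrm{h} = 36\,\mathrm{h}
$$

The cost grows linearly with depth for single-layer removal, and combinatorially if combinations are explored: removing pairs of layers from a 12-layer model already requires $\binom{12}{2} = 66$ runs per seed.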

Comparison with the state of the art [3] demonstrated that the pruned models produced in this study achieved close to state-of-the-art performance, and DNABERT-2 did so while using fewer than half as many parameters. This finding aligns with recent large language model studies indicating that a larger model size does not necessarily translate into superior downstream task performance [30,31]. While technical constraints prevented the use of the state-of-the-art model for this problem, the results suggest that distinct cornerstone and unfavourable layers are likely to exist across genomic language models with multiple encoder layers. Future research will investigate the application of structured pruning techniques to state-of-the-art models to determine whether targeted layer optimisation can yield further performance improvements.

This work provides a basis for further exploration of how LLM efficiency can be enhanced in order to enable large-scale genomic studies. However, it is crucial to consider how efficiency and accuracy should be balanced, particularly in clinical settings, where incorrect predictions can be devastating.