Computing Power and Sample Size for the False Discovery Rate in Multiple Applications

Abstract

1. Introduction

2. Background

3. Supported Tests

- Two-sample t-test

- One-sample t-test

- Rank–sum test

- Signed–rank test

- Fisher’s exact test

- t-test for correlation

- Comparison of two Poisson distributions

- Comparison of two negative binomial distributions

- Two-proportions z-test

- One-way ANOVA

- Cox proportional hazards regression

4. Algorithmic Details

1. Given the desired FDR, the desired average power, the proportion $\pi_0$ of tests with a true null, and the desired option for $\hat{\pi}_0$ (BH, HH, HM, Jung), determine the p-value threshold $\alpha$ that achieves the desired FDR and average power.

2. Compute the average power for each of the two initial sample sizes, $n_0 < n_1$ (default values are provided; other values can be specified by the user).

3. If the average power for both of these initial sample sizes is greater than the desired average power, then report $n_0$ and its average power as the result.

4. If the average power for both of these initial sample sizes is less than desired, then define a new $n_0$ and $n_1$ and compute the average power for these new $n_0$ and $n_1$. Repeat until the average power for $n_1$ is greater than the desired average power and the average power for $n_0$ is less than the desired average power.

5. With $n_0$ and $n_1$ and their average powers as initial values, use bisection to determine the smallest $n$ with average power greater than or equal to the desired average power.

6. To avoid excessive computing time, stop the iterative calculations of steps 4 and 5 after the average power has been computed a specified maximum number of times and report the results achieved thus far.
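The bracketing and bisection steps above can be sketched in a few lines of R. This is a minimal illustration, not the package's implementation: the names `find_n` and `avepow_ztest`, the starting values `n0 = 3` and `n1 = 6`, the rule of doubling `n1` to widen the bracket, and the z-test power function are all assumptions made for the example. It also assumes that average power does not decrease as the sample size grows.

```r
# Hypothetical average-power function: two-sided one-sample z-test with unit
# variance, averaged over the non-null effects only (an assumption).
avepow_ztest <- function(n, alpha, delta) {
  nonnull <- delta != 0
  z <- qnorm(1 - alpha / 2)
  shift <- sqrt(n) * abs(delta[nonnull])
  mean(pnorm(shift - z) + pnorm(-shift - z))
}

# Bracket, then bisect, to find the smallest n whose average power reaches pwr.
# n0, n1 and the doubling rule are illustrative choices, not documented defaults.
find_n <- function(alpha, pwr, avepow, ..., n0 = 3, n1 = 6, max_its = 50) {
  p0 <- avepow(n0, alpha, ...)
  p1 <- avepow(n1, alpha, ...)
  its <- 2
  # Both starting sizes are already sufficient: report the smaller one.
  if (p0 >= pwr && p1 >= pwr) {
    return(list(n = n0, avepow = p0, n0 = n0, n1 = n1, n.its = its))
  }
  # Move the bracket upward until n1 reaches the target power.
  while (p1 < pwr && its < max_its) {
    n0 <- n1
    p0 <- p1
    n1 <- 2 * n1
    p1 <- avepow(n1, alpha, ...)
    its <- its + 1
  }
  # Integer bisection, keeping power(n0) < pwr <= power(n1).
  while (n1 - n0 > 1 && its < max_its) {
    mid <- floor((n0 + n1) / 2)
    pm <- avepow(mid, alpha, ...)
    its <- its + 1
    if (pm >= pwr) {
      n1 <- mid
      p1 <- pm
    } else {
      n0 <- mid
      p0 <- pm
    }
  }
  # If max_its was reached, this is only the best bracket found so far.
  list(n = n1, avepow = p1, n0 = n0, n1 = n1, n.its = its)
}

delta <- rep(c(0, 0.5), c(9900, 100))  # 9900 null; 100 non-null
res <- find_n(alpha = 0.0008879023, pwr = 0.8,
              avepow = avepow_ztest, delta = delta)
res$n
```

When the search finishes within `max_its` evaluations, `n0` and `n1` differ by one, which mirrors the adjacent `$n0` and `$n1` values printed in the examples of Section 5.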

5. Examples

5.1. Example 1: Study Involving Many Sign Tests

    theta = rep(c(0.8,0.5), c(100,9900)) # 9900 null; 100 non-null
    res = n.fdr.signtest(fdr = 0.1, pwr = 0.8, p = theta, pi0.hat = "BH")
    res
    ## $n
    ## [1] 45
    ##
    ## $computed.avepow
    ## [1] 0.8095842
    ##
    ## $desired.avepow
    ## [1] 0.8
    ##
    ## $desired.fdr
    ## [1] 0.1
    ##
    ## $input.pi0
    ## [1] 0.99
    ##
    ## $alpha
    ## [1] 0.0008879023
    ##
    ## $n0
    ## [1] 44
    ##
    ## $n1
    ## [1] 45
    ##
    ## $n.its
    ## [1] 8
    ##
    ## $max.its
    ## [1] 50

    adj.p = alpha.power.fdr(fdr = 0.1, pwr = 0.8, pi0 = 0.99, method = "BH")
    adj.p
    ## [1] 0.0008879023
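The threshold printed above can be cross-checked by hand. The sketch below starts from the standard identity fdr = π0·α / (π0·α + (1 − π0)·pwr), solves it for α, and for the BH option uses the fact that BH run at nominal level τ has realized FDR π0·τ under independence. The function names `alpha_jung` and `alpha_bh` are hypothetical, and the closed forms are inferred from that identity rather than taken from the package source. The HH and HM options depend on a π0 estimator and are not covered here.

```r
# Sketch only: closed forms derived from
#   fdr = pi0 * alpha / (pi0 * alpha + (1 - pi0) * pwr)
# and not taken from the package source.

# Threshold when the realized FDR itself should equal `fdr` (Jung option).
alpha_jung <- function(fdr, pwr, pi0) {
  fdr * pwr * (1 - pi0) / (pi0 * (1 - fdr))
}

# BH run at nominal level `fdr` has realized FDR pi0 * fdr, so substitute it.
# Algebraically this is fdr * pwr * (1 - pi0) / (1 - pi0 * fdr).
alpha_bh <- function(fdr, pwr, pi0) {
  alpha_jung(pi0 * fdr, pwr, pi0)
}

alpha_bh(fdr = 0.1, pwr = 0.8, pi0 = 0.99)    # agrees with adj.p above
alpha_jung(fdr = 0.1, pwr = 0.8, pi0 = 0.99)
```

Under these assumptions the BH value reproduces 0.0008879023, and the Jung value rounds to 0.000898.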

    find.sample.size(alpha = adj.p, pwr = 0.8, avepow.func = average.power.signtest, p = theta)
    ## $n
    ## [1] 45
    ##
    ## $computed.avepow
    ## [1] 0.8095842
    ##
    ## $desired.avepow
    ## [1] 0.8
    ##
    ## $alpha
    ## [1] 0.0008879023
    ##
    ## $n.its
    ## [1] 8
    ##
    ## $max.its
    ## [1] 50
    ##
    ## $n0
    ## [1] 44
    ##
    ## $n1
    ## [1] 45

    n.fdr.signtest(fdr = 0.1, pwr = 0.8, p = theta, pi0.hat = "HH")
    ## $n
    ## [1] 45
    ##
    ## $computed.avepow
    ## [1] 0.810428
    ##
    ## $desired.avepow
    ## [1] 0.8
    ##
    ## $desired.fdr
    ## [1] 0.1
    ##
    ## $input.pi0
    ## [1] 0.99
    ##
    ## $alpha
    ## [1] 0.0008958549
    ##
    ## $n0
    ## [1] 44
    ##
    ## $n1
    ## [1] 45
    ##
    ## $n.its
    ## [1] 8
    ##
    ## $max.its
    ## [1] 50

    theta = rep(c(0.8,0.5), c(500,9500)) # 9500 null; 500 non-null
    n.fdr.signtest(fdr = 0.1, pwr = 0.8, p = theta, pi0.hat = "HH")
    ## $n
    ## [1] 35
    ##
    ## $computed.avepow
    ## [1] 0.815116
    ##
    ## $desired.avepow
    ## [1] 0.8
    ##
    ## $desired.fdr
    ## [1] 0.1
    ##
    ## $input.pi0
    ## [1] 0.95
    ##
    ## $alpha
    ## [1] 0.004624029
    ##
    ## $n0
    ## [1] 34
    ##
    ## $n1
    ## [1] 35
    ##
    ## $n.its
    ## [1] 8
    ##
    ## $max.its
    ## [1] 50

5.2. Example 2: Design of a Clinical Trial to Find Prognostic Genes

    log.HR = log(rep(c(1,2),c(9900,100))) # log hazard ratio for each gene
    v = rep(1,10000) # variance of each gene
    res = n.fdr.coxph(fdr = 0.1, pwr = 0.8,
                      logHR = log.HR, v = v, pi0.hat = "BH")
    res
    ## $n
    ## [1] 37
    ##
    ## $computed.avepow
    ## [1] 0.8139159
    ##
    ## $desired.avepow
    ## [1] 0.8
    ##
    ## $desired.fdr
    ## [1] 0.1
    ##
    ## $input.pi0
    ## [1] 0.99
    ##
    ## $alpha
    ## [1] 0.0008879023
    ##
    ## $n0
    ## [1] 36
    ##
    ## $n1
    ## [1] 37
    ##
    ## $n.its
    ## [1] 7
    ##
    ## $max.its
    ## [1] 50
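As a rough plausibility check of the reported sample size, the sketch below uses a normal approximation in the spirit of Hsieh and Lavori: the Wald statistic for a gene is treated as approximately normal with mean sqrt(n·v)·logHR and unit variance. The function name `avepow_cox_approx` is hypothetical, and both the approximation and the reading of `n` as the quantity that scales the information are assumptions. The package may compute average power differently.

```r
# Sketch under a normal approximation (an assumption, not the package source):
# the Wald statistic for each gene is roughly N(sqrt(n * v) * logHR, 1).
avepow_cox_approx <- function(n, alpha, logHR, v) {
  nonnull <- logHR != 0                  # average over non-null genes only
  z <- qnorm(1 - alpha / 2)              # two-sided critical value
  shift <- sqrt(n * v[nonnull]) * abs(logHR[nonnull])
  mean(pnorm(shift - z) + pnorm(-shift - z))
}

log.HR <- log(rep(c(1, 2), c(9900, 100)))  # same inputs as the example above
v <- rep(1, 10000)
alpha <- 0.0008879023                      # threshold reported in $alpha

avepow_cox_approx(36, alpha, log.HR, v)  # falls short of 0.8 under this approximation
avepow_cox_approx(37, alpha, log.HR, v)  # reaches 0.8 under this approximation
```

Under these assumptions the bracket matches the reported `$n0` of 36 and `$n1` of 37.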

5.3. Example 3: Differential Expression Analysis of RNA-Seq Data

    theta = log(rep(c(1,2.7),c(9900,100))) # log fold change for each gene
    mu = rep(5,10000) # read depth
    sig = rep(0.6,10000) # coefficient of variation
    res = n.fdr.negbin(fdr = 0.1, pwr = 0.8, log.fc = theta,
                       mu = mu, sig = sig, pi0.hat = "BH")
    res
    ## $n
    ## [1] 20
    ##
    ## $computed.avepow
    ## [1] 0.8087842
    ##
    ## $desired.avepow
    ## [1] 0.8
    ##
    ## $desired.fdr
    ## [1] 0.1
    ##
    ## $input.pi0
    ## [1] 0.99
    ##
    ## $alpha
    ## [1] 0.0008879023
    ##
    ## $n0
    ## [1] 19
    ##
    ## $n1
    ## [1] 20
    ##
    ## $n.its
    ## [1] 6
    ##
    ## $max.its
    ## [1] 50

5.4. Example 4: Computing Power of t-Tests for a Given Sample Size and Effect Sizes

    delta = rep(c(0,2),c(95,5))           # 95% null; 5% with delta = 2
    res=fdr.avepow(10,                    # per-group sample size
                   average.power.t.test,  # average power function
                   null.hypo = "delta == 0", # null hypothesis
                   delta = delta)         # effect size vector
    head(res$res.tbl)
    ##      alpha        fdr       pwr
    ## [1,] 0.001 0.02800492 0.6951602
    ## [2,] 0.002 0.04869462 0.7834460
    ## [3,] 0.003 0.06773070 0.8288612
    ## [4,] 0.004 0.08567764 0.8577325
    ## [5,] 0.005 0.10276694 0.8780755
    ## [6,] 0.006 0.11912689 0.8933293
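The relationship between the three printed columns can be checked directly. In the sketch below, the `alpha` and `pwr` values are copied from the table above, and π0 = 0.95 follows from the 95 null entries in `delta`. The formula itself is an inference from the printed numbers, not something taken from the package source: the `fdr` column appears to equal α divided by the expected fraction of tests rejected at that threshold.

```r
# Values copied from the first six rows of the printed res.tbl.
alpha <- c(0.001, 0.002, 0.003, 0.004, 0.005, 0.006)
pwr <- c(0.6951602, 0.7834460, 0.8288612, 0.8577325, 0.8780755, 0.8933293)
fdr_printed <- c(0.02800492, 0.04869462, 0.06773070,
                 0.08567764, 0.10276694, 0.11912689)

pi0 <- 0.95  # 95 of the 100 entries of delta are zero

# Expected fraction of all tests rejected at threshold alpha.
reject_rate <- pi0 * alpha + (1 - pi0) * pwr

# Inferred relationship (an assumption): fdr = alpha / reject_rate.
fdr_check <- alpha / reject_rate

max(abs(fdr_check - fdr_printed))  # small; limited by rounding of the inputs
```

One reading of this quantity is the nominal level at which a BH-type procedure would have to be run for `alpha` to be its effective p-value cutoff. The realized FDR would then be π0 times the printed value.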

6. Simulations

7. Discussion

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

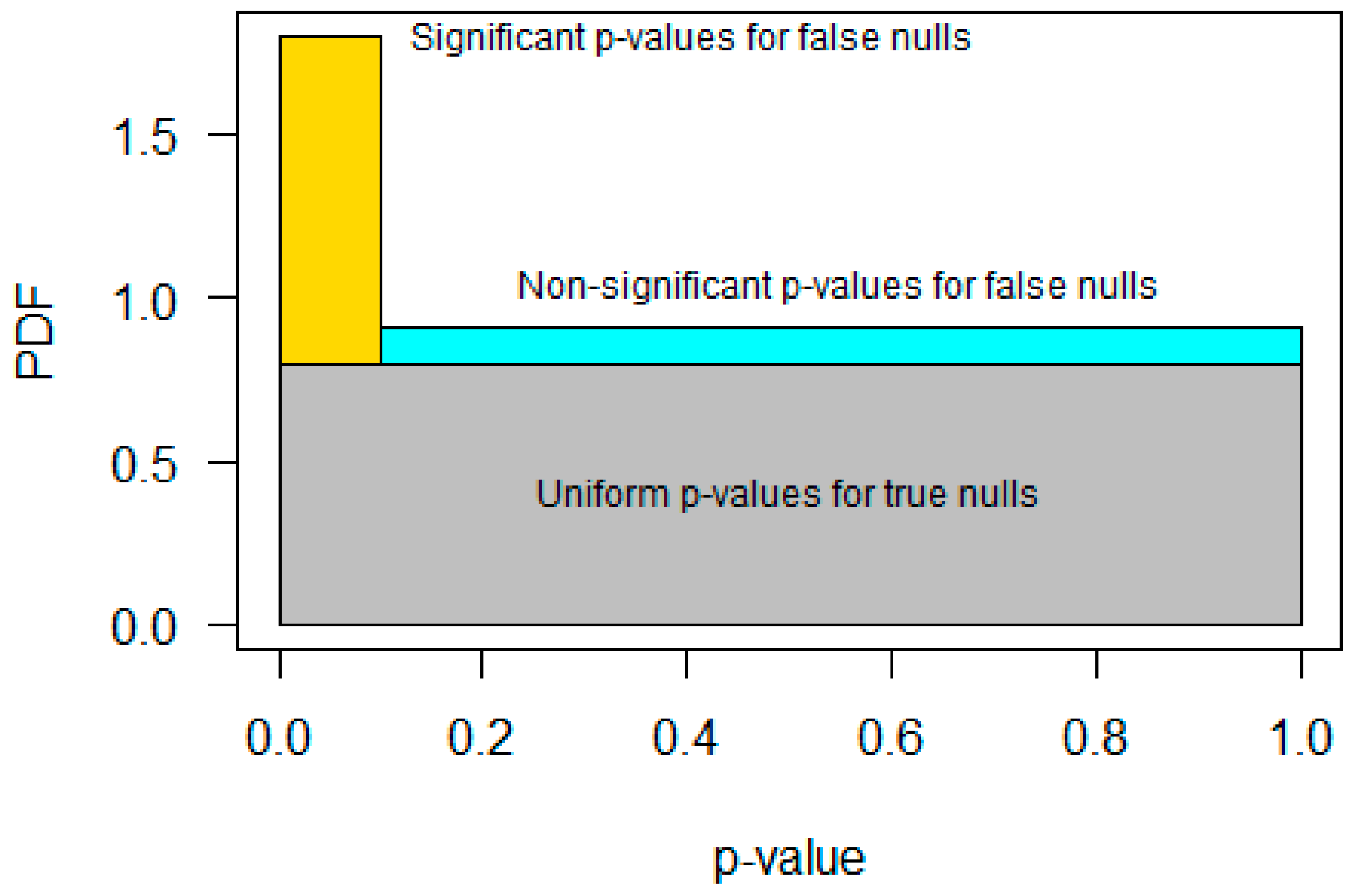

Appendix A

- Histogram Height

- Histogram Mean

References

- Benjamini, Y.; Hochberg, Y. Controlling the False Discovery Rate: A Practical and Powerful Approach to Multiple Testing. J. R. Stat. Soc. Ser. B 1995, 57, 289–300.

- Storey, J.D. False Discovery Rate. Int. Encycl. Stat. Sci. 2011, 1, 504–508.

- Storey, J.D. A Direct Approach to False Discovery Rates. J. R. Stat. Soc. Ser. B Stat. Methodol. 2002, 64, 479–498.

- Nettleton, D.; Hwang, J.G.; Caldo, R.A.; Wise, R.P. Estimating the Number of True Null Hypotheses from a Histogram of p Values. J. Agric. Biol. Environ. Stat. 2006, 11, 337–356.

- Pounds, S.B.; Gao, C.L.; Zhang, H. Empirical Bayesian Selection of Hypothesis Testing Procedures for Analysis of Sequence Count Expression Data. Stat. Appl. Genet. Mol. Biol. 2012, 11.

- Pounds, S.; Cheng, C. Robust Estimation of the False Discovery Rate. Bioinformatics 2006, 22, 1979–1987.

- Jung, S.-H. Sample Size for FDR-Control in Microarray Data Analysis. Bioinformatics 2005, 21, 3097–3104.

- Gadbury, G.L.; Page, G.P.; Edwards, J.; Kayo, T.; Prolla, T.A.; Weindruch, R.; Permana, P.A.; Mountz, J.D.; Allison, D.B. Power and Sample Size Estimation in High Dimensional Biology. Stat. Methods Med. Res. 2004, 13, 325–338.

- Pounds, S.; Cheng, C. Sample Size Determination for the False Discovery Rate. Bioinformatics 2005, 21, 4263–4271.

- Noether, G.E. Sample Size Determination for Some Common Nonparametric Tests. J. Am. Stat. Assoc. 1987, 82, 645–647.

- Hsieh, F.Y.; Lavori, P.W. Sample-Size Calculations for the Cox Proportional Hazards Regression Model with Nonbinary Covariates. Control. Clin. Trials 2000, 21, 552–560.

- Hart, S.N.; Therneau, T.M.; Zhang, Y.; Poland, G.A.; Kocher, J.P. Calculating Sample Size Estimates for RNA Sequencing Data. J. Comput. Biol. 2013, 20, 970–978.

- Liu, P.; Hwang, J.G. Quick Calculation for Sample Size While Controlling False Discovery Rate with Application to Microarray Analysis. Bioinformatics 2007, 23, 739–746.

- Orr, M.; Liu, P. Sample Size Estimation While Controlling False Discovery Rate for Microarray Experiments Using the ssize.fdr Package. R J. 2009, 1, 47.

- Wu, H.; Wang, C.; Wu, Z. PROPER: Comprehensive Power Evaluation for Differential Expression Using RNA-Seq. Bioinformatics 2015, 31, 233–241.

- Hu, J.; Zou, F.; Wright, F.A. Practical FDR-Based Sample Size Calculations in Microarray Experiments. Bioinformatics 2005, 21, 3264–3272.

- Shao, Y.; Tseng, C.H. Sample Size Calculation with Dependence Adjustment for FDR-Control in Microarray Studies. Stat. Med. 2007, 26, 4219–4237.

- Pawitan, Y.; Michiels, S.; Koscielny, S.; Gusnanto, A.; Ploner, A. False Discovery Rate, Sensitivity and Sample Size for Microarray Studies. Bioinformatics 2005, 21, 3017–3024.

- Schmid, K.T.; Höllbacher, B.; Cruceanu, C.; Böttcher, A.; Lickert, H.; Binder, E.B.; Theis, F.J.; Heinig, M. scPower Accelerates and Optimizes the Design of Multi-Sample Single Cell Transcriptomic Studies. Nat. Commun. 2021, 12, 6625.

- Ching, T.; Huang, S.; Garmire, L.X. Power Analysis and Sample Size Estimation for RNA-Seq Differential Expression. RNA 2014, 20, 1684–1696.

- Jung, S.-H.; Sohn, I.; George, S.L.; Feng, L.; Leppert, P.C. Sample Size Calculation for Microarray Experiments with Blocked One-Way Design. BMC Bioinform. 2009, 10, 164.

- Lee, M.L.T.; Whitmore, G.A. Power and Sample Size for DNA Microarray Studies. Stat. Med. 2002, 21, 3543–3570.

- Li, C.I.; Su, P.F.; Shyr, Y. Sample Size Calculation Based on Exact Test for Assessing Differential Expression Analysis in RNA-Seq Data. BMC Bioinform. 2013, 14, 357.

- Glueck, D.H.; Mandel, J.; Karimpour-Fard, A.; Hunter, L.; Muller, K.E. Exact Calculations of Average Power for the Benjamini-Hochberg Procedure. Int. J. Biostat. 2008, 4, 11.

| $\pi_0$ | Desired FDR | Desired average power | $\alpha$ (BH) | $\alpha$ (HH) | $\alpha$ (HM) | $\alpha$ (Jung) |
|---|---|---|---|---|---|---|
| 0.90 | 0.05 | 0.5 | 0.002618 | 0.002762 | 0.002762 | 0.002924 |
| 0.95 | 0.05 | 0.5 | 0.001312 | 0.001348 | 0.001348 | 0.001385 |
| 0.99 | 0.05 | 0.5 | 0.000263 | 0.000264 | 0.000264 | 0.000266 |
| 0.90 | 0.10 | 0.5 | 0.005495 | 0.005812 | 0.005810 | 0.006173 |
| 0.95 | 0.10 | 0.5 | 0.002762 | 0.002841 | 0.002840 | 0.002924 |
| 0.99 | 0.10 | 0.5 | 0.000555 | 0.000558 | 0.000558 | 0.000561 |
| 0.90 | 0.50 | 0.5 | 0.045455 | 0.049740 | 0.049510 | 0.055556 |
| 0.95 | 0.50 | 0.5 | 0.023810 | 0.024968 | 0.024938 | 0.026316 |
| 0.99 | 0.50 | 0.5 | 0.004950 | 0.005000 | 0.005000 | 0.005051 |
| 0.90 | 0.05 | 0.8 | 0.004188 | 0.004571 | 0.004569 | 0.004678 |
| 0.95 | 0.05 | 0.8 | 0.002100 | 0.002192 | 0.002192 | 0.002216 |
| 0.99 | 0.05 | 0.8 | 0.000421 | 0.000424 | 0.000424 | 0.000425 |
| 0.90 | 0.10 | 0.8 | 0.008791 | 0.009636 | 0.009627 | 0.009877 |
| 0.95 | 0.10 | 0.8 | 0.004420 | 0.004624 | 0.004623 | 0.004678 |
| 0.99 | 0.10 | 0.8 | 0.000888 | 0.000896 | 0.000896 | 0.000898 |
| 0.90 | 0.50 | 0.8 | 0.072727 | 0.084772 | 0.083619 | 0.088889 |
| 0.95 | 0.50 | 0.8 | 0.038095 | 0.041201 | 0.041063 | 0.042105 |
| 0.99 | 0.50 | 0.8 | 0.007921 | 0.008048 | 0.008047 | 0.008081 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ni, Y.; Seffernick, A.E.; Onar-Thomas, A.; Pounds, S.B. Computing Power and Sample Size for the False Discovery Rate in Multiple Applications. Genes 2024, 15, 344. https://doi.org/10.3390/genes15030344
