Abstract

In the studies of Alzheimer’s disease (AD), jointly analyzing imaging data and genetic data provides an effective method to explore the potential biomarkers of AD. AD can be separated into healthy controls (HC), early mild cognitive impairment (EMCI), late mild cognitive impairment (LMCI) and AD. In the meantime, identifying the important biomarkers of AD progression, and analyzing these biomarkers in AD provide valuable insights into understanding the mechanism of AD. In this paper, we present a novel data fusion method and a genetic weighted random forest method to mine important features. Specifically, we amplify the difference among AD, LMCI, EMCI and HC by introducing eigenvalues calculated from the gene p-value matrix for feature fusion. Furthermore, we construct the genetic weighted random forest using the resulting fused features. Genetic evolution is used to increase the diversity among decision trees and the decision trees generated are weighted by weights. After training, the genetic weighted random forest is analyzed further to detect the significant fused features. The validation experiments highlight the performance and generalization of our proposed model. We analyze the biological significance of the results and identify some significant genes (CSMD1, CDH13, PTPRD, MACROD2 and WWOX). Furthermore, the calcium signaling pathway, arrhythmogenic right ventricular cardiomyopathy and the glutamatergic synapse pathway were identified. The investigational findings demonstrate that our proposed model presents an accurate and efficient approach to identifying significant biomarkers in AD.

1. Introduction

As the most common type of dementia, Alzheimer’s disease (AD) affects the cognition, behavior, and memory of senior adults [1]. In the research of AD, biomarkers can provide new targets for AD and facilitate the diagnosis and treatment of AD. Recently, scientists have obtained a large amount of imaging data (such as the Magnetic Resonance Imaging (MRI), Computed Tomography (CT) and Diffusion Tensor Imaging (DTI)) and the corresponding genetic data (such as the genetic sequencing data). These imaging data and genetic data were used to explore the association between them, detect the biomarker and diagnose the early stage of the disease. Genome-wide association analysis (GWAS) was proposed to identify single nucleotide polymorphisms (SNPs) associated with phenotype [2]. On this basis, FGWAS [3], vGWAS [4], vGeneWAS [5] and other methods [6] were developed to identify genetic markers. To avoid the disadvantages of the single GWAS method, studies based on GWAS and machine learning were performed to capture the markers. Compared with pure association analysis, the combination of GWAS method and machine learning was more effective in analyzing the association between imaging data and genetic data.

Gaetani et al. [7] identified that SIRT2, HGF, MMP-10 and CXCL5 reflected neuroinflammation in early AD using cerebrospinal fluid analysis, spearman correlation and least absolute shrinkage and selection operator. Popuri et al. [8] proposed a model to predict the probability of individuals diagnosed with dementia of Alzheimer’s type (DAT) and achieved the classification area under the curve (AUC) of 0.78 from individuals diagnosed with DAT and from individuals not diagnosed with DAT. Using multimodal neuroimaging biomarkers, Luckett et al. [9] applied artificial neural networks to investigate the changes in Aβ deposition, glucose metabolism and brain atrophy of 131 mutation carriers and 74 non-carriers. They obtained the caudate, cingulate, and precuneus as predictors and identified the biphasic response in metabolism. Sinead et al. [10] used 304 subjects from the INSIGHT-preAD cohort to construct the candidate features and applied the random forest method, logistic regression, and support vector machine to predict the amyloid status. Ezzati et al. [11] used the K-nearest neighbors (KNN) algorithm to identify individuals with declining cognitive function and stable cognitive function among the 202 participants from the Alzheimer’s Disease Neuroimaging Initiative (ADNI) and the 77 participants from the placebo arm of the phase III trial of Semagacestat. They obtained a positive prediction accuracy of 80.8%. However, there are still limitations in these studies. Although machine learning methods have been applied and promising results found, the machine learning models used in these studies were traditional models, and there is no performance comparison against the other methods. For example, if we want to extract an important feature set from thousands of features, hundreds of thousands of experiments should be repeated using traditional models and this will lead to a large cost of time. The fusion model of different algorithms provides an efficient method to resolve the problem. Moreover, due to the large number of features generated from voxel-based MRI, how to refine and fuse the multi-features and extract effective information is a field worthy of further investigation.

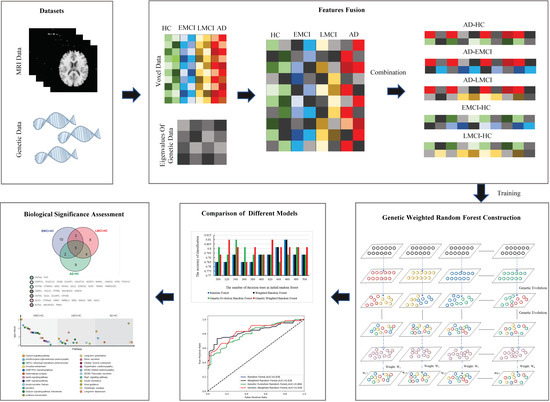

The workflow of this study is shown in Figure 1.

Figure 1.

The workflow of the presented study.

In this study, we have proposed a novel method to fuse multi-features and a genetic weighted random forest model to identify the important fused features (calculated by the eigenvalues from genetic data and the voxel values from voxel-based MRI data) from different datasets. Firstly, we constructed a matrix using the genetic data associated with AD and calculated the eigenvalues of the matrix using the datasets of HC and AD. Then, we extracted the voxel features from imaging data and applied the eigenvalues of genetic data to construct the fused features. Subsequently, we proposed a genetic weighted random forest model to mine the important features from the fused features and applied different models for modeling comparison. We assessed the biological significance using the extracted features. Finally, we introduced the EMCI and LMCI datasets to evaluate the changes of genes and pathways that might lead to the transaction from EMCI to AD. The results prove that our model is efficient to extract the important features and to provide the candidate biomarkers for the AD diagnosis. In addition, our method can be used for other neurological diseases.

2. Materials and Methods

2.1. Imaging and Genetic Data

In this study, we used the imaging and genetic data for the prediction of AD biomarkers. We downloaded the MRI data of 680 males and 587 females, including 310 HC, 271 EMCI, 390 LMCI and 296 AD subjects from ADNI (adni.loni.usc.edu, accessed on 15 May 2020). The details of these participants are shown in Table 1.

Table 1.

Participant characteristics. HC = healthy control; EMCI = Early Mild Cognitive Impairment; LMCI = Late Mild cognitive Impairment; AD = Alzheimer’s disease; M/F = male/female; Edu = education; sd = standard deviation; p = p-value calculated by t-test.

We applied voxel-based morphometry (VBM) to preprocess the MRI scans. The downloaded images were skull-stripped and segmented using CAT12 [12]. Then, panning, rotating and swivel were applied to the resulting images [13]. The images were then subjected to local nonlinear deformation and aligned to the Montreal Neurological Institute (MNI) space. To ensure uniformity of the imaging data for each participant and to preserve inter-individual variation, we modulated the images and obtained the gray matter images. Finally, to reduce the cost of data processing, we down-sampled the obtained images from 181 × 218 × 181 to 61 × 73 × 61 voxels and aligned them into the anatomical automatic labeling (AAL) template [14,15].

The Illumina GWAS arrays (610-Quad, OmniExpress or HumanOmni2.5-4v1) (Illumina, Inc., San Diego, CA, USA) and blood genomic DNA samples were used to genotype the participants [16]. Then, we applied PLINK v1.9 [17] to extract SNPs using the following process: (1) extracting SNPs on chromosome 1–22; (2) call rate of each SNP ≥ 95%; (3) minor allele frequency of each SNP ≥ 5%; (4) Hardy–Weinberg equilibrium test p ≥ 1 × 106; (5) call rate of each participant ≥ 95%. Finally, we obtained 5,574,300 SNPs that passed the quality control.

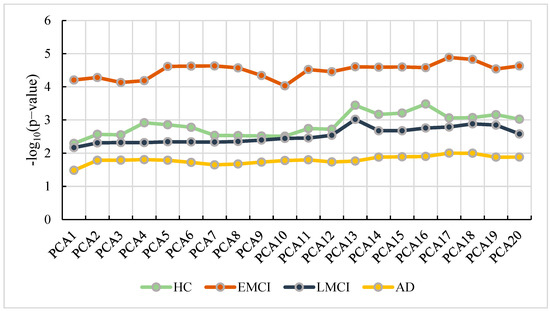

In this study, we used the principal components from population stratification analysis as the covariates for GWAS (linear regression). We separated the participants into four groups based on the diagnosis statues of the participants. To determine the optimal number of principal component analysis (PCA) in our study, we conducted GWAS repeatedly with different numbers of PCA ranging from 1 to 20. Then, we applied the GATEs (gene-based association test that uses extended Simes) method [18] to calculate p-values for genes. Due to the biological significance of APP, we selected APP as the candidate gene to determine the optimal number of PCA. Figure 2 shows the results of APP with different numbers of PCA.

Figure 2.

The number of PCA vs. the corresponding p-value of APP.

From Figure 2, we can observe that the best number of PCA in HC is 16, while the number in EMCI, LMCI and AD are 17, 13 and 17, respectively. With the optimal parameters, we performed GWAS (linear regression) using the genotype, phenotype, and covariates (including age, gender, education, and the optimal number of PCA) in each group and conducted Bonferroni correction for multiple testing. Then, we obtained the GWAS results with 5,574,300 SNPs. We selected SNPs with a p-value less than 0.05 and mapped them into corresponding genes.

2.2. Features Fusion

To construct the fused features, we analyzed the MRI and genetic data for each group separately. For the MRI data, we used the 90 brain regions from AAL atlas to calculate the voxels of each participant and saved them as matrices M. The voxel-based MRI data of 1267 participants was used in our work and the number of voxels in 90 brain regions was 43,851. Since there were a total of 43,851 voxels in 90 brain regions, the size of M was 1267 × 43,851. For our binary classification, two sets of data were required in our experiment. So, we obtained the , , and from M according to Table 1, while the size of , , and were 296 43,851, 390 43,851, 271 43,851 and 310 43,851, respectively.

For the genetic data, we calculated SNPs in 24 genes [19] associated with AD. Then, we used the corresponding SNPs to construct the vector (Equation (1)).

where is the jth SNP in . The vector contained information of SNPs in 24 genes and used the p-value to generate vector (Equation (2)).

From Equation (2), we obtained the vector () in each group. Since the number of SNPs in some genes were less than 24, we filled in the missing values with 0. Using the resulting vectors, we obtained the matrix () from Equation (3).

Then, we applied Equation (4) to calculate the eigenvalue of each .

where E is the unity matrix, x is an eigenvector, and is the corresponding eigenvalue. Since there were 24 eigenvalues in each group, we selected the as the final eigenvalue of each group and defined them as , , and .

Using the resulting and M, we defined the initial datasets as S, while the elements of them were calculated by . Let , , , and denote the five initial datasets. These datasets were defined as Equation (5).

where , , , and were 606 43,851, 567 43,581, 686 43,581, 700 43,581 and 581 43,581, respectively.

2.3. Genetic Weighted Random Forest Construction

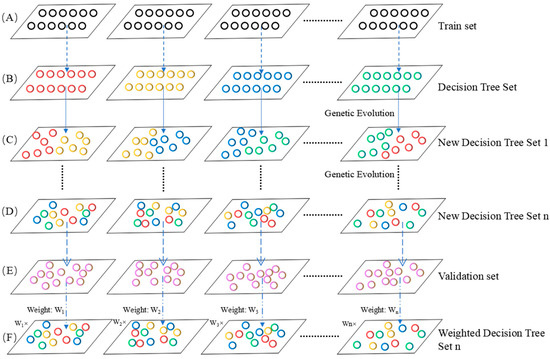

In this study, we applied the genetic weighted random forest method to extract the important features contributing to the classification of different groups. The workflow of the genetic weighted random forest method is shown in Figure 3.

Figure 3.

The workflow of genetic weighted random forest. (A) The training set. (B) The decision tree set constructed using the train set. (C) The new decision tree set after genetic evolution for once. (D) The new decision tree set after genetic evolution for n times. (E) The calculation of weight of each decision tree in validation set. (F) The weighted decision tree using the weight from E.

- Definition of the , and .

In this model, we introduced genetic algorithm and weight to a traditional random forest. Specifically, taking the as an example, we selected features randomly from dataset to construct the initial random forest. The dataset was defined as Equation (6).

where is the features of sample i in , is the label (“1” or “−1”) of the corresponding features and N is the sample number. The label corresponding to HC is “1”, the label corresponding to AD samples is “−1”, and N is 606.

To find the significant features, we separated the S into , and with the ratio defined in Equation (7).

- Construction of the decision tree.

To construct the initial decision trees, we randomly selected the features from Equation (8).

where is the features in decision tree f, is the features in , is the upward rounding function and is the number of features in . Then, we obtained one decision tree. The step was repeated for n times to obtain n decision trees, and the initial random forest was composed of n decision trees.

- Genetic evolution.

The initial random forest was regarded as the initial population. The decision tree in each group with the best classification accuracy in was picked out for the genetic evolution and two groups of five decision trees were selected as the parents. A new decision tree was generated from the selected parents. Then, the genetic evolution was repeated for n times and n new decision trees were generated. By repeating the steps above for (1, 50) times, we obtained a new random forest.

- Weight calculation.

The classification accuracy of decision tree was defined as Equation (9).

where is the classification accuracy of decision tree f in , is the number of samples that classified correctly by tree f in , and is the number of samples in .

- Weighted decision tree.

The accuracy of each resulting decision tree was used as the weight to adjust the corresponding decision tree (Equation (10)).

where is the resulting decision trees, is the weighted decision tree f and is the weight of decision tree f. To obtain the final classification accuracy of the genetic weighted random forest, we utilized to calculate the classification accuracy using Equation (11).

where the is the final classification accuracy of the genetic weighted random forest, the is the number of samples that classified correctly by all weighted decision trees in and the is the number of samples in .

2.4. Comparison of Different Models

To determine the repeatability of our model, we performed 10 independent experiments. We also applied the traditional random forest, genetic evolution random forest and weighted random forest as the comparison models to verify our model. Moreover, to evaluate the stability of our model, we performed 10 independent experiments and calculated the accuracy with 5 datasets.

2.5. Biological Significance Assessment

Through the process described in Section 2.3, we obtained five sets of features from , , , and . To extract the important features, we sorted the features from and according to their frequency and selected the top 1000 features as candidate features. Then, we defined the range of features as [k, 1000], where k was the smallest integer greater than and divisible by 10, and the step was 10. Subsequently, we applied the genetic weighted random forest to select the important features.

Using the resulting features, we calculated the optimal number of PCA and performed GWAS with covariates (age, gender, education and PCA results). The ECS (Effective chi-square test) method [20] was applied to calculate the p-values of genes and Bonferroni correction was applied to perform multiple testing. Finally, we selected the genes (corrected p-value < 0.001) and introduced the pathway analysis [21] to assess the biological significance of the important features.

3. Results

3.1. The Results of Parameter Optimization

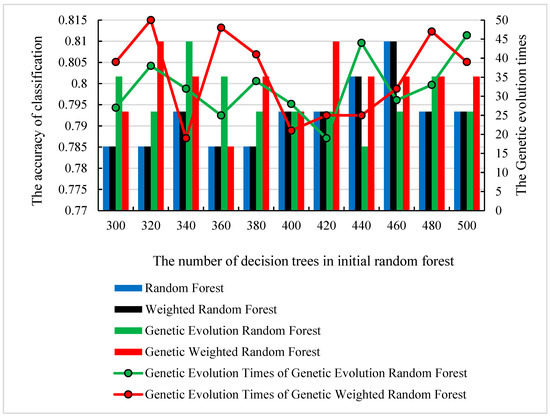

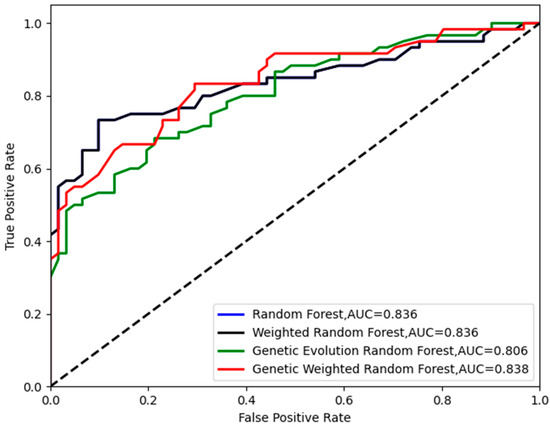

We used the as the dataset S. Initially, features were randomly extracted from the initial datasets to construct one initial decision tree. Then, the number of decision trees f was set to the range of (300, 500) and f decision trees were randomly constructed. We randomly selected and traversed all the parameters described in Section 2.3. To find the optimal parameter of each model, we conducted 12 repeated independent experiments. To avoid the accidental best parameter, we removed the best and the worst results. The accuracy and the corresponding number of decision trees are shown in Figure 4. The corresponding ROC curves (receiver operating characteristic curve) are shown in Figure 5.

Figure 4.

The optimal number of decision trees, genetic evolution times and their corresponding classification accuracy.

Figure 5.

The ROC curves of the four models in .

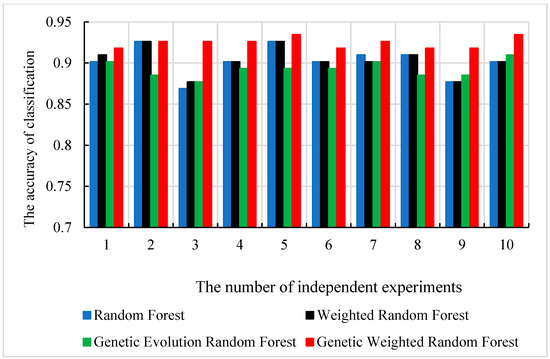

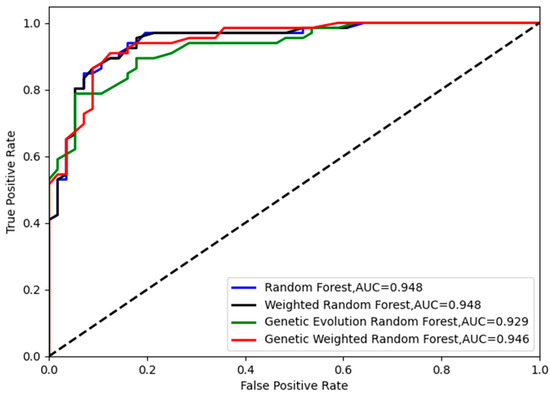

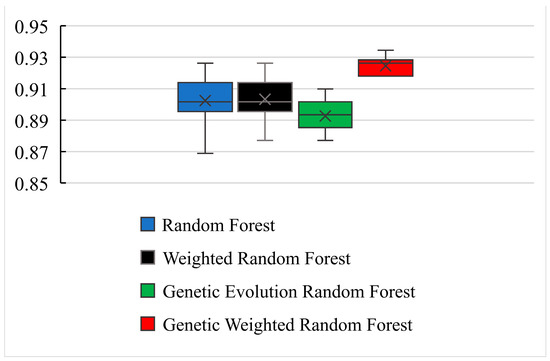

As shown in Figure 4, all of the peaks of classification accuracy in four models are 80.99%. The nodes of random forest and weighted random forest are 460, while the nodes of genetic evolution random forest and genetic weighted random forest are (340, 32) and (320, 50). From Figure 5, we find that the AUC values (Area Under Curve) of four models are all above 0.8 and our model has the best AUC of 0.838. Then, we applied the optimal parameters to obtain the final classification accuracy in . The 10 independent experiments were used to ensure greater credibility in our findings. Figure 6 shows the results of 4 models in and Figure 7 shows the ROC curves of the four models in . Figure 8 shows the boxplot of 4 models in 10 independent experiments.

Figure 6.

The classification accuracy of 4 models in 10 independent experiments.

Figure 7.

The ROC curves of the four models in .

Figure 8.

The boxplot of 4 models in 10 independent experiments.

As shown in Figure 6 and Figure 8, the best accuracy is found in our model, and the accuracy of our model is above 91%. The best is 93.44% and the worst is 91.8%. The genetic evolution random forest is lower than our model while the genetic evolution is applied in them. From Figure 7, we find that the AUC values of four models are all above 0.92. Moreover, the random forest model and weighted random forest model have the same classification accuracy seven times, and the other three times, the accuracy of the weighted random forest model is better than the random forest model. This shows that although the use of weights contributes to classification, the improvement is limited. When the genetic evolution was introduced in the random forest, the accuracy changed a lot. There are six times that the accuracy worsens, one time that the accuracy is the same, and only 3 times that it gets better. This shows that genetic evolution introduces changes to decision trees, but the introduced change is not necessarily positive. However, the use of weights after genetic evolution greatly increases the accuracy.

We also calculated the Precision, Recall and F1 score and present them in Table 2.

Table 2.

The Precision, Recall and F1 score of valid set and test set.

3.2. Extraction of Important Features

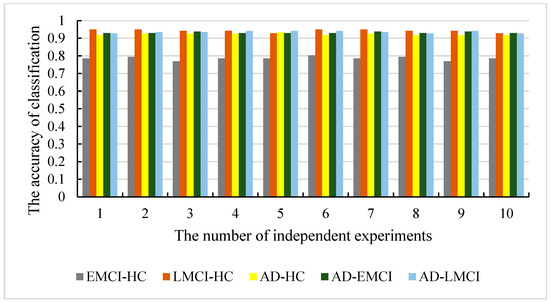

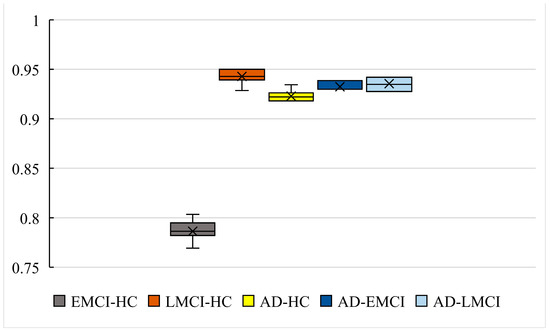

To evaluate the universality of our model and to avoid the contingency of the experiment, we conducted 10 independent experiments with the other 4 datasets (, , and ). Figure 9 shows the accuracy of the 10 independent experiments with all 5 datasets. Figure 10 shows the boxplot of our models in five datasets. Table 3 presents the Precision, Recall and F1 score of our model in 10 independent experiments.

Figure 9.

The classification accuracy of our models in five datasets.

Figure 10.

The boxplot of our models in five datasets.

Table 3.

The Precision, Recall and F1 score of our model in 10 independent experiments.

As shown in Figure 9 and Figure 10, the accuracy of is 93.44% and the worst one is 91.8%. The difference between them is only 1.64%. The results with show better stability (the best is 93.86% and the worst is 92.98%), while the results with also give a difference with 1.45% (the best is 94.2% and the worst is 92.75%). The accuracy with is the best among the five datasets (the best is 95% and the worst is 92.85%). The best accuracy with is 80.34% and the worst is 76.92%, which is also the worst in five datasets. However, the differences in the best and worst accuracy results are less than 4% for all five datasets. This proves that that the results are stable, and there is no experimental contingency in the results.

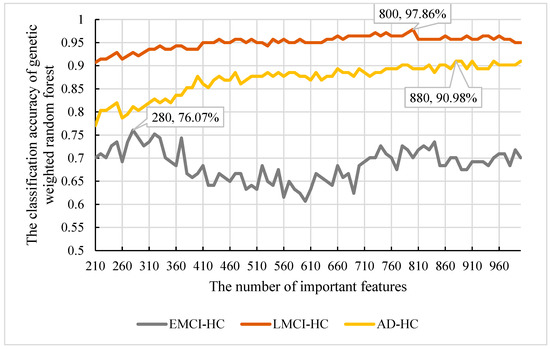

From Section 3.1, we obtained the final features using our model. However, the features were too many for the biological significance assessment. We needed to extract the features with the best classification and refer to them as important features. To determine the important features, we calculated the frequency of all features obtained and sorted them according to their frequency. Then, we used the top 1000 features as the candidate features and used our model to select the important features. Considering that there were 209 nodes in each of our decision trees, we set the new number of nodes in the range of [210, 1000] and the step size to 10. Subsequently, we applied the parameters obtained in Section 3.1 to construct the initial random forest. The classification accuracy of the features is shown in Figure 11.

Figure 11.

The classification accuracy and important features in , and .

From Figure 11, we can observe that the best accuracy was 90.98% with 880 features. So, the top 880 features were the important features in . Moreover, considering that the best accuracy of 95% is in and the worst is in , we also extracted the important features in and and found that the best classification accuracy of was 97.86% and was 76.07%. The corresponding node were 800 and 280, respectively. So, the important features of were the top 800 features and the important features of were the top 280.

3.3. Biological Significance Assessment

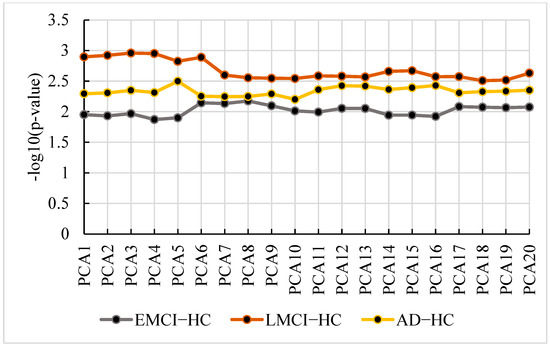

Using the extracted features, we calculated the optimal PCA number of each group. Figure 12 shows the p-value and the PCA number.

Figure 12.

The p-value and PCA number in , and .

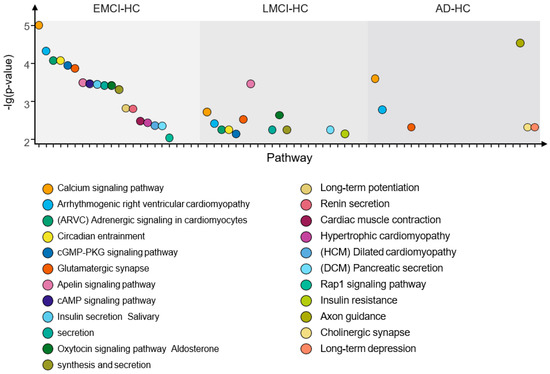

From Figure 12, we find the optimal PCA number in , and is 5, 3 and 8, respectively. Using the selected number, we performed GWAS in each group and calculated p-values for genes. Then, we applied the genes with corrected p-values < 0.001 (Bonferroni corrected) for pathway analysis. We selected the pathways with corrected p-value < 0.01 in each group. The pathways and their corrected p-value of each group are shown in Figure 13 [22] and the top 20 genes in 3 groups are shown in Figure 14.

Figure 13.

The pathways with corrected p-value < 0.01 of the EMCI−HC group, LMCI−HC group and AD−HC group.

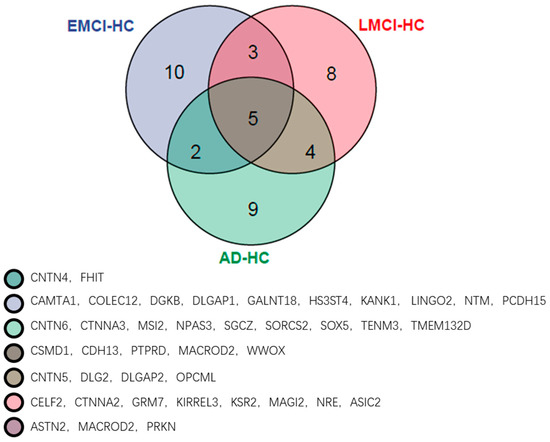

Figure 14.

The top 20 genes of the EMCI−HC group, LMCI−HC group and AD−HC group.

As shown in Figure 13, there are three intersection pathways with corrected p-value < 0.01 in EMCI−group, LMCI−HC group and AD-HC group, while the three pathways are the significant pathways related to AD. This indicates that there are some common pathways among the EMCI, LMCI and AD groups, possibly because the EMCI and LMCI are the transaction statues between HC and AD. In addition, we also find that the number of top pathways is quite different in each group, suggesting that this may be caused by the different top genes with corrected p-value < 0.001 in each group. Therefore, we calculated the top 20 genes of each group and presented them in Figure 14.

From Figure 14, we can observe that there are five of the same top genes in three groups, and these genes are all associated with AD. The numbers of genes in only one group are 10 (EMCI−HC), 8 (LMCI−HC) and 9 (AD−HC), and the numbers of genes between two groups are 3 (EMCI−HC and LMCI−HC), 4 (LMCI−HC and AD−HC) and 2 (EMCI−HC and AD−HC).

4. Discussion

In this study, we proposed a novel feature construction method using the eigenvalues of gene matrix to amplify the features of each group. Moreover, we also proposed a feature detection model, which was used to mine the underlying information of the fused features.

From Figure 2, we find that the p-values changed depending on the number of principal components. However, due to the diversity of imaging data and genetic data in each group, the change was not a linear process and the GWAS result of each group was also quite different. This indicated that the difference of data in each group was amplified by jointly analyzing imaging and genetic data. Some researchers used the gene data to fuse features in other methods [23,24,25] and obtained satisfactory classification performance. In this study, we selected the GWAS results associated with AD to construct a matrix and calculated the eigenvalues to adjust the imaging features. Using the resulting features as the fused features, we proposed a genetic weighted random forest to mine the features contributed to AD.

As shown in Figure 4, we find that the optimal numbers of initial random forests of 4 models is 460, 460, 340 and 320, respectively. The training process and training results in random forest model and weighted random forest are roughly the same. The difference between the two models is used as an additional weight coefficient in the weighted random forest. In the 10 independent experiments of , we also found that the accuracy of weighted random forest is better than random forest. This indicates that the weight is able to improve the performance of random forest model. When the genetic evolution was added into random forest instead of the weight, the accuracy of the model fluctuated and no trend was found. In , the genetic evolution random forest did not perform as well as it did with . However, the accuracy became the best in 10 independent experiments when the weight and genetic evolution were added into the random forest. Although genetic evolution did not improve the performance of the decision trees, it changed the features of the decision trees, and the addition of weight made a qualitative change in the model. The accuracy of the new model reached 93.44% and the fluctuation of accuracy was less than 2% in the 10 independent experiments. This suggests that the combination of genetic evolution and weight greatly enhanced the performance and stability of the model. To evaluate the universality of our model, we performed another 10 independent experiments with , , and . From Figure 9, we observe that although the application of genetic data amplifies the differences between imaging features, the accuracy of is the lowest. This may be caused by the smaller difference in features between the EMCI and HC groups. The accuracy of the other four datasets is all above 90% and the gaps of accuracy in the four datasets are all approximately 2%. This proves that our model outperforms the other three models and the features of AD are quite different from the other three groups, as well as the features of the LCMI and HC groups. As shown in Figure 11, we identified the important features of three datasets and the accuracy of the important features were 76.07%, 97.86% and 90.98%, which were similar to the results obtained from . This proves that the results mined by our model are valid candidates for filtering important features. Likewise, there are other neurological diseases for which imaging data and genetic data are available. By fusing the corresponding voxel-based image data and genetic data, it is certainly the case that our model could also be applied effectively to these diseases and we expect to develop further deep learning-based models for a wider range of diseases.

By analyzing Figure 13 and Figure 14, the CSMD1 was found to be related to AβPP metabolism and AD [26]. The decreasing of the CDH13 expression level reduced the cell apoptosis in AD [27]. Taylor et al. found that the PTPRD altered in AD and the PTPRD mediated by BACE1 provided potential important new mechanisms for AD risks [28]. In another study, PTPRD was identified as the significant gene associated with AD [29]. The MACROD2, which was the neurodevelopmental-related gene, was reported as the risk loci of autism spectrum disorder [30], while the autism spectrum disorder had overlapping mechanisms of pathogenesis with AD [31]. Therefore, we suggested that the MACROD2 was associated with AD by affecting the autism spectrum disorder. The WWOX was down-regulated in the hippocampus of AD patients [32] and conferred AD risk [33]. Sze et al. reported that the reduction of WWOX modulated the generation of Aβ [32,34]. Furthermore, we also identified specific genes in each group. The CAMTAs were bind calmodulin to activate transcription [35] and the calmodulin played a role in long-term potentiation, learn and memory [28]. The CTNNA2 was found to be related to general cognitive function by analysis of GWAS data [36]. Li et al. found that there were three mutations of SOX5 associated with AD by segregating with the affection status, and they suggested that the SOX5 might be a new candidate gene of AD [37].

We found that the EMCI-HC group had more pathways with corrected p-value < 0.01 than LMCI-HC and AD-HC groups. The pathways were constantly streamlined from EMCI-HC and LMCI-HC to AD-HC group. The reason might be that EMCI and LMCI were the precursor stages of AD, and the increasing difference in each group might be another reason for pathway reduction. We also found that there were three intersection pathways in the three groups, and the calcium signaling pathway (corrected p-value = 9.83 × 106 in EMCI-HC, corrected p-value = 1.9 × 103 in LMCI-HC and corrected p-value = 2.54 × 104 in AD-HC) was the significant pathway. For example, excessive Ca2+ played a role in AD by increasing Aβ level, and the Ca2+ could be increased by Aβ, too [38,39,40,41]. The arrhythmogenic right ventricular cardiomyopathy (corrected p-value = 4.75 × 105 in EMCI-HC, corrected p-value = 3.8 × 103 in LMCI-HC and corrected p-value = 1.63 × 103 in AD-HC) was mapped to the region of chromosome 14q24 using linkage analysis, while one of the early-onset AD genes (AD3) was identified in this region [42]. For the glutamatergic synapse pathway (corrected p-value = 1.37 × 104 in EMCI-HC, corrected p-value = 2.95 × 103 in LMCI-HC and corrected p-value = 4.78 × 103 in AD-HC), the glutamate played an important role in memory and learning and the absence of glutamate could affect the memory, cognition, and behavior [43,44]. As the receptor of glutamate, N-methyl-D-aspartic-acid could be identified by inducting long-term potentiation (corrected p-value = 1.52 × 103 in EMCI-HC) and long-term depression (corrected p-value = 4.78 × 103 in AD-HC) [45,46]. The changes in synaptic strength were caused by the long-term potentiation and long-term depression and affected memory and learning [47]. The connection between memory and long-term potentiation in the hippocampus was identified by molecular genetic approaches [48] and tau phosphorylation in the hippocampus was enhanced by the induction of long-term depression [49,50]. From EMCI to AD, the long-term potentiation was not found in the AD-HC group and the long-term depression became a significant pathway. We suggested that the changes of these pathways might be the induced reason of the transaction from EMCI to AD.

5. Conclusions

In this paper, imaging-genetics research was performed to detect important features using genetic weighted random forest. Specifically, a novel feature fusion method was proposed to construct the fused features. Moreover, we analyzed the significance of the extracted features and proved that our model was able to produce promising performance in screening the potential features. We evaluated the robustness and stability with other data groups and this suggested that our model could be used in other diseases. By analyzing the results, we identified the intersection genes in the EMCI-HC group, LMCI-HC group and AD-HC group, including CSMD1, CDH13, PTPRD, MACROD2 and WWOX. We also identified some significant pathways associated with AD, such as the calcium signaling pathway (corrected p-value = 9.83 × 106 in EMCI-HC), arrhythmogenic right ventricular cardiomyopathy (corrected p-value = 4.75 × 105 in EMCI-HC and glutamatergic synapse pathway (corrected p-value = 1.37 × 104 in EMCI-HC). The findings demonstrate that our proposed method is able to outperform several important biomarkers for the transaction of AD diagnosis and to identify potential biomarkers for other diseases. Naturally, our work has some limitations. For example, deep learning is a new field of machine learning that uses a machine learning technique called artificial neural networks to extract features and make predictions. The application of deep learning may improve the performance of our work. We will explore a deep learning approach in our future work to investigate our proposed ideas.

Author Contributions

Z.H., L.M. and X.M. led and supervised the research. Z.H., L.M., W.L. and X.M. designed the research and wrote the article. Z.H. and X.W. performed the data processing, visualization of results. Z.H. and L.M. performed the pathway analysis. W.L. and F.W. performed data pre-processing and quality control. Z.H., L.M. and X.M. revised the manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

This study was funded by the National Natural Science Foundation of China (61901063, 61875022), the MOE (Ministry of Education in China) Project of Humanities and Social Sciences (19YJCZH120), and the Science and Technology Plan Project of Changzhou (CE20205042, CJ20220151). This work was also sponsored by the Qing Lan Project of Jiangsu Province (2020).

Institutional Review Board Statement

Ethical review and approval was not required for the study on human participants as data collection and sharing for this project was funded by a public database (the Alzheimer’s Disease Neuroimaging Initiative, ADNI).

Informed Consent Statement

We applied the access from ADNI. The patients/participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Data Availability Statement

Data used for this study were obtained from ADNI studies via data sharing agreements that did not include permission to further share the data. Data from ADNI are available from the ADNI database (adni.loni.usc.edu) upon registration and compliance with the data usage agreement.

Acknowledgments

Data collection and sharing for this project was funded by the Alzheimer’s Disease Neuroimaging Initiative (ADNI). The complete ADNI Acknowledgement is available at http://adni.loni.usc.edu/wp-content/uploads/how_to_apply/ADNI_Acknowledgement_List.pdf (accessed on 15 May 2020).

Conflicts of Interest

The authors declare no conflict of interests.

References

- Alzheimer’s Association. 2019 Alzheimer’s disease facts and figures. Alzheimer’s Dement. 2019, 15, 321–387. [Google Scholar] [CrossRef]

- Newton-Cheh, C.; Hirschhorn, J.N. Genetic association studies of complex traits: Design and analysis issues. Mutat. Res. Fundam. Mol. Mech. Mutagen. 2005, 573, 54–69. [Google Scholar] [CrossRef] [PubMed]

- Huang, C.; Thompson, P.; Wang, Y.; Yu, Y.; Zhang, J.; Kong, D.; Colen, R.R.; Knickmeyer, R.C.; Zhu, H. FGWAS: Functional genome wide association analysis. NeuroImage 2017, 159, 107–121. [Google Scholar] [CrossRef] [PubMed]

- Stein, J.L.; Hua, X.; Lee, S.; Ho, A.J.; Leow, A.D.; Toga, A.W.; Saykin, A.J.; Shen, L.; Foroud, T.; Pankratz, N.; et al. Voxelwise genome-wide association study (vGWAS). NeuroImage 2010, 53, 1160–1174. [Google Scholar] [CrossRef] [PubMed]

- Hibar, D.P.; Stein, J.L.; Kohannim, O.; Jahanshad, N.; Saykin, A.J.; Shen, L.; Kim, S.; Pankratz, N.; Foroud, T.; Huentelman, M.J.; et al. Voxelwise gene-wide association study (vGeneWAS): Multivariate gene-based association testing in 731 elderly subjects. NeuroImage 2011, 56, 1875–1891. [Google Scholar] [CrossRef]

- Vounou, M.; Nichols, T.E.; Montana, G.; Alzheimer’s Disease Neuroimaging, I. Discovering genetic associations with high-dimensional neuroimaging phenotypes: A sparse reduced-rank regression approach. NeuroImage 2010, 53, 1147–1159. [Google Scholar] [CrossRef] [PubMed]

- Gaetani, L.; Bellomo, G.; Parnetti, L.; Blennow, K.; Zetterberg, H.; Di Filippo, M. Neuroinflammation and Alzheimer’s Disease: A Machine Learning Approach to CSF Proteomics. Cells 2021, 10, 1930. [Google Scholar] [CrossRef]

- Popuri, K.; Balachandar, R.; Alpert, K.; Lu, D.; Bhalla, M.; Mackenzie, I.R.; Hsiung, R.G.-Y.; Wang, L.; Beg, M.F. Development and validation of a novel dementia of Alzheimer’s type (DAT) score based on metabolism FDG-PET imaging. NeuroImage Clin. 2018, 18, 802–813. [Google Scholar] [CrossRef]

- Luckett, P.H.; McCullough, A.; Gordon, B.A.; Strain, J.; Flores, S.; Dincer, A.; McCarthy, J.; Kuffner, T.; Stern, A.; Meeker, K.L.; et al. Modeling autosomal dominant Alzheimer’s disease with machine learning. Alzheimer’s Dement. 2021, 17, 1005–1016. [Google Scholar] [CrossRef]

- Gaubert, S.; Houot, M.; Raimondo, F.; Ansart, M.; Corsi, M.-C.; Naccache, L.; Sitt, J.D.; Habert, M.-O.; Dubois, B.; De Vico Fallani, F.; et al. A machine learning approach to screen for preclinical Alzheimer’s disease. Neurobiol. Aging 2021, 105, 205–216. [Google Scholar] [CrossRef]

- Ezzati, A.; Lipton, R.B.; for the Alzheimer’s Disease Neuroimaging, I. Machine Learning Predictive Models Can Improve Efficacy of Clinical Trials for Alzheimer’s Disease. J. Alzheimer’s Dis. 2020, 74, 55–63. [Google Scholar] [CrossRef] [PubMed]

- Gaser, C.; Dahnke, R.; Thompson, P.M.; Kurth, F.; Luders, E. CAT-a computational anatomy toolbox for the analysis of structural MRI data. bioRxiv 2022. [Google Scholar] [CrossRef]

- Kurth, F.; Gaser, C.; Luders, E. A 12-step user guide for analyzing voxel-wise gray matter asymmetries in statistical parametric mapping (SPM). Nat. Protoc. 2015, 10, 293–304. [Google Scholar] [CrossRef] [PubMed]

- Jenkinson, M.; Beckmann, C.F.; Behrens, T.E.; Woolrich, M.W.; Smith, S.M. FSL. NeuroImage 2012, 62, 782–790. [Google Scholar] [CrossRef] [PubMed]

- Tzourio-Mazoyer, N.; Landeau, B.; Papathanassiou, D.; Crivello, F.; Etard, O.; Delcroix, N.; Mazoyer, B.; Joliot, M. Automated Anatomical Labeling of Activations in SPM Using a Macroscopic Anatomical Parcellation of the MNI MRI Single-Subject Brain. NeuroImage 2002, 15, 273–289. [Google Scholar] [CrossRef] [PubMed]

- Saykin, A.J.; Shen, L.; Foroud, T.M.; Potkin, S.G.; Swaminathan, S.; Kim, S.; Risacher, S.L.; Nho, K.; Huentelman, M.J.; Craig, D.W.; et al. Alzheimer’s Disease Neuroimaging Initiative biomarkers as quantitative phenotypes: Genetics core aims, progress, and plans. Alzheimer’s Dement. 2010, 6, 265–273. [Google Scholar] [CrossRef] [PubMed]

- Purcell, S.; Neale, B.; Todd-Brown, K.; Thomas, L.; Ferreira, M.A.R.; Bender, D.; Maller, J.; Sklar, P.; de Bakker, P.I.W.; Daly, M.J.; et al. PLINK: A Tool Set for Whole-Genome Association and Population-Based Linkage Analyses. Am. J. Hum. Genet. 2007, 81, 559–575. [Google Scholar] [CrossRef]

- Li, M.-X.; Gui, H.-S.; Kwan, J.S.; Sham, P.C. GATES: A Rapid and Powerful Gene-Based Association Test Using Extended Simes Procedure. Am. J. Hum. Genet. 2011, 88, 283–293. [Google Scholar] [CrossRef]

- Lambert, J.-C.; Ibrahim-Verbaas, C.A.; Harold, D.; Naj, A.C.; Sims, R.; Bellenguez, C.; Jun, G.; Destefano, A.L.; Bis, J.C.; Beecham, G.W.; et al. Meta-analysis of 74,046 individuals identifies 11 new susceptibility loci for Alzheimer’s disease. Nat. Genet. 2013, 45, 1452–1458. [Google Scholar] [CrossRef]

- Li, M.; Jiang, L.; Mak, T.S.H.; Kwan, J.S.H.; Xue, C.; Chen, P.; Leung, H.C.-M.; Cui, L.; Li, T.; Sham, P.C. A powerful conditional gene-based association approach implicated functionally important genes for schizophrenia. Bioinformatics 2019, 35, 628–635. [Google Scholar] [CrossRef]

- Bu, D.; Luo, H.; Huo, P.; Wang, Z.; Zhang, S.; He, Z.; Wu, Y.; Zhao, L.; Liu, J.; Guo, J.; et al. KOBAS-i: Intelligent prioritization and exploratory visualization of biological functions for gene enrichment analysis. Nucleic Acids Res. 2021, 49, W317–W325. [Google Scholar] [CrossRef] [PubMed]

- Shenoy, A.R. grafify: An R Package for Easy Graphs, ANOVAs and Post-Hoc Comparisons; v1.4.1; Zenodo: Geneva, Switzerland, 2021. [Google Scholar] [CrossRef]

- Bi, X.a.; Hu, X.; Wu, H.; Wang, Y. Multimodal Data Analysis of Alzheimer’s Disease Based on Clustering Evolutionary Random Forest. IEEE J. Biomed. Health Inform. 2020, 24, 2973–2983. [Google Scholar] [CrossRef] [PubMed]

- Bi, X.a.; Zhou, W.; Li, L.; Xing, Z. Detecting Risk Gene and Pathogenic Brain Region in EMCI Using a Novel GERF Algorithm Based on Brain Imaging and Genetic Data. IEEE J. Biomed. Health Inform. 2021, 25, 3019–3028. [Google Scholar] [CrossRef] [PubMed]

- Li, J.; Liu, W.; Cao, L.; Luo, H.; Xu, S.; Bao, P.; Meng, X.; Liang, H.; Fang, S. Hippocampal Subregion and Gene Detection in Alzheimer’s Disease Based on Genetic Clustering Random Forest. Genes 2021, 12, 683. [Google Scholar] [CrossRef]

- Parcerisas, A.; Rubio, S.E.; Muhaisen, A.; Gómez-Ramos, A.; Pujadas, L.; Puiggros, M.; Rossi, D.; Ureña, J.; Burgaya, F.; Pascual, M.; et al. Somatic Signature of Brain-Specific Single Nucleotide Variations in Sporadic Alzheimer’s Disease. J. Alzheimer’s Dis. 2014, 42, 1357–1382. [Google Scholar] [CrossRef]

- Liu, F.; Zhang, Z.; Chen, W.; Gu, H.; Yan, Q.J. Regulatory mechanism of microRNA-377 on CDH13 expression in the cell model of Alzheimer’s disease. Eur. Rev. Med. Pharmacol. Sci. 2018, 22, 2801–2808. [Google Scholar]

- Huentelman, M.J.; Papassotiropoulos, A.; Craig, D.W.; Hoerndli, F.J.; Pearson, J.V.; Huynh, K.-D.; Corneveaux, J.; Hänggi, J.; Mondadori, C.R.A.; Buchmann, A.; et al. Calmodulin-binding transcription activator 1 (CAMTA1) alleles predispose human episodic memory performance. Hum. Mol. Genet. 2007, 16, 1469–1477. [Google Scholar] [CrossRef]

- Bi, X.-A.; Li, L.; Xu, R.; Xing, Z. Pathogenic Factors Identification of Brain Imaging and Gene in Late Mild Cognitive Impairment. Interdiscip. Sci. Comput. Life Sci. 2021, 13, 511–520. [Google Scholar] [CrossRef]

- Anney, R.J.L.; Ripke, S.; Anttila, V.; Grove, J.; Holmans, P.; Huang, H.; Klei, L.; Lee, P.H.; Medland, S.E.; Neale, B.; et al. Meta-analysis of GWAS of over 16,000 individuals with autism spectrum disorder highlights a novel locus at 10q24.32 and a significant overlap with schizophrenia. Mol. Autism 2017, 8, 21. [Google Scholar] [CrossRef]

- Nadeem, M.S.; Hosawi, S.; Alshehri, S.; Ghoneim, M.M.; Imam, S.S.; Murtaza, B.N.; Kazmi, I. Symptomatic, Genetic, and Mechanistic Overlaps between Autism and Alzheimer’s Disease. Biomolecules 2021, 11, 1635. [Google Scholar] [CrossRef]

- Sze, C.-I.; Su, M.; Pugazhenthi, S.; Jambal, P.; Hsu, L.-J.; Heath, J.; Schultz, L.; Chang, N.-S. Down-regulation of WW Domain-containing Oxidoreductase Induces Tau Phosphorylation in Vitro: A Potential Role in Alzheimer’s Disease. J. Biol. Chem. 2004, 279, 30498–30506. [Google Scholar] [CrossRef] [PubMed]

- Kunkle, B.W.; Grenier-Boley, B.; Sims, R.; Bis, J.C.; Damotte, V.; Naj, A.C.; Boland, A.; Vronskaya, M.; van der Lee, S.J.; Amlie-Wolf, A.; et al. Genetic meta-analysis of diagnosed Alzheimer’s disease identifies new risk loci and implicates Aβ, tau, immunity and lipid processing. Nat. Genet. 2019, 51, 414–430. [Google Scholar] [CrossRef] [PubMed]

- Liu, C.-C.; Ho, P.-C.; Lee, I.-T.; Chen, Y.-A.; Chu, C.-H.; Teng, C.-C.; Wu, S.-N.; Sze, C.-I.; Chiang, M.-F.; Chang, N.-S. WWOX phosphorylation, signaling, and role in neurodegeneration. Cells 2018, 12, 563. [Google Scholar] [CrossRef] [PubMed]

- Bouché, N.; Scharlat, A.; Snedden, W.; Bouchez, D.; Fromm, H. A Novel Family of Calmodulin-binding Transcription Activators in Multicellular Organisms *. J. Biol. Chem. 2002, 277, 21851–21861. [Google Scholar] [CrossRef] [PubMed]

- Smeland, O.B.; Frei, O.; Kauppi, K.; Hill, W.D.; Li, W.; Wang, Y.; Krull, F.; Bettella, F.; Eriksen, J.A.; Witoelar, A.; et al. Identification of Genetic Loci Jointly Influencing Schizophrenia Risk and the Cognitive Traits of Verbal-Numerical Reasoning, Reaction Time, and General Cognitive Function. JAMA Psychiatry 2017, 74, 1065–1075. [Google Scholar] [CrossRef]

- Li, A.; Hooli, B.; Mullin, K.; Tate, R.E.; Bubnys, A.; Kirchner, R.; Chapman, B.; Hofmann, O.; Hide, W.; Tanzi, R.E. Silencing of the Drosophila ortholog of SOX5 leads to abnormal neuronal development and behavioral impairment. Hum. Mol. Genet. 2017, 26, 1472–1482. [Google Scholar] [CrossRef]

- Berridge, M.J. Calcium hypothesis of Alzheimer’s disease. Pflügers Arch. Eur. J. Physiol. 2010, 459, 441–449. [Google Scholar] [CrossRef]

- Celsi, F.; Pizzo, P.; Brini, M.; Leo, S.; Fotino, C.; Pinton, P.; Rizzuto, R. Mitochondria, calcium and cell death: A deadly triad in neurodegeneration. Biochim. Biophys. Acta Bioenergy 2009, 1787, 335–344. [Google Scholar] [CrossRef]

- Bojarski, L.; Herms, J.; Kuznicki, J. Calcium dysregulation in Alzheimer’s disease. Neurochem. Int. 2008, 52, 621–633. [Google Scholar] [CrossRef]

- Alzheimer’s Association Calcium Hypothesis, W.; Khachaturian, Z.S. Calcium Hypothesis of Alzheimer’s disease and brain aging: A framework for integrating new evidence into a comprehensive theory of pathogenesis. Alzheimer’s Dement. 2017, 13, 178–182.e117. [Google Scholar] [CrossRef]

- Roux, A.-F.; Rommens, J.M.; Read, L.; Duncan, A.M.V.; Cox, D.W. Physical and Transcription Map in the Region 14q24.3: Identification of Six Novel Transcripts. Genomics 1997, 43, 130–140. [Google Scholar] [CrossRef] [PubMed]

- Cassano, T.; Serviddio, G.; Gaetani, S.; Romano, A.; Dipasquale, P.; Cianci, S.; Bellanti, F.; Laconca, L.; Romano, A.D.; Padalino, I. Glutamatergic alterations and mitochondrial impairment in a murine model of Alzheimer disease. Neurobiol. Aging 2012, 33, 1121.e1–1121.e12. [Google Scholar] [CrossRef] [PubMed]

- Rupsingh, R.; Borrie, M.; Smith, M.; Wells, J.; Bartha, R. Reduced hippocampal glutamate in Alzheimer disease. Neurobiol. Aging 2011, 32, 802–810. [Google Scholar] [CrossRef]

- Styr, B.; Slutsky, I. Imbalance between firing homeostasis and synaptic plasticity drives early-phase Alzheimer’s disease. Nat. Neurosci. 2018, 21, 463–473. [Google Scholar] [CrossRef] [PubMed]

- Whitlock, J.R.; Heynen, A.J.; Shuler, M.G.; Bear, M.F. Learning induces long-term potentiation in the hippocampus. Science 2006, 313, 1093–1097. [Google Scholar] [CrossRef] [PubMed]

- Citri, A.; Malenka, R.C. Synaptic plasticity: Multiple forms, functions, and mechanisms. Neuropsychopharmacology 2008, 33, 18–41. [Google Scholar] [CrossRef] [PubMed]

- Fukunaga, K.; Miyamoto, E. A working model of CaM kinase II activity in hippocampal long-term potentiation and memory. Neurosci. Res. 2000, 38, 3–17. [Google Scholar] [CrossRef] [PubMed]

- Kimura, T.; Whitcomb, D.J.; Jo, J.; Regan, P.; Piers, T.; Heo, S.; Brown, C.; Hashikawa, T.; Murayama, M.; Seok, H. Microtubule-associated protein tau is essential for long-term depression in the hippocampus. Philos. Trans. R. Soc. B Biol. Sci. 2014, 369, 20130144. [Google Scholar] [CrossRef]

- Regan, P.; Piers, T.; Yi, J.-H.; Kim, D.-H.; Huh, S.; Park, S.J.; Ryu, J.H.; Whitcomb, D.J.; Cho, K. Tau phosphorylation at serine 396 residue is required for hippocampal LTD. J. Neurosci. 2015, 35, 4804–4812. [Google Scholar]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).