A Comparative Study of Supervised Machine Learning Algorithms for the Prediction of Long-Range Chromatin Interactions

Abstract

1. Introduction

2. Materials and Methods

2.1. Processing Publicly Available Data

2.2. Identification of RAD21-Associated Loops

2.3. Machine Learning Data Matrix

2.4. Supervised Learning

2.4.1. Decision Trees (DT)

2.4.2. Random Forests (RF)

2.4.3. XGBoost

2.4.4. Deep Learning

2.4.5. Support Vector Machines (SVM)

- True Positive (TP): the actual class is positive and the example is classified as positive;

- True Negative (TN): the actual class is negative and the example is classified as negative;

- False Positive (FP): the actual class is negative, but the example is classified as positive;

- False Negative (FN): the actual class is positive, but the example is classified as negative.
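The four outcomes above are the building blocks of the accuracy, precision, recall and F1-score values reported in the result tables. The following is a minimal sketch of how such scores can be derived from TP, TN, FP and FN counts using the standard textbook definitions. The names `ConfusionCounts`, `count_outcomes` and `summarize`, the choice of `1` as the positive label, and the toy label vectors are illustrative assumptions, not the authors' code; the study may also have averaged the scores across classes, which this sketch does not do.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Hypothetical container for the four outcome counts."""
    tp: int
    tn: int
    fp: int
    fn: int


def count_outcomes(y_true, y_pred, positive=1):
    """Tally TP, TN, FP and FN for a binary task (positive label assumed to be 1)."""
    tp = tn = fp = fn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive:
            if actual == positive:
                tp += 1  # positive example classified as positive
            else:
                fp += 1  # negative example classified as positive
        else:
            if actual == positive:
                fn += 1  # positive example classified as negative
            else:
                tn += 1  # negative example classified as negative
    return ConfusionCounts(tp, tn, fp, fn)


def safe_div(numerator, denominator):
    """Return 0.0 instead of raising when a denominator is zero."""
    return numerator / denominator if denominator else 0.0


def summarize(c):
    """Standard definitions of the four scores for the positive class."""
    precision = safe_div(c.tp, c.tp + c.fp)
    recall = safe_div(c.tp, c.tp + c.fn)
    return {
        "accuracy": safe_div(c.tp + c.tn, c.tp + c.tn + c.fp + c.fn),
        "precision": precision,
        "recall": recall,
        "f1": safe_div(2 * precision * recall, precision + recall),
    }


# Toy labels (made up for illustration): 1 = loop, 0 = no loop.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 0, 1, 0]

counts = count_outcomes(y_true, y_pred)
metrics = summarize(counts)
print(counts)
print(metrics)
```

In practice, a library routine such as scikit-learn's `precision_recall_fscore_support` would normally replace the hand-written tally; the explicit loop is used here only to make the link to the four definitions visible.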

3. Results and Discussion

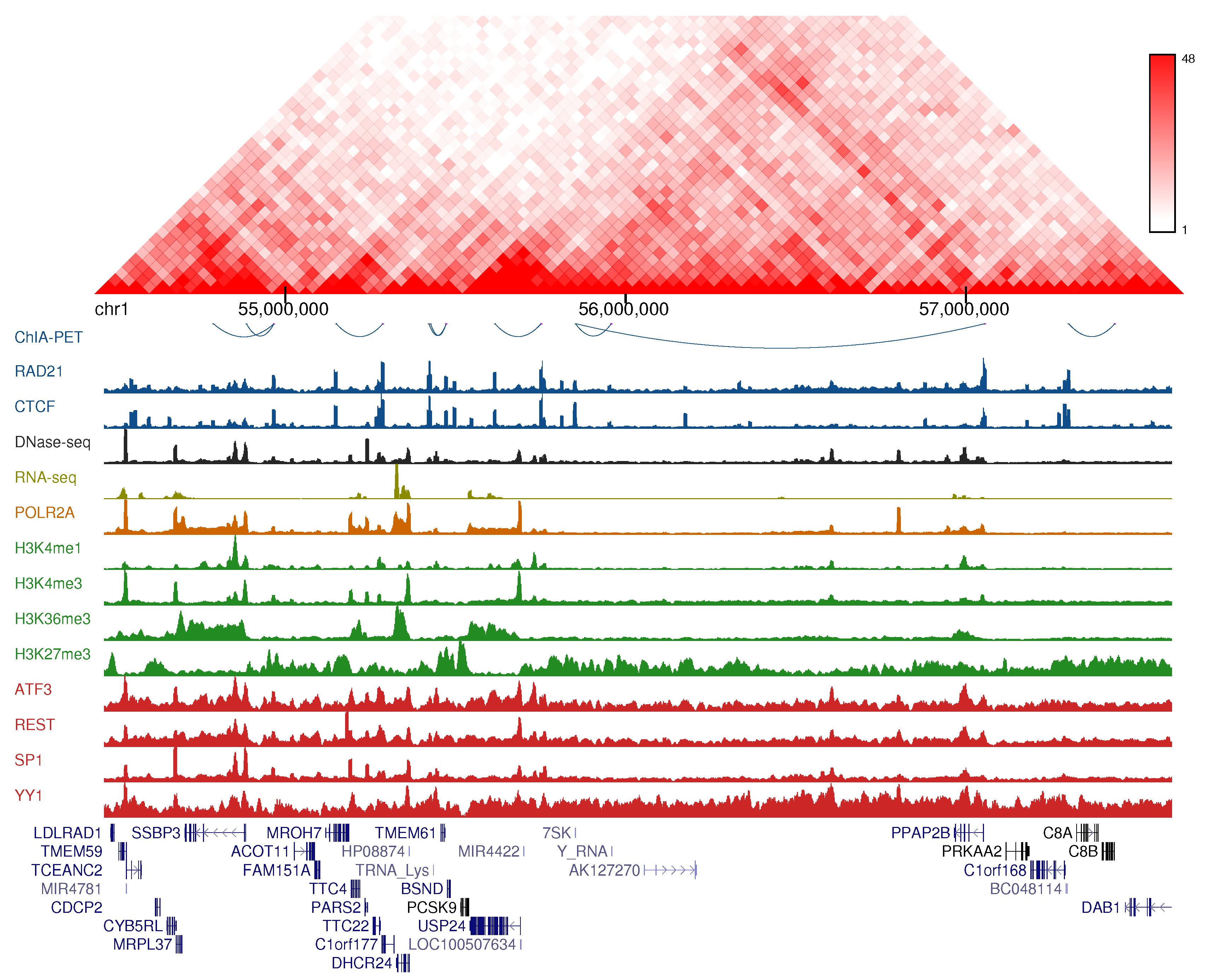

3.1. Association of Genomic and Epigenomic Features with Chromatin Loops

3.2. An Integrative Approach to Predict Chromatin Loops

3.3. Model Performance

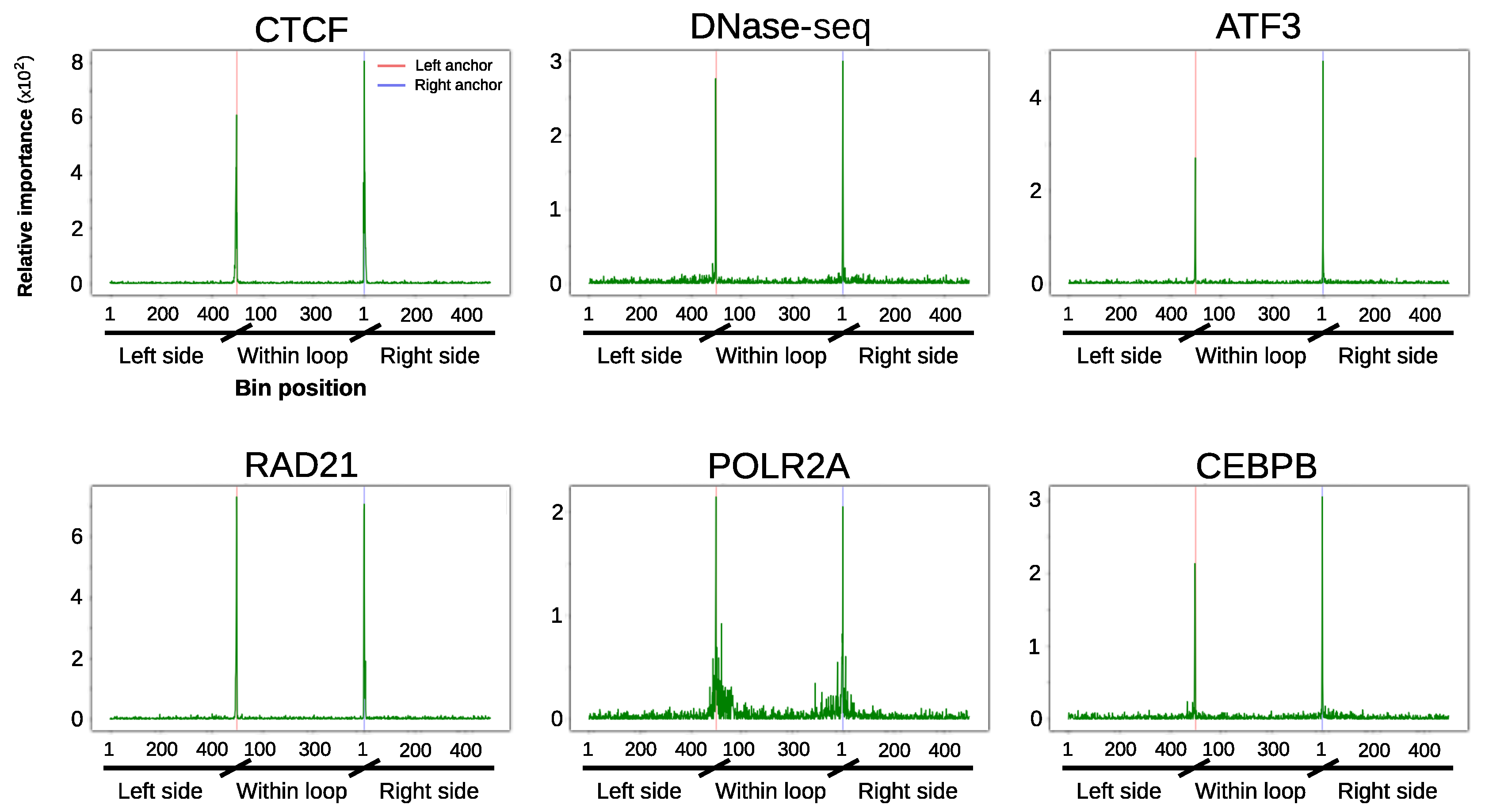

3.4. Loop Anchors Are the Most Informative Regions

3.5. Removing Architectural Features Has a Modest Effect on XGBoost Performance for K562

3.6. XGBoost Models Achieve Accurate Predictions When Trained with Subsets of Chromatin Features

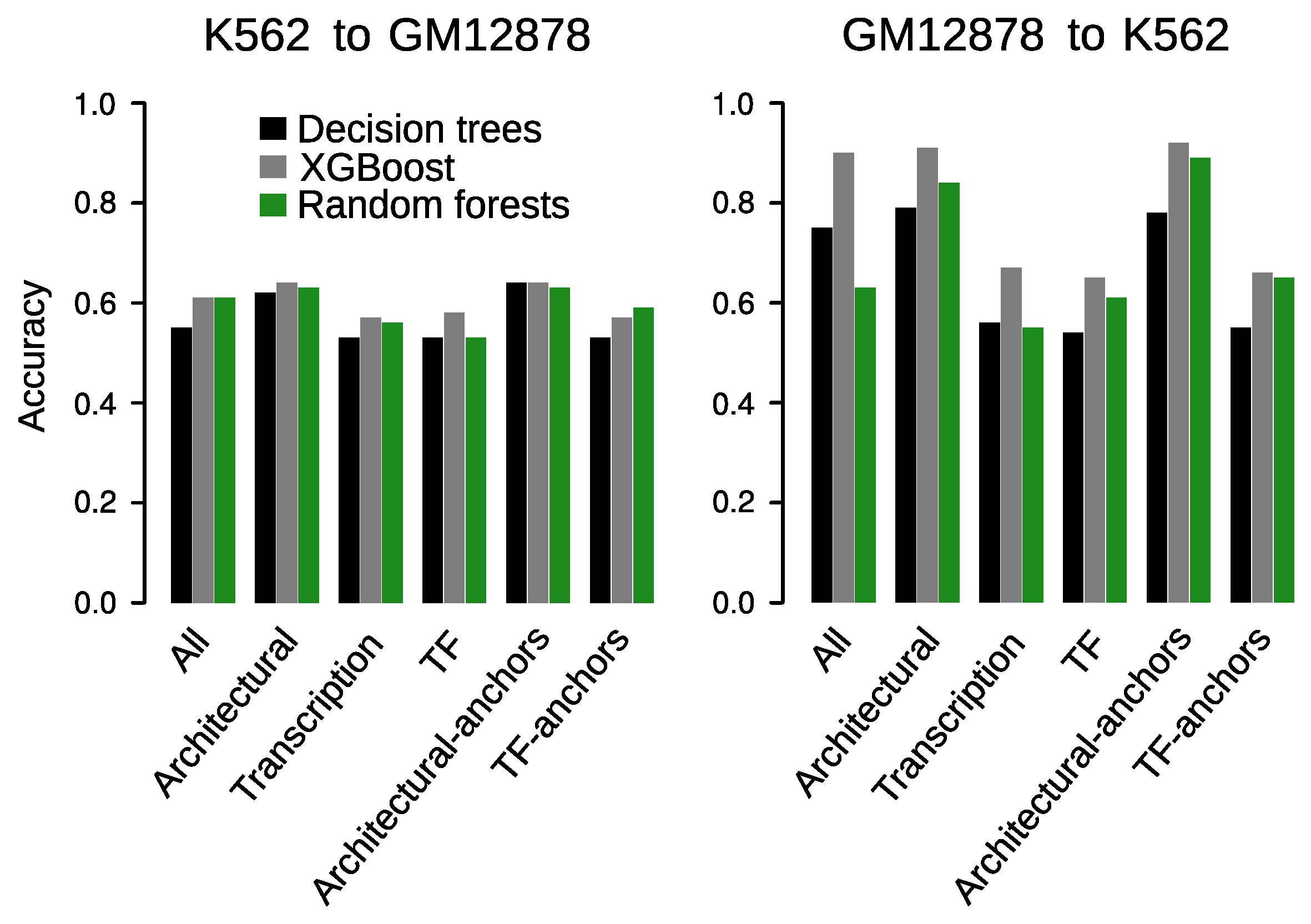

3.7. Prediction across Cell Types

4. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Algorithm | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| Decision Trees | 0.8698 | 0.8707 | 0.8699 | 0.8698 |
| Random Forests | 0.8424 | 0.8469 | 0.8425 | 0.8419 |
| XGBoost | 0.9634 | 0.9638 | 0.9635 | 0.9635 |
| SVM | 0.8219 | 0.8224 | 0.8219 | 0.8218 |
| MLP | 0.8226 | 0.8231 | 0.8227 | 0.8226 |
| Deep learning ANN | 0.7930 | 0.7944 | 0.7930 | 0.7928 |

| Algorithm | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| Decision Trees | 0.8313 | 0.8314 | 0.8313 | 0.8313 |
| Random Forests | 0.8262 | 0.8284 | 0.8263 | 0.8261 |
| XGBoost | 0.9474 | 0.9485 | 0.9475 | 0.9474 |
| SVM | 0.8087 | 0.8088 | 0.8088 | 0.8087 |
| MLP | 0.8322 | 0.8328 | 0.8323 | 0.8322 |
| Deep learning ANN | 0.8064 | 0.8065 | 0.8065 | 0.8065 |

| Algorithm | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| Decision Trees | 0.8257 | 0.8259 | 0.8257 | 0.8257 |
| Random Forests | 0.7846 | 0.7877 | 0.7846 | 0.7840 |
| XGBoost | 0.9467 | 0.9469 | 0.9467 | 0.9467 |
| SVM | 0.7264 | 0.7264 | 0.7237 | 0.7228 |
| MLP | 0.7168 | 0.7202 | 0.7169 | 0.7157 |
| Deep learning ANN | 0.6872 | 0.6873 | 0.6872 | 0.6871 |

| Algorithm | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| Decision Trees | 0.6806 | 0.6817 | 0.6806 | 0.6805 |
| Random Forests | 0.7626 | 0.7648 | 0.7627 | 0.7624 |
| XGBoost | 0.8327 | 0.8334 | 0.8327 | 0.8327 |
| SVM | 0.7046 | 0.7068 | 0.7046 | 0.7042 |
| MLP | 0.7018 | 0.7061 | 0.7018 | 0.7008 |
| Deep learning ANN | 0.6608 | 0.6613 | 0.6608 | 0.6608 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Vanhaeren, T.; Divina, F.; García-Torres, M.; Gómez-Vela, F.; Vanhoof, W.; Martínez-García, P.M. A Comparative Study of Supervised Machine Learning Algorithms for the Prediction of Long-Range Chromatin Interactions. Genes 2020, 11, 985. https://doi.org/10.3390/genes11090985