REU-YOLO: A Context-Aware UAV-Based Rice Ear Detection Model for Complex Field Scenes

Abstract

1. Introduction

2. Materials and Methods

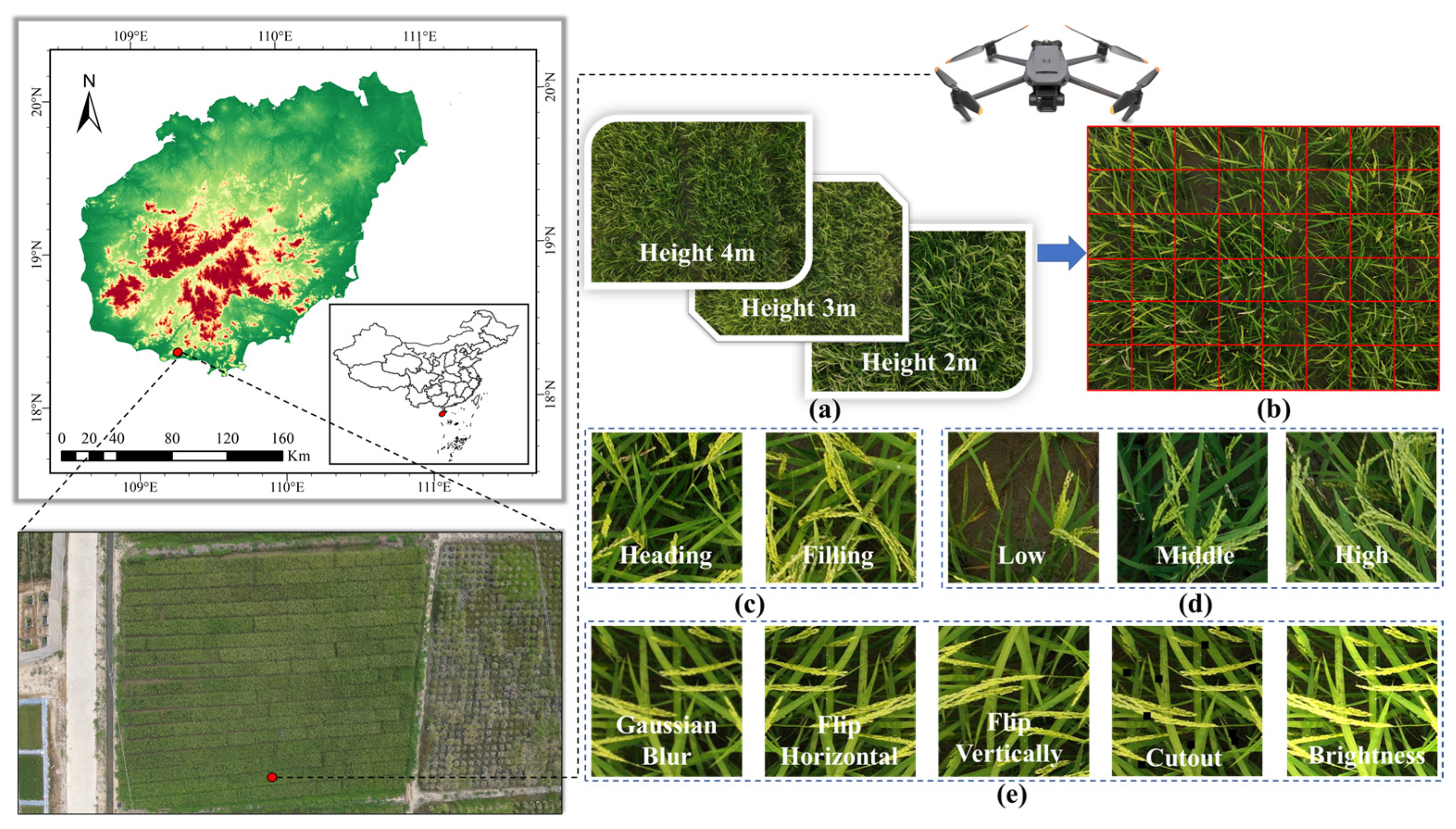

2.1. Field Data Collection

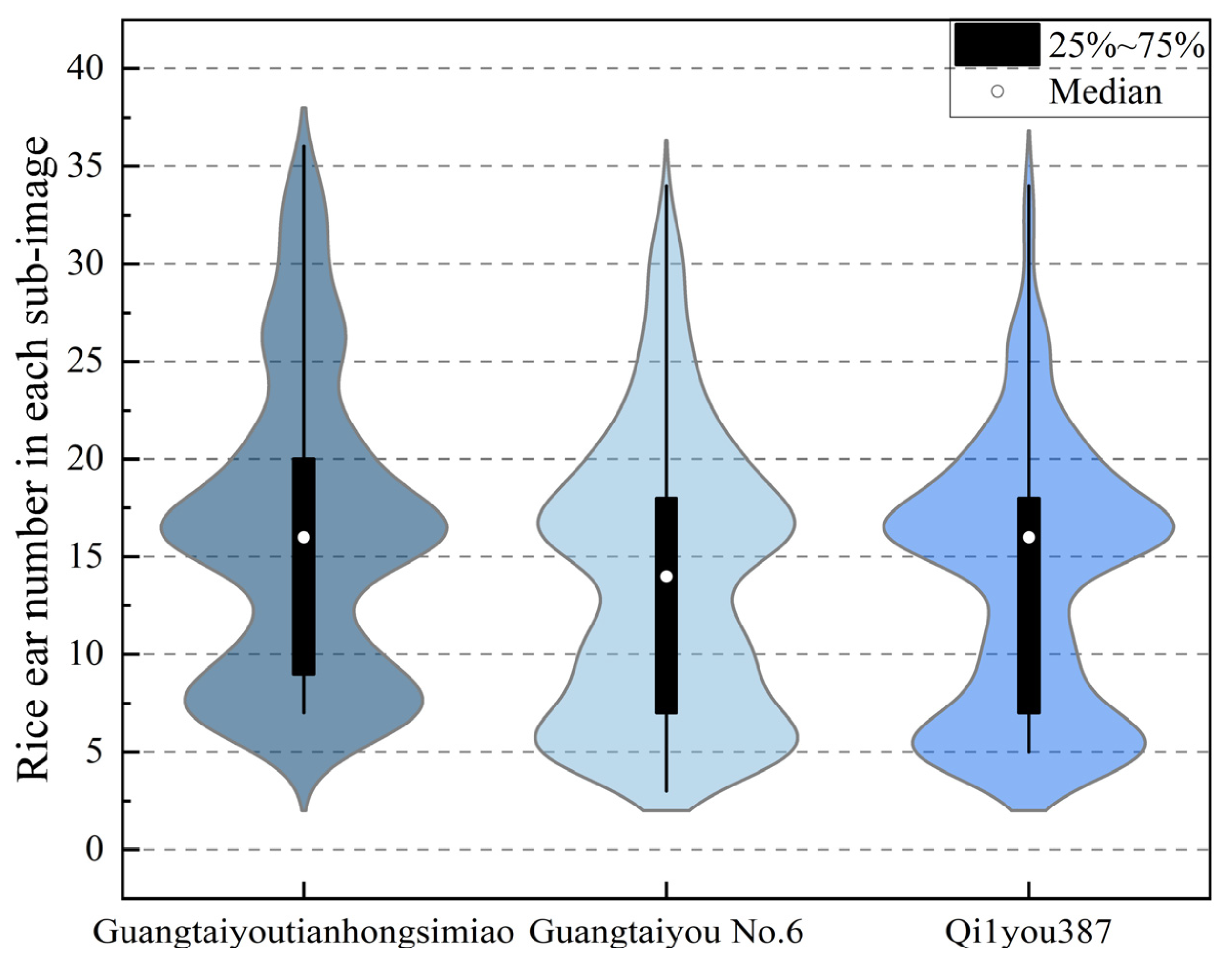

2.2. UAVR Dataset

2.2.1. Field Data Processing

2.2.2. Data Augmentation

2.3. Other Datasets

2.4. YOLOv8 Algorithm Principle

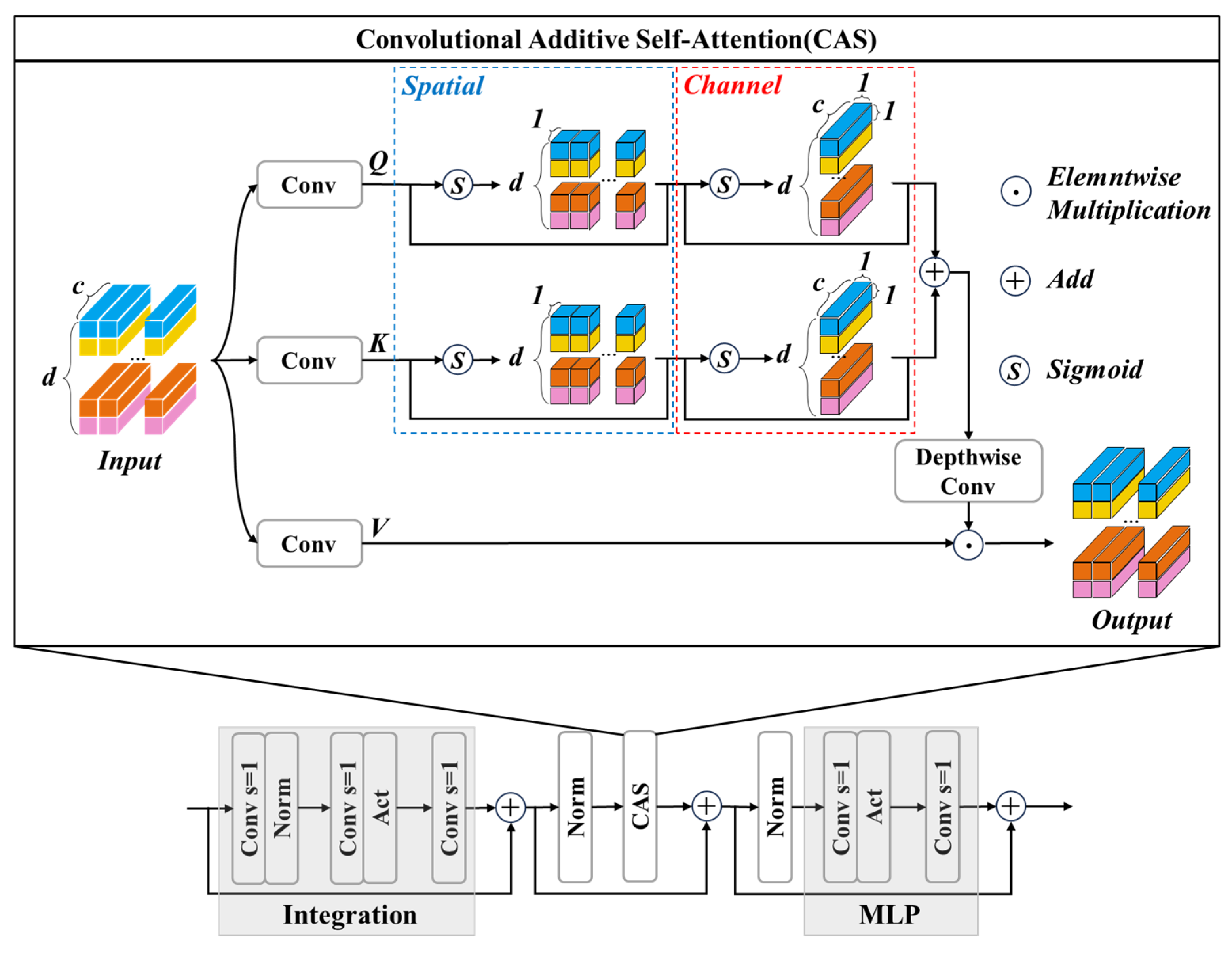

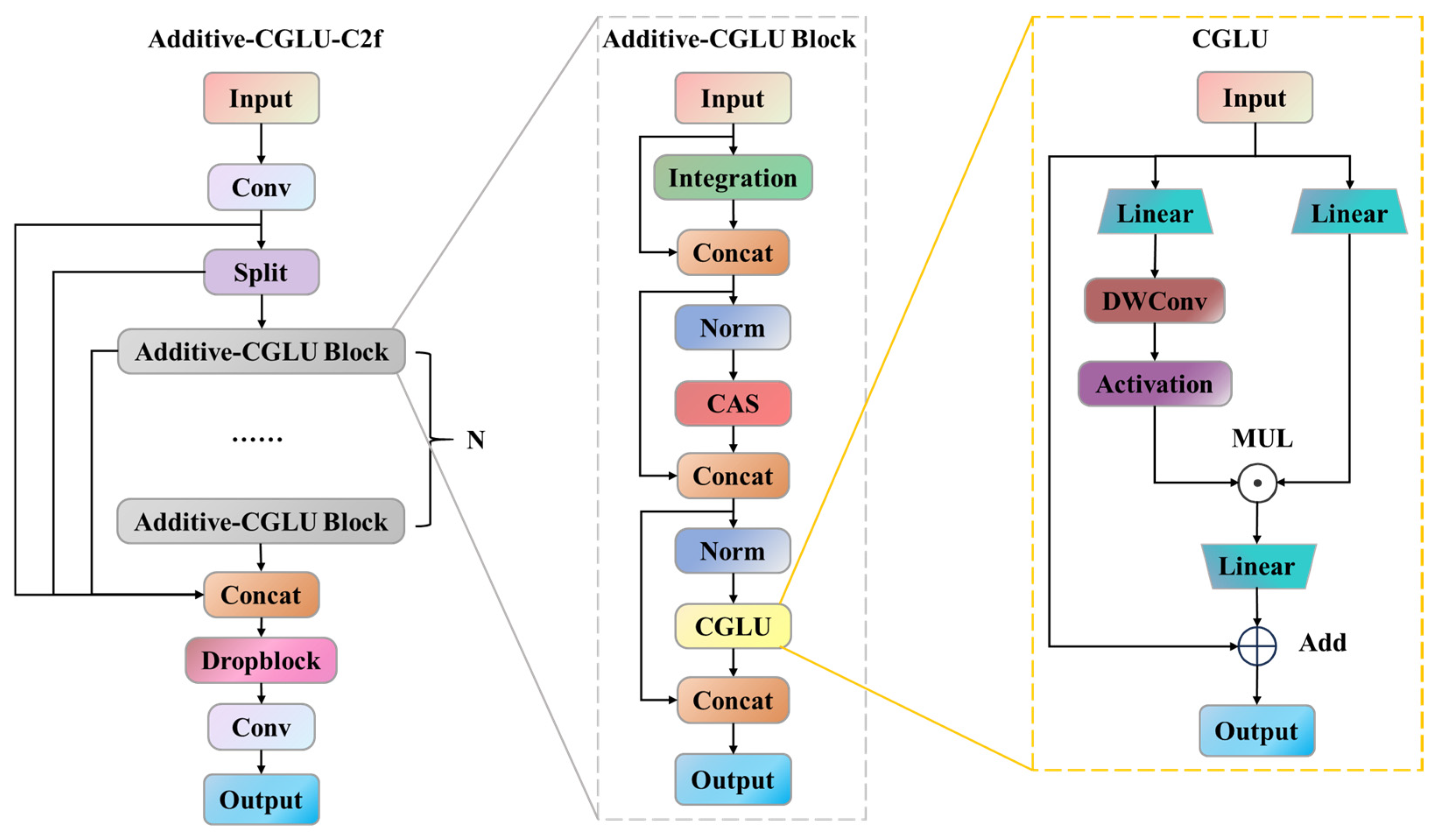
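For reference, the YOLOv8 baseline, as published in the Ultralytics implementation, uses an anchor-free head. At every grid point it predicts, for each of the four box sides, a distribution over discrete distance bins (16 by default, trained with distribution focal loss) and takes the expectation of that distribution as the side distance. The sketch below reproduces only this decoding step in plain Python. The function names are hypothetical, and the 16-bin default and the 0.5 cell-centre offset come from the public Ultralytics code, not from this paper.

```python
import math

REG_MAX = 16  # number of distance bins per box side (Ultralytics default, assumed)

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def expected_distance(bin_logits):
    """Expectation of the discrete distance distribution, in grid-cell units."""
    return sum(i * p for i, p in enumerate(softmax(bin_logits)))

def decode_box(anchor_x, anchor_y, side_logits, stride):
    """Turn one anchor-free prediction into an (x1, y1, x2, y2) box in input-image pixels.

    anchor_x, anchor_y: anchor point in grid units (cell centre, i.e. column/row + 0.5).
    side_logits: four lists of REG_MAX bin logits for the left, top, right, bottom distances.
    stride: downsampling factor of the feature map (e.g. 8, 16 or 32).
    """
    left, top, right, bottom = (expected_distance(s) for s in side_logits)
    return (
        (anchor_x - left) * stride,
        (anchor_y - top) * stride,
        (anchor_x + right) * stride,
        (anchor_y + bottom) * stride,
    )
```

A sharply peaked distribution at bin 2 on every side, at anchor (10.5, 10.5) on a stride-8 map, decodes to a box of about (68, 68, 100, 100).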

2.5. Improvement of YOLOv8

2.5.1. Improved Feature Extraction Module AC-C2f

2.5.2. Spatial Pyramid Pooling with Cross Stage Partial Convolutions

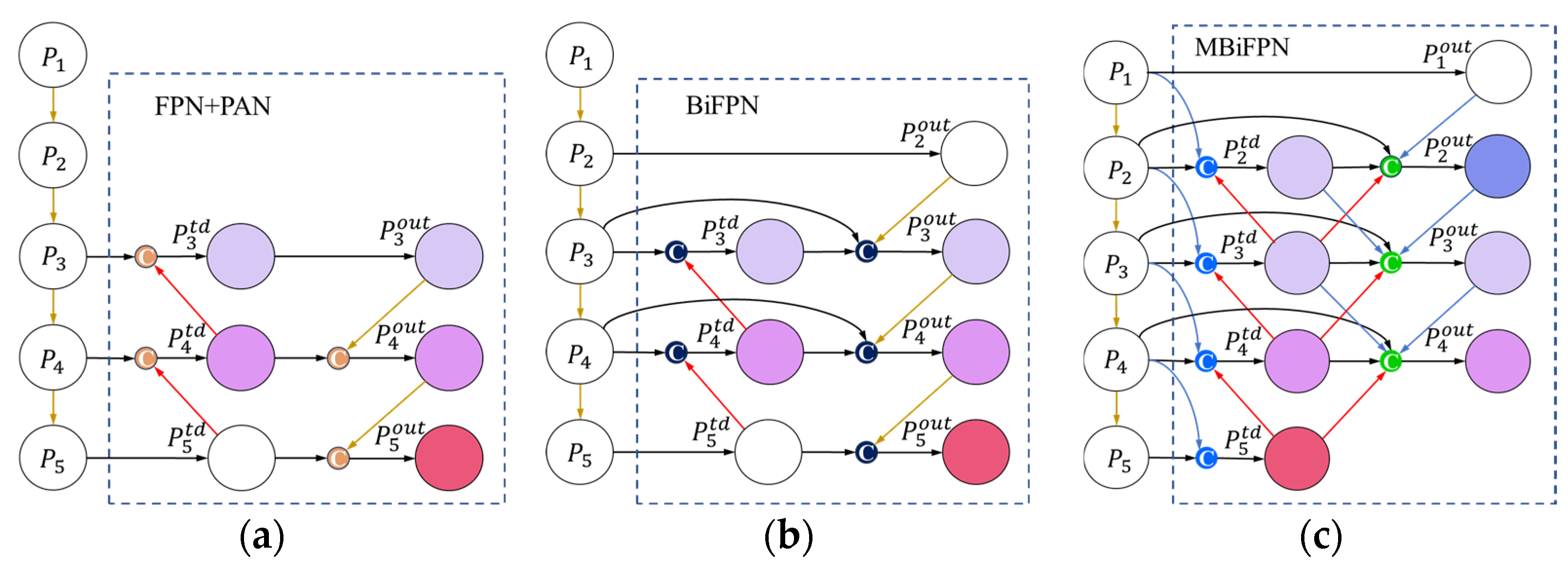

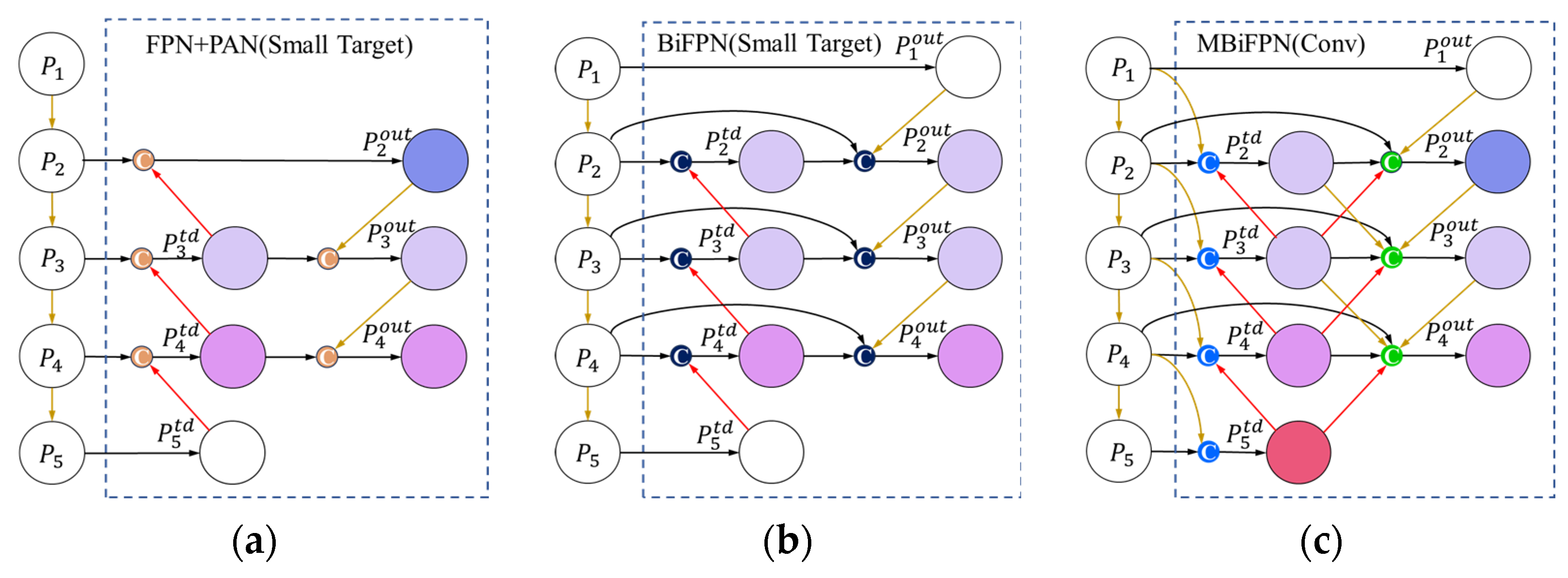
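As background to the SPPFCSPC_G module listed in the ablation experiments, the sketch below illustrates only the SPPF pooling trick that such modules build on (introduced in YOLOv5): three sequential 5×5, stride-1 max pools reproduce the 5×5, 9×9, and 13×13 receptive fields of the original parallel SPP branches while reusing intermediate results. The cross-stage-partial split, the convolutions, and any other details of the authors' module are deliberately omitted. Plain Python lists stand in for single-channel feature maps, and the function names are hypothetical.

```python
NEG_INF = float("-inf")

def max_pool_same(grid, k):
    """Stride-1 k x k max pooling with -inf padding, so the output size equals the input size."""
    r = k // 2
    h, w = len(grid), len(grid[0])
    out = []
    for i in range(h):
        row = []
        for j in range(w):
            best = NEG_INF
            for di in range(-r, r + 1):
                for dj in range(-r, r + 1):
                    y, x = i + di, j + dj
                    if 0 <= y < h and 0 <= x < w:
                        best = max(best, grid[y][x])
            row.append(best)
        out.append(row)
    return out

def sppf_branches(grid, k=5):
    """SPPF style: the same k x k pool applied three times in sequence, keeping every stage."""
    p1 = max_pool_same(grid, k)
    p2 = max_pool_same(p1, k)
    p3 = max_pool_same(p2, k)
    return [grid, p1, p2, p3]  # in a real network these maps are concatenated along channels

def spp_branches(grid):
    """Classic SPP style: parallel 5x5, 9x9 and 13x13 pools applied to the input."""
    return [grid] + [max_pool_same(grid, k) for k in (5, 9, 13)]
```

Both functions return identical maps for any input; the sequential form simply needs fewer comparisons because each stage reuses the previous one.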

2.5.3. Multi-Branch Bidirectional Feature Pyramid Network

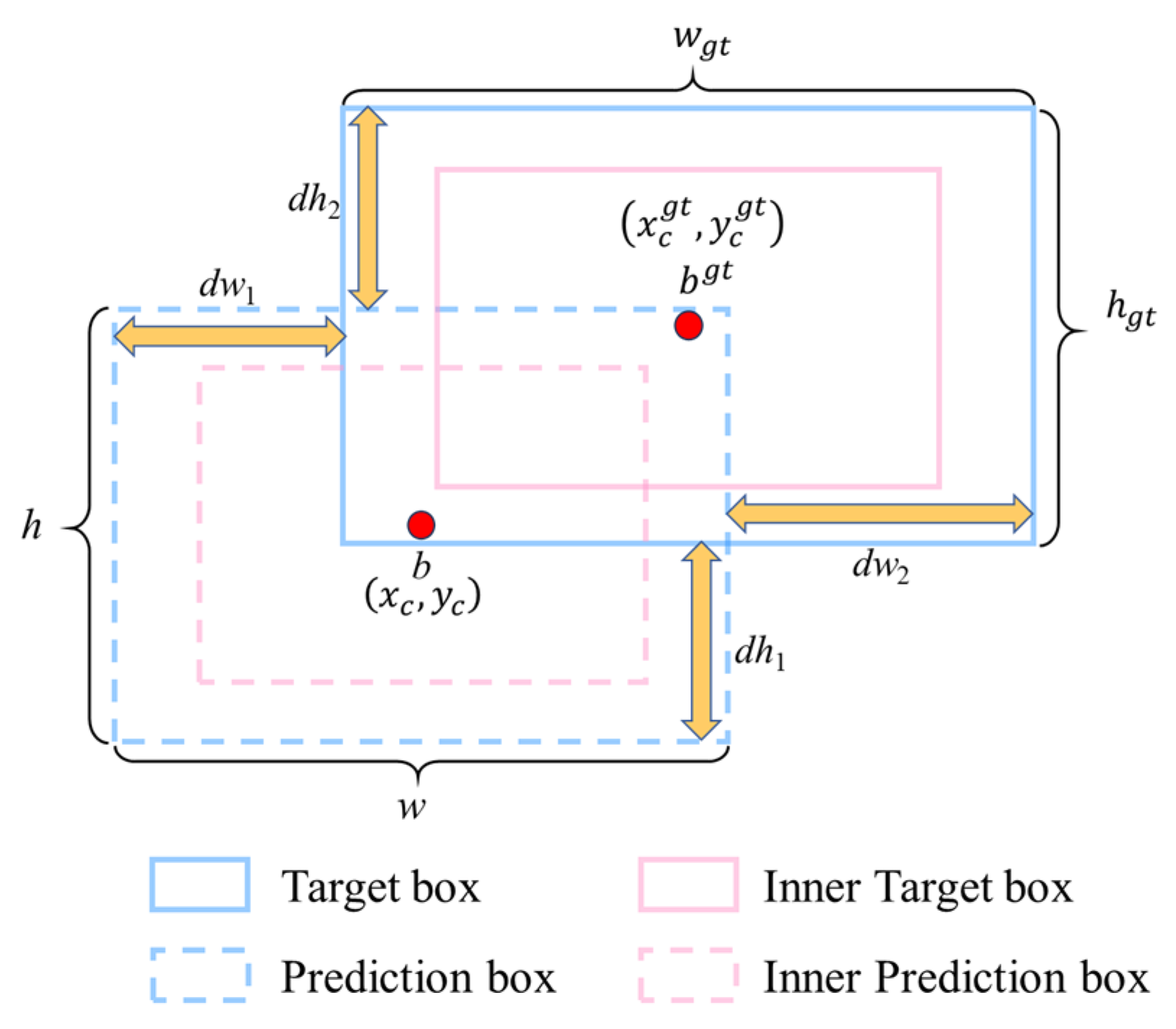
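MBiFPN builds on BiFPN from EfficientDet (Tan et al., 2020), whose distinguishing operation is "fast normalized fusion": each input to a fusion node gets a learnable non-negative weight, and the output is O = Σ w_i·I_i / (ε + Σ w_j). Whether MBiFPN keeps exactly this fusion rule is an assumption here. The sketch shows the rule on flattened feature maps that are assumed to be already resized to a common resolution and channel count; the function name is hypothetical.

```python
def fast_normalized_fusion(features, raw_weights, eps=1e-4):
    """BiFPN-style fusion: O = sum_i w_i * I_i / (eps + sum_j w_j), with w_i = ReLU(raw_w_i).

    features: list of equally sized feature maps, each flattened to a list of floats.
    raw_weights: one learnable scalar per input feature map.
    """
    if len(features) != len(raw_weights):
        raise ValueError("need one weight per input feature map")
    w = [max(0.0, v) for v in raw_weights]  # ReLU keeps every weight non-negative
    denom = eps + sum(w)
    size = len(features[0])
    return [
        sum(w[i] * features[i][k] for i in range(len(features))) / denom
        for k in range(size)
    ]
```

Compared with a softmax over the weights, this normalisation avoids exponentials while still bounding the output roughly within the range of the inputs.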

2.5.4. Inner-PIoU Loss Function
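The Inner-PIoU loss combines the auxiliary-box idea of Inner-IoU (Zhang et al., 2023) with Powerful-IoU (Liu et al., 2024). The sketch below follows the general Inner-IoU recipe L = L_base + IoU - IoU_inner, where IoU_inner is computed on copies of both boxes scaled about their centres by a ratio, and uses the first PIoU variant as L_base: 1 - IoU + (1 - exp(-P²)), with P the mean absolute edge offset normalised by the ground-truth width and height. Which PIoU variant and which scale ratio REU-YOLO actually uses are assumptions left as parameters; boxes are (cx, cy, w, h) tuples and all function names are hypothetical.

```python
import math

def _corners(box, ratio=1.0):
    """(cx, cy, w, h) -> (x1, y1, x2, y2), optionally scaling width and height by ratio about the centre."""
    cx, cy, w, h = box
    return (cx - w * ratio / 2, cy - h * ratio / 2, cx + w * ratio / 2, cy + h * ratio / 2)

def iou(pred, gt, ratio=1.0):
    """IoU of the two boxes; with ratio != 1 this is the Inner-IoU of the auxiliary boxes."""
    px1, py1, px2, py2 = _corners(pred, ratio)
    gx1, gy1, gx2, gy2 = _corners(gt, ratio)
    inter = max(0.0, min(px2, gx2) - max(px1, gx1)) * max(0.0, min(py2, gy2) - max(py1, gy1))
    union = pred[2] * pred[3] * ratio ** 2 + gt[2] * gt[3] * ratio ** 2 - inter
    return inter / union

def piou_loss(pred, gt):
    """PIoU (first variant, assumed): 1 - IoU plus penalty 1 - exp(-P^2)."""
    px1, py1, px2, py2 = _corners(pred)
    gx1, gy1, gx2, gy2 = _corners(gt)
    gw, gh = gt[2], gt[3]
    p = (abs(px1 - gx1) / gw + abs(px2 - gx2) / gw + abs(py1 - gy1) / gh + abs(py2 - gy2) / gh) / 4
    return 1.0 - iou(pred, gt) + 1.0 - math.exp(-p * p)

def inner_piou_loss(pred, gt, ratio):
    """Inner-IoU recipe applied to PIoU: L = L_PIoU + IoU - IoU_inner."""
    return piou_loss(pred, gt) + iou(pred, gt) - iou(pred, gt, ratio)
```

With ratio = 1 the loss reduces to plain PIoU; with ratio < 1 the auxiliary boxes are smaller, so the same offset lowers IoU_inner more and the loss grows, which sharpens the gradient for nearly aligned boxes.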

2.6. Evaluation Metrics
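The detection metrics reported in the tables are assumed to follow the standard definitions: precision P = TP / (TP + FP), recall R = TP / (TP + FN), and average precision (AP) as the area under the precision-recall curve. mAP0.5 uses an IoU matching threshold of 0.5, while mAP0.5:0.95 averages AP over thresholds from 0.50 to 0.95 in steps of 0.05; for a single object class, mAP equals that class's AP. The sketch below uses all-point interpolation; COCO-style evaluators interpolate at 101 recall points instead, so values can differ slightly. Function names are hypothetical.

```python
def precision_recall(tp, fp, fn):
    """Precision and recall from true-positive, false-positive and false-negative counts."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    return p, r

def average_precision(scores, is_tp, num_gt):
    """All-point interpolated AP for one class at one IoU threshold.

    scores: confidence of each detection.
    is_tp: whether each detection matched a not-yet-matched ground-truth box.
    num_gt: number of ground-truth boxes.
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for i in order:
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Replace each precision by the maximum precision at any higher recall (the envelope).
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    # Sum rectangle areas wherever recall increases.
    ap, prev_r = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_r) * p
        prev_r = r
    return ap
```

For three detections ranked TP, FP, TP against two ground-truth boxes, the envelope gives AP = 0.5 × 1 + 0.5 × 2/3 ≈ 0.833.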

3. Results

3.1. Experimental Environment and Parameters

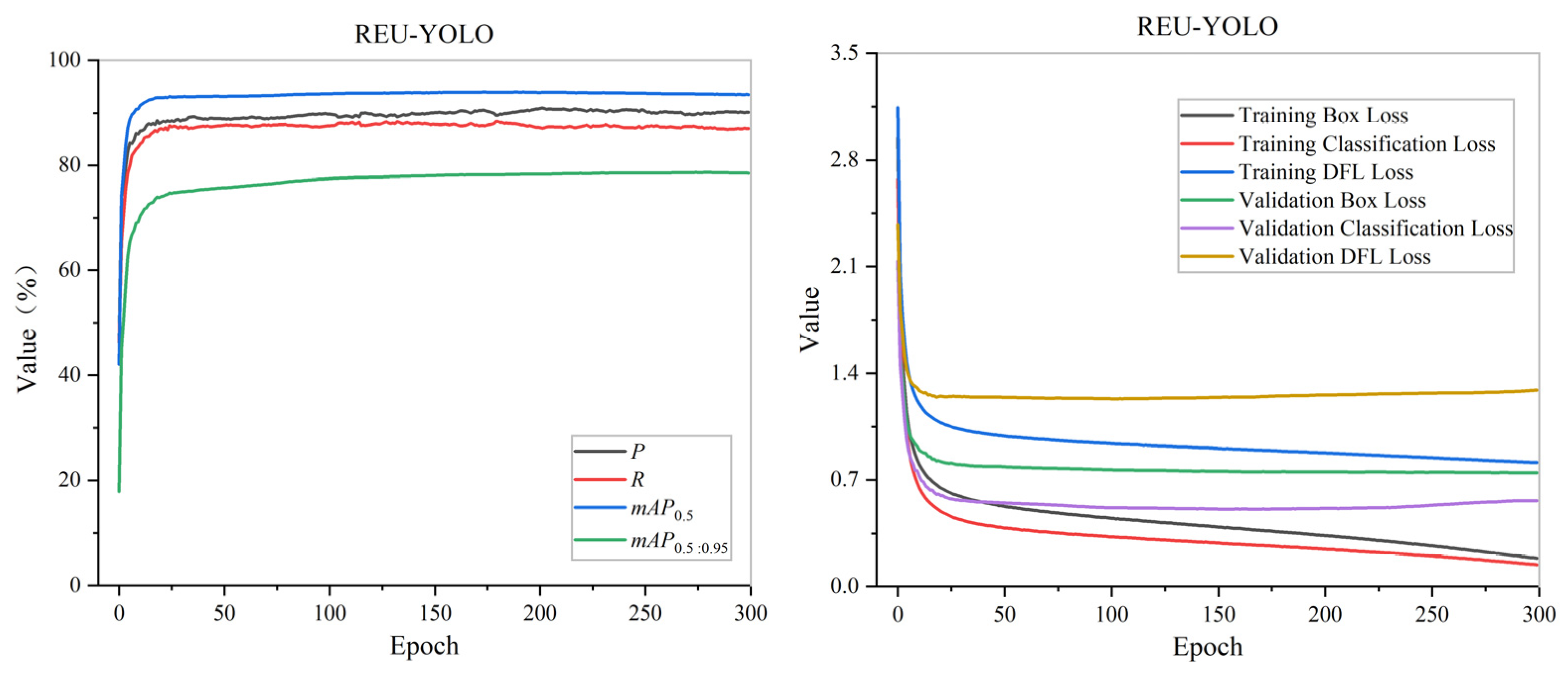

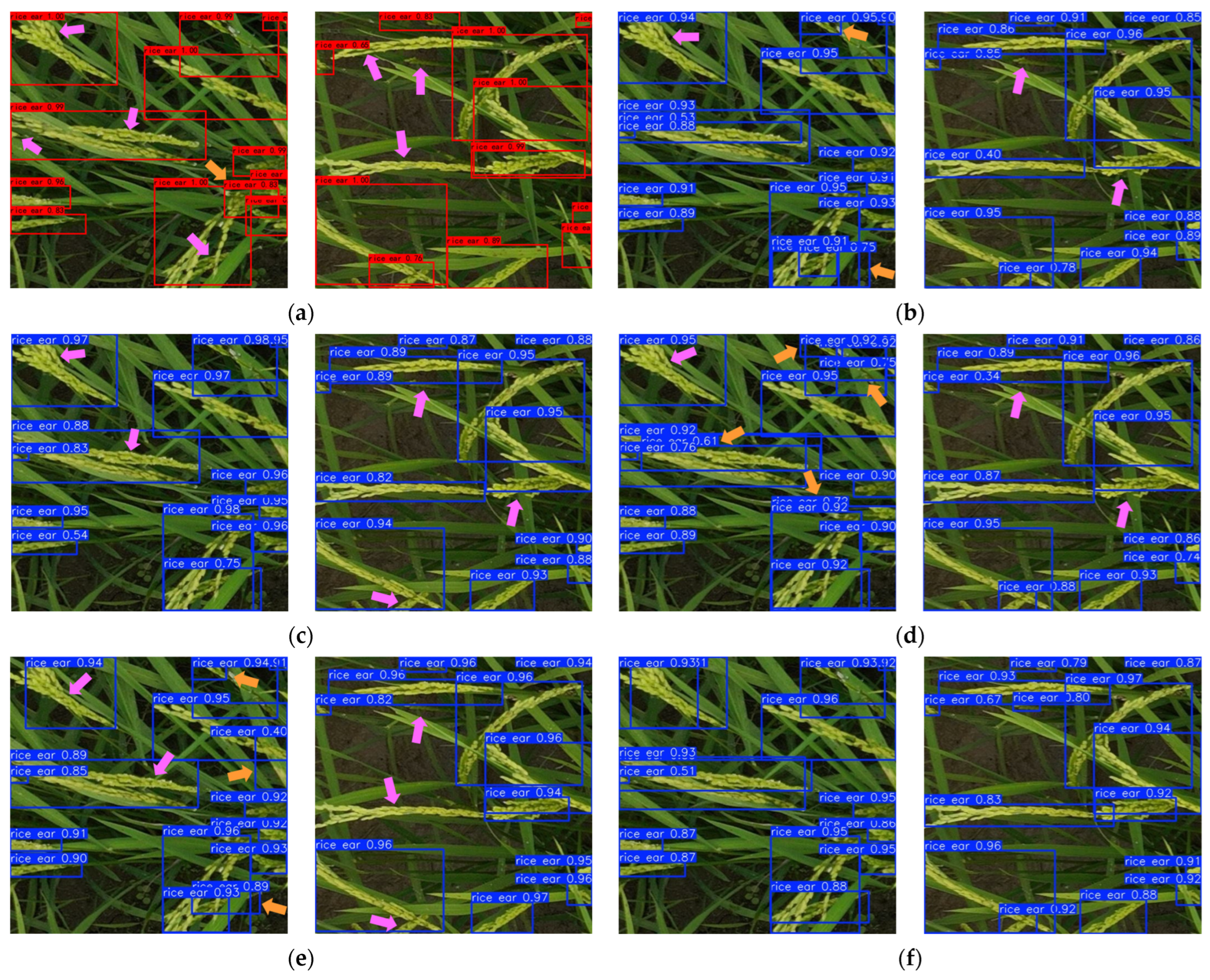

3.2. Experiments on UAVR Dataset

3.2.1. Analysis of MBiFPN Performance

3.2.2. Ablation Experiments

3.2.3. Comparison Experiments with Different Detection Models

3.3. Experiments on Other Datasets

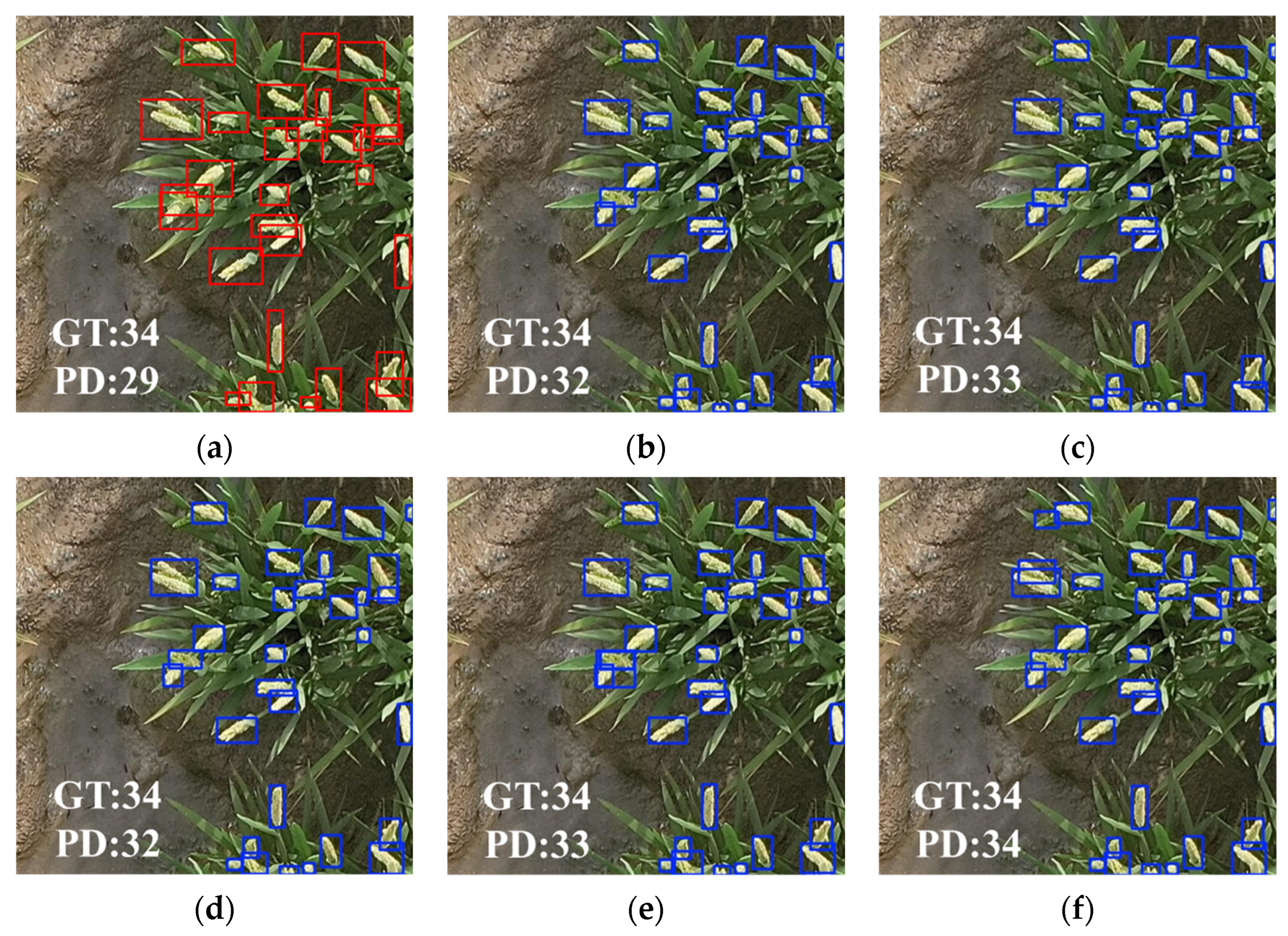

3.3.1. Experiments on DRPD Dataset

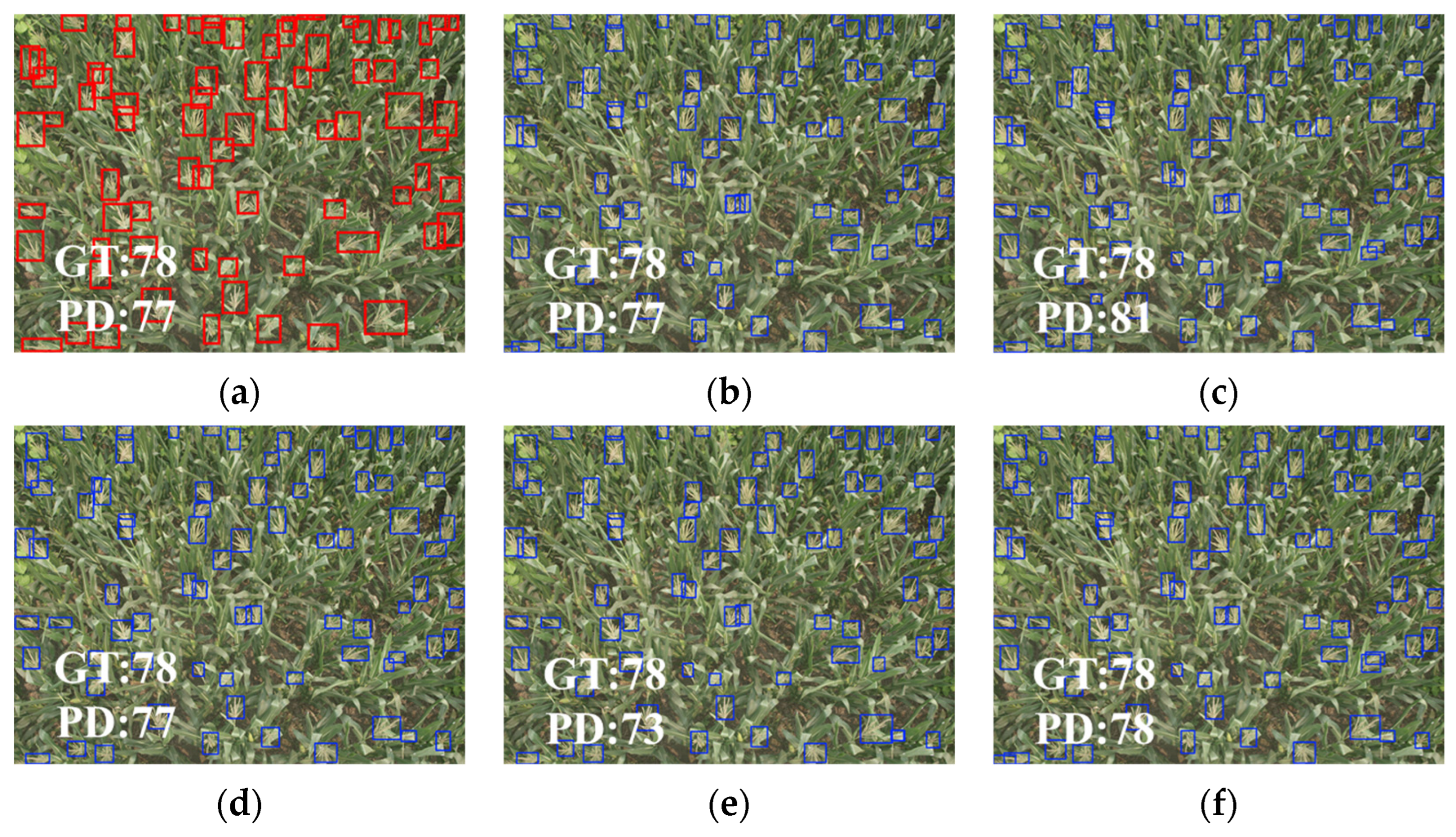

3.3.2. Experiments on MrMT Dataset

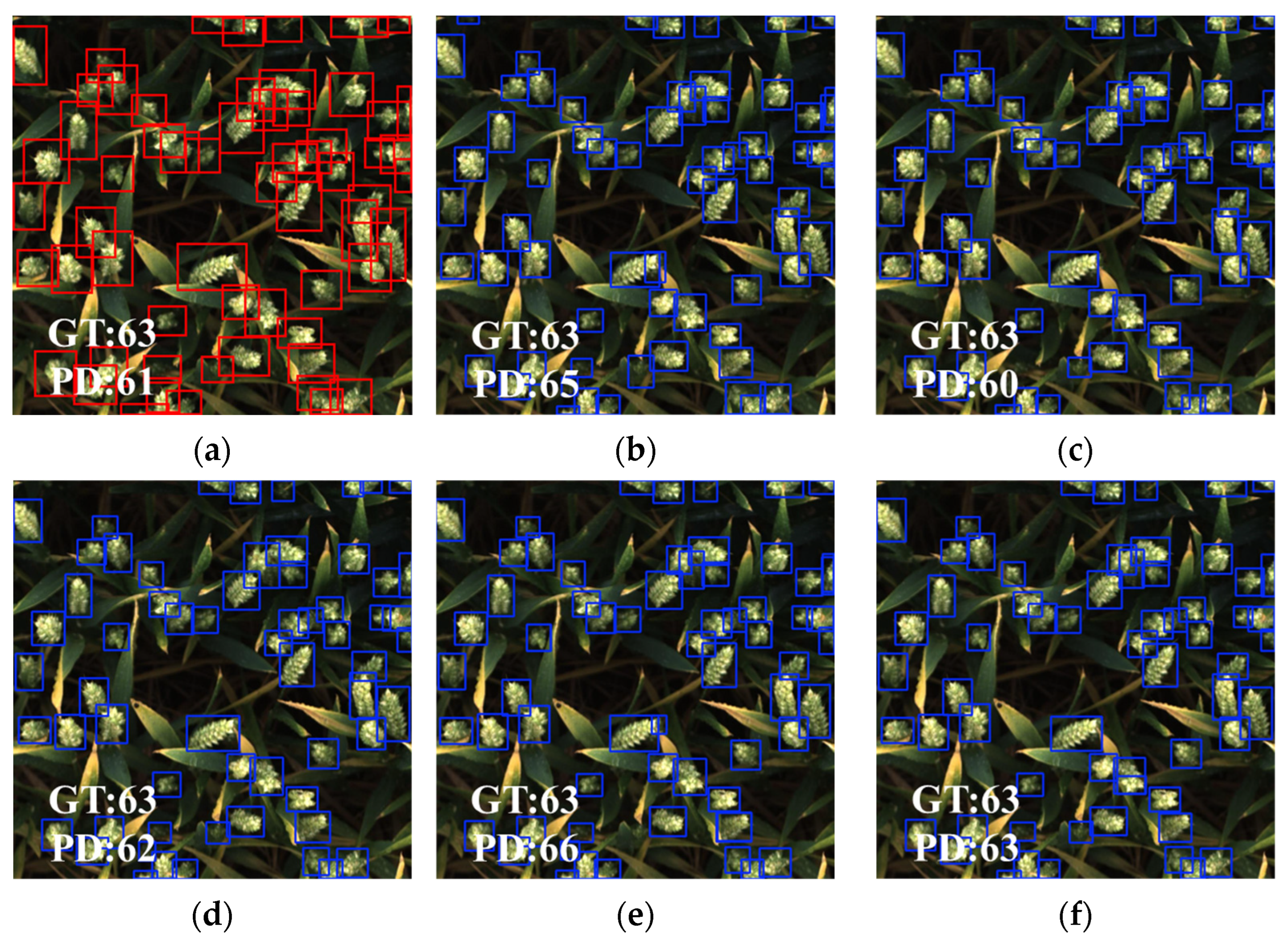

3.3.3. Experiments on GWHD Dataset

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Model | Feature Fusion Network | mAP0.5 (%) | mAP0.5:0.95 (%) | Params (M) | FLOPs (G) | Model Size (MB) |
|---|---|---|---|---|---|---|
| 1 | FPN + PAN (Figure 7a) | 93.21 | 77.33 | 10.45 | 24.50 | 20.40 |
| 2 | FPN + PAN, small-target version (Figure 10a) | 93.36 | 78.10 | 7.26 | 29.70 | 14.48 |
| 3 | BiFPN (Figure 7b) | 93.22 | 77.40 | 10.66 | 26.50 | 20.84 |
| 4 | BiFPN, small-target version (Figure 10b) | 93.51 | 78.22 | 7.38 | 32.00 | 14.74 |
| 5 | MBiFPN with Conv (Figure 10c) | 93.66 | 78.45 | 8.29 | 34.30 | 16.52 |
| 6 | MBiFPN (Figure 7c) | 93.61 | 78.68 | 7.76 | 32.30 | 15.50 |
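To make the trade-off in the feature-fusion table explicit, the short script below recomputes row 6 (MBiFPN) against row 1 (FPN + PAN) using only the reported numbers: accuracy changes in percentage points and cost changes relative to the baseline. The dictionaries copy the table; the helper name is hypothetical.

```python
# Rows 1 (FPN + PAN) and 6 (MBiFPN) copied from the feature-fusion comparison table.
fpn_pan = {"map50": 93.21, "map50_95": 77.33, "params_m": 10.45, "flops_g": 24.50, "size_mb": 20.40}
mbifpn = {"map50": 93.61, "map50_95": 78.68, "params_m": 7.76, "flops_g": 32.30, "size_mb": 15.50}

def compare(base, new):
    """Accuracy deltas in percentage points; cost deltas as a relative % of the baseline."""
    report = {}
    for key in ("map50", "map50_95"):
        report[key + "_pts"] = round(new[key] - base[key], 2)
    for key in ("params_m", "flops_g", "size_mb"):
        report[key + "_rel_pct"] = round(100.0 * (new[key] - base[key]) / base[key], 1)
    return report

for name, value in compare(fpn_pan, mbifpn).items():
    print(f"{name}: {value:+}")
```

The numbers show that MBiFPN gains 1.35 points of mAP0.5:0.95 with about 25.7% fewer parameters and a 24.0% smaller file, but at roughly 31.8% more FLOPs than the standard FPN + PAN neck.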

| Model | AC-C2f | SPPFCSPC_G | MBiFPN | Inner-PIoU | P (%) | R (%) | mAP0.5 (%) | mAP0.5:0.95 (%) |
|---|---|---|---|---|---|---|---|---|
| YOLOv8s | × | × | × | × | 85.75 | 83.41 | 88.76 | 70.41 |
| Improvement 1 | √ | × | × | × | 89.02 | 86.75 | 92.79 | 75.63 |
| Improvement 2 | √ | √ | × | × | 89.50 | 86.50 | 93.06 | 77.29 |
| Improvement 3 | √ | √ | √ | × | 90.08 | 86.89 | 93.47 | 78.18 |
| Improvement 4 | √ | √ | √ | √ | 89.97 | 87.17 | 93.61 | 78.68 |
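Because each row of the ablation table adds one module to the previous configuration, the contribution of each module can be read off as a step difference. The snippet below computes those steps from the reported mAP values; the list copies the table and the function name is hypothetical.

```python
# (configuration, mAP0.5, mAP0.5:0.95) copied from the ablation table.
ABLATION = [
    ("YOLOv8s", 88.76, 70.41),
    ("+ AC-C2f", 92.79, 75.63),
    ("+ SPPFCSPC_G", 93.06, 77.29),
    ("+ MBiFPN", 93.47, 78.18),
    ("+ Inner-PIoU", 93.61, 78.68),
]

def step_gains(rows):
    """Gain in percentage points contributed by each row over the row before it."""
    return [
        (name, round(m50 - prev[1], 2), round(m5095 - prev[2], 2))
        for prev, (name, m50, m5095) in zip(rows, rows[1:])
    ]

gains = step_gains(ABLATION)
for name, d50, d5095 in gains:
    print(f"{name:<14} mAP0.5 {d50:+.2f}  mAP0.5:0.95 {d5095:+.2f}")
```

AC-C2f accounts for 5.22 of the total 8.27-point gain in mAP0.5:0.95, with the remaining modules adding 1.66, 0.89, and 0.50 points. The table also shows that the Inner-PIoU step slightly lowers precision (90.08% to 89.97%) while raising recall (86.89% to 87.17%).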

| Model | P (%) | R (%) | mAP0.5 (%) | mAP0.5:0.95 (%) | R² | MAE | RMSE |
|---|---|---|---|---|---|---|---|
| SSD | 70.80 | 62.11 | 84.26 | 49.00 | 0.8926 | 1.14 | 1.57 |
| YOLOv5s | 86.11 | 81.82 | 88.18 | 69.13 | 0.9143 | 0.95 | 1.41 |
| YOLOv8s | 85.75 | 83.41 | 88.76 | 70.41 | 0.9225 | 0.90 | 1.34 |
| YOLOv9s | 87.51 | 85.49 | 90.33 | 72.85 | 0.9395 | 0.78 | 1.18 |
| YOLOv10s | 87.93 | 81.50 | 89.26 | 72.31 | 0.9117 | 0.97 | 1.43 |
| REU-YOLO | 89.97 | 87.17 | 93.61 | 78.68 | 0.9502 | 0.68 | 1.07 |
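The counting columns (R², MAE, RMSE) compare, image by image, the number of rice ears a model detects with the manually annotated count. The sketch below assumes R² is the coefficient of determination, 1 - SS_res / SS_tot; some studies instead report the squared Pearson correlation, which can differ. The counts in the usage example are synthetic and the function name is hypothetical.

```python
import math

def counting_metrics(true_counts, pred_counts):
    """R^2 (coefficient of determination), MAE and RMSE between per-image ear counts."""
    n = len(true_counts)
    errors = [p - t for t, p in zip(true_counts, pred_counts)]
    mae = sum(abs(e) for e in errors) / n
    ss_res = sum(e * e for e in errors)
    rmse = math.sqrt(ss_res / n)
    mean_t = sum(true_counts) / n
    ss_tot = sum((t - mean_t) ** 2 for t in true_counts)
    r2 = 1.0 - ss_res / ss_tot
    return r2, mae, rmse

# Synthetic example: four images with 10, 20, 30 and 40 annotated ears.
r2, mae, rmse = counting_metrics([10, 20, 30, 40], [12, 18, 33, 39])
print(f"R2={r2:.3f}  MAE={mae:.2f}  RMSE={rmse:.2f}")
```

RMSE is never smaller than MAE because squaring weights large per-image errors more heavily, which is consistent with every row of the comparison tables.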

| Model | P (%) | R (%) | mAP0.5 (%) | mAP0.5:0.95 (%) | R² | MAE | RMSE |
|---|---|---|---|---|---|---|---|
| SSD | 66.70 | 58.67 | 77.90 | 32.60 | 0.8828 | 2.92 | 3.83 |
| YOLOv5s | 87.25 | 83.23 | 89.21 | 55.06 | 0.9247 | 2.39 | 3.03 |
| YOLOv8s | 88.33 | 81.98 | 88.98 | 55.34 | 0.9183 | 2.42 | 3.16 |
| YOLOv9s | 85.63 | 80.68 | 88.03 | 55.14 | 0.9071 | 2.50 | 3.32 |
| YOLOv10s | 87.02 | 79.67 | 87.14 | 53.95 | 0.9068 | 2.60 | 3.34 |
| REU-YOLO | 87.24 | 85.31 | 90.06 | 56.72 | 0.9271 | 2.33 | 2.94 |

| Model | P (%) | R (%) | mAP0.5 (%) | mAP0.5:0.95 (%) | R² | MAE | RMSE |
|---|---|---|---|---|---|---|---|
| SSD | 64.18 | 54.94 | 82.40 | 34.30 | 0.9761 | 3.34 | 4.56 |
| YOLOv5s | 94.17 | 91.77 | 95.73 | 58.17 | 0.9851 | 2.65 | 3.63 |
| YOLOv8s | 93.78 | 92.41 | 96.23 | 58.88 | 0.9834 | 3.04 | 4.04 |
| YOLOv9s | 94.10 | 91.82 | 95.78 | 58.23 | 0.9845 | 2.97 | 3.92 |
| YOLOv10s | 92.51 | 90.77 | 95.05 | 57.76 | 0.9838 | 2.75 | 3.72 |
| REU-YOLO | 93.90 | 93.17 | 96.34 | 58.98 | 0.9902 | 2.35 | 3.08 |

| Model | P (%) | R (%) | mAP0.5 (%) | mAP0.5:0.95 (%) | R² | MAE | RMSE |
|---|---|---|---|---|---|---|---|
| SSD | 64.18 | 54.94 | 85.60 | 38.20 | 0.9280 | 3.66 | 4.89 |
| YOLOv5s | 90.25 | 85.35 | 91.30 | 50.48 | 0.9519 | 2.94 | 3.89 |
| YOLOv8s | 90.92 | 85.20 | 91.78 | 50.91 | 0.9488 | 2.80 | 3.78 |
| YOLOv9s | 89.80 | 85.77 | 91.15 | 50.78 | 0.9477 | 3.13 | 4.18 |
| YOLOv10s | 89.33 | 83.57 | 90.54 | 50.34 | 0.9444 | 3.13 | 4.20 |
| REU-YOLO | 90.58 | 87.41 | 92.10 | 51.44 | 0.9611 | 2.67 | 3.68 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, D.; Xu, K.; Sun, W.; Lv, D.; Yang, S.; Yang, R.; Zhang, J. REU-YOLO: A Context-Aware UAV-Based Rice Ear Detection Model for Complex Field Scenes. Agronomy 2025, 15, 2225. https://doi.org/10.3390/agronomy15092225
