3.2. Training Results

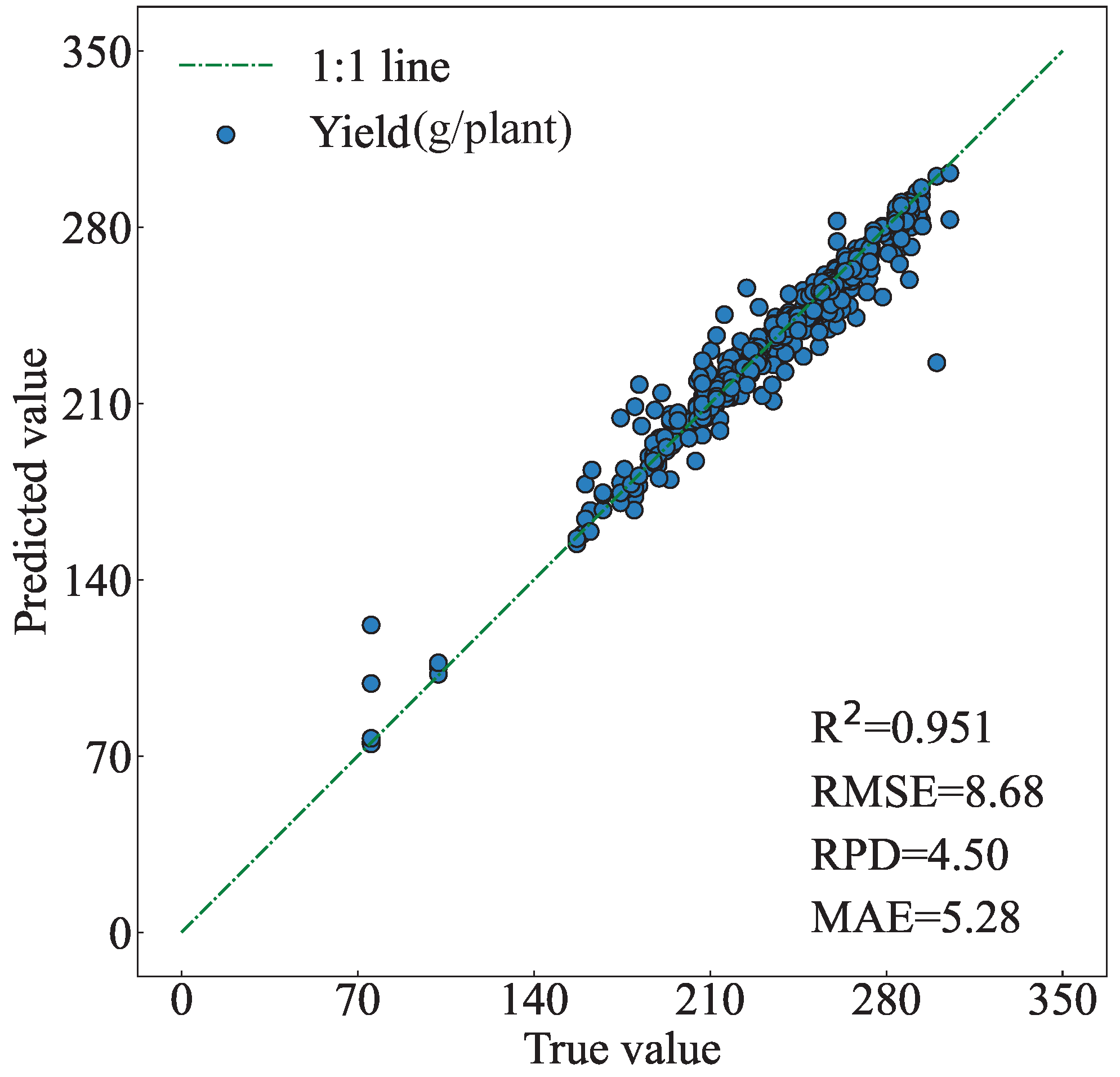

Figure 17 shows the scatter plot of maize yield results obtained using the test set data, achieving an R² of 0.951, an RMSE of 8.68, an RPD of 4.50, and an MAE of 5.28. The data points are tightly distributed around the 1:1 reference line, demonstrating a high consistency between predicted and actual values, which confirms the superior predictive performance of the CA-MFFNet model.
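For readers who want to reproduce these four metrics from paired observed and predicted yields, the following is a minimal sketch. The function name `regression_metrics` is hypothetical, the toy values are not data from this study, and the use of the sample standard deviation (`ddof=1`) for RPD is an assumption, since the convention is not stated in this section.

```python
import numpy as np

def regression_metrics(y_true, y_pred):
    """Return R2, RMSE, MAE and RPD for paired observed/predicted yields."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    residuals = y_true - y_pred

    rmse = float(np.sqrt(np.mean(residuals ** 2)))
    mae = float(np.mean(np.abs(residuals)))

    # R2 = 1 - (residual sum of squares) / (total sum of squares)
    ss_res = float(np.sum(residuals ** 2))
    ss_tot = float(np.sum((y_true - y_true.mean()) ** 2))
    r2 = 1.0 - ss_res / ss_tot

    # RPD = standard deviation of observed values / RMSE.
    # Assumption: sample standard deviation (ddof=1).
    rpd = float(np.std(y_true, ddof=1) / rmse)
    return {"R2": r2, "RMSE": rmse, "MAE": mae, "RPD": rpd}

# Toy values for illustration only (g/plant), not taken from the test set.
observed = [150.0, 200.0, 250.0, 300.0]
predicted = [155.0, 195.0, 255.0, 295.0]
metrics = regression_metrics(observed, predicted)
print(metrics)
```

Because RPD is the ratio of the observed spread to the RMSE, R² and RPD are closely linked (roughly R² ≈ 1 − 1/RPD² for larger samples), which is consistent with the reported pair of 0.951 and 4.50.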

Further analysis of the data distribution reveals that, while most yield values in the test set fall within the normal range of 140–350 g/plant, there are a few abnormal low-yield samples with yield values below 140 g/plant. Field investigation records indicate that these outliers primarily result from special growth conditions, such as pest and disease damage or improper water and fertilizer management, leading to significantly reduced yields. Notably, despite these abnormal samples deviating from the main distribution, the model maintains stable prediction accuracy, highlighting the robustness and practical value of CA-MFFNet.

Figure 18 compares the predicted and actual maize yields for selected samples in the test set. The results show that the predicted values closely match the actual values, indicating that the model accurately captures maize yield variations and exhibits strong predictive capability.

To ensure statistical rigor and robustness in model performance evaluation, this study employed the Bootstrap resampling method to compute confidence intervals for performance metrics and conducted stratified analysis of prediction errors based on yield quantiles. This approach allows for a comprehensive assessment of model performance across different yield levels.

The results in Table 4 demonstrate that the model achieves high predictive accuracy and reliability overall. The Bootstrap confidence intervals indicate that all performance metrics remain at high levels with narrow intervals, reflecting robust estimation and strong generalization capability. Specifically, the coefficient of determination R² reaches 0.951 (95% CI: 0.929–0.967), RMSE is 8.75 (95% CI: 7.00–10.15), MAE is 5.25 (95% CI: 4.55–5.95), and RPD reaches 4.56, significantly exceeding the threshold of 3.0. These results confirm the model’s high predictive precision and reliability.
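The confidence intervals above come from Bootstrap resampling of the test set. As an illustration of the general idea, here is a minimal percentile-bootstrap sketch. The number of resamples, the random seed, the percentile method, and the synthetic data are all assumptions; the section does not specify the exact procedure used in the study.

```python
import numpy as np

def r2_score(y_true, y_pred):
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return 1.0 - ss_res / ss_tot

def rmse(y_true, y_pred):
    return np.sqrt(np.mean((y_true - y_pred) ** 2))

def bootstrap_ci(y_true, y_pred, metric, n_boot=1000, alpha=0.05, seed=0):
    """Percentile bootstrap CI for a metric of (observed, predicted) pairs."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    rng = np.random.default_rng(seed)
    n = len(y_true)
    stats = np.empty(n_boot)
    for b in range(n_boot):
        # Resample sample indices with replacement, keeping pairs together.
        idx = rng.integers(0, n, size=n)
        stats[b] = metric(y_true[idx], y_pred[idx])
    lower, upper = np.quantile(stats, [alpha / 2, 1 - alpha / 2])
    return float(lower), float(upper)

# Synthetic stand-in for a test set (not data from the study).
rng = np.random.default_rng(42)
observed = rng.uniform(140.0, 350.0, size=200)
predicted = observed + rng.normal(0.0, 9.0, size=200)

r2_low, r2_high = bootstrap_ci(observed, predicted, r2_score)
rmse_low, rmse_high = bootstrap_ci(observed, predicted, rmse)
print(f"R2 95% CI: {r2_low:.3f}-{r2_high:.3f}")
print(f"RMSE 95% CI: {rmse_low:.2f}-{rmse_high:.2f}")
```

Resampling indices rather than values keeps each observed yield paired with its own prediction, which is what makes the interval reflect sampling variability of the test set rather than of the model.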

As shown in Table 5, the distribution of prediction errors across different yield groups indicates that the model performs best for the medium-yield group, with an MAE of 4.31 and an RMSE of 6.53. In contrast, relatively higher errors are observed in the low-yield and high-yield groups, with MAE values of 5.93 and 5.60, respectively, and RMSE values exceeding 9.5 in both cases. These results suggest that the model offers more robust predictions for medium yield levels, while its ability to capture extremely high or low yield scenarios is slightly weaker. This limitation may stem from sample distribution bias or the complex agronomic and environmental factors associated with extreme yields that are not fully captured by the features. Nevertheless, the error rates across all yield groups remain within acceptable limits, demonstrating that the CA-MFFNet model maintains strong applicability and stability under varying yield conditions.
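A stratified error analysis of this kind can be sketched as follows. The tercile cut points (1/3 and 2/3 quantiles of observed yield), the function name `stratified_errors`, and the toy values are assumptions for illustration; the exact quantile boundaries used for the low, medium, and high groups are not given in this section.

```python
import numpy as np

def stratified_errors(y_true, y_pred, quantiles=(1 / 3, 2 / 3)):
    """MAE and RMSE per yield group, with groups defined by observed-yield quantiles."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    low_cut, high_cut = np.quantile(y_true, quantiles)

    groups = {
        "low": y_true <= low_cut,
        "medium": (y_true > low_cut) & (y_true <= high_cut),
        "high": y_true > high_cut,
    }
    report = {}
    for name, mask in groups.items():
        err = y_true[mask] - y_pred[mask]
        report[name] = {
            "n": int(mask.sum()),
            "MAE": float(np.mean(np.abs(err))),
            "RMSE": float(np.sqrt(np.mean(err ** 2))),
        }
    return report

# Toy values for illustration only (g/plant), not data from the study.
observed = [100.0, 150.0, 200.0, 250.0, 300.0, 350.0]
predicted = [110.0, 150.0, 205.0, 245.0, 300.0, 330.0]
report = stratified_errors(observed, predicted)
for group, stats in report.items():
    print(group, stats)
```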

Additionally, this study conducted yield prediction using multimodal data from four key growth stages (V6, VT, R3, and R6) to investigate their correlation with maize yield. As shown in Table 6, the prediction results based on single growth stage data showed significant limitations, with R² values all below 0.9 for models built using individual stages. This may be attributed to the limited sample size from single growth stages, making it difficult for the model to fully learn the complex patterns of yield formation, resulting in insufficient generalization ability and reduced prediction accuracy.

In contrast, integrating data from all four key growth stages significantly increased the sample size and avoided the complexity associated with cross-stage feature fusion, improving the model’s R² to 0.951, a 0.052 increase over the best single growth stage model.

Figure 19 visually compares the yield prediction results from different growth stages. Notably, when using single stage data for yield prediction, the R6 growth stage data showed abnormal performance, with significantly increased RMSE and a 0.05 decrease in R² compared to the R3 stage. This suggests that R6 stage data may have weak correlation with maize yield, which contradicts conclusions from previous studies [38,39]. Based on our analysis, no abnormal precipitation occurred during the early growth stages such as V6, VT, and R3. However, prior to data collection at the R6 stage (from mid-August to late September), the experimental area experienced sustained heavy rainfall combined with excessive irrigation, leading to a significant increase in soil volumetric water content. This resulted in localized soil supersaturation and root zone hypoxia, which adversely affected crop physiology. Consequently, both leaf physiological indicators and spectral features exhibited a negative correlation with final yield, making it difficult to accurately predict maize yield during the R6 stage under these conditions.

3.3. Comparative Experiments

To validate the effectiveness of multimodal data fusion and demonstrate the performance advantages of the CA-MFFNet model in maize yield prediction, this study designed multiple comparative experiments.

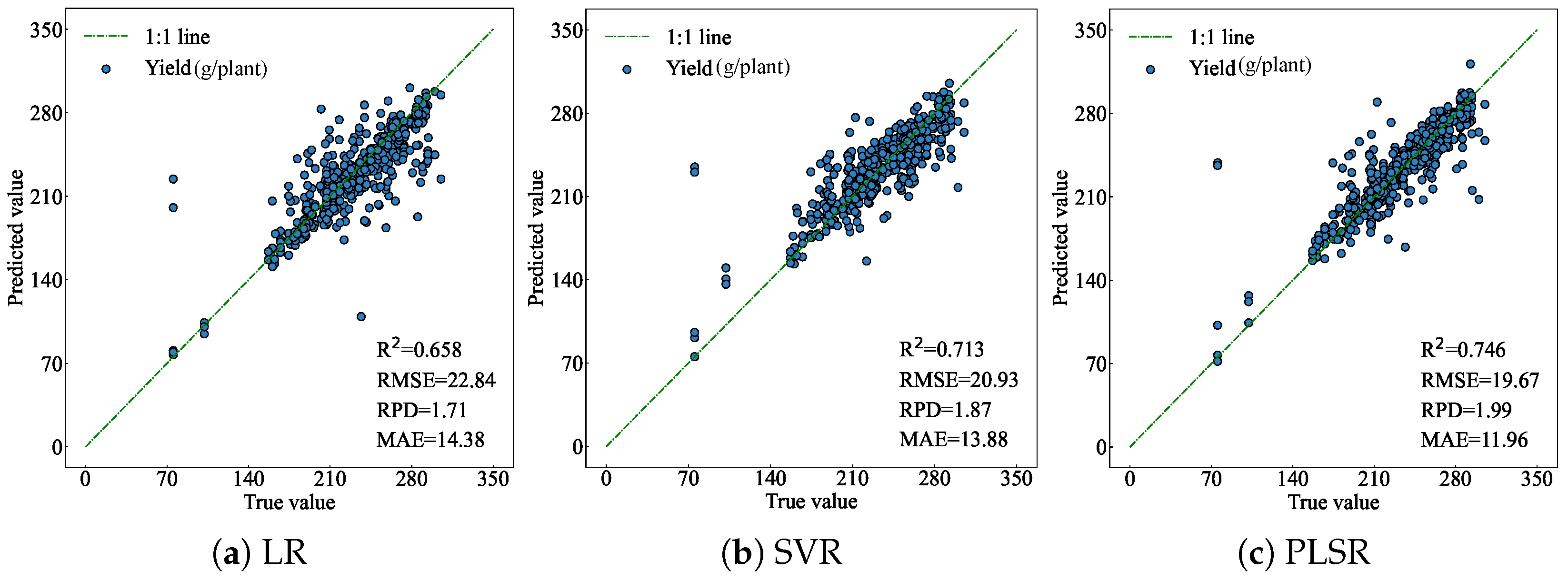

To systematically evaluate the predictive performance of the CA-MFFNet model, it was compared with traditional machine learning methods, including logistic regression (LR), partial least squares regression (PLSR), and support vector regression (SVR). As shown in Figure 20, the prediction results obtained using traditional models exhibited significant dispersion, with R² values not exceeding 0.75. The scatter points deviated markedly from the 1:1 reference line compared to those of the CA-MFFNet model. These results indicate that traditional machine learning models, constrained by their shallow architectures, struggle to effectively capture the complex nonlinear features in hyperspectral data. In contrast, the CA-MFFNet model, leveraging its deep learning framework, excels at extracting and utilizing deep feature information from high-dimensional spectral data, thereby significantly improving yield prediction accuracy.

To comprehensively evaluate the performance of the CA-MFFNet model, it was compared against traditional baseline methods including Random Forest, XGBoost, and Elastic Net. As illustrated in Figure 21, the prediction results obtained by these conventional models exhibit notably scattered distributions, with no R² value exceeding 0.85. Their deviation from the 1:1 reference line is significantly greater than that of CA-MFFNet, which may be attributed to the limited capacity of traditional models to adaptively learn complex, nonlinear interactions among multimodal data. These results demonstrate that, compared to conventional baseline models, CA-MFFNet shows remarkable advantages in yield prediction tasks. By leveraging an end-to-end deep learning framework, it achieves direct mapping from raw input data to prediction targets. The integration of multiple attention mechanisms allows the model to automatically weigh the importance of different modal information and uncover deep-level relationships between spectral features and yield formation, ultimately leading to more accurate and reliable yield predictions.

To further validate the superiority of the CA-MFFNet model, it was compared with two recently proposed one-dimensional models: TCNA [40] and Multi-task 1DCNN [33]. As shown in Figure 22, the CA-MFFNet model demonstrates the best agreement between predicted and actual values, with its scatter points showing the closest distribution to the 1:1 reference line, achieving an R² of 0.951 and an RPD of 4.50, indicating the highest prediction accuracy. In comparison, the TCNA model has the weakest performance, with an R² not exceeding 0.8, making it suitable only for rough yield prediction of maize. Although the Multi-task 1DCNN model outperforms TCNA, its prediction accuracy remains significantly lower than that of the model proposed in this study. These comparative results fully demonstrate the advancement and reliability of the CA-MFFNet model in using multimodal data for maize yield prediction.

To systematically demonstrate the contribution of attention mechanisms to feature extraction, three control experiments were designed: removing both SAM and CAM, removing only SAM, and removing only CAM. As shown in Figure 23, the complete CA-MFFNet model achieved the best alignment with the 1:1 reference line, outperforming all the variant models. Specifically, removing both SAM and CAM caused the most significant performance degradation, with RMSE increasing by 2.76, while removing SAM or CAM alone reduced R² by 0.025 and 0.019, respectively. These results indicate that SAM and CAM effectively enhance feature extraction by capturing correlation features in leaf spectra and optimizing channel-wise feature representations. Their synergistic effect maximizes the extraction of yield-related spectral features, significantly improving prediction accuracy.

Control experiments using only leaf spectral data, fused soil and leaf spectral data, and fused biochemical parameters and leaf spectral data were designed to validate the effectiveness of different multimodal data fusions for yield prediction. As shown in Figure 24, different input combinations produced significantly different prediction results. The experimental results demonstrate that the model incorporating the multimodal data fusion achieves optimal predictive performance, showing significantly better agreement between the predicted and actual values compared to using single leaf spectral data or other control experimental schemes, with predictions more closely aligned to the 1:1 reference line. This indicates that multimodal data fusion can fully leverage complementary information from different data sources, effectively enhancing both the prediction accuracy and the generalization capability of the model.

Further analysis reveals that incorporating either biochemical parameters or soil spectral data provides auxiliary benefits for maize yield prediction based on leaf spectral data. Notably, the enhancement effect of leaf biochemical parameters on yield prediction performance is markedly superior to that of soil spectral features. This difference may be attributed to the more direct correlation between leaf biochemical parameters and crop physiological processes related to yield formation. Chlorophyll concentration determines light energy capture efficiency and photosynthetic potential; meanwhile, nitrogen, as a key component of proteins and enzymes, directly regulates photosynthetic function and grain development during yield formation. These biochemical parameters thus serve as quantitative representations of the crop’s physiological state, providing biologically interpretable and direct explanatory variables for yield prediction. In contrast, the influence of soil on yield is indirect, primarily mediated through its regulation of root development, water availability, and nutrient supply, which collectively shape the physiological status of the crop. Furthermore, soil spectral data constitute a high-dimensional dataset containing numerous bands, many of which exhibit weak correlations with yield and may introduce noise or redundant information, thereby complicating feature extraction and model generalization.

To evaluate the effectiveness of different preprocessing methods for hyperspectral data, a comparative analysis was conducted between the Z-Score normalization used in this study, Savitzky–Golay (SG) smoothing, and Standard Normal Variate Transformation (SNV). As shown in Figure 25, the prediction results of the CA-MFFNet model using Z-Score normalization exhibited strong agreement with the measured values, with scatter points distributed most closely to the 1:1 reference line. In contrast, the scatter plots obtained using SG and SNV showed greater dispersion, with SG in particular achieving an R² of only 0.823, suggesting that the SG method may have introduced waveform distortion or derivative noise. The Z-Score method, meanwhile, effectively eliminated scale differences and mitigated the influence of outliers while preserving the original spectral morphology to the greatest extent. As a result, it provided more stable and interpretable input variables, ultimately enhancing the accuracy of the yield prediction model.
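To make the difference between two of the compared preprocessing methods concrete, the sketch below contrasts Z-Score normalization with SNV on a toy spectral matrix (rows are samples, columns are wavelength bands). Applying the Z-Score per band across samples is an assumption, since the normalization axis is not stated in this section; SNV is by definition applied per spectrum. The function names and toy reflectance values are hypothetical.

```python
import numpy as np

def zscore_per_band(spectra):
    """Standardize each wavelength band (column) across all samples."""
    spectra = np.asarray(spectra, dtype=float)
    mean = spectra.mean(axis=0)
    std = spectra.std(axis=0)
    std[std == 0] = 1.0  # leave constant bands unscaled instead of dividing by zero
    return (spectra - mean) / std

def snv(spectra):
    """Standard Normal Variate: center and scale each spectrum (row) on its own."""
    spectra = np.asarray(spectra, dtype=float)
    mean = spectra.mean(axis=1, keepdims=True)
    std = spectra.std(axis=1, ddof=1, keepdims=True)
    return (spectra - mean) / std

# Toy reflectance values: 3 samples x 4 bands (not data from the study).
spectra = np.array([
    [0.10, 0.20, 0.30, 0.40],
    [0.20, 0.40, 0.50, 0.70],
    [0.30, 0.30, 0.60, 0.90],
])
z = zscore_per_band(spectra)
s = snv(spectra)
print(z.mean(axis=0))  # each band is centered across samples
print(s.mean(axis=1))  # each spectrum is centered across bands
```

Under this assumption, the Z-Score removes scale differences between bands, whereas SNV removes per-sample offset and scale effects, so the two methods hand the network differently conditioned inputs.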

Figure 26 presents the prediction results from ablation experiments conducted on the leaf spectral branch of the CA-MFFNet model. As shown in Figure 26a,b, the scatter plots obtained without DWT and without preprocessing exhibit greater dispersion than those of the complete CA-MFFNet model. In particular, the prediction results from raw data without preprocessing show the poorest performance, with R² reaching only 0.733. This highlights the critical role of preprocessing in enhancing hyperspectral data quality. The absence of DWT reduced the model’s ability to capture spectral detail features, decreasing R² by 0.028, demonstrating that its multi-scale decomposition effectively extracts both high-frequency details and low-frequency features from the spectra, thereby enriching feature representation and providing more comprehensive feature information for yield prediction.
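The split into low-frequency and high-frequency components can be illustrated with a single-level discrete wavelet transform. The sketch below uses the Haar wavelet purely as an assumption for simplicity; the wavelet family and decomposition level used in CA-MFFNet are not specified in this section, and the toy spectrum is not data from the study.

```python
import numpy as np

def haar_dwt(signal):
    """Single-level Haar DWT: returns (approximation, detail) coefficients."""
    x = np.asarray(signal, dtype=float)
    if x.size % 2:
        x = np.append(x, x[-1])  # pad odd-length input by repeating the last value
    even, odd = x[0::2], x[1::2]
    approx = (even + odd) / np.sqrt(2.0)  # low-frequency trend
    detail = (even - odd) / np.sqrt(2.0)  # high-frequency detail
    return approx, detail

def haar_idwt(approx, detail):
    """Inverse of haar_dwt for even-length signals."""
    even = (approx + detail) / np.sqrt(2.0)
    odd = (approx - detail) / np.sqrt(2.0)
    out = np.empty(even.size + odd.size)
    out[0::2], out[1::2] = even, odd
    return out

# Toy reflectance spectrum with 8 bands (illustrative values only).
spectrum = np.array([0.42, 0.44, 0.47, 0.52, 0.61, 0.60, 0.58, 0.59])
approx, detail = haar_dwt(spectrum)
reconstructed = haar_idwt(approx, detail)
print(approx)
print(detail)
```

The approximation coefficients follow the overall shape of the spectrum while the detail coefficients respond to local changes between neighbouring bands; together they allow exact reconstruction, so no spectral information is lost by the decomposition itself.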

The scatter plot of prediction results obtained by removing SFE is shown in Figure 26c. The results indicate that, compared to the complete CA-MFFNet model, eliminating SFE significantly reduced prediction accuracy, producing more scattered data points relative to the 1:1 reference line, with R² falling to 0.921. This confirms that SFE, as a feature extraction method, effectively isolates yield-related spectral information from leaf spectral characteristics while suppressing interference from background noise and redundant data, substantially improving the accuracy of maize yield predictions.

The SE module significantly improves soil spectral feature extraction. Figure 27a shows the prediction results after removing SE from the model. Compared with predictions without SE, the CA-MFFNet model demonstrates a tighter distribution of predicted versus actual values along the 1:1 reference line, with R² increasing by 0.017 and RMSE decreasing by 1.35. These results indicate that SE effectively enhances key yield-related features in soil spectra while suppressing noise interference in raw data, thereby improving maize yield prediction performance.

To validate the superiority of the cross-attention mechanism in multimodal data fusion, we compared it with direct feature concatenation of leaf features with soil and biochemical parameter features from the multimodal feature extraction module. As shown in Figure 27b, predictions without cross-attention exhibit more scattered data points, with R² decreasing by 0.02 and RMSE increasing by 1.57. In contrast, the CA-MFFNet model with cross-attention demonstrates superior prediction performance, showing tighter clustering of data points around the 1:1 reference line. This comparison proves the advantage of cross-attention in multimodal data fusion, as it effectively integrates multimodal data by establishing dynamic weight relationships between modalities, significantly improving yield prediction reliability.
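The contrast between cross-attention fusion and direct concatenation can be sketched with generic scaled dot-product cross-attention. This is not the CA-MFFNet implementation: the choice of leaf features as queries and soil/biochemical features as keys and values, the feature dimensions, the random projection matrices, and all names below are assumptions for illustration only.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(query_feats, context_feats, w_q, w_k, w_v):
    """Scaled dot-product cross-attention: queries from one modality,
    keys and values from another."""
    q = query_feats @ w_q    # (n_query, d)
    k = context_feats @ w_k  # (n_context, d)
    v = context_feats @ w_v  # (n_context, d)
    scores = q @ k.T / np.sqrt(q.shape[-1])
    weights = softmax(scores, axis=-1)  # one weight distribution per query token
    return weights @ v, weights

rng = np.random.default_rng(0)
leaf = rng.normal(size=(4, 8))     # hypothetical leaf-spectral feature tokens
context = rng.normal(size=(3, 8))  # hypothetical soil/biochemical feature tokens
w_q, w_k, w_v = (rng.normal(size=(8, 4)) for _ in range(3))

fused, weights = cross_attention(leaf, context, w_q, w_k, w_v)

# Baseline for comparison: direct concatenation of pooled features,
# which uses fixed (input-independent) mixing between modalities.
concatenated = np.concatenate([leaf.mean(axis=0), context.mean(axis=0)])
print(fused.shape, weights.shape, concatenated.shape)
```

In the attention variant, the mixing weights depend on the inputs themselves, which is the "dynamic weight relationship" between modalities that plain concatenation lacks.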